1. Introduction

Target tracking is an important branch of computer vision that lies at the intersection of imaging and image processing, tracking, and control. It is a technology that detects targets from image signals in real time, extracts their position information, and automatically follows their motion. After decades of continuous development, target tracking technology has made significant progress and is widely used in intelligent video surveillance [1,2], human–computer interaction [3], medical image diagnosis [4], aviation reconnaissance [5], precision guidance [6], military strikes [7], and other fields. However, real-world scenarios are highly diverse, so achieving precise and robust tracking in complex environments remains a challenging task [8].

Existing target tracking algorithms can be roughly divided into three categories: generative methods, discriminative methods, and deep learning-based methods. Generative methods include optical flow methods [9,10], Bayesian theory-based methods [11,12,13], mean shift-based methods [14,15,16], and subspace analysis-based methods [17,18,19]. These methods focus on modeling the appearance of the target. They locate it in subsequent frames by searching for the candidate region most similar to the target model. Although generative methods have strong representation capabilities, they do not exploit the discriminative information between foreground and background, and they perform poorly in complex scenes involving occlusion and background clutter. Deep learning-based methods [20,21,22,23,24,25,26,27] achieve higher tracking accuracy because they learn more robust features. However, they require large amounts of training data, are computationally expensive, and have large architectures, which hinders deployment on diverse platforms. Discriminative methods fully exploit the difference between the target and the background while keeping a simple architecture and low resource consumption. In particular, methods based on discriminative correlation filters (DCFs) offer high robustness and real-time performance, which has made them popular among mainstream tracking algorithms.

Bolme et al. [28] proposed MOSSE (Minimum Output Sum of Squared Error), the first application of correlation filtering to tracking. When computing the similarity between the target region and candidate regions, MOSSE uses the discrete Fourier transform (DFT), together with complex conjugation, to convert the spatial-domain correlation into element-wise multiplication in the frequency domain. This significantly reduces computational complexity and increases tracking speed. Henriques et al. [29] proposed CSK (Circulant Structure with Kernels), which approximates dense sampling with cyclic shifts of the template sample and establishes the connection between correlation filtering and the Fourier transform. Henriques et al. [30] subsequently proposed KCF (Kernelized Correlation Filter), which introduces multi-channel HOG (Histogram of Oriented Gradients) features to characterize the target. KCF formulates tracking as a ridge regression problem and applies a Gaussian kernel to it. The kernel maps the samples into a high-dimensional space in which the nonlinear problem becomes linear. This greatly improved tracking performance and sparked a research boom in DCF-based tracking. Researchers have since studied integrating different features [31,32], optimizing scale estimation [33,34], and improving model update mechanisms [28,35], which significantly enhanced the tracking performance of the filters.

However, these algorithms still have some inherent problems. First, DCFs sample by cyclic shifts. This assumes periodicity and creates discontinuities at the boundaries of the training samples, and the resulting boundary effects degrade filter performance. Second, under background clutter or interference from similar objects, the distractors share features with the target and therefore also produce high values on the response map. This compromises the extraction of positive and negative samples and introduces errors. During model updates, these errors enter the template as appearance errors. As appearance errors accumulate during tracking, the bounding box gradually deviates from the true target position, which causes drift.
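The frequency-domain shortcut that MOSSE and its successors rely on can be checked numerically. The minimal NumPy sketch below uses illustrative function names that are not taken from any tracker's code. It computes a 2-D circular cross-correlation twice: once by brute force over all cyclic shifts, and once through the DFT. It then confirms that the two results agree. For N pixels, the FFT route costs O(N log N) instead of O(N²).

```python
import numpy as np

def xcorr_direct(x, h):
    """Circular cross-correlation r[m, n] = sum_{i,j} x[(i+m) % M, (j+n) % N] * h[i, j], by brute force."""
    M, N = x.shape
    r = np.empty((M, N))
    for m in range(M):
        for n in range(N):
            # np.roll(x, (-m, -n))[i, j] == x[(i + m) % M, (j + n) % N]
            r[m, n] = np.sum(np.roll(x, shift=(-m, -n), axis=(0, 1)) * h)
    return r

def xcorr_fft(x, h):
    """Same quantity via the DFT: correlation becomes an element-wise product with a conjugated spectrum."""
    return np.real(np.fft.ifft2(np.fft.fft2(x) * np.conj(np.fft.fft2(h))))

rng = np.random.default_rng(0)
x = rng.standard_normal((16, 16))   # stand-in for one feature channel of a search patch
h = rng.standard_normal((16, 16))   # stand-in for a learned filter
print(np.allclose(xcorr_direct(x, h), xcorr_fft(x, h)))  # True
```

If the patch is a cyclically shifted copy of the filter, the peak of the correlation appears exactly at that shift. This is how the peak location later encodes the target's displacement.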

Existing algorithms usually address these problems in one of two ways. (1) They construct a more robust filter model by adding spatial–temporal regularization, which suppresses boundary effects and prevents filter contamination. (2) They set up a reliability judgment mechanism that supervises the tracking results and corrects unreliable ones, which prevents model drift and even tracking failure.

For spatial–temporal regularization, Danelljan et al. [36] proposed SRDCF (Spatially Regularized Discriminative Correlation Filters). SRDCF introduces a spatial regularization term into filter learning that applies larger penalty coefficients to filter coefficients located farther from the target, which alleviates boundary effects. Galoogahi et al. [37] proposed BACF (Background-Aware Correlation Filters), which densely samples real negative samples from the background. This overcomes boundary effects, improves sample quality, and suppresses interference from background information. Li et al. [38] were the first to introduce a temporal regularization term into SRDCF, proposing STRCF (Spatial-Temporal Regularized Correlation Filters). By suppressing filter variation between adjacent frames, STRCF keeps the filter continuous over time and alleviates model degradation. However, fixed spatial–temporal regularization parameters make it difficult for the filter to adapt to changes in target appearance, which limits its performance to some extent. To address this, Li et al. [7] proposed Auto Track, which uses the variation of the response map to adjust the spatial–temporal regularization parameters automatically. This improves the filter's ability to cope with complex scenes. However, the response map conveys limited information, so the regularization parameters do not fully exploit the discriminative features of the target and background, and the filter's resistance to drift remains poor.

For reliability judgment, Bolme et al. [28] proposed the Peak-to-Sidelobe Ratio (PSR), based on the local fluctuation near the main peak of the response map. Wu et al. [39] used the global fluctuation of the response map to obtain the Average Peak Correlation Energy (APCE). Larger values of either indicate more reliable tracking results. Both measures reflect reliability to a certain extent, but they are very sensitive to the degree of target distortion. In practice, it is difficult to find a fixed threshold that separates different degrees of distortion. As a result, both measures easily misjudge frames in which confidence is low but tracking is accurate.

In this paper, we propose a distortion-aware dynamic spatial–temporal regularized correlation filter tracking algorithm (DADSTRCF) to better address the aforementioned issues. Building on Auto Track, we use color histograms to generate masks and construct a dynamic spatial regularization term, which fully exploits the discriminative information between the target and background. This enables accurate suppression of interfering objects in the background, especially objects whose features resemble the target. In addition, we construct a distortion perception function that combines the local and global fluctuations of the response map. This function clearly reflects the degree of target distortion and accurately judges the reliability of the tracking results. We also integrate a Kalman filter into the correlation filtering framework and use it to predict the tracking result when the target undergoes severe distortion. Experiments on multiple datasets show that DADSTRCF is highly robust and adaptable. It copes effectively with scenarios such as background clutter and interference from similar targets.

The main contributions of this article are as follows:

(1) We design a dynamic spatial regularization term based on color histograms that adapts to changes in target appearance during tracking. Larger penalty coefficients are assigned to non-target areas. As a result, the filter both mitigates boundary effects and suppresses interference, which effectively alleviates model drift caused by boundary effects, background clutter, and similar distractors.

(2) We propose a distortion perception function that accurately reflects the degree of target distortion, and we use this degree of distortion to evaluate the reliability of the tracking results. When the target undergoes severe distortion, the tracking result is judged unreliable. A Kalman filter then predicts the target position, which prevents model drift or tracking failure.

(3) We evaluate DADSTRCF on the OTB-50, OTB-100, UAV123, and DTB70 datasets. Its DP and AUC scores exceed those of existing mainstream methods. It also performs excellently under attributes such as background clutter, occlusion, and similar-object interference, which demonstrates the effectiveness of the proposed method.

The rest of this article is organized as follows. Section 2 reviews related work on DCF trackers. Section 3 introduces DADSTRCF in detail, covering the following topics:

- the baseline algorithm and the algorithm framework;
- the objective function and the optimization and solution of the filter model;
- the construction of the dynamic spatial regularization term;
- the construction of the distortion perception function.

Section 4 presents the experimental results and analysis of our algorithm and the compared algorithms, including qualitative and quantitative analysis. Finally, we present the conclusions of this work and an outlook on future research.

2. Related Work

This section briefly reviews the DCF trackers most relevant to our work. These fall into three groups: trackers that optimize the filter model, color-based trackers, and trackers with reliability judgment mechanisms.

The spatial regularization term suppresses boundary effects, while the temporal regularization term prevents model degradation. Both effectively improve the robustness of the filter and contribute substantially to alleviating model drift and preventing tracking failure. In recent years, researchers have made many efforts to establish more robust filter models.

- Dai et al. [40] proposed ASRCF, which combines SRDCF with BACF. ASRCF uses a bowl-shaped spatial regularization term as a reference weight, which lets the filter adjust the spatial regularization coefficients adaptively during tracking.
- Huang et al. [41] proposed SRCDCF, which penalizes the spatial regularization coefficients according to spatial position and target size, achieving adaptive spatial regularization.
- Huang et al. [42] proposed ARCF, which integrates spatial regularization and distortion regularization into the DCF framework. By suppressing response map distortion between adjacent frames, ARCF suppresses interference from similar targets.
- Wang et al. [43] proposed FWRDCF, which introduces feature weights into the DCF framework as an independent regularization term. Constraining the changes in feature weights makes the features smoother over time and suppresses background noise.

Some researchers have also integrated mask maps that highlight the target into the objective function to guide filter training.

- Yu et al. [44] proposed LDECF, which adds the spatial regularization term and its second-order difference to the DCF framework as independent regularization terms. Constraining the historical rate of change of the spatial regularization term makes the filter more adaptable to changes in the target and background.
- Cao et al. [45] proposed DTSRT, which combines a saliency-detection mask with the spatial regularization term. The mask divides the spatial regularization coefficient matrix into target and non-target regions, and pixels in non-target regions receive larger penalty coefficients so that interfering pixels are suppressed.
- Fu et al. [46] proposed DRCF, which adds saliency-based masks to both the spatial regularization term and the ridge regression term. The resulting dual regularization model suppresses background interference and guides feature selection.
- Yang et al. [47] proposed CRCF, which integrates saliency information into the spatial and temporal regularization terms and also adds it to the objective function as an independent regularization term. This further highlights target information in both space and time.

Early correlation filter trackers typically relied on grayscale and HOG features. These features are insensitive to illumination changes and motion blur, but trackers that use them are prone to drift when the background contains textures similar to the target. Color features are robust to changes in target appearance and edges, so they can effectively compensate for the shortcomings of HOG features.

- Staple, proposed by Bertinetto et al. [48], learns from the complementary properties of HOG features and color histogram features. This improves the robustness of the filter under motion blur, illumination changes, and target deformation.
- Lukežič et al. [49] proposed CSR-DCF, which builds a spatial reliability map from a color histogram model so that the filter focuses on the features of the target region during training.
- Ma et al. [50] proposed a Color Salience Sensing (CSA) module, which combines color histograms with saliency detection to generate a smoother, better-fitting mask. The mask better suppresses background information and raises the confidence of the color statistics in Staple.
- Hao et al. [51] proposed CPT, which runs a color histogram model and a correlation filter model in parallel and adaptively selects the tracking result in each frame. This significantly improves the filter's adaptability to deformation.

The fluctuation level of the response map is an important evaluation indicator in correlation filter tracking. It directly reflects the reliability of the tracking results. It also carries information about how well the target can be distinguished from the background and about changes in target appearance. Therefore, most reliability judgment mechanisms measure how much the response map fluctuates.

- Yue et al. [52] proposed a confidence threshold based on the historical mean of the PSR, because a single fixed threshold cannot suit all scenarios.
- Shao et al. [53] proposed a confidence function based on the global variation of the response map, and used this function together with its historical values to determine the reliability of the tracking results.
- Inspired by the APCE, Zhang et al. [54] proposed a judgment mechanism that reflects the degree of fluctuation in the response map. Besides judging the reliability of the tracking results, it guides the adjustment of the distortion and temporal regularization parameters.
- Liang et al. [55] proposed a dual-threshold reliability judgment mechanism that combines the PSR, the APCE, and their historical information. A tracking result is considered reliable only when both the PSR and the APCE exceed their partial historical means.
- Ma et al. [56] judged the reliability of tracking results from the fluctuation of the response map between adjacent frames, and used this judgment to guide the update of the filter model.

Unlike these works, we propose a novel dynamic spatial–temporal regularized correlation filter tracking algorithm with two components. First, we use color histogram information to adjust the spatial regularization coefficients. Combining the target's color features with its spatial position lets the filter distinguish the target from similar distractors, highlight target information accurately, suppress interference, and discriminate the target more effectively. Second, we combine the local and global variations of the response map to monitor the degree of target distortion. We then use this measure to judge the reliability of the tracking results, which improves the robustness of the filter.

3. Proposed Method

In this section, we first briefly review the baseline, Auto Track [39]. We then describe DADSTRCF in detail, covering the following components:

- the construction of the dynamic spatial regularization term;
- the optimization of the filter model;
- the target localization strategy and model update method;
- the construction of the distortion perception function;
- the Kalman filter tracking process.

We use color histograms to construct a dynamic spatial regularization term that suppresses background interference during tracking. In parallel, the distortion perception function determines the degree of target distortion. When the target undergoes severe distortion, a Kalman filter integrated into the DCF framework corrects the tracking results. The flowchart of DADSTRCF is shown in Figure 1.

3.1. Revisit of Auto Track

Auto Track implements adaptive spatial–temporal regularization on top of the STRCF algorithm. Auto Track first defines the local response variation $\Pi = [|\Pi^{1}|, |\Pi^{2}|, \ldots, |\Pi^{T}|]$, whose $i$-th element is:

$$\Pi^{i} = \frac{R_{t}^{i} - R_{t-1}^{i}[\psi_{\Delta}]}{R_{t-1}^{i}[\psi_{\Delta}]} \tag{1}$$

where $[\psi_{\Delta}]$ is the shift operator that makes the peaks of the two response maps $R_{t}$ and $R_{t-1}$ coincide, which removes the influence of motion. $R_{t}^{i}$ denotes the $i$-th element of the response map $R_{t}$. The adaptive spatial–temporal regularization in Auto Track is implemented as follows:

Adaptive spatial regularization: the local response variation measures the confidence of each pixel in the search region of the current frame. Filter pixels with drastic response variations have lower confidence and therefore receive larger penalty coefficients. The spatial regularization weights are:

$$\tilde{u} = P^{\top}\delta \log(\Pi + 1) + u \tag{2}$$

where $P^{\top}$ crops the central region of the filter where the target is located, $\delta$ is a constant that adjusts the weight of the local response variation, and $u$ is the static spatial regularization matrix used to mitigate boundary effects.

Adaptive temporal regularization: the global response variation regulates how fast the filter changes between two adjacent frames. The reference temporal regularization weight is:

$$\tilde{\theta} = \begin{cases} \dfrac{\zeta}{1 + \log\left(\nu\|\Pi\|_{2} + 1\right)}, & \|\Pi\|_{2} \le \phi \\[4pt] 0, & \|\Pi\|_{2} > \phi \end{cases} \tag{3}$$

where $\zeta$ and $\nu$ are hyperparameters. When the global variation $\|\Pi\|_{2}$ exceeds the threshold $\phi$, the response map is distorted and the filter stops learning. When $\|\Pi\|_{2}$ is less than or equal to $\phi$, a more drastic change in the response map gives a smaller penalty coefficient. This lets the filter learn faster when the target appearance changes substantially.
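The local response variation and the two adaptive weights described above can be sketched in a few lines of NumPy. This is a simplified illustration under stated assumptions, not Auto Track's reference implementation:

- The shift operator is modeled as a cyclic roll that aligns the two peaks.
- A small `eps` guards near-zero denominators.
- The cropping operator is replaced by a hypothetical binary `center_mask`.
- The hyperparameter defaults (`delta`, `zeta`, `nu`, `phi`) are placeholders.

```python
import numpy as np

def align_peak(prev, cur):
    """Stand-in for the shift operator: cyclically roll `prev` so its peak sits on the peak of `cur`."""
    p = np.unravel_index(np.argmax(prev), prev.shape)
    c = np.unravel_index(np.argmax(cur), cur.shape)
    return np.roll(prev, shift=(int(c[0] - p[0]), int(c[1] - p[1])), axis=(0, 1))

def local_variation(cur, prev, eps=1e-6):
    """Element-wise |(R_t - shifted R_{t-1}) / shifted R_{t-1}|; eps only guards near-zero denominators."""
    ref = align_peak(prev, cur)
    denom = np.where(np.abs(ref) < eps, eps, ref)
    return np.abs((cur - ref) / denom)

def spatial_weights(pi_abs, u_static, center_mask, delta=0.2):
    """Adaptive spatial weights: center_mask * delta * log(|Pi| + 1) + u (center_mask replaces the crop operator)."""
    return center_mask * delta * np.log(pi_abs + 1.0) + u_static

def temporal_reference(pi_abs, zeta=10.0, nu=0.1, phi=50.0):
    """Reference temporal weight: 0 above the threshold phi, else zeta / (1 + log(nu * ||Pi||_2 + 1))."""
    g = np.linalg.norm(pi_abs.ravel())   # global response variation ||Pi||_2
    return 0.0 if g > phi else zeta / (1.0 + np.log(nu * g + 1.0))

def gaussian_map(shape, center, sigma=2.0):
    """Synthetic Gaussian-shaped response map used for the example."""
    rows, cols = np.indices(shape)
    return np.exp(-((rows - center[0]) ** 2 + (cols - center[1]) ** 2) / (2 * sigma ** 2))

prev = gaussian_map((31, 31), (15, 15))
cur = np.roll(prev, shift=(2, 1), axis=(0, 1))   # pure target motion, unchanged appearance
pi_abs = local_variation(cur, prev)
print(np.allclose(pi_abs, 0.0), temporal_reference(pi_abs))  # True 10.0
```

Because the peaks are aligned before the two maps are compared, pure motion produces zero variation. Zero variation leaves the spatial weights equal to the static term and gives the largest temporal penalty. Adding a strong pseudo-peak pushes the global variation past the threshold, and the reference weight then drops to 0.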

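The objective function of the Auto Track filter model is shown in Equation (4):

$$E(H_{t}, \theta_{t}) = \frac{1}{2}\left\|y - \sum_{k=1}^{K} x_{t}^{k} \circledast h_{t}^{k}\right\|_{2}^{2} + \frac{1}{2}\sum_{k=1}^{K}\left\|\tilde{u} \odot h_{t}^{k}\right\|_{2}^{2} + \frac{\theta_{t}}{2}\sum_{k=1}^{K}\left\|h_{t}^{k} - h_{t-1}^{k}\right\|_{2}^{2} + \frac{1}{2}\left\|\theta_{t} - \tilde{\theta}\right\|_{2}^{2} \tag{4}$$

The symbols in Equation (4) are defined as follows:

- $x_{t}^{k} \in \mathbb{R}^{T \times 1}$ is the $k$-th channel of the feature of size $T$ extracted in frame $t$, and $K$ is the number of feature channels.
- $y \in \mathbb{R}^{T \times 1}$ is the desired Gaussian-shaped response.
- $h_{t}^{k} \in \mathbb{R}^{T \times 1}$ is the filter of the $k$-th channel trained in frame $t$.
- $\circledast$ is the correlation operator, and $\odot$ is the Hadamard product.
- $\tilde{u}$ is the spatial regularization term, and $\theta_{t}$ is the temporal regularization term.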
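The sketch below evaluates the Auto Track objective of Equation (4) for given filters, to make its four terms concrete. It only evaluates the energy; the solver is not shown. It assumes 1-D features of length T per channel, with correlation computed circularly through the DFT. The function names are illustrative.

```python
import numpy as np

def circ_corr(x, h):
    """1-D circular correlation of signal x with filter h via the DFT (one common DCF convention)."""
    return np.real(np.fft.ifft(np.fft.fft(x) * np.conj(np.fft.fft(h))))

def autotrack_objective(X, H, H_prev, y, u_tilde, theta, theta_ref):
    """Evaluate the four terms of the objective.
    X, H, H_prev: shape (K, T); y, u_tilde: shape (T,); theta, theta_ref: scalars."""
    response = sum(circ_corr(X[k], H[k]) for k in range(X.shape[0]))
    data_term = 0.5 * np.sum((y - response) ** 2)             # fit to the desired Gaussian response
    spatial_term = 0.5 * np.sum((u_tilde * H) ** 2)           # u_tilde broadcasts over the K channels
    temporal_term = 0.5 * theta * np.sum((H - H_prev) ** 2)   # penalizes change from the previous filter
    theta_term = 0.5 * (theta - theta_ref) ** 2               # keeps theta near its reference value
    return data_term + spatial_term + temporal_term + theta_term

rng = np.random.default_rng(1)
K, T = 3, 64
X = rng.standard_normal((K, T))
y = np.exp(-0.5 * ((np.arange(T) - T // 2) / 2.0) ** 2)   # desired Gaussian-shaped response
u_tilde = np.ones(T)
Z = np.zeros((K, T))
print(autotrack_objective(X, Z, Z, y, u_tilde, theta=2.0, theta_ref=1.0))  # equals 0.5 * ||y||^2 + 0.5
```

With all-zero filters, only the data term (0.5·||y||²) and the θ term remain. This is a quick sanity check that each term is wired to the right variables.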

3.2. Dynamic Spatial Regularization Term

In order to fully utilize the information difference between the target and the background, and enhance the discriminative ability of the filter towards the target, DADSTRCF constructs a dynamic spatial regularization term using color histograms.

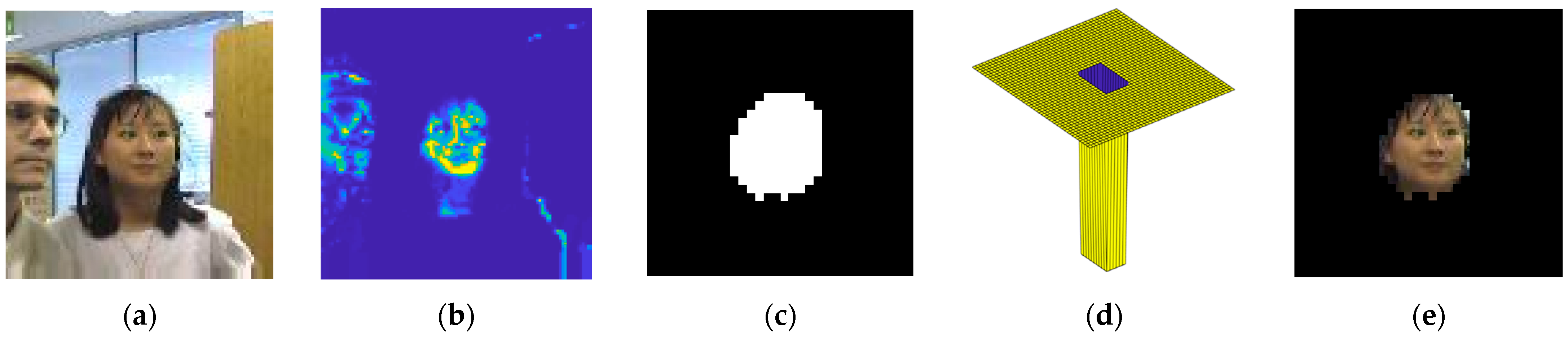

Figure 2 shows how the dynamic spatial regularization term is constructed from color histograms. The process has three parts:

(1) Constructing appearance likelihood probability maps: the search region is modeled separately with a foreground color histogram and a background color histogram. This yields the foreground and background appearance likelihood probability maps.

(2) Generating a mask map: the appearance likelihood probability maps, the spatial likelihood probability maps, and the foreground and background priors are combined with the total probability formula. The result is a posterior probability map of the foreground, which is binarized into a mask map that separates foreground from background.

(3) Merging the mask map with the static spatial regularization term to generate the dynamic spatial regularization term.

The core of constructing the dynamic spatial regularization term from color histograms is to generate a mask map that fits the appearance of the target. Each pixel in the mask takes a value $m \in \{0, 1\}$. A value of $m = 1$ means the pixel belongs to the foreground, and $m = 0$ means it belongs to the background. The value of $m$ depends on both the position $\mathbf{l}$ of the pixel and the appearance $\mathbf{c}$ of the target. The joint probability of each pixel is:

$$p(m, \mathbf{c}, \mathbf{l}) = p(\mathbf{c} \mid m)\, p(\mathbf{l} \mid m)\, p(m) \tag{5}$$

where $p(\mathbf{c} \mid m)$ is the appearance likelihood probability, $p(\mathbf{l} \mid m)$ is the spatial likelihood probability, and $p(m)$ is the prior probability of the foreground or background.

(1) Constructing the appearance likelihood probability maps: color histograms are extracted for the foreground and background regions separately.

When computing the foreground color histogram, a symmetric Epanechnikov kernel is used as a spatial prior, as shown in Equation (6). The kernel depends on the distance between the pixel being counted and the target center, and its size corresponds to the minimum bounding box of the target. Each pixel in the target region is therefore assigned a spatial weight in advance: pixels closer to the target center receive larger weights. When a pixel falls into one of the histogram's color bins, this weight is added to the bin instead of one. The foreground color histogram is then normalized and back-projected onto the search region, which gives the foreground appearance likelihood probability map. All pixels whose colors resemble the target receive high probability values, so distractors are identified as well.

When computing the background color histogram, pixels in the target region are excluded, and each remaining pixel in the search region adds one to its color bin. The background color histogram is then normalized and back-projected onto the search region, which gives the background appearance likelihood probability map. This map has low probability values in the target region.

(2) Generating the mask map: the spatial likelihood probabilities used for foreground–background separation are determined by a modified symmetric Epanechnikov kernel. The foreground spatial likelihood is restricted to the range [0.5, 0.9]. It equals 0.9 at the target center and 0.5 far from the center, as shown in Equation (7). The background spatial likelihood is restricted to the range [0.1, 0.5]. It equals 0.1 at the target center and 0.5 far from the center, as shown in Equation (8). This widens the gap between the foreground and background probabilities of target pixels, so the target pixels stand out more clearly.

The prior probabilities of the foreground and background are determined by the ratio between the areas from which the target and background histograms are extracted. In this paper, both priors are set to fixed values. Finally, the posterior probability map of the foreground is obtained from Equation (5). Binarizing it gives a mask that fits the appearance of the target, as shown in Figure 2c, and separates the foreground from the background.

(3) Establishing the dynamic spatial regularization term: the mask obtained from foreground–background separation is combined with the static spatial regularization term shown in Figure 2d. This suppresses pixels that belong to neither the spatial region nor the appearance region of the target, and yields the weights of the dynamic spatial regularization term.

In summary, the objective function of the filter involves four quantities: the dynamic spatial regularization term for background suppression, the reference weight used during training, the weight to be optimized, and the spatial regularization parameter.
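Steps (1) and (2) of the mask construction can be sketched with NumPy. This is a minimal illustration under several assumptions that the text does not fix:

- a single-channel 8-bit image quantized into 16 bins, instead of a full color histogram;
- a kernel of the form 1 − r², with r normalized by the half-axes of the target box;
- a background spatial likelihood equal to one minus the clipped foreground likelihood, which reproduces the stated 0.1 to 0.5 range;
- a foreground prior equal to the target-to-search area ratio;
- a binarization threshold of 0.5.

Step (3), the combination with the static term, is not included. All function names are hypothetical.

```python
import numpy as np

def epanechnikov(shape, center, half_axes):
    """Kernel 1 - r^2, clipped at 0, with r the distance to `center` normalized by `half_axes` (assumed form)."""
    rows, cols = np.indices(shape)
    r2 = ((rows - center[0]) / half_axes[0]) ** 2 + ((cols - center[1]) / half_axes[1]) ** 2
    return np.clip(1.0 - r2, 0.0, None)

def box_geometry(box):
    """Center and half-axes of a (top, left, height, width) box."""
    top, left, bh, bw = box
    return (top + (bh - 1) / 2, left + (bw - 1) / 2), (bh / 2, bw / 2)

def appearance_likelihoods(img, box, n_bins=16):
    """Step (1): kernel-weighted foreground histogram and unit-count background histogram,
    each normalized and back-projected onto the search region `img` (2-D uint8)."""
    bins = (img.astype(np.int64) * n_bins) // 256          # color bin of every pixel
    top, left, bh, bw = box
    inside = np.zeros(img.shape, dtype=bool)
    inside[top:top + bh, left:left + bw] = True
    k = epanechnikov(img.shape, *box_geometry(box))
    fg = np.bincount(bins[inside], weights=k[inside], minlength=n_bins)   # add the kernel weight, not 1
    bg = np.bincount(bins[~inside], minlength=n_bins).astype(float)       # add 1 per non-target pixel
    fg /= max(fg.sum(), 1e-12)
    bg /= max(bg.sum(), 1e-12)
    return fg[bins], bg[bins]                                             # back-projection

def foreground_mask(app_fg, app_bg, box, prior_fg, threshold=0.5, eps=1e-12):
    """Step (2): posterior p(m=1 | appearance, position) by the total probability formula, then binarization."""
    sp_fg = np.clip(epanechnikov(app_fg.shape, *box_geometry(box)), 0.5, 0.9)  # 0.9 at center, 0.5 far away
    sp_bg = 1.0 - sp_fg                                                          # 0.1 at center, 0.5 far away
    num = app_fg * sp_fg * prior_fg
    posterior = num / (num + app_bg * sp_bg * (1.0 - prior_fg) + eps)
    return posterior, (posterior > threshold).astype(np.uint8)

img = np.full((40, 40), 30, dtype=np.uint8)   # uniform background
img[15:25, 15:25] = 200                        # target
img[2:6, 2:6] = 200                            # same-colored distractor outside the target box
box = (15, 15, 10, 10)
app_fg, app_bg = appearance_likelihoods(img, box)
posterior, mask = foreground_mask(app_fg, app_bg, box, prior_fg=box[2] * box[3] / img.size)
```

As the text anticipates, the same-colored distractor receives the same foreground appearance likelihood as the target. Only the spatial likelihood lowers its posterior. In this toy example the distractor still passes the threshold, and pixels like these are what the combination with the static spatial term in step (3) is meant to penalize.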

3.3. Algorithm Optimization

The objective function is a convex, regularized least-squares function. Introducing a relaxation (auxiliary) variable turns the original unconstrained optimization problem into a convex problem with equality constraints, and the objective function is rewritten accordingly. The augmented Lagrangian function is then formed with a Lagrange multiplier and a step (penalty) parameter. Defining the scaled dual variable as the Lagrange multiplier divided by the step parameter puts the augmented Lagrangian into scaled form. Using the alternating direction method of multipliers (ADMM), this problem is decomposed into the following subproblems, which are solved alternately. The superscript denotes the iteration number.

(1) Filter subproblem: Parseval's theorem and the convolution theorem transform this subproblem into the Fourier domain. For simplicity, the iteration superscript is omitted here. The corresponding symbols denote complex conjugation and the discrete Fourier transform (DFT).

Each element of the Fourier transform of the ideal Gaussian response depends only on the elements in the same row and column of the filter and the feature sample, across all channels. Concatenating these elements over all channels therefore decomposes the above equation into independent per-pixel subproblems.

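Taking the derivative with respect to the per-pixel filter vector, setting it to zero, and introducing the identity matrix gives a closed-form solution. The outer product of the concatenated per-pixel feature vector with its conjugate transpose is a rank-1 matrix. The Sherman–Morrison formula can therefore compute this solution efficiently, without an explicit matrix inversion.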

(2) Second subproblem: this subproblem involves no convolution. Its derivative can therefore be set directly to zero and solved in the spatial domain, which gives a closed-form solution.

(4) Fourth subproblem: with the other variables fixed, its optimal solution can be determined directly in closed form.

(5) Iterative update: in each iteration, the scaled dual variable is updated using the last line of Equation (14). The step parameter is then updated using its maximum allowed value and a step size factor.
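The Sherman–Morrison step and the step-parameter update can both be checked numerically. The sketch below rests on two assumptions:

- The per-pixel system has the generic form (a aᴴ + γI)h = b. Here `a` stands in for the concatenated per-pixel feature DFT vector, and `b` for whatever right-hand side the subproblem produces. DADSTRCF's exact right-hand side and constants are not reproduced.
- The step schedule follows the rule γ ← min(γ_max, ργ). This rule is common in STRCF-style ADMM solvers but is an assumption here.

```python
import numpy as np

def sherman_morrison_solve(a, b, gamma):
    """Solve (a a^H + gamma I) h = b for complex vectors a, b of length K in O(K).
    Sherman-Morrison: (gamma I + a a^H)^{-1} b = (b - a (a^H b) / (gamma + a^H a)) / gamma."""
    aHb = np.vdot(a, b)            # a^H b (np.vdot conjugates its first argument)
    aHa = np.vdot(a, a).real       # a^H a is real and non-negative
    return (b - a * aHb / (gamma + aHa)) / gamma

def step_schedule(gamma0, rho, gamma_max, n_iter):
    """Step-parameter sequence under the assumed rule gamma <- min(gamma_max, rho * gamma)."""
    gammas = [gamma0]
    for _ in range(n_iter):
        gammas.append(min(gamma_max, rho * gammas[-1]))
    return gammas

K = 31                                   # e.g., number of feature channels at one pixel (illustrative)
rng = np.random.default_rng(0)
a = rng.standard_normal(K) + 1j * rng.standard_normal(K)   # stand-in per-pixel feature DFT vector
b = rng.standard_normal(K) + 1j * rng.standard_normal(K)   # stand-in right-hand side
h_fast = sherman_morrison_solve(a, b, gamma=0.5)
h_ref = np.linalg.solve(np.outer(a, a.conj()) + 0.5 * np.eye(K), b)   # O(K^3) reference solve
print(np.allclose(h_fast, h_ref))         # True
print(step_schedule(1.0, 10.0, 1e4, 5))   # [1.0, 10.0, 100.0, 1000.0, 10000.0, 10000.0]
```

The rank-1 structure is what makes the per-pixel solve cheap: O(K) per pixel instead of the O(K³) cost of a general inverse. The capped geometric schedule increases the penalty quickly but never lets it exceed the maximum value.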

3.4. Object Localization and Model Update

The response map is computed from the optimal filter obtained above, as shown in Equation (23). The inverse Fourier transform maps the result back to the spatial domain. The position of the maximum of the response map gives the target center. The filter is then updated with a learning rate using Equation (24).

Scale estimation follows DSST. A one-dimensional scale filter is trained on multi-scale samples extracted at the target position. The scale filter from the previous frame is then correlated with the scale samples of the current frame to obtain the scale response function. The scale at which this function reaches its maximum is taken as the target scale in the current frame.
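The localization, update, and scale-selection steps of this subsection can be sketched together. The sketch rests on several assumptions, none of which the text confirms:

- The response is the inverse DFT of the channel-summed product of the feature DFTs with the conjugated filter DFTs.
- The model update is the linear interpolation (1 − η)·old + η·new that is common in DCF trackers.
- The scale factors follow the DSST-style geometric pyramid. S = 33 and a step of 1.02 are commonly used with DSST and serve here only as illustrative defaults.
- The scale response is a synthetic input. In the tracker it would come from correlating the 1-D scale filter with the scale samples.

All function names are hypothetical.

```python
import numpy as np

def locate_target(feat_hat, filt_hat):
    """Response map and peak displacement. feat_hat, filt_hat: complex (K, M, N) DFTs of the
    search features and the filter; conjugating the filter is an assumed correlation convention."""
    resp = np.real(np.fft.ifft2(np.sum(feat_hat * np.conj(filt_hat), axis=0)))
    M, N = resp.shape
    dy, dx = np.unravel_index(np.argmax(resp), resp.shape)
    dy = dy - M if dy > M // 2 else dy        # indices past the midpoint wrap to negative shifts
    dx = dx - N if dx > N // 2 else dx
    return resp, (int(dy), int(dx))

def update_filter(filt_prev, filt_new, eta=0.02):
    """Hypothetical linear-interpolation update: (1 - eta) * previous + eta * current."""
    return (1.0 - eta) * filt_prev + eta * filt_new

def scale_factors(n_scales=33, step=1.02):
    """Geometric scale factors step**n for n = -(S-1)/2, ..., (S-1)/2 (S odd)."""
    n = np.arange(n_scales) - (n_scales - 1) // 2
    return step ** n

def best_scale(scale_response, factors, target_size):
    """Pick the factor with the highest scale response and rescale (height, width) by it."""
    s = float(factors[int(np.argmax(scale_response))])
    return s, (target_size[0] * s, target_size[1] * s)

rng = np.random.default_rng(2)
x = rng.standard_normal((2, 32, 32))                   # template features, K = 2 channels
search = np.roll(x, shift=(3, -2), axis=(1, 2))        # target moved down 3 and left 2 pixels
resp, disp = locate_target(np.fft.fft2(search), np.fft.fft2(x))
print(disp)                                            # (3, -2)

factors = scale_factors()
scale_resp = np.exp(-0.5 * ((np.arange(33) - 18) / 2.0) ** 2)   # synthetic response peaking at index 18
s, size = best_scale(scale_resp, factors, (50.0, 40.0))
```

Because the DFT makes correlation circular, a peak in the lower or right half of the map corresponds to an upward or leftward displacement. That is why the indices are wrapped before they are used as a translation.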

3.5. Distortion Perception Function

In order to make reliable judgments on the tracking results of the filter, this paper proposes a distortion perception function.

The Average Peak Correlation Energy (APCE) is a commonly used measure of the degree of target distortion. It is computed from the global oscillation of the response map:

$$\mathrm{APCE} = \frac{\left|R_{\max} - R_{\min}\right|^{2}}{\operatorname{mean}\left(\sum_{w,h}\left(R_{w,h} - R_{\min}\right)^{2}\right)} \tag{25}$$

where $R_{\max}$ and $R_{\min}$ are the maximum and minimum values of the response map, respectively, and $R_{w,h}$ is the response value at row $w$ and column $h$. Taking the Coke sequence of the OTB-100 dataset as an example,

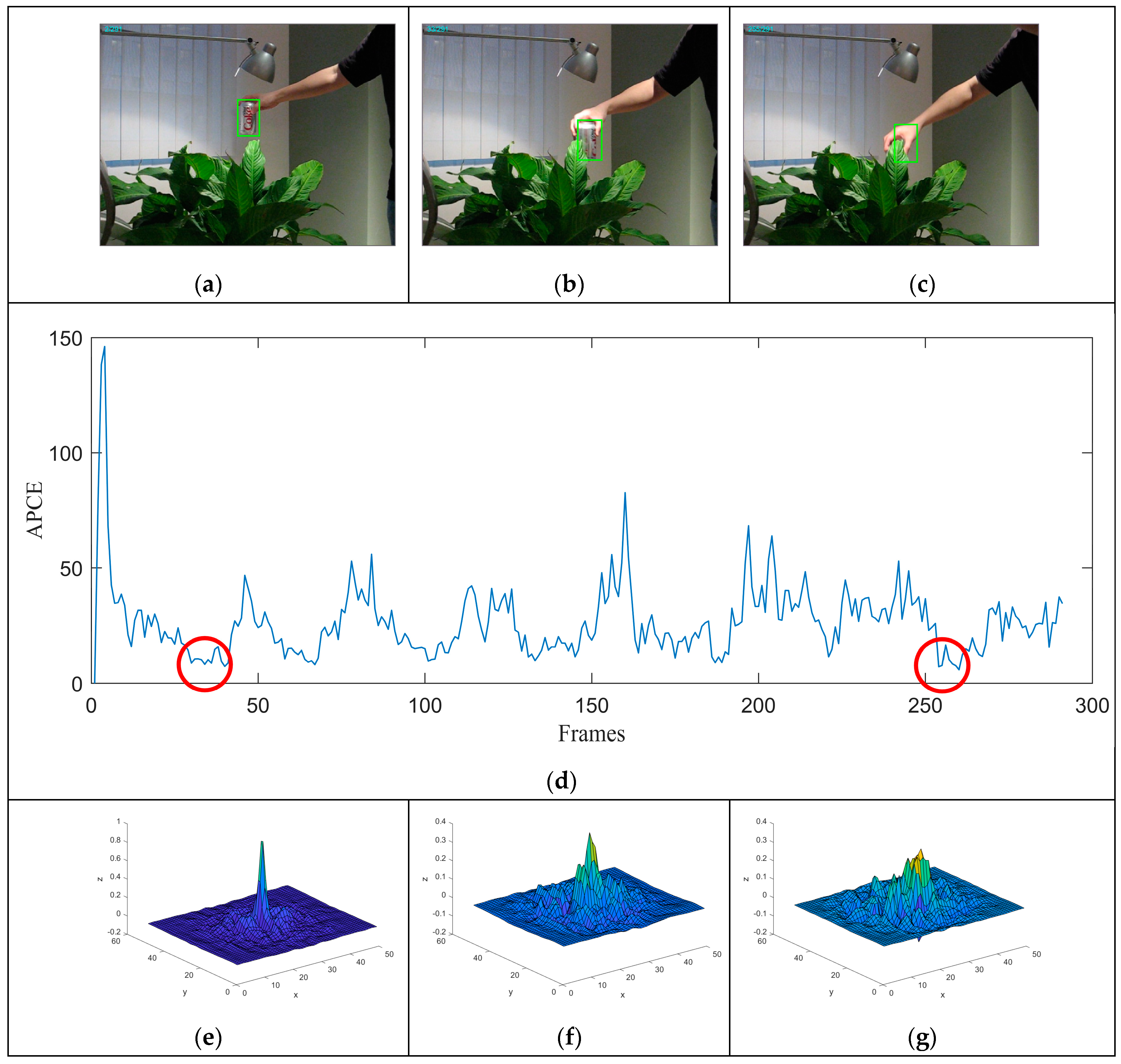

Figure 3a–c show the tracking results in frames 2, 33, and 255. These correspond to no distortion, slight distortion, and severe distortion of the target, respectively. Figure 3d shows how the APCE of the Coke sequence varies over the frames. The APCE is very sensitive to target distortion: it drops sharply as soon as the target is distorted, so its values under slight and severe distortion are very close. It is therefore difficult to find a definite threshold for the degree of target distortion. As a result, frames with low confidence but accurate tracking results are easily misjudged.
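Synthetic response maps can illustrate the APCE computation and show how it drops when pseudo-peaks appear. The Gaussian maps below are illustrative stand-ins, not data from the Coke sequence, and the function names are hypothetical.

```python
import numpy as np

def apce(resp):
    """APCE: |R_max - R_min|^2 divided by the mean of (R - R_min)^2 over the whole map."""
    r_max, r_min = resp.max(), resp.min()
    return (r_max - r_min) ** 2 / np.mean((resp - r_min) ** 2)

def gaussian_map(shape, center, sigma=2.0):
    """Synthetic Gaussian-shaped response peak."""
    rows, cols = np.indices(shape)
    return np.exp(-((rows - center[0]) ** 2 + (cols - center[1]) ** 2) / (2 * sigma ** 2))

clean = gaussian_map((51, 51), (25, 25))                        # single sharp peak
cluttered = (clean + 0.8 * gaussian_map((51, 51), (25, 32))     # secondary peak near the main one
             + 0.7 * gaussian_map((51, 51), (10, 12)))          # distant pseudo-peak
print(apce(clean) > apce(cluttered))   # True: pseudo-peaks raise the denominator and lower the APCE
```

Because the denominator averages over the whole map, any extra energy lowers the APCE wherever it appears. This is why the score responds to global changes rather than to the shape of the main peak alone.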

During stable tracking, the main peak of the response map is usually prominent and sharp. Under occlusion and background clutter, multiple pseudo-peaks appear around the main peak, and it is no longer sharp.

Figure 3e–g show the response maps of the Coke sequence under no, slight, and severe target distortion, respectively.

- Without distortion, the response map is a sharp, unimodal, Gaussian-shaped function.
- Under slight distortion, a secondary peak appears near the main peak, and the main peak becomes lower and less sharp.
- Under severe distortion, multiple pseudo-peaks appear, and some are slightly higher than the main peak, which causes the filter to misjudge the target location.

The sharpness of the main peak therefore reveals, to some extent, the degree of distortion in the tracking results. Based on this, we propose the Peak Relative Intensity (PRI) to measure the sharpness of the main peak in the response map:

where the quantities involved are the maximum value of the response map, and the mean and variance of the remaining pixels in a window around the peak, excluding the peak itself.
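The ingredients of the PRI can be computed as shown below: the peak value, and the mean and variance of the surrounding window with the peak excluded. The exact PRI expression is not reproduced here, so the final score uses a hypothetical PSR-style combination, (peak − mean)/standard deviation. Its only purpose is to show that these statistics separate a sharp main peak from a flattened one. The window half-width of 5 is also an illustrative choice, and the function names are hypothetical.

```python
import numpy as np

def local_peak_stats(resp, half=5):
    """Peak value plus mean and variance of the (2*half+1)^2 window around the peak, excluding the peak.
    The window is clipped at the map border."""
    py, px = np.unravel_index(np.argmax(resp), resp.shape)
    y0, y1 = max(py - half, 0), min(py + half + 1, resp.shape[0])
    x0, x1 = max(px - half, 0), min(px + half + 1, resp.shape[1])
    win = resp[y0:y1, x0:x1]
    keep = np.ones(win.shape, dtype=bool)
    keep[py - y0, px - x0] = False            # drop the peak pixel itself
    rest = win[keep]
    return resp[py, px], rest.mean(), rest.var()

def psr_style_sharpness(resp, half=5, eps=1e-12):
    """Hypothetical PSR-style combination (peak - mean) / std; the paper's PRI formula may differ."""
    peak, mu, var = local_peak_stats(resp, half)
    return (peak - mu) / np.sqrt(var + eps)

def gaussian_map(shape, center, sigma):
    """Synthetic Gaussian-shaped response peak."""
    rows, cols = np.indices(shape)
    return np.exp(-((rows - center[0]) ** 2 + (cols - center[1]) ** 2) / (2 * sigma ** 2))

sharp = gaussian_map((41, 41), (20, 20), sigma=1.0)   # sharp main peak
broad = gaussian_map((41, 41), (20, 20), sigma=4.0)   # flattened main peak
print(psr_style_sharpness(sharp) > psr_style_sharpness(broad))  # True
```

Unlike the APCE, these statistics only look at a small neighborhood of the peak. This matches the text's description of PRI as a local measure that focuses on the target and its immediate surroundings.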

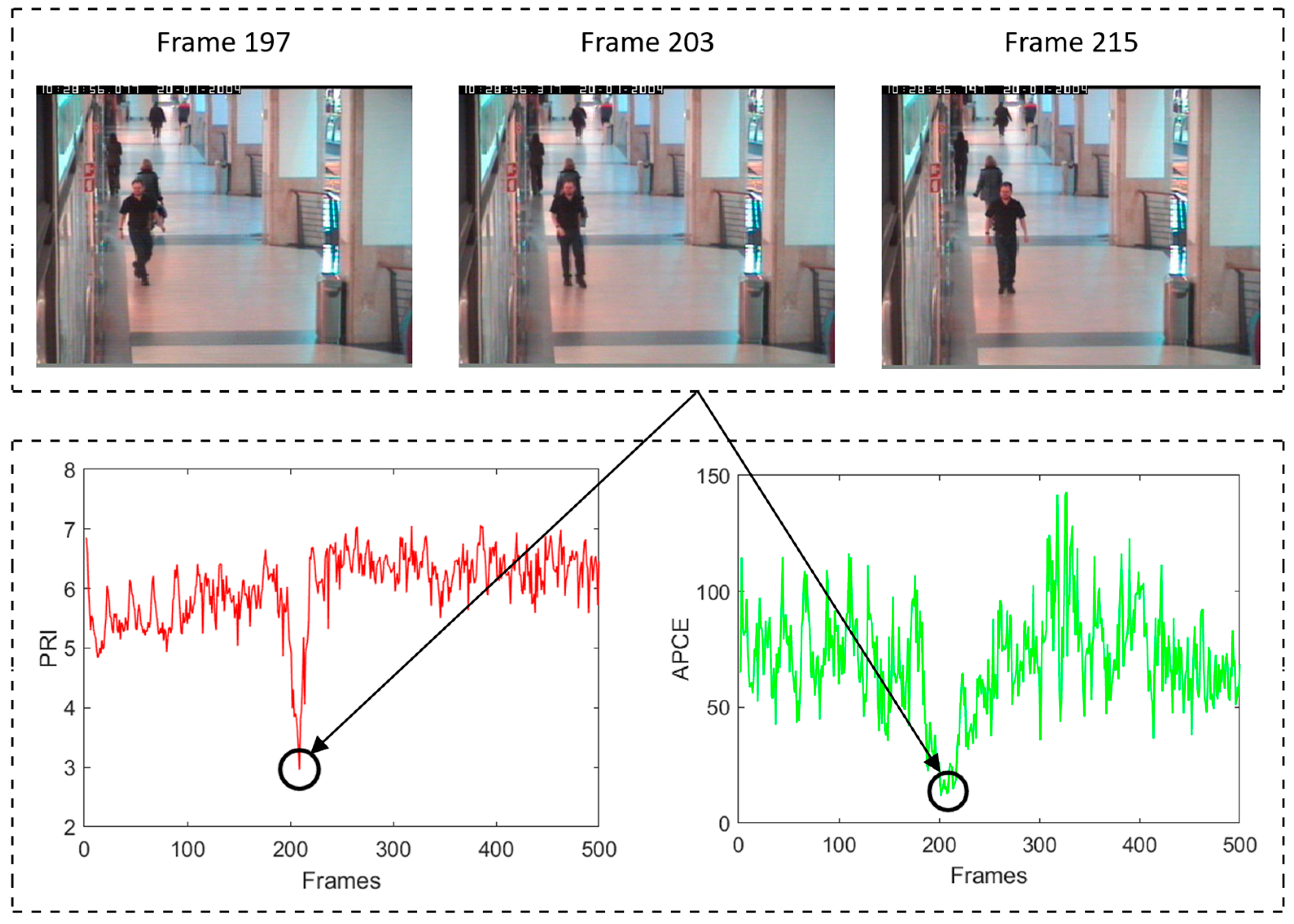

Taking the Walking2 sequence as an example, Figure 4 shows how the PRI and the APCE vary over the frames. The target in Walking2 is occluded around frame 203. As the figure shows, both values decrease when the target is distorted, but the PRI decreases more markedly, which makes the distorted state easier to distinguish.

The PRI reflects the local oscillation of the response map. It focuses on the target and its immediate surroundings, which makes it more sensitive to deformation, occlusion, and similar situations. The APCE reflects the global oscillation of the response map. It focuses on the whole search region, which makes it more sensitive to illumination changes, fast motion, and similar situations. Based on this, we propose a distortion perception function (DPF) that combines the PRI and APCE values to determine the degree of target distortion more comprehensively and accurately. The DPF is defined as:

Table 1 shows the meanings and value ranges of the APCE, PRI, and DPF. As the table shows, the APCE and PRI take values in markedly different ranges. To amplify the differences in magnitude that correspond to different degrees of target distortion, this paper combines the two exponentially.

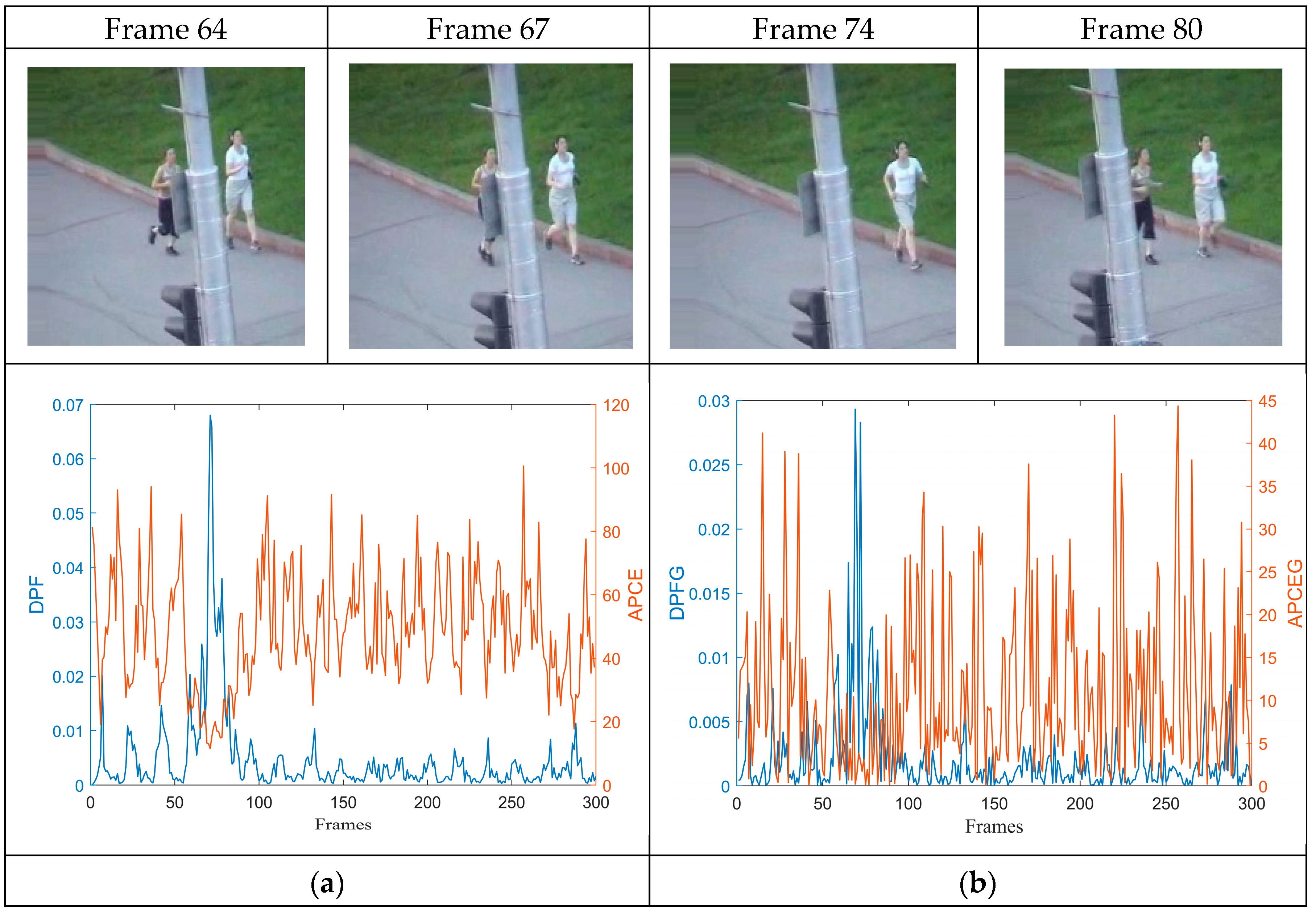
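The APCE is not redefined in this section. For reference, the sketch below uses the standard definition introduced with the LMCF tracker (Wang et al., CVPR 2017), APCE = |F_max − F_min|² / mean((F − F_min)²), which we assume matches the one used in this paper. It shows why the APCE is a global measure: every pixel of the search area enters the denominator. The exponential combination of PRI and APCE that forms the DPF is not reproduced here, and `gaussian_map` with its synthetic maps is hypothetical test data.

```python
import math


def apce(response):
    """Average peak-to-correlation energy of a 2-D response map (list of lists)."""
    values = [v for row in response for v in row]
    f_max, f_min = max(values), min(values)
    # Mean squared fluctuation of the whole map relative to its minimum.
    energy = sum((v - f_min) ** 2 for v in values) / len(values)
    return (f_max - f_min) ** 2 / energy


def gaussian_map(size, bumps, spread):
    """Hypothetical synthetic response map: sum of Gaussian bumps (row, col, height)."""
    return [[sum(h * math.exp(-((r - br) ** 2 + (c - bc) ** 2) / (2 * spread ** 2))
                 for br, bc, h in bumps)
             for c in range(size)]
            for r in range(size)]


sharp = gaussian_map(21, [(10, 10, 1.0)], spread=1.0)
distorted = gaussian_map(21, [(10, 10, 0.6), (10, 13, 0.5)], spread=2.0)
print(apce(sharp) > apce(distorted))  # True
```

Because the energy term averages over the entire map, a broad or multi-peaked response lowers the APCE even when the disturbance lies far from the target, whereas a windowed measure such as the PRI only sees the neighbourhood of the peak.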

For ease of comparison, taking the Jogging1 sequence as an example, the changes in the DPF and APCE values are compared in Figure 5. Whereas the APCE is sensitive to all degrees of target distortion, the DPF rises and fluctuates markedly only when the target is severely distorted by heavy occlusion, and changes relatively smoothly in the remaining frames.

To demonstrate more clearly that the DPF can effectively determine the degree of target distortion, Figure 6 visualizes, for the first 500 frames of the Girl2 sequence, how the DPF value and the degree of target distortion vary across frames. The target encounters interference from a similar object around frame 55, partial occlusion around frame 106, complete occlusion around frame 110, and deformation around frame 280. The DPF values in these situations differ markedly, and the DPF is highest when the target is severely distorted by occlusion.

This article assumes that when the DPF-based distortion condition is met, the target in the current frame is severely distorted and the tracking result is unreliable.

3.6. Kalman Filter Tracking

The Kalman filter [11] is an efficient recursive estimation method that uses prior knowledge of the system together with observation data to produce optimal estimates of the system state in the presence of noise. In this article, when the tracking result is unreliable because the target is severely distorted, a Kalman filter is used to predict the target position. The state equation and observation equation of the Kalman filter can be expressed as:

$$\mathbf{x}_k = \mathbf{F}\mathbf{x}_{k-1} + \mathbf{w}_{k-1}, \qquad \mathbf{z}_k = \mathbf{H}\mathbf{x}_k + \mathbf{v}_k$$

where $\mathbf{x}_k$ and $\mathbf{x}_{k-1}$ represent the states in frames $k$ and $k-1$, respectively, each described as $\mathbf{x} = (x, y, v_x, v_y)^{\mathrm{T}}$, where $(x, y)$ is the center position of the target and $v_x$ and $v_y$ are its horizontal and vertical velocities. $\mathbf{F}$ is the state transition matrix from frame $k-1$ to frame $k$, $\mathbf{z}_k$ is the observation vector, and $\mathbf{H}$ is the observation matrix. Since the Kalman filter serves only as a correction mechanism in DADSTRCF, this paper assumes that the target moves with uniform linear motion between adjacent frames. When the Kalman filter is used for tracking, the target is treated as a point. Therefore, the state transition matrix and the measurement matrix are defined as:

$$\mathbf{F} = \begin{bmatrix} 1 & 0 & 1 & 0 \\ 0 & 1 & 0 & 1 \\ 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & 1 \end{bmatrix}, \qquad \mathbf{H} = \begin{bmatrix} 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \end{bmatrix}$$

$\mathbf{w}_{k-1}$ and $\mathbf{v}_k$ represent the process noise and the measurement noise, respectively, both of which follow zero-mean normal distributions with covariance matrices $\mathbf{Q}$ and $\mathbf{R}$, respectively.
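Under the uniform linear motion assumption, one step of the state equation simply adds each velocity to the corresponding coordinate, and the observation matrix keeps only the position. The sketch below assumes a time step of one frame and uses illustrative state values; the helper `matvec` is hypothetical and noise is omitted.

```python
# Constant-velocity model with a time step of one frame (assumption: dt = 1).
F = [[1, 0, 1, 0],
     [0, 1, 0, 1],
     [0, 0, 1, 0],
     [0, 0, 0, 1]]
H = [[1, 0, 0, 0],
     [0, 1, 0, 0]]


def matvec(M, v):
    """Matrix-vector product with matrices stored as lists of rows."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


state = [120.0, 80.0, 3.0, -2.0]   # (x, y, vx, vy): illustrative values in pixels and pixels/frame
next_state = matvec(F, state)      # [123.0, 78.0, 3.0, -2.0]
observed = matvec(H, next_state)   # [123.0, 78.0]
print(next_state, observed)
```

Treating the target as a point means the observation is just the predicted center, which is why $\mathbf{H}$ selects the first two state components.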

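The true value, predicted value, and optimal estimate of the target state in frame $k$ are denoted $\mathbf{x}_k$, $\hat{\mathbf{x}}_k^-$, and $\hat{\mathbf{x}}_k$, respectively. The prediction error covariance matrix and the estimation error covariance matrix of the Kalman filter are defined as:

$$\mathbf{P}_k^- = E\left[(\mathbf{x}_k - \hat{\mathbf{x}}_k^-)(\mathbf{x}_k - \hat{\mathbf{x}}_k^-)^{\mathrm{T}}\right], \qquad \mathbf{P}_k = E\left[(\mathbf{x}_k - \hat{\mathbf{x}}_k)(\mathbf{x}_k - \hat{\mathbf{x}}_k)^{\mathrm{T}}\right]$$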

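Kalman filtering consists of two parts: prediction and correction.

(1) Prediction section: The prediction step comprises state prediction and error covariance prediction, expressed, respectively, as:

$$\hat{\mathbf{x}}_k^- = \mathbf{F}\hat{\mathbf{x}}_{k-1}, \qquad \mathbf{P}_k^- = \mathbf{F}\mathbf{P}_{k-1}\mathbf{F}^{\mathrm{T}} + \mathbf{Q}$$

(2) Correction section: The correction step comprises computation of the Kalman gain, state correction, and error covariance correction, expressed, respectively, as:

$$\mathbf{K}_k = \mathbf{P}_k^-\mathbf{H}^{\mathrm{T}}\left(\mathbf{H}\mathbf{P}_k^-\mathbf{H}^{\mathrm{T}} + \mathbf{R}\right)^{-1}$$

$$\hat{\mathbf{x}}_k = \hat{\mathbf{x}}_k^- + \mathbf{K}_k\left(\mathbf{z}_k - \mathbf{H}\hat{\mathbf{x}}_k^-\right)$$

$$\mathbf{P}_k = \left(\mathbf{I} - \mathbf{K}_k\mathbf{H}\right)\mathbf{P}_k^-$$

where $\mathbf{K}_k$ is the Kalman gain and $\mathbf{I}$ is the identity matrix.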
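The prediction and correction steps can be sketched as a small constant-velocity Kalman filter in plain Python. This is an illustrative sketch, not the DADSTRCF implementation: the noise levels `q` and `r`, the identity initial covariance, the helper `track_step`, and the DPF threshold are hypothetical, and the DPF values in the usage loop are stand-ins because the DPF formula is not reproduced here. When the DPF flags severe distortion, the sketch outputs the Kalman prediction; otherwise it outputs the DCF result and feeds it to the correction step as the measurement, which is our assumption about how the two are coupled.

```python
def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


def madd(A, B, sign=1.0):
    """Element-wise A + sign * B."""
    return [[a + sign * b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def identity(n, scale=1.0):
    return [[scale if i == j else 0.0 for j in range(n)] for i in range(n)]


def inv2(M):
    """Inverse of a 2x2 matrix (the innovation covariance is 2x2 here)."""
    (a, b), (c, d) = M
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


class ConstantVelocityKF:
    """State (x, y, vx, vy); q, r and the initial covariance are hypothetical."""

    def __init__(self, x0, y0, q=1e-2, r=1.0):
        self.F = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0],
                  [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]
        self.H = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]
        self.Q, self.R = identity(4, q), identity(2, r)
        self.x = [[x0], [y0], [0.0], [0.0]]  # column vector, zero initial velocity
        self.P = identity(4)

    def predict(self):
        # x_k^- = F x_{k-1};  P_k^- = F P_{k-1} F^T + Q
        self.x = matmul(self.F, self.x)
        self.P = madd(matmul(matmul(self.F, self.P), transpose(self.F)), self.Q)
        return self.x[0][0], self.x[1][0]

    def correct(self, zx, zy):
        # K_k = P_k^- H^T (H P_k^- H^T + R)^-1
        pht = matmul(self.P, transpose(self.H))
        gain = matmul(pht, inv2(madd(matmul(self.H, pht), self.R)))
        # x_k = x_k^- + K_k (z_k - H x_k^-)
        innovation = madd([[zx], [zy]], matmul(self.H, self.x), sign=-1.0)
        self.x = madd(self.x, matmul(gain, innovation))
        # P_k = (I - K_k H) P_k^-
        self.P = matmul(madd(identity(4), matmul(gain, self.H), sign=-1.0), self.P)
        return self.x[0][0], self.x[1][0]


def track_step(kf, dcf_position, dpf, threshold):
    """Hypothetical glue: fall back to the KF prediction when the DPF flags severe distortion."""
    predicted = kf.predict()
    if dpf > threshold:
        return predicted           # unreliable DCF result: output the prediction
    kf.correct(*dcf_position)      # reliable: the DCF result serves as the measurement
    return dcf_position


# Target moves by (2, 1) pixels per frame; frames 11-13 are "occluded" and the
# DCF result collapses to (0, 0). DPF values are stand-ins, not computed here.
kf = ConstantVelocityKF(0.0, 0.0)
outputs = []
for t in range(1, 14):
    occluded = t > 10
    dcf_position = (0.0, 0.0) if occluded else (2.0 * t, 1.0 * t)
    outputs.append(track_step(kf, dcf_position, dpf=9.0 if occluded else 1.0, threshold=5.0))
print(outputs[10:])  # Kalman predictions for the occluded frames
```

During the occluded frames no correction is applied, so each prediction advances the last estimated position by the estimated velocity instead of following the drifted DCF result.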

5. Conclusions

When facing scenarios such as background clutter and interference from similar objects, DCF trackers are prone to drift or tracking failure. To address this issue, this paper proposes a distortion-aware dynamic spatial–temporal regularized correlation filtering (DADSTRCF) target tracking algorithm. DADSTRCF uses color histograms to construct a dynamic spatial regularization term and introduces a distortion perception function (DPF) to assess the reliability of the tracking results. When the tracking results are unreliable, a Kalman filter is used to predict the target position in a timely manner. Together, these components mitigate the effects of background clutter, similar-object interference, and boundary effects, enhance the robustness and adaptability of the filter, and substantially reduce the risk of model drift and tracking failure. Comparative experiments were conducted on the OTB-50, OTB-100, UAV123, and DTB70 datasets. DADSTRCF achieved the highest DP and AUC scores on all four datasets, with (DP, AUC) improvements over AutoTrack of (6.3%, 9.3%), (8.4%, 9.3%), (2.0%, 2.5%), and (6.4%, 3.9%), respectively. Under attributes such as similar-object interference, background clutter, and occlusion, DADSTRCF performs significantly better than the other trackers, demonstrating its superiority.

However, the experiments also showed that the DP scores of DADSTRCF are less competitive in low-resolution and some other scenarios. Given the limited representational capability of handcrafted features, future work will explore integrating deep features with handcrafted features to improve tracking performance without compromising real-time efficiency. In addition, the fixed model update strategy limits the tracker's adaptability to complex scenes and increases the risk of model drift. The next step is therefore to combine the distortion perception function with the model update mechanism to achieve adaptive model updating.