CAGM-Seg: A Symmetry-Driven Lightweight Model for Small Object Detection in Multi-Scenario Remote Sensing

Abstract

1. Introduction

- (1) An innovative attention fusion mechanism is proposed that effectively enhances the model's feature learning when training samples are limited and suppresses overfitting caused by data scarcity.

- (2) A multi-level feature fusion and enhancement strategy is constructed that strengthens the discrimination and localization of small targets, so that recognition accuracy remains high even against complex backgrounds.

- (3) The lightweight network architecture and the training strategy are designed jointly, improving the model's cross-scenario adaptability and generalization while keeping model complexity under control.
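Conclusion (2) credits an attention gate module with optimizing multi-level feature fusion, which suggests that contribution (2) is realized at least partly by gating encoder skip features with coarser decoder features. The sketch below shows a generic additive attention gate of the Attention U-Net type in NumPy. It assumes channels-last arrays, gating features already resampled to the skip resolution, 1 × 1 convolutions written as matrix products and no batch normalization; the sizes and random weights are hypothetical, and this is not the authors' implementation.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def attention_gate(x, g, w_x, w_g, b, w_psi, b_psi):
    """Additive attention gate with 1x1 convolutions written as matrix products.

    x: skip-connection (encoder) features, shape (H, W, C_x)
    g: gating (decoder) features already resampled to (H, W, C_g)
    w_x: (C_x, C_int), w_g: (C_g, C_int), b: (C_int,)
    w_psi: (C_int,), b_psi: scalar
    Returns the gated skip features (H, W, C_x) and the attention map (H, W).
    """
    q = np.maximum(x @ w_x + g @ w_g + b, 0.0)  # ReLU(W_x x + W_g g + b)
    alpha = sigmoid(q @ w_psi + b_psi)          # one coefficient per pixel, in (0, 1)
    return x * alpha[..., None], alpha


# Illustrative sizes and random weights (hypothetical, not the paper's configuration)
rng = np.random.default_rng(0)
H, W, C_x, C_g, C_int = 8, 8, 16, 32, 8
x = rng.standard_normal((H, W, C_x))
g = rng.standard_normal((H, W, C_g))
gated, alpha = attention_gate(
    x, g,
    w_x=0.1 * rng.standard_normal((C_x, C_int)),
    w_g=0.1 * rng.standard_normal((C_g, C_int)),
    b=np.zeros(C_int),
    w_psi=0.1 * rng.standard_normal(C_int),
    b_psi=0.0,
)
print(gated.shape, alpha.shape)
```

Because the gate produces one coefficient per pixel and multiplies it into every channel of the skip features, background regions can be down-weighted before the skip features are merged into the decoder.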

2. Materials and Methods

2.1. Remote Sensing Image Sources and Preprocessing

2.2. Dataset Preparation

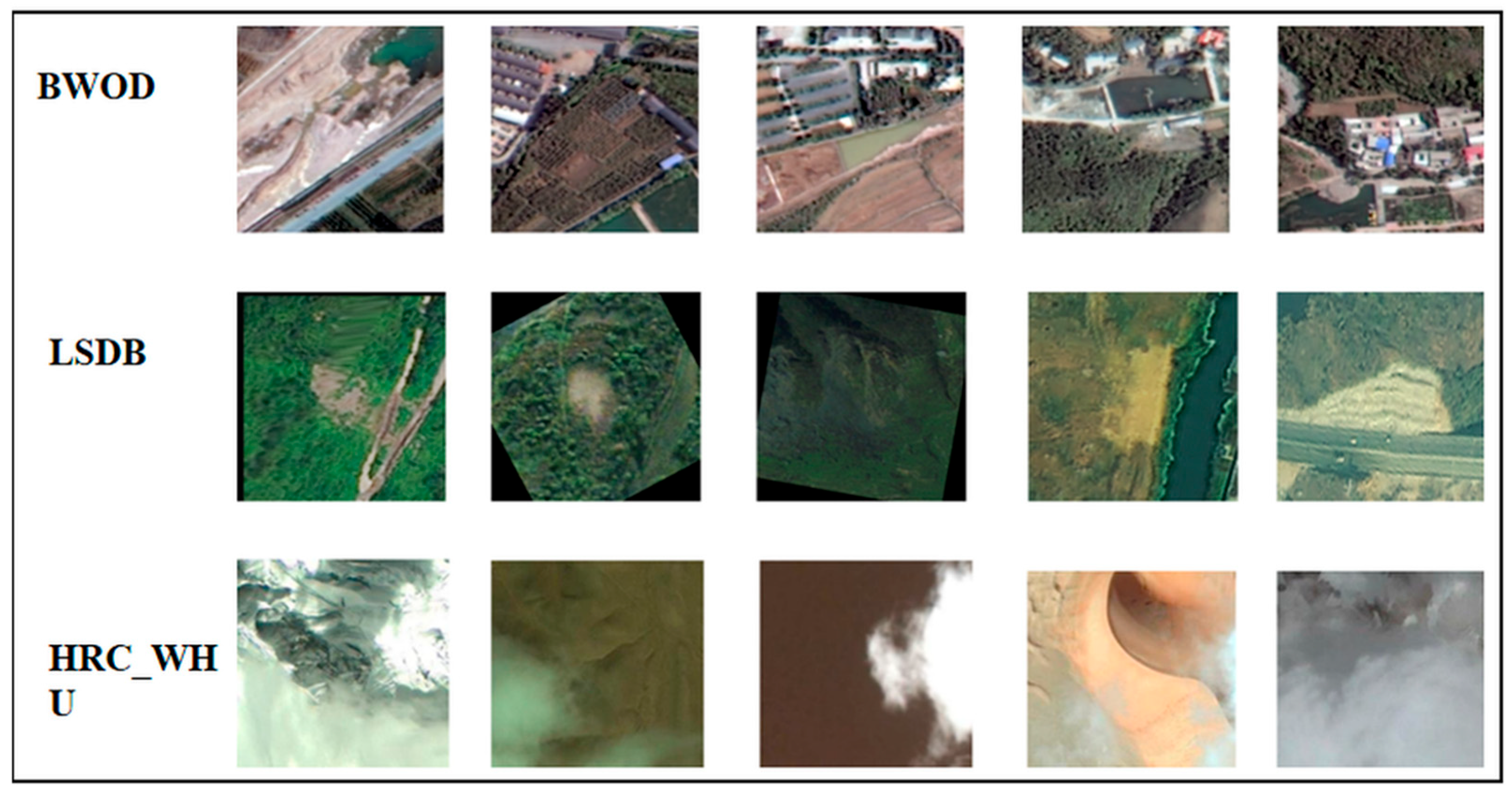

2.2.1. Datasets Before Augmentation

2.2.2. Augmented Datasets

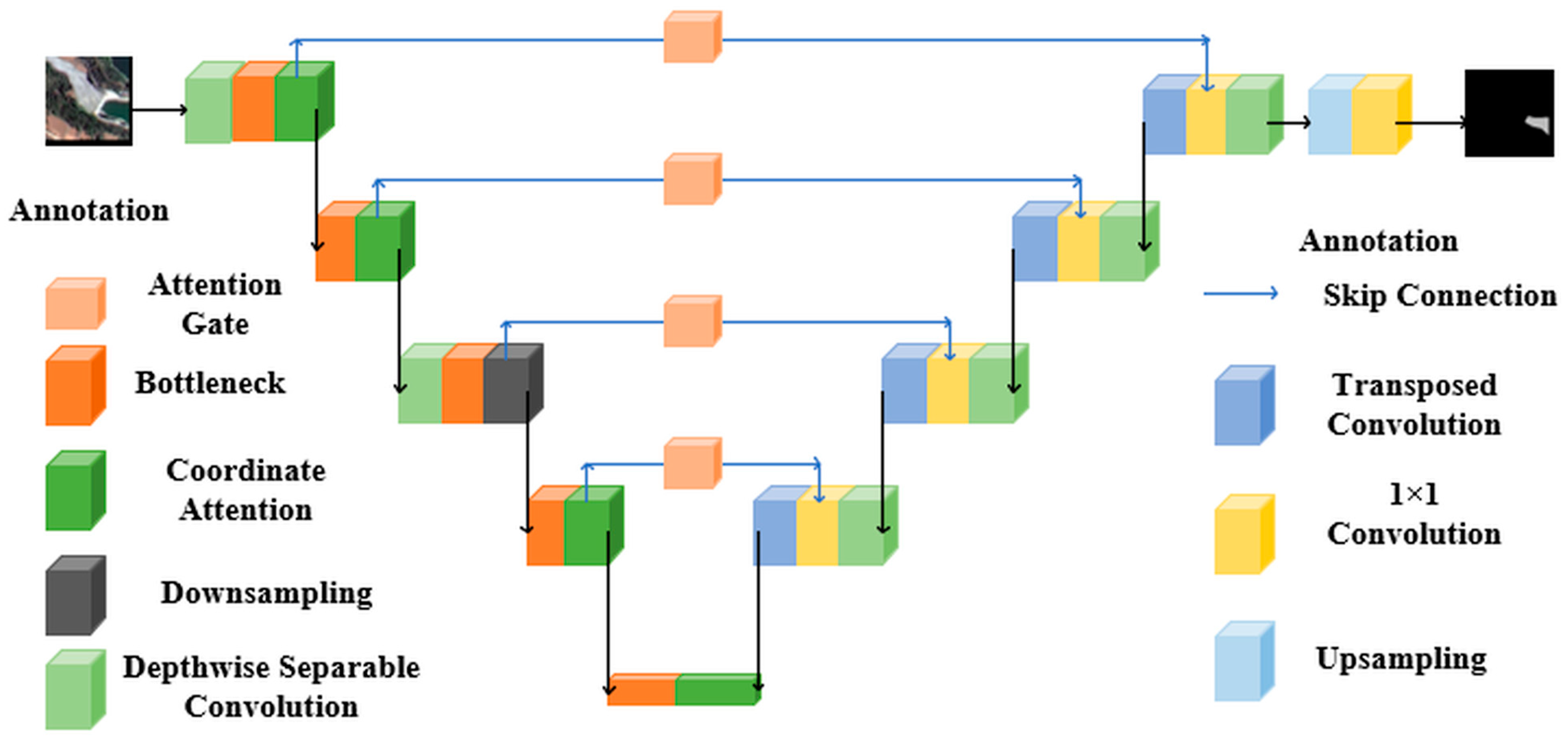

2.3. CAGM-Seg

2.3.1. Model Architecture

2.3.2. MobileNetV3-Large

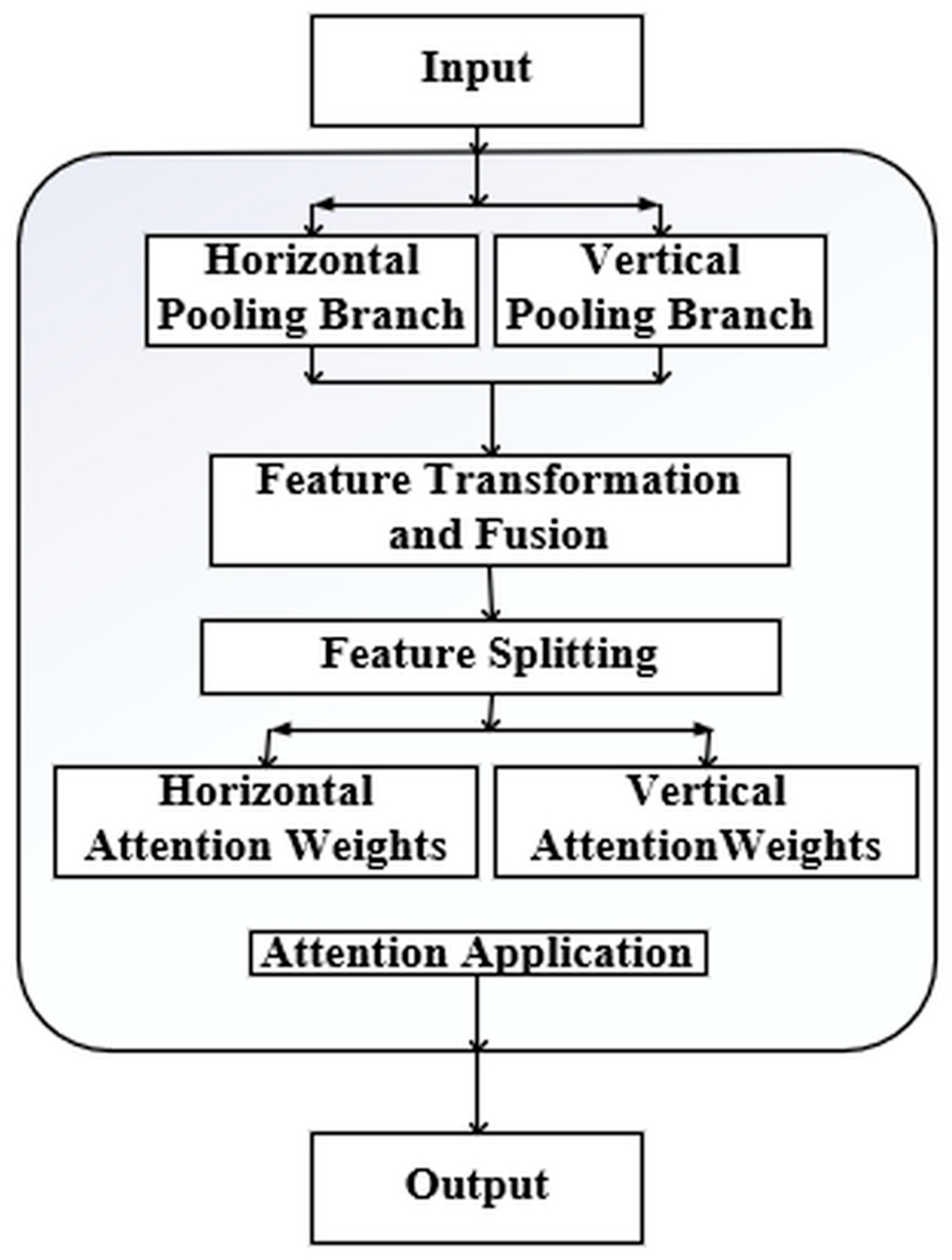

2.3.3. Coordinate Attention

2.3.4. Decoder

2.3.5. Attention Gate

2.3.6. Loss Function and Optimizer

2.4. Model Performance and Reliability Evaluation

2.4.1. Experimental Setup and Evaluation Metrics

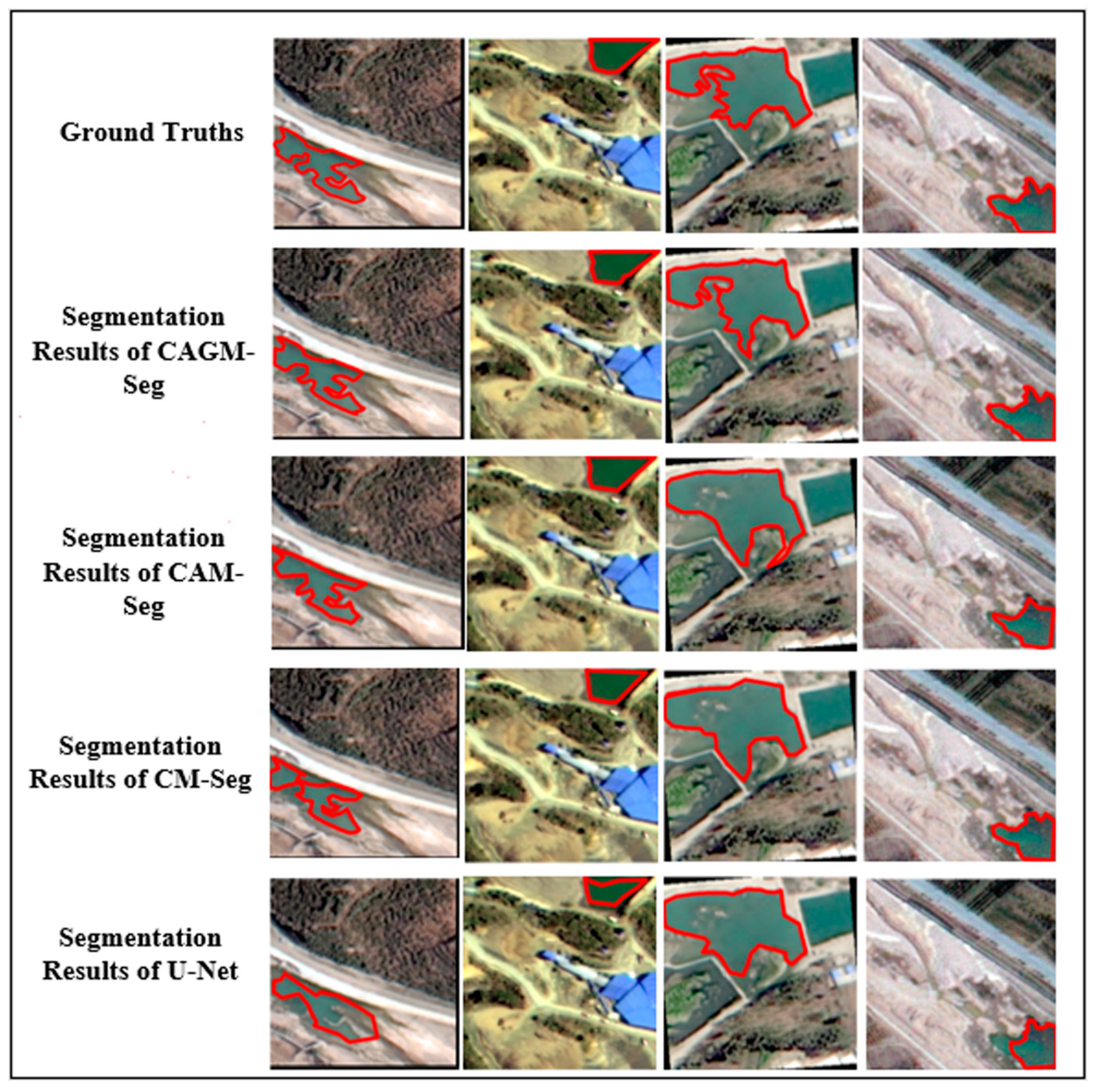

2.4.2. Comparative Experiments

2.4.3. Ablation Studies

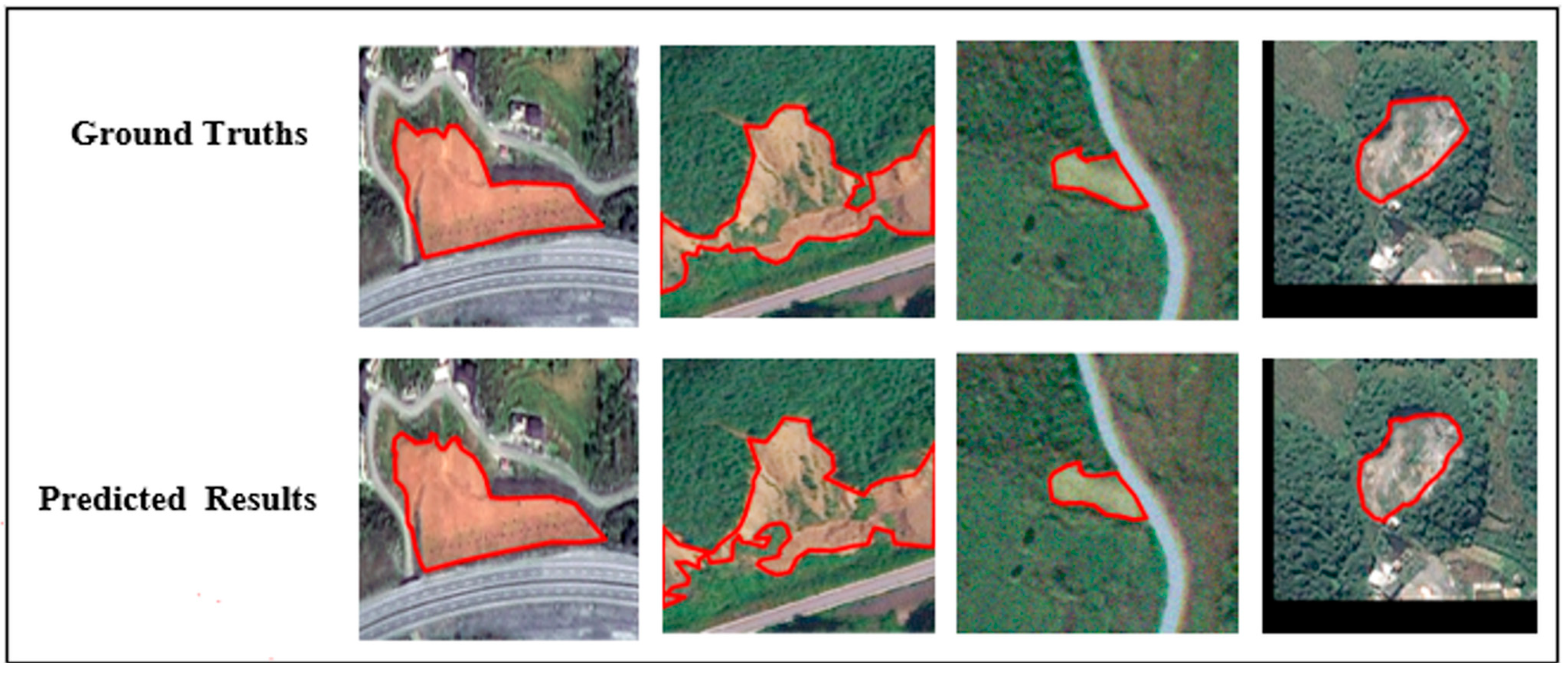

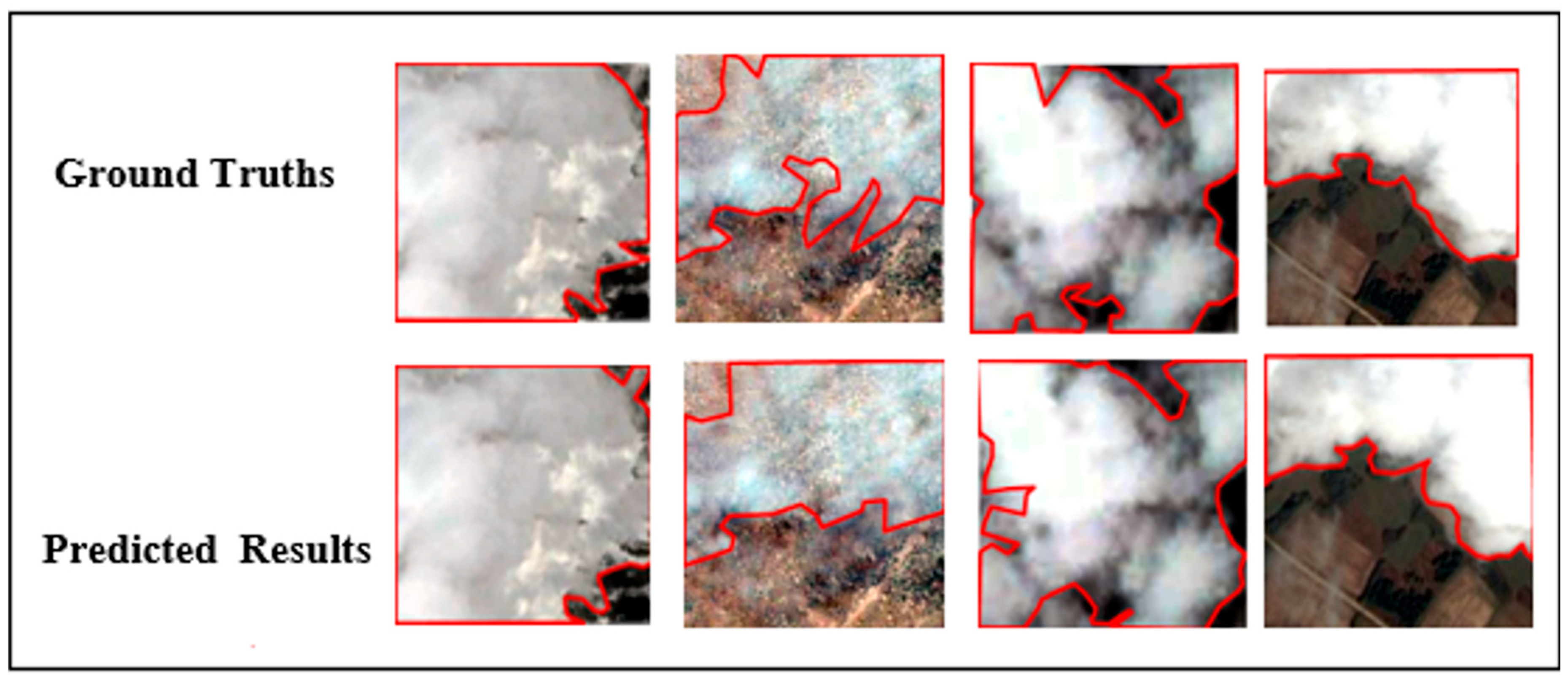

2.4.4. Generalization Capability Testing

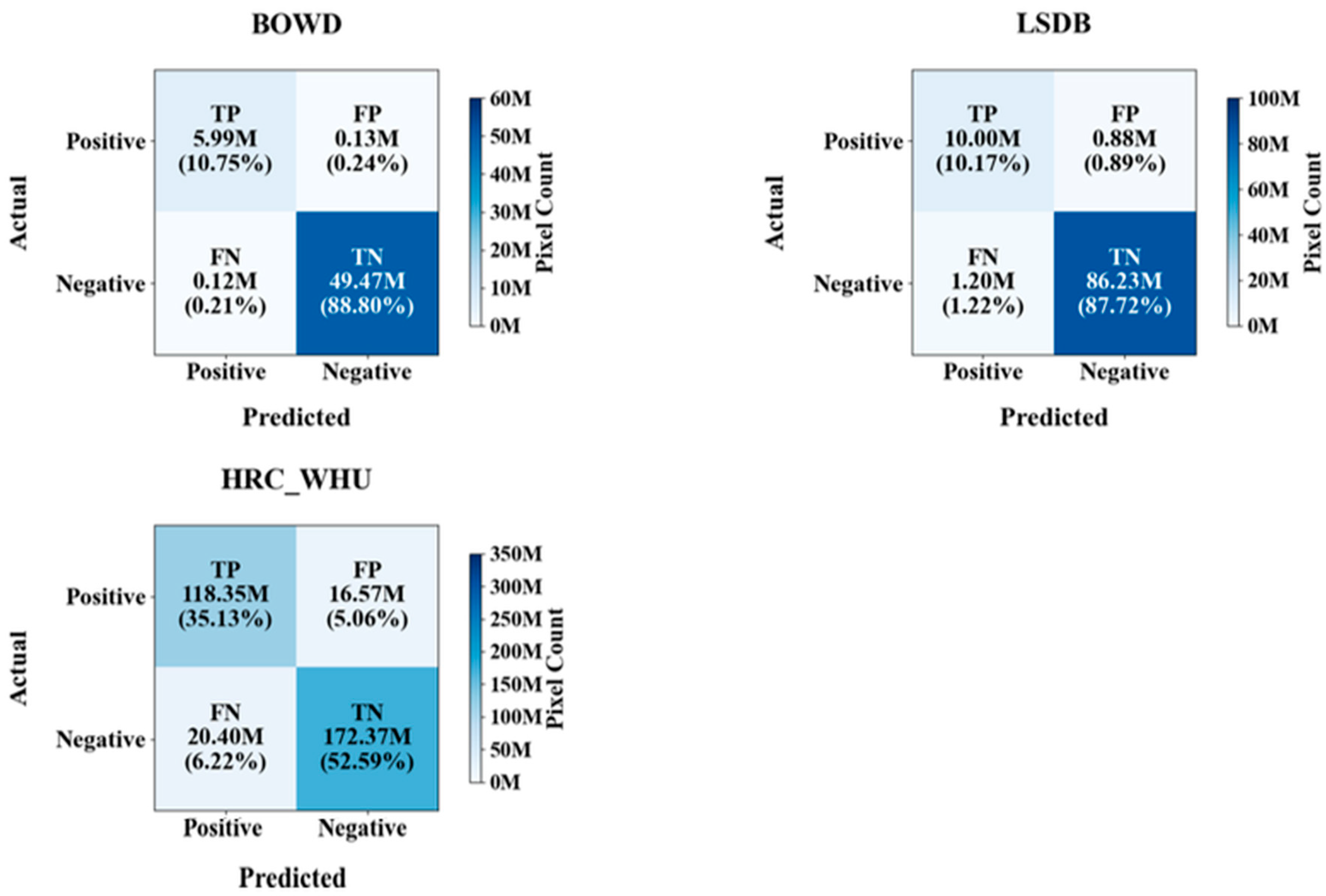

2.4.5. Confusion Matrix Analysis

3. Results

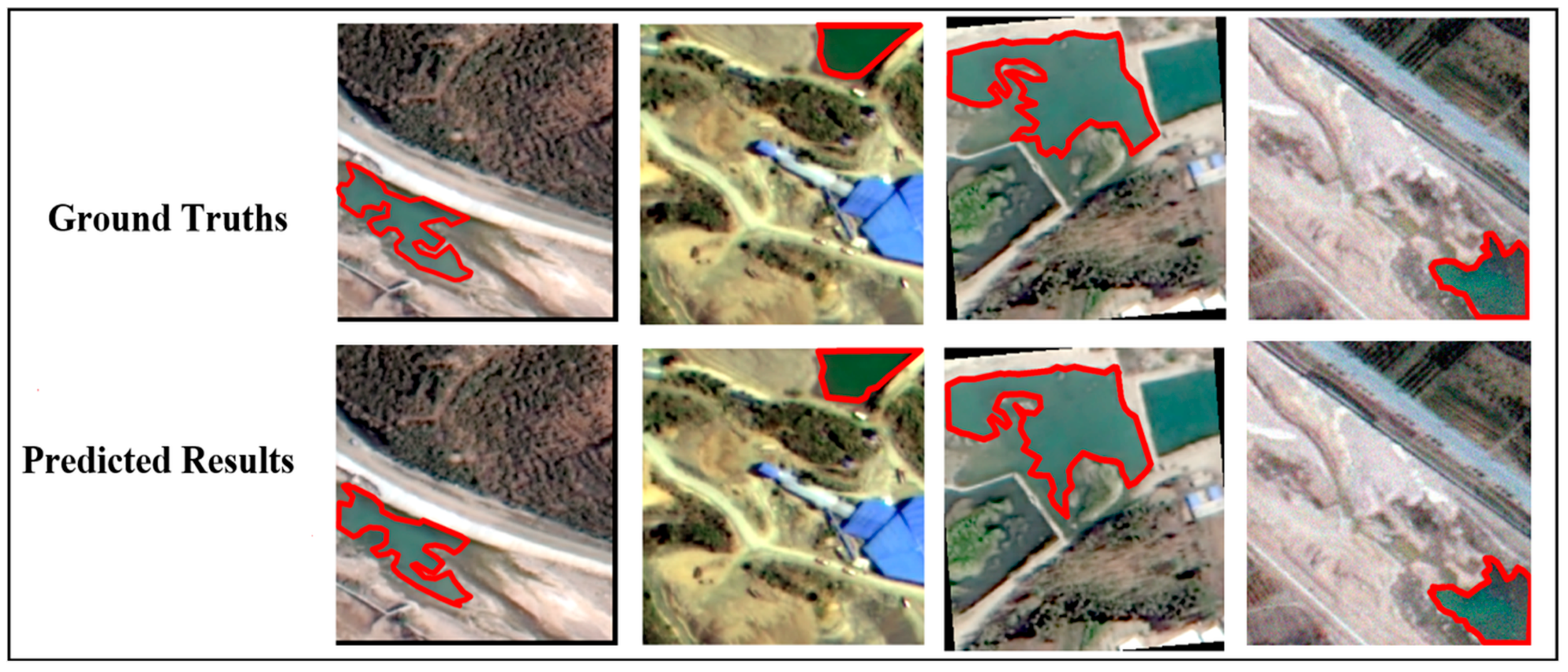

3.1. Comparative Experimental Results

3.2. Ablation Experiment Results

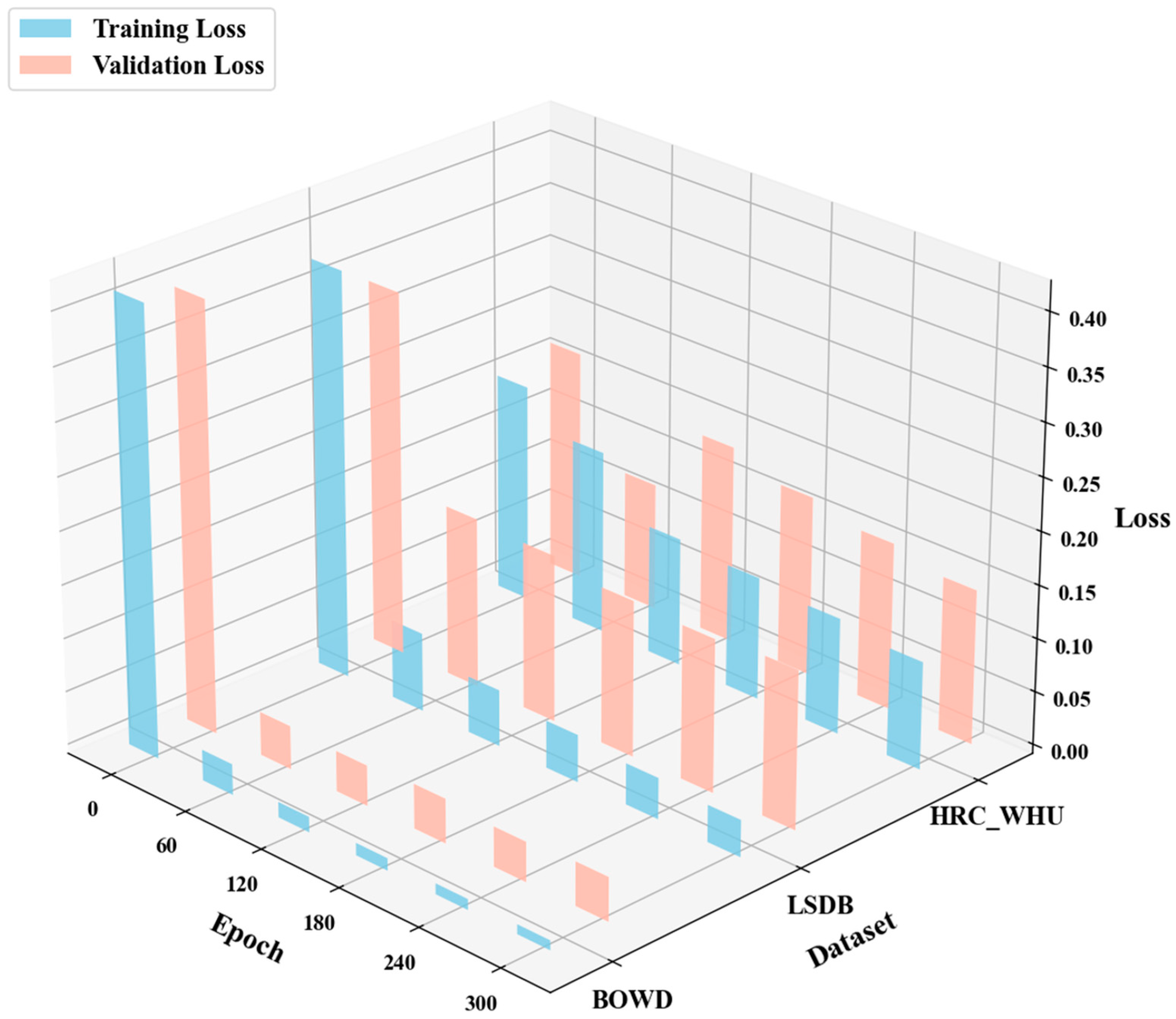

3.3. Generalization Capability Test Results

3.4. Confusion Matrix Analysis Results

4. Discussion

4.1. Mechanistic Analysis of CAGM-Seg’s Generalization and Recognition Capabilities

4.2. Limitations and Future Directions

- (1) Improve the model's adaptability to data from different sensor types and spatial scales, with an emphasis on stabilizing performance across resolutions, to strengthen generalization in diverse geographical settings worldwide.

- (2) Systematically investigate model compression and inference acceleration techniques that further reduce model complexity and computational cost without loss of recognition accuracy, enabling efficient deployment in resource-limited scenarios.

- (3) Develop more robust training mechanisms that are less sensitive to label noise and class imbalance, thereby improving model stability and reliability in complex real-world environments.

- (4) Advance the model toward a unified multi-task learning framework that supports joint processing of semantic segmentation, object detection, and change detection.

5. Conclusions

- (1) The CAGM-Seg model achieves a strong balance between recognition accuracy and lightweight design. In identifying black and odorous water bodies, it significantly outperforms mainstream models such as PIDNet, SegFormer, Mask2Former, and SegNeXt (IoU of 96.01% versus 84.77% to 90.94%). Its architecture reduces the total number of parameters by approximately 80% compared with the conventional U-Net (3,488,961 versus 17,263,042), meeting the dual objectives of high-precision recognition and operational efficiency.

- (2) Ablation experiments confirm the effectiveness and necessity of each core module. Along the progression from the baseline U-Net to the complete CAGM-Seg model, the CA module markedly improves positional awareness of small-object boundaries, the attention gate module improves multi-level feature fusion, and depthwise separable convolution substantially improves parameter efficiency without sacrificing performance. The complementary contributions of these modules support the rationale of the overall architecture and the chosen technical approach.

- (3) With 3,488,961 parameters in total (of which 517,009 are trainable), the model generalizes well across tasks, although its applicability has clear limits. For landslide detection (LSDB), it achieved an IoU of 82.77% and an F1-score of 90.57%, indicating effective knowledge transfer enabled by its efficient parameterization. For global multi-resolution cloud detection over extensive geographical areas (HRC_WHU), however, its performance declined moderately (IoU of 76.20%), indicating that its strengths lie mainly in fixed-resolution, regional-scale recognition tasks.
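Conclusion (2) attributes the improved positional awareness of small-object boundaries to the CA module. Coordinate attention (Hou et al., CVPR 2021) pools features separately along height and width. The resulting attention weights therefore keep a target's position along each axis instead of collapsing it into a single channel descriptor, as squeeze-and-excitation does. The minimal NumPy sketch below assumes a channels-first single feature map, uses hard-swish as the shared nonlinearity, omits batch normalization and uses hypothetical random weights. It illustrates the mechanism rather than reproducing the CAGM-Seg module.

```python
import numpy as np


def h_swish(z):
    # Hard-swish activation: z * ReLU6(z + 3) / 6
    return z * np.clip(z + 3.0, 0.0, 6.0) / 6.0


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def coordinate_attention(x, w1, w_h, w_w):
    """Coordinate attention on a (C, H, W) feature map; 1x1 convs as matmuls, BN omitted.

    w1: (C_mid, C) shared reduction; w_h, w_w: (C, C_mid) direction-specific expansions.
    """
    C, H, W = x.shape
    z_h = x.mean(axis=2)  # (C, H): average over width, keeps row position
    z_w = x.mean(axis=1)  # (C, W): average over height, keeps column position
    f = h_swish(w1 @ np.concatenate([z_h, z_w], axis=1))  # (C_mid, H + W)
    f_h, f_w = f[:, :H], f[:, H:]  # split back into the two directions
    a_h = sigmoid(w_h @ f_h)       # (C, H) per-row attention weights
    a_w = sigmoid(w_w @ f_w)       # (C, W) per-column attention weights
    return x * a_h[:, :, None] * a_w[:, None, :]


# Illustrative sizes, reduction ratio r and random weights are hypothetical
rng = np.random.default_rng(1)
C, H, W, r = 16, 12, 10, 4
x = rng.standard_normal((C, H, W))
y = coordinate_attention(
    x,
    w1=0.1 * rng.standard_normal((C // r, C)),
    w_h=0.1 * rng.standard_normal((C, C // r)),
    w_w=0.1 * rng.standard_normal((C, C // r)),
)
print(y.shape)
```

Each output value is the input scaled by a row weight and a column weight. A small object therefore receives emphasis only where its row and its column are both highlighted, which is the intuition behind the boundary-localization gain claimed for the CA module.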

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Time | Number of GF-2 Remote-Sensing Images (Unit: Scenes) |
|---|---|
| June | 8 |
| July | 0 |
| August | 1 |
| Total | 9 |

| Band | Band Number | Spatial Resolution (m) | Wavelength Range (μm) |
|---|---|---|---|
| Panchromatic | Pan | 0.8 | 0.45–0.9 |
| Multispectral | Band 1 (Blue) | 3.2 | 0.45–0.52 |
| Multispectral | Band 2 (Green) | 3.2 | 0.52–0.59 |
| Multispectral | Band 3 (Red) | 3.2 | 0.63–0.69 |
| Multispectral | Band 4 (NIR) | 3.2 | 0.77–0.89 |

| Augmentation Technique | BOWD Number of Uses | LSDB Number of Uses | HRC_WHU Number of Uses |
|---|---|---|---|
| Flip | 399 | 426 | 835 |
| Rotation | 428 | 405 | 773 |
| Translation | 434 | 404 | 765 |
| Brightness Adjustment | 313 | 316 | 643 |
| Contrast Adjustment | 341 | 320 | 641 |
| Sharpness Adjustment | 304 | 311 | 645 |
| Gaussian Noise | 321 | 279 | 577 |
| Combined Augmentation | 513 | 492 | 953 |
| Saturation Adjustment | 0 | 299 | 638 |
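The augmentation table lists the operations used and how often each was applied, but not their parameters. The NumPy sketch below shows one way to apply such operations to an image and its label mask together. Geometric operations (flip, rotation, translation) are applied to both arrays so the labels stay aligned, while photometric ones (brightness, contrast, Gaussian noise) change only the image. The probabilities, ranges, 90-degree rotation steps and zero-padded shifts are all assumptions. Sharpness and saturation adjustment are omitted for brevity.

```python
import numpy as np


def shift(a, dy, dx):
    """Translate `a` by (dy, dx) pixels along its first two axes, filling vacated pixels with 0."""
    out = np.zeros_like(a)
    h, w = a.shape[:2]
    src_y, dst_y = (slice(0, h - dy), slice(dy, h)) if dy >= 0 else (slice(-dy, h), slice(0, h + dy))
    src_x, dst_x = (slice(0, w - dx), slice(dx, w)) if dx >= 0 else (slice(-dx, w), slice(0, w + dx))
    out[dst_y, dst_x] = a[src_y, src_x]
    return out


def augment_pair(image, mask, rng):
    """image: (H, W, C) floats in [0, 1]; mask: (H, W) binary labels.

    Geometric ops are applied to both arrays so labels stay aligned;
    photometric ops change the image only. All probabilities and ranges are assumptions.
    """
    if rng.random() < 0.5:                          # horizontal flip
        image, mask = image[:, ::-1], mask[:, ::-1]
    k = int(rng.integers(0, 4))                     # rotation in 90-degree steps
    image, mask = np.rot90(image, k, axes=(0, 1)), np.rot90(mask, k)
    max_shift = image.shape[0] // 10                # translation by up to ~10% of the tile
    dy, dx = (int(v) for v in rng.integers(-max_shift, max_shift + 1, size=2))
    image, mask = shift(image, dy, dx), shift(mask, dy, dx)
    image = image * rng.uniform(0.8, 1.2)           # brightness
    mean = image.mean()
    image = (image - mean) * rng.uniform(0.8, 1.2) + mean     # contrast
    image = image + rng.normal(0.0, 0.02, size=image.shape)   # Gaussian noise
    return np.clip(image, 0.0, 1.0), mask


rng = np.random.default_rng(42)
img = rng.random((64, 64, 3))
msk = (rng.random((64, 64)) > 0.9).astype(np.uint8)
aug_img, aug_msk = augment_pair(img, msk, rng)
print(aug_img.shape, aug_msk.shape, int(msk.sum()), int(aug_msk.sum()))
```

Zero-padded shifting (rather than `np.roll`) avoids wrapping target pixels from one edge of the tile to the other, which would create label artifacts for small objects near tile borders.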

| Dataset Name | Data Source | Spatial Resolution (m) | Number of Samples | Total Pixel Count | Target Pixel Count | Mean Proportion of Target Pixels per Sample |
|---|---|---|---|---|---|---|
| BOWD | GF-2 | 0.8 | 850 | 55,705,600 | 6,104,011 | 10.96% |
| LSDB | Beijing-2 | 0.8 | 1500 | 98,304,000 | 11,200,996 | 11.36% |
| HRC_WHU | Google Earth | 0.5–15 | 5000 | 327,680,000 | 138,746,618 | 42.34% |
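The pixel counts in the dataset table can be cross-checked with a few lines of Python. Each dataset divides into exactly 65,536 pixels per sample, which is consistent with 256 × 256 tiles. This is an inference, since the tile size is not stated here. Because all samples then have the same size, the mean per-sample target proportion should equal the pooled share (target pixels divided by total pixels). The script reproduces 10.96% for BOWD and 42.34% for HRC_WHU, and gives 11.39% for LSDB against the reported 11.36%.

```python
# (samples, total pixels, target pixels) copied from the dataset table
DATASETS = {
    "BOWD": (850, 55_705_600, 6_104_011),
    "LSDB": (1500, 98_304_000, 11_200_996),
    "HRC_WHU": (5000, 327_680_000, 138_746_618),
}


def dataset_stats(samples, total_px, target_px):
    """Return pixels per sample, implied square tile side, and pooled target share (%)."""
    per_sample, remainder = divmod(total_px, samples)
    side = round(per_sample ** 0.5)
    assert remainder == 0 and side * side == per_sample, "samples are not equal-size square tiles"
    return per_sample, side, 100.0 * target_px / total_px


for name, row in DATASETS.items():
    per_sample, side, share = dataset_stats(*row)
    print(f"{name}: {per_sample} px/sample ({side} x {side}), target share {share:.2f}%")
```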

| Model Name | Precision | Recall | IoU | Accuracy | Kappa | F1 Score | Total Parameters | Trainable Parameters | Boundary F1 Score | FLOPs (G) |
|---|---|---|---|---|---|---|---|---|---|---|
| CAGM-Seg | 97.85% | 98.08% | 96.01% | 99.55% | 97.71% | 97.76% | 3,488,961 | 517,009 | 89.30% | 0.69 |
| PIDNet | 93.85% | 92.78% | 87.46% | 98.54% | 92.50% | 93.31% | 1,205,465 | 1,205,465 | 81.67% | 1.46 |
| SegFormer | 94.54% | 95.79% | 90.94% | 98.95% | 94.65% | 95.25% | 3,056,578 | 3,056,578 | 86.47% | 0.78 |
| Mask2Former | 95.49% | 94.94% | 90.86% | 98.90% | 94.59% | 95.21% | 73,511,711 | 73,511,711 | 87.69% | 40.24 |
| SegNeXt | 95.40% | 88.38% | 84.77% | 98.21% | 90.76% | 91.76% | 4,308,450 | 4,308,450 | 84.57% | 9.14 |
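The comparison tables report precision, recall, IoU, accuracy, Kappa and F1, but their definitions are not reproduced in this text. The sketch below computes them from the pooled pixel counts of a binary (target versus background) confusion matrix. This is an assumption: if the paper averages per image or per class, its values will differ from pooled ones, and the pooled identity IoU = F1 / (2 - F1) need not hold for averaged scores. Boundary F1 is not covered. The confusion counts are a hypothetical toy example.

```python
def binary_metrics(tp, fp, fn, tn):
    """Pooled pixel-level metrics for a binary (target vs. background) segmentation."""
    n = tp + fp + fn + tn
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    iou = tp / (tp + fp + fn)
    accuracy = (tp + tn) / n
    # Cohen's kappa: agreement beyond what the row/column marginals predict by chance
    p_chance = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / n ** 2
    kappa = (accuracy - p_chance) / (1 - p_chance)
    return {"precision": precision, "recall": recall, "f1": f1,
            "iou": iou, "accuracy": accuracy, "kappa": kappa}


# Toy confusion counts (hypothetical): 1,000 pixels, 100 of them target
m = binary_metrics(tp=80, fp=10, fn=20, tn=890)
print({k: round(v, 4) for k, v in m.items()})
# For pooled counts, IoU and F1 are linked by IoU = F1 / (2 - F1)
print(abs(m["f1"] / (2 - m["f1"]) - m["iou"]) < 1e-12)
```

In this toy case accuracy (97%) is much higher than IoU (about 72.7%) because background pixels dominate. This is why IoU, F1 and Kappa are more informative than accuracy for small targets.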

| Model Name | Precision | Recall | IoU | Accuracy | Kappa | F1 Score | Total Parameters | Trainable Parameters | Boundary F1 Score | FLOPs (G) |
|---|---|---|---|---|---|---|---|---|---|---|
| CAGM-Seg | 97.85% | 98.08% | 96.01% | 99.55% | 97.71% | 97.76% | 3,488,961 | 517,009 | 89.30% | 0.69 |
| CAM-Seg | 95.18% | 95.52% | 91.11% | 98.98% | 94.77% | 95.35% | 3,856,493 | 884,541 | 85.77% | 1.32 |
| CM-Seg | 93.77% | 95.13% | 89.48% | 98.77% | 93.76% | 94.45% | 3,842,625 | 870,673 | 81.34% | 1.30 |
| U-Net | 81.53% | 87.78% | 73.22% | 96.48% | 82.53% | 84.54% | 17,263,042 | 17,263,042 | 67.32% | 80.07 |
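Conclusion (1) states that CAGM-Seg has about 80% fewer parameters than U-Net, and Conclusion (2) credits depthwise separable convolution with much of this parameter efficiency. The Python sketch below checks the 80% figure against the ablation table and shows where the savings come from. A standard k × k convolution needs k²·C_in·C_out weights. A depthwise k × k convolution followed by a pointwise 1 × 1 convolution needs k²·C_in + C_in·C_out, a ratio of 1/C_out + 1/k². The 3 × 3, 256-to-128-channel layer is a hypothetical example, not a layer taken from CAGM-Seg, and biases are ignored.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights of a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k, c_in, c_out):
    """Depthwise k x k conv (one filter per input channel) plus pointwise 1 x 1 conv (bias ignored)."""
    return k * k * c_in + c_in * c_out


# Hypothetical layer: 3 x 3 convolution from 256 to 128 channels
std = standard_conv_params(3, 256, 128)
dsc = depthwise_separable_params(3, 256, 128)
print(f"standard: {std:,}  separable: {dsc:,}  saving: {1 - dsc / std:.1%}")

# Totals from the ablation table
UNET_TOTAL, CAGM_TOTAL, CAGM_TRAINABLE = 17_263_042, 3_488_961, 517_009
reduction = 1 - CAGM_TOTAL / UNET_TOTAL
trainable_share = CAGM_TRAINABLE / CAGM_TOTAL
print(f"parameter reduction vs. U-Net: {reduction:.1%}")
print(f"trainable share of CAGM-Seg: {trainable_share:.1%}")
print(f"FLOPs, U-Net / CAGM-Seg: {80.07 / 0.69:.0f}x")
```

With these sizes the separable layer needs 35,072 weights instead of 294,912, about 88% fewer. The table totals give a reduction of about 79.8%, consistent with the "approximately 80%" stated in the Conclusions. Only about 14.8% of CAGM-Seg's parameters are trainable, so the remaining weights are presumably frozen, though the text here does not say which ones.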

| Dataset (Model) | Precision | Recall | IoU | Accuracy | Kappa | F1 Score | Boundary F1 Score |
|---|---|---|---|---|---|---|---|
| HRC_WHU (CAGM-Seg) | 87.72% | 85.30% | 76.20% | 88.72% | 76.81% | 86.48% | 73.27% |
| LSDB (CAGM-Seg) | 91.93% | 89.26% | 82.77% | 97.88% | 89.38% | 90.57% | 80.13% |
| HRC_WHU (SegFormer) | 85.09% | 88.27% | 86.41% | 89.06% | 85.19% | 85.73% | 82.24% |
| LSDB (SegFormer) | 79.41% | 80.46% | 76.39% | 83.11% | 71.56% | 80.01% | 81.65% |
| HRC_WHU (Mask2Former) | 85.94% | 95.45% | 81.77% | 89.01% | 85.13% | 85.70% | 76.57% |
| LSDB (Mask2Former) | 77.35% | 76.90% | 73.60% | 80.11% | 76.62% | 77.12% | 66.91% |
| HRC_WHU (PIDNet) | 84.47% | 83.50% | 78.71% | 88.69% | 87.94% | 83.98% | 73.45% |
| LSDB (PIDNet) | 77.90% | 77.01% | 72.59% | 81.79% | 76.78% | 77.45% | 68.57% |

| Resolution (m) | Number of Samples | Sample Percentage |
|---|---|---|
| 0.5–2 | 840 | 16.80% |
| 2–5 | 1260 | 25.20% |
| 5–10 | 1137 | 22.74% |
| 10–15 | 1763 | 35.26% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yao, H.; Li, Y.; Feng, W.; Zhu, J.; Yan, H.; Zhang, S.; Zhao, H. CAGM-Seg: A Symmetry-Driven Lightweight Model for Small Object Detection in Multi-Scenario Remote Sensing. Symmetry 2025, 17, 2137. https://doi.org/10.3390/sym17122137
