1. Introduction

The determination of the spectrum of a matrix $A$ is a fundamental problem in almost all spheres of science. In fact, if the eigenpairs are known, then they give a complete description of the associated linear operator. In most cases, the solution of the characteristic equation $\det(A - \lambda I) = 0$ is unmanageable for large matrix dimensions $n$. In this case, one resorts to numerical methods, for example, the

algorithm [1]. In some cases, it is only the location of the spectrum that is required, whilst in other instances, knowledge of the extreme eigenvalues is sufficient. Weinstein bounds [2] and Kato bounds [3] depend on an approximate eigenpair, which may be evaluated numerically. For real symmetric matrices, Lehmann’s method [4] provides both upper and lower bounds for the eigenvalues. The Temple quotient [5] provides a lower bound for the smallest eigenvalue of such a matrix and is a special case of Lehmann’s method. Brauer [6] provides a method to calculate the outer bounds for

for such matrices. The Rayleigh quotient [7] is often used to improve an eigenvalue estimate when an approximate eigenpair is known. The Gerschgorin theorem and ovals of Cassini [8] depend only on knowledge of the entries of a matrix, yet they yield powerful results, especially for large sparse matrices. Trace bounds use only the diagonal elements of a matrix; however, they can yield some very impressive bounds for the eigenvalues. These have been studied extensively by Wolkowicz and Styan [9]. Sharma et al. [10] extended and improved the work of Wolkowicz and Styan, whilst Singh et al. [11,12] generalized the work of the previous two authors by employing functions of a matrix. Recently, Singh et al. [13] have introduced a precursor to this article by using all matrix entries. Here we introduce an optimizing parameter which improves the inner bounds. Recently, there has been a resurgence in the effort to efficiently locate eigenvalue bounds for interval matrices [14] and symmetric tridiagonal matrices [15].

2. Theory

Let $A$ be an $n \times n$ real symmetric matrix, with eigenvalues $\lambda_1, \lambda_2, \ldots, \lambda_n$

, and denote the associated set of orthonormal eigenvectors by

. We shall assume that the eigenvalues are arranged in the order

Let $\langle \cdot, \cdot \rangle$ denote the standard inner product in $\mathbb{R}^n$.

Proof. Let

, where

, then

where

Thus, . The left hand side of (1) is proved in a similar manner. □
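The two-sided bound proved above can be checked numerically. The following is a minimal sketch, assuming that (1) is the usual statement that the Rayleigh quotient of a real symmetric matrix lies between its smallest and largest eigenvalues. The function name `rayleigh_quotient`, the random test matrix, and the sample count are illustrative choices and do not come from the text.

```python
import numpy as np

def rayleigh_quotient(A, x):
    """Return <Ax, x> / <x, x> for a nonzero vector x."""
    x = np.asarray(x, dtype=float)
    return float(x @ (A @ x)) / float(x @ x)

# Illustrative data (assumption): a random 6 x 6 real symmetric matrix.
rng = np.random.default_rng(0)
M = rng.standard_normal((6, 6))
A = (M + M.T) / 2

# eigvalsh returns the eigenvalues of a symmetric matrix in ascending order.
eigs = np.linalg.eigvalsh(A)
lam_min, lam_max = eigs[0], eigs[-1]

# Every sampled quotient should fall inside [lam_min, lam_max].
samples = [rayleigh_quotient(A, rng.standard_normal(6)) for _ in range(1000)]
print(lam_min <= min(samples), max(samples) <= lam_max)
```

Any single sample already gives an upper bound for the smallest eigenvalue and a lower bound for the largest one, which is the sense in which the later bounds are "inner" bounds.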

Lemma 1. If , where S is a subspace of and n is assumed to be even, then Let , where are orthogonal. An obvious parametric choice for and is the following:

Let

and

, where

are the standard unit vectors in

, with one in the

position and zeros elsewhere. We may thus write

Thus, if

, then

and

It may seem plausible that

and

may be chosen to maximize/minimize

f. However, this is not the case. For constants

and

, we have that

Thus,

, and since the unit ball in

S is independent of the two non-parallel vectors that span

S, it must be true that the extreme values of

f are independent of the choice of

and

of the form given by (2)–(3). It will therefore suffice to use the rather simpler form

where we have chosen the normalized form.

Theorem 2. Let be an orthonormal set in , where n is even; then, the extreme values of , , are given by

Proof. Differentiate (8) partially with respect to

and

to obtain

Setting

yields a linear system of equations, which may be cast in the matrix vector form

It is now obvious that the extreme values of f are the eigenvalues of the coefficient matrix in (9). The solution of the characteristic polynomial then yields (6). □
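The step from the stationarity conditions to the closed form can be written out explicitly. This is a sketch in assumed notation: the symbols $u, v$ for the orthonormal pair, $s, t$ for the coefficients, and the shorthand $a$, $b$, $c$ are chosen here for illustration and may differ from those used in (6), (8) and (9).

```latex
% Assumed notation: x = s u + t v with {u, v} orthonormal and A real symmetric.
f(s,t) = \frac{\langle A x, x\rangle}{\langle x, x\rangle}
       = \frac{a s^2 + 2 b s t + c t^2}{s^2 + t^2},
\qquad
a = \langle Au, u\rangle,\quad b = \langle Au, v\rangle,\quad c = \langle Av, v\rangle .

% Setting \partial f/\partial s = \partial f/\partial t = 0 gives a 2 x 2 eigenproblem:
\begin{pmatrix} a & b \\ b & c \end{pmatrix}
\begin{pmatrix} s \\ t \end{pmatrix}
= f \begin{pmatrix} s \\ t \end{pmatrix}.

% Its characteristic polynomial and roots:
f^2 - (a + c) f + (a c - b^2) = 0
\quad\Longrightarrow\quad
f_{\pm} = \frac{a + c}{2} \pm \frac{1}{2}\sqrt{(a - c)^2 + 4 b^2}.
```

Both roots are values of the Rayleigh quotient, so they lie between the smallest and largest eigenvalues of the matrix.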

We partition the matrix

into

blocks as follows:

If

and

are chosen as in (4) and (5), we have

where

is the sum of the elements of

. Similarly, we can show that

where

is the sum of the elements of

and

is the sum of the elements of

. Substituting (10)–(12) into (6), we have
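The substitution can be sketched in code. The sketch assumes that (4) and (5) are the normalized indicator vectors of the first and last $n/2$ coordinates, so that each inner product reduces to a block sum divided by $n/2$; this reading is inferred from the surrounding text, and the function name `block_sum_inner_bounds` is hypothetical.

```python
import numpy as np

def block_sum_inner_bounds(A):
    """Inner bounds from the sums of the (n/2) x (n/2) blocks of a symmetric A.

    Assumes u and v are the normalized indicator vectors of the first and
    last n/2 coordinates, so <Au,u> = s11/m, <Au,v> = s12/m, <Av,v> = s22/m.
    """
    A = np.asarray(A, dtype=float)
    n = A.shape[0]
    if A.shape != (n, n) or n % 2 != 0:
        raise ValueError("A must be square of even order")
    m = n // 2
    a = A[:m, :m].sum() / m   # block A11
    b = A[:m, m:].sum() / m   # block A12
    c = A[m:, m:].sum() / m   # block A22
    centre = (a + c) / 2
    radius = np.hypot((a - c) / 2, b)
    return centre - radius, centre + radius

# Illustrative 4 x 4 tridiagonal matrix (not one of the examples in the text).
T = np.array([[2, 1, 0, 0],
              [1, 2, 1, 0],
              [0, 1, 2, 1],
              [0, 0, 1, 2]], dtype=float)
low, high = block_sum_inner_bounds(T)
print(low, high)  # prints 2.5 3.5
```

For this matrix the block sums are 6, 1 and 6, and the extreme eigenvalues are roughly 0.382 and 3.618, so 2.5 is an upper bound for the smallest eigenvalue and 3.5 is a lower bound for the largest. Only entry sums are needed, with no eigenvalue solver.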

We now let the

component of

be replaced by a parameter

,

and

where

and

. For convenience, we shall write (6) as

where

,

, and

.

may be simplified as follows:

where

is the sum of the elements of the

row of

. Similarly, we can show the following results:

where

is the sum of the elements of the

row of

. Since

and

are functions of

, we may differentiate them with respect to $\alpha$ to show the following results:

Equation (18) is simplified to yield

In a similar manner, we may show that

Equation (20) may be simplified to the form

From (15) and (16), we have

To find the extreme values of

, (14) is differentiated with respect to

to yield the equation

Equation (23) simplifies to

Equation (24) is now multiplied by

and terms are grouped to yield

The terms in the brackets in Equation (25) can be simplified by using Equations (17), (19), (21), (22) and (25), written in the form

where

Linearizing

about

by using

and finding a zero which we shall call

yields

where

and

; thus,

is now a component in the vector

. From (6), we have

where

Note that

. Let

Thus,

is the sum of the

row of

. Similarly,

Thus,

is the sum of the

column of

. We may thus replace

by

,

by

,

by

,

by

,

by

,

by

, and

by

in our derivations. Thus, from (27)–(29), we have

The solution of

in (26) and

in (31) is easily effected by using a Newton method with starting values

and

given in (30) and (32). This yields single values for the parameter

that is used to optimize both

and

simultaneously using (14). However, this may result in the maximization of

and/or the minimization of

, clearly a situation that we do not desire. However, this routine is simple to implement and automatic. An alternative yet more accurate approach is to minimize

separately and maximize

separately by setting

In this case, an initial value of

chosen arbitrarily can lead to divergence of the Newton routine. It may also lead to the maximization of

or minimization of

. Recall that

is what complicates the issue. A workaround is to sketch the corresponding curves

and

to determine the appropriate initial guesses. This is unfortunately not readily automated. However, the preceding process yields superior results, as we will illustrate. In order to deal with the case of

n odd, we shall use a principal submatrix

of

by deleting the

row and column of

, where

. It is well known that

from the Cauchy interlacing theorem [

16]. In this case, we will be evaluating inner bounds for the submatrix, which are still bounds for the original matrix. The efficiency of this procedure is however restricted by the spectral distribution of

. Another possibility is to pad the original matrix to even order by adding a

row and

column,

so that the extreme eigenvalues of

are unaffected. Here

means to prepend the row and column,

means insert the row and column immediately after row one and column one of

, while

means append the row and column to

. The example for

is illustrated below:

Here refers to the trace of . The well known inequality ensures that the original extreme eigenvalues are not affected. Instead of the trace, one could use as .
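Both ways of handling odd order can be sketched as follows. The sketch assumes that the padded row and column are zero except for a diagonal entry equal to the mean of the diagonal, $\mathrm{tr}/n$, which always lies between the extreme eigenvalues; this reading is inferred from the text. The helper names `delete_row_col` and `pad_to_even` are hypothetical, and the indices are 0-based.

```python
import numpy as np

def delete_row_col(A, k):
    """Principal submatrix of A with row and column k removed."""
    A = np.asarray(A, dtype=float)
    return np.delete(np.delete(A, k, axis=0), k, axis=1)

def pad_to_even(A, p):
    """Insert a zero row and column before index p (p = 0 prepends,
    p = n appends) and set the new diagonal entry to trace(A) / n."""
    A = np.asarray(A, dtype=float)
    n = A.shape[0]
    B = np.insert(np.insert(A, p, 0.0, axis=0), p, 0.0, axis=1)
    B[p, p] = np.trace(A) / n
    return B

# Illustrative data (assumption): a random 5 x 5 real symmetric matrix.
rng = np.random.default_rng(2)
M = rng.standard_normal((5, 5))
A = (M + M.T) / 2
eigs_A = np.linalg.eigvalsh(A)

# Deletion: by interlacing, the submatrix spectrum stays inside [min, max] of A.
sub_eigs = [np.linalg.eigvalsh(delete_row_col(A, k)) for k in range(5)]

# Padding: the spectrum gains the single value trace(A) / n, so the extremes
# are unchanged, whatever the position p.
pad_eigs = [np.linalg.eigvalsh(pad_to_even(A, p)) for p in range(6)]
print(all(np.allclose(q, pad_eigs[0]) for q in pad_eigs))
```

Deletion bounds the eigenvalues of a submatrix, so its quality depends on how tightly the submatrix spectrum interlaces with the original one. Padding keeps the extreme eigenvalues exactly but changes the block sums used for the bounds.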

Example 1. Here we consider the matrix [17] given by

The solution for

and

using (26) and (31) is shown in

Table 1. From this, we can accept 2.328878 as an upper bound for

and 28.807700 as a lower bound for

. These are acceptable given the relative ease of computation. In order to obtain a superior lower bound, we solve (33) for optimal

and substitute into (14). These results are summarized in

Table 2. Similarly, to obtain a superior upper bound, we solve (34) for optimal

and substitute into (14). The corresponding results are summarized in

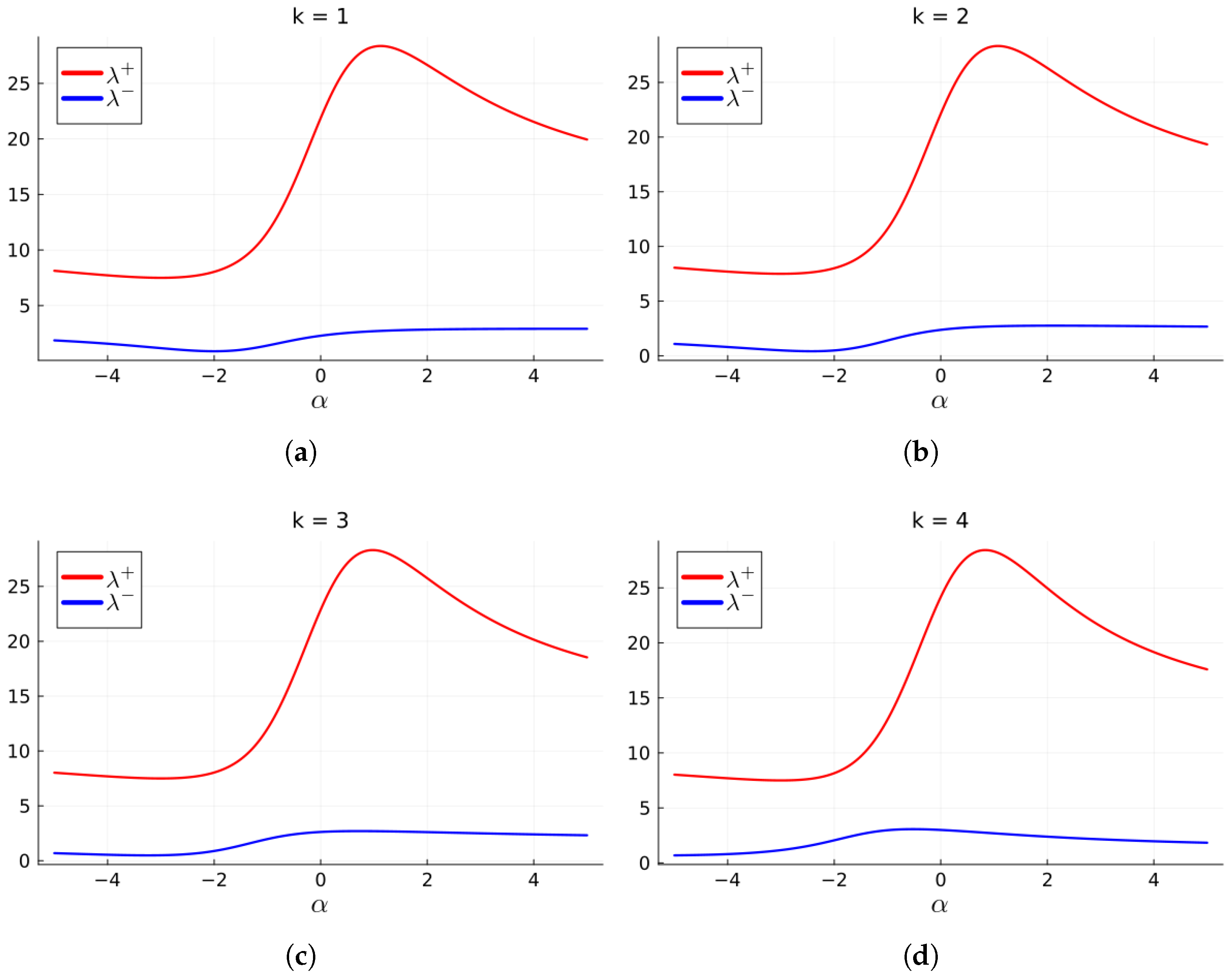

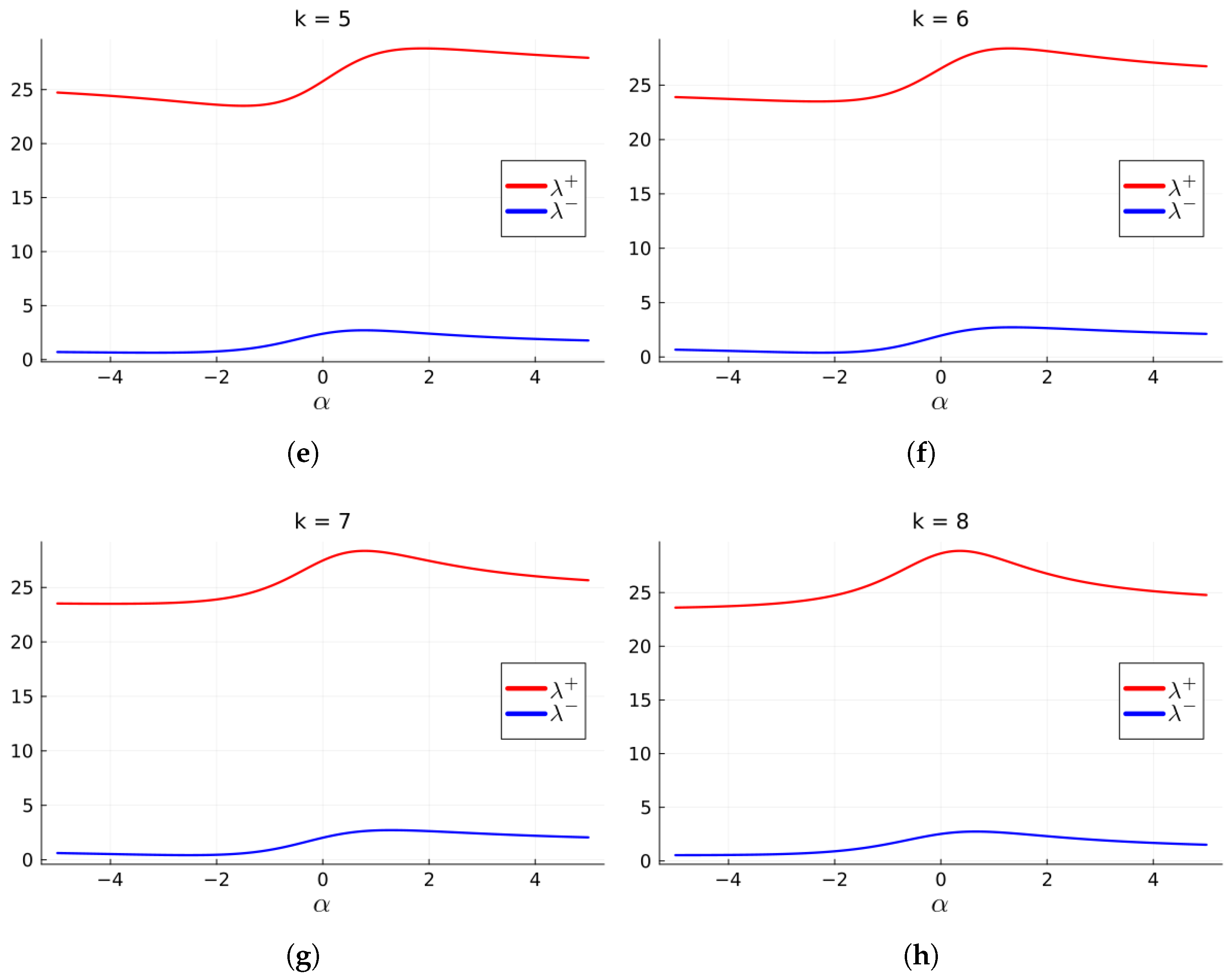

Table 3. From these, we obtain the lower bound 0.394002 and the upper bound 28.882906 However, the latter process requires a good enough starting value for

in the corresponding Newton routine, failing which there is divergence, a value of

that maximizes the lower bound, a value of

that minimizes the upper bound, or convergence to a complex zero. These starting values are obtained graphically for different values of

k, as depicted in

Figure 1. We shall thus not generate these results for further examples.
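The graphical search for starting values can be approximated by a simple scan over the parameter. The sketch below deliberately swaps the Newton routine for a grid scan, and it does not reproduce equations (14), (33) or (34). It assumes that the parameter replaces component $k$ of the indicator vector of the first $n/2$ coordinates. The names `ritz_pair` and `scan_alpha`, the grid, and the random test matrix are illustrative.

```python
import numpy as np

def ritz_pair(A, u, v):
    """Extreme Rayleigh quotients of A on span{u, v}, for orthogonal nonzero u, v."""
    u = u / np.linalg.norm(u)
    v = v / np.linalg.norm(v)
    a, b, c = u @ A @ u, u @ A @ v, v @ A @ v
    centre, radius = (a + c) / 2, np.hypot((a - c) / 2, b)
    return centre - radius, centre + radius

def scan_alpha(A, k, alphas):
    """Lower and upper values on span{u(alpha), v} for each alpha in alphas."""
    n = A.shape[0]
    m = n // 2
    if n % 2 or n < 4 or not 0 <= k < m:
        raise ValueError("need even n >= 4 and 0 <= k < n/2")
    u = np.zeros(n)
    u[:m] = 1.0
    v = np.zeros(n)
    v[m:] = 1.0
    pairs = []
    for alpha in alphas:
        u_alpha = u.copy()
        u_alpha[k] = alpha      # k lies in the first half, so u_alpha stays orthogonal to v
        pairs.append(ritz_pair(A, u_alpha, v))
    lows, highs = np.array(pairs).T
    return lows, highs

# Illustrative data (assumption): a random 8 x 8 real symmetric matrix.
rng = np.random.default_rng(1)
M = rng.standard_normal((8, 8))
A = (M + M.T) / 2

alphas = np.linspace(-5.0, 5.0, 1001)
lows, highs = scan_alpha(A, k=0, alphas=alphas)
best_upper_for_smallest = lows.min()    # smallest lower value over the grid
best_lower_for_largest = highs.max()    # largest upper value over the grid
```

Every value on the grid is a valid inner bound, so the scan cannot converge to the wrong kind of extremum or to a complex zero. Its accuracy is limited by the grid spacing, and the grid minimizer and maximizer could serve as Newton starting values.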

Example 2. Here we consider the pentadiagonal matrix of odd order [17] given by

For Example 2, we merely omit the last row and last column of the matrix to obtain an even-order submatrix. However, omission of the

row and column will achieve a similar purpose, though with different results. From

Table 4, we accept 0.089968 as an upper bound for

and 5.260788 as a lower bound for

. We additionally summarize in

Table 5 the best bounds obtained by deleting each of the other rows and corresponding columns. From

Table 5, the best choices for extreme inner bounds are obviously −0.066734 and 5.262994. In

Table 6, we summarize the effect of padding the matrix to even order. Several results are similar, since the padding position does not affect the spectrum of all such padded matrices. This is because each padded matrix is similar to the other by a permutation similarity transformation. From this table, −0.107061 and 5.095542 are acceptable bounds.

Example 3. Here we consider the dense matrix of order 9 given by , where , , and is a chosen diagonal matrix. Since is an involution, . The results using deletion are presented in Table 7. From this, the best bounds are −5.823999 and 12.713623. Results using padding are presented in Table 8. Here the accepted bounds are 1.980210 and 12.506700. A similar pattern of results is obtained as for padding in Example 2. If padding is to be used, then it is only necessary to prepend or append the padding to the matrix.

Example 4. Here we consider the Hilbert matrix of order 10 given by $h_{ij} = 1/(i + j - 1)$, $i, j = 1, \ldots, 10$. This is a highly ill-conditioned matrix with and .

The results are depicted in

Table 9. The minimum upper bound for

is accepted as 0.098587, while the maximum lower bound for

is given by 1.563673.
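For orientation, the Hilbert matrix and its unoptimized block-sum bounds can be generated in a few lines. This is a sketch only: it uses the plain block-sum formula under the assumption that the basis vectors are the normalized indicators of the first and last five coordinates. It applies no optimizing parameter, so its output is not expected to match Table 9.

```python
import numpy as np

n = 10
idx = np.arange(1, n + 1)
H = 1.0 / (idx[:, None] + idx[None, :] - 1)   # H[i-1, j-1] = 1 / (i + j - 1)

# Unoptimized inner bounds from the sums of the four 5 x 5 blocks.
m = n // 2
a = H[:m, :m].sum() / m
b = H[:m, m:].sum() / m
c = H[m:, m:].sum() / m
centre, radius = (a + c) / 2, np.hypot((a - c) / 2, b)
inner_low, inner_high = centre - radius, centre + radius

# Reference values from a dense symmetric eigensolver, for comparison only.
eigs = np.linalg.eigvalsh(H)
cond = np.linalg.cond(H)
print(f"inner bounds: {inner_low:.6f}, {inner_high:.6f}")
print(f"extreme eigenvalues: {eigs[0]:.3e}, {eigs[-1]:.6f}; condition number: {cond:.3e}")
```

The condition number illustrates why the smallest eigenvalue is hard to resolve numerically, whereas the block-sum bounds involve only sums of well-scaled entries.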