APDP-FL: Personalized Federated Learning Based on Adaptive Differential Privacy

Abstract

1. Introduction

- (1)

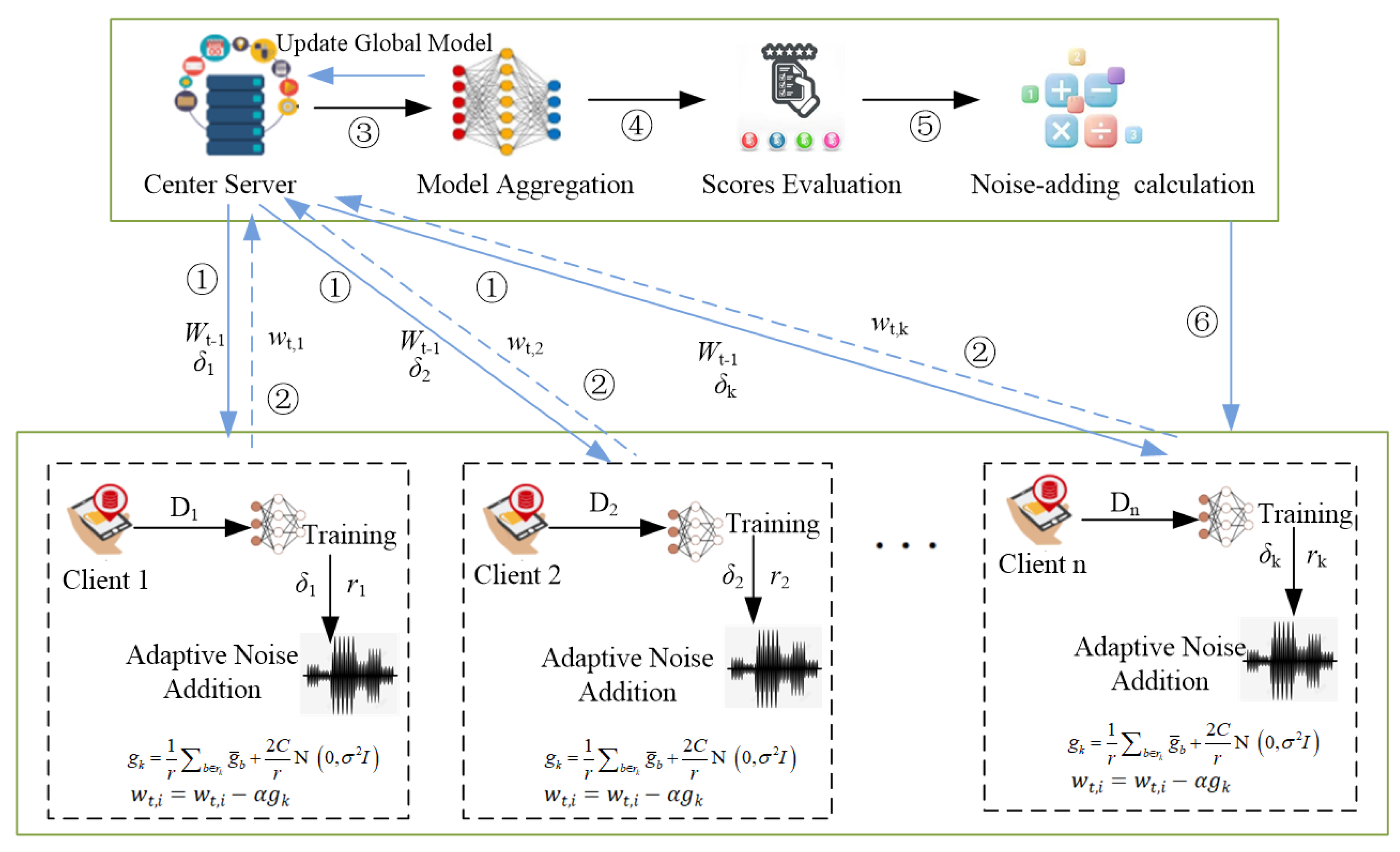

- This study propose the APDP-FL architecture to solve the three problems of personalized privacy requirements, accuracy reduction, and model convergence difficulties in FL.

- (2) An adaptive noise addition method is proposed that scores several indicators of the training process (gradient, loss, accuracy, and training round) in each round and then dynamically adjusts the magnitude of the noise added in the next round. This consumes less of the privacy budget and speeds up model convergence.

- (3) A personalized privacy protection strategy is designed that lets each client add noise according to its own privacy preference. This avoids the insufficient or excessive protection that some clients receive when all clients share the same privacy budget, and it realizes personalized privacy protection at the client level.

- (4)

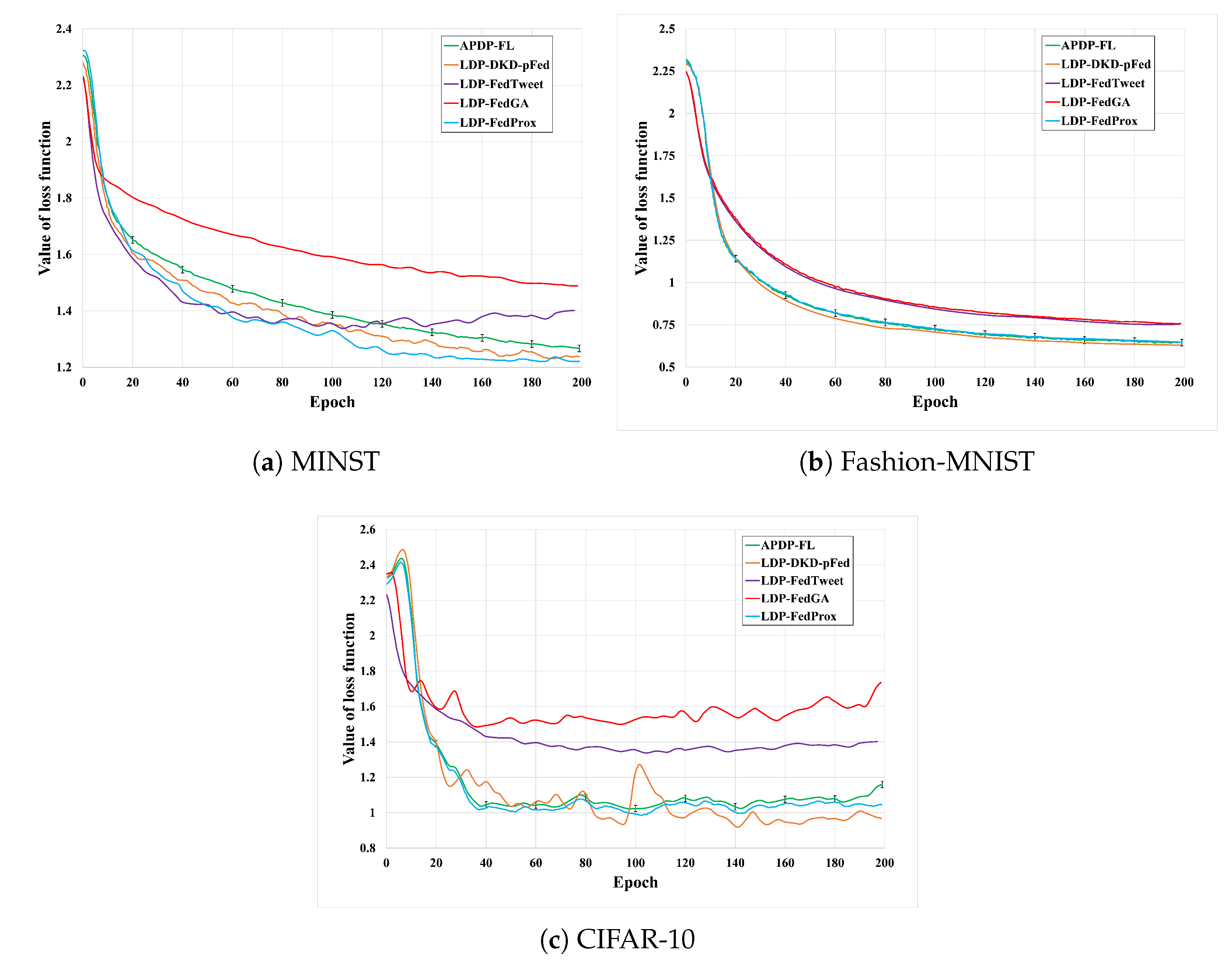

- Extensive experiments are carried out on three real datasets: MNIST, FMNIST, and CIFAR-10. The results show that APDP-FL is superior to state-of the-art baselines in terms of privacy protection, model accuracy, and convergence speed.

2. Related Work

2.1. Homomorphic Encryption

2.2. Secure Multi-Party Computation

2.3. Differential Privacy

3. Background and Problem Setting

3.1. Federated Learning

3.2. Differential Privacy

3.3. System Model

3.4. Attack Model

- (1) The ability to eavesdrop on the intermediate parameters exchanged between the central server and users over the public channel in order to infer users' private information.

- (2) The ability to compromise the central server and use its intermediate parameters or aggregation results to infer users' private information.

- (3) The ability to compromise one or more users and use their information to infer private data about other users, but not the ability to control all users at once.

3.5. Design Objectives

- (1) Data Privacy: Ensure that Attacker A cannot directly or indirectly use the local model parameters or the global model aggregated by the central server to infer users' private data.

- (2) Personalized Privacy Protection: Users differ in how sensitive they consider their data, so the scheme should allow every participant to set its own privacy parameters at the beginning of training to meet its personalized privacy protection needs.

- (3) Model Accuracy: The server provides noise addition coefficients to participating users, and the scheme must ensure that the noise added in each round meets privacy protection needs while degrading model accuracy as little as possible.

4. Adaptive Personalized Differential Privacy Federated Learning Algorithm (APDP-FL)

4.1. Adaptive Noise Addition

- (1)

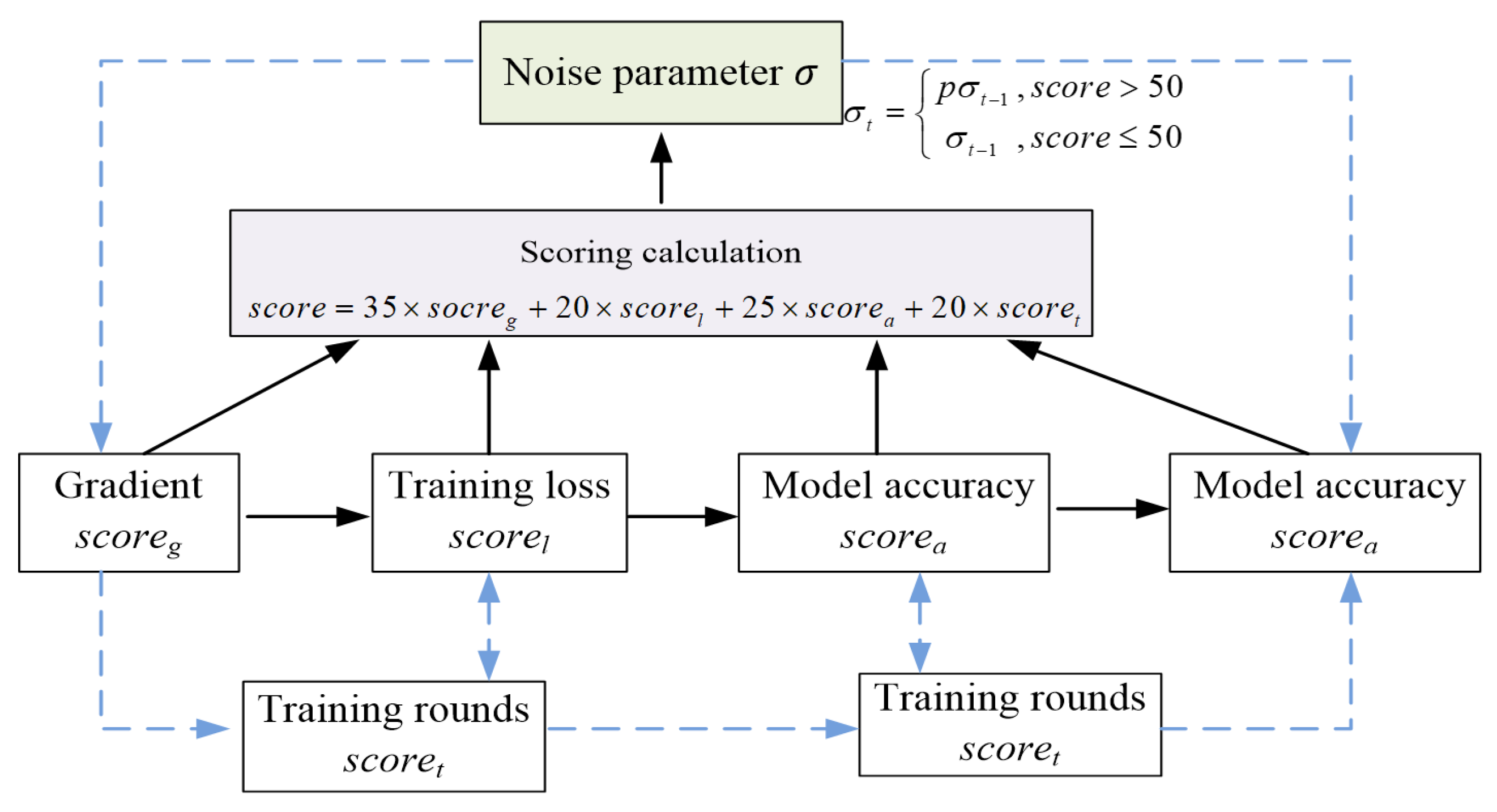

- Gradient: Let be the gradient biparadigm at the time of global model update in the t-th round of communication, g represents gradient, and let be the maximum value of the historical gradient biparadigm. The scoring of with the gradient as the scoring criterion is calculated using the following formula:To minimize added noise when the global model gradient paradigm is small, the opposite of the ratio of to plus 1 was used as the scoring criterion for the gradient, and the size of was limited to no more than .When the global model gradient paradigm is small, the model approaches convergence, and noise needs to be reduced to avoid interfering with optimization. Using the inverse of the ratio of the current gradient, two normal forms were used relative to the historical average plus 1 as the score. Dynamic denoising is as follows: The smaller the gradient, the higher the score, and the stronger the denoising. Limit the rating to ≤100% to ensure privacy protection and prevent noise from disappearing.

- (2)

- (2) Training Loss: This module compares the loss on the test set after the t-th round of communication with the loss after the previous round. If the loss of the current round is greater than that of the previous round, the noise added during this round may have been too large, so as little noise as possible should be added in the next round.

- (3) Model Accuracy: This module uses the accuracy of the global model on the test set after the t-th round of communication together with the accuracies from the four preceding rounds; the score is based on the mean model accuracy over these last five rounds (a denotes accuracy). If the accuracy of the latest round is lower than this mean, the model's accuracy is trending downward during training, so noise addition should be minimized to reduce its interference with model accuracy.

- (4) Training Rounds: A maximum number of communication rounds is set, and noise addition should decrease gradually as training proceeds; the training-round score is therefore computed from the current round and this maximum.

Combining the above scores with a different weight for each module yields the final score. This comprehensive scoring function adjusts noise intensity dynamically through a weighted fusion of the individual scores; the four weight coefficients (the last denoted δ) satisfy a constraint and can be allocated dynamically according to task requirements. Relying on this scoring function, noise can be added adaptively during training, with the noise parameter updated from round to round using a constant q, the noise reduction coefficient, which represents the proportion by which noise addition is reduced. The process of calculating the noise parameters is shown in Figure 2.
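The scoring and noise-update procedure described above can be sketched as follows. Because the exact formulas are not reproduced in this text, every functional form here is an assumption made for illustration: the gradient score is taken as 1 / (1 + ratio) capped at 1, the loss and accuracy scores are simple 0/1 indicators, the round score is t / T, the four weights (named alpha, beta, gamma, delta here, with delta taken from the text) are assumed to sum to 1, and the update `sigma * (1 - (1 - q) * S)` is a hypothetical rule under which a full score shrinks the noise by the factor q.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class ScoreWeights:
    # Hypothetical weights for the gradient, loss, accuracy and round modules.
    # Assumed to be non-negative and to sum to 1.
    alpha: float = 0.25
    beta: float = 0.25
    gamma: float = 0.25
    delta: float = 0.25


def gradient_score(grad_norm: float, grad_norm_history: list[float]) -> float:
    """Assumed form 1 / (1 + ratio): smaller gradients give higher scores."""
    g_max = max(grad_norm_history + [grad_norm])  # historical maximum L2 norm
    ratio = grad_norm / g_max if g_max > 0 else 0.0
    return min(1.0, 1.0 / (1.0 + ratio))  # cap mirrors the 100% limit in the text


def loss_score(loss_t: float, loss_prev: float) -> float:
    """Assumed indicator: 1 if the loss rose since the previous round, else 0."""
    return 1.0 if loss_t > loss_prev else 0.0


def accuracy_score(acc_last_five: list[float]) -> float:
    """Assumed indicator: 1 if the latest accuracy is below the five-round mean."""
    return 1.0 if acc_last_five[-1] < mean(acc_last_five) else 0.0


def round_score(t: int, max_rounds: int) -> float:
    """Assumed linear growth with the round index, so noise shrinks over time."""
    return min(1.0, t / max_rounds)


def combined_score(s_g: float, s_l: float, s_a: float, s_r: float,
                   w: ScoreWeights) -> float:
    """Weighted fusion of the four module scores."""
    return w.alpha * s_g + w.beta * s_l + w.gamma * s_a + w.delta * s_r


def next_sigma(sigma: float, score: float, q: float) -> float:
    """Hypothetical update: score 1.0 scales sigma by q; score 0.0 keeps it."""
    return sigma * (1.0 - (1.0 - q) * score)
```

For example, with a gradient norm of 0.5 against a historical maximum of 2.0, a rising loss, an accuracy dip below the five-round mean, and round 50 of 100, the combined score is 0.825, and an initial noise scale of 5 with q = 0.90 shrinks to about 4.59 for the next round.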

4.2. Personalized Privacy Protection

4.3. APDP-FL Training Process

4.3.1. Server-Side Training Process

Algorithm 1. APDP-FL server-side algorithm

4.3.2. Client-Side Training Process

Algorithm 2. APDP-FL client-side algorithm

5. Performance Analysis

5.1. Privacy Protection

- (1) Membership Inference Attack (MIA): Attackers use model outputs (such as prediction confidence) to determine whether a given data record was used in training. For example, in medical federated learning, an attacker may infer whether a specific patient was part of the training set of a disease study. Differential privacy lowers the success rate of MIA by adding noise that blurs the correlation between individual records and the model. However, attackers may still infer membership from statistical differences in the model's outputs.

- (2) Reconstruction Attack: Attackers use model gradients or parameters to infer the original training data. For example, gradient inversion attacks use optimization algorithms to reconstruct image data from gradients; gradient clipping and noise addition make such reconstruction harder. If the noise intensity is insufficient, however, attackers may still approximate the real data through repeated optimization.

- (3) Model Inversion Attack: Attackers query the model's API to infer the distribution of the training data and generate samples that resemble it. For example, in medical federated learning, attackers may use GANs to generate fake images similar to the training data. Although differential privacy reduces the distinguishability of individual records, it cannot completely prevent attackers from using generative models to simulate the data distribution.

- (4) Backdoor Attack: The attacker implants specific triggers in the training data, causing the model to output preset results for specific inputs. For example, in image classification, attackers may add tiny markers to the corners of an image, forcing the model to classify it into a specific category. Differential privacy provides no direct defense against backdoor attacks: attackers may bypass noise interference by poisoning data, especially when the triggers overlap with the normal data distribution.

5.2. Complexity Analysis

6. Experiment

6.1. Experimental Settings

- (1) Dataset Introduction: We set up a federated learning system in which multiple clients and a central server train collaboratively until the global model converges, at which point training stops. Three commonly used image classification datasets are used: MNIST, Fashion-MNIST, and CIFAR-10. MNIST consists of 60,000 training samples and 10,000 test samples; each image is 28 × 28 pixels and shows a handwritten digit from 0 to 9. Fashion-MNIST likewise consists of 60,000 training samples and 10,000 test samples of size 28 × 28, covering 10 categories of fashion products. CIFAR-10 contains 60,000 natural color images of size 32 × 32 divided into 10 classes.

- (2)

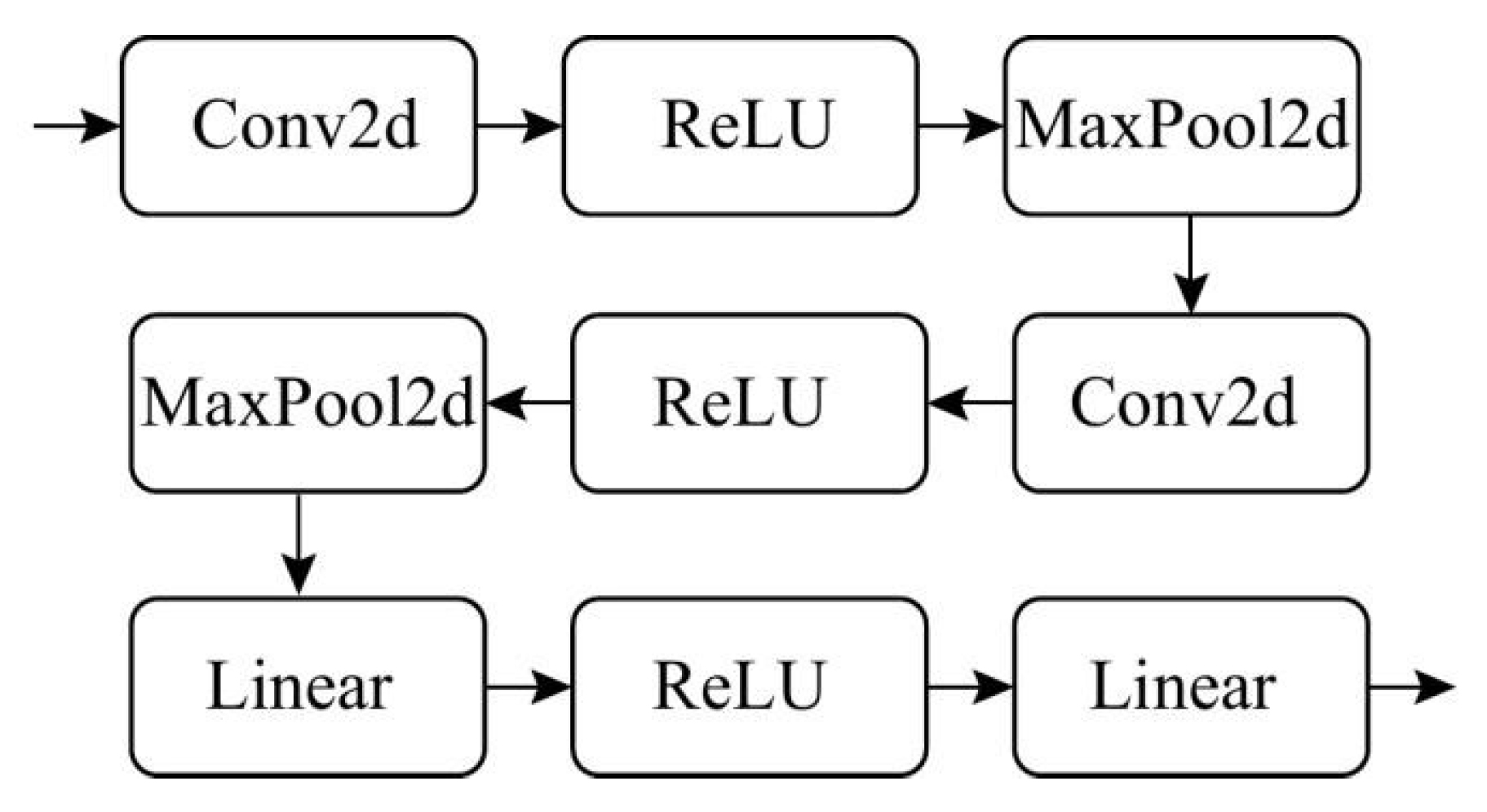

- Neural Network ArchitectureThe experiment used a two-layer convolutional neural network on both the FMNIST dataset and the CIFAR10 dataset, as shown in Figure 3. The model structures on the two datasets are the same, but due to different inputs and outputs, the number of parameters in the model is slightly different.

- (3)

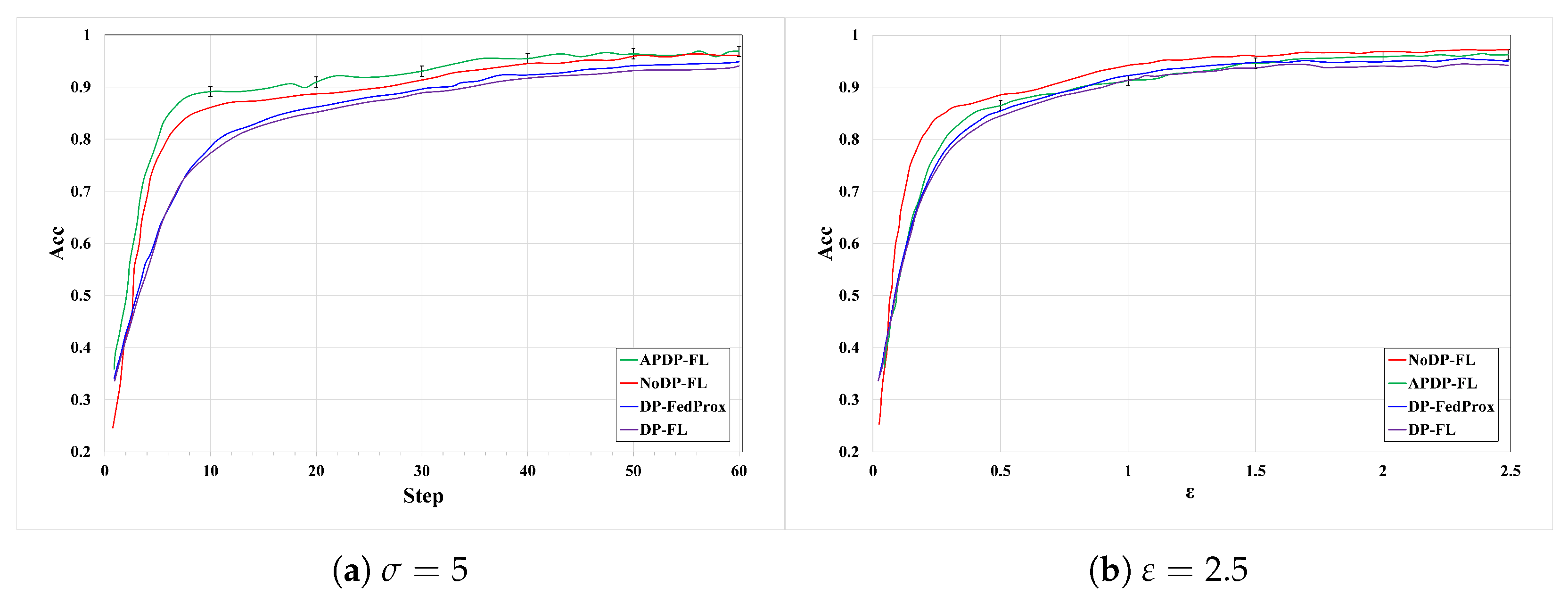

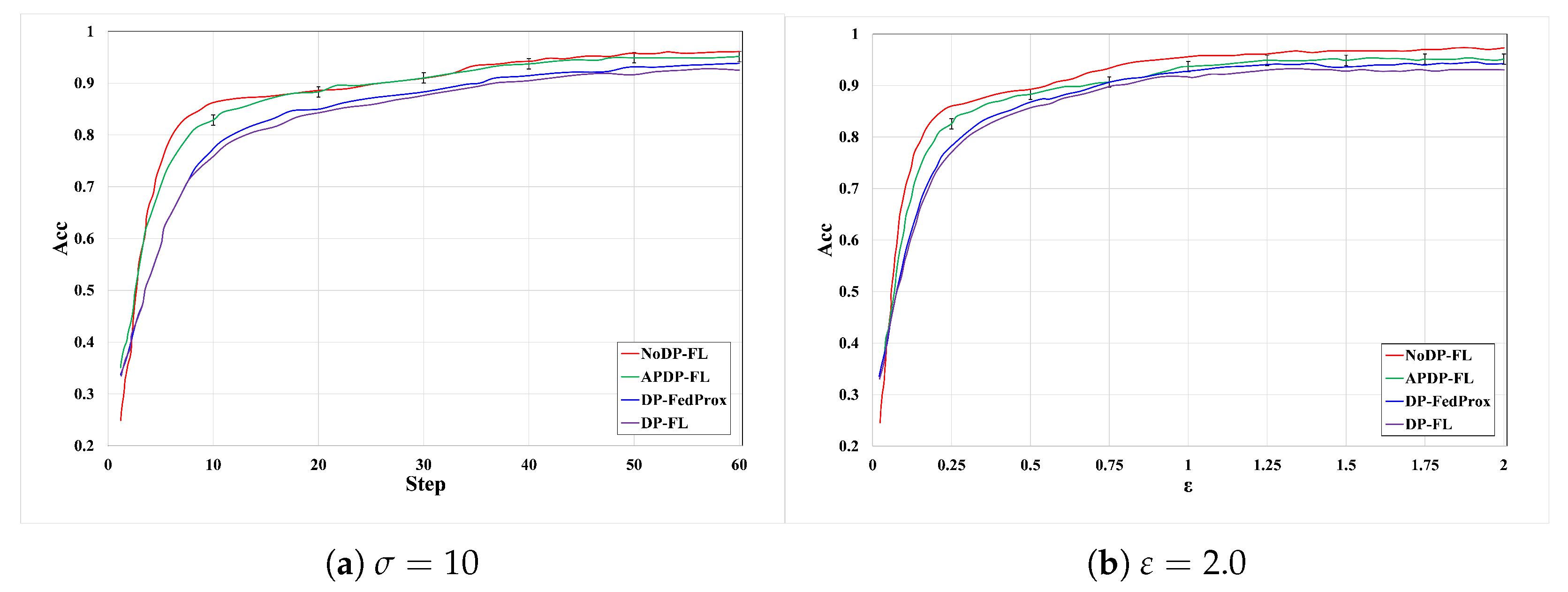

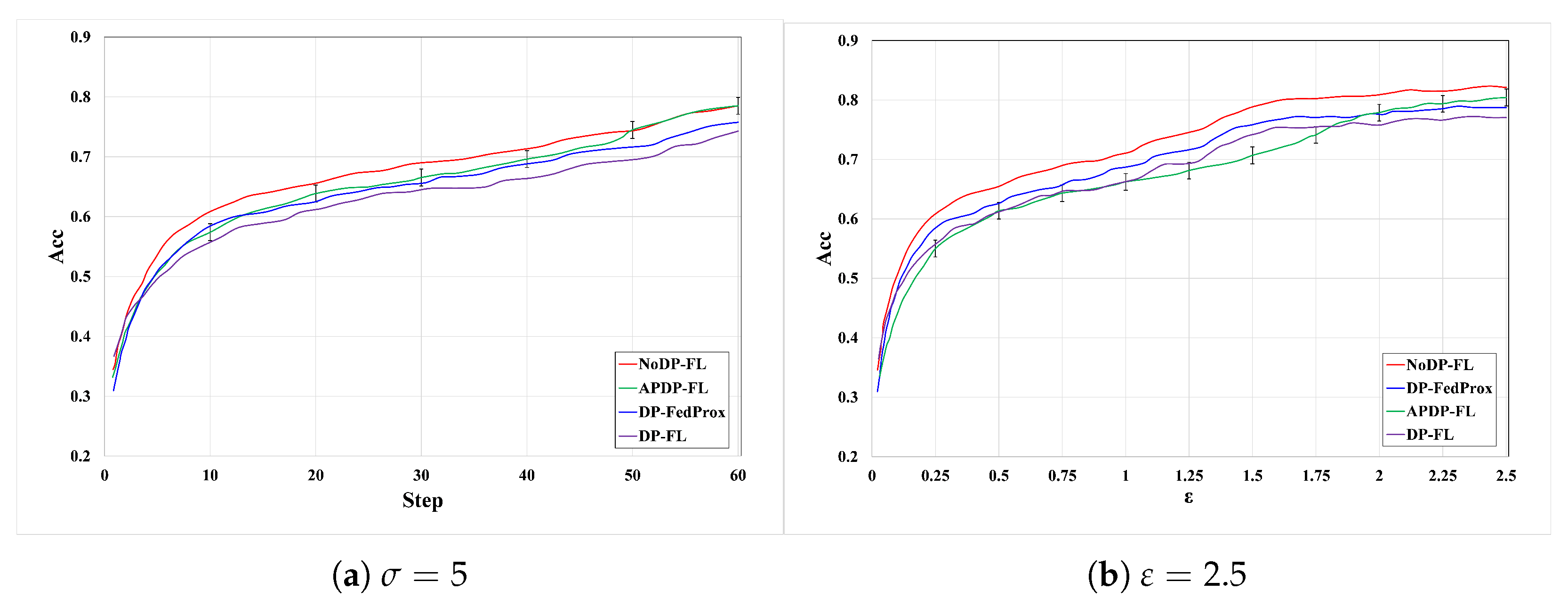

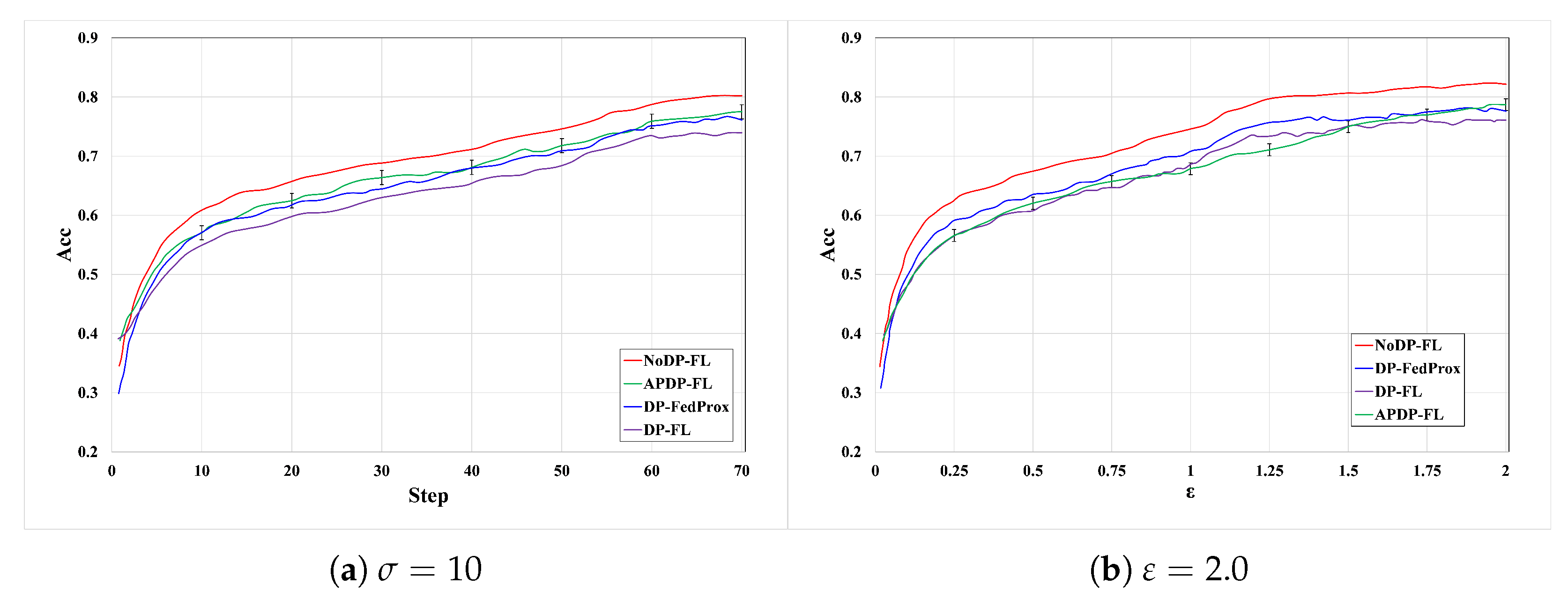

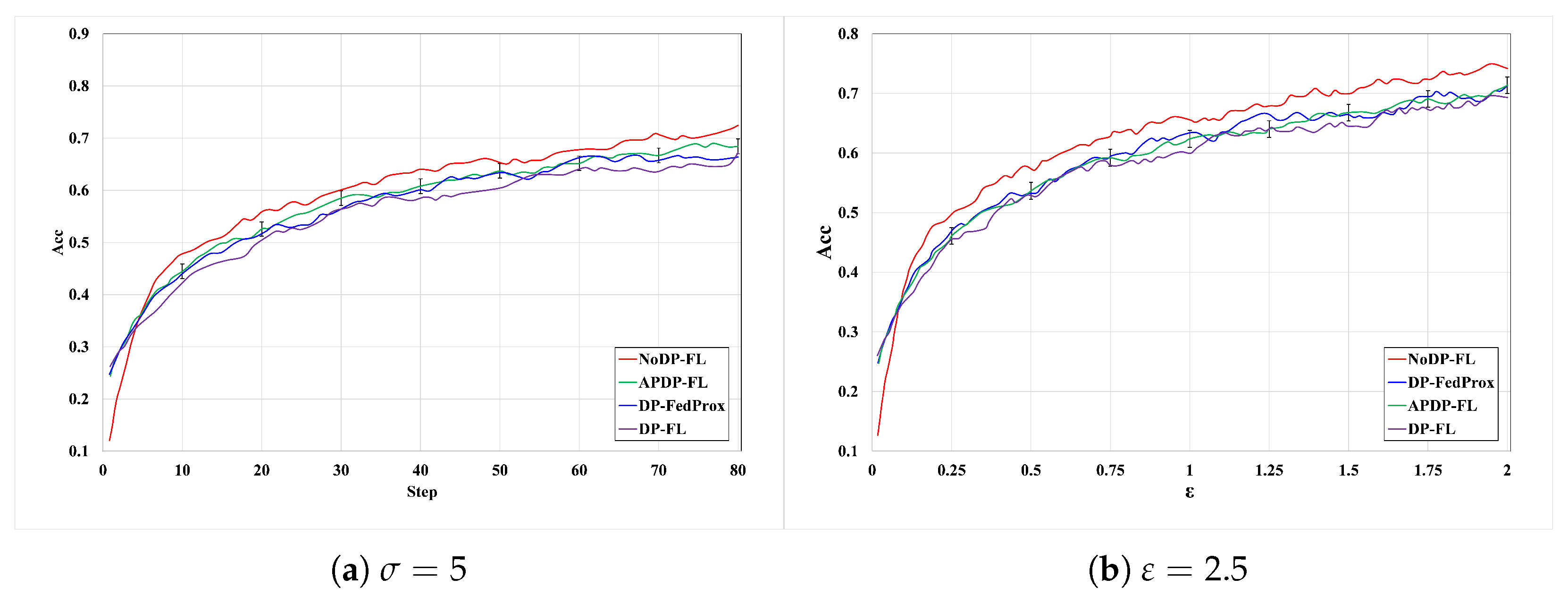

- Hyperparameter settingThe privacy preference levels selected by users are set to conform to normal distributions. The initial Gaussian noise parameters, , are 5 and 10; the learning rate is 0.05; the number of participating users is 100; the noise reduction coefficient q is 0.90/0.94/0.98 (MNIST/Fashion-MNIST/CIFAR-10); the client’s selection rate in each round is 0.1. The maximum number of training rounds is 100, and the number of local training iterations is 5/10/20 (MNIST/Fashion-MNIST/CIFAR-10).

- (4)

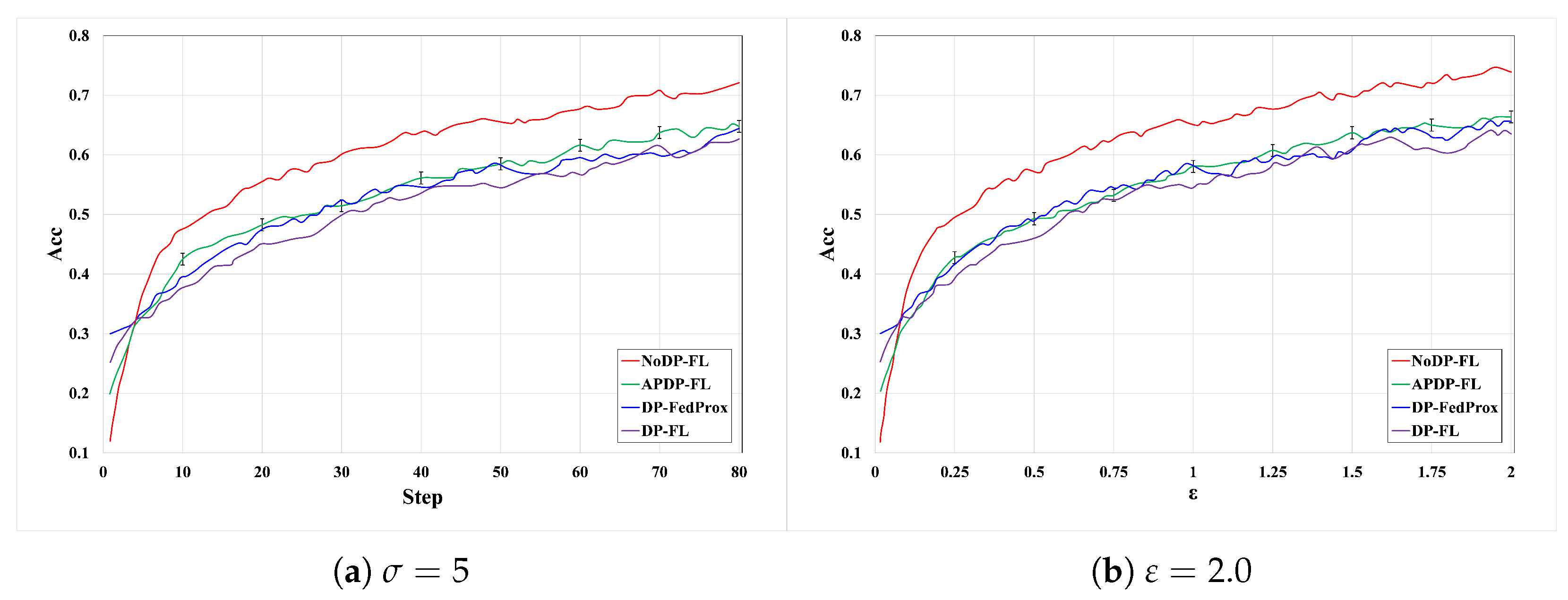

- Test metricsAccuracy is used as the evaluation index of model utility for comparison, which is calculated as follows:where is the number of correctly predicted samples, and is the number of samples in the whole test set. In order to visualize the progress of federated learning in the process of privacy budget consumption, this paper stretches the privacy budget consumed by APDP-FL and the comparison scheme to a uniform scale for graphical comparison.

- (5) Baselines: The comparison schemes are NoDP-FL, DP-FL, and DP-FLProx. NoDP-FL [29] is a federated learning scheme that adds no noise and therefore consumes no privacy budget; DP-FL [30] is a general differentially private federated learning scheme that adds the same noise for all clients; and DP-FLProx [31] applies differential privacy to FedProx for privacy preservation.

- (6) Evaluation Metrics: Main task accuracy (MTA) [32] measures the accuracy of a model on its main (benign) task and is used in this experiment to evaluate the performance of the defense method. It is computed from the labels that the benign model classifies correctly; the larger the MTA, the better the method performs.
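The experimental settings listed above can be collected into a small configuration object, with accuracy computed as the fraction of correctly predicted test samples. This is a hypothetical sketch: the class, field, and function names are assumptions, and only the numeric values come from the hyperparameter setting in item (3).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExperimentConfig:
    # Hypothetical container; values below come from the hyperparameter setting.
    dataset: str
    noise_reduction_q: float
    local_iterations: int
    num_clients: int = 100
    client_selection_rate: float = 0.1
    max_rounds: int = 100
    learning_rate: float = 0.05
    initial_sigmas: tuple[float, ...] = (5.0, 10.0)

    @property
    def clients_per_round(self) -> int:
        """Number of clients sampled in each communication round."""
        return round(self.num_clients * self.client_selection_rate)


CONFIGS = {
    "MNIST": ExperimentConfig("MNIST", 0.90, 5),
    "Fashion-MNIST": ExperimentConfig("Fashion-MNIST", 0.94, 10),
    "CIFAR-10": ExperimentConfig("CIFAR-10", 0.98, 20),
}


def accuracy(predictions: list[int], labels: list[int]) -> float:
    """Correctly predicted samples divided by the size of the test set."""
    if len(predictions) != len(labels) or not labels:
        raise ValueError("predictions and labels must be non-empty and equally long")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)
```

With 100 participating users and a selection rate of 0.1, each round samples 10 clients; predicting `[1, 2, 3, 4]` against labels `[1, 2, 0, 4]` gives an accuracy of 0.75.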

6.2. Experimental Performance Analysis

6.2.1. Experiments on the MNIST Dataset

6.2.2. Experiments on the Fashion-MNIST Dataset

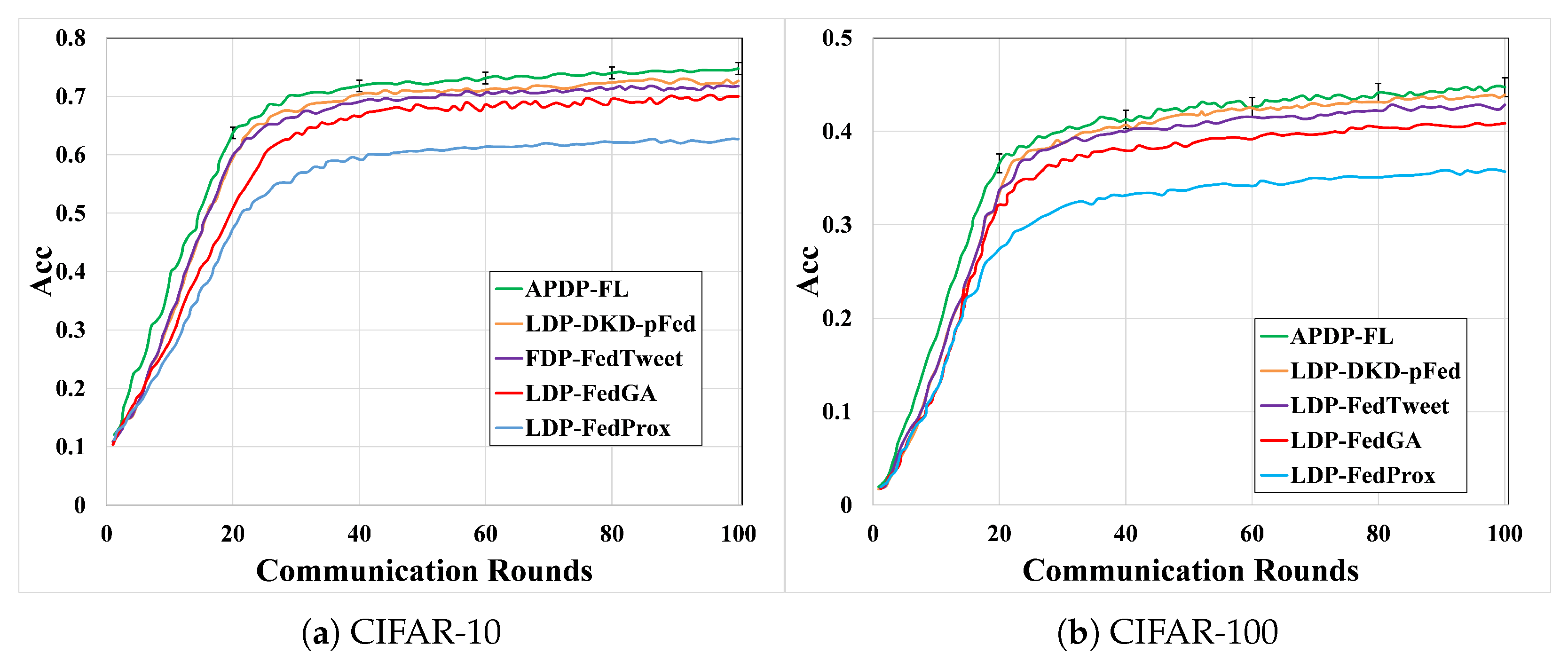

6.2.3. Experiments on the CIFAR-10 Dataset

6.2.4. Comparison with Other Methods

6.2.5. Model Convergence

6.2.6. Computational Overhead and Communication Rounds

- (1) Comparison of computational overhead between APDP-FL and DP-FL: Table 2 shows that, on non-IID data, APDP-FL obtains the global model by weighting local models and its optimization requires more local iterations, so its computational overhead is slightly higher than that of DP-FL. As the client data size increases (from 250 to 1000 samples per client) or the number of local training epochs increases (from 1 to 10), the efficiency gap between the two methods gradually narrows. For example, when the client data volume is N = 1000, the computational cost of APDP-FL is only about 3–8% higher than that of DP-FL.

- (2) Comparison of communication cost between baselines: Table 3 shows that, because noise parameters must also be transmitted, the communication cost of the privacy scheme is about 5% higher than that of the non-privacy scheme (420 vs. 400 MB). The personalized scheme raises the cost by a further 4.8% or so (440 vs. 420 MB) because it transmits more local adjustment information. When personalized requirements must be accommodated, the personalized scheme therefore costs slightly more but provides more precise privacy protection.
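The totals in Table 3 and the percentages quoted above follow from simple arithmetic, reproduced below as a small check. The function and variable names are hypothetical, and the factor of 20 is taken directly from Table 3 without assuming what it counts.

```python
# Per-unit client upload and server distribution sizes (MB) as listed in Table 3.
TABLE3_PER_UNIT_MB = {
    "APDP-FL": (11.0, 11.0),
    "FedAvg": (10.0, 10.0),
    "NoDP-FL": (10.5, 10.5),
    "DP-FL": (10.5, 10.5),
}
MULTIPLIER = 20  # the "x 20" factor shown in Table 3


def total_cost_mb(upload_mb: float, download_mb: float,
                  multiplier: int = MULTIPLIER) -> float:
    """Total communication = scaled client upload + scaled server distribution."""
    return upload_mb * multiplier + download_mb * multiplier


def relative_increase_pct(cost: float, baseline: float) -> float:
    """Percentage by which cost exceeds baseline."""
    return (cost - baseline) / baseline * 100.0


totals = {name: total_cost_mb(up, down) for name, (up, down) in TABLE3_PER_UNIT_MB.items()}
```

This yields totals of 440, 400, 420, and 420 MB; the privacy scheme's overhead over the 400 MB baseline is 5.0%, and the personalized scheme's overhead over 420 MB is about 4.76%, which rounds to the 4.8% quoted.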

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Sayal, A.; Jha, J.; Gupta, V.; Gupta, A.; Gupta, O.; Memoria, M. Neural networks and machine learning. In Proceedings of the 2023 IEEE 5th International Conference on Cybernetics, Cognition and Machine Learning Applications (ICCCMLA), Hamburg, Germany, 7–8 October 2023; IEEE: New York, NY, USA, 2023; pp. 58–63.

- Peddiraju, V.; Pamulaparthi, R.R.; Adupa, C.; Thoutam, L.R. Design and Development of Cost-Effective Child Surveillance System using Computer Vision Technology. In Proceedings of the 2022 International Conference on Recent Trends in Microelectronics, Automation, Computing and Communications Systems (ICMACC), Hyderabad, India, 28–30 December 2022; IEEE: New York, NY, USA, 2022; pp. 119–124.

- Liu, P.; Yuan, W.; Fu, J.; Jiang, Z.; Hayashi, H.; Neubig, G. Pre-train, prompt, and predict: A systematic survey of prompting methods in natural language processing. ACM Comput. Surv. 2023, 55, 1–35.

- Li, J.; Li, D.; Xiong, C.; Hoi, S. Blip: Bootstrapping language-image pre-training for unified vision-language understanding and generation. In Proceedings of the International Conference on Machine Learning, PMLR, Baltimore, MD, USA, 17–23 July 2022; pp. 12888–12900.

- Konečný, J.; McMahan, H.B.; Yu, F.X.; Richtárik, P.; Suresh, A.T.; Bacon, D. Federated learning: Strategies for improving communication efficiency. arXiv 2016, arXiv:1610.05492.

- Zhang, T.; Gao, L.; He, C.; Zhang, M.; Krishnamachari, B.; Avestimehr, A.S. Federated learning for the internet of things: Applications, challenges, and opportunities. IEEE Internet Things Mag. 2022, 5, 24–29.

- Alazab, M.; Rm, S.P.; Maddikunta, P.K.R.; Gadekallu, T.R.; Pham, Q.V. Federated learning for cybersecurity: Concepts, challenges, and future directions. IEEE Trans. Ind. Inform. 2021, 18, 3501–3509.

- Al-Huthaifi, R.; Li, T.; Huang, W.; Gu, J.; Li, C. Federated learning in smart cities: Privacy and security survey. Inf. Sci. 2023, 632, 833–857.

- Geiping, J.; Bauermeister, H.; Dröge, H.; Moeller, M. Inverting gradients-how easy is it to break privacy in federated learning? Adv. Neural Inf. Process. Syst. 2020, 33, 16937–16947.

- Paillier, P. Public-key cryptosystems based on composite degree residuosity classes. In Proceedings of the International Conference on the Theory and Applications of Cryptographic Techniques, Prague, Czech Republic, 2–6 May 1999; Springer: Berlin/Heidelberg, Germany, 1999; pp. 223–238.

- Zhao, C.; Zhao, S.; Zhao, M.; Chen, Z.; Gao, C.Z.; Li, H.; Tan, Y.a. Secure multi-party computation: Theory, practice and applications. Inf. Sci. 2019, 476, 357–372.

- Li, Y.; Yin, Y.; Gao, H.; Jin, Y.; Wang, X. Survey on privacy protection in non-aggregated data sharing. J. Commun. 2021, 42, 195–212.

- Hu, C.; Li, B. Maskcrypt: Federated learning with selective homomorphic encryption. IEEE Trans. Dependable Secur. Comput. 2024, 22, 221–233.

- Kumbhar, H.R.; Rao, S.S. Federated learning enabled multi-key homomorphic encryption. Expert Syst. Appl. 2025, 268, 126197.

- Wang, B.; Li, H.; Wang, J.; Guo, Y. Federated learning scheme for privacy-preserving of medical data. J. Xidian Univ. 2023, 50, 166–177.

- Cai, Y.; Ding, W.; Xiao, Y.; Yan, Z.; Liu, X.; Wan, Z. Secfed: A secure and efficient federated learning based on multi-key homomorphic encryption. IEEE Trans. Dependable Secur. Comput. 2023, 21, 3817–3833.

- Tan, Z.; Le, J.; Yang, F.; Huang, M.; Xiang, T.; Liao, X. Secure and accurate personalized federated learning with similarity-based model aggregation. IEEE Trans. Sustain. Comput. 2024, 10, 132–145.

- Ciucanu, R.; Delabrouille, A.; Lafourcade, P.; Soare, M. Secure Protocols for Best Arm Identification in Federated Stochastic Multi-Armed Bandits. IEEE Trans. Dependable Secur. Comput. 2022, 20, 1378–1389.

- Kalapaaking, A.P.; Stephanie, V.; Khalil, I.; Atiquzzaman, M.; Yi, X.; Almashor, M. Smpc-based federated learning for 6g-enabled internet of medical things. IEEE Netw. 2022, 36, 182–189.

- Xiao, Y.; Xu, L.; Wu, Y.; Sun, J.; Zhu, L. PrSeFL: Achieving Practical Privacy and Robustness in Blockchain-Based Federated Learning. IEEE Internet Things J. 2024, 11, 40771–40786.

- Lyu, L.; Yu, H.; Ma, X.; Chen, C.; Sun, L.; Zhao, J.; Yang, Q.; Yu, P.S. Privacy and robustness in federated learning: Attacks and defenses. IEEE Trans. Neural Netw. Learn. Syst. 2022, 35, 8726–8746.

- Tang, X.; Peng, L.; Weng, Y.; Shen, M.; Zhu, L.; Deng, R.H. Enforcing Differential Privacy in Federated Learning via Long-Term Contribution Incentives. IEEE Trans. Inf. Forensics Secur. 2025, 20, 3102–3115.

- Fukami, T.; Murata, T.; Niwa, K.; Tyou, I. Dp-norm: Differential privacy primal-dual algorithm for decentralized federated learning. IEEE Trans. Inf. Forensics Secur. 2024, 19, 5783–5797.

- Sun, L.; Qian, J.; Chen, X. LDP-FL: Practical private aggregation in federated learning with local differential privacy. arXiv 2020, arXiv:2007.15789.

- Bhowmick, A.; Duchi, J.; Freudiger, J.; Kapoor, G.; Rogers, R. Protection against reconstruction and its applications in private federated learning. arXiv 2018, arXiv:1812.00984.

- Xie, R.; Li, C.; Yang, Z.; Xu, Z.; Huang, J.; Dong, Z. Differential privacy enabled robust asynchronous federated multitask learning: A multigradient descent approach. IEEE Trans. Cybern. 2025, 55, 3546–3559.

- Mironov, I. Rényi differential privacy. In Proceedings of the 2017 IEEE 30th Computer Security Foundations Symposium (CSF), Santa Barbara, CA, USA, 21–25 August 2017; IEEE: New York, NY, USA, 2017; pp. 263–275.

- Wang, Y.X.; Balle, B.; Kasiviswanathan, S.P. Subsampled Rényi differential privacy and analytical moments accountant. In Proceedings of the 22nd International Conference on Artificial Intelligence and Statistics, PMLR, Okinawa, Japan, 16–18 April 2019; pp. 1226–1235.

- McMahan, B.; Moore, E.; Ramage, D.; Hampson, S.; y Arcas, B.A. Communication-efficient learning of deep networks from decentralized data. In Proceedings of the Artificial Intelligence and Statistics, PMLR, Fort Lauderdale, FL, USA, 20–22 April 2017; pp. 1273–1282.

- Arachchige, P.C.M.; Bertok, P.; Khalil, I.; Liu, D.; Camtepe, S.; Atiquzzaman, M. Local differential privacy for deep learning. IEEE Internet Things J. 2019, 7, 5827–5842.

- Li, T.; Sahu, A.K.; Zaheer, M.; Sanjabi, M.; Talwalkar, A.; Smith, V. Federated optimization in heterogeneous networks. Proc. Mach. Learn. Syst. 2020, 2, 429–450.

- Zhuang, H.; Yu, M.; Wang, H.; Hua, Y.; Li, J.; Yuan, X. Backdoor federated learning by poisoning backdoor-critical layers. arXiv 2023, arXiv:2308.04466.

- Cong, Y.; Zeng, Y.; Qiu, J.; Fang, Z.; Zhang, L.; Cheng, D.; Liu, J.; Tian, Z. Fedga: A greedy approach to enhance federated learning with non-iid data. Knowl.-Based Syst. 2024, 301, 112201.

- Wang, Y.; Wang, W.; Wang, X.; Zhang, H.; Wu, X.; Yang, M. FedTweet: Two-fold knowledge distillation for non-IID federated learning. Comput. Electr. Eng. 2024, 114, 109067.

- Su, L.; Wang, D.; Zhu, J. DKD-pFed: A novel framework for personalized federated learning via decoupling knowledge distillation and feature decorrelation. Expert Syst. Appl. 2025, 259, 125336.

| Method | MNIST | | Fashion-MNIST | | CIFAR-10 | |
|---|---|---|---|---|---|---|
| NoDP-FL | 97.21 | 96.08 | 82.17 | 79.96 | 74.06 | 75.62 |
| DP-FL | 94.27 | 93.11 | 77.18 | 75.92 | 69.11 | 63.55 |
| DP-FedProx | 95.18 | 94.23 | 78.18 | 77.06 | 70.07 | 65.66 |
| APDP-FL | | | | | | |

Table 2. Computational overhead of DP-FL and APDP-FL.

| Number of Clients | DP-FL | APDP-FL | Overhead Variance Rate |
|---|---|---|---|
| 250 | 12.4 | 13.1 | 5.5% |
| 500 | 10.8 | 11.3 | 4.4% |
| 1000 | 10.4 | 10.8 | 3.7% |

Table 3. Communication cost of the compared schemes.

| Schemes | Client Upload Data Size | Server Distributed Data Size | Total Communication Cost | Additional Cost Sources |
|---|---|---|---|---|
| APDP-FL | 11.0 MB × 20 = 220 MB | 11.0 MB × 20 = 220 MB | 440 MB | Personalized noise parameters + model adjustment |
| FedAvg | 10 MB × 20 = 200 MB | 10 MB × 20 = 200 MB | 400 MB | Model parameter transmission |
| NoDP-FL | 10.5 MB × 20 = 210 MB | 10.5 MB × 20 = 210 MB | 420 MB | Encryption or redundant parameters |
| DP-FL | 10.5 MB × 20 = 210 MB | 10.5 MB × 20 = 210 MB | 420 MB | Additional noise parameters |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Guo, F.; Wang, R.; Wang, J.; Yang, C.; Liu, Z.; Li, H. APDP-FL: Personalized Federated Learning Based on Adaptive Differential Privacy. Symmetry 2025, 17, 2023. https://doi.org/10.3390/sym17122023
