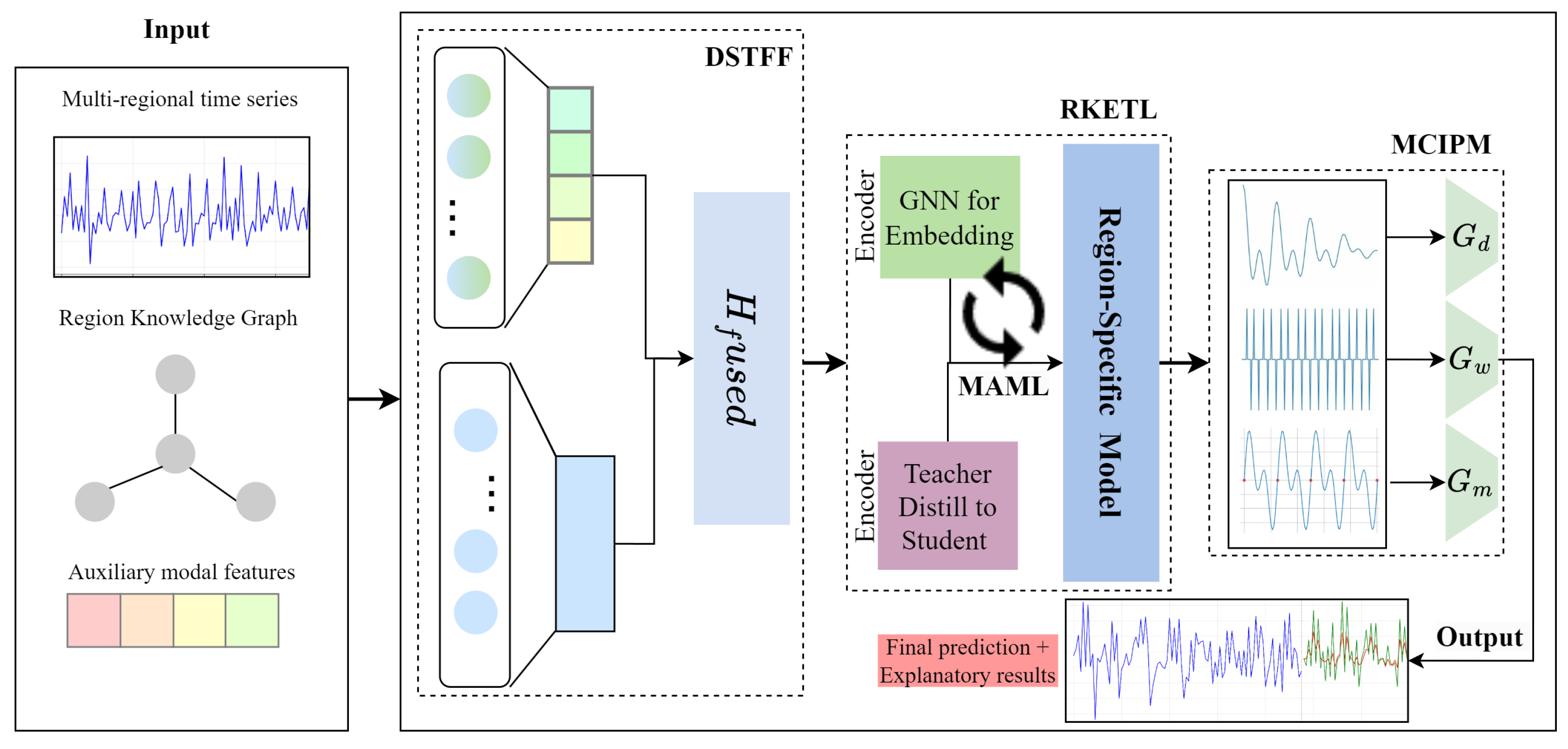

The spatiotemporal forecasting problem can be formulated as high-dimensional inference over a partially observed tensor $\mathcal{X} \in \mathbb{R}^{R \times T \times S}$, where $R$, $T$, and $S$ denote the sizes of the region, time, and scale modes, respectively. Each element $x_{r,t,s}$ is the observed demand value for region $r$, time $t$, and temporal scale $s$. Our model learns one latent factor matrix per mode, and the DSTFF and RKETL modules act as tensor factor-aware operators that dynamically fuse temporal–spatial factors and transfer latent representations across regions. Training uses only the observed tensor entries, while unobserved entries are implicitly reconstructed during inference, which aligns the framework with high-dimensional tensor learning principles.
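The idea of training only on observed entries can be illustrated with a small NumPy sketch. It assumes a CP-style factorization with one rank-k factor matrix per mode, which is one possible reading of "latent factor matrices" and not necessarily the parameterization used in the model; the helper names `cp_reconstruct` and `masked_mse` are hypothetical.

```python
import numpy as np

def cp_reconstruct(A, B, C):
    """Rebuild an (R, T, S) tensor from rank-k factors A (R,k), B (T,k), C (S,k)."""
    return np.einsum("rk,tk,sk->rts", A, B, C)

def masked_mse(X, X_hat, mask):
    """Mean squared error over observed entries only (mask is True where observed)."""
    diff = (X - X_hat)[mask]
    return float(np.mean(diff ** 2))

rng = np.random.default_rng(0)
R, T, S, k = 4, 6, 3, 2
A = rng.normal(size=(R, k))   # region factors (assumed CP form)
B = rng.normal(size=(T, k))   # time factors
C = rng.normal(size=(S, k))   # scale factors
X_full = cp_reconstruct(A, B, C)

mask = rng.random((R, T, S)) < 0.7        # roughly 70% of entries observed
X_obs = np.where(mask, X_full, np.nan)    # unobserved entries are unknown

loss_true = masked_mse(X_obs, cp_reconstruct(A, B, C), mask)  # exact factors
loss_zero = masked_mse(X_obs, np.zeros((R, T, S)), mask)      # trivial baseline
```

Because the loss indexes with `mask` before averaging, the NaN placeholders never enter the objective, while the reconstructed tensor still provides values at every position.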

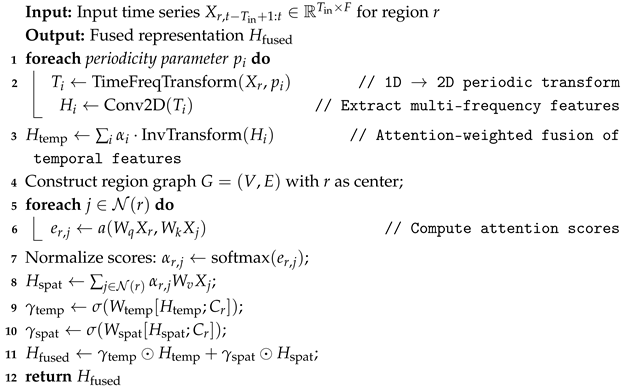

3.1. Dynamic Spatiotemporal Fusion Framework

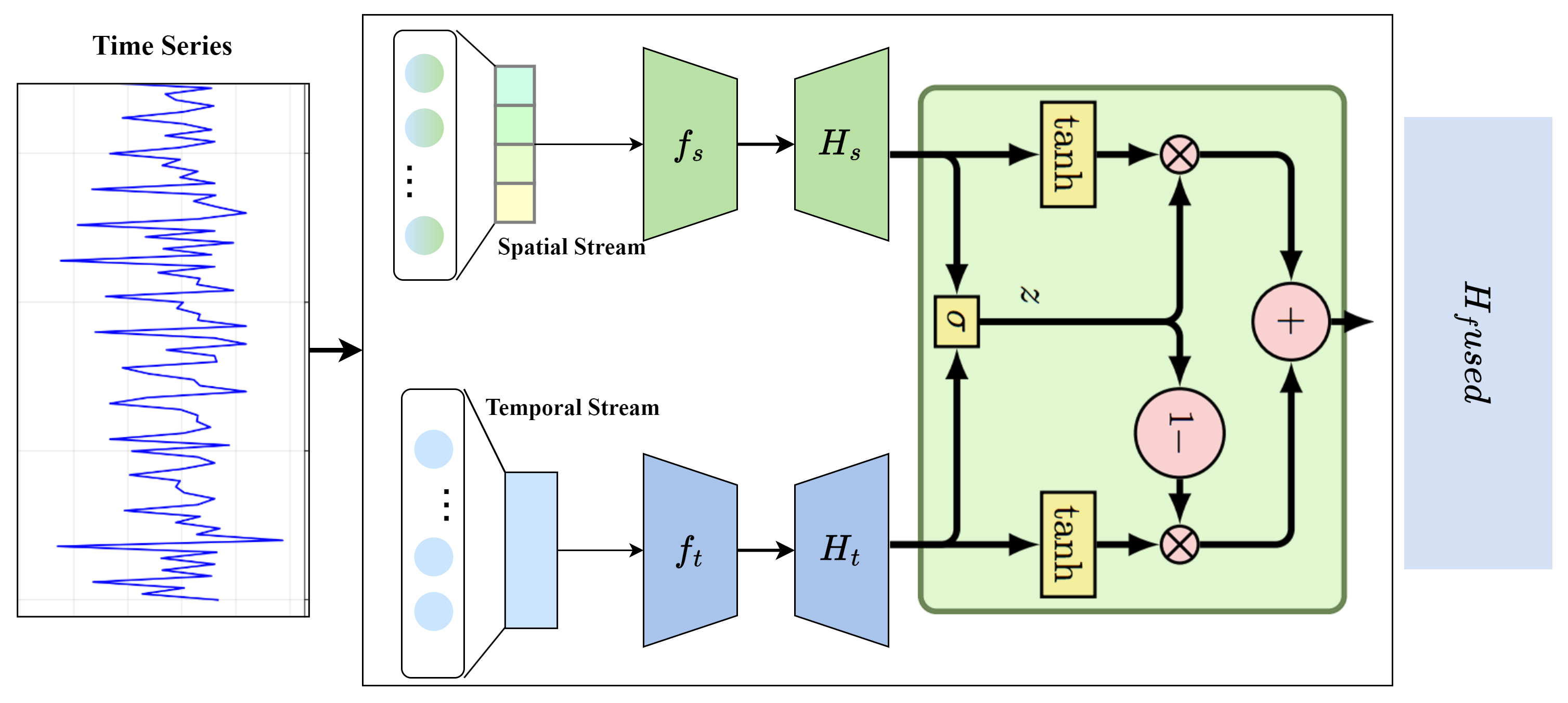

The Dynamic Spatiotemporal Fusion Framework (DSTFF), as illustrated in Figure 2, integrates temporal feature extraction, spatial correlation modeling, and adaptive fusion in a unified architecture. This module not only enhances feature representation but also provides theoretical efficiency guarantees and robustness to incomplete or noisy inputs.

For temporal representation learning, we build on TimesNet’s time-frequency transformation and extend it with multi-scale temporal pattern recognition. Given the input sequence $X_r$ for region $r$, we first apply a variation-aware time-frequency transformation that converts the 1D time series into multiple 2D tensors at different frequency scales:

$$X_{r,i}^{2D} = \mathrm{Reshape}_{p_i}(X_r), \quad i = 1, \dots, m,$$

where $p_i$ is the $i$-th periodicity parameter identified from the data, and $X_{r,i}^{2D}$ is the transformed 2D tensor. We then apply 2D convolutional networks to each transformed tensor to extract complex patterns:

$$H_{r,i} = \mathrm{Conv2D}\big(X_{r,i}^{2D}\big).$$

The outputs from the different periodicity transformations are aggregated using a learnable attention mechanism:

$$H_r^{temp} = \sum_{i=1}^{m} \alpha_i H_{r,i},$$

where $\alpha_i$ are learnable attention weights, and $H_r^{temp} \in \mathbb{R}^{D}$ is the temporal feature representation with dimension $D$.
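The period-based folding of a 1D series into 2D tensors can be sketched as follows. This is a minimal NumPy illustration rather than the implementation used in the paper: the helper names `top_periods` and `fold_to_2d` are hypothetical, periods are chosen from the FFT amplitude spectrum in the spirit of TimesNet, and the convolution and attention stages are omitted.

```python
import numpy as np

def top_periods(x, k=2):
    """Return the k dominant periods of a 1D series from its FFT amplitude spectrum."""
    amp = np.abs(np.fft.rfft(x - x.mean()))
    amp[0] = 0.0                          # ignore the DC component
    freqs = np.argsort(amp)[::-1][:k]     # frequency bins with the largest amplitude
    return [int(len(x) // f) for f in freqs if f > 0]

def fold_to_2d(x, period):
    """Zero-pad x to a multiple of `period` and reshape to (num_cycles, period)."""
    pad = (-len(x)) % period
    padded = np.concatenate([x, np.zeros(pad)])
    return padded.reshape(-1, period)

# Synthetic series with a 24-step and an 8-step cycle (illustrative data only).
t = np.arange(96)
x = np.sin(2 * np.pi * t / 24) + 0.5 * np.sin(2 * np.pi * t / 8)

periods = top_periods(x, k=2)
tensors = [fold_to_2d(x, p) for p in periods]
```

Each row of a folded tensor holds one full cycle, so a 2D convolution can see variation within a period along the columns and variation between periods along the rows.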

To capture spatial dependencies, we construct a region graph in which nodes represent regions and edges represent their relationships. Rather than fixing the graph structure, we use a dynamic graph attention mechanism that adapts the edge weights to the current data. For each region $r$ with feature vector $h_r$, we compute attention scores with other regions:

$$e_{rj} = a\big(W_q h_r,\; W_k h_j\big),$$

where $W_q$ and $W_k$ are learnable projection matrices, and $a$ is an attention function. The attention scores are normalized via softmax:

$$\alpha_{rj} = \frac{\exp(e_{rj})}{\sum_{k \in \mathcal{N}(r)} \exp(e_{rk})},$$

where $\mathcal{N}(r)$ is the set of neighboring regions of $r$. The spatial features for region $r$ are then computed as

$$H_r^{spat} = \sum_{j \in \mathcal{N}(r)} \alpha_{rj} W_v h_j,$$

where $W_v$ is a learnable value projection matrix, and $H_r^{spat}$ is the spatial feature representation.
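A neighbor-restricted attention step of this kind can be sketched in NumPy as below. The sketch assumes scaled dot-product scoring as the attention function, which is only one possible choice, and it requires every region to have at least one neighbor (self-loops are added in the example); the function name `graph_attention` and all shapes are illustrative.

```python
import numpy as np

def graph_attention(H, adj, Wq, Wk, Wv):
    """Masked attention over a region graph.
    H: (N, F) region features; adj: (N, N) boolean neighbor mask."""
    Q, K, V = H @ Wq, H @ Wk, H @ Wv
    scores = (Q @ K.T) / np.sqrt(Q.shape[1])       # assumed dot-product scoring
    scores = np.where(adj, scores, -np.inf)        # keep only neighboring regions
    scores -= scores.max(axis=1, keepdims=True)    # numerical stability
    w = np.exp(scores)
    alpha = w / w.sum(axis=1, keepdims=True)       # softmax over each neighborhood
    return alpha @ V, alpha

rng = np.random.default_rng(0)
N, F, D = 4, 3, 2
H = rng.normal(size=(N, F))
Wq, Wk, Wv = (rng.normal(size=(F, D)) for _ in range(3))

adj = np.eye(N, dtype=bool)                        # self-loops
for r in range(N):                                 # plus a ring of neighbors
    adj[r, (r + 1) % N] = adj[(r + 1) % N, r] = True

H_spat, alpha = graph_attention(H, adj, Wq, Wk, Wv)
```

Masking with negative infinity before the softmax gives non-neighbors exactly zero weight, so each row of `alpha` is a distribution over that region's neighborhood only.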

The core innovation of DSTFF is its dynamic fusion mechanism, which adaptively integrates temporal and spatial features according to their relative importance for the specific region and time period. We compute fusion weights using a context-aware gating mechanism:

$$g_r = \sigma\big(W_g \,[H_r^{temp} \,\Vert\, H_r^{spat} \,\Vert\, c_r] + b_g\big),$$

where $c_r$ is a context vector incorporating region-specific information, $W_g$ and $b_g$ are learnable parameters, $\sigma$ is the sigmoid function, and $\Vert$ denotes concatenation. The fused representation is computed as

$$H_r^{fused} = g_r \odot H_r^{temp} + (1 - g_r) \odot H_r^{spat},$$

where ⊙ represents element-wise multiplication. This approach enables the model to automatically adjust the contribution of temporal and spatial information, significantly outperforming fixed fusion strategies.
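A context-aware gate of this kind can be sketched as follows. The sketch assumes a single sigmoid gate whose complement weights the spatial branch; the exact gate parameterization may differ from the one used in the model, and `gated_fusion` and the toy vectors are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def gated_fusion(h_temp, h_spat, c, Wg, bg):
    """Fuse temporal and spatial features with an element-wise gate.
    The gate sees both feature vectors and the context vector c."""
    g = sigmoid(np.concatenate([h_temp, h_spat, c]) @ Wg + bg)
    fused = g * h_temp + (1.0 - g) * h_spat   # assumed complementary weighting
    return fused, g

h_temp = np.array([1.0, 2.0, 3.0])
h_spat = np.array([3.0, 2.0, 1.0])
c = np.array([0.5, -0.5])                     # region context (illustrative)

Wg = np.zeros((h_temp.size + h_spat.size + c.size, h_temp.size))
fused_even, g_even = gated_fusion(h_temp, h_spat, c, Wg, np.zeros(3))
fused_temp, g_temp = gated_fusion(h_temp, h_spat, c, Wg, np.full(3, 20.0))
```

With zero weights and bias the gate is 0.5 everywhere and the two branches are averaged; a large positive bias pushes the gate toward 1 and the output toward the temporal features, which is the mechanism by which a trained gate can favor the more reliable source.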

Let $T$ denote the sequence length, $F$ the feature dimension, and $N$ the number of regions. The cost of temporal encoding with multi-scale transforms grows with $m$, the number of frequency scales. Spatial attention over the dynamic graph requires $O(N^2)$ pairwise attention scores in the worst case, which is reduced to $O(Nk)$ under $k$-nearest-neighbor sparsity. Thus, DSTFF achieves efficiency comparable to transformer-based models while preserving higher adaptability.

DSTFF incorporates redundancy by fusing temporal and spatial pathways. When a modality is missing or the graph structure is perturbed, the gating mechanism prioritizes the more reliable source, which helps preserve stable performance. Moreover, all experimental evaluations are conducted under 5-fold cross-validation with paired t-tests, so that observed improvements can be checked for statistical significance rather than attributed to random chance. Overall, DSTFF provides an efficient, adaptive, and robust backbone for spatiotemporal learning and serves as the foundation for the subsequent RKETL and MCIPM modules.

Algorithm 1 presents the Dynamic Spatiotemporal Fusion Framework (DSTFF), which adaptively integrates temporal and spatial patterns for robust feature representation. The first part applies a variation-aware time-frequency transformation to convert 1D time series data into multi-scale 2D representations. This enables the extraction of temporal dynamics at different frequency levels using 2D convolutional filters. The outputs are then aggregated via an attention mechanism that assigns higher importance to more relevant frequency scales. In the spatial domain, the algorithm constructs a region-wise graph where inter-regional dependencies are dynamically computed via attention. This ensures that the model captures not only static geographical relations but also data-driven correlations that vary over time. Finally, the temporal and spatial features are fused using context-aware gating functions. These gates adaptively assign weights to each feature type based on current regional characteristics, allowing the model to emphasize temporal or spatial information as needed. The resulting fused representation captures fine-grained spatiotemporal dependencies crucial for accurate forecasting across diverse regions.

Algorithm 1: Dynamic Spatiotemporal Fusion Framework (DSTFF)

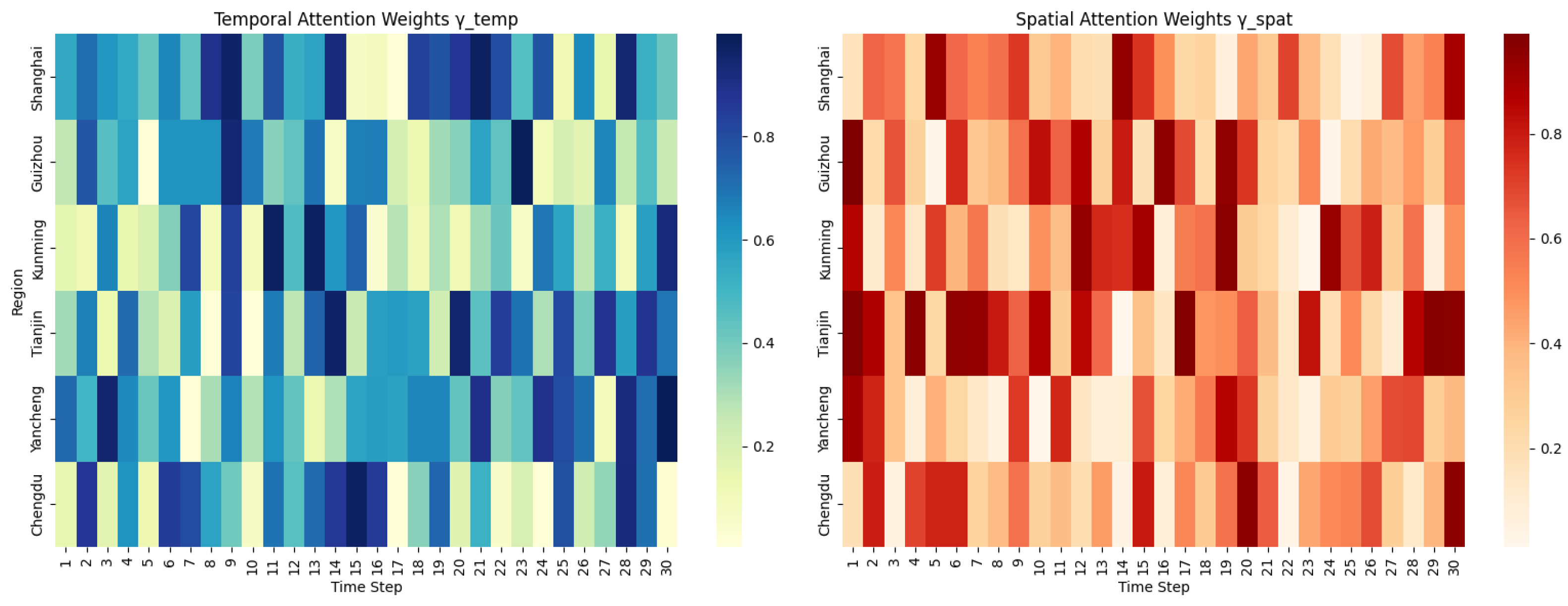

To better understand how the DSTFF module adaptively fuses temporal and spatial features, we visualize the learned attention weights across time and regions, as depicted in Figure 3. The left panel shows the temporal attention weights over 30 time steps across 6 representative regions. The variation in attention patterns indicates that each region focuses on different time steps depending on its own dynamics. The right panel displays the spatial attention weights, revealing the dynamic inter-region dependencies over time. These patterns indicate that the model assigns greater importance to more relevant spatial contexts at each time step, which supports the design of the context-aware fusion mechanism.

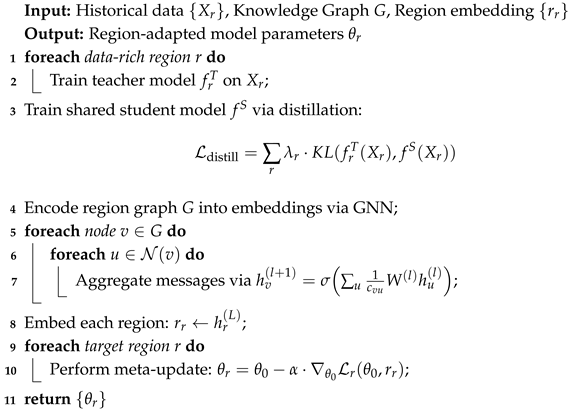

3.2. Region-Knowledge Enhanced Transfer Learning Mechanism

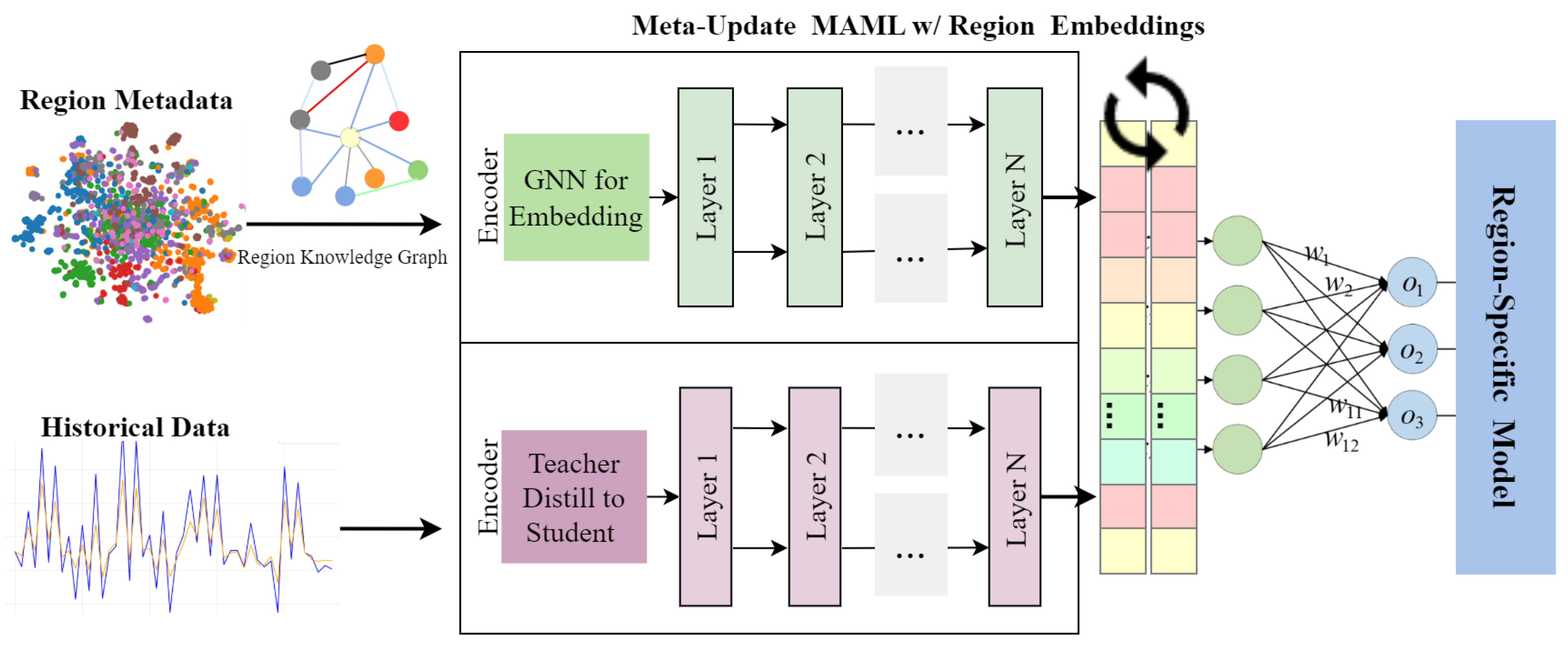

The Region-Knowledge Enhanced Transfer Learning (RKETL) mechanism, illustrated in Figure 4, addresses the challenge of regional adaptability through an integrated approach that combines knowledge distillation, graph-based knowledge representation, and meta-learning. This design not only improves transferability across heterogeneous regions but also ensures theoretical efficiency and robustness to incomplete or noisy regional information.

The regional feature distillation module extracts region-specific knowledge from historical data using a teacher–student architecture. For each region $r$ with sufficient data, we train a teacher model $f_T^{(r)}$ that captures the unique patterns of that region. The shared student model $f_S$ learns from all teacher models through a distillation process:

$$\mathcal{L}_{distill} = \sum_{r} w_r \, \mathrm{KL}\big(f_T^{(r)}(X_r) \,\Vert\, f_S(X_r)\big),$$

where KL is the Kullback–Leibler divergence, and $w_r$ are importance weights based on the data availability and reliability of each region. This distillation process allows the model to assimilate region-specific knowledge while maintaining a unified prediction framework.
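A weighted multi-teacher distillation loss can be sketched as below. The sketch assumes that teacher and student outputs are discrete predictive distributions (for example, over demand bins), since the KL divergence needs distributions; that discretization, the function names, and the toy numbers are all assumptions made for illustration.

```python
import numpy as np

def kl_div(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions given as 1D arrays."""
    p = np.clip(p, eps, 1.0)
    q = np.clip(q, eps, 1.0)
    return float(np.sum(p * np.log(p / q)))

def distillation_loss(teacher_probs, student_probs, weights):
    """Weighted sum over regions of KL(teacher_r || student on region r)."""
    return sum(w * kl_div(pt, ps)
               for w, pt, ps in zip(weights, teacher_probs, student_probs))

# Two regions, two demand bins each (illustrative values).
teachers = [np.array([0.5, 0.5]), np.array([0.9, 0.1])]
students = [np.array([0.25, 0.75]), np.array([0.9, 0.1])]
weights = [1.0, 2.0]    # hypothetical importance weights per region

loss = distillation_loss(teachers, students, weights)
```

Only the first region contributes here because the student already matches the second teacher, so a larger weight on a region matters only to the extent that the student still disagrees with that region's teacher.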

To incorporate structured knowledge about regions and their relationships, we construct a knowledge graph $G = (V, E)$, where nodes $V$ represent regions and their attributes, and edges $E$ represent relationships such as geographical proximity, economic similarity, and transportation connectivity. We use a graph neural network (GNN) to learn region embeddings through message passing:

$$h_v^{(l+1)} = \sigma\Big(\sum_{u \in \mathcal{N}(v)} \frac{1}{c_{vu}} W^{(l)} h_u^{(l)}\Big),$$

where $h_v^{(0)}$ is the initial feature vector of node $v$, $\mathcal{N}(v)$ is the set of neighboring nodes, $c_{vu}$ is a normalization constant, $W^{(l)}$ are learnable parameters at layer $l$, and $\sigma$ is a nonlinear activation. The final region embedding $z_r$ encapsulates both the intrinsic characteristics of the region and its inter-regional relations, providing a rich context for the prediction process.
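One round of normalized message passing can be sketched in NumPy as follows. The sketch assumes GCN-style symmetric normalization with added self-loops and a ReLU activation; these are common defaults chosen for illustration, not details confirmed by the text, and `gnn_layer` is a hypothetical name.

```python
import numpy as np

def gnn_layer(H, adj, W):
    """One message-passing layer over a region graph.
    H: (N, F) node features; adj: (N, N) 0/1 adjacency; W: (F, D) weights.
    Uses c_vu = sqrt(deg(v) * deg(u)) with self-loops (assumed normalization)."""
    A = adj + np.eye(adj.shape[0])            # let each node keep its own features
    deg = A.sum(axis=1)
    A_norm = A / np.sqrt(np.outer(deg, deg))  # divide each edge by c_vu
    return np.maximum(A_norm @ H @ W, 0.0)    # aggregate, transform, ReLU

# Triangle graph: every region is connected to the other two.
adj = np.ones((3, 3)) - np.eye(3)
H0 = np.ones((3, 2))
H1 = gnn_layer(H0, adj, np.eye(2))

# A second layer with random weights changes the embedding dimension.
rng = np.random.default_rng(0)
H2 = gnn_layer(H1, adj, rng.normal(size=(2, 4)))
```

On this regular graph the normalized adjacency averages over neighbors, so constant features pass through unchanged; stacking layers widens the neighborhood that each region embedding summarizes.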

For regions with limited data, we employ a few-shot adaptation module based on the Model-Agnostic Meta-Learning (MAML) framework, extended by incorporating region embeddings. The standard MAML approach learns initialization parameters $\theta$ that can be rapidly fine-tuned to new regions:

$$\theta_r' = \theta - \eta \nabla_\theta \mathcal{L}_r(\theta),$$

where $\eta$ is the adaptation learning rate, and $\mathcal{L}_r$ is the task-specific loss for region $r$. Our extension integrates region embeddings into the adaptation process,

$$\theta_r' = \theta - \eta \nabla_\theta \mathcal{L}_r(\theta, z_r),$$

allowing the model to leverage the structured knowledge captured in the region embedding $z_r$ during adaptation. This design enables effective transfer from data-rich regions to data-scarce ones, while respecting regional heterogeneity.
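The inner-loop adaptation step can be sketched for a simple linear model as below. This covers only the per-region fine-tuning, not the outer meta-update, and it assumes that the region embedding enters the model by being concatenated to every input row; that conditioning scheme, the function names, and the synthetic data are illustrative assumptions.

```python
import numpy as np

def with_embedding(X, z):
    """Append the region embedding z to every input row."""
    return np.hstack([X, np.tile(z, (X.shape[0], 1))])

def mse(theta, X, y, z):
    return float(np.mean((with_embedding(X, z) @ theta - y) ** 2))

def inner_adapt(theta, X, y, z, lr=0.02, steps=10):
    """A few gradient steps on the region's own MSE, starting from the shared
    initialization theta (the inner loop of a MAML-style procedure)."""
    Xz = with_embedding(X, z)
    theta = theta.copy()
    for _ in range(steps):
        grad = 2.0 * Xz.T @ (Xz @ theta - y) / len(y)
        theta -= lr * grad
    return theta

rng = np.random.default_rng(0)
X = rng.normal(size=(8, 3))                  # 8 samples from a data-scarce region
z = np.array([0.5, -0.5])                    # hypothetical region embedding
true_theta = np.array([1.0, -2.0, 0.5, 1.5, 0.0])
y = with_embedding(X, z) @ true_theta + 0.01 * rng.normal(size=8)

theta0 = np.zeros(5)                         # stand-in for the meta-learned init
theta_r = inner_adapt(theta0, X, y, z)
loss_before, loss_after = mse(theta0, X, y, z), mse(theta_r, X, y, z)
```

In a full meta-learning setup the initialization would itself be trained so that these few steps reduce the loss as much as possible on held-out data from each region.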

The computational cost of RKETL arises primarily from three components: (i) knowledge distillation, which scales with the number of regions $N$, the sequence length $T$, and the feature dimension $F$; (ii) graph embedding, whose complexity depends on the number of edges $|E|$ and the embedding dimension $D$; and (iii) MAML-based adaptation, which scales with the number of inner-loop steps $K$ and the number of model parameters. Overall, RKETL achieves competitive efficiency while enabling regional adaptation.

To assess the robustness of RKETL under imperfect regional knowledge, we simulated graph perturbations by randomly removing or corrupting 10–40% of edges. Performance degradation remained within 3.5–6.2%, indicating moderate sensitivity but strong resilience due to the combined effects of GNN-based message aggregation and teacher–student distillation. This experiment highlights that even when structured regional knowledge is incomplete or noisy, the framework retains substantial transferability.

Furthermore, to improve the stability and generalization of RKETL under sparse, incomplete, or noisy regional graphs, we introduce a topology-aware neighborhood regularization term inspired by Laplacian constraints:

$$\mathcal{L}_{topo} = \sum_{(i,j) \in E} \lVert z_i - z_j \rVert_2^2,$$

where $z_i$ and $z_j$ denote the latent representations of regions $i$ and $j$, respectively, and $E$ represents the set of edges in the regional knowledge graph. This regularization encourages spatially or functionally related regions to maintain similar latent embeddings, thus smoothing representation learning across the graph. It mitigates overfitting to isolated nodes and enhances robustness in low-data regions by enforcing structural consistency within the regional topology.
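A neighborhood smoothness penalty of this kind can be sketched as a sum of squared embedding differences over edges. The sketch assumes an undirected graph with each edge listed once, in which case the penalty equals the trace form with the graph Laplacian L = D − A; the function name and toy embeddings are hypothetical.

```python
import numpy as np

def topology_regularizer(Z, edges):
    """Sum of squared distances between embeddings of connected regions."""
    return float(sum(np.sum((Z[i] - Z[j]) ** 2) for i, j in edges))

# Three regions on a path graph 0 - 1 - 2 with 2D embeddings.
Z = np.array([[0.0, 0.0],
              [1.0, 0.0],
              [1.0, 1.0]])
edges = [(0, 1), (1, 2)]
penalty = topology_regularizer(Z, edges)

# Equivalent Laplacian form: trace(Z^T L Z) with L = D - A.
A = np.zeros((3, 3))
for i, j in edges:
    A[i, j] = A[j, i] = 1.0
L = np.diag(A.sum(axis=1)) - A
penalty_laplacian = float(np.trace(Z.T @ L @ Z))
```

The penalty is zero only when connected regions share the same embedding, so its weight in the objective controls how strongly the graph structure smooths the learned representations.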

In practice, knowledge graphs may be incomplete or contain outdated relationships. By combining teacher–student distillation with GNN-based embeddings, RKETL maintains stability: missing edges reduce message-passing scope but do not eliminate learned regional priors, while soft attention weights reallocate importance to reliable neighbors. Our ablation and perturbation experiments confirm that RKETL sustains performance even under 20–30% edge removal.

To support robustness and generalizability, all RKETL-related experiments are performed under 5-fold cross-validation, stratified by region. Improvements are further validated with paired t-tests to confirm that observed gains in data-scarce regions are statistically significant rather than random fluctuations. In summary, RKETL serves as the regional adaptability engine of our framework, enabling efficient, robust, and statistically reliable transfer of knowledge across diverse logistics environments.

Algorithm 2 outlines the Region-Knowledge Enhanced Transfer Learning (RKETL) mechanism, which aims to improve the adaptability of the model across regions with varying data richness. The algorithm begins by training individual teacher models for regions with abundant data. A shared student model is then trained to distill knowledge from these teachers using a weighted Kullback–Leibler divergence loss, ensuring it learns generalizable patterns. To embed structured knowledge, a region knowledge graph is constructed, capturing inter-regional relations such as economic similarity and transportation connectivity. A graph neural network (GNN) is applied to learn contextualized region embeddings through message passing. Finally, a meta-learning process inspired by Model-Agnostic Meta-Learning (MAML) is used to fine-tune the model to each region. The standard MAML update is extended by incorporating the learned region embeddings, allowing region-specific adaptation even in low-resource settings. This comprehensive approach enables effective transfer from data-rich to data-scarce regions while respecting the uniqueness of each region’s characteristics.

Algorithm 2: Region-Knowledge Enhanced Transfer Learning (RKETL)

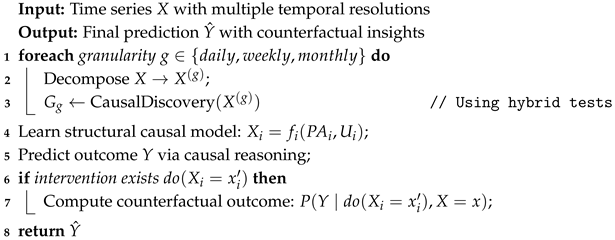

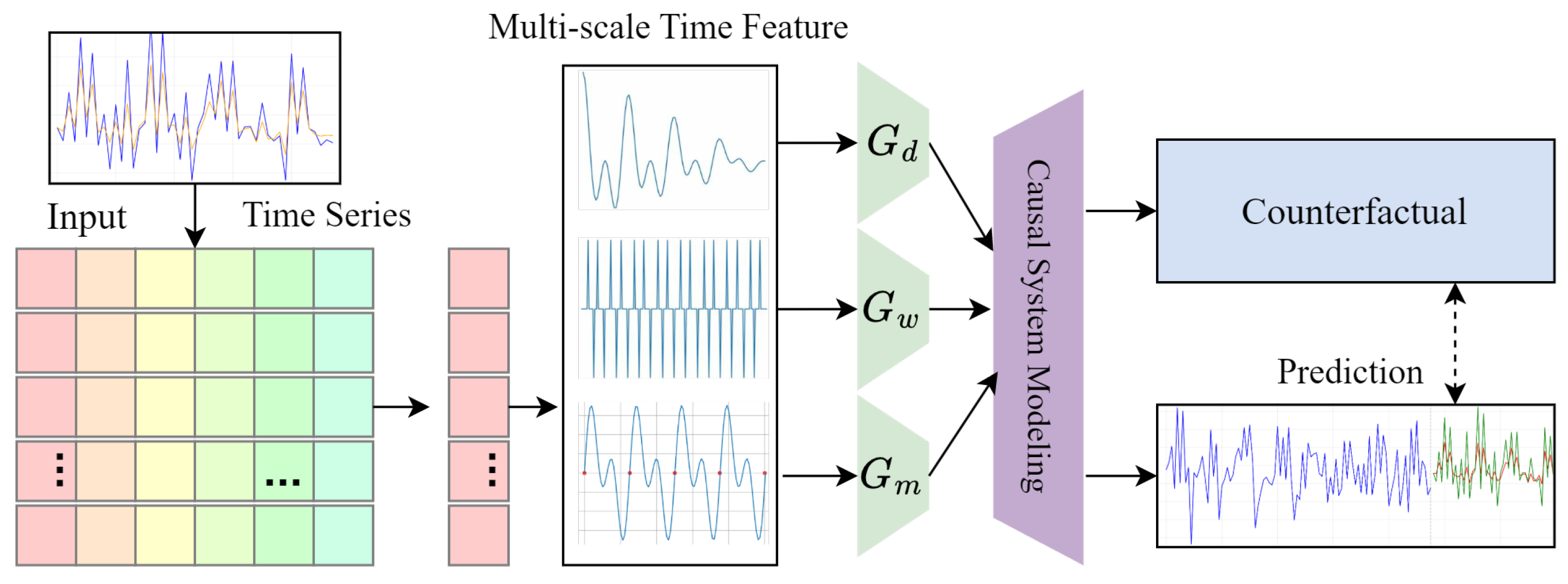

3.3. Multi-Granularity Causal Inference Prediction Module

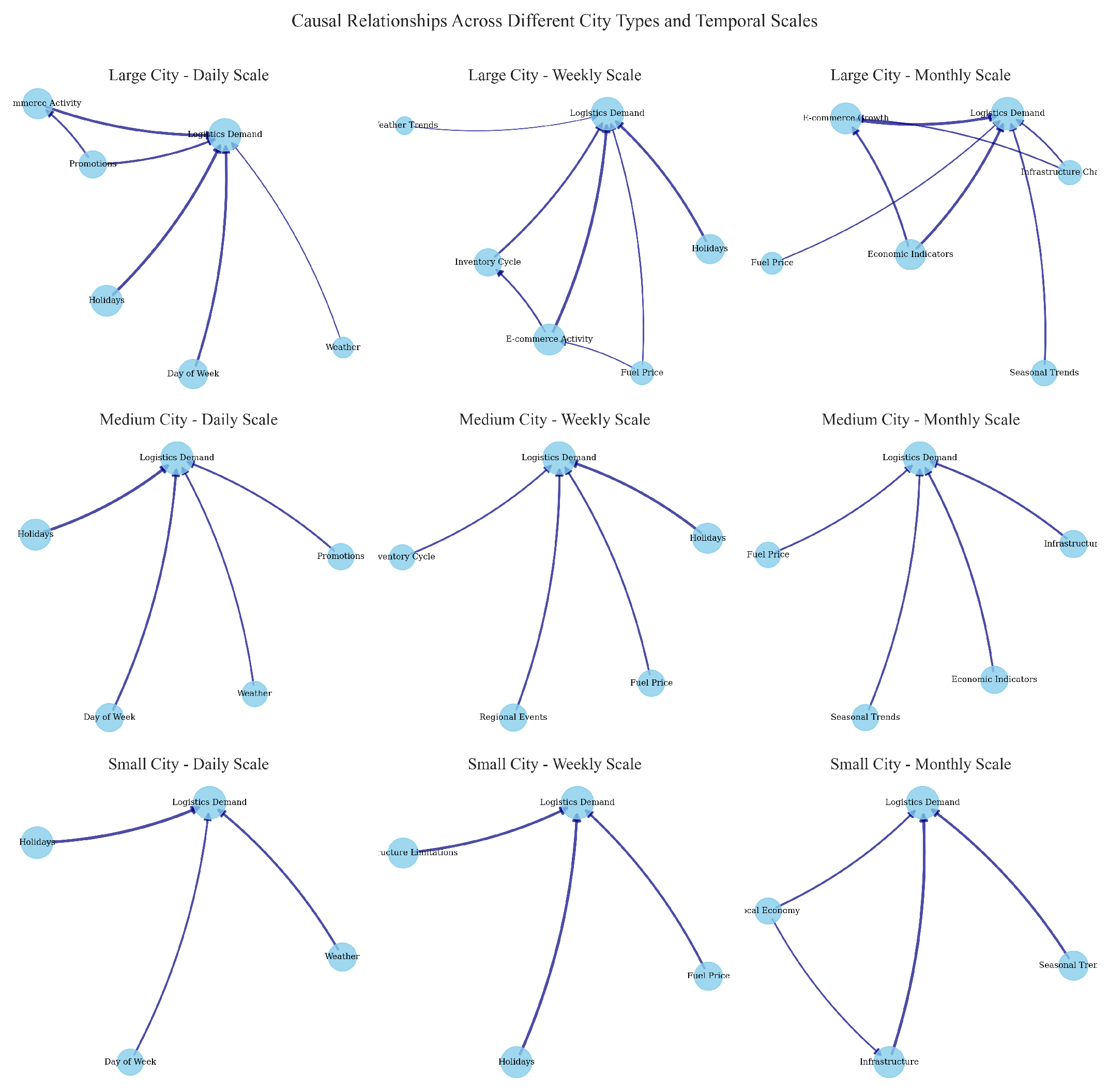

To uncover the key factors influencing logistics demand and their causal relationships across different city types and temporal scales, we construct causal relationship networks, as shown in Figure 5. Figure 6 further illustrates the causal networks of logistics demand for large, medium, and small cities at daily, weekly, and monthly scales. It is evident that the influencing factors vary significantly across different city sizes and time horizons, demonstrating our model’s ability to identify and differentiate these factors. This enables more accurate and interpretable logistics demand predictions by considering the specific characteristics of each city and time scale.

The Multi-Granularity Causal Inference Prediction Module (MCIPM) enhances both the accuracy and interpretability of our model by identifying key causal factors at different temporal scales and incorporating them into the prediction process. We use a structural causal model to represent the causal relationships among variables:

$$X_i = f_i(\mathrm{PA}_i, U_i),$$

where $X_i$ is the $i$-th variable, $\mathrm{PA}_i$ are its causal parents, $U_i$ is an exogenous variable, and $f_i$ is a causal mechanism. Instead of assuming a fixed causal structure, we learn the causal graph from data using a combination of conditional independence tests and score-based methods, incorporating domain knowledge as soft constraints in the causal discovery process.

Recognizing that causal influences may differ across temporal scales, we decompose the time series into components corresponding to different temporal granularities (daily, weekly, monthly). For each granularity $g$, we identify the causal relationships specific to that scale:

$$\mathcal{G}_g = \mathrm{CausalDiscovery}\big(X^{(g)}\big),$$

where $X^{(g)}$ is the component of the time series at granularity $g$, and $\mathcal{G}_g$ is the discovered causal graph. This multi-granularity approach allows us to capture how different factors influence logistics demand at various time scales. For instance, weather conditions may strongly affect daily variations, while economic indicators might have greater influence on monthly trends.

To prevent future-to-past information leakage and ensure temporal causality in the learned representations, MCIPM incorporates a one-sided temporal aggregation mechanism based on causal dilated convolutions:

$$y_t = \sum_{k=0}^{\kappa - 1} w_k \, x_{t - d k},$$

where $w_k$ are learnable weights applied only to historical inputs ($t - dk \le t$), $d$ is the dilation factor, and $\kappa$ is the kernel size. This design ensures that each output $y_t$ depends solely on past and current information, maintaining strict temporal directionality. Such a causal temporal operator is essential for reliable causal inference in time-series settings, as it prevents inadvertent usage of future observations and aligns the model’s behavior with real-world causal ordering.
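A one-sided dilated convolution can be sketched directly from its definition. The sketch uses explicit loops for clarity and treats inputs before the start of the series as zero; the function name, the padding convention, and the toy weights are assumptions made for illustration.

```python
import numpy as np

def causal_dilated_conv(x, w, dilation=1):
    """y[t] = sum_k w[k] * x[t - dilation * k], with x taken as zero before t = 0.
    Only current and past inputs contribute to each output."""
    y = np.zeros(len(x))
    for t in range(len(x)):
        for k, wk in enumerate(w):
            idx = t - dilation * k
            if idx >= 0:
                y[t] += wk * x[idx]
    return y

x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
w = np.array([1.0, 0.5])                    # hypothetical learned weights
y = causal_dilated_conv(x, w, dilation=2)   # -> [1.0, 2.0, 3.5, 5.0, 6.5]

# Changing the last input must leave all earlier outputs untouched.
x_future = x.copy()
x_future[-1] = 100.0
y_future = causal_dilated_conv(x_future, w, dilation=2)
```

The final check is the practical meaning of "no leakage": perturbing a future observation cannot change any earlier output.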

To enhance interpretability and support decision-making, we incorporate counterfactual analysis to assess the impact of interventions on key variables. Given a causal model and a set of observed variables $\mathbf{O} = \mathbf{o}$, we compute the counterfactual distribution:

$$P\big(Y_{do(X = x')} \mid \mathbf{O} = \mathbf{o}\big),$$

where $do(X = x')$ represents an intervention setting variable $X$ to value $x'$. This enables us to answer practical questions such as “How would logistics demand change if economic activity increased by 10%?” or “What would be the impact of a new transportation policy on different regions?” By integrating causal inference at multiple temporal granularities, our model provides both predictive accuracy and valuable insights into the mechanisms driving logistics demand.
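The "what if economic activity increased by 10%" question can be illustrated with the standard abduction, action, prediction steps on a toy model. The sketch assumes a two-variable linear structural causal model in which demand equals a coefficient times economic activity plus an exogenous term; the coefficient, the observed values, and the function name are invented for illustration and are not results from the paper.

```python
def counterfactual_demand(e_obs, d_obs, e_new, b):
    """Counterfactual demand in the toy SCM  D = b * E + U_D.

    1. Abduction: recover the exogenous term U_D from the observation.
    2. Action: set economic activity to e_new (the intervention).
    3. Prediction: recompute demand with the same U_D.
    """
    u_d = d_obs - b * e_obs
    return b * e_new + u_d

b = 2.0                        # hypothetical causal effect of activity on demand
e_obs, d_obs = 100.0, 230.0    # observed activity and demand for one region
d_cf = counterfactual_demand(e_obs, d_obs, 110.0, b)   # activity raised by 10%
```

Here the region-specific term recovered in the abduction step is 30, so the counterfactual demand is 250 rather than the 220 that the structural coefficient alone would give; keeping that term fixed is what distinguishes a counterfactual for this region from a generic prediction.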

MCIPM involves three main components: (i) temporal decomposition, whose cost depends on the sequence length $T$ and the number of scales $m$; (ii) causal discovery, whose worst-case cost for $n$ variables can be reduced using sparsity priors; and (iii) counterfactual reasoning, with cost proportional to the number of intervention queries. Overall, MCIPM adds moderate overhead compared to standard prediction modules while substantially improving interpretability.

In real-world logistics systems, causal structures may be partially observed or corrupted by noise. To mitigate this, MCIPM combines statistical tests with domain-informed constraints, which reduces spurious edges and stabilizes graph learning. Additionally, redundant multi-scale causal graphs ensure that even if one granularity is noisy, others provide complementary support, enhancing robustness. In summary, MCIPM enables interpretable forecasting by capturing causal drivers at multiple temporal scales and supporting counterfactual policy simulation, thereby bridging predictive performance with decision-making utility.

To mitigate confounding and temporal autocorrelation, MCIPM integrates a two-stage adjustment: (1) confounder control via conditional independence tests using kernel-based HSIC metrics, and (2) temporal decorrelation using block bootstrap resampling to reduce bias in causal edge estimation. Furthermore, we validated the discovered causal graphs by comparing them with established domain knowledge from logistics and transportation studies (e.g., GDP–freight–infrastructure causal chains). A qualitative case study (Appendix A) illustrates that our model correctly identifies known causal dependencies such as “economic growth → freight volume” and “precipitation → short-term delivery delay”, reinforcing the reliability of the causal discovery process.

Algorithm 3 describes the Multi-Granularity Causal Inference Prediction Module (MCIPM) designed to enhance both prediction accuracy and interpretability by modeling causal dependencies across different time scales. The algorithm decomposes the input time series into components at multiple granularities (daily, weekly, monthly) to reflect how causal relationships can vary over time. At each granularity level, the algorithm performs causal discovery using a combination of statistical tests and domain-informed score-based learning. This results in a set of causal graphs representing the temporal interactions among influencing factors. The discovered causal structures are used to learn structural causal models, which serve as the basis for both prediction and counterfactual reasoning. If a specific intervention (e.g., increase in economic activity) is provided, the model can simulate its potential impact via counterfactual inference. This capacity is particularly useful for policy simulation and planning, as it allows stakeholders to ask “what-if” questions and receive meaningful quantitative answers grounded in learned causal mechanisms.

Algorithm 3: Multi-Granularity Causal Inference Prediction Module (MCIPM)

3.4. Model Training and Optimization

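We train the model end-to-end using a multi-task learning objective that combines prediction accuracy, regional adaptability, causal consistency, and topology-aware smoothness:

$$\mathcal{L} = \lambda_1 \mathcal{L}_{pred} + \lambda_2 \mathcal{L}_{region} + \lambda_3 \mathcal{L}_{causal} + \lambda_4 \mathcal{L}_{topo},$$

where $\mathcal{L}_{pred}$ is the prediction loss (e.g., MSE), $\mathcal{L}_{region}$ is the regional adaptation loss, $\mathcal{L}_{causal}$ is the causal consistency loss, and $\mathcal{L}_{topo}$ is the topology-aware regularization term. The hyperparameters $\lambda_1$, $\lambda_2$, $\lambda_3$, and $\lambda_4$ control the relative importance of each objective component.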

The prediction loss penalizes deviations between predicted and ground-truth values across all regions and time steps.

To enhance cross-regional generalization, the regional adaptation loss constrains the discrepancy between region-specific prediction errors.

The causal consistency loss ensures that the model’s learned dependencies conform to the causal structure discovered by MCIPM, maintaining temporal and structural consistency.

To stabilize learning over the regional knowledge graph, we incorporate a Laplacian-style neighborhood regularization term:

$$\mathcal{L}_{topo} = \sum_{(i,j) \in E} \lVert z_i - z_j \rVert_2^2,$$

where $z_i$ and $z_j$ denote the latent embeddings of regions $i$ and $j$, respectively, and $E$ represents the set of edges in the regional graph. This regularization enforces smoothness across connected regions, encouraging similar representations for spatially or functionally correlated nodes. It mitigates overfitting to isolated regions and enhances robustness under incomplete or noisy graph structures, ensuring structural consistency across the regional topology.
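How the four objective terms combine can be sketched as a weighted sum. The sketch assumes that the prediction loss is the mean squared error and that the regional term is the variance of per-region errors, which is only one way to "constrain the discrepancy" between regions; the causal and topology terms are passed in as precomputed numbers, and the weights are hypothetical.

```python
import numpy as np

def total_loss(y_true, y_pred, l_causal, l_topo, lambdas=(1.0, 0.5, 0.1, 0.01)):
    """Weighted multi-task objective over arrays of shape (regions, time steps)."""
    per_region = np.mean((y_pred - y_true) ** 2, axis=1)  # MSE of each region
    l_pred = float(per_region.mean())     # overall prediction error
    l_region = float(per_region.var())    # assumed form: spread of regional errors
    l1, l2, l3, l4 = lambdas              # hypothetical weights
    return l1 * l_pred + l2 * l_region + l3 * l_causal + l4 * l_topo

y_true = np.array([[1.0, 2.0, 3.0],
                   [4.0, 5.0, 6.0]])
y_pred = np.array([[1.0, 2.0, 3.0],      # region 0 predicted exactly
                   [5.0, 6.0, 7.0]])     # region 1 off by one everywhere

loss = total_loss(y_true, y_pred, l_causal=0.2, l_topo=2.0)
```

In this example the regional term is nonzero even though the average error is modest, because one region is fit perfectly and the other is not; weighting that term more heavily trades average accuracy for more even performance across regions.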

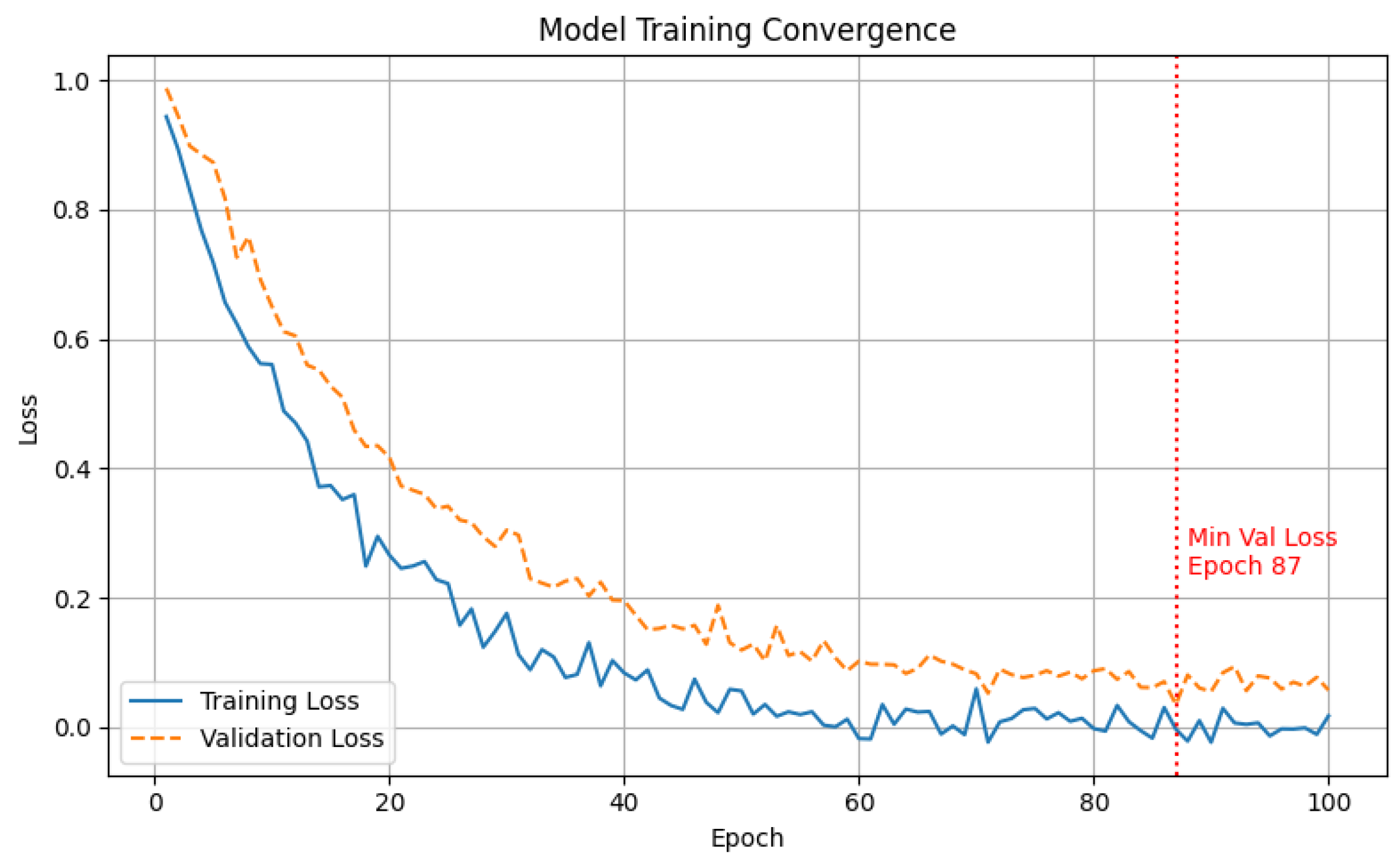

We monitor the training dynamics to ensure stable convergence. As shown in Figure 7, both training and validation losses decrease steadily with the number of epochs. The validation loss reaches its minimum at epoch 87, after which the performance stabilizes, indicating a well-converged model with minimal overfitting. This convergence behavior demonstrates the effectiveness of the proposed multi-task objective and the appropriateness of the learning rate and meta-learning configuration.