Intelligent Q&A System for Welding Processes Based on a Symmetric KG-DB Hybrid-RAG Strategy

Abstract

1. Introduction

- (1) Conflict between the retrieval paradigm and logical reasoning: Welding process Q&A often requires multi-parameter coupled decisions across several material performance indicators. Traditional RAG follows a “retrieve first, then generate” strategy that recalls a large number of document fragments indiscriminately, which sharply increases retrieval noise. In complex process Q&A that selects multiple parameters from several material performance metrics, the model’s logical chain breaks easily and structural information is lost. The output is therefore unreliable for high-risk welding decision support, where precision is critical, and retrieval noise needs to be isolated before the recall step.

- (2) Lightweight and real-time knowledge updating: Industry standards, classification society rules, and internal specifications change rapidly. However, existing GraphRAG methods require recalculation of the embeddings for the entire graph, resulting in prohibitively high update costs (O()). Once a new rule is issued after deployment, knowledge reconstruction often requires several hours of downtime [6]. This conflicts with the manufacturing sector’s requirement for up-to-date knowledge and continuous system operation.

- (3) Lack of answer credibility and auditability: For safety-critical applications in the shipbuilding industry, every engineering decision derived from the Q&A system must be fully traceable and justifiable. Current RAG strategies provide only an opaque similarity score computed from text. Without transparent graph paths and citations of standard clauses, engineers cannot make judgments with confidence, and the system fails to meet auditability and accountability requirements.

- (1)

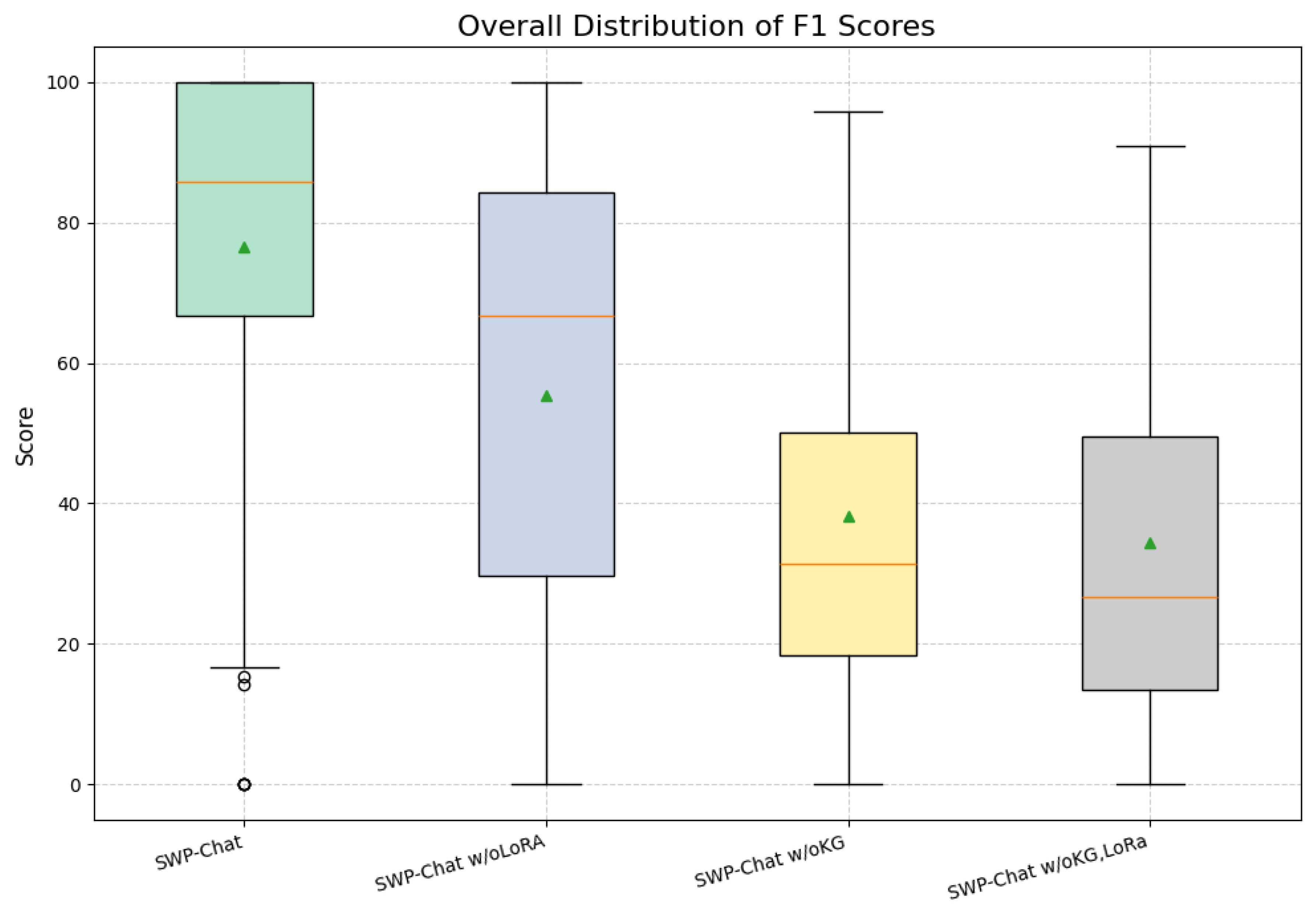

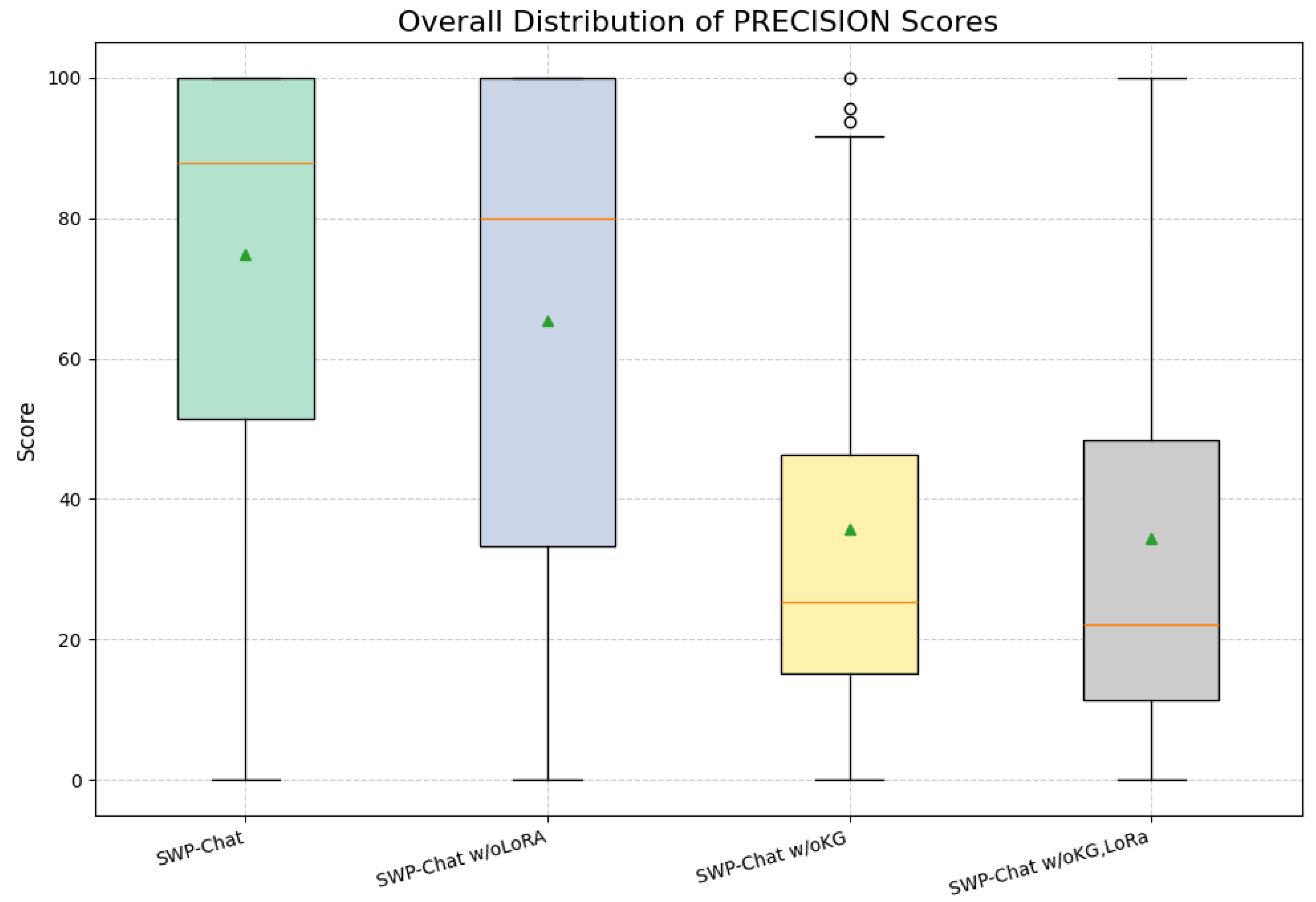

- This study builds a symmetric KG+Vector dual engine that supports 11 types of welding entities, making it the first to apply symmetrical design to ship welding technology. This architecture achieves mirrored balance between the vector channel and knowledge graph channel. This bidirectional symmetry mechanism fundamentally isolates pre-retrieval noise, significantly enhancing the accuracy of complex reasoning and the coherence of question answering, with an F1 score improvement of 40.89%.

- (2)

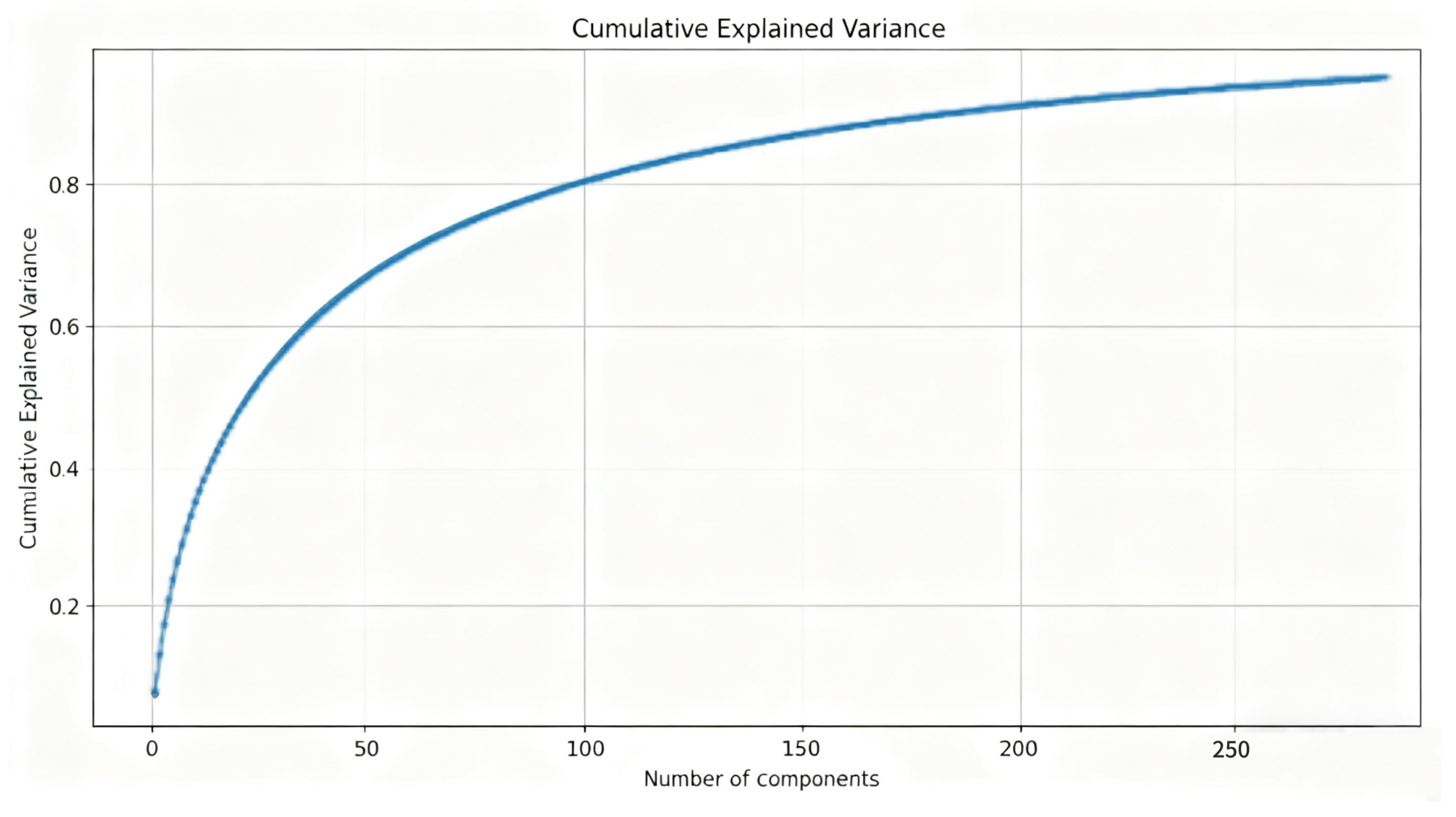

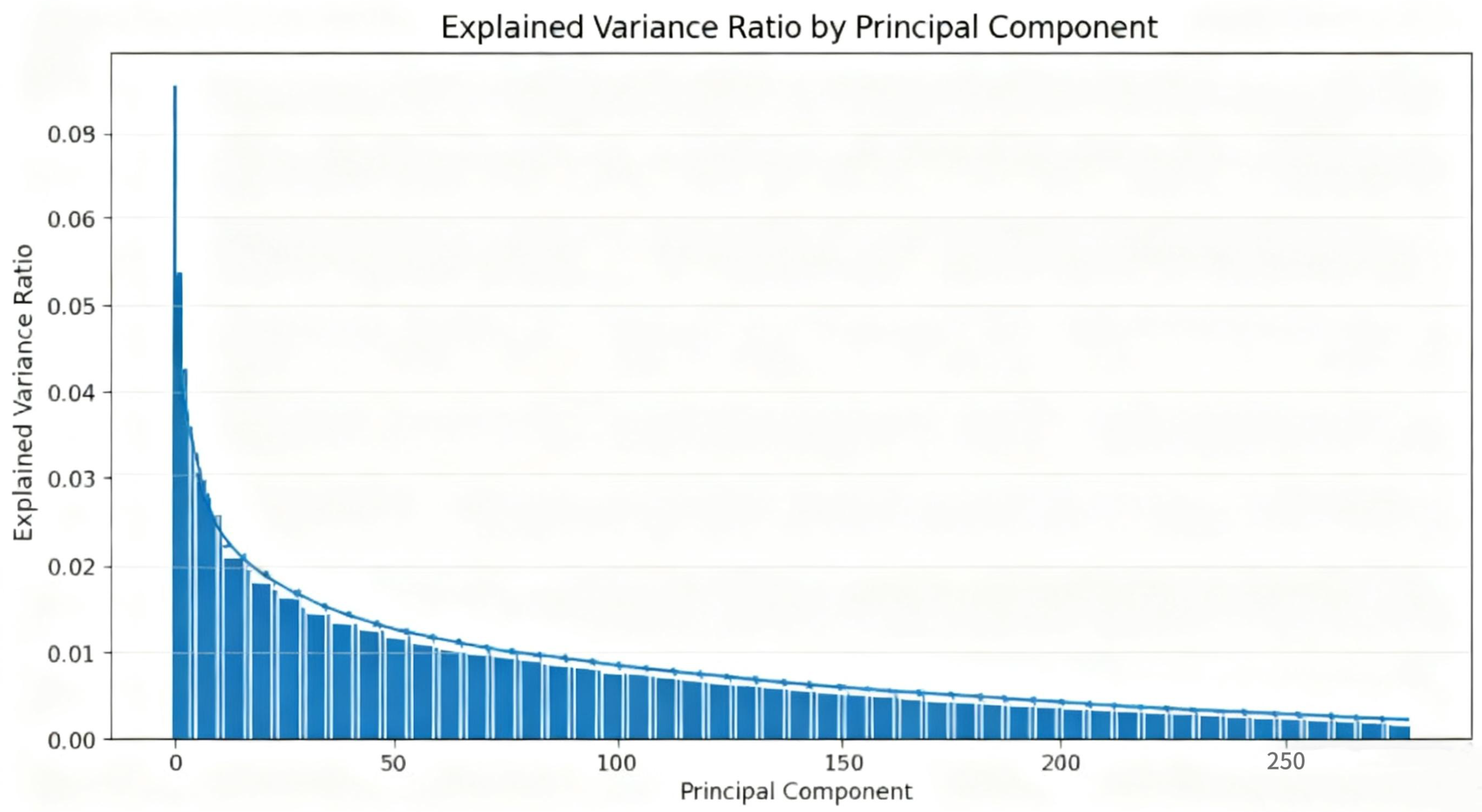

- This paper avoids global graph embedding by explicitly adopting Neo4j Cypher symbolic queries. Furthermore, by integrating PCA vector dimensionality reduction technology, we successfully shortened the average retrieval latency by 31%. This achievement enables agile, minute-level updates of industrial knowledge, thereby circumventing the lag and system interruptions caused by the high recalculation cost of GraphRAG’s graph embedding and ensuring the continuity of knowledge services.

- (3) This work proposes an explainable fusion process that balances source weighting and structural confidence. It provides transparent, auditable evidence of reliability for each decision in shipbuilding scenarios, supporting full traceability of results and compliance with strict safety regulations.

2. Related Work

2.1. Process Knowledge Graph

- (1) Insufficient integration of unstructured knowledge: Many industrial process documents are text-based and unstructured. This knowledge is underutilized, which results in incomplete representation within knowledge graphs.

- (2) Weak intelligent question answering and interactivity: Existing KG-based systems focus mainly on retrieval and recommendation. They lack flexible natural language interaction, making it difficult to support engineers’ daily needs for quick querying and reasoning.

2.2. Industrial RAG Technology

- (1) Insufficient utilization of structured knowledge: RAG focuses on retrieving document fragments, so it cannot directly operate on process entities and relationships, which leads to an imprecise understanding of parameter associations and process flows.

- (2) Limited complex logical reasoning: For complex queries involving cross-document, multi-table, or multi-parameter dependencies, RAG often misses key reasoning steps, leading to incomplete answers.

- (3) Lagging knowledge updates and evolution: RAG retrieval repositories rely on manual document imports for updates and therefore fail to reflect ongoing iterations of new materials and processes.

2.3. Hybrid-RAG Technology

3. Methodology

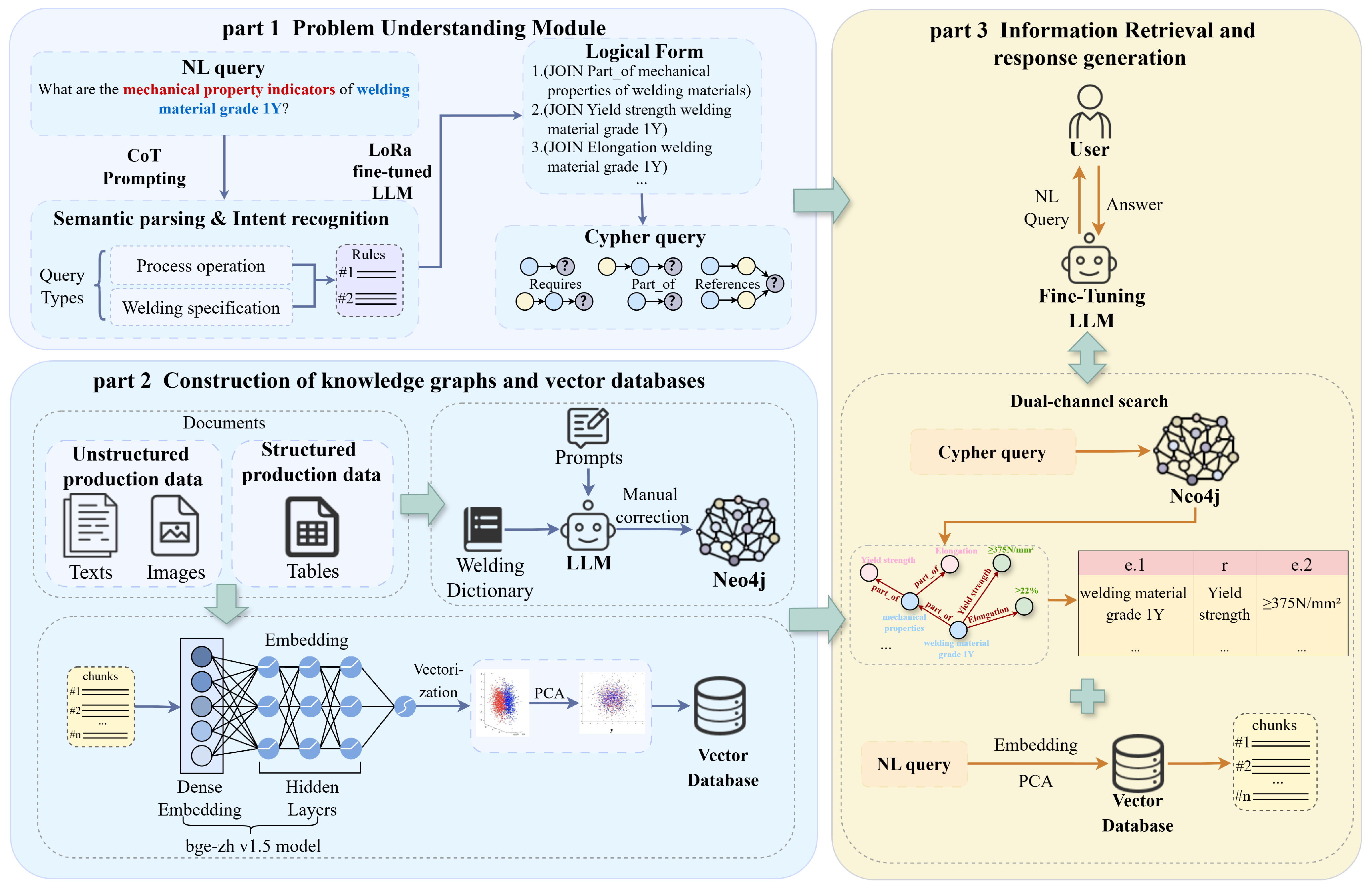

3.1. Overall System Architecture

3.2. Problem Analysis and Logic Generation

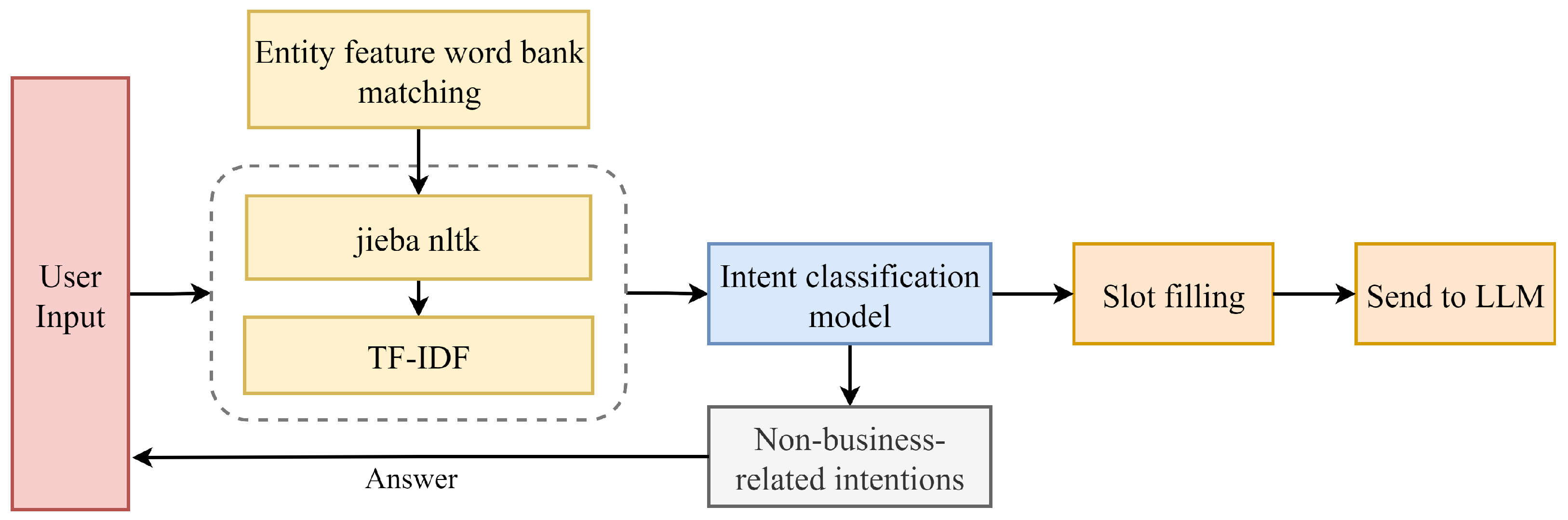

3.2.1. Query Parsing and Intent Recognition

3.2.2. LLM Logic Generation and LoRA Fine Tuning

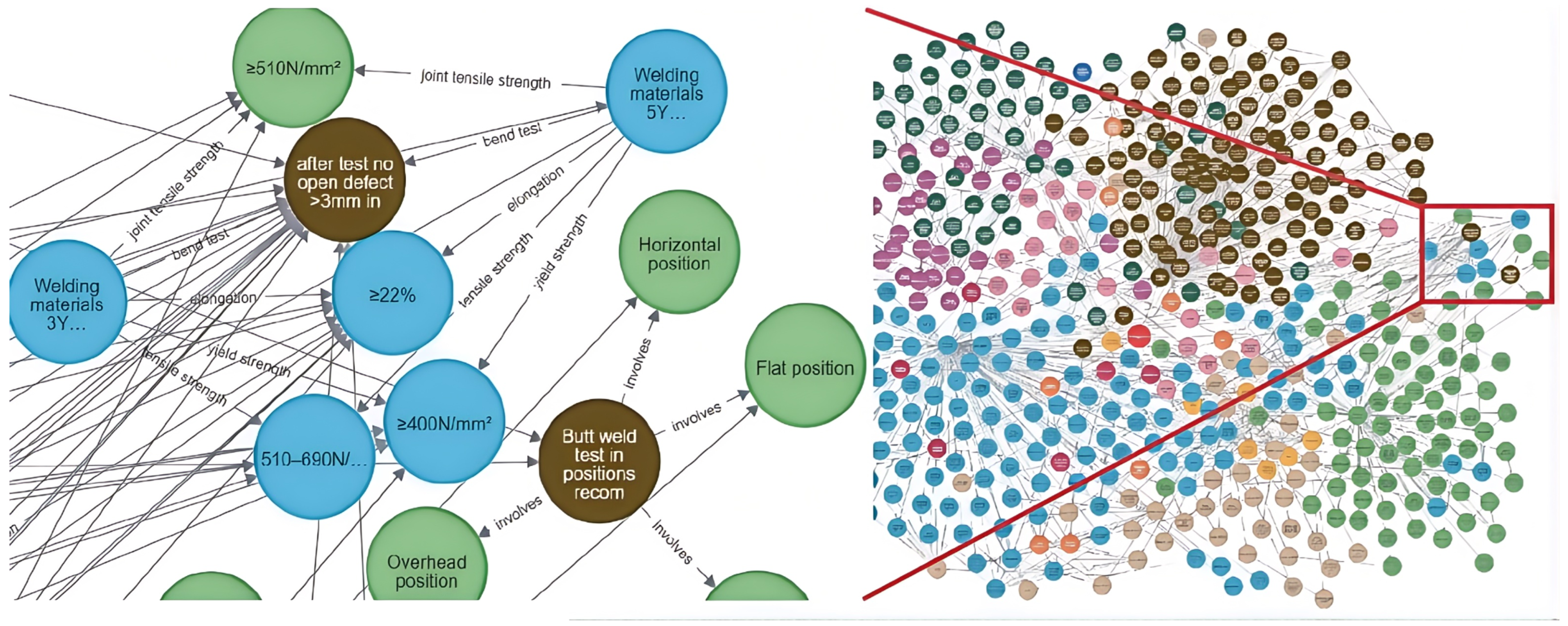

3.3. Construction of Knowledge Graphs and Vector Databases

3.3.1. Definition of Welding Process Entities

3.3.2. Graph Construction Based on Large Language Models

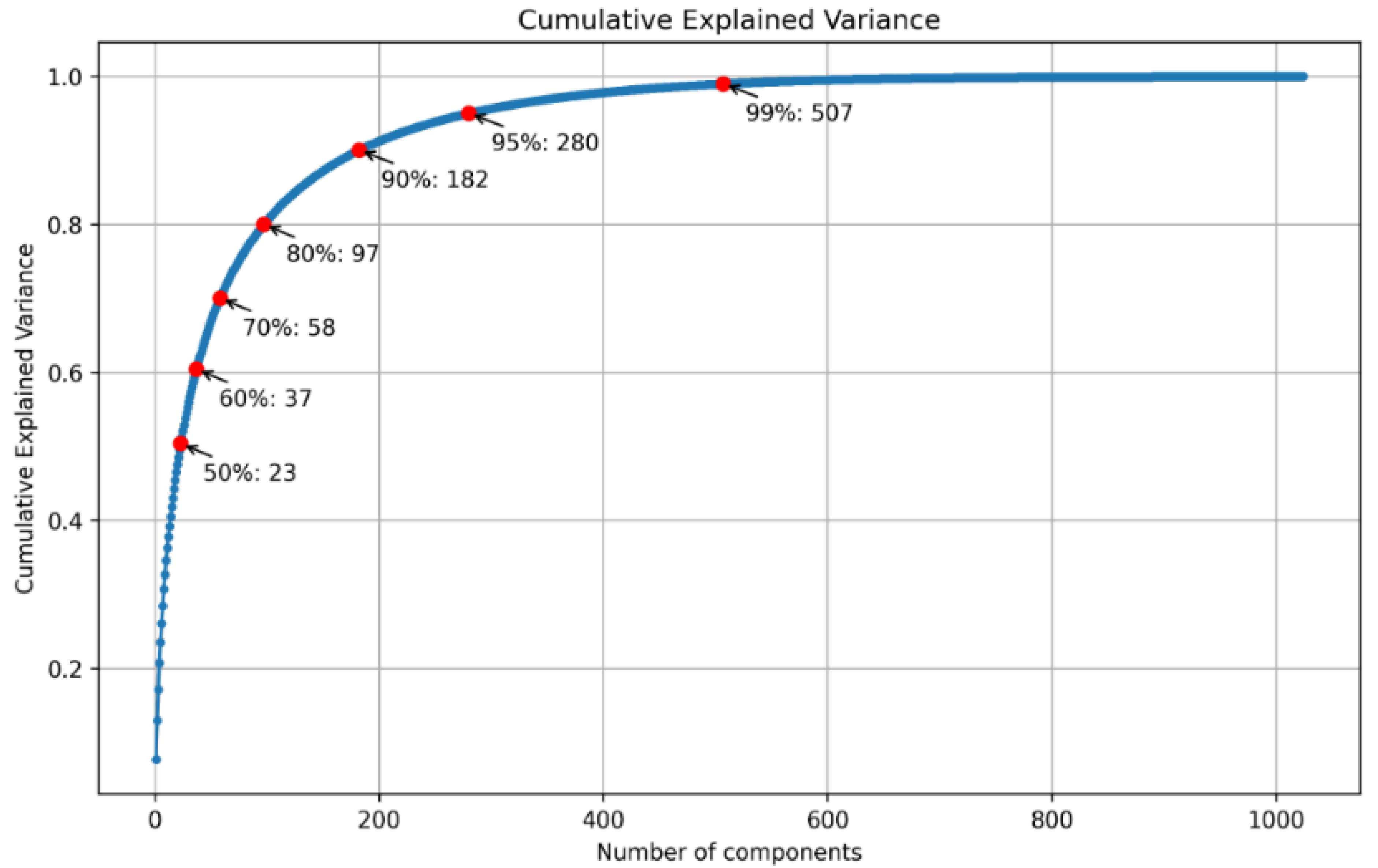

3.3.3. Document Vector Database Construction

| Algorithm 1: PCA-Based Vector Dimension Reduction |
|---|
| Input: Set of original high-dimensional vectors |
| Output: Set of reduced-dimension vectors |
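The steps of Algorithm 1 are not reproduced here, so the following is only a minimal sketch of a generic PCA reduction under stated assumptions: the data are centered, the sample covariance matrix is formed, and the leading components are extracted by power iteration with deflation. The function name `pca_reduce` and its parameters (`k`, `iters`, `seed`) are hypothetical and not taken from the paper; a production system would more likely rely on an established linear algebra library.

```python
import math
import random


def _matvec(matrix, vec):
    """Multiply a square matrix (list of rows) by a vector."""
    return [sum(m * x for m, x in zip(row, vec)) for row in matrix]


def _normalize(vec):
    """Return the unit-length version of vec, or None for a zero vector."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0.0 else None


def pca_reduce(vectors, k, iters=200, seed=0):
    """Project row vectors onto their top-k principal components (sketch)."""
    n, d = len(vectors), len(vectors[0])
    mean = [sum(v[j] for v in vectors) / n for j in range(d)]
    centered = [[v[j] - mean[j] for j in range(d)] for v in vectors]

    # Sample covariance matrix (d x d).
    cov = [[sum(r[a] * r[b] for r in centered) / (n - 1) for b in range(d)]
           for a in range(d)]

    rng = random.Random(seed)
    components = []
    for _ in range(k):
        # Power iteration: repeatedly apply cov and renormalize.
        w = _normalize([rng.uniform(-1.0, 1.0) for _ in range(d)])
        for _ in range(iters):
            nxt = _normalize(_matvec(cov, w))
            if nxt is None:  # no variance left in the deflated matrix
                break
            w = nxt
        eigval = sum(a * b for a, b in zip(w, _matvec(cov, w)))
        components.append(w)
        # Deflation: remove the found component before the next search.
        cov = [[cov[a][b] - eigval * w[a] * w[b] for b in range(d)]
               for a in range(d)]

    reduced = [[sum(x * c for x, c in zip(row, comp)) for comp in components]
               for row in centered]
    return reduced, components, mean
```

New query vectors would need to be centered with the same `mean` and projected onto the same `components` before similarity is computed in the reduced space.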

3.4. Hybrid Information Retrieval and Response Generation

3.4.1. Knowledge Graph Retrieval

3.4.2. Vector Database Retrieval

3.4.3. Hybrid-RAG Fusion Ranking Mechanism

| Algorithm 2: Dual-Channel Fusion Sorting Pseudocode |
|---|
| Input: Knowledge graph retrieval results (entities, relations, attributes, etc.) |
| Vector database retrieval results (relevant document fragments) |
| Original user query: Q |
| Output: The sorted results after integration |
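The body of Algorithm 2 is not reproduced here. As an illustration only, the sketch below assumes that fusion is a linear combination of the three signals discussed in Appendix B (logical form confidence, semantic relevance, and LLM plausibility) with the weights (0.6, 0.3, 0.1), and that each score has already been computed against the query Q. The `Candidate` fields, the function `fuse_and_rank`, and the text-based deduplication are hypothetical choices, not the authors' implementation.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    text: str                # answer fragment or serialized graph fact
    source: str              # "kg" or "vector"
    kg_confidence: float     # logical form confidence in [0, 1]; 0.0 if not from the KG
    semantic_score: float    # similarity to the query in [0, 1]
    llm_plausibility: float  # LLM-judged plausibility in [0, 1]


def fuse_and_rank(kg_results, vector_results, alpha=0.6, beta=0.3, gamma=0.1):
    """Merge both channels and sort by an assumed linear fusion score."""
    if abs(alpha + beta + gamma - 1.0) > 1e-9:
        raise ValueError("fusion weights are expected to sum to 1")

    best = {}
    for cand in list(kg_results) + list(vector_results):
        score = (alpha * cand.kg_confidence
                 + beta * cand.semantic_score
                 + gamma * cand.llm_plausibility)
        key = cand.text.strip().lower()  # naive duplicate detection
        if key not in best or score > best[key][0]:
            best[key] = (score, cand)

    # Highest fused score first.
    return sorted(best.values(), key=lambda pair: pair[0], reverse=True)


if __name__ == "__main__":
    kg = [Candidate("Glycerin method", "kg", 0.9, 0.5, 0.8)]
    vec = [Candidate("See Table 2.3.6.1", "vector", 0.0, 0.9, 0.7)]
    for score, cand in fuse_and_rank(kg, vec):
        print(f"{score:.2f}  [{cand.source}]  {cand.text}")
```

Keeping the per-signal scores on each candidate, rather than only the fused value, is what would let an answer be audited back to its graph path or source fragment.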

4. Experimental Design and Evaluation

4.1. Experimental Equipment and Parameters

4.2. Dataset

4.3. Evaluation Metrics

- Precision: Conceptual Q&A performance is evaluated by checking whether the generated answer aligns with the key content of the reference answer. Precision is calculated as $P = N_{\mathrm{correct}} / N_{\mathrm{generated}}$, where $N_{\mathrm{correct}}$ denotes the number of correct words generated by the model and $N_{\mathrm{generated}}$ denotes the total number of words generated by the model.

- Recall: Recall indicates the model’s capacity to recognize and incorporate all pertinent information. It is calculated as $R = N_{\mathrm{correct}} / N_{\mathrm{reference}}$, where $N_{\mathrm{correct}}$ denotes the number of correct words generated by the model and $N_{\mathrm{reference}}$ is the total number of words in the standard answer.

- F1 score: The F1 score combines precision and recall, making it more suitable for scenarios where answers contain partial matches. It is calculated as $F_1 = 2PR / (P + R)$.

- MAP@6: Mean average precision at k (MAP@k) evaluates the quality of a model’s ranked predictions in information retrieval tasks. It scores the top k results predicted for each sample and averages over all samples: $\mathrm{MAP@}k = \frac{1}{M}\sum_{i=1}^{M}\mathrm{AP@}k_i$, where M represents the number of welding process issues and $\mathrm{AP@}k_i$ denotes the average precision at k for the i-th issue, calculated as $\mathrm{AP@}k = \frac{1}{k}\sum_{j=1}^{k} P(j)\,\mathrm{rel}(j)$. Here $P(j)$ denotes the accuracy at the j-th position in the prediction list, calculated as $P(j) = \frac{1}{j}\sum_{m=1}^{j}\mathrm{rel}(m)$, where $\mathrm{rel}(j)$ is an indicator function: if the j-th prediction is in the actual answer list, $\mathrm{rel}(j) = 1$; otherwise, $\mathrm{rel}(j) = 0$. Because graph retrieval may produce multiple keywords or text segments, MAP@6 is used for evaluation.
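The metrics above can be sketched in a few lines. This is an illustrative implementation under assumptions, not the evaluation script used in the study: words are obtained by whitespace splitting, correct words are counted as multiset overlap with the reference answer, and AP@k sums the precision at each hit position and divides by k (other normalizations, such as dividing by the number of relevant items, are also common). All function names are hypothetical.

```python
from collections import Counter


def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    total = precision + recall
    return 2 * precision * recall / total if total else 0.0


def precision_recall_f1(generated, reference):
    """Word-level precision, recall and F1 between two answer strings."""
    gen, ref = generated.split(), reference.split()
    correct = sum((Counter(gen) & Counter(ref)).values())  # overlapping words
    precision = correct / len(gen) if gen else 0.0
    recall = correct / len(ref) if ref else 0.0
    return precision, recall, f1_score(precision, recall)


def average_precision_at_k(predicted, relevant, k=6):
    """AP@k with the assumed 1/k normalization."""
    hits, total = 0, 0.0
    for position, item in enumerate(predicted[:k], start=1):
        if item in relevant:
            hits += 1
            total += hits / position  # precision at this hit position
    return total / k


def map_at_k(all_predicted, all_relevant, k=6):
    """Mean of AP@k over all questions."""
    scores = [average_precision_at_k(p, r, k)
              for p, r in zip(all_predicted, all_relevant)]
    return sum(scores) / len(scores)


if __name__ == "__main__":
    # Reported precision and recall of the full system, in percent.
    print(round(f1_score(74.74, 84.57), 2))
```

As a consistency check, the harmonic mean of the reported precision (74.74%) and recall (84.57%) of the full system rounds to the reported F1 score of 79.35%.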

5. Experimental Results and Discussion

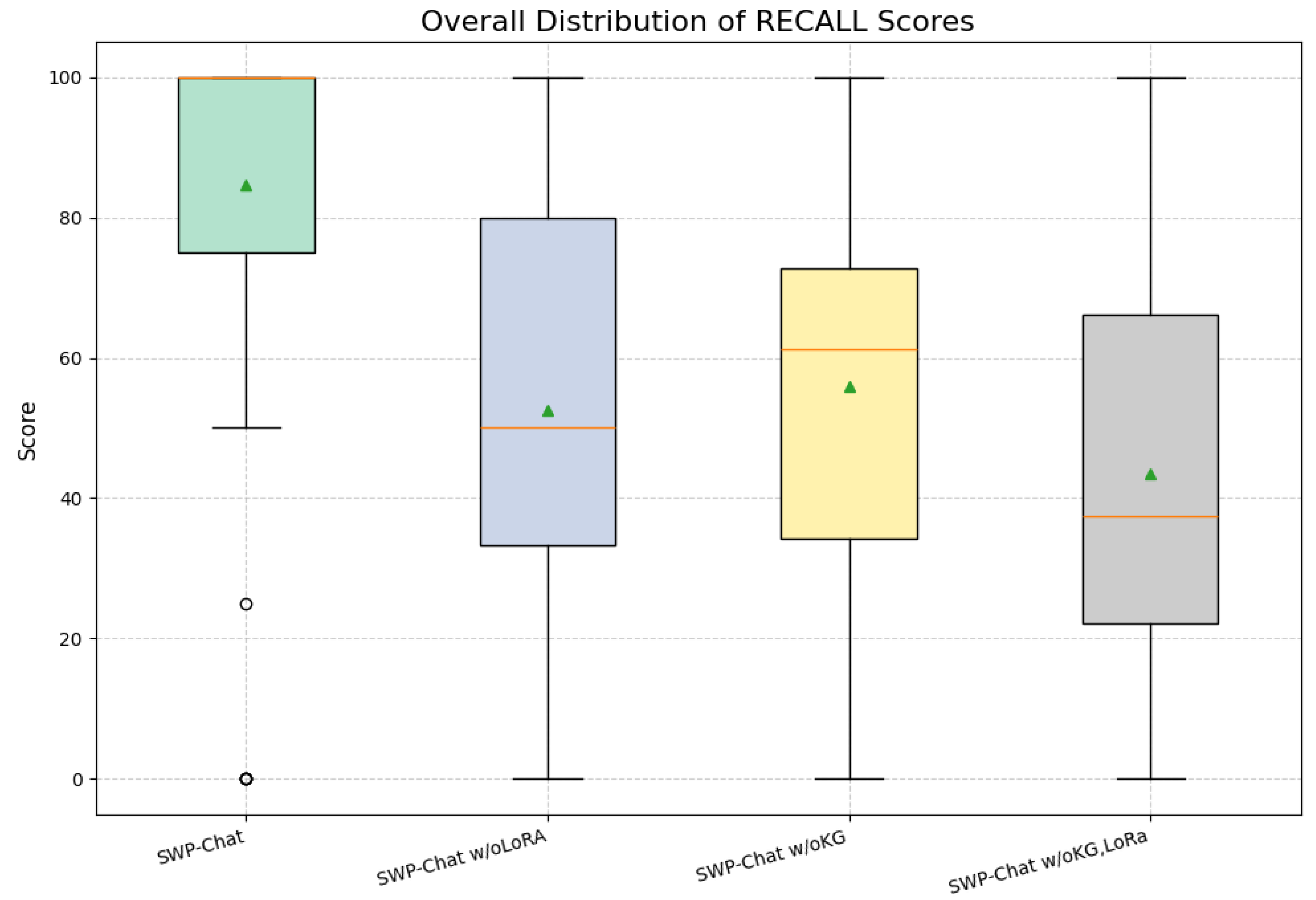

5.1. Ablation Experiment

5.2. Comparative Experiments

5.3. System Usability Analysis

5.4. Significance Testing

5.4.1. Wilcoxon Signed-Rank Test

5.4.2. Results Analysis

6. Conclusions

- (1) Knowledge graph maintenance and coverage: Real-time updates rely on manual review, and incomplete KG coverage limits structured reasoning, even when relevant documents exist in the vector database. Expanding multi-source data integration and automated update mechanisms will improve consistency.

- (2) Segmentation granularity of the vector database: Paragraph-based segmentation can compress key procedural details into short references, causing fragments to lose critical context during retrieval. Adaptive merging strategies based on syntax or topic coherence will improve semantic integrity.

- (3) Ambiguity in user queries: Without explicit disambiguation, identical terms may have different meanings in different process contexts, producing partial or incorrect answers. Future work will add LLM-based entity disambiguation and clarification questions to reduce ambiguity.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| LLM | Large Language Model |
| RAG | Retrieval-Augmented Generation |
| KG | Knowledge Graph |

Appendix A. LoRA Fine-Tuning Hyperparameter Configuration

Hyperparameter Settings for LoRA Fine Tuning

| Parameter | Value |
|---|---|
| Base Model | DeepSeek-V3 |
| LoRA Rank (r) | 8 |
| LoRA Scaling Factor (α) | 16 |
| LoRA Dropout Rate | 0.1 |
| Optimizer | AdamW |
| Learning Rate | |
| Batch Size | 4 |
| Target Modules | q_proj, k_proj, v_proj, o_proj |
| Training Epochs | 3 |
| Max Sequence Length | 512 |
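To make the rank and scaling values concrete, the sketch below works through the standard LoRA arithmetic: the low-rank update is scaled by the scaling factor divided by the rank, and each adapted weight matrix of shape d_out × d_in adds r · (d_in + d_out) trainable parameters. The hidden size of 4096 and the assumption of square projection matrices are purely illustrative and do not describe the actual dimensions of the base model.

```python
def lora_scaling(rank, alpha):
    """Scaling applied to the low-rank update B @ A in LoRA."""
    return alpha / rank


def lora_trainable_params(d_in, d_out, rank):
    """Parameters added by one adapter: A is (rank x d_in), B is (d_out x rank)."""
    return rank * (d_in + d_out)


RANK, ALPHA = 8, 16
TARGET_MODULES = ["q_proj", "k_proj", "v_proj", "o_proj"]
HIDDEN = 4096  # hypothetical hidden size, chosen only for illustration

per_layer_lora = sum(lora_trainable_params(HIDDEN, HIDDEN, RANK)
                     for _ in TARGET_MODULES)
per_layer_full = len(TARGET_MODULES) * HIDDEN * HIDDEN

if __name__ == "__main__":
    print("scaling factor:", lora_scaling(RANK, ALPHA))
    print("LoRA parameters per layer:", per_layer_lora)
    print("share of full projection weights:",
          f"{100 * per_layer_lora / per_layer_full:.2f}%")
```

Under these assumptions the adapters account for well under 1% of the projection weights in each layer, which is the reason a small rank keeps fine-tuning inexpensive.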

Appendix B. Sensitivity Analysis of Weighting Coefficients

Appendix B.1. Sensitivity Analysis of Weighting Coefficients (α, β, and γ)

- (1) Logical form confidence derived from knowledge graph retrieval (weight α);

- (2) Semantic relevance from vector database recall (weight β);

- (3) LLM-based answer plausibility score (weight γ).

Appendix B.2. Sensitivity Test Design

| Setting ID | α | β | γ |
|---|---|---|---|
| S1 | 0.8 | 0.1 | 0.1 |
| S2 | 0.7 | 0.2 | 0.1 |
| S3 | 0.6 | 0.3 | 0.1 |
| S4 | 0.5 | 0.4 | 0.1 |
| S5 | 0.4 | 0.5 | 0.1 |

| Setting (α, β, γ) | Precision | Recall | F1-Score | MAP@6 |
|---|---|---|---|---|
| S1 (0.8, 0.1, 0.1) | 68.32% | 77.12% | 72.45% | 76.59% |
| S2 (0.7, 0.2, 0.1) | 72.58% | 81.02% | 76.57% | 80.01% |
| S3 (0.6, 0.3, 0.1) | 74.74% | 84.57% | 79.35% | 81.48% |
| S4 (0.5, 0.4, 0.1) | 69.25% | 80.36% | 74.39% | 78.66% |
| S5 (0.4, 0.5, 0.1) | 66.51% | 77.62% | 71.42% | 75.38% |

Appendix B.3. Sensitivity Result Analysis

- Performance changes significantly as α and β vary, demonstrating that the fusion coefficients directly affect system behavior.

- Increasing β excessively (favoring semantic recall) introduces retrieval noise, reducing precision and F1 (S4 → S5).

- Overweighting α (favoring strict logical paths) reduces semantic coverage, causing missing context (S1 and S2 both score below S3).

- The (0.6, 0.3, 0.1) configuration provides the best trade-off between (1) structural reasoning from the knowledge graph, (2) semantic richness from vector recall, and (3) output plausibility from the LLM.
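The selection of the operating point can be reproduced directly from the reported sensitivity results. The sketch below simply encodes the five settings and picks the one that maximizes a chosen metric; the data structure and the function name `best_setting` are hypothetical, and the numbers are those reported for S1 to S5.

```python
# Reported results per setting: weights (alpha, beta, gamma) and metrics in percent.
RESULTS = {
    "S1": {"weights": (0.8, 0.1, 0.1), "precision": 68.32, "recall": 77.12,
           "f1": 72.45, "map6": 76.59},
    "S2": {"weights": (0.7, 0.2, 0.1), "precision": 72.58, "recall": 81.02,
           "f1": 76.57, "map6": 80.01},
    "S3": {"weights": (0.6, 0.3, 0.1), "precision": 74.74, "recall": 84.57,
           "f1": 79.35, "map6": 81.48},
    "S4": {"weights": (0.5, 0.4, 0.1), "precision": 69.25, "recall": 80.36,
           "f1": 74.39, "map6": 78.66},
    "S5": {"weights": (0.4, 0.5, 0.1), "precision": 66.51, "recall": 77.62,
           "f1": 71.42, "map6": 75.38},
}


def best_setting(results, metric="f1"):
    """Return the setting ID with the highest value of the given metric."""
    return max(results, key=lambda setting: results[setting][metric])


if __name__ == "__main__":
    for metric in ("precision", "recall", "f1", "map6"):
        winner = best_setting(RESULTS, metric)
        print(metric, "->", winner, RESULTS[winner]["weights"])
```

S3 is the best setting on all four metrics, so the choice of (0.6, 0.3, 0.1) does not depend on which metric is prioritized within this grid.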

Appendix C. End-to-End Auditability Example

References

1. The State Council of the People’s Republic of China. China Remains World’s Top Manufacturer for 15 Consecutive Years: Official. English News Release, Official Statistics; 9 July 2025. Available online: https://english.www.gov.cn/archive/statistics/202507/09/content_WS686e064dc6d0868f4e8f3fe1.html (accessed on 12 November 2025).

2. Iatrou, C.P.; Ketzel, L.; Graube, M.; Häfner, M.; Urbas, L. Design classification of aggregating systems in intelligent information system architectures. In Proceedings of the 2020 25th IEEE International Conference on Emerging Technologies and Factory Automation (ETFA), Vienna, Austria, 8–11 September 2020; Volume 1, pp. 745–752.

3. Wang, B.; Wang, G.; Huang, J.; You, J.; Leskovec, J.; Kuo, C.-C. Inductive learning on commonsense knowledge graph completion. In Proceedings of the 2021 International Joint Conference on Neural Networks (IJCNN), Shenzhen, China, 18–22 July 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–8.

4. Zeng, Z.; Cheng, Q.; Si, Y. Logical rule-based knowledge graph reasoning: A comprehensive survey. Mathematics 2023, 11, 4486.

5. Gao, Y.; Xiong, Y.; Gao, X.; Jia, K.; Pan, J.; Bi, Y.; Dai, Y.; Sun, J.; Wang, H.; Wang, H. Retrieval-augmented generation for large language models: A survey. arXiv 2023, arXiv:2312.10997v2.

6. Knollmeyer, S.; Caymazer, O.; Grossmann, D. Document GraphRAG: Knowledge graph enhanced retrieval augmented generation for document question answering within the manufacturing domain. Electronics 2025, 14, 2102.

7. Shaftee, S. Integration of product and manufacturing design: A systematic literature review. Procedia CIRP 2023, 121, 19–24.

8. Yahya, M.; Ali, A.; Mehmood, Q.; Yang, L.; Breslin, J.G.; Ali, M.I. A benchmark dataset with Knowledge Graph generation for Industry 4.0 production lines. Semant. Web 2024, 15, 461–479.

9. Meckler, S. Procedure model for building knowledge graphs for industry applications. arXiv 2024, arXiv:2409.13425.

10. Guo, L.; Li, X.; Yan, F.; Lu, Y.; Shen, W. A method for constructing a machining knowledge graph using an improved transformer. Expert Syst. Appl. 2024, 237, 121448.

11. Liu, X.; Wang, H. Knowledge Graph Construction and Decision Support Towards Transformer Fault Maintenance. In Proceedings of the 2021 IEEE 24th International Conference on Computer Supported Cooperative Work in Design (CSCWD), Dalian, China, 5–7 May 2021; IEEE: Dalian, China, 2021; pp. 661–666.

12. Zhang, Y.; Zhang, S.; Huang, R.; Huang, B.; Liang, J.; Zhang, H.; Wang, Z. Combining deep learning with knowledge graph for macro process planning. Comput. Ind. 2022, 140, 103668.

13. Dong, J.; Jing, X.; Lu, X.; Liu, J.; Li, H.; Cao, X.; Du, C.; Li, J.; Li, L. Process knowledge graph modeling techniques and application methods for ship heterogeneous models. Sci. Rep. 2022, 12, 2911.

14. Zhou, B.; Bao, J.; Chen, Z.; Liu, Y. KGAssembly: Knowledge graph-driven assembly process generation and evaluation for complex components. Int. J. Comput. Integr. Manuf. 2022, 35, 1151–1171.

15. Shi, X.; Tian, X.; Gu, J.; Yang, F.; Ma, L.; Chen, Y.; Su, T. Knowledge graph-based assembly resource knowledge reuse towards complex product assembly process. Sustainability 2022, 14, 15541.

16. Lin, L.; Zhang, S.; Fu, S.; Liu, Y. FD-LLM: Large language model for fault diagnosis of complex equipment. Adv. Eng. Inform. 2025, 65, 103208.

17. Meng, S.; Wang, Y.; Yang, C.-F.; Peng, N.; Chang, K.-W. LLM-A*: Large language model enhanced incremental heuristic search on path planning. arXiv 2024, arXiv:2407.02511.

18. Liu, Y.; Zhou, Y.; Liu, Y.; Xu, Z.; He, Y. Intelligent fault diagnosis for CNC through the integration of large language models and domain knowledge graphs. Engineering 2025, 53, 311–322.

19. Werheid, J.; Melnychuk, O.; Zhou, H.; Huber, M.; Rippe, C.; Joosten, D.; Keskin, Z.; Wittstamm, M.; Subramani, S.; Drescher, B.; et al. Designing an LLM-based copilot for manufacturing equipment selection. arXiv 2024, arXiv:2412.13774.

20. Jiang, X.; Qiu, R.; Xu, Y.; Zhu, Y.; Zhang, R.; Fang, Y.; Xu, C.; Zhao, J.; Wang, Y. RAGraph: A general retrieval-augmented graph learning framework. Adv. Neural Inf. Process. Syst. 2024, 37, 29948–29985.

21. Wang, J.; Fu, J.; Wang, R.; Song, L.; Bian, J. PIKE-RAG: SPecIalized KnowledgE and Rationale augmented generation. arXiv 2025, arXiv:2501.11551.

22. Barron, R.C.; Grantcharov, V.; Wanna, S.; Eren, M.E.; Bhattarai, M.; Solovyev, N.; Tompkins, G.; Nicholas, C.; Rasmussen, K.Ø.; Matuszek, C.; et al. Domain-specific retrieval-augmented generation using vector stores, knowledge graphs, and tensor factorization. In Proceedings of the 2024 International Conference on Machine Learning and Applications (ICMLA), Miami, FL, USA, 18–20 December 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1669–1676.

23. Opoku, D.O.; Sheng, M.; Zhang, Y. DO-RAG: A domain-specific QA framework using knowledge graph-enhanced retrieval-augmented generation. arXiv 2025, arXiv:2505.17058.

24. Russell-Gilbert, A. RAAD-LLM: Adaptive Anomaly Detection Using LLMs and RAG Integration. Ph.D. Dissertation, Mississippi State University, Starkville, MS, USA, 2025.

25. Sarmah, B.; Mehta, D.; Hall, B.; Rao, R.; Patel, S.; Pasquali, S. HybridRAG: Integrating knowledge graphs and vector retrieval augmented generation for efficient information extraction. In Proceedings of the ICAIF’24: 5th ACM International Conference on AI in Finance, Brooklyn, NY, USA, 14–17 November 2024; ACM: New York, NY, USA, 2024; pp. 608–616.

26. Tram, M.H.B. Efficacy of GraphRAG (Knowledge Graph-enhanced RAG) Beyond Keyword Matching for Information Retrieval. Ph.D. Dissertation, University of Jyväskylä, Jyväskylä, Finland, 2025.

27. Zhai, S.; Ji, H.; Zhang, K.; Wu, Y.; Ma, Z. Approximate Query for Industrial Fault Knowledge Graph Based on Vector Index. Int. J. Softw. Eng. Knowl. Eng. 2025, 35, 525–545.

28. Berant, J.; Chou, A.; Frostig, R.; Liang, P. Semantic parsing on Freebase from question-answer pairs. In Proceedings of the 2013 Conference on Empirical Methods in Natural Language Processing, Seattle, WA, USA, 18–21 October 2013; Association for Computational Linguistics: Seattle, WA, USA, 2013; pp. 1533–1544.

29. Maswadi, K.; Ghani, N.A.; Hamid, S.; Rasheed, M.B. Human activity classification using decision tree and naïve Bayes classifiers. Multimed. Tools Appl. 2021, 80, 21709–21726.

30. Guan, K.; Du, L.; Yang, X. Relationship extraction and processing for knowledge graph of welding manufacturing. IEEE Access 2022, 10, 103089–103098.

31. China Classification Society. Rules for Materials and Welding (2024) released by China Classification Society (CCS). Ship Stand. Eng. 2024, 57, 2. (In Chinese with English abstract).

32. Hu, E.J.; Shen, Y.; Wallis, P.; Allen-Zhu, Z.; Li, Y.; Wang, L.; Chen, W.; Chen, Y. LoRA: Low-Rank Adaptation of Large Language Models. arXiv 2021, arXiv:2106.09685.

33. Ben Zaken, E.; Goldberg, Y.; Ravfogel, S. BitFit: Simple Parameter-Efficient Fine-Tuning for Transformer-Based Masked Language-Models. arXiv 2022, arXiv:2106.10199.

| Query | Logical Forms |
|---|---|
| What are the mechanical property indicators of welding material grade 1Y? | 1. (JOIN Part_of mechanical properties of welding materials) 2. (JOIN Yield strength welding material grade 1Y) 3. (JOIN Elongation welding material grade 1Y) |
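One way such a JOIN step could be turned into a graph query is sketched below. It assumes that each step has already been split into a relation and an entity name, and that a step corresponds to a one-hop `MATCH` on a node identified by its `name` property, in the same shape as the Cypher shown for the Only-KG case. The function `join_to_cypher` is hypothetical; the entity name is passed as a query parameter, and identifiers are backtick-quoted because relation names may contain spaces.

```python
def join_to_cypher(relation, entity_name, label=None):
    """Build a parameterized one-hop Cypher query for a (JOIN relation entity) step."""
    for identifier in (relation, label or ""):
        if "`" in identifier:
            raise ValueError("backticks are not allowed in identifiers")

    # Backticks let Cypher accept labels and relationship types with spaces.
    node = f"(n:`{label}` {{name: $name}})" if label else "(n {name: $name})"
    query = f"MATCH {node}-[r:`{relation}`]-(m) RETURN m"
    return query, {"name": entity_name}


if __name__ == "__main__":
    steps = [
        ("Yield strength", "welding material grade 1Y"),
        ("Elongation", "welding material grade 1Y"),
    ]
    for relation, entity in steps:
        query, params = join_to_cypher(relation, entity)
        print(query, params)
```

Passing the entity name as a parameter rather than interpolating it into the query string keeps user-supplied text out of the Cypher statement itself.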

| ID | Entity Label | Entity Example |
|---|---|---|
| 1 | Welding materials | Welding rods, flux, and associated gases |
| 2 | Welding method | Shielded metal arc welding, gas shielded welding |
| 3 | Welding equipment | Torches, automatic gravity feeders, and gas chisels |
| 4 | Welding operation | Groove preparation, arc striking, tack welding, and workpiece heating |
| 5 | Welding base metal | Steel and aluminum alloys |
| 6 | Welded structure | Welding position, groove type, and joint design |
| 7 | Welding quality | Weld formation factors, welding defects, and various tests |
| 8 | Process parameter | Current, voltage, diameter, and wire feed speed |
| 9 | Table | - |
| 10 | Image | - |
| 11 | Others | - |

| Metric | Time Performance (ms) | Accuracy Performance (MAE) |
|---|---|---|
| Cosine Similarity | 0.5365 | 0.3799 |
| Cosine Similarity-PCA | 0.1692 | 0.3814 |

| Equipment | Configuration |
|---|---|
| GPU | RTX 2080Ti 12G |
| CUDA | 11.4 |
| Python | 3.9 |
| Neo4j | 5.18.1 |
| LLM | DeepSeek-V3, BGE-Reranker-m3, BGE-zh-v1.5 |

| Model | Precision (%) | Recall (%) | F1 Score (%) | MAP@6 (%) |
|---|---|---|---|---|
| SWP-Chat | 74.74 | 84.57 | 79.35 | 81.48 |
| SWP-Chat w/o KG | 35.77 | 55.92 | 43.63 | - |
| SWP-Chat w/o LoRA | 66.20 | 53.24 | 59.02 | 63.30 |
| SWP-Chat w/o KG, LoRA | 34.43 | 43.56 | 38.46 | - |
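The contribution of each component can be read off the ablation results as a difference in F1 score relative to the full system. The short sketch below performs that subtraction on the reported values; the dictionary layout and the function name `f1_drop` are hypothetical.

```python
# Reported F1 scores (%) from the ablation experiment.
ABLATION_F1 = {
    "SWP-Chat": 79.35,
    "SWP-Chat w/o KG": 43.63,
    "SWP-Chat w/o LoRA": 59.02,
    "SWP-Chat w/o KG, LoRA": 38.46,
}


def f1_drop(results, full="SWP-Chat"):
    """F1 lost (in percentage points) when components are removed."""
    return {name: round(results[full] - f1, 2)
            for name, f1 in results.items() if name != full}


if __name__ == "__main__":
    for variant, drop in f1_drop(ABLATION_F1).items():
        print(f"{variant}: -{drop:.2f} points")
```

Removing the knowledge graph costs 35.72 points, removing LoRA costs 20.33 points, and removing both costs 40.89 points, which matches the overall F1 improvement stated among the contributions.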

| Model | Precision (%) | Recall (%) | F1 Score (%) | Processing Time (s) |
|---|---|---|---|---|
| DeepSeek-R1 | 28.13 | 32.68 | 30.23 | 4.20 |
| ERNIE4.5-8k | 29.17 | 33.42 | 31.15 | 3.87 |
| Llama3-70b | 28.93 | 33.07 | 30.86 | 3.69 |
| ChatGLM3-32k | 28.71 | 33.51 | 30.92 | 3.67 |
| Qwen3-32B | 29.40 | 33.33 | 31.24 | 3.88 |
| Ours | 78.08 | 84.65 | 81.23 | 5.50 |

| Model | Precision (%) | Recall (%) | F1 Score (%) | Processing Time (s) |
|---|---|---|---|---|
| DeepSeek-R1 | 38.88 | 53.07 | 44.88 | 4.36 |
| ERNIE4.5-8k | 41.69 | 48.81 | 44.97 | 3.03 |
| Llama3-70b | 38.64 | 51.39 | 44.11 | 3.22 |
| ChatGLM3-32k | 38.36 | 51.24 | 43.87 | 3.16 |
| Qwen3.5-144b | 38.90 | 51.98 | 44.50 | 3.35 |
| Ours | 71.97 | 84.50 | 77.73 | 5.42 |

| Model | Results | Remark |
|---|---|---|
| Only-KG | Query statement: MATCH (n:Other{name:“Hydrogen Content Grade H15”})-[r:Measurement Method]-(m); MATCH (n:Other{name:“Hydrogen Content Grade H15”})-[r:Diffusion Hydrogen Content]-(q) RETURN m,q Query Results: []; []; [Mercury Method]; [Thermal Conductivity Method]; [Glycerin Method] | This requires knowledge of Cypher, raising the operational threshold and limiting usability for non-technical users; query results are returned as unordered nodes (content and method), so employees cannot directly associate specific methods with content. |
| SWP-Chat w/o KG, LoRA | Hydrogen content grade H15 may be determined using the mercury method, thermal conductivity method specified in ISO 3690 [31], or the glycerol method specified in 2.3.6.2. The corresponding diffusion hydrogen measurement procedure and specimen preparation quantity shall be performed according to Table 2.3.6.1 (the thermal conductivity method must meet the minimum collection time requirements at different test temperatures specified in Table 2.3.6.1). The hydrogen content grade is H15. | Without a knowledge graph structure, the system cannot accurately extract key information, leading to redundant and sometimes ambiguous responses. In addition, the system merely returns isolated strings such as “Table 2.3.6.1”, stripped of any explanatory context. |
| SWP-Chat w/o LoRA | The definition of hydrogen content grade H15 cannot be found in the provided information. Applicable measurement methods include the glycerol method. Diffusion hydrogen content refers to the hydrogen content collected through hydrogen measurement tests (such as thermal conductivity, gas chromatography, or carrier gas thermal extraction) under specific | Without LoRA fine tuning, the model exhibits insufficient intent recognition and logical generation capabilities, resulting in recall failures and an inability to generate correct Cypher statements. This leads to poor system reliability and incomplete information retrieval. |
| SWP-Chat w/o KG | Hydrogen content grade H15 can be measured using the mercury method, thermal conductivity method, or glycerol method. The corresponding diffusion hydrogen content cannot be determined from the information provided. | Vector-only retrieval introduces high semantic noise and lacks precise inference, making it difficult to determine exact hydrogen diffusion values. |
| SWP-Chat | The measurement methods corresponding to hydrogen content grade H15 include the mercury method, thermal conductivity method, and glycerin method. When using the glycerin method, the diffusion hydrogen content is ; for other methods, the diffusion hydrogen content is . | The combination of LLM-based logic generation and automatic Cypher execution enables zero-threshold natural interaction, providing concise and accurate answers, regardless of user expertise. |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ye, S.; Cai, L.; Zhang, Y.; Xin, X.; Jiang, B.; Qi, L. Intelligent Q&A System for Welding Processes Based on a Symmetric KG-DB Hybrid-RAG Strategy. Symmetry 2025, 17, 1994. https://doi.org/10.3390/sym17111994
