For scenarios with sparse local interactions and dynamic heterogeneity, we introduce a graph attention mean-field approach based on adversarial IRL. Within a graph network framework, it learns a reward free of the symmetric ambiguity problem together with a strategy optimization model. The model uses graph attention to capture local dependencies between agents, while the typed mean-field approximation propagates group strategy distributions to achieve global strategy coordination.

4.1. Problem Description

In dynamic heterogeneous multi-agent systems, consider a set of N agents. Suppose that, at time t, each agent i belongs to a certain type. The type and strategy of each agent may change over time. Therefore, dynamic heterogeneous multi-agent IRL is modeled as a dynamic heterogeneous graph network, where the nodes represent the agents and the edges represent the interaction relationships between them. Given expert demonstrations, the main goal is to learn each agent's strategy so as to maximize long-term cumulative rewards and to adapt to dynamic changes in type and topology. Actual expert demonstration data can be used as expert demonstrations. Alternatively, following references [21,27], the entropy-regularized MFNE strategy can be used to generate expert demonstration trajectories. Given a joint policy generated via the entropy-regularized MFNE, the entropy-regularized MFNE policy is the optimal solution to the following optimization problem:

where the regularization term is the KL divergence. The trajectory distribution increases exponentially with the cumulative reward, which avoids the symmetric ambiguity problem. Additionally, for each type k, we consider the individual strategy under the type-specific mean field and the population distribution, where the type-specific mean field is the state-action distribution of type k.
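To make the exponential trajectory distribution concrete, the Python sketch below assigns each trajectory in a small, hypothetical set a probability proportional to the exponential of its cumulative reward. It assumes deterministic dynamics and a finite, enumerable trajectory set, so transition probabilities and the mean-field flow are omitted; the function name and reward values are illustrative only.

```python
import numpy as np


def maxent_trajectory_distribution(step_rewards):
    """Return p(tau) proportional to exp(sum_t r_t) over a finite set of trajectories.

    step_rewards: array of shape (num_trajectories, horizon) holding hypothetical
    per-step rewards along each trajectory (deterministic dynamics assumed).
    """
    returns = np.asarray(step_rewards, dtype=float).sum(axis=1)  # cumulative reward per trajectory
    logits = returns - returns.max()                             # shift for numerical stability
    weights = np.exp(logits)
    return weights / weights.sum()


# Three toy trajectories with cumulative rewards 2, 1, and 0.
probs = maxent_trajectory_distribution([[1.0, 1.0], [0.5, 0.5], [0.0, 0.0]])
print(probs)
```

A higher return always yields a strictly higher probability, and the probability ratio of two trajectories depends only on the difference of their returns (a factor of e between the first two here).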

4.2. Improved IRL Based on Graph Attention Mean Field

First, we obtain expert demonstration trajectories sampled through the entropy-regularized MFNE. Assuming that these expert demonstration trajectories are optimal, we maximize the likelihood of the expert trajectories under the stationary distribution of the entropy-regularized MFNE, which is in turn induced by ω-parameterized rewards. These expert policies conform to the characteristics of a mean-field Nash equilibrium with some unknown parameterized rewards. Consider the entropy-regularized MFNE induced by ω; learning the rewards can then be regarded as adjusting ω. The probability of a trajectory generated via the entropy-regularized MFNE policies with parameter ω is expressed through the stationary joint distributions, the distribution of the initial mean-field flow, and the state transition probability.

The classical maximum entropy IRL proposed by Ziebart et al. [40] learns the reward function by maximizing the likelihood of the observed expert demonstration trajectory distribution defined in Equation (1). Inspired by this approach, learning the reward function in dynamic heterogeneous multi-agent IRL reduces to maximizing the likelihood of the expert trajectories under the ω-parameterized trajectory distribution, where the partition function is defined as a summation over all possible trajectories.
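As an illustration of this objective, the sketch below assumes a linear reward over hypothetical trajectory features, R_ω(τ) = ω·φ(τ), and a finite set of candidate trajectories, so the partition function can be summed exactly. It returns the average log-likelihood of the expert trajectories and its gradient (expert feature expectation minus model feature expectation). A second helper estimates log Z by importance sampling from a sampler distribution q, the idea Section 4.3 applies with adaptive samplers. All function names are our own.

```python
import numpy as np


def maxent_log_likelihood(omega, traj_features, expert_idx):
    """Average MaxEnt IRL log-likelihood of expert trajectories and its gradient.

    Assumes a linear reward R_omega(tau) = omega . phi(tau); traj_features[j] holds
    the (hypothetical) feature vector phi of candidate trajectory j, and the
    partition function Z(omega) sums exp(R_omega) over all candidates.
    """
    returns = traj_features @ omega
    log_z = np.logaddexp.reduce(returns)           # log Z(omega), computed stably
    model_p = np.exp(returns - log_z)              # p_omega(tau) for every candidate
    expert_phi = traj_features[expert_idx]
    avg_ll = float(np.mean(expert_phi @ omega) - log_z)
    grad = expert_phi.mean(axis=0) - model_p @ traj_features  # E_expert[phi] - E_p[phi]
    return avg_ll, grad


def importance_sampled_log_z(omega, sample_features, sampler_probs):
    """Estimate log Z(omega) = log E_q[exp(R_omega(tau)) / q(tau)] from samples of q."""
    log_w = sample_features @ omega - np.log(sampler_probs)
    return float(np.logaddexp.reduce(log_w) - np.log(len(log_w)))


features = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
ll, grad = maxent_log_likelihood(np.zeros(2), features, expert_idx=np.array([0, 2]))
```

Evaluating every candidate exactly once under a uniform q reproduces log Z exactly, which is a convenient sanity check. In practice q would be the adaptive sampler, and only a subset of trajectories would be drawn.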

However, during reward learning and strategy optimization, IRL must handle dynamic heterogeneous agents whose neighboring strategies may differ considerably. This is the main challenge addressed in this study. Therefore, we propose a hierarchical graph attention mean-field multi-agent inverse reinforcement learning method. It infers implicit reward functions from expert demonstration trajectories through AIRL and learns dynamic heterogeneous policies conditioned on the mean-field distribution m, thereby adapting to dynamic changes in type and topology. First, to capture the dynamic interactions among agents and reduce computational complexity, we model latent variables of the dynamic heterogeneous agents. Given the expert demonstration trajectories, a variational autoencoder (VAE) encoder takes each agent's current state and previous action as input and outputs a latent variable z. The type of agent i is determined by its latent variable and is updated at each time step. This yields the type probability distribution of the agents, in which each type k is represented by a prototype vector. The prototypes are updated through a sliding-window expectation-maximization (EM) algorithm, which keeps the type estimates current and achieves online clustering.
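The encoding and type-assignment step can be sketched as follows. The sketch assumes the VAE encoder has already produced a mean and log-variance for an agent and draws z with the standard reparameterization trick. Because the section does not reproduce the form of the type distribution, it assumes a softmax over negative squared distances to the type prototypes with a temperature. The function names, the temperature parameter, and the prototype values are hypothetical.

```python
import numpy as np


def sample_latent(mu, log_var, rng):
    """Reparameterized VAE sample z = mu + sigma * eps with eps ~ N(0, I)."""
    eps = rng.standard_normal(np.shape(mu))
    return mu + np.exp(0.5 * log_var) * eps


def type_probabilities(z, prototypes, temperature=1.0):
    """Softmax over negative squared distances from z to each type prototype (assumed form)."""
    sq_dist = ((prototypes - z) ** 2).sum(axis=1)
    logits = -sq_dist / temperature
    logits -= logits.max()                      # numerical stability
    probs = np.exp(logits)
    return probs / probs.sum()


rng = np.random.default_rng(0)
prototypes = np.array([[0.0, 0.0], [3.0, 3.0]])                 # hypothetical prototypes, K = 2
z = sample_latent(np.array([2.8, 3.1]), np.full(2, -6.0), rng)   # small encoder variance
probs = type_probabilities(z, prototypes)
agent_type = int(np.argmax(probs))                              # most probable type for this agent
```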

The pseudocode of the online type inference and clustering via the sliding window EM is shown in Algorithm 1.

Algorithm 1 Online type inference and clustering via sliding window EM

Require: Sliding window size W, number of types K, type encoder, current time t.
Ensure: Agent types and updated prototypes.
1: Initialize: Maintain a buffer of recent latent variables.
2: for each agent do
3:     Encode the latent variable.
4:     Add it to the buffer; remove the oldest entries if the buffer size exceeds W.
5: end for
6: E-step (Responsibility): For each latent-agent pair, compute the responsibility that type k has for z.
7: M-step (Maximization): Update each type prototype.
8: for each agent do
9:     Re-assign the type based on the updated prototypes.
10: end for

The process of online type inference and clustering is detailed in Algorithm 1. The core of this process is a sliding window EM algorithm that operates on a fixed-size buffer containing the most recent latent variables of all agents. This buffer, with size W, ensures that the type prototypes adapt to recent agent behaviors, providing robustness against non-stationarity.

The algorithm proceeds as follows. First, for each agent, the type encoder generates a new latent variable based on its current state and previous action (Lines 3-4), and these new latent variables are added to the sliding window buffer. The E-step (Line 6) computes the responsibility, that is, the probability that a latent variable z belongs to type k given the current prototypes. The M-step (Line 7) then updates each prototype as the weighted average of all latent variables in the buffer, with their responsibilities as weights. Finally, each agent is assigned to the type whose updated prototype is closest to its current latent variable (Line 9).

This loop runs at every time step t, allowing the types and their representations to evolve continuously as the agents’ strategies and the environment change. The use of a sliding window prevents the prototypes from being overly influenced by outdated behavior, striking a balance between adaptability and stability.
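A compact Python sketch of Algorithm 1 is given below. It assumes isotropic Gaussian components with a fixed shared variance, so the E-step reduces to a softmax over negative squared distances (a soft k-means variant). The class name, the variance parameter, and the rule of leaving an empty type's prototype unchanged are our own choices, not details from the text.

```python
from collections import deque

import numpy as np


class SlidingWindowEM:
    """Online soft clustering of agent latent variables over the last W buffer entries."""

    def __init__(self, prototypes, window_size, variance=1.0):
        self.prototypes = np.array(prototypes, dtype=float)   # shape (K, d_z)
        self.buffer = deque(maxlen=window_size)               # oldest entries drop out automatically
        self.variance = variance

    def responsibilities(self, z_batch):
        """E-step: probability that each latent in z_batch belongs to each type."""
        sq = ((z_batch[:, None, :] - self.prototypes[None, :, :]) ** 2).sum(axis=-1)
        logits = -0.5 * sq / self.variance
        logits -= logits.max(axis=1, keepdims=True)
        r = np.exp(logits)
        return r / r.sum(axis=1, keepdims=True)               # shape (len(z_batch), K)

    def step(self, latents):
        """One time step: buffer each agent's latent, run E and M steps, reassign types."""
        latents = np.asarray(latents, dtype=float)
        for z in latents:                                     # Lines 2-5: add to the buffer
            self.buffer.append(z)
        z_buf = np.stack(list(self.buffer))
        r = self.responsibilities(z_buf)                      # Line 6: E-step
        weight = r.sum(axis=0)
        active = weight > 1e-12                               # keep prototypes of empty types
        self.prototypes[active] = (r.T @ z_buf)[active] / weight[active][:, None]  # Line 7: M-step
        sq = ((latents[:, None, :] - self.prototypes[None, :, :]) ** 2).sum(axis=-1)
        return sq.argmin(axis=1)                              # Lines 8-10: nearest prototype


em = SlidingWindowEM(prototypes=[[0.0, 0.0], [5.0, 5.0]], window_size=4)
types_t0 = em.step([[0.1, -0.1], [5.2, 4.9]])
types_t1 = em.step([[0.0, 0.2], [5.0, 5.1], [0.2, 0.0]])
```

After the second call, the buffer holds only the four most recent latents, so the prototypes move to the means of the current clusters (approximately [0.1, 0.1] and [5.1, 5.0]). This illustrates how the window discards outdated behavior.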

Then, based on the definition in Section 3.1, each node i in the heterogeneous dynamic graph of the graph attention mean field has a feature vector and a neighbor set. The neighbor set consists of the agents within the interaction radius, capped at the maximum number of neighbors. To achieve local feature updating, a graph attention network (GAT) is used to update the node features according to Equation (12).

In Equation (12), the attention coefficient is computed from type-specific weight matrices and an attention parameter for each type pair. For each type k, the type mean field depends on the strategies of all agents of that type, and these strategies are influenced by the reward function through the parameter ω; hence, the type mean field also depends on ω. This property makes the mean-field flow and the strategy in the entropy-regularized MFNE in Equation (10) interdependent, so the type mean field cannot be obtained directly. Furthermore, the state transition probabilities in Equation (10) also depend on the mean field and on the dynamic changes in the multi-agent environment. Directly solving the optimization problem in Equation (10) is therefore difficult and increases the computational complexity of learning rewards and optimizing strategies in a dynamic multi-agent environment. Instead, we use an MLP to map the average node feature of the agents of type k at time t to the type mean field (Equation (13)).
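The neighbor selection, type-dependent attention, and typed mean-field steps described above can be sketched in NumPy as follows. Several details are assumptions because Equations (12) and (13) are not reproduced here. Neighbors are the closest agents within the interaction radius (excluding the agent itself). Scores follow the standard GAT form LeakyReLU(aᵀ[W h_i ‖ W h_j]), with type-specific W and a per type pair. tanh is used as the output nonlinearity, and the MLP is passed in as an arbitrary callable. All function names and array shapes are hypothetical.

```python
import numpy as np


def leaky_relu(x, slope=0.2):
    return np.where(x > 0, x, slope * x)


def select_neighbors(positions, i, radius, max_neighbors):
    """Closest agents within `radius` of agent i, at most `max_neighbors` of them."""
    dist = np.linalg.norm(positions - positions[i], axis=1)
    dist[i] = np.inf                                    # exclude the agent itself
    idx = np.flatnonzero(dist <= radius)
    return idx[np.argsort(dist[idx])][:max_neighbors]


def type_aware_gat(h, types, positions, W, a, radius, max_neighbors):
    """One attention layer whose projections and attention vectors depend on agent types.

    h: (N, d_in) node features; types: (N,) integers in [0, K)
    W: (K, d_in, d_out) type-specific weight matrices
    a: (K, K, 2 * d_out) attention vector for every (type_i, type_j) pair
    """
    proj = np.einsum("ni,nio->no", h, W[types])         # W_{k_i} h_i for every node
    out = np.empty_like(proj)
    for i in range(h.shape[0]):
        nbrs = select_neighbors(positions, i, radius, max_neighbors)
        if nbrs.size == 0:                              # isolated agent keeps its own projection
            out[i] = np.tanh(proj[i])
            continue
        pairs = np.hstack([np.repeat(proj[i][None, :], nbrs.size, axis=0), proj[nbrs]])
        scores = leaky_relu((a[types[i], types[nbrs]] * pairs).sum(axis=1))
        alpha = np.exp(scores - scores.max())
        alpha /= alpha.sum()                            # softmax over the neighbor set
        out[i] = np.tanh(alpha @ proj[nbrs])
    return out


def typed_mean_fields(h, types, num_types, mlp):
    """Type mean field: `mlp` applied to the average feature of the agents of each type."""
    fields = []
    for k in range(num_types):
        members = h[types == k]
        avg = members.mean(axis=0) if len(members) else np.zeros(h.shape[1])  # empty type -> zeros
        fields.append(mlp(avg))
    return np.stack(fields)


rng = np.random.default_rng(0)
types = np.array([0, 1, 0, 1, 1])
h = rng.standard_normal((5, 3))
positions = rng.uniform(0.0, 1.0, size=(5, 2))
out = type_aware_gat(h, types, positions, W=rng.standard_normal((2, 3, 4)),
                     a=rng.standard_normal((2, 2, 8)), radius=0.5, max_neighbors=2)
mean_fields = typed_mean_fields(out, types, num_types=2, mlp=np.tanh)
```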

Each agent's strategy depends on the type mean fields. Therefore, the mean fields of all types are aggregated to form global information, and the dynamic heterogeneous strategies of all agents are calculated from this global information together with each agent's node feature.
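One simple way to realize this aggregation and strategy computation is sketched below. The type mean fields are concatenated into a global vector, appended to the agent's node feature, and passed through a linear softmax head. Concatenation and the linear head are assumptions for illustration; the function names and weight shapes are hypothetical.

```python
import numpy as np


def global_information(type_mean_fields):
    """Aggregate the K type mean fields into one global vector (here by concatenation)."""
    return np.concatenate(type_mean_fields)


def agent_policy(node_feature, type_mean_fields, weight, bias):
    """Action distribution for one agent from its node feature and the global mean-field vector."""
    x = np.concatenate([node_feature, global_information(type_mean_fields)])
    logits = weight @ x + bias
    logits -= logits.max()                     # numerical stability
    p = np.exp(logits)
    return p / p.sum()


probs = agent_policy(np.ones(2), [np.zeros(2), np.ones(2)],
                     weight=np.zeros((3, 6)), bias=np.array([0.0, 0.0, np.log(2.0)]))
```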

Owing to the overall consistency condition in GAMF-DHIRL (proven in later sections), the mapping of the agents' average node feature at each time step matches the mean field. At the same time, the type mean field in Equation (14) makes the transition function depend only on the mean field, thereby decoupling it from the reward parameter ω. Therefore, ω can be omitted from the transition probabilities in the likelihood function of Equation (10). This yields the maximum likelihood estimation of GAMF-DHIRL, which involves the m-dependent rewards and the corresponding partition function. Based on the optimization objective of the maximum entropy IRL proposed by Ziebart et al. [40], we obtain the maximum likelihood estimation objective in Equation (10).

Theorem 1.

Consider a multi-agent system with dynamic agent types and a graph structure. Let the expert demonstration trajectories be independent and identically distributed, sampled from the MAT-MFNE induced by some unknown rewards. Assume that the reward is differentiable with respect to the reward parameter ω for all states, actions, and mean fields. Then, under Assumptions A1–A3, as the number of expert demonstrations tends to infinity, the first-order condition of the empirical objective has a root that tends toward the maximizer of the likelihood function in Equation (10).

Assumption 1.

The mean-field distribution is a consistent estimator of the true empirical distribution for all t, and the error introduced by the typed decomposition is bounded.

Assumption 2.

In the dynamic graph, the graph diameter is bounded at every time step t, ensuring information propagation in the system. The agent type inference is statistically consistent.

Assumption 3.

The reward function is twice continuously differentiable in ω for all states, actions, and mean fields, and the Hessian of the log-pseudolikelihood is negative definite in a neighborhood of the true parameter.

Proof. Consider a standard game with N players and a reward function parameterized by ω. Assume that the expert demonstrations are generated under the true parameter value. The pseudolikelihood objective is to maximize Equation (17). Based on Equation (16), we compute the gradient of the objective with respect to ω.

Note that our objective depends on the type-based mean-field distribution, which is a direct consequence of our MAT-MFNE framework. Under Assumption A1, we can treat the mean field as an exogenous input that converges to its true value, which allows us to differentiate the objective with respect to ω and derive the gradient.

Let the empirical expert demonstration trajectory distribution be formed from the demonstrations; Equation (17) can then be written in terms of this empirical distribution. As the number of demonstrations tends to infinity, by the law of large numbers and Assumption A1, the empirical distribution tends toward the true trajectory distribution. Consider the maximizer of the likelihood objective in Equation (10). Furthermore, under Assumption A2, the dynamic graph and type inference ensure that the model distribution is well-defined. In the limit of infinitely many expert demonstration samples and at optimality, we obtain the relation in Equation (19). Substituting Equation (19) into Equation (18) shows that the gradient in Equation (18) is 0. □
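The limiting argument can be checked numerically in a simplified setting. The sketch assumes a linear reward over hypothetical features of a finite trajectory set. In that case, the gradient of the average log-likelihood is the empirical feature expectation minus the model feature expectation. At the true parameter, the gradient is exactly zero when the empirical distribution equals the true one (the infinite-sample limit) and small for a large finite sample. The features, parameter values, and function names are illustrative assumptions.

```python
import numpy as np


def model_distribution(omega, phi):
    """p_omega(tau) proportional to exp(omega . phi(tau)) over a finite trajectory set."""
    r = phi @ omega
    w = np.exp(r - r.max())
    return w / w.sum()


def likelihood_gradient(omega, phi, empirical):
    """Gradient E_empirical[phi] - E_{p_omega}[phi] of the average log-likelihood."""
    return empirical @ phi - model_distribution(omega, phi) @ phi


rng = np.random.default_rng(1)
phi = rng.standard_normal((6, 3))            # hypothetical features of 6 trajectories
omega_true = np.array([0.5, -1.0, 2.0])
p_true = model_distribution(omega_true, phi)

# Infinite-sample limit: the empirical distribution equals p_true, so the gradient vanishes.
grad_limit = likelihood_gradient(omega_true, phi, p_true)

# Finite sample: empirical frequencies from M demonstrations give a small, nonzero gradient.
M = 100_000
counts = np.bincount(rng.choice(6, size=M, p=p_true), minlength=6)
grad_finite = likelihood_gradient(omega_true, phi, counts / M)
```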

4.3. Graph Attention Mean Field Adversarial IRL

Theorem 1 bridges the gap between the original intractable MLE objective in Equation (10) and the tractable empirical MLE objective in Equation (16). However, as discussed in Section 3.3, the exact computation of the partition function is typically difficult. Similar to AIRL [41], we employ importance sampling to estimate the partition function with an adaptive sampler. Because the policies in MFGs are time-varying, we use a set of T adaptive samplers, each of which is a parameterized policy. Our proposed GAMF-DHIRL infers implicit reward functions from expert demonstration trajectories and learns strategies that are conditioned on the mean-field distribution m and adapt to dynamic heterogeneity. This framework optimizes rewards and strategies through a game between the generator and the discriminator, enabling agents to adapt dynamically to type changes and to the non-stationarity of population distributions. The discriminator is designed to distinguish between expert strategies and generated strategies, and its output provides an estimate of the reward function. The optimization objective is as follows:

The generator maximizes the discriminator reward subject to the graph attention mean-field game equilibrium:

The objective of the generator is optimized as follows:

where optimizing ω is equivalent to estimating the reward function. The policy gradient includes a mean-field term. The update of the policy parameters is interleaved with the update of the reward parameter ω. Intuitively, tuning the sampler parameters can be viewed as a policy optimization process, that is, finding the GAMF-DHIRL policy induced by the current reward parameter so as to minimize the variance of importance sampling. Training the reward parameter estimates the reward function by distinguishing between the observed trajectories and those generated by the current adaptive samplers. The samplers can be trained by backward induction, that is, by adjusting the sampler at each time step based on the sampler at the subsequent time step. In the optimal case, the discriminator approximates the latent reward function of GAMF-DHIRL, while the samplers approximate the observed policy. Each agent achieves efficient and adaptive collaboration with its neighbors through sparse graph attention, and the typed mean fields ensure the statistical consistency of group strategies. Finally, we obtain the reward model, in which a dedicated term captures the impact of the population distribution.
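For reference, the standard AIRL discriminator form and the reward signal passed to the generator are written out below. The sketch assumes the learned function f(s, a, m) and the generator probability π(a | s, m) have already been evaluated for a batch, so the mean field enters only through those inputs. It shows that log D − log(1 − D) reduces to f − log π. The function names and batch values are hypothetical.

```python
import numpy as np


def airl_discriminator(f_value, policy_prob):
    """AIRL-form discriminator D = exp(f) / (exp(f) + pi), written as 1 / (1 + pi * exp(-f))."""
    return 1.0 / (1.0 + policy_prob * np.exp(-f_value))


def airl_reward(f_value, policy_prob):
    """Reward for the generator: log D - log(1 - D), which simplifies to f - log pi."""
    d = airl_discriminator(f_value, policy_prob)
    return np.log(d) - np.log1p(-d)


f_batch = np.array([1.0, -0.5])        # hypothetical values of f(s, a, m)
pi_batch = np.array([0.25, 0.9])       # hypothetical generator probabilities pi(a | s, m)
rewards = airl_reward(f_batch, pi_batch)
```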

Theorem 2. (Consistency of Adversarial Rewards) Under the AIRL framework [4], and assuming that the expert policy and the learner's policy induce state-action mean-field distributions that are absolutely continuous with respect to each other, the reward function recovered by the optimally trained discriminator in the AIRL setting satisfies the following:

1. Policy Invariance: At Nash equilibrium, the learned policy perfectly recovers the expert policy, and the induced mean-field distributions are indistinguishable, i.e., they coincide.

2. Reward Authenticity: The recovered reward is a monotonic transformation of the true underlying reward. Specifically, there exists a strictly increasing function g and a function that depends only on the state and mean field such that:

Proof. The proof follows and extends the theoretical foundations of AIRL [4] to the graph-based mean-field multi-agent setting.

The discriminator, parameterized to distinguish expert trajectories from generated ones, is trained to minimize the cross-entropy loss:

For a fixed generator policy, the optimal discriminator for this objective is known to be the ratio of the expert distribution to the sum of the expert and generator distributions (Equation (26)), where the generator distribution is the state-action-mean-field distribution induced by the policy.
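The optimal-discriminator claim can be verified pointwise on a finite support. For fixed expert and generator distributions, the expected cross-entropy is minimized by D* = p_E / (p_E + p_π). The distributions below are hypothetical, and the check confirms that perturbing D* at any single point increases the loss. The helper names are our own.

```python
import numpy as np


def discriminator_loss(d, p_expert, p_gen):
    """Expected cross-entropy -E_expert[log D] - E_gen[log(1 - D)] on a finite support."""
    return -(p_expert * np.log(d)).sum() - (p_gen * np.log1p(-d)).sum()


p_expert = np.array([0.6, 0.3, 0.1])    # hypothetical expert state-action-mean-field distribution
p_gen = np.array([0.2, 0.3, 0.5])       # hypothetical generator distribution
d_star = p_expert / (p_expert + p_gen)   # closed-form optimal discriminator
base_loss = discriminator_loss(d_star, p_expert, p_gen)


def perturbed_loss(index, delta):
    """Loss after shifting D* by `delta` at a single support point."""
    d = d_star.copy()
    d[index] += delta
    return discriminator_loss(d, p_expert, p_gen)
```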

Following the AIRL formulation [4], we parameterize the discriminator as the ratio of exp(f) to the sum of exp(f) and the generator policy, where f is a learned function. Substituting this parameterization into the optimal discriminator form in Equation (26) and equating the two at equilibrium yields:

Taking the logarithm on both sides, we obtain:

Under the maximum entropy policy framework [40], the expert's trajectory distribution is proportional to the exponential of the cumulative true reward. This implies that the expert state-action distribution satisfies a corresponding relation involving a log-partition function that depends only on the state and mean field. A similar relation holds for the generator policy, where A is the advantage function.

Substituting these into Equation (29) and simplifying, we get:

Crucially, in the single-agent case, AIRL [4] proves that, if f is decomposed into a reward approximator and a potential-based shaping term and the policy is trained with a discount factor, the advantage term cancels the action-dependent log-policy term, leaving a state-only reward. In our multi-agent mean-field context, we make the corresponding assumption that the learned function f can recover a reward function that is disentangled from the policy. Thus, at convergence, when the learned policy matches the expert policy, we have:

where the additional term is an arbitrary function of the state and mean field. This completes the proof: the recovered reward equals the true reward up to a shift that depends only on the state and mean field, which fulfills the condition for reward authenticity. □

The pseudo-code of the graph attention mean field adversarial inverse reinforcement learning is shown in Algorithm 2. The overall structural framework of graph attention mean field adversarial inverse reinforcement learning is shown in

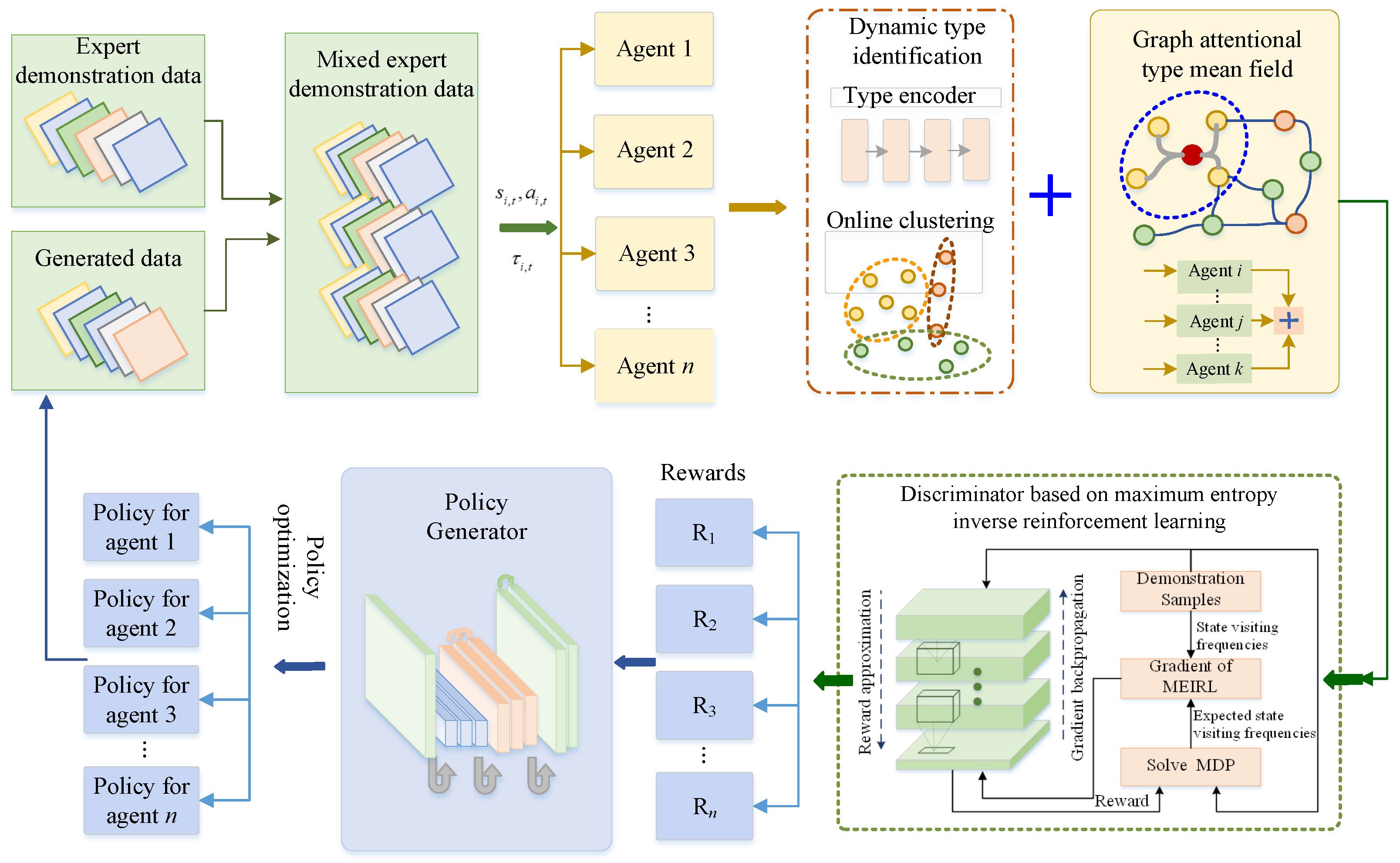

Figure 1. First, we need to obtain mixed expert demonstrations, which consist of the trajectory data (state-action sequences) of one or more experts (or different types of multi-agents) performing tasks in the environment. Under mixed expert demonstrations, the system can achieve cooperation or competition among heterogeneous multi-agent systems, thereby completing dynamic type identification and accurately distinguishing the behavior categories of different agents in the multi-agent system. On this basis, the system further encodes the identified agent types into low-dimensional and computable representation vectors to capture the essential characteristics of agent behaviors. Subsequently, through online clustering methods, the agent behaviors are clustered in real time, and the type labels of the agents are dynamically allocated and updated to continuously optimize the accuracy of type representation. Next, the discriminator based on maximum entropy inverse reinforcement learning uses graph attention networks to calculate the interaction weights between agents, enabling each agent to adaptively aggregate information based on the type encoding of other agents. Simultaneously, the average field theory is used to approximate the complex multi-agent interactions as the interaction between individual and group average behaviors. The main goal of the discriminator is to distinguish as accurately as possible whether a certain trajectory comes from “expert demonstration data” or “generated data”, and gradually learn the implicit reward function without a symmetrical ambiguity problem during this process. Meanwhile, the policy generator continuously adjusts its behavior strategy under the restored reward to generate trajectories that are closer to the expert demonstration. 
Finally, the system integrates these newly generated high-quality data with the original expert data to form a new generation of hybrid expert demonstration data, thereby continuously expanding and diversifying the scale and diversity of expert data and forming a self-enhancing closed-loop learning process.

Algorithm 2 Graph Attention Mean Field Adversarial Inverse Reinforcement Learning

1: Input: MFG with its parameters and observed trajectories, type space, learning rate, discount factor, number of types K.
2: Initialization: Randomly initialize the policy network, discriminator, type encoder, type prototypes, and mean field for all types.
3: Estimate the mean-field flow from the observed trajectories.
4: for each time step t do
5:     for each agent do
6:         Obtain the encoded latent variables.
7:         Allocate the type and update the type prototype.
8:         Calculate the neighbor attention weights and update the node features with Equation (12).
9:         Calculate the type mean field with Equation (13).
10:        if the update condition holds then
11:            Sample an expert data batch and generate a policy batch.
12:            Update the discriminator by minimizing the cross-entropy.
13:            Update the generator by maximizing the rewards.
14:        else
15:            Skip the discriminator and generator updates for efficiency.
16:        end if
17:        if the convergence condition is met then
18:            For each type, select the optimal policy using Equation (21).
19:            Update the current policy.
20:        else
21:            Continue with the current policy.
22:        end if
23:    end for
24: end for
25: Output: Policy network parameters, reward parameters, type prototypes.

4.4. Analysis of Time Complexity

We assume that the number of agents N and the number of types K change dynamically. The analysis involves the state and action dimensions, the number of time steps T, the latent variable dimension, and the average number of neighbors, which characterizes the sparsity of the graph.

Theorem 3.

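The time complexity of GAMF-DHIRL is T times its single-step cost. When all dimensions and K are treated as constants, the single-step cost depends only on the number of agents N and the average number of neighbors.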

Proof. The proof analyzes the computational cost of each module in GAMF-DHIRL. First, in dynamic type identification, the type encoder computes a latent variable for each agent, so the encoding cost for all agents is N times the single-agent cost. Online clustering is then performed with the sliding-window EM, whose cost per update depends on the number of types K. Because K and the other quantities in this term are constants, this term does not increase the overall order of the per-step cost.

Furthermore, in the graph attention mean-field module, the neighbor aggregation cost of the node-feature updates in the GAT layer is proportional to the number of edges, which equals the number of agents multiplied by the average number of neighbors, with the hidden-layer dimension treated as a constant. The cost of the typed mean-field aggregation therefore scales in the same way.

Finally, during strategy optimization and adversarial training, the policy network has fixed input and output dimensions, so its forward-propagation cost per agent is constant. The discriminator, which implements the objective in Equation (20), has a fixed input dimension and an output dimension of 1, so its cost per agent is also constant.

In summary, the single-step time complexity is the sum of these terms. Under the assumption of constant dimensions, the dimension parameters and K are constants, so the single-step cost depends only on N and the average number of neighbors. For T time steps, the total complexity is T times the single-step complexity. □
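The scaling argument can be illustrated with a rough operation-count model. All dimension values below are hypothetical placeholders. The EM term assumes a buffer of fixed size W, and each module's cost is a simplified product of dimensions rather than an exact FLOP count. The point is only that, with dimensions and K fixed, the per-step cost is affine in N for a given average number of neighbors, and the total cost is T times the per-step cost.

```python
def step_cost(n_agents, avg_neighbors, d_state=8, d_action=4, d_latent=16,
              d_hidden=32, n_types=4, window=64):
    """Rough per-step operation count of the GAMF-DHIRL modules (illustrative only)."""
    encoder = n_agents * (d_state + d_action) * d_latent          # one latent per agent
    em = window * n_types * d_latent                              # fixed-size sliding window
    gat = n_agents * avg_neighbors * d_hidden                     # |E| = N * average degree
    mean_field = n_agents * d_hidden                              # typed averaging over agents
    policy = n_agents * (d_hidden + n_types * d_hidden) * d_action
    discriminator = n_agents * (d_state + d_action + n_types * d_hidden)
    return encoder + em + gat + mean_field + policy + discriminator


def total_cost(n_agents, avg_neighbors, horizon):
    """Total cost over `horizon` time steps: T times the single-step cost."""
    return horizon * step_cost(n_agents, avg_neighbors)


# With dimensions and K fixed, going from N to 2N adds the same amount as going from 0 to N.
increment = step_cost(2000, 5) - step_cost(1000, 5)
```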