A Novel Cooperative Game Approach for Microgrid Integrated with Data Centers in Distribution Power Networks

Abstract

1. Introduction

- (1) A task allocation and scheduling mechanism is proposed and integrated into the optimization model of the MDCs system to balance the server load of each MDC in both space and time. The mechanism accounts for the server operation status and the cost of processing tasks in each data center, which reduces the task processing cost and exploits the standby capacity of servers across data centers.

- (2) For the MDCs system with task allocation, task scheduling, and energy sharing, an optimization model based on game theory and a solution framework are proposed. Specifically, the Nash bargaining game model is used to describe the interaction between the operators of the MDCs. The solution framework combines a greedy algorithm with the distributed alternating direction method of multipliers (ADMM) to obtain the optimal task processing scheme and the Nash bargaining solution (NBS) of the game model.
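
For orientation, a Nash bargaining problem among cost-minimizing players is commonly written in the generic form below. This is a textbook-style sketch rather than the model established in Section 4.3.1: the disagreement cost $C_{i,\mathrm{MDC}}^{0}$ (the cost of MDC $i$ without cooperation) and the decision vector $x_i$ are symbols assumed here for illustration.

```latex
\max_{\{x_i\}} \; \prod_{i \in \Omega_{\mathrm{MDC}}}
  \left( C_{i,\mathrm{MDC}}^{0} - C_{i,\mathrm{MDC}}(x_i) \right)
\quad \text{s.t.} \quad
C_{i,\mathrm{MDC}}(x_i) \le C_{i,\mathrm{MDC}}^{0},
\qquad \forall i \in \Omega_{\mathrm{MDC}}
```

Under this form, each factor is the cost saving an operator gains from cooperating, and the constraint ensures that no operator ends up worse off than it would be operating alone.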

2. The Cooperative Operation Framework of the MDCs System

2.1. The MDCs System Operation Mode Considering Task Allocation and Scheduling

2.2. The Cooperative Operational Mechanism of the MDCs System

3. Optimal Operation Model of MDCs Considering Task Allocation and Scheduling

3.1. Local Task Allocation Considering the Processing Capacity of MDCs

3.1.1. Constraints on the Decision Variable

3.1.2. The Task Processing Capacity Metrics of MDC

3.2. Delay-Tolerant Task Scheduling Model of MDC

3.3. Objective Function for the Optimal Operation of MDC

3.4. The Constraints for the Optimal Operation of MDC

3.4.1. Operation of Distributed Energy Resources in MDC

3.4.2. Network Constraints

3.4.3. Power Balance Among MDCs

4. Nash Bargaining Game Model and Interactive Distributed Solution Framework

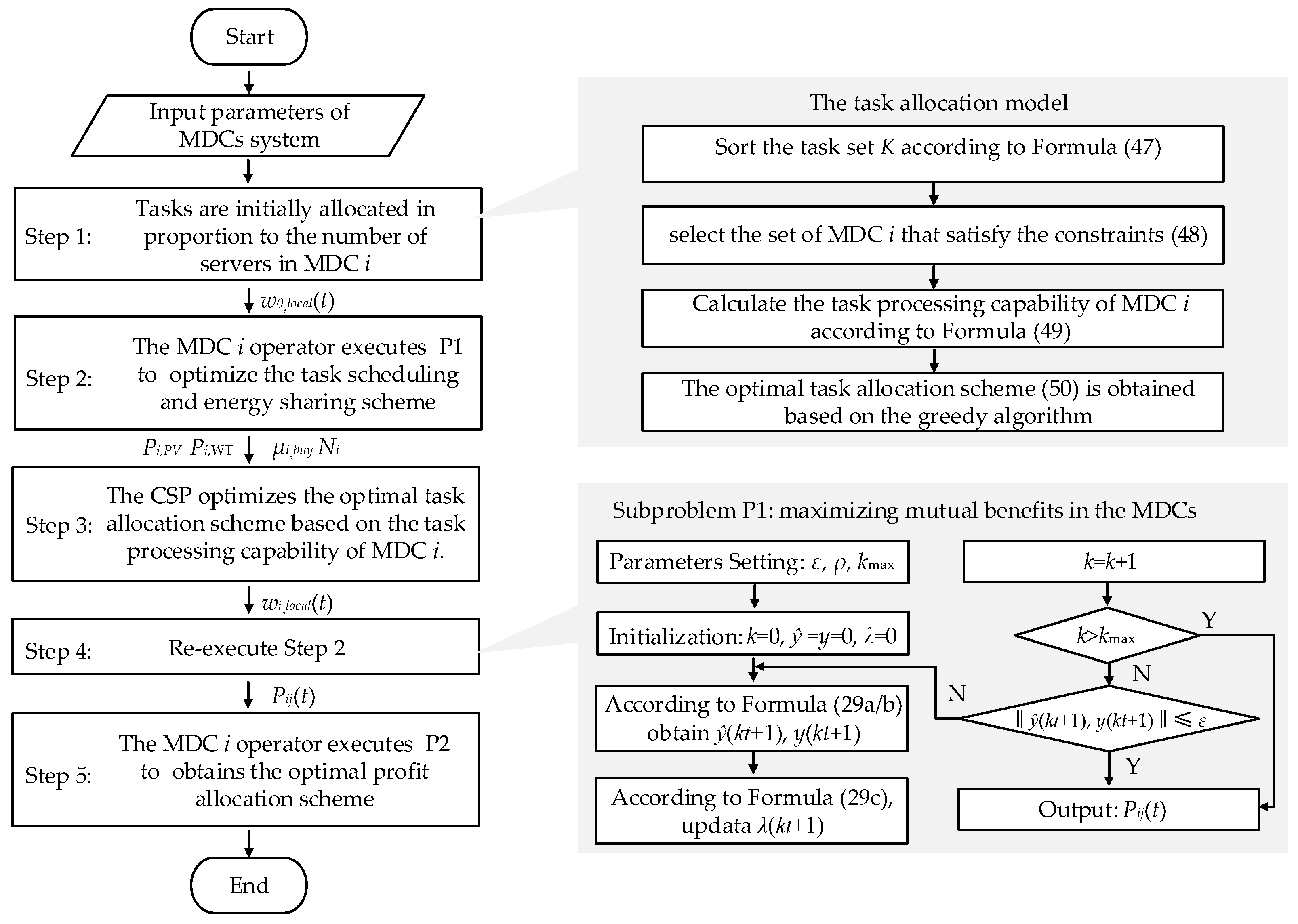

4.1. Solution Process

4.2. Solution of the Task Allocation Model

| Algorithm 1. Task allocation algorithm | |
|---|---|
| Input: | The set of tasks to be assigned, the number of active servers, the time-of-use electricity prices, and the renewable energy output. |
| Output: | The MDC to which each task is assigned. |
| Initial Step: | Initialize the task allocation result (where −1 indicates not yet assigned). |
| Step 1: | Sort the tasks in K in chronological order. |
| Step 2: | For each k(m), select the candidate Dk that satisfies the capacity constraints: |
| Step 3: | Calculate the overall score for each MDC based on Formulas (5)–(7). |
| Step 4: | Assign task k(m) to the MDC i with the highest score, and update the current task load of MDC i. |
| Step 5: | Return the allocation results for all tasks. |
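
The steps of Algorithm 1 can be sketched as a short greedy loop. The sketch below is illustrative only: the `MDC` fields, the one-task-per-capacity-unit rule, and in particular the `score` function are hypothetical stand-ins, since Formulas (5)–(7) and the exact capacity constraints are not reproduced here.

```python
from dataclasses import dataclass


@dataclass
class MDC:
    name: str
    capacity: int        # assumed: max number of tasks the active servers can hold
    price: float         # electricity price seen by this MDC ($/kWh)
    renewable: float     # available renewable output (kW)
    load: int = 0        # tasks currently assigned


def score(mdc: MDC) -> float:
    """Hypothetical stand-in for Formulas (5)-(7): favors spare capacity,
    high renewable output, and cheap electricity."""
    spare = (mdc.capacity - mdc.load) / mdc.capacity
    return spare + mdc.renewable / 1000.0 - mdc.price


def allocate(tasks, mdcs):
    """tasks: list of (task_id, arrival_time).
    Returns {task_id: index of the chosen MDC, or -1 if unassigned}."""
    result = {task_id: -1 for task_id, _ in tasks}                 # Initial step
    for task_id, _ in sorted(tasks, key=lambda t: t[1]):           # Step 1
        candidates = [i for i, m in enumerate(mdcs)
                      if m.load < m.capacity]                      # Step 2
        if not candidates:
            continue                                               # stays -1
        best = max(candidates, key=lambda i: score(mdcs[i]))       # Step 3
        result[task_id] = best                                     # Step 4
        mdcs[best].load += 1
    return result                                                  # Step 5


if __name__ == "__main__":
    mdcs = [MDC("A", capacity=1, price=0.4, renewable=0.0),
            MDC("B", capacity=2, price=1.2, renewable=0.0)]
    tasks = [("t2", 2), ("t1", 1), ("t3", 3), ("t4", 4)]
    print(allocate(tasks, mdcs))
```

Because the choice is greedy, each task is placed using only the state at its own arrival time; a task that finds no MDC with spare capacity keeps the value −1.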

4.3. Solution of the Nash Bargaining Game Model

4.3.1. Establishment of the Nash Bargaining Game Model

4.3.2. Solution of the Nash Bargaining Game Model Based on ADMM

- (1) Upon receiving the latest updated λ(kt) and y(kt), MDC i updates ŷ(kt + 1) according to Equation (59) and sends it to MDC j.

- (2) Upon receiving the latest updated ŷ(kt + 1), MDC j updates y(kt + 1) according to Equation (60).

- (3) Using the latest updated ŷ(kt + 1) and y(kt + 1), λ(kt + 1) is updated according to Equation (61).

| Algorithm 2. ADMM-based distributed algorithm | |
|---|---|
| Input: | The set of pending tasks, data center equipment parameters, predicted output of renewable energy, dispatchable generator parameters, and time-of-use electricity price. |
| Output: | The optimal task scheduling scheme, the optimal output power of dispatchable devices, and the output power of each MDC. |
| Initial Step: | Set the maximum number of iterations, convergence accuracy, and penalty factor, with the iteration count kt = 0; initialize the Lagrange multipliers. |
| Repeat: | At the kt-th iteration: |
| Step 1: | For MDC i, given ρ, y(kt), and λ(kt), solve problem (56) and obtain ŷ(kt + 1). |
| Step 2: | For MDC j, given ρ, ŷ(kt + 1), and λ(kt), solve problem (56) and obtain y(kt + 1). |
| Step 3: | Update the dual variables using Equation (61). |
| Until: | The iterative convergence condition is satisfied or kt = kmax. |
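
The iteration pattern of Algorithm 2 can be illustrated with a two-agent toy problem. This is a minimal sketch under stated assumptions, not the model of problem (56): each MDC is given a hypothetical quadratic cost `a * (y - p) ** 2` over a single shared quantity, so that both sub-problems have closed-form solutions, and the function name and stopping test are chosen here for illustration.

```python
def admm_two_agents(a_i, p_i, a_j, p_j, rho=1.0, tol=1e-8, k_max=1000):
    """Toy two-block ADMM for
        min  a_i*(y_hat - p_i)**2 + a_j*(y - p_j)**2   s.t.  y_hat = y.
    y_hat is the copy held by MDC i, y the copy held by MDC j, and
    lam the Lagrange multiplier of the coupling constraint."""
    y_hat, y, lam = 0.0, 0.0, 0.0
    iterations = 0
    for kt in range(k_max):
        iterations = kt + 1
        # Step 1: MDC i minimizes its cost + lam*y_hat + rho/2*(y_hat - y)**2
        y_hat = (2 * a_i * p_i - lam + rho * y) / (2 * a_i + rho)
        # Step 2: MDC j minimizes its cost - lam*y + rho/2*(y_hat - y)**2
        y_new = (2 * a_j * p_j + lam + rho * y_hat) / (2 * a_j + rho)
        # Step 3: dual update on the coupling residual
        lam += rho * (y_hat - y_new)
        primal_residual = abs(y_hat - y_new)
        dual_residual = rho * abs(y_new - y)
        y = y_new
        # Until: residuals below the tolerance, or kt reaches k_max
        if primal_residual < tol and dual_residual < tol:
            break
    return y_hat, y, lam, iterations


if __name__ == "__main__":
    y_hat, y, lam, n = admm_two_agents(a_i=1.0, p_i=4.0, a_j=3.0, p_j=0.0)
    print(f"y_hat={y_hat:.6f}, y={y:.6f}, lambda={lam:.6f}, iterations={n}")
```

For these toy costs the agreed value is the weighted average `(a_i*p_i + a_j*p_j) / (a_i + a_j)`, which gives a simple check on the iterates. Each agent only needs the other's latest copy and the multiplier, which mirrors the message exchange in the three update steps.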

5. Results

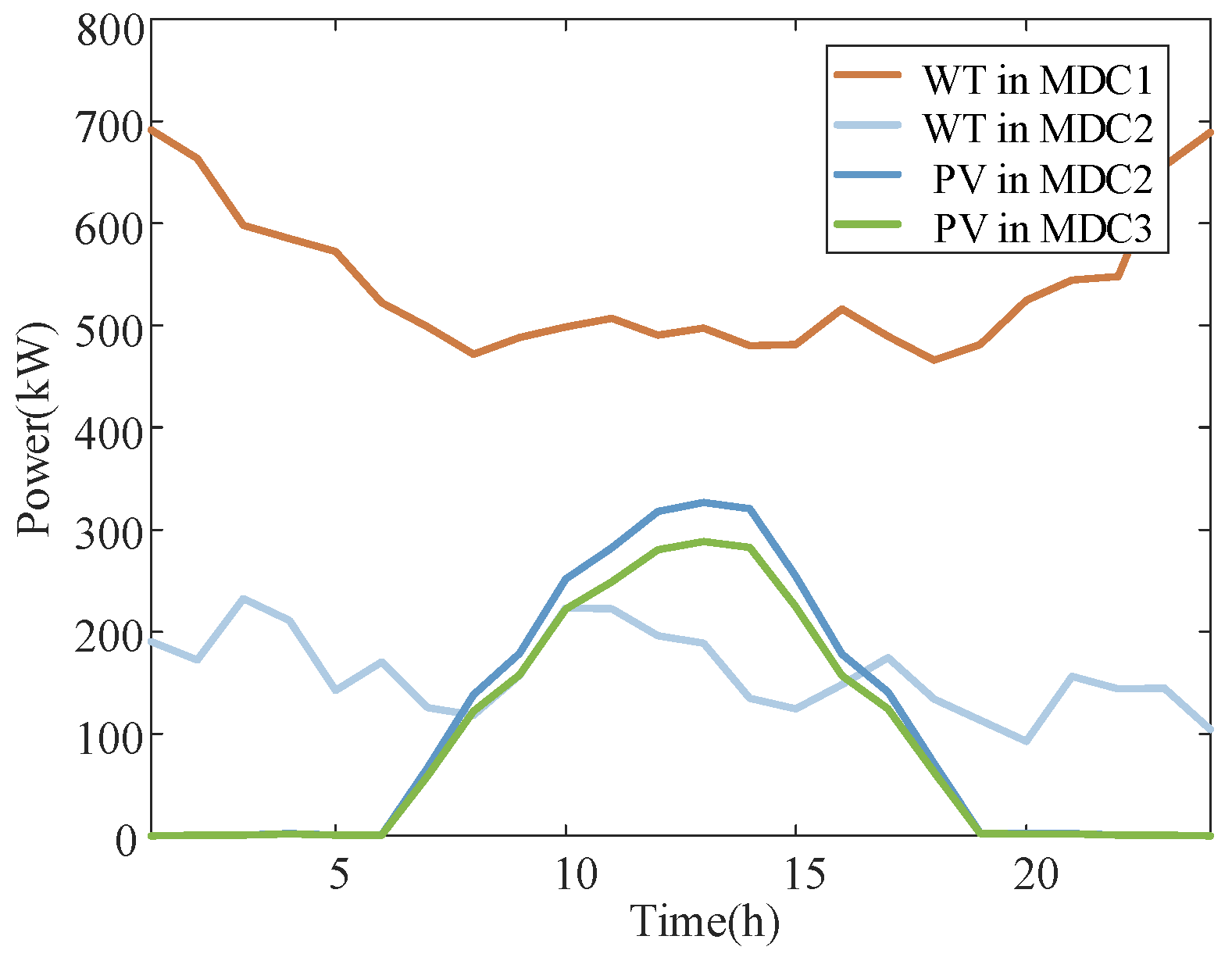

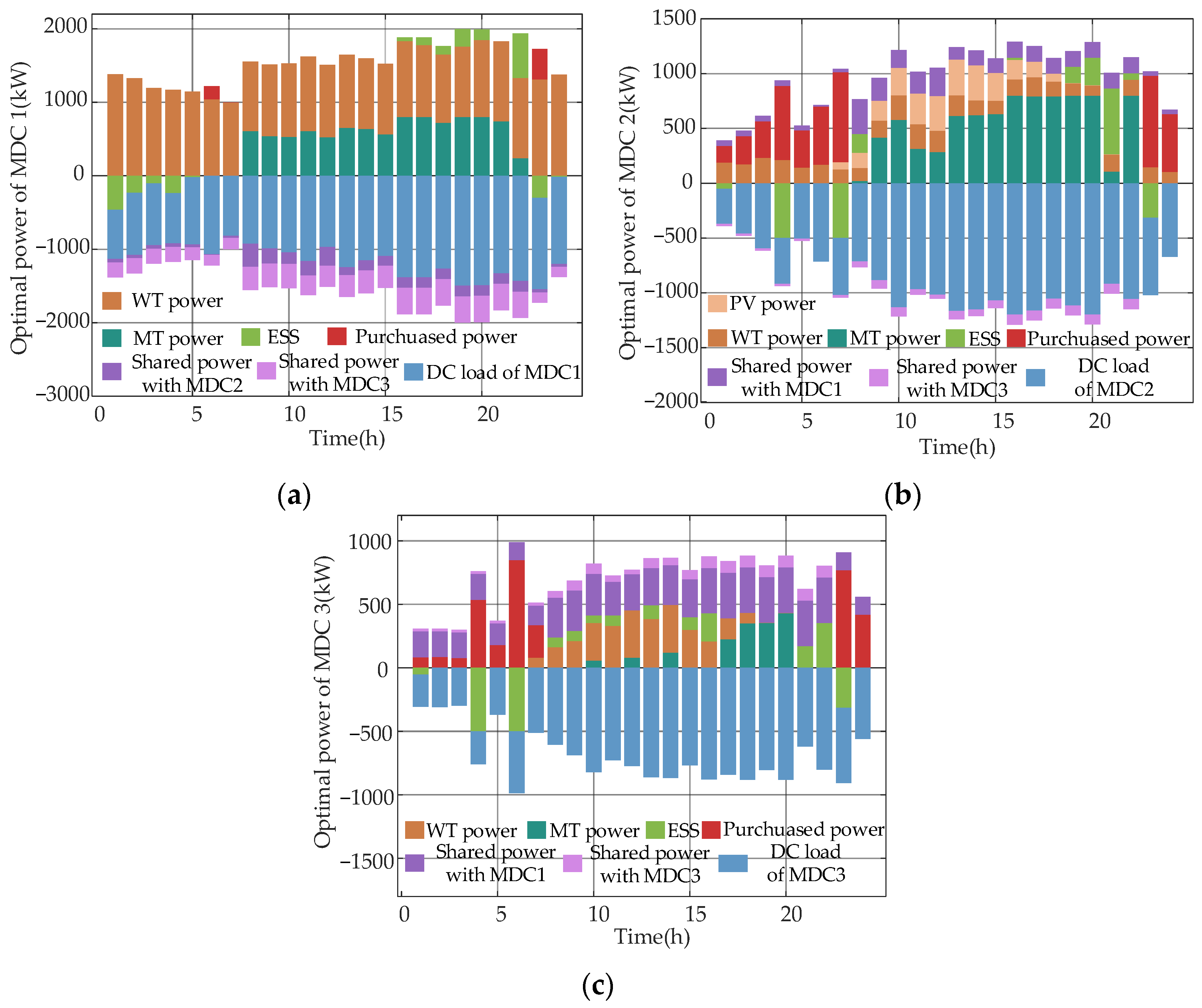

5.1. Test System

5.2. Case Introduction

5.3. Comparative Analysis of Optimization Results

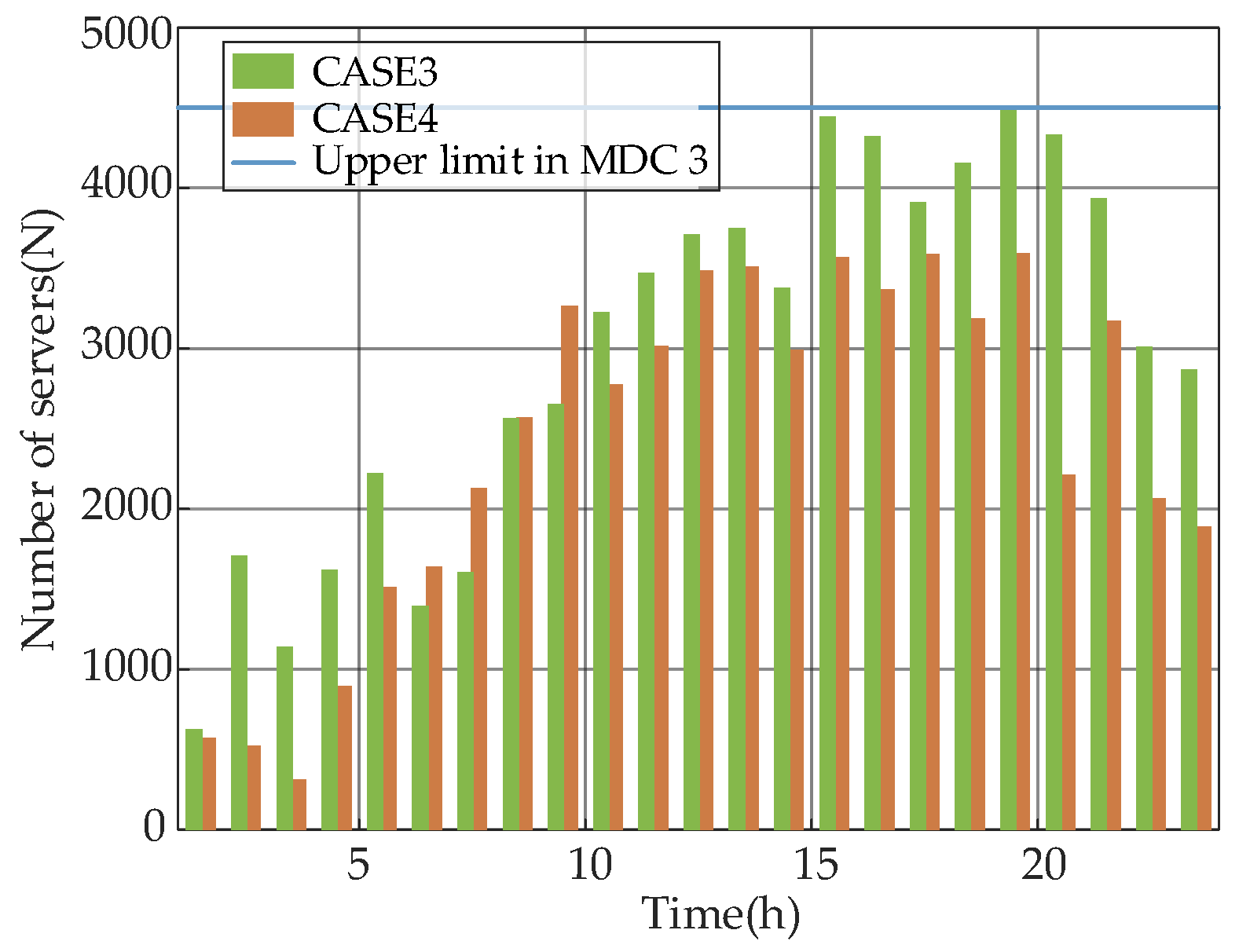

5.4. Benefits for Integrating Task Allocation and Scheduling in the Cooperative Operation Model of MDCs System

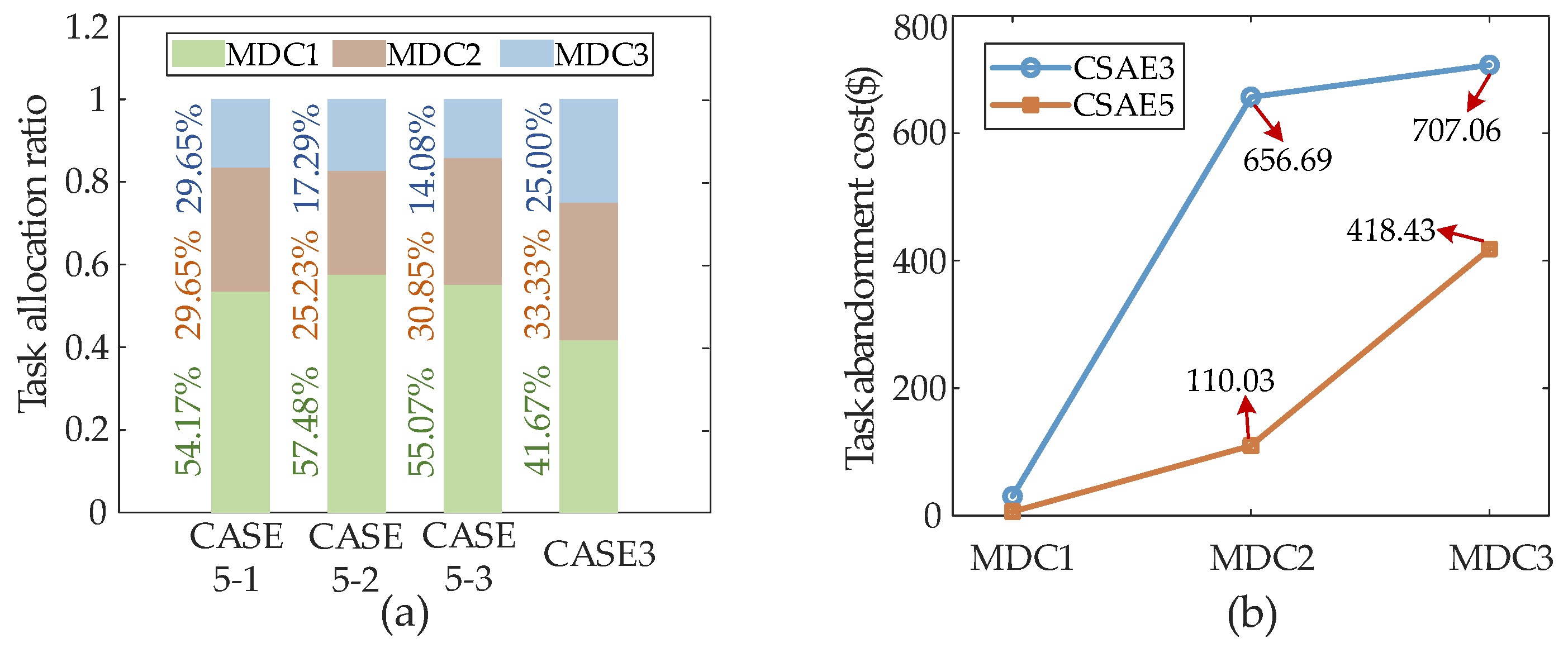

5.5. Sensitivity Analysis of the Impact of Renewable Energy Intermittency

- (1)

- The impact of low renewable energy penetration on task allocation in the computing side.

- (2)

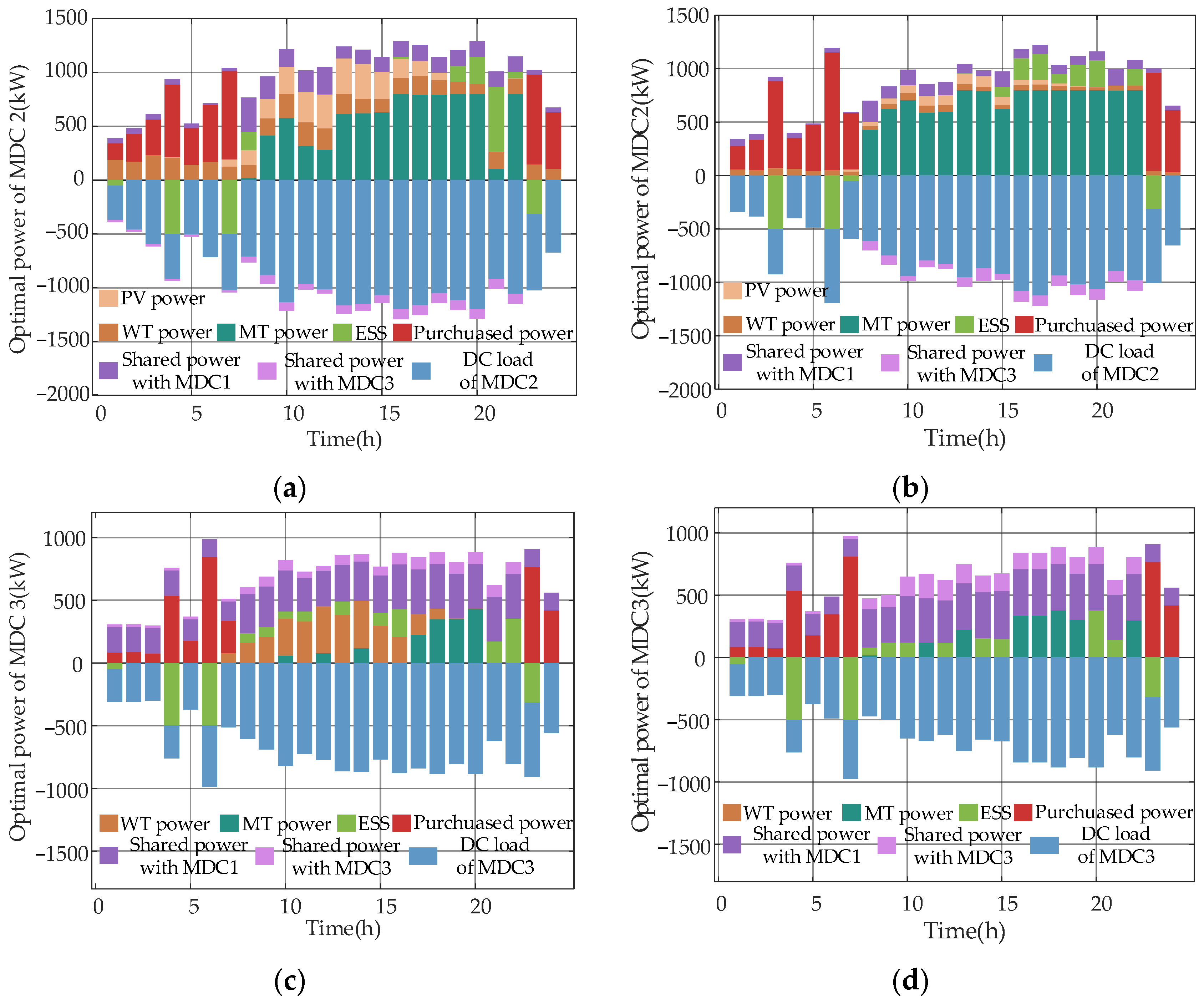

- The impact of low renewable energy penetration on the optimal operation results of MDCs on the power side.

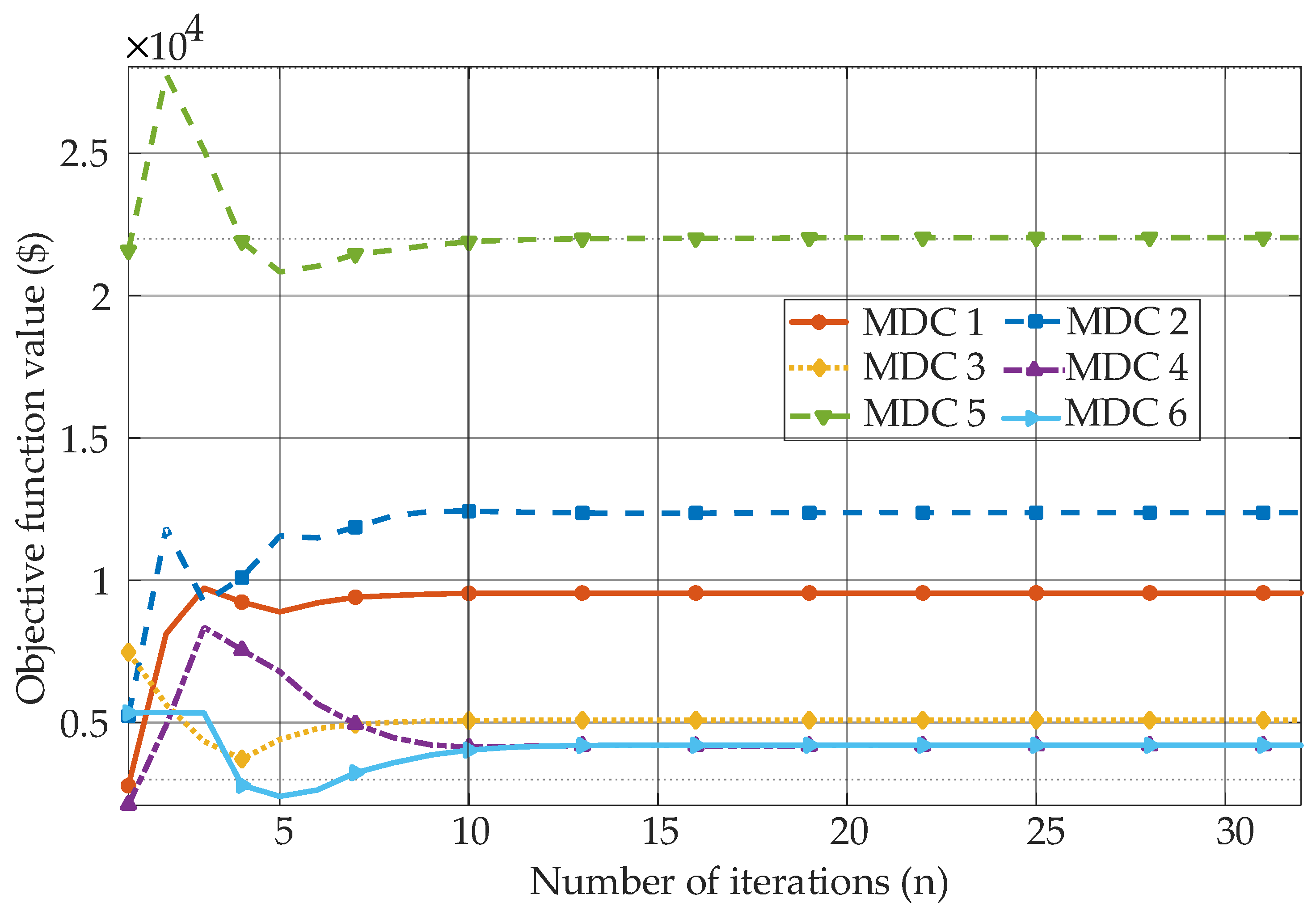

5.6. Scalability Analysis

6. Conclusions

- (1) The proposed task allocation and scheduling mechanism balances the load of the MDCs across both spatial and temporal dimensions and reduces the overall cost of task processing. It also makes use of the backup capacity of servers among MDCs, which enhances the stability of the system and addresses the asymmetric operational risks among MDCs.

- (2) Compared with the other cases, the Nash bargaining game model for the MDCs system, which integrates task allocation, scheduling, and energy sharing, achieves significant economic benefits. Specifically, operating costs are reduced by up to 9.48%. In addition, the optimal task processing strategy is obtained, and the demand for electricity from the upstream grid is lowered.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Nomenclature

| Abbreviation | |
|---|---|
| DC | data center |
| DPN | distribution power network |
| MDC | microgrid-integrated data center |
| IT | information technology |
| ADMM | alternating direction method of multipliers |
| NBS | Nash bargaining solution |
| ESS | energy storage system |
| MT | micro gas turbine |

| Indexes and Sets | |
|---|---|
| i | MDC index |
| t | Time index |
| k | Task index |
| l | Line index |
| n | Distribution power network node index |
| ΩMDC | Set of MDCs |
| N | Set of distribution power network nodes |
| L | Set of lines |

| Parameters | |
|---|---|
| Ci,MDC | The objective of MDC i |
| (t), (t) | The maintenance cost and operational cost on the power side of MDC i at time slot t, respectively |
| μdel, μsus | The compensation cost coefficients for tasks being processed with delay and being suspended, respectively |
| μi,buy, μi,sell | The time-of-use electricity prices for purchasing and selling, respectively |
| αi,MT, βi,MT | The cost coefficients of the MT |
| Pi,PV,max, Pi,WT,max, Pi,MT,max | The upper limits of the output power of the PV, WT, and MT in MDC i, respectively |
| Pi,dis,max, Pi,ch,max | The upper limits of the discharging and charging power, respectively |
| ηi,ch, ηi,dis | The charge and discharge efficiency, respectively |
| Ei,ess,max, Ei,ess,min | The upper and lower limits of energy stored, respectively |
| Pij,min, Pij,max | The lower and upper limits of energy sharing between MDC i and MDC j, respectively |

| Variables | |
|---|---|
| Ni(t) | distribution power networks |
| Pi,ser(t) | The power consumption of the server in MDC i at time slot t |
| Pi,DC(t) | The power consumption of the data center in MDC i at time slot t |
| Pi,WT(t), Pi,PV(t), Pi,MT(t) | The output power of the WT, PV, and MT in MDC i at time slot t, respectively |
| Pi,ch(t), Pi,dis(t) | The charging and discharging powers of the ESS in MDC i at time slot t, respectively |
| Pij(t) | The shared power between MDC i and MDC j at time slot t |
| Pi,sell(t), Pi,buy(t) | The electricity sold to and purchased from the distribution power network operator by MDC i at time slot t, respectively |

References

- Xiao, Q.; Li, T.X.; Jia, H.J.; Mu, Y.F.; Jin, Y.; Qiao, J.; Blaabjerg, F.; Guerrero, J.M.; Pu, T. Electrical circuit analogy-based maximum latency calculation method of internet data centers in power-communication network. IEEE Trans. Smart Grid 2025, 16, 449–452.

- Sun, Y.M.; Ding, Z.H.; Yan, Y.J.; Wang, Z.Y.; Dehghanian, P.; Lee, W. Privacy-preserving energy sharing among cloud service providers via collaborative job scheduling. IEEE Trans. Smart Grid 2025, 16, 1168–1180.

- Xiao, Q.; Yu, H.L.; Jin, Y.; Jia, H.J.; Mu, Y.F.; Zhu, J.B.; Liu, H.Q.; Teodorescu, R.; Blaabjerg, F. Adaptive virtual inertia emulation and control scheme of cascaded multilevel converters to maximize its frequency support ability. IEEE Trans. Ind. Electron. 2025. early access.

- Wang, P.; Cao, Y.J.; Ding, Z.H.; Tang, H.; Wang, X.Y.; Cheng, M. Stochastic programming for cost optimization in geographically distributed internet data centers. CSEE J. Power Energy Syst. 2022, 8, 1215–1232.

- Yu, L.; Jiang, T.; Zou, Y.L. Distributed real-time energy management in data center microgrids. IEEE Trans. Smart Grid 2018, 9, 3748–3762.

- Zhou, S.B.; Zhou, M.; Wu, Z.Y.; Wang, Y.Y.; Li, G.Y. Energy-aware coordinated operation strategy of geographically distributed data centers. Int. J. Electr. Power Energy Syst. 2024, 159, 110032.

- Chen, M.; Gao, C.W.; Song, M.; Chen, S.S.; Li, D.Z.; Liu, Q. Internet data centers participating in demand response: A comprehensive review. Renew. Sustain. Energy Rev. 2020, 117, 109466.

- Wan, J.X.; Duan, Y.D.; Gui, X.; Liu, C.Y.; Li, L.X.; Ma, Z.Q. Safecool: Safe and energy-efficient cooling management in data centers with model-based reinforcement learning. IEEE Trans. Emerg. Top. Comput. Intell. 2023, 7, 1621–1635.

- Jin, C.Q.; Bai, X.L.; Yang, C.; Mao, W.X.; Xu, X. A review of power consumption models of servers in data centers. Appl. Energy 2020, 265, 114806.

- Hu, Y.Y.; Yang, J.; Ruan, X.L.; Chen, Y.L.; Li, C.J.; Zhang, Z.H.; Zhang, W. Green optimization for micro data centers: Task scheduling for a combined energy consumption strategy. Appl. Energy 2025, 393, 126031.

- Yin, X.H.; Ye, C.J.; Ding, Y.; Song, Y.H. Exploiting internet data centers as energy prosumers in integrated electricity-heat system. IEEE Trans. Smart Grid 2023, 14, 167–182.

- Yin, X.H.; Ye, C.J.; Ding, Y.; Song, Y.H.; Wang, L. Combined heat and power dispatch against cold waves utilizing responsive internet data centers. IEEE Trans. Sustain. Energy 2024, 15, 819–834.

- Cao, Y.J.; Cao, F.; Wang, Y.J.; Wang, J.X.; Wu, L.; Ding, Z.H. Managing data center cluster as non-wire alternative: A case in balancing market. Appl. Energy 2024, 360, 122769.

- Yang, T.; Jiang, H.; Hou, Y.C.; Geng, Y.N. Carbon management of multi-datacenter based on spatio-temporal task migration. IEEE Trans. Cloud Comput. 2023, 11, 1078–1090.

- Long, X.X.; Li, Y.Z.; Li, Y.; Ge, L.J.; Gooi, H.B.; Chung, C.Y.; Zeng, Z.G. Collaborative response of data center coupled with hydrogen storage system for renewable energy absorption. IEEE Trans. Sustain. Energy 2024, 15, 986–1000.

- Bian, Y.F.; Xie, L.R.; Ma, L.; Zhang, H.G. A novel two-stage energy sharing method for data center cluster considering ‘Carbon-Green Certificate’ coupling mechanism. Energy 2024, 313, 133991.

- Zhang, H.Q.; Xiao, Y.; Bu, S.R.; Yu, F.R.; Niyato, D.; Han, Z. Distributed resource allocation for data center networks: A hierarchical game approach. IEEE Trans. Cloud Comput. 2020, 8, 778–789.

- Kaur, K.; Garg, S.; Kumar, N.; Aujla, G.S.; Choo, K.R.; Obaidat, M.S. An adaptive grid frequency support mechanism for energy management in cloud data centers. IEEE Syst. J. 2020, 14, 1195–1205.

- Ye, G.S.; Gao, F.; Fang, J.Y.; Zhang, Q. Joint workload scheduling in geo-distributed data centers considering UPS power losses. IEEE Trans. Ind. Appl. 2023, 59, 612–626.

- Wang, L.L.; Zhu, Z.; Jiang, C.W.; Li, Z. Bi-level robust optimization for distribution system with multiple microgrids considering uncertainty distribution locational marginal price. IEEE Trans. Smart Grid 2021, 12, 1104–1117.

- Chen, L.D.; Liu, N.; Wang, J.H. Peer-to-peer energy sharing in distribution networks with multiple sharing regions. IEEE Trans. Ind. Inform. 2020, 16, 6760–6771.

- Tian, S.; Xiao, Q.; Li, T.X.; Jin, Y.; Mu, Y.F.; Jia, H.J.; Li, W.; Teodorescu, R.; Guerrero, J.M. An Optimization Strategy for EV-Integrated Microgrids Considering Peer-to-Peer Transactions. Sustainability 2024, 16, 8955.

- Ji, H.R.; Zheng, Y.X.; Yu, H.; Zhao, J.L.; Song, G.Y.; Wu, J.Z.; Li, P. Asymmetric bargaining-based SOP planning considering peer-to-peer electricity trading. IEEE Trans. Smart Grid 2025, 16, 942–956.

- Lin, C.R.; Hu, B.; Shao, C.Z.; Xie, K.G.; Peng, J.C. Computation offloading for cloud-edge collaborative virtual power plant frequency regulation service. IEEE Trans. Smart Grid 2024, 15, 5232–5244.

- Jia, Y.B.; Wan, C.; Cui, W.K.; Song, Y.H.; Ju, P. Peer-to-peer energy trading using prediction intervals of renewable energy generation. IEEE Trans. Smart Grid 2023, 14, 1454–1465.

- Fan, S.L.; Ai, Q.; Piao, L.J. Bargaining-based cooperative energy trading for distribution company and demand response. Appl. Energy 2018, 226, 133991.

- Yuan, Z.P.; Li, P.; Li, Z.L.; Xia, J. A fully distributed privacy-preserving energy management system for networked microgrid cluster based on homomorphic encryption. IEEE Trans. Smart Grid 2024, 15, 1735–1748.

- Li, Y.Z.; Long, X.X.; Zhou, C.J.; Yang, K.; Zhao, Y.; Zeng, Z.G. Coordinated operations of highly renewable power systems and distributed data centers. Sci. Sin. Technol. 2023, 54, 119–135.

- Yan, D.X.; Chow, M.Y.; Chen, Y. Low-carbon operation of data centers with joint workload sharing and carbon allowance trading. IEEE Trans. Cloud Comput. 2024, 12, 750–761.

- Jin, T.Y.; Bai, L.Q.; Yan, M.Y.; Chen, X.Y. Unlocking spatio-temporal flexibility of data centers in multiple regional peer-to-peer energy transaction markets. IEEE Trans. Power Syst. 2025, 40, 3914–3927.

- Han, J.P.; Fang, Y.C.; Li, Y.W.; Du, E.S.; Zhang, N. Optimal planning of multi-microgrid system with shared energy storage based on capacity leasing and energy sharing. IEEE Trans. Smart Grid 2025, 16, 16–31.

| Ref. | Game Theory | Task Allocation | Task Scheduling | Energy Sharing | Synergy Dimension |
|---|---|---|---|---|---|
| [8,9,10] | - | - | - | - | Energy side (as a load) |
| [11,12] | - | - | √ | - | Energy side (as a load) |
| [13] | Non-Cooperative Game Theory | - | √ | - | Computing side (task scheduling) |
| [14] | Cooperative Game Theory | - | √ | - | Computing side (task scheduling) |
| [15,16] | Cooperative Game Theory | - | √ | √ | Energy side (as a load) |
| [17] | Non-Cooperative Game Theory | - | √ | - | Computing side (task scheduling) |
| [18] | - | - | √ | - | Computing side (UPS scheduling) |
| This paper | Cooperative Game Theory | √ | √ | √ | Computing side and energy side |

| Time Slot | Value ($/kWh) |
|---|---|
| Off-peak period (01:00–07:00, 23:00–24:00) | 0.4011 |
| Peak period (12:00–14:00, 19:00–22:00) | 1.2033 |
| Flat period (08:00–11:00, 15:00–18:00) | 0.7534 |
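
The time-of-use tariff above can be encoded as a small lookup. The sketch below assumes that the hour labels 01:00 to 24:00 denote 24 one-hour slots numbered 1 to 24 and that each listed range is inclusive, which is the reading under which the three periods cover the whole day; the function names and the load profile are illustrative.

```python
OFF_PEAK = 0.4011  # $/kWh, slots 1-7 and 23-24
PEAK = 1.2033      # $/kWh, slots 12-14 and 19-22
FLAT = 0.7534      # $/kWh, slots 8-11 and 15-18


def tou_price(slot: int) -> float:
    """Return the price in $/kWh for an hourly slot numbered 1..24 (assumed indexing)."""
    if not 1 <= slot <= 24:
        raise ValueError("slot must be in 1..24")
    if slot <= 7 or slot >= 23:
        return OFF_PEAK
    if 12 <= slot <= 14 or 19 <= slot <= 22:
        return PEAK
    return FLAT


def daily_purchase_cost(load_kw):
    """Cost in $ of buying load_kw[s-1] kW for one hour in each slot s."""
    if len(load_kw) != 24:
        raise ValueError("expected 24 hourly values")
    return sum(p * tou_price(s) for s, p in enumerate(load_kw, start=1))


if __name__ == "__main__":
    flat_profile = [1.0] * 24  # hypothetical constant 1 kW purchase
    print(round(daily_purchase_cost(flat_profile), 4))
```

Under this reading there are 9 off-peak, 7 peak, and 8 flat slots per day.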

| Comparison | MDC 1 | MDC 2 | MDC 3 | Total |
|---|---|---|---|---|
| CASE 1 | 32.430 | 34.998 | 30.583 | 98.012 |
| CASE 2 | 37.213 | 34.124 | 24.293 | 95.630 |
| CASE 3 | 30.127 | 33.889 | 25.084 | 89.101 |
| CASE 4 | 35.215 | 33.266 | 20.297 | 88.778 |

| Comparison | CASE 3 | CASE 4 |
|---|---|---|
| MDC 1 (Power/kW) | 968.10 | 591.08 |
| MDC 2 (Power/kW) | 4862.96 | 4473.17 |
| MDC 3 (Power/kW) | 4933.98 | 3249.30 |
| Total cost ($) | 5892.37 | 3325.42 |

The Proportion of Tasks Allocated to Each MDC (M)

| Comparison | MDC 1 | MDC 2 | MDC 3 |
|---|---|---|---|
| CASE 4 | 58.99% | 27.74% | 13.27% |
| CASE 6-1 | 62.72% | 19.27% | 18.01% |
| CASE 6-2 | 59.74% | 33.06% | 7.20% |

| Comparison | MDC 1 | MDC 2 | MDC 3 | Total |
|---|---|---|---|---|
| CASE 4 | 35.215 | 33.266 | 20.297 | 88.778 |
| CASE R3-1 | 40.642 | 31.397 | 25.958 | 97.997 |
| CASE R3-2 | 39.348 | 34.682 | 23.382 | 97.412 |

| Number of MDCs | Total Computation Time (s) | Number of Iterations |
|---|---|---|
| 3 | 215 | 22 |
| 6 | 1033 | 32 |
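
As a rough reading of the two rows above, the average time per iteration and the growth in total time can be derived directly from the table. The snippet below is plain arithmetic on the reported values; the variable and function names are illustrative, and the per-iteration average assumes the total time is spent entirely in the iterations.

```python
# (number of MDCs, total computation time in s, number of iterations), from the table
runs = [(3, 215, 22), (6, 1033, 32)]


def seconds_per_iteration(total_time_s: float, iterations: int) -> float:
    """Average wall time per iteration, assuming all time is spent iterating."""
    return total_time_s / iterations


per_iter = {n: round(seconds_per_iteration(t, k), 2) for n, t, k in runs}
time_ratio = round(runs[1][1] / runs[0][1], 2)        # total time, 6 MDCs vs 3 MDCs
iteration_ratio = round(runs[1][2] / runs[0][2], 2)   # iterations, 6 MDCs vs 3 MDCs

if __name__ == "__main__":
    print(per_iter)          # average seconds per iteration for each system size
    print(time_ratio)        # growth in total time
    print(iteration_ratio)   # growth in iteration count
```

On these numbers, doubling the number of MDCs raises the iteration count far less than the total time, so most of the additional time comes from the cost of each iteration rather than from slower convergence.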


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, X.; Li, T.; Jin, Y.; Xiao, Q.; Tian, S.; Mu, Y.; Jia, H. A Novel Cooperative Game Approach for Microgrid Integrated with Data Centers in Distribution Power Networks. Symmetry 2025, 17, 1950. https://doi.org/10.3390/sym17111950