2. Methods

The proposed unsupervised multimodal equipment entity alignment model employs a dual-space embedding strategy to capture the complex relational hierarchies and semantic information inherent in multimodal data from equipment knowledge graphs, thereby enabling unsupervised entity alignment. First, a pseudo-seed generation module computes similarities across visual and textual features and automatically constructs initial aligned entity pairs. During the iterative process, the seed set is progressively expanded with high-confidence pairs, reducing reliance on manually annotated data. Second, embedding learning is performed in both Euclidean and hyperbolic spaces. In Euclidean space, a graph attention network aggregates neighborhood information to capture local structural relationships among entities. Hyperbolic embedding, in contrast, leverages negative curvature to model hierarchical structures and global distribution patterns. Finally, an information fusion mechanism dynamically integrates embeddings from both spaces through weighted combination, while a tailored loss function pulls the representations of the same entity in the two spaces together and keeps different entities separable. The overall framework of the model is illustrated in Figure 1. To further improve readability, Table 2 summarizes the inputs, outputs, and core innovations of each module, complementing Figure 1 with a concise overview of how semantic, visual, structural, relational, and attribute information are successively processed and fused through the dual-space embedding pipeline.

2.1. Pseudo-Seed Generation Module

In unsupervised multimodal equipment entity alignment, the absence of manually annotated ground-truth aligned pairs necessitates the use of pseudo-seeds to initialize the alignment process. Pseudo-seeds refer to collections of high-confidence aligned entity pairs that provide reliable supervisory signals for subsequent iterative optimization. Most existing approaches generate pseudo-seed sets primarily from visual information; however, visual features are highly sensitive to image quality, and issues such as low resolution or occlusion can undermine the reliability of similarity estimation.

To address this limitation, we propose a pseudo-seed generation method that leverages Pairwise BERT [17] for semantic feature extraction and VGG-16 [18] for visual feature extraction, integrating both modalities to construct high-quality pseudo-seed sets. VGG-16 and Pairwise BERT are selected as the visual and semantic feature extractors because of their demonstrated stability and compatibility in multimodal alignment tasks. VGG-16 provides robust low- and mid-level visual representations that capture structural and texture-based similarities between entities, while Pairwise BERT directly models semantic relationships between paired textual descriptions, enabling precise estimation of contextual similarity. Both models have been extensively validated in prior cross-modal retrieval and entity alignment studies, which supports their use as reliable, transferable feature extractors for the pseudo-seed generation stage.

Furthermore, the integration of semantic and visual modalities allows the pseudo-seed generation process to exploit complementary information sources. This multimodal fusion enhances the confidence and diversity of selected pairs, thereby providing a more stable supervisory signal for subsequent iterative optimization.

(1) Semantic Feature Extraction

In equipment entity alignment tasks, textual information (e.g., equipment descriptions) is an important source of semantic representation. To obtain semantic embeddings of entities, we employ Pairwise BERT. Specifically, let $e_i$ denote an entity and $t_i$ its textual description. The text is encoded with Pairwise BERT as $\mathbf{s}_i = \mathrm{PairwiseBERT}(t_i)$, where $\mathbf{s}_i$ represents the semantic embedding vector of $e_i$. The semantic similarity between two entities $e_i$ and $e_j$ is then measured using cosine similarity, as defined in Equation (1):

$$\mathrm{sim}_s(e_i, e_j) = \frac{\mathbf{s}_i \cdot \mathbf{s}_j}{\lVert \mathbf{s}_i \rVert \, \lVert \mathbf{s}_j \rVert} \tag{1}$$

By computing the similarity among all equipment pairs, the semantic similarity matrix $M_s$ is obtained, as defined in Equation (2):

$$M_s = \left[ \mathrm{sim}_s(e_i, e_j) \right]_{e_i \in G_1,\; e_j \in G_2} \tag{2}$$

where $G_1$ and $G_2$ denote two different knowledge graphs, and $e_i$ and $e_j$ represent entities from $G_1$ and $G_2$, respectively.
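Equations (1) and (2) can be illustrated with a minimal pure-Python sketch. It assumes the Pairwise BERT embeddings have already been computed and are available as plain lists of floats; the function names, the toy vectors, and the convention of returning 0.0 for an all-zero vector are illustrative assumptions rather than part of the original method.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity as in Eq. (1); returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def similarity_matrix(src: List[Vector], tgt: List[Vector]) -> List[List[float]]:
    """Eq. (2): entry [i][j] is the similarity of entity i in G1 and entity j in G2."""
    return [[cosine(u, v) for v in tgt] for u in src]


# Hypothetical toy embeddings standing in for Pairwise BERT outputs.
g1 = [[1.0, 0.0], [0.0, 2.0]]
g2 = [[2.0, 0.0], [1.0, 1.0]]
m_s = similarity_matrix(g1, g2)
```

The same helper applies unchanged to the VGG-16 vectors of Equations (4) and (5).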

(2) Visual Feature Extraction

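Entities are often associated with rich image information. To obtain their visual features, we employ the VGG-16 neural network to generate image embeddings. Let the image corresponding to entity $e_i$ be denoted as $I_i$. Using a pretrained VGG-16 model, the image features are extracted, and the visual representation vector $\mathbf{v}_i$ for $e_i$ is derived according to Equation (3):

$$\mathbf{v}_i = \mathrm{VGG16}(I_i) \tag{3}$$

Analogous to the semantic features, visual similarity is measured using cosine similarity, as defined in Equation (4):

$$\mathrm{sim}_v(e_i, e_j) = \frac{\mathbf{v}_i \cdot \mathbf{v}_j}{\lVert \mathbf{v}_i \rVert \, \lVert \mathbf{v}_j \rVert} \tag{4}$$

By computing the similarity among all entity pairs, the visual similarity matrix $M_v$ is obtained, as shown in Equation (5):

$$M_v = \left[ \mathrm{sim}_v(e_i, e_j) \right]_{e_i \in G_1,\; e_j \in G_2} \tag{5}$$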

To effectively integrate semantic and visual information, $M_s$ and $M_v$ are fused through weighted combination to form the final multimodal similarity matrix:

$$M = \alpha M_s + (1 - \alpha) M_v \tag{6}$$

where $\alpha \in [0, 1]$ is a hyperparameter that controls the relative contribution of semantic and visual information.

Based on the multimodal similarity matrix $M$, entity pairs are iteratively selected in descending order of similarity. Once a pair is selected, all other candidate pairs involving either of its two entities are discarded, which keeps the selected pairs one-to-one. This procedure continues until $k$ entity pairs, representing the most similar pairs between $G_1$ and $G_2$ in both semantic and visual features, are obtained. Finally, the automatically generated list of unique, high-similarity entity pairs is used to initiate the iterative process, progressively expanding the seed set and providing reliable initial data support for subsequent model training.
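The fusion in Equation (6) and the one-to-one greedy selection described above can be sketched as follows. This assumes the convex form $\alpha M_s + (1 - \alpha) M_v$ and a dense similarity matrix stored as nested lists; `fuse` and `greedy_pseudo_seeds` are hypothetical helper names, not the authors' code.

```python
from typing import List, Tuple


def fuse(m_s: List[List[float]], m_v: List[List[float]], alpha: float) -> List[List[float]]:
    """Element-wise M = alpha * M_s + (1 - alpha) * M_v (assumed form of Eq. (6))."""
    return [
        [alpha * s + (1.0 - alpha) * v for s, v in zip(row_s, row_v)]
        for row_s, row_v in zip(m_s, m_v)
    ]


def greedy_pseudo_seeds(m: List[List[float]], k: int) -> List[Tuple[int, int]]:
    """Select up to k one-to-one pairs (i, j) in descending order of m[i][j]."""
    cells = sorted(
        ((m[i][j], i, j) for i in range(len(m)) for j in range(len(m[i]))),
        key=lambda cell: cell[0],
        reverse=True,
    )
    used_src, used_tgt = set(), set()
    seeds: List[Tuple[int, int]] = []
    for _, i, j in cells:
        if len(seeds) == k:
            break
        if i in used_src or j in used_tgt:
            continue  # one entity is already matched: drop this association
        seeds.append((i, j))
        used_src.add(i)
        used_tgt.add(j)
    return seeds
```

Sorting every cell costs $O(|G_1||G_2| \log(|G_1||G_2|))$; for large graphs a per-row top-k candidate list would likely be a more practical choice.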

2.2. Dual-Space Embedding Module

In equipment knowledge graphs, entities often exhibit complex hierarchical structures and diverse relational patterns. Traditional single-space embedding methods (e.g., Euclidean) are effective in capturing local information but are often insufficient for modeling global hierarchical structures. By contrast, hyperbolic space, whose volume grows exponentially with radius in the same way that the number of nodes in a tree grows with depth, is well-suited for representing relational hierarchies and tree-like structures.

To accurately represent the multi-level hierarchy and complex local relations in equipment knowledge graphs, we employ a dual-space embedding strategy. The Euclidean space focuses on modeling local interactions between closely related entities, such as components within the same subsystem. In contrast, the hyperbolic space provides an efficient representation of global hierarchies, reflecting the tree-like organization of systems and subsystems. Combining the two spaces yields complementary representations: Euclidean embeddings ensure precise local similarity, while hyperbolic embeddings preserve long-range hierarchical consistency. This dual-space design balances local and global structure, which is particularly beneficial for the hierarchical characteristics of equipment knowledge graphs.

The dual-space embedding module performs joint representation learning in both Euclidean and hyperbolic spaces. Specifically, neighborhood aggregation is first conducted in Euclidean space to capture fine-grained local relations among equipment entities. The resulting representations are then mapped into hyperbolic space through the exponential map (with the logarithmic map providing the inverse transformation) to preserve global hierarchical information. This two-branch embedding process ensures that both local and hierarchical structural cues are encoded consistently. By integrating the learned embeddings from the two spaces, the model obtains robust, geometry-aware representations that enhance entity alignment performance.

(1) Euclidean Space Embedding

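Consider a knowledge graph $G = (V, E)$, where $V$ denotes the set of entities and $E$ denotes the edges representing relations among them. The embedding of each entity $e_i \in V$ in Euclidean space is learned using a GAT, as defined in Equation (7):

$$\mathbf{h}_i^{\mathrm{E}} = \sigma\left( \frac{1}{K} \sum_{k=1}^{K} \sum_{j \in \mathcal{N}_i} \alpha_{ij}^{k} \mathbf{W}^{k} \mathbf{h}_j \right) \tag{7}$$

where $\mathcal{N}_i$ denotes the neighbor set of entity $e_i$; $\sigma(\cdot)$ is a nonlinear activation function; $K$ is the number of attention heads; $\alpha_{ij}^{k}$ and $\mathbf{W}^{k}$ denote the attention coefficients and weight matrix of the $k$-th head, respectively; $\mathbf{h}_j$ is the feature vector of a neighbor; and $\mathbf{h}_i^{\mathrm{E}}$ is the embedding representation of entity $e_i$ in Euclidean space.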

The graph attention network (GAT) assigns each neighbor an attention weight computed from the features of both connected nodes, giving Euclidean embeddings the following properties:

Adaptivity: Through the attention mechanism, the model adaptively aggregates task-relevant neighbor information, thereby improving the discriminative power of the embeddings.

Locality: Euclidean embeddings primarily capture neighborhood-level information, making them well-suited for modeling compact local structures among entities.
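To make Equation (7) concrete, here is a small pure-Python sketch of one multi-head GAT layer. It assumes the standard GAT attention score $\mathrm{LeakyReLU}(\mathbf{a}^\top[\mathbf{W}\mathbf{h}_i \Vert \mathbf{W}\mathbf{h}_j])$ normalized by a softmax over $\mathcal{N}_i$, averages the $K$ heads, and uses ELU for $\sigma$; these choices and all names are assumptions for illustration, not the paper's configuration.

```python
import math
from typing import Dict, List, Tuple

Vec = List[float]
Mat = List[List[float]]  # row-major, shape (out_dim, in_dim)


def matvec(w: Mat, x: Vec) -> Vec:
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def leaky_relu(x: float, slope: float = 0.2) -> float:
    return x if x > 0 else slope * x


def elu(x: float) -> float:
    return x if x > 0 else math.exp(x) - 1.0


def gat_layer(h: Dict[int, Vec], nbrs: Dict[int, List[int]],
              heads: List[Tuple[Mat, Vec]]) -> Dict[int, Vec]:
    """One multi-head GAT layer in the spirit of Eq. (7).

    Each head is (W, a) with len(a) == 2 * out_dim; every neighbour list must be
    non-empty (add i to its own list if self-loops are wanted).
    """
    out: Dict[int, Vec] = {}
    for i, neigh in nbrs.items():
        summed = None
        for w, a in heads:
            z_i = matvec(w, h[i])
            z = {j: matvec(w, h[j]) for j in neigh}
            # e_ij = LeakyReLU(a^T [W h_i || W h_j])
            e = {j: leaky_relu(sum(ak * xk for ak, xk in zip(a, z_i + z_j))) for j, z_j in z.items()}
            top = max(e.values())
            weights = {j: math.exp(s - top) for j, s in e.items()}
            total = sum(weights.values())
            # alpha_ij^k = softmax of e_ij over N_i; aggregate sum_j alpha_ij^k W^k h_j
            agg = [0.0] * len(z_i)
            for j, z_j in z.items():
                coef = weights[j] / total
                agg = [g + coef * v for g, v in zip(agg, z_j)]
            summed = agg if summed is None else [p + q for p, q in zip(summed, agg)]
        out[i] = [elu(v / len(heads)) for v in summed]  # average the K heads, then sigma
    return out
```

Averaging heads (rather than concatenating them) keeps the output dimension equal to a single head's, which is the usual choice for a final GAT layer.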

(2) Hyperbolic Space Embedding

Hyperbolic space, with its negative curvature, is particularly suitable for representing relational hierarchies and tree-like structures in data. In equipment knowledge graphs, the hierarchical relations between components and the whole often exhibit a distinct tree-like structure. Consequently, employing hyperbolic embeddings enables more effective modeling of global hierarchical structures.

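To map embeddings from Euclidean space to hyperbolic space, we employ exponential and logarithmic mappings. Let the Euclidean embedding be $\mathbf{x}$. Its mapping to the Poincaré ball model with curvature $-c$ ($c > 0$) is given by Equation (8):

$$\exp_{\mathbf{0}}^{c}(\mathbf{x}) = \tanh\left(\sqrt{c}\,\lVert \mathbf{x} \rVert\right) \frac{\mathbf{x}}{\sqrt{c}\,\lVert \mathbf{x} \rVert} \tag{8}$$

When $\mathbf{x} = \mathbf{0}$, we define $\exp_{\mathbf{0}}^{c}(\mathbf{0}) = \mathbf{0}$. Conversely, the logarithmic mapping from hyperbolic space back to Euclidean space is given in Equation (9):

$$\log_{\mathbf{0}}^{c}(\mathbf{y}) = \operatorname{artanh}\left(\sqrt{c}\,\lVert \mathbf{y} \rVert\right) \frac{\mathbf{y}}{\sqrt{c}\,\lVert \mathbf{y} \rVert} \tag{9}$$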

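With these two mappings, bidirectional conversion between Euclidean and hyperbolic embeddings is enabled, thereby preserving consistency of information. In this paper, we adopt the HGCN model to capture the hierarchical structures of graphs in hyperbolic space. Specifically, the Euclidean embedding $\mathbf{h}_i^{\mathrm{E}}$ is mapped to hyperbolic space using the exponential mapping, as expressed in Equation (10):

$$\mathbf{h}_i^{\mathrm{H},0} = \exp_{\mathbf{0}}^{c}\left(\mathbf{h}_i^{\mathrm{E}}\right) \tag{10}$$

Here, $\mathbf{h}_i^{\mathrm{H},0}$ denotes the initial embedding of $e_i$ in hyperbolic space. For the $l$-th layer, the subsequent hyperbolic embedding is derived via hyperbolic feature aggregation, as defined in Equation (11):

$$\mathbf{H}^{l+1} = \exp_{\mathbf{0}}^{c}\left( \sigma\left( \mathbf{A} \log_{\mathbf{0}}^{c}\left(\mathbf{H}^{l}\right) \mathbf{W}^{l} \right) \right) \tag{11}$$

where $\mathbf{H}^{l}$ stacks the hyperbolic embeddings $\mathbf{h}_i^{\mathrm{H},l}$ of all entities at layer $l$, $\mathbf{A}$ denotes the symmetrically normalized adjacency matrix, $\sigma(\cdot)$ represents the activation function, and $\mathbf{W}^{l}$ is a trainable weight matrix. Finally, the output of the last layer, $\mathbf{h}_i^{\mathrm{H}}$, represents the final embedding of entity $e_i$ in hyperbolic space.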
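A simplified reading of Equation (11) is sketched below: map to the tangent space at the origin, aggregate with the normalized adjacency and a weight matrix, apply ReLU (assumed for $\sigma$), and map back. This is a hedged illustration, not a full HGCN implementation; it omits components that a full HGCN layer may include, such as hyperbolic bias terms and attention-weighted aggregation, and adding self-loops before normalization is also an assumption.

```python
import math
from typing import Callable, List

Mat = List[List[float]]


def norm_adj(adj: Mat) -> Mat:
    """D^{-1/2} (A + I) D^{-1/2}; adding self-loops is an assumption of this sketch."""
    n = len(adj)
    a = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a]
    return [[a[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]


def matmul(x: Mat, y: Mat) -> Mat:
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]


def _rescale_rows(h: Mat, factor: Callable[[float], float]) -> Mat:
    """Multiply each row by factor(||row||); zero rows stay zero."""
    out = []
    for row in h:
        n = math.sqrt(sum(v * v for v in row))
        out.append([0.0] * len(row) if n == 0.0 else [factor(n) * v for v in row])
    return out


def expmap0(h: Mat, c: float) -> Mat:
    sc = math.sqrt(c)
    return _rescale_rows(h, lambda n: math.tanh(sc * n) / (sc * n))


def logmap0(h: Mat, c: float) -> Mat:
    sc = math.sqrt(c)
    return _rescale_rows(h, lambda n: math.atanh(min(sc * n, 1.0 - 1e-5)) / (sc * n))


def hyperbolic_layer(h: Mat, a_norm: Mat, w: Mat, c: float = 1.0) -> Mat:
    """Reconstructed Eq. (11): H^{l+1} = exp_0^c(ReLU(A log_0^c(H^l) W^l))."""
    tangent = logmap0(h, c)                            # ball -> tangent space at the origin
    agg = matmul(matmul(a_norm, tangent), w)           # neighbourhood aggregation + linear map
    act = [[max(0.0, v) for v in row] for row in agg]  # ReLU assumed for sigma
    return expmap0(act, c)                             # tangent space -> ball
```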

Implementation details. In the Poincaré ball model, the curvature parameter $c$ is kept fixed throughout training for stable optimization, following established practice in prior hyperbolic embedding studies [13, 19]. To ensure numerical stability during the exponential and logarithmic mappings, the norm of each Euclidean vector $\mathbf{x}$ is kept below a fixed bound, and the outputs of the tanh and artanh functions are clipped near the manifold boundary to prevent overflow. Gradient clipping to a fixed range is applied during backpropagation to avoid exploding updates, and all computations are performed in 32-bit floating-point precision. These stabilization techniques support smooth optimization and reproducibility of the dual-space embedding process without altering the overall model architecture.

(3) Loss Function

To preserve the structural properties of Euclidean embeddings without distortion, we employ contrastive learning to enforce consistency between Euclidean and hyperbolic embeddings.

Equation (12) is used to compute the contrastive loss of a single entity $e_i$ between its Euclidean embedding $\mathbf{h}_i^{\mathrm{E}}$ and hyperbolic embedding $\mathbf{h}_i^{\mathrm{H}}$:

$$\ell_{\mathrm{HE}}(e_i) = -\log \frac{\exp\left(\mathrm{sim}\left(\mathbf{h}_i^{\mathrm{E}}, \mathbf{h}_i^{\mathrm{H}}\right)\right)}{\sum_{e_j \in V} \exp\left(\mathrm{sim}\left(\mathbf{h}_j^{\mathrm{E}}, \mathbf{h}_i^{\mathrm{H}}\right)\right)} \tag{12}$$

The numerator measures the similarity between the two embeddings of the current entity, while the denominator sums the similarities between the hyperbolic embedding of $e_i$ and the Euclidean embeddings of all entities. Because of the logarithm and the negative sign, the higher the similarity between the two embeddings of the current entity relative to the other candidates, the smaller the loss. In Equation (12), the denominator iterates over all candidate Euclidean embeddings $\mathbf{h}_j^{\mathrm{E}}$ with $e_j \in V$, where $V$ denotes the set of all entities in the current knowledge graph (or mini-batch). The term $\mathbf{h}_i^{\mathrm{E}}$ in the numerator corresponds to the positive pair of the same entity $e_i$, while all other terms $\mathbf{h}_j^{\mathrm{E}}$, $j \neq i$, represent negative pairs. This contrastive formulation encourages the Euclidean and hyperbolic embeddings of the same entity to be close, while pushing apart embeddings of different entities.
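Equation (12) has the form of an InfoNCE loss. The sketch below computes it for one entity, assuming that hyperbolic embeddings have already been mapped back with $\log_{\mathbf{0}}^{c}$ so that cosine similarity can serve as $\mathrm{sim}(\cdot,\cdot)$; the text does not pin down $\mathrm{sim}$, and no temperature is used. The function names are illustrative.

```python
import math
from typing import List, Sequence

Vec = Sequence[float]


def cos_sim(u: Vec, v: Vec) -> float:
    num = sum(a * b for a, b in zip(u, v))
    den = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return num / den if den > 0 else 0.0


def entity_contrastive_loss(i: int, h_euc: List[Vec], h_hyp: List[Vec]) -> float:
    """Eq. (12) for entity i: -log(exp(s_ii) / sum_j exp(s_ji)), s_ji = sim(h_euc[j], h_hyp[i]).

    h_hyp[i] is assumed to be already mapped back with log_0 so cosine applies.
    """
    logits = [cos_sim(h_euc[j], h_hyp[i]) for j in range(len(h_euc))]
    top = max(logits)  # log-sum-exp with max subtraction for numerical stability
    log_denominator = top + math.log(sum(math.exp(z - top) for z in logits))
    return log_denominator - logits[i]
```

Writing the loss as log-sum-exp minus the positive logit is algebraically identical to the fraction in Equation (12) but avoids overflow when similarities are scaled up.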

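Equation (13) calculates the contrastive loss between two knowledge graphs (e.g., the source graph $G_1$ and the target graph $G_2$):

$$\mathcal{L}_{\mathrm{con}} = \frac{1}{2} \sum_{n=1}^{2} \frac{1}{|V_n|} \sum_{e_i \in V_n} \left( \ell_{\mathrm{HE}}(e_i) + \ell_{\mathrm{EH}}(e_i) \right) \tag{13}$$

Here, $\sum_{n=1}^{2}$ denotes the summation over the two graphs, $\frac{1}{2}$ is a normalization factor, and $|V_n|$ represents the number of entities in knowledge graph $G_n$. The inner sum computes the loss for each entity $e_i$ in graph $G_n$. The expressions $\ell_{\mathrm{HE}}(e_i)$ and $\ell_{\mathrm{EH}}(e_i)$ represent the contrastive losses of entity $e_i$ under the two embedding space combinations: $\ell_{\mathrm{HE}}$ follows Equation (12), and $\ell_{\mathrm{EH}}$ swaps the roles of the two spaces, using the Euclidean embedding as the anchor and the hyperbolic embeddings as candidates. By accumulating these losses, $\mathcal{L}_{\mathrm{con}}$ quantifies the overall consistency of all entities between Euclidean and hyperbolic embeddings across the two knowledge graphs.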
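Equation (13) averages both directions over each graph and then over the two graphs. The sketch below is self-contained and restates the per-entity loss; the input layout (one pair of Euclidean and hyperbolic embedding lists per graph) and the use of cosine similarity are assumptions.

```python
import math
from typing import List, Sequence, Tuple

Vec = Sequence[float]


def cos_sim(u: Vec, v: Vec) -> float:
    num = sum(a * b for a, b in zip(u, v))
    den = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return num / den if den > 0 else 0.0


def info_nce(anchors: List[Vec], candidates: List[Vec]) -> List[float]:
    """Per-entity loss; candidates[i] is the positive for anchors[i], the rest are negatives."""
    losses = []
    for i, anchor in enumerate(anchors):
        logits = [cos_sim(c, anchor) for c in candidates]
        top = max(logits)
        lse = top + math.log(sum(math.exp(z - top) for z in logits))
        losses.append(lse - logits[i])
    return losses


def dual_space_loss(graphs: List[Tuple[List[Vec], List[Vec]]]) -> float:
    """Eq. (13) as sketched: (1/2) * sum_n (1/|V_n|) * sum_i [l_HE(e_i) + l_EH(e_i)].

    graphs holds one (euclidean_embeddings, hyperbolic_embeddings) pair per graph.
    """
    total = 0.0
    for h_euc, h_hyp in graphs:
        l_he = info_nce(h_hyp, h_euc)  # hyperbolic anchor, Euclidean candidates (Eq. (12))
        l_eh = info_nce(h_euc, h_hyp)  # roles of the two spaces swapped
        total += (sum(l_he) + sum(l_eh)) / len(h_euc)
    return total / len(graphs)  # the 1/2 normalization for two graphs
```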

2.3. Adaptive Feature Fusion Module

In multimodal knowledge graph entity alignment tasks, each modality of information (e.g., textual descriptions, images, structural features, relations, and attributes) has its own characteristics. The key challenge lies in effectively fusing these modalities. Traditional feature fusion methods often adopt simple weighted summation or concatenation while ignoring interdependencies among modalities, and therefore underuse the complementary information across modalities. To address this issue, this section proposes a feature fusion method based on fully connected (FC) networks and gating mechanisms. The method dynamically adjusts the weights of different modalities and generates a unified embedding representation for each entity.

Before feature fusion, features must be extracted from each modality. For each entity $e$, the following representations are obtained:

Structural information: Graph Attention Networks (GATs) are used to extract structural information, yielding the structural embedding $\mathbf{h}_e^{s}$.

Semantic information: The pre-trained Pairwise BERT model is employed to encode textual descriptions, resulting in the semantic embedding $\mathbf{h}_e^{t}$.

Relational and attribute information: The bag-of-words model is applied to obtain the relational embedding $\mathbf{h}_e^{r}$ and attribute embedding $\mathbf{h}_e^{a}$.

Visual information: The VGG-16 convolutional neural network is used to extract image features, producing the visual embedding $\mathbf{h}_e^{v}$.

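Since the importance of each modality may vary under different circumstances, a dynamic weighting mechanism is required. In this work, a two-layer fully connected network with ReLU activation is applied to perform nonlinear mapping and generate weight scores for each modality, as shown in Equation (14):

$$\mathbf{g} = \sigma\left( \mathbf{W}_2 \, \mathrm{ReLU}\left( \mathbf{W}_1 \mathbf{x} + \mathbf{b}_1 \right) + \mathbf{b}_2 \right), \quad \mathbf{x} = \left[ \mathbf{h}_e^{s} \,\Vert\, \mathbf{h}_e^{t} \,\Vert\, \mathbf{h}_e^{r} \,\Vert\, \mathbf{h}_e^{a} \,\Vert\, \mathbf{h}_e^{v} \right] \tag{14}$$

Here, $\mathbf{x}$ denotes the concatenated feature vector; $\mathbf{W}_1$ and $\mathbf{W}_2$ are learnable parameter matrices; $\mathbf{b}_1$ and $\mathbf{b}_2$ are bias terms; and $\sigma(\cdot)$ denotes the sigmoid function, which ensures that the weight values lie within the interval $(0, 1)$. In this way, a score vector $\mathbf{g} = [g_s, g_t, g_r, g_a, g_v]$ that assigns weights to all modalities is obtained.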

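Since directly adopting $\mathbf{g}$ may result in scale inconsistency among modality features, softmax normalization is applied so that the weights across all modalities sum to 1, as shown in Equation (15):

$$w_m = \frac{\exp(g_m)}{\sum_{m' \in \{s, t, r, a, v\}} \exp(g_{m'})} \tag{15}$$

Here, $w_m$ denotes the normalized weight of modality $m$. Because each score $g_m$ already lies in $(0, 1)$, each of the five normalized weights stays strictly between $1/(1 + 4\mathrm{e}) \approx 0.084$ and $\mathrm{e}/(\mathrm{e} + 4) \approx 0.405$, where $\mathrm{e}$ is Euler's number, so no single modality can dominate or be neglected entirely. After obtaining the weights of all modalities, the modality features are combined through weighted concatenation to derive the final representation of entity $e$, as shown in Equation (16):

$$\mathbf{h}_e = \left[ w_s \mathbf{h}_e^{s} \,\Vert\, w_t \mathbf{h}_e^{t} \,\Vert\, w_r \mathbf{h}_e^{r} \,\Vert\, w_a \mathbf{h}_e^{a} \,\Vert\, w_v \mathbf{h}_e^{v} \right] \tag{16}$$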

In our design, the adaptive fusion network adopts a two-layer fully connected structure, as defined in Equation (14), to model nonlinear interactions among different modality features. The first fully connected (FC) layer transforms the concatenated modality vector into a 512-dimensional hidden representation, followed by a ReLU activation to introduce nonlinearity. The second FC layer maps this hidden representation to five scalar values corresponding to the five modality types (structural, textual, relational, attribute, and visual). A sigmoid function then constrains the outputs to the range (0, 1), and a subsequent softmax normalization (Equation (15)) ensures that the modality weights sum to one. Dropout with a rate of 0.2 is applied after the ReLU activation to improve generalization.
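The fusion network of Equations (14) to (16) can be sketched in pure Python as follows, with random weights standing in for learned ones. Dropout is omitted because the sketch only covers the inference path, the hidden size is left to the caller (512 in the configuration above), and `init_params` is a hypothetical helper.

```python
import math
import random
from typing import Dict, List, Tuple

MODALITIES = ["structural", "textual", "relational", "attribute", "visual"]
Mat = List[List[float]]


def linear(w: Mat, b: List[float], x: List[float]) -> List[float]:
    return [sum(wi * xi for wi, xi in zip(row, x)) + bi for row, bi in zip(w, b)]


def init_params(in_dim: int, hidden: int, rng: random.Random) -> Tuple[Mat, List[float], Mat, List[float]]:
    """Hypothetical small random initialization standing in for trained weights."""
    w1 = [[rng.uniform(-0.1, 0.1) for _ in range(in_dim)] for _ in range(hidden)]
    w2 = [[rng.uniform(-0.1, 0.1) for _ in range(hidden)] for _ in range(len(MODALITIES))]
    return w1, [0.0] * hidden, w2, [0.0] * len(MODALITIES)


def modality_weights(x, w1, b1, w2, b2) -> List[float]:
    hidden = [max(0.0, v) for v in linear(w1, b1, x)]  # FC + ReLU (dropout omitted at inference)
    g = [1.0 / (1.0 + math.exp(-v)) for v in linear(w2, b2, hidden)]  # Eq. (14): sigmoid scores
    top = max(g)
    exps = [math.exp(s - top) for s in g]
    z = sum(exps)
    return [e / z for e in exps]  # Eq. (15): softmax weights summing to 1


def fuse_entity(feats: Dict[str, List[float]], w1, b1, w2, b2) -> List[float]:
    x = [v for name in MODALITIES for v in feats[name]]  # concatenated input vector
    w = modality_weights(x, w1, b1, w2, b2)
    # Eq. (16): weighted concatenation [w_s h^s || w_t h^t || ... || w_v h^v]
    return [w_m * v for w_m, name in zip(w, MODALITIES) for v in feats[name]]
```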

This configuration is lightweight yet expressive enough to capture inter-modality dependencies without introducing excessive parameters. We found that a single-layer version failed to adequately model nonlinear correlations, while deeper fusion networks (three or more layers) led to slight overfitting and unstable convergence. The empirical comparison in Section 3.8 further indicates that the two-layer configuration achieves the best balance between performance and stability.

2.4. Iterative Constraint Mechanism

In multimodal entity alignment tasks, particularly in equipment-related scenarios, the complexity of the data and the incompleteness of multimodal information make it challenging to directly obtain large-scale, high-quality annotated alignment seeds. To address this, an iterative strategy is introduced. Its core idea is to use a small number of initially generated pseudo-seed pairs as the starting point, and then, through model training and updating, iteratively extract new high-confidence alignment pairs from the unaligned entities. This gradually expands the seed set while simultaneously enhancing the embedding representations of the model. The process is repeated until the number of newly generated entity pairs falls below a predefined threshold, at which point the iteration terminates.

The rationale for introducing the iterative constraint mechanism is to provide a stable and progressive learning framework in the absence of sufficient supervision. Instead of relying solely on a limited initial seed set, the iterative process allows the model to continuously refine alignment quality while controlling error propagation through constraint strategies.

Nevertheless, during the iterative process, erroneous entity pairs may be mistakenly incorporated into the seed set and subsequently propagated during training, leading to the accumulation of errors and a decline in alignment accuracy. To mitigate this, a refined filtering strategy is required to ensure the reliability of newly aligned pairs. This section introduces three constraint strategies, namely bidirectional nearest neighbor matching, similarity threshold filtering, and delayed confirmation, which together form a robust iterative optimization framework.

(1) Bidirectional Nearest Neighbor Constraint.

In equipment knowledge graph alignment, each entity ideally corresponds to a unique match. However, due to heterogeneous data sources and varying entity descriptions, direct similarity-based matching can lead to mismatches. To mitigate this, a bidirectional nearest neighbor constraint is applied.

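For each entity $e_i \in G_1$, its most similar entity in $G_2$ is identified as:

$$\mathrm{NN}_{G_2}(e_i) = \arg\max_{e \in G_2} \mathrm{sim}(e_i, e)$$

and vice versa for $e_j \in G_2$:

$$\mathrm{NN}_{G_1}(e_j) = \arg\max_{e \in G_1} \mathrm{sim}(e, e_j)$$

Only when $e_i$ and $e_j$ are mutual nearest neighbors, i.e., $\mathrm{NN}_{G_2}(e_i) = e_j$ and $\mathrm{NN}_{G_1}(e_j) = e_i$, is the pair regarded as a reliable alignment candidate. This constraint effectively filters asymmetric matches and reduces noise propagation.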
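The bidirectional constraint reduces to a mutual-argmax test over the similarity matrix. A minimal sketch, assuming a dense matrix with $G_1$ entities as rows and $G_2$ entities as columns; ties are broken by the first index, which is an arbitrary choice rather than something the method specifies.

```python
from typing import List, Tuple


def mutual_nearest_neighbors(sim: List[List[float]]) -> List[Tuple[int, int]]:
    """Pairs (i, j) with j = argmax_j' sim[i][j'] and i = argmax_i' sim[i'][j]."""
    if not sim or not sim[0]:
        return []
    rows, cols = len(sim), len(sim[0])
    best_tgt = [max(range(cols), key=lambda j: sim[i][j]) for i in range(rows)]  # NN in G2 of each G1 entity
    best_src = [max(range(rows), key=lambda i: sim[i][j]) for j in range(cols)]  # NN in G1 of each G2 entity
    return [(i, j) for i, j in enumerate(best_tgt) if best_src[j] == i]
```

For example, with `[[0.9, 0.1], [0.8, 0.3]]` both rows prefer column 0, but column 0 prefers row 0, so only `(0, 0)` survives; the asymmetric match of row 1 is filtered out.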

(2) Similarity Threshold Filtering.

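Even if $e_i$ and $e_j$ are mutual nearest neighbors, low-similarity pairs may still be erroneous. To improve confidence, we apply a similarity threshold $\theta$, retaining only those pairs that exceed this threshold:

$$P = \left\{ (e_i, e_j) \;\middle|\; \mathrm{NN}_{G_2}(e_i) = e_j,\ \mathrm{NN}_{G_1}(e_j) = e_i,\ \mathrm{sim}(e_i, e_j) > \theta \right\}$$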

(3) Delayed Confirmation Mechanism.

To further prevent the accumulation of alignment errors, a delayed confirmation strategy is introduced. Instead of immediately adding newly filtered pairs to the next iteration’s seed set, they are first placed into a candidate pool $\mathcal{C}$. If a pair remains consistent with both the bidirectional constraint and the similarity threshold over $n$ consecutive iterations, it is then promoted into the official seed set $\mathcal{S}$. This mechanism mitigates the impact of transient noise and improves the reliability of incremental learning.
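One way to realize the candidate pool $\mathcal{C}$ is a per-pair streak counter that resets whenever a pair fails the checks, so that only pairs valid in $n$ consecutive iterations reach $\mathcal{S}$. The class below is a hypothetical sketch of that bookkeeping; the default $n = 3$ mirrors the setting reported in Section 3.3.

```python
from typing import Dict, Hashable, Iterable, Set, Tuple

Pair = Tuple[Hashable, Hashable]


class DelayedConfirmation:
    """Candidate pool C with streak counters; pairs valid n times in a row join the seed set S."""

    def __init__(self, n: int = 3) -> None:
        self.n = n
        self.streak: Dict[Pair, int] = {}  # the candidate pool C
        self.seeds: Set[Pair] = set()      # the official seed set S

    def update(self, valid_pairs: Iterable[Pair]) -> Set[Pair]:
        """Record this iteration's filtered pairs and return those newly promoted to S."""
        valid = set(valid_pairs) - self.seeds
        # a pair missing from this round loses its streak (it is dropped from C)
        self.streak = {p: self.streak.get(p, 0) + 1 for p in valid}
        promoted = {p for p, count in self.streak.items() if count >= self.n}
        for p in promoted:
            del self.streak[p]
        self.seeds |= promoted
        return promoted
```

Resetting the streak on any miss is what makes the requirement "consecutive"; a more lenient variant could decay the counter instead.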

(4) Overall Algorithm.

The entire iterative constraint process is summarized in Algorithm 1, which integrates all aforementioned steps into a reproducible procedure. This explicit pseudocode ensures clarity and replicability for future studies.

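Algorithm 1 Iterative Constraint Mechanism for Pseudo-Seed Expansion

- Require: knowledge graphs $G_1$, $G_2$; initial pseudo-seed set $\mathcal{S}$; similarity threshold $\theta$; confirmation period $n$; maximum number of iterations $T$; stopping threshold on the number of new pairs
- Ensure: expanded seed set $\mathcal{S}$
- 1: for $t = 1$ to $T$ do
- 2: Step 1: Train the model using $\mathcal{S}$ to update the embeddings of $G_1$ and $G_2$.
- 3: Step 2: For each $e_i \in G_1$ find its nearest $e_j$ in $G_2$; for each $e_j \in G_2$ find its nearest $e_i$ in $G_1$.
- 4: $P \leftarrow \{(e_i, e_j) \mid \mathrm{NN}_{G_2}(e_i) = e_j,\ \mathrm{NN}_{G_1}(e_j) = e_i\}$.
- 5: Step 3: $P \leftarrow \{(e_i, e_j) \in P \mid \mathrm{sim}(e_i, e_j) > \theta\}$.
- 6: Step 4: Add $P$ to the candidate pool $\mathcal{C}$. Promote pairs that are valid for $n$ consecutive iterations to $\mathcal{S}$.
- 7: if the number of new pairs is below the stopping threshold then
- 8: break
- 9: end if
- 10: end for
- 11: return $\mathcal{S}$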
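Putting the pieces together, Algorithm 1 can be sketched as a Python loop. The callable `train_and_score` is a hypothetical stand-in for training the model on the current seeds and returning a $G_1 \times G_2$ similarity matrix; stopping when fewer than `min_new` pairs pass the filters, and skipping pairs that conflict with existing seeds, are assumptions about details the pseudocode leaves open.

```python
from typing import Callable, Dict, List, Set, Tuple

Pair = Tuple[int, int]


def iterative_expansion(
    seeds: Set[Pair],
    train_and_score: Callable[[Set[Pair]], List[List[float]]],
    theta: float,
    n: int = 3,
    max_iters: int = 10,
    min_new: int = 1,
) -> Set[Pair]:
    """Sketch of Algorithm 1; train_and_score is a hypothetical hook that trains on the
    current seeds and returns a G1 x G2 similarity matrix."""
    seeds = set(seeds)
    streak: Dict[Pair, int] = {}  # candidate pool C with consecutive-validity counts
    for _ in range(max_iters):
        sim = train_and_score(seeds)                                    # Step 1
        rows, cols = len(sim), len(sim[0])
        best_tgt = [max(range(cols), key=lambda j: sim[i][j]) for i in range(rows)]
        best_src = [max(range(rows), key=lambda i: sim[i][j]) for j in range(cols)]
        mutual = {(i, j) for i, j in enumerate(best_tgt) if best_src[j] == i}  # Step 2
        used_src = {i for i, _ in seeds}
        used_tgt = {j for _, j in seeds}
        valid = {(i, j) for i, j in mutual                              # Step 3 (+ one-to-one check)
                 if sim[i][j] > theta and i not in used_src and j not in used_tgt}
        if len(valid) < min_new:                                        # stopping criterion
            break
        streak = {p: streak.get(p, 0) + 1 for p in valid}               # Step 4
        promoted = {p for p, count in streak.items() if count >= n}
        for p in promoted:
            del streak[p]
        seeds |= promoted
    return seeds
```

Checking the filtered pairs rather than the promoted ones matters here: with delayed confirmation, the first $n - 1$ iterations promote nothing, so a stop test on promoted pairs alone would end the loop immediately.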

It is worth noting that all backbone networks, including VGG-16, Pairwise BERT, and GAT, are employed in their standard forms without structural modification. This design choice ensures reproducibility and fair comparison with existing multimodal alignment methods. The main contribution of UMEAD lies in the integration of these components through the dual-space embedding and iterative constraint mechanisms, rather than altering their internal architectures.

2.5. Model Alignment Loss

The model’s loss function consists of two parts: (1) the contrastive loss for aligning Euclidean and hyperbolic space embeddings, and (2) the margin-based alignment loss for the entity alignment task.

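The contrastive loss for aligning Euclidean and hyperbolic space embeddings is given by Equation (13). The margin-based alignment loss is calculated as shown in Equation (21):

$$\mathcal{L}_{\mathrm{align}} = \sum_{(e_1, e_2) \in S} \sum_{(e_1', e_2') \in S'} \left[ d\left(\mathbf{h}_{e_1}, \mathbf{h}_{e_2}\right) + \gamma - d\left(\mathbf{h}_{e_1'}, \mathbf{h}_{e_2'}\right) \right]_{+} \tag{21}$$

where $[x]_{+}$ represents the positive part of $x$, i.e., $[x]_{+} = \max(0, x)$; $d(\cdot, \cdot)$ denotes the distance between two embeddings; $e_1$ is an entity from $G_1$; $e_2$ is an entity from $G_2$; $\mathbf{h}_{e_1}$ is the composite embedding representation of $e_1$; $\mathbf{h}_{e_2}$ is the composite embedding representation of $e_2$; $\gamma$ is the margin parameter that defines the distance between positive and negative samples; and $S$ and $S'$ are the sets of positive and negative samples, respectively, with $S'$ being the set of negative samples generated through a nearest-neighbor sampling approach.

By combining these two losses, the final training objective is obtained, as shown in Equation (22):

$$\mathcal{L} = \mathcal{L}_{\mathrm{align}} + \lambda \mathcal{L}_{\mathrm{con}} \tag{22}$$

where $\lambda$ is a hyperparameter used to balance the impact of the two losses on the overall optimization objective.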
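Equations (21) and (22) can be sketched as follows. The Euclidean (L2) distance for $d(\cdot,\cdot)$, the hard-negative sampler that replaces the target entity with its nearest neighbors in $G_2$, pairing each positive with its own sampled negatives as a way of realizing $S'$, and placing $\lambda$ on the contrastive term are all assumptions; the helper names are hypothetical.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple

Vec = Sequence[float]
Pair = Tuple[int, int]


def l2(u: Vec, v: Vec) -> float:
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def nearest_negatives(pair: Pair, emb2: Dict[int, Vec], k: int) -> List[Pair]:
    """Hypothetical hard-negative sampler: replace e2 by its k nearest G2 entities (by L2)."""
    e1, e2 = pair
    others = sorted((j for j in emb2 if j != e2), key=lambda j: l2(emb2[j], emb2[e2]))
    return [(e1, j) for j in others[:k]]


def margin_alignment_loss(pos: List[Pair], emb1: Dict[int, Vec], emb2: Dict[int, Vec],
                          gamma: float = 1.0, k: int = 2,
                          dist: Callable[[Vec, Vec], float] = l2) -> float:
    """Eq. (21) as sketched: sum over positives and their negatives of [d_pos + gamma - d_neg]_+."""
    total = 0.0
    for p in pos:
        d_pos = dist(emb1[p[0]], emb2[p[1]])
        for n1, n2 in nearest_negatives(p, emb2, k):
            total += max(0.0, d_pos + gamma - dist(emb1[n1], emb2[n2]))
    return total


def total_loss(l_align: float, l_con: float, lam: float) -> float:
    """Eq. (22) as sketched: L = L_align + lambda * L_con (placement of lambda assumed)."""
    return l_align + lam * l_con
```

Nearest-neighbor negatives are "hard" by construction: distant negatives such as entity 1 in a toy example contribute zero once their distance exceeds $d_{\mathrm{pos}} + \gamma$, so the gradient signal comes mostly from confusable candidates.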

3. Experiment

3.1. Datasets

To comprehensively evaluate the proposed UMEAD framework, we conduct experiments on both the Encyclopedia Media Multimodal Entity Alignment Dataset (EMMEAD) and the public multimodal benchmark MMKG [8].

Proprietary dataset (EMMEAD). The EMMEAD dataset is constructed to capture multimodal and hierarchical characteristics of equipment-related knowledge. It contains three main categories of entities:

Ships: 4 major categories and 28 subcategories, including passenger ships (e.g., high-speed passenger ships, tourist ships), cargo ships (e.g., dry cargo, container, roll-on/roll-off), auxiliary transport ships (e.g., supply ships, hospital ships), and special-purpose ships (e.g., tugboats, research ships).

Aircraft: 15 categories, including cargo aircraft, passenger aircraft, medical rescue aircraft, business jets, survey aircraft, and others.

Other related entity types: 11 categories, such as countries/regions, manufacturers, institutions, enterprises, airports, ports, electronic equipment, logistics facilities, and air routes.

The dataset integrates relational triples, attribute triples, and visual data (images). It also contains SameAs links between the encyclopedia and media sources to support entity alignment. Due to the sensitivity of military-related information, this dataset is not publicly available. Its statistics are summarized in Table 3.

Public datasets (MMKG). We also evaluate on the multimodal entity alignment dataset MMKG [8], which combines three widely used knowledge bases: Freebase [20], DBpedia [21], and YAGO [22]. Specifically, FB15K-DB15K is constructed from FB15K (a subset of Freebase) and DB15K (a subset of DBpedia), while FB15K-YAGO15K is derived from FB15K and YAGO15K (a subset of YAGO).

Since many original image links in MMKG are outdated or invalid, entity-related images are supplemented through controlled web retrieval from publicly accessible sources (e.g., Wikipedia, Wikimedia Commons, and major search engines), following the protocols of prior multimodal alignment studies [9, 23]. All collected images are de-duplicated via perceptual hashing and manually screened to remove irrelevant content, so that only representative and non-redundant samples are used. To avoid data leakage and maintain fair comparison, the retrieved images are used solely for experimental evaluation. This practice keeps the dataset consistent with the MMKG benchmark while mitigating the impact of missing modalities.

3.2. Evaluation Metrics

To evaluate the alignment performance, we adopt two widely used metrics: Hits@k and Mean Reciprocal Rank (MRR). These metrics are standard in knowledge graph alignment and information retrieval, as they jointly measure both ranking quality and retrieval accuracy.

Hits@k. Hits@k calculates the proportion of test entities whose correct counterpart appears among the top-$k$ ranked candidates. It reflects the model’s ability to rank the true counterpart among the most relevant predictions. Formally:

$$\mathrm{Hits@}k = \frac{1}{N} \sum_{i=1}^{N} \mathbb{I}\left(\mathrm{rank}_i \le k\right)$$

where $N$ denotes the number of test entities, $\mathrm{rank}_i$ is the rank of the correct counterpart for the $i$-th entity, and $\mathbb{I}(\cdot)$ is an indicator function:

$$\mathbb{I}(x) = \begin{cases} 1, & \text{if } x \text{ holds} \\ 0, & \text{otherwise} \end{cases}$$

Mean Reciprocal Rank (MRR). MRR measures the average reciprocal rank of the true counterparts across all test entities, thus providing a more fine-grained evaluation of ranking performance:

$$\mathrm{MRR} = \frac{1}{N} \sum_{i=1}^{N} \frac{1}{\mathrm{rank}_i}$$

Higher values of Hits@k and MRR indicate better alignment performance.
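Both metrics are simple functions of the rank list. A sketch, assuming 1-based ranks and counting ties against the true candidate, which is a conservative choice that the text does not specify.

```python
from typing import List, Sequence


def rank_of_truth(scores: Sequence[float], true_idx: int) -> int:
    """1-based rank of the true candidate; ties count against it (an assumption)."""
    s = scores[true_idx]
    return 1 + sum(1 for j, v in enumerate(scores) if j != true_idx and v >= s)


def hits_at_k(ranks: List[int], k: int) -> float:
    return sum(1 for r in ranks if r <= k) / len(ranks)


def mrr(ranks: List[int]) -> float:
    return sum(1.0 / r for r in ranks) / len(ranks)
```

For ranks `[1, 3, 2, 10]`, Hits@1 is 0.25 while MRR is about 0.483, showing how MRR still rewards near misses that Hits@1 ignores.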

Statistical reliability. To ensure the robustness and reproducibility of the reported results, all experiments are conducted over multiple independent runs with different random seeds. This procedure mitigates the influence of random initialization and sampling variations, providing statistically reliable estimates of model performance.

In addition, to verify the significance of improvements over strong baselines such as UMAEA and MCLEA, paired significance tests (a two-tailed paired t-test and the Wilcoxon signed-rank test) are performed on the per-run results. Differences with p < 0.05 are considered statistically significant, although significance is not explicitly annotated in the result tables for clarity. This evaluation protocol follows standard practices in recent alignment studies and helps ensure that the reported gains reflect consistent algorithmic advantages rather than random fluctuations.

3.3. Experimental Setup

In this experiment, the hidden layer size of the GAT is set to 300, the output dimension of the VGG-16 model is 4096, and the encoding dimension for relationships and attributes is set to 1000. During training, two configurations are considered: the purely unsupervised setting (UMEAD) and a semi-supervised variant (UMEAD-s) for controlled comparison. UMEAD relies entirely on automatically generated pseudo-seeds rather than manually annotated alignment pairs, whereas UMEAD-s additionally uses a small proportion of gold alignment seeds.

In the unsupervised UMEAD configuration, the model starts with a small set of high-confidence pseudo-aligned entity pairs automatically produced by the pseudo-seed generation module; no ground-truth seeds are used at any stage. The “alignment seed ratio” reported in Table 4 refers to the proportion of pseudo-seeds relative to the total number of candidate alignments at initialization, rather than human-labeled seeds. For consistency, the same parameter settings are used for the semi-supervised UMEAD-s variant, where a subset of gold alignments (20%) is provided only for reference comparison in certain ablation scenarios.

The pseudo-seed generation weight parameter $\alpha$ and the number of pseudo-seeds $k$ are set as part of the hyperparameter configuration discussed below. Other parameters are listed in Table 4, and the experimental environment is summarized in Table 5.

To ensure clarity, all subsequent tables explicitly specify whether the results correspond to UMEAD (unsupervised, 0% gold seeds) or UMEAD-s (semi-supervised, with a small proportion of gold seeds for controlled comparison).

The hyperparameters listed in Table 4 were chosen to balance empirical validation and established practice, and their final defaults were confirmed by the sensitivity analysis reported in Section 3.8. In practice we proceeded as follows. For the core fusion and loss weights ($\alpha$, $\lambda$), we performed small-scale grid-style tuning on a held-out validation split (evaluating coarse candidate values) and selected the values that offered the best trade-off between multimodal fusion quality and alignment stability. The pseudo-seed related parameters (initial seed count $k$ and similarity threshold $\theta$) were set after preliminary experiments that examined the balance between seed diversity and noise: the chosen $k$ provides sufficient coverage, while the chosen $\theta$ effectively filters low-confidence pairs. Architectural and optimizer defaults (embedding dimension, GAT hidden size, VGG output dimension, relation/attribute encoding size, learning rate, weight decay, batch size, and number of epochs) follow commonly used settings in recent EA and multimodal works and were verified to converge stably on our datasets; specifically, embedding dimension = 100 and GAT hidden size = 300 were adopted for a good trade-off between representation capacity and computational cost. The delayed confirmation period $n$ was set conservatively to 3 to reduce transient errors during iterative expansion. We refer readers to Section 3.8 for the full sensitivity plots and a brief discussion showing that model performance is relatively stable within nearby parameter ranges.

3.4. Comparative Experiments

To comprehensively evaluate the performance of the proposed unsupervised multimodal entity alignment model (UMEAD) and its semi-supervised variant (UMEAD-s), we compare them with a series of representative baseline methods across three datasets: FB15K-DB15K, FB15K-YAGO15K, and EMMEAD. UMEAD-s serves as a semi-supervised version of UMEAD, where the pseudo-seed generation module is replaced with a small set of pre-aligned seed pairs that are iteratively expanded during training.

Baseline methods. The selected baselines span four main categories:

Structure-based method: MTransE [3] extends the TransE framework to cross-graph settings by jointly learning entity and relation embeddings across different knowledge graphs. It serves as a fundamental and widely used baseline for structure-only alignment.

GNN-based methods: GCN-Align [24] employs Graph Convolutional Networks to capture neighborhood information for alignment; RDGCN [25] models interactions between the entity graph and its relational dual graph; HGCN [19] jointly learns entity and relation embeddings without pre-aligned relation seeds; and DSEA [26] integrates multimodal features through self-attention to enhance entity matching.

Semi-supervised methods: BootEA [4] adopts a bootstrapping framework to iteratively label new aligned pairs; NAEA [27] employs neighborhood-aware attention to capture structural dependencies; SEA [28] leverages semi-supervised adversarial training to mitigate degree distribution bias; and RNM [29] enhances alignment via relation-aware neighborhood matching.

Unsupervised methods: IMUSE [30] jointly considers relation and attribute triples for alignment; MCLEA [9] applies multimodal contrastive learning to generate pseudo-seeds; SelfKG [31] adopts self-supervised negative sampling; UMAEA [23] addresses missing visual modalities for robust multimodal alignment; and MMEA [32] performs joint visual–textual embedding learning with static multimodal fusion for entity matching.

For all baseline models, we obtained results on our datasets by running the authors’ official implementations with the hyperparameter settings reported in their papers. All experiments used the same data partitions, preprocessing procedures, and evaluation metrics as UMEAD, ensuring consistent experimental conditions across methods.
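All comparisons in this section are reported in Hits@k and MRR. As a reminder of how these metrics are computed, the following is a minimal sketch assuming a dense source-by-target similarity matrix and exactly one gold target per source entity. The function names are illustrative, and ties are resolved optimistically (only strictly higher scores push the gold entity down), which may differ from the tie handling in the evaluation scripts behind the tables.

```python
from typing import List, Sequence, Tuple


def rank_of_gold(scores: Sequence[float], gold: int) -> int:
    """1-based rank of the gold candidate when candidates are sorted by descending score."""
    gold_score = scores[gold]
    # Optimistic tie handling: only strictly higher scores count against the gold entity.
    return 1 + sum(1 for s in scores if s > gold_score)


def hits_and_mrr(sim: List[List[float]], gold: List[int], k: int = 1) -> Tuple[float, float]:
    """sim[i][j]: similarity of source entity i to target j; gold[i]: index of i's true match."""
    ranks = [rank_of_gold(row, g) for row, g in zip(sim, gold)]
    hits_at_k = sum(1 for r in ranks if r <= k) / len(ranks)
    mrr = sum(1.0 / r for r in ranks) / len(ranks)
    return hits_at_k, mrr
```

For a 3×3 toy matrix in which two gold targets are ranked first and one is ranked second, Hits@1 is 2/3 and MRR is (1 + 1/2 + 1)/3 ≈ 0.83.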

Results of UMEAD-s.

Table 6 reports the performance of UMEAD-s against the GNN-based and semi-supervised methods. Traditional GNN baselines (e.g., GCN-Align, RDGCN) achieve low accuracy because they mainly exploit structural information and ignore multimodal cues. HGCN improves on them by jointly learning entity and relation embeddings, but its performance remains limited. DSEA outperforms the other GNN-based models owing to its adaptive self-attention mechanism. Among the semi-supervised methods, RNM performs best, confirming the benefit of relation-aware neighborhood modeling. Nevertheless, UMEAD-s achieves higher performance on FB15K-YAGO15K and EMMEAD, with Hits@1 values of 31.45% and 34.85%, respectively, demonstrating the effectiveness of combining dual-space embeddings with multimodal pseudo-seeds under limited supervision. We attribute this improvement to the joint learning of Euclidean and hyperbolic embeddings, which enables UMEAD-s to capture both local relational similarity and global hierarchical semantics that the structure-based, GNN-based, and semi-supervised baselines fail to represent effectively. By integrating graph topology with multimodal cues, UMEAD-s also achieves richer feature interactions and more comprehensive representations than models that rely solely on structural or unimodal information. Moreover, multimodal pseudo-seed expansion reduces noise during the iterative process, yielding more reliable alignment pairs.

Results of UMEAD.

Table 7 compares UMEAD with the unsupervised baselines. IMUSE and SelfKG underperform on all datasets, with Hits@1 below 23%, highlighting the limitations of relying solely on structural and attribute triples. MCLEA achieves stronger results by leveraging multimodal contrastive learning but still struggles to capture complex hierarchical patterns. UMAEA performs best among the baselines, particularly when visual modalities are incomplete. Nevertheless, UMEAD surpasses UMAEA on FB15K-YAGO15K and EMMEAD, with Hits@1 improvements of 1.56% and 6.05%, respectively. This indicates that dual-space embeddings combined with iterative pseudo-seed expansion yield superior performance, especially in equipment-oriented multimodal KGs where both local and global structures are critical. We attribute this advantage to the geometric complementarity of the two spaces: the Euclidean component preserves local neighborhood consistency, while the hyperbolic component captures hierarchical dependencies, together producing a balanced and expressive representation that integrates multimodal information more effectively. Additionally, the iterative constraint mechanism filters low-confidence pairs, preventing error accumulation during unsupervised training and further improving robustness over existing multimodal and unimodal baselines.

Summary. Overall, UMEAD and UMEAD-s achieve higher alignment accuracy than the representative baselines. The results highlight three key findings: (1) multimodal information provides substantial benefits over structure-only baselines; (2) dual-space embeddings effectively model both local neighborhood and global hierarchical features; and (3) iterative pseudo-seed expansion enhances alignment robustness, reducing dependence on manual labels while maintaining high performance on real-world multimodal equipment knowledge graphs. In particular, the integration of geometry-aware embeddings and adaptive fusion enables UMEAD to align entities with higher semantic precision, which explains its consistent gains across datasets.

Further Analysis of Results. Beyond the overall comparison, we analyze the results from three perspectives. First, the clear gap between UMEAD(-s) and traditional embedding-based methods such as MTransE confirms that incorporating multimodal information effectively mitigates the structural sparsity problem in equipment knowledge graphs. In addition, UMEAD(-s) consistently outperforms GNN-based models (e.g., GCN-Align, RDGCN, HGCN), indicating that multimodal cues provide complementary information beyond structural connections. Second, while advanced multimodal methods such as MMEA and other contrastive or fusion-based approaches achieve strong results through visual–textual integration, UMEAD surpasses them by introducing a dual-space embedding mechanism that simultaneously captures local relational regularities and global hierarchical semantics, particularly on FB15K-YAGO15K, where hierarchical relations are dominant. Third, compared with semi-supervised and unsupervised competitors, the iterative pseudo-seed mechanism enhances robustness by filtering noisy alignments during training, which helps ensure stable convergence and prevents overfitting on small seed sets. Collectively, these analyses indicate that the improvements of UMEAD are not incidental but arise from its architectural innovations and adaptive learning mechanisms.

3.5. Experiments on the Number of Pseudo-Seeds

To examine the sensitivity of UMEAD to the size of the initial pseudo-seed set, we varied the threshold parameter k in the pseudo-seed generation module, thereby generating seed sets of different scales.

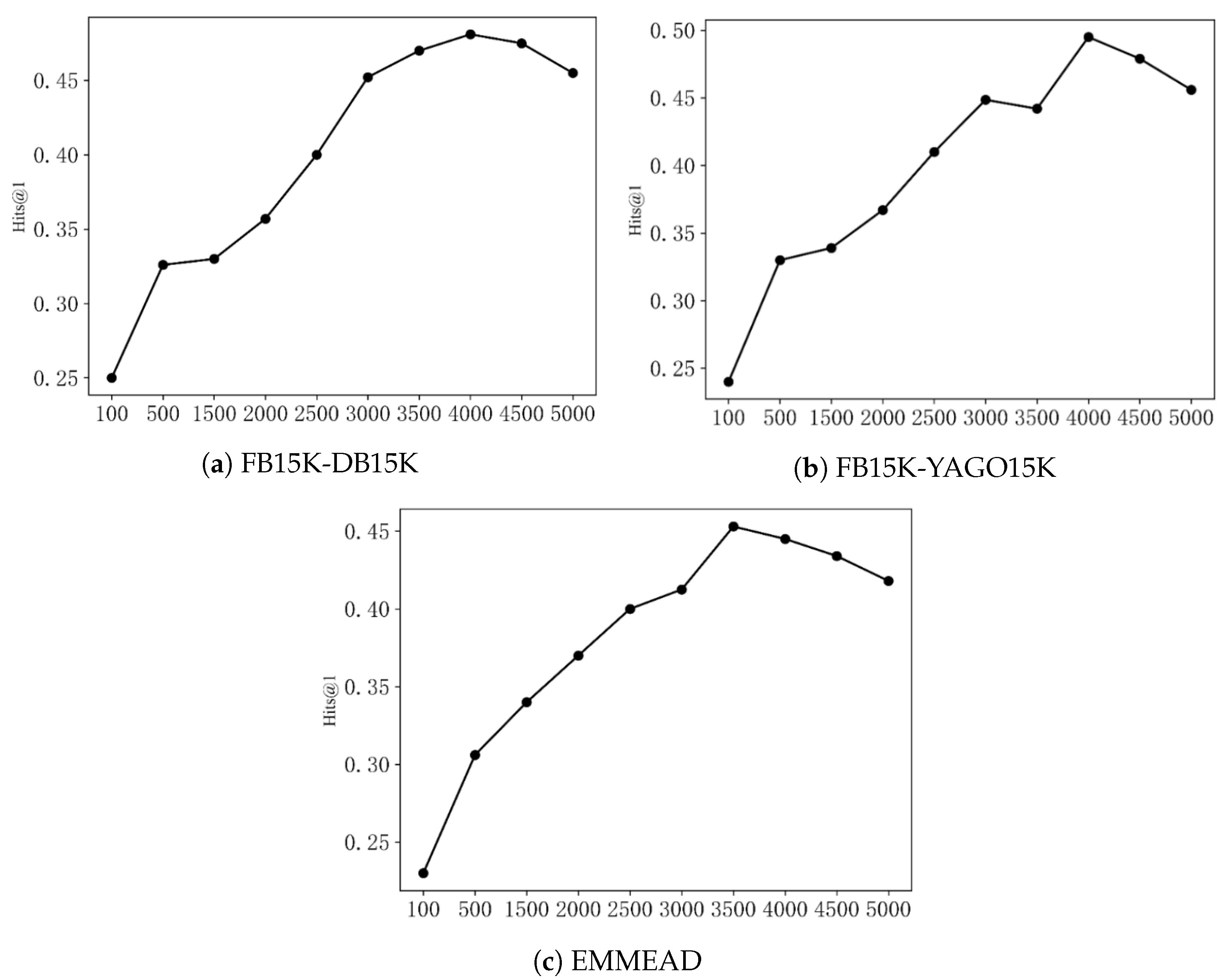

Figure 2 summarizes the results on the three datasets.

The overall trend shows that increasing the number of pseudo-seeds initially improves alignment performance, but performance declines once the seed set grows beyond an optimal scale. Specifically, the best Hits@1 values are observed when the number of pseudo-seeds reaches approximately 3500 on EMMEAD and around 4000 on both FB15K-DB15K and FB15K-YAGO15K. Notably, even with as few as 500 pseudo-seeds, Hits@1 remains above 30% on all datasets, demonstrating the robustness of UMEAD under low-resource conditions.

The decline observed at larger seed sizes is likely due to noise introduced during pseudo-seed expansion. While a moderate number of pseudo-seeds effectively captures key multimodal features (e.g., text and image cues of naval vessels), excessive expansion increases the probability of including low-quality or noisy pairs, which can obscure fine-grained structural distinctions and degrade performance.
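The effect of k can be illustrated with a small sketch of size-controlled pseudo-seed selection. It assumes, hypothetically, that visual and textual similarities are fused linearly with a weight w (0.4 here, inside the [0.3, 0.4] range discussed in Section 3.8, and applied to the visual term by assumption), that candidates must be mutual nearest neighbours, and that k caps the number of pairs kept. These are illustrative assumptions, not the exact procedure of Section 2.1.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Seed = Tuple[int, int, float]  # (source index, target index, fused similarity)


def fuse_similarities(visual: Matrix, text: Matrix, w: float) -> Matrix:
    """Hypothetical linear fusion: w weights visual similarity, (1 - w) textual similarity."""
    return [[w * v + (1.0 - w) * t for v, t in zip(v_row, t_row)]
            for v_row, t_row in zip(visual, text)]


def generate_pseudo_seeds(sim: Matrix, k: int) -> List[Seed]:
    """Keep the k most confident mutual-nearest-neighbour pairs of a similarity matrix."""
    n_src, n_tgt = len(sim), len(sim[0])
    best_tgt = [max(range(n_tgt), key=lambda j: sim[i][j]) for i in range(n_src)]
    best_src = [max(range(n_src), key=lambda i: sim[i][j]) for j in range(n_tgt)]
    # A pair is a candidate only if each entity is the other's nearest neighbour.
    mutual = [(i, j, sim[i][j]) for i, j in enumerate(best_tgt) if best_src[j] == i]
    # Sort by confidence; a larger k admits pairs with lower fused similarity.
    mutual.sort(key=lambda seed: seed[2], reverse=True)
    return mutual[:k]


# Toy demo with made-up 3x3 similarities (not data from the paper).
visual = [[0.9, 0.2, 0.1], [0.3, 0.7, 0.2], [0.1, 0.3, 0.4]]
text = [[0.8, 0.1, 0.2], [0.2, 0.9, 0.1], [0.2, 0.1, 0.5]]
fused = fuse_similarities(visual, text, w=0.4)
for k in (2, 3):
    print(k, [(i, j, round(s, 2)) for i, j, s in generate_pseudo_seeds(fused, k)])
```

On this toy input, k = 2 keeps pairs (0, 0) and (1, 1) with fused similarities of about 0.84 and 0.82; raising k to 3 also admits (2, 2), whose fused similarity is only about 0.46. This mirrors, in miniature, how a larger seed budget lets less reliable pairs into the seed set.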

In summary, these experiments confirm that UMEAD achieves stable alignment with relatively few pseudo-seeds, and that carefully controlling the scale of the pseudo-seed set is critical to balancing informativeness and noise in multimodal equipment knowledge graph alignment.

3.6. Ablation Study

To comprehensively evaluate the contribution of each functional module in UMEAD, we conducted a series of ablation experiments isolating the effects of the three core components introduced in Section 2: (1) the pseudo-seed generation framework (which integrates multimodal feature extraction and adaptive feature fusion), (2) the dual-space embedding strategy (combining Euclidean and hyperbolic representations), and (3) the iterative constraint mechanism for progressive alignment refinement. Each ablation variant disables or simplifies one or more of these components while keeping the remaining parts intact, enabling a complete and interpretable module-level evaluation.

Four ablation variants were constructed, namely UMEAD(-se), UMEAD(-v), UMEAD(-il), and UMEAD(-h). UMEAD(-se) excludes semantic information and uses only visual features to generate pseudo-seeds, while UMEAD(-v) removes visual information and relies solely on textual semantics. Together, these two variants reveal the effect of the multimodal pseudo-seed generation and adaptive feature fusion modules, since removing either modality prevents the fusion mechanism from functioning effectively. UMEAD(-il) disables the iterative constraint mechanism for seed expansion, while UMEAD(-h) removes hyperbolic space embedding, using only Euclidean embeddings for entity representation. The performance of these variants was compared against the complete UMEAD model.

As shown in Table 8, excluding visual information in UMEAD(-v) causes the largest performance drop. On the EMMEAD dataset, Hits@1 decreases by 15.63% compared with the complete model, indicating that visual information plays a decisive role in distinguishing equipment entities, as image features contain unique structural and appearance cues that complement textual semantics. Likewise, UMEAD(-se), which removes semantic features, suffers a notable degradation, with MRR reduced by 13.01% on FB15K-DB15K. This demonstrates the essential role of semantic information in capturing naming variations, textual descriptions, and hierarchical relationships that visual information alone cannot represent effectively. Together, these results highlight the necessity of multimodal integration: when both modalities are used jointly, the adaptive fusion mechanism dynamically balances their contributions, leading to substantially higher alignment reliability and robustness.

Although the adaptive feature fusion mechanism is not independently ablated, its contribution is implicitly reflected in the performance gap between UMEAD(-se)/UMEAD(-v) and the complete UMEAD model. These single-modality variants represent extreme cases where adaptive fusion cannot operate. The consistent performance improvement of the full model thus verifies the fusion mechanism’s effectiveness in mitigating modality bias and enhancing multimodal complementarity.
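To make this degenerate case concrete, here is a minimal sketch of softmax-gated modality fusion, assuming one scalar gate logit per modality (learned in practice, fixed here) and treating a removed modality as missing. The function `adaptive_fuse` is hypothetical and only illustrates the general technique. With a single remaining modality, its weight is forced to 1, so the gate has nothing to balance, which is the situation that UMEAD(-se) and UMEAD(-v) emulate.

```python
import math
from typing import Dict, List, Optional, Tuple


def adaptive_fuse(
    embeddings: Dict[str, Optional[List[float]]],
    logits: Dict[str, float],
) -> Tuple[List[float], Dict[str, float]]:
    """Softmax-weighted fusion over the modalities that are actually present.

    embeddings maps a modality name to its vector (None if the modality is missing);
    logits holds one gate score per modality (hypothetical; learned in a real model).
    """
    present = [m for m, e in embeddings.items() if e is not None]
    if not present:
        raise ValueError("at least one modality must be present")
    # Numerically stable softmax restricted to the present modalities.
    top = max(logits[m] for m in present)
    exps = {m: math.exp(logits[m] - top) for m in present}
    total = sum(exps.values())
    weights = {m: exps[m] / total for m in present}
    dim = len(embeddings[present[0]])
    fused = [sum(weights[m] * embeddings[m][d] for m in present) for d in range(dim)]
    return fused, weights
```

With equal logits and both modalities present, each receives weight 0.5; dropping the text vector leaves the visual modality with weight 1.0 regardless of the logits.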

In the case of UMEAD(-il), where the iterative constraint mechanism is disabled, Hits@1 decreases by more than 8.0% on average across all datasets. Without iterative refinement, error propagation from noisy seeds cannot be effectively mitigated, and the alignment process becomes vulnerable to compounding inaccuracies. The iterative mechanism helps eliminate low-quality pairs and progressively refines the pseudo-seed pool with more reliable alignments, demonstrating its critical role in ensuring stability and robustness.
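The filter-then-expand behaviour described above can be sketched as one round of iterative seed refinement. This is an assumption-laden illustration rather than the paper's implementation: it supposes that the similarity matrix is re-estimated between rounds (for example, after retraining), that existing pairs falling below a threshold `tau` are dropped, and that new pairs must be mutual nearest neighbours among still-unaligned entities with similarity of at least `tau` (0.8 by default, matching the iteration similarity threshold in Section 3.8). The function name `expand_round` is hypothetical.

```python
from typing import List, Set, Tuple

Pair = Tuple[int, int]


def expand_round(sim: List[List[float]], seeds: Set[Pair], tau: float = 0.8) -> Set[Pair]:
    """One hypothetical refinement round: filter weak seeds, then add confident new ones."""
    # 1) Constraint step: drop existing pairs whose current similarity fell below tau.
    kept = {(i, j) for (i, j) in seeds if sim[i][j] >= tau}
    used_src = {i for i, _ in kept}
    used_tgt = {j for _, j in kept}
    free_src = [i for i in range(len(sim)) if i not in used_src]
    free_tgt = [j for j in range(len(sim[0])) if j not in used_tgt]
    if not free_src or not free_tgt:
        return kept
    # 2) Expansion step: mutual nearest neighbours among unaligned entities, above tau.
    best_tgt = {i: max(free_tgt, key=lambda j: sim[i][j]) for i in free_src}
    best_src = {j: max(free_src, key=lambda i: sim[i][j]) for j in free_tgt}
    new = {(i, j) for i, j in best_tgt.items() if best_src[j] == i and sim[i][j] >= tau}
    return kept | new


# Toy demo: round 2 uses a made-up, re-estimated similarity matrix.
sim_round1 = [[0.95, 0.1, 0.2], [0.1, 0.85, 0.3], [0.2, 0.3, 0.6]]
sim_round2 = [[0.9, 0.1, 0.2], [0.1, 0.5, 0.3], [0.2, 0.3, 0.88]]
seeds = expand_round(sim_round1, set())
print(sorted(seeds))
seeds = expand_round(sim_round2, seeds)
print(sorted(seeds))
```

In round 1, pair (2, 2) is rejected because its similarity of 0.6 is below `tau`. In round 2, pair (1, 1) is dropped after its similarity falls to 0.5, and (2, 2) is admitted at 0.88. Without the first step, the weakened pair would remain in the seed pool and keep supervising later rounds, which is the error propagation that UMEAD(-il) exposes.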

For UMEAD(-h), removing the hyperbolic space embedding leads to consistent performance declines across all datasets, with the largest drop observed on FB15K-YAGO15K, where Hits@1 decreases by 12.48%. This result supports the critical role of hyperbolic geometry in modeling hierarchical structures: equipment knowledge graphs often exhibit tree-like, multi-level dependencies that are difficult to represent effectively in Euclidean space. Beyond this quantitative evidence, the benefit of the dual-space embedding stems from the geometric and representational complementarity of the two spaces. Euclidean space preserves local neighborhood similarity and enables fine-grained relational learning, but it distorts large hierarchical or tree-like patterns. Hyperbolic space, whose volume grows exponentially with distance from the origin, can arrange entities at different hierarchical depths with minimal overlap while preserving global consistency. By jointly optimizing embeddings in both geometries, UMEAD captures fine-grained local regularities and coarse-grained hierarchical dependencies simultaneously. This complementary design not only mitigates structural information loss but also stabilizes alignment under incomplete or noisy multimodal conditions, an advantage that single-space approaches lack.
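The claim that hyperbolic space offers more room for deep hierarchies can be checked directly with the standard Poincaré-ball distance, d(u, v) = arcosh(1 + 2‖u − v‖² / ((1 − ‖u‖²)(1 − ‖v‖²))). The sketch below assumes the Poincaré-ball model with curvature −1; the hyperbolic model and curvature actually used by UMEAD are not restated in this section.

```python
import math
from typing import Sequence


def _sq_norm(x: Sequence[float]) -> float:
    return sum(a * a for a in x)


def euclidean_distance(u: Sequence[float], v: Sequence[float]) -> float:
    return math.sqrt(_sq_norm([a - b for a, b in zip(u, v)]))


def poincare_distance(u: Sequence[float], v: Sequence[float]) -> float:
    """Geodesic distance in the Poincare ball of curvature -1."""
    nu, nv = _sq_norm(u), _sq_norm(v)
    if nu >= 1.0 or nv >= 1.0:
        raise ValueError("points must lie strictly inside the unit ball")
    diff = _sq_norm([a - b for a, b in zip(u, v)])
    return math.acosh(1.0 + 2.0 * diff / ((1.0 - nu) * (1.0 - nv)))
```

Two pairs with the same Euclidean gap of 0.1 illustrate the effect: between (0, 0) and (0.1, 0) the hyperbolic distance is about 0.20, whereas between (0.8, 0) and (0.9, 0) it is about 0.75. Points near the boundary, which typically host deeper levels of a hierarchy, therefore remain well separated even when their Euclidean coordinates are close.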

In summary, the ablation results confirm that each component of UMEAD contributes indispensably to overall performance. Pseudo-seed generation (including multimodal fusion) provides strong initial alignment cues, the iterative constraint mechanism enhances reliability through progressive filtering, and the dual-space embedding with hyperbolic geometry preserves global hierarchical consistency. Together, these modules enable UMEAD to achieve consistently superior performance across heterogeneous multimodal datasets.

Computational cost and failure analysis. To provide additional practical context, we analyze the computational efficiency and qualitative failure patterns of UMEAD and its ablated variants. All experiments were conducted on a platform with two NVIDIA RTX 3090 GPUs (as detailed in Table 5). On average, the complete UMEAD model required approximately 1.2× the training time of the simplest variant, UMEAD(-h), primarily due to the additional optimization in hyperbolic space and the iterative constraint mechanism. Parameter counts ranged from 38.2M for UMEAD(-h) to 46.1M for the complete model, indicating that the observed performance gains stem mainly from architectural design rather than increased model size.

From a qualitative perspective, removing the visual modality (UMEAD(-v)) most severely affects entity classes with distinctive appearance patterns, such as surface ships and aircraft, where visual structural cues play a crucial role in disambiguation. In contrast, removing semantic information (UMEAD(-se)) primarily impacts entities that look similar but differ in operational function, such as electronic equipment. This observation highlights the complementary strengths of the two modalities: visual cues enhance local discriminability, whereas textual semantics contribute functional and contextual differentiation. These insights further illustrate why adaptive fusion and multimodal integration are essential for reliable alignment in complex equipment knowledge graphs.

3.7. Qualitative Case Analysis

To further understand the behavior of UMEAD beyond overall quantitative metrics, we conducted a case-level analysis of representative best and worst alignment results. The goal is to illustrate in which situations the model performs reliably and where it still encounters difficulties.

Selection and evaluation. For each dataset, we selected several aligned entity pairs from the test set with the highest and lowest final similarity scores. The high-confidence pairs represent cases where UMEAD achieved correct and stable alignment, while the low-confidence pairs correspond to mismatches or uncertain predictions. Each case is analyzed according to its available modality combinations (structure, relation, attribute, text, or visual embeddings) and its neighborhood consistency in the knowledge graph.
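The selection step can be expressed as a short sketch, assuming each predicted pair carries its final similarity score and the set of modalities available for it. The `Case` record and the `select_cases` helper are hypothetical; grouping the selected cases by their `modalities` field supports the modality-combination analysis described in the observations.

```python
from typing import FrozenSet, List, NamedTuple, Tuple


class Case(NamedTuple):
    source: str                  # source-graph entity identifier
    target: str                  # predicted target-graph entity identifier
    score: float                 # final similarity assigned by the model
    modalities: FrozenSet[str]   # modalities available for this pair, e.g. {"text", "visual"}


def select_cases(cases: List[Case], n: int) -> Tuple[List[Case], List[Case]]:
    """Return the n highest-scoring pairs (best first) and the n lowest-scoring (worst first)."""
    best = sorted(cases, key=lambda c: c.score, reverse=True)[:n]
    worst = sorted(cases, key=lambda c: c.score)[:n]
    return best, worst
```

If n exceeds half the number of cases, the two lists overlap, so in practice n should be small relative to the test set.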

Observations. The analysis reveals several consistent patterns. High-confidence (best) cases usually occur when: (1) multiple modalities provide mutually consistent evidence (e.g., structural and relational embeddings reinforce each other); (2) entities have dense graph connections that yield reliable contextual aggregation; or (3) pseudo-seeds from similar categories help guide the alignment process. In contrast, low-confidence (worst) cases often arise when: (1) some modalities are missing or incomplete, leading to insufficient cross-modal signals; (2) attributes or relations are sparsely connected, causing structural ambiguity; or (3) pseudo-seeds contain residual noise that misleads the iterative alignment expansion.

Insights and implications. These qualitative findings align with the quantitative results reported earlier: the model performs most robustly when multimodal signals are complementary and structurally consistent, but its reliability decreases under sparse or noisy modality conditions. Such insights suggest two potential directions for improvement: (1) introducing uncertainty-aware fusion weights to down-weight unreliable modalities, and (2) enhancing the pseudo-seed refinement strategy to further suppress noise during iterative updates. Overall, this analysis provides a clearer understanding of UMEAD’s strengths and remaining challenges in complex multimodal alignment scenarios.

3.8. Hyperparameter Sensitivity Analysis

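To evaluate the robustness of UMEAD with respect to key hyperparameters, we conducted sensitivity experiments on the pseudo-seed fusion weight, the coefficient balancing the alignment and contrastive losses, the iteration similarity threshold, and the number of fusion layers.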

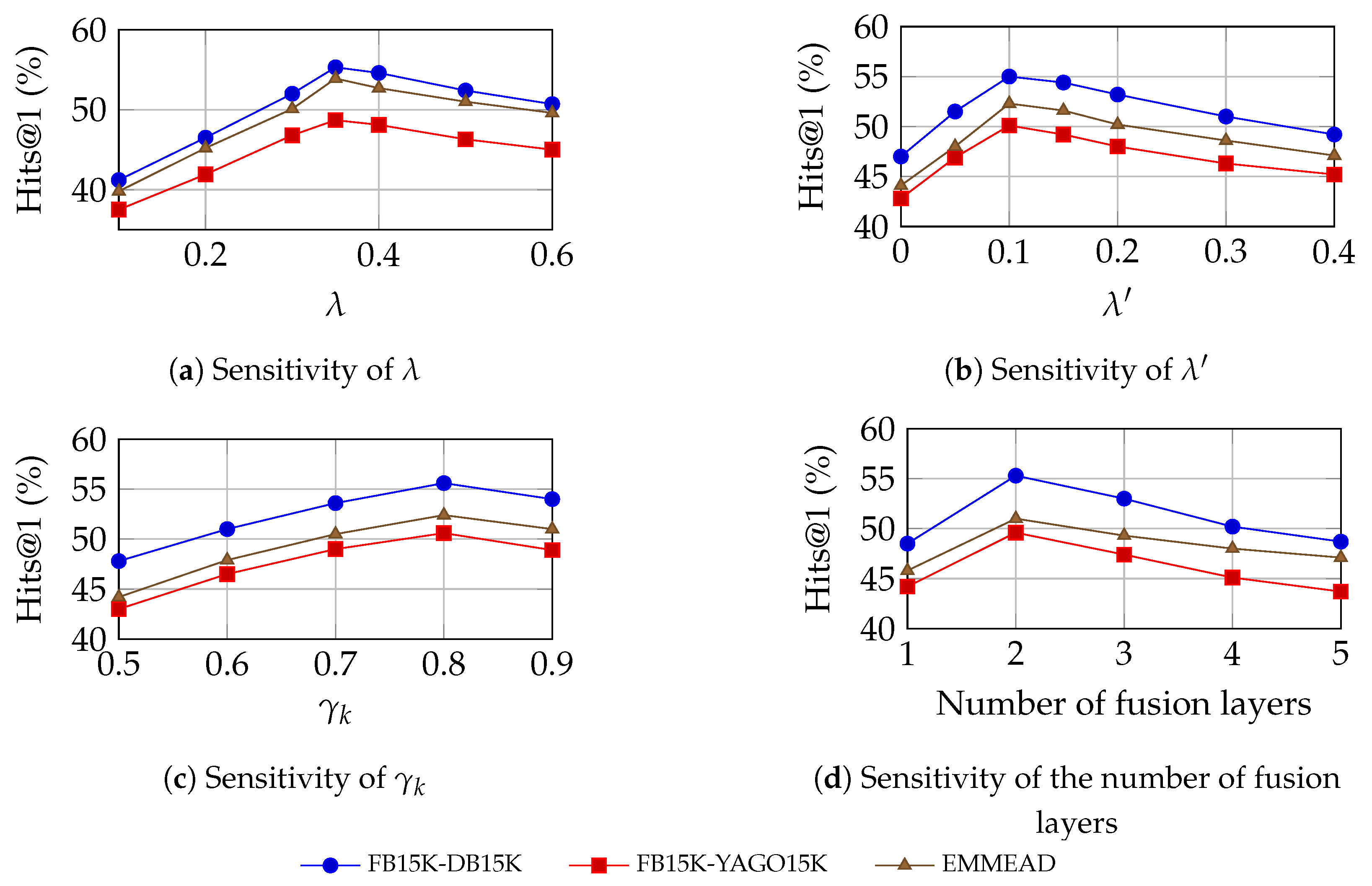

Figure 3 illustrates the variation of Hits@1 on the FB15K-DB15K, FB15K-YAGO15K, and EMMEAD datasets when each parameter is adjusted while the others are kept at their default values.

As shown in Figure 3, the performance of UMEAD remains stable across datasets over a broad range of hyperparameter settings. The best results are obtained with a loss-balancing coefficient of about 0.1, an iteration similarity threshold of about 0.8, and two fusion layers.

Across all datasets, the model shows consistent sensitivity trends. When the pseudo-seed fusion weight varies within [0.3, 0.4], Hits@1 remains stable, indicating that this weight has a moderate but consistent influence on alignment quality. For the loss-balancing coefficient, which controls the trade-off between the alignment loss and the contrastive loss, performance peaks around 0.1 on all datasets and declines slightly when the contrastive loss becomes dominant. The iteration similarity threshold performs best near 0.8, confirming that a stricter filtering criterion removes noisy candidate pairs while retaining sufficient positive matches. Regarding the number of fusion layers, two fully connected layers achieve the best trade-off between feature interaction and overfitting, whereas deeper networks slightly reduce performance.
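The role of the loss-balancing coefficient can be sketched as follows, assuming (as the discussion suggests but does not spell out) that it multiplies the contrastive term, written here as total = L_align + λ·L_cl with λ a hypothetical symbol. The margin-based alignment loss, the InfoNCE-style contrastive loss, the margin of 1.0, and the temperature of 0.1 are common choices used only for illustration and are not necessarily UMEAD's exact formulation.

```python
import math
from typing import Sequence


def margin_alignment_loss(pos_dist: Sequence[float], neg_dist: Sequence[float],
                          margin: float = 1.0) -> float:
    """Hinge loss pushing each seed pair closer than its corrupted (negative) counterpart."""
    terms = [max(0.0, margin + p - n) for p, n in zip(pos_dist, neg_dist)]
    return sum(terms) / len(terms)


def info_nce_loss(similarities: Sequence[float], positive: int,
                  temperature: float = 0.1) -> float:
    """Cross-entropy of picking the positive candidate among all candidates for one anchor."""
    logits = [s / temperature for s in similarities]
    top = max(logits)
    log_partition = top + math.log(sum(math.exp(z - top) for z in logits))
    return log_partition - logits[positive]


def total_loss(l_align: float, l_contrastive: float, lam: float = 0.1) -> float:
    """lam weights the contrastive term; larger values let it dominate the objective."""
    return l_align + lam * l_contrastive
```

Under this reading, a small coefficient such as 0.1 keeps the alignment signal primary while the contrastive term acts as a regularizer, which is consistent with the observed drop when the contrastive loss becomes dominant.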

Overall, these results demonstrate that UMEAD remains robust across datasets over a broad range of key hyperparameter settings. The chosen defaults described above, with two fusion layers, provide a stable and well-balanced configuration for multimodal entity alignment across heterogeneous datasets.
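The one-at-a-time protocol behind Figure 3 (vary one parameter while keeping the rest at their defaults) can be sketched as below. The parameter keys are hypothetical, and `evaluate` stands for a user-supplied function that trains UMEAD with a given configuration and returns Hits@1; nothing here reproduces the reported curves.

```python
from typing import Callable, Dict, List, Tuple

Config = Dict[str, float]
Curve = List[Tuple[float, float]]  # (parameter value, metric such as Hits@1)


def one_at_a_time_sweep(
    defaults: Config,
    grid: Dict[str, List[float]],
    evaluate: Callable[[Config], float],
) -> Dict[str, Curve]:
    """Vary one hyperparameter at a time while all others stay at their defaults."""
    curves: Dict[str, Curve] = {}
    for name, values in grid.items():
        curve: Curve = []
        for value in values:
            config = dict(defaults)  # copy, so every other parameter keeps its default
            config[name] = value
            curve.append((value, evaluate(config)))
        curves[name] = curve
    return curves


def best_value(curve: Curve) -> float:
    """Parameter value with the highest metric on a swept curve."""
    return max(curve, key=lambda point: point[1])[0]
```

For instance, with a dummy objective that peaks at a loss-balancing value of 0.1 and a threshold of 0.8, `best_value` recovers those two values from the swept curves. Because only one parameter moves at a time, this design reveals individual sensitivities but not interactions between parameters.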