Decoding Self-Imagined Emotions from EEG Signals Using Machine Learning for Affective BCI Systems

Abstract

1. Introduction

2. Materials and Methods

2.1. Self-Imagined Emotion Paradigms

2.2. EEG Acquisition

2.3. EEG Signal Preprocessing

2.4. Feature Extraction

2.4.1. Feature Parameters

2.4.2. EEG Channel Selections

2.5. Machine Learning Classification

2.6. Performance Evaluation

3. Results

3.1. Verification of EEG Channel Selection Pattern

3.2. Model Classification Evaluation

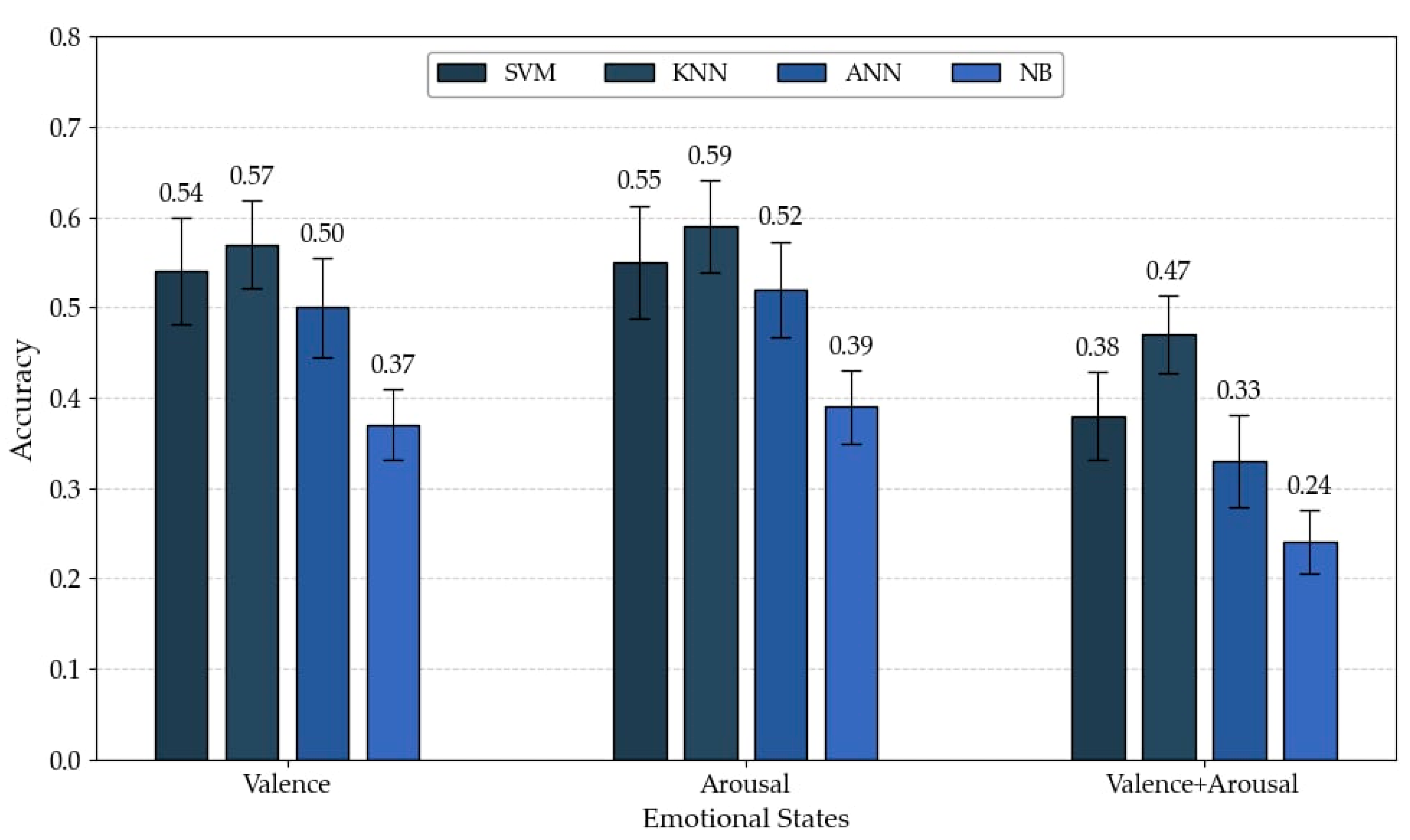

3.3. Model Generalization Across Subjects (LOSO Validation)

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| AC | Accuracy |

| AI | Artificial intelligence |

| ANN | Artificial neural network |

| ANS | Autonomic nervous system |

| BCI | Brain–computer interface |

| CNN | Convolutional neural network |

| CNS | Central nervous system |

| DEAP | Dataset for emotion analysis using physiological signals |

| ECG | Electrocardiogram |

| EDA | Electrodermal activity |

| EEG | Electroencephalogram |

| ERP | Event-related potential |

| FN | False negative |

| FP | False positive |

| FFT | Fast Fourier transform |

| GSR | Galvanic skin response |

| HMM-MAR | Hidden Markov model with multivariate autoregressive parameters |

| HCI | Human–computer interaction |

| IAPS | International Affective Picture System |

| ICA | Independent component analysis |

| IM | Independent modulator |

| KNN | K-nearest neighbor |

| LOSO | Leave-one-subject-out |

| ML | Machine learning |

| MLP | Multilayer perceptron |

| NB | Naive Bayes |

| PSD | Power spectral density |

| RF | Random forest |

| STFT | Short-time Fourier transform |

| SMOTE | Synthetic minority oversampling technique |

| SVM | Support vector machine |

| TN | True negative |

| TP | True positive |

Appendix A

Subject-Wise Classification Performance Under LOSO Validation

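| Subject | Valence: SVM | Valence: KNN | Valence: ANN | Valence: NB | Arousal: SVM | Arousal: KNN | Arousal: ANN | Arousal: NB | Valence and Arousal: SVM | Valence and Arousal: KNN | Valence and Arousal: ANN | Valence and Arousal: NB |

|---|---|---|---|---|---|---|---|---|---|---|---|---|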

| 1 | 0.50 | 0.51 | 0.47 | 0.42 | 0.53 | 0.57 | 0.52 | 0.43 | 0.36 | 0.39 | 0.32 | 0.27 |

| 2 | 0.49 | 0.49 | 0.50 | 0.41 | 0.49 | 0.48 | 0.49 | 0.43 | 0.33 | 0.31 | 0.28 | 0.26 |

| 3 | 0.50 | 0.49 | 0.45 | 0.25 | 0.50 | 0.55 | 0.55 | 0.31 | 0.35 | 0.41 | 0.38 | 0.17 |

| 4 | 0.53 | 0.55 | 0.48 | 0.41 | 0.56 | 0.60 | 0.56 | 0.40 | 0.37 | 0.48 | 0.29 | 0.24 |

| 5 | 0.61 | 0.63 | 0.56 | 0.44 | 0.59 | 0.61 | 0.57 | 0.44 | 0.40 | 0.48 | 0.39 | 0.29 |

| 6 | 0.53 | 0.57 | 0.52 | 0.32 | 0.53 | 0.64 | 0.54 | 0.32 | 0.35 | 0.48 | 0.31 | 0.22 |

| 7 | 0.53 | 0.59 | 0.54 | 0.40 | 0.55 | 0.62 | 0.54 | 0.37 | 0.36 | 0.49 | 0.27 | 0.18 |

| 8 | 0.66 | 0.65 | 0.55 | 0.41 | 0.65 | 0.69 | 0.55 | 0.46 | 0.52 | 0.58 | 0.39 | 0.29 |

| 9 | 0.62 | 0.59 | 0.56 | 0.40 | 0.59 | 0.62 | 0.59 | 0.44 | 0.45 | 0.52 | 0.39 | 0.26 |

| 10 | 0.54 | 0.65 | 0.50 | 0.27 | 0.55 | 0.62 | 0.51 | 0.30 | 0.34 | 0.56 | 0.23 | 0.18 |

| 11 | 0.61 | 0.63 | 0.57 | 0.39 | 0.62 | 0.65 | 0.58 | 0.45 | 0.46 | 0.53 | 0.36 | 0.25 |

| 12 | 0.50 | 0.57 | 0.46 | 0.42 | 0.55 | 0.62 | 0.56 | 0.46 | 0.36 | 0.50 | 0.30 | 0.26 |

| 13 | 0.54 | 0.60 | 0.49 | 0.28 | 0.50 | 0.57 | 0.50 | 0.32 | 0.43 | 0.52 | 0.37 | 0.21 |

| 14 | 0.54 | 0.62 | 0.53 | 0.39 | 0.59 | 0.66 | 0.58 | 0.44 | 0.39 | 0.57 | 0.31 | 0.28 |

| 15 | 0.54 | 0.62 | 0.51 | 0.41 | 0.59 | 0.59 | 0.54 | 0.41 | 0.41 | 0.48 | 0.38 | 0.22 |

| 16 | 0.49 | 0.54 | 0.48 | 0.29 | 0.50 | 0.59 | 0.46 | 0.32 | 0.39 | 0.50 | 0.35 | 0.25 |

| 17 | 0.57 | 0.59 | 0.47 | 0.42 | 0.57 | 0.66 | 0.48 | 0.44 | 0.39 | 0.50 | 0.33 | 0.24 |

| 18 | 0.54 | 0.52 | 0.46 | 0.34 | 0.50 | 0.50 | 0.47 | 0.41 | 0.35 | 0.38 | 0.30 | 0.21 |

| 19 | 0.50 | 0.47 | 0.52 | 0.38 | 0.48 | 0.49 | 0.47 | 0.38 | 0.33 | 0.34 | 0.31 | 0.25 |

| 20 | 0.47 | 0.49 | 0.42 | 0.33 | 0.48 | 0.47 | 0.44 | 0.33 | 0.32 | 0.35 | 0.31 | 0.22 |

| Average | 0.54 | 0.57 | 0.50 | 0.37 | 0.55 | 0.59 | 0.52 | 0.39 | 0.38 | 0.47 | 0.33 | 0.24 |
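In leave-one-subject-out (LOSO) validation, each subject's trials serve once as the test set while the classifier is trained on all other subjects. The minimal sketch below implements that loop with scikit-learn's `LeaveOneGroupOut`. It assumes per-trial feature vectors `X`, labels `y`, and subject IDs `subjects`, all hypothetical names. The random data only stands in for real EEG features, and the scaler-plus-KNN pipeline is an illustrative choice, not the study's exact configuration.

```python
import numpy as np
from sklearn.base import clone
from sklearn.metrics import accuracy_score
from sklearn.model_selection import LeaveOneGroupOut
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler


def loso_accuracy(model, X, y, subjects):
    """Return {held-out subject: accuracy} under leave-one-subject-out validation."""
    scores = {}
    for train_idx, test_idx in LeaveOneGroupOut().split(X, y, groups=subjects):
        # Clone so each fold starts unfitted; the scaler in the pipeline is then
        # fitted on training subjects only, so the held-out subject never leaks in.
        fitted = clone(model).fit(X[train_idx], y[train_idx])
        held_out = subjects[test_idx][0]
        scores[held_out] = accuracy_score(y[test_idx], fitted.predict(X[test_idx]))
    return scores


# Synthetic stand-in: 20 subjects x 30 trials x 8 features, random binary labels.
rng = np.random.default_rng(0)
subjects = np.repeat(np.arange(1, 21), 30)
X = rng.normal(size=(subjects.size, 8))
y = rng.integers(0, 2, size=subjects.size)

model = make_pipeline(StandardScaler(),
                      KNeighborsClassifier(n_neighbors=3, weights="distance"))
scores = loso_accuracy(model, X, y, subjects)
print(f"mean LOSO accuracy: {np.mean(list(scores.values())):.2f}")
```

With random labels, the mean accuracy should hover near chance, which makes a quick check that no test-subject information reaches training.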

References

- Hamzah, H.A.; Abdalla, K.K. EEG-based emotion recognition systems; Comprehensive study. Heliyon 2024, 10, e31485.

- Torres, E.P.; Torres, E.A.; Hernández-Álvarez, M.; Yoo, S.G. EEG-Based BCI Emotion Recognition: A Survey. Sensors 2020, 20, 5083.

- Bhise, P.R.; Kulkarni, S.B.; Aldhaheri, T.A. Brain Computer Interface based EEG for Emotion Recognition System: A Systematic Review. In Proceedings of the 2020 2nd International Conference on Innovative Mechanisms for Industry Applications (ICIMIA), Bangalore, India, 5–7 March 2020; pp. 327–334.

- Samal, P.; Hashmi, M.F. Role of machine learning and deep learning techniques in EEG-based BCI emotion recognition system: A review. Artif. Intell. Rev. 2024, 57, 50.

- Perur, S.D.; Kenchannava, H.H. Enhancing Mental Well-Being Through OpenBCI: An Intelligent Approach to Stress Measurement. In Proceedings of the Third International Conference on Cognitive and Intelligent Computing, ICCIC 2023, Hyderabad, India, 8–9 December 2023; Cognitive Science and Technology. Springer: Singapore, 2025; Volume 1.

- Ferrada, F.; Camarinha-Matos, L.M. Emotions in Human-AI Collaboration. In Navigating Unpredictability: Collaborative Networks in Non-linear Worlds, Proceedings of the PRO-VE 2024, Albi, France, 28–29 October 2024; IFIP Advances in Information and Communication Technology. Springer: Cham, Switzerland, 2024; Volume 726, pp. 101–117.

- Kolomaznik, M.; Petrik, V.; Slama, M.; Jurik, V. The role of socio-emotional attributes in enhancing human-AI collaboration. Front. Psychol. 2024, 15, 1369957.

- Pervez, F.; Shoukat, M.; Usama, M.; Sandhu, M.; Latif, S.; Qadir, J. Affective Computing and the Road to an Emotionally Intelligent Metaverse. IEEE Open J. Comput. Soc. 2024, 5, 195–214.

- Zhu, H.Y.; Hieu, N.Q.; Hoang, D.T.; Nguyen, D.N.; Lin, C.-T. A Human-Centric Metaverse Enabled by Brain-Computer Interface: A Survey. IEEE Commun. Surv. Tutorials 2024, 26, 2120–2145.

- Lee, C.-H.; Huang, P.-H.; Lee, T.-H.; Chen, P.-H. Affective Communication: Designing Semantic Communication for Affective Computing. In Proceedings of the 33rd Wireless and Optical Communications Conference (WOCC), Hsinchu, Taiwan, 25–26 October 2024; pp. 35–39.

- Faria, D.R.; Weinberg, A.I.; Ayrosa, P.P. Multi-modal Affective Communication Analysis: Fusing Speech Emotion and Text Sentiment Using Machine Learning. Appl. Sci. 2024, 14, 6631.

- Emanuel, A.; Eldar, E. Emotions as computations. Neurosci. Biobehav. Rev. 2023, 144, 104977.

- Alhalaseh, R.; Alasasfeh, S. Machine-Learning-Based Emotion Recognition System Using EEG Signals. Computers 2020, 9, 95.

- Kawala-Sterniuk, A.; Browarska, N.; Al-Bakri, A.; Pelc, M.; Zygarlicki, J.; Sidikova, M.; Martinek, R.; Gorzelanczyk, E.J. Summary of over Fifty Years with Brain-Computer Interfaces—A Review. Brain Sci. 2021, 11, 43.

- Peksa, J.; Mamchur, D. State-of-the-Art on Brain-Computer Interface Technology. Sensors 2023, 23, 6001.

- Bano, K.S.; Bhuyan, P.; Ray, A. EEG-Based Brain Computer Interface for Emotion Recognition. In Proceedings of the 5th International Conference on Computational Intelligence and Networks (CINE), Bhubaneswar, India, 1–3 December 2022; pp. 1–6.

- Suhaimi, N.S.; Mountstephens, J.; Teo, J. EEG-Based Emotion Recognition: A State-of-the-Art Review of Current Trends and Opportunities. Comput. Intell. Neurosci. 2020, 2020, 8875426.

- Li, J. Optimal Modeling of College Students’ Mental Health Based on Brain-Computer Interface and Imaging Sensing. In Proceedings of the 5th International Conference on Intelligent Computing and Control Systems (ICICCS), Madurai, India, 6–8 May 2021; pp. 772–775.

- Beauchemin, N.; Charland, P.; Karran, A.; Boasen, J.; Tadson, B.; Sénécal, S.; Léger, P.M. Enhancing learning experiences: EEG-based passive BCI system adapts learning speed to cognitive load in real-time, with motivation as catalyst. Front. Hum. Neurosci. 2024, 18, 1416683.

- Semertzidis, N.; Vranic-Peters, M.; Andres, J.; Dwivedi, B.; Kulwe, Y.C.; Zambetta, F.; Mueller, F.F. Neo-Noumena: Augmenting Emotion Communication. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, New York, NY, USA, 25–30 April 2020; ACM: New York, NY, USA, 2020; pp. 1–13.

- Papanastasiou, G.; Drigas, A.; Skianis, C.; Lytras, M. Brain computer interface based applications for training and rehabilitation of students with neurodevelopmental disorder. A literature review. Heliyon 2020, 6, e04250.

- Alimardani, M.; Hiraki, K. Passive Brain-Computer Interfaces for Enhanced Human-Robot Interaction. Front. Robot. AI 2020, 7, 125.

- Wu, M.; Teng, W.; Fan, C.; Pei, S.; Li, P.; Lv, Z. An Investigation of Olfactory-Enhanced Video on EEG-Based Emotion Recognition. IEEE Trans. Neural Syst. Rehabil. Eng. 2023, 31, 1602–1613.

- Wang, Q.; Wang, M.; Yang, Y.; Zhang, X. Multi-modal emotion recognition using EEG and speech signals. Comput. Biol. Med. 2022, 149, 105907.

- Zhou, T.H.; Liang, W.; Liu, H.; Wang, L.; Ryu, K.H.; Nam, K.W. EEG Emotion Recognition Applied to the Effect Analysis of Music on Emotion Changes in Psychological Healthcare. Int. J. Environ. Res. Public Health 2022, 20, 378.

- Zaidi, S.R.; Khan, N.A.; Hasan, M.A. Bridging Neuroscience and Machine Learning: A Gender-Based Electroencephalogram Framework for Guilt Emotion Identification. Sensors 2025, 25, 1222.

- Er, M.B.; Çiğ, H.; Aydilek, İ.B. A new approach to recognition of human emotions using brain signals and music stimuli. Appl. Acoust. 2021, 175, 107840.

- Huang, H.; Xie, Q.; Pan, J.; He, Y.; Wen, Z.; Yu, R.; Li, Y. An EEG-Based Brain Computer Interface for Emotion Recognition and Its Application in Patients with Disorder of Consciousness. IEEE Trans. Affect. Comput. 2021, 12, 832–842.

- Polo, E.M.; Farabbi, A.; Mollura, M.; Paglialonga, A.; Mainardi, L.; Barbieri, R. Comparative Assessment of Physiological Responses to Emotional Elicitation by Auditory and Visual Stimuli. IEEE J. Transl. Eng. Health Med. 2024, 12, 171–181.

- Lian, Y.; Zhu, M.; Sun, Z.; Liu, J.; Hou, Y. Emotion recognition based on EEG signals and face images. Biomed. Signal Process. Control 2025, 103, 107462.

- Mutawa, A.M.; Hassouneh, A. Multi-modal Real-Time Patient Emotion Recognition System using Facial Expressions and Brain EEG Signals based on Machine Learning and Log-Sync Methods. Biomed. Signal Process. Control 2024, 91, 105942.

- Chouchou, F.; Perchet, C.; Garcia-Larrea, L. EEG changes reflecting pain: Is alpha suppression better than gamma enhancement? Neurophysiol. Clin. 2021, 51, 209–218.

- Mathew, J.; Perez, T.M.; Adhia, D.B.; Ridder, D.D.; Main, R. Is There a Difference in EEG Characteristics in Acute, Chronic, and Experimentally Induced Musculoskeletal Pain States? A Systematic Review. Clin. EEG Neurosci. 2022, 55, 101–120.

- Feng, L.; Li, H.; Cui, H.; Xie, X.; Xu, S.; Hu, Y. Low Back Pain Assessment Based on Alpha Oscillation Changes in Spontaneous Electroencephalogram (EEG). Neural Plast. 2021, 2021, 8537437.

- Wang, L.; Xiao, Y.; Urman, R.D.; Lin, Y. Cold pressor pain assessment based on EEG power spectrum. SN Appl. Sci. 2020, 2, 1976.

- Kothe, C.A.; Makeig, S.; Onton, J.A. Emotion Recognition from EEG during Self-Paced Emotional Imagery. In Proceedings of the 2013 Humaine Association Conference on Affective Computing and Intelligent Interaction, ACII 2013, Geneva, Switzerland, 2–5 September 2013; pp. 855–858.

- Hsu, S.H.; Lin, Y.; Onton, J.; Jung, T.P.; Makeig, S. Unsupervised learning of brain state dynamics during emotion imagination using high-density EEG. NeuroImage 2022, 249, 118873.

- Ji, Y.; Dong, S.Y. Deep learning-based self-induced emotion recognition using EEG. Front. Neurosci. 2022, 16, 985709.

- Proverbio, A.M.; Pischedda, F. Measuring brain potentials of imagination linked to physiological needs and motivational states. Front. Hum. Neurosci. 2023, 17, 1146789.

- Proverbio, A.M.; Cesati, F. Neural correlates of recalled sadness, joy, and fear states: A source reconstruction EEG study. Front. Psychiatry 2024, 15, 1357770.

- Proverbio, A.M.; Tacchini, M.; Jiang, K. What do you have in mind? ERP markers of visual and auditory imagery. Brain Cogn. 2023, 166, 105954.

- Piţur, S.; Tufar, I.; Miu, A.C. Auditory imagery and poetry-elicited emotions: A study on the hard of hearing. Front. Psychol. 2025, 16, 1509793.

- Kilmarx, J.; Tashev, I.; Millán, J.D.R.; Sulzer, J.; Lewis-Peacock, J. Evaluating the Feasibility of Visual Imagery for an EEG-Based Brain–Computer Interface. IEEE Trans. Neural Syst. Rehabil. Eng. 2024, 32, 2209–2219.

- Bainbridge, W.A.; Hall, E.H.; Baker, C.I. Distinct Representational Structure and Localization for Visual Encoding and Recall during Visual Imagery. Cereb. Cortex 2021, 31, 1898–1913.

- Russell, J.A. A circumplex model of affect. J. Pers. Soc. Psychol. 1980, 39, 1161–1178.

- Delorme, A.; Makeig, S. EEGLAB: An open-source toolbox for analysis of EEG dynamics. J. Neurosci. Methods 2004, 134, 9–21.

- Qu, G.; Wen, S.; Bi, J.; Liu, J.; Wu, Q.; Han, L. EEG Emotion Recognition of Different Brain Regions Based on 2DCNN-DGRU. In Proceedings of the 2023 IEEE 13th International Conference on CYBER Technology in Automation, Control, and Intelligent Systems (CYBER), Qinhuangdao, China, 10–14 July 2023; pp. 692–697.

- Duhamel, P.; Vetterli, M. Fast Fourier transforms: A tutorial review and a state of the art. Signal Process. 1990, 19, 259–299.

- Youngworth, R.N.; Gallagher, B.B.; Stamper, B.L. An overview of Power Spectral Density (PSD) calculations. In Optical Measurement Systems for Industrial Inspection IV; SPIE: Bellingham, WA, USA, 2005; Volume 5869, p. 58690U.

- Gannouni, S.; Aledaily, A.; Belwafi, K.; Aboalsamh, H. Emotion detection using electroencephalography signals and a zero-time windowing-based epoch estimation and relevant electrode identification. Sci. Rep. 2021, 11, 7071.

- Zheng, W.-L.; Lu, B.-L. Investigating critical frequency bands and channels for EEG-based emotion recognition with deep neural networks. IEEE Trans. Auton. Ment. Dev. 2015, 7, 162–175.

- Mishra, S.; Srinivasan, N.; Tiwary, U.S. Dynamic Functional Connectivity of Emotion Processing in Beta Band with Naturalistic Emotion Stimuli. Brain Sci. 2022, 12, 1106.

- Aljribi, K.A. A comparative analysis of frequency bands in EEG-based emotion recognition system. In Proceedings of the 7th International Conference on Engineering & MIS (ICEMIS 2021), Almaty, Kazakhstan, 11–13 October 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 1–7.

- Ali, P.J.M.; Faraj, R.H. Data Normalization and Standardization: A Technical Report. Mach. Learn. Rep. 2014, 1, 1–6.

- Aggarwal, S.; Chugh, N. Review of Machine Learning Techniques for EEG Based Brain Computer Interface. Arch. Comput. Methods Eng. 2022, 29, 3001–3020.

- Rasheed, S. A Review of the Role of Machine Learning Techniques towards Brain—Computer Interface Applications. Mach. Learn. Knowl. Extr. 2021, 3, 835–862.

- Brookshire, G.; Kasper, J.; Blauch, N.M.; Wu, Y.C.; Glatt, R.; Merrill, D.A.; Gerrol, S.; Yoder, K.J.; Quirk, C.; Lucero, C. Data Leakage in Deep Learning Studies of Translational EEG. Front. Neurosci. 2024, 18, 1373515.

- Ou, Y.; Sun, S.; Gan, H.; Zhou, R.; Yang, Z. An improved self-supervised learning for EEG classification. Math. Biosci. Eng. 2022, 19, 6907–6922.

- Sujbert, L.; Orosz, G. FFT-Based Spectrum Analysis in the Case of Data Loss. IEEE Trans. Instrum. Meas. 2016, 65, 968–976.

- Abdulaal, M.J.; Casson, A.J.; Gaydecki, P. Critical Analysis of Cross-Validation Methods and Their Impact on Neural Networks Performance Inflation in Electroencephalography Analysis. IEEE Can. J. Electr. Comput. Eng. 2021, 44, 75–82.

- Knyazev, G.G. EEG Correlates of Self-Referential Processing. Front. Hum. Neurosci. 2013, 7, 264.

- Wei, X.; Zhang, J.; Zhang, J.; Li, Z.; Li, Q.; Wu, J.; Yang, J.; Zhang, Z. Investigating the Human Brain’s Integration of Internal and External Reference Frames: The Role of the Alpha and Beta Bands in a Modified Temporal Order Judgment Task. Hum. Brain Mapp. 2025, 46, e70196.

| Author | Task | Trigger | Algorithm | Outputs |

|---|---|---|---|---|

| Kothe et al. [36] | Closed-eye, imagine an emotional scenario | Voice-guided | ICA + Machine Learning (ML) | |

| Hsu et al. [37] | Closed-eye, imagine an emotional scenario or recall an experience | Voice-guided | Unsupervised Learning on High-density EEG | |

| Ji and Dong [38] | Closed-eye, imagine an emotional scenario or recall an experience | Voice-guided | Deep Learning | |

| Proverbio and Pischedda [39] | Mental imagery of internal bodily sensations and motivational needs | Pictograms | ERP analysis (P300, N400 components) | |

| Proverbio and Cesati [40] | Silent recall of emotional states | Pictograms | Source Reconstruction (sLORETA) | |

| Emotion | Valence Level | Arousal Level |

|---|---|---|

| Surprise | High (Positive) | High (Active) |

| Excitement | High (Positive) | High (Active) |

| Happiness | High (Positive) | High (Active) |

| Pleasantness | High (Positive) | Low (Calm) |

| Relaxation | High (Positive) | Low (Calm) |

| Calmness | High (Positive) | Low (Calm) |

| Boredom | Low (Negative) | Low (Calm) |

| Depression | Low (Negative) | Low (Calm) |

| Sadness | Low (Negative) | Low (Calm) |

| Disgust | Low (Negative) | High (Active) |

| Anger | Low (Negative) | High (Active) |

| Fear | Low (Negative) | High (Active) |
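Each imagined emotion falls in one valence–arousal quadrant. This is presumably where the HVHA, HVLA, LVHA, and LVLA labels in the four-class results come from. The small lookup sketch below shows how one table row can yield both the two-class and the four-class targets. The `labels_for` helper and the way it builds quadrant codes are hypothetical. The Neutral class that appears in the per-class results is not in this table, so it is left out.

```python
# Valence and arousal levels per imagined emotion, copied from the table above.
EMOTION_LEVELS = {
    "Surprise": ("High", "High"),
    "Excitement": ("High", "High"),
    "Happiness": ("High", "High"),
    "Pleasantness": ("High", "Low"),
    "Relaxation": ("High", "Low"),
    "Calmness": ("High", "Low"),
    "Boredom": ("Low", "Low"),
    "Depression": ("Low", "Low"),
    "Sadness": ("Low", "Low"),
    "Disgust": ("Low", "High"),
    "Anger": ("Low", "High"),
    "Fear": ("Low", "High"),
}


def labels_for(emotion):
    """Return (two-class valence, two-class arousal, four-class quadrant) labels."""
    valence, arousal = EMOTION_LEVELS[emotion]
    # Quadrant code from first letters, e.g. "HVLA" = high valence, low arousal.
    quadrant = f"{valence[0]}V{arousal[0]}A"
    return valence, arousal, quadrant


for emotion in EMOTION_LEVELS:
    print(emotion, labels_for(emotion))
```

Each quadrant holds exactly three emotions, so the four-class task is balanced at the level of imagined emotions.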

| Frequency Analysis | Feature Parameters | EEG Channel Selections | Model Classifiers | Evaluation Metrics |

|---|---|---|---|---|

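The framework starts with frequency analysis, and the channel-selection results compare FFT-based and PSD-based features. For illustration only, the sketch below computes mean power in each band for a single channel in both ways, using NumPy's `rfft` and SciPy's `welch`. The sampling rate, the band edges, and the 2 s Welch segment length are assumptions. The source does not report them here.

```python
import numpy as np
from scipy.signal import welch

# Assumed band edges in Hz (lower bound inclusive, upper bound exclusive).
BANDS = {
    "delta": (1.0, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
    "gamma": (30.0, 45.0),
}


def fft_band_power(signal, fs):
    """Mean squared FFT magnitude inside each band for a 1-D signal."""
    power = np.abs(np.fft.rfft(signal)) ** 2
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fs)
    return {name: float(power[(freqs >= lo) & (freqs < hi)].mean())
            for name, (lo, hi) in BANDS.items()}


def psd_band_power(signal, fs, nperseg=None):
    """Mean Welch PSD inside each band; default segments are 2 s long (assumption)."""
    nperseg = nperseg or min(signal.size, int(2 * fs))
    freqs, psd = welch(signal, fs=fs, nperseg=nperseg)
    return {name: float(psd[(freqs >= lo) & (freqs < hi)].mean())
            for name, (lo, hi) in BANDS.items()}


fs = 250.0                        # hypothetical sampling rate (Hz)
t = np.arange(int(4 * fs)) / fs   # one 4 s epoch
x = np.sin(2 * np.pi * 10.0 * t)  # 10 Hz tone, inside the assumed alpha band

fft_p = fft_band_power(x, fs)
psd_p = psd_band_power(x, fs)
print(max(fft_p, key=fft_p.get), max(psd_p, key=psd_p.get))
```

Welch's method averages periodograms over overlapping, windowed segments. It therefore trades frequency resolution for a lower-variance estimate than a single FFT of the whole epoch.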

| Model | Hyperparameters |

|---|---|

| SVM | C = 10, γ = 0.1, kernel = ‘rbf’ |

| KNN | n_neighbors = 3, weights = ‘distance’, metric = ‘euclidean’ |

| ANN | activation = ‘relu’, alpha = 0.001, hidden_layer_sizes = (50, 50), learning_rate_init = 0.01 |
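The hyperparameter names in the table match scikit-learn's `SVC`, `KNeighborsClassifier`, and `MLPClassifier`. The sketch below builds the four classifiers with those settings. Three pieces are assumptions that the table does not state: standardizing features before each model, choosing `GaussianNB` for NB (the table gives no NB settings), and setting `max_iter=500` for the ANN. The synthetic data only shows that the models fit and score end to end.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_val_score
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC


def build_models(random_state=0):
    """Classifiers with the tabulated hyperparameters; scaling and NB variant are assumptions."""
    return {
        "SVM": make_pipeline(StandardScaler(), SVC(C=10, gamma=0.1, kernel="rbf")),
        "KNN": make_pipeline(
            StandardScaler(),
            KNeighborsClassifier(n_neighbors=3, weights="distance", metric="euclidean"),
        ),
        "ANN": make_pipeline(
            StandardScaler(),
            MLPClassifier(
                activation="relu",
                alpha=0.001,
                hidden_layer_sizes=(50, 50),
                learning_rate_init=0.01,
                max_iter=500,  # assumption: not listed in the table
                random_state=random_state,
            ),
        ),
        # Assumption: Gaussian naive Bayes, since the table lists no NB settings.
        "NB": make_pipeline(StandardScaler(), GaussianNB()),
    }


# Synthetic features only; these scores say nothing about EEG performance.
X, y = make_classification(n_samples=200, n_features=10, random_state=0)
models = build_models()
cv_means = {name: cross_val_score(model, X, y, cv=5).mean()
            for name, model in models.items()}
for name, score in cv_means.items():
    print(f"{name}: {score:.2f}")
```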

| EEG Channel Selection Pattern | FFT: Max Accuracy | FFT: Mean ± SD | FFT: 95% CI | PSD: Max Accuracy | PSD: Mean ± SD | PSD: 95% CI |

|---|---|---|---|---|---|---|

| A1 | 0.86 | 0.66 ± 0.14 | [0.60–0.72] | 0.73 | 0.59 ± 0.10 | [0.55–0.63] |

| B1 | 0.79 | 0.59 ± 0.11 | [0.54–0.64] | 0.68 | 0.53 ± 0.09 | [0.49–0.57] |

| B2 | 0.79 | 0.59 ± 0.11 | [0.54–0.64] | 0.69 | 0.53 ± 0.09 | [0.49–0.57] |

| B3 | 0.77 | 0.55 ± 0.11 | [0.50–0.60] | 0.65 | 0.51 ± 0.09 | [0.47–0.55] |

| B4 | 0.72 | 0.51 ± 0.10 | [0.47–0.55] | 0.63 | 0.47 ± 0.08 | [0.43–0.51] |

| B5 | 0.62 | 0.47 ± 0.08 | [0.44–0.50] | 0.58 | 0.45 ± 0.07 | [0.42–0.48] |

| B6 | 0.66 | 0.50 ± 0.08 | [0.47–0.53] | 0.61 | 0.47 ± 0.08 | [0.44–0.50] |

| B7 | 0.59 | 0.45 ± 0.06 | [0.43–0.47] | 0.54 | 0.44 ± 0.06 | [0.42–0.46] |

| C1 | 0.82 | 0.62 ± 0.13 | [0.56–0.68] | 0.71 | 0.55 ± 0.10 | [0.51–0.59] |

| C2 | 0.81 | 0.59 ± 0.12 | [0.54–0.64] | 0.68 | 0.53 ± 0.10 | [0.49–0.57] |

| C3 | 0.79 | 0.59 ± 0.12 | [0.54–0.64] | 0.69 | 0.54 ± 0.09 | [0.50–0.58] |

| C4 | 0.81 | 0.59 ± 0.12 | [0.54–0.64] | 0.69 | 0.54 ± 0.09 | [0.50–0.58] |

| C5 | 0.81 | 0.64 ± 0.13 | [0.58–0.70] | 0.72 | 0.57 ± 0.10 | [0.53–0.61] |

| C6 | 0.82 | 0.62 ± 0.12 | [0.57–0.67] | 0.70 | 0.56 ± 0.09 | [0.52–0.60] |

| C7 | 0.80 | 0.64 ± 0.13 | [0.58–0.70] | 0.71 | 0.57 ± 0.10 | [0.53–0.61] |

| C8 | 0.79 | 0.60 ± 0.12 | [0.55–0.65] | 0.69 | 0.54 ± 0.09 | [0.50–0.58] |
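The source does not state how the 95% CI columns were computed. The sketch below assumes a normal-approximation interval around the mean accuracy, mean ± 1.96·SD/√n, with n = 20 taken from the number of subjects in Appendix A. The helper names are hypothetical.

```python
import math
import statistics


def normal_ci(mean, sd, n, z=1.96):
    """Normal-approximation CI for a mean: mean ± z * sd / sqrt(n)."""
    half_width = z * sd / math.sqrt(n)
    return mean - half_width, mean + half_width


def summarize(accuracies, z=1.96):
    """Mean, sample SD, and normal-approximation CI of per-subject accuracies."""
    mean = statistics.fmean(accuracies)
    sd = statistics.stdev(accuracies)
    return mean, sd, normal_ci(mean, sd, len(accuracies), z)


# Reported A1/FFT summary values, with n = 20 subjects assumed.
low, high = normal_ci(0.66, 0.14, 20)
print(f"[{low:.2f}–{high:.2f}]")
```

For the A1/FFT row, this gives [0.60–0.72], which matches the table. Rows such as B5 and B6, however, come out about 0.01 wider on each side. Treat the formula as a plausibility check on the reported intervals rather than as the authors' procedure.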

Average Accuracy Rate of Emotion Classification

| Model Features | Two-Class Valence: SVM | Two-Class Valence: KNN | Two-Class Valence: ANN | Two-Class Valence: NB | Two-Class Arousal: SVM | Two-Class Arousal: KNN | Two-Class Arousal: ANN | Two-Class Arousal: NB | Four-Class Valence–Arousal: SVM | Four-Class Valence–Arousal: KNN | Four-Class Valence–Arousal: ANN | Four-Class Valence–Arousal: NB |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| | 0.65 | 0.75 | 0.64 | 0.43 | 0.63 | 0.74 | 0.63 | 0.42 | 0.45 | 0.57 | 0.43 | 0.27 |

| | 0.70 | 0.80 | 0.70 | 0.42 | 0.73 | 0.80 | 0.69 | 0.46 | 0.51 | 0.65 | 0.45 | 0.28 |

| | 0.72 | 0.86 | 0.70 | 0.44 | 0.74 | 0.86 | 0.74 | 0.44 | 0.55 | 0.76 | 0.50 | 0.28 |

| | 0.72 | 0.80 | 0.69 | 0.44 | 0.73 | 0.82 | 0.71 | 0.41 | 0.54 | 0.69 | 0.47 | 0.27 |

| | 0.72 | 0.79 | 0.72 | 0.42 | 0.72 | 0.78 | 0.70 | 0.43 | 0.52 | 0.65 | 0.46 | 0.28 |

| | 0.76 | 0.78 | 0.70 | 0.43 | 0.74 | 0.79 | 0.70 | 0.47 | 0.53 | 0.64 | 0.46 | 0.29 |

| | 0.72 | 0.80 | 0.69 | 0.42 | 0.73 | 0.80 | 0.69 | 0.43 | 0.53 | 0.67 | 0.45 | 0.27 |

| | 0.74 | 0.79 | 0.69 | 0.45 | 0.74 | 0.79 | 0.71 | 0.45 | 0.54 | 0.67 | 0.46 | 0.31 |

| | 0.75 | 0.80 | 0.71 | 0.45 | 0.74 | 0.80 | 0.69 | 0.45 | 0.53 | 0.67 | 0.43 | 0.31 |

| Average | 0.72 | 0.80 | 0.69 | 0.43 | 0.72 | 0.80 | 0.70 | 0.44 | 0.52 | 0.66 | 0.46 | 0.28 |

Average Accuracy Rate of Emotion Classification

| Model Features | Two-Class Valence: SVM | Two-Class Valence: KNN | Two-Class Valence: ANN | Two-Class Valence: NB | Two-Class Arousal: SVM | Two-Class Arousal: KNN | Two-Class Arousal: ANN | Two-Class Arousal: NB | Four-Class Valence–Arousal: SVM | Four-Class Valence–Arousal: KNN | Four-Class Valence–Arousal: ANN | Four-Class Valence–Arousal: NB |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| | 0.56 | 0.63 | 0.67 | 0.55 | 0.53 | 0.63 | 0.50 | 0.40 | 0.36 | 0.46 | 0.32 | 0.27 |

| | 0.60 | 0.61 | 0.65 | 0.59 | 0.57 | 0.63 | 0.58 | 0.40 | 0.40 | 0.47 | 0.33 | 0.25 |

| | 0.67 | 0.66 | 0.72 | 0.64 | 0.66 | 0.70 | 0.64 | 0.45 | 0.46 | 0.54 | 0.41 | 0.28 |

| | 0.65 | 0.68 | 0.73 | 0.66 | 0.65 | 0.71 | 0.65 | 0.40 | 0.48 | 0.58 | 0.42 | 0.26 |

| | 0.66 | 0.62 | 0.69 | 0.61 | 0.63 | 0.66 | 0.61 | 0.42 | 0.45 | 0.48 | 0.35 | 0.26 |

| | 0.68 | 0.61 | 0.66 | 0.62 | 0.64 | 0.63 | 0.60 | 0.43 | 0.42 | 0.46 | 0.34 | 0.28 |

| | 0.67 | 0.63 | 0.68 | 0.66 | 0.65 | 0.65 | 0.62 | 0.41 | 0.45 | 0.48 | 0.38 | 0.29 |

| | 0.66 | 0.63 | 0.71 | 0.64 | 0.64 | 0.68 | 0.62 | 0.40 | 0.45 | 0.53 | 0.36 | 0.25 |

| | 0.63 | 0.61 | 0.66 | 0.61 | 0.63 | 0.65 | 0.59 | 0.40 | 0.43 | 0.49 | 0.36 | 0.26 |

| Average | 0.64 | 0.63 | 0.69 | 0.62 | 0.62 | 0.66 | 0.60 | 0.41 | 0.43 | 0.50 | 0.36 | 0.27 |

| Class | SVM: PS | SVM: RC | SVM: F1 | KNN: PS | KNN: RC | KNN: F1 | ANN: PS | ANN: RC | ANN: F1 | NB: PS | NB: RC | NB: F1 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Neutral | 0.80 | 1.00 | 0.90 | 0.92 | 1.00 | 0.99 | 0.84 | 0.99 | 0.91 | 0.47 | 0.62 | 0.54 |

| Negative | 0.68 | 0.56 | 0.62 | 0.85 | 0.83 | 0.84 | 0.58 | 0.50 | 0.54 | 0.41 | 0.33 | 0.37 |

| Positive | 0.69 | 0.60 | 0.64 | 0.90 | 0.76 | 0.82 | 0.62 | 0.60 | 0.61 | 0.40 | 0.35 | 0.38 |

| Class | SVM: PS | SVM: RC | SVM: F1 | KNN: PS | KNN: RC | KNN: F1 | ANN: PS | ANN: RC | ANN: F1 | NB: PS | NB: RC | NB: F1 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Neutral | 0.74 | 0.97 | 0.84 | 0.72 | 1.00 | 0.85 | 0.74 | 0.95 | 0.82 | 0.48 | 0.53 | 0.51 |

| Active | 0.59 | 0.49 | 0.54 | 0.66 | 0.58 | 0.61 | 0.56 | 0.42 | 0.48 | 0.37 | 0.31 | 0.34 |

| Calm | 0.61 | 0.52 | 0.56 | 0.71 | 0.48 | 0.57 | 0.55 | 0.57 | 0.56 | 0.44 | 0.47 | 0.45 |

| Class | SVM: PS | SVM: RC | SVM: F1 | KNN: PS | KNN: RC | KNN: F1 | ANN: PS | ANN: RC | ANN: F1 | NB: PS | NB: RC | NB: F1 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Neutral | 0.55 | 0.81 | 0.65 | 0.58 | 0.92 | 0.71 | 0.55 | 0.76 | 0.64 | 0.30 | 0.42 | 0.35 |

| HVHA | 0.33 | 0.34 | 0.33 | 0.44 | 0.51 | 0.48 | 0.32 | 0.36 | 0.34 | 0.25 | 0.19 | 0.21 |

| HVLA | 0.35 | 0.28 | 0.31 | 0.49 | 0.41 | 0.45 | 0.37 | 0.28 | 0.32 | 0.20 | 0.11 | 0.14 |

| LVHA | 0.37 | 0.28 | 0.32 | 0.45 | 0.38 | 0.41 | 0.33 | 0.23 | 0.27 | 0.22 | 0.26 | 0.24 |

| LVLA | 0.38 | 0.36 | 0.37 | 0.46 | 0.27 | 0.34 | 0.37 | 0.39 | 0.38 | 0.28 | 0.31 | 0.29 |
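The per-class tables report PS, RC, and F1. These read naturally as precision, recall, and F1 score, computed one-vs-rest from the TP, FP, and FN counts listed under Abbreviations. Reading PS and RC as precision and recall is an assumption, because neither abbreviation is defined in the text. The dependency-free sketch below uses a hypothetical helper `per_class_metrics` and toy labels.

```python
def per_class_metrics(y_true, y_pred, labels):
    """One-vs-rest (PS, RC, F1) per label, using 0.0 when a denominator is zero."""
    pairs = list(zip(y_true, y_pred))
    results = {}
    for label in labels:
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        fn = sum(1 for t, p in pairs if t == label and p != label)
        ps = tp / (tp + fp) if tp + fp else 0.0  # precision = TP / (TP + FP)
        rc = tp / (tp + fn) if tp + fn else 0.0  # recall = TP / (TP + FN)
        f1 = 2 * ps * rc / (ps + rc) if ps + rc else 0.0  # harmonic mean of PS and RC
        results[label] = (ps, rc, f1)
    return results


y_true = ["Neutral", "Neutral", "Positive", "Negative"]
y_pred = ["Neutral", "Positive", "Positive", "Neutral"]
metrics = per_class_metrics(y_true, y_pred, ["Neutral", "Negative", "Positive"])
for label, (ps, rc, f1) in metrics.items():
    print(f"{label}: PS={ps:.2f} RC={rc:.2f} F1={f1:.2f}")
```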

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bouyam, C.; Siribunyaphat, N.; Sahoh, B.; Punsawad, Y. Decoding Self-Imagined Emotions from EEG Signals Using Machine Learning for Affective BCI Systems. Symmetry 2025, 17, 1868. https://doi.org/10.3390/sym17111868
