1. Introduction

With the rapid expansion of urban areas, urban rail transit (URT) has become an increasingly essential component of public transportation. It plays a key role in addressing rising travel demands, easing surface traffic congestion, and mitigating environmental pollution from vehicular emissions [1,2]. In large metropolitan areas, URT, characterized by high service frequency and dense operational intensity, effectively accommodates the massive commuting demand on weekdays and has evolved into the backbone of the urban public transportation system [3,4]. However, due to factors such as urban spatial planning, network topology, and the diversity of passenger travel behaviors, URT exhibits pronounced temporal heterogeneity in passenger demand patterns. To ensure operational efficiency and achieve the optimal allocation of limited transit resources, train operation schemes must be flexibly adjusted in accordance with the temporal dynamics of passenger demand. A widely adopted strategy in practice is to divide the service day into multiple distinct periods (e.g., peak and off-peak hours [5,6,7]), within which key scheduling parameters, such as headways, train allocations, and travel times, are adjusted according to anticipated demand. Hence, accurate division of operating periods underpins demand-responsive strategies in URT, supporting timetable optimization, crew scheduling, and station-level resource allocation.

However, in practical applications, the division of operational periods often relies heavily on the subjective judgment of operators [8,9], which is typically based on limited experience or imprecise analyses of passenger demand data [10]. A common approach is to plot the temporal distribution of total passenger flow throughout the day and manually determine period breakpoints based on significant changes in passenger flow [10]. Such practices give rise to two main issues. First, subjective factors may lead to inappropriate period divisions; second, passenger flow volume alone cannot comprehensively capture the multidimensional characteristics of passenger demand. Moreover, influenced by urban planning and development, different lines within the URT network undertake distinct spatial functions and serve varying areas. Consequently, the intensity, temporal distribution, and variation patterns of passenger demand often differ significantly across lines and directions. For instance, on lines connecting suburban districts and urban areas, passenger flows predominantly move toward downtown during the day, whereas in the evening, the reverse flow toward the suburbs becomes more pronounced [11], resulting in evident temporal asymmetry in passenger flow between the two directions of the same line. Operators often employ a unified time-period segmentation strategy across the entire network or apply symmetric operational time arrangements, assigning identical peak and off-peak periods to both directions of the same line. Such practices may obscure the intrinsic differences in passenger flow characteristics and fail to capture the spatiotemporal complexity and variability of passenger demand, which arise from variations in passengers’ departure time preferences, route choices, and commuting regularities. The supply of train capacity should be precisely matched with passenger travel demand to achieve the rational allocation of resources. For directions with high passenger volumes, higher train departure frequencies should be implemented to accommodate concentrated travel demand, whereas for directions with lower demand, operating at lower frequencies can still ensure an adequate level of service quality. Furthermore, the time windows of high- and low-demand passenger flows differ across lines and travel directions. Therefore, applying a unified operating period scheme and corresponding departure frequency may lead to either excess or insufficient capacity, directly affecting the operational efficiency and cost of urban rail transit systems.

Consequently, relying on empirical judgment and uniform division impedes the ability of URT to accurately respond to dynamic passenger demand patterns and undermines the precision and efficiency of resource allocation. Passenger flow analysis methods based on macroscopic statistics are limited in their ability to comprehensively characterize the spatiotemporal distribution of passenger demand and to accurately identify its asymmetry. The widespread deployment of automated fare collection (AFC) systems has enabled the acquisition of large-scale, high-resolution passenger flow data, providing new opportunities for refined, data-driven operational planning in URT. In this context, it is imperative to develop robust methodologies capable of handling diverse and dynamically changing passenger flow patterns while precisely dividing operational periods.

Although prior studies have explored data-driven approaches for operating period division, such as time-series clustering [12], hierarchical clustering [13,14], and optimization-based breakpoint detection [15], several challenges remain in their practical application to URT. Time-series clustering methods are often constructed based solely on passenger flow features or statistical measures derived from passenger flow [12,16], thereby neglecting the multi-dimensional nature of passenger demand. Fisher’s least squares partition algorithm [17] is commonly employed in time-series clustering. Preserving the temporal order during clustering, however, renders the results highly sensitive to outliers and thus susceptible to noise. Hierarchical clustering, which is not constrained by temporal ordering, evaluates similarity between samples using inter-sample distances [18] and has been applied to problems such as intersection signal timing optimization [13] and the division of bus operation periods [14]. Nevertheless, its performance often deteriorates when dealing with datasets characterized by complex structures [19]. Heuristic-based methods treat operating period division as an optimization problem, in which the quality of the results depends strongly on the choice of model and algorithm, and they are prone to becoming trapped in local optima. In contrast, K-means provides relatively high computational efficiency and a certain degree of interpretability, making it suitable for clustering multi-dimensional time-period samples [9]. However, its performance depends largely on the initialization of the centroids. When dealing with high-dimensional data characterized by strong fluctuations and non-spherical clusters, K-means often produces suboptimal results. Moreover, the clustering results should not only reflect the effectiveness of period division but also ensure practical interpretability and robustness, so that they remain consistent and rational even in the presence of noise and data fluctuations, thereby improving the reliability of operational management.

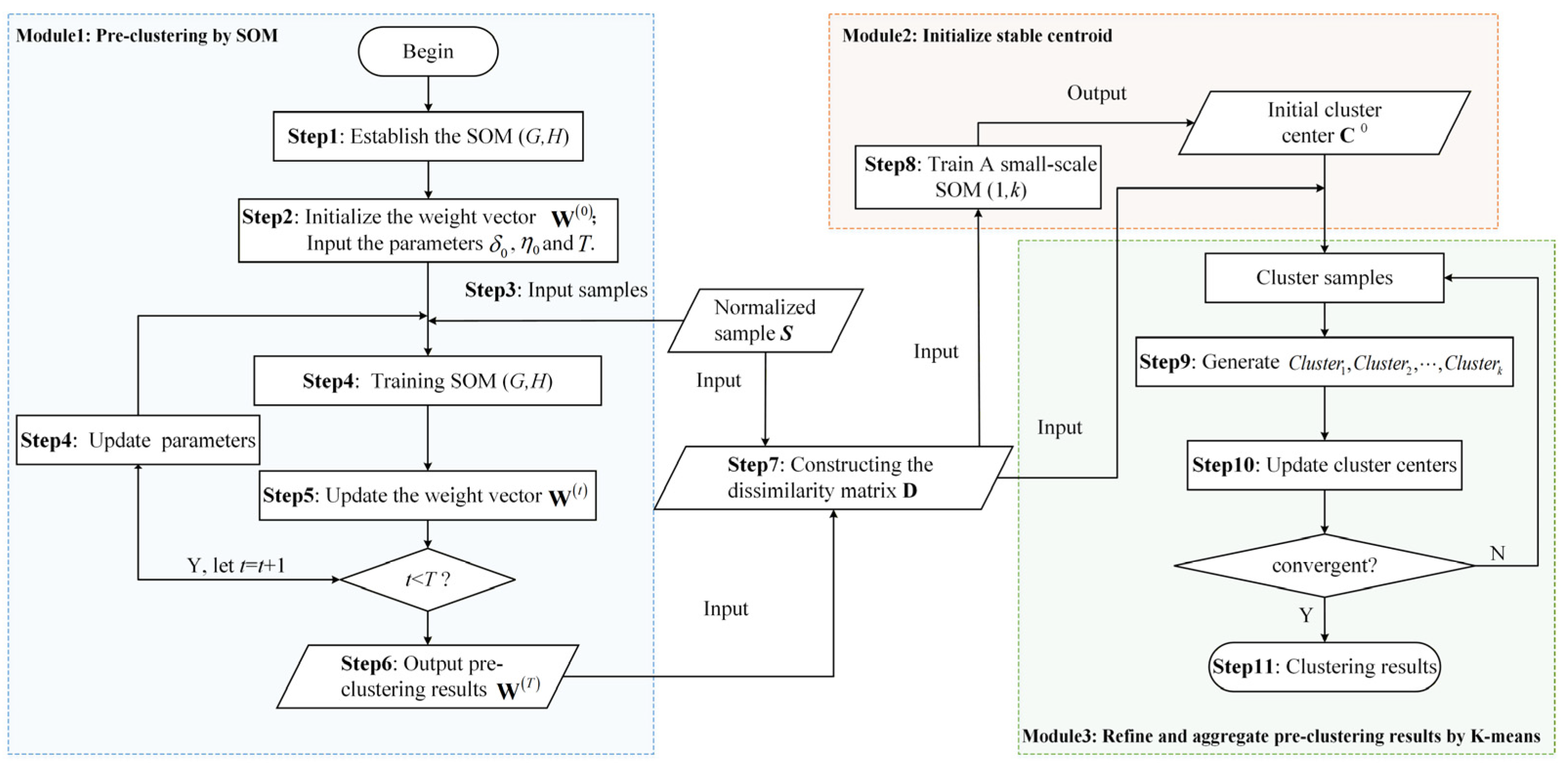

To address these limitations, this study employs a hybrid SOM–K-means approach. The self-organizing map (SOM) is an unsupervised neural network whose primary advantage lies in its ability to map high-dimensional input data onto a low-dimensional (typically two-dimensional) grid, thereby enabling intuitive visualization of clustering results. During this process, SOM generates a set of prototype vectors (i.e., weight vectors) that characterize the distribution of the original sample data. Each prototype vector represents the local average of neighboring samples, effectively smoothing out random noise in the original data [20]. Previous studies have demonstrated that SOM exhibits strong robustness when clustering data containing noise [21,22,23]. When clustering operational periods based on passenger travel data derived from AFC systems, the inherent randomness of passenger demand may introduce noise into the sample data, thereby hindering the accurate identification of cluster boundaries and reducing the stability and reliability of clustering results. Moreover, relying solely on passenger flow volume is insufficient to comprehensively capture the behavioral characteristics and latent patterns of passenger demand. Therefore, constructing a multidimensional feature framework is essential for a more holistic representation of passenger flow samples and for achieving operational period segmentation that more accurately aligns with actual demand.

Benefiting from the superior clustering performance and robustness of SOM in handling multidimensional noisy data, this study employs SOM for pre-clustering the original passenger flow samples, effectively filtering out noise and smoothing the raw data. However, the number of clusters generated by SOM is often substantially higher than the actual number required, making the pre-clustering results difficult to directly translate into operationally meaningful period divisions, thereby reducing the interpretability of the clustering outcomes. To address this limitation, K-means is employed as a post-processing step to refine the initial SOM clusters, thereby enhancing their interpretability and applicability for operational period division. Such a two-stage clustering framework has been shown to be more effective in identifying potential data partitions and enhancing the interpretability of clustering outcomes [23]. Nevertheless, most existing studies on the SOM–K-means approach have largely overlooked the potential risk of convergence to local optima caused by the random initialization of K-means centroids [24,25,26], which compromises the stability of clustering outcomes and poses challenges to obtaining consistent operational period divisions in practice. To overcome this issue, a small-scale SOM network is specifically designed in this study to initialize the K-means cluster centers, improving the stability and accuracy of the final results by mitigating the uncertainties associated with random initialization.

To evaluate the effectiveness of this framework, a case study utilizing real-world AFC data from the Tianjin Rail Transit is conducted. The main contributions of this study include:

This study establishes a novel multi-dimensional time-period sample space that incorporates total volume, microscopic variations, and macroscopic distribution of passenger flow. Unlike previous studies that constructed clustering samples solely based on total passenger flow [8,12,16], the proposed framework captures passenger flow characteristics more comprehensively, thereby providing finer insights into passenger travel demand.

To address the limitations of conventional single clustering methods [12,13,14] in processing multi-dimensional and noisy passenger flow data, this study proposes a hybrid self-organizing map (SOM)–K-means framework. The SOM is employed to pre-cluster the original sample data, effectively filtering and smoothing noise [20]. Subsequently, K-means refines and merges the preliminary clusters to generate final clusters with improved interpretability and practical applicability. Moreover, a small-scale SOM network is specifically designed to initialize K-means centroids, mitigating the uncertainty associated with random initialization and enhancing the robustness and accuracy of URT operational period division. Notably, this improvement has been largely overlooked in previous studies employing the SOM–K-means framework [24,25,26].

In contrast to prior studies that predominantly examine a single line or travel direction [8,9,12], this study employs real-world URT passenger travel data to empirically reveal the intrinsic asymmetry of operational periods across different lines and travel directions, driven by heterogeneous passenger demand structures and spatiotemporal distribution patterns. The findings verify the effectiveness and applicability of the proposed method in supporting fine-grained operational management.

The remainder of this paper is organized as follows. Section 2 reviews the related literature. Section 3 describes the construction of the sample space in detail. Section 4 introduces the SOM–K-means clustering framework. Section 5 presents a case study on Tianjin Rail Transit Lines 1 and 2 to validate the effectiveness of the proposed hybrid clustering method. Finally, Section 6 summarizes the main findings and discusses directions for future work.

2. Literature Review

Research on operating period division in the transportation domain originated from studies on optimizing time-of-day (TOD) signal timing plans. Smith et al. [13] applied hierarchical clustering to traffic flow and occupancy data collected via intelligent transportation system sensors, determining optimal breakpoints for adaptive signal timing. Similarly, Park et al. [27] used historical traffic volume data and a genetic algorithm to dynamically determine time breakpoints, reducing total intersection delays. Chen et al. [28] compared K-means, hierarchical clustering, and Fisher’s ordered clustering on directional traffic volume samples, finding that Fisher’s method delivered superior performance in minimizing control delay, queue length, and stop frequency, offering a more nuanced and responsive TOD segmentation framework. These studies typically focus on a single feature (traffic volume) and construct relatively simple sample structures, allowing classical clustering algorithms to efficiently process the data and achieve satisfactory clustering results.

Parallel research in public bus transit has focused on timetable formulation and service reliability. Salicrú et al. [14] introduced a hierarchical classification algorithm to segment daily operating hours, enabling more punctual and demand-responsive timetables. Bie et al. [10] analyzed GPS-based dwell times and travel times to establish segmentation thresholds for daily operations. Mendes-Moreira et al. [29] applied decision tree methodologies to identify recurring daily operational patterns throughout the year. This enabled the generation of stratified timetables better aligned with seasonal variations in travel demand. In another notable contribution, Shen et al. [30] refined K-means centroid initialization and distance metrics to achieve more accurate segmentation of daily service hours using one-way trip time as the core feature vector. Jin et al. [15] further extended this line of research by integrating multi-source data, such as passenger demand and vehicle operational data, into an optimization model. Their work employed a genetic algorithm to determine optimal segmentation breakpoints aimed at minimizing fleet operating costs, offering a more holistic perspective that considers both service provision and resource efficiency. Overall, in studies on dividing bus operating periods, time-period samples have been constructed using multi-dimensional features, and clustering algorithms suitable for high-dimensional data have increasingly attracted attention. Nevertheless, these studies remain limited in capturing the temporal dynamics of passenger flow or traffic volume.

Compared with studies in the contexts of bus systems and traffic signal control, research on URT operating period division remains relatively limited. Existing studies have primarily focused on constructing time samples by extracting features from inbound passenger volumes or sectional flows between adjacent stations and then applying clustering-based methods to group periods with similar passenger flow characteristics. For instance, Zeng et al. [12] constructed unidirectional OD probability matrices based on intra-period passenger flow data and applied an ordered sample clustering method, incorporating similar interstation-passenger-transfer rules to generate operational period divisions. Although this approach accounts for the spatial transfer characteristics of passenger flows, the sample features are primarily based on statistical metrics of flow volume and fail to adequately capture the temporal dynamics of passenger demand. Wang et al. [8] constructed time samples using inbound passenger volumes and employed the affinity propagation algorithm to cluster and merge samples with similar characteristics. The optimized division of operating periods significantly reduced the average passenger waiting time. However, the study relied solely on inbound volumes, which provide an incomplete representation of dynamic passenger demand across periods. Chen et al. [9] constructed a feature set comprising six indicators, including the maximum, minimum, mean, standard deviation, and rise/fall time ratios of passenger volume, to characterize the temporal patterns of passenger flow. Subsequently, the K-means clustering algorithm was employed to merge representative periods, resulting in a more refined operational period division scheme. However, the constructed samples primarily reflect prominent trends in passenger flow variation and do not provide quantitative measures of intra-period variability. In addition, the robustness and stability of K-means clustering when applied to multi-dimensional feature sets remain unexamined. Tang et al. [16] emphasized the pronounced spatiotemporal heterogeneity of URT passenger flows and proposed an ordered clustering method that integrates both temporal dynamics and spatial distribution characteristics. The resulting clusters exhibit strong interpretability and practical applicability, significantly enhancing the accuracy of operating period division. While this approach better accounts for variations across space and time, it still relies on sectional flows as the primary feature, thereby neglecting other critical dimensions of passenger demand, such as intra-period variability.

Although the aforementioned studies have laid a foundation for dividing operational periods in URT, several limitations remain to be addressed: (1) they primarily focus on single-dimensional features of passenger flow, neglecting intra-period dynamics, which may result in divisions that inadequately reflect actual passenger demand; (2) single clustering algorithms exhibit limited stability when handling multi-dimensional and noisy data. Algorithms such as K-means, affinity propagation algorithm, and ordered sample segmentation algorithm mainly rely on sample distance variations during clustering, rendering the results highly sensitive to outliers. However, the stability of cluster outcomes has been largely overlooked in previous studies; (3) most existing research concentrates on individual lines or travel directions, lacking systematic validation of the proposed methods across different lines or directions in the URT network. Notably, the potential asymmetry of operational periods among different lines or travel directions remains largely unexplored.

To address these research gaps, this study first constructs a multi-dimensional feature indicator system for time samples in terms of three dimensions of passenger flow: total volume, microscopic fluctuation, and macroscopic distribution, thereby overcoming the limitations of prior studies that relied solely on passenger volume. Second, a hybrid SOM–K-means clustering framework is proposed to effectively handle high-dimensional, noisy data, attenuate the influence of outliers, and ensure robust and stable clustering outcomes. Third, the scope of the analysis is extended across multiple lines and directions of URT, revealing inherent asymmetries in operational period schemes.

5. Case Study

5.1. Case Study Description

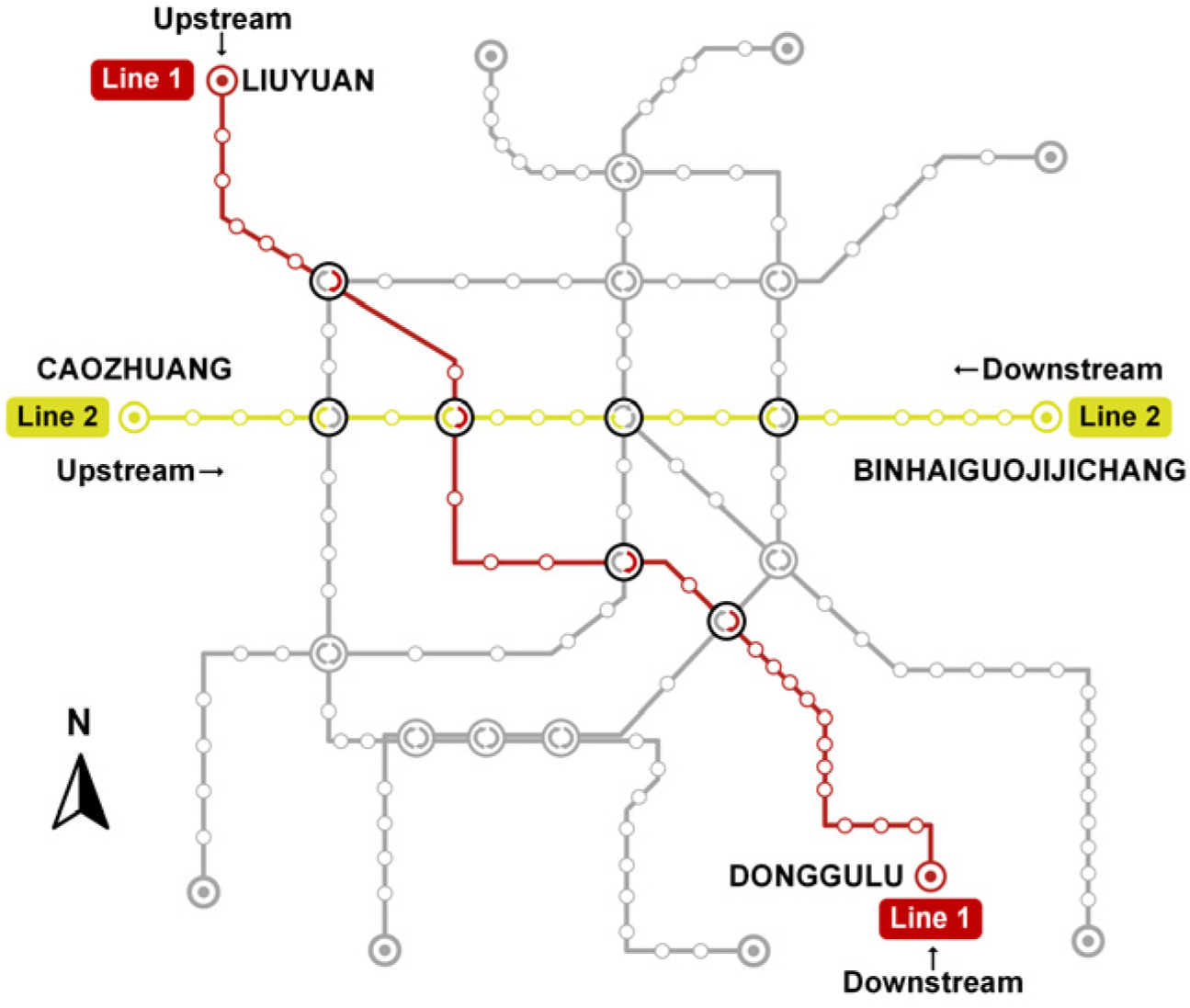

This section employs Tianjin URT Lines 1 and 2 as case studies to validate the effectiveness of the proposed clustering algorithm. Lines 1 and 2 serve as the primary north–south and east–west corridors of the Tianjin URT network, respectively, and constitute essential components of the city’s daily commuting system, as illustrated in Figure 2.

On a typical working day, the average passenger flow on both lines exhibits regular temporal fluctuations, which display a clear bimodal distribution, as illustrated in Figure 3. The current division of operational periods is summarized in Table 1.

As shown in Table 1, the existing time divisions for Lines 1 and 2 are generally consistent, with the only difference being the end time of the late off-peak period, which is caused by variations in last train operation times. The division of operational periods for the upstream and downstream directions of the same line also demonstrates a certain degree of temporal symmetry. However, Figure 3 reveals that the passenger flow characteristics of the two lines differ, a discrepancy that is not reflected in the current period division schemes.

To ensure consistency in the temporal scope of all samples, the operational period for both directions of Lines 1 and 2 is standardized to 06:00–23:59. Based on Equation (11), the sample space is constructed accordingly, consisting of 1079 samples and 9 features.

All algorithms in this study were implemented in MATLAB 2021b and executed on a personal computer equipped with an Intel i7-14700HX CPU (Intel Corporation, Santa Clara, CA, USA), 16 GB of RAM (Ramaxel Co., Ltd., Shenzhen, China), and the Windows 11 operating system. Prior to the clustering analysis, the sample data are rescaled using min–max normalization.
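The normalization step can be illustrated with a minimal sketch. The study itself used MATLAB; Python with NumPy is used here purely for illustration, and the function name `min_max_normalize` and the toy matrix are assumptions rather than part of the original implementation.

```python
import numpy as np

def min_max_normalize(X):
    """Scale each feature (column) of X to the range [0, 1]."""
    X = np.asarray(X, dtype=float)
    col_min = X.min(axis=0)
    col_range = X.max(axis=0) - col_min
    # Constant columns have zero range; divide by 1 so they map to 0.
    col_range[col_range == 0] = 1.0
    return (X - col_min) / col_range

# Toy matrix: 3 time samples x 2 features (values are illustrative only).
X_demo = np.array([[10.0, 200.0],
                   [20.0, 400.0],
                   [30.0, 300.0]])
X_norm = min_max_normalize(X_demo)
```

Scaling every feature to a common range keeps features with large magnitudes from dominating the Euclidean distances used later in SOM training and K-means.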

5.2. Clustering Results and Discussion

This section first presents the preliminary clustering results of the SOM applied to the initial sample space.

5.2.1. SOM Topology Size

A two-dimensional square SOM network is employed to perform preliminary clustering on the original samples, with the same number of neurons along each dimension. The topology size directly affects the network’s ability to represent the input space with sufficient granularity, as well as the computational efficiency. An undersized SOM may fail to capture the intrinsic structure of the data, leading to reduced clustering performance, whereas an oversized topology may result in redundant representations and increased training time.

To evaluate the impact of different SOM network sizes on overall clustering performance, a performance metric is designed to simultaneously reflect clustering accuracy and training efficiency under varying topological scales, as expressed in Equation (22). The metric combines two quantities for each topology size. The first is the sum of the distances between each sample and the weight vector of its corresponding neuron in the clustering results obtained from the SOM network of that size, as defined in Equation (23), where the weight vector is that of the neuron to which the sample is assigned after SOM training. The second is the training time (in seconds) of the SOM network with that topology size. To eliminate dimensional differences, both quantities were standardized prior to calculating the metric. A smaller value of the performance metric indicates better overall clustering performance.

Figure 4 illustrates the variation in the performance metric with the SOM topology size across different lines. In each scenario, the network was trained independently 20 times with a fixed initial learning rate and 1000 training iterations, and the average value of the metric was reported. The number of neurons along each dimension was varied from 3 to 32. Based on the results, the optimal SOM network structures were determined to be 14 × 14 for Line 1 (both directions) and Line 2 (upstream), and 12 × 12 for Line 2 (downstream).
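The selection procedure described above can be sketched as follows. The distance term follows the verbal definition of Equation (23), taking each sample's corresponding neuron to be its nearest weight vector. The way the two standardized terms are combined is an assumption: an equal-weight sum of z-scores is used here only for illustration, and the exact form of Equation (22) may differ. All function names and the numbers in the demo are hypothetical.

```python
import numpy as np

def total_bmu_distance(X, W):
    """Sum of Euclidean distances from each sample to its nearest neuron.

    X: (n_samples, n_features) samples; W: (n_neurons, n_features) weights.
    """
    d = np.linalg.norm(X[:, None, :] - W[None, :, :], axis=2)
    return d.min(axis=1).sum()

def zscore(values):
    """Standardize a 1-D sequence to zero mean and unit variance."""
    v = np.asarray(values, dtype=float)
    s = v.std()
    return (v - v.mean()) / s if s > 0 else np.zeros_like(v)

def combined_metric(distances, times):
    # ASSUMPTION: equal-weight sum of the two standardized terms.
    return zscore(distances) + zscore(times)

# Hypothetical averages over repeated runs for three candidate grid sizes.
sizes = [3, 4, 5]
distances = [9.0, 5.0, 4.0]   # total sample-to-neuron distance
times = [1.0, 2.0, 6.0]       # training time in seconds
scores = combined_metric(distances, times)
best_size = sizes[int(np.argmin(scores))]
```

In this toy example the middle size wins: the largest grid reduces the distance term only slightly while its training time grows sharply, which is the trade-off the metric is meant to expose.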

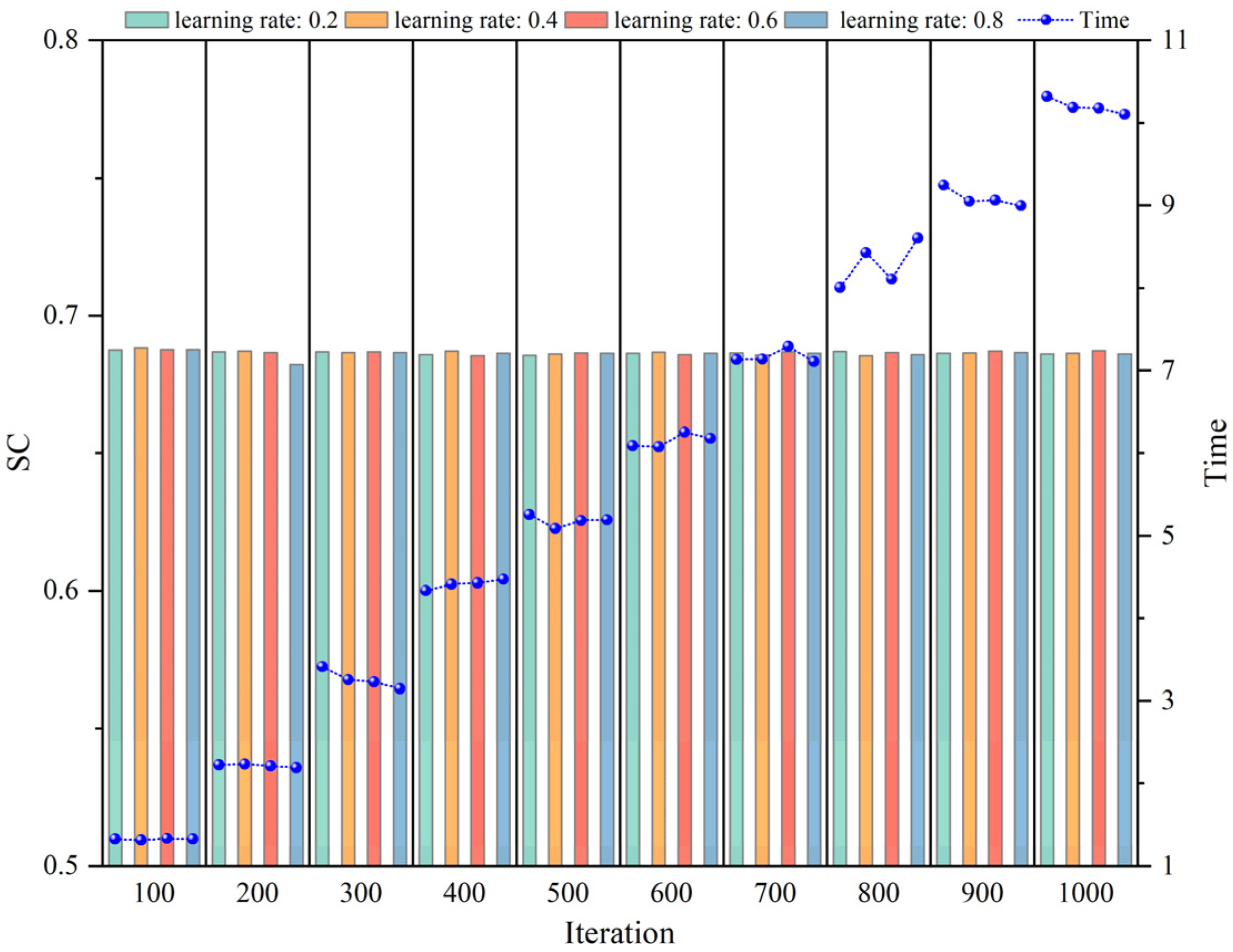

5.2.2. Parameter Sensitivity Analysis

To systematically evaluate the sensitivity of the SOM model to parameter settings, the upstream direction of Line 1 was selected as a case study. Two parameters were examined: the number of iterations and the initial learning rate. These parameters play distinct roles in SOM training: the former primarily determines the convergence of network weights, while the latter affects both the convergence speed and the adequacy of training. Multiple parameter combinations were designed, and the resulting silhouette coefficients and computation times were compared to assess the stability and computational cost of SOM under different configurations, as illustrated in Figure 5.

As shown in Figure 5, the silhouette coefficient remained nearly constant across different parameter combinations, with only negligible fluctuations. This indicates that the clustering boundaries and intra-cluster compactness of SOM–K-means are largely unaffected by parameter variations. Specifically, increasing the number of iterations did not yield further improvements in clustering performance, suggesting that the algorithm achieves convergence rapidly. Similarly, while different initial learning rates influenced the rate of weight updates during training, the final clustering results were consistent. These findings demonstrate the robustness of SOM–K-means in operating period division, with clustering performance insensitive to parameter perturbations.

In contrast, the computational efficiency exhibited clear dependency on parameter settings. For a fixed number of iterations, varying the initial learning rate had almost no effect on runtime, indicating that the step size of updates contributes little to the overall computational complexity. However, increasing the number of iterations substantially prolonged computation time in an approximately linear manner. This trend is consistent with the iterative mechanism of SOM, where each additional iteration requires a full traversal of the samples and weight updates, resulting in computation time proportional to the number of iterations.

In summary, SOM–K-means exhibited stable clustering performance across a wide range of parameter values, with neither the number of iterations nor the initial learning rate significantly affecting clustering validity. Nevertheless, runtime was highly sensitive to the number of iterations, where excessive iterations markedly increased the computational burden without performance gains. Considering this trade-off, the parameter combination of 100 iterations and an initial learning rate of 0.4 was selected in this study, achieving a balance between accuracy and efficiency while avoiding unnecessary computational redundancy.

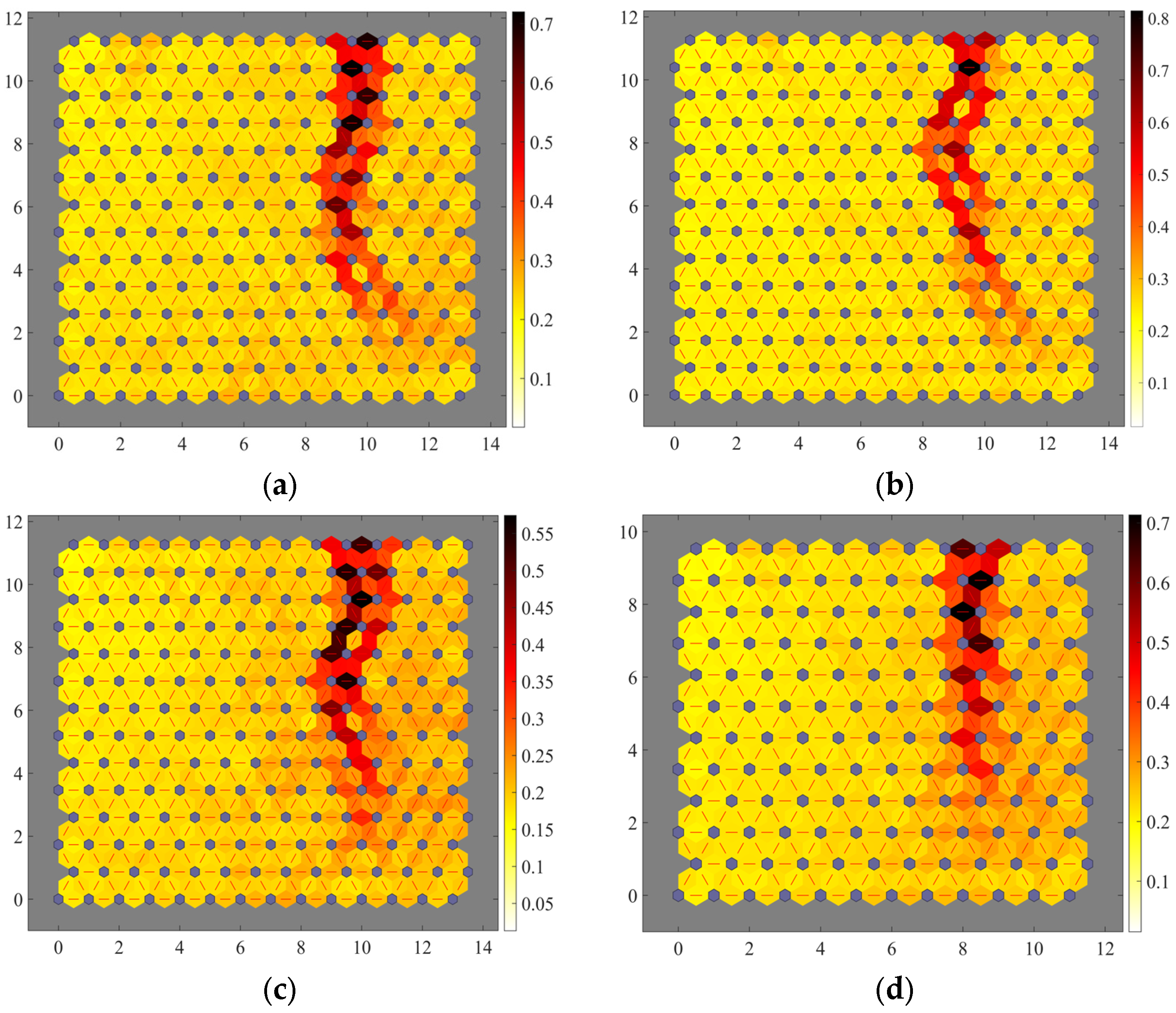

5.2.3. Pre-Clustering Results by SOM

Based on the SOM–K-means algorithm proposed in Section 4, this subsection first applies the SOM component to perform pre-clustering on the raw data. The core advantage of SOM lies in its topology-preserving property: it can map high-dimensional passenger flow features onto a low-dimensional (two-dimensional) neuron grid while retaining the similarity structure of the original data [39]. Meanwhile, SOM provides a visualization technique for multidimensional clustering results, enabling an intuitive understanding of the relationships among data [40]. Through SOM pre-clustering, data samples with similar passenger flow patterns are mapped to the same or adjacent neurons, whereas those with distinct patterns are assigned to neurons that lie farther apart. The U-matrix is then used to visualize the topological relationships among neurons.

Figure 6 illustrates the preliminary clustering results using the U-matrix. The U-matrix depicts the distance distribution between neighboring neurons after SOM training. In the figure, light-colored regions indicate small differences between adjacent neurons, reflecting similar passenger flow patterns, whereas dark-colored regions correspond to larger differences, representing potential cluster boundaries.
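As a rough illustration of how a U-matrix is obtained from trained weights, the sketch below averages the Euclidean distance from each neuron to its grid neighbors. It assumes a rectangular grid with 4-connected neighbors; a hexagonal layout would use six neighbors instead. The function name `u_matrix` and the toy weights are hypothetical.

```python
import numpy as np

def u_matrix(weights):
    """Mean distance from each neuron to its 4-connected grid neighbors.

    weights: (rows, cols, n_features) array of SOM weight vectors.
    Returns a (rows, cols) array; large values mark likely cluster boundaries.
    """
    rows, cols, _ = weights.shape
    U = np.zeros((rows, cols))
    for r in range(rows):
        for c in range(cols):
            dists = []
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                rr, cc = r + dr, c + dc
                if 0 <= rr < rows and 0 <= cc < cols:
                    dists.append(np.linalg.norm(weights[r, c] - weights[rr, cc]))
            # A neuron with no neighbors (1 x 1 grid) gets a value of 0.
            U[r, c] = np.mean(dists) if dists else 0.0
    return U

# Toy 1 x 3 grid with one feature: two similar neurons and one distant neuron.
W_demo = np.array([[[0.0], [0.0], [10.0]]])
U_demo = u_matrix(W_demo)
```

In the toy grid the value rises from left to right, so a plot with dark colors for large values would show a boundary next to the third neuron, mirroring the dark ridges described for Figure 6.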

Across all four directions, the U-matrix visualization reveals a prominent dark boundary that divides the map. Overall, the left side of the map exhibits large, contiguous light-colored regions, indicating high feature homogeneity among the corresponding time periods. Considering the actual URT operation, this region corresponds to off-peak periods characterized by stable and low-variation passenger demand. In contrast, the right side of the boundary contains several smaller light-colored regions, representing relatively consistent patterns of limited scope, which correspond to typical peak-hour periods. The dark nodes between these regions indicate blurred cluster boundaries, reflecting the complexity of passenger flow dynamics, such as the ramp-up and ramp-down phases of morning and evening peaks. Notably, isolated dark nodes are also observed in boundary regions, likely corresponding to time periods with abnormal characteristics, such as the start of service or late-night low-demand intervals.

Overall, the U-matrix visualization demonstrates that the SOM effectively captures the main structures of passenger flow patterns, while also highlighting issues of overly fine clustering and ambiguous boundaries. From an operational perspective, directly adopting the preliminary clustering results could lead to an excessively fragmented timetable, which presents several challenges. First, too many time intervals would cause frequent changes in train headways, increasing vehicle circulation complexity and the risk of operational delays. Second, crew scheduling would become more difficult, further complicating dispatching tasks. These factors could ultimately reduce the reliability and robustness of the timetable, adversely affecting passenger service quality. Furthermore, due to the inherent topological constraints of SOM, relationships between non-adjacent neurons cannot be effectively captured. Therefore, it is necessary to further integrate and refine the preliminary clustering results to develop a more concise and robust operating period scheme that is feasible in practice. This is the core reason for the subsequent introduction of K-means in this study.

5.2.4. Clustering Results Refined by K-Means

To address the limitations of SOM pre-clustering, K-means clustering is introduced in this section to overcome the local topological constraints of SOM. From a global perspective, it merges feature-similar pre-clustering results and generates an operational period division scheme that supports practical operational decision-making.

First, a dissimilarity matrix is constructed based on the weight vectors of all neurons in the trained SOM network and the original sample data. Each element in the matrix represents the Euclidean distance between a sample and a neuron, effectively projecting the original high-dimensional data into a distance space defined by the neurons. In the process of operational time-period division, compared with directly measuring sample similarity based on “sample-to-sample distance”, the “sample-to-neuron distance” not only preserves the inherent structural differences among original samples but also smooths data noise, reducing the impact of outliers on the clustering results. As previously mentioned in Section 4.1, the dissimilarity matrix is used as the input for K-means and captures the similarity between samples by measuring their relative distances to all neurons.
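The construction of the sample-to-neuron distance matrix can be sketched in a few lines. This is a minimal illustration of the description above, not the original MATLAB code; the function name `dissimilarity_matrix` and the toy data are assumptions.

```python
import numpy as np

def dissimilarity_matrix(X, W):
    """Return D with D[i, j] = Euclidean distance from sample i to neuron j.

    X: (n_samples, n_features) normalized samples.
    W: (n_neurons, n_features) weight vectors of the trained SOM.
    """
    X = np.asarray(X, dtype=float)
    W = np.asarray(W, dtype=float)
    return np.linalg.norm(X[:, None, :] - W[None, :, :], axis=2)

# Toy data: 2 samples and 3 neurons in a 2-feature space.
X_demo = np.array([[0.0, 0.0], [3.0, 4.0]])
W_demo = np.array([[0.0, 0.0], [3.0, 0.0], [0.0, 4.0]])
D_demo = dissimilarity_matrix(X_demo, W_demo)
```

Each row of the result describes one time sample by its distances to all neurons, so the number of columns equals the number of neurons in the pre-clustering SOM (for example, 196 for a 14 × 14 grid).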

The high dimensionality of the dissimilarity matrix makes K-means highly sensitive to the initialization of cluster centers. Randomly selecting samples from the matrix as the initial cluster centers may cause the algorithm to fall into a local optimum, thereby directly leading to instability and reduced clustering accuracy. To address this issue, a small-scale SOM network with one neuron per target cluster is constructed to generate the initial cluster centers. The training parameters of this SOM are consistent with those used for the pre-clustering SOM, except that the number of training iterations is reduced to 20 to improve the efficiency of cluster center initialization.

Theoretically, after a small number of training iterations, this small-scale SOM exhibits a certain degree of distance and distinction among its weight vectors. Using these weight vectors as the initial centroids for K-means enables the centroids to be evenly distributed across different passenger flow pattern groups, thereby preventing the initial centroids from being too close to each other, accelerating the convergence of the algorithm toward the global optimum, and improving clustering stability.
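The initialization idea can be sketched under several stated assumptions: the small SOM is a one-dimensional chain with one neuron per cluster, it is trained on the same rows that K-means later clusters, the learning rate decays linearly from 0.4, and the neighborhood function is Gaussian with a shrinking radius. None of these details is confirmed by the text beyond the 20 training iterations and the use of the SOM weights as initial centroids; the function names and demo data are hypothetical.

```python
import numpy as np

def train_small_som(D, k, n_iter=20, lr0=0.4, seed=0):
    """Train a 1 x k SOM on the rows of D and return its k weight vectors."""
    rng = np.random.default_rng(seed)
    D = np.asarray(D, dtype=float)
    # ASSUMPTION: weights start from k distinct samples.
    W = D[rng.choice(len(D), size=k, replace=False)].copy()
    grid = np.arange(k)
    for t in range(n_iter):
        frac = t / n_iter
        lr = lr0 * (1.0 - frac)                    # linearly decaying learning rate
        sigma = max(k / 2.0 * (1.0 - frac), 0.5)   # shrinking neighborhood radius
        for x in D[rng.permutation(len(D))]:
            bmu = np.argmin(np.linalg.norm(W - x, axis=1))
            h = np.exp(-((grid - bmu) ** 2) / (2.0 * sigma ** 2))
            W += lr * h[:, None] * (x - W)         # pull BMU and neighbors toward x
    return W

def kmeans(D, centers, max_iter=100):
    """Lloyd's algorithm started from the supplied centers."""
    D = np.asarray(D, dtype=float)
    centers = np.array(centers, dtype=float)
    for _ in range(max_iter):
        dist = np.linalg.norm(D[:, None] - centers[None], axis=2)
        labels = np.argmin(dist, axis=1)
        new_centers = centers.copy()
        for j in range(len(centers)):
            if np.any(labels == j):                # keep empty clusters in place
                new_centers[j] = D[labels == j].mean(axis=0)
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels, centers

# Toy data: two well-separated groups of 30 points each.
rng = np.random.default_rng(42)
D_demo = np.vstack([rng.normal(0.0, 0.5, (30, 2)),
                    rng.normal(10.0, 0.5, (30, 2))])
init_centers = train_small_som(D_demo, k=2)
labels, centers = kmeans(D_demo, init_centers)
```

Because the SOM neurons compete for samples while their neighbors are dragged along, the weight vectors tend to spread over the data before K-means starts, which is the property the paragraph above relies on.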

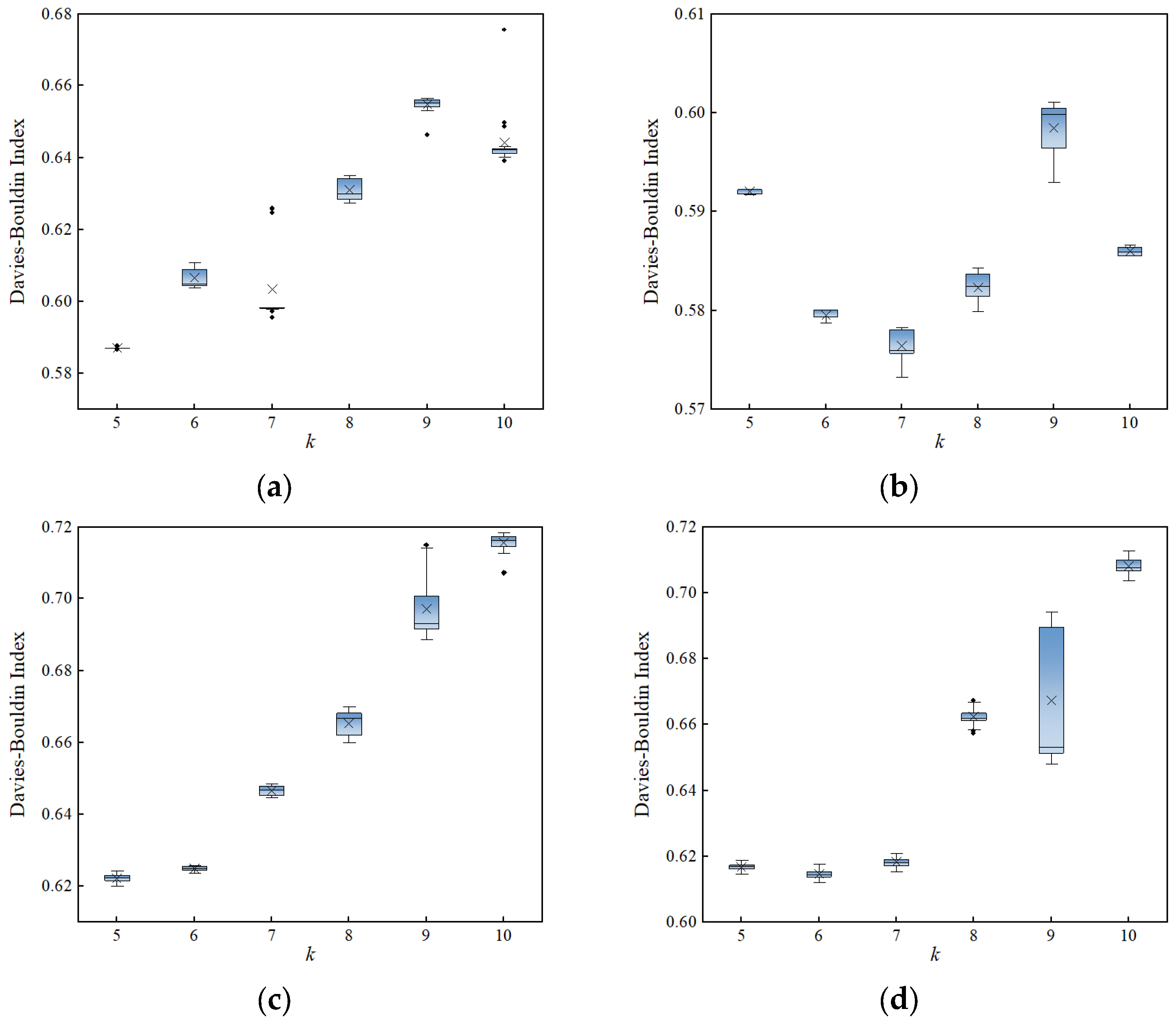

The number of clusters is varied from 5 to 10 in increments of 1. The improved K-means is then applied to cluster the dissimilarity matrix. For each number of clusters, the algorithm is independently executed 20 times. The DBI values are calculated according to Equation (19) and illustrated in Figure 7.

As shown in

Figure 7, applying K-means clustering with initial centers generated by the SOM network yields more stable clustering results, particularly for the upstream direction of Line 2. For each set of repeated experiments, the maximum variation in the DBI does not exceed 0.05, with the largest fluctuation observed in the downstream direction of Line 2 (at

). According to the definition of DBI, relatively low DBI values indicate that clusters are well separated and internally consistent, which means that the passenger demand patterns represented by samples in different periods have clearer boundaries. The train scheduling plan formulated based on the clustering-derived period schemes can more accurately match the passenger demand patterns of each cluster. Conversely, high DBI values indicate insufficient distinction between clusters, and some operating periods may simultaneously contain multiple different passenger demand patterns. This mixed characteristic makes it difficult to develop targeted train operation plans, reducing the precision with which train scheduling meets passenger demand. The DBI analysis shows that the optimal number of clusters for the four directions shown in

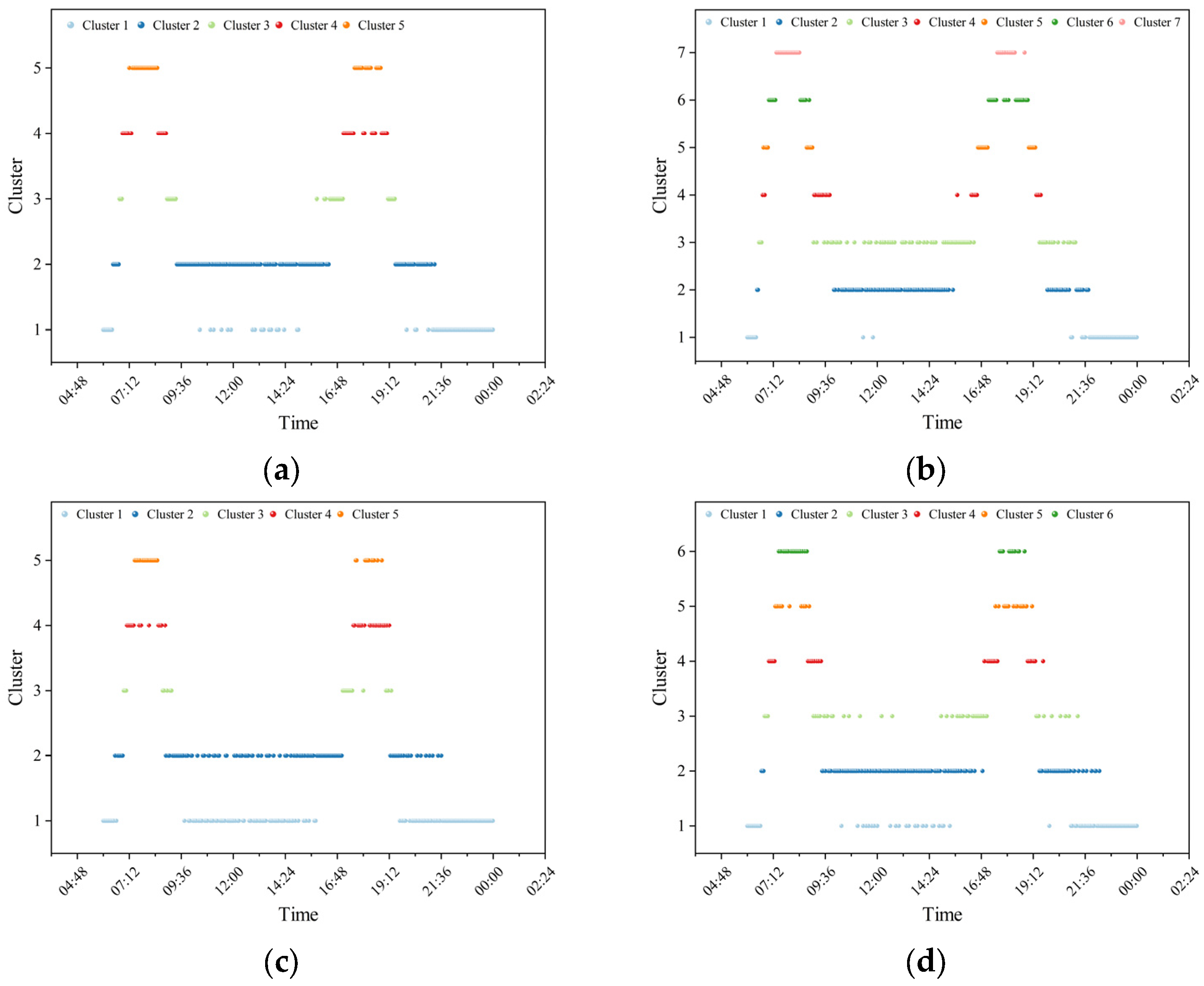

Figure 7 is determined to be 5, 7, 5, and 6, respectively.
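The interpretation of the DBI can be illustrated with a small sketch. It assumes the standard Davies–Bouldin definition (mean distance to the centroid as within-cluster scatter, Euclidean distance between centroids as separation), which may differ in detail from Equation (19); the function name and the toy data are hypothetical.

```python
import math


def davies_bouldin(data, labels):
    """Davies-Bouldin index of a labelling; lower values are better."""
    clusters = sorted(set(labels))
    centroid, scatter = {}, {}
    for c in clusters:
        members = [x for x, lab in zip(data, labels) if lab == c]
        centroid[c] = [sum(col) / len(members) for col in zip(*members)]
        # Within-cluster scatter: mean distance of members to the centroid
        scatter[c] = sum(math.dist(x, centroid[c]) for x in members) / len(members)
    total = 0.0
    for i in clusters:
        # Worst-case similarity of cluster i to any other cluster
        total += max((scatter[i] + scatter[j]) / math.dist(centroid[i], centroid[j])
                     for j in clusters if j != i)
    return total / len(clusters)


# Hypothetical one-feature samples forming two obvious groups.
data = [[0.0], [2.0], [10.0], [12.0]]
candidates = {
    "separated": [0, 0, 1, 1],  # each group is one cluster
    "mixed": [0, 1, 0, 1],      # each cluster mixes both groups
}
dbi = {name: davies_bouldin(data, labs) for name, labs in candidates.items()}
best = min(dbi, key=dbi.get)
print(dbi, best)
```

In this toy case the mixed labelling has a much larger DBI than the separated one, mirroring the situation in which an operating period contains several different passenger demand patterns.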

Figure 8 illustrates the clustering-based division of operating periods across different lines. Unlike previous studies [8,9,16], this paper constructs clustering samples at a 1 min resolution, which significantly improves the accuracy of the period division scheme. Overall, the clustering results clearly show the various operational stages throughout the full-service day. Although the number of clusters differs across lines, the outcomes consistently capture temporal variations in passenger demand. However, such fine granularity also leads to the occurrence of isolated points in the clustering results. Therefore, further refinement and integration of the clustering outcomes are necessary.

Taking Figure 8a as an example, Cluster 1 represents both the initial start-up stage of daily service and the closing stage near the end of operations, during which passenger volumes are the lowest of the day but exhibit relatively rapid fluctuations. Cluster 2 corresponds to the longest off-peak periods (including the morning, midday, and evening off-peaks), characterized by relatively low and stable passenger volumes, thereby differentiating them from the samples in Cluster 1. Notably, samples belonging to Cluster 1 also appear between 09:30 and 14:30, which can be regarded as outliers relative to Cluster 2.

Clusters 3 and 4 represent transitional stages between off-peak and peak periods, where passenger flows rise rapidly but to varying levels. A clear asymmetry is observed between morning and evening peaks: while the morning peak and its adjacent transitional periods exhibit distinct boundaries, the evening peak shows less pronounced separation. This feature is also evident in Figure 8b. A comparison with the passenger flow patterns shown in Figure 3 shows that the morning peak on weekdays is highly concentrated due to the strong temporal regularity of commuting and schooling activities, whereas the evening peak displays a multi-modal pattern, with some time samples exhibiting characteristics of transitional periods. Although Line 2 exhibits weaker commuting attributes than Line 1, it demonstrates a similar pattern.

The analysis indicates that the introduction of K-means clustering can effectively integrate the SOM pre-clustering results, enabling samples to be accurately distinguished and transforming the pre-clustering outcomes into operationally feasible and interpretable time period divisions. The clustering results effectively capture both the macroscopic and microscopic characteristics of passenger flows, thereby accurately identifying the comprehensive passenger demand reflected by each sample.

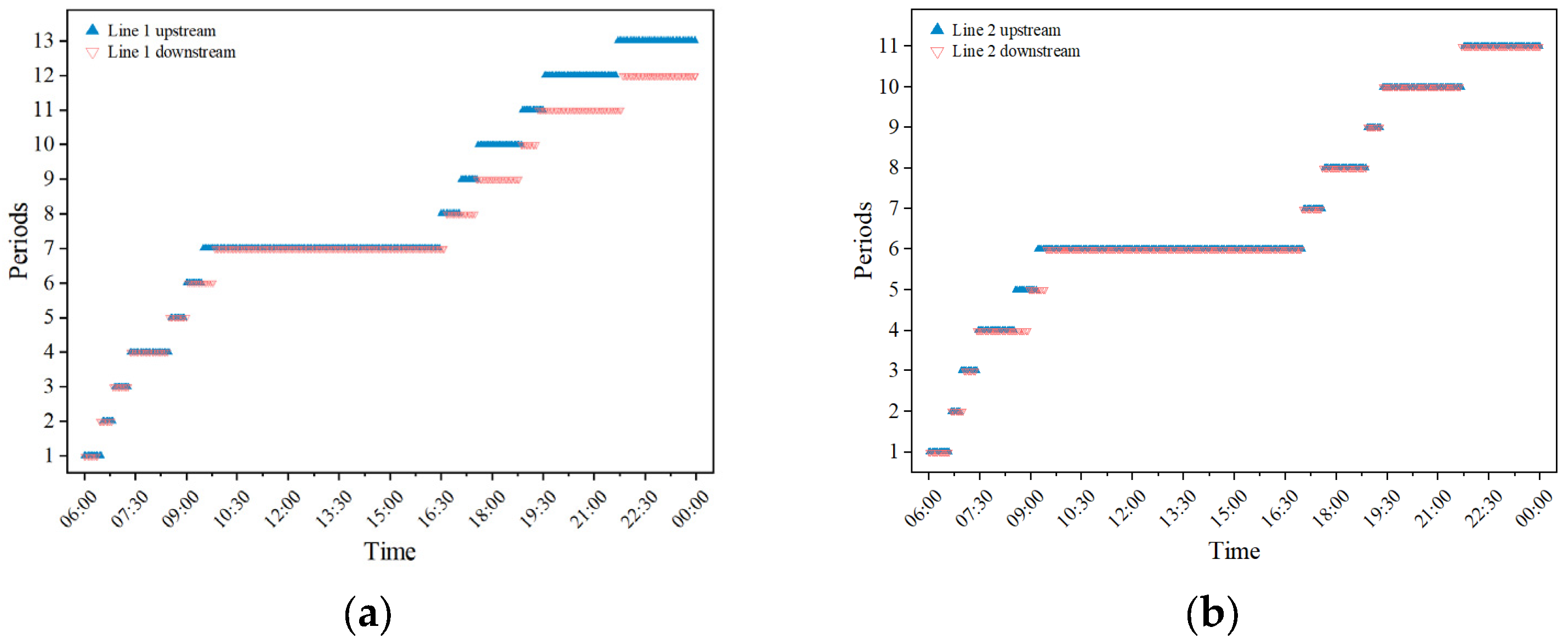

Based on the distinct boundaries revealed by the clustering results, the full-day operating hours can be directly delineated. In this paper, isolated points and time slices with durations of less than 20 min are processed as follows: if the adjacent time samples before and after belong to the same cluster, the slice is merged into that period; otherwise, it is assigned to the subsequent period.
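The merging rule described above can be sketched as a simple post-processing step on the sequence of per-minute cluster labels. The function name `merge_short_slices` and the example labels are hypothetical. Two points are assumptions of this sketch rather than statements from the text: slices are processed from left to right and re-evaluated after each merge, and a short slice at the very end of the day, which has no subsequent period, is merged into the preceding one.

```python
from itertools import groupby


def merge_short_slices(labels, min_len=20):
    """Absorb runs of identical labels shorter than min_len samples.

    With 1 min samples, min_len=20 corresponds to slices shorter than 20 min.
    """
    labels = list(labels)
    while True:
        runs = [(lab, len(list(group))) for lab, group in groupby(labels)]
        if len(runs) <= 1:
            return labels
        start = 0
        changed = False
        for i, (lab, length) in enumerate(runs):
            if length < min_len:
                if i + 1 < len(runs):
                    # If the neighbours on both sides share a cluster, that
                    # cluster is also the next run's label; otherwise the
                    # slice goes to the subsequent period. Both cases
                    # therefore take the label of the next run.
                    target = runs[i + 1][0]
                else:
                    # Assumption: a short final slice joins the preceding period.
                    target = runs[i - 1][0]
                labels[start:start + length] = [target] * length
                changed = True
                break  # re-compute the runs after every merge
            start += length
        if not changed:
            return labels


# Hypothetical per-minute labels: a 5 min outlier inside cluster 1 and a
# 10 min slice between clusters 1 and 4.
raw = [1] * 30 + [2] * 5 + [1] * 30 + [3] * 10 + [4] * 30
merged = merge_short_slices(raw)
print([(lab, len(list(g))) for lab, g in groupby(merged)])
```

Every merge reduces the number of runs by at least one, so the loop always terminates.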

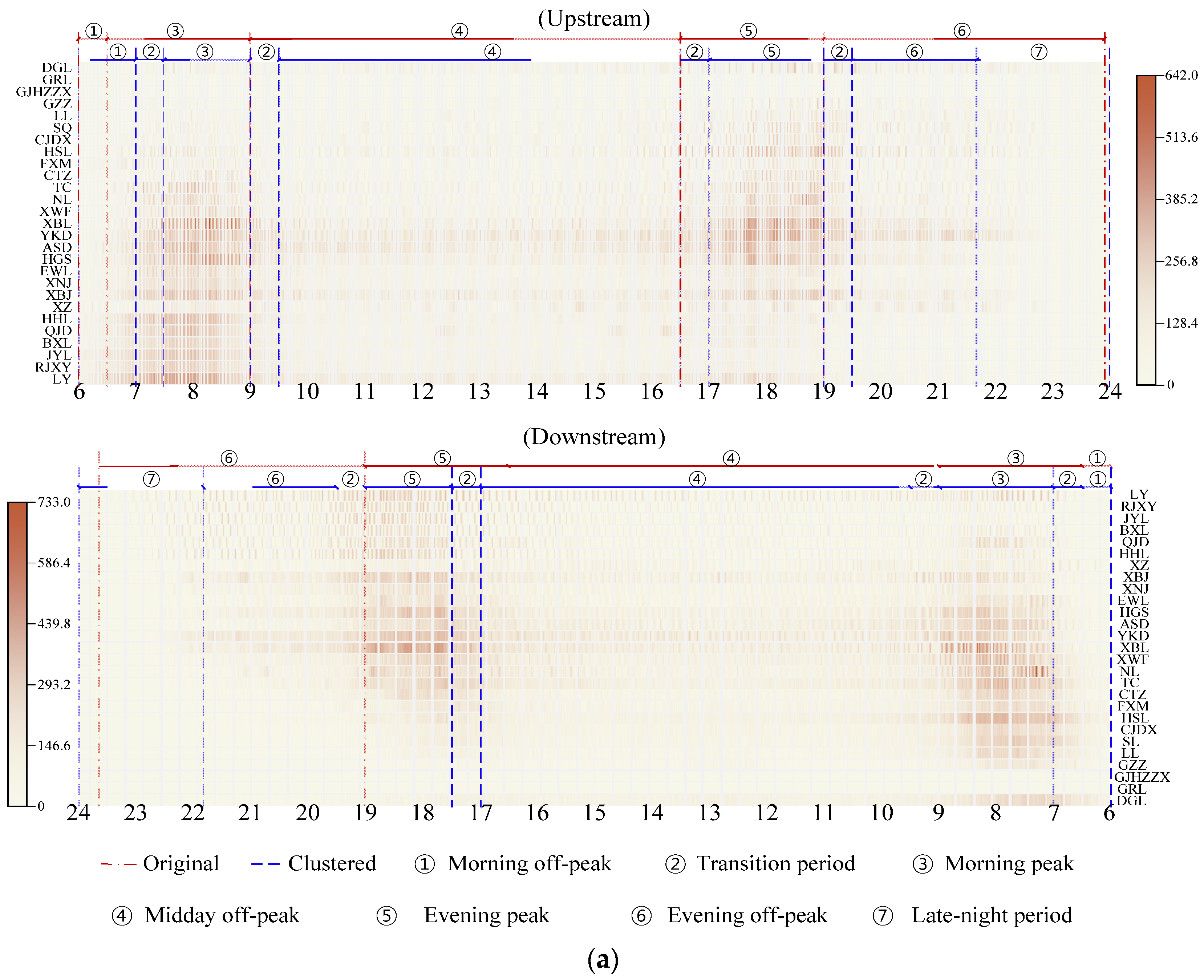

Figure 9 illustrates the resulting division of operating periods for different URT lines. As shown in Figure 9a, the upstream direction of Line 1 is divided into 13 operating periods. Compared with the existing scheme, the proposed segmentation further refines the major intervals, namely the morning peak (06:30–09:00), midday off-peak (09:00–16:30), evening peak (16:30–19:00), and late off-peak (19:00–23:59), capturing subtle variations in passenger flow patterns within each period. The downstream direction is divided into 12 periods, also reflecting diverse passenger flow characteristics. In the case of Line 2, although both upstream and downstream directions are divided into 11 operating periods, there are noticeable differences in the temporal coverage of each period, as shown in Figure 9b. Overall, the newly defined operating periods for both lines exhibit clear asymmetry between directions, which contrasts with the previously established symmetric structure.

In summary, this study achieves a precise division of urban rail transit operation periods through a two-stage approach: SOM pre-clustering followed by K-means cluster refinement. The SOM, leveraging its strengths in high-dimensional data processing, smooths noise in the original multi-dimensional passenger flow data, reduces its dimensionality for visualization, and provides the weight vectors from which the dissimilarity matrix is constructed, thereby laying a structural foundation for accurate period segmentation. However, the pre-clustering results of SOM are constrained by local topological structures, which may lead to cluster fragmentation. To address this, K-means utilizes the initial centroids provided by the small-scale SOM to stably aggregate the pre-clustering results into an operation period division scheme that balances feature accuracy and operational feasibility. In Section 5.3, the SOM–K-means method is compared with other clustering algorithms in terms of clustering performance and stability, further highlighting the applicability and effectiveness of this hybrid clustering framework in the division of urban rail transit operation periods.

5.2.5. Asymmetry in the Operating Periods

Based on the clustering results presented in this study, the initially derived division scheme can be further adjusted to enhance its applicability in practice. Table 2 and Table 3 show a comparison of the adjusted division schemes for each line direction with the original scheme.

Compared with the original scheme, the adjusted operating periods exhibit pronounced asymmetry, both across different lines and between opposite directions of the same line. Specifically, on Line 1, the morning peak in the upstream direction begins 30 min later than in the downstream direction, though both end at 09:00. The evening peak in the upstream direction starts 30 min earlier, while both directions conclude simultaneously at 19:00. On Line 2, differences between directions are mainly observed during the morning peak: the upstream peak runs from 07:30 to 08:30, whereas the downstream peak runs from 07:00 to 09:00, twice as long.

The observed asymmetry in operating periods arises from the uneven distribution of passenger flows, highlighting the necessity of analyzing operating period divisions based on actual passenger flow patterns. Figure 10 intuitively illustrates the relationship between the divided operating periods and the spatiotemporal distribution of passenger flow by overlaying the division results of each line on the corresponding passenger flow heatmap. The passenger flow at each station and time interval represents the total number of entries and exits over five consecutive working days. Overall, passenger flow on all lines exhibits a typical bimodal pattern. However, significant differences in flow intensity are observed within the same time intervals, and the peak-hour demand centers differ between the two directions. For instance, Line 1 displays a distinct tidal pattern during both morning and evening peaks, with peak demand occurring at different times for each direction. In contrast, Line 2 shows greater asymmetry in passenger flow patterns. During the morning peak, the upstream direction experiences a more concentrated demand, with peak passenger volume lasting only one hour, yet the total flow is noticeably higher than that of the downstream direction. This directional asymmetry highlights the need for differentiated operating period divisions.

The clustering-based period boundaries (blue dashed line) align closely with these observed variations. Taking Line 1 as an example, the morning off-peak in the up direction is extended to 06:00–07:00, corresponding to the relatively low passenger volume during this time. Subsequently, the period from 07:01 to 07:30 serves as a transition period, during which passenger flow rapidly increases from a low to a high level. The morning peak period (07:31–09:00) maintains a consistently high passenger volume, followed by a transition stage (09:01–09:30) when passenger demand gradually declines. The subsequent off-peak period (09:31–16:30) remains stable at a low level. From 16:30 to 17:00, the system enters a transition from the midday off-peak to the evening peak, during which passenger flow rises sharply. The evening peak (17:01–19:00) lasts for two hours with a high passenger volume, followed by a short decline between 19:01 and 19:30. The evening off-peak period (19:31–21:40) then follows, with passenger flow remaining low and stable at levels similar to those of the midday off-peak period. During the late-night period, passenger demand further decreases until it approaches zero.

In contrast, the morning off-peak period in the down direction lasts only 30 min (06:00–06:30), after which passenger volume increases rapidly. The subsequent transition stage (06:31–07:00) also lasts for half an hour, leading to the morning peak occurring approximately 30 min earlier than in the up direction and lasting for two hours (07:01–09:00). During the evening, the peak in the down direction (17:31–19:00) is delayed by about 30 min compared with the up direction (17:01–19:00), indicating that high-density passenger flows are more concentrated in the later period. After the short transition phase (19:01–19:30), passenger demand gradually decreases to a low level, marking the start of the evening off-peak. The down-direction evening off-peak is about 10 min longer than that of the up direction, suggesting that late-night passenger patterns are relatively consistent between the two directions.

Overall, the differences in clustering results between the two directions are mainly concentrated in the morning and evening peaks. The up direction exhibits a more concentrated morning travel pattern, resulting in a shorter peak duration, while the down direction demonstrates a more concentrated evening travel demand. In contrast, the original symmetric scheme (red dashed line) adopts identical operation period divisions in both directions, with morning and evening peaks each lasting 2.5 h (06:31–09:00 and 16:30–19:00), thereby failing to accurately reflect the differences in passenger flow.

These results demonstrate that the proposed clustering-based asymmetric division effectively captures inter-period variations in passenger demand while maintaining strong internal consistency within each period. It further offers valuable implications for operational planning. Specifically, the train departure frequency and the number of operating trains can be flexibly adjusted according to the directional distribution of passenger demand, thereby achieving a precise match between service capacity and travel demand and enhancing the utilization efficiency of transport resources. Furthermore, during periods of significant passenger flow fluctuations (such as transition periods and late at night), train departure intervals can be dynamically adjusted to further optimize capacity allocation and increase train load factors. Overall, this demand-based train operation organization strategy helps promote the refinement and efficiency of urban rail transit operation management and provides a useful reference for building an intelligent dispatching system.

5.3. Evaluation of Clustering Performance and Stability

As previously mentioned, this study adopts the Silhouette Coefficient (SC) as the primary clustering quality metric to evaluate the effectiveness and stability of the proposed method. The SC measures the aggregation of samples within the same cluster and their separation from samples in other clusters, thereby capturing both intra-cluster compactness and inter-cluster distinctiveness. A higher SC value indicates a tighter and more clearly defined clustering structure, which corresponds to superior clustering performance. Owing to its computational efficiency and strong interpretability, SC has been widely applied in unsupervised learning and clustering validation tasks [41,42,43].
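For reference, the SC can be computed as in the following sketch. It assumes the standard definition, in which the score of a sample is (b − a) / max(a, b), with a the mean distance to the other members of its own cluster and b the smallest mean distance to the members of any other cluster; singleton clusters are given a score of 0. The function name and the toy data are hypothetical.

```python
import math


def silhouette_coefficient(data, labels):
    """Mean silhouette score over all samples (requires at least 2 clusters)."""
    clusters = {}
    for idx, lab in enumerate(labels):
        clusters.setdefault(lab, []).append(idx)
    scores = []
    for i, lab in enumerate(labels):
        own = clusters[lab]
        if len(own) == 1:
            scores.append(0.0)  # convention for singleton clusters
            continue
        # a: mean distance to the other members of the same cluster
        a = sum(math.dist(data[i], data[j]) for j in own if j != i) / (len(own) - 1)
        # b: smallest mean distance to the members of another cluster
        b = min(sum(math.dist(data[i], data[j]) for j in idxs) / len(idxs)
                for other, idxs in clusters.items() if other != lab)
        scores.append((b - a) / max(a, b))
    return sum(scores) / len(scores)


# Hypothetical one-feature samples forming two obvious groups.
data = [[0.0], [2.0], [10.0], [12.0]]
sc_separated = silhouette_coefficient(data, [0, 0, 1, 1])
sc_mixed = silhouette_coefficient(data, [0, 1, 0, 1])
print(sc_separated, sc_mixed)
```

In this toy case the separated labelling yields a clearly positive SC, whereas the mixed labelling yields a negative one, which corresponds to samples lying closer to another cluster than to their own.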

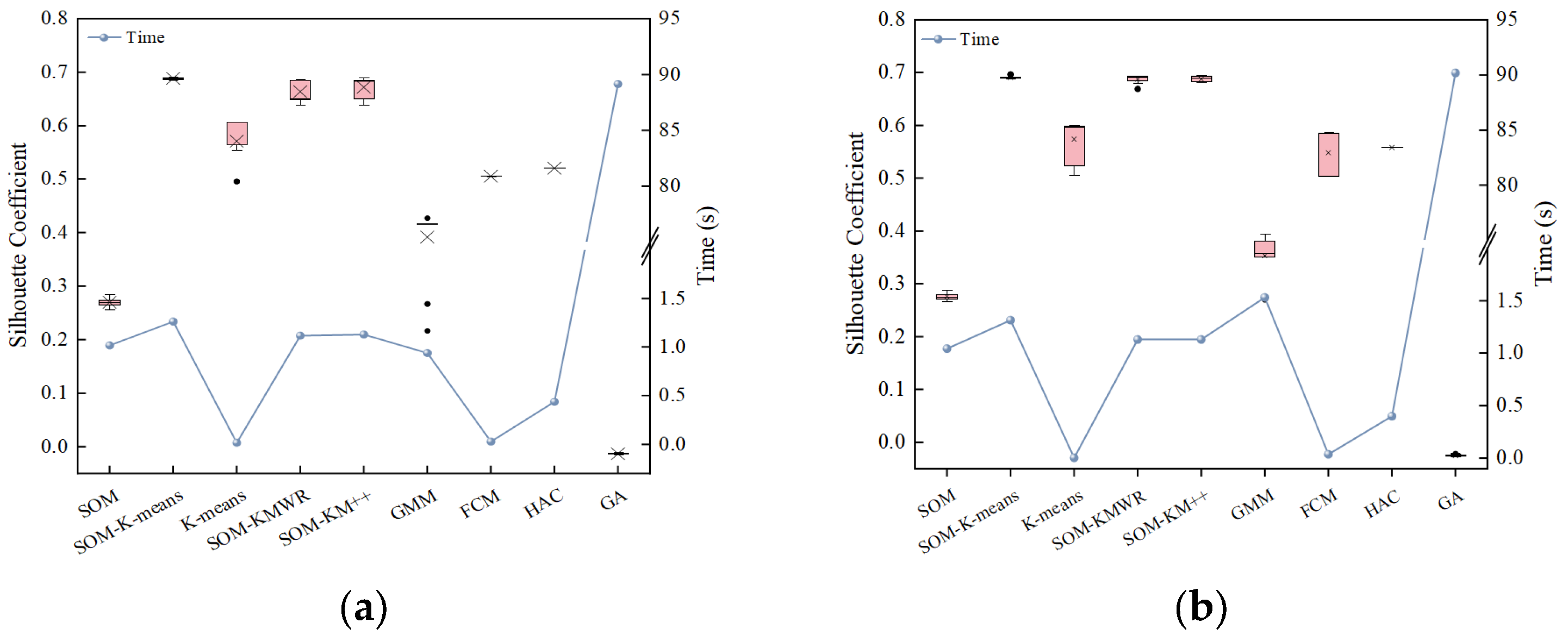

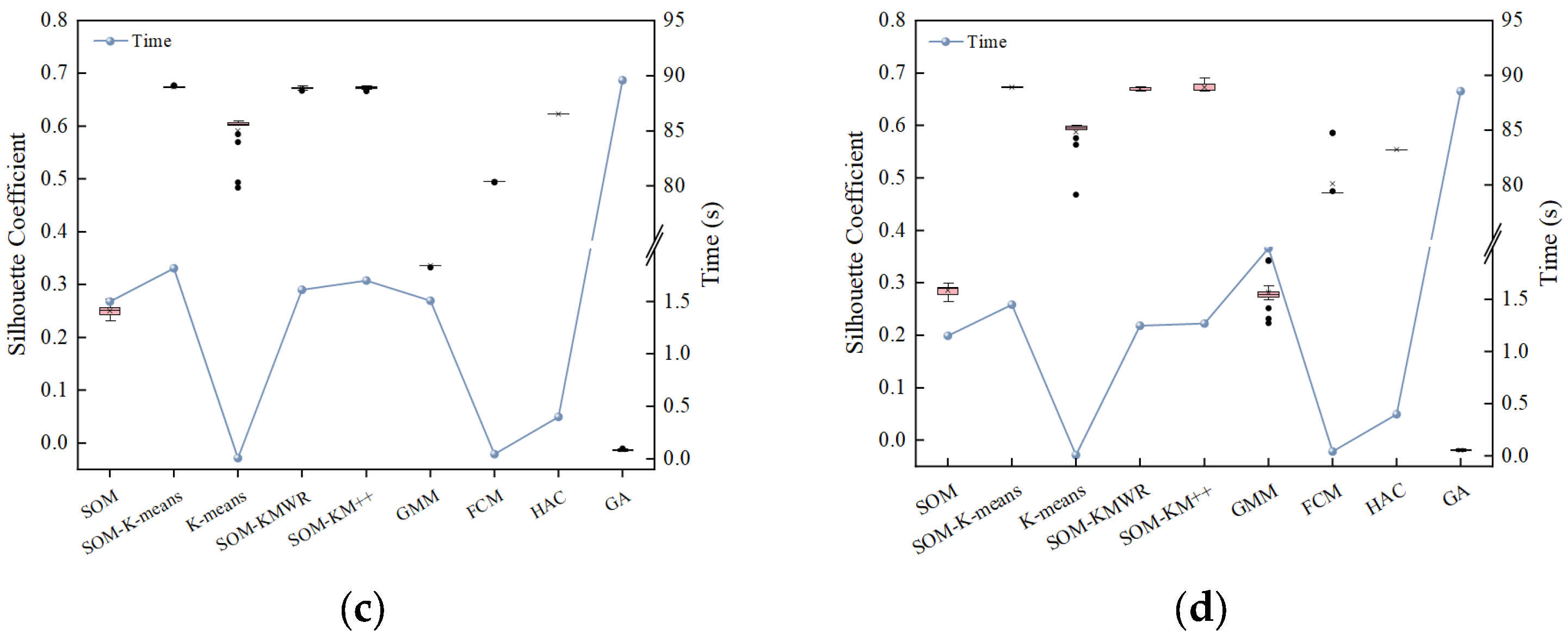

Figure 11 illustrates the clustering performance of multiple algorithms, including SOM–K-means, SOM, K-means, SOM–K-means with randomly initialized cluster centers (SOM–KMWR), SOM–K-means++ (SOM–KM++), Gaussian Mixture Model (GMM), Fuzzy C-Means (FCM), Hierarchical Agglomerative Clustering (HAC) and Genetic Algorithm (GA). Each algorithm was independently executed 20 times, with 100 iterations per run. The SC values of the clustering results and the corresponding average computation time were recorded.

As shown in Figure 11, SOM–K-means consistently achieves high SC values across all four line directions, demonstrating its excellent clustering capability for operational time period segmentation. SOM–KMWR and SOM–KM++ achieve SC values close to those of SOM–K-means, whereas SOM alone exhibits relatively poor performance, and K-means shows moderate performance, falling between the other algorithms. These results indicate that combining SOM with K-means substantially enhances clustering performance.

Table 4 summarizes the mean and maximum SC values for each method, and the quantitative results corroborate these findings. Across all four scenarios, the mean and maximum SC values produced by SOM–K-means are very close to those of SOM–KMWR and SOM–KM++, with differences within 4%. However, the improvement over other algorithms is considerable, with the vast majority of cases exceeding 10%. Notably, in the downstream direction of Line 2, SOM–KM++ slightly surpasses SOM–K-means in maximum SC value by 2.43%. The GA consistently exhibits the poorest performance across all experimental scenarios, with its maximum SC values remaining negative, indicating that the algorithm was trapped in local optima. According to the definition of SC, a value closer to 1 indicates that each sample is more accurately assigned to its corresponding cluster, suggesting that the passenger flow demand patterns during different periods can be more precisely aligned with their respective time intervals. A scheduling plan developed based on such high-precision clustering can achieve a high degree of correspondence with passenger travel demand, thereby improving system efficiency while ensuring service quality. Therefore, to guarantee the specificity and effectiveness of the scheduling strategy, clustering results with higher SC values should be prioritized as the basis for period division. In contrast, clustering results with lower SC values are less likely to yield reasonable operational period segmentation schemes.

Regarding computational time, all algorithms except GA complete 100 iterations within 2 s, with K-means, FCM, and HAC requiring less than 1 s. For the four SOM-based algorithms, runtime is primarily influenced by the number of neurons, showing almost synchronous variation across the four scenarios. GMM, however, exhibits some variability in runtime. GA consumed substantially more computational resources, with runtimes of approximately 90 s in all scenarios, which was markedly higher than those of the other algorithms.

In terms of clustering stability, SOM–K-means, HAC and GA show minimal variation in SC values across 20 independent trials, reflecting their robustness and consistent performance. Because they remain sensitive to the initial cluster centers, SOM–KMWR and SOM–KM++ still exhibit some stochasticity. This suggests that initializing K-means with SOM-generated centers effectively mitigates the impact of initialization. By contrast, K-means, GMM, and FCM display larger variability and frequent outliers, reflecting their sensitivity to initialization as well as to the dimensionality and noise inherent in the original samples.

In summary, the SOM–K-means algorithm not only enhances clustering precision but also significantly improves result stability, thereby demonstrating both its effectiveness and adaptability in the context of operating period division for URT.

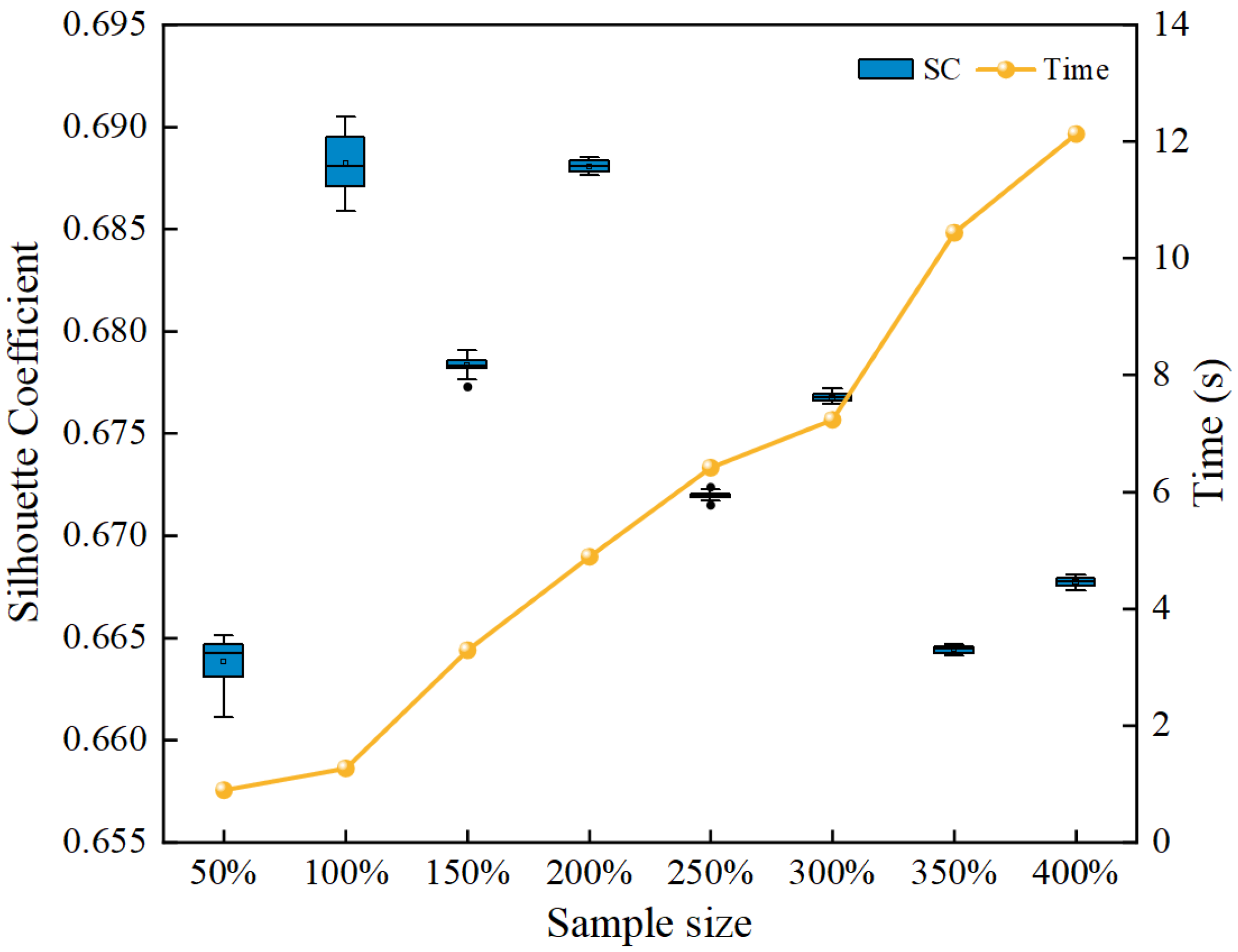

5.4. Sensitivity Analysis of Clustering Performance and Stability to Sample Size Variations

To evaluate the robustness of the proposed SOM–K-means algorithm, experiments were conducted on the upstream direction of Line 1 by varying the sample size to assess the algorithm’s sensitivity to changes in input data volume. The number of neurons was determined using an empirical formula, set as five times the square root of the sample size [26]. For each sample size, the algorithm was independently executed 20 times, and the silhouette coefficient along with the mean computation time were recorded, as shown in Figure 12.
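The empirical rule for the number of neurons is straightforward to evaluate, as the short sketch below shows. Rounding to the nearest integer is an assumption of this sketch, and the sample sizes are hypothetical values chosen for easy checking rather than those used in the experiments.

```python
import math


def neuron_count(sample_size):
    """Empirical rule: about five times the square root of the sample size."""
    return round(5 * math.sqrt(sample_size))  # rounding is an assumption


# Hypothetical sample sizes.
for n in (400, 900, 1600):
    print(n, neuron_count(n))
```

Because the neuron count grows with the square root of the sample size, quadrupling the data only doubles the size of the map.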

The results indicate that the algorithm achieves consistently high clustering accuracy across different data scales, with only minor fluctuations in silhouette coefficient values (within 0.03), demonstrating robust clustering performance. The slight variations observed over multiple runs further confirm the algorithm’s stability. Additionally, computation time increases approximately linearly with sample size, with the time increment roughly 1.5 times that of the corresponding increase in sample size, indicating low computational complexity and favorable scalability. These findings suggest that SOM–K-means is applicable to clustering datasets of varying sizes.

5.5. Practical Implications

In practical operations, URT operators can utilize the division of operating periods after clustering to re-optimize train headways in line with different operational objectives. Taking the upstream direction of Line 1 as an illustrative example, two alternative headway schemes were simulated to evaluate passenger waiting times and train operations, as summarized in Table 5. Here, “the last time in the period” refers to the departure time of the last train.

In Scheme 1, the original headways are retained during off-peak hours, while train frequency is increased during morning and evening peak periods to better accommodate passenger demand. Transitional periods adopt the mean value between peak and off-peak headways, whereas headways are moderately extended during late-night operations when passenger demand decreases. The results indicate that the average passenger waiting time remains nearly unchanged, with only a slight increase of 0.38 s, while the daily end-of-service time is advanced by 11 min. This earlier termination of service is considered favorable by operators, as it not only reduces operating costs but also facilitates subsequent maintenance activities after service hours.

Scheme 2 builds upon Scheme 1 by slightly extending peak-period headways by approximately 10 s, while keeping all other settings identical. This adjustment results in a modest increase in average passenger waiting time (about 2.16%), but enables the elimination of one train operation, thereby achieving direct cost savings for the operator.

Adjusting train headways based on operational objectives is inherently a train timetable optimization problem [44], which requires balancing passenger demand with operational constraints, such as safety requirements and equipment utilization limits. This study provides decision support for headway adjustments from the perspective of passenger demand. Taking the morning off-peak to peak period as an example: during the early off-peak phase, passenger flow is generally low but increases rapidly; under operational constraints, headways can be moderately extended to make full use of available capacity. In the transitional phase, passenger flow is high and grows quickly, necessitating a gradual reduction in headways to increase train deployment and accommodate the rapidly rising demand. During the peak phase, passenger flow remains high with relatively minor fluctuations, warranting dense and evenly spaced headways to minimize passenger waiting time and enhance operational efficiency.
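The trade-off between headway and waiting time can be illustrated with a back-of-the-envelope sketch. It is not the simulation used to produce Table 5: it assumes that passengers arrive uniformly within each period, so that the mean wait equals half the headway, and it ignores capacity limits and passengers left behind. The function name and all passenger counts and headways are hypothetical.

```python
def average_wait_seconds(periods):
    """Demand-weighted mean waiting time.

    periods: list of (passengers, headway_seconds) pairs, one per operating
    period. Assumes uniform arrivals, so the mean wait is headway / 2.
    """
    total_passengers = sum(p for p, _ in periods)
    return sum(p * h / 2.0 for p, h in periods) / total_passengers


# Hypothetical day with one off-peak and one peak period.
base = [(1000, 600), (3000, 300)]
# Same demand, with the peak headway extended by 10 s.
extended = [(1000, 600), (3000, 310)]

w_base = average_wait_seconds(base)
w_ext = average_wait_seconds(extended)
print(w_base, w_ext, (w_ext - w_base) / w_base)
```

Under these assumptions, a small extension of the peak headway raises the average wait only slightly, because the increase is spread over half the headway and weighted by the share of passengers travelling in that period.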

6. Conclusions

This study develops a data-driven framework integrating Self-Organizing Maps (SOM) and the K-means algorithm for clustering-based division of operating periods in urban rail transit (URT), aiming to overcome the subjectivity and limitations associated with manually predefined time intervals. Clustering samples are constructed from passenger travel data recorded by the AFC system, with feature selection encompassing total volume, microscopic variations, and macroscopic distribution of passenger flow, thereby providing a comprehensive characterization of temporal variations in passenger demand.

Empirical analysis of Lines 1 and 2 of the Tianjin URT demonstrates the effectiveness of the proposed method. The SOM–K-means method demonstrates remarkable overall performance in clustering multi-dimensional time samples driven by passenger flow data. Compared to the existing operating period division scheme, the new scheme effectively identifies transitional phases between off-peak and peak periods and distinguishes the late-night operation period from the evening off-peak hours, thereby producing a more precise division of operating periods. We can apply these results to enhance the efficiency of transportation resource utilization and support fine-grained train scheduling. Moreover, the method successfully captures the uneven distribution of passenger flows across different lines and directions, which is reflected in the pronounced asymmetry observed in the resulting operating period schemes. Accordingly, URT can implement differentiated operational management for different lines. The results demonstrate that data-driven approaches can enhance the rationality and efficiency of operational decision-making in URT, effectively overcoming the limitations of manual methods. In addition, the proposed framework is extensible and can be applied to other transit systems to address similar problems.

This study has several limitations. The observed data are limited to weekday passenger flows. Special events, such as extreme weather or major public activities, may induce abrupt changes in passenger demand patterns, requiring further validation and adaptation of the proposed method. The selection of clustering features also warrants more in-depth analysis. Since this study is based solely on a single city and its metro network, the proposed framework may encounter new challenges when applied to other transit systems or cities. For instance, passenger flow data from urban bus systems often lack information on alighting flows. In other cities, the granularity of AFC data in URT may not be consistent with that used in this study, potentially limiting the direct transferability of the model. In addition, the practical applicability of the results is constrained by the availability of the data, preventing a more comprehensive exploration.

Future research will primarily focus on the following aspects: (1) Enhancing the generalizability of the proposed framework, whose current limits are a major obstacle to practical implementation, by incorporating instance-based transfer learning [45,46], thereby extending its applicability to other cities or transit networks, improving the model’s adaptability to diverse passenger flow patterns, and providing more targeted operating period division schemes. (2) Employing deep learning techniques to achieve end-to-end clustering [47], optimizing the automatic extraction and selection of passenger flow features, and improving the accuracy and reliability of clustering analysis. (3) Integrating optimization techniques to extend the present findings, thereby enabling the design of differentiated strategies for distinct operating periods and improving the efficiency and quality of transit services. (4) Broadening the research scope to explore coordinated operating period division across multiple modes of transportation, with the aim of achieving seamless multi-modal integration.