3.1. Dynamics Modeling of the Meat Cut’s Center of Gravity

This paper investigates how the center of gravity (CoG) of a meat cut evolves during grasping. A dynamics model is developed to characterize the spatiotemporal behavior of the CoG and to relate its position to the grasping time, which provides the theoretical basis for the subsequent design of control strategies. The model disregards friction between the gripper and the meat surface. This assumption follows from the gripping process and the structure of the experimental apparatus: the gripper uses a wrap-around design with flexible silicone pads, which ensures uniform contact and minimal slippage. Under a typical friction coefficient of 0.05–0.1, the resulting friction torque accounts for less than 5% of the gravitational torque, so its effect on the overall CoG motion is negligible.

To begin, the geometry of the meat cut is discretized into a Finite Element Method (FEM) mesh. To enable efficient dynamic modeling of the deformable meat cut, the continuous body is approximated through a finite-volume discretization scheme. The object domain $V$ is partitioned into a finite set of small elements, each occupying a subvolume $V_i \subset V$. For each element $i$, the lumped mass is computed as follows:

$$m_i = \int_{V_i} \rho(\mathbf{x})\,\mathrm{d}V,$$

where $\rho(\mathbf{x})$ represents the local density distribution within the meat tissue. The motion of each discrete element follows the local force balance equation:

$$m_i\,\ddot{\mathbf{r}}_i = \mathbf{F}_i^{\text{ext}} + \mathbf{F}_i^{\text{int}},$$

where $\mathbf{r}_i$ denotes the position vector of element $i$, $\mathbf{F}_i^{\text{ext}}$ is the external force (including gravity and gripping contact), and $\mathbf{F}_i^{\text{int}}$ is the internal elastic restoring force contributed by neighboring elements. In practice, $\mathbf{F}_i^{\text{int}}$ can be approximated by a linearized elastic coupling model:

$$\mathbf{F}_i^{\text{int}} = -\sum_{j \in \mathcal{N}_i} k_{ij}\,(\mathbf{r}_i - \mathbf{r}_j),$$

where $\mathcal{N}_i$ represents the neighbor index set of element $i$ and $k_{ij}$ is the effective stiffness coefficient describing local inter-element elasticity.
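The lumped-mass and elastic-coupling steps can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function names, the single shared stiffness, and all numerical values are hypothetical. It also assumes that the coupling acts on the change in relative position with respect to the rest configuration, so that the undeformed body is force-free.

```python
def lumped_masses(densities, subvolumes):
    """Lumped mass per element, taking the density as constant over each subvolume."""
    return [rho * vol for rho, vol in zip(densities, subvolumes)]


def internal_forces(positions, rest_positions, neighbors, stiffness):
    """Linear elastic coupling force on each element from its neighbors.

    Assumption: the force depends on the change in relative position with
    respect to the rest configuration, so the rest shape carries no force.
    """
    forces = []
    for i, (r_i, r0_i) in enumerate(zip(positions, rest_positions)):
        force = [0.0, 0.0, 0.0]
        for j in neighbors[i]:
            for axis in range(3):
                stretch = (r_i[axis] - positions[j][axis]) - (
                    r0_i[axis] - rest_positions[j][axis]
                )
                force[axis] -= stiffness * stretch
        forces.append(force)
    return forces


# Three elements in a row, 1 cm apart; the middle one is pushed 2 mm along x.
rest = [(0.00, 0.0, 0.0), (0.01, 0.0, 0.0), (0.02, 0.0, 0.0)]
deformed = [(0.00, 0.0, 0.0), (0.012, 0.0, 0.0), (0.02, 0.0, 0.0)]
neighbors = {0: [1], 1: [0, 2], 2: [1]}

masses = lumped_masses([1050.0] * 3, [1e-6] * 3)  # kg, hypothetical tissue density
forces = internal_forces(deformed, rest, neighbors, stiffness=200.0)  # N/m, hypothetical
```

In this toy chain the displaced middle element is pulled back by both neighbors, and the internal forces sum to zero, as expected for purely internal interactions.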

This discretization transforms the continuous soft body into a finite-dimensional dynamical system that retains the essential characteristics of mass redistribution and deformation while remaining computationally tractable for real-time CoG trajectory prediction. The displacement field of each discrete element is represented in three-dimensional space as

$$\mathbf{u}(\mathbf{x}, t_k) = \big(u_x(\mathbf{x}, t_k),\; u_y(\mathbf{x}, t_k),\; u_z(\mathbf{x}, t_k)\big)^{\mathsf T},$$

where $\mathbf{x} = (x, y, z)^{\mathsf T}$ denotes the position coordinate of a discrete element in three-dimensional space and $\mathbf{u}(\mathbf{x}, t_k)$ represents the displacement of that element at discrete time $t_k$, consisting of displacement components $u_x$, $u_y$, and $u_z$. The position vector of the center of gravity $\mathbf{r}_c$ is defined as the mass-weighted average of the positions of all discrete elements of the meat cut. Assuming that the density $\rho$ of the meat cut is uniformly distributed within the volume $V$, the center of gravity can be expressed as

$$\mathbf{r}_c = \frac{1}{M}\int_V \rho\,\mathbf{x}\,\mathrm{d}V,$$

where $M$ is the total mass of the object within the volume domain $V$ and $\mathrm{d}V$ denotes the unit volume of a discrete element.

Because the density may vary among the discrete elements of the meat cut, assuming a perfectly uniform density would introduce errors into the CoG shift model. To mitigate such errors, this paper defines the density of a discrete element as follows:

$$\rho(\mathbf{x}) = \rho_0 + \delta\rho(\mathbf{x}),$$

where $\rho_0$ represents the average density of the meat cut and $\delta\rho(\mathbf{x})$ is the perturbation term for the density of a discrete element, used to correct the uniform-density assumption. Taken over all elements, $\delta\rho(\mathbf{x})$ reflects the non-uniformity of the density distribution. To prevent the perturbation term from introducing new errors, $\delta\rho(\mathbf{x})$ is constrained such that its total contribution within the volume domain $V$ is zero, ensuring that the total mass of the meat cut remains unchanged, that is,

$$\int_V \delta\rho(\mathbf{x})\,\mathrm{d}V = 0.$$
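As a small illustration of the zero-total-contribution constraint, the sketch below splits per-element densities into a volume-weighted mean and a perturbation. The function name and the density values are hypothetical, and taking the mean as total mass divided by total volume is an assumption that makes the constraint hold by construction.

```python
def split_density(densities, subvolumes):
    """Split element densities into a mean value and zero-net perturbations.

    Assumption: the average density is total mass / total volume, which
    forces the volume-weighted perturbations to sum to zero.
    """
    total_volume = sum(subvolumes)
    total_mass = sum(rho * vol for rho, vol in zip(densities, subvolumes))
    mean_density = total_mass / total_volume
    perturbations = [rho - mean_density for rho in densities]
    return mean_density, perturbations


# Hypothetical cut: one fatty element (larger, lighter) and two lean elements.
densities = [900.0, 1060.0, 1100.0]  # kg/m^3
subvolumes = [2e-6, 1e-6, 1e-6]      # m^3

mean_density, perturbations = split_density(densities, subvolumes)
net_contribution = sum(d * v for d, v in zip(perturbations, subvolumes))
```

With these numbers the mean density is 990 kg/m^3, and the perturbations cancel when weighted by volume, so the total mass is the same with or without the correction term.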

During grasping and manipulation of the meat cut, deformation alters its mass distribution and therefore changes the relative position of the center of gravity. If the displacement field of the meat cut at a discrete time $t_k$ is given by $\mathbf{u}(\mathbf{x}, t_k)$, then, within the object's volume domain $V$, the resulting shift in the center of gravity is expressed as follows:

$$\Delta\mathbf{r}_c(t_k) = \frac{1}{M}\int_V \rho(\mathbf{x})\,\mathbf{u}(\mathbf{x}, t_k)\,\mathrm{d}V.$$
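In discrete form, the CoG shift reduces to the mass-weighted average of the element displacements. The following sketch, with hypothetical names and values, computes the shift directly and checks it against the difference between the CoG positions before and after deformation.

```python
def center_of_gravity(masses, positions):
    """Mass-weighted average of the element positions."""
    total_mass = sum(masses)
    return tuple(
        sum(m * p[axis] for m, p in zip(masses, positions)) / total_mass
        for axis in range(3)
    )


def cog_shift(masses, displacements):
    """Mass-weighted average of the element displacements."""
    total_mass = sum(masses)
    return tuple(
        sum(m * u[axis] for m, u in zip(masses, displacements)) / total_mass
        for axis in range(3)
    )


# Two elements (kg, m); values are illustrative only.
masses = [1.0, 3.0]
positions = [(0.0, 0.0, 0.0), (0.04, 0.0, 0.0)]
displacements = [(0.004, 0.0, 0.0), (0.0, 0.0, -0.002)]

shift = cog_shift(masses, displacements)
moved = [
    tuple(p + u for p, u in zip(pos, disp))
    for pos, disp in zip(positions, displacements)
]
cog_before = center_of_gravity(masses, positions)
cog_after = center_of_gravity(masses, moved)
```

Because the average is linear in the positions, `cog_after` minus `cog_before` agrees with `shift` up to rounding, which is a convenient sanity check for any discretized implementation.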

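The kinetic energy generated by the movement and deformation of the meat cut can be expressed as

$$T = \frac{1}{2} M\,\lVert\dot{\mathbf{r}}_c\rVert^2,$$

where $\dot{\mathbf{r}}_c$ denotes the rate of change of the CoG position $\mathbf{r}_c$ with respect to time, i.e., the velocity of the center of gravity. The degree of deformation of an object is typically characterized by the strain tensor. To describe the relationship between the external forces acting on the meat cut and its deformation, this study introduces the strain tensor $\varepsilon_{ij}$, defined as follows:

$$\varepsilon_{ij} = \frac{1}{2}\left(\frac{\partial u_i}{\partial x_j} + \frac{\partial u_j}{\partial x_i}\right).$$

According to Hooke's law, the stress tensor can be derived as follows:

$$\sigma_{ij} = \frac{E}{1+\nu}\left(\varepsilon_{ij} + \frac{\nu}{1-2\nu}\,\varepsilon_{kk}\,\delta_{ij}\right),$$

where the stress tensor $\sigma_{ij}$ describes the internal force distribution within the object, $E$ is Young's modulus, and $\nu$ is Poisson's ratio. The elastic potential energy of the meat cut can be expressed as the integral of the stress–strain relationship:

$$U_e = \frac{1}{2}\int_V \boldsymbol{\sigma} : \boldsymbol{\varepsilon}\,\mathrm{d}V,$$

where $:$ denotes the bilinear tensor product. After including the gravitational potential energy $U_g$, the total potential energy $U$ of the meat cut can be expressed as

$$U = U_e + U_g.$$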
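To make the stress–strain step concrete, the sketch below evaluates isotropic Hooke's law and the elastic energy density for a single element. It assumes small strains and a homogeneous isotropic material; the modulus and Poisson's ratio are hypothetical values chosen only for illustration, not measured properties of meat.

```python
def hooke_stress(strain, young, poisson):
    """Isotropic linear-elastic stress for a 3x3 small-strain tensor."""
    lame_lambda = young * poisson / ((1.0 + poisson) * (1.0 - 2.0 * poisson))
    shear = young / (2.0 * (1.0 + poisson))
    trace = strain[0][0] + strain[1][1] + strain[2][2]
    return [
        [
            2.0 * shear * strain[i][j] + (lame_lambda * trace if i == j else 0.0)
            for j in range(3)
        ]
        for i in range(3)
    ]


def energy_density(stress, strain):
    """Elastic energy per unit volume: half the double contraction of stress and strain."""
    return 0.5 * sum(stress[i][j] * strain[i][j] for i in range(3) for j in range(3))


# Uniaxial tension along x with free lateral contraction (hypothetical values).
young, poisson, stretch = 50e3, 0.45, 0.01  # Pa, dimensionless, dimensionless
strain = [
    [stretch, 0.0, 0.0],
    [0.0, -poisson * stretch, 0.0],
    [0.0, 0.0, -poisson * stretch],
]
stress = hooke_stress(strain, young, poisson)
density = energy_density(stress, strain)
```

For this uniaxial case the axial stress equals Young's modulus times the axial strain and the lateral stresses vanish, which is a quick consistency check on the constitutive relation. Summing `density` times the element volume over all elements approximates the elastic potential energy integral.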

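The Lagrangian of the system is then given by

$$L = T - U,$$

where $L$ represents the difference between the kinetic and potential energies of the system. Considering the CoG variation during the force-induced motion of the meat cut, the Lagrange equation of motion is expressed as

$$\frac{\mathrm{d}}{\mathrm{d}t}\left(\frac{\partial L}{\partial \dot{\mathbf{r}}_c}\right) - \frac{\partial L}{\partial \mathbf{r}_c} = \mathbf{F}_{\text{ext}},$$

where $\mathbf{F}_{\text{ext}}$ represents the contribution of the resultant external force to the CoG displacement.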

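The external forces acting on the CoG include both the grasping force and gravity, which can be formulated as

$$\mathbf{F}_{\text{ext}} = \mathbf{F}_{\text{grasp}} + M\mathbf{g},$$

where $\mathbf{F}_{\text{grasp}}$ denotes the resultant grasping force exerted by the $n$ gripper fingers and $\mathbf{g}$ is the gravitational acceleration. The resultant force on the discrete elements along the contact boundary $\Gamma_c$ can be expressed as

$$\mathbf{F}_{\text{grasp}} = \sum_{e \in \Gamma_c} \mathbf{f}_e,$$

with $\mathbf{f}_e$ representing the contact force on the $e$-th discrete element on the boundary. Substituting the Lagrangian $L$ and $\mathbf{F}_{\text{ext}}$ into the Lagrange equation yields

$$M\,\ddot{\mathbf{r}}_c + \frac{\partial U}{\partial \mathbf{r}_c} = \mathbf{F}_{\text{ext}}.$$

By introducing the discrete time $t_k$ into the above equation, the discretized results of the CoG position $\mathbf{r}_c(t_k)$, velocity $\dot{\mathbf{r}}_c(t_k)$, and acceleration $\ddot{\mathbf{r}}_c(t_k)$ are obtained as follows: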

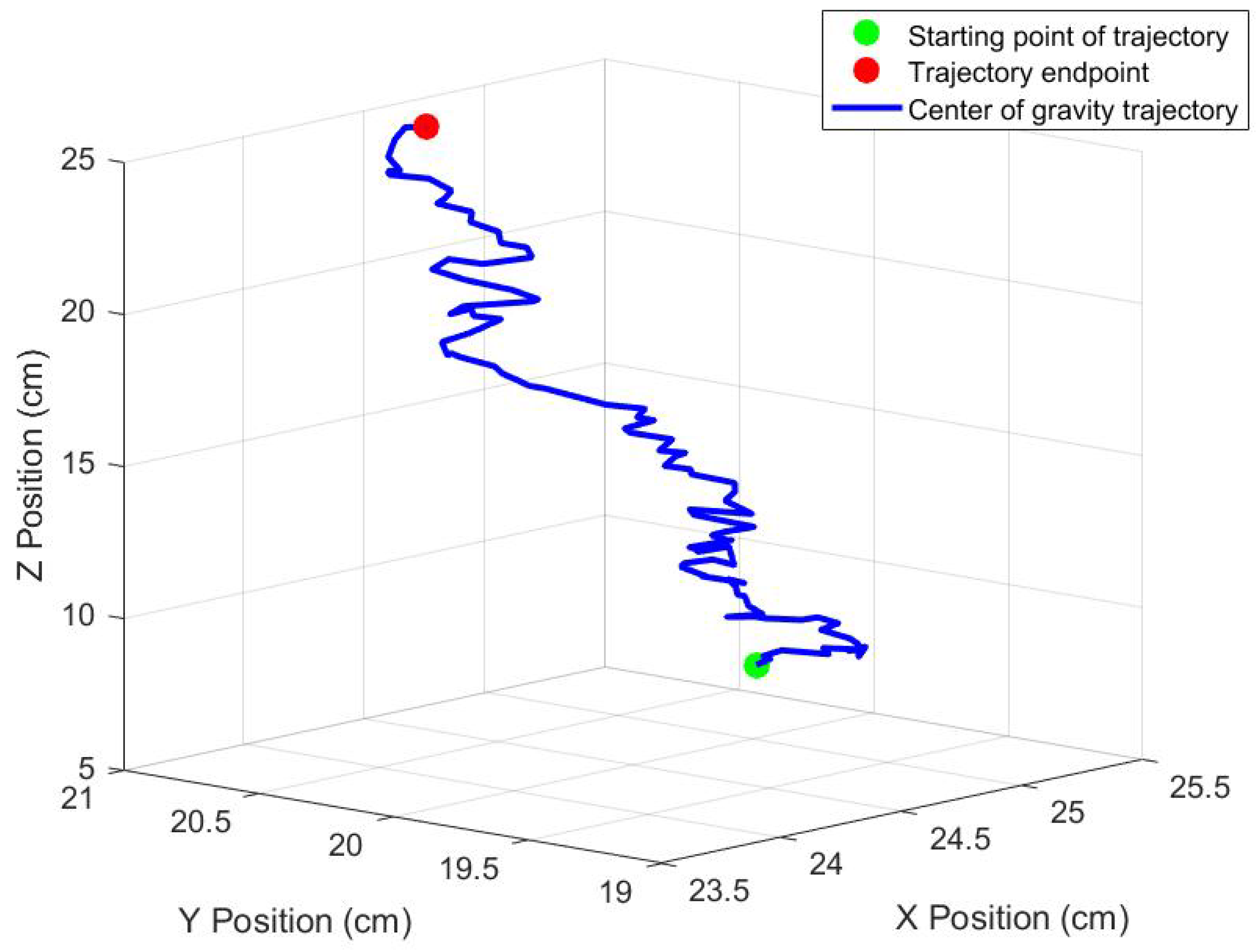

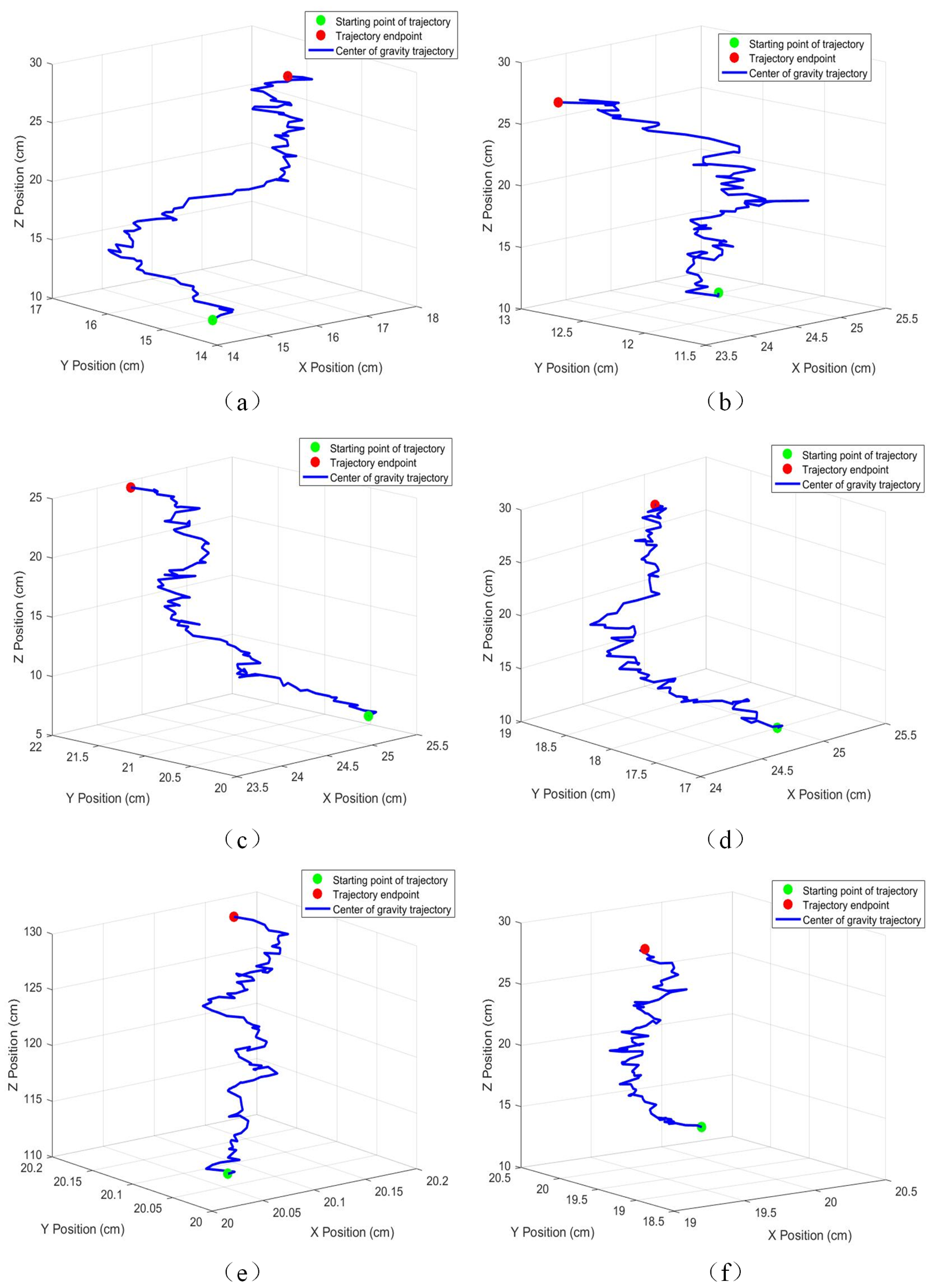

Consequently, the finite set describing the distribution of CoG positions during grasping is defined as $\{\mathbf{r}_c(t_k)\}$.

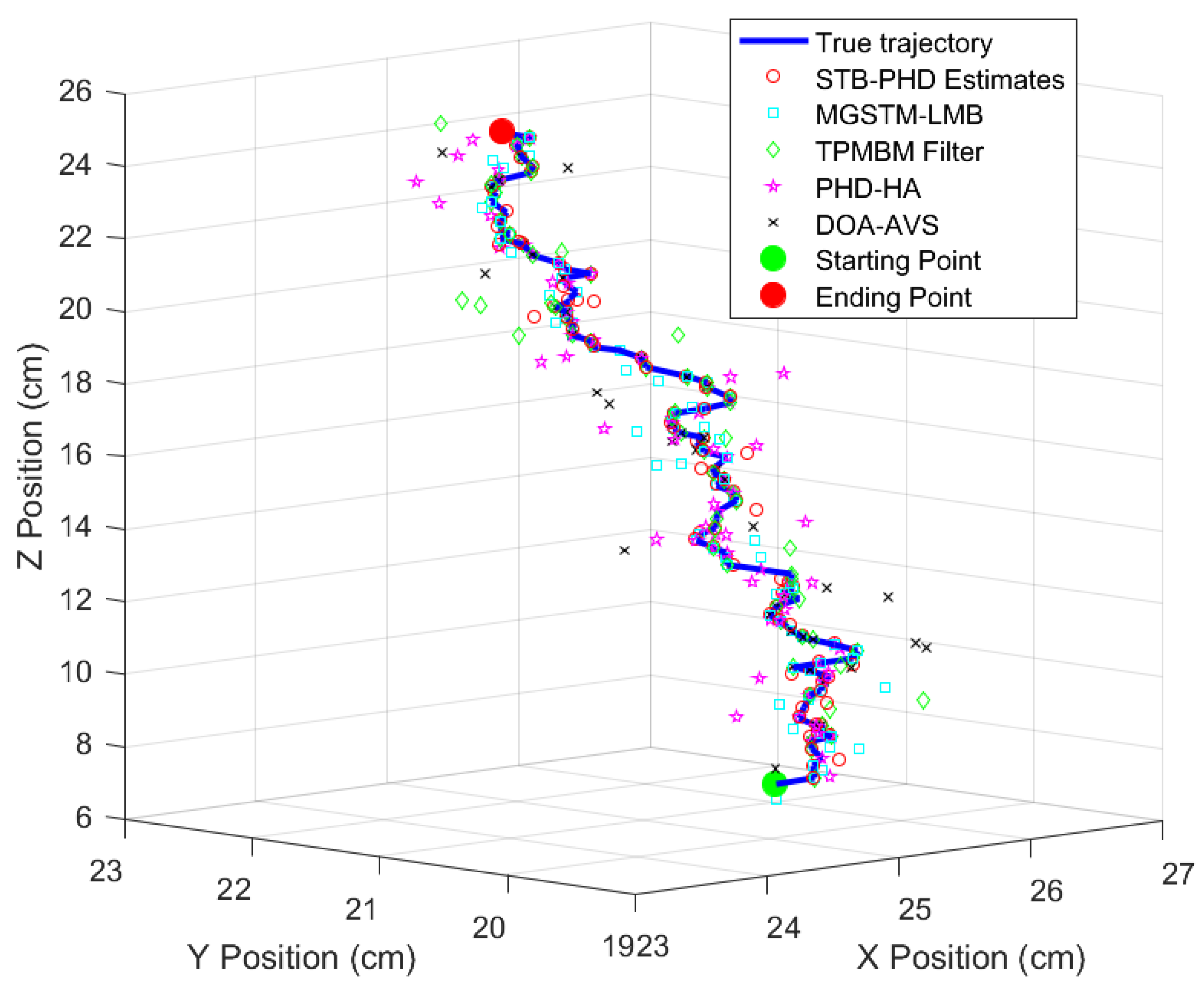
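The time discretization of the CoG equation of motion can be sketched as follows. This is only an illustration under stated assumptions: it uses a semi-implicit Euler step, which may differ from the scheme used in this paper, and the function name, mass, forces, and step size are hypothetical. Gravity is passed in as part of the external force, and the potential gradient argument is set to zero to keep the example minimal.

```python
def step_cog(position, velocity, mass, external_force, potential_gradient, dt):
    """One semi-implicit Euler step of M * a + dU/dr = F_ext (assumed scheme)."""
    acceleration = [
        (f - g) / mass for f, g in zip(external_force, potential_gradient)
    ]
    new_velocity = [v + a * dt for v, a in zip(velocity, acceleration)]
    new_position = [r + v * dt for r, v in zip(position, new_velocity)]
    return new_position, new_velocity, acceleration


mass = 1.2  # kg, hypothetical meat cut
gravity_force = (0.0, 0.0, -mass * 9.81)
grasp_force = (0.0, 0.0, mass * 9.81 + 0.6)  # lifts with a 0.6 N surplus
external = [a + b for a, b in zip(gravity_force, grasp_force)]

position, velocity = [0.0, 0.0, 0.0], [0.0, 0.0, 0.0]
trajectory = [tuple(position)]  # finite set of CoG positions over the grasp
for _ in range(100):
    position, velocity, acceleration = step_cog(
        position, velocity, mass, external, (0.0, 0.0, 0.0), dt=0.01
    )
    trajectory.append(tuple(position))
```

The list `trajectory` plays the role of the finite set of CoG positions collected at the discrete times. A different integrator can be substituted without changing the surrounding loop.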

3.2. STB-PHD

When the meat cuts are subjected to forces from the gripper, their original state of motion is altered, leading to random offsets in the coordinates of the center of gravity (CoG) within the established world coordinate system. To address this issue, this study establishes a CoG prediction model based on the theory of Random Finite Sets (RFS).

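The grasping and manipulation process of the meat cut is modeled as a stochastic nonlinear hybrid system. The target variable is the CoG coordinates $\mathbf{r}_c$, while the six-dimensional force data from the gripper, $\mathbf{u} \in \mathbb{R}^6$, is treated as the control input. At time $t$, the state space $\mathcal{X}$ contains $N_t$ target states from time 0 to $t$, denoted as $\mathbf{x}_{t,1}, \dots, \mathbf{x}_{t,N_t}$. The measurement space $\mathcal{Z}$ contains $M_t$ measurements, $\mathbf{z}_{t,1}, \dots, \mathbf{z}_{t,M_t}$. In this system, the target states, the measurements, and the cardinalities $N_t$ and $M_t$ are all treated as random variables, and the order of the elements carries no specific physical meaning. At time $t$, the target RFS and measurement RFS are respectively defined as

$$X_t = \{\mathbf{x}_{t,1}, \dots, \mathbf{x}_{t,N_t}\} \subset \mathcal{X}, \qquad Z_t = \{\mathbf{z}_{t,1}, \dots, \mathbf{z}_{t,M_t}\} \subset \mathcal{Z},$$

where the finite set $X_t$ represents the target states within the state space $\mathcal{X}$ and $Z_t$ represents the measurements in the observation space $\mathcal{Z}$.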

Based on the principles of set calculus, the Bayesian filter within the RFS framework enables prediction of the target state at time $t$. Assuming that the updated probability density of the target at time $t-1$ is $p_{t-1}(X_{t-1} \mid Z_{0:t-1})$, the predicted probability density at time $t$ can be expressed via the Chapman–Kolmogorov equation:

$$p_{t \mid t-1}(X_t \mid Z_{0:t-1}) = \int f_{t \mid t-1}(X_t \mid X_{t-1})\, p_{t-1}(X_{t-1} \mid Z_{0:t-1})\, \delta X_{t-1},$$

where $Z_{0:t-1}$ denotes the accumulated measurement RFS from time 0 to $t-1$. The function $f_{t \mid t-1}$ is the state transition function of the target RFS, which describes the temporal evolution of the target states. Specifically, it provides the probability density of the target state set $X_{t-1}$ at time $t-1$ evolving into the target state set $X_t$ at time $t$, expressed as $f_{t \mid t-1}(X_t \mid X_{t-1})$.
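The structure of the prediction step is easier to see in a heavily simplified setting. The sketch below replaces the set integral with a sum over a one-dimensional grid of candidate CoG positions for a single target, which is an assumption made purely for illustration and not the RFS formulation itself. The transition matrix and prior values are hypothetical.

```python
def predict(prior, transition):
    """Discrete Chapman-Kolmogorov step for a single target on a grid.

    transition[i][j] is the probability of moving from cell i to cell j,
    so each row sums to one.
    """
    cells = len(prior)
    return [
        sum(transition[i][j] * prior[i] for i in range(cells))
        for j in range(cells)
    ]


# Three candidate CoG cells along one axis (hypothetical numbers).
prior = [0.2, 0.5, 0.3]
transition = [
    [0.8, 0.2, 0.0],
    [0.1, 0.8, 0.1],
    [0.0, 0.2, 0.8],
]
predicted = predict(prior, transition)
```

Because every row of the transition matrix sums to one, the predicted values again sum to one, mirroring the fact that the Chapman–Kolmogorov equation maps a density to a density.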

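If the measurement RFS at time $t$ is $Z_t$, then the updated probability density at time $t$ can be obtained via the Bayesian recursive formula:

$$p_t(X_t \mid Z_{0:t}) = \frac{g_t(Z_t \mid X_t)\, p_{t \mid t-1}(X_t \mid Z_{0:t-1})}{\int g_t(Z_t \mid X)\, p_{t \mid t-1}(X \mid Z_{0:t-1})\, \delta X},$$

where the updated probability density $p_t(X_t \mid Z_{0:t})$ incorporates all historical constraints from the measurement sequence $Z_{0:t}$. The likelihood function $g_t$, which quantifies the match between state and measurement, is expressed for a state $\mathbf{x}$ and a measurement $\mathbf{z}$ as

$$g_t(\mathbf{z} \mid \mathbf{x}) \propto \exp\!\left(-\frac{1}{2}\,\big(\mathbf{z} - h(\mathbf{x})\big)^{\mathsf T} R^{-1} \big(\mathbf{z} - h(\mathbf{x})\big)\right),$$

where $R$ is the measurement noise covariance matrix and $h(\mathbf{x})$ is the predicted measurement corresponding to the target state $\mathbf{x}$. As such, the updated probability density $p_t(X_t \mid Z_{0:t})$ represents the posterior distribution of the target state set $X_t$. The individual elements $\mathbf{x}_{t,i}$ within $X_t$ represent the predicted CoG coordinates of the meat cut at the current time.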

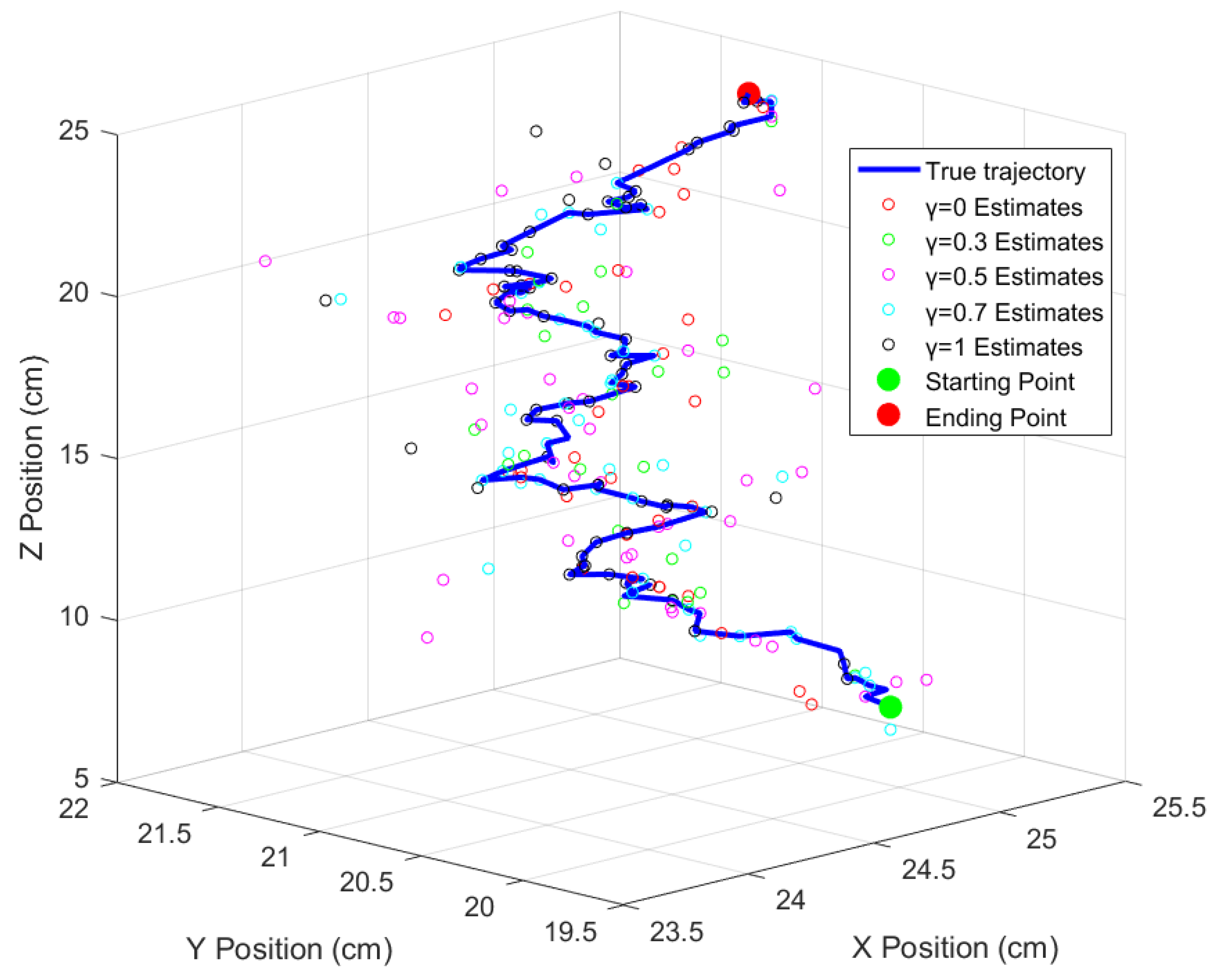

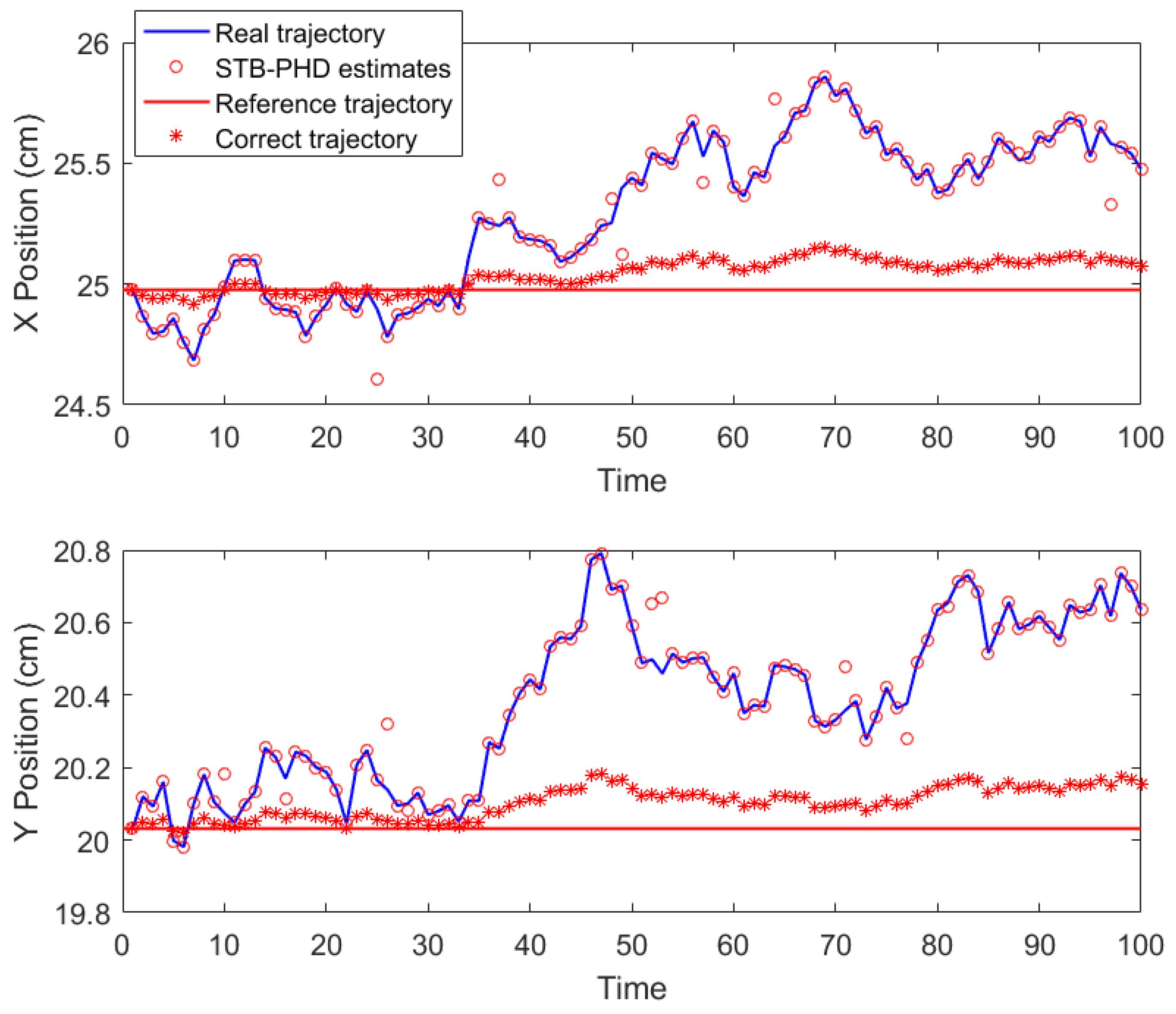
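The Bayesian update with a Gaussian likelihood can likewise be sketched on a one-dimensional grid for a single target. As before, this is a simplification of the set-based recursion: the measurement model is assumed to be the identity, the noise covariance reduces to a scalar variance, and all names and numbers are hypothetical.

```python
import math


def gaussian_likelihood(measurement, predicted_measurement, variance):
    """Unnormalized scalar Gaussian likelihood of a measurement."""
    residual = measurement - predicted_measurement
    return math.exp(-0.5 * residual * residual / variance)


def update(predicted, cells, measurement, variance):
    """Bayes update on a grid; the measurement model is assumed to be identity."""
    weighted = [
        gaussian_likelihood(measurement, cell, variance) * prob
        for cell, prob in zip(cells, predicted)
    ]
    normalizer = sum(weighted)
    return [w / normalizer for w in weighted]


cells = [0.00, 0.01, 0.02]      # candidate CoG positions along one axis, m
predicted = [0.21, 0.50, 0.29]  # predicted probabilities per cell
posterior = update(predicted, cells, measurement=0.019, variance=0.005 ** 2)
best_cell = cells[posterior.index(max(posterior))]
```

A measurement close to the third cell shifts most of the probability mass there even though the prediction favored the middle cell, which is the qualitative behavior the update step is meant to provide.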

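Based on the CoG shift model established in Section 3.1, the analytical solution of the dynamics equation at time $t-1$ provides the reference CoG position $\mathbf{r}_c^{\text{ref}}(t)$ at time $t$. This study quantitatively evaluates the consistency between the predicted state $\hat{\mathbf{x}}_t$ and the reference $\mathbf{r}_c^{\text{ref}}(t)$ using a discrepancy measure. Let $P$ and $Q$ denote the predicted and reference distributions, respectively. The Gaussian density of the reference value is defined as follows:

$$Q(\mathbf{x}) = \mathcal{N}\big(\mathbf{x};\ \mathbf{r}_c^{\text{ref}}(t),\ \boldsymbol{\Sigma}_{\text{ref}}\big),$$

where $\boldsymbol{\Sigma}_{\text{ref}}$ is the covariance assigned to the reference value.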

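The consistency between $P$ and $Q$ is quantified using the Kullback–Leibler Divergence (KLD):

$$D_{\mathrm{KL}}(P \,\|\, Q) = \int P(\mathbf{x}) \ln\frac{P(\mathbf{x})}{Q(\mathbf{x})}\,\mathrm{d}\mathbf{x},$$

where $D_{\mathrm{KL}} = 0$ indicates perfect consistency between prediction and reference. If $D_{\mathrm{KL}}$ falls within a predefined threshold, the prediction is considered accurate; otherwise, error compensation is required. To address this, an improved Bayesian filtering method is employed, which dynamically adjusts the filter weights based on historical errors. The linear relationship between $P$ and $Q$ is expressed through their covariance:

$$\operatorname{Cov}(P, Q) = \mathbb{E}\big[(\mathbf{x} - \boldsymbol{\mu}_P)(\mathbf{y} - \boldsymbol{\mu}_Q)^{\mathsf T}\big], \qquad \mathbf{x} \sim P,\ \mathbf{y} \sim Q,$$

where $\boldsymbol{\mu}_P$ and $\boldsymbol{\mu}_Q$ denote the mean values of the predicted and reference distributions, respectively. The covariance between the elements in the predicted set and the reference set can be expressed as follows: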

The error compensation covariance matrix is then defined as

By adjusting the filter weights, the probability mass assigned to high-error regions is reduced, thereby enhancing the robustness of the prediction results.
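The KLD-based consistency check described earlier in this section can be illustrated with the closed-form divergence between two Gaussians. The sketch assumes that both the predicted and the reference distributions are Gaussian with diagonal covariances, which is a simplifying assumption. The threshold and all numerical values are hypothetical.

```python
import math


def kl_gaussian_diag(mean_p, var_p, mean_q, var_q):
    """KL divergence D(P || Q) between Gaussians with diagonal covariances."""
    return sum(
        0.5 * (math.log(vq / vp) + (vp + (mp - mq) ** 2) / vq - 1.0)
        for mp, vp, mq, vq in zip(mean_p, var_p, mean_q, var_q)
    )


def needs_compensation(divergence, threshold):
    """Flag a prediction whose divergence exceeds the chosen threshold."""
    return divergence > threshold


# Predicted vs. reference CoG (m); variances in m^2. Hypothetical values.
predicted_mean, predicted_var = (0.10, 0.05, 0.02), (1e-4, 1e-4, 1e-4)
reference_mean, reference_var = (0.11, 0.05, 0.02), (1e-4, 1e-4, 1e-4)

divergence = kl_gaussian_diag(
    predicted_mean, predicted_var, reference_mean, reference_var
)
flag = needs_compensation(divergence, threshold=0.1)
```

Here the two distributions differ by one standard deviation along a single axis, which gives a divergence of 0.5 and triggers compensation under the assumed threshold. Identical distributions give a divergence of zero.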