An Intelligent Playbook Recommendation Algorithm Based on Dynamic Interest Modeling for SOAR

Abstract

1. Introduction

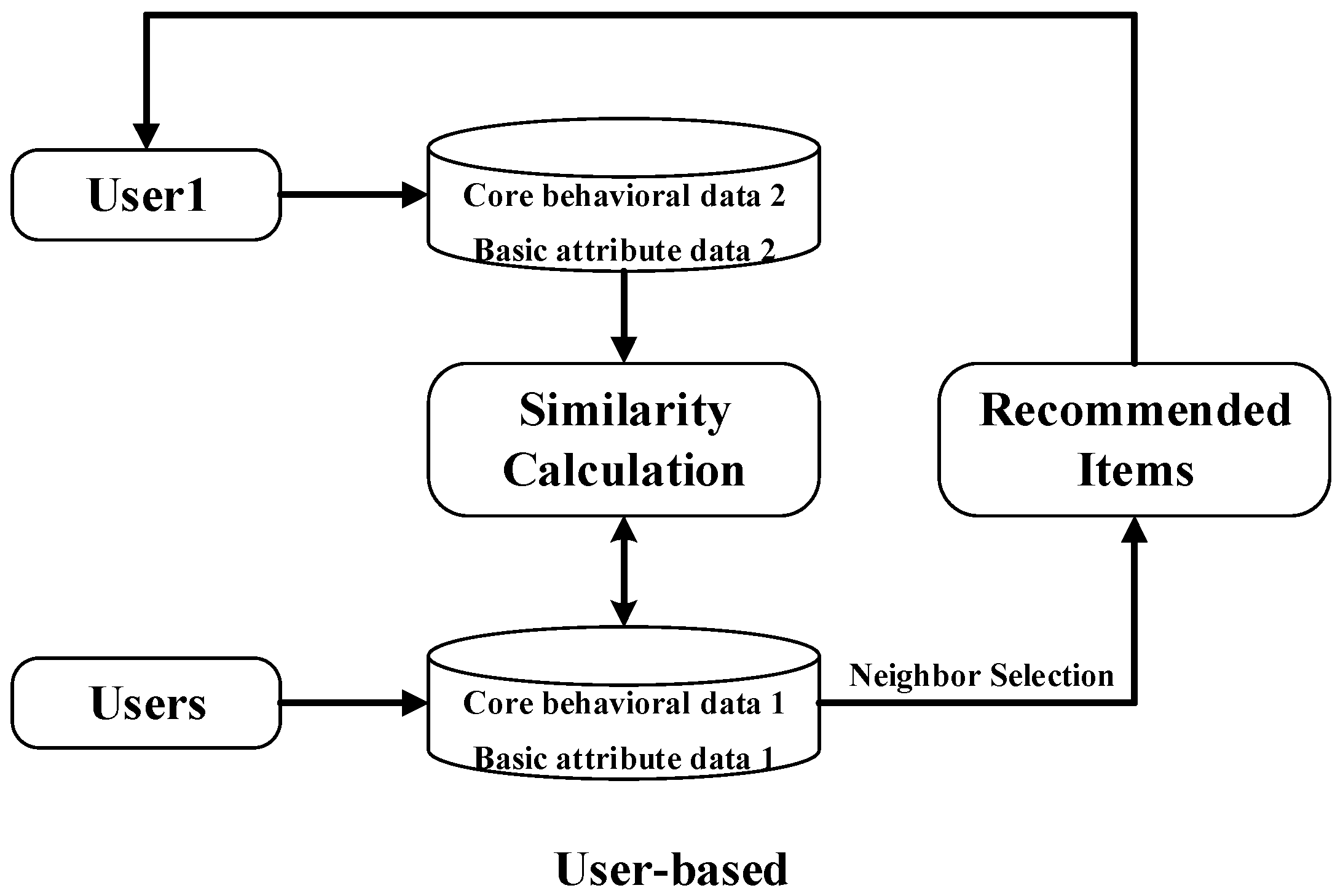

2. Related Work

3. Research Methods

3.1. Research Framework

3.2. Data Preprocessing and Analysis

Data Structure

3.3. Alarm Behavior Sequence Construction

- Determining the window size: The window size is chosen according to the practical requirements of network security operations, in particular the frequency of security events and business needs. A smaller window captures fine-grained dynamic variations, whereas a larger window reflects long-term behavioral patterns. In this study, a five-minute window of alarm data represents the short-term interests of the current alarm, and a one-week window represents its long-term interests.

- Window sliding and segmentation: With the window size fixed, the sliding window divides the alarm behavior sequence into multiple time intervals. For example, within each five-minute window, the system collects all historical response data associated with the alarm during that period and generates a feature vector from these data to represent the alarm behavior in the corresponding time segment.
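
The two steps above can be illustrated with a minimal Python sketch that segments a short list of response records into five-minute windows and builds one feature vector per window. The sample records, the `PLAYBOOKS` vocabulary, the two-minute sliding step, and the count-based feature vector are illustrative assumptions; the text fixes only the five-minute window size and does not specify how the feature vector is constructed.

```python
from datetime import datetime, timedelta

# Hypothetical sample records: (timestamp, playbook executed for the alert).
RECORDS = [
    ("2025-02-17 08:01:00", "intrusion_response"),
    ("2025-02-17 08:03:15", "malware_isolation"),
    ("2025-02-17 08:05:30", "port_scan_protection"),
    ("2025-02-17 08:07:45", "intrusion_response"),
]

# Assumed playbook vocabulary; it fixes the order of the feature vector.
PLAYBOOKS = ["intrusion_response", "malware_isolation", "port_scan_protection"]

FMT = "%Y-%m-%d %H:%M:%S"


def window_features(records, start, end, size, step):
    """Slide a half-open window [ws, ws + size) from start to end and
    return one playbook-count vector per window."""
    parsed = [(datetime.strptime(ts, FMT), pb) for ts, pb in records]
    features = []
    ws = start
    while ws < end:
        we = ws + size
        counts = [0] * len(PLAYBOOKS)
        for ts, pb in parsed:
            if ws <= ts < we:
                counts[PLAYBOOKS.index(pb)] += 1
        features.append((ws, we, counts))
        ws += step
    return features


windows = window_features(
    RECORDS,
    start=datetime(2025, 2, 17, 8, 0, 0),
    end=datetime(2025, 2, 17, 8, 6, 0),
    size=timedelta(minutes=5),   # short-term window size from the text
    step=timedelta(minutes=2),   # assumed sliding step
)
for ws, we, counts in windows:
    print(ws.time(), we.time(), counts)
```

With these sample records the sketch yields three overlapping windows whose count vectors are `[1, 1, 0]`, `[0, 1, 1]`, and `[1, 0, 1]`. A one-week long-term window could be obtained in the same way by passing `size=timedelta(weeks=1)`.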

3.4. Data Encoding Method

4. Model Training for Playbook Recommendation

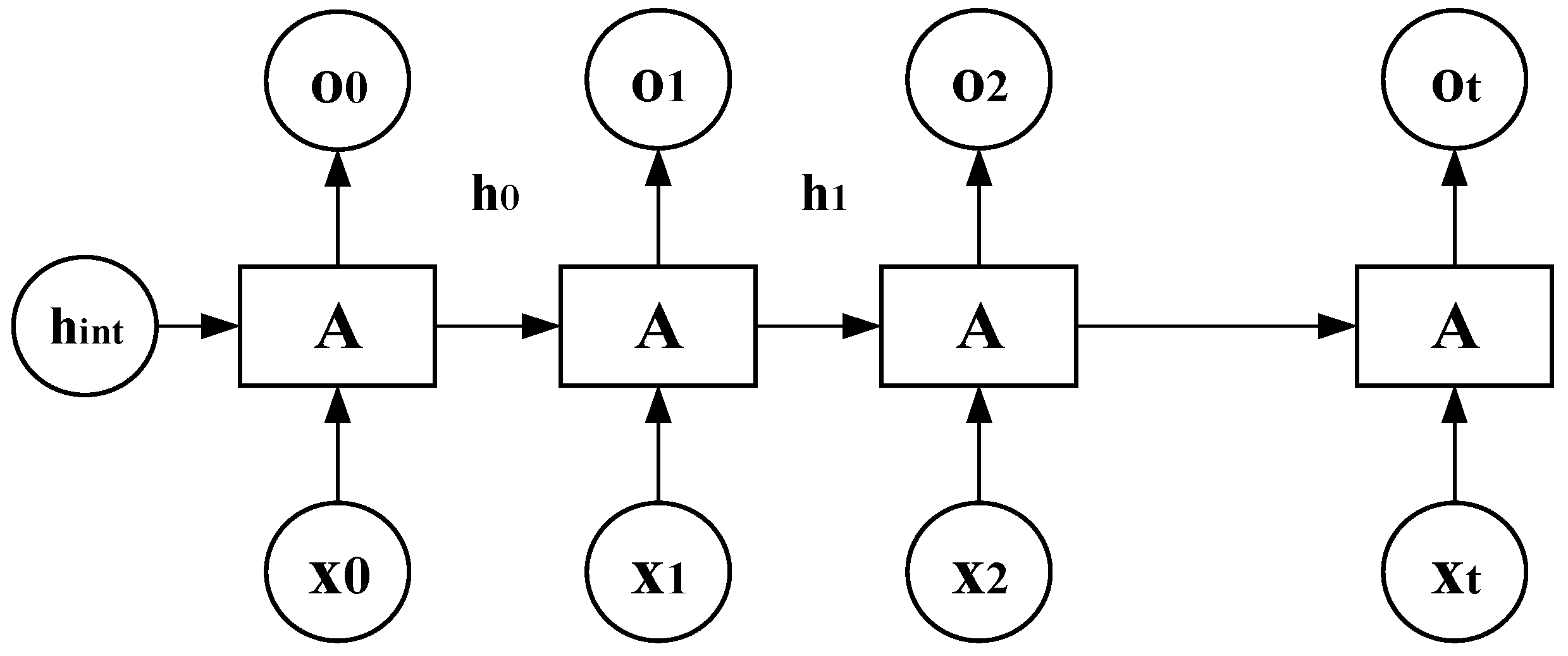

4.1. Core Mechanism of Long- and Short-Term Interest Modeling

| Algorithm 1. Sliding-window extraction of alarm behavior records |
|---|
| Input: Historical data of all alarms in JSON format |
| Output: Long-term and short-term behavior records of all alarms |
| 1: define the long-term and short-term interest time spans LONG_TERM_SPAN, SHORT_TERM_SPAN |
| 2: for each alarm do |
| 3: extract the lifetime of the current alarm (including all corresponding playbook records) |
| 4: define the current time variable current_time |
| 5: while current_time is within the lifetime do |
| 6: long-term window extraction |
| 7: short-term window extraction |
| 8: slide the windows forward with a step size set according to LONG_TERM_SPAN and SHORT_TERM_SPAN |
| 9: record the current alarm result |
| 10: return alert long-term interest history, alert short-term interest history |
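
A minimal Python sketch of Algorithm 1 follows. It is not the authors' implementation: the input layout (a dictionary from alarm ID to `(datetime, playbook)` records), the definition of the lifetime as the span from the first to the last record, the look-back window convention, and the use of `SHORT_TERM_SPAN` as the sliding step are all assumptions made for illustration.

```python
from datetime import datetime, timedelta

LONG_TERM_SPAN = timedelta(weeks=1)      # long-term interest window (from the text)
SHORT_TERM_SPAN = timedelta(minutes=5)   # short-term interest window (from the text)


def extract_histories(alarms, step=SHORT_TERM_SPAN):
    """alarms maps alarm_id -> list of (datetime, playbook) records.
    Returns (long-term history, short-term history), each a dict that maps
    alarm_id to a list of windows, one window per sliding step."""
    long_hist, short_hist = {}, {}
    for alarm_id, records in alarms.items():
        if not records:
            continue
        records = sorted(records)
        # Assumed lifetime: from the first to the last playbook record.
        life_start, life_end = records[0][0], records[-1][0]
        long_hist[alarm_id], short_hist[alarm_id] = [], []
        current_time = life_start
        while current_time <= life_end:
            # Assumed look-back windows (current_time - span, current_time].
            long_win = [pb for ts, pb in records
                        if current_time - LONG_TERM_SPAN < ts <= current_time]
            short_win = [pb for ts, pb in records
                         if current_time - SHORT_TERM_SPAN < ts <= current_time]
            long_hist[alarm_id].append(long_win)
            short_hist[alarm_id].append(short_win)
            current_time += step
    return long_hist, short_hist


# Hypothetical alarm with three playbook records.
t0 = datetime(2025, 2, 17, 8, 0, 0)
alarms = {
    "alarm-1": [
        (t0, "intrusion_response"),
        (t0 + timedelta(minutes=3), "malware_isolation"),
        (t0 + timedelta(minutes=10), "port_scan_protection"),
    ]
}
long_hist, short_hist = extract_histories(alarms)
print(short_hist["alarm-1"])
print(long_hist["alarm-1"][-1])
```

In this example the short-term history contains one playbook per step, while the last long-term window accumulates all three records, which reflects the intended contrast between recent and long-run behavior.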

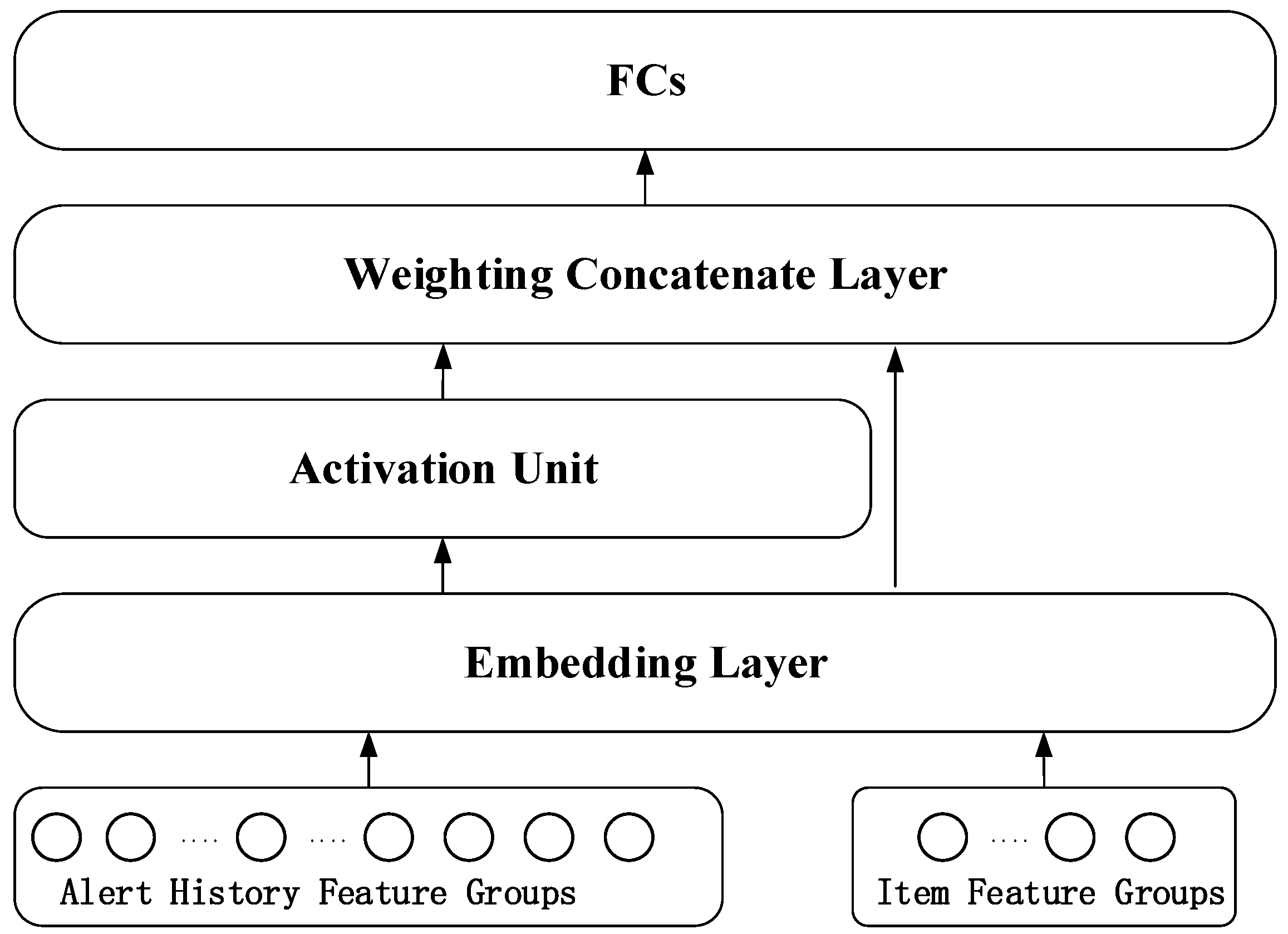

4.2. Intelligent Recommendation Framework Based on Dynamic Interest Modeling

| Algorithm 2. Recommendation framework based on dynamic interest modeling |
|---|
| Input: static alert features user_features, playbook features item_features, historical alert behavior sequence user_behavior_seq |
| Output: the probability that the alarm matches the playbook |
| 1: define the attention unit attention_mlp |
| 2: compute the playbook feature embedding e_c |
| 3: compute the alarm historical behavior sequence embedding e_i |
| 4: concatenate features (∥ denotes concatenation): concat_features = e_i ∥ e_c ∥ (e_i − e_c) ∥ (e_i × e_c) |
| 5: calculate attention scores: attention_scores = attention_mlp(concat_features) |
| 6: weighted aggregation of the alarm interest representation: v_u = e_i × attention_scores |
| 7: combined = v_u ∥ e_c ∥ user_features |
| 8: feed combined into the fully connected layer to predict the matching probability |
| 9: return the predicted probability |
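
Steps 4 to 9 of Algorithm 2 can be sketched in plain Python as follows. This is an illustrative sketch under stated assumptions rather than the authors' code: the embeddings are taken as already computed, the weights are random, `attention_mlp` is assumed to be a two-layer ReLU network, the output layer is assumed to be a single linear layer followed by a sigmoid, and the attention scores are left unnormalized because the algorithm does not mention a softmax. The helper names `dense`, `random_matrix`, and `recommend_probability` are hypothetical.

```python
import math
import random


def dense(x, weights, biases):
    """Affine layer: one output per (weight row, bias) pair."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, biases)]


def attention_mlp(features, params):
    """Assumed two-layer MLP with ReLU mapping concatenated features to a score."""
    hidden = [max(0.0, h) for h in dense(features, params["w1"], params["b1"])]
    return dense(hidden, params["w2"], params["b2"])[0]


def recommend_probability(user_features, e_c, behavior_seq, att_params, out_params):
    """Steps 4-9 of Algorithm 2 for already-embedded inputs."""
    scores = []
    for e_i in behavior_seq:
        # Step 4: e_i || e_c || (e_i - e_c) || (e_i * e_c)
        concat = (e_i + e_c
                  + [a - b for a, b in zip(e_i, e_c)]
                  + [a * b for a, b in zip(e_i, e_c)])
        scores.append(attention_mlp(concat, att_params))        # step 5
    # Step 6: attention-weighted sum of the behavior embeddings.
    v_u = [sum(s * e_i[k] for s, e_i in zip(scores, behavior_seq))
           for k in range(len(e_c))]
    combined = v_u + e_c + user_features                         # step 7
    logit = dense(combined, out_params["w"], out_params["b"])[0] # step 8
    return 1.0 / (1.0 + math.exp(-logit)), scores                # step 9


def random_matrix(rng, rows, cols):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
DIM, HIDDEN, N_USER = 4, 8, 3   # assumed sizes, chosen only for the demo
att_params = {"w1": random_matrix(rng, HIDDEN, 4 * DIM), "b1": [0.0] * HIDDEN,
              "w2": random_matrix(rng, 1, HIDDEN), "b2": [0.0]}
out_params = {"w": random_matrix(rng, 1, 2 * DIM + N_USER), "b": [0.0]}

e_c = [rng.uniform(-1, 1) for _ in range(DIM)]                    # playbook embedding
behavior_seq = [[rng.uniform(-1, 1) for _ in range(DIM)] for _ in range(5)]
user_features = [1.0, 0.0, 0.5]                                   # static alert features

prob, scores = recommend_probability(user_features, e_c, behavior_seq,
                                     att_params, out_params)
print(round(prob, 4), len(scores))
```

The sketch returns one attention score per historical behavior and a matching probability in the open interval (0, 1). In a trained model the weights would be learned and the embeddings would come from the encoding step rather than from a random generator.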

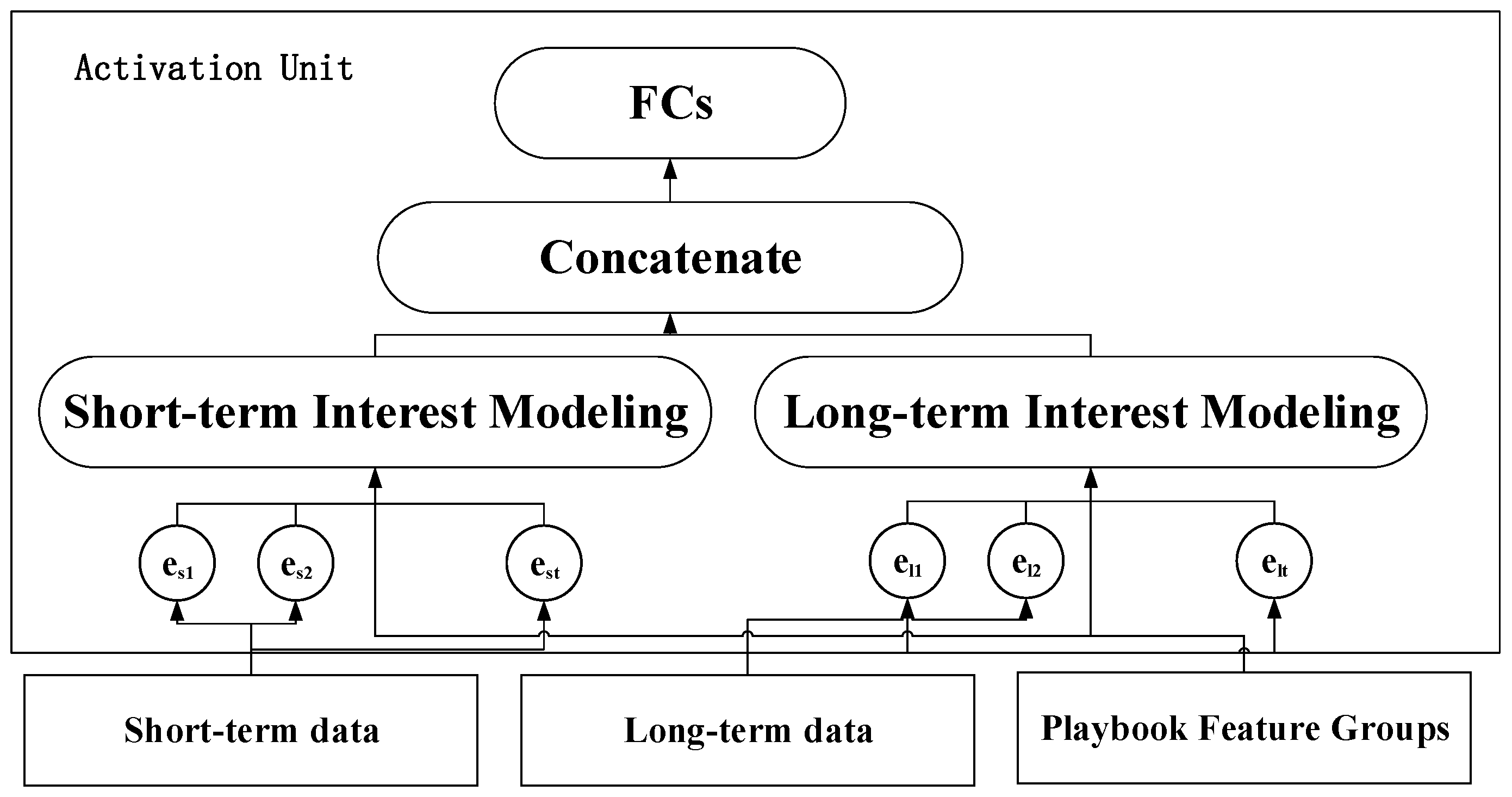

4.3. Implementation of Recommendation Algorithm Based on Dynamic Interest Modeling

| Algorithm 3. Activation unit module process |
|---|
| Input: alert long-term interest history, alert short-term interest history, playbook features item_features |
| Output: the weights of the alarm historical behavior sequence |
| 1: define the short-term interest modeling layer LSTM_encoder |
| 2: define the long-term interest modeling layer transformer_encoder |
| 3: concatenate short-term interest behavior and playbook features as the input of LSTM_encoder: combined_input_short |
| 4: concatenate long-term interest behavior and playbook features as the input of transformer_encoder: combined_input_long |
| 5: calculate long-term features: long_term_interest = transformer_encoder(combined_input_long) |
| 6: rnn_out, (h_n, c_n) = LSTM_encoder(combined_input_short) |
| 7: take the last hidden states as the short-term feature (∥ denotes concatenation): short_term_interest = h_n[-2] ∥ h_n[-1] |
| 8: combined_interest = short_term_interest ∥ long_term_interest |
| 9: feed the combined long-term and short-term features into the fully connected layer to obtain the weights Weight_history of the historical behavior |
| 10: return Weight_history |

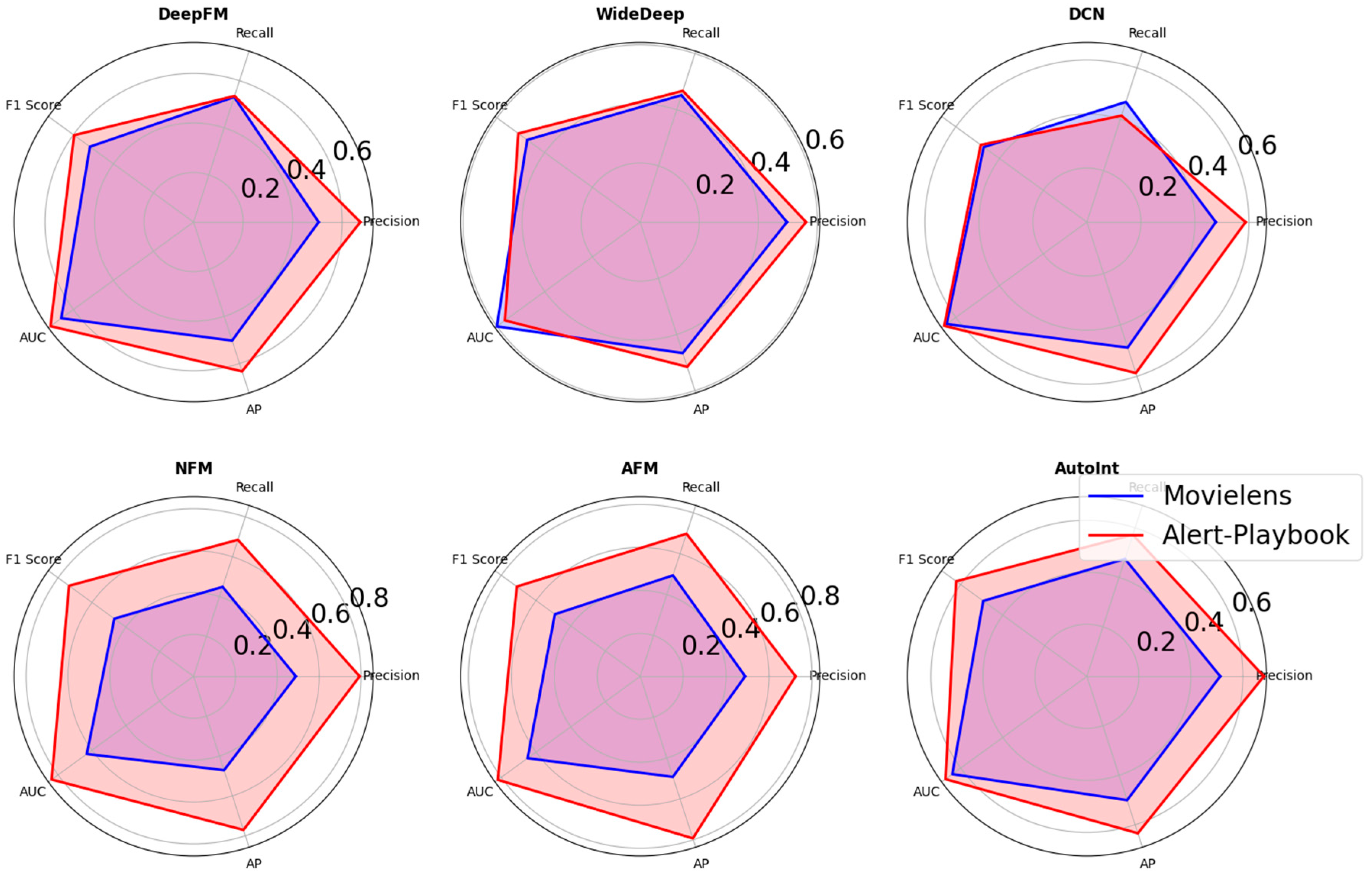

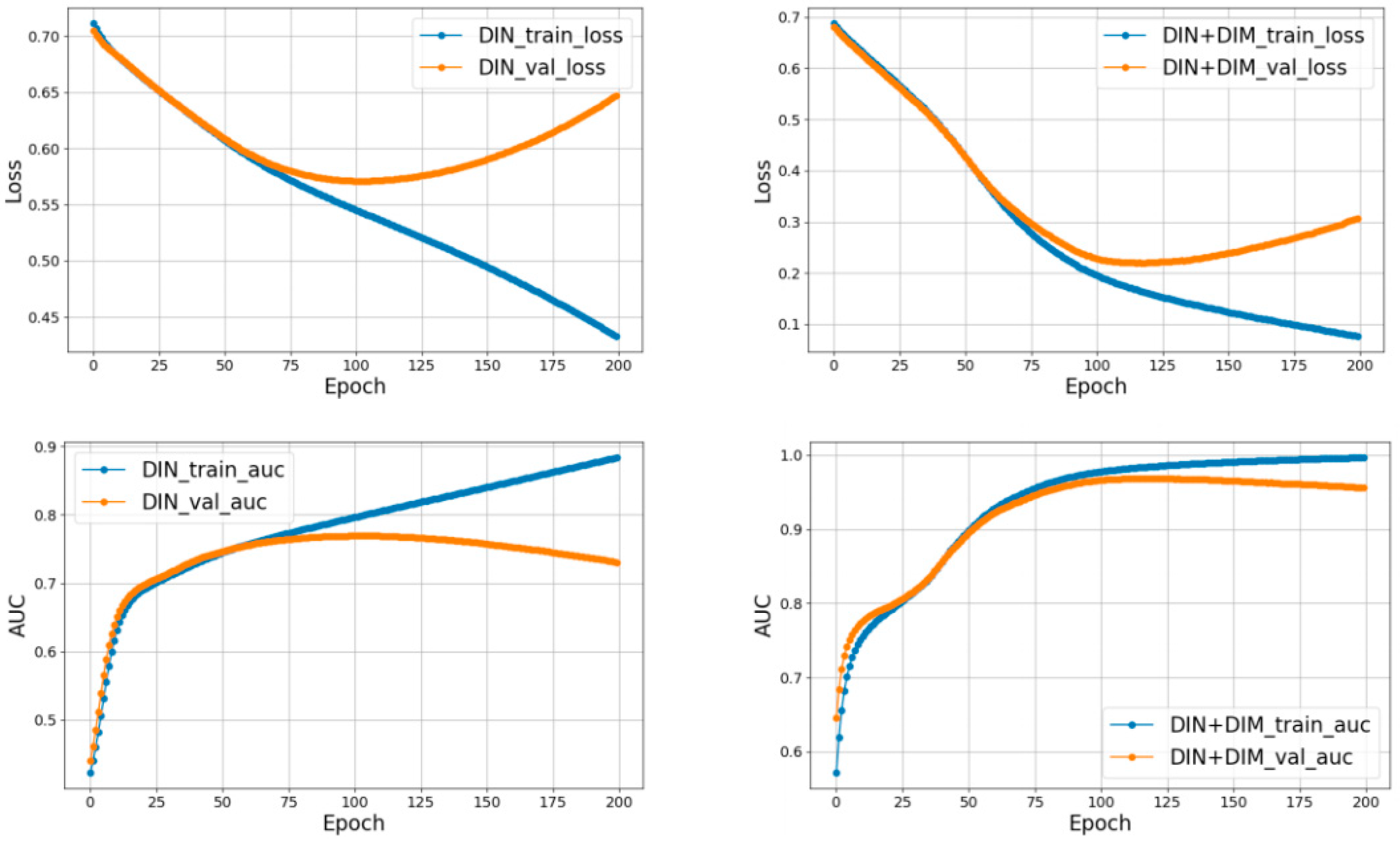

5. Experimental Results and Analysis

5.1. Evaluation Index

5.2. Data Set

5.2.1. MovieLens Data Set

5.2.2. The Alert-Playbook Data Set

5.3. Feasibility and Results

Feasibility Analysis

6. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| ID | Alarm Properties | Examples | Description |
|---|---|---|---|
| 1 | Alert ID | d2387y8o2hu696c | The alert ID is a unique identifier for each alert event. During the incident response process, the alert ID is used to track and manage the lifecycle of the alert, with each alert ID corresponding to a specific security event. |
| 2 | Alert Type | Cloud Firewall Attack | The alert type describes the nature of the event, such as virus infection, intrusion detection, or DDoS attack. |
| 3 | Alert Severity | High | The alert level is usually categorized as low, medium, or high, indicating the severity of the event. |
| 4 | Source IP | 192.168.1.1 | The source IP address of the attack or anomalous behavior. |
| 5 | Target IP | 192.168.2.5 | The target IP address refers to the attacked host or device, usually the victim. |
| 6 | Timestamp | 2024-03-01T18:05:35.073+08:00 | The event time of the alert, which helps analyze the timing of attacks, identify patterns, and detect attack windows. |
| 7 | Attack Signature | SQL Injection attack matching | Attack characteristics are the matched information between the alert and known attack patterns, typically generated by systems such as firewalls, IDS, or IPS. |
| 8 | Attack Chain Phase | Intrusion | According to the attack lifecycle model, an alert may correspond to a specific attack phase, such as reconnaissance, intrusion, or propagation. |

| ID | Playbook Properties | Examples | Description |
|---|---|---|---|
| 1 | Playbook Name | Attack link analysis alarm notification | The unique name of the playbook, used for identification and reference. |
| 2 | Playbook ID | c3534638-wh9u-e5fa740bdb89 | An ID that uniquely identifies the playbook and is used to associate alarms with tasks and response actions in the playbook. |
| 3 | Trigger Conditions | One violation of access was detected | The conditions that trigger the playbook, usually based on specific events or alerts. |
| 4 | Response Type | Notification | The type of response to an incident, such as defensive, restorative, or notification. |
| 5 | Priority | High | The priority of the playbook, indicating the urgency or importance of the processing. |
| 6 | Automated Actions | Alarm email notification | Actions that are performed automatically, often through API integration to interact with other systems. |
| 7 | Integrations Support | Integration with firewalls and intrusion prevention systems | Integration of the playbook with other security tools, platforms, and services. |
| 8 | Status & Error Handling | On the fly | Handling of errors or exceptions during playbook execution to ensure that the playbook is executed correctly. |

| Timestamp | Alert Type | Severity | Source IP | Target IP | Playbook |
|---|---|---|---|---|---|
| 2025-02-17 08:01:00 | IDS | High | 192.168.1.1 | 192.168.2.5 | Intrusion detection response playbook |
| 2025-02-17 08:03:15 | | High | 192.168.1.1 | 192.168.2.7 | Malware Detection and Isolation playbook |
| 2025-02-17 08:05:30 | | Low | 192.168.1.1 | 192.168.2.5 | Port Scan detection and protection playbook |
| 2025-02-17 08:07:45 | | High | 192.168.1.1 | 192.168.2.6 | Intrusion detection response playbook |
| 2025-02-17 08:09:00 | | Low | 192.168.1.1 | 192.168.2.5 | Port Scan detection and protection playbook |
| 2025-02-17 08:12:30 | | High | 192.168.1.1 | 192.168.2.5 | Malware Detection and Isolation playbook |

| Window 1: 17 February 2025 08:00:00 to 17 February 2025 08:05:00 | |
|---|---|
| Source IP | [192.168.1.1, 192.168.1.1, 192.168.1.1] |
| Target IP | [192.168.2.5, 192.168.2.7, 192.168.2.5] |
| Playbook | [Intrusion detection response playbook, Malware Detection and Isolation playbook, Port Scan detection and protection playbook] |

| Window 2: 17 February 2025 08:02:00 to 17 February 2025 08:07:00 | |
|---|---|
| Source IP | [192.168.1.1, 192.168.1.1, None] |
| Target IP | [192.168.2.5, 192.168.2.7, None] |
| Playbook | [Malware Detection and Isolation playbook, Port Scan detection and protection playbook, None] |

| Metric | DeepFM | WideDeep | DCN | NFM | AFM | DIN | AutoInt | DIN+DIM |
|---|---|---|---|---|---|---|---|---|
| Precision | 0.6734 | 0.5617 | 0.5876 | 0.7931 | 0.7237 | 0.6786 | 0.6804 | 0.8679 |
| Recall | 0.5352 | 0.4671 | 0.4141 | 0.6851 | 0.6978 | 0.6530 | 0.5721 | 0.9067 |
| F1 Score | 0.5964 | 0.5101 | 0.4858 | 0.7351 | 0.7105 | 0.6656 | 0.6216 | 0.8878 |
| AUC | 0.7145 | 0.5664 | 0.6531 | 0.8381 | 0.8197 | 0.7650 | 0.6724 | 0.9607 |
| AP | 0.6335 | 0.5151 | 0.5874 | 0.7709 | 0.793 | 0.7247 | 0.6344 | 0.9537 |
| Hit Ratio | 0.4501 | 0.4723 | 0.4028 | 0.5041 | 0.5543 | 0.5177 | 0.4893 | 0.5200 |
| NDCG | 0.5604 | 0.6383 | 0.5341 | 0.8335 | 0.9522 | 0.4915 | 0.7236 | 0.6432 |
| MAP | 0.4501 | 0.4723 | 0.4028 | 0.5041 | 0.5543 | 0.4933 | 0.4893 | 0.5253 |
| MRR | 0.4501 | 0.4723 | 0.4028 | 0.5041 | 0.5543 | 0.4933 | 0.4893 | 0.5200 |

| Parameter | Symbol | Value |
|---|---|---|
| Learning rate | Learning Rate | 1 × 10⁻⁴ |
| Optimizer | Optimizer | Adam |
| Batch size | Batch Size | 64 |
| Number of iterations | Epochs | 100 |

| Metric | DIN | DIN+DIM |
|---|---|---|
| Precision | 0.6786 | 0.8679 |
| Recall | 0.6530 | 0.9067 |
| F1 Score | 0.6656 | 0.8878 |
| AUC | 0.7650 | 0.9607 |
| AP | 0.7247 | 0.9537 |
| Hit Ratio | 0.5177 | 0.5200 |
| NDCG | 0.4915 | 0.6432 |
| MAP | 0.4933 | 0.5253 |
| MRR | 0.4933 | 0.5200 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hu, H.; Zhang, L.; Zhang, Z.; Yao, X.; Wu, X. An Intelligent Playbook Recommendation Algorithm Based on Dynamic Interest Modeling for SOAR. Symmetry 2025, 17, 1851. https://doi.org/10.3390/sym17111851
