Predicting the Forest Fire Duration Enriched with Meteorological Data Using Feature Construction Techniques

Abstract

1. Introduction

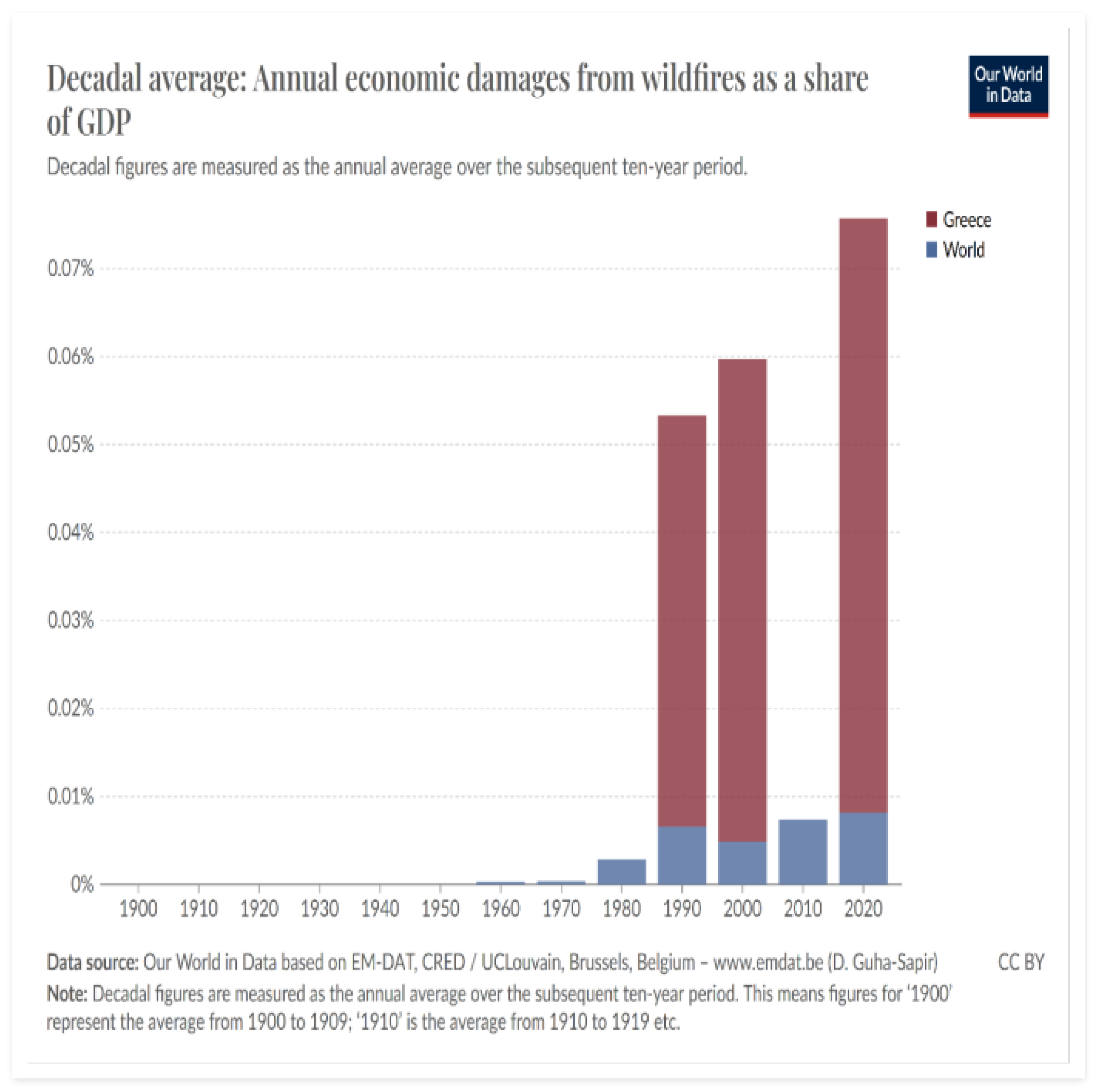

- The cost of fire is estimated at about 1% to 2% of the annual GDP.

- About 1% of fires are responsible for more than 50% of the costs.

- Fire-related deaths are estimated at 2.2 per 100,000 inhabitants (based on data from 35 countries) [5].

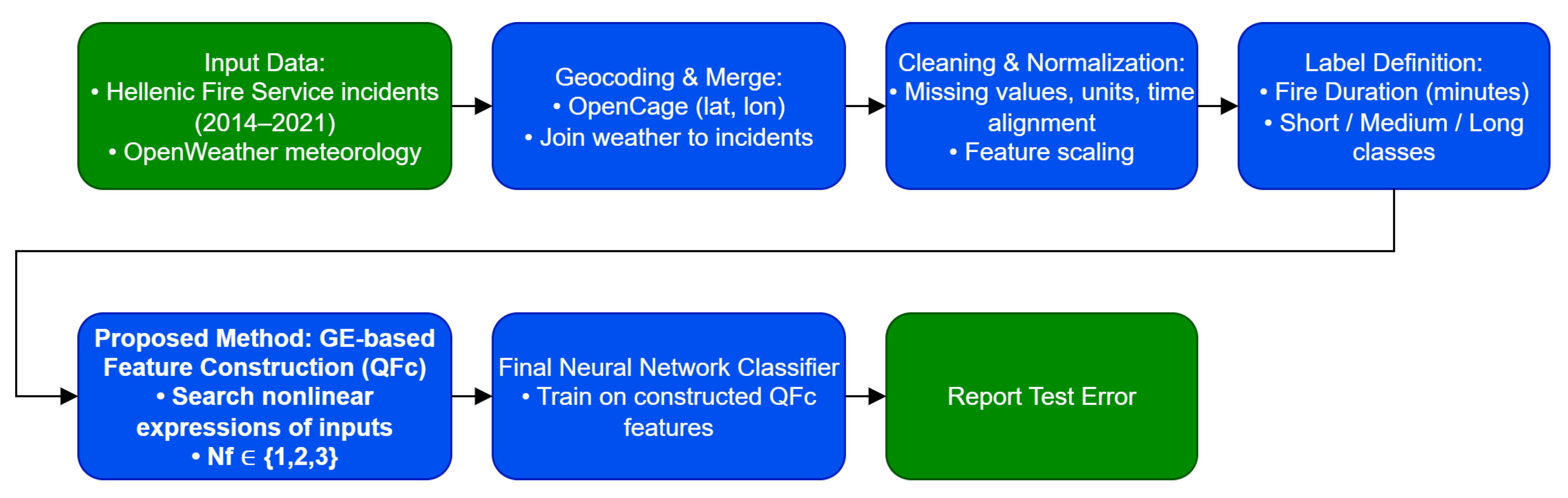

- The incorporation of Greek forest fire data from the years 2014–2021 (57,904 entries).

- The inclusion of meteorological data for each fire event.

- The classification of forest fires according to their duration.

- The use of feature construction techniques.

- The prediction of forest fire duration.

- Main contributions: estimating fire duration and analyzing the interaction between environmental conditions and fire duration.

2. Materials and Methods

2.1. The Used Dataset

2.1.1. Data Preprocessing and Weather Feature Extraction

- Temperature at 2 m: The air temperature near the ground level.

- Relative Humidity at 2 m: The percentage of moisture in the air relative to its maximum capacity.

- Dew Point at 2 m: The temperature at which air reaches saturation and moisture condenses.

- Precipitation: The amount of rainfall during the specific time interval.

- Weather Code: A classification of the general weather conditions (e.g., clear, cloudy, rainy).

- Cloud Cover: The percentage of the sky obscured by clouds.

- Evapotranspiration (ET0): The reference evapotranspiration, computed with the FAO Penman–Monteith method, indicating water loss from the surface and vegetation.

- Vapour Pressure Deficit (VPD): The difference between the saturation vapour pressure and the actual vapour pressure of the air, i.e., how far the air is from saturation.

- Wind Speed at 10 m and 100 m: Wind velocity measured at heights of 10 m and 100 m.

- Wind Direction at 10 m and 100 m: The directional angle of the wind at the respective heights.

- Daylight Duration: The total hours of daylight during the day.

- Sunshine Duration: The total hours of direct sunlight during the day.
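Several of the humidity-related variables above are linked by standard formulas. As an illustration only, and assuming the FAO-56 Magnus-type expression for saturation vapour pressure (this section does not say how VPD or dew point were obtained), the sketch below derives VPD and dew point from 2 m temperature and relative humidity. The function names are hypothetical.

```python
import math

# Magnus-type coefficients as given in FAO-56 (temperature in deg C, pressure in kPa).
A_KPA = 0.6108
B = 17.27
C_DEG = 237.3


def saturation_vapour_pressure(temp_c: float) -> float:
    """Saturation vapour pressure e_s(T) in kPa."""
    return A_KPA * math.exp(B * temp_c / (temp_c + C_DEG))


def vapour_pressure_deficit(temp_c: float, rh_percent: float) -> float:
    """VPD = e_s - e_a, with actual vapour pressure e_a = e_s * RH / 100 (kPa)."""
    es = saturation_vapour_pressure(temp_c)
    ea = es * rh_percent / 100.0
    return es - ea


def dew_point(temp_c: float, rh_percent: float) -> float:
    """Dew point (deg C) obtained by inverting the Magnus formula for e_a."""
    if not 0.0 < rh_percent <= 100.0:
        raise ValueError("relative humidity must lie in (0, 100]")
    gamma = math.log(rh_percent / 100.0) + B * temp_c / (temp_c + C_DEG)
    return C_DEG * gamma / (B - gamma)


if __name__ == "__main__":
    # A hot, dry summer afternoon: 35 deg C and 20 % relative humidity.
    print(round(vapour_pressure_deficit(35.0, 20.0), 2), "kPa")
    print(round(dew_point(35.0, 20.0), 1), "deg C")
```

A quick sanity check: at 100 % relative humidity the deficit is zero and the dew point equals the air temperature.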

2.1.2. Definition of the Output Variable

- A fire lasting up to 360 min (6 h) is considered a short-duration fire.

- A fire lasting 361–7200 min (6 h–5 days) is considered a medium-duration fire.

- A fire lasting 7201 min or more (more than 5 days) is considered a long-duration fire.
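The three-class output variable can be derived from a fire's start and end times. The sketch below assumes hypothetical start/end timestamps per record; the thresholds are those listed above. For non-integer durations it treats anything above 360 min as medium and anything above 7200 min as long, which is an assumption about how the gaps between the integer boundaries are handled.

```python
from datetime import datetime

SHORT_MAX_MIN = 360    # up to 6 h
MEDIUM_MAX_MIN = 7200  # up to 5 days


def duration_minutes(start: datetime, end: datetime) -> float:
    """Fire duration in minutes between two (hypothetical) timestamp fields."""
    return (end - start).total_seconds() / 60.0


def duration_class(minutes: float) -> str:
    """Map a duration in minutes to the short / medium / long classes."""
    if minutes < 0:
        raise ValueError("end time precedes start time")
    if minutes <= SHORT_MAX_MIN:
        return "short"
    if minutes <= MEDIUM_MAX_MIN:
        return "medium"
    return "long"


if __name__ == "__main__":
    start = datetime(2021, 8, 3, 12, 0)
    end = datetime(2021, 8, 3, 20, 30)  # 8.5 h later
    print(duration_class(duration_minutes(start, end)))
```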

2.2. The Used Feature Construction and Selection Methods

2.2.1. The PCA Method
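In the experiments, PCA is used to create two features. The reported features were produced with MLPACK. The NumPy sketch below is not MLPACK. It is a minimal illustration that assumes standard PCA fitted on the training patterns only, via a singular value decomposition of the centred data, and then applied unchanged to the test patterns.

```python
import numpy as np


def fit_pca(X_train: np.ndarray, n_components: int = 2):
    """Return the training mean and the top principal directions."""
    mean = X_train.mean(axis=0)
    # Rows of vt are the principal directions, ordered by decreasing variance.
    _, _, vt = np.linalg.svd(X_train - mean, full_matrices=False)
    return mean, vt[:n_components]


def transform_pca(X: np.ndarray, mean: np.ndarray, components: np.ndarray) -> np.ndarray:
    """Project (train or test) patterns onto the learned components."""
    return (X - mean) @ components.T


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X_train = rng.normal(size=(200, 14))  # synthetic stand-in for the weather features
    X_test = rng.normal(size=(50, 14))
    mean, comps = fit_pca(X_train, n_components=2)
    print(transform_pca(X_test, mean, comps).shape)
```

Because the meteorological variables have different units, standardizing each column before PCA is a common choice. This section does not state whether the original experiments did so.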

2.2.2. The MRMR Method

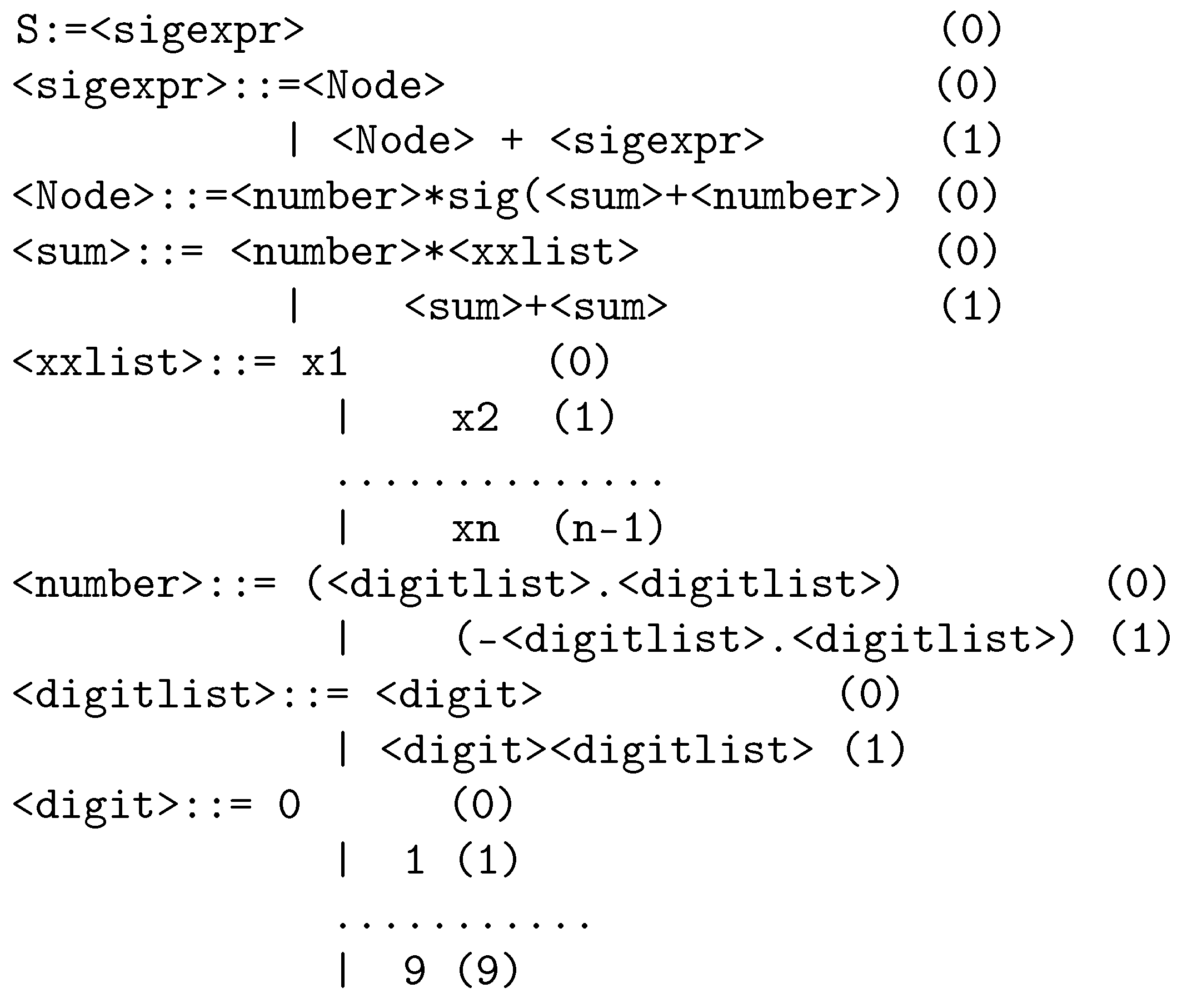
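MRMR, in the formulation of Ding and Peng, ranks features by their relevance to the target minus their redundancy with the features already chosen. The sketch below is a minimal greedy version of the difference criterion. It assumes that mutual information is estimated on quantile-binned features, and the bin count and helper names are illustrative. It is not the implementation used for the reported results.

```python
import numpy as np


def discretize(x: np.ndarray, bins: int = 10) -> np.ndarray:
    """Replace a continuous column by quantile-bin labels 0..bins-1."""
    edges = np.quantile(x, np.linspace(0.0, 1.0, bins + 1)[1:-1])
    return np.searchsorted(edges, x, side="right")


def mutual_information(a: np.ndarray, b: np.ndarray) -> float:
    """Plug-in mutual information (nats) between two discrete label arrays."""
    _, ai = np.unique(a, return_inverse=True)
    _, bi = np.unique(b, return_inverse=True)
    joint = np.zeros((ai.max() + 1, bi.max() + 1))
    np.add.at(joint, (ai, bi), 1.0)
    joint /= joint.sum()
    outer = joint.sum(axis=1, keepdims=True) @ joint.sum(axis=0, keepdims=True)
    nz = joint > 0
    return float(np.sum(joint[nz] * np.log(joint[nz] / outer[nz])))


def mrmr(X: np.ndarray, y: np.ndarray, k: int = 2, bins: int = 10) -> list:
    """Greedy mRMR (difference form): relevance minus mean redundancy."""
    k = min(k, X.shape[1])
    Xd = np.column_stack([discretize(X[:, j], bins) for j in range(X.shape[1])])
    relevance = np.array([mutual_information(Xd[:, j], y) for j in range(Xd.shape[1])])
    selected = [int(np.argmax(relevance))]  # start with the most relevant feature
    while len(selected) < k:
        best_j, best_score = -1, -np.inf
        for j in range(Xd.shape[1]):
            if j in selected:
                continue
            redundancy = np.mean([mutual_information(Xd[:, j], Xd[:, s]) for s in selected])
            if relevance[j] - redundancy > best_score:
                best_j, best_score = j, relevance[j] - redundancy
        selected.append(best_j)
    return selected
```

The redundancy term is what keeps a near-duplicate of an already selected variable, for example the same wind measure at two heights, from being picked a second time.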

2.2.3. The Neural Network Construction Method

- Initialization step.

- (a)

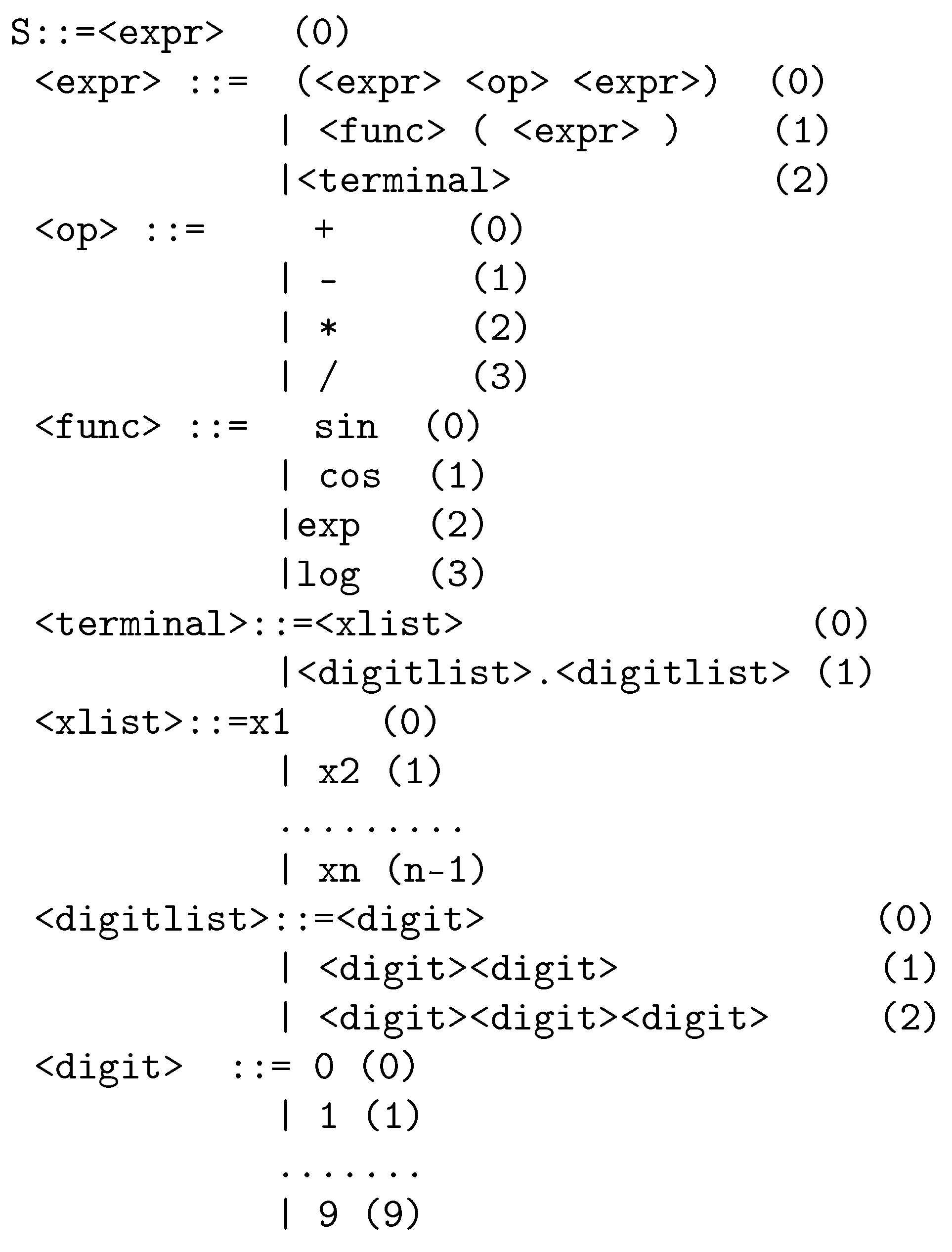

- Set the number of used chromosomes . Each chromosome is a set of randomly selected integers. These integer values represent rule number in the extended BNF grammar previously presented.

- (b)

- Set the maximum number of allowed generations .

- (c)

- Set the selection rate and the mutation rate .

- (d)

- Set , the generation number.

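- Fitness calculation step.

  - (a) For each chromosome $g_i$, $i = 1, \ldots, N_c$:

    - Create, using the grammar of Figure 4, the corresponding neural network $N_i(x)$.

    - Set $f_i = \sum_{j=1}^{M} \left(N_i(x_j) - y_j\right)^2$ as the fitness of chromosome $i$. The set $TR = \left\{(x_j, y_j),\ j = 1, \ldots, M\right\}$ stands for the train set of the objective problem.

  - (b) End For.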

- Genetic operations step.

- (a)

- Application of Selection operator. The chromosomes of the population are sorted according to their fitness values and the best chromosomes are copied to the next generation. The remaining are replaced by new chromosomes produced during crossover and mutation.

- (b)

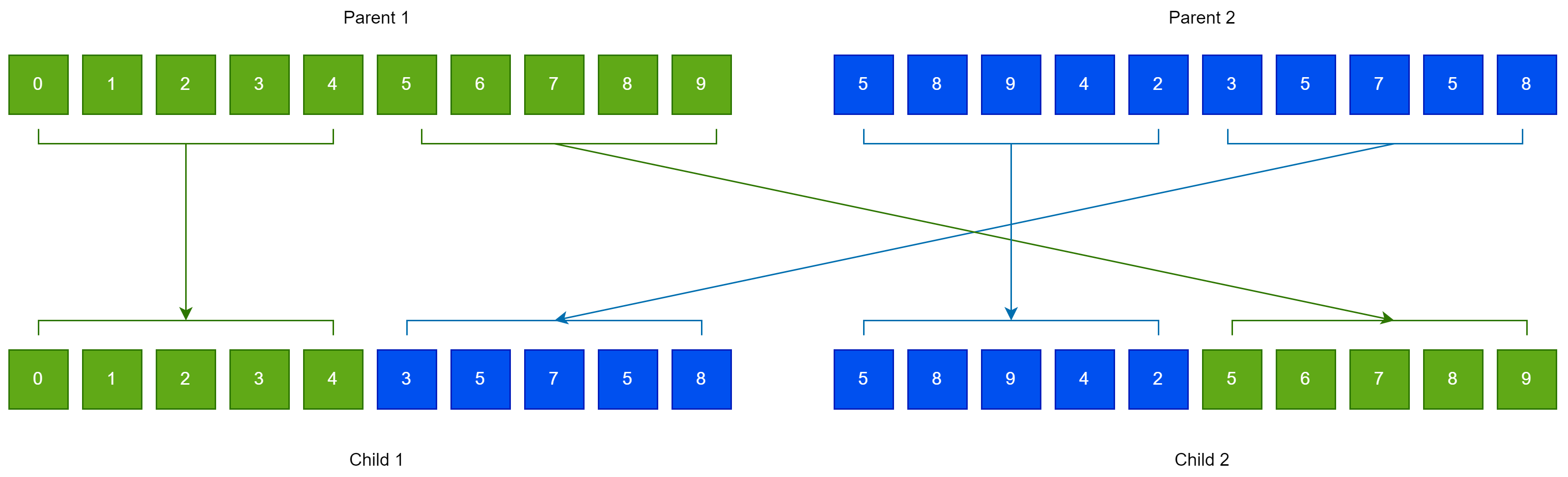

- Application of Crossover operator. In this step new chromosomes will be created from the original ones. For each set of new chromosomes that will be created, two chromosomes and are selected from the old population using tournament selection. The new chromosomes are created using one-point crossover between and . An example of this operation is shown graphically in Figure 5.

- (c)

- Application of Mutation operator. For each element of every chromosome, a random number is selected. The corresponding element is changed randomly when .

- Termination check step.

  - (a) Set $k = k + 1$.

  - (b) If $k \le N_g$, go to the fitness calculation step.

- Application to the test set.

  - (a) Obtain the best chromosome $g^*$ from the genetic population.

  - (b) Create the corresponding neural network $N^*(x)$.

  - (c) Apply this neural network to the test set of the objective problem and report the corresponding error (test error).
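The genetic operations in the steps above can be sketched as follows. This is a minimal illustration under stated assumptions. The fitness function is a placeholder callable. In NNC it would decode the chromosome with the grammar of Figure 4 and return the training error of the resulting network. The tournament size of 4 and the gene range 0–255 are illustrative choices that the text does not give, and lower fitness is treated as better. The defaults for the number of chromosomes (500), generations (200), selection rate (0.1), and mutation rate (0.05) follow the parameter table. As the text describes, mutation is applied to every chromosome, including the copied ones.

```python
import random
from typing import Callable, List

Chromosome = List[int]


def tournament(pop: List[Chromosome], fit: List[float], rng: random.Random,
               size: int = 4) -> Chromosome:
    """Return the fittest (lowest fitness) of `size` randomly drawn chromosomes."""
    picks = rng.sample(range(len(pop)), size)
    return pop[min(picks, key=lambda i: fit[i])]


def one_point_crossover(z: Chromosome, w: Chromosome, rng: random.Random):
    """Swap the tails of z and w after a random cut point."""
    cut = rng.randint(1, len(z) - 1)
    return z[:cut] + w[cut:], w[:cut] + z[cut:]


def evolve(fitness: Callable[[Chromosome], float], n_c: int = 500, n_g: int = 200,
           p_s: float = 0.1, p_m: float = 0.05, length: int = 50,
           max_gene: int = 255, seed: int = 0) -> Chromosome:
    rng = random.Random(seed)
    # Initialization: random integer chromosomes (grammar rule selectors).
    pop = [[rng.randint(0, max_gene) for _ in range(length)] for _ in range(n_c)]
    for _ in range(n_g):
        fit = [fitness(g) for g in pop]                 # fitness calculation
        order = sorted(range(n_c), key=lambda i: fit[i])
        n_keep = int(round((1.0 - p_s) * n_c))
        new_pop = [pop[i][:] for i in order[:n_keep]]   # selection: best copied intact
        while len(new_pop) < n_c:                       # crossover fills the rest
            a, b = one_point_crossover(tournament(pop, fit, rng),
                                       tournament(pop, fit, rng), rng)
            new_pop.extend([a, b][: n_c - len(new_pop)])
        for g in new_pop:                               # mutation, gene by gene
            for j in range(length):
                if rng.random() <= p_m:
                    g[j] = rng.randint(0, max_gene)
        pop = new_pop
    fit = [fitness(g) for g in pop]
    return pop[min(range(n_c), key=lambda i: fit[i])]   # best chromosome g*
```

For a quick check, `evolve(sum, n_c=40, n_g=30, length=10)` minimizes the toy fitness "sum of genes".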

2.2.4. The Feature Construction Method

- Initialization step.

  - (a) Define the number of used chromosomes $N_c$.

  - (b) Define the maximum number of allowed generations $N_g$.

  - (c) Set the selection rate $p_s$ and the mutation rate $p_m$.

  - (d) Set $N_f$ as the number of desired features that will be created.

  - (e) Set $k = 0$, the generation number.

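- Fitness calculation step.

  - For $i = 1, \ldots, N_c$ do:

    - (a) Create, with the assistance of Grammatical Evolution, a set of $N_f$ artificial features from the original ones for chromosome $g_i$.

    - (b) Transform the original train set $TR$ using the previously produced features, and denote the new set as $TR_i = \left\{\left(\phi_i(x_j), y_j\right),\ j = 1, \ldots, M\right\}$, where $\phi_i(x)$ is the vector of the $N_f$ features of chromosome $g_i$ evaluated at pattern $x$.

    - (c) Train a machine learning model, denoted as $C$, on the set $TR_i$, and denote as $C_i(\cdot)$ the output of the trained model for any mapped input pattern.

    - (d) Calculate the fitness as $f_i = \sum_{j=1}^{M} \left(C_i\left(\phi_i(x_j)\right) - y_j\right)^2$.

  - End For.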

- Genetic operations step. Apply the same genetic operators as in the Neural Network Construction method (Section 2.2.3).

- Termination check step.

  - (a) Set $k = k + 1$.

  - (b) If $k \le N_g$, go to the fitness calculation step.

- Application to the test set.

  - (a) Obtain the chromosome $g^*$ with the lowest fitness value.

  - (b) Create the artificial features that correspond to this chromosome.

  - (c) Apply the features to the train set and produce the mapped training set $TR^*$.

  - (d) Train the machine learning model $C$ on $TR^*$.

  - (e) Apply the new features to the test set of the objective problem and create the mapped test set $TT$.

  - (f) Apply the trained machine learning model on the set $TT$ and report the test error.
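The grammar used by the QFc software is not reproduced in this section. The sketch below therefore assumes a small hypothetical expression grammar to show how Grammatical Evolution turns one integer chromosome into artificial features. As in standard Grammatical Evolution (O'Neill and Ryan), each gene taken modulo the number of productions selects a rule, and the chromosome wraps around when its genes run out. Reading the $N_f$ features one after another from a single gene stream, the depth limit, and the protected operators are all illustrative assumptions.

```python
import math
from typing import Callable, List, Sequence, Tuple

Feature = Tuple[str, Callable[[Sequence[float]], float]]

# Hypothetical grammar (not the one used by QFc):
#   <expr> ::= (<expr><op><expr>) | <func>(<expr>) | <terminal>
#   <op>   ::= + | - | * | /          <func> ::= sin | cos | exp | log
OPS = [("+", lambda a, b: a + b), ("-", lambda a, b: a - b),
       ("*", lambda a, b: a * b),
       ("/", lambda a, b: a / b if abs(b) > 1e-9 else 0.0)]  # protected division
FUNCS = [("sin", lambda v: math.sin(v) if math.isfinite(v) else 0.0),
         ("cos", lambda v: math.cos(v) if math.isfinite(v) else 0.0),
         ("exp", lambda v: math.exp(min(v, 50.0))),              # clipped exponent
         ("log", lambda v: math.log(abs(v)) if v != 0 else 0.0)]  # protected log


class Decoder:
    """Grammatical Evolution mapping: gene mod (number of rules) picks a rule."""

    def __init__(self, genes: List[int], n_vars: int, max_depth: int = 4):
        self.genes, self.n_vars, self.max_depth, self.pos = genes, n_vars, max_depth, 0

    def next_gene(self) -> int:
        gene = self.genes[self.pos % len(self.genes)]  # wrap around the chromosome
        self.pos += 1
        return gene

    def expr(self, depth: int = 0) -> Feature:
        rule = self.next_gene() % 3
        if depth >= self.max_depth:
            rule = 2  # force a terminal so the expression stays finite
        if rule == 0:
            left_txt, left_fn = self.expr(depth + 1)
            name, op = OPS[self.next_gene() % len(OPS)]
            right_txt, right_fn = self.expr(depth + 1)
            return f"({left_txt}{name}{right_txt})", lambda x: op(left_fn(x), right_fn(x))
        if rule == 1:
            name, fn = FUNCS[self.next_gene() % len(FUNCS)]
            arg_txt, arg_fn = self.expr(depth + 1)
            return f"{name}({arg_txt})", lambda x: fn(arg_fn(x))
        var = self.next_gene() % self.n_vars
        return f"x{var + 1}", lambda x: x[var]


def make_features(genes: List[int], n_features: int, n_vars: int) -> List[Feature]:
    """Decode N_f features one after another from a single chromosome."""
    decoder = Decoder(genes, n_vars)
    return [decoder.expr() for _ in range(n_features)]


def transform(X: Sequence[Sequence[float]], features: List[Feature]) -> List[List[float]]:
    """Map each original pattern x to the vector of artificial feature values."""
    rows = []
    for x in X:
        values = [fn(x) for _, fn in features]
        rows.append([v if math.isfinite(v) else 0.0 for v in values])
    return rows
```

For example, the chromosome `[0, 2, 0, 0, 2, 1]` decodes to the feature `(x1+x2)`. The matrix returned by `transform` plays the role of the transformed train set in step (b), on which a model $C$ would be trained to obtain the fitness.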

3. Results

- The column Year denotes the year in which the fires were recorded.

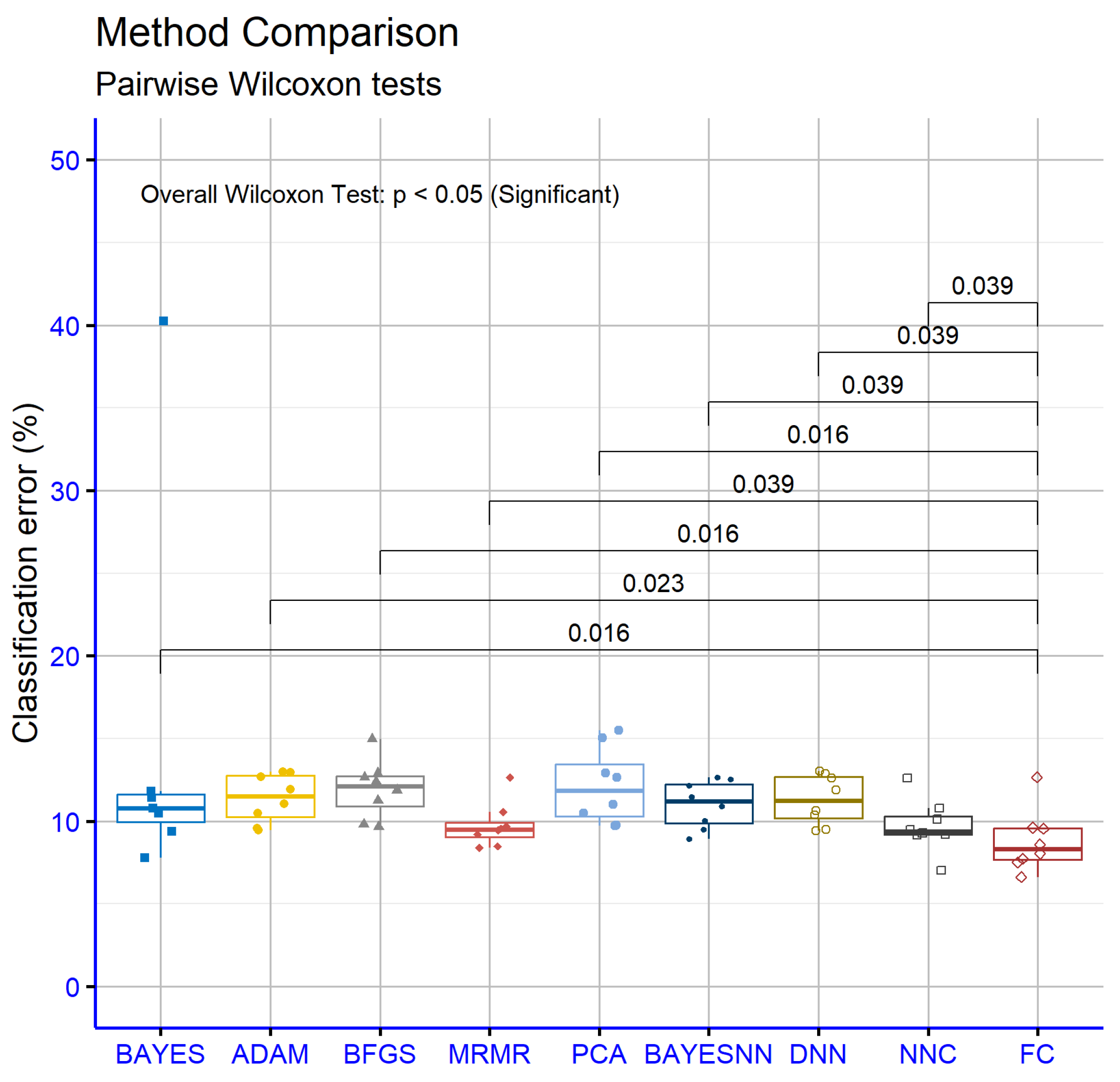

- The column BAYES denotes the application of the Naive Bayes method [100] to the corresponding dataset.

- The column ADAM represents the use of the ADAM optimizer [101] for training a neural network with $H$ processing nodes.

- The column BFGS denotes the use of the BFGS optimizer [93] to train a neural network with $H$ processing nodes.

- The column MRMR denotes the results obtained by the application of a neural network trained with the BFGS optimizer on two features selected using the MRMR technique.

- The column PCA stands for the results obtained by the application of a neural network trained with the BFGS optimizer on two features created using the PCA technique. The PCA variant implemented in MLPACK software [102] was incorporated to create these features.

- The column BAYESNN presents results using the Bayesian optimizer from the open-source BayesOpt library [103]. This method was used to train a neural network with 10 processing nodes.

- The column DNN denotes the use of a deep neural network as implemented in the tiny-dnn library, which can be downloaded freely from https://github.com/tiny-dnn/tiny-dnn (accessed on 10 October 2025). In this case, the network was trained with the AdaGrad optimization method [104].

- The column NNC denotes the usage of the method of Neural Network Construction on the proposed datasets. The software that implements this method was obtained from [105].

- The column FC represents the usage of the previously mentioned method for constructing artificial features. For the purposes of this article, two artificial features were created. These features were produced and evaluated using the QFc software version 1.0 [106].
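The ADAM column relies on the Adam update rule [101], with $\beta_1 = 0.9$ and $\beta_2 = 0.999$ as listed in the parameter table. The sketch below shows the update on a flat parameter vector. The learning rate of 0.001 and $\epsilon = 10^{-8}$ are the defaults suggested by Kingma and Ba and are assumptions here, because the section does not state them. The neural network itself is replaced by a toy quadratic loss.

```python
import numpy as np


class Adam:
    """Minimal Adam optimizer (Kingma and Ba) for a flat parameter vector."""

    def __init__(self, n_params: int, lr: float = 1e-3, beta1: float = 0.9,
                 beta2: float = 0.999, eps: float = 1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = np.zeros(n_params)  # first-moment (mean) estimate of the gradient
        self.v = np.zeros(n_params)  # second-moment (uncentred variance) estimate
        self.t = 0

    def step(self, params: np.ndarray, grad: np.ndarray) -> np.ndarray:
        self.t += 1
        self.m = self.beta1 * self.m + (1 - self.beta1) * grad
        self.v = self.beta2 * self.v + (1 - self.beta2) * grad ** 2
        m_hat = self.m / (1 - self.beta1 ** self.t)  # bias-corrected moments
        v_hat = self.v / (1 - self.beta2 ** self.t)
        return params - self.lr * m_hat / (np.sqrt(v_hat) + self.eps)


if __name__ == "__main__":
    # Toy loss ||w - target||^2, run for 2000 iterations as in the parameter table.
    target = np.array([3.0, -1.0, 0.5])
    opt, w = Adam(3, lr=0.01), np.zeros(3)
    for _ in range(2000):
        w = opt.step(w, 2.0 * (w - target))
    print(np.round(w, 3))
```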

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Field, J.F. London, Londoners and the Great Fire of 1666: Disaster and Recovery; Routledge: Oxfordshire, UK, 2017.

- Gowlett, J.A.J. The discovery of fire by humans: A long and convoluted process. Philos. Trans. R. Soc. B Biol. Sci. 2016, 371, 20150164.

- Heinonen, K. The Fire of Prometheus: More Than Just a Gift to Humanity. Greek Mythology. 19 November 2024. Available online: https://greek.mythologyworldwide.com/the-fire-of-prometheus-more-than-just-a-gift-to-humanity/ (accessed on 29 November 2024).

- McCaffrey, S. Thinking of wildfire as a natural hazard. Soc. Nat. Resour. 2004, 17, 509–516.

- Van Hees, P. The Burning Challenge of Fire Safety. ISO, International Organization for Standardization. Available online: https://www.iso.org/news/2014/11/Ref1906.html (accessed on 3 December 2024).

- UNEP. United Nations Environment Programme. Number of Wildfires to Rise by 50 per Cent by 2100 and Governments Are Not Prepared, Experts Warn. 23 February 2022. Available online: https://www.unep.org/news-and-stories/press-release/number-wildfires-rise-50-cent-2100-and-governments-are-not-prepared (accessed on 4 December 2024).

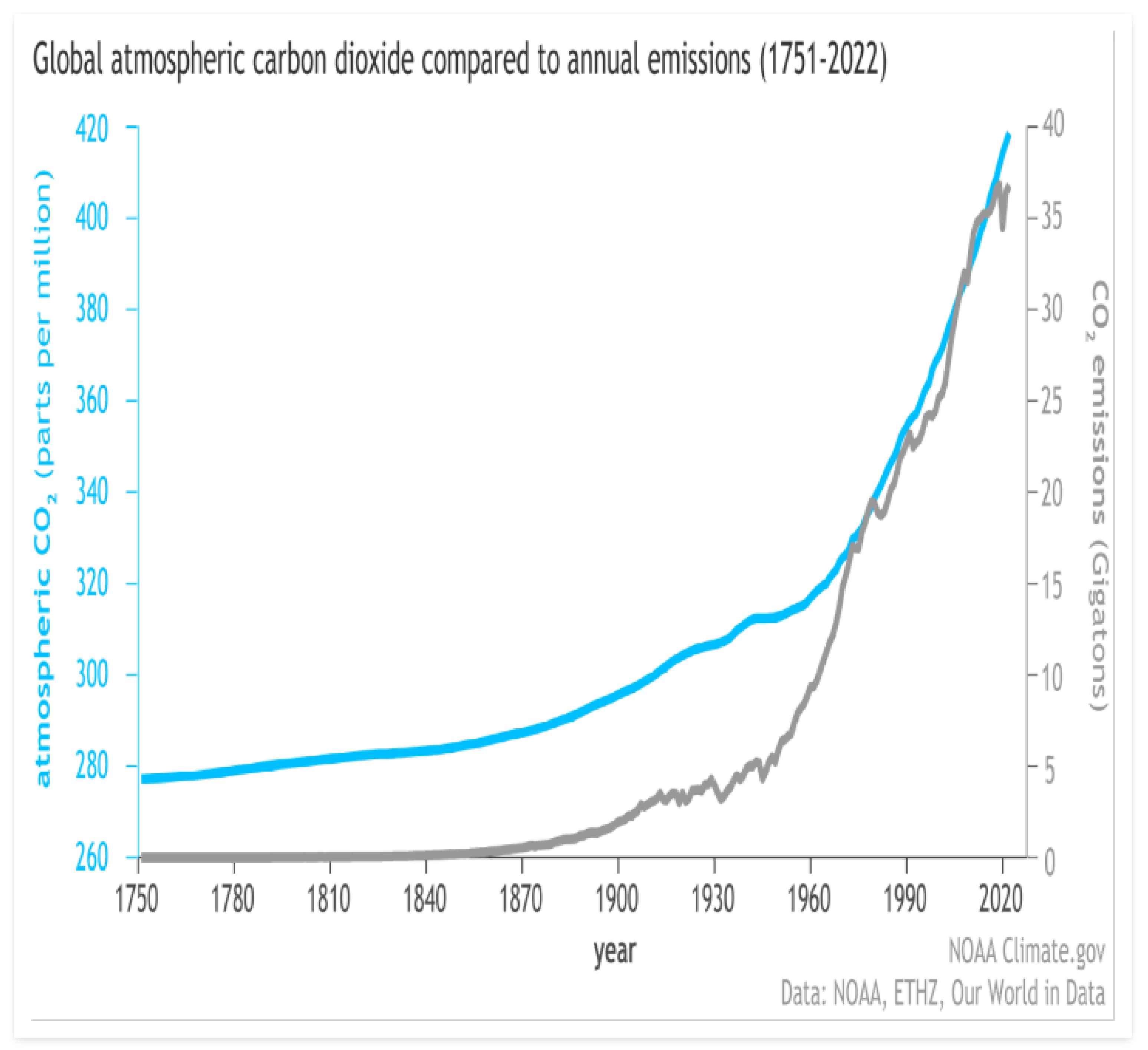

- NASA. Carbon Dioxide, Vital Signs. October 2024. Available online: https://climate.nasa.gov/vital-signs/carbon-dioxide/?intent=121 (accessed on 29 November 2024).

- Giorgi, F. Climate change hot-spots. Geophys. Res. Lett. 2006, 33, 783–792.

- Iliopoulos, N.; Aliferis, I.; Chalaris, M. Effect of Climate Evolution on the Dynamics of the Wildfires in Greece. Fire 2024, 7, 162.

- Satish, M.; Prakash; Babu, S.M.; Kumar, P.P.; Devi, S.; Reddy, K.P. Artificial Intelligence (AI) and the Prediction of Climate Change Impacts. In Proceedings of the 2023 IEEE 5th International Conference on Cybernetics, Cognition and Machine Learning Applications (ICCCMLA), Hamburg, Germany, 7–8 October 2023.

- Walsh, D. Tackling Climate Change with Machine Learning. MIT Sloan School of Management. Climate Change. 24 October 2023. Available online: https://mitsloan.mit.edu/ideas-made-to-matter/tackling-climate-change-machine-learning (accessed on 29 November 2024).

- ISO. Machine Learning (ML): All There Is to Know. International Organization for Standardization. Available online: https://www.iso.org/artificial-intelligence/machine-learning (accessed on 30 November 2024).

- Watson, I. How Alan Turing Invented the Computer Age. Scientific American. 26 April 2012. Available online: https://blogs.scientificamerican.com/guest-blog/how-alan-turing-invented-the-computer-age/ (accessed on 30 November 2024).

- Jain, P.; Coogan, S.C.; Subramanian, S.G.; Crowley, M.; Taylor, S.; Flannigan, M.D. A review of machine learning applications in wildfire science and management. Environ. Rev. 2020, 28, 478–505.

- Xiao, H. Estimating fire duration using regression methods. arXiv 2023, arXiv:2308.08936.

- Linardos, V.; Drakaki, M.; Tzionas, P.; Karnavas, Y.L. Machine learning in disaster management: Recent developments in methods and applications. Mach. Learn. Knowl. Extr. 2022, 4, 446–473.

- Sevinc, V.; Kucuk, O.; Goltas, M. A Bayesian network model for prediction and analysis of possible forest fire causes. For. Ecol. Manag. 2020, 457, 117723.

- Chen, F.; Jia, H.; Du, E.; Chen, Y.; Wang, L. Modeling of the cascading impacts of drought and forest fire based on a Bayesian network. Int. J. Disaster Risk Reduct. 2024, 111, 104716.

- Kim, B.; Lee, J. A Bayesian network-based information fusion combined with DNNs for robust video fire detection. Appl. Sci. 2021, 11, 7624.

- Nugroho, A.A.; Iwan, I.; Azizah, K.I.N.; Raswa, F.H. Peatland Forest Fire Prevention Using Wireless Sensor Network Based on Naïve Bayes Classifier. KnE Soc. Sci. 2019, 3, 20–34.

- Zainul, M.; Minggu, E. Classification of Hotspots Causing Forest and Land Fires Using the Naive Bayes Algorithm. Interdiscip. Soc. Stud. 2022, 1, 555–567.

- Karo, I.M.K.; Amalia, S.N.; Septiana, D. Wildfires Classification Using Feature Selection with K-NN, Naïve Bayes, and ID3 Algorithms. J. Softw. Eng. Inf. Commun. Technol. (SEICT) 2022, 3, 15–24.

- Vilar del Hoyo, L.; Martín Isabel, M.P.; Martínez Vega, F.J. Logistic regression models for human-caused wildfire risk estimation: Analysing the effect of the spatial accuracy in fire occurrence data. Eur. J. For. Res. 2011, 130, 983–996.

- de Bem, P.P.; de Carvalho Júnior, O.A.; Matricardi, E.A.T.; Guimarães, R.F.; Gomes, R.A.T. Predicting wildfire vulnerability using logistic regression and artificial neural networks: A case study in Brazil’s Federal District. Int. J. Wildland Fire 2018, 28, 35–45.

- Nhongo, E.J.S.; Fontana, D.C.; Guasselli, L.A.; Bremm, C. Probabilistic modelling of wildfire occurrence based on logistic regression, Niassa Reserve, Mozambique. Geomat. Nat. Hazards Risk 2019, 10, 1772–1792.

- Peng, W.; Wei, Y.; Chen, G.; Lu, G.; Ye, Q.; Ding, R.; Cheng, Z. Analysis of Wildfire Danger Level Using Logistic Regression Model in Sichuan Province, China. Forests 2023, 14, 2352.

- Hossain, F.A.; Zhang, Y.; Yuan, C.; Su, C.Y. Wildfire flame and smoke detection using static image features and artificial neural network. In Proceedings of the 2019 1st International Conference on Industrial Artificial Intelligence (IAI), Shenyang, China, 23–27 July 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–6.

- Lall, S.; Mathibela, B. The application of artificial neural networks for wildfire risk prediction. In Proceedings of the 2016 International Conference on Robotics and Automation for Humanitarian Applications (RAHA), Amritapuri, India, 18–20 December 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1–6.

- Sayad, Y.O.; Mousannif, H.; Al Moatassime, H. Predictive modeling of wildfires: A new dataset and machine learning approach. Fire Saf. J. 2019, 104, 130–146.

- Gao, K.; Feng, Z.; Wang, S. Using multilayer perceptron to predict forest fires in Jiangxi Province, Southeast China. Discret. Dyn. Nat. Soc. 2022, 2022, 6930812.

- Latifah, A.L.; Shabrina, A.; Wahyuni, I.N.; Sadikin, R. Evaluation of Random Forest model for forest fire prediction based on climatology over Borneo. In Proceedings of the 2019 International Conference on Computer, Control, Informatics and Its Applications (IC3INA), Tangerang, Indonesia, 23–24 October 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 4–8.

- Malik, A.; Rao, M.R.; Puppala, N.; Koouri, P.; Thota, V.A.K.; Liu, Q.; Chiao, S.; Gao, J. Data-driven wildfire risk prediction in northern California. Atmosphere 2021, 12, 109.

- Song, S.; Zhou, X.; Yuan, S.; Cheng, P.; Liu, X. Interpretable artificial intelligence models for predicting lightning prone to inducing forest fires. J. Atmos. Sol.-Terr. Phys. 2025, 267, 106408.

- Gao, C.; Lin, H.; Hu, H. Forest-fire-risk prediction based on random forest and backpropagation neural network of Heihe area in Heilongjiang province, China. Forests 2023, 14, 170.

- Hu, Z.; Zhao, J.; Zhang, S.; Ma, H.; Zhang, J. Development and Validation of a Novel Method to Predict Flame Behavior in Tank Fires Based on CFD Modeling and Machine Learning. Reliab. Eng. Syst. Saf. 2025, 264, 111368.

- Andela, N.; Morton, D.C.; Giglio, L.; Paugam, R.; Chen, Y.; Hantson, S.; van der Werf, G.R.; Randerson, J. The Global Fire Atlas of individual fire size, duration, speed and direction. Earth Syst. Sci. Data 2019, 11, 529–552.

- Kc, U.; Aryal, J.; Hilton, J.; Garg, S. A surrogate model for rapidly assessing the size of a wildfire over time. Fire 2021, 4, 20.

- Xie, Z.C.; Xu, Z.D.; Gai, P.P.; Xia, Z.H.; Xu, Y.S. A deep learning-based surrogate model for spatial-temporal temperature field prediction in subway tunnel fires via CFD simulation. J. Dyn. Disasters 2025, 1, 100002.

- Liang, H.; Zhang, M.; Wang, H. A neural network model for wildfire scale prediction using meteorological factors. IEEE Access 2019, 7, 176746–176755.

- Zhai, X.; Kong, W.; Hu, Z.; Zhang, C.; Ma, H.; Zhao, J. Prediction method and application of temperature distribution in typical confined space spill fires based on deep learning. Process Saf. Environ. Prot. 2025, 198, 107127.

- Xi, D.D.; Dean, C.B.; Taylor, S.W. Modeling the duration and size of wildfires using joint mixture models. Environmetrics 2021, 32, e2685.

- WFCA. Western Fire Chiefs Association. How Long Do Wildfires Last? October 2022. Available online: https://wfca.com/wildfire-articles/how-long-do-wildfires-last/ (accessed on 4 December 2024).

- Flato, G.M.; Boer, G.J. Warming asymmetry in climate change simulations. Geophys. Res. Lett. 2001, 28, 195–198.

- Whitmarsh, L. Behavioural responses to climate change: Asymmetry of intentions and impacts. J. Environ. Psychol. 2009, 29, 13–23.

- Xu, Y.; Ramanathan, V. Latitudinally asymmetric response of global surface temperature: Implications for regional climate change. Geophys. Res. Lett. 2012, 39.

- Ji, S.; Nie, J.; Lechler, A.; Huntington, K.W.; Heitmann, E.O.; Breecker, D.O. A symmetrical CO2 peak and asymmetrical climate change during the middle Miocene. Earth Planet. Sci. Lett. 2018, 499, 134–144.

- Gao, C.; An, R.; Wang, W.; Shi, C.; Wang, M.; Liu, K.; Wu, X.; Wu, G.; Shu, L. Asymmetrical lightning fire season expansion in the boreal forest of Northeast China. Forests 2021, 12, 1023.

- Smith, M.G.; Bull, L. Genetic Programming with a Genetic Algorithm for Feature Construction and Selection. Genet. Program. Evolvable Mach. 2005, 6, 265–281.

- Maćkiewicz, A.; Ratajczak, W. Principal components analysis (PCA). Comput. Geosci. 1993, 19, 303–342.

- Cadima, J.; Jolliffe, I.T. Principal Component analysis: A Review and Recent Developments, National Library of Medicine. 2016. Available online: https://pmc.ncbi.nlm.nih.gov/articles/PMC4792409/ (accessed on 16 November 2024).

- i2tutorials. What Are the Pros and Cons of the PCA? 1 October 2019. Available online: https://www.i2tutorials.com/what-are-the-pros-and-cons-of-the-pca/ (accessed on 16 November 2024).

- Park, S.C. Physical Meaning of Principal Component Analysis for Lattice Systems with Translational Invariance. arXiv 2024, arXiv:2410.22682.

- Sarma, O.; Rather, M.A.; Shahnaz, S.; Barwal, R.S. Principal Component Analysis of Morphometric Traits in Kashmir Merino Sheep. J. Adv. Biol. Biotechnol. 2024, 27, 362–369.

- Gambardella, C.; Parente, R.; Ciambrone, A.; Casbarra, M. A Principal Components Analysis-Based Method for the Detection of Cannabis Plants Using Representation data by Remote Sensing. Data 2021, 6, 108.

- Slavkovic, M.; Jevtic, D. Face Recognition Using Eigenface Approach. Serbian J. Electr. Eng. 2012, 9, 121–130.

- Hargreaves, C.A.; Mani, C.K. The Selection of Winning Stocks Using Principal Component Analysis. Am. J. Mark. 2015, 1, 183–188.

- Xu, Z.; Guo, F.; Ma, H.; Liu, X.; Gao, L. On Optimizing Hyperspectral Inversion of Soil Copper Content by Kernel Principal Component Analysis. Remote Sens. 2024, 16, 183–188.

- Zhang, H.; Srinivasa, R.; Yang, X.; Ahrentzen, S.; Coker, E.S.; Alwisy, A. Factors influencing indoor air pollution in buildings using PCA-LMBP neural network: A case study of a university campus. Build. Environ. 2022, 225, 109643.

- Lourakis, M.I. A brief description of the Levenberg-Marquardt algorithm implemented by levmar. Found. Res. Technol. 2005, 4, 1–6.

- Akinnuwesi, B.A.; Macaulay, B.O.; Aribisala, B.S. Breast cancer risk assessment and early diagnosis using Principal Component Analysis and support vector machine techniques. Inform. Med. Unlocked 2020, 21, 100459.

- Awad, M.; Khanna, R. Support vector machines for classification. In Efficient Learning Machines: Theories, Concepts, and Applications for Engineers and System Designers; Apress: Berkeley, CA, USA, 2015; pp. 39–66.

- Guan, R. Predicting forest fire with linear regression and random forest. Highlights Sci. Eng. Technol. 2023, 44, 1–7.

- Nikolov, N.; Bothwell, P.; Snook, J. Developing a gridded model for probabilistic forecasting of wildland-fire ignitions across the lower 48 States. In USFS-CSU Joint Venture Agreement Phase 2 (2019–2021)-Final Report; US Department of Agriculture, Forest Service, Rocky Mountain Research Station: Fort Collins, CO, USA, 2022; 33p.

- Nikolov, N.; Bothwell, P.; Snook, J. Probabilistic forecasting of lightning strikes over the Continental USA and Alaska: Model development and verification. Fire 2024, 7, 111.

- Ding, C.; Peng, H. Minimum redundancy feature selection from microarray gene expression data. J. Bioinform. Comput. Biol. 2005, 3, 185–205.

- Ramírez-Gallego, S.; Lastra, I.; Martínez-Rego, D.; Bolón-Canedo, V.; Benítez, J.M.; Herrera, F.; Alonso-Betanzos, A. Fast-mRMR: Fast Minimum Redundancy Maximum Relevance Algorithm for High-Dimensional Big Data. Int. J. Intell. Syst. 2017, 32, 134–152.

- Zhao, Z.; Anand, R.; Wang, M. Maximum relevance and minimum redundancy feature selection methods for a marketing machine learning platform. In Proceedings of the 2019 IEEE International Conference on Data Science and Advanced Analytics (DSAA), Washington, DC, USA, 5–8 October 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 442–452.

- Rigatti, S.J. Random forest. J. Insur. Med. 2017, 47, 31–39.

- Wu, H.; Yang, T.; Li, H.; Zhou, Z. Air quality prediction model based on mRMR–RF feature selection and ISSA–LSTM. Sci. Rep. 2023, 13, 12825.

- Elbeltagi, A.; Nagy, A.; Szabo, A.; Nxumalo, G.S.; Bodi, E.B.; Tamas, J. Hyperspectral indices data fusion-based machine learning enhanced by MRMR algorithm for estimating maize chlorophyll content. Front. Plant Sci. 2024, 15, 1419316.

- Liu, J.; Sun, H.; Li, Y.; Fang, W.; Niu, S. An improved power system transient stability prediction model based on mRMR feature selection and WTA ensemble learning. Appl. Sci. 2020, 10, 2255.

- Dietterich, T.G. Ensemble methods in machine learning. In International Workshop on Multiple Classifier Systems; Springer: Berlin/Heidelberg, Germany, 2000; pp. 1–15.

- Eristi, B. A New Approach based on Deep Features of Convolutional Neural Networks for Partial Discharge Detection in Power Systems. IEEE Access 2024, 12, 117026–117039.

- Li, Y.; Zhang, Y.; Zhu, H.; Yan, R.; Liu, Y.; Sun, L.; Zeng, Z. Recognition algorithm of acoustic emission signals based on conditional random field model in storage tank floor inspection using inner detector. Shock Vib. 2015, 2015, 173470.

- Karamouz, M.; Zahmatkesh, Z.; Nazif, S.; Razmi, A. An evaluation of climate change impacts on extreme sea level variability: Coastal area of New York City. Water Resour. Manag. 2014, 28, 3697–3714.

- O’Neill, M.; Ryan, C. Grammatical evolution. IEEE Trans. Evol. Comput. 2001, 5, 349–358.

- Tsoulos, I.G.; Gavrilis, D.; Glavas, E. Neural network construction and training using grammatical evolution. Neurocomputing 2008, 72, 269–277.

- Papamokos, G.V.; Tsoulos, I.G.; Demetropoulos, I.N.; Glavas, E. Location of amide I mode of vibration in computed data utilizing constructed neural networks. Expert Syst. Appl. 2009, 36, 12210–12213.

- Tsoulos, I.G.; Gavrilis, D.; Glavas, E. Solving differential equations with constructed neural networks. Neurocomputing 2009, 72, 2385–2391.

- Tsoulos, I.G.; Mitsi, G.; Stavrakoudis, A.; Papapetropoulos, S. Application of Machine Learning in a Parkinson’s Disease Digital Biomarker Dataset Using Neural Network Construction (NNC) Methodology Discriminates Patient Motor Status. Front. ICT 2019, 6, 10.

- Christou, V.; Tsoulos, I.G.; Loupas, V.; Tzallas, A.T.; Gogos, C.; Karvelis, P.S.; Antoniadis, N.; Glavas, E.; Giannakeas, N. Performance and early drop prediction for higher education students using machine learning. Expert Syst. Appl. 2023, 225, 120079.

- Toki, E.I.; Pange, J.; Tatsis, G.; Plachouras, K.; Tsoulos, I.G. Utilizing Constructed Neural Networks for Autism Screening. Appl. Sci. 2024, 14, 3053.

- Backus, J.W. The Syntax and Semantics of the Proposed International Algebraic Language of the Zurich ACM-GAMM Conference. In Proceedings of the International Conference on Information Processing, UNESCO, Paris, France, 15–20 June 1959; pp. 125–132.

- Gavrilis, D.; Tsoulos, I.G.; Dermatas, E. Selecting and constructing features using grammatical evolution. Pattern Recognit. Lett. 2008, 29, 1358–1365.

- Gavrilis, D.; Tsoulos, I.G.; Dermatas, E. Neural Recognition and Genetic Features Selection for Robust Detection of E-Mail Spam. In Hellenic Conference on Artificial Intelligence; Advances in Artificial Intelligence of the Series Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2006; Volume 3955, pp. 498–501.

- Georgoulas, G.; Gavrilis, D.; Tsoulos, I.G.; Stylios, C.; Bernardes, J.; Groumpos, P.P. Novel approach for fetal heart rate classification introducing grammatical evolution. Biomed. Signal Process. Control 2007, 2, 69–79.

- Smart, O.; Tsoulos, I.G.; Gavrilis, D.; Georgoulas, G. Grammatical evolution for features of epileptic oscillations in clinical intracranial electroencephalograms. Expert Syst. Appl. 2011, 38, 9991–9999.

- Tzallas, A.T.; Tsoulos, I.; Tsipouras, M.G.; Giannakeas, N.; Androulidakis, I.; Zaitseva, E. Classification of EEG signals using feature creation produced by grammatical evolution. In Proceedings of the 24th Telecommunications Forum (TELFOR), Belgrade, Serbia, 22–23 November 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1–4.

- Park, J.; Sandberg, I.W. Universal Approximation Using Radial-Basis-Function Networks. Neural Comput. 1991, 3, 246–257.

- Yu, H.; Xie, T.; Paszczynski, S.; Wilamowski, B.M. Advantages of Radial Basis Function Networks for Dynamic System Design. IEEE Trans. Ind. Electron. 2011, 58, 5438–5450.

- Bishop, C. Neural Networks for Pattern Recognition; Oxford University Press: Oxford, UK, 1995.

- Cybenko, G. Approximation by superpositions of a sigmoidal function. Math. Control Signals Syst. 1989, 2, 303–314.

- Powell, M.J.D. A Tolerant Algorithm for Linearly Constrained Optimization Calculations. Math. Program. 1989, 45, 547–566.

- Tsoulos, I.G.; Charilogis, V.; Kyrou, G.; Stavrou, V.N.; Tzallas, A. OPTIMUS: A Multidimensional Global Optimization Package. J. Open Source Softw. 2025, 10, 7584.

- Hall, M.; Frank, E.; Holmes, G.; Pfahringer, B.; Reutemann, P.; Witten, I.H. The WEKA data mining software: An update. ACM SIGKDD Explor. Newsl. 2009, 11, 10–18.

- Aher, S.B.; Lobo, L.M.R.J. Data mining in educational system using weka. In International conference on emerging technology trends. Found. Comput. Sci. 2011, 3, 20–25.

- Hussain, S.; Dahan, N.A.; Ba-Alwib, F.M.; Ribata, N. Educational data mining and analysis of students’ academic performance using WEKA. Indones. J. Electr. Eng. Comput. Sci. 2018, 9, 447–459.

- Sigurdardottir, A.K.; Jonsdottir, H.; Benediktsson, R. Outcomes of educational interventions in type 2 diabetes: WEKA data-mining analysis. Patient Educ. Couns. 2007, 67, 21–31.

- Amin, M.N.; Habib, A. Comparison of different classification techniques using WEKA for hematological data. Am. J. Eng. Res. 2015, 4, 55–61.

- Webb, G.I.; Keogh, E.; Miikkulainen, R. Naïve Bayes. Encycl. Mach. Learn. 2010, 15, 713–714.

- Kingma, D.P.; Ba, J.L. ADAM: A method for stochastic optimization. In Proceedings of the 3rd International Conference on Learning Representations (ICLR 2015), San Diego, CA, USA, 7–9 May 2015; pp. 1–15.

- Curtin, R.R.; Cline, J.R.; Slagle, N.P.; March, W.B.; Ram, P.; Mehta, N.A.; Gray, A.G. MLPACK: A Scalable C++ Machine Learning Library. J. Mach. Learn. Res. 2013, 14, 801–805.

- Martinez-Cantin, R. BayesOpt: A Bayesian Optimization Library for Nonlinear Optimization, Experimental Design and Bandits. J. Mach. Learn. Res. 2014, 15, 3735–3739.

- Ward, R.; Wu, X.; Bottou, L. Adagrad stepsizes: Sharp convergence over nonconvex landscapes. J. Mach. Learn. Res. 2020, 21, 1–30.

- Tsoulos, I.G.; Tzallas, A.; Tsalikakis, D. NNC: A tool based on Grammatical Evolution for data classification and differential equation solving. SoftwareX 2019, 10, 100297.

- Tsoulos, I.G. QFC: A Parallel Software Tool for Feature Construction, Based on Grammatical Evolution. Algorithms 2022, 15, 295.

| Year | Raw Data | Deleted | Final Data |
|---|---|---|---|
| 2014 | 6834 | 1158 | 5676 |
| 2015 | 8117 | 1358 | 6759 |
| 2016 | 10,258 | 1642 | 8616 |
| 2017 | 10,355 | 1678 | 8677 |
| 2018 | 8005 | 1363 | 6642 |
| 2019 | 9499 | 2280 | 7219 |
| 2020 | 11,798 | 4579 | 7219 |
| 2021 | 9513 | 2417 | 7096 |
| TOTAL | 74,379 | 16,475 | 57,904 |

| Name | Meaning | Value |
|---|---|---|
| $N_c$ | Chromosomes | 500 |
| $N_g$ | Generations | 200 |
| $p_s$ | Selection rate | 0.1 |
| $p_m$ | Mutation rate | 0.05 |
| $N_f$ | Number of features | 2 |
| $H$ | Number of weights | 10 |
| | Iterations for BFGS | 2000 |
| | Iterations for ADAM | 2000 |
| $\beta_1$ | Parameter for ADAM | 0.9 |
| $\beta_2$ | Parameter for ADAM | 0.999 |

| Year | BAYES | ADAM | BFGS | MRMR | PCA | BAYESNN | DNN | NNC | FC |
|---|---|---|---|---|---|---|---|---|---|
| 2014 | 11.41% | 13.00% | 12.38% | 9.68% | 15.50% | 12.14% | 13.01% | 9.21% | 8.04% |
| 2015 | 10.49% | 11.94% | 11.25% | 8.49% | 15.03% | 11.48% | 11.89% | 9.17% | 7.51% |
| 2016 | 10.79% | 12.95% | 11.88% | 9.45% | 12.93% | 12.53% | 12.89% | 10.12% | 8.60% |
| 2017 | 53.36% | 12.68% | 12.65% | 12.65% | 12.64% | 12.65% | 12.62% | 12.61% | 12.66% |
| 2018 | 9.39% | 10.48% | 14.97% | 9.21% | 10.49% | 10.02% | 10.38% | 9.29% | 7.72% |
| 2019 | 7.79% | 9.44% | 9.66% | 8.39% | 9.72% | 8.94% | 9.41% | 7.03% | 6.62% |
| 2020 | 40.26% | 9.56% | 9.80% | 9.55% | 9.76% | 9.50% | 9.50% | 9.50% | 9.61% |
| 2021 | 11.81% | 11.06% | 12.90% | 10.57% | 11.03% | 10.90% | 10.62% | 10.80% | 9.55% |
| AVERAGE | 19.41% | 11.39% | 11.94% | 9.75% | 12.14% | 11.02% | 11.29% | 9.72% | 8.79% |

| Year | ADAM | BFGS | MRMR | PCA | BAYESNN | DNN | NNC | FC |
|---|---|---|---|---|---|---|---|---|
| 2014 | 0.06 | 0.47 | 0.07 | 0.10 | 0.33 | 0.06 | 0.99 | 0.17 |
| 2015 | 0.08 | 0.19 | 0.12 | 0.06 | 0.22 | 0.11 | 0.70 | 0.17 |
| 2016 | 0.04 | 0.12 | 0.07 | 0.03 | 0.20 | 0.06 | 0.57 | 0.19 |
| 2017 | 0.05 | 0.03 | 0.06 | 0.14 | 0.05 | 0.04 | 0.11 | 0.04 |
| 2018 | 0.06 | 0.51 | 0.16 | 0.05 | 0.20 | 0.08 | 0.47 | 0.17 |
| 2019 | 0.07 | 0.45 | 0.12 | 0.03 | 0.29 | 0.05 | 0.49 | 0.18 |
| 2020 | 0.05 | 0.07 | 0.03 | 0.04 | 0.04 | 0.04 | 0.12 | 0.19 |
| 2021 | 0.08 | 0.12 | 0.14 | 0.13 | 0.26 | 0.08 | 0.48 | 0.18 |

| Year | | | |
|---|---|---|---|
| 2014 | 8.36% | 8.04% | 7.95% |
| 2015 | 8.10% | 7.51% | 7.24% |
| 2016 | 8.61% | 8.60% | 8.15% |
| 2017 | 12.68% | 12.66% | 12.46% |
| 2018 | 7.68% | 7.72% | 7.51% |
| 2019 | 6.77% | 6.62% | 6.49% |
| 2020 | 9.50% | 9.61% | 9.58% |
| 2021 | 10.53% | 9.55% | 9.62% |
| AVERAGE | 9.03% | 8.79% | 8.63% |

| Year | NNC | NNC | NNC | FC | FC | FC |
|---|---|---|---|---|---|---|
| 2014 | 9.90% | 9.78% | 9.21% | 8.74% | 8.77% | 8.04% |
| 2015 | 9.13% | 9.07% | 9.17% | 8.24% | 8.30% | 7.51% |
| 2016 | 9.98% | 10.18% | 10.12% | 8.98% | 8.92% | 8.60% |
| 2017 | 12.63% | 12.63% | 12.61% | 12.66% | 12.71% | 12.66% |
| 2018 | 8.58% | 8.80% | 9.29% | 7.96% | 7.85% | 7.72% |
| 2019 | 7.40% | 7.48% | 7.03% | 6.55% | 6.69% | 6.62% |
| 2020 | 9.43% | 9.51% | 9.50% | 9.50% | 9.50% | 9.61% |
| 2021 | 10.97% | 10.98% | 10.80% | 9.65% | 9.90% | 9.55% |
| AVERAGE | 9.75% | 9.80% | 9.72% | 9.04% | 9.08% | 8.79% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kopitsa, C.; Tsoulos, I.G.; Miltiadous, A.; Charilogis, V. Predicting the Forest Fire Duration Enriched with Meteorological Data Using Feature Construction Techniques. Symmetry 2025, 17, 1785. https://doi.org/10.3390/sym17111785
