1. Introduction

Underwater image enhancement (UIE) aims to restore the visual quality of imagery degraded by the underwater optical environment. Central to this work are two of the most prevalent and challenging degradations: color distortion caused by the wavelength-selective attenuation of light, and haze-like blurring accompanied by significant contrast reduction resulting from light scattering. The effective correction of these issues is critical for reconstructing obscured details, enhancing contrast, and restoring a natural visual appearance [1]. Advances in underwater imaging systems have enabled large-scale and convenient image acquisition, providing a valuable data foundation for marine science and engineering applications [2]. Consequently, the ability to reliably address these core problems has established UIE as a pivotal research direction in marine computer vision, with broad applications spanning marine resource exploration [3], underwater robotic navigation [4], environmental monitoring, and archaeological surveying [5].

Early UIE methods can be broadly categorized into two paradigms: physical model-based and non-physical model-based approaches [6]. Methods grounded in physical models, such as the Underwater Dark Channel Prior (UDCP) [7] and red-channel restoration techniques [8], leverage principles of underwater optics to estimate transmission maps and perform degradation compensation. However, these methods often face challenges in accurately estimating scene-dependent parameters under dynamically changing underwater conditions. Non-physical approaches, including histogram stretching in color spaces [9], multi-scale fusion strategies [5,10], and Retinex-based decomposition models [11,12], operate primarily in the pixel or frequency domains to improve perceptual quality. While these methods demonstrate effectiveness in certain scenarios, they often produce visually inconsistent or physically implausible results, primarily because they fail to account for the underlying optical mechanisms governing underwater image formation. Wang et al. [13] further categorized these methods via experimental review, laying groundwork for later data-driven approaches.

The advent of data-driven methodologies, particularly deep learning, has markedly reshaped the UIE landscape. These methods learn complex degradation-to-clean mappings from large collections of paired underwater images, enabling end-to-end enhancement without explicit physical assumptions. Convolutional Neural Networks (CNNs) have seen widespread application in this field. For instance, the seminal UWCNN framework [14] adopts a multi-branch design to decouple and hierarchically process color and structural information, efficiently learning feature representations tailored for UIE. Despite their success, the local inductive bias of convolution operations limits their ability to capture long-range, spatially variant degradation patterns. Generative Adversarial Networks (GANs) have also gained prominence. Models such as WaterGAN [15] incorporate physical priors into the generator to simulate realistic underwater scenes and employ adversarial learning to improve perceptual authenticity. Nevertheless, GAN-based methods remain prone to training instability and mode collapse, directly leading to the generation of artifacts and inconsistent outputs in practical UIE tasks.

Recent years have witnessed data-driven approaches advancing UIE, though they also bring new challenges. Transformer-based models, such as the architecture proposed by Lu et al. [16], integrate transformer blocks into a ResNet-50 backbone and leverage multiscale feature fusion to capture global contextual information. These methods often incorporate multi-dimensional attention mechanisms to identify regions requiring enhancement and employ multi-color-space inputs (e.g., RGB, HSV, LAB) to improve restoration performance across diverse degradation types. Although effective in handling spatially varying degradations, their high computational demands (stemming from the quadratic complexity of self-attention) and large parameter counts hinder deployment on resource-constrained underwater platforms. Conversely, lightweight models such as UResNet [17] (with Sobel-filter and squeeze-and-excitation variants) and Zhang et al.’s efficient CNN [18] prioritize computational economy. The latter, for example, uses a compact CNN and YUV post-processing to enable real-time enhancement. Yet these approaches typically apply uniform processing and do not distinguish between clean and severely degraded regions, which leads to localized over- or under-enhancement. Notably, both types of methods suffer from a fundamental symmetry-breaking issue: transformer-based models over-emphasize performance optimization at the cost of efficiency, while lightweight models prioritize efficiency at the expense of quality. This asymmetry between performance and efficiency violates the symmetry principle in system design, where balanced adaptation to task requirements is essential for robust practical deployment.

To address the aforementioned challenges, this paper proposes a Dual-Path Physics-Guided Mamba Network (DPPGM) for UIE. By integrating physical optics principles with deep learning, DPPGM not only effectively handles spatially heterogeneous degradations but also achieves a balanced trade-off between enhancement performance and computational efficiency—two critical requirements for practical underwater deployment. Central to this approach is the principle of symmetry, which we define as the dynamic equilibrium maintained between data-driven learning and physical constraints throughout the enhancement process.

Diverging from traditional techniques that rely exclusively on either rigid physical models (lacking adaptability to complex degradation variations) or unconstrained data-driven learning (prone to non-physical artifacts), DPPGM effectively combines these two paradigms through symmetric collaboration. The model operates through four key stages: First, a degradation-aware detector extracts shallow features while identifying initial degradation distribution. Second, a dual-path Mamba module processes clean and degraded regions in parallel—this design establishes symmetric functional paths where each region receives processing intensity proportional to its degradation level, avoiding the asymmetry resulting from uniform processing of conventional methods. Third, physics-guided optimization, rooted in the Jaffe–McGlamery underwater optical model, introduces symmetry constraints derived from optical propagation laws, ensuring enhancements align with real-world physics. Finally, a compact subspace fusion mechanism achieves symmetric aggregation of multi-stage features, preventing dominance of any single feature scale and balancing detail preservation with computational efficiency. By unifying degradation-adaptive processing, physical constraints, and efficient feature fusion, DPPGM provides a principled and deployable solution for high-quality underwater image restoration. The main contributions of this work are summarized as follows:

- (1) A Dual-Path Physics-Guided Mamba Network (DPPGM) is tailored for underwater image enhancement, specifically targeting the handling of spatially varying optical degradations while balancing enhancement quality and computational efficiency. Distinguishing itself from conventional methods that use uniform processing or overlook physical–optical integration, this method employs a degradation-aware dual-path Mamba module to separately process clean and degraded regions, leveraging selective sequence modeling to capture long-range degradation dependencies.

- (2) An integrated framework incorporating symmetry-constrained physics-guided optimization and compact subspace fusion. The former, constrained by the Jaffe–McGlamery model, ensures symmetric alignment between enhancements and optical laws, while the latter enables symmetric multi-stage feature integration, preserving critical information without efficiency loss. This synergy bridges physical principles and data-driven learning through symmetric collaboration, delivering balanced gains in quality and efficiency.

- (3) Comprehensive experimental evaluations were conducted on three benchmark UIE datasets (UIEB, LSUI, and U45) to systematically assess the performance of the proposed DPPGM. Quantitative and qualitative results collectively demonstrate that DPPGM matches or surpasses the accuracy of current state-of-the-art (SOTA) techniques. Notably, this competitive performance is attained while maintaining lightweight computational complexity: the model has only 1.48 M trainable parameters and requires 25.39 G FLOPs for inference, making it considerably more efficient than the compared SOTA methods.

The remainder of this paper is structured as follows: Section 2 reviews related work; Section 3 details the proposed DPPGM framework; Section 4 presents experimental settings and results; and Section 5 concludes the study with future directions.

3. Proposed DPPGM Framework

3.1. Overview

Given a degraded underwater image $I \in \mathbb{R}^{H \times W \times 3}$, where $H$ and $W$ denote spatial dimensions, the objective is to recover the corresponding clean image $J \in \mathbb{R}^{H \times W \times 3}$. This restoration task is formulated as the following minimization problem:

$$\theta^{*} = \arg\min_{\theta} \mathcal{L}\big(f_{\theta}(I),\, J\big),$$

where $f_{\theta}$ represents the enhancement model parameterized by $\theta$, and $\mathcal{L}$ denotes a loss function quantifying the discrepancy between the enhanced image $\hat{J} = f_{\theta}(I)$ and ground truth $J$. The goal is to produce outputs that are both visually superior and physically consistent.

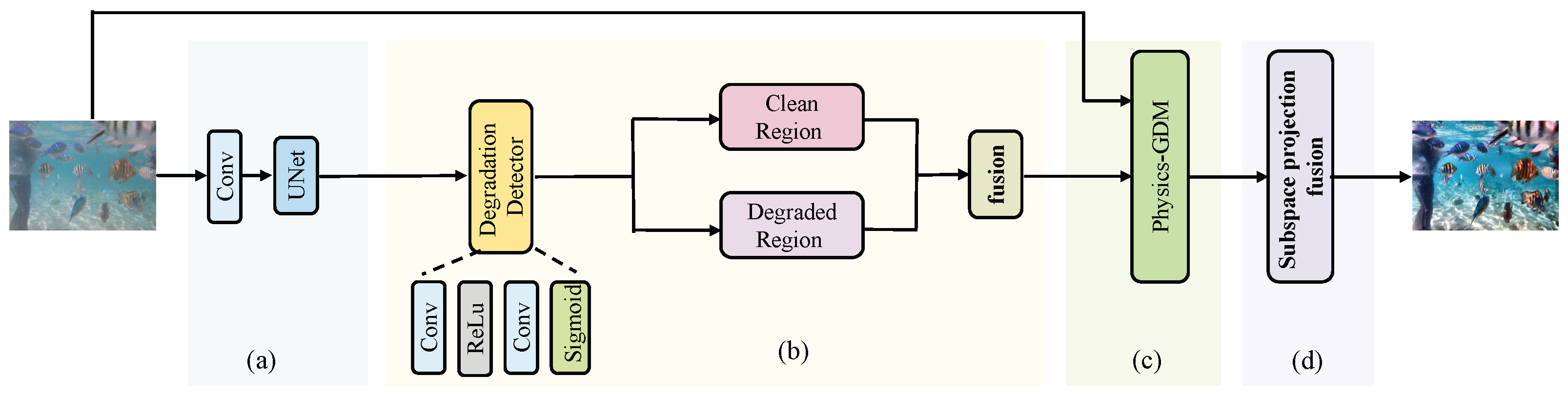

The proposed Dual-Path Physics-Guided Mamba Network (DPPGM) is designed to integrate adaptive degradation-aware processing with physically grounded constraints. This framework combines data-driven learning with physics-based optimization, leveraging their complementary strengths: the former captures complex and spatially variant degradation patterns, while the latter ensures strict fidelity to established underwater optical principles. As illustrated in Figure 1, the DPPGM comprises four core components: (a) Shallow Feature Extractor: Uses a CNN for initial low-level feature extraction and a UNet for multiscale processing, building foundational representations for enhancement. (b) Dual-Path Mamba Module: Employs a lightweight degradation detector to guide separate processing of clean and degraded regions through Mamba blocks, enabling adaptive handling of spatially varying degradation. (c) Physics-Guided Gradient Descent Module: Integrates physical constraints derived from the Jaffe–McGlamery model to refine intermediate results toward physically plausible outputs. (d) Subspace Projection Fusion: Combines low-rank subspace projection for compact cross-stage feature integration (reducing redundancy while preserving critical information) and Mamba-based refinement to enhance feature consistency.

3.2. Shallow Feature Extractor

As the foundational feature extraction component of the DPPGM framework, this module employs convolutional operations to transform the raw underwater input into an initial set of low-level features. These features are subsequently processed by a UNet-based encoder–decoder architecture to capture multi-scale representations.

The extraction process consists of two primary steps. First, a convolutional layer projects the 3-channel RGB input into a 40-channel feature space, enhancing representational capacity while retaining local spatial structures. This expanded feature map then serves as input to a UNet structure. Through a series of strided convolutions (for downsampling), transposed convolutions (for upsampling), and skip connections, the UNet captures multi-scale information: the encoder pathway condenses spatial resolution to encode high-level semantics, while the decoder progressively reconstructs spatial details by integrating upsampled features with skip-connected encoder outputs. This design enables the module to represent both fine-grained textures and larger structural patterns, forming a critical foundation for subsequent enhancement stages.

Although the UNet excels at capturing local spatial correlations through its convolutional inductive bias, it remains limited in modeling long-range dependencies and adapting to spatially varying degradation patterns. These limitations are explicitly addressed in the subsequent Dual-Path Mamba Module, which incorporates selective state-space modeling to efficiently capture global context and dynamically focus on degraded regions. This combination ensures that the framework benefits from the robust local feature learning of the UNet while extending its capability to handle complex, non-uniform underwater degradation in a computationally efficient manner.

3.3. Dual-Path Mamba Module

As the adaptive core of the DPPGM, the Dual-Path Mamba Module provides a differentiated solution for addressing the spatially heterogeneous degradation specific to underwater images, including uneven turbidity gradients, localized backscattering hotspots, and wavelength-dependent light attenuation disparities. Unlike conventional uniform feature processing paradigms, this module leverages Mamba’s selective state space model (SSM) to efficiently capture long-range cross-pixel degradation correlations with linear computational complexity. Simultaneously, a novel region-aware mechanism enables dynamic allocation of computational resources, prioritizing processing for severely degraded regions while preserving original details in clean regions, thereby balancing restoration performance against computational efficiency. This design follows a symmetry principle by establishing a “degradation level-processing intensity” mapping between clean and degraded regions, avoiding the symmetry-breaking caused by uniform processing.

The module takes feature maps from the shallow feature extractor (dimensions $H \times W \times C$, where $C = 40$) as input. As illustrated in the Dual-Path Mamba Module (Figure 1b), it processes features through three core stages (degradation mask generation, dual-path feature processing, and adaptive fusion) to produce degradation-adaptive feature maps for subsequent physics-guided refinement.

First, a lightweight degradation detector generates a pixel-level degradation mask $M \in [0, 1]^{H \times W}$ through a compact convolutional structure:

$$M = \sigma\big(\mathrm{Conv}_{2}(\mathrm{Conv}_{1}(F))\big),$$

where $F$ denotes the input feature map, $\sigma$ is the sigmoid function, the first convolution compresses channels from 40 to 5 ($C/8$), and the second produces a single-channel output. Physically, $M \to 0$ corresponds to clean regions with preserved textures, $M \to 1$ indicates severely degraded regions, and intermediate values represent transition zones.
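The detector described above can be sketched per pixel as two small channel-mixing steps followed by a squashing function. This is only an illustration under stated assumptions, not the authors' implementation: the 1×1 kernel size, the hidden ReLU, the sigmoid output, and the random weights are all hypothetical choices; only the 40 → 5 → 1 channel layout comes from the text.

```python
import math
import random

def conv1x1(pixel, weights, bias):
    """A 1x1 convolution at one pixel is a matrix-vector product over channels."""
    return [sum(w * x for w, x in zip(row, pixel)) + b
            for row, b in zip(weights, bias)]

def degradation_mask(features, w1, b1, w2, b2):
    """features: H x W x 40 nested lists -> H x W mask with values in (0, 1)."""
    mask = []
    for row in features:
        mask_row = []
        for pixel in row:
            hidden = [max(0.0, v) for v in conv1x1(pixel, w1, b1)]  # 40 -> 5, ReLU (assumed)
            logit = conv1x1(hidden, w2, b2)[0]                      # 5 -> 1
            mask_row.append(1.0 / (1.0 + math.exp(-logit)))         # sigmoid (assumed)
        mask.append(mask_row)
    return mask

# Hypothetical random weights and a tiny 2 x 3 feature map with 40 channels.
rng = random.Random(0)
C, HIDDEN = 40, 5
w1 = [[rng.uniform(-0.1, 0.1) for _ in range(C)] for _ in range(HIDDEN)]
b1 = [0.0] * HIDDEN
w2 = [[rng.uniform(-0.1, 0.1) for _ in range(HIDDEN)]]
b2 = [0.0]
features = [[[rng.uniform(-1.0, 1.0) for _ in range(C)] for _ in range(3)]
            for _ in range(2)]
mask = degradation_mask(features, w1, b1, w2, b2)
```

With trained weights, values near 0 would mark clean pixels and values near 1 would mark severely degraded ones; with the random weights used here the mask carries no meaning beyond its shape and range.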

Within the overall architecture, two parallel Mamba base units—designated as the Clean Region path and the Degraded Region path, as shown in

Figure 1b—operate concurrently under the guidance of the degradation mask

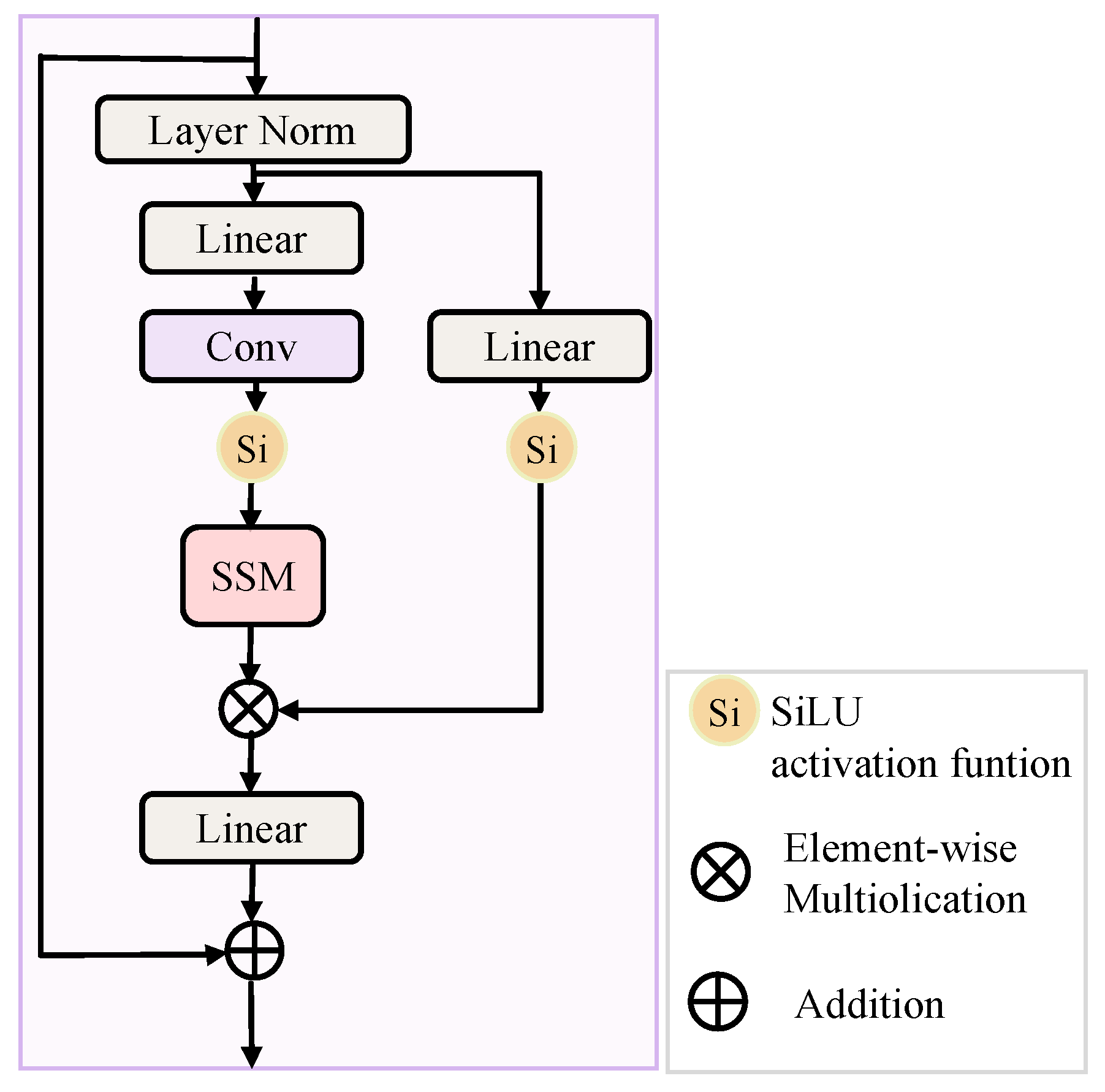

. It is noteworthy that both units share an identical architectural design, as depicted in

Figure 2.

The Clean Region unit specializes in preserving and refining features in less-corrupted areas, while the Degraded Region unit focuses on reconstructing and enhancing severely degraded regions. Both follow a consistent processing pipeline: initial feature normalization via Layer Norm, followed by a sequence comprising linear projection, convolutional operations, and SSM. Between these stages, SiLU activation functions introduce nonlinearity, while element-wise multiplication and addition operations facilitate the integration of intermediate features.

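The selective state space mechanism implements Mamba’s core formulation:

$$h'(t) = A\,h(t) + B\,x(t), \qquad y(t) = C\,h(t),$$

where $h(t) \in \mathbb{R}^{N}$ is the hidden state, with data-dependent parameterization:

$$B = \mathrm{Linear}_{B}(x), \qquad C = \mathrm{Linear}_{C}(x), \qquad \Delta = \mathrm{softplus}\big(\mathrm{Linear}_{\Delta}(x)\big),$$

and discrete-time transformation (with $\mathbf{1}$ the identity matrix):

$$\bar{A} = \exp(\Delta A), \qquad \bar{B} = (\Delta A)^{-1}\big(\exp(\Delta A) - \mathbf{1}\big)\,\Delta B, \qquad h_{t} = \bar{A}\,h_{t-1} + \bar{B}\,x_{t}, \qquad y_{t} = C\,h_{t}.$$

For computational efficiency, we employ parallel scan algorithms that retain the linear complexity of the sequential recurrence while allowing it to be evaluated in parallel. The selection of $G = 4$ and $N = 16$ reflects a standard trade-off in lightweight model design, aiming for sufficient modeling capacity without excessive complexity. This configuration is consistent with effective practices established in related state-space literature.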
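To make the recurrence concrete, the following is a minimal sequential sketch of a selective scan for a single channel with a diagonal state matrix. It uses the standard zero-order-hold discretization; the weight names (`w_B`, `w_C`, `w_delta`) and the scalar projections are hypothetical simplifications, and the plain Python loop stands in for the parallel scan mentioned above.

```python
import math

def softplus(v):
    """Smooth positive mapping used for the step size."""
    return math.log1p(math.exp(v))

def selective_scan(x, A, w_B, w_C, w_delta):
    """Sequential selective scan for one channel.

    x: list of L floats (the flattened pixel sequence).
    A: list of N negative floats (diagonal of the state matrix).
    w_B, w_C: lists of N floats; w_delta: float (hypothetical projections).
    """
    h = [0.0] * len(A)
    y = []
    for x_t in x:
        delta = softplus(w_delta * x_t)      # input-dependent step size
        B_t = [w * x_t for w in w_B]         # input-dependent input matrix
        C_t = [w * x_t for w in w_C]         # input-dependent output matrix
        for n in range(len(A)):
            A_bar = math.exp(delta * A[n])           # exp(delta * A)
            B_bar = (A_bar - 1.0) / A[n] * B_t[n]    # zero-order hold for B
            h[n] = A_bar * h[n] + B_bar * x_t        # state update
        y.append(sum(c * s for c, s in zip(C_t, h)))
    return y

# Tiny example with N = 2 states and a sequence of four values.
outputs = selective_scan([0.5, -0.2, 0.1, 0.9], A=[-1.0, -0.5],
                         w_B=[1.0, 0.5], w_C=[0.3, 0.7], w_delta=1.0)
```

Because `A` is negative and the step size is positive, each `A_bar` lies in (0, 1), so older inputs decay at a rate that the current input controls; this is the selectivity the text refers to.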

The two Mamba units achieve functional specialization through mask-weighted gradient modulation: the clean-unit’s learning is weighted by $1 - M$, focusing on noise suppression and detail preservation; the degradation-unit’s learning is weighted by $M$, specializing in turbidity modeling and backscatter suppression.

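Finally, pixel-level adaptive fusion integrates the outputs:

$$F_{\mathrm{out}} = (1 - M) \odot F_{\mathrm{clean}} + M \odot F_{\mathrm{deg}},$$

where $F_{\mathrm{clean}}$ and $F_{\mathrm{deg}}$ are the outputs of the Clean Region and Degraded Region units, $M$ is the degradation mask, and $\odot$ denotes element-wise multiplication.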

This strategy ensures natural preservation in clean regions, effective restoration in degraded areas, and seamless transitions in boundary zones.
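The fusion step can be illustrated as a per-pixel convex blend of the two path outputs. This sketch assumes a mask value of 0 means clean and 1 means degraded, and uses single-channel maps for brevity; the function name `fuse` is hypothetical.

```python
def fuse(clean, degraded, mask):
    """Pixel-wise blend: (1 - m) * clean + m * degraded for each position."""
    return [[(1.0 - m) * c + m * d for c, d, m in zip(c_row, d_row, m_row)]
            for c_row, d_row, m_row in zip(clean, degraded, mask)]

# One row with a clean pixel, a transition pixel, and a degraded pixel.
clean_out = [[1.0, 1.0, 1.0]]
degraded_out = [[3.0, 3.0, 3.0]]
mask = [[0.0, 0.5, 1.0]]
fused = fuse(clean_out, degraded_out, mask)
```

Intermediate mask values interpolate between the two paths, which is what produces smooth transitions at region boundaries.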

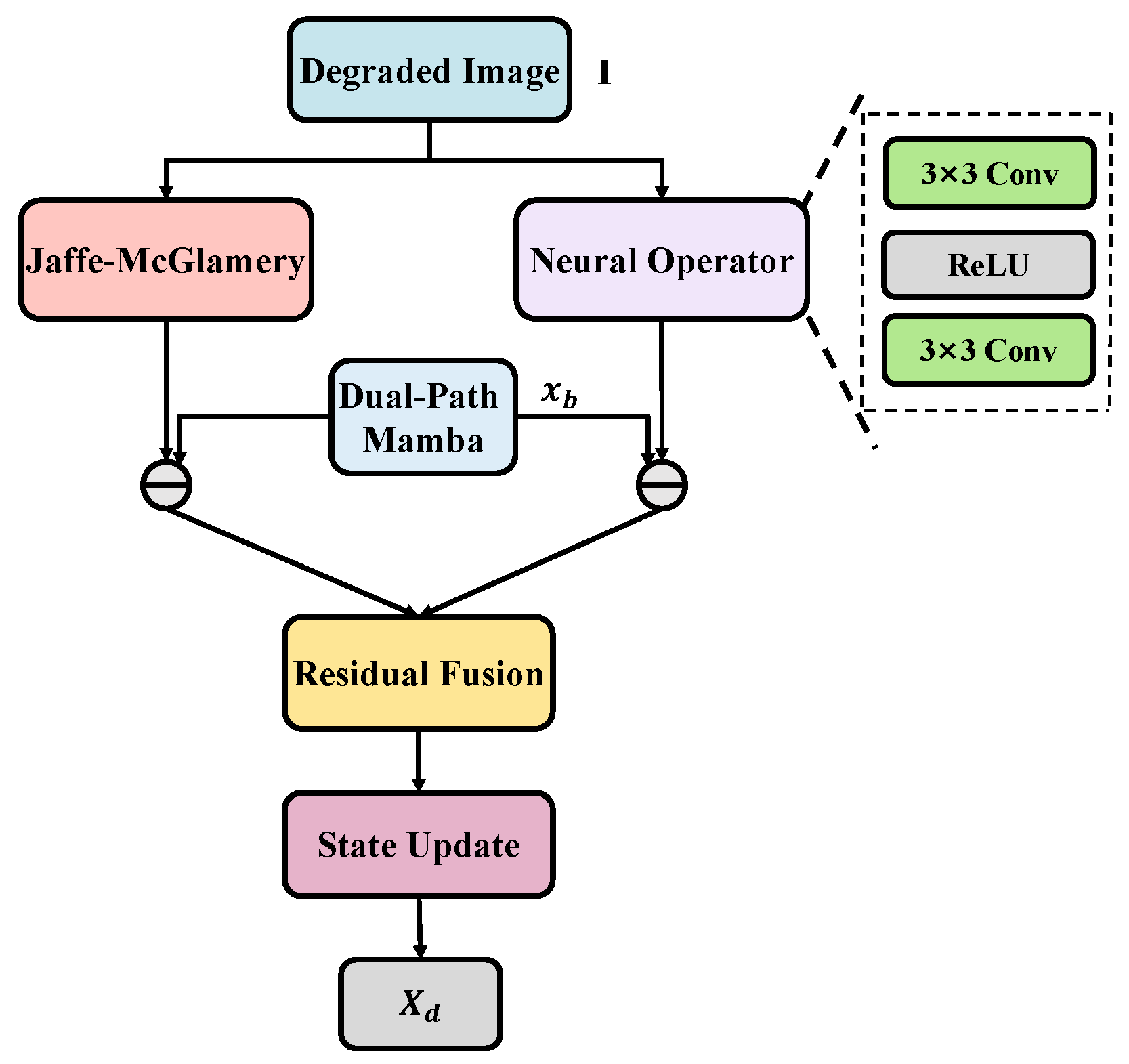

3.4. Physics-Guided Gradient Descent Module

The Physics-Guided Gradient Descent Module (PhysicsGDM) establishes a bidirectional integration framework that synergizes data-driven enhancement with underwater optical physics. As illustrated in Figure 3, this module merges a physics-informed formulation with learned feature representations to ensure both physical plausibility and adaptive enhancement performance.

The core of PhysicsGDM is built upon a Jaffe–McGlamery-inspired optical model that explicitly incorporates depth-dependent light propagation, effectively capturing wavelength-specific attenuation and backscattering effects inherent in underwater environments. The scene radiance (restored optical signal) is computed as follows:

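$$J(x) = \frac{I(x) - B\,\big(1 - t(x)\big)}{t(x)}, \qquad t(x) = e^{-c\,d(x)},$$

where $I(x)$ is the observed image, $t(x)$ denotes transmittance (governed by the learnable attenuation coefficient $c$), $d(x)$ is the depth map derived from wavelength-specific attenuation properties, and $B$ (background light) is estimated via dark channel prior and spatial pooling.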

All physical parameters are constrained to positive values, ensuring consistency with optical principles while enabling adaptive adjustment to varying water conditions.
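As a worked illustration of the optical model, the sketch below applies the commonly used simplified formation model (direct signal attenuated by transmittance plus backscattered background light) and then inverts it. The per-channel attenuation coefficients, pixel values, background light, and the lower clamp `t_min` are hypothetical; in the paper these quantities are learned or estimated.

```python
import math

# Hypothetical per-channel attenuation coefficients; red light is absorbed
# most strongly in water, so it gets the largest value.
ATTENUATION = {"r": 0.60, "g": 0.20, "b": 0.10}

def transmittance(depth, c):
    """Beer-Lambert style transmittance exp(-c * d)."""
    return math.exp(-c * depth)

def degrade(J, depth, B, c):
    """Forward model: attenuated scene radiance plus backscatter."""
    t = transmittance(depth, c)
    return J * t + B * (1.0 - t)

def restore(I, depth, B, c, t_min=0.05):
    """Invert the forward model; t is clamped to avoid dividing by ~0."""
    t = max(transmittance(depth, c), t_min)
    return (I - B * (1.0 - t)) / t

pixel = {"r": 0.8, "g": 0.6, "b": 0.4}        # clean scene radiance
background = {"r": 0.1, "g": 0.5, "b": 0.6}   # bluish-green background light
depth = 3.0
observed = {ch: degrade(pixel[ch], depth, background[ch], ATTENUATION[ch])
            for ch in pixel}
restored = {ch: restore(observed[ch], depth, background[ch], ATTENUATION[ch])
            for ch in pixel}
```

The red channel loses most of its signal in `observed`, which is the color cast the module is meant to undo; with exact parameters the inversion recovers the original pixel.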

The module employs a dual-branch architecture that integrates physical modeling with data-driven learning through a pair of convolutional operators. A physics-based residual $r_{\mathrm{phy}}$ quantifies deviations from the optical model, while a neural residual $r_{\mathrm{net}}$ captures data-driven enhancement errors. These residuals are adaptively fused into a single residual $r$ via a learned weighting factor $\alpha = \sigma(\rho)$, where $\sigma$ denotes the sigmoid function and $\rho$ is a trainable step size parameter. The enhanced image is iteratively refined through physics-informed gradient descent:

$$\hat{J}^{(k+1)} = \hat{J}^{(k)} - \eta\, r^{(k)},$$

where $\eta$ denotes the learning rate and $k$ indexes the refinement iteration.
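The iterative refinement can be illustrated with a scalar toy problem in which the residual is the analytic gradient of a data-consistency term under the simplified formation model. This sketch deliberately omits the learned neural residual and the learned weighting factor, and all numeric values are hypothetical.

```python
import math

def refine(I, depth, B, c, eta=1.0, steps=200):
    """Gradient descent on 0.5 * (J * t + B * (1 - t) - I) ** 2 with respect to J."""
    t = math.exp(-c * depth)
    J = I  # start from the degraded observation
    for _ in range(steps):
        residual = t * (J * t + B * (1.0 - t) - I)  # physics-based gradient
        J -= eta * residual                          # descent step
    return J

# Synthesize an observation from a known radiance, then recover it.
J_true, depth, B, c = 0.8, 2.0, 0.3, 0.4
t = math.exp(-c * depth)
I_obs = J_true * t + B * (1.0 - t)
J_hat = refine(I_obs, depth, B, c)
```

Each step shrinks the error by a factor of `1 - eta * t**2`, so the estimate converges whenever that factor has magnitude below one; a learned residual would replace the analytic gradient in the full module.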

This design preserves the well-posed inverse problem structure of underwater image enhancement by incorporating explicit depth-dependent scattering modeling, adaptively tuned physical parameters, and a stable fusion mechanism. The integration of these physical constraints serves to regularize the solution space, anchoring it to optically plausible outcomes. This regularization not only contributes to stable training convergence but also enhances the model’s generalization capability by reducing over-reliance on patterns specific to the training data, thereby supporting robust performance across diverse turbidity conditions through a balance between physics-derived plausibility and data-driven adaptability.

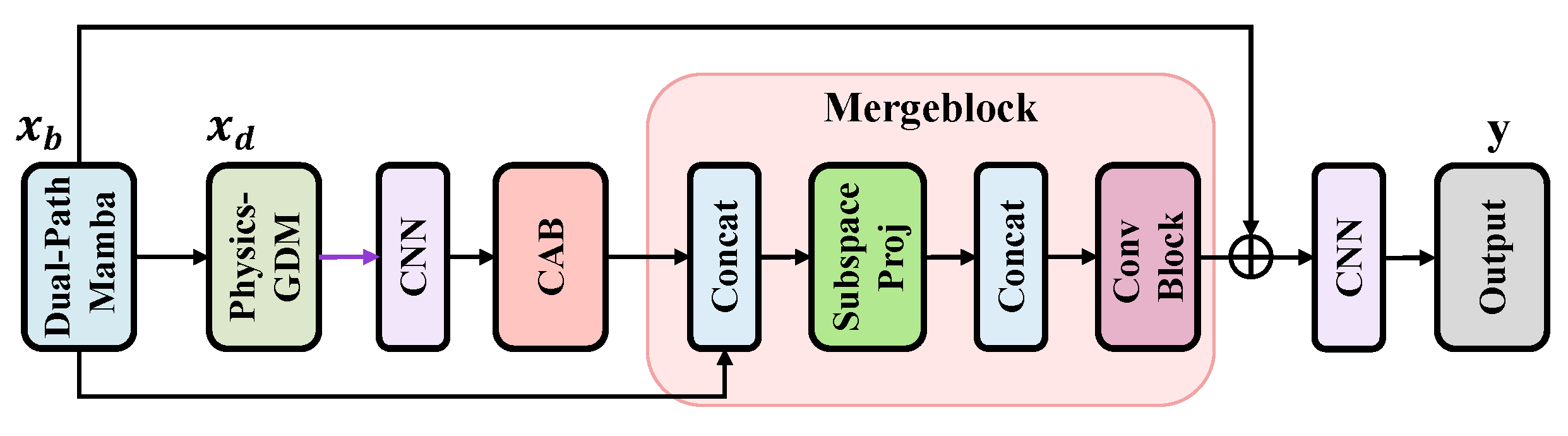

3.5. Subspace Projection Fusion

This module is designed for the efficient integration of multi-stage features, with a primary input stream originating from the Dual-Path Mamba Module. As illustrated in Figure 4, it employs low-rank subspace projection to compactly combine features while preserving critical information, particularly from the Mamba-enhanced pathway.

Implemented within the MergeBlock class, the fusion process integrates two feature sources: the current-stage features and cross-stage features (which include outputs from the Dual-Path Mamba Module). The computational workflow proceeds as follows:

Feature Concatenation: The current-stage features $I$ are processed by a CNN and a CAB (Convolution-based Attention Block). Then, the cross-stage (bridge) features $b$ are concatenated with the processed current-stage features $\tilde{I}$ along the channel dimension:

$$F_{\mathrm{cat}} = \mathrm{Concat}(\tilde{I},\, b).$$

Low-Rank Subspace Projection: The concatenated features are projected into a low-dimensional subspace to reduce redundancy while retaining essential structural and semantic information. A convolutional subnetwork $\mathcal{S}$ generates a projection matrix $V \in \mathbb{R}^{K \times HW}$:

$$V = \frac{\mathcal{S}(F_{\mathrm{cat}})}{N},$$

where $K$ is the subspace dimension and $N$ is a normalization term ensuring orthogonality. The transpose projection matrix is obtained through dimension permutation:

$$V^{\top} = \mathrm{permute}(V).$$

Orthogonal Reconstruction: Matrix inversion stabilizes the projection operation:

$$P = (V V^{\top})^{-1} V.$$

The bridge features $b$ are then reconstructed in the low-rank subspace:

$$\hat{b} = b\, V^{\top} P.$$

Feature Re-integration: The projected bridge features $\hat{b}$ are merged with the original features $I$ via concatenation, followed by a convolutional layer and residual connection:

$$F_{\mathrm{out}} = \mathrm{Conv}\big(\mathrm{Concat}(I,\, \hat{b})\big) + I.$$

This design effectively integrates Mamba-enhanced features through a compact low-rank representation, reducing computational complexity while preserving critical information from the multi-stage processing pipeline. The subspace fusion mechanism ensures efficient feature recombination while maintaining enhancement performance across diverse underwater conditions.
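The projection step can be illustrated with small matrices. The sketch below projects flattened bridge features onto the row space of a two-row basis using the normal-equation form described above. The basis values, the L1 row normalization, and the fixed subspace size of 2 are assumptions made for illustration; in the network the basis is produced by a convolutional subnetwork.

```python
def matmul(A, B):
    """Plain matrix product of nested lists."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def transpose(A):
    return [list(col) for col in zip(*A)]

def inverse_2x2(M):
    (a, b), (c, d) = M
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]

def normalize_rows(V, eps=1e-6):
    """L1-normalize each basis row (assumed form of the normalization term)."""
    return [[v / (eps + sum(abs(u) for u in row)) for v in row] for row in V]

def project(bridge, V):
    """bridge: C x HW, V: 2 x HW. Returns bridge projected onto span of V's rows."""
    V = normalize_rows(V)
    Vt = transpose(V)                       # HW x 2
    gram_inv = inverse_2x2(matmul(V, Vt))   # (V V^T)^{-1}
    P = matmul(gram_inv, V)                 # 2 x HW
    return matmul(matmul(bridge, Vt), P)    # C x HW

basis = [[1.0, 0.0, 0.0, 0.0],
         [0.0, 1.0, 0.0, 0.0]]
bridge = [[1.0, 2.0, 3.0, 4.0]]
projected = project(bridge, basis)          # keeps only the first two positions
```

Projecting a second time leaves the result unchanged, which is the defining property of a projection and a quick sanity check for any implementation of this block.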

4. Experiments

4.1. Dataset Description

To comprehensively evaluate the performance of the proposed DPPGM framework, experiments are conducted on two categories of test datasets: (1) full-reference datasets containing paired degraded and clear images, and (2) no-reference datasets without ground truth references.

4.1.1. Full-Reference Datasets

UIEB (Underwater Image Enhancement Benchmark): This benchmark includes 890 paired underwater images (e.g., coral reefs, marine fauna) and adopts a standard split: 800 pairs for training and 90 for testing. The model was trained and evaluated on this fixed partition under supervised learning, ensuring fair comparison with consistent data distribution. (Dataset available at: https://www.kaggle.com/datasets/larjeck/uieb-dataset-raw, accessed on 20 October 2024).

LSUI (Large-Scale Underwater Image): Focused on challenging underwater conditions, this dataset contains 4279 paired images. We used a fixed random split (3879 training / 400 test pairs) and followed the same supervised learning protocol as UIEB for model training and evaluation. (Dataset available at: https://github.com/LintaoPeng/U-shape_Transformer_for_Underwater_Image_Enhancement, accessed on 20 October 2024).
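A fixed random split of this kind can be reproduced by shuffling pair indices with a fixed seed. The sketch below is only an illustration: the seed value and the function name `fixed_split` are hypothetical, and the actual partition used in the experiments is not specified beyond its sizes.

```python
import random

def fixed_split(num_pairs, num_test, seed):
    """Shuffle indices with a fixed seed and cut off the first num_test for testing."""
    indices = list(range(num_pairs))
    random.Random(seed).shuffle(indices)
    test = sorted(indices[:num_test])
    train = sorted(indices[num_test:])
    return train, test

# LSUI sizes from the text: 4279 pairs, 400 held out for testing.
train_ids, test_ids = fixed_split(num_pairs=4279, num_test=400, seed=0)
```

Using a dedicated `random.Random` instance keeps the split independent of any other random state in the training script.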

4.1.2. No-Reference Datasets

U45: This dataset comprises 45 underwater images exhibiting complex degradation patterns, including high turbidity and non-uniform illumination, without corresponding reference images. It serves as a challenging testbed to evaluate the generalization capability and practical applicability of enhancement methods in real-world scenarios. Since U45 lacks ground truth, it was only used for testing: after the model was fully trained on the UIEB and LSUI training sets, its generalization was validated on the 45 U45 images. (Dataset available at: https://github.com/qianday/U45, accessed on 23 September 2025).

Challenging60 (C60): This subset of the UIEB benchmark contains 60 particularly difficult images characterized by extreme turbidity, dense suspended particles, and severe visibility degradation. It represents some of the most challenging conditions encountered in practical underwater imaging. Following the standard evaluation protocol, we used C60 exclusively as a test set to rigorously assess the model’s robustness and generalization capability under near-zero visibility scenarios that are distinct from the training data distribution. (Dataset available at: https://github.com/qianday/Challenging60, accessed on 23 September 2025).

To ensure a fair and reproducible comparison, the performance of all competing methods was obtained by either using officially released pre-trained models or by our strict re-implementation following the training details and data splitting strategies described in their respective original publications.

4.2. Experimental Details

Experimental Setting: All experiments are implemented using PyTorch 2.1.1 and conducted on a Linux workstation equipped with an NVIDIA GeForce RTX 4090 GPU. The manufacturer of this GPU is NVIDIA Corporation, and the GPU was procured in Yantai, Shandong Province, China. During training, multiple data augmentation techniques are applied to enhance diversity, including random flipping, rotation, transposition, mixing, and cropping. The model is trained with a batch size of 4 for both UIEB and LSUI datasets using the AdamW optimizer (, ) with an initial learning rate of . A CosineAnnealingLR scheduler is adopted to dynamically adjust the learning rate within the range of to , incorporating periodic warm-up phases to mitigate the risk of convergence to local minima. The model was trained for a total of 300 epochs.
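For reference, the closed form of a single cosine annealing cycle is sketched below. The learning-rate bounds are hypothetical placeholders chosen only to make the example runnable, and the periodic warm-up phases mentioned above are not modeled; only the 300-epoch training length comes from the text.

```python
import math

def cosine_annealing_lr(epoch, total_epochs, lr_max, lr_min):
    """Cosine decay from lr_max at epoch 0 to lr_min at total_epochs."""
    cos_term = 1.0 + math.cos(math.pi * epoch / total_epochs)
    return lr_min + 0.5 * (lr_max - lr_min) * cos_term

# Hypothetical bounds; only EPOCHS = 300 is taken from the text.
LR_MAX, LR_MIN, EPOCHS = 2e-4, 1e-6, 300
schedule = [cosine_annealing_lr(e, EPOCHS, LR_MAX, LR_MIN) for e in range(EPOCHS + 1)]
```

The rate falls slowly at first, fastest near the midpoint, and flattens again as it approaches the lower bound.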

Evaluation Metrics: A comprehensive set of metrics is employed to evaluate enhancement performance across different aspects. For full-reference assessment on UIEB and LSUI, we utilize Peak Signal-to-Noise Ratio (PSNR) for pixel-level fidelity, Structural Similarity Index (SSIM) for structural consistency, and Learned Perceptual Image Patch Similarity (LPIPS) for deep feature-based perceptual quality. Underwater-specific metrics include the Underwater Color Image Quality Evaluation (UCIQE) for color restoration and the Underwater Image Quality Measure (UIQM) for integrated colorfulness, sharpness, and contrast performance. For the no-reference U45 and Challenging60 datasets, evaluation incorporates Total Variation (TV) for noise and smoothness assessment, Blind/Referenceless Image Spatial Quality Evaluator (BRISQUE) for naturalness estimation, and Natural Image Quality Evaluator (NIQE) for no-reference perceptual quality. UCIQE and UIQM are also reported on these datasets to maintain consistency with the full-reference benchmarks.
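As a concrete reference for the pixel-level fidelity metric, PSNR can be computed from the mean squared error between the reference and the enhanced image. The sketch below assumes intensities scaled to [0, 1] and flattened into plain lists; the sample values are illustrative only.

```python
import math

def psnr(reference, enhanced, max_value=1.0):
    """Peak Signal-to-Noise Ratio in dB for two equally sized flattened images."""
    squared_errors = [(r - e) ** 2 for r, e in zip(reference, enhanced)]
    mse = sum(squared_errors) / len(squared_errors)
    if mse == 0.0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)

reference = [0.0, 0.0, 0.0, 0.0]
enhanced = [0.1, 0.1, 0.1, 0.1]
score = psnr(reference, enhanced)  # uniform error of 0.1 gives about 20 dB
```

Because the scale is logarithmic, halving the root-mean-square error raises PSNR by roughly 6 dB, which helps when reading gaps between methods in the result tables.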

4.3. Comparison with State-of-the-Art Methods

4.3.1. Quantitative Results

In this section, we present a comprehensive evaluation of the proposed DPPGM framework across three benchmark datasets (UIEB, LSUI, and U45) and compare its performance against 13 state-of-the-art underwater image enhancement methods: WaterNet [28], UWCNN [14], AirNet [29], PUIE-Net [46], DeepWaveNet [38], PUGAN [32], U-Transformer [33], DDFormer [34], HCLR-Net [30], Unfold [35], HUPE [47], UVZ [36], and UDNet [37]. The comparison encompasses both objective metric-based assessments and qualitative visual analyses to thoroughly demonstrate the effectiveness and superiority of our approach.

Comprehensive quantitative evaluations across three benchmark datasets demonstrate the superior performance of DPPGM compared to state-of-the-art methods. As detailed in Table 1, on the UIEB dataset, DPPGM achieves the highest SSIM value of 0.921, surpassing the second-best method, UVZ (0.910). While the absolute improvement in SSIM (0.011) may appear modest, it represents a consistent and meaningful enhancement observed across multiple test samples. This performance advantage is especially pronounced in complex underwater scenes containing fine details such as coral branches and fish fins, where DPPGM effectively preserves subtle structural information that is often compromised or oversmoothed by other methods. In terms of UIQM, which holistically evaluates color, sharpness, and contrast, DPPGM attains a score of 4.053, ranking among the top performers and underscoring its balanced enhancement capability. Furthermore, DPPGM maintains strong pixel-level fidelity with a PSNR of 24.16, remaining competitive with leading approaches such as UVZ and DDFormer.

On the LSUI dataset, which focuses on low-light underwater conditions, DPPGM demonstrates consistent performance advantages, as shown in Table 2. It achieves a leading SSIM of 0.931, a 0.032 improvement over the second-best method HUPE (0.899), a margin that substantially exceeds typical variations in underwater image enhancement benchmarks. Similarly, DPPGM attains a PSNR of 28.269, surpassing UVZ by 1.679 and HUPE by 2.802, differences that are considered substantial in visual quality assessment. The method also excels perceptually, achieving an LPIPS score of 0.0957. The consistency of these improvements across several metrics indicates that the gains are not an artifact of any single measure.

For the no-reference U45 dataset, DPPGM again delivers outstanding results, as shown in Table 3. It achieves the lowest NIQE score of 4.203, reflecting superior naturalness and perceptual quality in the absence of ground truth. With a UIQM value of 3.594, it ranks first among all compared methods, demonstrating comprehensive strength in color enhancement, sharpness improvement, and contrast adjustment. Moreover, DPPGM attains a TV value of 29.138, illustrating its ability to effectively suppress noise while preserving critical structural details, further validating its generalization capability in real-world underwater scenarios.

The evaluation is extended to the Challenging60 dataset to assess performance under more severe degradation conditions. As shown in Table 4, DPPGM achieves a leading UIQM score of 3.626, demonstrating a substantial advantage in overall perceptual quality. The method also attains competitive scores in noise reduction (TV: 33.589) and naturalness preservation (NIQE: 5.847). The consistent superiority of DPPGM on this challenging benchmark, which contains turbidities distinct from the training data, underscores its strong generalization capability and practical utility in diverse underwater environments.

4.3.2. Qualitative Visual Analysis

To provide a concise yet comprehensive visual comparison, we select seven representative models from the thirteen state-of-the-art methods considered in this study. The selection criteria encompass overall performance across datasets, architectural diversity, and methodological representativeness, ensuring coverage of various technical paradigms—including physics-based, CNN-based, transformer-based, and lightweight approaches.

As supported by quantitative metrics, the visual results produced by our DPPGM consistently demonstrate superior performance across the UIEB, LSUI, and U45 datasets, with enhancements tailored to the specific characteristics of each scenario.

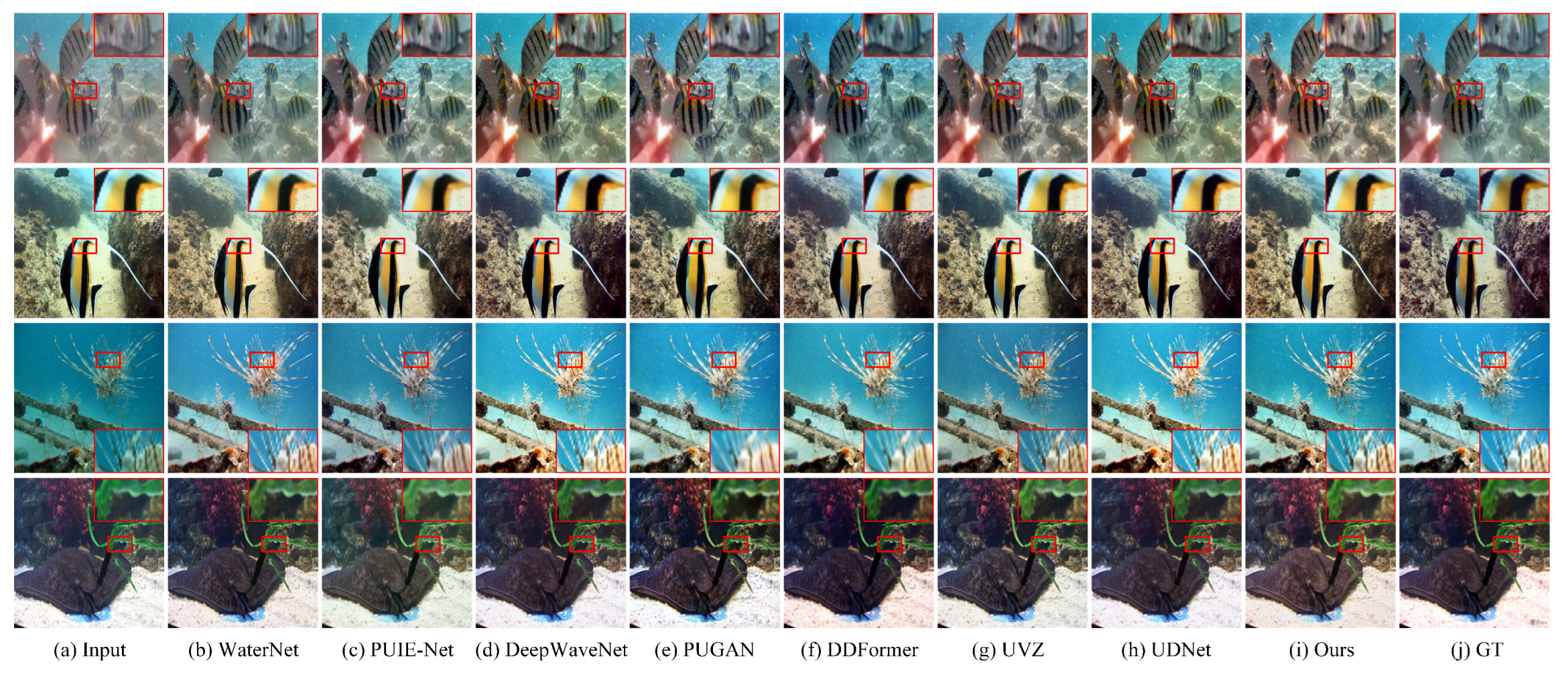

As illustrated in Figure 5, DPPGM demonstrates exceptional performance on the UIEB dataset, particularly in preserving fine structural details and maintaining natural color fidelity across diverse marine scenes. In images featuring fish and complex aquatic vegetation, our method successfully retains delicate patterns including skin textures and fin contours, while effectively avoiding the over-saturation or excessive smoothing commonly observed in other approaches. Comparative analyses reveal that while DeepWaveNet tends to over-enhance colors and DDFormer often oversmooths subtle elements, DPPGM achieves a balanced and visually coherent reconstruction that closely aligns with natural underwater appearance. The consistent outperformance against models like UVZ and DDFormer underscores the effectiveness of our core innovation: the symmetry-constrained dual-path cooperative design. Unlike the sequential processing in these methods, our framework enables deeper synergy between physical principles and data-driven learning, leading to more balanced and robust enhancement.

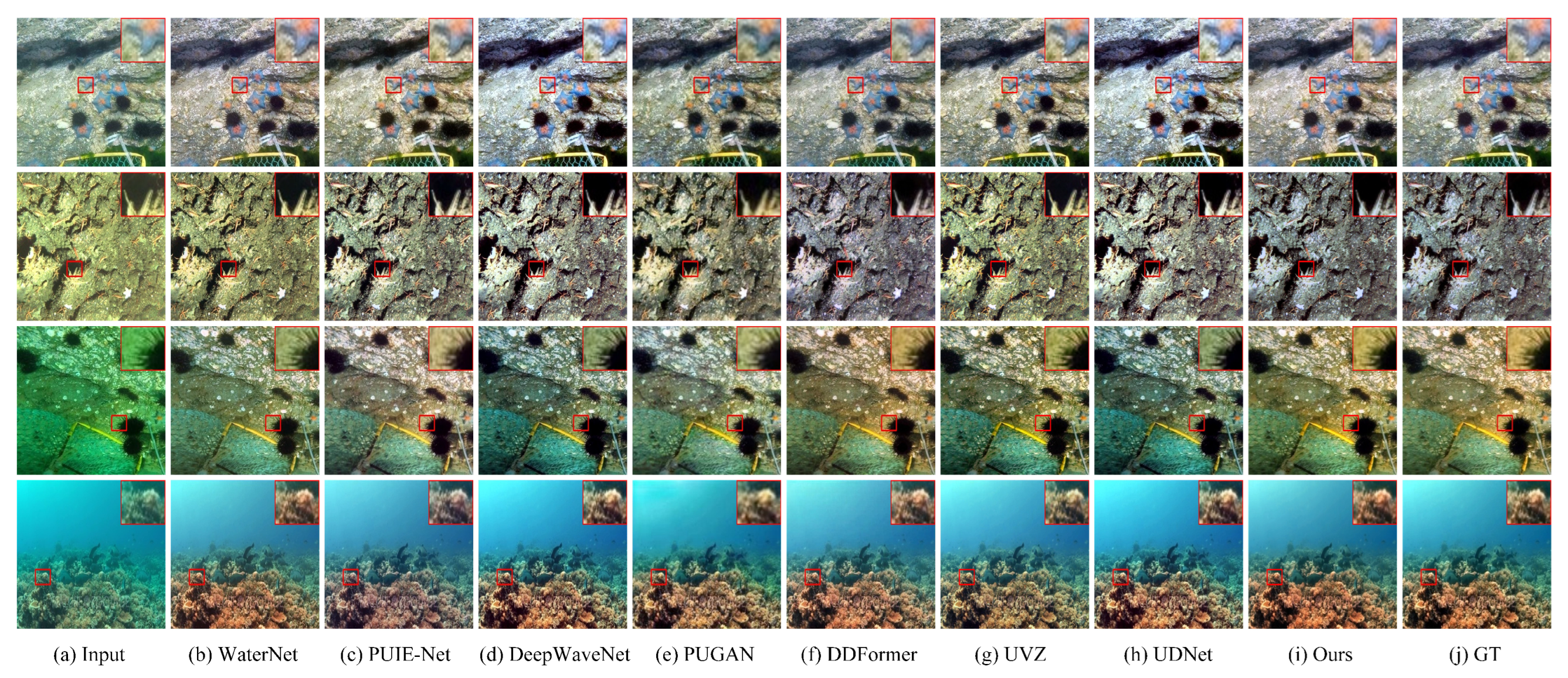

Figure 6 showcases DPPGM’s remarkable capability in enhancing poorly illuminated seabed environments from the LSUI low-light dataset. The visual results demonstrate effective restoration of topographic details including rock formations and coral branches without introducing unnatural artifacts or excessive noise. In contrast to PUIE-Net and WaterNet, which frequently fail to maintain structural consistency in dark regions, and UVZ, which may produce inconsistent local contrasts, DPPGM leverages its physics-informed design to ensure harmonized brightness adjustment and detail recovery.

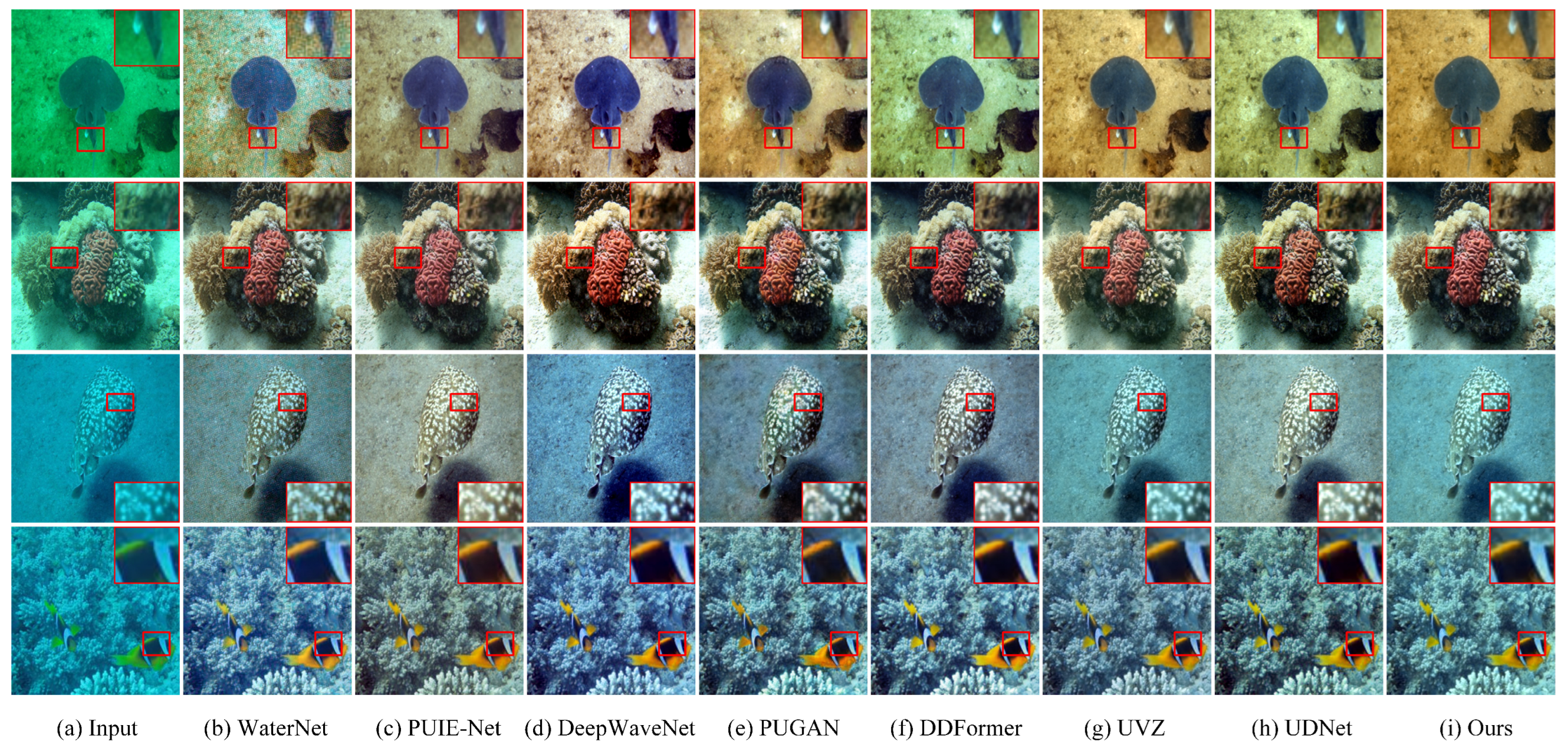

The performance on the challenging no-reference U45 dataset, depicted in Figure 7, further confirms DPPGM’s robustness in handling complex coral reef imagery with significant color casts and spatial degradation. Our method achieves accurate color correction, effectively recovering the natural hues of coral communities, while preserving crucial textural sharpness and edge information. Comparative visual assessments show that UDNet tends to oversmooth detailed regions and PUGAN occasionally introduces color shifts, whereas DPPGM’s dual-path architecture enables adaptive handling of spatially varying degradation, producing enhancements that are both visually appealing and physically plausible.

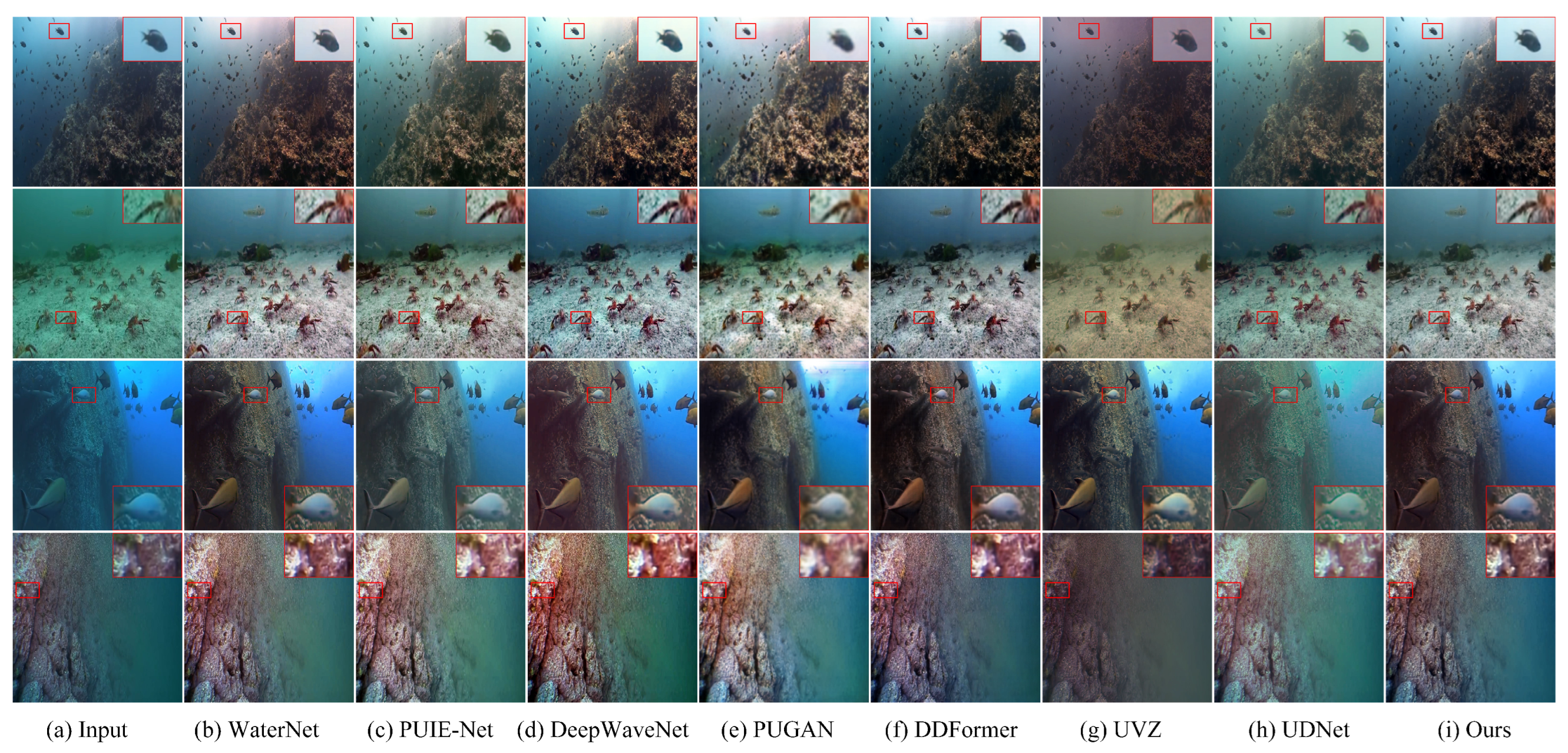

The generalization capability of DPPGM is further validated on the UIEB-Challenging60 dataset, as shown in Figure 8. In scenes characterized by extreme turbidity and dense suspended particles, our method demonstrates strong haze penetration and contrast recovery. While compared methods like DeepWaveNet and DDFormer struggle with persistent haze or loss of detail in such demanding conditions, DPPGM effectively restores visibility while preserving the integrity of delicate structures like coral polyps and fish scales. The physics-guided pathway proves particularly beneficial in these scenarios, providing constraints that prevent the introduction of non-physical artifacts while recovering plausible scene radiance. This performance on genuinely challenging, real-world imagery underscores the practical viability of our approach for deployment in unpredictable underwater environments.

These qualitative outcomes align closely with the quantitative results, confirming that DPPGM effectively translates its architectural advantages into perceptually superior underwater image enhancement across a variety of challenging conditions.

4.4. Model Complexity

To provide a comprehensive evaluation of the trade-off between efficiency and performance, a key challenge related to symmetry in UIE, we conduct extensive comparisons with 13 state-of-the-art underwater image enhancement methods. As summarized in Table 5, the evaluation encompasses both computational complexity (parameters and FLOPs) and enhancement performance across three benchmark datasets. Metric selection is tailored to each dataset’s characteristics: full-reference metrics (PSNR and SSIM) are employed for UIEB and LSUI, while no-reference metrics (UCIQE and UIQM) are adopted for U45, ensuring a holistic assessment of restoration quality and perceptual effectiveness.
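For reference, the full-reference PSNR metric used for UIEB and LSUI follows the standard definition, PSNR = 10 log10(MAX^2 / MSE). The following is a minimal sketch, assuming 8-bit images flattened into sequences of pixel intensities. The function name `psnr` and the toy pixel values are illustrative assumptions and are not taken from the DPPGM evaluation code.

```python
import math


def psnr(reference, enhanced, max_value=255.0):
    """Peak Signal-to-Noise Ratio (dB) between two equally sized pixel sequences."""
    if len(reference) != len(enhanced) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean squared error over all pixel values.
    mse = sum((r - e) ** 2 for r, e in zip(reference, enhanced)) / len(reference)
    if mse == 0:
        return math.inf  # identical inputs: PSNR is unbounded
    return 10.0 * math.log10(max_value ** 2 / mse)


# Toy example with hypothetical pixel values (not drawn from any dataset).
reference = [52, 55, 61, 59, 79, 61, 76, 61]
enhanced = [54, 55, 60, 59, 77, 63, 76, 60]
print(f"PSNR: {psnr(reference, enhanced):.2f} dB")
```

A higher PSNR indicates a smaller pixel-level deviation from the reference image. The metric needs a reference, which is why it cannot be applied to the U45 dataset.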

As shown in Table 5, DPPGM achieves a superior trade-off between computational efficiency and enhancement performance, effectively restoring the symmetry broken by most methods that prioritize one over the other. With only 1.48 M parameters and 25.39 G FLOPs, it remains lightweight relative to most competitors: it is significantly more parameter-efficient than heavyweight models like WaterNet (24.81 M), PUGAN (95.66 M), and U-Transformer (65.60 M), while also outperforming methods of similar or moderately larger size (e.g., PUIE-Net: 1.410 M, DDFormer: 7.581 M) in all performance metrics.

In terms of computational cost (FLOPs), DPPGM (25.39 G) is more efficient than resource-intensive methods such as AirNet (301.27 G) and HCLR-Net (401.96 G). It requires more FLOPs than the lightest designs, such as UWCNN (5.23 G) and DDFormer (4.47 G), but delivers substantially better results in return. Performance-wise, DPPGM leads across critical metrics: it achieves the highest SSIM (0.921) on UIEB and sets new state-of-the-art results on LSUI with PSNR (28.269) and SSIM (0.931). On the challenging U45 dataset, its UIQM score (3.594) outperforms all compared methods, demonstrating robust generalization to real-world underwater scenes. Notably, while methods like UVZ and UDNet show strong performance on specific datasets, they either incur higher computational costs (UVZ: 124.88 G FLOPs) or lag in cross-dataset consistency compared to DPPGM. This balance of efficiency and performance confirms that DPPGM avoids the common pitfall of excessive complexity in high-performance models, upholding the symmetry principle of balanced system design and making it suitable for practical deployment in resource-constrained underwater imaging systems.
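The relative costs discussed above can be made explicit by expressing each competitor's parameter count and FLOPs as a multiple of DPPGM's. The short sketch below uses only the figures quoted in the text for Table 5; the variable and function names are illustrative, and methods whose values are not quoted here are omitted.

```python
# Figures quoted in the text for Table 5 (parameters in millions, FLOPs in giga).
DPPGM_PARAMS_M = 1.48
DPPGM_FLOPS_G = 25.39

params_m = {
    "WaterNet": 24.81,
    "PUGAN": 95.66,
    "U-Transformer": 65.60,
    "PUIE-Net": 1.410,
    "DDFormer": 7.581,
}
flops_g = {
    "AirNet": 301.27,
    "HCLR-Net": 401.96,
    "UVZ": 124.88,
    "UWCNN": 5.23,
    "DDFormer": 4.47,
}


def ratios(others, baseline):
    """Express each method's cost as a multiple of the baseline cost."""
    return {name: value / baseline for name, value in others.items()}


param_ratios = ratios(params_m, DPPGM_PARAMS_M)
flop_ratios = ratios(flops_g, DPPGM_FLOPS_G)

for name, r in param_ratios.items():
    print(f"{name:14s} parameters: {r:6.2f}x DPPGM")
for name, r in flop_ratios.items():
    print(f"{name:14s} FLOPs:      {r:6.2f}x DPPGM")
```

A ratio above 1 means the method is costlier than DPPGM on that axis, and a ratio below 1 means it is cheaper. On the quoted figures, UWCNN and DDFormer fall below 1 in FLOPs and PUIE-Net falls slightly below 1 in parameters.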

4.5. Ablation Study

To quantify the contribution of each core component in DPPGM, we conduct ablation experiments by systematically removing three critical modules, namely the dual-path structure (DPS), the Mamba-based physics-guided degradation model (PGDM), and subspace projection fusion (SPF), and evaluating performance changes. We use Peak Signal-to-Noise Ratio (PSNR, pixel-level fidelity) for UIEB and LSUI, and Underwater Image Quality Measure (UIQM, no-reference quality) for U45, with results summarized in Table 6.

The results confirm each module’s indispensability and their synergistic effects. Case 1 (the full model integrating DPS, PGDM, and SPF) achieves the best performance across all metrics (UIEB PSNR: 24.17, LSUI PSNR: 28.27, U45 UIQM: 3.59), validating the framework’s efficacy. Removing SPF (Case 2) leads to consistent performance degradation, with UIEB PSNR decreasing by 0.29, LSUI PSNR by 0.18, and U45 UIQM by 0.27, which reflects SPF’s key role in aggregating fine-grained features to preserve the structural details of underwater scenes. Disabling PGDM (Case 3) results in a 0.19 drop in UIEB PSNR, a 0.16 decline in LSUI PSNR, and a 0.24 reduction in U45 UIQM, confirming that PGDM’s embedded physical constraints effectively align enhancement results with real-world optical principles. The largest single-module loss occurs when excluding DPS (Case 4): UIEB PSNR decreases by 0.52, LSUI PSNR by 0.54, and U45 UIQM by 0.35, highlighting DPS’s critical value in addressing spatially heterogeneous degradation through separate processing of low-frequency global context and high-frequency local details.

Retaining only DPS while removing both PGDM and SPF (Case 5) lowers performance further than any single-module removal, with UIEB PSNR reaching 23.47, LSUI PSNR 27.54, and U45 UIQM 3.21. DPS alone enables basic adaptive handling of heterogeneous degradation, but without PGDM’s physical constraints and SPF’s efficient fusion the model can no longer balance pixel-level fidelity and physical plausibility. In Case 6, we replace the learned degradation-aware mask with uniform weighting. The resulting performance drop (UIEB PSNR: 23.21, LSUI PSNR: 27.19, U45 UIQM: 3.13) compared to Case 5 confirms that the mask’s region-separation ability is crucial to the DPS module’s effectiveness.
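The absolute scores implied for Cases 2 to 4 can be recovered by subtracting the quoted drops from the full-model scores, which puts them on the same footing as the directly reported Cases 5 and 6. The sketch below does this arithmetic using only numbers stated in the text. The case labels and dictionary keys are illustrative, and because the quoted drops are rounded, the implied values may differ from Table 6 in the last digit.

```python
# Full-model scores (Case 1) and the per-case drops quoted in the text.
full = {"UIEB_PSNR": 24.17, "LSUI_PSNR": 28.27, "U45_UIQM": 3.59}
drops = {
    "Case 2 (w/o SPF)": {"UIEB_PSNR": 0.29, "LSUI_PSNR": 0.18, "U45_UIQM": 0.27},
    "Case 3 (w/o PGDM)": {"UIEB_PSNR": 0.19, "LSUI_PSNR": 0.16, "U45_UIQM": 0.24},
    "Case 4 (w/o DPS)": {"UIEB_PSNR": 0.52, "LSUI_PSNR": 0.54, "U45_UIQM": 0.35},
}
# Cases whose absolute scores are quoted directly in the text.
reported = {
    "Case 5 (DPS only)": {"UIEB_PSNR": 23.47, "LSUI_PSNR": 27.54, "U45_UIQM": 3.21},
    "Case 6 (uniform mask)": {"UIEB_PSNR": 23.21, "LSUI_PSNR": 27.19, "U45_UIQM": 3.13},
}


def implied_scores(full_scores, case_drops):
    """Subtract each quoted drop from the full-model score, rounded to 2 decimals."""
    return {
        case: {m: round(full_scores[m] - d[m], 2) for m in full_scores}
        for case, d in case_drops.items()
    }


table = {"Case 1 (full)": full, **implied_scores(full, drops), **reported}

for case, scores in table.items():
    row = "  ".join(f"{metric}={value:.2f}" for metric, value in scores.items())
    print(f"{case:22s} {row}")
```

Laid out this way, the ordering described above is easy to check: every single-module removal stays above Case 5, and Case 6 falls below Case 5 on all three metrics.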

Collectively, these findings demonstrate that all three core components are indispensable for DPPGM’s optimal performance.