Abstract

Training-free video summarization tackles the challenge of selecting the most informative keyframes from a video without relying on costly training or complex deep models. This work introduces C2FVS-DPP (Contextual Feature Fusion Video Summarization with Determinantal Point Process), a lightweight framework that generates concise video summaries by jointly modeling semantic importance, visual diversity, temporal structure, and symmetry. The design centers on a symmetry-aware fusion strategy, where appearance, motion, and semantic cues are aligned in a unified embedding space, and on a reward-guided optimization logic that balances representativeness and diversity. Specifically, appearance features from ResNet-50, motion cues from optical flow, and semantic representations from BERT-encoded BLIP captions are fused into a contextual embedding. A Transformer encoder assigns importance scores, followed by shot boundary detection and K-Medoids clustering to identify candidate keyframes. These candidates are refined through a reward-based re-ranking mechanism that integrates semantic relevance, representativeness, and visual uniqueness, while a Determinantal Point Process (DPP) enforces globally diverse selection under a keyframe budget. To enable reliable evaluation, enhanced versions of the SumMe and TVSum50 datasets were curated to reduce redundancy and increase semantic density. On these curated benchmarks, C2FVS-DPP achieves F1-scores of 0.22 and 0.43 and fidelity scores of 0.16 and 0.40 on SumMe and TVSum50, respectively, surpassing existing models on both metrics. In terms of compression ratio, the framework records 0.9959 on SumMe and 0.9940 on TVSum50, remaining highly competitive with the best-reported values of 0.9981 and 0.9983. These results highlight the strength of C2FVS-DPP as an inference-driven, symmetry-aware, and resource-efficient solution for video summarization.

1. Introduction

Video summarization aims to generate concise yet informative representations of lengthy videos by preserving their most essential content, thereby facilitating efficient video understanding and retrieval. Video summarization systems have become increasingly important in a variety of domains and are typically grouped into two major categories: visual summarization and semantic summarization. Visual summarization involves the extraction of keyframes or segments to create condensed previews, such as movie trailers [1,2] or summaries for surveillance footage [3], supporting fast navigation and content indexing. In contrast, semantic summarization seeks to convey the core meaning of a video using natural language, as exemplified in video captioning [4] and narrative generation [5].

Despite significant progress, prior works in unsupervised video summarization have not sufficiently addressed the problem of redundancy. Many methods retain repetitive or uninformative frames, leading to higher computational overhead and summaries that are less concise and informative [6]. At the same time, widely used benchmark datasets such as SumMe [7] and TVSum50 [8] themselves contain redundant frames and uneven ground-truth score distributions, which can further dilute evaluation reliability. These issues contribute to a misalignment between automatically generated summaries and human preferences for informativeness, diversity, and brevity, underscoring the need for frameworks and datasets that jointly emphasize conciseness and semantic density. Furthermore, while some studies argue for supervised learning to leverage annotated datasets, others emphasize unsupervised or training-free approaches to avoid expensive model training, highlighting diverging hypotheses within the field.

Keyframe extraction from a video clip is typically approached using either static or dynamic methods [9]. Static keyframe extraction techniques rely on low-level visual descriptors—such as color histograms, motion vectors, and texture features [9]—to identify representative frames. These methods involve segmenting the video into frames, extracting handcrafted features, and applying threshold-based filtering or clustering heuristics based on inter-frame similarity. While effective in reducing redundancy and retaining visual diversity, static methods often struggle with capturing semantic content and are sensitive to noise and parameter tuning, thereby limiting their accuracy and interpretability in complex scenarios [10].

In contrast, dynamic approaches leverage deep learning models to derive abstract, high-level semantic feature representations from video data. Convolutional Neural Networks (CNNs) [11], Long Short-Term Memory (LSTM) networks [12], and Transformer-based architectures [13] have been successfully employed to learn temporal dependencies and semantic patterns, enabling more precise and automated keyframe selection. Nevertheless, despite their progress, dynamic methods still struggle with high computational demands and inference latency, limiting their effectiveness in real-time scenarios. Moreover, deep models often lack interpretability, making it difficult to understand or justify their decisions [14]. Addressing key challenges such as computational efficiency, accuracy, interpretability, and symmetry-aware design therefore remains a critical direction for future research in keyframe extraction.

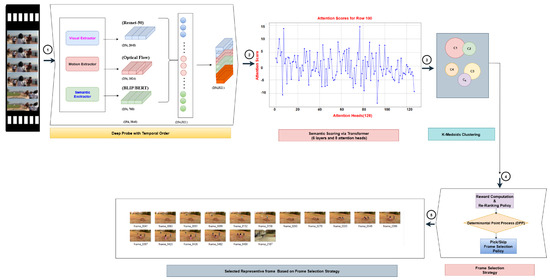

The aim of this work is to address these challenges by proposing a training-free framework for generating diverse, representative, symmetry-aware, and semantically meaningful video summaries while reducing redundancy. The proposed framework adopts a hybrid strategy for keyframe selection: feature representations are extracted using pre-trained deep learning models in conjunction with a deep probing mechanism; clustering is performed based on attention-derived semantic scores; and representative frames are ultimately selected using the Determinantal Point Process (DPP) [7], as illustrated in Figure 1.

Figure 1.

Proposed C2FVS-DPP framework for training-free keyframe selection, comprising five stages: (1) Feature Extraction, integrating appearance features (2048D ResNet-50), motion features (1024D optical flow), and textual semantics (768D BERT from BLIP captions); (2) Semantic Scoring via a Transformer with cross-modal attention to compute context-aware importance scores; (3) Clustering using K-Medoids with shot boundary detection to group semantically similar frames; (4) Reward Computation and Re-Ranking to optimize selection for diversity and representativeness; and (5) DPP-based Frame Selection with a pick/skip policy to generate compact and diverse keyframe summaries.

The contributions and main highlights of this research can be summarized as follows:

- We propose C2FVS-DPP, a training-free framework that generates diverse, representative, symmetry-aware, and visually unique video summaries. The novelty lies in two key aspects: (i) a contextual multimodal fusion strategy that projects appearance (ResNet-50), motion (optical flow), and semantic (BLIP→BERT) cues into a shared temporally encoded space for cross-modal comparability, and (ii) a reward-guided optimization logic that couples per-shot clustering with global DPP-based selection, ensuring semantic relevance, representativeness, and diversity under a keyframe budget.

- We curate a new benchmark dataset, derived from the SumMe [7] and TVSum50 [8] datasets using a statistical filtering method that retains frames with high ground-truth informativeness. This curated dataset reduces redundancy, increases semantic density, and provides a stronger testbed for future video summarization research.

- We introduce a novel evaluation metric, Hybrid Frame Similarity Assessment, that integrates semantic features from ResNet-50 and ViT with perceptual similarity from LPIPS. This adaptive metric captures both semantic fidelity and perceptual closeness, offering a more reliable comparison across models. Experimental results on the curated SumMe and TVSum50 datasets demonstrate that C2FVS-DPP consistently outperforms existing approaches in keyframe selection and overall summarization quality.

2. Related Work

Among existing techniques in video understanding, frame selection and summarization have attracted significant attention as key tasks for reducing redundancy and highlighting salient content. Early approaches primarily relied on low-level visual cues, whereas more recent works incorporate deep learning and attention mechanisms to capture semantic and temporal dependencies. Across these methods, a common trade-off emerges: while models aim to balance informativeness, diversity, and efficiency, most approaches face challenges in terms of scalability, interpretability, or generalization. Within this broader landscape, we review four main categories of summarization methods: clustering-based, feature-based, deep learning-based, and attention-based approaches.

2.1. Clustering-Based Approaches

Clustering methods group visually or semantically similar frames and select representatives to construct concise summaries. Early works emphasized handcrafted features: Khalid et al. [9] fused SURF and HOG descriptors, reduced dimensionality with covariance analysis, and applied Fuzzy C-Means clustering to preserve story continuity. Similarly, Tonge and Thepade [15] employed static shot segmentation and k-means clustering, which reduced storage demands but required manual cluster number selection. To improve automation, Wang et al. [16] introduced a hierarchical clustering method guided by silhouette optimization, while Gangwani and Ramteke [17] combined handcrafted SIFT features with VGG16 embeddings to enhance contextual awareness. Graph-based approaches have also been explored: Gharbi et al. [18] used SIFT and LBP to build repeatability graphs for modularity clustering. More recently, Kaur et al. [19] proposed hybrid feature fusion with Fuzzy C-Means and meta-heuristic optimization. These methods highlight the value of clustering but often remain sensitive to feature design choices or introduce additional algorithmic complexity.

2.2. Feature-Based Approaches

Feature-based keyframe extraction relies on low-level descriptors to identify informative frames. Rodriguez et al. [20] used histogram analysis with adaptive thresholding to detect significant variations, offering lightweight summarization. Nandinia et al. [21] employed Edge-LBP descriptors combined with shot detection to retain both textural and structural details, though preprocessing requirements increased complexity. Motion-driven summarization has also been investigated: Zhang et al. [22] introduced a spatiotemporal slice analysis framework that correlates motion with perceptual importance, well suited for surveillance but less effective in static scenarios. Beyond visual cues, Song et al. [8] proposed the TVSum dataset and demonstrated how video titles can guide semantic frame selection, though this reliance on metadata limits applicability to unlabeled content.

2.3. Deep Learning-Based Approaches

Deep learning models have enabled the learning of high-level semantics and temporal dependencies for video summarization. Yang et al. [23] proposed KFRFIF, which integrates intra- and inter-frame attributes through multi-head attention to capture fine-grained cues. Li et al. [24] employed sparse coding with dictionary learning for robust shot segmentation and keyframe identification. Alharbi et al. [25] introduced a channel-attention encoder–decoder framework with InceptionV3 features to improve multiscale representation. Zhang et al. [26] developed DGC-FNet, a dynamic graph-aware model combining Bi-LSTMs, GCNs, and contrastive learning to address long-range dependencies and noisy graph structures. In domain-specific contexts, Mehta et al. [27] proposed DF Sampler, a self-supervised method tailored for industrial human activity recognition. While effective in their respective scenarios, these approaches often rely on substantial computation or extensive annotations, which can limit their general-purpose applicability.

Building on unimodal methods, Singh et al. [28] propose MVSAT, a Transformer-based framework for multimodal video summarization, integrating visual and textual cues through cross-attention mechanisms. Frame-level features are extracted using GoogLeNet, while captions provide complementary semantic information. The Transformer aligns and fuses multimodal inputs, with self-attention layers modeling temporal dependencies. Segment importance is estimated through semantic relevance, spatial location, and center-ness, followed by temporal segmentation techniques for keyshot selection. By combining these components, MVSAT achieves improved semantic coherence and diversity, establishing a strong baseline for training-free and unsupervised summarization.

Extending this line of work, Guo et al. [29] introduce CFSum, which adopts a coarse-to-fine fusion strategy to integrate visual, textual, and optionally audio features. Coarse fusion captures global semantic alignment across modalities, while fine fusion refines local cross-modal interactions through attention mechanisms. A multi-layer Transformer encoder models long-range dependencies, and a regression head predicts segment importance before temporal segmentation. Compared to multimodal baselines such as MVSAT and MM-Transformer, CFSum demonstrates stronger summary coherence and modality synergy, providing a benchmark for evaluating multimodal summarization strategies.

2.4. Attention-Based Approaches

Attention mechanisms have been employed to improve semantic modeling and capture long-range dependencies. Ji et al. [30] proposed Attentive Encoder–Decoder Networks (AVS), combining BiLSTMs with attention variants and Kernel Temporal Segmentation to generate importance-weighted summaries. Hsu et al. [31] extended this with a Spatiotemporal Vision Transformer (STVT), which integrates inter-frame and intra-frame attention modules to jointly model temporal and spatial cues, significantly enhancing summary coherence. Beyond summarization, Guo et al. [32] explored Reinforced Multichoice Attention for multi-turn video question generation, illustrating how attention can support deeper semantic grounding in related tasks. Together, these methods underscore the role of attention in advancing contextual and structural modeling for video summarization.

3. Dataset

3.1. Benchmark Datasets

Two widely adopted benchmark datasets are used: SumMe [7] and TVSum50 [8]. SumMe consists of 25 user-generated videos in .mp4 format, with durations ranging from 1 to 6 min. Each video is annotated by 15 to 18 users, who provide frame-level importance scores reflecting the semantic contribution of each frame to the overall summary.

TVSum50 includes 50 YouTube videos grouped into 10 categories (five videos per category), with durations ranging from 2 to 10 min. Each video is annotated by 20 users, who rate short temporal segments on a scale from 1 to 5, indicating segment-level relevance.

The remainder of this section outlines the dataset curation process and the strategy employed for frame selection, followed by quantitative and qualitative evaluations of the curated dataset. A comparison with existing video summarization benchmarks is also presented, highlighting improvements in summary compactness and semantic richness.

3.2. Dataset Curation and Selection Strategy

Although the SumMe and TVSum50 datasets provide rich annotations, they also contain a significant number of redundant or low-importance frames, which can dilute the learning signal and hinder framework performance. To mitigate this limitation, a statistical threshold-and-average-based filtering strategy is proposed to curate a compact and semantically meaningful subset of frames. The primary objective is to enhance supervision clarity and improve semantic precision for tasks such as video summarization and captioning.

To ensure fair evaluation, the ground-truth (GT) importance scores for each video in the SumMe dataset are normalized to a common range, following the protocol of the original authors. The same normalization is applied to the TVSum50 dataset, ensuring a consistent scale across both datasets while preserving the relative importance values assigned by the annotators. The complete dataset curation and frame selection procedure is detailed in Algorithm 1. The curated frame selection scores for each video clip, including ground-truth and selected frames, are provided in the Supplementary Materials.

Algorithm 1. Statistical selection of informative frames based on GT scores.

Notation:

- $F = \{f_1, \dots, f_N\}$: Sequence of video frames.

- $g_i$: Ground-truth importance score for frame $f_i$.

- $\tau$: Threshold for filtering low-score frames.

- $\bar{g}$: Average ground-truth score of valid frames.

- frame_status: Binary indicator (1 = important, 0 = not important).

- $Q$: A group of consecutive frames with frame_status = 1.

- $f_{\text{mid}}$: Middle frame of a valid sequence, selected as representative.

- $R$: Final set of representative frames.
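The quantities listed for Algorithm 1 can be tied together in a short sketch. This is one plausible reading of the procedure, not the authors' implementation: it assumes that frames scoring above a low threshold are "valid", that frames at or above the average valid score are marked important, and that the middle frame of each consecutive run is kept. The function name, the default threshold of 0, and the toy scores are hypothetical.

```python
from statistics import mean


def select_representative_frames(scores, tau=0.0):
    """Return indices of the middle frame of each consecutive high-score run.

    Assumed reading: valid frames have score > tau; a frame is important
    (frame_status = 1) when it is valid and scores at least the valid average.
    """
    valid = [s for s in scores if s > tau]
    if not valid:
        return []
    average = mean(valid)
    status = [1 if (s > tau and s >= average) else 0 for s in scores]

    selected, run = [], []
    for index, flag in enumerate(status):
        if flag:
            run.append(index)
        elif run:
            selected.append(run[len(run) // 2])  # middle frame of the run
            run = []
    if run:  # close a run that reaches the last frame
        selected.append(run[len(run) // 2])
    return selected


# Toy GT scores (hypothetical values, one per frame).
toy_scores = [0.0, 0.1, 0.6, 0.7, 0.8, 0.1, 0.0, 0.9, 0.9, 0.2]
print(select_representative_frames(toy_scores))  # [3, 8]
```

For a run with an even number of frames, this sketch picks the later of the two central frames; the source does not say how ties are resolved.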

3.3. Statistical Analysis of the Dataset

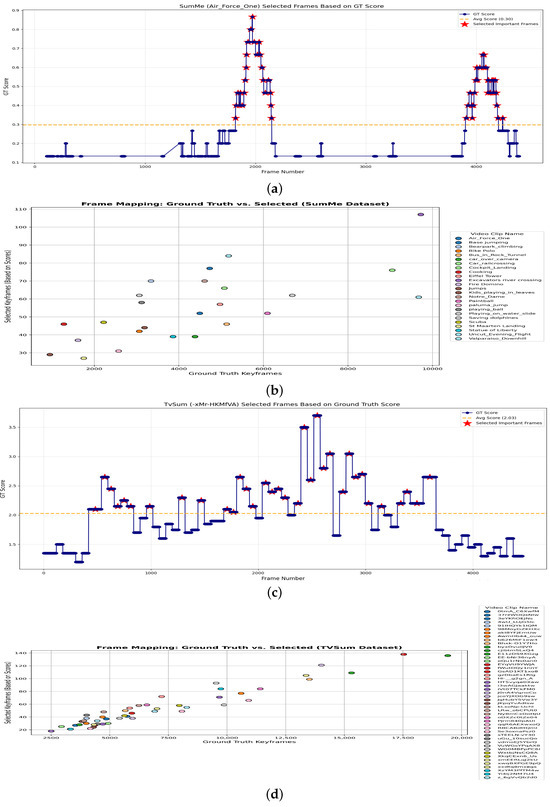

Figure 2 illustrates the statistical properties of the curated SumMe and TVSum50 datasets through the distributions that result from threshold-based frame selection (Figure 2a–d). Frames with average ground-truth (GT) scores equal to or above the threshold values of 0.3 (for SumMe) and 2.03 (for TVSum50) are selected. Panels (b) and (d) contrast the distribution of all frame-level scores in the original datasets with that of the selected frames. The illustrated examples are taken from the videos Airforce (SumMe) and -xMr-HKMfVA (TVSum50), respectively.

Figure 2.

Threshold-based frame selection results. (a,b) correspond to the SumMe dataset (Airforce video), and (c,d) correspond to the TVSum50 dataset (-xMr-HKMfVA video). Frames are selected with threshold values of 0.3 (SumMe) and 2.03 (TVSum50). (a) Selected frames at Avg. Score (SumMe–Airforce); (b) GT score distribution vs. selected frames (SumMe–Airforce); (c) selected frames at Avg. Score (TVSum50–xMr-HKMfVA); (d) GT score distribution vs. selected frames (TVSum50–xMr-HKMfVA).

Quantitative Metrics.

We define two quantitative indicators to assess the quality of the curated dataset:

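- GT Score Improvement (Factor): This measures how much more representative the curated frames are compared to the original summary. It is computed as follows:

$$\text{GT Score Improvement} = \frac{\bar{g}_{\text{curated}}}{\bar{g}_{\text{original}}}$$

where $\bar{g}_{\text{curated}}$ and $\bar{g}_{\text{original}}$ denote the average GT scores of the curated and original summaries, respectively.

- Frame Reduction (%): This indicates the percentage of frames removed from the original dataset to produce a more compact summary. It is calculated as follows:

$$\text{Frame Reduction (\%)} = \frac{N_{\text{orig}} - N_{\text{cur}}}{N_{\text{orig}}} \times 100$$

where $N_{\text{orig}}$ and $N_{\text{cur}}$ denote the number of frames in the original and curated datasets, respectively.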
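As a small illustration of the two indicators, the sketch below computes both from per-frame scores and frame counts. The function names and the toy inputs are hypothetical and are not taken from the datasets.

```python
def gt_score_improvement(curated_scores, original_scores):
    """Ratio of the mean GT score of curated frames to that of the original summary."""
    mean_curated = sum(curated_scores) / len(curated_scores)
    mean_original = sum(original_scores) / len(original_scores)
    return mean_curated / mean_original


def frame_reduction_percent(n_original, n_curated):
    """Percentage of frames removed when going from the original to the curated set."""
    return 100.0 * (n_original - n_curated) / n_original


# Hypothetical toy inputs, for illustration only.
original = [0.1, 0.2, 0.1, 0.4]
curated = [0.4]
print(gt_score_improvement(curated, original))
print(frame_reduction_percent(8000, 100))
```

With these toy counts, keeping 100 of 8000 frames corresponds to a 98.75% reduction, which is the same arithmetic relation as the one between a reduction percentage and the retained summary length.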

Furthermore, Table 1 presents a comparative analysis between the original and curated versions of the SumMe [7] and TVSum50 [8] datasets used for video summarization. The curated datasets reduce the number of frames by 98.75% for SumMe and 99.25% for TVSum50 while still preserving essential content. This results in much shorter summaries: 1.25% of the original length for SumMe and 0.75% for TVSum50, compared to the typical 5–15% in the original datasets. Additionally, the average ground-truth (GT) scores are markedly higher in the curated datasets (0.47 for SumMe and 0.58 for TVSum50), reflecting improved semantic representativeness: the curated summaries achieve GT scores that are 3.62 and 1.66 times higher than the original summaries for SumMe and TVSum50, respectively. These findings support the effectiveness of the curation strategy in producing summaries that are both concise and semantically informative.

Table 1.

Comparison of original and curated video summarization datasets.

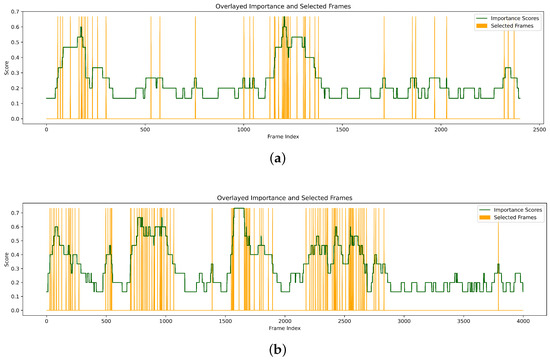

Qualitative Results.

Qualitative comparison results for two exemplar videos from the original SumMe dataset and the curated SumMe dataset are shown in Figure 3. The overlaid line and interval plot depict frame-level importance scores (green line) along with the selected summary intervals (orange-shaded regions). The visualization demonstrates how the proposed method effectively identifies and retains high-importance segments while removing redundant and low-importance frames, including those with zero scores.

Figure 3.

Keyframe selection strategy: (a) Bus_in_Rock_Tunnel; (b) Excavators_river_crossing.

3.4. Comparison of Performance Metrics: Proposed vs. Existing Datasets

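The curated datasets are evaluated using standard performance metrics: Precision [33], Recall [33], and F1-Score [34], which are defined as follows:

$$\text{Precision} = \frac{TP}{TP + FP}, \qquad \text{Recall} = \frac{TP}{TP + FN}, \qquad \text{F1} = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$$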

Here, TP (true positives) represents the number of correctly selected important frames, FP (false positives) denotes the number of incorrectly selected frames, and FN (false negatives) corresponds to the number of important frames that were not selected.
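A minimal sketch of these metrics over sets of frame indices is given below. The function name and the toy index sets are hypothetical; the example is chosen so that every selected frame is important but most important frames are missed, which is the high-precision, low-recall pattern discussed for the curated datasets.

```python
def precision_recall_f1(selected, important):
    """Compute Precision, Recall, and F1 from sets of frame indices."""
    selected, important = set(selected), set(important)
    tp = len(selected & important)   # correctly selected important frames
    fp = len(selected - important)   # selected but not important
    fn = len(important - selected)   # important but not selected
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Hypothetical toy example: 2 selected frames, 10 important frames.
selected_frames = {3, 8}
important_frames = {1, 2, 3, 4, 8, 9, 10, 11, 12, 13}
print(precision_recall_f1(selected_frames, important_frames))
```

Here Precision is 1.0 and Recall is 0.2, so the F1-score stays low (about 0.33) even though no selected frame is wrong.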

As shown in Table 2, although the F1-score is lower for the curated datasets (0.2023 vs. 0.3100 for SumMe and 0.1776 vs. 0.5400 for TVSum50), the Precision improves significantly, reaching a perfect score of 1.0000.

Table 2.

Comparison of evaluation metrics (F1-Score, Precision, Recall) for original and curated datasets.

This trade-off reflects the design goal of our curated dataset: to prioritize the selection of highly informative and non-redundant frames, even if it comes at the cost of reduced coverage. Such characteristics are particularly beneficial in downstream applications like detailed video captioning and highlight localization, where semantic clarity and precision are more critical than broad recall coverage.

4. The Proposed Methodology

The problem of video summarization is formulated as selecting a representative subset of keyframes $S^* \subseteq V$ from an input video $V = \{f_1, f_2, \dots, f_T\}$, where T is the total number of frames and $f_i$ is the i-th frame. The objective is to maximize a multi-objective score function $\mathcal{F}(S)$ that captures semantic importance, visual diversity, and temporal coverage, subject to the constraint $|S| \le K$, where K is the desired number of keyframes. Formally,

$$S^* = \arg\max_{S \subseteq V,\; |S| \le K} \mathcal{F}(S)$$

where $S$ denotes a candidate subset of frames from $V$, and $S^*$ is the optimal subset maximizing the objective function.

Our methodology is a hierarchical, training-free pipeline leveraging pre-trained models.

4.1. Frame Sampling and Preprocessing

To reduce computational complexity, frames from the video are sampled at a fixed interval $\Delta$. This yields the following sampled frame set:

$$V_s = \{f_1, f_{1+\Delta}, f_{1+2\Delta}, \dots\}, \qquad N = |V_s| = \lceil T / \Delta \rceil$$

Each sampled frame is resized to a fixed resolution and normalized by subtracting the mean RGB values and scaling to $[0, 1]$, ensuring compatibility with pre-trained models and robustness to varying frame rates.
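The sampling step can be sketched as plain index arithmetic. The helper names are hypothetical, indices are 0-based here, and the interval of 5 on a 30 fps clip mirrors the setting reported in the implementation details.

```python
import math


def sample_indices(total_frames, interval):
    """0-based indices of the frames kept when sampling every `interval`-th frame."""
    return list(range(0, total_frames, interval))


def effective_fps(native_fps, interval):
    """Frame rate of the sampled sequence."""
    return native_fps / interval


indices = sample_indices(total_frames=12, interval=5)
print(indices)               # [0, 5, 10]
print(effective_fps(30, 5))  # 6.0
```

The number of sampled frames equals the ceiling of the frame count divided by the interval, so a 12-frame clip sampled every 5 frames keeps 3 frames.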

4.2. Contextual Feature Fusion

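Each frame $f_i$ is represented by three modality-specific feature vectors:

- $a_i \in \mathbb{R}^{2048}$: Visual features extracted using ResNet-50 [35].

- $m_i \in \mathbb{R}^{1024}$: Motion features computed from dense optical flow via Farneback’s method [36].

- $t_i \in \mathbb{R}^{768}$: Semantic features obtained by encoding BLIP-generated captions [37] with BERT [38].

These heterogeneous features are concatenated:

$$x_i = [a_i; m_i; t_i] \in \mathbb{R}^{3840}$$

To unify the feature dimensions, a projection matrix $W_p \in \mathbb{R}^{512 \times 3840}$ maps the concatenated features into a 512-dimensional embedding:

$$e_i = W_p x_i$$

To incorporate temporal ordering, sinusoidal positional encodings [39] are added:

$$z_i = e_i + PE(i)$$

with

$$PE(i) = \left[\sin\!\left(\frac{i}{10000^{2k/512}}\right),\; \cos\!\left(\frac{i}{10000^{2k/512}}\right)\right]_{k=0}^{255}$$

where the notation $[\cdot]_{k=0}^{255}$ means concatenating the sine and cosine terms for each embedding dimension k, producing a 512-dimensional positional encoding.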
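The fusion steps above (concatenate, project, add a positional encoding) can be sketched in plain Python. This is an illustrative sketch under stated assumptions, not the authors' code: the feature dimensions are shrunk to toy sizes, the projection matrix is random rather than the one used in the paper, and the sine and cosine terms are concatenated as two blocks, which is one possible layout.

```python
import math
import random


def positional_encoding(position, dim):
    """Sinusoidal encoding: sine terms followed by cosine terms (assumed layout)."""
    half = dim // 2
    sines = [math.sin(position / 10000 ** (2 * k / dim)) for k in range(half)]
    cosines = [math.cos(position / 10000 ** (2 * k / dim)) for k in range(half)]
    return sines + cosines


def fuse_frame(appearance, motion, text, projection, position):
    """Concatenate the three modalities, project linearly, add the positional encoding."""
    concatenated = appearance + motion + text
    embedding = [sum(w * x for w, x in zip(row, concatenated)) for row in projection]
    encoding = positional_encoding(position, len(embedding))
    return [e + p for e, p in zip(embedding, encoding)]


random.seed(0)
# Toy sizes standing in for the 2048/1024/768 inputs and the 512-dim output.
APP_DIM, MOT_DIM, TXT_DIM, OUT_DIM = 6, 4, 2, 8
projection = [
    [random.gauss(0.0, 0.1) for _ in range(APP_DIM + MOT_DIM + TXT_DIM)]
    for _ in range(OUT_DIM)
]
frames = [
    (
        [random.random() for _ in range(APP_DIM)],
        [random.random() for _ in range(MOT_DIM)],
        [random.random() for _ in range(TXT_DIM)],
    )
    for _ in range(3)
]
fused = [fuse_frame(a, m, t, projection, pos) for pos, (a, m, t) in enumerate(frames)]
print(len(fused), len(fused[0]))  # 3 8
```

Because the positional term depends only on the frame index, two frames with identical content still receive different fused embeddings.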

4.3. Context-Aware Semantic Scoring via Transformer

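Given the embedding set $Z = \{z_1, \dots, z_N\}$, where $z_i \in \mathbb{R}^{512}$, a pre-trained Transformer encoder with six layers and eight attention heads [39] captures contextualized frame representations:

$$H = \text{TransformerEncoder}(Z), \qquad H = \{h_1, \dots, h_N\}$$

A linear layer followed by a sigmoid computes each frame’s semantic score:

$$s_i = \sigma(w^\top h_i + b)$$

where $w \in \mathbb{R}^{512}$, $b \in \mathbb{R}$, and $s_i \in [0, 1]$ indicates semantic relevance.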
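The scoring head described above (a linear layer followed by a sigmoid) can be sketched as follows. The Transformer encoder itself is not reproduced; the hidden states, weight vector, and bias below are hypothetical placeholder values with a toy dimension of 4.

```python
import math


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def semantic_scores(hidden_states, weight, bias):
    """Apply a linear layer plus sigmoid to each contextualized frame vector."""
    return [
        sigmoid(sum(w * h for w, h in zip(weight, state)) + bias)
        for state in hidden_states
    ]


# Placeholder encoder outputs for three frames (hypothetical values).
hidden = [[0.2, -0.1, 0.4, 0.0], [1.5, 0.3, -0.2, 0.8], [-0.9, -0.4, 0.1, -0.3]]
weight = [0.5, -0.25, 1.0, 0.75]
bias = 0.1
print(semantic_scores(hidden, weight, bias))
```

Each score lies strictly between 0 and 1, so the scores can be compared across frames or combined with other terms on a common scale.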

4.4. Temporal Segmentation (Shot Detection)

The sampled sequence $V_s$ is partitioned into shots $\{C_1, \dots, C_M\}$, where each shot $C_j \subseteq V_s$ is a run of consecutive frames. PySceneDetect’s ContentDetector [40] computes HSV color differences between consecutive frames, applying adaptive thresholds to detect shot boundaries.
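The partitioning logic can be illustrated without the detector itself. The sketch below is a simplified stand-in, not the PySceneDetect API: it assumes a per-transition content-change score is already available and cuts a new shot wherever that score exceeds a threshold. The function name, the difference values, and the threshold are all hypothetical.

```python
def split_into_shots(differences, threshold):
    """Group frame indices into shots.

    differences[i] is the content change between frame i and frame i + 1;
    a value above `threshold` starts a new shot at frame i + 1.
    """
    shots, current = [], [0]
    for i, change in enumerate(differences):
        if change > threshold:
            shots.append(current)
            current = []
        current.append(i + 1)
    shots.append(current)
    return shots


# Hypothetical change scores for 8 frames (7 transitions).
toy_differences = [1.0, 2.0, 30.0, 1.0, 1.0, 40.0, 2.0]
print(split_into_shots(toy_differences, threshold=27.0))
# [[0, 1, 2], [3, 4, 5], [6, 7]]
```

Every frame lands in exactly one shot, and the shots preserve temporal order, which is what the per-shot clustering step relies on.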

4.5. Clustering with K-Medoids

For each shot $C_j$, K-Medoids clustering [41] is applied to the embeddings $\{z_i : f_i \in C_j\}$. The optimal cluster number is chosen by maximizing the silhouette score:

$$k_j^* = \arg\max_{k} \; \text{Silhouette}(k)$$

where silhouette is computed using cosine distances. The medoid of each cluster (the frame minimizing the total distance to the other cluster members) is selected as a candidate keyframe.
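A compact sketch of this step is shown below: an alternating K-Medoids on a precomputed cosine-distance matrix, with the cluster count chosen by the mean silhouette. It is an illustration under assumptions, not the authors' implementation: the farthest-first initialization, the iteration cap, the `k_max` bound, and the toy 2-D embeddings are all hypothetical choices.

```python
import math


def cosine_distance(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / norm


def assign(dist, medoids):
    """Label each point with the index of its nearest medoid."""
    return [min(range(len(medoids)), key=lambda c: dist[i][medoids[c]])
            for i in range(len(dist))]


def k_medoids(dist, k, iterations=20):
    n = len(dist)
    medoids = [0]  # farthest-first initialization (assumed, deterministic)
    while len(medoids) < k:
        medoids.append(max((i for i in range(n) if i not in medoids),
                           key=lambda i: min(dist[i][m] for m in medoids)))
    for _ in range(iterations):
        labels = assign(dist, medoids)
        updated = []
        for c in range(k):
            members = [i for i in range(n) if labels[i] == c]
            if not members:
                updated.append(medoids[c])
                continue
            # The medoid minimizes the total distance to its cluster members.
            updated.append(min(members, key=lambda m: sum(dist[m][j] for j in members)))
        if updated == medoids:
            break
        medoids = updated
    return medoids, assign(dist, medoids)


def silhouette(dist, labels, k):
    n, total = len(dist), 0.0
    for i in range(n):
        own = [j for j in range(n) if labels[j] == labels[i] and j != i]
        if not own:
            continue  # singleton clusters contribute 0
        a = sum(dist[i][j] for j in own) / len(own)
        others = []
        for c in range(k):
            members = [j for j in range(n) if labels[j] == c]
            if c != labels[i] and members:
                others.append(sum(dist[i][j] for j in members) / len(members))
        if not others:
            continue
        b = min(others)
        if max(a, b) > 0:
            total += (b - a) / max(a, b)
    return total / n


def best_clustering(embeddings, k_max=4):
    dist = [[cosine_distance(u, v) for v in embeddings] for u in embeddings]
    best = None
    for k in range(2, min(k_max, len(embeddings) - 1) + 1):
        medoids, labels = k_medoids(dist, k)
        score = silhouette(dist, labels, k)
        if best is None or score > best[0]:
            best = (score, k, medoids)
    return best


# Toy shot with two visually distinct groups of frames (hypothetical embeddings).
toy = [[1.0, 0.0], [0.9, 0.1], [1.0, 0.05], [0.0, 1.0], [0.1, 0.9], [0.05, 1.0]]
score, k_star, medoid_frames = best_clustering(toy)
print(k_star, sorted(medoid_frames))  # 2 [2, 5]
```

The medoids are actual frames rather than averaged vectors, which is why they can be used directly as candidate keyframes.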

4.6. Reward-Based Frame Selection

Each candidate frame $f_i$ is re-ranked with a composite reward:

$$R(f_i) = s_i + \alpha R_{\text{div}}(f_i) + \beta R_{\text{rep}}(f_i) + \gamma R_{\text{vis}}(f_i)$$

where $s_i$ is semantic importance, and $\alpha$, $\beta$, and $\gamma$ are hyperparameters balancing the following terms:

- Diversity Reward ($R_{\text{div}}$): This promotes temporal diversity by penalizing similarity with previously chosen frames.

- Representativeness Reward ($R_{\text{rep}}$): This measures closeness to the cluster centroid.

- Visual Diversity Reward ($R_{\text{vis}}$): This encourages visual distinctiveness using ResNet features.

For each cluster, the frame with the highest reward is retained in the candidate set $\mathcal{C}$.
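The re-ranking can be sketched as a weighted sum of the semantic score and the three reward terms. The exact reward definitions are not recoverable from the text, so the forms below are assumptions: diversity as one minus the largest cosine similarity to already chosen frames, representativeness as cosine similarity to the centroid, and visual diversity as one minus the mean cosine similarity of appearance features to the other cluster members. The weights and function names are hypothetical.

```python
import math


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def diversity_reward(embedding, chosen):
    """Assumed form: penalize similarity to frames already chosen."""
    if not chosen:
        return 1.0
    return 1.0 - max(cosine(embedding, c) for c in chosen)


def representativeness_reward(embedding, centroid):
    """Assumed form: closeness to the cluster centroid."""
    return cosine(embedding, centroid)


def visual_diversity_reward(appearance, other_appearances):
    """Assumed form: distinctiveness of appearance features within the cluster."""
    if not other_appearances:
        return 1.0
    mean_sim = sum(cosine(appearance, o) for o in other_appearances) / len(other_appearances)
    return 1.0 - mean_sim


def composite_reward(score, r_div, r_rep, r_vis, alpha=0.3, beta=0.3, gamma=0.3):
    """Semantic score plus weighted reward terms (weights are hypothetical)."""
    return score + alpha * r_div + beta * r_rep + gamma * r_vis


# Toy cluster of two frames; one frame from another cluster was chosen earlier.
chosen_so_far = [[1.0, 0.0]]
centroid = [0.5, 0.5]
cluster = {
    "frame_a": {"score": 0.6, "emb": [0.9, 0.1], "app": [1.0, 0.0]},
    "frame_b": {"score": 0.6, "emb": [0.2, 0.8], "app": [0.0, 1.0]},
}
rewards = {}
for name, frame in cluster.items():
    others = [f["app"] for n, f in cluster.items() if n != name]
    rewards[name] = composite_reward(
        frame["score"],
        diversity_reward(frame["emb"], chosen_so_far),
        representativeness_reward(frame["emb"], centroid),
        visual_diversity_reward(frame["app"], others),
    )
print(max(rewards, key=rewards.get))  # frame_b
```

With equal semantic scores, the frame that differs more from the previously chosen frame wins, which is the behavior the diversity term is meant to encourage.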

4.7. DPP-Based Diversity-Aware Selection

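From the candidate set $\mathcal{C}$, a subset of size $K$ is chosen with a Determinantal Point Process (DPP). The kernel is as follows:

$$L_{ij} = q_i \, \cos(z_i, z_j) \, q_j$$

where $q_i$ is the quality (scoring output) of candidate $f_i$ and cosine similarity ensures consistent scaling across embeddings. With regularization $\epsilon I$, subsets maximizing both diversity and quality are selected [42]:

$$S^* = \arg\max_{S \subseteq \mathcal{C},\; |S| = K} \det(L_S + \epsilon I)$$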
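A DPP-style selection can be sketched with a greedy search over the regularized determinant. This is an approximation chosen for illustration, not necessarily the solver used by the authors: the kernel is assumed to be the product of per-frame quality and cosine similarity, the greedy loop stands in for exact subset maximization, and the regularization value and toy inputs are hypothetical.

```python
import math


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def determinant(matrix):
    """Determinant via Gaussian elimination with partial pivoting."""
    m = [row[:] for row in matrix]
    n, det = len(m), 1.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            return 0.0
        if pivot != col:
            m[col], m[pivot] = m[pivot], m[col]
            det = -det
        det *= m[col][col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n):
                m[r][c] -= factor * m[col][c]
    return det


def build_kernel(embeddings, quality):
    """Assumed kernel: quality-weighted cosine similarity."""
    n = len(embeddings)
    return [[quality[i] * cosine(embeddings[i], embeddings[j]) * quality[j]
             for j in range(n)] for i in range(n)]


def greedy_dpp(kernel, k, eps=1e-6):
    """Greedily add the item that maximizes det(L_S + eps * I)."""
    selected, remaining = [], list(range(len(kernel)))
    while remaining and len(selected) < k:
        def objective(candidate):
            idx = selected + [candidate]
            sub = [[kernel[a][b] + (eps if a == b else 0.0) for b in idx] for a in idx]
            return determinant(sub)
        best = max(remaining, key=objective)
        selected.append(best)
        remaining.remove(best)
    return sorted(selected)


# Toy candidates: frames 0 and 1 are near-duplicates, frame 2 is distinct.
toy_embeddings = [[1.0, 0.0], [0.99, 0.14], [0.0, 1.0]]
toy_quality = [0.9, 0.8, 0.7]
kernel = build_kernel(toy_embeddings, toy_quality)
print(greedy_dpp(kernel, k=2))  # [0, 2]
```

Although frame 1 has higher quality than frame 2, pairing it with frame 0 yields a near-singular submatrix, so the distinct frame is preferred.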

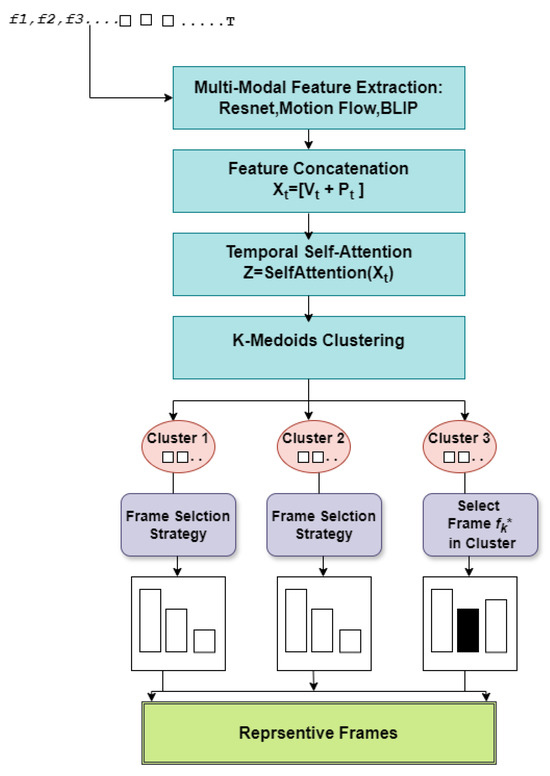

The complete procedure is summarized in Algorithm 2 (C2FVS-DPP) and illustrated in Figure 4.

Algorithm 2. C2FVS-DPP: Contextual feature fusion-based video summarization with DPP-based diversity selection.

Figure 4.

Workflow of the proposed C2FVS-DPP framework for contextual feature fusion and diversity-aware keyframe selection.

The list of variables and their corresponding notations used in the proposed C2FVS-DPP framework are summarized in Table 3.

Table 3.

Summary of notations used in the proposed C2FVS-DPP framework.

5. Experiment

This section details the experimental design and evaluation strategy adopted for the curated dataset. The baseline methods used for comparison are introduced first, followed by a description of the evaluation metrics. An ablation study is then conducted to assess the contribution of individual components. Finally, a comprehensive performance analysis of the baseline methods is presented to demonstrate the effectiveness of the proposed approach.

5.1. Baseline Methods for Evaluation

To evaluate the effectiveness of the proposed method, comparisons are made against a set of baseline methods commonly employed in video summarization and frame selection. Fuzzy C-Means (FCM) [9] clustering is employed as a soft clustering baseline that assigns membership probabilities to each frame, allowing flexible grouping based on feature similarities. We also implement a Dynamic Frame Sampler (DF Sampler) [27], which adaptively selects frames by analyzing motion cues and temporal differences, serving as a baseline for dynamic selection approaches. For feature representation, the Edge-Local Binary Pattern (Edge-LBP) [21] is adopted as a traditional texture descriptor, capturing spatial edge features across video frames. In addition, Feature Intercross and Fusion (FIF) [23] is applied to combine appearance and motion features into a unified representation, providing a simple yet effective baseline technique. Furthermore, Unsupervised Learning Methods (ULMs) [17], such as K-Means and FCM, are incorporated to assess the ability of clustering-based selection without reliance on labeled data. Histogram-Based Representations (HBRs) [20], including color and edge histograms, are used to measure frame similarity and serve as low-level feature baselines. Generative adversarial networks (GANs) [43] are employed to model frame importance and diversity in an unsupervised manner, serving as a robust baseline for generative approaches. The graph neural network (GNN) [44] formulates frame selection as a binary node classification task over a sparse temporal graph, providing a scalable and memory-efficient baseline compared to dense graph-based methods.

5.2. Experimental Setup and Implementation Details

Preprocessing: Video frames are extracted from each clip by sampling every 5th frame, corresponding to approximately 6 frames per second for a 30 fps video, in order to balance temporal coverage and computational efficiency. Each frame is resized to a fixed resolution using OpenCV’s default bilinear interpolation, converted from BGR to RGB format, and normalized to the range [0, 1]. Optical flow is computed between consecutive grayscale frames using the Farneback method with the following parameters: pyr_scale = 0.5, levels = 3, winsize = 15, iterations = 3, poly_n = 5, and poly_sigma = 1.2. The resulting motion vectors are flattened into 1024-dimensional representations and stored as 16-bit floats for memory efficiency.

Model Architecture: The proposed framework, C2FVS-DPP, performs contextual feature fusion for representative frame selection. Appearance features are extracted using the shallow layers of ResNet-50 [35], yielding 2048-dimensional vectors. Motion features are captured via the Farneback optical flow algorithm [36], producing 1024-dimensional vectors. Semantic visual features are obtained from CLIP (ViT-B/32) [45], while semantic text features are derived from BLIP-generated captions [37] and encoded with BERT [38], resulting in 768-dimensional vectors. The extracted features are concatenated into a 3840-dimensional fused representation and linearly projected into a 512-dimensional embedding space with positional encodings. A cross-modal attention module with 8 heads aligns 256-dimensional visual and textual embeddings derived from the fused representation, generating a 256-dimensional joint representation. This is further refined using a Transformer-based semantic scorer comprising 4 layers with 8 attention heads, which computes the final frame-level importance scores.

Within each video shot, K-Medoids clustering with cosine distance groups semantically similar frames, where the optimal cluster number is determined via silhouette analysis. Frame selection is further guided by a reinforcement learning-inspired reward function that balances semantic relevance, intra-cluster diversity, representativeness, and visual uniqueness. The influence of the diversity, representativeness, and visual uniqueness terms is controlled by three tunable hyperparameters. Finally, a Determinantal Point Process (DPP) [7] is applied to sample a compact subset of frames from each shot by constructing a kernel matrix from fused embeddings and scoring outputs, thereby ensuring diversity and comprehensive content coverage in the final summary.

Weight Initialization: Pre-trained weights from ImageNet (ResNet50), CLIP (ViT-B/32), BERT (base-uncased), and BLIP (image-captioning-base) were used to initialize feature extraction modules, leveraging robust representations without fine-tuning [35,37,38,45]. The CrossModalAttention and TransformerScorer modules employed Xavier initialization for linear layers to ensure stable gradients [46]. The projection layer was implemented using the PyTorch v2.3.1+cu121 default initialization (Kaiming) [47]. Positional encodings were deterministically computed using sinusoidal functions (Equation (9)), requiring no random initialization.

Optimization: Due to limited computational resources, the proposed framework operates in inference mode, utilizing pre-trained models (ResNet50, CLIP, BERT, BLIP) without fine-tuning. Feature extraction is performed using batch processing with a batch size of 4 and mixed-precision inference via PyTorch’s autocast to reduce memory overhead. All features are stored in 16-bit float precision to minimize GPU memory usage. The K-Medoids algorithm is employed for clustering with cosine distance, and the number of clusters is determined by maximizing the silhouette score. Frame selection is guided by a reward function combining semantic score, diversity, representativeness, and visual diversity, with the three corresponding weights as tunable hyperparameters. A Determinantal Point Process (DPP) further refines selection by maximizing kernel diversity. All processes are designed for efficient single-pass inference on a Quadro P5000 (NVIDIA Corporation, Santa Clara, CA, USA) (16 GB VRAM) without training.

5.3. Evaluation

The proposed Hybrid Frame Similarity Assessment metric is employed to measure the alignment between frames generated by the Frame Selection Framework (C2FVS-DPP) and those obtained from baseline methods. The evaluation relies on a weighted combination of semantic embeddings extracted from ResNet-50 and ViT, together with perceptual similarity computed using LPIPS.

The three weights for hybrid similarity scoring are first assigned heuristically, balancing semantic contributions from ResNet-50 and ViT [48] with perceptual similarity from LPIPS [49]. These weights are then dynamically adjusted based on the variance of the similarity scores in the respective feature spaces, allowing the metric to emphasize the more discriminative modalities depending on the frame content. The threshold T for frame matching is computed as the maximum of Otsu’s threshold and the 75th percentile of all similarity scores, enabling adaptive and robust filtering. The full procedure is detailed in Algorithm 3.

Algorithm 3. Proposed hybrid frame similarity assessment metric leveraging ResNet-50, ViT, and LPIPS.

Quantities used:

- Set of model-generated video frames.

- Set of ground-truth video frames.

- The i-th frame from the model-generated frames.

- The j-th frame from the ground-truth frames.

- ResNet50 features of the i-th generated frame and the j-th ground-truth frame.

- ViT features of the i-th generated frame and the j-th ground-truth frame.

- ResNet cosine similarity between the two frames.

- ViT cosine similarity between the two frames.

- LPIPS-based perceptual similarity between the two frames.

- Final hybrid similarity score.

- Weights for the ResNet, ViT, and LPIPS similarities, respectively.

- Variances of the respective similarity scores.

- T: Adaptive similarity threshold.
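A minimal sketch of the weighting and thresholding logic is given below. Because the exact adjustment rule is not spelled out here, the sketch assumes that each weight is proportional to the variance of its similarity scores, and that the LPIPS term has already been converted to a similarity (higher means more alike). The function names and the toy score lists are hypothetical.

```python
import statistics

def variance_weights(*score_lists):
    """Assumed rule: each weight is proportional to the variance of its scores."""
    variances = [statistics.pvariance(s) for s in score_lists]
    total = sum(variances)
    if total == 0:
        return [1.0 / len(variances)] * len(variances)
    return [v / total for v in variances]

def otsu_threshold(values, bins=64):
    """Otsu's method on a 1-D histogram: maximize between-class variance."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return lo
    width = (hi - lo) / bins
    hist = [0] * bins
    for v in values:
        hist[min(int((v - lo) / width), bins - 1)] += 1
    centers = [lo + (b + 0.5) * width for b in range(bins)]
    total = len(values)
    total_sum = sum(h * c for h, c in zip(hist, centers))
    best_var, best_t = -1.0, lo
    w0 = sum0 = 0.0
    for b in range(bins - 1):
        w0 += hist[b]
        sum0 += hist[b] * centers[b]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue
        between = w0 * w1 * (sum0 / w0 - (total_sum - sum0) / w1) ** 2
        if between > best_var:
            best_var, best_t = between, lo + (b + 1) * width
    return best_t

def percentile(values, q):
    """Linear-interpolation percentile, q in [0, 100]."""
    ordered = sorted(values)
    pos = (len(ordered) - 1) * q / 100.0
    low = int(pos)
    high = min(low + 1, len(ordered) - 1)
    return ordered[low] + (ordered[high] - ordered[low]) * (pos - low)

def adaptive_threshold(scores):
    """T = max(Otsu threshold, 75th percentile), as described in the text."""
    return max(otsu_threshold(scores), percentile(scores, 75))

# Toy similarity scores for four (generated, ground-truth) frame pairs.
resnet_sim = [0.90, 0.20, 0.80, 0.10]
vit_sim = [0.85, 0.30, 0.75, 0.20]
lpips_sim = [0.60, 0.50, 0.55, 0.45]  # assumed already mapped to a similarity

weights = variance_weights(resnet_sim, vit_sim, lpips_sim)
hybrid = [weights[0] * r + weights[1] * v + weights[2] * p
          for r, v, p in zip(resnet_sim, vit_sim, lpips_sim)]
T = adaptive_threshold(hybrid)
matches = [i for i, s in enumerate(hybrid) if s >= T]
```

Taking the maximum of the two thresholds means a pair must clear both the data-driven Otsu split and the upper-quartile cutoff before it counts as a match.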

5.4. Results on Curated Dataset: SumMe and TVSum50

Several baseline methods are evaluated using the curated versions of the widely adopted SumMe and TVSum50 datasets. The baseline methods include FCM [9], FIF [23], DF Sampler [27], ULM [17], Edge-LBP [21], HBR [20], GAN [43], and GNN [44]. Each of these methods targets keyframe extraction and redundancy reduction through diverse strategies, including clustering, multimodal feature fusion, histogram analysis, edge-based descriptors, generative adversarial networks, and graph neural networks. Specifically, GAN models frame importance and diversity in an unsupervised manner, while GNN formulates frame selection as a binary node classification task over a sparse temporal graph, together providing robust and scalable baselines. Collectively, these approaches offer a comprehensive state-of-the-art comparison for evaluating the effectiveness of our training-free approach.

Following previous works [50,51,52,53] in video summarization evaluation, the F1-score, fidelity score, and compression ratio (CR) are adopted as key evaluation metrics. Table 4 and Table 5 provide a comparative analysis of all evaluated methods. The proposed approach outperforms the baseline models on F1-score and fidelity across both datasets. Notably, the incorporation of multi-descriptor similarity, leveraging ResNet50, ViT, and LPIPS, substantially enhances keyframe representativeness and visual fidelity. Our model achieves the highest average F1-score, reflecting superior alignment with human-annotated summaries. Furthermore, it records high fidelity scores and compression ratios, underscoring the effectiveness of our method in generating compact summaries while preserving semantic content.
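For orientation, the sketch below shows how two of these metrics are commonly computed. It assumes the F1-score is the harmonic mean of precision and recall over matched frames, and that CR is one minus the fraction of frames kept; both definitions, the function names, and the frame counts are illustrative assumptions rather than the exact evaluation protocol.

```python
def f1_score(num_matched, num_selected, num_reference):
    """Harmonic mean of precision and recall over matched frames."""
    if num_matched == 0 or num_selected == 0 or num_reference == 0:
        return 0.0
    precision = num_matched / num_selected
    recall = num_matched / num_reference
    return 2 * precision * recall / (precision + recall)

def compression_ratio(num_selected, num_total):
    """Assumed definition: CR = 1 - (selected frames / total frames)."""
    return 1.0 - num_selected / num_total

# Hypothetical video: 5000 frames, 25 selected, 30 reference keyframes, 6 matches.
f1 = f1_score(num_matched=6, num_selected=25, num_reference=30)
cr = compression_ratio(num_selected=25, num_total=5000)
print(round(f1, 4), round(cr, 4))  # 0.2182 0.995
```

Under this definition a CR close to 1 says only that the summary is short, so it has to be read alongside F1 and fidelity to judge summary quality.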

Table 4.

Performance comparison on curated SumMe dataset.

Table 5.

Performance comparison on curated TVSum50 dataset.

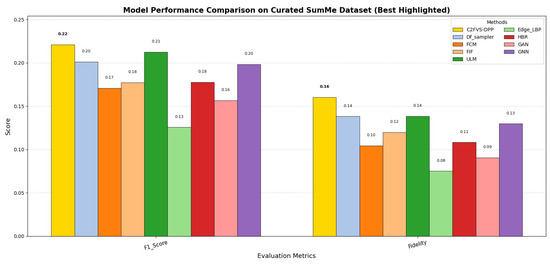

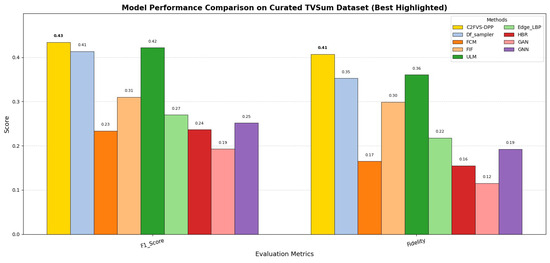

Furthermore, Figure 5 and Figure 6 illustrate the F1-score and fidelity score comparisons between the proposed model and baseline methods on the curated SumMe and TVSum50 datasets, respectively. In both cases, the proposed approach achieves the highest scores, demonstrating its effectiveness in generating accurate and semantically rich video summaries.

Figure 5.

Model performance comparison with baseline model on curated SumMe dataset.

Figure 6.

Model performance comparison with baseline model on curated TVSum50 dataset.

5.5. Ablation Study

To evaluate the contributions of key components in the proposed video summarization model, an ablation study was conducted on the curated SumMe and TVSum50 datasets. This study investigates the impact of various feature types—ResNet50 visual features, optical flow motion features, and BERT textual features—as well as reward components used within the extract_features module. Evaluation metrics include the F1-score, fidelity score, and compression ratio (CR).

For reward ablations on the curated SumMe dataset, as shown in Table 6, removing the diversity reward led to a 43.1% F1-score decrease to 0.1258. Excluding the representativeness reward resulted in a 43.2% reduction to 0.1256. Omitting the visual diversity reward caused a 34.3% decrease, reducing the F1-score to 0.1453. All reward ablations maintained high CRs (≥0.998), indicating that every variant still produced highly compact summaries. Nevertheless, only the full model combined this compactness with high semantic relevance.

Table 6.

Ablation on visual and representativeness rewards (diversity reward active) on curated SumMe dataset.

Table 7 presents the feature ablation study on the curated SumMe dataset. The full model achieved the highest performance, with an F1-score of 0.2212 and a fidelity score of 0.1603. Removing ResNet features resulted in a 40.0% drop in F1-score to 0.1326, while excluding motion features led to a 48.3% decline to 0.1144, underscoring the critical role of temporal structure. BERT-based textual information also proved significant—excluding it caused the F1-score to fall by 43.1% to 0.1258. Although CR remained consistently high (≥0.998), the F1 and fidelity metrics reflect the semantic degradation from each exclusion, highlighting the importance of integrating visual, motion, and textual modalities.

Table 7.

Feature ablation on curated SumMe dataset.

Table 8 shows the reward ablation on the curated TVSum50 dataset. The full model, with all three reward components—diversity, visual, and representativeness—achieved the highest F1-score of 0.4344 and a fidelity score of 0.4071. When both visual and representativeness rewards were removed, the F1-score dropped by 56.1% to 0.1908, with a fidelity score of 0.1188. Removing only the diversity reward resulted in a 60.2% F1-score reduction to 0.1730. Likewise, removing only visual and representativeness components (keeping diversity active) dropped the F1-score to 0.1716. These results confirm that the synergy of all three rewards is critical for optimal summarization performance on TVSum50, despite high CR values (>0.998) across all variants.

Table 8.

Ablation on visual and representativeness rewards (diversity reward active) on curated TVSum50 dataset.

Table 9 presents the feature ablation on the curated TVSum50 dataset. The full model again attained the best performance with an F1-score of 0.4344 and a fidelity score of 0.4071. Removing ResNet features led to a 59.1% drop in F1-score to 0.1778, while excluding motion features dropped it to 0.1724 (a 60.3% decline), indicating the essential role of temporal structure. BERT-based textual information also proved significant—excluding it caused the F1-score to fall by 61.8% to 0.1658. Although CR remained consistently high (>0.998), the F1 and fidelity metrics reflect the semantic degradation from each exclusion, underlining the importance of integrating visual, motion, and textual modalities.

Table 9.

Feature ablation on curated TVSum50 dataset.
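The percentage reductions quoted for the feature ablations follow from the relative drop (full − ablated) / full. The short check below recomputes them from the F1-scores stated in the text; the dictionary keys are shorthand labels introduced here for illustration.

```python
def relative_drop(full, ablated):
    """Relative F1-score reduction in percent."""
    return 100.0 * (full - ablated) / full

FULL = {"SumMe": 0.2212, "TVSum50": 0.4344}
ABLATED = {
    "SumMe": {"no ResNet": 0.1326, "no motion": 0.1144, "no BERT": 0.1258},
    "TVSum50": {"no ResNet": 0.1778, "no motion": 0.1724, "no BERT": 0.1658},
}
REPORTED = {
    "SumMe": {"no ResNet": 40.0, "no motion": 48.3, "no BERT": 43.1},
    "TVSum50": {"no ResNet": 59.1, "no motion": 60.3, "no BERT": 61.8},
}

drops = {
    dataset: {name: relative_drop(FULL[dataset], f1) for name, f1 in variants.items()}
    for dataset, variants in ABLATED.items()
}
largest = {dataset: max(d, key=d.get) for dataset, d in drops.items()}

for dataset, d in drops.items():
    for name, value in d.items():
        print(f"{dataset:8s} {name:10s} {value:5.1f}% (reported {REPORTED[dataset][name]}%)")
```

The recomputed values agree with the reported ones to within rounding of the four-decimal F1-scores. They also show that the largest single drop comes from removing motion features on SumMe and from removing BERT features on TVSum50.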

5.6. Complexity and Efficiency Analysis

We report per-frame compute and runtime for the proposed C2FVS-DPP under the same settings used in our experiments (224×224 input; batch size = 4 unless otherwise specified). All major components are included in the analysis, while CPU-bound steps such as optical flow, K-Medoids, and DPP are reported in terms of wall-time.

Module-wise complexity. Table 10 presents the per-module GFLOPs (forward pass only). The total cost in full mode is 51.67 GFLOPs/frame, dominated by the feature extraction modules (BLIP, CLIP at 8.73 GFLOPs, and ResNet-50). In contrast, the projection layer and the Transformer scorer are lightweight. In edge-mode, amortizing BLIP across shots reduces the total cost to 18.61 GFLOPs/frame, indicating that feature extraction is the primary computational bottleneck.

Table 10.

Module-wise computational cost of C2FVS-DPP (per frame at 224×224). GFLOPs are forward pass only, measured as 2 × MACs. Notes: FLOPs for the Transformer scorer estimated analytically; others measured via THOP. “Total (edge-mode)” amortizes BLIP across shots (for example, at ∼50 frames/shot the per-frame BLIP cost is about 1/50 of one BLIP forward pass).
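The amortization arithmetic can be made explicit with a small sketch. It assumes that BLIP is the only module whose cost differs between the two modes and that a shot contains exactly 50 frames. Under those assumptions, the full-mode and edge-mode totals reported in this section (51.67 and 18.61 GFLOPs/frame) imply a per-call BLIP cost, which is an inferred estimate and not a measured value from Table 10.

```python
def amortized_total(full_total, blip_cost, frames_per_shot):
    """Per-frame cost when BLIP runs once per shot instead of once per frame."""
    return full_total - blip_cost * (1.0 - 1.0 / frames_per_shot)

def implied_blip_cost(full_total, edge_total, frames_per_shot):
    """Invert the relation above to estimate the per-call BLIP cost."""
    return (full_total - edge_total) / (1.0 - 1.0 / frames_per_shot)

FULL_TOTAL = 51.67       # GFLOPs/frame, full mode (reported)
EDGE_TOTAL = 18.61       # GFLOPs/frame, edge-mode (reported)
FRAMES_PER_SHOT = 50     # example shot length from the Table 10 notes

blip_estimate = implied_blip_cost(FULL_TOTAL, EDGE_TOTAL, FRAMES_PER_SHOT)
check = amortized_total(FULL_TOTAL, blip_estimate, FRAMES_PER_SHOT)
print(f"implied BLIP cost ~ {blip_estimate:.2f} GFLOPs per call")
print(f"amortized BLIP cost ~ {blip_estimate / FRAMES_PER_SHOT:.2f} GFLOPs/frame")
```

The saving grows with shot length, so videos with many short shots benefit less from edge-mode than videos with long, stable shots.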

End-to-end efficiency. Table 11 reports latency, throughput, and memory usage. On a desktop GPU, full mode reaches a peak GPU memory of 4.38 GB. In edge-mode, the runtime improves and peak GPU memory falls to 2.72 GB, with reduced host RAM usage. A batch simulated-edge configuration keeps GPU memory below 3 GB. The corresponding per-frame latency (ms/frame) and throughput (FPS) values are listed in Table 11.

Table 11.

End-to-end efficiency of C2FVS-DPP under standardized settings (224×224 input, batch size 4 unless specified). Latency/FPS are mean ± std over 5 runs; video decoding excluded. Notes: Mixed precision when applicable; CUDA events for GPU timing and perf_counter for CPU timing. Peak GPU via NVML; host RAM via RSS.
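The CPU-side part of this measurement protocol (mean ± std over 5 runs, timed with `perf_counter`) can be sketched as follows. The harness name `time_per_frame` and the dummy workload are hypothetical; GPU stages would additionally need CUDA events with synchronization, which this sketch does not cover.

```python
import statistics
import time

def time_per_frame(process_batch, frames, batch_size=4, runs=5):
    """Return (mean ms/frame, std ms/frame, FPS) over several timed runs."""
    per_run_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        for i in range(0, len(frames), batch_size):
            process_batch(frames[i:i + batch_size])
        elapsed = time.perf_counter() - start
        per_run_ms.append(1000.0 * elapsed / len(frames))
    mean_ms = statistics.mean(per_run_ms)
    std_ms = statistics.stdev(per_run_ms)
    fps = 1000.0 / mean_ms if mean_ms > 0 else float("inf")
    return mean_ms, std_ms, fps

def dummy_stage(batch):
    """Stand-in for a CPU-bound stage such as clustering (illustrative only)."""
    return [sum(range(2000)) for _ in batch]

frames = list(range(64))  # placeholder frame handles; decoding is excluded
mean_ms, std_ms, fps = time_per_frame(dummy_stage, frames)
print(f"{mean_ms:.4f} ± {std_ms:.4f} ms/frame ({fps:.0f} FPS)")
```

Reporting the spread across runs alongside the mean makes it easier to tell a real speed-up from run-to-run noise.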

Backbone and Embedding Variants. The results in Table 12 confirm that the combination of ResNet-50 + BLIP→BERT achieves the best accuracy–efficiency balance, matching or surpassing ViT/CLIP variants while requiring substantially fewer GFLOPs and lower GPU memory. While ViT and CLIP models are competitive in accuracy, they incur significantly higher computational cost without consistent improvements in summarization quality. Moreover, BLIP→BERT provides interpretable textual evidence (captions) that can be inspected, whereas joint CLIP embeddings tightly couple vision and language, sometimes biasing selection toward “captionable” content while neglecting visually distinct but semantically sparse frames. The edge-mode variant further reduces per-frame cost (51.67 → 18.61 GFLOPs) and peak GPU usage (4.38 → 2.72 GB) with negligible accuracy loss, highlighting the deployability of the proposed framework in resource-constrained settings.

Table 12.

Backbone and feature extractor choices within the proposed C2FVS-DPP framework: accuracy vs. efficiency trade-offs. Accuracy reported on curated SumMe and TVSum50 datasets. GFLOPs derived from Table 10; latency and peak GPU memory from Table 11. Notes: “Ours (no-CLIP)” removes the CLIP branch, reducing the cost by 8.73 GFLOPs; “Ours (edge-mode)” amortizes BLIP cost across shots. The compression ratio (CR) remained stable (≈0.995–0.998) across all variants and is omitted for brevity. Dashes (—) indicate measurements not performed.

5.7. Application to Surveillance Video Summarization

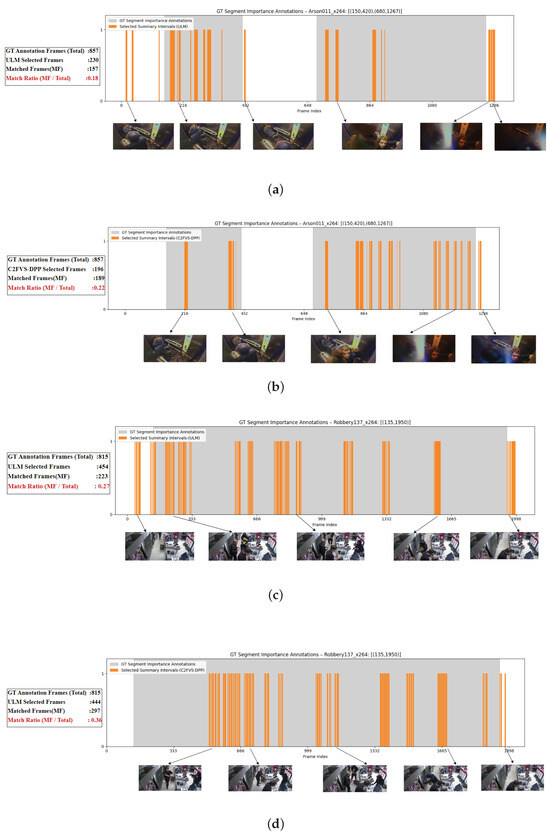

To evaluate the generalizability of the proposed C2FVS-DPP framework, we used the publicly available UCF-Crime Annotation (UCA) dataset [54,55], which provides temporally localized event annotations for real-world surveillance videos. We follow the UCA convention: frames inside an annotated anomalous event interval are labeled as anomalous, and frames outside are labeled as normal. For demonstration, two videos (Arson011_x264 and Robbery137_x264) were selected; their annotated ground-truth important-frame intervals are shown as gray bars in Figure 7.

Figure 7 comprises four panels: (a,b) “Arson011” and (c,d) “Robbery137”. In each pair, the upper panel corresponds to the baseline ULM [17] and the lower panel to the proposed C2FVS-DPP. Gray bars denote the annotated anomalous event intervals, white regions denote normal frames, and orange bars indicate the segments selected by each method.

Figure 7.

Qualitative comparison of frame-level importance scores for two surveillance videos: (a,b) “Arson011” and (c,d) “Robbery137”. Each pair compares the baseline ULM (top) with the proposed C2FVS-DPP (bottom). (a) ULM – Arson011_x264; (b) proposed C2FVS-DPP – Arson011_x264; (c) ULM – Robbery137_x264; (d) proposed C2FVS-DPP – Robbery137_x264.

These results indicate that the proposed method generates coherent, event-aware, and resource-conscious video summaries—properties that are particularly desirable for surveillance workflows where accurate event localization is critical. In our experiments, ResNet-50 and BERT are used for visual and textual feature extraction, respectively, due to their robustness and efficiency in training-free settings.

6. Discussion

The results of the C2FVS-DPP framework mark a significant step forward in training-free video summarization, offering a lightweight, inference-driven approach that integrates multimodal features to produce concise and semantically rich video summaries. This section discusses the evaluation outcomes, compares them with previous studies, addresses the framework’s implications, and highlights limitations and future research directions.

6.1. Evaluation and Comparison with Prior Work

The C2FVS-DPP framework achieves F1-scores of 0.22 and 0.43, as well as fidelity scores of 0.16 and 0.40, on the curated SumMe and TVSum50 datasets, respectively, as shown in Table 4 and Table 5. On both of these metrics it outperforms baseline methods such as FCM [9], FIF [23], DF Sampler [27], ULM [17], Edge-LBP [21], and HBR [20]. In terms of compression ratio, the framework attains 0.9959 on SumMe and 0.9940 on TVSum50, values that are close to, but do not exceed, the best-reported results of 0.9981 and 0.9983, respectively. The F1 and fidelity gains support the hypothesis that fusing appearance (ResNet-50), motion (optical flow), and semantic (BERT-encoded BLIP captions) features, combined with symmetry-aware DPP-based selection, enhances keyframe selection by balancing semantic relevance, visual diversity, and temporal coherence.

Compared to clustering-based methods such as Khalid et al. [9] and Tonge and Thepade [15], which rely on handcrafted or static features, C2FVS-DPP leverages pre-trained deep models to capture richer semantic and temporal information, resulting in higher F1 and fidelity scores. Unlike deep learning-based approaches such as Yang et al. [23], which incur high computational costs due to complex feature interactions, our framework operates efficiently in inference mode, making it suitable for resource-constrained environments. The incorporation of semantic features via BLIP captions extends the work of Song et al. [8], which used video titles for semantic guidance, by enabling applicability to videos without predefined metadata. Additionally, the DPP-based selection aligns with Gygli et al. [7] but improves scalability through greedy MAP inference, addressing redundancy more effectively than traditional clustering methods [16].

The ablation studies (Table 7 and Table 9) underscore the importance of each feature type. Removing motion features causes the largest F1-score reduction on SumMe (48.3%) and a 60.3% reduction on TVSum50, where it is second only to the 61.8% reduction from removing BERT-based textual features. This is consistent with the emphasis placed on temporal dynamics in video summarization by Zhang et al. [22]. The reward-based re-ranking mechanism, balancing diversity, representativeness, and visual uniqueness (Table 6 and Table 8), addresses a persistent limitation in prior methods: the tendency to select redundant frames [15]. The Hybrid Frame Similarity Assessment metric (Algorithm 3), combining ResNet-50, ViT, and LPIPS features, provides a robust evaluation framework, improving upon static metrics used in Narasimhan et al. [50] by incorporating dynamic weight adjustments for adaptive similarity scoring.

6.2. Dataset Curation and Implications

The curated SumMe and TVSum50 datasets, reducing frames by approximately 98.8% and 99.3% while achieving GT score improvements of 3.62× and 1.66×, respectively (Table 1), address a critical limitation of the original datasets: redundancy and uneven score distributions. The statistical selection strategy (Algorithm 1) enhances semantic density, resulting in summaries with near-perfect compression ratios (0.9959 for SumMe and 0.9940 for TVSum50) close to the best-reported values. This trade-off prioritizes concise, high-quality summaries, aligning with the needs of applications like video captioning and highlight localization, where semantic clarity is paramount. Compared to Gygli et al. [7] and Song et al. [8], the curated datasets provide a more robust benchmark for evaluating summarization frameworks, as evidenced by the improved GT scores and reduced summary lengths (1.25% for SumMe and 0.75% for TVSum50).

The application to the UCF-Crime Annotation dataset (Figure 7) demonstrates C2FVS-DPP’s generalizability to surveillance contexts, where it aligns more closely with annotated event intervals than the unsupervised baseline [17]. This suggests practical utility in domains like security monitoring, where precise event localization is critical, offering an advantage over computationally intensive methods such as that by Yuan et al. [55].

6.3. Limitations and Future Work

Despite its strengths, C2FVS-DPP has certain limitations. The reliance on pre-trained models (ResNet-50, CLIP, BERT, BLIP) assumes that their representations generalize well across diverse video domains, which may not hold for highly specialized content such as medical or industrial videos. While our framework mitigates some risks through motion features and diversity-aware selection, pre-trained encoders may not transfer perfectly. Future work may explore domain-adapted encoders or advanced vision–language models (e.g., CLIP, FLAVA, BLIP-2) under higher compute budgets. We also plan to investigate lightweight adaptation strategies such as parameter-efficient fine-tuning (e.g., LoRA, adapters) and few-shot domain-specific training to improve adaptability without compromising efficiency.

The fixed sampling interval may miss rapid scene changes in high-frame-rate videos, potentially reducing temporal granularity. To address this, we outline a preliminary adaptive sampling strategy in which the interval is dynamically adjusted based on optical flow intensity, using smaller intervals in high-motion regions and larger ones in static regions. This motion-driven scheme aims to preserve temporal granularity while reducing redundancy, thereby improving both efficiency and summary quality without altering the training-free nature of C2FVS-DPP.
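One way such a motion-driven scheme could look is sketched below. The per-frame motion magnitudes are assumed to be precomputed (for example, mean optical flow magnitude), and the step bounds `min_step` and `max_step` as well as the linear mapping from motion level to step size are hypothetical choices for illustration only.

```python
def adaptive_sample(motion, min_step=2, max_step=16):
    """Pick frame indices with a step that shrinks as motion intensity grows."""
    if not motion:
        return []
    peak = max(motion) or 1.0  # avoid division by zero for fully static clips
    selected, i = [], 0
    while i < len(motion):
        selected.append(i)
        level = motion[i] / peak  # 0 = static, 1 = highest motion in the clip
        step = round(max_step - level * (max_step - min_step))
        i += max(min_step, step)
    return selected

# Toy clip: static, then a burst of motion (frames 40-59), then static again.
motion = [0.0] * 40 + [1.0] * 20 + [0.0] * 40
picked = adaptive_sample(motion)
print(picked)  # [0, 16, 32, 48, 50, 52, 54, 56, 58, 60, 76, 92]
```

Because the step is chosen from the motion at the current sample only, the sketch can jump over the start of a burst (frames 40 to 47 here). A practical variant would probably look ahead or cap the step near detected motion onsets.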

We also recognize that several hyperparameters (e.g., clustering settings, reward weights, similarity thresholds) were chosen heuristically. Although preliminary experiments indicated stable performance trends, a more systematic sensitivity analysis could yield deeper insights. We plan to perform such an analysis to further validate parameter robustness.

To enhance robustness against geometric attacks and distortions, we plan to integrate distortion-invariant feature descriptors (e.g., SIFT, ORB) and lightweight alignment techniques inspired by anti-geometric attack strategies in the watermarking literature (Wang et al. [56]; Wen et al. [57]).

Furthermore, adopting multi-level feature hierarchies, as demonstrated in robust human parsing frameworks, may strengthen the framework’s ability to handle complex and cluttered scenes. In particular, we aim to incorporate multi-level feature learning inspired by “From Simple to Complex Scenes: Learning Robust Feature Representations for Accurate Human Parsing” [58], in which hierarchical visual features from ResNet-50’s shallow, intermediate, and deep layers would be aligned with motion and semantic features via lightweight attention. This integration is expected to enhance robustness in complex scenarios and complement our reward-guided and DPP-based selection strategies, improving both semantic and temporal coherence.

In addition, although the current framework focuses on visual and semantic modalities, extending it toward multimodal audio–visual–textual fusion represents a natural next step. Future work will explore how textual cues (e.g., video titles or transcripts) and audio signals can complement visual features to enhance summarization quality in multimodal scenarios.

Finally, while our experiments demonstrate strong average performance across benchmarks, detailed per-category breakdowns (e.g., sports, news, surveillance) remain constrained by dataset size and available annotations. We recognize this as an important direction and plan to conduct category-level evaluations once more balanced multimodal datasets become available.

In summary, future extensions of C2FVS-DPP will explore adaptive frame sampling, systematic parameter studies, distortion-resilient alignment, multi-level feature learning, lightweight fine-tuning, multimodal fusion with audio, and category-level evaluations. These directions will further strengthen the framework’s robustness, generalization, and semantic–temporal coherence.

6.4. Broader Implications

In the broader context, C2FVS-DPP bridges the gap between computational efficiency and semantic richness, offering a scalable, training-free solution that rivals deep learning-based methods while maintaining interpretability. Its modular design allows integration with emerging feature extractors or clustering algorithms, facilitating advancements in real-time video summarization. The framework’s high compression ratios and effectiveness in surveillance contexts (Figure 7) suggest potential for real-world applications, such as content indexing, video browsing, and automated surveillance analysis. By addressing redundancy and enhancing semantic density through dataset curation, C2FVS-DPP sets a new standard for evaluating video summarization frameworks, with implications for both research and practical deployment in resource-constrained environments.

7. Conclusions

The proposed C2FVS-DPP framework offers a robust, training-free solution for video summarization, effectively addressing the challenge of selecting semantically rich, diverse, and representative keyframes without the need for costly model training. By integrating appearance features from ResNet-50, motion cues from optical flow, and semantic representations from BERT-encoded BLIP captions, the framework achieves superior performance on the curated SumMe and TVSum50 datasets, with F1-scores of 0.221 and 0.434 and fidelity scores of 0.160 and 0.407, respectively. The combination of K-Medoids clustering, reward-guided re-ranking, and Determinantal Point Process (DPP) selection ensures summaries that balance semantic importance, visual diversity, temporal coherence, and symmetry.

Beyond methodological advances, this work contributes curated benchmark datasets that reduce frames by 98.75% (SumMe) and 99.25% (TVSum50), while improving ground-truth scores by factors of 3.62 and 1.66, respectively. Moreover, the introduction of a Hybrid Frame Similarity Assessment metric offers a robust evaluation tool that better aligns with human perceptual judgments. The framework’s generalizability is further confirmed on the UCF-Crime Annotation dataset, where it demonstrates strong performance in event localization for surveillance applications.

Despite its advantages, the proposed framework has certain limitations, including reliance on general-purpose pre-trained models, heuristic hyperparameter choices, and the use of a fixed sampling interval. These limitations point to several promising directions for future research: adaptive frame sampling strategies guided by optical flow, systematic threshold analysis, lightweight parameter-efficient fine-tuning (e.g., LoRA, adapters), integration of advanced vision–language models (e.g., CLIP, FLAVA, BLIP-2), incorporation of multi-level feature hierarchies, enhanced resilience against geometric distortions, multimodal audio–visual–textual fusion, and comprehensive category-level evaluations. Such extensions would further strengthen the framework’s robustness, adaptability, and semantic–temporal coherence while maintaining the efficiency of its training-free design.

In summary, the C2FVS-DPP framework establishes a new benchmark for efficient, interpretable, and scalable video summarization. By leveraging symmetry in both multimodal feature fusion and the trade-off between representativeness and diversity, the framework produces compact yet semantically meaningful summaries that preserve balance across visual and semantic dimensions. This symmetry-aware design has broad implications for applications in content indexing, video browsing, and automated surveillance, advancing resource-efficient and robust multimedia analysis.

Supplementary Materials

The following supporting information is available: the curated frame selection scores for each video clip in the SumMe and TVSum50 datasets (CSV format). This file includes the selected frames with their corresponding ground-truth scores for all videos used in this study. The dataset is available at: https://github.com/aparyayk/curated-dataset-summe-tvsum.git (accessed on 5 October 2025).

Author Contributions

Conceptualization, methodology, software, formal analysis, investigation, data curation, visualization, and writing—original draft preparation, A.K.; validation, resources, writing—review and editing, supervision, and project administration, C.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Publicly available datasets were analyzed in this study. The SumMe dataset can be accessed at https://www.kaggle.com/datasets/brothers30sad/summe-dataset (accessed on 5 October 2025), the TVSum50 dataset at https://github.com/yalesong/tvsum (accessed on 5 October 2025), and the UCF-Crime dataset at https://github.com/Xuange923/Surveillance-Video-Understanding (accessed on 5 October 2025). Processed benchmark versions curated in this study are available from the corresponding author upon reasonable request.

Acknowledgments

The authors gratefully acknowledge the Department of Computer Science and Engineering, College of Engineering, Anna University, Guindy, Chennai, for providing the resources and support necessary to conduct this research. The use of the SumMe, TVSum50, and UCF-Crime Annotation (UCA) datasets is also acknowledged; these resources were indispensable for the development and evaluation of the C2FVS-DPP framework. The original dataset creators and annotators are sincerely recognized for their contributions in designing and collecting the datasets. They were not involved in the analysis, interpretation, or preparation of this manuscript.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Argaw, D.M.; Soldan, M.; Pardo, A.; Zhao, C.; Caba Heilbron, F.; Chung, J.S.; Ghanem, B. Towards automated movie trailer generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 7445–7454. [Google Scholar] [CrossRef]

- Irie, G.; Satou, T.; Kojima, A.; Yamasaki, T.; Aizawa, K. Automatic trailer generation. In Proceedings of the ACM International Conference on Multimedia, Firenze, Italy, 25–29 October 2010; Association for Computing Machinery: New York, NY, USA, 2010; pp. 839–842. [Google Scholar] [CrossRef]

- Muhammad, W.; Ahmed, I.; Ahmad, J.; Nawaz, M.; Alabdulkreem, E.; Ghadi, Y. A video summarization framework based on activity attention modeling using deep features for smart campus surveillance system. PeerJ Comput. Sci. 2022, 8, e911. [Google Scholar] [CrossRef] [PubMed]

- Zheng, Y.; Zhang, Y.; Feng, R.; Zhang, T.; Fan, W. Stacked Multimodal Attention Network for Context-Aware Video Captioning. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 31–42. [Google Scholar] [CrossRef]

- Aoki, N.; Mori, N.; Okada, M. Analysis of LLM-Based Narrative Generation using the Agent-based Simulation. In Proceedings of the 2023 15th International Congress on Advanced Applied Informatics Winter (IIAI-AAI-Winter), Tokyo, Japan, 11–13 December 2023. [Google Scholar] [CrossRef]

- Yuan, Y.; Zhang, J. Unsupervised Video Summarization via Deep Reinforcement Learning With Shot-Level Semantics. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 445–456. [Google Scholar] [CrossRef]

- Gygli, M.; Grabner, H.; Riemenschneider, H.; Gool, L.V. Creating Summaries from User Videos. In Proceedings of the European Conference on Computer Vision (ECCV), Zurich, Switzerland, 6–12 September 2014; Lecture Notes in Computer Science. Volume 8695, pp. 505–520. [Google Scholar] [CrossRef]

- Song, Y.; Vallmitjana, J.; Stent, A.; Jaimes, A. TVSum: Summarizing Web Videos Using Titles. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 5179–5187. [Google Scholar] [CrossRef]

- Khalid, E.T.; Jassim, S.A.; Saqaeeyan, S. Fuzzy C-mean Clustering Technique Based Visual Features Fusion for Automatic Video Summarization Method. Multimed. Tools Appl. 2024, 83, 87673–87696. [Google Scholar] [CrossRef]

- Ding, Y.; Shen, D.; Ye, L.; Zhu, W. A Keyframe Extraction Method Based on Transition Detection and Image Entropy. In Proceedings of the 7th International Conference on Communication, Image and Signal Processing (CCISP), Chengdu, China, 18–20 November 2022. [Google Scholar] [CrossRef]

- Prabakaran, T.; S, P.; Kumar, L.; Deshpande, M.V.; S, A.; Fahlevi, M. Keyframe Extraction using Convolutional Neural Networks. In Proceedings of the 2nd International Conference on Technological Advancements in Computational Sciences (ICTACS), Tashkent, Uzbekistan, 10–12 October 2022. [Google Scholar] [CrossRef]

- Lin, J.; hua Zhong, S.; Fares, A. Deep hierarchical LSTM networks with attention for video summarization. Comput. Electr. Eng. 2021, 93, 107618. [Google Scholar] [CrossRef]

- Hu, Y.; Barth, E. Novel Design Ideas that Improve Video-Understanding Networks with Transformers. In Proceedings of the 2024 International Joint Conference on Neural Networks (IJCNN), Luebeck, Germany, 30 June–5 July 2024. [Google Scholar] [CrossRef]

- Shamsi, F.; Sindhu, I. Condensing Video Content: Deep Learning Advancements and Challenges in Video Summarization Innovations. IEEE Access 2025, 13, 3526068. [Google Scholar] [CrossRef]

- Tonge, A.; Thepade, S.D. A Novel Approach for Static Video Content Summarization Using Shot Segmentation and K-Means Clustering. In Proceedings of the 2022 IEEE 2nd Mysore Sub Section International Conference (MysuruCon), Mysuru, India, 16–17 October 2022. [Google Scholar] [CrossRef]

- Wang, F.; Chen, J.; Liu, F. Keyframe Generation Method via Improved Clustering and Silhouette Coefficient for Video Summarization. J. Web Eng. 2021, 20, 147–170. [Google Scholar] [CrossRef]

- Gangwani, V.S.; Ramteke, P.L. An Approach for Video Summarization Based on Unsupervised Learning Using Deep Semantic Features and Keyframe Extraction. In Proceedings of the 2024 5th International Conference on Image Processing and Capsule Networks (ICIPCN), Dhulikhel, Nepal, 3–4 July 2024. [Google Scholar] [CrossRef]

- Gharbi, H.; Bahroun, S.; Zagrouba, E. Keyframe Extraction for Video Summarization Using Local Description and Repeatability Graph Clustering. Signal Image Video Process. 2019, 13, 507–515. [Google Scholar] [CrossRef]

- Kaur, S.; Kaur, L.; Lal, M. An Effective Key Frame Extraction Technique Based on Feature Fusion and Fuzzy-C Means Clustering with Artificial Hummingbird. Sci. Rep. 2024, 14, 26651. [Google Scholar] [CrossRef]

- Rodriguez, J.M.D.; Yao, P.; Wan, W. Selection of Key Frames through the Analysis and Calculation of the Absolute Difference of Histograms. In Proceedings of the 2018 IEEE International Conference on Audio, Language and Image Processing (ICALIP), Shanghai, China, 16–17 July 2018. [Google Scholar] [CrossRef]

- Nandini, H.M.; Chethan, H.K.; Rashmi, B.S. Shot based keyframe extraction using edge-LBP approach. J. King Saud Univ.–Comput. Inf. Sci. 2022, 34, 4537–4545.

- Zhang, Y.; Tao, R.; Wang, Y. Motion-state-adaptive video summarization via spatiotemporal analysis. IEEE Trans. Circuits Syst. Video Technol. 2016, 26, 1340–1352.

- Yang, G.; He, Z.; Su, Z.; Li, Y.; Hu, B. Keyframe Recommendation Based on Feature Intercross and Fusion. Complex Intell. Syst. 2024, 10, 4955–4971.

- Li, J.; Yao, T.; Ling, Q.; Mei, T. Detecting Shot Boundary with Sparse Coding for Video Summarization. Neurocomputing 2017, 266, 66–78.

- Alharbi, F.; Habib, S.; Albattah, W.; Jan, Z.; Alanazi, M.D.; Islam, M. Effective Video Summarization Using Channel Attention-Assisted Encoder–Decoder Framework. Symmetry 2024, 16, 680.

- Zhang, J.; Wu, G.; Bi, X.; Cui, Y. Video Summarization Generation Network Based on Dynamic Graph Contrastive Learning and Feature Fusion. Electronics 2024, 13, 2039.

- Mehta, N.K.; Prasad, S.S.; Saurav, S.; Singh, S. DF Sampler: A Self-Supervised Method for Adaptive Keyframe Sampling. In Proceedings of the 2024 IEEE 29th International Conference on Emerging Technologies and Factory Automation (ETFA), Padua, Italy, 10–13 September 2024.

- Singh, K.; Mohite, N.R.; R, P.; R, M.H.; Nuthi, P.K. Multimodal Video Summarization using Attention-based Transformers (MVSAT). In Proceedings of the 2024 IEEE International Conference on Interdisciplinary Approaches in Technology and Management for Social Innovation (IATMSI), Bangalore, India, 14–16 March 2024.

- Guo, Y.; Xing, J.; Hou, X.; Xin, S.; Jiang, J.; Terzopoulos, D.; Jiang, C.; Liu, Y. CFSum: A Transformer-Based Multi-Modal Video Summarization Framework with Coarse-Fine Fusion. In Proceedings of the 2025 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Hyderabad, India, 6–11 April 2025; IEEE: Piscataway, NJ, USA, 2025.

- Ji, Z.; Xiong, K.; Pang, Y.; Li, X. Video Summarization with Attention-Based Encoder–Decoder Networks. IEEE Trans. Circuits Syst. Video Technol. 2020, 30, 1709–1717.

- Hsu, T.C.; Liao, Y.S.; Huang, C.R. Video Summarization with Spatiotemporal Vision Transformer. IEEE Trans. Image Process. 2023, 32, 3013–3026.

- Guo, Z.; Zhao, Z.; Wang, N.; Jin, W.; Wei, Z.; Yang, M.; Yuan, N.J. Multi-turn Video Question Generation via Reinforced Multi-Choice Attention Network. IEEE Trans. Circuits Syst. Video Technol. 2021, 31, 1697–1710.

- Cleverdon, C.W. The Cranfield Tests on Index Language Devices. Aslib Proc. 1967, 19, 173–194.

- van Rijsbergen, C.J. Information Retrieval, 2nd ed.; Butterworth-Heinemann: London, UK, 1979.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Farnebäck, G. Two-Frame Motion Estimation Based on Polynomial Expansion. In Image Analysis, Proceedings of the 13th Scandinavian Conference on Image Analysis (SCIA); Bigun, J., Gustavsson, T., Eds.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2003; Volume 2749, pp. 363–370.

- Li, J.; Li, D.; Xiong, C.; Hoi, S. BLIP: Bootstrapping Language-Image Pre-training for Unified Vision-Language Understanding and Generation. In Proceedings of the 39th International Conference on Machine Learning (ICML), Baltimore, MD, USA, 17–23 July 2022; Proceedings of Machine Learning Research; Volume 162, pp. 12888–12900.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019; Volume 1 (Long and Short Papers), pp. 4171–4186.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), Long Beach, CA, USA, 4–9 December 2017; Volume 30.

- Castellano, B. PySceneDetect: A Python-Based Video Scene Detection Library. 2023. Available online: https://pyscenedetect.readthedocs.io (accessed on 5 October 2025).

- Kaufman, L.; Rousseeuw, P.J. Clustering by means of medoids. In Proceedings of the Statistical Data Analysis Based on the L1-Norm and Related Methods, Neuchâtel, Switzerland, 31 August–4 September 1987; pp. 405–416.

- Kulesza, A.; Taskar, B. Determinantal Point Processes for Machine Learning. Found. Trends Mach. Learn. 2012, 5, 123–286.

- Minaidi, M.N.; Papaioannou, C.; Potamianos, A. Self-Attention Based Generative Adversarial Networks for Unsupervised Video Summarization. In Proceedings of the 31st European Signal Processing Conference (EUSIPCO), Helsinki, Finland, 4–8 September 2023; pp. 571–575.

- Chaves, J.M.R.; Tripathi, S. VideoSAGE: Video Summarization with Graph Representation Learning. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Seattle, WA, USA, 17–18 June 2024; pp. 259–264.

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning Transferable Visual Models from Natural Language Supervision. In Proceedings of the 38th International Conference on Machine Learning (ICML), Virtual Event, 18–24 July 2021; Volume 139. Available online: https://arxiv.org/abs/2103.00020 (accessed on 5 October 2025).

- Glorot, X.; Bengio, Y. Understanding the Difficulty of Training Deep Feedforward Neural Networks. In Proceedings of the 13th International Conference on Artificial Intelligence and Statistics (AISTATS), Chia Laguna Resort, Sardinia, Italy, 13–15 May 2010; JMLR Workshop and Conference Proceedings; Volume 9, pp. 249–256.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1026–1034.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16×16 Words: Transformers for Image Recognition at Scale. arXiv 2021, arXiv:2010.11929.

- Zhang, R.; Isola, P.; Efros, A.A.; Shechtman, E.; Wang, O. The Unreasonable Effectiveness of Deep Features as a Perceptual Metric. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 586–595.

- Narasimhan, M.; Rohrbach, A.; Darrell, T. CLIP-It! Language-Guided Video Summarization. In Proceedings of the 35th Conference on Neural Information Processing Systems (NeurIPS), Virtual Event, 6–14 December 2021.

- Paul, M.K.A.; Kavitha, J.; Rani, P.A.J. Key-frame Extraction Techniques: A Review. Recent Patents Comput. Sci. 2018, 11, 3–16.

- Apostolidis, E.; Adamantidou, E.; Metsai, A.I.; Mezaris, V.; Patras, I. Video Summarization Using Deep Neural Networks: A Survey. Proc. IEEE 2021, 109, 1838–1863.

- Gharbi, H.; Bahroun, S.; Massaoudi, M.; Zagrouba, E. Key Frames Extraction Using Graph Modularity Clustering for Efficient Video Summarization. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 1502–1506.

- Sultani, W.; Chen, C.; Shah, M. Real-World Anomaly Detection in Surveillance Videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 6479–6488.

- Yuan, T.; Zhang, X.; Liu, K.; Liu, B.; Chen, C.; Jin, J.; Jiao, Z. Towards Surveillance Video-and-Language Understanding: New Dataset, Baselines, and Challenges. arXiv 2023, arXiv:2309.13925.

- Wang, C.; Zhang, Q.; Wang, X.; Zhou, L.; Li, Q.; Xia, Z.; Ma, B.; Shi, Y.Q. Light-Field Image Multiple Reversible Robust Watermarking Against Geometric Attacks. IEEE Trans. Dependable Secur. Comput. 2025, early access.

- Wen, W.; Ye, Y.; Yuan, Z.; Qiu, B.; Hua, D. LFIZW-GRHFMR: Robust Zero-Watermarking with GRHFMR for Light Field Image. ACM Trans. Multimed. Comput. Commun. Appl. (TOMM) 2025, 21, 126:1–126:17.

- Liu, Y.; Wang, C.; Lu, M.; Yang, J.; Gui, J.; Zhang, S. From Simple to Complex Scenes: Learning Robust Feature Representations for Accurate Human Parsing. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 5449–5463.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).