Using Large Language Models to Analyze Interviews for Driver Psychological Assessment: A Performance Comparison of ChatGPT and Google-Gemini

Abstract

1. Introduction

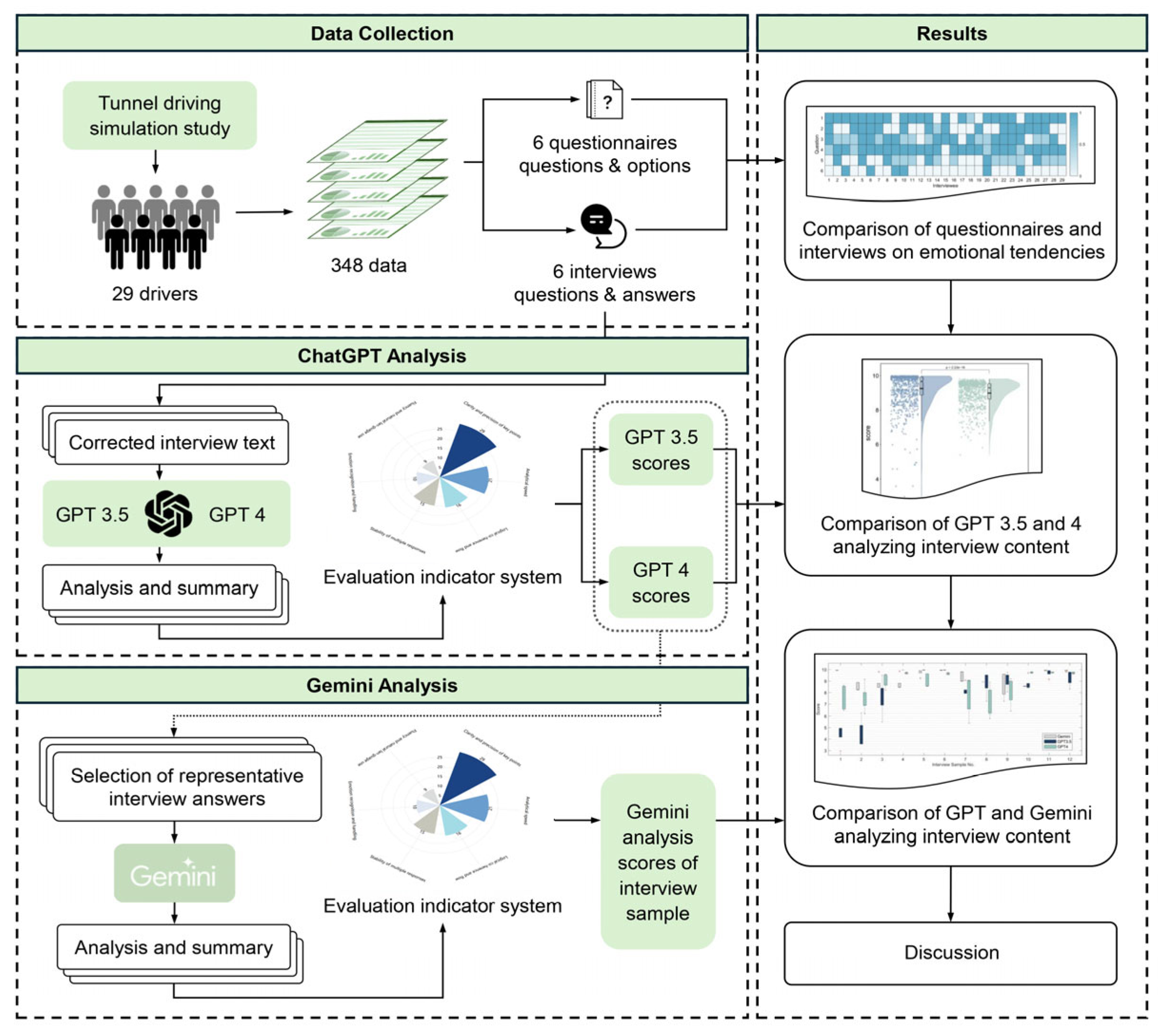

2. Methods

2.1. Dataset Introduction

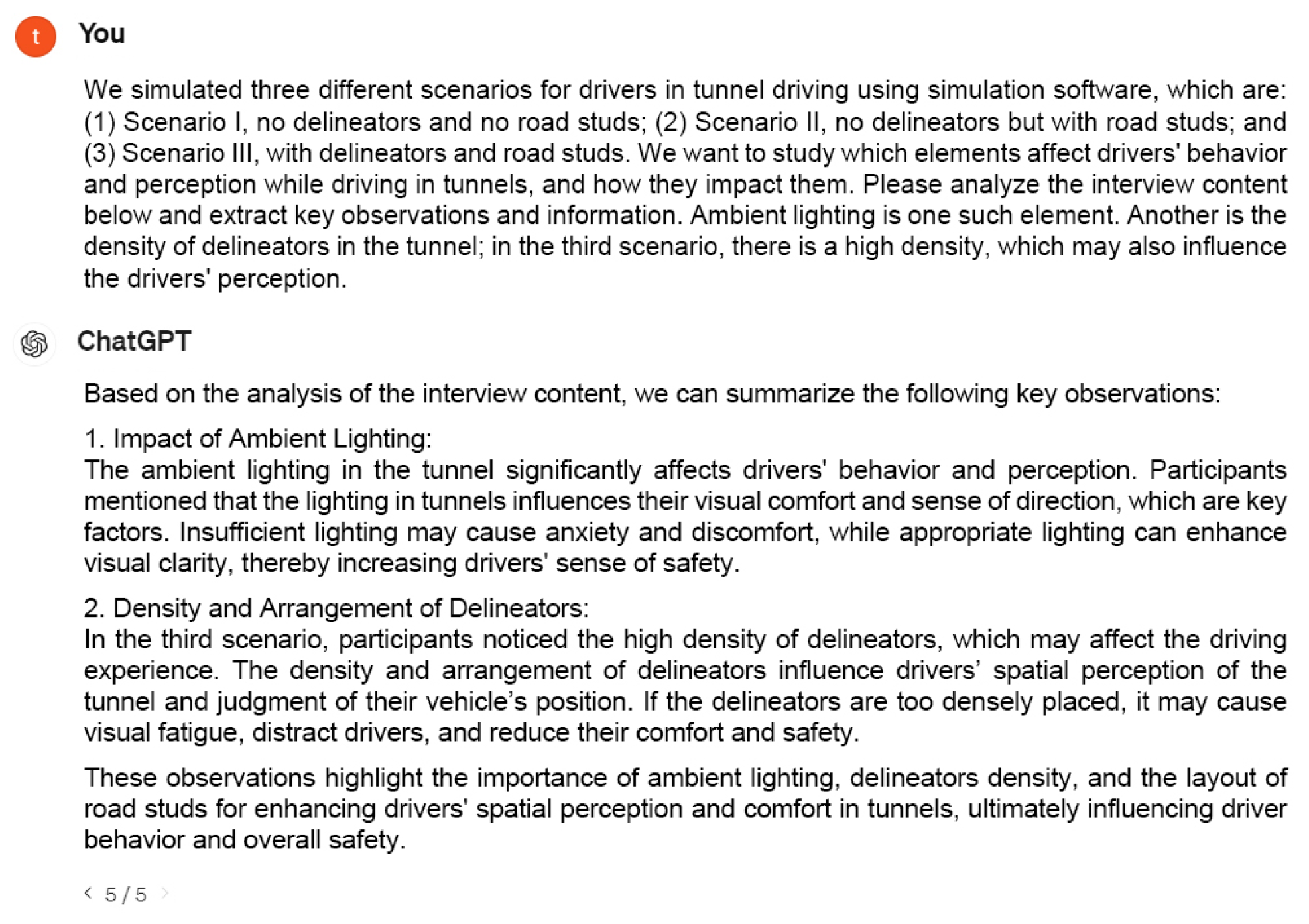

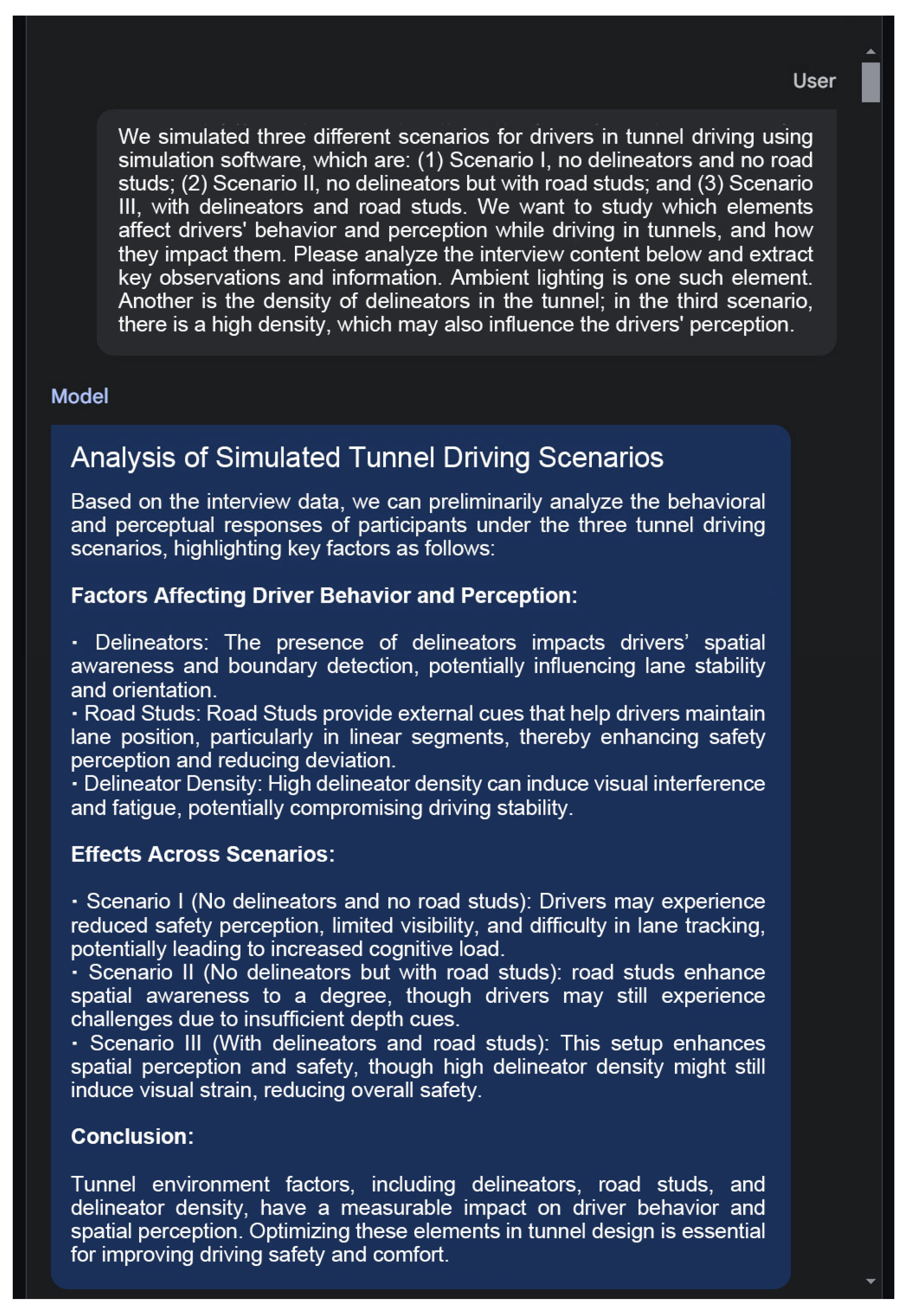

2.2. Input Commands

2.3. Experimental Procedure

2.4. Ethical Statements

3. Results

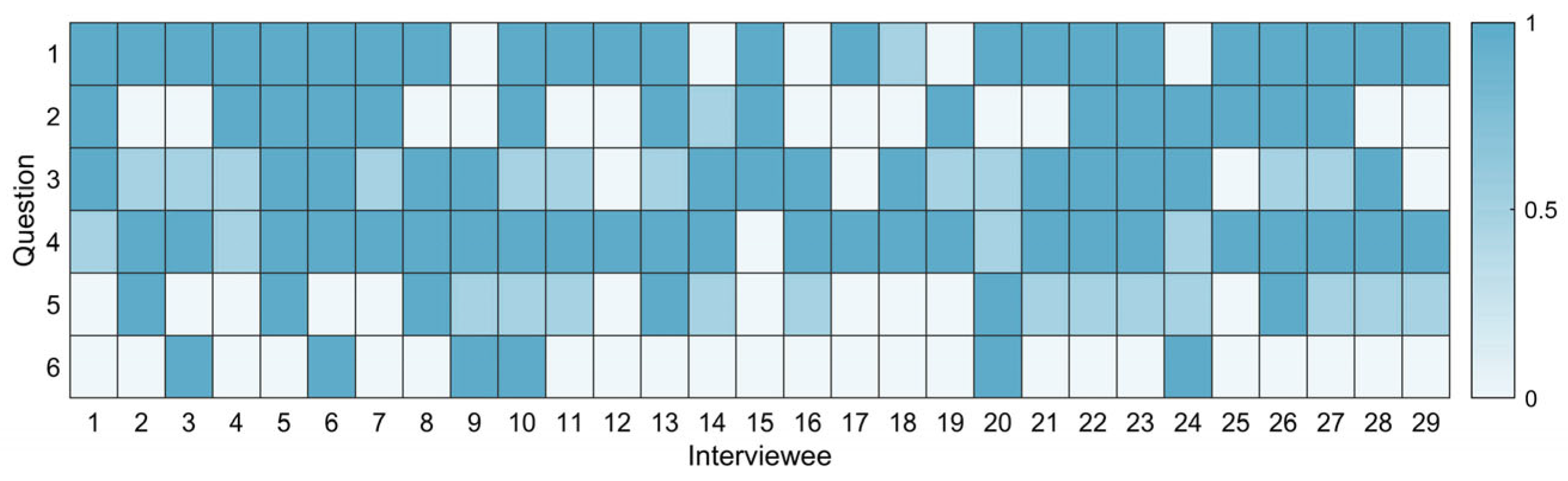

3.1. Comparison of Questionnaire and Interview Results

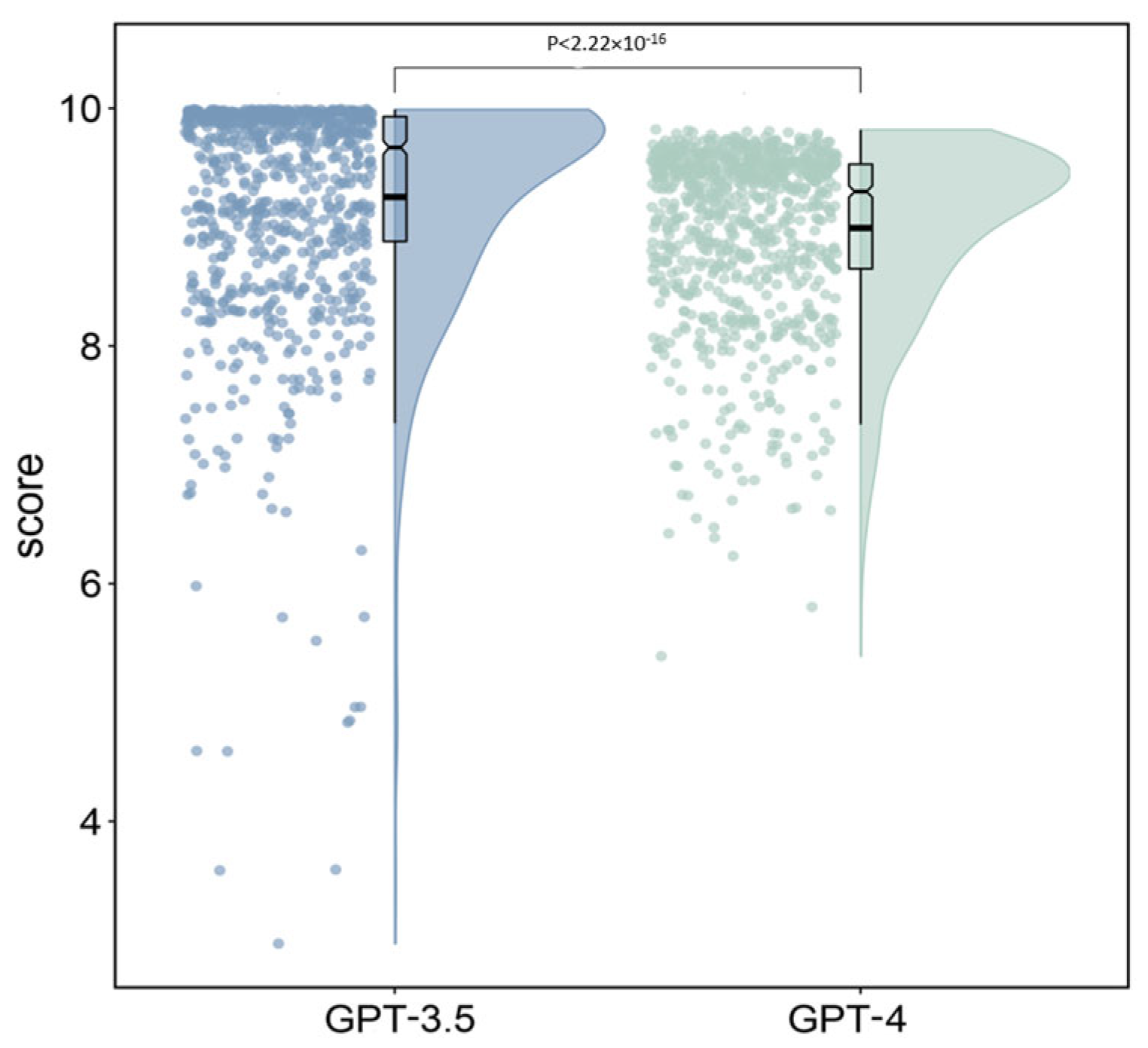

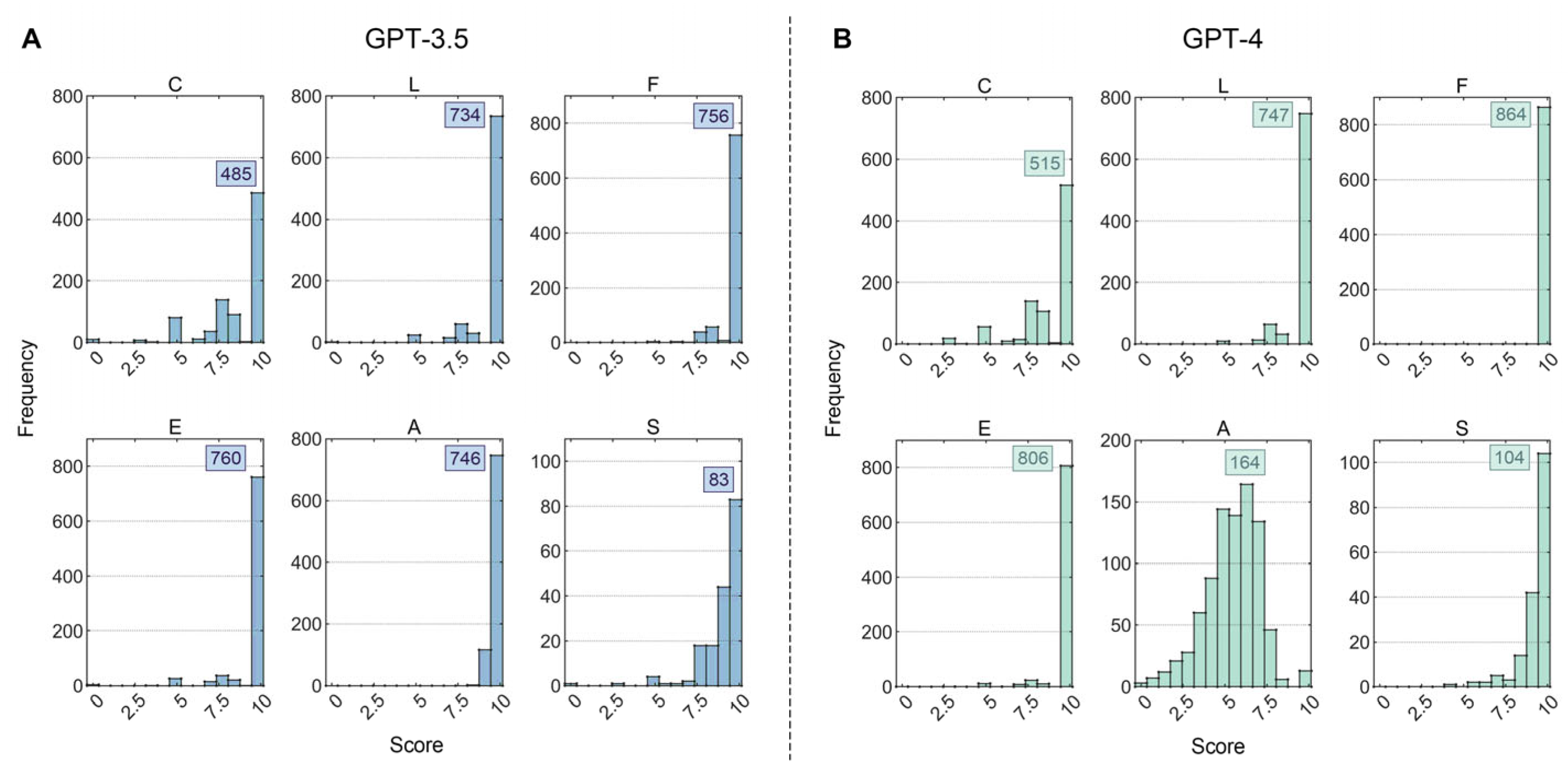

3.2. Comparison of Sentiment Analysis Levels Between GPT-3.5 and GPT-4

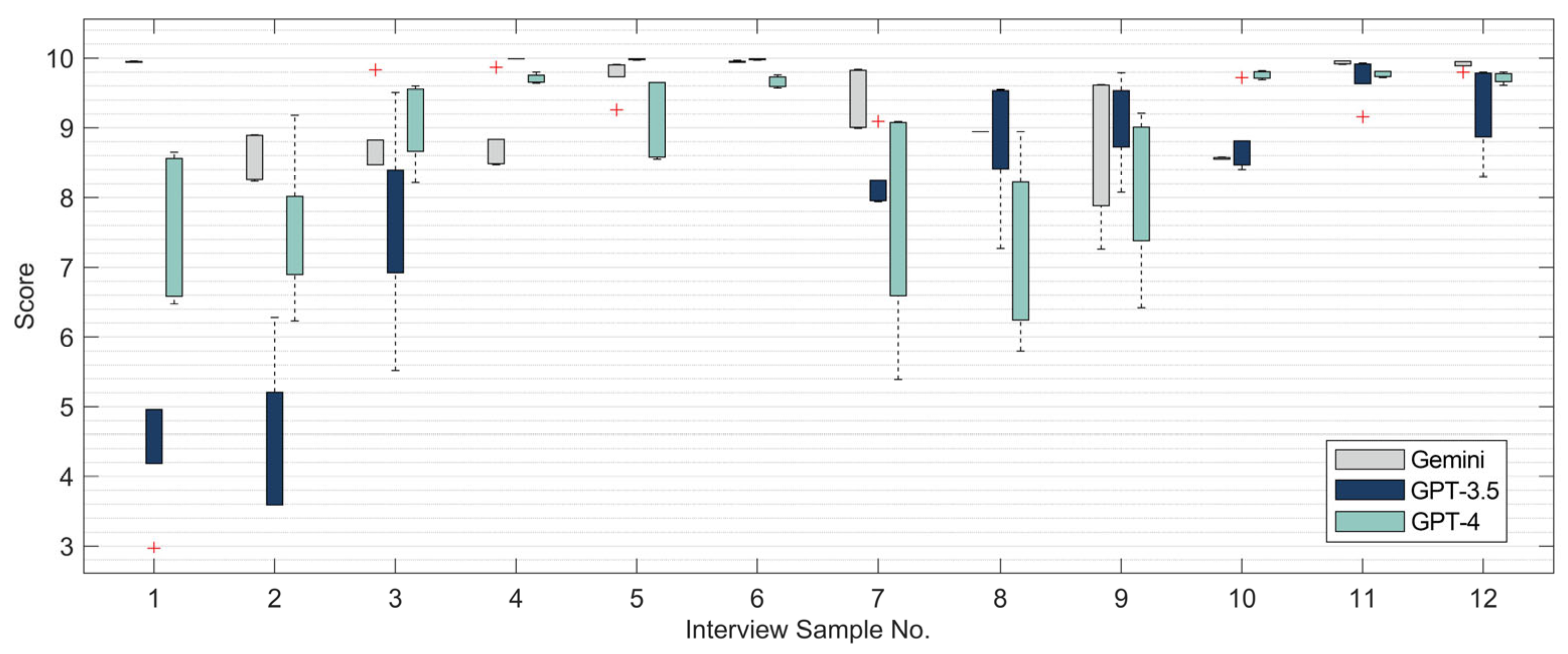

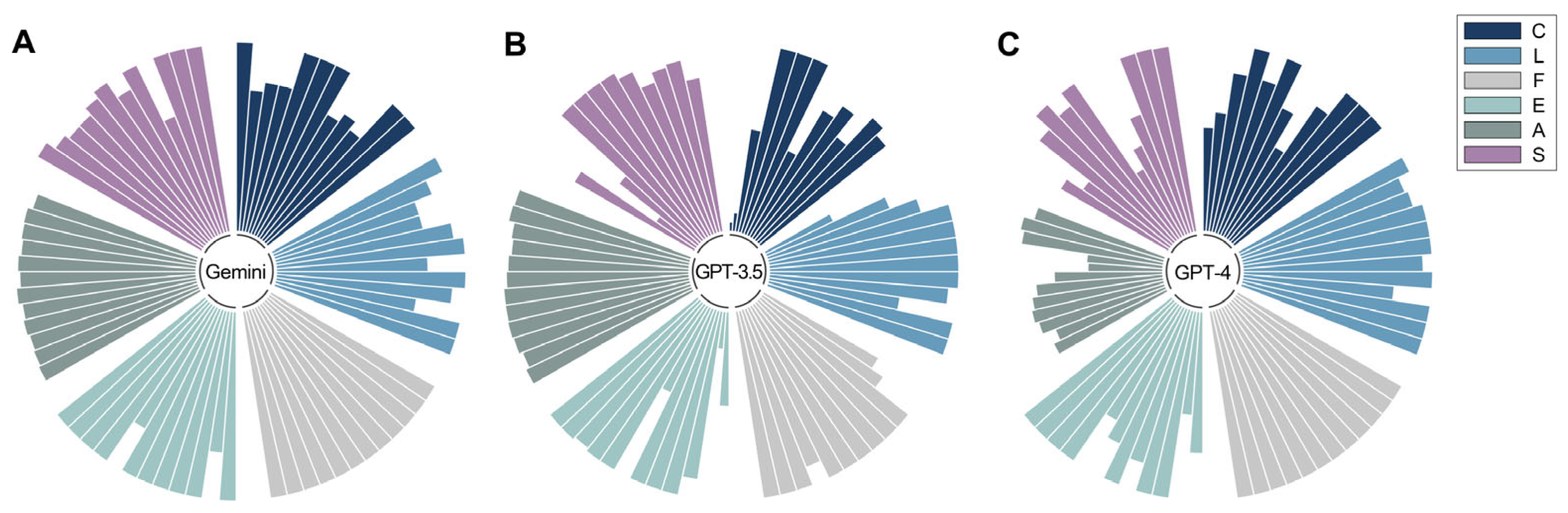

3.3. Comparison of Sentiment Analysis Performance Between Google-Gemini and ChatGPT

4. Discussion

4.1. Conclusions

4.2. Implications for Transportation Research and Practical Applications

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Question Number | Questionnaire | Interview |
|---|---|---|
| Q1 | Do you find yourself easily distracted or disturbed by your surroundings? | Are you easily distracted or influenced by your surroundings while driving? |
| Q2 | What is your biggest concern about driving in the tunnel? | Do you feel uneasy or anxious while driving in the tunnel? If so, what do you think causes these feelings? |
| Q3 | What worries you the most during tunnel driving? | What factors affect your driving while in the tunnel? How do they influence your driving? |
| Q4 | How do you believe these delineators or road studs affect your driving process? Please assess the degree of assistance provided by the following three scenarios while driving in the tunnel. | How do the delineators and road studs affect your driving in the tunnel? How does your attention differ as a result? |
| Q5 | Which section of the tunnel (alignment) do you think would benefit the most from the placement of delineators or road studs? | In the tunnel, there are three types of sections: straight sections, left-turn sections, and right-turn sections. In different sections, do you prefer driving in the left lane or the right lane? |
| Q6 | What design do you believe should be adopted for delineators or road studs in tunnel driving? | What suggestions or ideas do you have that could help drivers better navigate through tunnels? |

| Question Number | Input Instructions |
|---|---|
| Q1 | We simulated three different scenarios of drivers driving in the tunnel through the simulation software, namely: (1) Scenario I, no delineators and no road studs; (2) Scenario II, no delineators but with road studs; and (3) Scenario III, with delineators and road studs. We aim to investigate whether respondents are prone to distraction while driving or susceptible to the influence of their surroundings. Please analyze the interview content below and extract key viewpoints and information for this purpose. |
| Q2 | We simulated three different scenarios of drivers driving in the tunnel through the simulation software, namely: (1) Scenario I, no delineators and no road studs; (2) Scenario II, no delineators but with road studs; and (3) Scenario III, with delineators and road studs. We aim to investigate whether respondents experience feelings of unease or anxiety while driving in tunnels and what factors contribute to these sensations. Please analyze the interview content below and distill key viewpoints and information for this purpose. |
| Q3 | We simulated three different scenarios of drivers driving in the tunnel through the simulation software, namely: (1) Scenario I, no delineators and no road studs; (2) Scenario II, no delineators but with road studs; and (3) Scenario III, with delineators and road studs. We intend to investigate the factors that influence a respondent’s driving behavior and perceptions while driving in tunnels, as well as how these factors exert their influence. Please analyze the interview content below and extract key viewpoints and information for this purpose. |
| Q4 | We simulated three different scenarios of drivers driving in the tunnel through the simulation software, namely: (1) Scenario I, no delineators and no road studs; (2) Scenario II, no delineators but with road studs; and (3) Scenario III, with delineators and road studs. We aim to study the effects of tunnel markings and road signs on respondents while driving in tunnels, exploring their impact on attention and differences therein. Please analyze the interview content below and distill key viewpoints and information for this purpose. |
| Q5 | We simulated three different scenarios of drivers driving in the tunnel through the simulation software, namely: (1) Scenario I, no delineators and no road studs; (2) Scenario II, no delineators but with road studs; and (3) Scenario III, with delineators and road studs. We aim to study respondents’ preferences regarding lane selection (left or right lanes) while driving in different sections of tunnels, including straight, left-turn, and right-turn segments. Please analyze the interview content below and distill key viewpoints and information for this purpose. |
| Q6 | We simulated three different scenarios of drivers driving in the tunnel through the simulation software, namely: (1) Scenario I, no delineators and no road studs; (2) Scenario II, no delineators but with road studs; and (3) Scenario III, with delineators and road studs. We aim to study ways to drive more safely and comfortably in tunnels. Below are suggestions or ideas provided by the interviewees. Please analyze the interview content below and distill key viewpoints and information for this purpose. |
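The input instructions in the table share one structure: a fixed description of the three simulated scenarios, a question-specific aim, and a closing request to analyze the interview content. A minimal sketch of how such a prompt could be assembled is given here. The names `SCENARIO_PREAMBLE`, `AIMS`, `CLOSING`, and `build_prompt` are hypothetical, only two aims are included, the closing sentence is simplified to a single wording, and the transcript in the usage line is invented for illustration. How the prompts were actually submitted to each model is not specified here.

```python
# Hypothetical helper for assembling one input instruction per interview question.
# None of these names come from the article; they are illustrative only.

SCENARIO_PREAMBLE = (
    "We simulated three different scenarios of drivers driving in the tunnel "
    "through the simulation software, namely: (1) Scenario I, no delineators "
    "and no road studs; (2) Scenario II, no delineators but with road studs; "
    "and (3) Scenario III, with delineators and road studs."
)

# Question-specific aims copied from the input-instruction table (Q1 and Q2 only).
AIMS = {
    "Q1": (
        "We aim to investigate whether respondents are prone to distraction "
        "while driving or susceptible to the influence of their surroundings."
    ),
    "Q2": (
        "We aim to investigate whether respondents experience feelings of "
        "unease or anxiety while driving in tunnels and what factors "
        "contribute to these sensations."
    ),
}

# Simplification: the table alternates between "extract" and "distill".
CLOSING = (
    "Please analyze the interview content below and extract key viewpoints "
    "and information for this purpose."
)


def build_prompt(question_id: str, transcript: str) -> str:
    """Join preamble, aim, and closing request, then append the transcript."""
    aim = AIMS[question_id]  # raises KeyError for questions not listed above
    return f"{SCENARIO_PREAMBLE} {aim} {CLOSING}\n\n{transcript.strip()}"


if __name__ == "__main__":
    # Invented transcript fragment, used only to show the call.
    print(build_prompt("Q1", "I sometimes look at the wall lights."))
```

Keeping the preamble in one constant makes it harder for the scenario wording to drift between questions, which matters when model outputs for different questions are compared.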

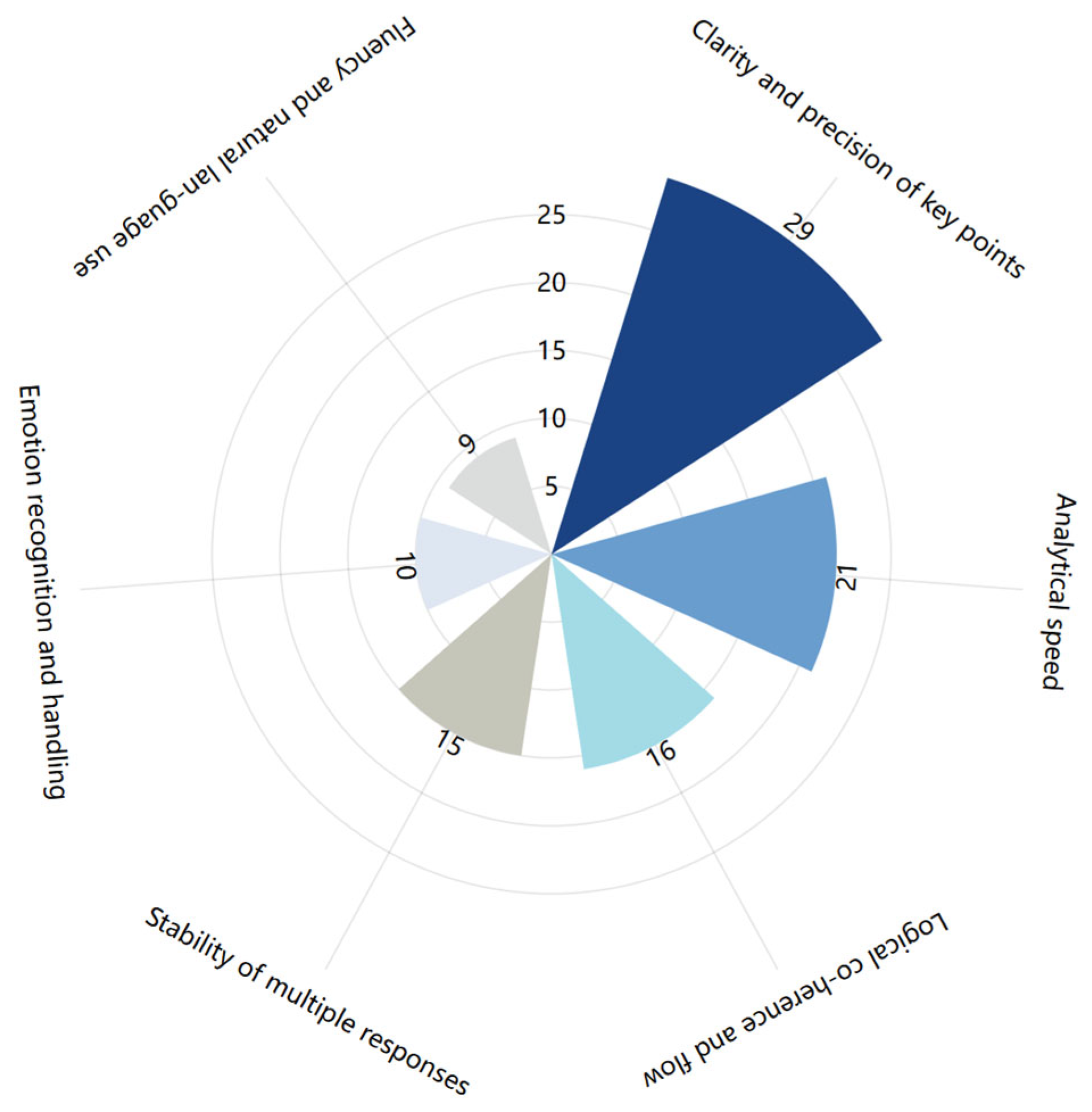

| Indicator | Definition | Basis for Weighting |
|---|---|---|
| Clarity and precision of key points | The depth and accuracy of the LLM’s understanding of interview content, assessed by comparing the match between LLM summaries and the key information and details of the interview content. | This is one of the most critical indicators, as it directly reflects the LLM’s ability to understand the core content of the interview, which is fundamental for any analysis and summarization task. |
| Logical coherence and flow | The LLM’s ability to effectively integrate interview information and form logically coherent content, examining its understanding and expression of the relationships between pieces of information. | The ability to integrate information and maintain logical coherence is crucial for generating meaningful and understandable summaries, reflecting the LLM’s capability in structured information processing. |
| Fluency and natural language use | The fluency and naturalness of the text generated by the LLM, assessing whether there are errors or unnatural expressions in the vocabulary, grammar, and sentence structure of the text. | Language fluency and naturalness are essential for understanding and communication, but slightly less critical than the accuracy and logical coherence of content. |
| Emotion recognition and handling | The LLM’s ability to understand and express emotional attitudes in interviews, analyzing whether the tendency of choice for certain closed-ended questions is consistent with the respondents’ original expressions. | Emotion recognition is important for understanding implicit meanings and viewpoints in interviews, but is not as critical as precise key-point extraction and logical coherence in technical or professional interviews. |
| Analytical speed | The time taken by the LLM to complete the analysis, evaluating its efficiency in analyzing large amounts of data. | Analysis speed is relatively important in scenarios involving the processing of large volumes of data. |
| Stability of multiple responses | The fluctuation of the LLM’s analysis level during repeated analyses, evaluating the stability of its performance. | Stability is more important when the same information must be processed repeatedly or when large amounts of similar information are processed. |

| Model | Clarity and Precision of Key Points | Logical Coherence and Flow | Fluency and Natural Language Use | Emotion Recognition and Handling | Analytical Speed | Stability of Multiple Responses |
|---|---|---|---|---|---|---|
| GPT-3.5 | 0.3425 | 0.0977 | 0.1365 | 0.1189 | 0.1575 | 0.1469 |
| GPT-4 | 0.3349 | 0.1774 | 0.0080 | 0.0348 | 0.3064 | 0.1385 |

| Model | Clarity and Precision of Key Points | Logical Coherence and Flow | Fluency and Natural Language Use | Emotion Recognition and Handling | Analytical Speed | Stability of Multiple Responses |
|---|---|---|---|---|---|---|
| GPT-3.5 | 0.2046 | 0.1664 | 0.1695 | 0.1345 | 0.1739 | 0.1511 |
| GPT-4 | 0.2717 | 0.2230 | 0.0430 | 0.1019 | 0.1905 | 0.1699 |

| Method | Clarity and Precision of Key Points | Logical Coherence and Flow | Fluency and Natural Language Use | Emotion Recognition and Handling | Analytical Speed | Stability of Multiple Responses |
|---|---|---|---|---|---|---|
| Entropy weight method | 0.3387 | 0.1376 | 0.0723 | 0.0769 | 0.2381 | 0.1427 |
| CRITIC method | 0.2381 | 0.1974 | 0.1063 | 0.1182 | 0.1822 | 0.1605 |
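The table names the entropy weight method and the CRITIC method without spelling out the computation. The sketch below shows the commonly used textbook forms of both, in plain Python. The function names and the small score matrix are invented for illustration, and the normalization choices (column shares for entropy, min-max scaling for CRITIC) are assumptions rather than a description of the procedure used to produce the reported weights.

```python
import math


def entropy_weights(matrix):
    """Entropy weight method: indicators whose scores vary more get more weight.

    matrix: list of rows (samples), each a list of non-negative indicator scores.
    Assumes at least two rows and at least one non-uniform column.
    """
    m, n = len(matrix), len(matrix[0])
    k = 1.0 / math.log(m)  # scales entropy into [0, 1]
    divergences = []
    for j in range(n):
        col = [row[j] for row in matrix]
        total = sum(col)
        # Shares p_ij = x_ij / sum_i x_ij; zero shares contribute nothing.
        entropy = -k * sum((x / total) * math.log(x / total) for x in col if x > 0)
        divergences.append(1.0 - entropy)
    s = sum(divergences)
    return [d / s for d in divergences]


def _mean(v):
    return sum(v) / len(v)


def _pearson(a, b):
    ma, mb = _mean(a), _mean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    var_a = sum((x - ma) ** 2 for x in a)
    var_b = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def critic_weights(matrix):
    """CRITIC: weight is proportional to contrast (std) times conflict (1 - r).

    Assumes every column has at least two distinct values.
    """
    n = len(matrix[0])
    cols = [[row[j] for row in matrix] for j in range(n)]
    # Min-max normalize each indicator to [0, 1].
    norm = [[(x - min(c)) / (max(c) - min(c)) for x in c] for c in cols]
    info = []
    for j in range(n):
        mu = _mean(norm[j])
        std = math.sqrt(sum((x - mu) ** 2 for x in norm[j]) / (len(norm[j]) - 1))
        conflict = sum(1.0 - _pearson(norm[j], norm[i]) for i in range(n))
        info.append(std * conflict)
    total = sum(info)
    return [c / total for c in info]


# Invented scores: 4 samples x 3 indicators, for illustration only.
scores = [[4, 3, 5], [5, 3, 4], [3, 4, 4], [4, 5, 2]]
w_entropy = entropy_weights(scores)
w_critic = critic_weights(scores)
```

Both functions return weights that sum to 1. The entropy method looks at each indicator in isolation, while CRITIC also discounts indicators that are strongly correlated with the others, which is one reason the two rows of the table can differ.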

| Index | Clarity and Precision of Key Points | Logical Coherence and Flow | Fluency and Natural Language Use | Emotion Recognition and Handling | Analytical Speed | Stability of Multiple Responses |
|---|---|---|---|---|---|---|
| Weight | 0.2884 | 0.1662 | 0.0893 | 0.0975 | 0.2070 | 0.1516 |
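The tables do not state how the final weight row is obtained. One reading that is consistent with the reported numbers is an equal-weight average: average the per-model weights within each method, then average the two methods. The sketch below checks this reading. It assumes that the first per-model table holds the entropy weights and the second holds the CRITIC weights, which is an inference from the values and not something stated in the tables. The variable names are hypothetical.

```python
# Per-model weights copied from the two per-model tables.
# ASSUMPTION: first table = entropy weight method, second = CRITIC method.
# Column order: clarity, coherence, fluency, emotion, speed, stability.
entropy = {
    "GPT-3.5": [0.3425, 0.0977, 0.1365, 0.1189, 0.1575, 0.1469],
    "GPT-4": [0.3349, 0.1774, 0.0080, 0.0348, 0.3064, 0.1385],
}
critic = {
    "GPT-3.5": [0.2046, 0.1664, 0.1695, 0.1345, 0.1739, 0.1511],
    "GPT-4": [0.2717, 0.2230, 0.0430, 0.1019, 0.1905, 0.1699],
}

# Final weight row as reported.
reported = [0.2884, 0.1662, 0.0893, 0.0975, 0.2070, 0.1516]


def column_means(rows):
    """Average a collection of equal-length rows column by column."""
    rows = list(rows)
    return [sum(col) / len(col) for col in zip(*rows)]


entropy_avg = column_means(entropy.values())  # average over models
critic_avg = column_means(critic.values())
combined = column_means([entropy_avg, critic_avg])  # average over methods

# Largest absolute difference between the recomputed and reported weights.
max_gap = max(abs(c - r) for c, r in zip(combined, reported))

if __name__ == "__main__":
    print([round(w, 4) for w in combined], max_gap)
```

Under these assumptions the recomputed weights agree with the reported row to within rounding at the fourth decimal place.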

| | C | L | F | E | A | S |
|---|---|---|---|---|---|---|
| GPT-3.5 | | | | | | |
| C | - | −0.957 (p < 0.05) | −1.168 (p < 0.05) | −0.999 (p < 0.05) | 1.031 (p < 0.05) | −0.407 (p < 0.05) |
| L | −0.957 (p < 0.05) | - | 0.211 (p < 0.05) | 0.042 (p = 0.476) | 0.075 (p = 0.208) | 0.549 (p < 0.05) |
| F | −1.168 (p < 0.05) | 0.211 (p < 0.05) | - | −0.169 (p < 0.05) | −0.136 (p < 0.05) | 0.760 (p < 0.05) |
| E | −0.999 (p < 0.05) | 0.042 (p = 0.476) | −0.169 (p < 0.05) | - | 0.032 (p = 0.584) | 0.592 (p < 0.05) |
| A | 1.031 (p < 0.05) | 0.075 (p = 0.208) | −0.136 (p < 0.05) | 0.032 (p = 0.584) | - | 0.624 (p < 0.05) |
| S | −0.407 (p < 0.05) | 0.549 (p < 0.05) | 0.760 (p < 0.05) | 0.592 (p < 0.05) | 0.624 (p < 0.05) | - |
| GPT-4 | | | | | | |
| C | - | −0.835 (p < 0.05) | −1.181 (p < 0.05) | −0.965 (p < 0.05) | −3.373 (p < 0.05) | −0.472 (p < 0.05) |
| L | −0.835 (p < 0.05) | - | 0.346 (p < 0.05) | 0.130 (p < 0.05) | −4.209 (p < 0.05) | 0.363 (p < 0.05) |
| F | −1.181 (p < 0.05) | 0.346 (p < 0.05) | - | −0.216 (p < 0.05) | −4.555 (p < 0.05) | 0.710 (p < 0.05) |
| E | −0.965 (p < 0.05) | 0.130 (p < 0.05) | −0.216 (p < 0.05) | - | −4.339 (p < 0.05) | 0.494 (p < 0.05) |
| A | −3.373 (p < 0.05) | −4.209 (p < 0.05) | −4.555 (p < 0.05) | −4.339 (p < 0.05) | - | −3.845 (p < 0.05) |
| S | −0.472 (p < 0.05) | 0.363 (p < 0.05) | 0.710 (p < 0.05) | 0.494 (p < 0.05) | −3.845 (p < 0.05) | - |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sun, R.; Hu, X.; Shao, Y.; Luo, Z.; Liu, B.; Cheng, Y. Using Large Language Models to Analyze Interviews for Driver Psychological Assessment: A Performance Comparison of ChatGPT and Google-Gemini. Symmetry 2025, 17, 1713. https://doi.org/10.3390/sym17101713
