Abstract

Insider threats remain a persistent challenge in cybersecurity, as malicious or negligent insiders exploit legitimate access to compromise systems and data. This study presents a bibliometric review of 325 peer-reviewed publications from 2015 to 2025 to examine how machine learning (ML) and deep learning (DL) techniques for insider threat detection have evolved. The analysis investigates temporal publication trends, influential authors, international collaboration networks, thematic shifts, and algorithmic preferences. Results show a steady rise in research output and a transition from traditional ML models, such as decision trees and random forests, toward advanced DL methods, including long short-term memory (LSTM) networks, autoencoders, and hybrid ML–DL frameworks. Co-authorship mapping highlights China, India, and the United States as leading contributors, while keyword analysis underscores the increasing focus on behavior-based and eXplainable AI models. Symmetry emerges as a central theme, reflected in balancing detection accuracy with computational efficiency, and minimizing false positives while avoiding false negatives. The study recommends adaptive hybrid architectures, particularly Bidirectional LSTM–Variational Auto-Encoder (BiLSTM-VAE) models with eXplainable AI, as promising solutions that restore symmetry between detection accuracy and transparency, strengthening both technical performance and organizational trust.

1. Introduction

Insider threats represent a critical challenge in cybersecurity, arising from individuals with legitimate system access who misuse their privileges to compromise confidentiality, integrity, or availability [1,2]. These threats manifest in various forms, including malicious insiders who deliberately exfiltrate or sabotage organizational assets, negligent insiders whose carelessness exposes vulnerabilities, and compromised insiders coerced or manipulated by external actors [3,4]. Motivations range from financial gain and revenge to coercion and accidental behavior [1], while impacts are often severe, including substantial financial losses, reputational damage, operational disruption, and regulatory non-compliance [5]. Because they span technical, behavioral, and organizational dimensions, insider threats are uniquely difficult to detect and mitigate compared to external threats. Numerous reports and articles confirm their growing prevalence and impact. For instance, ref. [6] found that approximately 20% of all data breaches involved an insider actor, while [7] showed that insider threats caused 57% of data breaches in healthcare during 2023. Additionally, the Ponemon Institute’s 2023 Cost of Insider Risk Report [2] estimated the global average annual cost of insider incidents at US$16.2 million per organization, a 40% increase over the previous four years. Insider attacks are rare events hidden within massive volumes of benign user activity, creating a severe class imbalance that complicates the training of detection models [8,9]. Comprehensive labeled datasets are also scarce: privacy concerns and the need to preserve employee trust limit data collection, since overly intrusive monitoring can harm organizational culture. As a result, researchers often rely on anomaly detection methods that do not require labeled attacks [10].
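The following short Python sketch makes the imbalance problem concrete. Its numbers are invented and are not drawn from any dataset cited here. It shows that a "detector" that never raises an alert still reaches very high accuracy while missing every insider event. This is why recall-oriented metrics matter in this setting.

```python
# Illustrative only: a hypothetical log of 10,000 user-days in which
# 20 are labelled malicious (0.2%), mimicking insider-threat class imbalance.
labels = [1] * 20 + [0] * 9_980

# A trivial "detector" that never raises an alert.
predictions = [0] * len(labels)

tp = sum(1 for y, p in zip(labels, predictions) if y == 1 and p == 1)
fn = sum(1 for y, p in zip(labels, predictions) if y == 1 and p == 0)
correct = sum(1 for y, p in zip(labels, predictions) if y == p)

accuracy = correct / len(labels)  # share of all user-days classified correctly
recall = tp / (tp + fn)           # share of malicious user-days actually detected

print(f"accuracy={accuracy:.3f}, recall={recall:.3f}")  # accuracy=0.998, recall=0.000
```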

Insider threat detection is fundamentally linked to the concept of symmetry, in which adversarial actions and defensive mechanisms exist in a delicate balance. Researchers have sought to preserve this equilibrium by developing approaches that identify anomalies disrupting the expected patterns of organizational behavior. The field has progressed from static rule-based systems to more adaptive machine learning (ML) and deep learning (DL) techniques, which can learn complex, non-linear patterns in user activity data to improve detection accuracy [11,12]. For example, support vector machines (SVMs) and random forests have classified unusual behaviors in system logs more accurately than traditional statistical methods. Recurrent neural networks such as long short-term memory (LSTM) networks have been employed to capture sequential dependencies in user actions, enabling the detection of subtle behavioral changes that may signal malicious intent [12,13]. Despite these advances, the field still lacks a comprehensive understanding of how ML and DL have developed within insider threat research, which calls for a systematic analysis of their evolution and use. This study aims to fill this gap by answering the following research questions:

RQ1: What are the key temporal trends in scholarly output on ML and DL-based insider threat detection over the past decade?

RQ2: Who are the most influential authors in insider threat detection research, and how do their co-citation relationships reveal the intellectual structure of the field?

RQ3: What are the prevailing semantic themes and emerging research trajectories in insider threat detection, and how have these evolved in response to technological advancements and security challenges?

RQ4: To what extent have ML and DL models been integrated into insider threat detection and prevention frameworks?

Addressing these questions requires a thorough analysis of publications on insider threat detection, not only to advance academic understanding but also to inform practical implementation of defense mechanisms. The goal of this work is to systematically map the application of machine learning and deep learning techniques for insider threat detection, thereby providing a consolidated overview of methodological trends, dataset usage, evaluation practices, and explainability efforts. By synthesizing these patterns, the paper contributes a comprehensive evidence base that identifies persistent challenges—such as overreliance on synthetic data, limited integration of eXplainable AI, and inconsistent benchmarking—and highlights promising directions for developing more accurate, adaptive, and transparent detection models.

The paper is organized as follows: Section 2 reviews related work on insider threat detection, and Section 3 describes the methodology. Section 4 presents the bibliometric results through a series of visualizations and metrics. Section 5 discusses the findings and their implications, Section 6 identifies research gaps and proposes future directions, and Section 7 concludes the paper.

2. Related Work

Insider threat detection research has evolved substantially over the past decade, moving from static, rule-based detection systems to analytical, data-driven ones. Early efforts, such as Denning’s foundational intrusion detection framework [14], relied on simple empirical rules and behavior profiling to flag anomalous user activity [15]. These systems were effective at detecting anomalies in predefined events, such as unusual login attempts or unauthorized file transfers. However, they proved limited in detecting sophisticated or latent insider attacks. To address these limitations, researchers turned to statistical anomaly detection and multivariate profiling techniques. These techniques analyze deviations across multiple behavioral dimensions rather than focusing on single-event indicators [16]. They, in turn, laid the groundwork for ML-based approaches, which offer greater adaptability and can learn patterns of insider behavior automatically from data. Seminal ML contributions, such as Support Vector Machines and Random Forests, established methodological baselines that many insider threat studies continue to build upon.
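Multivariate profiling scores an observation by its joint deviation from a user's baseline rather than by any single indicator. The following minimal Python sketch illustrates one simple way to do this under loudly stated assumptions. It uses a squared Mahalanobis distance with a *diagonal* covariance matrix, which ignores correlations between features. The feature names and values are hypothetical.

```python
import math

def fit_profile(history):
    """Per-feature mean and sample standard deviation of a user's past activity."""
    n, dims = len(history), len(history[0])
    means = [sum(row[d] for row in history) / n for d in range(dims)]
    stds = []
    for d in range(dims):
        var = sum((row[d] - means[d]) ** 2 for row in history) / (n - 1)
        stds.append(math.sqrt(var) or 1.0)  # guard against constant features
    return means, stds

def deviation_score(profile, observation):
    """Squared Mahalanobis distance, assuming a diagonal covariance matrix."""
    means, stds = profile
    return sum(((x - m) / s) ** 2 for x, m, s in zip(observation, means, stds))

# Hypothetical daily features: [logons, files copied to USB, after-hours e-mails]
history = [[8, 0, 2], [9, 1, 1], [7, 0, 3], [8, 1, 2], [9, 0, 2]]
profile = fit_profile(history)

typical_day = [8, 1, 2]
unusual_day = [12, 6, 7]  # rises on several dimensions at once
print(deviation_score(profile, typical_day), deviation_score(profile, unusual_day))
```

A full-covariance version would additionally capture features that usually move together. The diagonal form is kept here only for brevity.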

Supervised ML approaches were among the first ML-based techniques to gain prominence in insider threat detection. They leverage labeled datasets to train models that distinguish between normal and malicious insider behavior. Commonly used supervised algorithms include decision trees, support vector machines (SVMs), Bayesian classifiers, and random forests [17]. For example, decision-tree models have been trained on activity logs to capture access violations or policy breaches [18]. Random forests have gained popularity in several studies because their ensemble nature improves prediction accuracy and mitigates the overfitting that affects individual supervised models in insider threat detection [19]. These supervised models demonstrated clear advantages over manual, rule-based detection; however, their dependence on labeled data is a significant drawback. Data scarcity is a central challenge for insider threat detection research. The available datasets are also highly imbalanced and often lack comprehensive ground-truth labels, which limits the generalizability of supervised approaches [17].
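The core step a decision tree performs on labeled activity logs is selecting the threshold that best separates the classes. The following Python sketch uses Gini impurity as the split criterion, one common choice. Random forests repeat this step on bootstrapped samples with random feature subsets. The feature and labels are hypothetical, and this is a single split rather than a full tree learner.

```python
def gini(labels):
    """Gini impurity of a list of 0/1 labels (1 = malicious)."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 1.0 - p ** 2 - (1 - p) ** 2

def best_split(values, labels):
    """Return (threshold, weighted impurity) of the best 'value <= t' split."""
    best = (None, float("inf"))
    n = len(labels)
    for t in sorted(set(values)):
        left = [y for v, y in zip(values, labels) if v <= t]
        right = [y for v, y in zip(values, labels) if v > t]
        score = (len(left) * gini(left) + len(right) * gini(right)) / n
        if score < best[1]:
            best = (t, score)
    return best

# Hypothetical labelled data: files accessed outside one's department per day.
files_outside_dept = [0, 1, 0, 2, 1, 0, 14, 18, 1, 22]
label              = [0, 0, 0, 0, 0, 0,  1,  1, 0,  1]
threshold, impurity = best_split(files_outside_dept, label)
print(threshold, impurity)  # 2 0.0
```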

To address the lack of comprehensively labeled datasets, the insider threat detection literature has increasingly explored unsupervised and semi-supervised ML methods. These techniques learn profiles of regular activity and compare user behavior against the learned profiles, flagging deviations as anomalous patterns. Clustering algorithms, such as K-means and DBSCAN, have been widely used to detect such deviations [20]. Similarly, One-Class SVM and Isolation Forest (iForest) methods have demonstrated improved performance in isolating outliers in large-scale logs [21]. Although these models offer greater flexibility when labeled datasets are unavailable, they suffer from high false-positive rates and sensitivity to noisy data [22].
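Isolation Forest rests on the idea that anomalies are separated from other points by fewer random axis-aligned splits. The following Python sketch is a simplified, standard-library version of that idea. It builds random trees on subsamples, averages the path length to isolate a point, and normalizes the result by the expected path length c(n). The sample size, tree count, and synthetic data are illustrative assumptions. Practical work would typically rely on a maintained implementation such as scikit-learn's `IsolationForest`.

```python
import math
import random

EULER_GAMMA = 0.5772156649

def c(n):
    """Average path length of an unsuccessful binary-search-tree search over n points."""
    if n <= 1:
        return 0.0
    if n == 2:
        return 1.0
    return 2.0 * (math.log(n - 1) + EULER_GAMMA) - 2.0 * (n - 1) / n

def build_tree(points, depth, max_depth, rng):
    """Recursively partition points with random feature/threshold splits."""
    if depth >= max_depth or len(points) <= 1:
        return ("leaf", len(points))
    dim = rng.randrange(len(points[0]))
    lo, hi = min(p[dim] for p in points), max(p[dim] for p in points)
    if lo == hi:
        return ("leaf", len(points))
    split = rng.uniform(lo, hi)
    left = [p for p in points if p[dim] < split]
    right = [p for p in points if p[dim] >= split]
    return ("node", dim, split,
            build_tree(left, depth + 1, max_depth, rng),
            build_tree(right, depth + 1, max_depth, rng))

def path_length(x, node, depth=0):
    """Depth at which x lands, plus c(size) to account for unsplit leaves."""
    if node[0] == "leaf":
        return depth + c(node[1])
    _, dim, split, left, right = node
    return path_length(x, left if x[dim] < split else right, depth + 1)

def isolation_forest(data, n_trees=100, sample_size=128, seed=0):
    """Return a scoring function; scores closer to 1 indicate likelier anomalies."""
    rng = random.Random(seed)
    psi = min(sample_size, len(data))
    max_depth = math.ceil(math.log2(psi))
    trees = [build_tree(rng.sample(data, psi), 0, max_depth, rng) for _ in range(n_trees)]

    def score(x):
        mean_path = sum(path_length(x, t) for t in trees) / len(trees)
        return 2.0 ** (-mean_path / c(psi))
    return score

# Hypothetical benign activity clustered around (1, 1).
rng = random.Random(42)
benign = [(rng.gauss(1.0, 0.1), rng.gauss(1.0, 0.1)) for _ in range(256)]
score = isolation_forest(benign)
print(score((1.0, 1.0)), score((5.0, 5.0)))
```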

Advances in data availability and computational power have since enabled the adoption of DL methods, which can model complex, high-dimensional user behavior patterns. DL algorithms such as Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) networks have proven effective at modeling sequential dependencies in user activity logs [23]. Among DL models, the introduction of LSTM by Hochreiter and Schmidhuber [24] was seminal. LSTM was later adapted to insider threat contexts such as the DANTE system [25] and to other sequential log analysis tasks. DANTE [25] uses LSTM architectures to analyze temporal patterns of user behavior and identify anomalous behaviors that indicate insider threats. In scenarios where user actions occur in sequences, such as file access logs, network traffic, or system commands, LSTM models have outperformed traditional ML models [26]. This has made LSTM and its variants central to modern insider threat detection strategies.
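The following Python sketch shows how an LSTM carries information across a sequence of user actions. It implements one forward step of a standard LSTM cell (input, forget, and output gates plus a candidate update, without peepholes) and runs it over a short action sequence. The one-hot action vocabulary and the random, untrained weights are assumptions for illustration only. The sketch is not DANTE's architecture. In a detector, the hidden state would typically feed a trained output layer, for example one predicting the next action, whose errors signal anomalies.

```python
import math
import random

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def lstm_step(x, h_prev, c_prev, params):
    """One LSTM time step; params maps each gate name to (weights, bias)."""
    z = list(x) + list(h_prev)  # concatenate current input and previous hidden state
    pre = {name: [a + b for a, b in zip(matvec(W, z), bias)]
           for name, (W, bias) in params.items()}
    i = [sigmoid(v) for v in pre["i"]]    # input gate
    f = [sigmoid(v) for v in pre["f"]]    # forget gate
    o = [sigmoid(v) for v in pre["o"]]    # output gate
    g = [math.tanh(v) for v in pre["g"]]  # candidate cell update
    c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c_prev, i, g)]
    h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
    return h, c

def init_params(input_dim, hidden_dim, seed=0):
    """Random (untrained) weights and zero biases for each gate."""
    rng = random.Random(seed)
    def gate():
        W = [[rng.uniform(-0.5, 0.5) for _ in range(input_dim + hidden_dim)]
             for _ in range(hidden_dim)]
        return W, [0.0] * hidden_dim
    return {name: gate() for name in ("i", "f", "o", "g")}

# Hypothetical one-hot encoded user actions.
ACTIONS = {"logon": [1, 0, 0], "file_open": [0, 1, 0], "usb_insert": [0, 0, 1]}
params = init_params(input_dim=3, hidden_dim=4)
h, c = [0.0] * 4, [0.0] * 4
for action in ["logon", "file_open", "file_open", "usb_insert"]:
    h, c = lstm_step(ACTIONS[action], h, c, params)
print([round(v, 3) for v in h])  # hidden state summarizing the whole sequence
```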

Autoencoders, introduced by Rumelhart et al. [27] and later extended into Variational Autoencoders (VAEs) by Kingma & Welling [28], represent another set of seminal architectures. These models learn latent representations of user behavior and detect anomalies based on reconstruction errors, making them effective unsupervised tools for insider threat detection. Subsequent studies [29,30] demonstrate their potential for modeling login and access patterns and identifying outliers as potential threats. Because they can be trained without labeled data, these DL architectures help mitigate the label scarcity and class imbalance that constrain supervised ML techniques [31].
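Reconstruction-error scoring can be illustrated with the smallest possible autoencoder. The following Python sketch trains a plain linear 2 → 1 → 2 autoencoder with stochastic gradient descent on hypothetical benign data, then compares reconstruction errors. It is deliberately not a VAE and has no nonlinearity. Real systems would use a DL framework with deeper, nonlinear encoders. The features and hyperparameters are illustrative assumptions.

```python
import random

def train_linear_autoencoder(data, epochs=200, lr=0.01, seed=0):
    """Train a 2 -> 1 -> 2 linear autoencoder (no biases) with plain SGD."""
    rng = random.Random(seed)
    enc = [rng.uniform(0.1, 0.5), rng.uniform(0.1, 0.5)]  # encoder weights
    dec = [rng.uniform(0.1, 0.5), rng.uniform(0.1, 0.5)]  # decoder weights
    for _ in range(epochs):
        for x in data:
            z = enc[0] * x[0] + enc[1] * x[1]           # 1-D latent code
            r = [dec[0] * z - x[0], dec[1] * z - x[1]]  # reconstruction residual
            dz = 2.0 * (r[0] * dec[0] + r[1] * dec[1])  # dLoss/dz (before updating dec)
            for j in range(2):
                dec[j] -= lr * 2.0 * r[j] * z
            for i in range(2):
                enc[i] -= lr * dz * x[i]
    return enc, dec

def reconstruction_error(x, enc, dec):
    z = enc[0] * x[0] + enc[1] * x[1]
    return sum((dec[j] * z - x[j]) ** 2 for j in range(2))

# Hypothetical benign behaviour: two activity features that rise and fall together.
rng = random.Random(1)
benign = []
for _ in range(200):
    t = rng.uniform(-1.0, 1.0)
    benign.append((t + rng.gauss(0, 0.05), t + rng.gauss(0, 0.05)))

enc, dec = train_linear_autoencoder(benign)
normal_err = reconstruction_error((0.5, 0.5), enc, dec)    # follows the learned pattern
anomaly_err = reconstruction_error((1.0, -1.0), enc, dec)  # breaks the correlation
print(normal_err, anomaly_err)
```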

Graph Neural Networks (GNNs) have also been explored for detecting anomalous user behavior, as they can model relationships among entities such as users, devices, and resources in complex IT environments. When insider activities are represented as graph structures, GNNs capture both behavioral and contextual information. This allows them to detect multi-step insider activities that are difficult to identify with other methods [9]. Studies that exploit relational dependencies for GNN-based anomaly detection, such as [32,33,34], have shown that GNNs can outperform sequence-only models. Other works have proposed hybrid architectures that integrate GNN embeddings with classifiers such as Random Forests or XGBoost to enhance detection performance [35,36].
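The way a GNN mixes behavioral and contextual information can be seen in a single message-passing layer. The following Python sketch implements a GCN-style mean aggregation over a small user–device graph: each node averages its own features with its neighbors' features, then applies a linear map and a ReLU. The graph, the features, and the identity weight matrix are hypothetical. A trained GNN would learn the weights and stack several layers.

```python
# Hypothetical bipartite graph: users connected to the devices they log on to.
edges = [("alice", "pc1"), ("alice", "pc2"), ("bob", "pc2"), ("bob", "server")]

# Hypothetical node features: [after_hours_ratio, resource_sensitivity]
features = {
    "alice": [0.1, 0.0], "bob": [0.7, 0.0],
    "pc1": [0.0, 0.2], "pc2": [0.0, 0.3], "server": [0.0, 0.9],
}

def neighbours(node):
    """Undirected neighbours of a node."""
    return [b for a, b in edges if a == node] + [a for a, b in edges if b == node]

def message_passing_layer(features, weight):
    """h_v' = ReLU(W * mean of h_v and h_u for every neighbour u of v)."""
    out = {}
    for node, h in features.items():
        group = [h] + [features[u] for u in neighbours(node)]
        mean = [sum(vals) / len(group) for vals in zip(*group)]
        transformed = [sum(w * m for w, m in zip(row, mean)) for row in weight]
        out[node] = [max(0.0, v) for v in transformed]  # ReLU
    return out

W = [[1.0, 0.0], [0.0, 1.0]]  # identity weights keep the aggregation easy to read
embeddings = message_passing_layer(features, W)
print(embeddings["bob"])  # now reflects the sensitive server bob touches
```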

An emerging trend is model hybridization, which combines the strengths of multiple algorithms to improve robustness and detection accuracy. A common example is the CNN–LSTM hybrid, typically employed to capture both spatial and temporal features of user behavior [12,37]. Similarly, Random Forest–XGBoost hybrids improve feature importance evaluation and detection accuracy [38]. Other work combines the feature extraction capability of DL algorithms with the interpretability of ML classifiers to reduce false positives while maintaining high detection rates [35,39].

In recent years, emphasis has shifted toward eXplainable AI (XAI) techniques that explain model decisions and build trust in insider threat detection systems. Although DL-based models often outperform classical ML models, they are frequently regarded as “black boxes” because their decisions are opaque and difficult to interpret. Common XAI techniques include SHAP (SHapley Additive exPlanations), LIME (Local Interpretable Model-agnostic Explanations), and attention mechanisms. In this context, XAI is integrated into ML/DL models to provide feature-level explanations for model predictions [40]. The seminal survey by Adadi & Berrada [41] mapped the foundations of the XAI landscape. It influenced subsequent efforts to integrate SHAP, LIME, and attention mechanisms into ML/DL-based insider threat detection. These techniques help improve trust in model decisions and support compliance with regulations such as the General Data Protection Regulation (GDPR). The GDPR requires that individuals subject to certain automated decisions receive meaningful information about the logic involved.
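SHAP attributions approximate Shapley values, which distribute a prediction among the input features. The following Python sketch computes Shapley values *exactly* by enumerating every feature coalition. Absent features are replaced by baseline values, which is one of several possible conventions. Exact enumeration is exponential in the number of features, so it is practical only for tiny models. The sketch does not use the `shap` library's API. The risk model and feature names are hypothetical.

```python
from itertools import combinations
from math import factorial

def shapley_values(model, x, baseline):
    """Exact Shapley values; features outside a coalition take baseline values."""
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            for subset in combinations(others, size):
                s = set(subset)
                weight = factorial(len(s)) * factorial(n - len(s) - 1) / factorial(n)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi

# Hypothetical risk model over [after_hours_logons, usb_copies, emails_to_external]
def risk(z):
    return 0.2 * z[0] + 0.5 * z[1] + 0.1 * z[0] * z[2]

x = [3, 4, 2]         # the user-day being explained
baseline = [0, 0, 0]  # a reference "typical" user-day
phi = shapley_values(risk, x, baseline)
print([round(p, 3) for p in phi])  # [0.9, 2.0, 0.3]
```

The attributions sum to `risk(x) - risk(baseline)` (the efficiency property). The interaction term is split equally between the two features involved.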

Researchers have turned to synthetic data and generative models to address the scarcity of real-world insider threat datasets. To counter class imbalance, techniques such as Generative Adversarial Networks (GANs), the Synthetic Minority Over-sampling Technique (SMOTE), and VAEs are increasingly explored for data augmentation to improve the training of detection models [42]. For instance, rare insider events have been synthesized to create more balanced datasets for supervised learning [43]. This trend is particularly relevant in domains such as finance and healthcare, where confidentiality requirements prevent the release of authentic insider threat logs.
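SMOTE creates new minority-class samples by interpolating between a minority sample and one of its nearest minority neighbors. The following Python sketch reproduces only that core interpolation step. The feature values are hypothetical, and maintained implementations such as `SMOTE` in the imbalanced-learn package add further options.

```python
import math
import random

def smote(minority, n_synthetic, k=2, seed=0):
    """Interpolate between random minority samples and their k nearest minority neighbours."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_synthetic):
        base = rng.choice(minority)
        nearest = sorted((p for p in minority if p is not base),
                         key=lambda p: math.dist(base, p))[:k]
        neighbour = rng.choice(nearest)
        gap = rng.random()  # position along the segment base -> neighbour, in [0, 1)
        synthetic.append(tuple(b + gap * (nb - b) for b, nb in zip(base, neighbour)))
    return synthetic

# Hypothetical malicious user-days: (usb_copies, after_hours_logons)
malicious = [(10.0, 3.0), (12.0, 4.0), (11.0, 5.0)]
new_samples = smote(malicious, n_synthetic=5)
print(new_samples)
```

Because each synthetic point lies on a segment between two real minority samples, the augmented data stays within the region the minority class already occupies.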

3. Methodology

The bibliometric review was conducted in a series of steps to ensure validity, transparency, and rigor. In the initial stage, three reputable academic databases were chosen as data sources: Scopus, Web of Science, and Google Scholar. They were selected for their comprehensive coverage of peer-reviewed publications in computer science and cybersecurity. This review prioritizes peer-reviewed, DOI-indexed literature. However, selected industry reports (e.g., the Verizon Data Breach Investigations Report, the Ponemon Institute’s Cost of Insider Risk report, and IBM’s Cost of a Data Breach report) were retained because they provide real-world insights into the prevalence, cost, and organizational impact of insider threats. Such practitioner-oriented reports complement the academic record by capturing trends and empirical evidence that are often unavailable in scholarly publications. This ensures that the review reflects both theoretical advances and operational realities.

A search strategy was formulated by combining keywords related to insider threat detection with ML and DL techniques, using the Boolean operators “AND” and “OR”. Queries were applied to titles, abstracts, and keywords to maximize the retrieval of relevant literature.

A total of 873 publications (631 from Scopus, 145 from Web of Science, and 97 from Google Scholar) published between 2015 and 2025 were identified on 26 May 2025. The search was then refined to English-language conference papers, journal articles, and book chapters in the Computer Science subject area. This reduced the count to 612 records (443 from Scopus, 113 from Web of Science, and 56 from Google Scholar). The search query used is:

(TITLE-ABS-KEY (“Insider Threat” OR “Insider Attack” OR “Malicious Insider” OR “Insider Behavior” OR “User Behavior Analytics” OR “Employee Threat” OR “Internal Threat” OR “Anomalous Insider Activity”) AND TITLE-ABS-KEY (“Machine Learning” OR “Deep Learning” OR “Neural Networks” OR “Autoencoder” OR “LSTM” OR “Recurrent Neural Network” OR “RNN” OR “Random Forest” OR “Decision Tree” OR “Support Vector Machine” OR “SVM” OR “Ensemble Learning” OR “Anomaly Detection”)) AND PUBYEAR > 2014 AND PUBYEAR < 2026 AND (LIMIT-TO(DOCTYPE, “cp”) OR LIMIT-TO(DOCTYPE, “ar”) OR LIMIT-TO(DOCTYPE, “ch”)) AND (LIMIT-TO(SUBJAREA, “COMP”)) AND (LIMIT-TO(LANGUAGE, “English”))
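To show how the query's Boolean structure operates, the following Python sketch applies the same two OR groups and the year bounds to a single bibliographic record. It approximates the database's phrase matching with case-insensitive substring tests and omits the document-type, subject-area, and language filters. The record fields and the example record are hypothetical.

```python
# Term groups copied from the search query above.
THREAT_TERMS = ["insider threat", "insider attack", "malicious insider", "insider behavior",
                "user behavior analytics", "employee threat", "internal threat",
                "anomalous insider activity"]
METHOD_TERMS = ["machine learning", "deep learning", "neural networks", "autoencoder",
                "lstm", "recurrent neural network", "rnn", "random forest", "decision tree",
                "support vector machine", "svm", "ensemble learning", "anomaly detection"]

def matches_query(record):
    """Approximate the TITLE-ABS-KEY logic with case-insensitive substring tests."""
    text = " ".join([record["title"], record["abstract"], " ".join(record["keywords"])]).lower()
    has_threat = any(term in text for term in THREAT_TERMS)  # first OR group
    has_method = any(term in text for term in METHOD_TERMS)  # second OR group
    in_years = 2014 < record["year"] < 2026                  # PUBYEAR > 2014 AND < 2026
    return has_threat and has_method and in_years

example = {
    "title": "Detecting malicious insiders with LSTM autoencoders",
    "abstract": "We study insider threat detection on activity logs.",
    "keywords": ["insider threat", "deep learning"],
    "year": 2021,
}
print(matches_query(example))  # True
```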

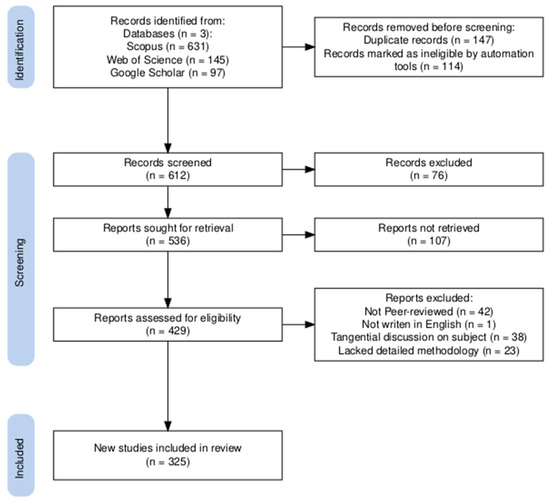

The 612 retrieved records underwent a structured screening and eligibility process, as shown in Figure 1. The inclusion and exclusion criteria were designed to ensure transparency, reproducibility, rigor, and direct relevance to the study objectives. To minimize bias, improve comparability, and strengthen the reliability of the review, publications were excluded if they met any of the following criteria:

Figure 1.

Flow diagram for the identification and selection of publications.

- (i) not peer-reviewed (e.g., editorials, commentaries, workshop notes),

- (ii) not written in English,

- (iii) addressed insider threats only tangentially, without methodological application of ML/DL, or

- (iv) lacked sufficient methodological detail to allow replication or evaluation.

After the screening and eligibility assessment, a total of 325 publications (289 from Scopus, 17 from Web of Science, and 19 from Google Scholar) met the inclusion criteria and were selected for review. These records were exported in CSV format with metadata such as publication ID, title, abstract, authorship, publication year, affiliation, document type, and country of the corresponding author.

Subsequently, VOSviewer, a widely used software tool for constructing and visualizing bibliometric networks, was employed to perform co-authorship analysis, co-citation mapping, keyword co-occurrence analysis, and thematic clustering. To ensure transparency in cluster formation, we report the thresholds applied in VOSviewer 1.6.20:

- a minimum of 15 occurrences for a keyword to be included in the keyword network,
- a minimum of 46 citations for an author to be included in the co-citation analysis, and
- at least four co-authorship links for inclusion in the co-authorship network.

These thresholds were chosen to balance inclusivity with network clarity, avoiding both excessively sparse and overly dense networks that hinder interpretability. To validate the robustness of the clustering outputs, sensitivity analyses were conducted by varying these thresholds (e.g., setting the keyword occurrence threshold to 5, 7, and 12). The resulting clusters showed consistent thematic structures, with only minor changes in peripheral nodes, confirming the stability of the identified clusters.
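The following Python sketch illustrates the occurrence threshold and the co-occurrence counts underlying a keyword network. It uses full counting, in which each paper contributes one link per keyword pair, on invented keyword lists. It is not VOSviewer's code: VOSviewer's clustering step and its fractional-counting option are not reproduced. Re-running it at different thresholds mimics the kind of sensitivity check described above.

```python
from collections import Counter
from itertools import combinations

def cooccurrence_network(papers, min_occurrences):
    """Keep keywords meeting the occurrence threshold and count pairwise co-occurrences."""
    occurrences = Counter(kw for kws in papers for kw in set(kws))
    kept = {kw for kw, n in occurrences.items() if n >= min_occurrences}
    links = Counter()
    for kws in papers:
        present = sorted(set(kws) & kept)
        for a, b in combinations(present, 2):
            links[(a, b)] += 1
    return kept, links

# Hypothetical author-keyword lists, one per paper.
papers = [
    ["insider threat", "deep learning", "lstm"],
    ["insider threat", "machine learning", "random forest"],
    ["insider threat", "deep learning", "autoencoder"],
    ["anomaly detection", "deep learning", "lstm"],
]
for threshold in (2, 3):
    kept, links = cooccurrence_network(papers, threshold)
    print(threshold, sorted(kept), dict(links))
```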

4. Results and Analyses of Bibliometric Insights

This section examines the bibliometric data to identify key trends, patterns, and structures within the dataset. It covers publication growth, influential authors, co-citation networks, and other relevant patterns.

4.1. Evolution of Insider Threat Detection Research (RQ1)

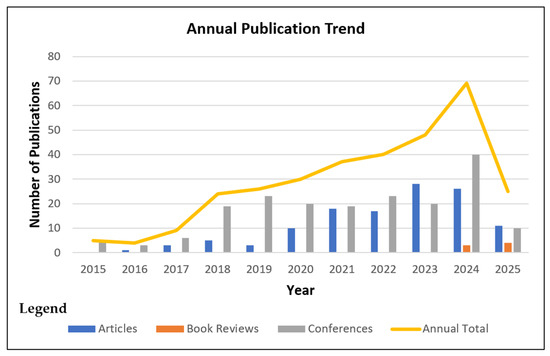

Academic output on the application of ML and DL methods for insider threat detection followed a consistent upward trajectory over the review period, although growth varied in intensity from year to year. In 2015, output consisted of five conference papers (Figure 2), reflecting early exploration of the field. The count decreased slightly to four publications in 2016: three conference papers and the first journal article. This dip was short-lived. Growth resumed in 2017 with nine publications, all of them conference papers, forming a baseline for more structured inquiry into insider threat detection models.

Figure 2.

Annual Publication Trend for Articles, Book Chapters, and Conference Papers.

In 2018, publication volume rose sharply to 24, an increase of roughly 167% over the previous year (from 9 to 24). This increase can be attributed to greater access to structured insider threat datasets and growing confidence in the use of supervised ML algorithms in cybersecurity. Academic participation through conferences also rose, with conference papers accounting for nearly 80% of the publications during that period. Output remained steady in 2019, with 26 publications, as the focus shifted from conceptual models to experimental implementations. This period also marked the first evidence of LSTM-based model proposals, indicating a move toward temporal behavior modeling techniques, with notable publications such as [25,37].

A phase of rapid advancement followed between 2020 and 2023, as shown in Figure 2. Output rose to 30 publications in 2020, comprising 20 conference papers and 10 journal articles. This suggests that some early-stage models had matured into empirically validated experiments suitable for journal publication. By 2021, annual output had reached 37, with journal articles (18) nearly equaling conference papers (19). This momentum extended into 2022 (40 publications) and 2023 (48 publications), with journal contributions peaking at 28 articles in 2023. The trend reflects a shift in dissemination from conference-level experimentation to journal-level validation. The compound annual growth rate (CAGR) from 2015 to 2024 is approximately 33.9%, indicating sustained growth in research output within the scope of this review.

Publication volume peaked in 2024 with 69 entries and a more diversified composition of document types: 40 conference papers, 26 journal articles, and the first three book chapters. This continued growth in conference papers and journal articles suggests that ML- and DL-based insider threat detection is gaining academic recognition and that increasingly diverse ideas are being explored. Only 25 publications are recorded for 2025. Rather than a drop in research interest, this most likely reflects partial-year coverage, since records were retrieved on 26 May 2025. Four book chapters and a balanced mix of journal articles and conference papers appeared within these first months, which further suggests continued scholarly engagement.

An analysis of growth rates across sub-periods reveals that the field expanded rapidly during 2015–2018, with a compound annual growth rate of approximately 68.7%. This rate fell to 21.6% in the 2019–2024 period, reflecting a transition from exploratory surge to methodological consolidation. The concentration of publications in 2023 and 2024 also suggests growing influence, particularly as high-impact studies using LSTM models and hybrid ML–DL architectures gained traction. These findings illustrate both the progressive diffusion of research and the increasing sophistication of algorithm design and evaluation strategies in insider threat detection research.
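The sub-period growth figures in this section are consistent with compound annual growth rates computed from the annual counts reported above. The following short Python sketch recomputes them, together with the 2017 to 2018 percentage change. The counts come from the text; the helper names are our own.

```python
def cagr(start, end, years):
    """Compound annual growth rate between two annual counts `years` apart."""
    return (end / start) ** (1 / years) - 1

def pct_change(old, new):
    """Relative change from one year's count to the next."""
    return (new - old) / old

# Annual publication counts reported in Section 4.1.
counts = {2015: 5, 2017: 9, 2018: 24, 2019: 26, 2024: 69}

print(f"2017->2018 change: {pct_change(counts[2017], counts[2018]):.1%}")  # 166.7%
print(f"CAGR 2015-2024: {cagr(counts[2015], counts[2024], 9):.1%}")        # 33.9%
print(f"CAGR 2015-2018: {cagr(counts[2015], counts[2018], 3):.1%}")        # 68.7%
print(f"CAGR 2019-2024: {cagr(counts[2019], counts[2024], 5):.1%}")        # 21.6%
```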

4.2. Influential Authors Through Co-Citation Network Dynamics (RQ2a)

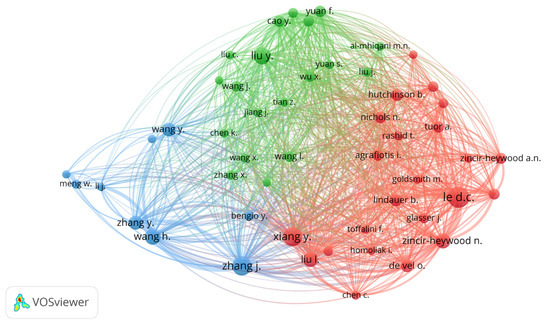

Figure 3 presents the co-citation network of the 50 most frequently co-cited authors in insider threat detection research. The network reveals a complex structure with multiple clusters, indicating a broad, multi-faceted research field. The most frequently co-cited authors fall into three clusters (red, green, and blue) that reflect the intellectual segmentation of the field.

Figure 3.

Co-citation network visualization of the top 50 co-cited authors.

The red cluster, which is notably dense and cohesive, represents foundational contributors to classification-based anomaly detection and hybrid modeling. Authors such as Le D.C., Zincir-Heywood A.N., Lindauer B., and Glasser J. are central figures in this cluster. These researchers have made significant contributions to the empirical foundations of insider threat detection by developing benchmark datasets and establishing evaluation protocols for classifiers. For instance, publications in this group often evaluate ensemble models, decision trees, and support vector machines on standardized datasets such as the CERT Insider Threat Dataset, as evidenced in [44,45,46]. Their position within the network reflects their influence on both classical ML techniques and the integration of interpretability into insider threat modeling.

The green cluster comprises scholars like Liu Y., Cao Y., Yuan F., and Wu X., who are prominent in advancing DL-based frameworks, particularly those emphasizing sequential modeling and behavioral analytics. Their contributions frequently explore the application of LSTM, RNN, and Autoencoder-based techniques to complex insider behavior profiling, as shown in works such as [16,30,45,47]. The strong intra-cluster links and high node density indicate a closely interconnected scholarly discourse centered on leveraging temporal dependencies and latent feature representations in DL models.

The blue cluster features authors like Wang Y., Meng W., Zhang Y., and Wang H., who focus on distributed and cloud-native insider threat solutions. Their research broadens the conventional detection paradigms to encompass federated learning, edge computing, and environments that prioritize privacy considerations. For example, refs. [20,48,49] explore innovative techniques for scaling threat detection in decentralized architectures, reflecting a shift toward real-world deployment in complex infrastructures. Although less densely connected than the red and green clusters, the blue cluster exhibits strong internal cohesion and an emerging thematic niche centered on operational scalability and adversarial resilience.

The network also reveals inter-cluster connections, especially via authors such as Xiang Y., Liu J., and Homoliak I., who occupy intermediary positions between the red and green clusters (Figure 3). Their frequent co-citation with leading authors from both clusters positions them as a bridge between classical ML and newer DL research. These scholars frequently contribute to hybrid model architectures that blend statistical learning with deep generative or sequence-based techniques, as reflected in [35,50]. Their presence at the intersection of clusters indicates methodological pluralism and a growing emphasis on integrating interpretability, adaptability, and scalability within a single detection framework. Together, these clusters form a cohesive yet diversified research ecosystem, with strategic bridges linking core methodologies. This structure highlights the influential contributors and their thematic communities, as well as the integrative trends shaping the next phase of insider threat detection research.

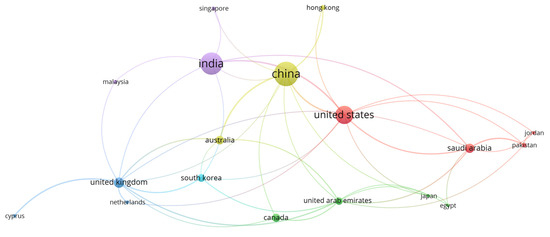

4.3. Country-Level Co-Authorship Trends in Insider Threat Detection (RQ2b)

The international co-authorship landscape in ML and DL-based insider threat detection in cybersecurity shows a highly interconnected network of national contributors, characterized by different levels of publication output and collaboration intensity. The dataset clearly indicates that researchers from China, India, and the United States have been particularly active in advancing work in this area. They demonstrate high centrality, substantial publication output, and strong bilateral as well as multilateral research collaborations, as shown in Figure 4. A detailed summary of the top ten contributing nations, sorted by publication volume and collaboration strength, is provided in Table 1.

Figure 4.

Country-Level Co-authorship Trends.

Table 1.

Top 10 Countries—Co-Authorship Summary.

Table 1 shows that China leads the field with 78 publications, nine of which involve international collaboration. These studies show growing interest in both empirical model evaluation and the use of advanced DL architectures like LSTM, Autoencoders, and Transformer-based models, especially in behavioral profiling and anomaly detection. India is second with 67 publications and seven international collaborations, mainly published through conference proceedings. The high volume of conference outputs from Indian institutions indicates a focus on early-stage experimentation and community involvement. The United States, although ranking third with 38 publications, surpasses China in international collaborations with 11, highlighting its key role as a center for transnational scholarly exchange and fundamental theory development.

The network reveals distinct regional clusters, each characterized by thematic focus and geographic closeness. The Asia-Pacific cluster—comprising China, India, Singapore, Malaysia, and South Korea—is recognized for its frequent bilateral connections and shared interests in DL model evaluation, hybrid setups, and high-performance detection systems. The North American cluster, led by the United States and Canada, shows strong co-authorship links with Australia and the United Kingdom, indicating a cross-regional research focus. Meanwhile, the Gulf Cooperation Council (GCC) countries, especially Saudi Arabia and the United Arab Emirates, are emerging contributors, often working with the United States and China on implementation-focused work and dataset optimization.

Analysis of the collaboration index, defined as the proportion of internationally co-authored publications in a country’s total output, reveals strategic patterns. Canada, with 13 publications and three international collaborations, has a collaboration index of 23.1%. This indicates substantial reliance on global partnerships to boost research visibility and capabilities. In contrast, India’s collaboration index of approximately 10.4% suggests a domestically focused research base, likely driven by institutional capacity and local funding schemes. Saudi Arabia, part of an active cluster, records seven international collaborations out of only 11 publications. Its collaboration index of 63.6% reflects a strongly partnership-driven research profile.
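The following Python sketch recomputes the collaboration index from the international-collaboration and publication counts reported in Section 4.3. The dictionary layout is our own.

```python
def collaboration_index(international, total):
    """Share of a country's publications that involve international co-authors."""
    return international / total

# (international collaborations, total publications) as reported in Section 4.3.
countries = {
    "China": (9, 78), "India": (7, 67), "United States": (11, 38),
    "Canada": (3, 13), "Saudi Arabia": (7, 11),
}

for name, (intl, total) in countries.items():
    print(f"{name}: {collaboration_index(intl, total):.1%}")
# China: 11.5%, India: 10.4%, United States: 28.9%, Canada: 23.1%, Saudi Arabia: 63.6%
```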

The regional specialization patterns further illustrate differences in dissemination priorities. India and China exhibit a high volume of conference-based outputs, indicating the rapid dissemination of novel models, comparative studies, and algorithm benchmarking. Conversely, the United States and the United Kingdom favor journal publications, often focusing on methodological rigor, transparency mechanisms such as SHAP and LIME, and regulatory compliance with cybersecurity standards. This divergence reflects the evolving research ecosystems across regions, shaped by varying academic norms, funding landscapes, and policy priorities.

The co-authorship network reveals a maturing global community centered around a tri-polar axis of China, India, and the United States, supported by regional contributors from the GCC, Asia-Pacific, and Western Europe. The interplay between local innovation and global collaboration is shaping not only the thematic orientation of the field but also its methodological and geopolitical contours.

4.4. Semantic Landscape and Thematic Evolution of Insider Threat Detection Research (RQ3)

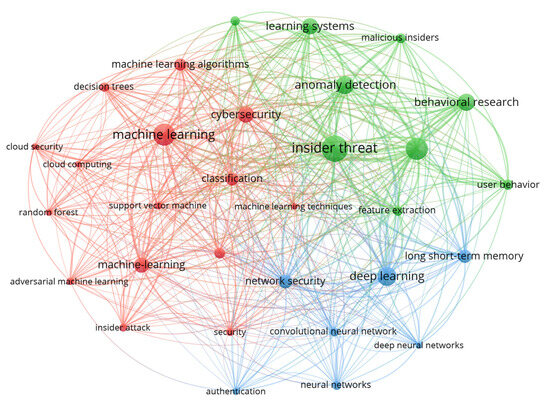

The keyword co-occurrence network derived from bibliographic metadata reveals the intellectual landscape and thematic evolution of research on insider threat detection. Three major thematic clusters are evident within the network, each representing a distinct area of focus. As illustrated in Figure 5, the red cluster emphasizes machine learning-based classification and algorithmic detection, characterized by frequently co-occurring terms such as “machine learning,” “support vector machine,” “decision trees,” “random forest,” and “classification,” indicating the firm foundation of classical supervised learning techniques that dominated early research.

Figure 5.

Keyword co-occurrence network.

The green cluster is centered around terms such as “insider threat,” “anomaly detection,” “user behavior,” and “behavioral research,” indicating a conceptual shift toward behavioral modeling and context-aware analysis of insider actions. In contrast, the blue cluster highlights deep learning methodologies, with keywords such as “deep learning,” “convolutional neural networks,” “neural networks,” and “long short-term memory,” reflecting the growing focus on temporal and sequential modeling of insider activities.
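The clusters above are built from keyword co-occurrence links. VOSviewer supports both full and fractional counting; the sketch below assumes binary full counting, in which each document contributes at most one link per keyword pair, and may therefore differ from the settings used in this study. The keyword lists are hypothetical.

```python
from collections import Counter
from itertools import combinations


def cooccurrence_counts(documents):
    """Count, for each unordered keyword pair, the number of documents listing both.

    Each document is an iterable of keywords; repeats within a document are
    ignored, so a document adds at most 1 to any pair (binary counting).
    """
    pairs = Counter()
    for keywords in documents:
        unique = sorted({k.strip().lower() for k in keywords})
        pairs.update(combinations(unique, 2))  # each pair is an alphabetically ordered tuple
    return pairs


# Hypothetical keyword lists, for illustration only.
docs = [
    ["machine learning", "random forest", "insider threat"],
    ["deep learning", "long short-term memory", "insider threat"],
    ["machine learning", "random forest", "classification"],
]
for (a, b), n in cooccurrence_counts(docs).most_common(3):
    print(f"{a} -- {b}: {n}")
```

The resulting pair counts serve as link strengths; a clustering step, such as the one VOSviewer performs, then groups strongly linked keywords into clusters like the three described above.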

An analysis of keyword frequency and centrality confirms the dominance of terms such as “detection” (193 occurrences), “insider” (161), “threat” (130), and “learning” (106), indicating their persistent centrality to the discourse. Notably, keywords such as “deep learning” and “long short-term memory” have a markedly more recent average publication year, reflecting their later adoption as core methodological tools in publications such as [16,35,51]. Conversely, terms associated with earlier approaches, such as “decision trees” and “support vector machine,” remain present but show declining centrality, suggesting a gradual shift in methodological preferences. The emergence of “autoencoder” and “XAI,” though still infrequent, illustrates the field’s responsiveness to two challenges posed by black-box models: learning latent feature representations and interpreting model decisions.
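The occurrence counts and average publication years discussed above can be derived from bibliographic records in a few lines. The sketch below is a minimal illustration, assuming each record is a (year, keywords) tuple and that a keyword counts at most once per record; the records are hypothetical.

```python
from collections import defaultdict


def keyword_stats(records):
    """Return {keyword: (occurrences, average publication year)}.

    records: iterable of (publication_year, keywords) tuples.
    A keyword is counted at most once per record.
    """
    years = defaultdict(list)
    for year, keywords in records:
        for kw in {k.strip().lower() for k in keywords}:
            years[kw].append(year)
    return {kw: (len(ys), sum(ys) / len(ys)) for kw, ys in years.items()}


# Hypothetical records, for illustration only.
records = [
    (2016, ["support vector machine", "insider threat"]),
    (2018, ["decision trees", "insider threat"]),
    (2022, ["long short-term memory", "deep learning", "insider threat"]),
    (2024, ["deep learning", "autoencoder"]),
]
stats = keyword_stats(records)

# List keywords from oldest to most recent average publication year.
for kw, (n, avg) in sorted(stats.items(), key=lambda kv: kv[1][1]):
    print(f"{kw:25s} n={n}  avg_year={avg:.1f}")
```

A keyword whose average year is high relative to the rest of the network, like “deep learning” in this toy data, is the kind of recently adopted term highlighted in the text.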

Over the past decade, the field has shifted from rule-based and static anomaly detection models toward more nuanced approaches rooted in behavioral profiling and hybrid modeling. Early works relied on predefined rules or signatures and on static feature engineering, often employing decision boundaries learned via support vector machines or tree-based models. Recent works increasingly focus on behavioral dynamics, using sequential data and recurrent neural networks to capture insider activity patterns over time. This shift is reflected in the rise of terms such as “user behavior,” “long short-term memory,” and “feature extraction,” signaling a move toward longitudinal modeling and adaptive learning strategies. Additionally, hybrid frameworks combining ML and DL elements, evident in the overlapping keyword clusters, demonstrate growing methodological complexity and adaptability.

Emerging trends are particularly evident at the network’s periphery. Terms such as “XAI,” “adversarial machine learning,” “cloud security,” and “federated learning,” while still underrepresented in terms of frequency, suggest forward-looking research trajectories. These themes are especially relevant in addressing contemporary concerns such as model explainability, robustness to manipulation, and privacy-preserving detection in distributed environments [25,44,49]. Their co-occurrence with core terms like “deep learning” and “insider threat” indicates that future research will likely prioritize transparent, secure, and distributed approaches to insider threat detection, particularly in compliance-driven and operational settings.

The co-occurrence of overlapping keywords across clusters reflects a trend toward methodological convergence. For example, “feature extraction” connects both behavioral modeling and machine learning-centric clusters, while “neural networks” bridges traditional ML and advanced DL methods. This convergence underscores the growing integration of diverse analytical strategies, suggesting that future insider threat detection systems will likely employ ensemble or composite models that balance transparency, scalability, and behavioral sensitivity. As such, the keyword network not only maps the field’s historical trajectory but also provides a roadmap for its future direction, highlighting the increasing interplay between foundational ML algorithms, deep neural representations, and emerging requirements for trust, transparency, and operational resilience.

4.5. Algorithmic Taxonomy and Hybrid Modeling Patterns in Insider Threat Detection (RQ4)

This section offers a structured analysis of ML and DL techniques used in insider threat detection, focusing on their frequency, evolution, and application trends. It reviews both individual algorithmic approaches and hybrid modeling strategies, emphasizing co-occurrence patterns, key algorithms, and the prevalence of ML–ML, DL–DL, and ML–DL combinations across the literature.

4.5.1. Algorithmic Landscape of ML and DL Techniques in Insider Threat Detection

The methodological trajectory of insider threat detection research shows a clear shift from traditional ML methods to DL frameworks. As Table 2 shows, a wide variety of algorithmic techniques is in use, with 20 key models accounting for most implementations in the literature. These algorithms belong to three main categories: supervised learning algorithms, such as Random Forest, SVM, and Logistic Regression; unsupervised learning algorithms, including Autoencoder, Isolation Forest, K-Means, and DBSCAN; and deep learning algorithms, such as LSTM, CNN, and GRU. Each category reflects a specific detection goal: supervised models classify threats from labeled data, unsupervised models detect anomalies without prior labels, and DL models learn complex behavioral and temporal patterns from raw logs.

Table 2.

Frequency Distribution of Top 20 ML and DL Algorithms Identified in the Literature.

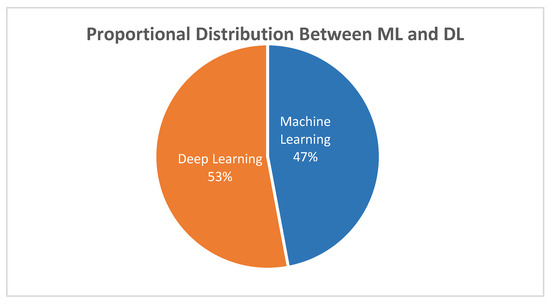

Figure 6 illustrates the proportional distribution between ML and DL techniques: deep learning accounts for 53% of the documented algorithmic usage, slightly surpassing the 47% attributed to traditional ML models. Although the margin is narrow, DL’s overtaking of ML marks a significant methodological shift over the past decade. From 2015 to 2018, insider threat detection efforts mainly relied on ML techniques such as Decision Trees, Random Forest, and Support Vector Machine. These models offered interpretable decision-making frameworks and were well suited to structured datasets. From 2019 onward, however, the adoption of DL techniques has clearly increased, especially LSTM (49 occurrences), Neural Networks (41), and CNN (29), as shown in Table 2, reflecting a broader shift toward data-driven, temporal modeling strategies.

Figure 6.

Proportional Distribution Between ML and DL.

This temporal evolution is driven by the increasing complexity of insider threat behavior, which often develops over time and manifests through subtle changes in user activities. Deep learning models such as LSTM and GRU are designed to capture sequential dependencies, making them effective for modeling long-term behavioral patterns and temporal anomalies. Additionally, Autoencoders and Variational Autoencoders (VAEs), which fall under unsupervised techniques, are increasingly used for their ability to learn compressed latent representations and flag rare deviations from typical user behavior. The growth of these techniques aligns with the greater availability of computational resources and large-scale behavioral datasets such as CERT r6.2, which make it practical to train complex models.
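To make reconstruction-error anomaly detection concrete, the sketch below uses a linear autoencoder fitted in closed form via PCA as a stand-in for the neural autoencoders and VAEs discussed above. It is deliberately simpler than a VAE and is not any cited author’s model; the activity features, the anomalous session, and the 99th-percentile threshold are all hypothetical.

```python
import numpy as np


def fit_linear_autoencoder(X, k):
    """Fit a k-dimensional linear autoencoder via PCA (truncated SVD).

    At its optimum, a linear autoencoder trained with squared error spans the
    same subspace as the top-k principal components, so PCA stands in for
    gradient-based training here.
    """
    mean = X.mean(axis=0)
    _, _, vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, vt[:k]  # shared encoder/decoder weights, shape (k, d)


def reconstruction_error(X, mean, components):
    z = (X - mean) @ components.T            # encode to k latent dimensions
    x_hat = z @ components + mean            # decode back to feature space
    return np.sum((X - x_hat) ** 2, axis=1)  # per-row squared error


rng = np.random.default_rng(0)
# Hypothetical daily activity features for benign users, lying near a 2-D subspace.
latent = rng.normal(size=(500, 2))
mixing = rng.normal(size=(2, 6))
benign = latent @ mixing + 0.05 * rng.normal(size=(500, 6))
mean, comps = fit_linear_autoencoder(benign, k=2)

# A hypothetical anomalous session that breaks the usual correlation structure.
anomaly = benign[:1] + np.array([[0, 0, 0, 3.0, -3.0, 0]])
threshold = np.quantile(reconstruction_error(benign, mean, comps), 0.99)
print(reconstruction_error(anomaly, mean, comps)[0] > threshold)
```

A VAE replaces the fixed linear projection with a learned probabilistic encoder and decoder, but the scoring logic (flag inputs the model reconstructs poorly) is the same.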

Despite the growing preference for DL models, concerns about their opacity and lack of interpretability persist, which complicates their use in high-stakes environments such as financial institutions or government agencies. These concerns have sparked parallel research efforts focusing on explainable artificial intelligence (xAI), with techniques like SHAP and LIME used post hoc to interpret model decisions. The tension between detection performance and model transparency reflects a broader trend in AI research, where explainability has become a crucial requirement across various fields, including fraud detection and network intrusion.
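SHAP and LIME require dedicated libraries, so the sketch below illustrates the same post hoc, model-agnostic idea with a simpler, swapped-in technique, permutation importance: shuffle one input feature and measure how much the detector’s score drops. It is not SHAP or LIME. The “black-box” detector, the feature meanings, and the data are all hypothetical.

```python
import numpy as np


def permutation_importance(predict, X, y, metric, n_repeats=10, seed=0):
    """Post hoc, model-agnostic attribution: mean score drop when one feature is shuffled."""
    rng = np.random.default_rng(seed)
    baseline = metric(y, predict(X))
    drops = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        for _ in range(n_repeats):
            Xp = X.copy()
            Xp[:, j] = rng.permutation(Xp[:, j])  # break feature j's link to the labels
            drops[j] += baseline - metric(y, predict(Xp))
    return drops / n_repeats


def accuracy(y_true, y_pred):
    return float(np.mean(y_true == y_pred))


def black_box(X):
    # Hypothetical detector: flags sessions with unusually many after-hours logons.
    return (X[:, 0] > 1.0).astype(int)


rng = np.random.default_rng(1)
# Hypothetical columns: after-hours logons, emails sent, files copied (standardized).
X = rng.normal(size=(1000, 3))
y = black_box(X)  # labels taken from the model itself, for illustration
print(permutation_importance(black_box, X, y, accuracy))
```

Only the first feature receives a non-zero importance, because the detector ignores the other two; explanations of this kind are computed after training, which is exactly the post hoc character noted in the text.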

Considering these dynamics, the dominance of deep learning in Figure 6 reflects not just popularity but its demonstrated effectiveness in handling complex, sequential, and high-dimensional data. However, the field’s future seems to favor hybrid models that combine DL’s predictive power with the interpretability of traditional ML, an emerging trend seen in recent studies that pair LSTM networks with rule-based classification layers or combine VAEs with explainable decision trees. These hybrid approaches offer a promising compromise between strong performance and operational trustworthiness, an essential factor for real-world insider threat detection systems.

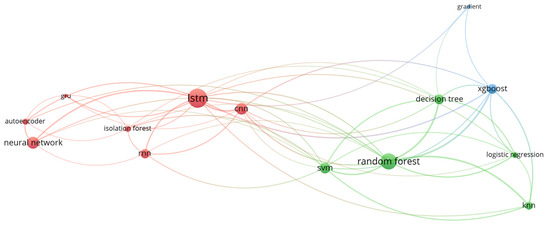

4.5.2. Algorithmic Hybridization and Co-Occurrence Patterns in Insider Threat Detection

The integration of multiple algorithmic approaches within a single architecture has become a key methodological advancement in insider threat detection. As shown in Table 3, many hybrid models have been explored, combining ML with ML, DL with DL, or pairing across paradigms. Notably, the Decision Tree–Random Forest, Random Forest–XGBoost, and CNN–LSTM combinations were the most frequent, each occurring 10 times, highlighting a trend toward leveraging both traditional tree-based classifiers and sequence modeling networks. These pairings reflect a strategic effort to boost performance through algorithmic complementarity, drawing on the interpretability of classical models and the pattern-recognition capabilities of neural networks.

Table 3.

Hybrid Algorithm Co-Occurrence.
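The ML–ML, DL–DL, and ML–DL categories used in this section can be tallied from per-study algorithm lists, as in the following sketch. The paradigm mapping and the study lists are assumptions for illustration, and the labels use ASCII hyphens.

```python
from collections import Counter
from itertools import combinations

# Assumed paradigm labels for algorithms named in this section (illustrative only).
PARADIGM = {
    "Decision Tree": "ML", "Random Forest": "ML", "XGBoost": "ML",
    "Logistic Regression": "ML", "SVM": "ML",
    "CNN": "DL", "LSTM": "DL", "Neural Network": "DL",
}


def hybrid_types(studies):
    """Tally the algorithm pairs used within each study as ML-ML, DL-DL or ML-DL."""
    tally = Counter()
    for algorithms in studies:
        for a, b in combinations(sorted(set(algorithms)), 2):
            pa, pb = PARADIGM[a], PARADIGM[b]
            tally[f"{pa}-{pb}" if pa == pb else "ML-DL"] += 1
    return tally


# Hypothetical per-study algorithm lists, for illustration only.
studies = [
    ["CNN", "LSTM"],
    ["Random Forest", "XGBoost"],
    ["LSTM", "Random Forest", "XGBoost"],
]
print(hybrid_types(studies))
```

A study that uses three algorithms contributes three pairs, so totals of this kind count pairings rather than studies.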

Figure 7 illustrates the co-occurrence network of algorithms. At its center are LSTM, Random Forest, and CNN, each of which has a high degree (many links to other algorithms), making them key bridging nodes across paradigms. LSTM shows strong connections with both deep learning peers, such as Neural Network and Autoencoder, and machine learning algorithms such as Random Forest and XGBoost, highlighting its flexibility in both temporal modeling and ensemble methods. Similarly, Random Forest acts as an important hub connecting to a wide range of machine learning classifiers such as SVM, Decision Tree, and Logistic Regression, illustrating its adaptability and ongoing popularity.

Figure 7.

Algorithm Co-Occurrence Network.
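The hub reading of Figure 7 rests on node degree. A minimal sketch, assuming an undirected edge list weighted by the number of studies that use both algorithms (all weights hypothetical), computes the degree and weighted degree of each node.

```python
from collections import defaultdict


def node_strengths(edges):
    """Return {node: (degree, weighted degree)} for an undirected, weighted edge list."""
    neighbours = defaultdict(set)
    strength = defaultdict(int)
    for a, b, weight in edges:
        neighbours[a].add(b)
        neighbours[b].add(a)
        strength[a] += weight
        strength[b] += weight
    return {node: (len(neighbours[node]), strength[node]) for node in neighbours}


# Hypothetical edges: (algorithm, algorithm, number of studies using both).
edges = [
    ("LSTM", "CNN", 6), ("LSTM", "Autoencoder", 4), ("LSTM", "Random Forest", 3),
    ("LSTM", "XGBoost", 2), ("Random Forest", "SVM", 5),
    ("Random Forest", "Decision Tree", 7), ("Random Forest", "XGBoost", 6),
    ("Random Forest", "Logistic Regression", 4),
]
hubs = node_strengths(edges)

# Rank nodes by (degree, weighted degree), highest first.
for node, (deg, weighted) in sorted(hubs.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{node:20s} degree={deg} weighted={weighted}")
```

Degree counts distinct partners, whereas weighted degree (VOSviewer’s total link strength) also reflects how often each pairing recurs; a bridging node such as LSTM in this toy data scores highly on both.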

Furthermore, the reviewed studies indicate that these hybrid models are often used in sequential setups, where one algorithm (usually a DL model) handles feature transformation or encoding and an ML model makes the final decision. For example, CNN–LSTM or Autoencoder–Neural Network models are commonly employed to process log sequences before passing them to a supervised classifier. Less often, parallel ensemble schemes such as voting classifiers or boosted hybrids have been investigated, as shown by pairings like Random Forest–XGBoost or Logistic Regression–XGBoost. Although less common, these architectures point to growing interest in hybrid voting frameworks that aim to combine accuracy and generalizability.
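The two hybrid designs described above differ mainly in how their components are wired together. The following sketch contrasts a sequential composition with parallel hard voting; the encoder, classifier, and rules are hypothetical stand-ins for trained models, not architectures taken from the reviewed studies.

```python
import numpy as np


def sequential_hybrid(encode, classify):
    """Sequential design: one model transforms the input, a second makes the decision."""
    def predict(X):
        return classify(encode(X))
    return predict


def majority_vote(*detectors):
    """Parallel design: each detector casts a 0/1 vote; a strict majority raises an alert."""
    def predict(X):
        votes = np.stack([d(X) for d in detectors])  # shape (n_detectors, n_samples)
        return (2 * votes.sum(axis=0) > len(detectors)).astype(int)
    return predict


# Hypothetical stand-ins for trained components.
def encode(X):
    return X.sum(axis=1)  # "encoder": one activity score per session


def classify(scores):
    return (scores > 10).astype(int)  # "classifier": fixed decision threshold


def rule_a(X):
    return (X[:, 0] > 5).astype(int)


def rule_b(X):
    return (X[:, 1] > 5).astype(int)


pipeline = sequential_hybrid(encode, classify)
voter = majority_vote(pipeline, rule_a, rule_b)

X = np.array([[1, 1, 1], [6, 6, 0], [6, 0, 0], [6, 6, -10]])
print(pipeline(X), voter(X))
```

On these four hypothetical sessions, the last one is missed by the sequential pipeline but flagged by the vote, because two of the three detectors agree; this is the kind of complementarity that voting hybrids aim to exploit.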

4.6. Methodological Limitations

While this review offers valuable insights into the intellectual and algorithmic landscape of insider threat detection research in cybersecurity, it has certain limitations. First, the study relied on three bibliographic databases (Scopus, Web of Science, and Google Scholar), which, despite their broad coverage, may not include all relevant publications or grey literature. The construction of search queries and keywords could also introduce bias, for example by excluding works that use non-standard terminology. Second, citation counts and other cumulative, frequency-based indicators such as keyword co-occurrence counts inherently favor older, well-cited publications. This can overshadow more recent contributions that have not yet accumulated citations but may nonetheless represent significant methodological innovations.

Third, the visualization and clustering results produced by the analysis tool used, VOSviewer, depend on algorithmic settings such as normalization methods and threshold levels. In this study, thresholds were carefully selected and validated; however, alternative thresholding schemes could produce minor variations in network structure. Importantly, a sensitivity analysis confirmed that the dominant thematic clusters (ML-centric, DL-centric, and behavioral/XAI-focused) remained stable across threshold variations, suggesting that the findings are robust rather than artifacts of parameter choice.
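One simple, node-level way to probe threshold sensitivity is to compare the keyword sets retained under different minimum-occurrence thresholds using Jaccard similarity. This is a coarser check than the cluster-level analysis reported above and is offered only as an illustration; apart from the four counts reported in Section 4.4, the occurrence values are hypothetical.

```python
def retained(occurrences, min_occurrences):
    """Keywords that survive a minimum-occurrence threshold."""
    return {kw for kw, n in occurrences.items() if n >= min_occurrences}


def jaccard(a, b):
    """Overlap between two keyword sets (1.0 means identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


# First four counts are those reported in Section 4.4; the rest are hypothetical.
occurrences = {
    "detection": 193, "insider": 161, "threat": 130, "learning": 106,
    "autoencoder": 9, "xai": 6, "federated learning": 3,
}
baseline = retained(occurrences, 5)
for threshold in (3, 5, 10):
    kept = retained(occurrences, threshold)
    print(threshold, len(kept), round(jaccard(baseline, kept), 2))
```

High-frequency core terms survive every threshold, so changes fall mostly on peripheral, low-frequency terms; this is consistent with the observation that emerging themes are the most sensitive to parameter choice.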

Finally, the combined effect of these biases may skew thematic conclusions by amplifying established perspectives while underrepresenting emerging ones. To mitigate these limitations in future work, complementary approaches such as incorporating altmetric indicators (e.g., downloads, social media mentions) and applying temporal segmentation analyses are recommended. These would help balance historical foundations with emerging trends, providing a more holistic picture of the field.

5. Discussion of Research Findings

This section places the findings within the wider body of work on insider threat detection, emphasizing how they confirm, expand, or refine previous investigations. The analysis highlights key insights regarding methodological development, interdisciplinary teamwork, transparency and explainability, dataset constraints, evaluation methods, and future research directions. In this context, the notion of symmetry provides a useful interpretive lens, as insider threat detection research continually seeks balance between accuracy and interpretability, innovation and reproducibility, as well as technical advances and organizational applicability.

5.1. Emerging Methodological Shifts

Early ML techniques such as Decision Trees, SVM, and Random Forest were widely used for insider threat detection between 2015 and 2018 because of their interpretability and relatively low computational demands. However, as noted by Le and Zincir-Heywood [52], these models struggled with dynamic and high-dimensional insider datasets, especially when it came to capturing sequential behavior. Our analysis shows that from 2019 onward, DL models, especially LSTM and CNN, became much more prominent (Figure 6), supporting findings by Yuan et al. [53] that temporal modeling improves anomaly detection performance. Additionally, the prevalence of hybrid pairings such as CNN–LSTM and Random Forest–XGBoost (Table 3) is consistent with reports that hybrid designs can help reduce false positives [12,36]; cross-paradigm variants further aim to combine DL’s capacity to represent complex patterns with ML’s interpretability. While this aligns with trends observed in fraud detection and intrusion studies, it also underscores the ongoing challenge of transparency, a critical issue often discussed in the literature [41]. Our results, particularly the prominent role of LSTM in Figure 7, reinforce the current methodological consensus and highlight the need for innovations focused on explainability. These shifts also reflect a form of methodological symmetry: DL architectures enhance the capacity to model complex behavioral patterns, traditional ML contributes interpretability, and hybrid approaches strive to reconcile both sides of this balance.

5.2. Interdisciplinary Collaboration and Knowledge Integration

The co-citation network in Figure 3 reveals three groups of influential authors, emphasizing the interdisciplinary nature of insider threat detection. Scholars such as Le and Zincir-Heywood [52] focus on ML-based anomaly detection, while Yuan et al. emphasize DL-focused behavioral modeling [53]. These groups support earlier findings that insider threat research increasingly combines perspectives from cybersecurity, behavioral science, and data analytics [47]. Our results also show strong regional collaborations, with China, India, and the United States accounting for more than half of the global output (Figure 4; Table 1). This confirms previous claims that the field is driven by methodological innovation in Asia-Pacific and policy priorities in North America. The emphasis on interdisciplinary collaboration, especially integrating behavioral insights with regulatory compliance (e.g., Regulation (EU) 2016/679 [54], ISO/IEC 27001:2022 [55]), reflects the trend observed in recent insider threat studies, suggesting that effective detection depends on bridging technical accuracy with human and organizational factors. The interdisciplinary nature of the field also demonstrates symmetry, where technical, behavioral, and regulatory perspectives converge to form a balanced framework for addressing insider threats.

5.3. Transparency and the Role of Explainable AI

While DL and hybrid models dominate the algorithmic landscape (DL alone accounts for 53% of usage in Figure 6), their lack of transparency has been widely criticized, with authors such as Adadi and Berrada [41] highlighting the risks of deploying “black-box” systems in critical domains. Our findings confirm the growing importance of XAI, with SHAP and LIME increasingly used to provide feature-level insights [40]. This supports previous claims that explainability is both a technical and a regulatory requirement under frameworks like GDPR [56]. Figure 5 reflects this emerging interest, with “XAI” appearing among the co-occurring keywords, although still at the network’s periphery. However, most XAI implementations remain post hoc rather than intrinsic, confirming the research gap also noted in recent surveys [41,56]. The convergence between hybrid ML–DL architectures and explainability methods, such as CNN–Random Forest pipelines (Table 3), points to a promising direction toward inherently interpretable yet high-performing models. Our findings therefore support and extend prior critiques by highlighting the imbalance between accuracy and transparency in current DL-focused frameworks. This asymmetry reinforces the importance of future work aimed at restoring symmetry by embedding interpretability directly into high-performing models.

5.4. Dataset Limitations and Benchmarking Challenges

The bibliometric results confirm the heavy reliance on CERT datasets, a pattern seen in earlier studies [25,30]. While CERT has been essential for reproducibility, overreliance can lead to overfitting and poor generalization. Our analysis shows that real-world, unstructured logs remain underused due to privacy and security concerns, echoing issues raised in behavioral anomaly detection studies [30]. The lack of diverse benchmark datasets helps explain why high-performing models often struggle when deployed. Although recent efforts use generative models such as VAEs to produce more realistic data [29], their adoption remains limited. This bibliometric evidence therefore supports existing critiques and underscores the urgent need for more collaborative dataset-sharing efforts between academia and industry. Addressing dataset challenges would help restore symmetry between experimental performance and real-world applicability, ensuring that advances translate effectively into practice.

5.5. Evaluation Metrics and Interpretability

Our analysis reveals a narrow focus on accuracy and ROC-AUC as evaluation metrics. While these metrics offer useful starting points, they are insufficient for imbalanced insider threat datasets, where, for example, a model that never raises an alert can still achieve very high accuracy. Sarhan and Altwaijry [10] highlighted the importance of precision, recall, and F1-score for meaningful assessment, while Sharma et al. [16] stressed that false positives and false negatives carry significant operational costs. Despite these insights, few studies in our dataset reported cost-sensitive or operationally relevant metrics. Even more striking is the absence of interpretability measures. Singh et al. [39] showed that hybrid models such as CNN–Random Forest not only improve accuracy but also provide feature-level transparency, offering one pathway to evaluation that balances performance with interpretability. Building on Adadi and Berrada’s [41] call for holistic AI assessment, future insider threat detection research must broaden evaluation frameworks to capture not only predictive accuracy but also the quality and clarity of explanations, ensuring that results are reproducible and actionable. Expanding evaluation frameworks to include both performance and interpretability would create a more symmetrical assessment of models, aligning technical outcomes with operational trustworthiness.
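The following sketch shows why accuracy alone misleads on imbalanced data and how a cost-weighted total can complement precision, recall, and F1. The test set and the per-error costs (`cost_fp`, `cost_fn`) are hypothetical.

```python
def evaluate(y_true, y_pred, cost_fp=1.0, cost_fn=10.0):
    """Accuracy, precision, recall, F1 and a cost-weighted error total for binary labels.

    cost_fp and cost_fn are hypothetical per-error costs; here a missed insider
    (false negative) is assumed to be ten times as costly as a false alarm.
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "cost": cost_fp * fp + cost_fn * fn,
    }


# Hypothetical test set: 10 insider sessions among 1,000.
y_true = [1] * 10 + [0] * 990
always_benign = [0] * 1000                            # never raises an alert
detector = [1] * 8 + [0] * 2 + [1] * 20 + [0] * 970   # 8 hits, 2 misses, 20 false alarms

for name, y_pred in [("always benign", always_benign), ("detector", detector)]:
    print(name, {k: round(v, 3) for k, v in evaluate(y_true, y_pred).items()})
```

The trivial model reaches 99% accuracy with zero recall, while the second detector has lower accuracy (97.8%) but catches 8 of 10 insiders at a lower weighted cost (40 versus 100 under the assumed costs).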

5.6. Symmetry as an Overarching Perspective

Taken together, the bibliometric findings reveal that the evolution of insider threat detection research can be understood through the lens of symmetry. Methodological developments highlight the balance between advanced DL architectures and the interpretability of traditional ML models, often reconciled through hybrid approaches. Interdisciplinary collaboration reflects symmetry in the integration of technical, behavioral, and regulatory perspectives, while transparency research underscores the asymmetry between accuracy and interpretability that must be addressed. Dataset limitations expose a lack of balance between experimental reproducibility and real-world applicability, and evaluation practices reveal the need for symmetrical consideration of both predictive performance and operational trust. Framing these insights through symmetry emphasizes that progress in insider threat detection depends not only on technical advances but also on maintaining equilibrium across methodological, organizational, and ethical dimensions. This perspective provides a coherent conceptual anchor for future research, aligning cybersecurity innovation with the journal’s thematic focus on symmetry.

6. Research Gaps and Future Research Direction

6.1. Research Gap

The review reveals several persistent gaps in insider threat detection. A common issue is the heavy dependence on the CERT dataset, which, while valuable as a well-known benchmark, is synthetic and cannot fully mimic the unpredictability of real-world insider behaviors. This dependence reduces the ecological validity of many studies, creating an asymmetry between benchmark performance and real-world applicability. Another gap is the limited adaptability of current models. Although approaches based on LSTM, CNNs, and autoencoders are prevalent in recent publications, many are built as static architectures and are poorly equipped to capture changing insider tactics, resulting in an imbalance between evolving threats and static defenses. Explainability also remains underdeveloped: high-performing deep learning models often operate as black boxes, making their decisions hard to interpret and thus reducing trust and adoption in sensitive environments. Lastly, evaluation methods are inconsistent. Many studies depend on accuracy and ROC-AUC while neglecting more insightful metrics such as precision, recall, or interpretability measures, leading to an uneven evaluation landscape that hampers fair benchmarking.

6.2. Future Research Directions

Addressing these gaps requires a multi-faceted approach aimed at restoring symmetry between detection accuracy, adaptability, and interpretability. First, there is potential for developing adaptive hybrid architectures that combine temporal sequence models with generative frameworks, providing greater flexibility to detect evolving insider behaviors. Second, future research should systematically incorporate eXplainable AI techniques into detection models, ensuring a balanced interplay between performance and transparency to promote trust, accountability, and regulatory compliance. Third, while CERT remains a key benchmark for comparison, additional strategies are essential to overcome its limitations. These include using generative models to supplement rare attack cases, developing privacy-preserving mechanisms for organizational data sharing, and creating supplementary datasets that represent diverse insider threat scenarios. Lastly, standardized evaluation protocols must be established to ensure reproducibility and fairness. These protocols should integrate both performance metrics (accuracy, precision, recall, F1-score, ROC-AUC) and interpretability evaluations, offering a symmetrically comprehensive benchmark for insider threat detection models.

6.3. Proposed Future Research Trajectory: BiLSTM–VAE Hybrid

A promising path involves combining bidirectional sequence modeling with generative learning in a manner that reflects the symmetry of adversarial and defensive dynamics in cybersecurity. Bidirectional LSTM (BiLSTM) networks can capture dependencies in both forward and backward directions, which is especially useful in insider threat scenarios where the meaning of an action often relies on both earlier and later events. Variational Autoencoders (VAEs) offer powerful latent representations and anomaly detection through reconstruction error, making them effective in situations with class imbalance and hidden behavioral patterns. A hybrid BiLSTM–VAE framework could leverage the strengths of both, providing contextual temporal analysis along with probabilistic anomaly detection. Adding explanation tools, such as attention layers or post hoc attribution methods, would improve transparency, ensuring results are both accurate and actionable. Benchmarking this hybrid against established baselines—using CERT for comparison while testing additional or expanded datasets for robustness—would demonstrate how such an approach can achieve symmetry between adaptability, interpretability, and practical usefulness.
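The BiLSTM–VAE coupling described above can be written compactly. The formulation below is a generic sketch of one common way to combine a bidirectional LSTM encoder with a VAE, not a specification of a published model. The following symbols are assumptions introduced here: $x_t$ is a user’s activity feature vector at step $t$ of a sequence of length $T$; $z$ is the latent variable; $\hat{x}_t$ is the decoder’s reconstruction; $\beta$ is a KL weight ($\beta = 1$ recovers the standard VAE objective); and $\tau$ is an alert threshold chosen, for example, on validation data.

```latex
\begin{aligned}
\overrightarrow{h}_t &= \mathrm{LSTM}_{f}\!\left(x_t, \overrightarrow{h}_{t-1}\right), \qquad
\overleftarrow{h}_t = \mathrm{LSTM}_{b}\!\left(x_t, \overleftarrow{h}_{t+1}\right), \qquad
h_t = \left[\overrightarrow{h}_t ; \overleftarrow{h}_t\right] \\
q_\phi(z \mid x_{1:T}) &= \mathcal{N}\!\left(z;\ \mu_\phi(h_{1:T}),\ \operatorname{diag}\,\sigma_\phi^2(h_{1:T})\right), \qquad
z = \mu_\phi + \sigma_\phi \odot \epsilon,\quad \epsilon \sim \mathcal{N}(0, I) \\
\mathcal{L}(\theta, \phi) &= -\,\mathbb{E}_{q_\phi(z \mid x_{1:T})}\!\left[\log p_\theta(x_{1:T} \mid z)\right]
  + \beta\, D_{\mathrm{KL}}\!\left(q_\phi(z \mid x_{1:T}) \,\middle\|\, \mathcal{N}(0, I)\right) \\
s(x_{1:T}) &= \frac{1}{T} \sum_{t=1}^{T} \left\lVert x_t - \hat{x}_t \right\rVert_2^2, \qquad
\text{flag the sequence as anomalous if } s(x_{1:T}) > \tau
\end{aligned}
```

Training minimizes $\mathcal{L}$ on predominantly benign sequences, so insider activity that the model reconstructs poorly receives a high score $s$. Attention weights or attribution methods computed over the hidden states $h_t$ could then supply the explanations discussed above.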

7. Conclusions

This study confirms that insider threat detection research is transitioning from traditional ML toward hybrid DL approaches, with growing—though still limited—attention to explainability. The work of Pantelidis et al., which demonstrates the utility of generative models like VAEs for anomaly detection, provides strong motivation for advancing hybrid frameworks that achieve a symmetry between adaptability and transparency. Policy and practical considerations, particularly compliance with data protection regulations and the need for interpretable tools in operational environments, further highlight the importance of balancing technical innovation with organizational accountability. In addition, the bibliometric trend toward eXplainable AI (xAI) reflects sector-specific regulatory demands—for example, transparent decision-support systems in healthcare and interpretable fraud detection models in finance, where auditability is mandated. Similarly, the emphasis on dataset diversity is especially relevant for defense and critical infrastructure, where over-reliance on narrow benchmark datasets risks limiting real-world applicability.

At the theoretical level, embedding behavioral modeling within advanced architectures underscores the socio-technical nature of insider threats, revealing that current limitations often stem from an imbalance between detection accuracy and trustworthiness. Future research focused on adaptive, explainable, and hybridized solutions can restore this balance, enabling not only higher detection accuracy but also greater organizational trust and resilience. Ultimately, this study underscores the need to align insider threat detection research with the broader principles of responsible AI and cybersecurity ethics. Emphasizing transparency, explainability, and accountability allows xAI-driven approaches to strengthen technical robustness while also advancing fairness and trustworthiness in practical cybersecurity applications.

Author Contributions

Conceptualization, H.K.O., W.L.B.-A. and F.L.; methodology, H.K.O., I.E.A. and R.C.M.; software, H.K.O., K.B.-D. and S.O.F.; formal analysis, H.K.O., K.B.-D. and S.O.F.; Writing—original draft preparation, H.K.O. and K.B.-D.; Writing—review and editing, I.E.A. and R.C.M.; Visualization, H.K.O. and K.B.-D.; supervision, W.L.B.-A., F.L., I.E.A. and R.C.M.; Funding acquisition, I.E.A. and R.C.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded in part by the National Key R&D Program of China (Grant number: 2023YFE0110200) and in part by the National Research Foundation of South Africa (Grant Number 151178).

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| BiLSTM | Bidirectional Long Short-Term Memory |
| CERT | Computer Emergency Response Team |
| CNN | Convolutional Neural Network |
| CSV | Comma-Separated Values |
| DBSCAN | Density-Based Spatial Clustering of Applications with Noise |
| DL | Deep Learning |
| GCC | Gulf Cooperation Council |
| GDPR | General Data Protection Regulation |
| GNN | Graph Neural Network |
| GRU | Gated Recurrent Unit |
| ISO | International Organization for Standardization |
| KNN | K-Nearest Neighbors |
| LIME | Local Interpretable Model-agnostic Explanations |
| LSTM | Long Short-Term Memory |
| ML | Machine Learning |
| OC-SVM | One-Class Support Vector Machine |
| RNN | Recurrent Neural Network |
| ROC-AUC | Area Under the Receiver Operating Characteristic Curve |
| RQ | Research Question |
| SHAP | SHapley Additive exPlanations |
| SVM | Support Vector Machine |
| UAE | United Arab Emirates |
| VAE | Variational Autoencoder |
| XGBoost | eXtreme Gradient Boosting |
| xAI | Explainable Artificial Intelligence |

References

1. Insider Threat Report: Trends, Challenges, and Solutions. Available online: https://www.securonix.com/wp-content/uploads/2024/01/2024-Insider-Threat-Report-Securonix-final.pdf (accessed on 15 July 2025).

2. Ponemon Institute and DTEX Systems. 2023 Cost of Insider Risks: Global Study. Available online: https://www.dtexsystems.com/blog/cost-of-insider-risks/ (accessed on 6 June 2025).

3. Cost of a Data Breach Report 2025. Available online: https://www.ibm.com/think/topics/insider-threats (accessed on 25 August 2025).

4. Peinado, V.; Valiente, C.; Contreras, A.; Trucharte, A.; Vázquez, C. Insider Threats: Frequency, Cost, and Human Error Stats. Personal. Individ. Differ. 2024, 230, 112782.

5. Nasir, R.; Afzal, M.; Latif, R.; Iqbal, W. Behavioral Based Insider Threat Detection Using Deep Learning. IEEE Access 2021, 9, 143266–143274.

6. 2024 Data Breach Investigations Report. Available online: https://www.verizon.com/business/resources/reports/2024-dbir-data-breach-investigations-report.pdf (accessed on 25 August 2025).

7. 2023 Verizon Data Breach Investigations Report Industry-Specific Breakdown. Available online: https://www.phishingbox.com/news/post/2023-verizon-data-breach-investigations-report-industry-specific-breakdown (accessed on 7 June 2025).

8. Mahalakshmi, V.; Garg, A.; Rekha, M.M.; Diwakar, M.; Vigenesh, M.; Verma, R. Detecting Malicious Insiders in Social Networks and Mitigating Cyber Threats with Advanced Security Approaches. In Proceedings of the 2nd IEEE International Conference on Innovations in High-Speed Communication and Signal Processing (IHCSP), Bhopal, India, 6–8 December 2024.

9. Hines, C.; Youssef, A. Class Balancing for Fraud Detection in Point of Sale Systems. In Proceedings of the 2019 IEEE International Conference on Big Data (Big Data), Los Angeles, CA, USA, 9–12 December 2019; pp. 4730–4739.

10. Sarhan, B.B.; Altwaijry, N. Insider Threat Detection Using Machine Learning Approach. Appl. Sci. 2022, 13, 259.

11. Manikandan, S.P.; Kumar, K.A.; Vardhan, T.S.H.; Thanigaivel, G.; Venkataramanaiah, B.; Reddy, S.P.V.V. Detecting Insider Threats in Cybersecurity Using Machine Learning. In Proceedings of the 5th International Conference on IoT Based Control Networks and Intelligent Systems (ICICNIS), Bengaluru, India, 17–18 December 2024; pp. 152–158.

12. Yang, M.; Yi, J.; Zhu, H.; Tan, L. CNN-LSTM-Based Insider Threat Detection Model. In Proceedings of the 2025 International Conference on Electrical Automation and Artificial Intelligence (ICEAAI), Guangzhou, China, 10–12 January 2025; pp. 985–990.

13. Xiao, F.; Chen, S.; Chen, S.; Ma, Y.; He, H.; Yang, J. SENTINEL: Insider Threat Detection Based on Multi-Timescale User Behavior Interaction Graph Learning. IEEE Trans. Netw. Sci. Eng. 2024, 12, 774–790.

14. Denning, D.E. An Intrusion-Detection Model. IEEE Trans. Softw. Eng. 1987, 13, 222–232.

15. Mehmood, R.; Singh, P.; Jeffery, Z. Unsupervised Learning for Insider Threat Prediction: A Behavioral Analysis Approach. In Proceedings of the 17th International Conference on Security of Information and Networks (SIN), Sydney, Australia, 2–4 December 2024.

16. Sharma, B.; Pokharel, P.; Joshi, B. User Behavior Analytics for Anomaly Detection Using LSTM Autoencoder-Insider Threat Detection. In Proceedings of the 11th International Conference on Advances in Information Technology, Bangkok, Thailand, 1–3 July 2020; ACM International Conference Proceeding Series. ACM: New York, NY, USA, 2020.

17. Bhasha, S.J.; Panda, S. Data Privacy Preservation and Authentication Scheme for Secured IoMT Communication Using Enhanced Heuristic Approach with Deep Learning. Cybern. Syst. 2024, 1–42.

18. Gao, P.; Zhang, H.; Wang, M.; Yang, W.; Wei, X.; Lv, Z.; Ma, Z. Deep Temporal Graph Infomax for Imbalanced Insider Threat Detection. J. Comput. Inf. Syst. 2023, 65, 108–118.

19. Tao, X.; Yu, Y.; Fu, L.; Liu, J.; Zhang, Y. An insider user authentication method based on improved temporal convolutional network. High Confid. Comput. 2023, 3, 100169.

20. Kumar, S.; Raju, S. Enhancing Threat Detection and Response Through Cloud-Native Security Solutions. In Proceedings of the International Conference on Engineering and Emerging Technologies (ICEET), Dubai, United Arab Emirates, 27–28 December 2024.

21. Chaurasia, A.; Mishra, A.; Rao, U.P.; Kumar, A. Deep Learning-Based Solution for Intrusion Detection in the Internet of Things. In International Conference on Computation Intelligence and Network Systems; Communications in Computer and Information Science (CCIS, Volume 1978); Springer: Cham, Switzerland, 2024; pp. 75–89.

22. Jing, Y.; Jiang, C.; Chen, W.; Xu, Y. Analyze Organizational Internal Threats Using Machine Learning. In Proceedings of the 2023 5th International Conference on Applied Machine Learning (ICAML), Dalian, China, 21–23 July 2023; pp. 6–10.

23. Ge, D.; Zhong, S.; Chen, K. Multi-source data fusion for insider threat detection using residual networks. In Proceedings of the 2022 3rd International Conference on Electronics, Communications and Information Technology (CECIT), Sanya, China, 23–25 December 2022; pp. 359–366.

24. Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

25. Ma, Q.; Rastogi, N. DANTE: Predicting insider threat using LSTM on system logs. In Proceedings of the 2020 IEEE 19th International Conference on Trust, Security and Privacy in Computing and Communications (TrustCom), Guangzhou, China, 29 December 2020–1 January 2021; pp. 1151–1156.

26. Wu, S.X.; Li, G.; Zhang, S.; Lin, X. Detection of Insider Attacks in Distributed Projected Subgradient Algorithms. IEEE Trans. Cogn. Commun. Netw. 2021, 7, 1099–1111.

27. Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning representations by back-propagating errors. Nature 1986, 323, 533–536.

28. Kingma, D.P.; Welling, M. Auto-Encoding Variational Bayes. arXiv 2013, arXiv:1312.6114.

29. Tahir, S. Variational Autoencoders (VAEs) for Anomaly Detection. In Utilizing Generative AI for Cyber Defense Strategies; IGI Global Scientific Publishing: Hershey, PA, USA, 2024; pp. 309–326.

30. Pantelidis, E.; Bendiab, G.; Shiaeles, S.; Kolokotronis, N. Insider threat detection using deep autoencoder and variational autoencoder neural networks. In Proceedings of the 2021 IEEE International Conference on Cyber Security and Resilience (CSR), Rhodes, Greece, 26–28 July 2021; pp. 129–134.

31. Vinay, M.S.; Yuan, S.; Wu, X. Contrastive Learning for Insider Threat Detection. In International Conference on Database Systems for Advanced Applications; Lecture Notes in Computer Science (LNCS, Volume 13245) (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Cham, Switzerland, 2022; pp. 395–403.

32. Eberle, W.; Graves, J.; Holder, L. Insider Threat Detection Using a Graph-Based Approach. J. Appl. Secur. Res. 2010, 6, 32–81.

33. Xiao, J.; Yang, L.; Zhong, F.; Wang, X.; Chen, H.; Li, D. Robust Anomaly-Based Insider Threat Detection Using Graph Neural Network. IEEE Trans. Netw. Serv. Manag. 2022, 20, 3717–3733.

34. Kexiong, F.; Jiang, Z.; Lin, S.; Weiping, W.; Yong, C.; Fan, Z. A Graph Convolution Neural Network Based Method for Insider Threat Detection. In Proceedings of the 2022 IEEE Intl Conf on Parallel & Distributed Processing with Applications, Big Data & Cloud Computing, Sustainable Computing & Communications, Social Computing & Networking, Melbourne, Australia, 17–19 December 2022.

35. Ganfure, G.O.; Wu, C.F.; Chang, Y.H.; Shih, W.K. DeepGuard: Deep Generative User-behavior Analytics for Ransomware Detection. In Proceedings of the 2020 IEEE International Conference on Intelligence and Security Informatics (ISI), Arlington, VA, USA, 9–10 November 2020.

36. Mamidanna, S.K.; Reddy, C.R.K.; Gujju, A. Detecting an Insider Threat and Analysis of XGBoost Using Hyperparameter Tuning. In Proceedings of the IEEE International Conference on Advances in Computing, Communication and Applied Informatics (ACCAI), Chennai, India, 28–29 January 2022.

37. Saaudi, A.; Al-Ibadi, Z.; Tong, Y.; Farkas, C. Insider threats detection using CNN-LSTM model. In Proceedings of the 2018 International Conference on Computational Science and Computational Intelligence (CSCI), Las Vegas, NV, USA, 12–14 December 2018; pp. 94–99.

38. Le, D.C.; Zincir-Heywood, N. Exploring Adversarial Properties of Insider Threat Detection. In Proceedings of the 2020 IEEE Conference on Communications and Network Security (CNS), Avignon, France, 29 June–1 July 2020.

39. Singh, M.; Mehtre, B.M.; Sangeetha, S. Insider Threat Detection Based on User Behaviour Analysis. In International Conference on Machine Learning, Image Processing, Network Security and Data Sciences; Communications in Computer and Information Science (CCIS, Volume 1241); Springer: Cham, Switzerland, 2020; pp. 559–574.

40. Zhang, Z.; Al Hamadi, H.; Damiani, E.; Yeun, C.Y.; Taher, F. Explainable Artificial Intelligence Applications in Cyber Security: State-of-the-Art in Research. IEEE Access 2022, 10, 93104–93139.

41. Adadi, A.; Berrada, M. Peeking Inside the Black-Box: A Survey on Explainable Artificial Intelligence (XAI). IEEE Access 2018, 6, 52138–52160.

42. Mohamed, M. Comparative Evaluation of VAEs, VAE-GANs and AAEs for Anomaly Detection in Network Intrusion Data. Emit. Int. J. Eng. Technol. 2023, 11, 160–173.

43. Duessel, P.; Luo, S.; Flegel, U.; Dietrich, S.; Meier, M. Tracing privilege misuse through behavioral anomaly detection in geometric spaces. In Proceedings of the 2020 13th Systematic Approaches to Digital Forensic Engineering (SADFE), New York, NY, USA, 15 May 2020; pp. 22–31.

44. Kumar, T.; Minakshi; Joshi, K. Empowering cloud security through advanced monitoring and analytics techniques. In Risk-Based Approach to Secure Cloud Migration; IGI Global Scientific Publishing: Hershey, PA, USA, 2025; pp. 163–186.

45. Kumar, M.K.; Kumari, S.; Bharathi, M.; Lavanya, P.; Glory, H.A.; Sriram, V.S.S. Insider Threat Detection in User Activity Data Using Optimized LSTM-AE. Lect. Notes Data Eng. Commun. Technol. 2025, 236, 287–300.

46. Lavanya, P.; Raman, V.S.V.; Gosakan, S.S.; Glory, H.A.; Sriram, V.S.S. Silent Threats: Monitoring Insider Risks in Healthcare Sector. In International Conference on Applications and Techniques in Information Security; Communications in Computer and Information Science (CCIS, Volume 2306); Springer: Cham, Switzerland, 2025; pp. 183–198.

47. Villarreal-Vasquez, M.; Modelo-Howard, G.; Dube, S.; Bhargava, B. Hunting for Insider Threats Using LSTM-Based Anomaly Detection. IEEE Trans. Dependable Secur. Comput. 2021, 20, 451–462.

48. Suganya, M.; Sasipraba, T. Stochastic Gradient Descent long short-term memory based secure encryption algorithm for cloud data storage and retrieval in cloud computing environment. J. Cloud Comput. 2023, 12, 74.

49. Padmavathi, G.; Shanmugapriya, D.; Asha, S. A Framework to Detect the Malicious Insider Threat in Cloud Environment using Supervised Learning Methods. In Proceedings of the 2022 9th International Conference on Computing for Sustainable Global Development (INDIACom), New Delhi, India, 23–25 March 2022; pp. 354–358.

50. Kumar, P.; Javeed, D.; Kumar, R.; Islam, A.K.M.N. Blockchain and explainable AI for enhanced decision making in cyber threat detection. Softw. Pract. Exp. 2024, 54, 1337–1360.

51. Parshutin, S.; Kirshners, A.; Kornijenko, Y.; Zabiniako, V.; Gasparovica-Asite, M.; Rozkalns, A. Classification with LSTM Networks in User Behaviour Analytics with Unbalanced Environment. Autom. Control Comput. Sci. 2021, 55, 85–91.

52. Le, D.C.; Zincir-Heywood, N. Anomaly Detection for Insider Threats Using Unsupervised Ensembles. IEEE Trans. Netw. Serv. Manag. 2021, 18, 1152–1164.

53. Yuan, F.; Cao, Y.; Shang, Y.; Liu, Y.; Tan, J.; Fang, B. Insider threat detection with deep neural network. In International Conference on Computational Science; Lecture Notes in Computer Science (LNCS, Volume 10860) (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Cham, Switzerland, 2018; pp. 43–54.

54. Regulation (EU) 2016/679; General Data Protection Regulation (GDPR). European Union: Brussels, Belgium, 2016.

55. ISO/IEC 27001:2022; Information Security, Cybersecurity and Privacy Protection—Information Security Management Systems—Requirements. International Organization for Standardization: Geneva, Switzerland, 2022.

56. Kuppa, A.; Le-Khac, N.A. Black Box Attacks on Explainable Artificial Intelligence (XAI) methods in Cyber Security. In Proceedings of the International Joint Conference on Neural Networks, Glasgow, UK, 19–24 July 2020.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).