Graph-MambaRoadDet: A Symmetry-Aware Dynamic Graph Framework for Road Damage Detection

Abstract

1. Introduction

Our contributions are summarized as follows:

- We propose Graph-MambaRoadDet (GMRD), the first road damage detection framework that fuses efficient state–space modeling (Mamba) with dynamic graph generation, enabling simultaneous modeling of spatial–temporal patterns and evolving topologies.

- We combine Graph-Generating SSMs (GG-SSM) with deformable scanning modules to construct sparse, learnable road graphs automatically and adaptively, without relying on fixed adjacency priors.

- We design an attention-calibrated topology refinement mechanism, leveraging self-supervised signals to enhance the stability and accuracy of dynamically generated graph structures.

- GMRD achieves state-of-the-art performance on multiple benchmarks in terms of both detection accuracy and segment-level consistency, while maintaining real-time inference speed and low computational cost suitable for edge devices.

- The proposed framework is highly extensible, supporting plug-and-play adaptation to other infrastructure inspection tasks through simple head replacement.
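
As a rough illustration of the adjacency-free graph construction named in the second contribution, the sketch below links each node to its k most similar neighbours under cosine similarity and returns the resulting undirected edge set. It is not the GG-SSM procedure of the paper: the function names `cosine` and `topk_sparse_graph`, the choice of cosine similarity, the parameter `k`, and the toy feature vectors are all assumptions made for illustration, and the fixed features stand in for embeddings that a trained model would learn.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u > 0 and norm_v > 0 else 0.0


def topk_sparse_graph(features, k):
    """Build an undirected edge set by linking every node to its k nearest neighbours.

    `features` is a list of per-node vectors (hypothetical stand-ins for learned
    node embeddings). No fixed adjacency prior is used: edges depend only on the
    features, so the graph changes whenever the features change.
    """
    n = len(features)
    if not 0 < k < n:
        raise ValueError("k must satisfy 0 < k < number of nodes")
    edges = set()
    for i in range(n):
        # Score every other node, highest similarity first.
        scored = sorted(
            ((cosine(features[i], features[j]), j) for j in range(n) if j != i),
            reverse=True,
        )
        for _, j in scored[:k]:
            # Store each edge once with ordered endpoints so the graph is undirected.
            edges.add((min(i, j), max(i, j)))
    return edges


# Toy example: nodes 0 and 1 point roughly along x, nodes 2 and 3 roughly along y.
toy_features = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
toy_edges = topk_sparse_graph(toy_features, k=1)
print(sorted(toy_edges))  # [(0, 1), (2, 3)]
```

With `k=1` the graph has at most one outgoing link per node, which keeps it sparse; a larger `k` trades sparsity for connectivity.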

Paper Structure

2. Related Work

2.1. Road Damage Detection and Vision Models

2.2. Graph Neural Networks and Dynamic Topology Learning

2.3. State–Space Models and Hybrid Architectures

3. Methodology

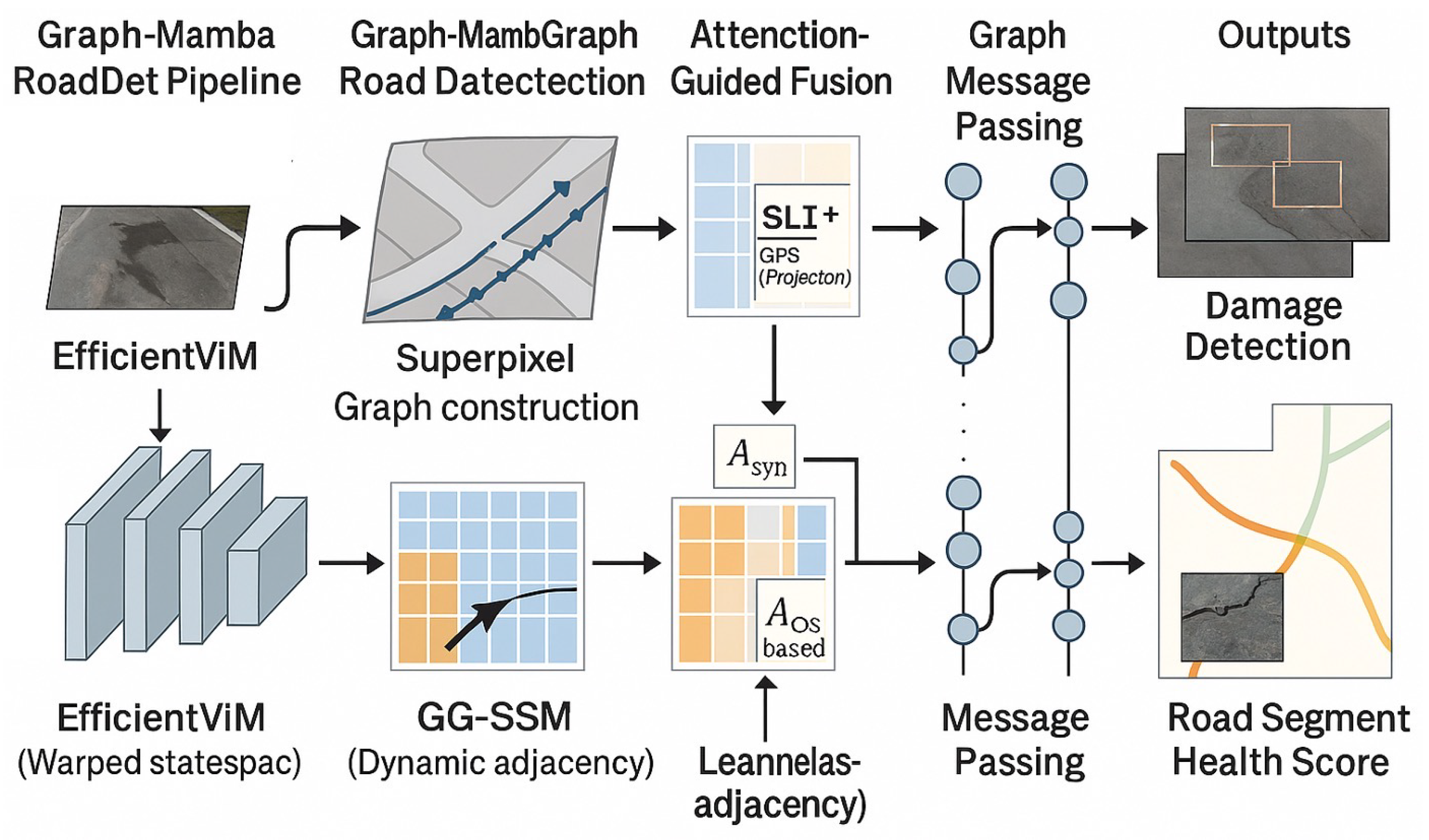

3.1. Overview

3.2. EfficientViM Backbone

3.3. Deformable Mamba Blocks

3.4. Super-Pixel Graph Construction

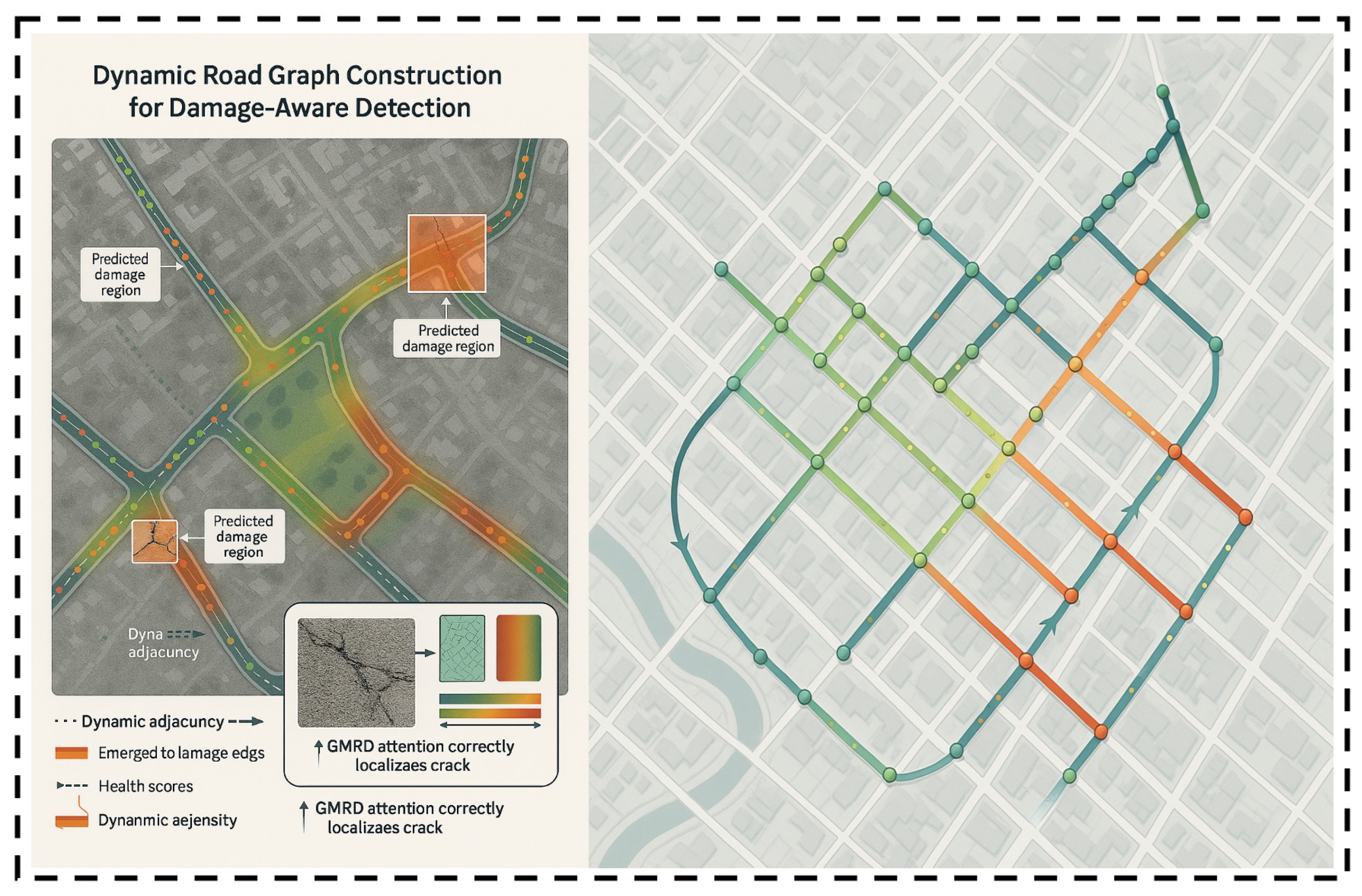

3.5. Dynamic Graph Generation via GG-SSM

3.6. Attention-Guided Topology Calibration

3.7. Graph Message Passing

3.8. Prediction Heads

3.9. Symmetry-Aware Structural Design

3.10. Training Objectives

3.11. Complexity Analysis

3.12. Crack Type and Severity Standards

4. Experiments

4.1. Datasets and Annotations

- RDD2022

- TD-RD

- RoadBench-100K

- Pre-processing

- Evaluation splits

4.2. Implementation Details

- Framework.

- Backbone and input resolution.

- Optimization.

- Data augmentation.

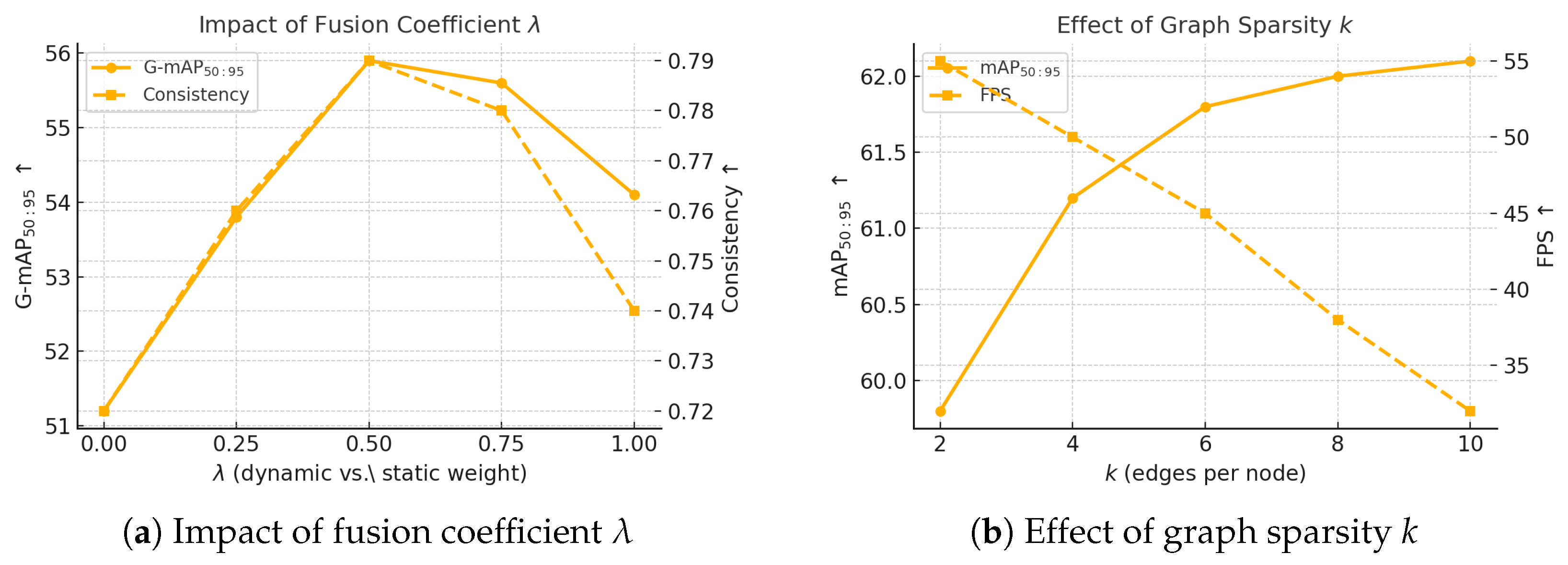

- Graph hyperparameters.

- Edge deployment.

- Evaluation Metrics.

4.3. Comparison with State of the Art

4.4. Cross-Domain Generalization Study

4.5. Ablation Studies

- Effect of DefMamba.

- Effect of GG-SSM.

- Effect of EGTR calibration.

- Effect of Graph Consistency Loss.

- Takeaway.

4.6. Edge-Device Efficiency

- Real-time throughput.

- Latency and power.

- Memory footprint and compatibility.

4.7. Qualitative and Robustness Analysis

- Visual comparison in challenging scenes.

- Attention-map inspection.

- Failure-mode discussion.

4.8. Hardware Runtime Comparison with Lightweight Models

5. Discussion and Future Work

- Symmetry-Aware Gains.

- Limitations.

- Runtime Bottlenecks.

- Generalization and Deployability.

- Future Directions.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Hacıefendioğlu, K.; Başağa, H.B. Concrete road crack detection using deep learning-based faster R-CNN method. Iran. J. Sci. Technol. Trans. Civ. Eng. 2022, 46, 1621–1633.

2. Maeda, H.; Sekimoto, Y.; Seto, T.; Kashiyama, T.; Omata, H. Road damage detection using deep neural networks with images captured through a smartphone. arXiv 2018, arXiv:1801.09454.

3. Wang, N.; Shang, L.; Song, X. A transformer-optimized deep learning network for road damage detection and tracking. Sensors 2023, 23, 7395.

4. Jepsen, T.S.; Jensen, C.S.; Nielsen, T.D. Graph convolutional networks for road networks. IEEE Trans. Intell. Transp. Syst. 2019, 23, 460–463.

5. Lebaku, P.K.R.; Gao, L.; Lu, P.; Sun, J. Deep learning for pavement condition evaluation using satellite imagery. Infrastructures 2024, 9, 155.

6. Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. Yolov4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

7. Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.Y.; et al. Segment anything. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 4015–4026.

8. Hamilton, W.L.; Ying, R.; Leskovec, J. Inductive Representation Learning on Large Graphs. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), Long Beach, CA, USA, 4–9 December 2017; pp. 1024–1034.

9. Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Liò, P.; Bengio, Y. Graph Attention Networks. In Proceedings of the International Conference on Learning Representations (ICLR), Vancouver, BC, Canada, 30 April–3 May 2018.

10. Gu, A.; Dao, T. Mamba: Linear-time sequence modeling with selective state spaces. arXiv 2023, arXiv:2312.00752.

11. Zubic, N.; Scaramuzza, D. Gg-ssms: Graph-generating state space models. In Proceedings of the Computer Vision and Pattern Recognition Conference, Nashville, TN, USA, 11–15 June 2025; pp. 28863–28873.

12. Liu, L.; Zhang, M.; Yin, J.; Liu, T.; Ji, W.; Piao, Y.; Lu, H. Defmamba: Deformable visual state space model. In Proceedings of the Computer Vision and Pattern Recognition Conference, Nashville, TN, USA, 11–15 June 2025; pp. 8838–8847.

13. Li, L.; Zhou, Z.; Wu, S.; Cao, Y. Multi-scale edge-guided learning for 3d reconstruction. ACM Trans. Multimed. Comput. Commun. Appl. 2023, 19, 109.

14. Koch, C.; Brilakis, I. Pothole detection in asphalt pavement images. Adv. Eng. Inform. 2015, 29, 966–975.

15. Fan, Z.; Wu, Y.; Lu, J.; Li, W. Automatic pavement crack detection based on structured prediction with the convolutional neural network. arXiv 2018, arXiv:1802.02208.

16. Chen, G.H.; Ni, J.; Chen, Z.; Huang, H.; Sun, Y.L.; Ip, W.H.; Yung, K.L. Detection of highway pavement damage based on a CNN using grayscale and HOG features. Sensors 2022, 22, 2455.

17. Safyari, Y.; Mahdianpari, M.; Shiri, H. A review of vision-based pothole detection methods using computer vision and machine learning. Sensors 2024, 24, 5652.

18. Zhang, J.; Xia, H.; Li, P.; Zhang, K.; Hong, W.; Guo, R. A Pavement Crack Detection Method via Deep Learning and a Binocular-Vision-Based Unmanned Aerial Vehicle. Appl. Sci. 2024, 14, 1778.

19. Li, K.; Xu, W.; Yang, L. Deformation characteristics of raising, widening of old roadway on soft soil foundation. Symmetry 2021, 13, 2117.

20. Fan, L.; Zou, J. A Novel Road Crack Detection Technology Based on Deep Dictionary Learning and Encoding Networks. Appl. Sci. 2023, 13, 12299.

21. Hamishebahar, Y.; Guan, H.; So, S.; Jo, J. A Comprehensive Review of Deep Learning-Based Crack Detection Approaches. Appl. Sci. 2022, 12, 1374.

22. Ahmed, K.R. Smart Pothole Detection Using Deep Learning Based on Dilated Convolution. Sensors 2021, 21, 8406.

23. Li, Y.; Chen, J.; Feng, X.; Wang, X.; Zhang, L. A Pavement Crack Detection and Evaluation Framework for a UAV-Based Inspection System. Appl. Sci. 2024, 14, 1157.

24. Liu, S.S.; Budiwirawan, A.; Arifin, M.F.A.; Chen, W.T.; Huang, Y.H. Optimization model for the pavement pothole repair problem considering consumable resources. Symmetry 2021, 13, 364.

25. Li, Z.; Ji, Y.; Wu, A.; Xu, H. MGD-YOLO: An Enhanced Road Defect Detection Algorithm Based on Multi-Scale Attention Feature Fusion. Comput. Mater. Contin. 2025, 84, 5613–5635.

26. Zou, Q.; Zhang, Z.; Li, Q.; Qi, X.; Wang, Q.; Wang, S. DeepCrack: Learning Hierarchical Convolutional Features for Crack Detection. IEEE Trans. Image Process. 2019, 28, 1498–1512.

27. Zhu, G.; Fan, Z.; Liu, J.; Yuan, D.; Ma, P.; Wang, M.; Sheng, W.; Wang, K.C. RHA-Net: An Encoder-Decoder Network with Residual Blocks and Hybrid Attention Mechanisms for Pavement Crack Segmentation. arXiv 2022, arXiv:2207.14166.

28. Li, H.; Song, D.; Liu, Y.; Li, B. Automatic pavement crack detection by multi-scale image fusion. IEEE Trans. Intell. Transp. Syst. 2018, 20, 2025–2036.

29. Shi, P.; Zhu, F.; Xin, Y.; Shao, S. U2CrackNet: A deeper architecture with two-level nested U-structure for pavement crack detection. Struct. Health Monit. 2023, 22, 2910–2921.

30. Liu, H.; Miao, X.; Mertz, C.; Xu, C.; Kong, H. Crackformer: Transformer network for fine-grained crack detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 3783–3792.

31. Wang, C.; Liu, H.; An, X.; Gong, Z.; Deng, F. SwinCrack: Pavement crack detection using convolutional swin-transformer network. Digit. Signal Process. 2024, 145, 104297.

32. Tang, Z.; Chamchong, R.; Pawara, P. A comparison of road damage detection based on YOLOv8. In Proceedings of the 2023 International Conference on Machine Learning and Cybernetics (ICMLC), Adelaide, Australia, 9–11 July 2023; pp. 223–228.

33. Park, J.; Min, K.; Kim, H.; Lee, W.; Cho, G.; Huh, K. Road surface classification using a deep ensemble network with sensor feature selection. Sensors 2018, 18, 4342.

34. Kailkhura, V.; Aravindh, S.; Jha, S.S.; Jayanthi, N. Ensemble learning-based approach for crack detection using CNN. In Proceedings of the 2020 4th International Conference on Trends in Electronics and Informatics (ICOEI) (48184), Tirunelveli, India, 15–17 June 2020; pp. 808–815.

35. Huang, R.; Pedoeem, J.; Chen, C. YOLO-LITE: A real-time object detection algorithm optimized for non-GPU computers. In Proceedings of the 2018 IEEE International Conference on Big Data (Big Data), Seattle, WA, USA, 10–13 December 2018; pp. 2503–2510.

36. Feng, W.; Guan, F.; Sun, C.; Xu, W. Road-SAM: Adapting the segment anything model to road extraction from large very-high-resolution optical remote sensing images. IEEE Geosci. Remote Sens. Lett. 2024, 21, 6012605.

37. Oquab, M.; Darcet, T.; Moutakanni, T.; Vo, H.; Szafraniec, M.; Khalidov, V.; Fernandez, P.; Haziza, D.; Massa, F.; El-Nouby, A.; et al. Dinov2: Learning robust visual features without supervision. arXiv 2023, arXiv:2304.07193.

38. Wang, B.; Lin, Y.; Guo, S.; Wan, H. GSNet: Learning spatial-temporal correlations from geographical and semantic aspects for traffic accident risk forecasting. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 2–9 February 2021; Volume 35, pp. 4402–4409.

39. Yuan, T.; Cao, W.; Zhang, S.; Yang, K.; Schoen, M.; Duraisamy, B. Lane detection and estimation from surround view camera sensing systems. In Proceedings of the 2023 IEEE Sensors, Vienna, Austria, 29 October–1 November 2023; pp. 1–4.

40. Brust, C.A.; Sickert, S.; Simon, M.; Rodner, E.; Denzler, J. Convolutional patch networks with spatial prior for road detection and urban scene understanding. arXiv 2015, arXiv:1502.06344.

41. Xiao, X.; Li, Z.; Wang, W.; Xie, J.; Lin, H.; Roy, S.K.; Wang, T.; Xu, M. TD-RD: A Top-Down Benchmark with Real-Time Framework for Road Damage Detection. arXiv 2025, arXiv:2501.14302.

42. Li, Z.; Xie, Y.; Xiao, X.; Tao, L.; Liu, J.; Wang, K. An Image Data Augmentation Algorithm Based on YOLOv5s-DA for Pavement Distress Detection. In Proceedings of the 2022 5th International Conference on Pattern Recognition and Artificial Intelligence (PRAI), Chengdu, China, 19–21 August 2022; pp. 891–895.

43. Weng, W.; Fan, J.; Wu, H.; Hu, Y.; Tian, H.; Zhu, F.; Wu, J. A decomposition dynamic graph convolutional recurrent network for traffic forecasting. Pattern Recognit. 2023, 142, 109670.

44. Xu, Y.; Han, L.; Zhu, T.; Sun, L.; Du, B.; Lv, W. Generic dynamic graph convolutional network for traffic flow forecasting. Inf. Fusion 2023, 100, 101946.

45. Kim, H.; Lee, B.S.; Shin, W.Y.; Lim, S. Graph anomaly detection with graph neural networks: Current status and challenges. IEEE Access 2022, 10, 111820–111829.

46. Pham, T.V.; Tran, N.N.Q.; Pham, H.M.; Nguyen, T.M.; Ta Minh, T. Efficient low-latency dynamic licensing for deep neural network deployment on edge devices. In Proceedings of the 3rd International Conference on Computational Intelligence and Intelligent Systems, Tokyo, Japan, 13–15 November 2020; pp. 44–49.

47. Gu, A.; Goel, K.; Ré, C. Efficiently modeling long sequences with structured state spaces. arXiv 2021, arXiv:2111.00396.

48. Zhu, L.; Liao, B.; Zhang, Q.; Wang, X.; Liu, W.; Wang, X. Vision Mamba: Efficient Visual Representation Learning with Bidirectional State Space Model. arXiv 2024, arXiv:2401.09417.

49. Li, K.; Li, X.; Wang, Y.; He, Y.; Wang, Y.; Wang, L.; Qiao, Y. VideoMamba: State Space Model for Efficient Video Understanding. In Proceedings of the Computer Vision – ECCV 2024: 18th European Conference, Milan, Italy, 29 September–4 October 2024.

50. Lee, S.; Choi, J.; Kim, H.J. EfficientViM: Efficient Vision Mamba with Hidden-State Mixer based State Space Duality. In Proceedings of the IEEE/CVF Computer Vision and Pattern Recognition Conference (CVPR), Nashville, TN, USA, 11–15 June 2025; pp. 14923–14933.

51. Guo, X.; Zhao, L. A systematic survey on deep generative models for graph generation. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 5370–5390.

52. Chelliah, P.R.; Rahmani, A.M.; Colby, R.; Nagasubramanian, G.; Ranganath, S. Model Optimization Methods for Efficient and Edge AI: Federated Learning Architectures, Frameworks and Applications; John Wiley & Sons: Hoboken, NJ, USA, 2024.

53. Miller, J.S.; Bellinger, W.Y. Distress Identification Manual for the Long-Term Pavement Performance Program; Federal Highway Administration: Washington, DC, USA, 2003.

54. ASTM-D6433; Standard Practice for Roads and Parking Lots Pavement Condition Index Surveys. ASTM: West Conshohocken, PA, USA, 2009.

55. Arya, D.; Maeda, H.; Ghosh, S.K.; Toshniwal, D.; Sekimoto, Y. RDD2022: A Multi-National Image Dataset for Automatic Road Damage Detection. arXiv 2022, arXiv:2209.08538.

56. Shi, Y.; Cui, L.; Qi, Z.; Meng, F.; Chen, Z. Automatic Road Crack Detection Using Random Structured Forests. IEEE Trans. Intell. Transp. Syst. 2016, 17, 3434–3445.

57. Xiao, X.; Zhang, Y.; Wang, J.; Zhao, L.; Wei, Y.; Li, H.; Li, Y.; Wang, X.; Roy, S.K.; Xu, H.; et al. RoadBench: A Vision-Language Foundation Model and Benchmark for Road Damage Understanding. arXiv 2025, arXiv:2507.17353.

58. Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

59. Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and Efficient Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 10781–10790.

| Dataset | #Images | Resolution | Annotation Type | Topology Info |
|---|---|---|---|---|
| RDD2022 [55] | 26,336 | 600 × 600 | Box (4-class) | No |
| TD-RD [41] | 11,250 | 1024 × 768 | Pixel-wise Mask (5-class) | No |
| CRACK500 [56] | 500 | 2000 × 1500 | Binary Mask | No |
| RoadBench-100K (Ours) [57] | 103,418 | 1280 × 720 | Box + Mask + Graph | Yes |

| Method | RDD2022 mAP | TD-RD mAP | RoadBench mAP | Params (M) | GFLOPs |
|---|---|---|---|---|---|
| CNN/Transformer-based methods | | | | | |
| YOLOv8-s | 50.5 | 47.9 | 45.1 | 11.2 | 95 |
| RT-DETR-R50 | 55.1 | 48.4 | 47.0 | 24.0 | 236 |
| Mask2Former-SwinB | 57.3 | 54.8 | 51.1 | 47.1 | 322 |
| Graph-based and state–space baselines | | | | | |
| SD-GCN (graph-based) | 58.4 | 57.6 | 52.3 | 19.7 | 143 |
| Mamba-Adaptor-Det (state–space) | 59.2 | 58.1 | 52.8 | 18.3 | 128 |
| EffViM-T1 (no graph) | 57.9 | 55.0 | 51.6 | 4.2 | 34 |
| GMRD (Ours) | 61.8 | 60.9 | 55.4 | 1.8 | 12 |

| Method | Edge Efficiency: FPS | Edge Efficiency: Latency (ms) | Edge Efficiency: Power (W) | Topology Metrics: G-mAP | Topology Metrics: Consistency |
|---|---|---|---|---|---|
| YOLOv8-s | 26 | 38 | 9.7 | 42.7 | 0.68 |
| Mask2Former-SwinB | n/a | n/a | n/a | 48.3 | 0.71 |
| SD-GCN | 18 | 55 | 10.5 | 50.6 | 0.74 |
| Mamba-Adaptor-Det | 22 | 45 | 9.9 | 51.4 | 0.73 |
| GMRD (ours) | 45 | 22 | 7.2 | 55.9 | 0.79 |

| Method | RDD-India | CNRDD | CRDDC’22 |
|---|---|---|---|
| YOLOv8 | 62.7 | 59.3 | 55.8 |
| RT-DETR | 64.1 | 60.0 | 56.4 |
| MobileSAM | 60.4 | 57.6 | 53.1 |
| Graph-RCNN | 65.8 | 61.3 | 57.9 |
| Deformable-DETR | 66.4 | 62.0 | 58.6 |
| GMRD (Ours) | 69.5 | 64.2 | 61.0 |

| Method Variant | RDD2022 mAP | RDD2022 G-mAP | RDD2022 Consist. | RDD2022 FPS | CNRDD mAP | CNRDD G-mAP | CNRDD Consist. | CNRDD FPS | CRDDC’22 mAP | CRDDC’22 G-mAP | CRDDC’22 Consist. | CRDDC’22 FPS |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Baseline (EffViM only) | 57.9 | 45.0 | 0.70 | 48 | 56.5 | 41.7 | 0.68 | 47 | 58.1 | 43.9 | 0.69 | 47 |
| – DefMamba | 60.1 | 54.0 | 0.77 | 48 | 58.6 | 50.3 | 0.74 | 47 | 60.5 | 51.6 | 0.75 | 47 |
| – GG-SSM | 59.4 | 51.3 | 0.74 | 46 | 58.2 | 48.7 | 0.72 | 46 | 60.0 | 49.8 | 0.72 | 46 |
| – EGTR Calibration | 60.3 | 53.6 | 0.75 | 45 | 59.0 | 49.5 | 0.74 | 45 | 61.2 | 51.0 | 0.74 | 45 |
| – Graph Consistency Loss | 60.6 | 54.2 | 0.73 | 45 | 59.3 | 50.1 | 0.72 | 45 | 61.6 | 52.5 | 0.71 | 45 |
| Full GMRD (Ours) | 61.8 | 55.9 | 0.79 | 45 | 60.5 | 52.9 | 0.77 | 45 | 62.4 | 54.1 | 0.78 | 45 |
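
One way to read the ablation table is to subtract each variant's score from the full model's score. The sketch below does this for the RDD2022 columns only, using the values reported in the table. It assumes that a row labeled "– X" is the full model with component X removed; that reading, the helper name `metric_drops`, and the dictionary layout are assumptions for illustration, not part of the original study.

```python
# RDD2022 columns copied from the ablation table (mAP, G-mAP, Consist.).
FULL = {"mAP": 61.8, "G-mAP": 55.9, "Consist.": 0.79}

# Assumption: each entry is the full model with the named component removed.
WITHOUT = {
    "DefMamba": {"mAP": 60.1, "G-mAP": 54.0, "Consist.": 0.77},
    "GG-SSM": {"mAP": 59.4, "G-mAP": 51.3, "Consist.": 0.74},
    "EGTR Calibration": {"mAP": 60.3, "G-mAP": 53.6, "Consist.": 0.75},
    "Graph Consistency Loss": {"mAP": 60.6, "G-mAP": 54.2, "Consist.": 0.73},
}


def metric_drops(full, variants):
    """Return, per removed component, how far each metric falls below the full model."""
    return {
        name: {metric: round(full[metric] - scores[metric], 2) for metric in full}
        for name, scores in variants.items()
    }


drops = metric_drops(FULL, WITHOUT)

# Component whose removal costs the most on each metric.
largest_map_drop = max(drops, key=lambda name: drops[name]["mAP"])
largest_consistency_drop = max(drops, key=lambda name: drops[name]["Consist."])

for name, delta in drops.items():
    print(f"{name}: {delta}")
print("largest mAP drop:", largest_map_drop)
print("largest consistency drop:", largest_consistency_drop)
```

Under that reading, removing GG-SSM costs the most mAP and G-mAP on RDD2022 (2.4 and 4.6 points), while removing the Graph Consistency Loss costs the most consistency (0.06).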

| Method | Params (M) | GFLOPs | FPS | Latency (ms) |
|---|---|---|---|---|
| MobileNet-SSD | 4.2 | 2.3 | 43.2 | 23.1 |
| EfficientDet-D0 | 3.9 | 2.5 | 40.6 | 25.4 |
| YOLOv5-Nano | 1.9 | 1.8 | 44.7 | 21.9 |
| EffViM-T1 (no graph) | 4.2 | 3.4 | 48.0 | 20.6 |
| GMRD (ours) | 1.8 | 1.5 | 45.2 | 21.2 |
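
The FPS and latency columns above are related but need not be exact reciprocals. As a small worked example, the sketch below converts each reported latency into the throughput it would imply if frames were processed strictly one at a time (1000 / latency in ms) and compares that with the reported FPS. The single-stream assumption and the helper name `implied_fps` are mine; the source does not say how either column was measured, so the gaps are only a sanity check, not a correction.

```python
# (reported FPS, reported latency in ms), copied from the runtime table.
REPORTED = {
    "MobileNet-SSD": (43.2, 23.1),
    "EfficientDet-D0": (40.6, 25.4),
    "YOLOv5-Nano": (44.7, 21.9),
    "EffViM-T1 (no graph)": (48.0, 20.6),
    "GMRD (ours)": (45.2, 21.2),
}


def implied_fps(latency_ms):
    """Frames per second if one frame finishes every `latency_ms` milliseconds."""
    if latency_ms <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / latency_ms


# Positive gap: reported FPS exceeds the single-stream estimate; negative: below it.
gaps = {
    name: round(fps - implied_fps(latency), 2)
    for name, (fps, latency) in REPORTED.items()
}

for name, gap in gaps.items():
    fps, latency = REPORTED[name]
    print(f"{name}: reported {fps} FPS, implied {implied_fps(latency):.1f} FPS, gap {gap:+.2f}")
```

All five gaps stay within about 2 FPS, so the two columns are broadly consistent with single-stream measurement.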

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Tian, Z.; Shao, X.; Bai, Y. Graph-MambaRoadDet: A Symmetry-Aware Dynamic Graph Framework for Road Damage Detection. Symmetry 2025, 17, 1654. https://doi.org/10.3390/sym17101654