A Unified Hardware Design for Multiplication, Division, and Square Roots Using Binary Logarithms

Abstract

1. Introduction

- We propose a unified and algebraically symmetrical hardware architecture capable of performing multiplication, division, and square root operations.

- We introduce a log-domain correction scheme that enhances the accuracy of these operations.

2. Related Work

3. Proposed Design

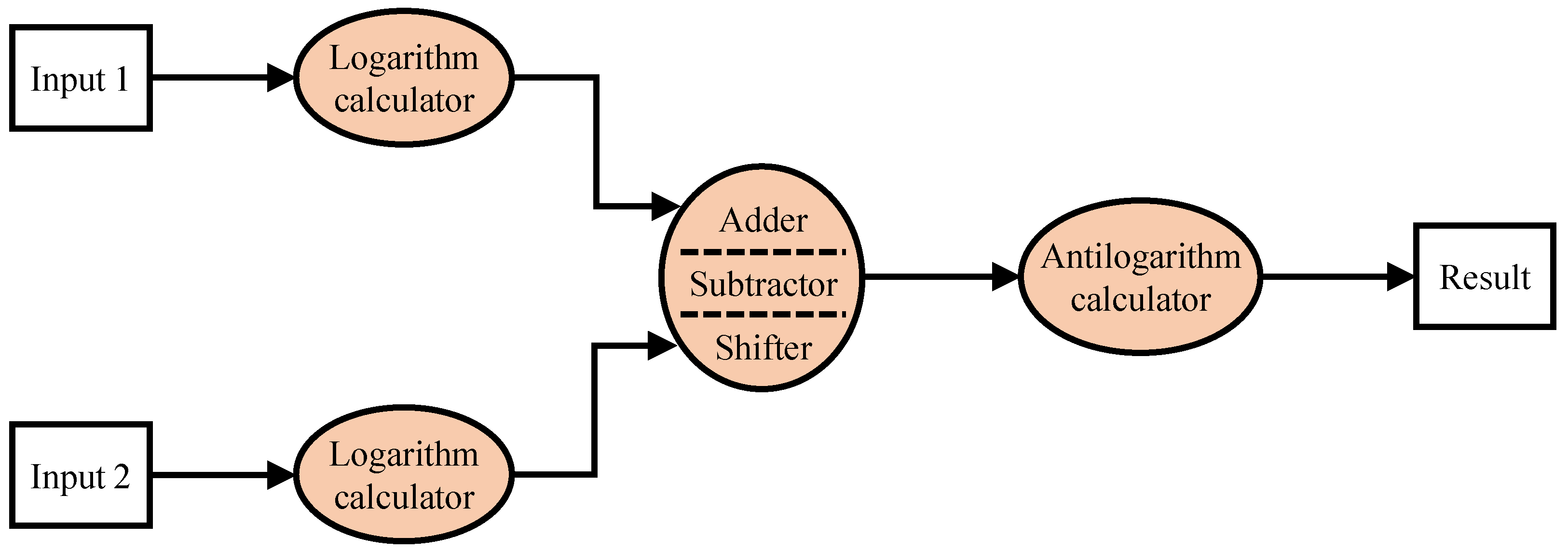

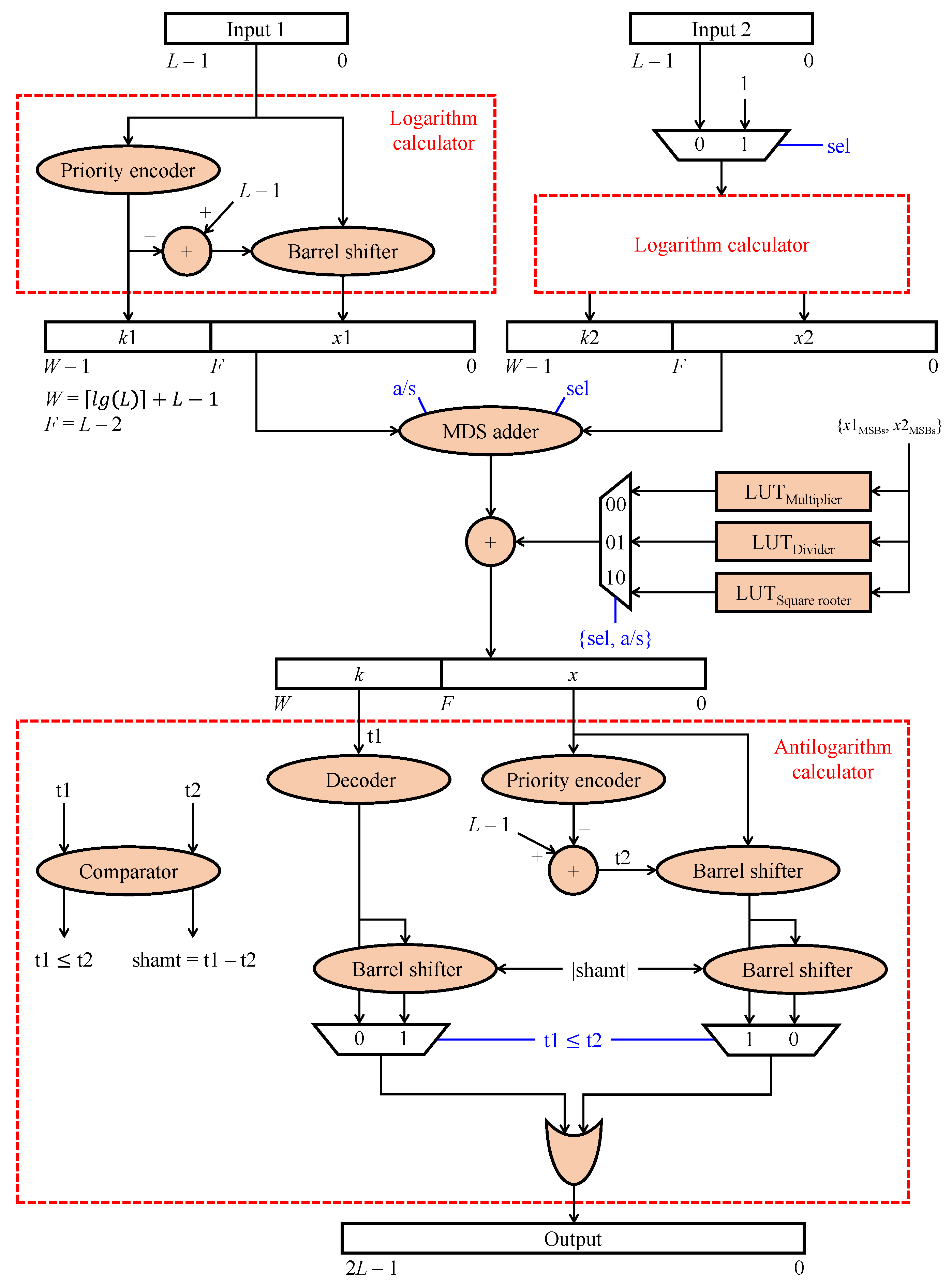

3.1. Mitchell’s Algorithm-Based Logarithm Multiplication, Division, and Square Root

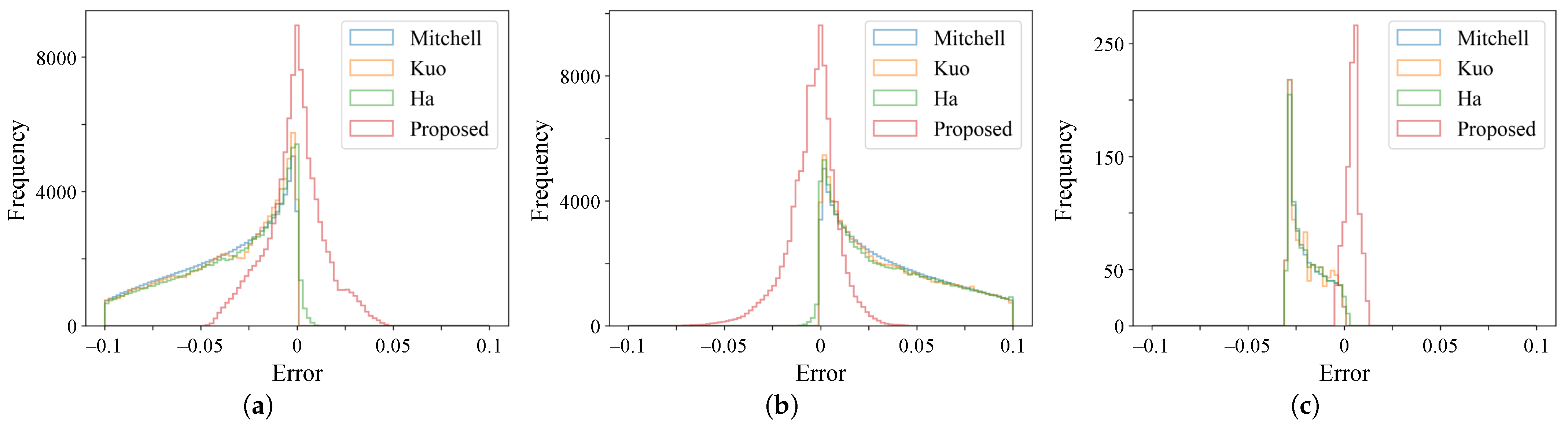
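As a point of orientation for this section, the following is a minimal floating-point sketch of Mitchell's piecewise-linear approximation [3], in which log2(2^k (1 + x)) is approximated by k + x for 0 <= x < 1. Multiplication, division, and square root then reduce to an addition, a subtraction, and a halving in the log domain. The function names are hypothetical, the sketch works on Python floats rather than the fixed-point words a hardware design would use, and it omits the log-domain correction scheme entirely.

```python
import math

def mitchell_log2(v: float) -> float:
    """Approximate log2(v) as k + x, where v = 2**k * (1 + x) and 0 <= x < 1."""
    if v <= 0:
        raise ValueError("input must be positive")
    m, e = math.frexp(v)        # v = m * 2**e with 0.5 <= m < 1
    k = e - 1                   # characteristic (position of the leading one)
    x = 2.0 * m - 1.0           # fraction in [0, 1)
    return k + x

def mitchell_exp2(l: float) -> float:
    """Approximate 2**l as 2**k * (1 + x), where k = floor(l) and x = l - k."""
    k = math.floor(l)
    x = l - k
    return 2.0 ** k * (1.0 + x)

def approx_mul(a: float, b: float) -> float:
    # Product: add the approximate logarithms.
    return mitchell_exp2(mitchell_log2(a) + mitchell_log2(b))

def approx_div(a: float, b: float) -> float:
    # Quotient: subtract the approximate logarithms.
    return mitchell_exp2(mitchell_log2(a) - mitchell_log2(b))

def approx_sqrt(a: float) -> float:
    # Square root: halve the approximate logarithm.
    return mitchell_exp2(mitchell_log2(a) / 2.0)

# Compare each approximation with the exact result.
for name, approx, exact in [
    ("5 * 6", approx_mul(5, 6), 5 * 6),
    ("6 / 4", approx_div(6, 4), 6 / 4),
    ("sqrt(2)", approx_sqrt(2), math.sqrt(2)),
]:
    print(f"{name}: approx={approx:.4f} exact={exact:.4f} "
          f"rel. error={(approx - exact) / exact:+.2%}")
```

Operands that are exact powers of two have x = 0 and come out exact in this sketch. The error grows when both fractions are near 0.5, which is the kind of error a correction term is meant to reduce.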

3.2. Error Analysis

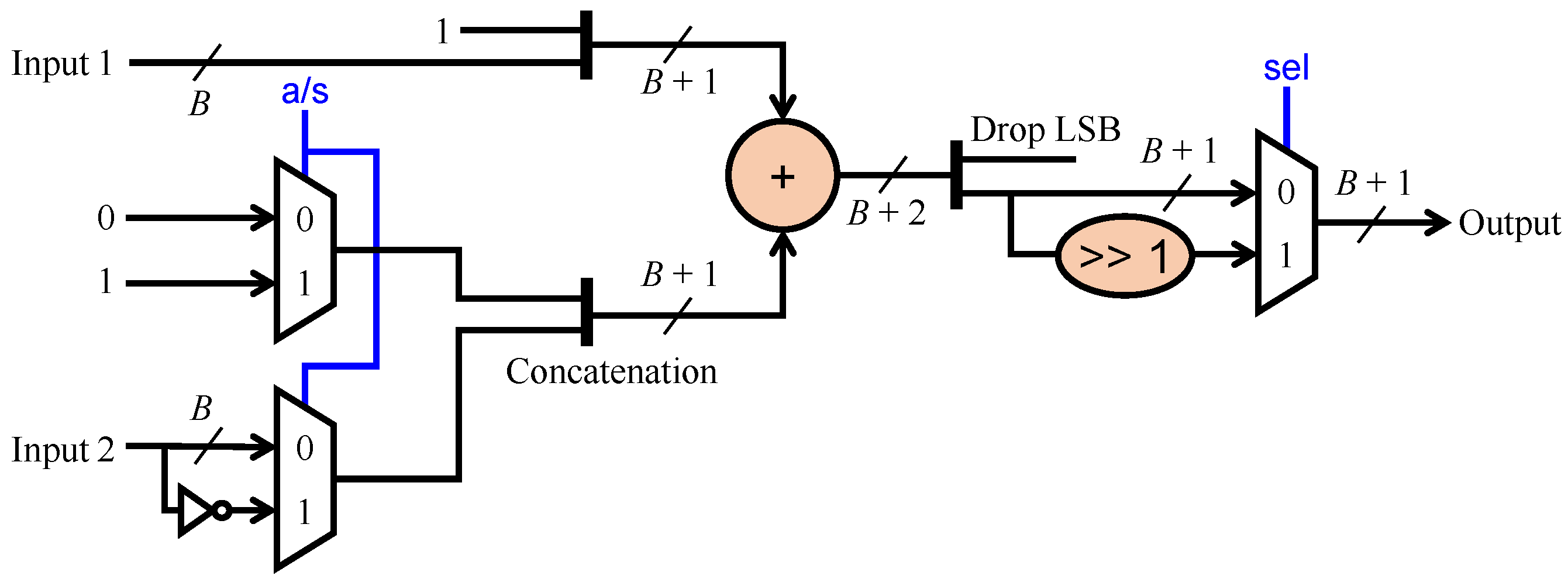

3.3. Unified Hardware Design

3.4. Implementation Results

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Arnold, M.G.; Collange, C. A Real/Complex Logarithmic Number System ALU. IEEE Trans. Comput. 2011, 60, 202–213.

- Chaudhary, M.; Lee, P. An Improved Two-Step Binary Logarithmic Converter for FPGAs. IEEE Trans. Circuits Syst. II Express Briefs 2015, 62, 476–480.

- Mitchell, J.N. Computer Multiplication and Division Using Binary Logarithms. IRE Trans. Electron. Comput. 1962, EC-11, 512–517.

- Kuo, C.; Juang, T. Design of fast logarithmic converters with high accuracy for digital camera application. Microsyst. Technol. 2018, 24, 9–17.

- Ha, M.; Lee, S. Accurate Hardware-Efficient Logarithm Circuit. IEEE Trans. Circuits Syst. II Express Briefs 2017, 64, 967–971.

- Kuo, C. Design and realization of high performance logarithmic converters using non-uniform multi-regions constant adder correction schemes. Microsyst. Technol. 2018, 24, 4237–4245.

- Jana, B.; Roy, A.S.; Saha, G.; Banerjee, S. A Low-Error, Memory-Based Fast Binary Logarithmic Converter. IEEE Trans. Circuits Syst. II Express Briefs 2020, 67, 2129–2133.

- Makimoto, R.; Imagawa, T.; Ochi, H. Approximate Logarithmic Multipliers Using Half Compensation with Two Line Segments. In Proceedings of the 2023 IEEE 36th International System-on-Chip Conference (SOCC), Santa Clara, CA, USA, 5–8 September 2023; pp. 1–6.

- Ahmed, S.; Srinivas, M. An Improved Logarithmic Multiplier for Media Processing. J. Signal Process. Syst. 2019, 91, 561–574.

- Wu, X.; Wei, Z.; Ko, S.B.; Zhang, H. Design of Energy Efficient Logarithmic Approximate Multiplier. In Proceedings of the 2023 5th International Conference on Circuits and Systems (ICCS), Huzhou, China, 27–30 October 2023; pp. 129–134.

- Joginipelly, A.; Charalampidis, D. An efficient circuit for error reduction in logarithmic multiplication for filtering applications. Int. J. Circuit Theory Appl. 2020, 48, 809–815.

- Subhasri, C.; Jammu, B.; Harsha, L.; Bodasingi, N.; Samoju, V. Hardware-efficient approximate logarithmic division with improved accuracy. Int. J. Circuit Theory Appl. 2020, 49, 128–141.

- Niu, Z.; Zhang, T.; Jiang, H.; Cockburn, B.F.; Liu, L.; Han, J. Hardware-Efficient Logarithmic Floating-Point Multipliers for Error-Tolerant Applications. IEEE Trans. Circuits Syst. I Regul. Pap. 2024, 71, 209–222.

- Kim, S.; Norris, C.J.; Oelund, J.I.; Rutenbar, R.A. Area-Efficient Iterative Logarithmic Approximate Multipliers for IEEE 754 and Posit Numbers. IEEE Trans. Very Large Scale Integr. (VLSI) Syst. 2024, 32, 455–467.

- Norris, C.J.; Kim, S. A Use Case of Iterative Logarithmic Floating-Point Multipliers: Accelerating Histogram Stretching on Programmable SoC. In Proceedings of the 2023 IEEE International Symposium on Circuits and Systems (ISCAS), Monterey, CA, USA, 21–25 May 2023; pp. 1–5.

- Vakili, S.; Vaziri, M.; Zarei, A.; Langlois, J.P. DyRecMul: Fast and Low-Cost Approximate Multiplier for FPGAs using Dynamic Reconfiguration. ACM Trans. Reconfigurable Technol. Syst. 2024.

- Towhidy, A.; Omidi, R.; Mohammadi, K. On the Design of Iterative Approximate Floating-Point Multipliers. IEEE Trans. Comput. 2023, 72, 1623–1635.

- Intel. Integer Arithmetic Intel FPGA IP Cores User Guide. Available online: https://www.intel.com/content/www/us/en/docs/programmable/683490/24-1/integer-arithmetic-cores.html (accessed on 26 August 2024).

- Xilinx. Divider Generator v5.1 Product Guide (PG151). Available online: https://docs.amd.com/v/u/en-US/pg151-div-gen (accessed on 26 August 2024).

- Xilinx. Vivado Design Suite Reference Guide: Model-Based DSP Design Using System Generator (UG958). Available online: https://docs.amd.com/r/en-US/ug958-vivado-sysgen-ref (accessed on 26 August 2024).

- McLaren, D. Improved Mitchell-based logarithmic multiplier for low-power DSP applications. In Proceedings of the 2003 IEEE International Systems-on-Chip SOC Conference, Portland, OR, USA, 17–20 September 2003; pp. 53–56.

- IEEE Std 1364-2005 (Revision of IEEE Std 1364-2001). IEEE Standard for Verilog Hardware Description Language. IEEE: Piscataway, NJ, USA, 2006.

- Xilinx. Vivado Design Suite User Guide: Release Notes, Installation, and Licensing (UG973). Available online: https://docs.amd.com/r/en-US/ug973-vivado-release-notes-install-license/Release-Notes (accessed on 21 April 2024).

- Xilinx. ZCU106 Evaluation Board: User Guide (UG1244). Available online: https://docs.xilinx.com/v/u/en-US/ug1244-zcu106-eval-bd (accessed on 25 July 2023).

- Ngo, D.; Kang, B. Taylor-Series-Based Reconfigurability of Gamma Correction in Hardware Designs. Electronics 2021, 10, 1959.

- Lee, S.; Ngo, D.; Kang, B. Design of an FPGA-Based High-Quality Real-Time Autonomous Dehazing System. Remote Sens. 2022, 14, 1852.

| ↓ | ↓ | ↓ | ↓ | ↓ | ↓ | ↓ | ↓ |
|---|---|---|---|---|---|---|---|
| 0.00475 | 0.01304 | 0.01979 | 0.02540 | 0.03013 | 0.03417 | 0.03767 | 0.02669 |
| 0.01304 | 0.03592 | 0.05472 | 0.07042 | 0.08375 | 0.09520 | 0.09112 | 0.03307 |
| 0.01979 | 0.05472 | 0.08361 | 0.10792 | 0.12865 | 0.13252 | 0.08136 | 0.02522 |
| 0.02540 | 0.07042 | 0.10792 | 0.13963 | 0.15278 | 0.10957 | 0.06033 | 0.01879 |
| 0.03013 | 0.08375 | 0.12865 | 0.15278 | 0.11886 | 0.07757 | 0.04292 | 0.01342 |
| 0.03417 | 0.09520 | 0.13252 | 0.10957 | 0.07757 | 0.05087 | 0.02826 | 0.00886 |
| 0.03767 | 0.09112 | 0.08136 | 0.06033 | 0.04292 | 0.02826 | 0.01575 | 0.00495 |
| 0.02669 | 0.03307 | 0.02522 | 0.01879 | 0.01342 | 0.00886 | 0.00495 | 0.00156 |

| ↓ | ↓ | ↓ | ↓ | ↓ | ↓ | ↓ | ↓ |
|---|---|---|---|---|---|---|---|
| 0.01426 | 0.07090 | 0.12186 | 0.15092 | 0.15671 | 0.13896 | 0.09853 | 0.03727 |
| 0.00779 | 0.01413 | 0.05310 | 0.08792 | 0.10306 | 0.09790 | 0.07265 | 0.02834 |
| 0.01567 | 0.02431 | 0.01409 | 0.03891 | 0.06109 | 0.06552 | 0.05206 | 0.02118 |
| 0.02221 | 0.04624 | 0.03814 | 0.01410 | 0.02734 | 0.03934 | 0.03528 | 0.01530 |
| 0.02774 | 0.06492 | 0.07246 | 0.04990 | 0.01413 | 0.01772 | 0.02135 | 0.01038 |
| 0.03248 | 0.08104 | 0.10236 | 0.09521 | 0.06001 | 0.01417 | 0.00959 | 0.00622 |
| 0.03657 | 0.09508 | 0.12864 | 0.13545 | 0.11513 | 0.06880 | 0.01422 | 0.00264 |
| 0.04015 | 0.10743 | 0.15193 | 0.17144 | 0.16492 | 0.13273 | 0.07652 | 0.01428 |

| x | ↓ | ↓ | ↓ | ↓ | ↓ | ↓ | ↓ | ↓ |
|---|---|---|---|---|---|---|---|---|
| | 0.01198 | 0.02983 | 0.03961 | 0.04280 | 0.04050 | 0.03356 | 0.02265 | 0.00829 |

| Operation | Mitchell [3] Mean | Mitchell [3] Std. | Ha and Lee [5] Mean | Ha and Lee [5] Std. | Kuo [6] Mean | Kuo [6] Std. | Proposed Method Mean | Proposed Method Std. |
|---|---|---|---|---|---|---|---|---|
| Multiplication | | | | | | | | |
| Division | | | | | | | | |
| Square root | | | | | | | | |

| Command | sel | a/s | Operation | Description |
|---|---|---|---|---|
| 00 | 0 | 0 | Multiplication | |
| 01 | 0 | 1 | Division | |
| 10 | 1 | x | Square root | |
| 11 | x | x | No operation | No description |
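The command encoding in the table above can be read as a small decoder in front of a shared log-domain datapath. The sketch below is one hedged interpretation, not the paper's circuit: it assumes that `a/s` selects between adding and subtracting the two logarithms (1 meaning subtract, since division carries `a/s` = 1), and that `sel` = 1 routes the first logarithm through a one-bit right shift to halve it for the square root. The names `decode` and `log_datapath` are hypothetical, and logarithms are modeled as non-negative fixed-point integers.

```python
from typing import Optional, Tuple

def decode(cmd: int) -> Optional[Tuple[int, Optional[int]]]:
    """Map a 2-bit command to (sel, a_s) as listed in the table.

    Returns None for command 11 (no operation). A don't-care ("x")
    entry in the table is represented by None.
    """
    table = {
        0b00: (0, 0),      # multiplication
        0b01: (0, 1),      # division
        0b10: (1, None),   # square root; a/s is a don't-care
    }
    return table.get(cmd & 0b11)

def log_datapath(cmd: int, log_a: int, log_b: int = 0) -> Optional[int]:
    """Combine fixed-point logarithms according to the decoded command.

    Assumed behavior (not confirmed by the source): sel = 1 halves log_a,
    and a/s chooses between log_a + log_b and log_a - log_b.
    """
    ctrl = decode(cmd)
    if ctrl is None:
        return None                 # no operation
    sel, a_s = ctrl
    if sel == 1:
        return log_a >> 1           # square root: halve the logarithm
    if a_s == 1:
        return log_a - log_b        # division: subtract logarithms
    return log_a + log_b            # multiplication: add logarithms

# Example with logarithms scaled by 8 (three fractional bits): 5.0 -> 40, 3.0 -> 24.
print(log_datapath(0b00, 40, 24))   # sum of the two logarithms
print(log_datapath(0b01, 40, 24))   # difference of the two logarithms
print(log_datapath(0b10, 40))       # half of the first logarithm
print(log_datapath(0b11, 40, 24))   # no operation
```

Under these assumptions the three operations share a single adder/subtractor and a shifter, which is what makes a unified design attractive.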

| Bit width | Design | Registers (#) ↓ | LUTs (#) ↓ | Latency (# clock cycles) ↓ | Power consumption (W) ↓ | Maximum frequency (MHz) ↑ |
|---|---|---|---|---|---|---|
| 4-bit | Multiplier [25] | 16 | 20 | 2 | 0.619 | 1272.265 |
| 4-bit | Divider [26] | 97 | 61 | 9 | 0.634 | 1053.741 |
| 4-bit | AMD Xilinx’s divider [19] | NA | NA | NA | NA | NA |
| 4-bit | Square rooter [26] | 76 | 75 | 9 | 0.644 | 964.320 |
| 4-bit | Proposed design | 71 | 78 | 6 | 0.642 | 1005.025 |
| 8-bit | Multiplier [25] | 32 | 80 | 2 | 0.659 | 603.136 |
| 8-bit | Divider [26] | 343 | 308 | 17 | 0.704 | 834.028 |
| 8-bit | AMD Xilinx’s divider [19] | 262 | 124 | 17 | NA | 636.000 |
| 8-bit | Square rooter [26] | 317 | 341 | 17 | 0.732 | 865.052 |
| 8-bit | Proposed design | 127 | 198 | 6 | 0.675 | 651.042 |
| 16-bit | Multiplier [25] | 64 | 323 | 2 | 0.793 | 373.413 |
| 16-bit | Divider [26] | 1210 | 1131 | 33 | 0.999 | 776.398 |
| 16-bit | AMD Xilinx’s divider [19] | NA | NA | NA | NA | NA |
| 16-bit | Square rooter [26] | 1273 | 1396 | 33 | 1.215 | 786.782 |
| 16-bit | Proposed design | 234 | 473 | 6 | 0.838 | 591.716 |
| 32-bit | Multiplier [25] | 175 | 1303 | 2 | 0.915 | 250.000 |
| 32-bit | Divider [26] | 4444 | 4359 | 65 | 1.399 | 644.330 |
| 32-bit | AMD Xilinx’s divider [19] | 3334 | 1279 | 65 | NA | 604.000 |
| 32-bit | Square rooter [26] | 5152 | 5935 | 65 | 1.971 | 604.230 |
| 32-bit | Proposed design | 431 | 1121 | 6 | 0.993 | 506.842 |
| 64-bit | Multiplier [25] | 440 | 5225 | 2 | 1.175 | 188.679 |
| 64-bit | Divider [26] | 17,038 | 17,099 | 129 | 2.998 | 533.903 |
| 64-bit | AMD Xilinx’s divider [19] | NA | NA | NA | NA | NA |
| 64-bit | Square rooter [26] | 29,429 | 24,542 | 129 | 4.094 | 385.208 |
| 64-bit | Proposed design | 883 | 2394 | 6 | 1.415 | 500.000 |
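One way to read the implementation table is to compare the proposed design against the combined cost of a stand-alone multiplier [25], divider [26], and square rooter [26] at the same bit width. The sketch below does that arithmetic on the register and LUT counts copied from the table. Summing the three stand-alone units is an assumption made here for illustration; it ignores any sharing a separate implementation might achieve, and it does not account for the accuracy difference between those units and an approximate logarithmic design.

```python
# (registers, LUTs) per design and bit width, copied from the table above.
RESOURCES = {
    4:  {"multiplier": (16, 20),    "divider": (97, 61),
         "square_rooter": (76, 75),       "proposed": (71, 78)},
    8:  {"multiplier": (32, 80),    "divider": (343, 308),
         "square_rooter": (317, 341),     "proposed": (127, 198)},
    16: {"multiplier": (64, 323),   "divider": (1210, 1131),
         "square_rooter": (1273, 1396),   "proposed": (234, 473)},
    32: {"multiplier": (175, 1303), "divider": (4444, 4359),
         "square_rooter": (5152, 5935),   "proposed": (431, 1121)},
    64: {"multiplier": (440, 5225), "divider": (17038, 17099),
         "square_rooter": (29429, 24542), "proposed": (883, 2394)},
}

STANDALONE_UNITS = ("multiplier", "divider", "square_rooter")

def separate_total(width: int) -> tuple:
    """Sum the registers and LUTs of the three stand-alone units at one bit width."""
    row = RESOURCES[width]
    registers = sum(row[unit][0] for unit in STANDALONE_UNITS)
    luts = sum(row[unit][1] for unit in STANDALONE_UNITS)
    return registers, luts

def savings(width: int) -> tuple:
    """Fraction of registers and LUTs saved by the proposed design versus that sum."""
    registers, luts = separate_total(width)
    proposed_registers, proposed_luts = RESOURCES[width]["proposed"]
    return 1 - proposed_registers / registers, 1 - proposed_luts / luts

for width in sorted(RESOURCES):
    registers, luts = separate_total(width)
    reg_saving, lut_saving = savings(width)
    print(f"{width:>2}-bit: separate = {registers} regs / {luts} LUTs, "
          f"savings = {reg_saving:.1%} regs / {lut_saving:.1%} LUTs")
```

The table also shows the proposed design holding a latency of 6 clock cycles at every bit width, while the divider and square rooter from [26] grow from 9 to 129 cycles.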

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ngo, D.; Han, S.; Kang, B. A Unified Hardware Design for Multiplication, Division, and Square Roots Using Binary Logarithms. Symmetry 2024, 16, 1138. https://doi.org/10.3390/sym16091138
