Abstract

This paper deals with the problem of financial risk management using a new expected shortfall regression. The latter is based on the expectile model for the financial risk threshold. Unlike the VaR model, the expectile threshold is constructed from an asymmetric least squares loss function. We construct an estimator of this new model using the k-nearest neighbors (kNN) smoothing approach. The mathematical properties of the constructed estimator are stated through the establishment of the pointwise complete convergence. Additionally, we prove that the constructed estimator is uniformly consistent over the number of nearest neighbors (UCNN). Such asymptotic results provide sound mathematical support for the proposed financial risk process. We then examine the ease of implementation of this process on artificial and real data. Our empirical analysis confirms the superiority of the kNN approach over the kernel method, as well as the superiority of the expectile over the quantile in financial risk analysis.

1. Introduction

Defining an accurate financial risk metric is a challenging issue for financial institutions. The value at risk (VaR) is the standard risk metric for financial risk management. The VaR model was approved by the Basel committee in 1996 and 2006. However, financial operators have recognized the limitations and weaknesses of the VaR model through the successive financial crises of the last decade. The primary weakness of the VaR model in financial risk management is its insensitivity to extreme values. Consequently, in 2014 the Basel committee proposed enhancing financial risk surveillance with the expected shortfall (ES) function. This function measures the expected loss given that a specific threshold is exceeded. Generally, the threshold is defined through the VaR level. The novelty of this paper is to define the ES function using an alternative risk threshold, namely the expectile regression.

The shortfall risk model was investigated by [1]. Motivated by its coherency, the ES function has been widely developed in the last decade. A comparison study between the VaR and ES models was carried out by [1]. They stated that the VaR is inaccurate when the profit or the loss is not Gaussian. In this context, the ES model is a more accurate financial risk metric than the VaR function. From a statistical point of view, the ES model can be handled in different ways, such as parametric, semi-parametric, or distribution-free approaches. For an overview of the parametric approach, we refer to [2,3,4]. The present paper considers the nonparametric strategy. At this stage, we point out that the first study in nonparametric modeling was introduced by [5], who estimated the ES model by the kernel method. The same estimator was considered by [6], who stated its asymptotic normality. Alternatively, another estimator using the Bahadur representation was constructed by [7]. The literature concerning the nonparametric estimation of the ES is limited when the data are functional. To the best of our knowledge, only two works have treated the functional ES model using the nonparametric regression structure. The first results were developed by [8] when the financial time series is modeled under the strong mixing assumption. The authors of [9] used a weak correlation assumption to model the financial time series. They proved the complete consistency of the functional kernel estimator of the ES function under quasi-associated auto-correlation.

The second component of our contribution concerns the expectile model. It was introduced by [10]. It can be considered an alternative to the VaR function that corrects the main drawback of the quantile, namely its insensitivity to outliers. The expectile metric, in contrast, is very sensitive to outliers. In financial risk analysis, the expectile has been developed by [11,12,13,14]. It should be noted that the expectile function has been used for other statistical problems, including outlier analysis (see [15]) and heteroscedasticity detection (see [16,17]). The expectile regression for vectorial statistics was studied by [18]. The authors of this last paper developed a semi-parametric estimation of the expectile. Concerning the functional expectile model, we point out that the first result was stated by [19]. They established the asymptotic convergence rate of the kernel estimator of the functional expectile regression. We refer to [20] for the parametric version of the functional expectile regression. They established the asymptotic convergence rate of an estimator constructed from the reproducing kernel Hilbert space structure. For more recent advances and results in functional regression data analysis, we may refer to [21,22,23,24,25].
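The contrast between the quantile and the expectile can be illustrated numerically. The following is a minimal sketch, not taken from the paper: the function name `expectile`, the toy loss sample, and the fixed-point iteration used to minimize the asymmetric squared loss are all illustrative assumptions. It magnifies the single largest loss and compares how the 0.9-quantile and the 0.9-expectile react.

```python
import numpy as np

def expectile(y, p, n_iter=200, tol=1e-12):
    """Sample p-expectile: minimizer of sum_i |p - 1{y_i <= t}| * (y_i - t)^2.

    Hypothetical helper; the minimizer is found by iterating the
    first-order condition t = sum(a_i * y_i) / sum(a_i).
    """
    y = np.asarray(y, dtype=float)
    t = y.mean()  # the 0.5-expectile is the mean, a natural starting point
    for _ in range(n_iter):
        a = np.where(y > t, p, 1.0 - p)      # asymmetric weights
        t_new = np.sum(a * y) / np.sum(a)    # weighted-mean update
        if abs(t_new - t) < tol:
            break
        t = t_new
    return t_new

# Toy losses 1, 2, ..., 100, then the same sample with the worst loss magnified.
losses = np.arange(1.0, 101.0)
stressed = losses.copy()
stressed[-1] = 1000.0

q_before, q_after = np.quantile(losses, 0.9), np.quantile(stressed, 0.9)
e_before, e_after = expectile(losses, 0.9), expectile(stressed, 0.9)
print("quantile :", q_before, "->", q_after)
print("expectile:", e_before, "->", e_after)
```

In this toy example the quantile depends only on the ranks around the 90% level, so it does not react to the size of the worst loss, whereas the expectile moves upward.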

The third component of this paper is the kNN smoothing approach. It is an attractive approach in many areas of applied statistics, such as classification, clustering, and prediction. The kNN estimation approach was popularized by the contribution of [26], which can be considered a pioneering work in nonparametric estimation by the kNN method. Driven by its diverse applications, the kNN estimation algorithm was introduced in functional data analysis by [27]. They proved the almost complete pointwise convergence of the kNN estimator of the functional regression. This result was stated under the independence condition. We refer to [28] for the uniform convergence of the kNN estimation of the functional regression. They established the convergence rate using the entropy property. For more recent advances and references on the functional kNN method, we may cite [21,29,30].

In this paper, we aim to estimate the expectile shortfall regression using the kNN smoothing approach. The principal motivations for the use of this estimation methodology are as follows: (1) financial data are usually not Gaussian, and the parametric approach fails to fit their random movement; (2) the functional approach exploits the high frequency of financial data by treating them as continuous curves; (3) the kNN approach exploits the functional structure of the data by considering a varying local bandwidth adapted to the functional curves, a feature that allows one to update the estimator and identify the financial risk systematically; (4) the last motivation is the possibility of remedying the insensitivity to outliers by using the expectile instead of the VaR. The mathematical support of this contribution is provided by establishing the almost complete convergence of the constructed estimator. Additionally, we provide the convergence rate of the UCNN consistency of the constructed estimator. It should be noted that this last result is of great importance in practice. In particular, it can be used to resolve practical questions such as the choice of the best number of neighbors. We therefore build on our theoretical development to examine the applicability as well as the efficiency of the kNN estimator of the expectile shortfall regression. More precisely, we examine the implementation of the estimator using artificial and real financial data.

This paper is structured as follows: In Section 2, we present the risk metric function and its kNN estimator. In Section 3, we state the pointwise convergence of the constructed estimator. The UCNN consistency is stated in Section 4. Section 5 is dedicated to the computability of the estimator over simulated and real-data applications. Section 6 gives conclusions and prospects. Finally, the proofs of the auxiliary results are given in Section 7.

2. KNN Estimator of Expectile Shortfall Regression

Let be n independent random pairs in which are independent and have the same distribution as . The functional space is a semi-metric space with a semi-metric d. For our expected shortfall regression analysis, we assume that A is the functional explanatory variable and B is the real response variable. Often, the conventional ES-regression is defined for ,

where is the conditional value at risk. In this paper, instead of , we explicate the ES-regression using the conditional expectile of B given . The latter is denoted by and is defined by

where is the expectile regression of B given defined by

where is the indicator function of the set . Of course, this replacement of by enables one to overcome the lack of risk insensitivity of the quantile to the extreme values. This characteristic is very important in practice because the catastrophic losses are characterized in the extremities. The second feature of our contribution is the use of the kNN estimation approach. This latter feature is based on the determination of the smoothing parameter as

where is a ball of the center a with a radius defined as follows

So, the kNN estimator of EXES-regression is

where is a known measurable function and is the kNN estimator of . The latter is defined as the solution of

with

with
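The construction of this section can be sketched in code. This is a minimal illustration under stated assumptions rather than the authors' implementation: the function names are hypothetical, the kernel is taken to be quadratic on [0, 1), the kNN bandwidth is taken as the distance to the (k+1)-th closest curve so that exactly k neighbors receive positive weight, the expectile is computed from the first-order condition of the asymmetric least squares loss, and the ES-expectile is taken as the kernel-weighted mean of the responses exceeding the estimated expectile.

```python
import numpy as np

def knn_kernel_weights(dists, k):
    """Kernel weights with a kNN bandwidth (assumed quadratic kernel).

    dists: distances d(a, A_i) from the target curve a to each sample curve.
    The bandwidth is the (k+1)-th smallest distance (requires k < n).
    """
    dists = np.asarray(dists, dtype=float)
    h = np.sort(dists)[k]
    u = dists / h
    return np.where(u < 1.0, 1.0 - u**2, 0.0)

def weighted_expectile(y, w, p, n_iter=200, tol=1e-12):
    """Weighted p-expectile via iteration of the first-order condition."""
    y = np.asarray(y, dtype=float)
    t = np.average(y, weights=w)
    for _ in range(n_iter):
        a = w * np.where(y > t, p, 1.0 - p)
        t_new = np.sum(a * y) / np.sum(a)
        if abs(t_new - t) < tol:
            break
        t = t_new
    return t_new

def knn_es_expectile(dists, y, p, k):
    """Return (expectile threshold, expected shortfall beyond that threshold)."""
    y = np.asarray(y, dtype=float)
    w = knn_kernel_weights(dists, k)
    xi = weighted_expectile(y, w, p)
    tail = w * (y > xi)          # weights of the responses above the threshold
    if tail.sum() == 0.0:        # degenerate neighborhood: no exceedance
        return xi, xi
    return xi, np.sum(tail * y) / tail.sum()

# Toy usage: five close curves and one distant curve with an extreme response.
dists = np.array([0.1, 0.2, 0.3, 0.4, 0.5, 9.0])
y = np.array([0.0, 1.0, 2.0, 3.0, 4.0, 100.0])
xi, es = knn_es_expectile(dists, y, p=0.9, k=4)
print(xi, es)
```

Because the bandwidth adapts to the k closest curves, the distant curve receives zero weight here, whatever the scale of the semi-metric.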

3. Pointwise Convergence

Before establishing the asymptotic properties of the estimator , we consider some notation and assumptions. For the notation, we set by or some strictly positive generic constants, is a given neighborhood of a. Furthermore, for all , we define . Now, to formulate our main result, we will use the hypotheses listed below:

- (P1)

- where .

- (P2)

- There exists an invertible non-negative function , a bounded and positive function , and a function such that

- (i)

- tends to zero as goes to zero and, , as , for certain

- (ii)

- For all ,

- (P3)

- , ,

- (P4)

- For all ,

- (P5)

- The kernel function is supported on such that

- (P6)

- The number of neighbors k is such that

Comments on the hypotheses.

All the considered assumptions are classical in functional data analysis, in particular for the kNN smoothing approach. They are used in similar studies (see, for instance, [30]). The assumptions (P1) and (P2) relate the functional variable to the probability structure. As discussed in the last paragraph of the introduction, the nonparametric path is motivated by the fact that the distribution of the financial movement is unknown in practice. Assumption (P4) concerns the conditional moment integrability of the variable of interest B. Such a condition is usually used in regression analysis. Observe that the upper bound in (P4) is not uniform, but strongly depends on the order of the moment m and the location point a. This assumption is used to apply the Bernstein inequality where the constant should be smaller than , (, (resp. ) being a constant independent of m). The assumption on the kernel function is given in condition (P5). Such a technical assumption is used to make the convergence rate of the estimator precise.

Now, we obtain the following result

Theorem 1.

From the suppositions (P1)–(P6), we have

Proof of Theorem 1.

For , we define

Then,

So

Therefore,

It suffices to prove the following lemmas. □

Lemma 1.

From the suppositions of Theorem 1, we have

and

Lemma 2.

From the suppositions of Theorem 1, we have

The proof of both required results is based on the kNN smoothing technique summarized in the following lemma.

Lemma 3

(see [28]). Let be a sequence of independent random variables identically distributed as , which are valued in . Let

where is a measurable function in . Consider as a sequence of real random variables and a decreasing positive sequence (with ), and be a non-random function. If, for all increasing sequence with limit 1 (), there exist two sequences of real random variables and such that

- C1.

- and

- C2.

- C3.

- and

Then, we have

4. UCNN Convergence

We aim to establish the almost complete consistency of uniformly in the number of neighbors . To do that, we denote by C and some strictly positive generic constants. In order to state the theorem, we will need the following assumptions.

- U1

- The class of functions is a pointwise measurable class, such that:

- where the maximum is taken over all probability measures on the space with , with G being the envelope function of the set . is the number of open balls with a radius , which is necessary to cover the class of functions . The balls are constructed using the -metric.

- U2

- The kernel is supported within and has a continuous first derivative, such that:

- where is the indicator function of set A.

- U3

- The sequences and verify:

Then, the following theorem gives the UCNN consistency of .

Theorem 2.

Under assumptions (P1)–(P4) and (U1)–(U3), we have

Proof of Theorem 2.

Similarly to Theorem 1, the claimed result is the consequence of

Lemma 4.

From the suppositions of Theorem 2, we have

+

and

Lemma 5

([11]). From the suppositions of Theorem 2, we have

□

5. Empirical Analysis

In this section, we discuss the practical use of the risk metric studied in the present work. This section is divided into three parts. In the first part, we propose an approach to choose the best number of neighbors. The selection of this number is of primary importance for the practical use of this financial model. The second part is devoted to evaluating the behavior of the estimator on artificial data. In the last part, we examine the constructed model over real financial data from the Dow–Jones stock market.

5.1. Smoothing Parameter Selection: Cross-Validation

Generally, the optimal number k is obtained by optimizing some criterion as

where L is a given loss function which is fixed according to the employed selector algorithm. In particular, for the expectile regression, many selectors exist; different cross-validation rules have been used (see [19]). For instance, we can use

or more generally

where is the scoring function defining . In practice, these selectors provide an efficient estimator. In this context, the UCNN convergence allows one to ensure the consistency of which is associated with . Therefore, we deduce the following corollary.

Corollary 1.

If and if the conditions of Theorem 2 hold, then we have
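A leave-one-out version of such a selector can be sketched as follows. This is a hedged illustration, not the authors' code: the function names, the quadratic kernel, the L2 distance between discretized curves, the toy data, and the grid of candidate values of k are assumptions, and the scoring function is taken to be the asymmetric squared loss that defines the expectile.

```python
import numpy as np

def asym_sq_loss(r, p):
    """Asymmetric squared loss |p - 1{r <= 0}| * r^2 (expectile scoring function)."""
    return np.where(r > 0, p, 1.0 - p) * r**2

def weighted_expectile(y, w, p, n_iter=200, tol=1e-12):
    """Weighted p-expectile via iteration of the first-order condition."""
    t = np.average(y, weights=w)
    for _ in range(n_iter):
        a = w * np.where(y > t, p, 1.0 - p)
        t_new = np.sum(a * y) / np.sum(a)
        if abs(t_new - t) < tol:
            break
        t = t_new
    return t_new

def loo_cv_select_k(D, y, p, k_grid):
    """Pick k minimizing the leave-one-out asymmetric squared prediction loss.

    D: (n, n) matrix of pairwise distances between curves; y: responses.
    Every k in k_grid must satisfy k < n - 1.
    """
    n = len(y)
    scores = {}
    for k in k_grid:
        total = 0.0
        for i in range(n):
            mask = np.arange(n) != i               # leave observation i out
            d = D[i, mask]
            h = np.sort(d)[k]                      # kNN bandwidth at curve i
            u = d / h
            w = np.where(u < 1.0, 1.0 - u**2, 0.0)  # quadratic kernel weights
            t = weighted_expectile(y[mask], w, p)
            total += float(asym_sq_loss(y[i] - t, p))
        scores[k] = total / n
    best_k = min(scores, key=scores.get)
    return best_k, scores

# Toy data (assumed): 30 discretized curves and a noisy scalar response.
rng = np.random.default_rng(0)
X = rng.normal(size=(30, 20))
y = X.mean(axis=1) + 0.1 * rng.normal(size=30)
D = np.sqrt(((X[:, None, :] - X[None, :, :]) ** 2).mean(axis=2))

k_grid = [3, 5, 10, 15]
best_k, scores = loo_cv_select_k(D, y, p=0.9, k_grid=k_grid)
print(best_k, scores)
```

The double loop costs on the order of n² kernel evaluations per candidate k, which is acceptable for samples of the size used in this paper; for larger samples the sorted distances could be cached per observation.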

5.2. Simulated Data

The first part is devoted to the examination of the performance of the ES-expectile function using artificial observations. We compute this estimator for independent functional data. More precisely, we compare the proposed model to the same estimator obtained by the standard smoothing parameter. Additionally, we compare our estimator to the standard expected shortfall based on the percentile regression. For this empirical study, we generate the functional variable defined, for any , by:

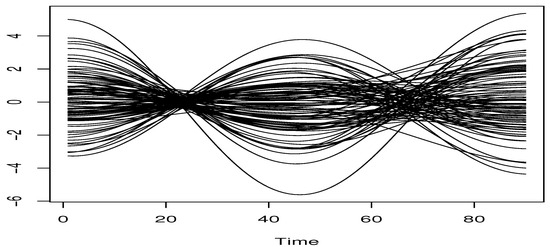

where and are two real random variables. In order to cover more general cases, we consider two examples of . In the first example, we assume that and . In the second example, we generate from and from . In both cases, the obtained functional variables are relatively smooth, allowing one to choose the spline -metric (see [31]). A sample of the first covariate curves is plotted in Figure 1.

Figure 1.

Some explanatory curves of the sample .

We assume that the functional regressor represents a continuous trajectory of a financial asset. The interest variable B represents a future characteristic of this trajectory. More precisely, for all i, we assume that . Recall that the principal aim of this computational part is to conduct a comparison study between the kNN ES-expectile regression and the standard expected shortfall based on the regression associated with the percentile regression

where F is the conditional cumulative function of B given A. The latter is estimated using the kNN estimator of the function F as

Thereafter, we estimate the function by

Recall that, in this case, the expected shortfall regression is expressed by

So, we aim to compare the three estimators (see Equation (2)), (see Equation (8)) and (obtained by replacing in Equation (2) by a standard bandwidth ). All these estimators are calculated using the -kernel and the spline metric, and the smoothing parameter (h or k) is selected by the cross-validation rule (6). In the kNN estimation, we select the best k by

and for the standard bandwidth, we select h

where is the set of positive reals such that the ball centered at a with radius contains exactly k neighbors of a. We compare this selection procedure with an arbitrary choice. Specifically, we compute the three optimal estimators , and , and the arbitrary ones , and . The efficiency of the estimation approaches is examined using the backtesting measure defined by

Such an error is evaluated for various values of , and . The obtained results are given in the following tables (see Table 1 and Table 2).

Table 1.

Comparison of Mse-error.

Table 2.

Comparison of Msp-error.

Clearly, the behavior of the three estimators is strongly impacted by the choice of the smoothing parameter. However, we observe that the kNN approach is more appropriate than the standard case. Moreover, the expected shortfall based on the expectile is more accurate than the expected shortfall based on the VaR threshold. Not surprisingly, the behavior of the estimators is also affected by the definition of the regressors with respect to the distribution of (normal or lognormal). In particular, the estimators and are more sensitive to this aspect than the estimator . The variability of the Mse and Msp is greater for and than for . This sensitivity confirms the importance of the expectile regression as a financial risk model.

5.3. Real Data Application

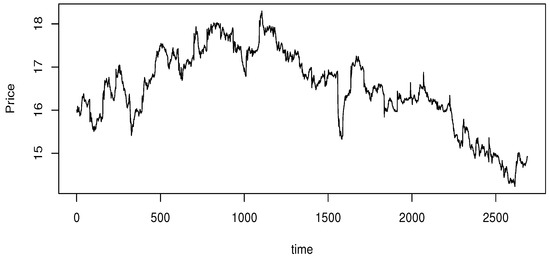

This last paragraph is devoted to the applicability of our model to real data. More precisely, we examine the efficiency of the ES-expectile model over financial data associated with real-time stock prices of the Liberty Energy company, one of the major energy industry service providers across North America. Using these data, we compare our financial metric to its competitors. In this financial data analysis, we study the high price of this company during October 2024, observed at five-minute intervals. The parent data contain more than 2700 values. The process of is displayed in Figure 2. It is available at https://stooq.com/db/l (accessed on 24 April 2024).

Figure 2.

The high price .

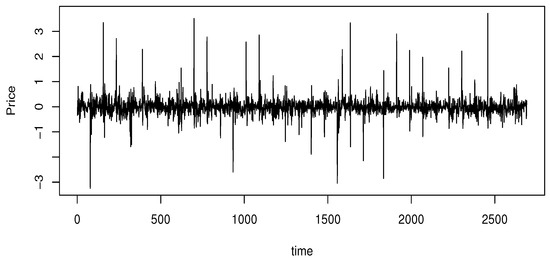

To ensure stability, we proceeded by differencing the series. We constructed the functional data from the process . The transformed data are given in Figure 3.

Figure 3.

The process R(t).

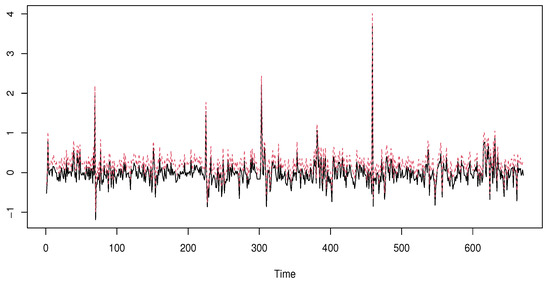

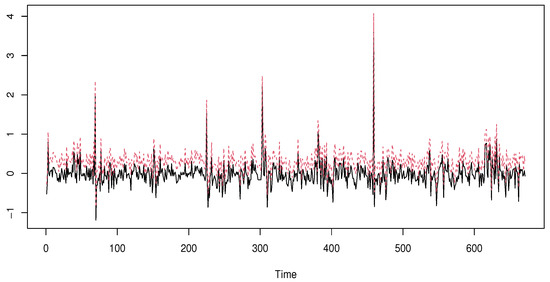

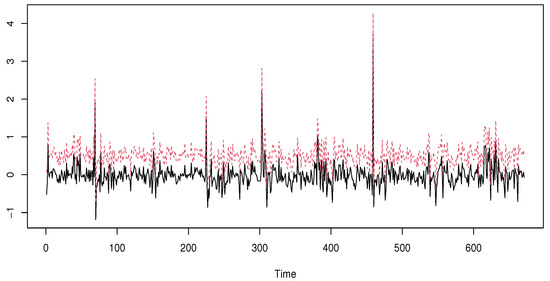

We explore the functional path of the considered data by cutting the process into pieces of 30 points. These pieces represent the functional regressors . Furthermore, we use the same strategy as in the simulated data. Indeed, we choose as the curve of and . Now, to ensure the independence structure required by our work, we select distanced observations. Specifically, from 2790 observations, we choose 90 equidistant independent values from which we construct our learning sample . Thus, we compare the three estimators , , and using real data, . Such estimators are computed using the same algorithm as for the simulated data. We use the same kernel and select the smoothing parameters k and h by the rule (6). We use the PCA-metric. We refer to Ferraty and Vieu [31] for more details on the mathematical formulation of this metric. The comparison results are given in Figure 4, Figure 5 and Figure 6, where we plot the true values of 670 testing observations (black line) versus the estimator and (red line) for values of .
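The data preparation described above can be sketched as follows. This is an illustrative sketch under assumptions, not the authors' pipeline: the price series is simulated rather than downloaded, the function names are hypothetical, the number of retained components q = 3 is arbitrary, and the PCA semi-metric is computed from the leading principal directions of the discretized curves without the quadrature weights of the formulation in [31].

```python
import numpy as np

def cut_into_curves(series, length=30):
    """Cut a 1-D series into non-overlapping pieces of `length` points."""
    series = np.asarray(series, dtype=float)
    m = len(series) // length
    return series[: m * length].reshape(m, length)

def pca_semimetric(curves, q=3):
    """Pairwise PCA-type semi-metric based on the first q principal directions."""
    centered = curves - curves.mean(axis=0)
    # Rows of vt are the eigenvectors of the empirical covariance operator.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    scores = curves @ vt[:q].T                      # projections on q directions
    diff = scores[:, None, :] - scores[None, :, :]
    return np.sqrt((diff**2).sum(axis=2))

# Simulated stand-in for the five-minute price process (assumption).
rng = np.random.default_rng(1)
prices = 20.0 + np.cumsum(rng.normal(scale=0.05, size=2791))
r = np.diff(prices)                  # differenced process, 2790 values

curves = cut_into_curves(r, length=30)   # 93 functional regressors of 30 points
D = pca_semimetric(curves, q=3)          # 93 x 93 matrix of semi-metric values
print(curves.shape, D.shape)
```

The resulting distance matrix can be passed directly to a kNN kernel estimator, since such an estimator depends on the curves only through their pairwise semi-metric values.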

Figure 4.

kNN expectile expected shortfall.

Figure 5.

kNN VaR expected shortfall.

Figure 6.

Standard expectile expected shortfall.

Once again, the comparison confirms the superiority of the kNN ES-expectile regression over the standard ES-expectile and the kNN ES-quantile model. This superiority is confirmed by computing the Mse error (9) of the three models. We obtain, respectively, 0.0274 for the kNN ES-expectile, 0.108 for the standard ES-expectile, and 0.201 for the kNN ES-quantile. For a deeper examination of the behavior of the three estimators as financial risk models, we use the backtesting measure based on the cover test developed by Bayer and Dimitriadis [32]. Specifically, we apply the so-called one-sided intercept expected shortfall regression backtest. The latter is obtained using the routine esr_backtest from the R-package esback with . We compute the p-values of 70 observations, randomly chosen from the above 670 testing observations. The average of the obtained values confirms the first statement, namely that the kNN ES-expectile regression is more adequate than the standard ES-expectile and the kNN ES-quantile model. Specifically, the average of the p-values of the kNN ES-expectile is 0.035, against 0.067 for the standard ES-expectile and 0.058 for the kNN ES-quantile.

6. Conclusions and Prospects

In the present work, we developed a parameter-free estimation of the ES-expectile regression. We constructed an estimator using kNN smoothing. This study covers two principal aspects of financial data analysis. In the theoretical part, we establish the almost complete convergence of the constructed estimator; moreover, to ensure the applicability of the constructed estimator, we also determine the convergence rate of the UCNN consistency. This theoretical analysis provides sound mathematical support for the use of the newly developed risk metric in practice. We point out that the obtained asymptotic results are established under standard conditions and with a precise convergence rate. In particular, all the assumed conditions are related to the functional structure of the regressors and the nonparametric path of the model. In the applied part, we observe that the estimator is very easy to implement and gives better results than the other financial risk metrics. In addition, our contribution leaves many open questions. For instance, the first natural prospect is the treatment of the dependent case, which allows the control of the movement of the stock exchange in its natural path, that is, the functional time series case. The second future work is the establishment of the asymptotic distribution of our new estimator in both the independent and dependent cases. Moreover, the third prospect concerns the determination of the single structure case, which would make it possible to improve the convergence rate of the estimator. Furthermore, we can also treat the partial model case or the parametric case.

7. The Demonstration of the Intermediate Results

The proofs of the intermediate results are gathered in this section.

Proof of Lemma 1.

It suffices to apply Lemma 3 for , and . Since the choices of , and are the same as in [27], conditions (C1) and (C2) are satisfied. So, all that remains is to check condition (C3). Indeed, by a simple decomposition, for ,

where

and , or Therefore, (C3) is a consequence of

and

Because the proofs of the three required results are based on similar analytical arguments in FDA, we only focus on the second result, namely,

To do that, we write

with and . Since and are increasing functions, we have, ∀

Now, by (P2), we obtain

As

We treat

For this, we write for any

Now, we evaluate

Indeed, let

We write

Since

we can apply the inequality of Bernstein with to obtain, for all ,

Thus

Thereby, for gives

□

Proof of Lemma 2.

Let

Similarly to [19], we have

where

with

Therefore,

Similarly to the previous lemma, we write

where

and

We prove

and

The rest of the proof is based on the same arguments as those of Lemma 1, where is replaced by or . □

Proof of Lemma 4.

Let ; thus, we write

It is shown, in [33], that

So, all that is left to be proven is

where is obtained by replacing h by in . For this aim, we use the following decomposition

where

with

and

Thus, we split the proof of Lemma 4 into

where and .

We concentrate on the second convergence. The first one can be deduced by the same tools. Indeed, we write that

with and .

Next, the functions and are monotone, which implies that, for ,

Thus

Observe that Now, it suffices to

For this, we write that

We evaluate the following quantity

The proof of the latter is based on Bernstein’s inequality for empirical processes as in [33]. The empirical processes are

where . Thereafter, we obtain

Consequently, an adequate choice of enables us to deduce (18). □

Author Contributions

The authors contributed approximately equally to this work. Conceptualization, M.B.A.; methodology, M.B.A.; software, M.B.A.; validation, F.A.A.; formal analysis, F.A.A.; investigation, Z.K.; resources, Z.K.; data curation, Z.K.; writing—original draft preparation, A.L.; writing—review and editing, Z.K. and A.L.; visualization, A.L.; supervision, A.L.; project administration, A.L.; funding acquisition, A.L. All authors have read and agreed to the final version of the manuscript.

Funding

The authors thank and extend their appreciation to the funders of this project: (1) Princess Nourah bint Abdulrahman University Researchers Supporting Project Number (PNURSP2024R515), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia. (2) The Deanship of Scientific Research at King Khalid University through the Research Groups Program under grant number R.G.P. 1/128/45.

Data Availability Statement

The data used in this study are available through the link https://fred.stlouisfed.org/series/DJIA (accessed on 24 April 2024).

Acknowledgments

The authors are indebted to the editorial board members and the three referees for their very generous comments and suggestions on the first version of our article, which helped us to improve the content, presentation, and layout of the manuscript.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Artzner, P.; Delbaen, F.; Eber, J.M.; Heath, D. Coherent measures of risk. Math. Financ. 1999, 9, 203–228.

- Righi, M.B.; Ceretta, P.S. A comparison of expected shortfall estimation models. J. Econ. Bus. 2015, 78, 14–47.

- Moutanabbir, K.; Bouaddi, M. A new non-parametric estimation of the expected shortfall for dependent financial losses. J. Stat. Plan. Inference 2024, 232, 106151.

- Lazar, E.; Pan, J.; Wang, S. On the estimation of Value-at-Risk and Expected Shortfall at extreme levels. J. Commod. Mark. 2024, 3, 100391.

- Scaillet, O. Nonparametric estimation and sensitivity analysis of expected shortfall. Math. Financ. Int. J. Math. Stat. Financ. Econ. 2004, 14, 115–129.

- Cai, Z.; Wang, X. Nonparametric estimation of conditional VaR and expected shortfall. J. Econom. 2008, 147, 120–130.

- Wu, Y.; Yu, W.; Balakrishnan, N.; Wang, X. Nonparametric estimation of expected shortfall via Bahadur-type representation and Berry–Esséen bounds. J. Stat. Comput. Simul. 2022, 92, 544–566.

- Ferraty, F.; Quintela-Del-Río, A. Conditional VAR and expected shortfall: A new functional approach. Econom. Rev. 2016, 35, 263–292.

- Ait-Hennani, L.; Kaid, Z.; Laksaci, A.; Rachdi, M. Nonparametric estimation of the expected shortfall regression for quasi-associated functional data. Mathematics 2022, 10, 4508.

- Newey, W.K.; Powell, J.L. Asymmetric least squares estimation and testing. Econom. J. Econom. Soc. 1987, 55, 819–847.

- Waltrup, L.S.; Sobotka, F.; Kneib, T.; Kauermann, G. Expectile and quantile regression—David and Goliath? Stat. Model. 2015, 15, 433–456.

- Bellini, F.; Di Bernardino, E. Risk management with expectiles. Eur. J. Financ. 2017, 23, 487–506.

- Farooq, M.; Steinwart, I. Learning rates for kernel-based expectile regression. Mach. Learn. 2019, 108, 203–227.

- Bellini, F.; Negri, I.; Pyatkova, M. Backtesting VaR and expectiles with realized scores. Stat. Methods Appl. 2019, 28, 119–142.

- Chakroborty, S.; Iyer, R.; Trindade, A.A. On the use of the M-quantiles for outlier detection in multivariate data. arXiv 2024, arXiv:2401.01628.

- Gu, Y.; Zou, H. High-dimensional generalizations of asymmetric least squares regression and their applications. Ann. Stat. 2016, 44, 2661–2694.

- Zhao, J.; Chen, Y.; Zhang, Y. Expectile regression for analyzing heteroscedasticity in high dimension. Stat. Probab. Lett. 2018, 137, 304–311.

- Kneib, T. Beyond mean regression. Stat. Model. 2013, 13, 275–303.

- Mohammedi, M.; Bouzebda, S.; Laksaci, A. The consistency and asymptotic normality of the kernel type expectile regression estimator for functional data. J. Multivar. Anal. 2021, 181, 104673.

- Girard, S.; Stupfler, G.; Usseglio-Carleve, A. Functional estimation of extreme conditional expectiles. Econom. Stat. 2022, 21, 131–158.

- Almanjahie, I.M.; Bouzebda, S.; Kaid, Z.; Laksaci, A. The local linear functional kNN estimator of the conditional expectile: Uniform consistency in number of neighbors. Metrika 2024, 1–29.

- Aneiros, G.; Cao, R.; Fraiman, R.; Genest, C.; Vieu, P. Recent advances in functional data analysis and high-dimensional statistics. J. Multivar. Anal. 2019, 170, 3–9.

- Goia, A.; Vieu, P. An introduction to recent advances in high/infinite dimensional statistics. J. Multivar. Anal. 2016, 170, 1–6.

- Yu, D.; Pietrosanu, M.; Mizera, I.; Jiang, B.; Kong, L.; Tu, W. Functional Linear Partial Quantile Regression with Guaranteed Convergence for Neuroimaging Data Analysis. Stat. Biosci. 2024, 1–17.

- Di Bernardino, E.; Laloe, T.; Pakzad, C. Estimation of extreme multivariate expectiles with functional covariates. J. Multivar. Anal. 2024, 202, 105292.

- Collomb, G.; Härdle, W.; Hassani, S. A note on prediction via conditional mode estimation. J. Stat. Plan. Inference 1987, 15, 227–236.

- Burba, F.; Ferraty, F.; Vieu, P. k-nearest neighbor method in functional nonparametric regression. J. Nonparametr. Stat. 2009, 21, 453–469.

- Kudraszow, N.; Vieu, P. Uniform consistency of kNN regressors for functional variables. Stat. Probab. Lett. 2013, 83, 1863–1870.

- Bouzebda, S.; Nezzal, A. Uniform consistency and uniform in number of neighbors consistency for nonparametric regression estimates and conditional U-statistics involving functional data. Jpn. J. Stat. Data Sci. 2022, 5, 431–533.

- Bouzebda, S.; Mohammedi, M.; Laksaci, A. The k-nearest neighbors method in single index regression model for functional quasi-associated time series data. Rev. Mat. Complut. 2022, 36, 361–391.

- Ferraty, F.; Vieu, P. Nonparametric Functional Data Analysis; Springer Series in Statistics; Springer: New York, NY, USA, 2006.

- Bayer, S.; Dimitriadis, T. Regression-Based Expected Shortfall Backtesting. J. Financ. Econom. 2022, 20, 437–471.

- Kara-Zaïtri, L.; Laksaci, A.; Rachdi, M.; Vieu, P. Data-driven kNN estimation for various problems involving functional data. J. Multivar. Anal. 2017, 153, 176–188.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).