3.1. FGPS

In our previous work [

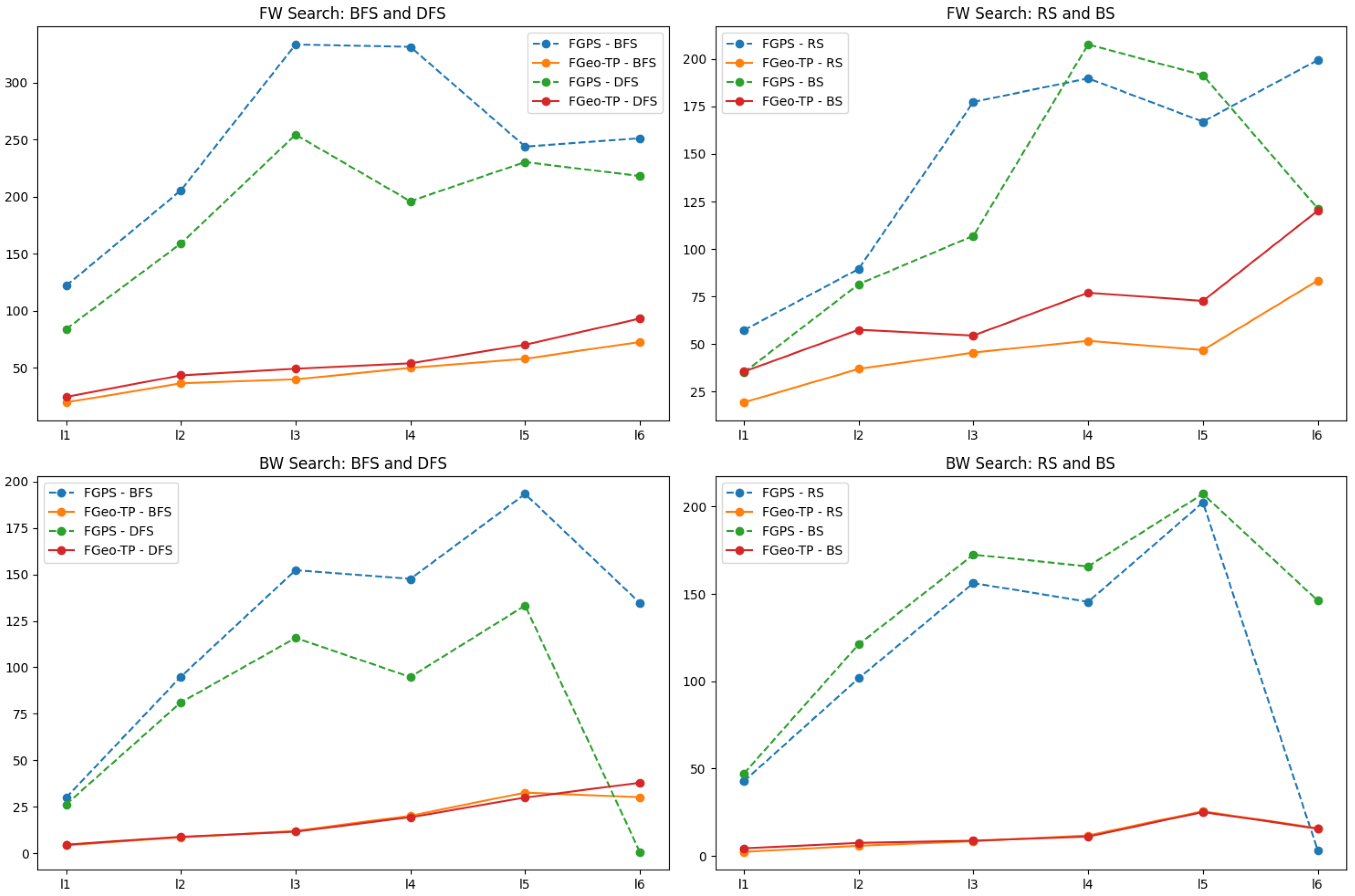

10], we implemented FGPS for the inference and solution of all problems in the FormalGeo7k dataset. However, this was contingent on the annotated theorem sequences provided in the FormalGeo7k dataset. When the solution theorem sequence is not provided, FGPS resorts to using built-in methods to search for theorem solutions. During the search process, FGPS constructs a search tree containing the sequence of theorem applications for solving a given problem. We utilized both forward search (FW) and backward search (BW) methods. FW starts from known conditions, continually searching for available theorems to generate new conditions until the solving objective is reached. In contrast, BW starts from the solving objective, decomposes it into multiple sub-goals, and seeks the conditions required for each sub-goal, determining whether the current sub-goal is solvable. This process repeats until all sub-goals are resolved.
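To make the two search directions concrete, here is a minimal Python sketch over a toy rule base. It is not FGPS's implementation: we assume, purely for illustration, that a theorem is a tuple `(name, premises, conclusion)` over string facts, and `forward_search` and `backward_search` are hypothetical helper names. Forward search keeps applying any theorem whose premises already hold; backward search replaces the goal with the premises of a theorem that concludes it and recurses on each sub-goal.

```python
from typing import FrozenSet, List, Optional, Set, Tuple

# Toy stand-in for a theorem: (name, premises, conclusion) over string facts.
Rule = Tuple[str, FrozenSet[str], str]


def forward_search(facts: Set[str], rules: List[Rule], goal: str) -> Optional[List[str]]:
    """FW: apply every theorem whose premises hold until the goal appears.

    Returns the theorems applied (not necessarily a minimal proof), or None on failure.
    """
    known = set(facts)
    applied: List[str] = []
    changed = True
    while goal not in known and changed:
        changed = False
        for name, premises, conclusion in rules:
            if premises <= known and conclusion not in known:
                known.add(conclusion)  # a new condition generated by the theorem
                applied.append(name)
                changed = True
    return applied if goal in known else None


def backward_search(
    facts: Set[str], rules: List[Rule], goal: str, visiting: FrozenSet[str] = frozenset()
) -> Optional[List[str]]:
    """BW: decompose the goal into sub-goals until each one is a known fact."""
    if goal in facts:
        return []
    if goal in visiting:  # this sub-goal is already being decomposed: avoid a cycle
        return None
    for name, premises, conclusion in rules:
        if conclusion != goal:
            continue
        plan: List[str] = []
        for sub_goal in sorted(premises):
            sub_plan = backward_search(facts, rules, sub_goal, visiting | {goal})
            if sub_plan is None:
                break  # this theorem cannot be used; try the next one
            plan.extend(sub_plan)
        else:
            return plan + [name]  # all sub-goals resolved
    return None
```

With facts `{"A", "B"}` and the rules t1: A, B → C and t2: C → D, both directions return `["t1", "t2"]` for the goal `D`; they differ in which theorems they examine along the way.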

We also employed four search strategies: breadth-first search (BFS), depth-first search (DFS), random search (RS), and beam search (BS). BFS traverses the search tree level by level; DFS expands nodes recursively from shallow to deep; RS randomly selects a node to expand at each stage; and BS selects a fixed number K of nodes at each expansion stage, striking a balance between BFS and RS.
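The four strategies differ only in which frontier nodes are expanded next. The following sketch, built around a hypothetical `tree_search` helper rather than FGPS code, makes that explicit. For BS we assume that the K nodes of the current level are chosen at random and the remaining nodes are pruned; the text specifies only that K nodes are selected per stage.

```python
import random
from typing import Callable, List, Optional, TypeVar

Node = TypeVar("Node")


def tree_search(
    root: Node,
    expand: Callable[[Node], List[Node]],
    is_goal: Callable[[Node], bool],
    strategy: str = "bfs",
    k: int = 5,
    seed: int = 0,
    max_expansions: int = 100_000,
) -> Optional[Node]:
    """Toy search-tree traversal; `strategy` only changes which frontier nodes are expanded next."""
    rng = random.Random(seed)
    frontier: List[Node] = [root]
    expansions = 0
    while frontier and expansions < max_expansions:
        if strategy == "bfs":  # oldest node first -> level-by-level traversal
            batch = [frontier.pop(0)]
        elif strategy == "dfs":  # newest node first -> go deep before backtracking
            batch = [frontier.pop()]
        elif strategy == "rs":  # any frontier node, chosen uniformly at random
            batch = [frontier.pop(rng.randrange(len(frontier)))]
        elif strategy == "bs":  # K random nodes of the current level; the rest are pruned
            batch = rng.sample(frontier, min(k, len(frontier)))
            frontier = []
        else:
            raise ValueError(f"unknown strategy: {strategy}")
        for node in batch:
            if is_goal(node):
                return node
            frontier.extend(expand(node))
            expansions += 1
    return None
```

On a finite tree, BFS, DFS and RS all eventually reach every node, whereas BS with a small K may prune the branch that contains the goal; that risk is the price of its smaller search cost.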

A search running longer than 600 s was counted as a timeout. The search results are presented in Table 1. Notably, forward random search achieved the highest success rate (39.7%), while backward depth-first search had the lowest unsolved rate (2.42%). We observed that many problems were not inherently unsolvable; instead, the search failed because it ran too long and timed out. The FGPS solving process therefore needs optimization and pruning to achieve a higher success rate.
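The distinction between an unsolved problem and a timed-out one can be captured by a small driver. In this sketch we assume, hypothetically, that a search is exposed as a Python iterator that yields `None` after each expansion, `True` once the goal is reached, and `False` when the search space is exhausted; only the 600 s budget comes from the text, and `run_with_budget` is an illustrative name.

```python
import time
from typing import Iterator, Optional


def run_with_budget(search: Iterator[Optional[bool]], budget_s: float = 600.0) -> str:
    """Classify one search run as 'solved', 'unsolved' or 'timeout'.

    Assumed protocol: the iterator yields None after each expansion step,
    True when the goal is reached, and False when nothing is left to expand.
    """
    deadline = time.monotonic() + budget_s
    for outcome in search:
        if outcome is True:
            return "solved"
        if outcome is False:
            return "unsolved"
        if time.monotonic() >= deadline:  # still searching, but out of time
            return "timeout"
    return "unsolved"  # iterator ended without reporting success
```

A timeout here says nothing about solvability, which is why pruning the search can convert timeouts into successes.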

This motivates an approach modeled on how people solve problems. Faced with a plane geometry question, a high school student who practices regularly typically skims the problem conditions first, and from this initial scan can often infer which key knowledge points the question tests. We aim for FGPS to emulate this cognitive process. To this end, we place a theorem predictor ahead of the solver, enabling FGPS to apply the theorems best suited to the problem rather than attempting every available theorem.

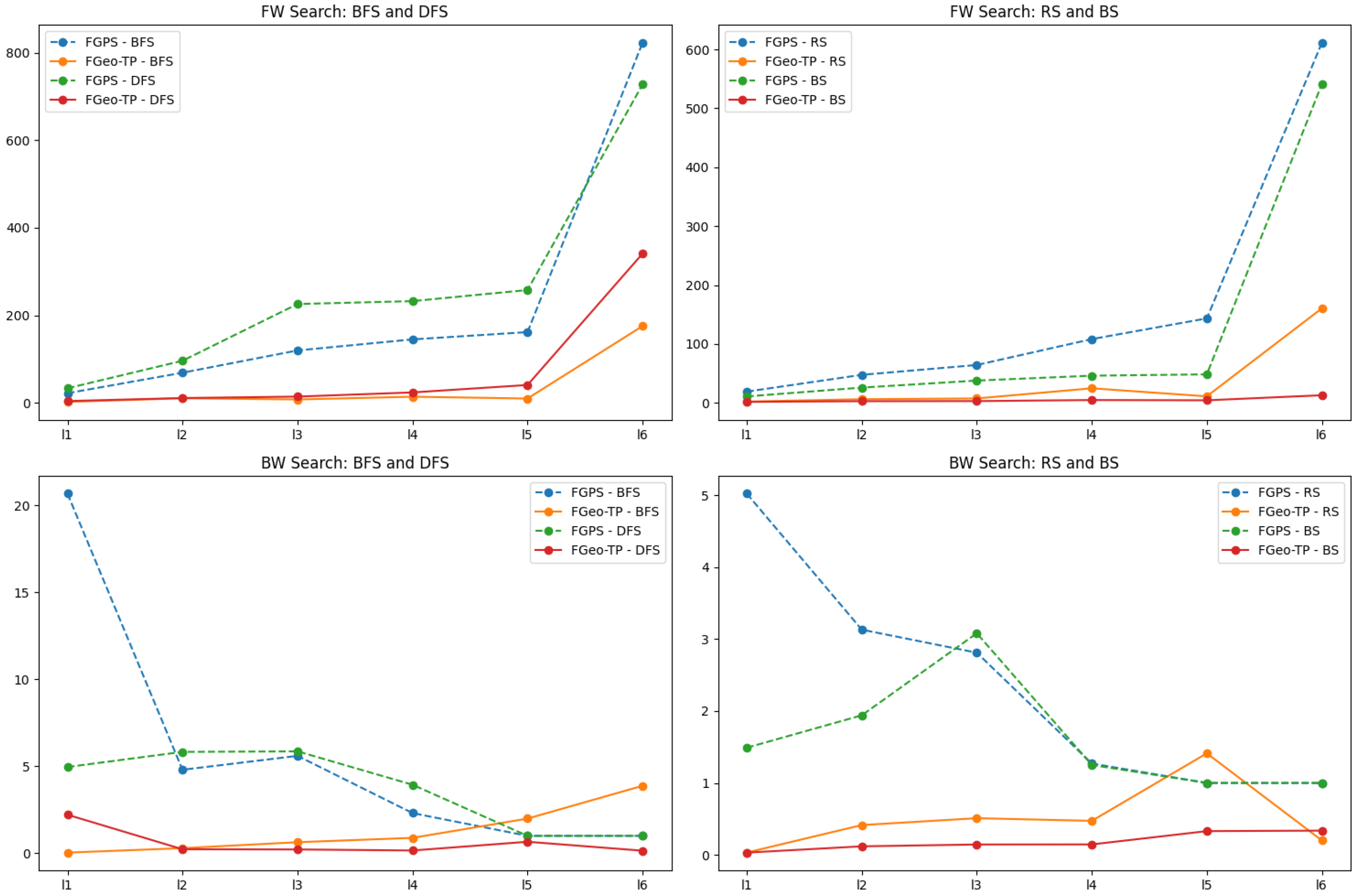

3.2. FGeo-TP

FGPS timed out on a large share of FormalGeo7k problems, mainly because each search step tries to match a large number of theorems. To optimize the solving process, we introduced a theorem predictor that guides FGPS before the theorem search begins, reducing search complexity. FGPS augmented with this theorem predictor is denoted FGeo-TP (theorem predictor). The architecture of FGeo-TP is illustrated in Figure 2.

Whereas plain FGPS receives the formalized language directly, FGeo-TP also feeds the formalized language into the theorem predictor. The predictor outputs a theorem sequence, and FGPS then receives both the formalized language and the predicted theorem sequence.

The theorem predictor takes the formalized language of a FormalGeo7k problem as input and should output the theorem sequence required to solve that problem. Because the lengths of both the formalized language and the theorem sequence vary from problem to problem, a sequence-to-sequence (Seq2Seq) model is a natural fit. We adopted the well-established transformer architecture for both the encoder and the decoder.

The formalized condition language in FormalGeo7k consists of geometric relationship predicates, geometric form predicates, free variables, and numbers, which differs markedly from the natural-language corpora common in natural language processing. Pre-training the transformer on our corpus is therefore essential for the model to understand the formalized language. For readability, the geometric relationship and form predicates in FormalGeo7k are English words or concatenations of English words, so the encoder can tokenize them at the word level, while free variables and numbers are tokenized at the character level. Hence, the existing vocabulary does not need to be updated. Theorem names, however, are highly specific: changing a single word may make a name unrecognizable to FGPS. To prevent out-of-vocabulary outputs from the decoder, we map each theorem name to a numerical ID and represent the theorem sequence as a one-dimensional array of integers.
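A minimal sketch of this tokenization scheme follows. The regular expression, the reserved padding and end-of-sequence IDs, and the example strings (written in FormalGeo7k style) are illustrative assumptions, not the exact preprocessing used in FGeo-TP.

```python
import re
from typing import Dict, List

# A CamelCase predicate (two or more letters, e.g. "LengthOfLine") or any single non-space character.
TOKEN_RE = re.compile(r"[A-Z][a-z]+(?:[A-Z][a-z]+)*|\S")
PAD_ID, END_ID = 0, 1  # hypothetical reserved IDs for the decoder vocabulary


def tokenize_condition(condition: str) -> List[str]:
    """Word-level tokens for predicates, character-level tokens for points, variables and numbers."""
    tokens: List[str] = []
    for piece in TOKEN_RE.findall(condition):
        if len(piece) > 1:  # predicate: split the concatenated English words
            tokens.extend(re.findall(r"[A-Z][a-z]+", piece))
        else:  # point letter, variable, digit or punctuation
            tokens.append(piece)
    return tokens


def build_theorem_vocab(theorem_names: List[str]) -> Dict[str, int]:
    """Assign each distinct theorem name an integer ID after the two reserved ones."""
    return {name: i + 2 for i, name in enumerate(sorted(set(theorem_names)))}


def encode_theorems(sequence: List[str], vocab: Dict[str, int]) -> List[int]:
    """Theorem names -> integer IDs plus an end token; an unknown name raises KeyError."""
    return [vocab[name] for name in sequence] + [END_ID]
```

For example, `tokenize_condition("Equal(LengthOfLine(AB),12)")` splits the predicate names into English words (`Equal`, `Length`, `Of`, `Line`) and the points and number into single characters (`A`, `B`, `1`, `2`), while a decoder target such as `["triangle_property_angle_sum"]` becomes a short list of integers.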

We fine-tuned the pre-trained transformer on the annotated training and validation sets and then evaluated the fine-tuned model's predictions on the test set. Fine-tuning minimized the negative log-likelihood of the target theorem sequence:

$$\mathcal{L} = -\sum_{i=1}^{N} \log P(y_i \mid y_1, \ldots, y_{i-1}, x)$$

Here, $N$ is the length of the target sequence, i.e., the number of theorems it contains, and $P(y_i \mid y_1, \ldots, y_{i-1}, x)$ is the conditional probability of predicting theorem $y_i$ given the preceding theorems $y_1, \ldots, y_{i-1}$ and the input formalized language $x$.
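As a worked check of the negative log-likelihood loss, the sketch below evaluates it for a single target sequence. It assumes the decoder's per-step probability distributions (computed with the ground-truth prefix, i.e., teacher forcing) are already available as plain lists; `sequence_nll` is a hypothetical helper name. In PyTorch the same sum would typically be computed with `torch.nn.functional.nll_loss` on log-probabilities with `reduction="sum"`.

```python
import math
from typing import Sequence


def sequence_nll(step_probs: Sequence[Sequence[float]], target: Sequence[int]) -> float:
    """Negative log-likelihood of one target theorem sequence.

    step_probs[i][j] is the model's probability of theorem ID j at step i,
    conditioned on the input and the preceding target theorems.
    """
    if len(step_probs) != len(target):
        raise ValueError("need exactly one distribution per target theorem")
    return -sum(math.log(probs[t]) for probs, t in zip(step_probs, target))
```

For two steps in which the correct theorems receive probabilities 0.9 and 0.5, the loss is -(log 0.9 + log 0.5) = -log 0.45 ≈ 0.80; a perfectly confident, correct prediction gives 0.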

To improve matching, we used beam search and took the union of several high-probability theorem sequences as the prediction.

$$S_t = \operatorname{top}_k \left\{ [y_1, \ldots, y_{t-1}, y_t] \;\middle|\; [y_1, \ldots, y_{t-1}] \in S_{t-1} \right\}, \quad \text{ranked by } P(y_1, \ldots, y_t \mid x)$$

$S_t$ denotes the set of the top-$k$ predicted theorem sequences at step $t$, when the next theorem is predicted. Each predicted theorem sequence has the form $[y_1, y_2, \ldots, y_t]$, where $y_t$ is the theorem added at that step, and the input $x$ is the formalized conditions of the geometry problem. This process continues until the predefined maximum sequence length is reached or an end token is generated. In the experiments, we set the maximum sequence length to 20 and the number of top predicted theorem sequences to $k = 5$.

$$Y = \bigcup_{s \in S_T} s$$

$Y$ is the final predicted theorem sequence, and $S_T$ is the set of top-$k$ predicted theorem sequences after the last theorem prediction is completed. The experiments show that even after merging multiple predicted theorem sequences, the length of the final predicted theorem sequence does not increase significantly.
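The decoding step can be sketched as follows, using the beam width of 5 and the maximum length of 20 given in the text. Several details are assumptions: `next_log_probs` is a hypothetical stand-in for the fine-tuned decoder, sequences are ranked by summed log-probability without length normalization, and the union keeps theorems in order of first appearance (the text specifies only a union).

```python
import math
from typing import Callable, Dict, List, Tuple

END = 1  # hypothetical end-of-sequence ID
Beam = Tuple[List[int], float]  # (theorem IDs, summed log-probability)


def beam_search(
    next_log_probs: Callable[[List[int]], Dict[int, float]], k: int = 5, max_len: int = 20
) -> List[Beam]:
    """Keep the k highest-scoring theorem sequences at each step."""
    beams: List[Beam] = [([], 0.0)]
    for _ in range(max_len):
        candidates: List[Beam] = []
        for seq, score in beams:
            if seq and seq[-1] == END:  # finished sequences compete unchanged
                candidates.append((seq, score))
                continue
            for theorem, logp in next_log_probs(seq).items():
                candidates.append((seq + [theorem], score + logp))
        beams = sorted(candidates, key=lambda b: b[1], reverse=True)[:k]
        if all(seq[-1] == END for seq, _ in beams):
            break
    return beams


def union_of_beams(beams: List[Beam]) -> List[int]:
    """Merge the final beams into one theorem list, keeping first-appearance order."""
    merged: List[int] = []
    for seq, _ in beams:
        for theorem in seq:
            if theorem != END and theorem not in merged:
                merged.append(theorem)
    return merged


# Toy stand-in for the fine-tuned decoder: probabilities depend only on the prefix length.
def toy_model(prefix: List[int]) -> Dict[int, float]:
    table = {
        0: {2: math.log(0.6), 3: math.log(0.4)},
        1: {4: math.log(0.5), 5: math.log(0.3), END: math.log(0.2)},
    }
    return table.get(len(prefix), {END: 0.0})
```

With `k=2`, the toy model yields the beams `[2, 4, END]` and `[3, 4, END]`, whose union `[2, 4, 3]` is only one theorem longer than either beam, in line with the observation that merging does not greatly lengthen the prediction.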

FGeo-TP first applies the predicted theorem sequence to the original problem conditions to deduce new conclusions, then merges these conclusions with the initial conditions to form a new condition set. If the solving objective is still unresolved with this updated set, FGeo-TP runs a search from the new condition set to find a solution. Because FGPS applies a given theorem quickly, executing the predicted theorem sequence adds little reasoning overhead; on the contrary, it reduces FGPS's subsequent theorem search. Through these steps, we aim to streamline the solver's search and prune its solving procedure.
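The overall control flow can be sketched as follows. Everything here is a simplification under stated assumptions: theorems are toy `(premises, conclusion)` rules over string facts; the predicted theorems are re-scanned until no new fact appears, because a merged beam output need not be in executable order; unknown theorem names are skipped; and `fallback_search` is a hypothetical stand-in for FGPS's built-in FW/BW search.

```python
from typing import Callable, Dict, FrozenSet, List, Set, Tuple

Rule = Tuple[FrozenSet[str], str]  # toy theorem: (premises, conclusion)


def solve_with_predictions(
    conditions: Set[str],
    theorems: Dict[str, Rule],
    predicted: List[str],
    goal: str,
    fallback_search: Callable[[Set[str], str], bool],
) -> str:
    """Apply predicted theorems first, then search from the enlarged condition set."""
    known = set(conditions)
    changed = True
    while changed:  # re-scan until the predicted theorems yield nothing new
        changed = False
        for name in predicted:
            if name not in theorems:  # e.g. a mispredicted ID: skip rather than fail
                continue
            premises, conclusion = theorems[name]
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    if goal in known:
        return "solved by prediction"
    # The original conditions plus the deduced conclusions go to the ordinary search.
    return "solved by search" if fallback_search(known, goal) else "unsolved"
```

When the prediction is good, the goal is reached without any search; when it is partial, the search still starts from a larger set of conditions than the original problem provides.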