1. Introduction

In medical cost studies, researchers need to use appropriate methods to evaluate the average medical cost of a patient across their whole life. Due to censoring mechanisms, the survival function is generally not identifiable. Bang and Tsiatis [

1] introduced a class of weighted estimators that appropriately account for censoring; although extensive simulation studies showed that the estimators perform well in finite samples, even with heavily censored data, the estimator is not efficient, and the computations are complex. Lin et al. [

2] partitioned the entire time period of interest into a number of small intervals and estimated the average total cost to minimize the bias induced by censoring. Furthermore, the estimators were proven to be asymptotically normal. Huang and Lovato [

3] formulated weighted log-rank statistics in a marked point process framework and developed the asymptotic theory. The above methods have been applied to estimate the cumulative mean function. However, in addition to censoring mechanisms, data often consist of longitudinal outcomes, time-dependent/independent covariates, event times, and censoring times. In longitudinal studies, subjects are repeatedly observed at a set of distinct time points until the terminal event time. The joint model [

4] contains both a longitudinal sub-model, to express the effect on longitudinal measurements from time-dependent covariates, and a survival sub-model, to reveal the survival function association with the longitudinal part. Time-independent covariates are generally present in the survival sub-model due to the absence of repeated measurements.

Deng [

5] considered a linear parametric regression model to describe longitudinal data and used the joint modeling technique and the inverse probability weighting method [

6] to estimate the cumulative mean function. Although this method can also solve the problem of handling time-dependent covariates and right-censored time-to-event data, it may still be restrictive for capturing initial data.The main reason for this is that the effects of covariates on longitudinal outcomes are considered time-independent constants.

Thus, some researchers have focused on non-parametric longitudinal sub-models (e.g., Hoover et al. [

7]; Zhao et al. [

8]; Li et al. [

9]; Do et al. [

10]). There have been numerous approaches to estimating non-parametric estimators in the recent literature, such as kernel, smoothing spline, regression spline, and wavelet-based methods. For instance, Eubank and Speckman [

11] proposed a well-behaved non-parametric kernel regression model in a small-sample study with bias-corrected confidence bands and proved the asymptotic properties. Wu et al. [

12] minimized the local square criterion and obtained the asymptotic distributions for kernel estimators. However, when the covariate dimension is too high, the smooth estimation of general multivariate non-parametric regression may require a large sample size, and the smoothing results may be difficult to interpret.

Several time-varying coefficient longitudinal models have been considered in recent studies. For instance, You et al. [

13] considered the time-varying coefficient as a polynomial basis regression spline, proposed a mixed-effects model for multiple longitudinal outcomes using the local polynomial method, and provided tuning parameters and variable selection. However, this model can only be used in continuous longitudinal outcomes. Observed data tend to be discrete in many applications. Moreover, time-independent covariates were not considered in their study [

13]. The joint model with kernel smoothing varying coefficients in the longitudinal sub-model can be used to estimate the cumulative mean function with discrete data. Therefore, in this study, we estimate the cumulative mean function with time-dependent/independent covariates using the kernel method in a joint time-varying coefficient model based on right/interval censoring history process data. In our method, the estimator of the mean state function is unbiased at time points where all subjects are observed. The reason for this is that, after smoothing the function with the kernel method, the values of the original sample points are not changed. Thus, we can utilize all the known information for estimation without data bias. Moreover, in our simulation study, the estimator of the cumulative mean state function is almost equal to the value of the preassigned function at any time point.

The remainder of the paper is organized as follows:

Section 2 establishes the joint varying coefficient model and proposes the estimators of time-varying coefficients based on our method.

Section 3 demonstrates the feasibility of this method through numerical simulations.

Section 4 applies the proposed model to a real-world data set from a multicenter automatic defibrillator implantation trial (MADIT).

Section 5 discusses the influence of bandwidth

h selection and concludes this paper. Finally, the appendices provide the proofs of the main results.

2. Estimation for a Time-Varing Coefficient Model

It is assumed that history process data are right-censored. Let T denote the terminal event time, and C denote the censoring event time. Let denote the state process which is related to the time-dependent covariate and time-independent covariate . The state process satisfies when . For , let and denote the true values of T and C for the ith subject. Further, denotes the censoring indicator. Assume that censoring time is independent of terminal event time and the state process. Let , q-dimensional vector , and p-dimensional vector be the observed history of , , and for the ith subject.

Now, the time-varying coefficient joint model can be formed as follows:

where

is time-varying coefficient parameter,

is the association coefficient of the longitudinal outcome to the hazards for occurring event,

is the baseline hazards function, which is known in our model, and

is the random error with

. Further, it is assumed that

is independent of terminal event

T conditional on

and

W.

2.1. Estimation of Time-Varying Coefficient in Longitudinal Model

We should notice that the observations for ith subject are not continuous but only able to be obtained at some special times , . The state process can be the observed until the event time Thus, let be the observed state history. For the sake of illustration, assume when .

For convenience, define sets for and , where are the observed distinct time points for all subjects. N denotes the number of all the distinct observed time points.

The estimator

of the time-varying coefficient

can be calculated by minimizing the following equation:

where

is the kernel weight function. The bandwidth, which is generally selected according to the observed time points, reveals the distance of

t and

. There is a major distinction between the estimation from Equation (

2) and the estimation in Wu [

12] since the censoring mechanism, indicator

, is necessary in order to avoid incomplete data due to censoring.

Remark 1. We assume that is the Epanechnikov kernel function. The cross-validation method can be used to select the appropriate kernel function. The kernel function with the smallest induced error is considered the best. More details on kernel function selection can be found in [14]. Equivalently, we write Equation (

2) in the matrix form:

where

and

It is assumed that

is also invertible. By minimizing Equation (

3),

can be expressed as the following

q-dimensional column vector:

The traditional method for bandwidth selection is K-fold cross-validation (CV). Time-varying coefficients complicate the calculation. However, ‘leave-one-subject-out’ cross-validation proposed by Rice and Sliverman [

15] can be used in such scenarios. In this case, the kernel weight function is

, the estimator of time-varying coefficient

is

. Minimizing the following equation:

where

is the kernel estimator computed with all measurements except the measurements of

ith subject. Then, the cross-validation bandwidth can be obtained.

2.2. Estimation of Survival Model

Define

, and then the estimate of

is

The data related to survival sub-model consist of .

The Cox partial maximum likelihood function [

4] is

The log-partial likelihood function for Equation (

5) is

Then, replacing

by the estimator

, the estimator

can be obtained by maximizing Equation (

6).

We define the survival function of

T as:

The estimate of

can be obtained from the hazards function in joint model:

Theoretically, the estimate

is related to the values of covariates

for

. Since

generally is not continuously observed, the estimate

can not be derived even at observed time points.

can be calculated only if

can be observed continuously. Thus, we replace

with the Kaplan–Meier estimator [

16]. Alternatively, in terms of the law of large numbers, the survival function can also be estimated as follows:

Moreover, the estimate of the survival function of

for

can be obtained in a similar way.

2.3. Estimation of Cumulative Mean State Function

Suppose

is the mean function of

, that is, given

,

. Because of the censoring, the proposed estimator

for the mean state function

at any time is as follows:

where

is the fitted value of

at time point

t.

Then, the cumulative mean function

for any time point

t can be obtained as:

2.4. The Asymptotic Property of Estimators

Here, we discuss the asymptotic property of estimators. To rigorously define the statements, we introduce various notations. Let denote the observed covariate processes, such as baseline information, study time, and so on, that is, . Then, let denote the longitudinal covariate history prior to time t and denote the response history prior to time t, that is, and . Furthermore, we use to denote the Euclidean norm in real space and to denote the derivative of with respect to time t. It is assumed that all observed time points are independent of each other and follow a distribution with density .

Assumption 1. is Lipschitz-continuous with order , for any and in support of and some , and and are Lipschitz-continuous with orders of and , respectively.

Assumption 2. and are continuous.

Assumption 3. is square-integrable that integrates to one and satisfies , , and , while and as .

Assumption 4. The bandwidth satisfies for some constant .

Assumption 5. for some

Theorem 1. Under Assumptions (1)–(5), the estimator defined in Equation (4) is asymptotically multivariate normal for any as . We summarize the asymptotic normality of

at a fixed point

, where

in

Appendix A.

Remark 2. Most assumptions are similar regularity conditions as that in Wu et al. [12]. Assumptions 1 and 2 are rigorous statements for which is positive-definite and invertible asymptotically. Assumption 3 ensures that has a compact support on R. Assumptions 4 and 5 are results from finite moments in Assumptions 1 and 2. More discussions about the asymptotic risk for the kernel estimators can be found in [12]. Theorem 2. It is assumed that for , , the estimator defined in Equation (8) is an unbiased estimator for any . Based on Zeng and Cai [

17], the following assumptions are imposed on the joint model.

Assumption 6. For any , the covariate process is fully observed and conditional on , , and ; the distribution of depends only on . is continuously differentiable in and with a probability of one.

Assumption 7. The censoring time C depends only on and for any conditional on , , and .

Assumption 8. Full-rank ) is positive. Additionally, if there is an existing constant vector satisfying for a deterministic function for all with a positive probability, then and .

Assumption 9. The true value of parameter satisfies for a known positive constant .

Assumption 10. The baseline hazard function is bounded and positive in .

Assumption 11. There is an existing positive constant satisfying .

Remark 3. Assumption 6 serves as a fundamental statement in joint models, indicating that the association between the history process and the survival time is due to observed covariate processes, such as baseline information, study time, and so on, denoted by . Assumption 7 means that there exist some appropriate measures such that the intensity function of exists. Assumption 8 is the identifiability assumption in a linear mixed-effects model. Assumptions 9–11 imply that, conditional on and , the probability of a subject surviving after time τ is at least some positive constant. Theorem 3.1 in Zeng and Cai [17] states the strong consistency of the maximum likelihood estimator. More discussions on the assumptions can be found in [17]. Theorem 3. Under Assumptions (1)–(11), the estimators defined in Equation (8) and defined in Equation (9) are consistent for any . 3. Simulation

In this section, some numerical results are presented. In our simulation, the joint model can be described as:

where

is the state function,

is the vector of covariates for regression parameters,

is the time-varying coefficients,

w is the covariates for regression parameter

,

is the association parameter, and

.

The standard deviation (Std.dev) and the root mean square errors (RMSE) with

of overall estimates calculated by R 4.3.1 [

18] are used to assess the quality of estimators. We summarize the steps in the following procedure:

Set the sample size n, the true function , the true value of parameters , and the rate of censoring r;

Generate a random sample ;

Derive the random sample of lifetime with the hazards function ;

Generate a random sample of censoring ;

Set ;

Generate the random sample of the time-dependent covariates , and the baseline hazards function .

For , generate the response variables .

Output the estimated function , the estimated value of parameters and , the bias and the std.err of parameters and , and the RMSE of the estimated function .

We utilize packages of ‘MASS’, ‘splines’, ‘survival’, ‘nlme’, ‘JM’, ‘lattice’, ‘mvtnorm’, ‘tibble’ and ‘ggplot2’ in our simulation study.

Now, we consider the following scenarios:

Scenario 1: Set , where , , , , , , , , , and .

Scenario 2: Set , where , , , , , , , , , and .

The following is the form based on the joint model:

After 1000 replications in R, we obtain the results of the estimation at different sample sizes. In each scenario, we control the censoring rate by about .

In scenario 1, we set the true values of parameters as

and

. In the scenario 2, we set the true values of parameters as

and

. From the proposed estimators given in Equations (

8) and (

9), fitted values of the state function

and the cumulative mean function

for any time points can be computed.

Table 1 and

Table 2 present the estimates of the root of mean square errors (RMSEs) of the state function

and the cumulative mean function

with different numbers of time points

N for the two scenarios. The RMSE is small when

because the estimators of

are unbiased at observed time points. Since the estimators are biased at other time points, the RMSE increases as

N increases.

Table 3 and

Table 4 summarize the main findings of fixed parameters. The results show that as the sample size increases, the bias and model-based standard errors decrease, which coincides with empirical results reasonably well. The performance improves with larger sample size. Note that it is common for the Std. Err. of

to be very large, sometimes reaching as large as

in small samples for linear mixed-effects models (see Table 1 in [

5]). In the semi-parametric model estimation based on polynomial regression, the Std. Err. of

even reaches

(see Tables 1 and 2 in [

19]). Compared with these methods, the Std. Err. of

in our paper is less.

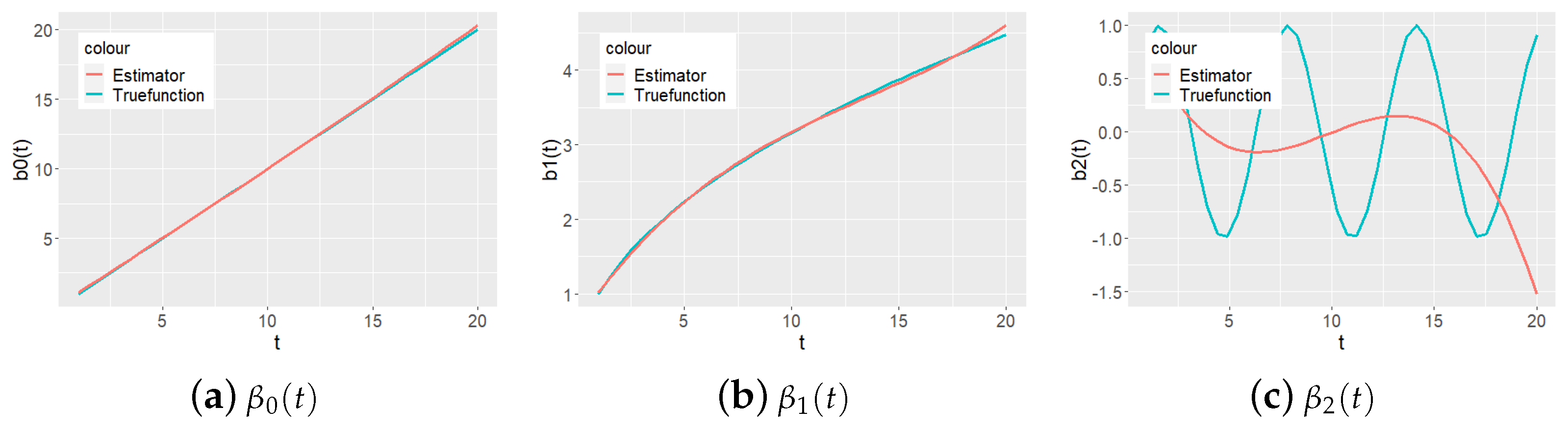

Figure 1 and

Figure 2 present the proposed estimated functions of

as a continuous curve and true values of

at time points

as a series of points.

Figure 3 and

Figure 4 present the estimated functions of

using the local polynomial regression method. In Scenario 1, our method is not significantly superior to polynomial regression. However, in Scenario 2, the term regression method does not fit

well, and our method estimates

close to the true value. This is because

is set up as a trigonometric function rather than a linear combination of power functions. It can be seen that while the local polynomial regression method is suitable for power basis functions, the kernel smoothing method fits the function better, even in such cases.

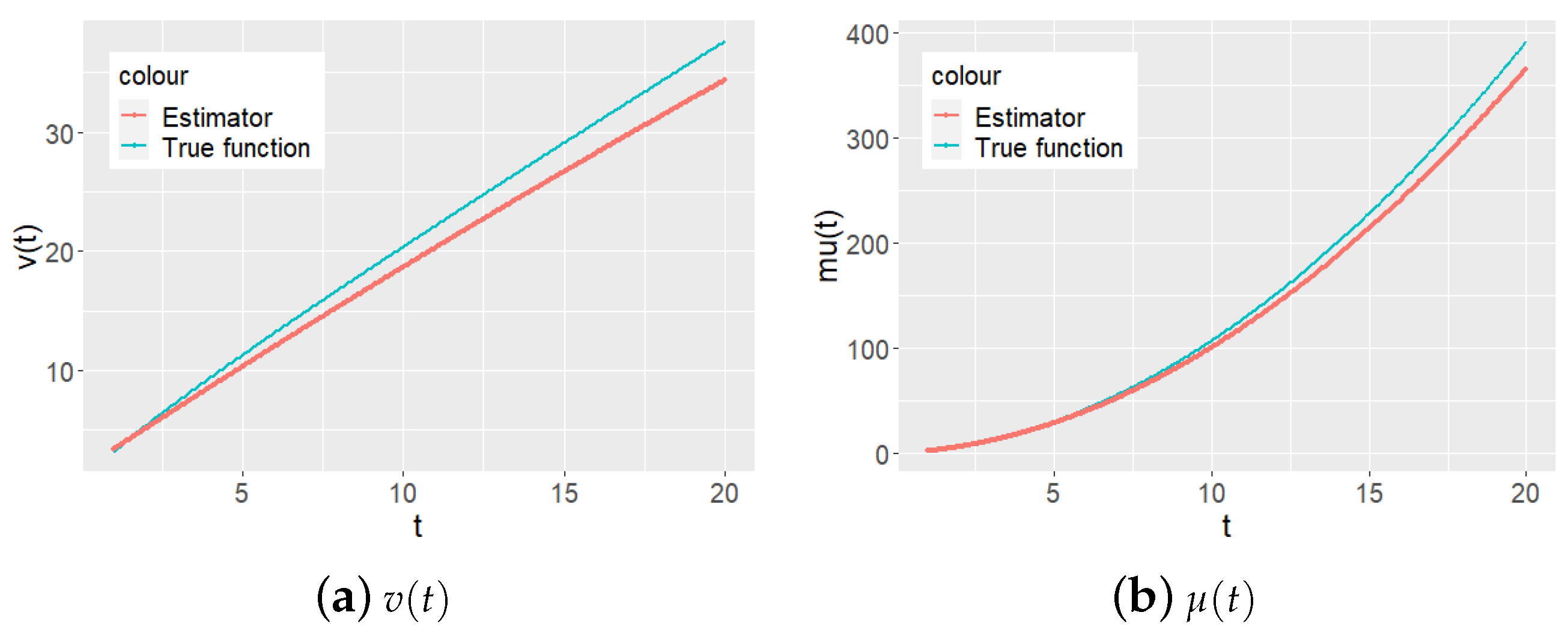

Furthermore,

Figure 5 and

Figure 6 show the true curves and fitted values of the state function

and the mean of cumulative mean function

. In each figure, the estimated functions approximate to the values of true functions.

Figure 7 and

Figure 8 show the results of the local polynomial regression method. Similar to the previous conclusion, the method we proposed works better.

4. An Application to MADIT Data

In this section, we validate the proposed estimator with a real data set from a multicenter automatic defibrillator implantation trial (MADIT). MADIT data contain 181 subjects (patients) from 36 centers in the USA that were observed at a total of 134,853 discrete time points. With the 181 patients, 89 of them chose to implant cardiac defibrillators, while another 92 did not. Throughout this section, we encode the ‘implanted’ group as and ‘not implanted’ group as . Since the effect of treatment of whether to implant cardiac defibrillators (ICDs) did not directly induce any medical costs but actually affected the expected survival time, we consider it as the time-independent covariate in the survival sub-model.

The observed patients have six types of costs that can be observed daily from the start to the death time or censoring time: Type 1: hospitalization and emergency department visits; Type 2: outpatient tests and procedures; Type 3: physician/specialist visits; Type 4: community services; Type 5: medical supplies; Type 6: medication. These costs directly drive medical costs, thus they should be considered as time-dependent covariates in the longitudinal process sub-model. It should be pointed out that the whole observation contains quite a lot of data points, so the R-code cannot work for the daily cost data. Therefore, we merge the data by combining 12 days into one time unit.

These data also include the patients’ ID codes, observed survival times in days, and death indicators.

Now, in the data set, we encode the total 181 patients as follows:

ID code (from 1 to 181);

Treatment code (1 for ICD and 0 for conventional);

Observation of survival time;

Death indicator(1 for death, 0 for censored);

Merged medical costs of type 1–6.

To analyze this data set, we describe the model as follows:

where for

,

if type = 1, otherwise,

,

for the ICD group and

for the not implanted group. The estimated parameters

and

in survival sub-model are obtained by Cox partial maximum likelihood method, they are always asymptotically normal. The estimates of

and

in this paper are attained by calling ‘coxph()’ and ‘method = peicewise-PH-GH’ in the JM package. The Std. Err. and

p-value are automatically produced.

In this case,

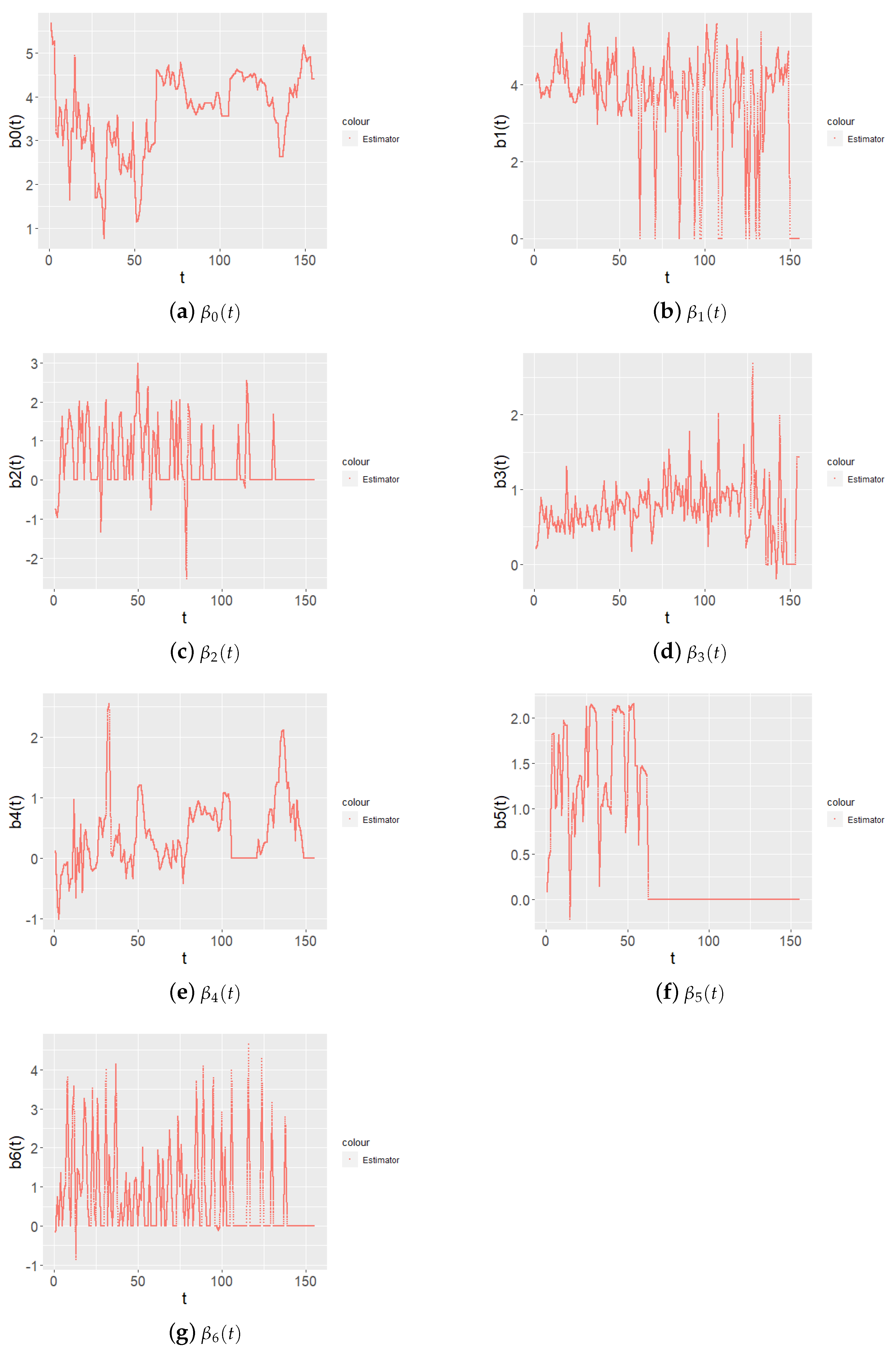

can be considered the relationship between the natural logarithm of medical cost and time unit. The fitted curve is illustrated in

Figure 9. Time-varying parameters more accurately describe the effect of covariates on state function over time, such as

; after a certain time point,

does not affect the state function, although the in early stage it does.

Table 5 shows the estimates of the survival sub-model. The association effect

is positive, which corresponds to reality, that is, patients in a serious life condition require more medical attention. In other words, serious illness leads to higher medical costs, corresponding to lower survival rates. This further supports that our model is efficient and reasonable. However, due to the large

p-value of the result, this conclusion is not specific, which should be further tested using a large sample in future research. The treatment effect

is negative, which corresponds to reality, that is, an automatic defibrillator implantation trial can reduce the risk of death. In other words, the ‘implanted’ group (ICD = 1) has a lower risk of death. This conclusion is supported by the small

p-value of the result.

Table 6 presents the estimated values of the cumulative cost for 5 years and the total treatment period. Comparing Sub-figure(a) with Sub-figure(b) in

Figure 10 and

Figure 11, we realize that the fitted points for the mean medical cost based on the current approach better describe the elaborate trajectories of medical costs, and the result for the fitted points of the cumulative mean medical cost based on the current approach exhibit a similar trend to Li’s [

19] result; however, the result is more accurate and shows continuous change, which, in turn, leads to improved understanding of real-world data.

Compared with parametric/semi-parametric estimation, our method suggests a smoother time-varying mean medical cost function, which is no longer a straight line with an observable change rate, but exhibits more fluctuations over time. In practical situations, government agencies or insurance companies would not know which treatment was selected by a patient. The estimated cumulative mean function provides them with a more accurate statistical decision recommendation for macro allocation. For example, we should consider the additional changes in medical costs because they would suddenly increase or decrease at distinct time points.

Moreover, the estimated marginal survival function is smoother compared with the Kaplan–Meier estimator. The result is similar to research by Li’s [

19], as shown in

Figure 12. The solid line is the estimated survival rate, and the dotted line represents the

confidence interval of the estimated survival rate.

5. Discussion

Generally, some estimate methods can lead to biased estimates in the model, and the time-varying coefficient model compensates for this shortcoming. In this study, we provide an estimation of the time-varying coefficient using the kernel function technique. When the covariates are continuous, the continuous state function can be obtained, allowing for the derivation of the continuous cumulative mean state function. In summary, our method is more precise because the kernel function smooths the estimator; more importantly, the proposed estimator at the original observed true points is unbiased. Theorem 2 demonstrates this perspective. In our numerical simulation and application analysis, we consider the observation interval to be a unit of 1, and we chose a bandwidth h of 1 in the kernel smoothing function. Notably, in cases where the observation time interval is not 1 or involves interval censoring, we must choose the appropriate bandwidth. Many studies on kernel function have proposed methods for choosing the bandwidth. In general, too large a bandwidth distorts data, and too small a bandwidth does not lead to a smooth continuous function. Moreover, as , turns out to be a series of constants, that is, a time-independent parametric estimator.

In this study, we do not consider the random effect coefficients in the longitudinal data model, and the correlation that may exist among repeated observations within individuals is ignored. If we consider the random effect coefficients, we will not obtain Theorem 2, but we can still obtain Theorem 3. In future studies, random effects should be considered in longitudinal models, which may yield better results.

Furthermore, the estimation of the time-varying coefficient using the kernel function technique can be extended to multiple longitudinal outcomes, and the justification for the survival sub-model would need a multi-dimensional association coefficient. The methods proposed in [

20] may be applicable.