Assessing the Role of Facial Symmetry and Asymmetry between Partners in Predicting Relationship Duration: A Pilot Deep Learning Analysis of Celebrity Couples

Abstract

1. Introduction

2. Materials and Methods

2.1. Data Source

- Name: Identifier for each celebrity.

- image_id_1 and image_id_2: The image IDs corresponding to the two partners.

- Duration_of_Partnership_in_months_until_2023: The duration of each partnership in months, calculated up to 2023.

- Married/Partnership: A binary indicator representing whether the partnership was a marriage (coded as 1) or a non-marital partnership (coded as 0).
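The duration and relationship-type columns can be derived from raw start/end dates and a text label. The following is a minimal sketch, not the authors' procedure. It assumes that each partnership has a known start date, that ongoing partnerships are counted up to a cutoff date (31 December 2023 is a hypothetical choice, since the exact reference date is not stated), and that only completed calendar months are counted. The names `partnership_duration_months`, `encode_relationship` and `CUTOFF` are illustrative.

```python
from datetime import date
from typing import Optional

# Hypothetical cutoff: the column name says "until_2023", but the exact
# reference date is not stated; 31 December 2023 is assumed here.
CUTOFF = date(2023, 12, 31)


def partnership_duration_months(start: date, end: Optional[date] = None,
                                cutoff: date = CUTOFF) -> int:
    """Completed calendar months from start to end (or to cutoff if ongoing)."""
    stop = min(end, cutoff) if end is not None else cutoff
    if stop < start:
        raise ValueError("end date precedes start date")
    months = (stop.year - start.year) * 12 + (stop.month - start.month)
    # Do not count the final month if it has not been completed.
    if stop.day < start.day:
        months -= 1
    return months


def encode_relationship(kind: str) -> int:
    """Binary coding used in the dataset: 1 = marriage, 0 = non-marital partnership."""
    mapping = {"married": 1, "partnership": 0}
    return mapping[kind.strip().lower()]
```

For example, a partnership running from 15 January 2020 to 15 January 2021 yields 12 months, while one ending on 14 January 2021 yields 11.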

2.2. Deep Learning-Based Analysis of Dissimilarity

2.3. Machine and Deep Learning-Based Prediction of Relationship Duration

2.4. Landmark-Based Subanalyses

2.5. Statistical Analysis

3. Results

3.1. Comparative Analyses

3.2. Prediction Modelling

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Model | Hyperparameter | Values Considered |
|---|---|---|
| Random Forest | Number of Trees | [50, 100, 400, 700] |
| | Maximum Tree Depth | [None, 10, 20, 40, 60] |
| | Min Samples for Split | [2, 5, 10, 20, 30] |
| | Min Samples for Leaf | [1, 2, 4, 7, 9] |
| Support Vector Machine (SVM) | Regularization Strength (C) | [0.1, 1, 10] |
| | Kernel Type | ['linear', 'rbf', 'poly'] |
| | Kernel Coefficient (Gamma) | ['scale', 'auto', 0.1, 1] |
| Linear Regression | - | - |
| Ridge Regression | Regularization Strength (Alpha) | [0.1, 1, 10] |
| Deep Learning | Number of Epochs | [10, 20, 30] |
| | Batch Size | [32, 64, 128] |
| | Number of Hidden Units | [32, 64, 128] |
| | Learning Rate | [0.001, 0.01, 0.1] |
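The hyperparameter table lists candidate values but does not say how they were searched. Assuming an exhaustive (full-factorial) grid search, the sketch below expands each search space into individual configurations and counts them. The dictionary keys are hypothetical names, not necessarily the parameter names used in the authors' code.

```python
from itertools import product
from math import prod

# Search spaces transcribed from the hyperparameter table; key names are hypothetical.
GRIDS = {
    "Random Forest": {
        "n_trees": [50, 100, 400, 700],
        "max_depth": [None, 10, 20, 40, 60],
        "min_samples_split": [2, 5, 10, 20, 30],
        "min_samples_leaf": [1, 2, 4, 7, 9],
    },
    "SVM": {
        "C": [0.1, 1, 10],
        "kernel": ["linear", "rbf", "poly"],
        "gamma": ["scale", "auto", 0.1, 1],
    },
    "Linear Regression": {},  # no tunable hyperparameters
    "Ridge Regression": {"alpha": [0.1, 1, 10]},
    "Deep Learning": {
        "epochs": [10, 20, 30],
        "batch_size": [32, 64, 128],
        "hidden_units": [32, 64, 128],
        "learning_rate": [0.001, 0.01, 0.1],
    },
}


def expand_grid(space):
    """Yield every hyperparameter combination as a dict (full factorial)."""
    keys = list(space)
    for values in product(*(space[k] for k in keys)):
        yield dict(zip(keys, values))


def grid_size(space):
    """Number of combinations; an empty space counts as one configuration."""
    return prod(len(v) for v in space.values())


for model, space in GRIDS.items():
    print(f"{model}: {grid_size(space)} configurations")
```

Under this assumption the five models involve 500 + 36 + 1 + 3 + 81 = 621 configurations, and cross-validation multiplies the number of model fits further. If the SVM was implemented with scikit-learn's `SVR`, some combinations are redundant, because `gamma` only affects the 'rbf', 'poly' and 'sigmoid' kernels, not 'linear'.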

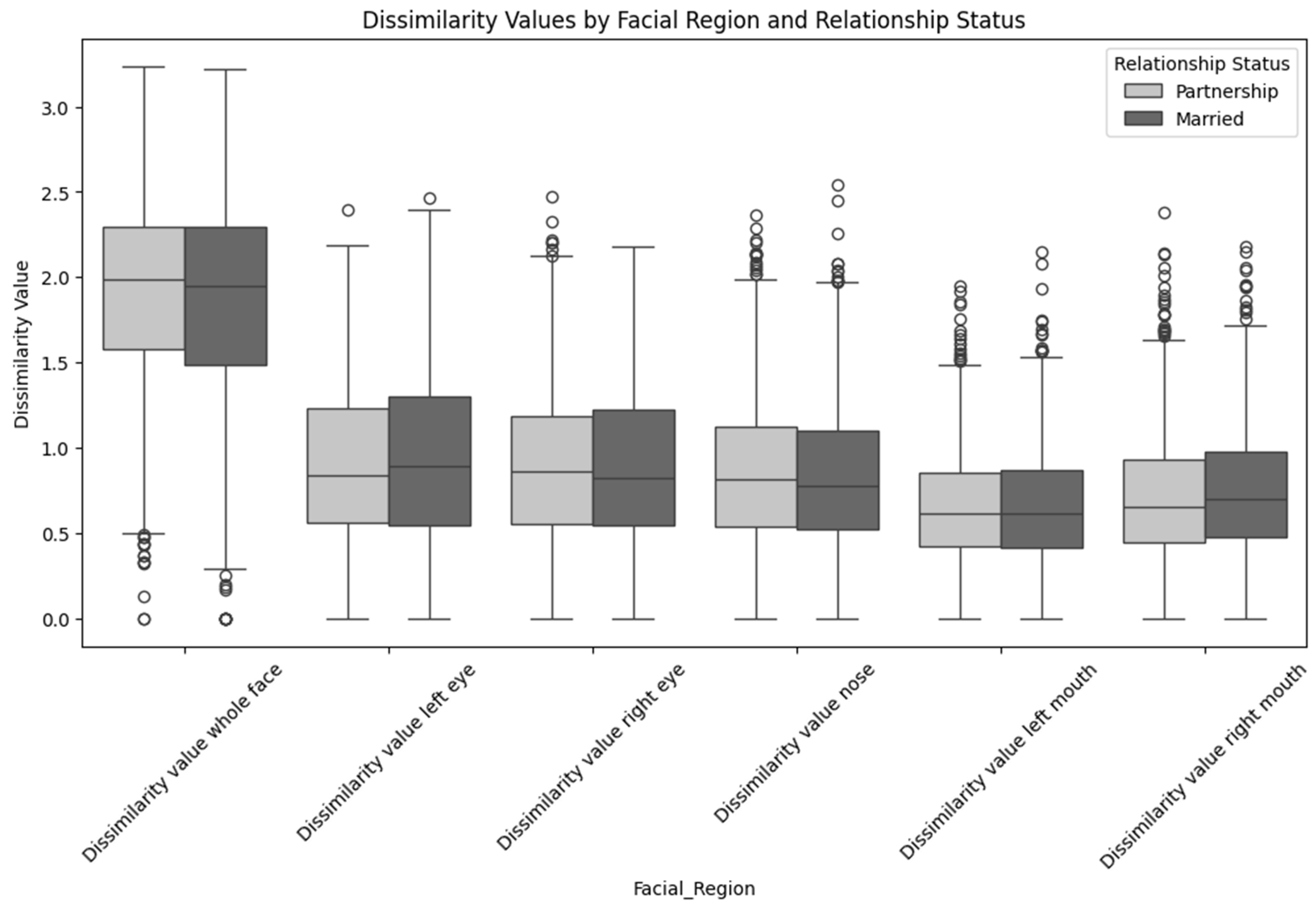

| Facial Region | Overall Mean (95% CI) | Overall Median | Partnership Mean (95% CI) | Partnership Median | Married Mean (95% CI) | Married Median | p-Value |
|---|---|---|---|---|---|---|---|
| Whole Face | 1.88 (1.85–1.91) | 1.97 | 1.90 (1.86–1.94) | 1.99 | 1.86 (1.82–1.90) | 1.95 | 0.319 |
| Left Eye | 0.93 (0.91–0.95) | 0.86 | 0.91 (0.88–0.94) | 0.84 | 0.94 (0.91–0.98) | 0.89 | 0.194 |
| Right Eye | 0.90 (0.88–0.92) | 0.85 | 0.91 (0.88–0.94) | 0.86 | 0.89 (0.86–0.92) | 0.82 | 0.449 |
| Nose | 0.86 (0.84–0.88) | 0.80 | 0.87 (0.84–0.90) | 0.82 | 0.85 (0.82–0.88) | 0.78 | 0.374 |
| Right Mouth Region | 0.74 (0.72–0.76) | 0.68 | 0.72 (0.70–0.75) | 0.66 | 0.75 (0.73–0.78) | 0.70 | 0.071 |
| Left Mouth Region | 0.66 (0.65–0.68) | 0.61 | 0.66 (0.64–0.68) | 0.61 | 0.67 (0.65–0.69) | 0.62 | 0.848 |
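The dissimilarity table reports means with 95% confidence intervals alongside medians. The sketch below computes these summaries for one group of dissimilarity values using a normal-approximation interval, mean ± z·SD/√n with z ≈ 1.96. This is an assumption: the method behind the intervals is not stated here, and a t-based interval (nearly identical for large samples) or a bootstrap interval may have been used instead. The function name `summarize` is illustrative.

```python
from statistics import NormalDist, mean, median, stdev


def summarize(values, level=0.95):
    """Mean with a normal-approximation confidence interval, plus the median."""
    if len(values) < 2:
        raise ValueError("need at least two observations")
    m = mean(values)
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% interval
    half_width = z * stdev(values) / len(values) ** 0.5  # z * standard error
    return {"mean": m, "ci": (m - half_width, m + half_width), "median": median(values)}
```

Reporting both mean and median is informative here, because in every row the median differs from the mean, which suggests skewed distributions of dissimilarity values.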

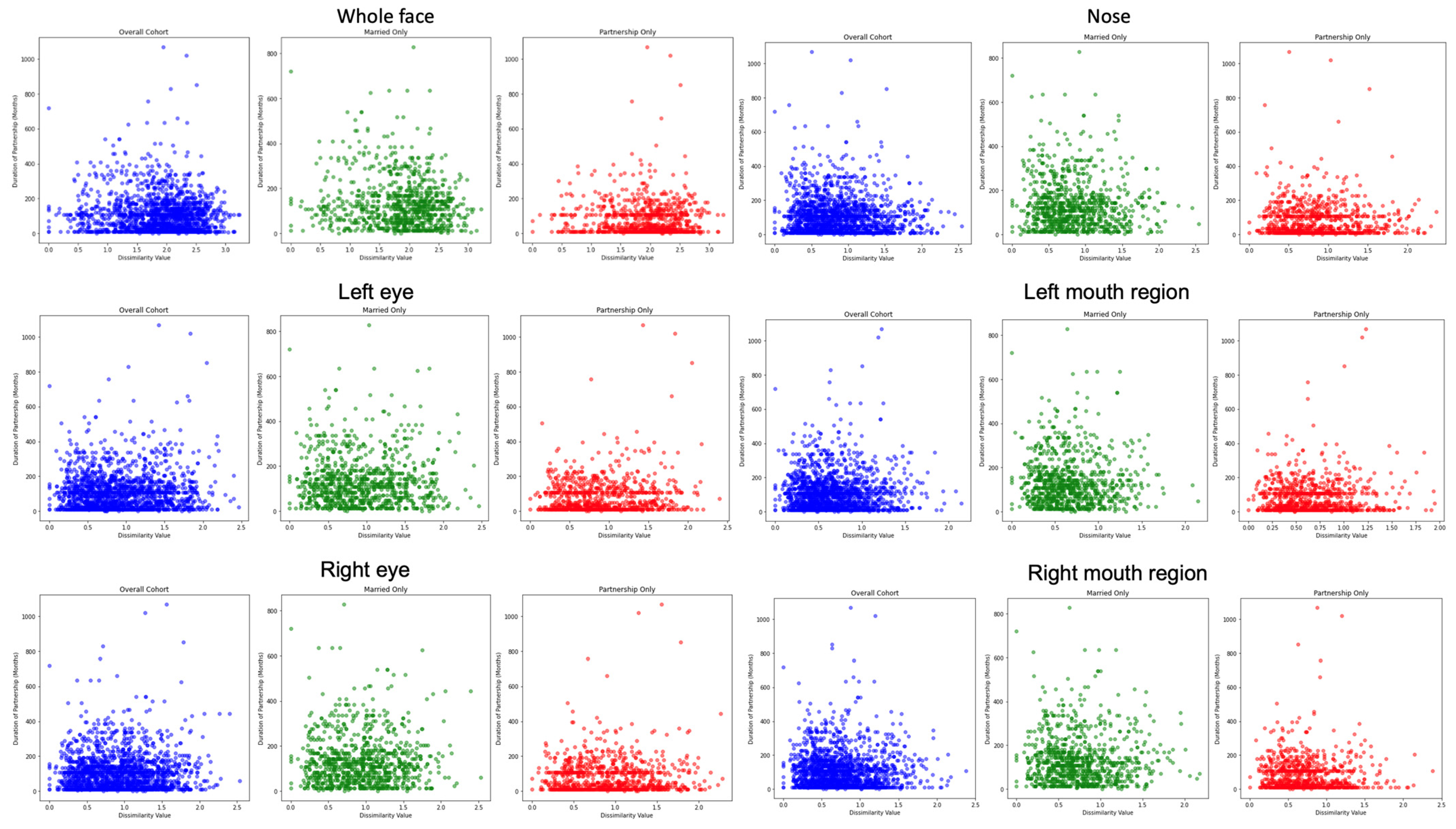

| Variable | Statistic | Duration of Partnership | Dissimilarity Value Whole Face | Dissimilarity Value Left Eye | Dissimilarity Value Right Eye | Dissimilarity Value Nose | Dissimilarity Value Left Mouth | Dissimilarity Value Right Mouth |
|---|---|---|---|---|---|---|---|---|
| Duration of partnership | Correlation coefficient | 1.000 | −0.045 | 0.020 | 0.006 | −0.026 | −0.007 | −0.010 |
| | p-value | - | 0.055 | 0.392 | 0.797 | 0.262 | 0.780 | 0.657 |
| Dissimilarity value whole face | Correlation coefficient | −0.045 | 1.000 | 0.129 ** | 0.048 * | 0.049 * | −0.001 | −0.027 |
| | p-value | 0.055 | - | <0.001 | 0.042 | 0.036 | 0.963 | 0.255 |
| Dissimilarity value left eye | Correlation coefficient | 0.020 | 0.129 ** | 1.000 | 0.037 | 0.010 | 0.055 * | 0.040 |
| | p-value | 0.392 | <0.001 | - | 0.115 | 0.666 | 0.019 | 0.092 |
| Dissimilarity value right eye | Correlation coefficient | 0.006 | 0.048 * | 0.037 | 1.000 | 0.179 ** | −0.005 | 0.011 |
| | p-value | 0.797 | 0.042 | 0.115 | - | <0.001 | 0.846 | 0.625 |
| Dissimilarity value nose | Correlation coefficient | −0.026 | 0.049 * | 0.010 | 0.179 ** | 1.000 | −0.004 | 0.019 |
| | p-value | 0.262 | 0.036 | 0.666 | <0.001 | - | 0.868 | 0.427 |
| Dissimilarity value left mouth | Correlation coefficient | −0.007 | −0.001 | 0.055 * | −0.005 | −0.004 | 1.000 | 0.244 ** |
| | p-value | 0.780 | 0.963 | 0.019 | 0.846 | 0.868 | - | <0.001 |
| Dissimilarity value right mouth | Correlation coefficient | −0.010 | −0.027 | 0.040 | 0.011 | 0.019 | 0.244 ** | 1.000 |
| | p-value | 0.657 | 0.255 | 0.092 | 0.625 | 0.427 | <0.001 | - |

Note: * p < 0.05; ** p < 0.01 (two-tailed).
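The correlation table does not state whether the coefficients are Pearson or Spearman. The sketch below computes both (Spearman's rho as the Pearson correlation of average ranks, so ties are handled) together with a large-sample two-tailed p-value that replaces the t distribution with the standard normal. That approximation is an assumption and is only reasonable for large n; exact p-values would use the t distribution with n − 2 degrees of freedom. All function names are illustrative.

```python
from math import sqrt
from statistics import NormalDist


def average_ranks(values):
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of 1-based positions i..j
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def pearson(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def spearman(x, y):
    return pearson(average_ranks(x), average_ranks(y))


def approx_two_tailed_p(r, n):
    """t = r*sqrt(n-2)/sqrt(1-r^2), referred to the standard normal (large n only)."""
    if abs(r) >= 1:
        return 0.0
    t = r * sqrt(n - 2) / sqrt(1 - r * r)
    return 2 * (1 - NormalDist().cdf(abs(t)))
```

Spearman's rho is insensitive to monotone transformations, so it is unaffected by the skew suggested by the gap between means and medians in the dissimilarity values.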

| Feature | Algorithm | Mean MSE | Mean R² |
|---|---|---|---|
| Whole Face | Neural Network | 1.172 | 0.0227 |
| | Linear Regression | 1.128 | 0.0587 |
| | Ridge Regression | 1.124 | 0.0623 |
| | Random Forest | 1.198 | 0.0011 |
| | SVM | 1.162 | 0.0296 |
| Left Eye | Neural Network | 1.115 | 0.0684 |
| | Linear Regression | 1.126 | 0.0599 |
| | Ridge Regression | 1.122 | 0.0631 |
| | Random Forest | 1.179 | 0.0164 |
| | SVM | 1.172 | 0.0215 |
| Left Mouth | Neural Network | 1.149 | 0.0410 |
| | Linear Regression | 1.127 | 0.0599 |
| | Ridge Regression | 1.124 | 0.0617 |
| | Random Forest | 1.168 | 0.0254 |
| | SVM | 1.168 | 0.0247 |
| Nose | Neural Network | 1.156 | 0.0358 |
| | Linear Regression | 1.127 | 0.0595 |
| | Ridge Regression | 1.124 | 0.0619 |
| | Random Forest | 1.166 | 0.0255 |
| | SVM | 1.169 | 0.0231 |
| Right Eye | Neural Network | 1.150 | 0.0388 |
| | Linear Regression | 1.138 | 0.0505 |
| | Ridge Regression | 1.132 | 0.0549 |
| | Random Forest | 1.173 | 0.0218 |
| | SVM | 1.176 | 0.0184 |
| Right Mouth | Neural Network | 1.165 | 0.0307 |
| | Linear Regression | 1.128 | 0.0592 |
| | Ridge Regression | 1.125 | 0.0614 |
| | Random Forest | 1.169 | 0.0262 |
| | SVM | 1.169 | 0.0235 |
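The prediction table reports a mean MSE and mean R² for each feature and algorithm. A minimal sketch of how such averages are commonly obtained with k-fold cross-validation follows. The number of folds, the contiguous unshuffled fold assignment, and the one-feature least-squares model `simple_linear_fit_predict` are assumptions for illustration, not the authors' pipeline; any model can be plugged in through the `fit_predict` callback.

```python
def mse(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def r2(y_true, y_pred):
    """1 - SS_res / SS_tot; undefined if the fold's targets are all equal."""
    mean_t = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot


def kfold_indices(n, k):
    """Contiguous folds; the first n % k folds get one extra item."""
    sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in sizes:
        yield list(range(start, start + size))
        start += size


def cross_validated_scores(x, y, fit_predict, k=5):
    """Average test-fold MSE and R^2 over k folds."""
    mses, r2s = [], []
    for test_idx in kfold_indices(len(y), k):
        test = set(test_idx)
        train_idx = [i for i in range(len(y)) if i not in test]
        preds = fit_predict([x[i] for i in train_idx], [y[i] for i in train_idx],
                            [x[i] for i in test_idx])
        y_test = [y[i] for i in test_idx]
        mses.append(mse(y_test, preds))
        r2s.append(r2(y_test, preds))
    return sum(mses) / k, sum(r2s) / k


def simple_linear_fit_predict(x_train, y_train, x_test):
    """Hypothetical stand-in model: ordinary least squares with one feature."""
    n = len(x_train)
    mx, my = sum(x_train) / n, sum(y_train) / n
    sxx = sum((a - mx) ** 2 for a in x_train)
    slope = sum((a - mx) * (b - my) for a, b in zip(x_train, y_train)) / sxx
    intercept = my - slope * mx
    return [intercept + slope * a for a in x_test]
```

An R² near zero, as in every row of the table, means the model's squared error on a test fold is only slightly smaller than that of always predicting the fold's mean duration.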

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Shavlokhova, V.; Vollmer, A.; Stoll, C.; Vollmer, M.; Lang, G.M.; Saravi, B. Assessing the Role of Facial Symmetry and Asymmetry between Partners in Predicting Relationship Duration: A Pilot Deep Learning Analysis of Celebrity Couples. Symmetry 2024, 16, 176. https://doi.org/10.3390/sym16020176
