Abstract

Path planning is a challenging, computationally complex optimization task in high-dimensional scenarios, and metaheuristic algorithms offer an effective way to solve it. The dung beetle optimizer (DBO) is a recently developed metaheuristic algorithm inspired by the biological behavior of dung beetles. However, it suffers from poor global search ability and is prone to falling into local optima. This paper presents a multi-strategy enhanced dung beetle optimizer (MDBO) for the three-dimensional path planning of an unmanned aerial vehicle (UAV). First, we used the Beta distribution to dynamically generate reflection solutions, which explores more of the search space and allows particles to jump out of local optima. Second, the Levy distribution was introduced to handle out-of-bounds particles. Third, two different crossover operators were used to improve the updating stage of thief beetles. This strategy accelerates convergence and balances exploration and exploitation. The effectiveness of the MDBO was demonstrated by comparing it with seven state-of-the-art algorithms on 12 benchmark functions, the Wilcoxon rank sum test, and the CEC 2021 test suite. The time complexity of the algorithm was also analyzed. Finally, the performance of the MDBO in path planning was verified in the three-dimensional path planning of UAVs in oil and gas plants. In the most challenging task scenario, the MDBO successfully found feasible paths, with the mean and standard deviation of the objective function as low as 97.3 and 32.8, which were lower by 39.7 and 14, respectively, than those of the original DBO. The results demonstrate that the proposed MDBO has improved optimization accuracy and stability and, in most scenarios, finds a safe and optimal path more reliably than the other metaheuristics.

1. Introduction

Artificial intelligence is one of the most promising technologies for solving problems in various fields. UAV is a powerful auxiliary tool in artificial intelligence technology with high flexibility, low cost, and high efficiency [1]. It is also considered as a candidate technology for future 6G networks and has wide applications in various scenarios [2]. For example, in hazardous areas such as oil and gas plants, UAVs can replace humans to perform specific tasks and improve work efficiency. However, ensuring the safety of autonomous flight UAVs in complex environments is a significant challenge. Path search has essential research significance as a supporting technology for UAV flight. Path planning allows the UAV to find the optimal safe path from a start point to an endpoint according to specific optimization criteria such as the minimal path length or the lowest energy consumption [3]. The UAV path searching problem is based on the case of task points and prior maps. Therefore, the mission, the surrounding environment, and the UAV’s physical constraints should be clarified first. On this basis, the task requirements, environmental states, and constraints related to UAVs are expressed through a mathematical model and applied to the path-planning problem. It has been proven that finding the optimal path is an NP-hard problem [4], and the complexity of the problem increases rapidly with the size of the problem.

Many researchers have developed solutions to path-planning problems. These include conventional methods such as the artificial potential field algorithm [5] and the Voronoi diagram search method [6]; cell-based methods such as Dijkstra [7] and the A-Star algorithm [8]; model-based methods such as the rapidly exploring random tree (RRT) [9]; and learning-based methods such as neural networks [10] and evolutionary computation techniques [11]. However, the conventional and cell-based methods suffer from poor flexibility and fault tolerance. The model-based methods typically require complex modeling and cannot support real-time path planning. Neural networks offer low complexity, flexibility, and fault tolerance, but most of them require a time-consuming learning process. Because of these limitations, researchers have proposed metaheuristic algorithms inspired by natural phenomena or laws for solving path-planning problems, for example, the genetic algorithm (GA) [12], particle swarm optimization (PSO) [13], ant colony optimization (ACO) [14], differential evolution (DE) [15], the fruit fly optimization algorithm (FOA) [16], and the grey wolf optimization (GWO) algorithm [17]. The dung beetle optimization algorithm [18] is a recently developed metaheuristic algorithm that imitates the biological behavior of dung beetles. However, according to the “No Free Lunch” theorem [19], no single metaheuristic can perform well on all categories of optimization problems. This paper aimed to develop an efficient optimization algorithm for solving three-dimensional path-planning problems in oil and gas plants.

Nowadays, researchers have proposed many improved metaheuristic algorithms for solving various engineering application problems including path-planning problems. Yu X. et al. [15] modeled UAV path planning as a constraint satisfaction problem, in which the fitness function included the travel distance and risk of the UAV. Three constraints considered in the problem were UAV height, angle, and limited UAV slope. Jain et al. [20] proposed the multiverse optimizer (MVO) algorithm to deal with the coordination of UAVs in a three-dimensional environment. The results showed that the MVO algorithm performed better in most testing scenarios with the minimum average execution time. Li K. et al. [21] focused on multi-UAV collaborative task assignment problems with changing tasks. They proposed an improved fruit fly optimization algorithm (ORPFOA) to solve the real-time path-planning problem. Pehlivanoglu et al. [12] considered the path-planning problem of autonomous UAVs in target coverage problems and proposed initial population enhancement methods in GA for efficient path planning. These techniques aim to reduce the chances of collisions between UAVs.

Zhang X. et al. [22] proposed an improved FOA (MCFOA) by introducing two new strategies, Gaussian mutation and chaotic local search. Then, the MCFOA was applied to the feature selection problem to verify its optimization performance. Song S. et al. [23] used two different Gaussian variant strategies and dimension decision strategies to design an enhanced HHO (GCHHO) algorithm. The GCHHO algorithm has successfully optimized engineering design problems (such as tensile/compression springs and welded beam design problems). Gupta S. et al. [24] presented an improved random walk grey wolf algorithm and applied it to the parameter estimation of the frequency modulation (FM) unconstrained optimization problem, the optimal capacity of production facilities, and the design of pressure vessels. Pichai [25] introduced asymmetric chaotic sequences into the competitive swarm optimization (CSO) algorithm and applied it to the feature selection of high-dimensional data. The results showed that the algorithm reduced the number of features and improved the accuracy. Mikhalev [26] proposed a cyclic spider algorithm to optimize the weights of recurrent neural networks, and the results showed that the algorithm reduced the prediction errors. Almotairi [27] proposed a hybrid algorithm based on the reptile search algorithm and the remora algorithm, improving the original algorithm’s search capability. Then, the hybrid algorithm was applied to the cluster analysis problem.

Previous work has deeply studied the defects of intelligent algorithms and improved the convergence ability of algorithms. In this paper, we improved the optimization ability of the DBO and the solving efficiency of the UAV path-planning problem. The following work was conducted to improve the search performance of the DBO:

- A reflective learning strategy was added, in which Beta distribution mapping is used to generate reflective solutions, improving the algorithm’s search ability;

- For particles that exceed the search space range, Levy distribution mapping was used to handle the boundary violation, increasing the probability that the global search reaches the optimal position;

- An individual crossover mechanism and a dimension crossover mechanism were used to update the positions of thief beetles, increasing the population diversity and avoiding falling into local optima;

- The improved MDBO was applied to the three-dimensional UAV path-planning problem, and several sets of scenario experiments were designed to verify its efficiency.

The rest of this paper is organized as follows. Section 2 illustrates the basic DBO. In Section 3, the proposed MDBO is described in detail. In Section 4, the experimental results of the proposed MDBO are analyzed. Section 5 describes the UAV path-planning model. Section 6 conducts the UAV planning simulation experiments and discusses the comparison results between the MDBO and other metaheuristic algorithms. Finally, Section 7 provides a summary of the paper.

2. Dung Beetle Optimizer (DBO)

The basic DBO is a population-based algorithm inspired mainly by the ball-rolling, dancing, foraging, stealing, and reproduction behaviors of dung beetles. The DBO divides the population into four types of search agents: the ball-rolling dung beetle, the brood ball, the small dung beetle, and the thief. Each type of search agent has its own updating rule, as described below.

- (1) Ball-rolling dung beetle

During the rolling process, dung beetles navigate by celestial cues to keep the dung ball rolling in a straight line. The position of the ball-rolling dung beetle is therefore updated as:

$$x_i(t+1) = x_i(t) + \alpha \times k \times x_i(t-1) + b \times \Delta x \tag{1}$$

$$\Delta x = \left| x_i(t) - X^w \right| \tag{2}$$

where $t$ represents the current number of iterations; $x_i(t)$ represents the position information of the $i$th dung beetle during the $t$th iteration; $k$ is a constant value representing the deflection coefficient; $b$ is a constant value belonging to (0,1); $\alpha$ is a natural coefficient assigned to 1 or −1; $X^w$ is the global worst position; and $\Delta x$ simulates changes in light intensity.

When a dung beetle encounters an obstacle that prevents it from progressing, it redirects itself by dancing to obtain a new route. A tangent function simulates the dancing behavior to obtain a new rolling direction. Therefore, the location of the ball-rolling dung beetle is updated as follows:

$$x_i(t+1) = x_i(t) + \tan(\theta) \left| x_i(t) - x_i(t-1) \right| \tag{3}$$

where $\theta$ is the deflection angle.
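The two ball-rolling updates can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function name `roll_or_dance`, the equal-probability draw of the sign coefficient, the range [0, π] for the deflection angle, and the rule of skipping the update at 0, π/2, and π are assumptions; k = 0.1, b = 0.3, and the 0.9 rolling probability are the values quoted elsewhere in this paper.

```python
import math
import random


def roll_or_dance(x_t, x_prev, x_worst, k=0.1, b=0.3, p_roll=0.9, rng=random):
    """Return the next position of one ball-rolling beetle (lists of floats)."""
    if rng.random() < p_roll:
        # Rolling: the sign coefficient is +1 or -1
        # (drawn with equal probability here, which is an assumption).
        alpha = 1.0 if rng.random() < 0.5 else -1.0
        return [xi + alpha * k * xp + b * abs(xi - xw)
                for xi, xp, xw in zip(x_t, x_prev, x_worst)]
    # Dancing: deflection angle assumed to lie in [0, pi]; the position is
    # left unchanged at 0, pi/2 and pi (also an assumption).
    theta = rng.uniform(0.0, math.pi)
    if theta in (0.0, math.pi / 2, math.pi):
        return list(x_t)
    return [xi + math.tan(theta) * abs(xi - xp) for xi, xp in zip(x_t, x_prev)]
```

Setting `p_roll=1.0` or `p_roll=0.0` forces one branch, which is convenient when checking each rule in isolation.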

- (2) Brood ball

Choosing a suitable spawning site is crucial for dung beetles to provide a safe environment for their offspring. Hence, in DBO, a boundary selection strategy is proposed to simulate the spawning area of female dung beetles, which is defined as follows:

$$Lb^* = \max\left(X^* \times (1 - R),\, Lb\right), \qquad Ub^* = \min\left(X^* \times (1 + R),\, Ub\right) \tag{4}$$

where $X^*$ represents the current local optimal position; $Lb^*$ and $Ub^*$ represent the lower and upper bounds of the spawning area; $R = 1 - t/T_{\max}$; $T_{\max}$ denotes the maximum number of iterations; and $Lb$ and $Ub$ are the lower and upper bounds of the search space, respectively.

It is assumed that each female dung beetle lays only one egg in each iteration. The boundary range of the spawning area changes dynamically with the value of $R$, so the position of the brood ball also changes dynamically during the iteration process, expressed as follows:

$$B_i(t+1) = X^* + b_1 \times \left(B_i(t) - Lb^*\right) + b_2 \times \left(B_i(t) - Ub^*\right) \tag{5}$$

where $B_i(t)$ is the position of the $i$th brood ball at the $t$th iteration; $b_1$ and $b_2$ are two independent random vectors with a size of $1 \times D$; and $D$ is the dimension.
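The shrinking spawning area and the brood-ball move can be sketched as follows. The function names are hypothetical, drawing the random vectors uniformly from (0, 1) is an assumption, and clamping the result back into the spawning area is an assumption about how violations are handled rather than something stated above.

```python
import random


def spawning_bounds(x_star, lb, ub, t, t_max):
    """Spawning area around the local best position; it shrinks as t grows."""
    r = 1.0 - t / t_max
    lo = [max(xs * (1.0 - r), l) for xs, l in zip(x_star, lb)]
    hi = [min(xs * (1.0 + r), u) for xs, u in zip(x_star, ub)]
    return lo, hi


def update_brood_ball(ball, x_star, lo, hi, rng=random):
    """One brood-ball move, clamped into [lo, hi] (clamping is an assumption)."""
    new = []
    for bi, xs, l, h in zip(ball, x_star, lo, hi):
        b1, b2 = rng.random(), rng.random()   # one random draw per dimension
        cand = xs + b1 * (bi - l) + b2 * (bi - h)
        new.append(min(max(cand, l), h))
    return new
```

For example, with a local best of 2.0 in a search range of [−10, 10], the spawning area at the halfway iteration is [1.0, 3.0], and it collapses onto the local best at the final iteration.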

- (3) Small dung beetle

Some adult dung beetles burrow out of the ground in search of food; this type of dung beetle is called the small dung beetle. The boundaries of the optimal foraging area for small dung beetles are defined as follows:

$$Lb^b = \max\left(X^b \times (1 - R),\, Lb\right), \qquad Ub^b = \min\left(X^b \times (1 + R),\, Ub\right) \tag{6}$$

where $X^b$ represents the global optimal position; $Lb^b$ and $Ub^b$ are the lower and upper bounds of the optimal foraging area, respectively. Therefore, the location of the small dung beetle is updated as follows:

$$x_i(t+1) = x_i(t) + C_1 \times \left(x_i(t) - Lb^b\right) + C_2 \times \left(x_i(t) - Ub^b\right) \tag{7}$$

where $x_i(t)$ represents the position of the $i$th dung beetle at the $t$th iteration; $C_1$ is a random number subject to the normal distribution; and $C_2$ is a random vector within the range of (0,1).

- (4) Thief

Some dung beetles, known as thieves, steal dung balls from other beetles. From Equation (6), it can be observed that $X^b$ is the optimal food source. Assume that the area around $X^b$ is the optimal location for competing for food. During the iteration process, the location information of the thief is updated as follows:

$$x_i(t+1) = X^b + S \times g \times \left( \left| x_i(t) - X^* \right| + \left| x_i(t) - X^b \right| \right) \tag{8}$$

where $x_i(t)$ represents the location information of the $i$th thief at the $t$th iteration; $g$ is a random vector subject to the normal distribution with a size of $1 \times D$; and $S$ is a constant value.
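The foraging and stealing updates can be sketched in the same style. The function names are hypothetical, and the default `s=0.5` is an assumed value for the constant; the foraging bounds are taken as inputs and would be computed in the same way as the spawning bounds, but around the global best position.

```python
import random


def update_small_beetle(x, lo_b, hi_b, rng=random):
    """Foraging move of a small dung beetle inside the optimal foraging area."""
    c1 = rng.gauss(0.0, 1.0)                  # one normally distributed scalar
    return [xi + c1 * (xi - l) + rng.random() * (xi - h)
            for xi, l, h in zip(x, lo_b, hi_b)]


def update_thief(x, x_star, x_best, s=0.5, rng=random):
    """Thief move around the global best; s = 0.5 is an assumed constant."""
    return [xb + s * rng.gauss(0.0, 1.0) * (abs(xi - xs) + abs(xi - xb))
            for xi, xs, xb in zip(x, x_star, x_best)]
```

A thief that already sits on both the local and the global best stays there, because both absolute differences vanish; the random term only matters once the population is spread out.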

3. The Proposed Method

The MDBO proposed in this section mainly includes three strategies: generating reflective solutions, handling position boundary violations, and improving thief dung beetle position updates.

3.1. Dynamic Reflective Learning Strategy Based on Beta Distribution

The dynamic region selection strategy of the DBO strictly limits the search scope to the selected region, which is not conducive to searching other regions. The search scope can be expanded by dynamically calculating a solution in the opposite direction, helping the original algorithm break away from the local optimal region. The traditional method of generating initial positions randomly from a uniform distribution is simple and feasible, but it does not favor forming an “encirclement” of the optimal solution. This paper proposes generating reflective solutions using the Beta distribution so that the initial positions effectively surround the optimal solution.

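The Beta distribution function is defined as:

$$f(x; \alpha, \beta) = \frac{x^{\alpha-1}(1-x)^{\beta-1}}{B(\alpha, \beta)}, \qquad 0 < x < 1 \tag{9}$$

where $B(\alpha, \beta)$ is the Beta function, defined as:

$$B(\alpha, \beta) = \int_0^1 t^{\alpha-1}(1-t)^{\beta-1}\,dt \tag{10}$$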

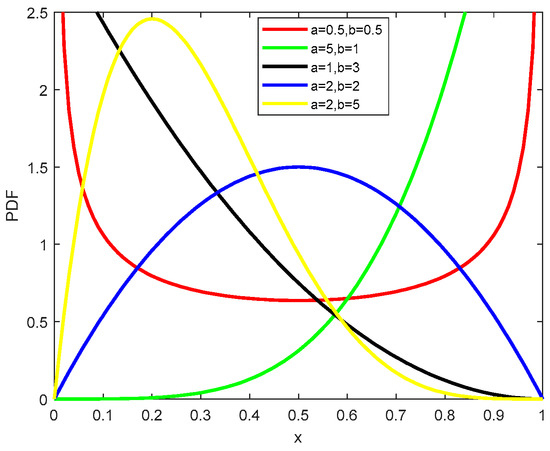

Figure 1 shows the probability density function of the Beta distribution. From the figure, it can be seen that when α = β = 0.5, the density function is symmetric and U-shaped. A candidate solution generated from it is therefore most likely to lie near the boundary of the search space, so the global optimal solution is better “surrounded” by the initial particle swarm.

Figure 1. Probability density function of the Beta distribution.
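The boundary-seeking behavior of the U-shaped density can be checked numerically with the standard library. The sketch below estimates the fraction of samples that fall within 10% of either end of the unit interval; the function name `edge_fraction`, the sample size, and the 10% margin are illustrative choices.

```python
import random


def edge_fraction(alpha, beta, n=20000, edge=0.1, seed=0):
    """Fraction of Beta(alpha, beta) samples within `edge` of 0 or 1."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n):
        x = rng.betavariate(alpha, beta)
        if x < edge or x > 1.0 - edge:
            hits += 1
    return hits / n
```

For Beta(0.5, 0.5) the arcsine law gives about 0.41 analytically, against 0.2 for the uniform case Beta(1, 1), so roughly twice as many candidates land near the boundaries.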

Assume that the number of particles is N, the dimension is D, the maximum number of iterations is T, the upper and lower bounds of the jth dimension are [,], and the reverse solution is , generated according to the following formulas:

where is the jth value of the ith solution at the t + 1th iteration; is the jth value of the ith reflective solution at the t + 1th iteration; is the domain center of the upper and lower boundaries; generated a random number within that follows the Beta distribution; is a function to randomly generate a number that obeys the Beta distribution. In this article, the Beta shape parameters are set to α = β = 0.5.

3.2. Cross Boundary Limits Method Based on Levy Distribution

The simple method of “if the particle exceeds the boundary, it is equal to the boundary” is used to deal with the individual position. The advantage of this method is that it is simple to implement. However, because the boundary point is not the global optimal solution, this method is unfavorable to the optimization process. In this paper, a new boundary processing method based on Levy distribution mapping was adopted. The specific operations are as follows:

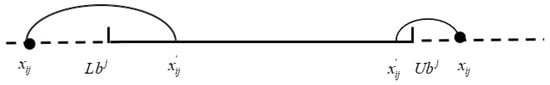

where is the particle position after boundary processing; is a constant number; Levy is a random search path, and its random step size is a Levy distribution. The notation is entry-wise multiplications. Figure 2 visually shows the method for handling particle boundaries. When the particle jumps out of the upper and lower boundaries, it re-enters the search region using Levy steps with random jumps.

Figure 2. Cross boundary method based on Levy distribution.
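As an illustration of the idea, the sketch below draws Levy-distributed steps with Mantegna's algorithm, a common way to generate them, and uses the step to place an out-of-bounds coordinate back inside the range. The re-entry rule `levy_reenter`, its `scale` constant, and the stability index 1.5 are hypothetical choices made for this example; they are not the mapping defined above.

```python
import math
import random


def levy_step(beta=1.5, rng=random):
    """One Levy-distributed step via Mantegna's algorithm."""
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    sigma_u = (num / den) ** (1 / beta)
    u = rng.gauss(0.0, sigma_u)
    v = rng.gauss(0.0, 1.0) or 1e-12          # guard against an exact zero
    return u / abs(v) ** (1 / beta)


def levy_reenter(x, lb, ub, scale=0.01, beta=1.5, rng=random):
    """Hypothetical rule: move a violating coordinate back inside the range
    by a Levy-sized fraction of the range width, measured from the crossed
    boundary. In-range coordinates are returned unchanged."""
    out = []
    for xi, l, u in zip(x, lb, ub):
        if l <= xi <= u:
            out.append(xi)
            continue
        frac = min(abs(scale * levy_step(beta, rng)), 1.0)
        out.append(u - frac * (u - l) if xi > u else l + frac * (u - l))
    return out
```

Unlike clamping, this leaves most repaired particles close to the boundary they crossed while occasionally sending one deep into the search region, which is the heavy-tailed behavior a Levy step is chosen for.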

3.3. Cross Operators for Updating the Location of Thieves

As can be seen from Equation (8), the thief’s position is affected by the global optimal solution. This mechanism enables the optimal solution to be generated by the thieves. However, once the optimal individual falls into a local optimum, the solving efficiency of the algorithm is greatly reduced. Inspired by the crisscross optimizer [28], this paper introduced horizontal and vertical crossover operators to perform crossover operations on thieves and improve the convergence ability of the algorithm. The details are described as follows.

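- (1) Horizontal crossover search (HCS)

HCS is similar to the crossover operation in genetic algorithms: it performs crossover on all dimensions between two individuals. Assuming that the $d$th dimensions of the parent individuals $X_i$ and $X_j$ are used to perform HCS, their offspring are generated as:

$$MS^{hc}_{i,d} = r_1 \times X_{i,d} + (1 - r_1) \times X_{j,d} + c_1 \times \left(X_{i,d} - X_{j,d}\right) \tag{17}$$

$$MS^{hc}_{j,d} = r_2 \times X_{j,d} + (1 - r_2) \times X_{i,d} + c_2 \times \left(X_{j,d} - X_{i,d}\right) \tag{18}$$

where $r_1$ and $r_2$ are random numbers uniformly distributed in the range of (0,1); $c_1$ and $c_2$ are random numbers uniformly distributed in the range of (−1,1); and $MS^{hc}_{i,d}$ and $MS^{hc}_{j,d}$ are the offspring generated by $X_{i,d}$ and $X_{j,d}$, respectively.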

- (2) Vertical crossover search (VCS)

VCS is a crossover operation performed between two dimensions of the same individual, and it occurs with a lower probability than HCS. Assume that the $d_1$th and $d_2$th dimensions of individual $i$ are used to perform VCS, which is calculated as follows:

$$MS^{vc}_{i,d_1} = r \times X_{i,d_1} + (1 - r) \times X_{i,d_2} \tag{19}$$

where $r$ is a random number uniformly distributed in the range of (0,1), and $MS^{vc}_{i,d_1}$ is the offspring of $X_{i,d_1}$ and $X_{i,d_2}$.
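Both operators are short enough to sketch directly. The function names are hypothetical, and the sketch follows the usual crisscross formulation, in which each offspring dimension is a random convex mix of the two parents plus a random expansion term; pairing of individuals, the choice of dimensions, and selection between parents and offspring are left out.

```python
import random


def horizontal_crossover(xi, xj, rng=random):
    """HCS: cross every dimension of two parents, returning two offspring."""
    child_i, child_j = [], []
    for a, b in zip(xi, xj):
        r1, r2 = rng.random(), rng.random()
        c1, c2 = rng.uniform(-1, 1), rng.uniform(-1, 1)
        child_i.append(r1 * a + (1 - r1) * b + c1 * (a - b))
        child_j.append(r2 * b + (1 - r2) * a + c2 * (b - a))
    return child_i, child_j


def vertical_crossover(x, d1, d2, rng=random):
    """VCS: mix dimensions d1 and d2 of one individual; only d1 changes."""
    r = rng.random()
    child = list(x)
    child[d1] = r * x[d1] + (1 - r) * x[d2]
    return child
```

Because the expansion term scales with the distance between the parents, HCS explores widely while the thieves are spread out and narrows automatically as they converge.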

3.4. The Detailed Process of the MDBO

By introducing the above three strategies into the DBO, the convergence speed and convergence accuracy of the algorithm can be effectively improved, balancing global exploration and local exploitation while enhancing the performance of the original DBO.

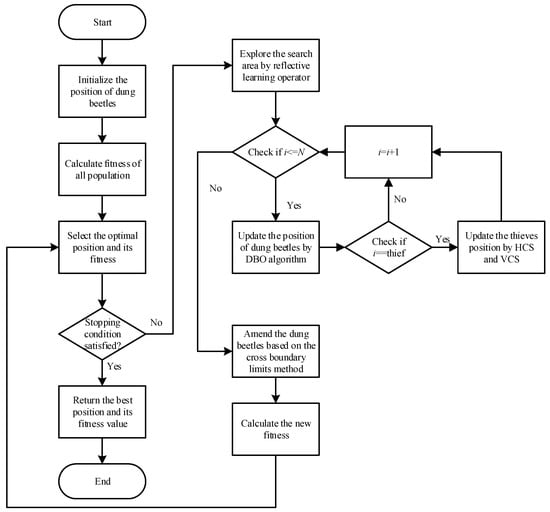

Figure 3 shows the process of MDBO implementation.

Figure 3. Flowchart of the MDBO.

The pseudo-code of MDBO is shown in Algorithm 1.

| Algorithm 1: The pseudo-code of MDBO |
| --- |
| Input: the population size N; the maximum iterations T; the dimension D. |
| 1: Initialize the positions of the dung beetles |
| 2: while t ≤ T do |
| 3: Calculate the current best position and its fitness |
| 4: Obtain N reflective solutions by Equations (11)–(15) |
| 5: Update the positions of N individuals |
| 6: for i = 1:N do |
| 7: if i == ball-rolling dung beetle then |
| 8: Generate a random number p |
| 9: if p < 0.9 then update search position by Equation (1) |
| 10: else update search position by Equation (3) |
| 11: end if |
| 12: end if |
| 13: if i == brood ball then update search position by Equation (5) end if |
| 14: if i == small dung beetle then update search position by Equation (7) end if |
| 15: if i == thief then |
| 16: Update search position by Equation (8) |
| 17: while t ≤ T/4 do |
| 18: Perform HCS using Equations (17)–(18) |
| 19: Perform VCS using Equation (19) |
| 20: end while |
| 21: end if |
| 22: end for |
| 23: Update the best position and its fitness |
| 24: t = t + 1 |
| 25: end while |
| Output: the optimal solution Xb and its fitness fb. |

3.5. Computational Complexity Analysis

The pseudo-code of the proposed algorithm is shown in Algorithm 1. The complexity of the optimizer is one of the key indicators that can reflect the algorithm’s efficiency. According to the pseudo-code in Algorithm 1, it can be seen that the complexity of MDBO mainly includes these several processes: initialization; calculating the reflective solutions by Beta distribution; HCS and VCS; updating the dung beetles’ position with new boundary handling method. For convenience, it was assumed that N solutions and T iterations are involved in the optimization process of a D-variable problem. First, the complexity in the initialization phase is . Then, the complexity level of calculating reflective solutions is . The complexity level of HCS and VCS is . In MDBO, the total number of evaluations in updating the particles’ position is because group solutions are evaluated per cycle. Thus, the time complexity of the dung beetles to look for the best position is about , which can be abbreviated as . Based on the above discussions, the overall time complexity of MDBO is . Compared to DBO, although it increases the time overhead, the performance is optimized.

4. Analysis of Simulation Experiments

4.1. Experimental Design

In this section, a series of numerical experiments was conducted to validate the effectiveness of MDBO. Statistical analysis and convergence analysis were performed on 12 basic benchmark functions [29,30,31] and the CEC2021 competition functions [32]. Four well-established optimization techniques (the POA [33], SCSO [34], HBA [35], and DBO [18]) and three recently improved metaheuristics (the ISSA [36], MCFOA [22], and GCHHO [23]) were compared with the proposed MDBO. Table 1 shows the parameter settings of all algorithms. For fairness, the population size was N = 30 and the maximum number of iterations was T = 500 for all algorithms. The mean (Mean) and standard deviation (Std) were used as statistical indicators to assess the optimization performance. All experiments were run in MATLAB R2019a.

Table 1. Parameter settings of all algorithms.

4.2. Sensitivity Analysis of MDBO’s Parameters

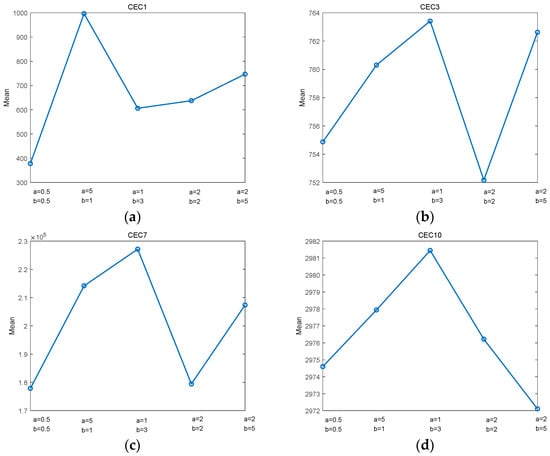

This section analyzes the sensitivity of the control parameters (k, b, α, β) employed in the MDBO. The parameters k and b take the same values as in the DBO: Xue [18] demonstrated that the algorithm performance is the most robust when k = 0.1 and b = 0.3. This subsection therefore focuses on the effect of the added parameters α and β on the search performance of the algorithm. Four CEC test functions (the unimodal function CEC-1, the basic function CEC-3, the hybrid function CEC-7, and the composition function CEC-10) were used to test these control parameters. Five (α, β) combinations, including (0.5, 0.5), (2, 2), and (2, 5), were set to represent different distribution shapes.

The sensitivity analysis is shown in Figure 4, where the horizontal axis indicates the five parameter combinations and the vertical axis indicates the mean values. It can be seen from Figure 4a,c that the algorithm performed best when α = 0.5 and β = 0.5. For CEC-1, the results were highly sensitive to the choice of α and β, and α = 0.5 and β = 0.5 gave the best performance. The same setting also obtained the best search performance on CEC-7. Figure 4b,d shows that α = 0.5 and β = 0.5 behaved robustly across inputs. For CEC-3, the values on the vertical axis show that the objective function values obtained for the various combinations of α and β were relatively close, with α = 0.5 and β = 0.5 ranking second after α = 2 and β = 2. On CEC-10, α = 2 and β = 5 performed better than α = 2 and β = 2, but α = 0.5 and β = 0.5 still ranked second, showing robust performance. Therefore, α = 0.5 and β = 0.5 were selected as the recommended parameter values for MDBO.

Figure 4. Sensitivity analysis of the MDBO’s parameters. (a) CEC-1; (b) CEC-3; (c) CEC-7; (d) CEC-10.

4.3. Comparison of Performance on 12 Benchmark Functions

The 12 basic benchmark functions included seven unimodal functions and five multimodal functions (details in Appendix A, Table A1). F1–F7 are typical unimodal test functions since they have one and only one global optimal value, whereas the multimodal functions F8–F12 have multiple local optima. Therefore, F1–F7 are widely used to estimate convergence accuracy and speed, and F8–F12 are more suitable for testing the global exploration ability. To compare the comprehensive search ability of each metaheuristic fairly, all algorithms were independently run 30 times in each experiment. We also tested scalability by comparing the performance of MDBO in 30, 50, and 100 dimensions. Two indicators were used to measure optimization performance: the mean value (Mean) and the standard deviation (Std).

Table 2 reports the mean and standard deviation of all algorithms on the 12 benchmark test functions in 30, 50, and 100 dimensions. As can be seen from Table 2, MDBO achieved the best or tied-best results on all of the test functions: it ranked first outright except on F9, F10, and F11, where some algorithms showed a comparable performance. For functions F1–F6, MDBO converged to the theoretical optimal value of 0. For F7 and F8, although MDBO did not reach the theoretical optimum, it showed significant advantages over the other algorithms. Across dimensions, the results for 30, 50, and 100 dimensions were similar, with little change in the ranking of the algorithms. Based on the above analysis, MDBO has significant competitive advantages in solving both unimodal and multimodal functions. The results reflect the ability of the reflective learning operator and the crossover operators to jump out of local optima.

Table 2. Twelve benchmark test function results in different dimensions.

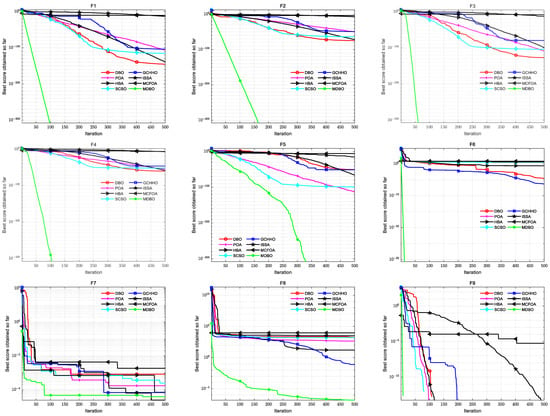

4.4. Convergence Curve Analysis

Convergence analysis plays a vital role in evaluating the local exploitation and global exploration abilities of an algorithm. Figure 5 shows the convergence curves of DBO, POA, HBA, SCSO, ISSA, GCHHO, MCFOA, and MDBO on functions F1–F12. As shown in Figure 5, MDBO’s optimization ability was the best in 11 (F1–F6, F8–F12) out of 12 functions. For functions F1–F6, the MDBO’s curves dropped rapidly in early iterations and kept a fast convergence rate to the optimal solution. Notably, F1–F5 are continuous unimodal test functions, and MDBO can quickly find the theoretical optimal value of 0. Because the vertical axis of the convergence curves uses a logarithmic scale, the smallest displayed magnitude was $10^{-300}$. For F4, the curve of MDBO showed an inflection point because the crossover operators help MDBO refine the optimization precision again. For function F7, although the accuracy reached after 500 generations was not as good as that of ISSA, MDBO needed the fewest iterations on average to converge, settling at its best value in about 100 generations. In F9 and F11, MDBO obtained the global optimal value of 0; the curves break off during the iterations because the average best value is plotted on a logarithmic scale, which cannot display 0. For functions F8, F10, and F12, MDBO exhibited a more competitive performance than the other comparison algorithms. In summary, the convergence analysis showed that MDBO had a higher success ratio than the other optimization algorithms.

Figure 5. Convergence curves of MDBO and the comparison algorithms on the 12 benchmark test functions.

4.5. Wilcoxon Rank-Sum Test

To further test the effectiveness of the MDBO, the Wilcoxon rank sum test [37] was used to determine whether its results differed statistically from those of the other algorithms. Each algorithm was run 30 times independently, which provides enough data for statistical analysis. Table 3 shows the p-values of the Wilcoxon rank sum test at the α = 5% significance level. If p < 0.05, the null hypothesis is rejected, and the alternative hypothesis is accepted. In Table 3, “+/−/=” indicates the number of functions on which MDBO performed better than, worse than, or comparably to the other algorithm, respectively, and “NaN” marks cases of comparable performance. In general, most of the p-values of the rank sum test were less than 0.05, which indicates that the performance of MDBO was significantly different from that of the other algorithms. Together with the mean results reported above, this supports the conclusion that the proposed MDBO has excellent convergence performance.
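For readers who want to reproduce this kind of comparison, the sketch below implements a two-sided rank sum test with the large-sample normal approximation, using only the standard library. It is an illustration, not the routine used for Table 3: the function name is hypothetical, ties receive average ranks, and no tie correction is applied to the variance.

```python
import math


def rank_sum_test(a, b):
    """Two-sided Wilcoxon rank sum test (normal approximation).

    Returns (z, p). Ties get average ranks; the variance is not
    tie-corrected, which is a simplification.
    """
    pooled = sorted((v, g) for g, sample in enumerate((a, b)) for v in sample)
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        avg = (i + j) / 2 + 1              # average 1-based rank of the tie group
        for k in range(i, j + 1):
            ranks[k] = avg
        i = j + 1
    w = sum(r for r, (_, g) in zip(ranks, pooled) if g == 0)  # rank sum of a
    n1, n2 = len(a), len(b)
    mean = n1 * (n1 + n2 + 1) / 2
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2))   # two-sided p-value
    return z, p
```

Here `a` and `b` would each hold the 30 final objective values of one algorithm on one function; a negative `z` with `p < 0.05` means the values in `a` are significantly smaller.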

Table 3. Wilcoxon rank sum test results.

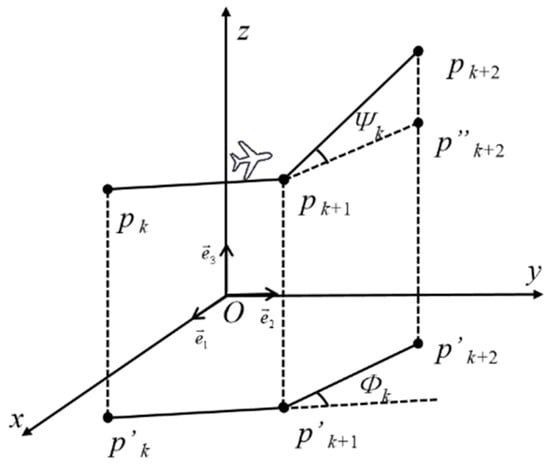

4.6. MDBO’s Performance on CEC2021 Suite

To further verify the performance of the MDBO, the challenging CEC 2021 test suite (details in Appendix A, Table A2) was used to compare it against the seven metaheuristics mentioned above. As before, the population size was set to 30 and the maximum number of iterations to 500, and the dimension was set to 20. Each metaheuristic was run independently 30 times to ensure fairness and objectivity. The Wilcoxon signed-rank test [37] was also carried out. The symbol “gm” is the score representing the difference between the number of “+” symbols and the number of “−” symbols.

Table 4 shows the experimental results of the MDBO and other optimizers. In Table 4, the proposed MDBO ranked first in CEC-1, CEC-3, CEC-4, and CEC-6. For CEC-2 and CEC-9, MDBO achieved the best mean results compared with other algorithms. For CEC-10, the mean value of MDBO was second only to HBA, but the standard deviation was smaller and more stable than HBA. The “gm” score showed that the MDBO differed significantly from other algorithms in the vast majority of cases. Overall, the results of the CEC2021 test functions show that the MDBO is effective and suitable for some engineering problems.

Table 4. CEC 2021 test function results.

5. UAV Path-Planning Model

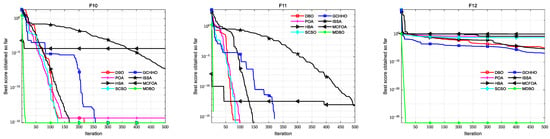

5.1. Environment Model

In the route planning of a UAV inspection in oil and gas plants, it is vital to create an appropriate environment model, as it improves the efficiency of the optimization algorithm. Objects in oil and gas plants such as complex pipelines, oil wells, and signal towers are treated as obstacles, and this paper adopted the geometric description method to establish the three-dimensional environment model. In this model, the obstacles are described by cuboids of different sizes. The transformation process of the model is shown in Figure 6. In addition, unlike a military UAV, the inspection UAV does not need to consider threats such as missiles, radar, and anti-aircraft guns. Once the constraints of the UAV are satisfied, the final optimal path is generated according to the surrounding environment and the task requirements to complete the inspection task. To prevent the UAV from colliding with obstacles during the flight, a safety threshold is added to the length, width, and height of each cuboid.

Figure 6. The environment model of oil and gas plants.

5.2. Path Representation

In this paper, it was assumed that the planning path had a start point and an end point . During the inspection, the UAV needs to traverse some task points with no collisions. Assume that the path can be represented as a series of discrete points such as , where the first and last waypoints are the given start and target point. The coordinates of are . The generation of the initial path is introduced as follows:

Step 1, confirm the direction of the next point to the starting point . The direction of the UAV in three-dimensional space can be generated as:

where represents the removable direction, and are the direction on the x-axis, y-axis, and z-axis, respectively.

Then, the next movable waypoint is expressed as:

where is the position of the ith discrete point.

Step 2, make sure that the generated waypoints stay within the map and do not collide with the obstacles. Therefore, it is necessary to penalize infeasible solutions. The penalty function is introduced as:

where is the point of collision with the obstacles, and denotes the point outside the map.

Step 3, find the optimal waypoint that satisfies the conditions and add it to the path, which can be described as follows:

where is the index of discrete points from 0 to n; is a constant value that depends on the scale of the search space; and represent the Euclidean distance between the current path point and the next path point and the end point, respectively.

Step 4, repeat the above operation until the UAV flies to the first task point.

Step 5, finally, repeat the above steps until the UAV flies over all task points and generates a complete initial path.
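Steps 1 to 4 can be illustrated with a small greedy sketch. Everything specific in it is an assumption made for the example: the 26 unit directions, axis-aligned cuboid obstacles given as corner pairs, a cost equal to the step length plus the remaining distance to the target, and a fixed large penalty for points that leave the map or enter an obstacle. All function and parameter names are hypothetical.

```python
import itertools
import math


def inside(p, box):
    """True if point p lies in the axis-aligned box (lo_corner, hi_corner)."""
    lo, hi = box
    return all(l <= c <= h for c, l, h in zip(p, lo, hi))


def greedy_path(start, goal, obstacles, bounds, step=1.0, penalty=1e6, max_pts=500):
    """Greedy initial path from start to goal over 26 neighbor directions.

    Only waypoints are checked against obstacles, not the segments between
    them; max_pts stops the search if the greedy rule gets stuck.
    """
    dirs = [d for d in itertools.product((-1, 0, 1), repeat=3) if any(d)]
    path, cur = [start], start
    while math.dist(cur, goal) > step and len(path) < max_pts:
        best = None
        for d in dirs:
            nxt = tuple(c + step * di for c, di in zip(cur, d))
            bad = (not inside(nxt, bounds)) or any(inside(nxt, o) for o in obstacles)
            cost = math.dist(cur, nxt) + math.dist(nxt, goal) + (penalty if bad else 0.0)
            if best is None or cost < best[0]:
                best = (cost, nxt)
        cur = best[1]
        path.append(cur)
    if path[-1] != goal:
        path.append(goal)
    return path
```

Step 5 then amounts to calling `greedy_path` once per leg, from each task point to the next, and concatenating the legs into one initial path.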

5.3. Cost Function and Performance Constraints

The objective function that evaluates a candidate route should take account of the cost of the path and the performance, which is expressed as follows:

where represents the overall cost function; is the weight coefficient; to are respectively the costs associated with the path length, flight height, and smoothness. The decision variable is , and is the dimension of the problem space. and are the lower and upper bounds of the search space. The cost function and constraints of the UAV path are described as follows:

(1) Length cost

In the inspection of UAVs, the shorter the path, the less the time and energy consumption. The cost function is the length of the UAV path, which can be calculated by the Euclidean operator as follows:

where is the index of discrete points from 0 to .
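As a concrete reading of the length cost, the sketch below sums the Euclidean distances between consecutive waypoints. The function name `length_cost` and the example waypoints are illustrative only.

```python
import math


def length_cost(path):
    """Sum of Euclidean distances between consecutive waypoints."""
    return sum(math.dist(p, q) for p, q in zip(path, path[1:]))


# Two segments of length 5 and 12.
path = [(0, 0, 0), (3, 4, 0), (3, 4, 12)]
print(length_cost(path))
```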

(2) Flight altitude cost

During the flight, maintaining a steady altitude can reduce the power consumption. In order to ensure the safety of the flight, the altitude of the UAV is usually limited between two given extrema, the minimum height and the maximum height , respectively. Therefore, the cost function is computed as:

where denotes the flight height with respect to the ground, and is the index of waypoints. It should be mentioned that maintains the average height and penalizes the values outside the range.
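The altitude cost formula itself is not reproduced here, so the following sketch assumes one common form that matches the description: each waypoint is charged its distance from the mid-height of the allowed band, and any waypoint outside the band incurs an infinite penalty. Both the function name and the infinite penalty are assumptions.

```python
import math


def altitude_cost(heights, h_min, h_max):
    """Sum of per-waypoint altitude costs.

    Assumed form: distance from the mid-height (h_min + h_max) / 2 when the
    waypoint is inside [h_min, h_max], and an infinite penalty otherwise.
    """
    mid = (h_min + h_max) / 2.0
    total = 0.0
    for h in heights:
        if not h_min <= h <= h_max:
            return math.inf
        total += abs(h - mid)
    return total


print(altitude_cost([5, 6, 7], h_min=2, h_max=10))   # inside the band
print(altitude_cost([5, 11], h_min=2, h_max=10))     # one waypoint too high
```

A large finite penalty could be used instead of infinity if the optimizer needs to rank infeasible candidates against each other.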

(3) Smooth cost

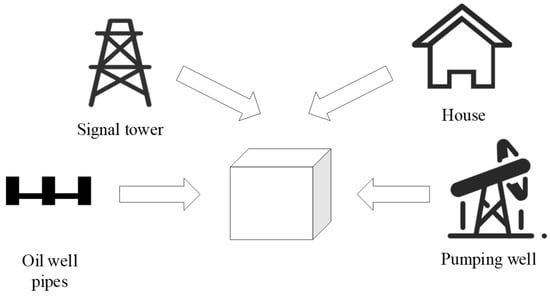

To ensure that the UAV can always maintain a good attitude during the working flight, this paper adopted a smooth cost consisting of the turning angle and the climbing rate. As shown in Figure 7, the turning angle is the angle between two consecutive path segments. If is the unit vector in the z-axis direction, the projected vector is calculated as:

Figure 7.

Turning angle and climbing angle calculation.

The turning angle is calculated as:

where the notation is a dot product, and is a cross product.

The climbing angle is the angle between the path segment and its projection onto the horizontal plane, and the calculation formula is as follows:

Then, the smooth cost function is composed of the turning angle and the climbing rate, which can be calculated as:

where and are the constants.
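The smooth cost can be sketched as follows. Since the equations are not reproduced in this text, the sketch assumes that the turning angle is computed from the dot and cross products of the horizontally projected segments, that the climbing angle is the elevation of a segment above the horizontal plane, and that the climbing rate is the change in climbing angle between consecutive segments. The weights `a1` and `a2` stand in for the two constants; their default values are placeholders.

```python
import math


def sub(p, q):
    """Component-wise difference p - q."""
    return tuple(a - b for a, b in zip(p, q))


def turning_angle(p0, p1, p2):
    """Angle between the horizontal projections of p0->p1 and p1->p2."""
    ax, ay, _ = sub(p1, p0)
    bx, by, _ = sub(p2, p1)
    cross = ax * by - ay * bx      # z-component of the cross product
    dot = ax * bx + ay * by
    return math.atan2(abs(cross), dot)


def climbing_angle(p0, p1):
    """Angle between segment p0->p1 and its horizontal projection."""
    dx, dy, dz = sub(p1, p0)
    return math.atan2(dz, math.hypot(dx, dy))


def smooth_cost(path, a1=1.0, a2=1.0):
    """a1 * sum of turning angles + a2 * sum of climbing-angle changes."""
    turn = sum(turning_angle(*path[i:i + 3]) for i in range(len(path) - 2))
    climbs = [climbing_angle(p, q) for p, q in zip(path, path[1:])]
    climb = sum(abs(b - a) for a, b in zip(climbs, climbs[1:]))
    return a1 * turn + a2 * climb


right_turn = [(0, 0, 0), (1, 0, 0), (1, 1, 0)]   # 90-degree flat turn
ramp = [(0, 0, 0), (1, 0, 0), (2, 0, 1)]         # straight, then 45-degree climb
print(smooth_cost(right_turn), smooth_cost(ramp))
```

Projecting a segment onto the horizontal plane simply drops its z-component, which is why the turning angle only uses the x and y differences.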

6. Simulation Experiments and Discussions on UAV Path Planning

6.1. Scenario Setup

The environment region was 1000 m long, 1000 m wide, and 12 m high, with several known obstacles (the coordinates and length, width, and height data of the obstacles are shown in Appendix A, Table A5). The start point and the destination point were [1,950,12] and [950,1,1], respectively. Six representative state-of-the-art metaheuristics (the PSO [38], GWO [39], DBO [18], FOA [40], GBO [41], and HPO [42]) were chosen for comparison. For fairness, the population size was set to 30 for all algorithms, and the maximum number of iterations was set to 100. Each metaheuristic was independently run 20 times. The best value (Best), mean (Mean), and standard deviation (Std) were used as statistical indicators to assess the optimization performance.
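The three indicators can be computed from the final cost of each independent run, as in the sketch below. The run values are hypothetical, and the use of the sample standard deviation is an assumption, since the text does not say which estimator was used.

```python
import statistics


def summarize(final_costs):
    """Best, mean, and standard deviation of the final cost over runs."""
    return {
        "Best": min(final_costs),
        "Mean": statistics.mean(final_costs),
        "Std": statistics.stdev(final_costs),  # sample std (assumption)
    }


# Hypothetical final costs from five runs, for illustration only.
runs = [102.0, 98.0, 100.0, 104.0, 96.0]
print(summarize(runs))
```

Because path planning is a minimization problem, Best is the minimum over runs.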

6.2. Effect of the Cost Function Parameters

The objective function weights depend on the importance assigned to its different parts. The purpose of the path cost is to ensure that an effective drone flight path is generated. In many scenarios, turning during the flight is inevitable. Therefore, the weight of the smooth cost is lower than that of the other costs. This section mainly verifies the performance of MDBO in solving the cost function with different weight combinations. The value of w3 is 0.1 or 0.2. When w3 is 0.1, the combination of w1 and w2 is {0.7,0.2} or {0.2,0.7}, and when w3 is 0.2, the combination of w1 and w2 is {0.4,0.4}, {0.5,0.3}, or {0.3,0.5}. Thus, there are five weight combinations in total.
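The five weight settings can be written out explicitly and applied to the component costs. The sketch below assumes the overall objective is a plain weighted sum of the length, altitude, and smoothness costs, as the description of the weight coefficients suggests; the component cost values are hypothetical.

```python
# (w1, w2, w3) = weights of the length, altitude, and smoothness costs.
WEIGHT_COMBINATIONS = [
    (0.7, 0.2, 0.1),
    (0.2, 0.7, 0.1),
    (0.4, 0.4, 0.2),
    (0.5, 0.3, 0.2),
    (0.3, 0.5, 0.2),
]


def total_cost(costs, weights):
    """Weighted sum of the component costs (F1, F2, F3)."""
    return sum(w * f for w, f in zip(weights, costs))


# Hypothetical component costs, for illustration only.
example_costs = (1500.0, 40.0, 6.0)
for weights in WEIGHT_COMBINATIONS:
    assert abs(sum(weights) - 1.0) < 1e-9  # each combination sums to 1
    print(weights, round(total_cost(example_costs, weights), 2))
```

Every combination sums to 1, so the settings only shift emphasis between the three costs and the smoothness weight stays the smallest throughout.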

The results are given in Table 5. MDBO ranked first in 11 of the 15 indices. For the Std index, MDBO ranked first in two of the five weight combinations. For the three combinations with w3 = 0.2 (w1 = 0.4, w2 = 0.4; w1 = 0.3, w2 = 0.5; and w1 = 0.5, w2 = 0.3), the Std of MDBO was second only to that of DBO. Although DBO had better standard deviations than MDBO under these three weight combinations, its best solution and mean value were not as good as those of MDBO. In terms of the shortest path length, we believe that MDBO still performed better than DBO. It is worth noting that when w1 = 0.7, w2 = 0.2, and w3 = 0.1, MDBO ranked second only to GBO in terms of the mean value. In particular, when w1 = 0.5, w2 = 0.3, and w3 = 0.2, the performance of MDBO was optimal. Overall, MDBO showed good search ability and robustness in all testing scenarios.

Table 5.

Comparison of the performance of the five algorithms under different weight combinations.

6.3. Impact of the Count and Position of Tasks

During the flight of the UAV, the task requirements need to be considered. In other words, the UAV must pass through a series of task points after leaving the starting point and fly to the destination after completing the corresponding tasks. The number of task points and the complexity of the tasks affect the execution efficiency of the algorithm. This section mainly compares the search performance of each algorithm with different numbers and coordinates of task points. The number of targets and their coordinates in this experiment are given in Table 6. Three groups of experiments were set up, with 2, 3, and 4 task points, respectively. The weights of the cost function were set to the best combination found in the above experiment. The experimental results are shown in Table 7.

Table 6.

Details of the coordinates of the tasks.

Table 7.

Comparison of the performance of the five algorithms with different tasks.

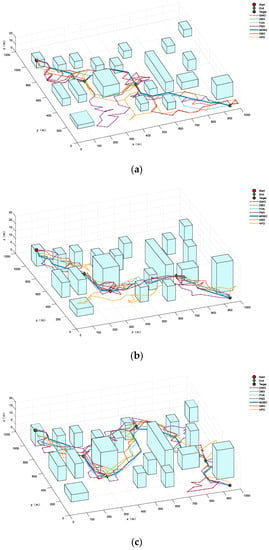

From Table 7, it can be observed that MDBO achieved the best results in all indices. The planned path length increases with the number of tasks, yet MDBO still outperformed the other algorithms. The results indicate that MDBO can handle both simple and complex tasks. Figure 8 shows a top view of the optimal paths generated by all algorithms. All of the algorithms successfully found safe paths. As the number of tasks and the complexity increase, the paths change significantly, which reflects the complexity of multi-task planning. Meanwhile, the comparison of paths shows that MDBO produced smoother paths with fewer corners at task points, which suggests a stronger optimization performance than the other algorithms.

Figure 8.

The UAV paths of all algorithms under a different number of targets. (a) The generated paths when the number of tasks was 2. (b) The generated paths when the number of tasks was 3. (c) The generated paths when the number of tasks was 4.

6.4. Influence of the Number and Arrangement of Obstacles

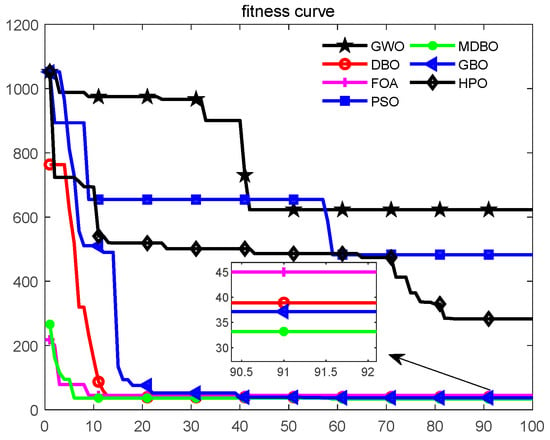

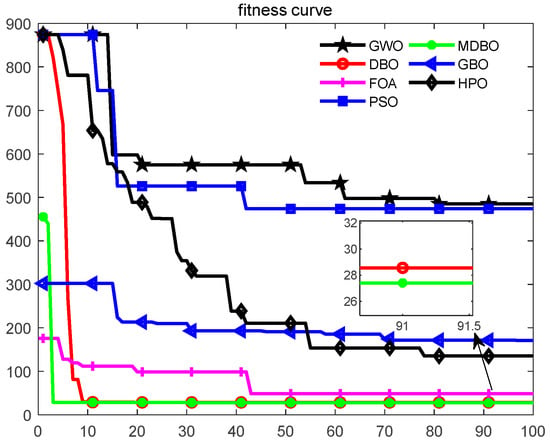

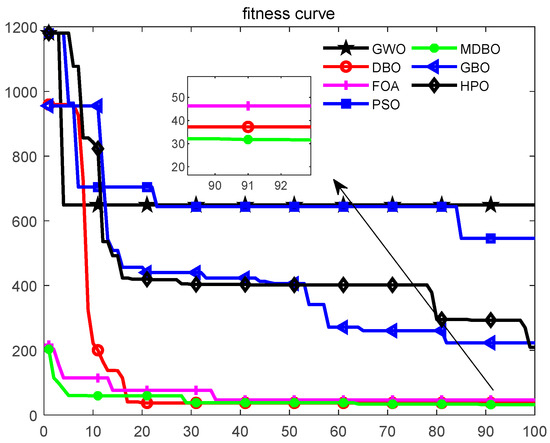

The number and arrangement of obstacles in the map affect the algorithm’s efficiency in finding the optimal solution. Moreover, the number of iterations required may increase if many obstacles lie on the line-of-sight path between the start and the destination of the UAV. Based on the previous experiments, this section assessed the performance of the seven algorithms in different scenarios. Three groups of map scenes were set up, with 6, 13, and 19 obstacles, respectively. The specific information of the obstacles in Maps 1–3, including their coordinates, length, width, and height, is shown in Table A3, Table A4 and Table A5 in Appendix A.

The average convergence curves over 100 iterations are plotted in Figure 9, Figure 10 and Figure 11. In all cases, MDBO obtained the best final solution among the compared algorithms. For Map 1, DBO, GBO, FOA, and MDBO converged rapidly in the early stage and reached their final values at around 50 iterations. The final results show that MDBO had the highest solution accuracy, followed by GBO. MDBO also converged very quickly at the beginning of the iterations, and the performance improvement over the original DBO was obvious. This indicates that the proposed search strategies can accelerate convergence and improve convergence accuracy. For Map 2, Figure 10 shows that GBO and FOA outperformed DBO and MDBO at the beginning of the iterations. However, as the iterations proceeded, DBO and MDBO converged quickly to a minimal value, while GBO fell into a local optimum at a later stage. For Map 3, with more obstacles, FOA and MDBO performed the best, with MDBO converging late to obtain the best accuracy.

Figure 9.

Avg. best cost vs. iteration for Map 1.

Figure 10.

Avg. best cost vs. iteration for Map 2.

Figure 11.

Avg. best cost vs. iteration for Map 3.

On average, the number of iterations for MDBO to converge to the optimal value was 50. In general, MDBO still has a higher convergence accuracy and stronger robustness than the state-of-the-art metaheuristic methods.
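An average convergence curve of this kind can be obtained by averaging the best-cost history of each run at every iteration. The sketch below also estimates the iteration at which the averaged curve settles. The relative tolerance used as the convergence criterion is an assumption, since the text does not state how convergence was determined, and the histories are hypothetical.

```python
def average_curve(histories):
    """Element-wise mean of per-run best-cost histories of equal length."""
    n_runs = len(histories)
    return [sum(values) / n_runs for values in zip(*histories)]


def iterations_to_converge(curve, tol=1e-3):
    """First 1-based iteration within a relative `tol` of the final cost."""
    final = curve[-1]
    for i, value in enumerate(curve, start=1):
        if abs(value - final) <= tol * abs(final):
            return i
    return len(curve)


# Hypothetical best-cost histories from two runs, for illustration only.
histories = [
    [10.0, 6.0, 4.0, 4.0],
    [14.0, 8.0, 4.0, 4.0],
]
curve = average_curve(histories)
print(curve)
print(iterations_to_converge(curve))
```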

7. Conclusions

In this paper, a multi-strategy enhanced dung beetle optimizer (MDBO) was proposed to improve the original algorithm’s performance using a reflective learning method, Levy boundary mapping processing, and two different cross-search mechanisms. The proposed MDBO was then used to successfully handle the three-dimensional route planning problem of oil and gas plants.

MDBO was evaluated on 12 benchmark test functions and the CEC2021 suite, and the results were compared using the Wilcoxon rank sum test. The results showed that the MDBO is capable of handling a wide range of optimization problems and is competitive with some advanced metaheuristic algorithms. In addition, in the comparative experiments on UAV path-planning scenarios, the proposed method outperformed the other algorithms in most scenarios and achieved satisfactory results in UAV path planning. Finally, the time complexity analysis showed that the time complexity of the proposed MDBO increased relative to the original DBO, so future research will focus on reducing the complexity of the algorithm. Moreover, in practical UAV path planning, the UAVs are required to respond promptly when the mission changes dynamically, and the number of UAVs may need to be increased; this study did not consider these cases, which is a limitation.

Future work will focus on multi-UAV cooperation path planning in complex environments. We will also work further on reducing the running time of MDBO and applying it to more complicated optimization problems.

8. Discussion

This study provides a solution for path planning with an improved dung beetle optimizer (MDBO). It was found that the three proposed strategies effectively improved the performance of the original DBO, enabling it to solve various optimization problems. Simulation experiments in the UAV path-planning scenario demonstrated the superiority of the MDBO for path searching.

This paper examined the performance of metaheuristic algorithms such as the dung beetle optimization algorithm in solving various types of optimization problems, with a focus on the MDBO’s performance in addressing path-planning problems. First, tests were performed on 12 benchmark functions and the CEC2021 suite, together with the Wilcoxon rank sum test. The results show that the three enhancement strategies can improve the original DBO’s performance and expand the algorithm’s application capabilities. This helps to verify that different improvement strategies can improve the performance of the original algorithm [23,24] and help achieve satisfactory results for specific engineering issues [22,25,26].

Second, tests on trajectory planning scenarios indicate that the DBO and the MDBO outperformed other advanced comparison algorithms. The results demonstrate the efficiency of the intelligence algorithms in solving path-planning problems [15,20,21]. In contrast to previous research, we focused on the flaws of the intelligent algorithm and aimed to develop a more reasonable search mechanism that is suitable for resolving path optimization problems.

While the findings are not surprising, it is important to understand the performance gaps of metaheuristic algorithms when applied to engineering problems. This study demonstrates the power of metaheuristic algorithms for a wide range of optimization problems, and the successful route planning in oil and gas plants provides theoretical support for practical navigation. However, in the complex application environment of the real world, there will be many dynamic obstacles and changing tasks, which may require multiple UAVs to avoid dynamic obstacles. Therefore, further investigation will consider dynamic tasks. We will focus on designing a multi-objective beetle optimization algorithm to optimize the three-dimensional spatial navigation of several UAVs.

Author Contributions

Conceptualization, methodology, writing—original draft, software, writing—review, Q.S.; writing–review, editing, supervision, investigation, M.X.; writing–review, editing, investigation, D.Z.; writing–review, visualization, supervision, funding acquisition, Q.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China, grant numbers 62062021, 61872034, and 62166006; the Natural Science Foundation of Guizhou Province, grant number [2020]1Y254; and Guizhou Provincial Science and Technology Projects, grant number Guizhou Science Foundation-ZK [2021] General 335.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Table A1.

Twelve benchmark functions.

| No. | Function Name | Search Space | Dim | fmin |
|---|---|---|---|---|
| F1 | Sphere | [−100,100] | 30/50/100 | 0 |
| F2 | Schwefel 2.22 | [−10,10] | 30/50/100 | 0 |
| F3 | Schwefel 1.2 | [−100,100] | 30/50/100 | 0 |
| F4 | Schwefel 2.21 | [−100,100] | 30/50/100 | 0 |
| F5 | Zakharov | [−5,10] | 30/50/100 | 0 |
| F6 | Step | [−100,100] | 30/50/100 | 0 |
| F7 | Quartic | [−1.28,1.28] | 30/50/100 | 0 |
| F8 | Qing | [−500,500] | 30/50/100 | 0 |
| F9 | Rastrigin | [−5.12,5.12] | 30/50/100 | 0 |
| F10 | Ackley 1 | [−32,32] | 30/50/100 | 0 |
| F11 | Griewank | [−600,600] | 30/50/100 | 0 |
| F12 | Penalized 1 | [−50,50] | 30/50/100 | 0 |
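For orientation, four of the listed benchmarks are sketched below using their standard textbook definitions. The table gives only names, ranges, and optima, so whether the experiments used exactly these variants is an assumption. Each function has its minimum of 0 at the origin, consistent with the fmin column.

```python
import math


def sphere(x):
    """F1: sum of squares."""
    return sum(v * v for v in x)


def rastrigin(x):
    """F9: highly multimodal with a regular grid of local minima."""
    return sum(v * v - 10.0 * math.cos(2.0 * math.pi * v) + 10.0 for v in x)


def ackley(x):
    """F10: nearly flat outer region with a deep central basin."""
    n = len(x)
    mean_sq = sum(v * v for v in x) / n
    mean_cos = sum(math.cos(2.0 * math.pi * v) for v in x) / n
    return (-20.0 * math.exp(-0.2 * math.sqrt(mean_sq))
            - math.exp(mean_cos) + 20.0 + math.e)


def griewank(x):
    """F11: quadratic bowl modulated by a product of cosines."""
    s = sum(v * v for v in x) / 4000.0
    p = math.prod(math.cos(v / math.sqrt(i)) for i, v in enumerate(x, start=1))
    return s - p + 1.0


origin = [0.0] * 30
for f in (sphere, rastrigin, ackley, griewank):
    print(f.__name__, f(origin))
```

In floating-point arithmetic the Ackley value at the origin may be a tiny positive number rather than exactly zero.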

Table A2.

Summary of the CEC2021 test suite [32].

| Type | No. | Functions | Fi* |
|---|---|---|---|
| Unimodal Function | CEC-1 | Shifted and Rotated Bent Cigar Function | 100 |
| Basic Functions | CEC-2 | Shifted and Rotated Schwefel’s Function | 1100 |
| Basic Functions | CEC-3 | Shifted and Rotated Lunacek bi-Rastrigin Function | 700 |
| Basic Functions | CEC-4 | Expanded Rosenbrock’s plus Griewangk’s Function | 1900 |
| Hybrid Functions | CEC-5 | Hybrid Function 1 (N = 3) | 1700 |
| Hybrid Functions | CEC-6 | Hybrid Function 2 (N = 4) | 1600 |
| Hybrid Functions | CEC-7 | Hybrid Function 3 (N = 5) | 2100 |
| Composition Functions | CEC-8 | Composition Function 1 (N = 3) | 2200 |
| Composition Functions | CEC-9 | Composition Function 2 (N = 4) | 2400 |
| Composition Functions | CEC-10 | Composition Function 3 (N = 5) | 2500 |

Search range: [−100,100]^D

Table A3.

The data of obstacles on Map 1.

| No. | X | Y | Z | L | W | H |
|---|---|---|---|---|---|---|
| 1 | 550 | 100 | 0 | 50 | 100 | 10 |
| 2 | 0 | 400 | 0 | 50 | 200 | 10 |
| 3 | 300 | 320 | 0 | 50 | 380 | 15 |
| 4 | 800 | 150 | 0 | 50 | 100 | 15 |
| 5 | 500 | 350 | 0 | 50 | 100 | 10 |
| 6 | 50 | 800 | 0 | 50 | 100 | 10 |

Table A4.

The data of obstacles on Map 2.

| No. | X | Y | Z | L | W | H |
|---|---|---|---|---|---|---|
| 1 | 40 | 100 | 0 | 100 | 150 | 5 |
| 2 | 450 | 350 | 0 | 50 | 100 | 10 |
| 3 | 850 | 100 | 0 | 100 | 100 | 20 |
| 4 | 0 | 400 | 0 | 50 | 200 | 10 |
| 5 | 100 | 400 | 0 | 50 | 200 | 10 |
| 6 | 260 | 430 | 0 | 100 | 180 | 15 |
| 7 | 600 | 320 | 0 | 50 | 380 | 15 |
| 8 | 800 | 500 | 0 | 50 | 100 | 15 |
| 9 | 430 | 650 | 0 | 50 | 100 | 10 |
| 10 | 20 | 900 | 0 | 50 | 100 | 10 |
| 11 | 500 | 800 | 0 | 50 | 100 | 10 |
| 12 | 450 | 200 | 0 | 50 | 100 | 10 |
| 13 | 750 | 200 | 0 | 50 | 100 | 10 |

Table A5.

The data of obstacles on Map 3.

| No. | X | Y | Z | L | W | H |
|---|---|---|---|---|---|---|
| 1 | 40 | 100 | 0 | 100 | 150 | 5 |
| 2 | 400 | 150 | 0 | 50 | 100 | 10 |
| 3 | 550 | 100 | 0 | 50 | 100 | 10 |
| 4 | 850 | 100 | 0 | 100 | 100 | 20 |
| 5 | 0 | 400 | 0 | 50 | 200 | 10 |
| 6 | 100 | 400 | 0 | 50 | 200 | 10 |
| 7 | 260 | 430 | 0 | 100 | 180 | 15 |
| 8 | 500 | 320 | 0 | 50 | 100 | 10 |
| 9 | 600 | 320 | 0 | 50 | 380 | 15 |
| 10 | 700 | 300 | 0 | 100 | 100 | 10 |
| 11 | 800 | 500 | 0 | 50 | 100 | 15 |
| 12 | 300 | 700 | 0 | 50 | 100 | 10 |
| 13 | 430 | 650 | 0 | 50 | 100 | 10 |
| 14 | 20 | 900 | 0 | 50 | 100 | 10 |
| 15 | 100 | 800 | 0 | 50 | 100 | 10 |
| 16 | 200 | 800 | 0 | 50 | 100 | 10 |
| 17 | 500 | 800 | 0 | 50 | 100 | 10 |
| 18 | 750 | 750 | 0 | 50 | 100 | 10 |
| 19 | 900 | 900 | 0 | 50 | 100 | 10 |

References

1. Jordan, S.; Moore, J.; Hovet, S.; Box, J.; Perry, J.; Kirsche, K.; Lewis, D.; Tse, Z.T.H. State-of-the-art technologies for UAV inspections. IET Radar Sonar Navig. 2018, 12, 151–164.
2. Hu, H.; Xiong, K.; Qu, G.; Ni, Q.; Fan, P.; Ben Letaief, K. AoI-Minimal Trajectory Planning and Data Collection in UAV-Assisted Wireless Powered IoT Networks. IEEE Internet Things J. 2021, 8, 1211–1223.
3. Yu, X.; Li, C.; Yen, G.G. A knee-guided differential evolution algorithm for unmanned aerial vehicle path planning in disaster management. Appl. Soft Comput. 2020, 98, 106857.
4. Lin, L.; Goodrich, M.A. UAV intelligent path planning for Wilderness Search and Rescue. In Proceedings of the 2009 IEEE/RSJ International Conference on Intelligent Robots and Systems, St. Louis, MO, USA, 10–15 October 2009; pp. 709–714.
5. Shin, Y.; Kim, E. Hybrid path planning using positioning risk and artificial potential fields. Aerosp. Sci. Technol. 2021, 112, 106640.
6. Huang, S.-K.; Wang, W.-J.; Sun, C.-H. A Path Planning Strategy for Multi-Robot Moving with Path-Priority Order Based on a Generalized Voronoi Diagram. Appl. Sci. 2021, 11, 9650.
7. Wang, J.; Li, Y.; Li, R.; Chen, H.; Chu, K. Trajectory planning for UAV navigation in dynamic environments with matrix alignment Dijkstra. Soft Comput. 2022, 26, 12599–12610.
8. Zhang, Z.; Wu, J.; Dai, J.; He, C. A Novel Real-Time Penetration Path Planning Algorithm for Stealth UAV in 3D Complex Dynamic Environment. IEEE Access 2020, 8, 122757–122771.
9. Lu, L.; Zong, C.; Lei, X.; Chen, B.; Zhao, P. Fixed-Wing UAV Path Planning in a Dynamic Environment via Dynamic RRT Algorithm. Mech. Mach. Sci. 2017, 408, 271–282.
10. Liu, Y.; Zheng, Z.; Qin, F.; Zhang, X.; Yao, H. A residual convolutional neural network based approach for real-time path planning. Knowl.-Based Syst. 2022, 242, 108400.
11. Chai, X.; Zheng, Z.; Xiao, J.; Yan, L.; Qu, B.; Wen, P.; Wang, H.; Zhou, Y.; Sun, H. Multi-strategy fusion differential evolution algorithm for UAV path planning in complex environment. Aerosp. Sci. Technol. 2021, 121, 107287.
12. Pehlivanoglu, Y.V.; Pehlivanoglu, P. An enhanced genetic algorithm for path planning of autonomous UAV in target coverage problems. Appl. Soft Comput. 2021, 112, 107796.
13. Phung, M.D.; Ha, Q.P. Safety-enhanced UAV path planning with spherical vector-based particle swarm optimization. Appl. Soft Comput. 2021, 107, 107376.
14. Ali, Z.A.; Han, Z.; Hang, W.B. Cooperative Path Planning of Multiple UAVs by using Max–Min Ant Colony Optimization along with Cauchy Mutant Operator. Fluct. Noise Lett. 2021, 20, 2150002.
15. Yu, X.; Li, C.; Zhou, J. A constrained differential evolution algorithm to solve UAV path planning in disaster scenarios. Knowl.-Based Syst. 2020, 204, 106209.
16. Zhang, X.; Lu, X.; Jia, S.; Li, X. A novel phase angle-encoded fruit fly optimization algorithm with mutation adaptation mechanism applied to UAV path planning. Appl. Soft Comput. 2018, 70, 371–388.
17. Dewangan, R.K.; Shukla, A.; Godfrey, W.W. Three dimensional path planning using Grey wolf optimizer for UAVs. Appl. Intell. 2019, 49, 2201–2217.
18. Xue, J.; Shen, B. Dung beetle optimizer: A new meta-heuristic algorithm for global optimization. J. Supercomput. 2022, 79, 7305–7336.
19. Peres, F.; Castelli, M. Combinatorial Optimization Problems and Metaheuristics: Review, Challenges, Design, and Development. Appl. Sci. 2021, 11, 6449.
20. Jain, G.; Yadav, G.; Prakash, D.; Shukla, A.; Tiwari, R. MVO-based path planning scheme with coordination of UAVs in 3-D environment. J. Comput. Sci. 2019, 37, 101016.
21. Li, K.; Ge, F.; Han, Y.; Wang, Y.A.; Xu, W. Path planning of multiple UAVs with online changing tasks by an ORPFOA algorithm. Eng. Appl. Artif. Intell. 2020, 94, 103807.
22. Zhang, X.; Xu, Y.; Yu, C.; Heidari, A.A.; Li, S.; Chen, H.; Li, C. Gaussian mutational chaotic fruit fly-built optimization and feature selection. Expert Syst. Appl. 2020, 141, 112976.
23. Song, S.; Wang, P.; Heidari, A.A.; Wang, M.; Zhao, X.; Chen, H.; He, W.; Xu, S. Dimension decided Harris hawks optimization with Gaussian mutation: Balance analysis and diversity patterns. Knowl.-Based Syst. 2021, 215, 106425.
24. Gupta, S.; Deep, K. A novel Random Walk Grey Wolf Optimizer. Swarm Evol. Comput. 2019, 44, 101–112.
25. Pichai, S.; Sunat, K.; Chiewchanwattana, S. An Asymmetric Chaotic Competitive Swarm Optimization Algorithm for Feature Selection in High-Dimensional Data. Symmetry 2020, 12, 1782.
26. Mikhalev, A.S.; Tynchenko, V.S.; Nelyub, V.A.; Lugovaya, N.M.; Baranov, V.A.; Kukartsev, V.V.; Sergienko, R.B.; Kurashkin, S.O. The Orb-Weaving Spider Algorithm for Training of Recurrent Neural Networks. Symmetry 2022, 14, 2036.
27. Almotairi, K.H.; Abualigah, L. Hybrid Reptile Search Algorithm and Remora Optimization Algorithm for Optimization Tasks and Data Clustering. Symmetry 2022, 14, 458.
28. Meng, A.-B.; Chen, Y.-C.; Yin, H.; Chen, S.-Z. Crisscross optimization algorithm and its application. Knowl.-Based Syst. 2014, 67, 218–229.
29. Yao, X.; Liu, Y.; Lin, G. Evolutionary programming made faster. IEEE Trans. Evol. Comput. 1999, 3, 82–102.
30. Cai, L.; Qu, S.; Cheng, G. Two-archive method for aggregation-based many-objective optimization. Inf. Sci. 2018, 422, 305–317.
31. Mohammadi-Balani, A.; Nayeri, M.D.; Azar, A.; Taghizadeh-Yazdi, M. Golden eagle optimizer: A nature-inspired metaheuristic algorithm. Comput. Ind. Eng. 2021, 152, 107050.
32. Mohamed, A.W.; Sallam, K.M.; Agrawal, P.; Hadi, A.A.; Mohamed, A.K. Evaluating the performance of meta-heuristic algorithms on CEC 2021 benchmark problems. Neural Comput. Appl. 2023, 35, 1493–1517.
33. Trojovský, P.; Dehghani, M. Pelican Optimization Algorithm: A Novel Nature-Inspired Algorithm for Engineering Applications. Sensors 2022, 22, 855.
34. Seyyedabbasi, A.; Kiani, F. Sand Cat swarm optimization: A nature-inspired algorithm to solve global optimization problems. Eng. Comput. 2022, 1–25.
35. Hashim, F.A.; Houssein, E.H.; Hussain, K.; Mabrouk, M.S.; Al-Atabany, W. Honey Badger Algorithm: New metaheuristic algorithm for solving optimization problems. Math. Comput. Simul. 2022, 192, 84–110.
36. Hegazy, A.E.; Makhlouf, M.A.; El-Tawel, G.S. Improved salp swarm algorithm for feature selection. J. King Saud Univ. - Comput. Inf. Sci. 2020, 32, 335–344.
37. Derrac, J.; García, S.; Molina, D.; Herrera, F. A practical tutorial on the use of nonparametric statistical tests as a methodology for comparing evolutionary and swarm intelligence algorithms. Swarm Evol. Comput. 2011, 1, 3–18.
38. Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN’95 - International Conference on Neural Networks, Perth, Australia, 27 November–1 December 1995; pp. 1942–1948.
39. Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey Wolf Optimizer. Adv. Eng. Softw. 2014, 69, 46–61.
40. Pan, W.-T. A new Fruit Fly Optimization Algorithm: Taking the financial distress model as an example. Knowl.-Based Syst. 2012, 26, 69–74.
41. Ahmadianfar, I.; Bozorg-Haddad, O.; Chu, X. Gradient-based optimizer: A new metaheuristic optimization algorithm. Inf. Sci. 2020, 540, 131–159.
42. Naruei, I.; Keynia, F.; Molahosseini, A.S. Hunter–prey optimization: Algorithm and applications. Soft Comput. 2022, 26, 1279–1314.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).