Symmetry-Based Fusion Algorithm for Bone Age Detection with YOLOv5 and ResNet34

Abstract

1. Introduction

2. Related Works

- The adaptive anchor boxes in YOLOv5 make it easier for the network to learn the features of hand bone images and improve the efficiency of image pre-processing before training; selecting suitable anchor boxes also improves the accuracy of subsequent image feature extraction.

- ResNet34 is trained on the small-joint dataset, and the accuracy of every joint classifier exceeds 90%.

- A bone age detection framework (YARN) that combines YOLOv5 and ResNet34 with the RUS-CHN scoring method is proposed and implemented, yielding a detection model targeted at Chinese children and adolescents.

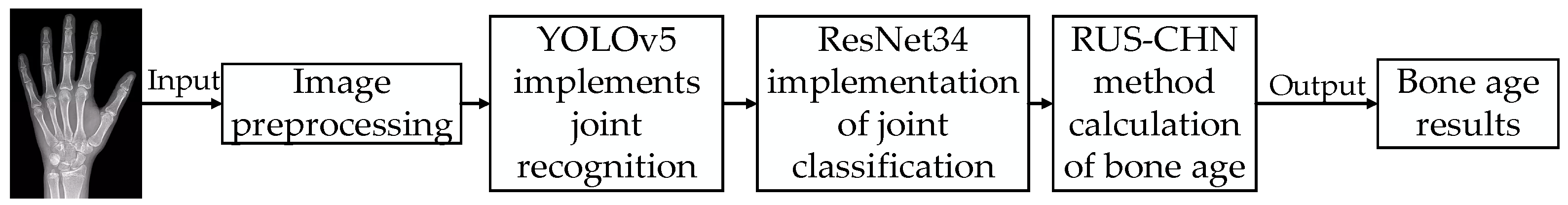

3. Model Design and Implementation

- (1)

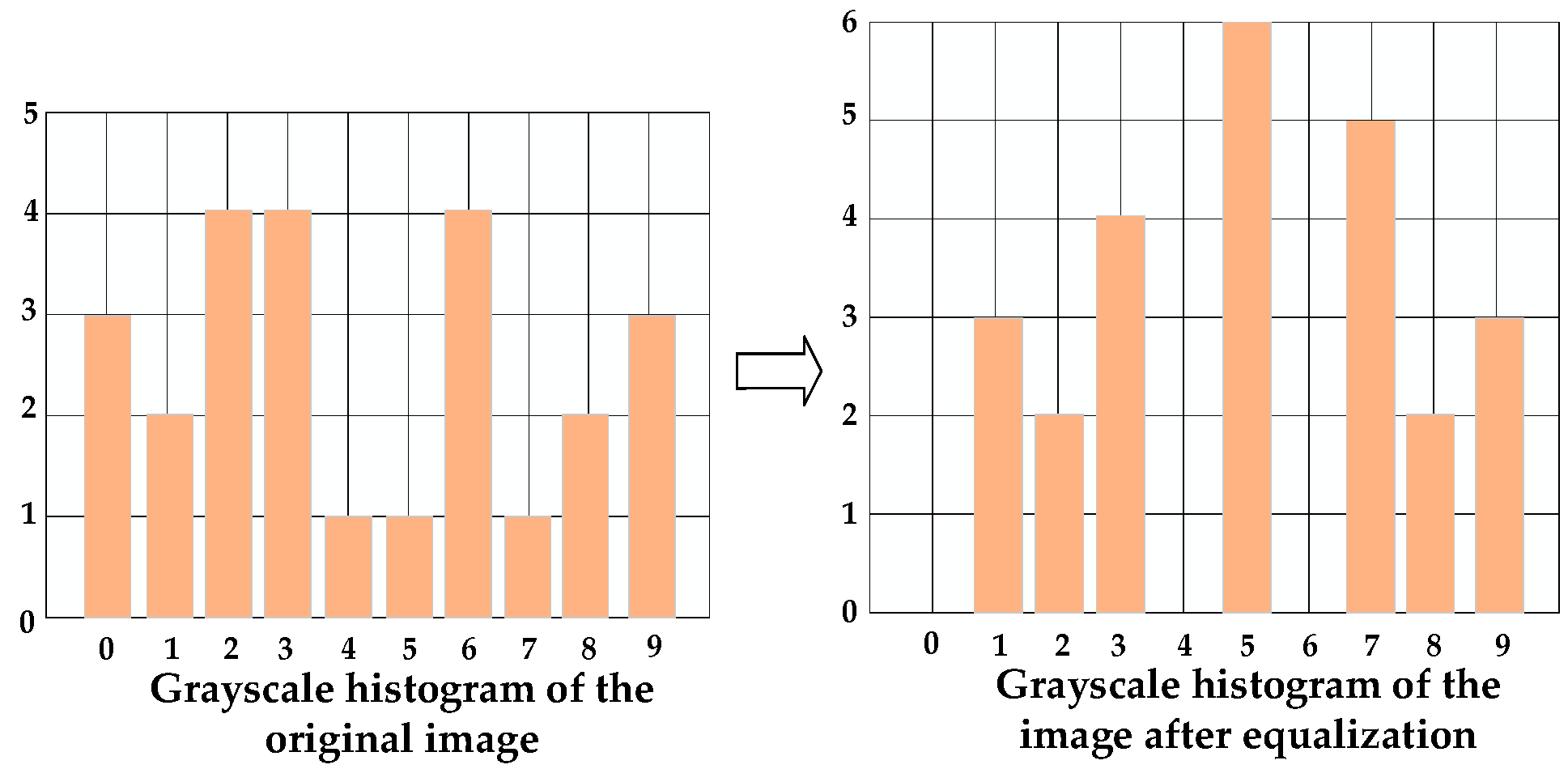

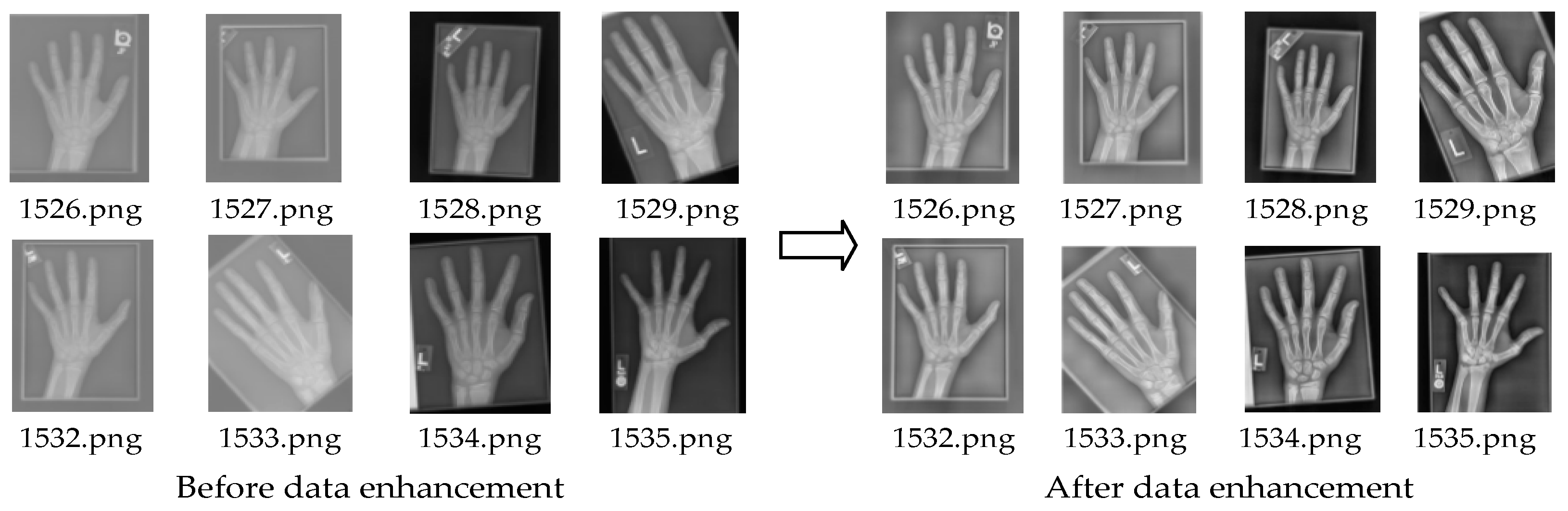

- To improve the quality of the dataset used in model training and to speed up the target detection, the hand bone images will be subjected to contrast-limited adaptive histogram equalization defogging, rotation augmentation, and adaptive scaling expansion square operations as a way to enhance the data of the images themselves.

- (2)

- To improve the accuracy and efficiency of hand bone joint recognition, the study uses the YOLOv5 network structure for training, and 21 joints are identified based on the dataset labels, and then 13 joints are obtained via screening.

- (3)

- To solve the problems of gradient disappearance, gradient explosion, overfitting, and degradation that often accompany deep networks, the study uses the ResNet34 network [3], which is more effective in solving the degradation problem, to weaken the connection between layers of the network through its residual structure.

- (4)

- To further improve the model accuracy, the SGD optimizer is selected for the training of joint classification based on ResNet34 through experimental comparison.

- (5)

- To more closely match the physiological characteristics of Chinese adolescent children, the RUS-CHN scoring method is used as the evaluation marker for bone age detection.
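The square-expansion part of the pre-processing step can be illustrated with a minimal sketch. It assumes a grayscale image stored as a list of rows and pads it, centered, onto a square canvas; the function name `pad_to_square`, the centering, and the fill value are illustrative assumptions, and CLAHE and rotation augmentation are not shown.

```python
def pad_to_square(image, fill=0):
    """Pad a 2-D grayscale image (list of equal-length rows) to a square.

    Assumption: the original content is centered and the border is filled
    with a constant value; the paper does not state these details.
    """
    height = len(image)
    width = len(image[0]) if height else 0
    side = max(height, width)
    top = (side - height) // 2      # rows of padding above the image
    left = (side - width) // 2      # columns of padding left of the image

    canvas = [[fill] * side for _ in range(side)]
    for r, row in enumerate(image):
        # Copy each original row into its shifted position on the canvas.
        canvas[top + r][left:left + width] = row
    return canvas


wide = [[1, 2, 3, 4],
        [5, 6, 7, 8]]
square = pad_to_square(wide)
```

Padding instead of stretching keeps the aspect ratio of the bones unchanged, which is presumably why a square expansion is preferred over a plain resize.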

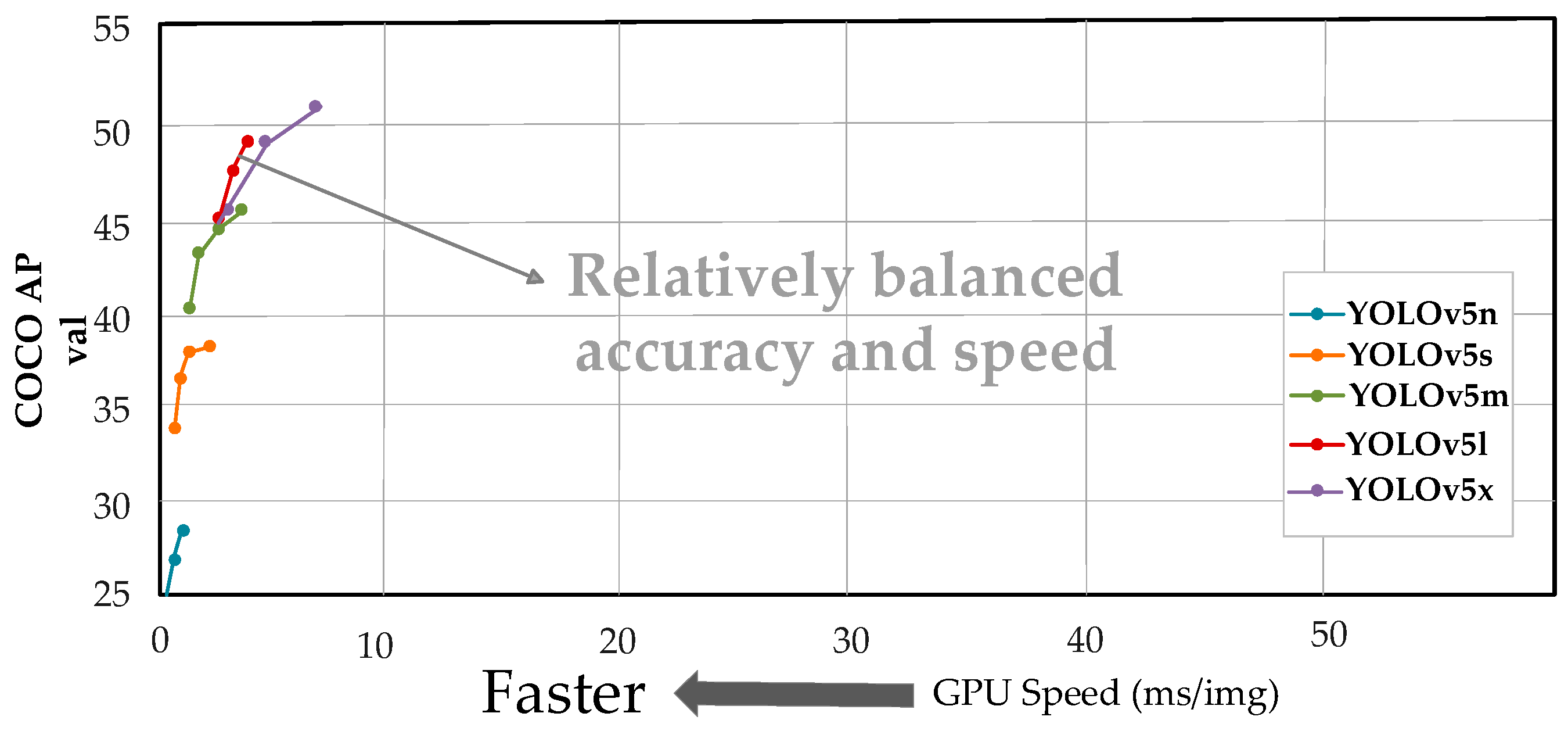

3.1. YOLOv5 Network

3.1.1. Input

3.1.2. Backbone Network

3.1.3. Neck Layer

3.1.4. Prediction Detection Layer

3.2. ResNet34 Network

3.3. SGD-ResNet34 Optimization Network

3.4. Bone Age Detection Model Based on YOLOv5 and ResNet34

| Algorithm 1 YARN Bone Age Detection Algorithm |
|---|
| 1: def opt, label, yolov5(structure), resnet34(structure), model |
| 2: opt = torch.randn(opt_name[0], opt_name[1]) |
| 3: batch reading and data enhancement based on batch_size |
| 4: if opt.update: # update all models (to fix SourceChangeWarning) |
| 5: for opt.weights in ['yolov5l.pt']: |
| 6: detect() |
| 7: get(opt.weights) |
| 8: else: |
| 9: detect() |
| 10: for key, value in all_labels: |
| 11: if opt_name == key: |
| 12: get(opt) |
| 13: else: |
| 14: next opt |
| 15: for epoch in range(epochs): |
| 16: resnet34.train() |
| 17: for opt, label in enumerate(train_bar): |
| 18: for outputs = model(opt): |
| 19: get(outputs) |

- (1) Input the hand bone X-ray images and pre-process them;

- (2) Use the YOLOv5 network to first identify the 21 joints of the hand bone, and then, based on the YOLOv5 detection parameters, filter out the 13 major joints on which the RUS-CHN method focuses;

- (3) Train ResNet34 on the small-joint dataset, use the trained weights to find the corresponding class of each small joint, and thereby assign the 13 joints to 9 major classes;

- (4) Calculate the bone age of the hand using the RUS-CHN method.
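The four steps above can be sketched as a small orchestration function. This is only an outline under stated assumptions: `detect_joints`, `classify_grade`, `grade_to_score`, and `score_to_age` are hypothetical callables standing in for the YOLOv5 detector, the ResNet34 classifiers, and the RUS-CHN lookup tables, none of which are reproduced here, and the image is assumed to be pre-processed already.

```python
from typing import Callable, Dict


def estimate_bone_age(
    image,
    detect_joints: Callable[[object], Dict[str, object]],
    classify_grade: Callable[[str, object], int],
    grade_to_score: Callable[[str, int], float],
    score_to_age: Callable[[float], float],
) -> float:
    """Chain detection, grading, and scoring into one bone age estimate.

    All four callables are hypothetical placeholders; the real models and
    the RUS-CHN score tables must be supplied by the caller.
    """
    joint_crops = detect_joints(image)            # step (2): joint name -> crop
    total_score = 0.0
    for joint, crop in joint_crops.items():
        grade = classify_grade(joint, crop)       # step (3): maturity grade
        total_score += grade_to_score(joint, grade)
    return score_to_age(total_score)              # step (4): score -> bone age


# Usage with toy stand-ins (not real models or real RUS-CHN values):
age = estimate_bone_age(
    image=None,
    detect_joints=lambda img: {"Radius": None, "Ulna": None},
    classify_grade=lambda joint, crop: 3,
    grade_to_score=lambda joint, grade: grade * 2.0,
    score_to_age=lambda score: score / 2.0,
)
```

Passing the stages in as callables keeps the detector and the classifiers independent, so either can be retrained or swapped without touching the scoring logic.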

4. Experimental Results and Analysis

4.1. Public Dataset Preparation

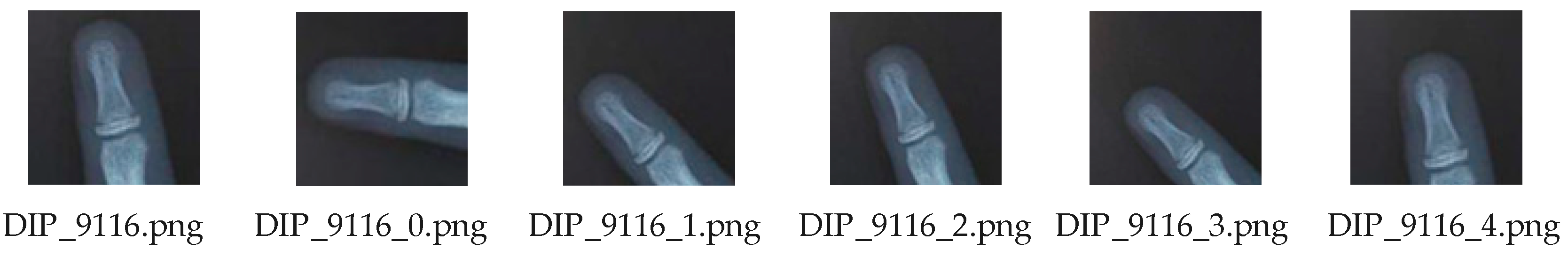

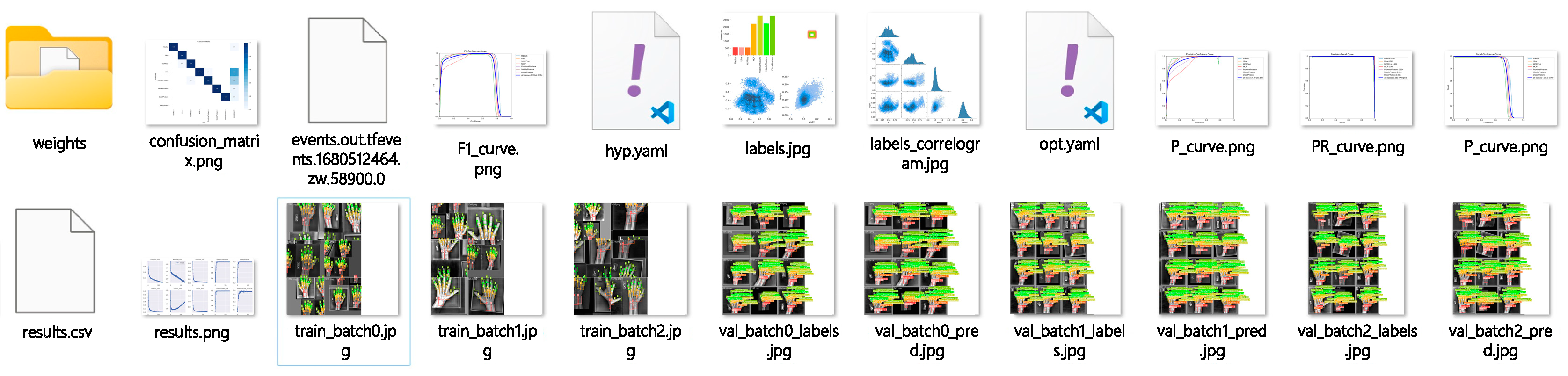

4.2. YOLOv5 Implementation of Joint Recognition

4.2.1. Model Training

- (1) Divide the data into a training set, a validation set, and a test set. The training set is used to estimate the model parameters so that the model learns patterns close to the real environment and can make predictions for real situations; the validation set is used to make a preliminary assessment of the network hyperparameters and of how well the model avoids overfitting during training; and the test set is used to evaluate the prediction performance of the model. The data in the training set and the test set should not overlap, and the training set should be much larger than the test set.

- (2) Modify the data paths. The paths of the relevant datasets should be updated so that training does not fail because a directory or data file does not exist.

- (3) Modify the categories. The COCO dataset has 80 categories, whereas this experiment uses 7 categories to identify joints.

- (4) Write the YAML file. The seven categories are written in YAML file format.

- (5) Adjust the hyperparameters for training. YOLOv5 defines many parameters, which can be modified as needed during training and testing, and the parameters for this experiment are shown in Table 3.
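Step (1) can be illustrated with a minimal sketch of a non-overlapping split. The 80/10/10 proportions, the fixed seed, and the function name `split_dataset` are assumptions chosen for illustration; the paper does not state the ratios it used.

```python
import random


def split_dataset(items, val_fraction=0.1, test_fraction=0.1, seed=0):
    """Shuffle once, then cut into disjoint train/validation/test lists.

    The fractions and the seed are illustrative assumptions.
    """
    items = list(items)
    random.Random(seed).shuffle(items)   # reproducible shuffle
    n_test = int(len(items) * test_fraction)
    n_val = int(len(items) * val_fraction)
    test = items[:n_test]
    val = items[n_test:n_test + n_val]
    train = items[n_test + n_val:]       # everything left goes to training
    return train, val, test


train, val, test = split_dataset(range(100))
```

Because each sample lands in exactly one slice of a single shuffled list, the training and test sets cannot overlap, and the training set stays much larger than the other two.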

4.2.2. Detection Results of YOLOv5

4.3. ResNet34 Implementation of Joint Classification

4.4. Algorithm Comparison

4.5. Bone Age Detection Results

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| TW | Tanner–Whitehouse |

| YOLOv5 | You Only Look Once-v5 |

| ResNet34 | Residual Network-34 |

| YARN | A bone age detection framework combining YOLOv5 and ResNet34 |

| RUS-CHN | China 05 RUS–CHN |

| CNN | Convolutional Neural Network |

| BAA | Bone Age Assessment |

| GP | Greulich–Pyle |

| ReLU | Rectified Linear Units |

| PReLU | Parametric Rectified Linear Units |

| MAE | Mean Absolute Error |

| RB-FCL | A region-based feature connectivity layer |

| R-CNN | A region-based convolutional neural network |

| CBAM | Convolutional Block Attention Module |

| VGG | Visual Geometry Group |

| ROI | Region Of Interest |

| CHN | the standards of skeletal maturity of hand and wrist for Chinese method |

| SGD | Stochastic Gradient Descent |

| Adam | Adaptive Moment Estimation |

| CBL | Convolution, Batch Normalization, Leaky ReLU |

| CPS | Cyber Physical Systems |

| SPP | Spatial Pyramid Pooling |

| NCHW | Tensor layout: batch size (N), channels (C), height (H), width (W) |

| FPN | Feature Pyramid Network |

| PAN | Path Aggregation Network |

| NMS | Non-Maximum Suppression |

| BN | Batch Normalization |

| RNN | Recurrent Neural Network |

References

- Ning, G.; Qu, H.B.; Liu, G.J.; Wu, K.M.; Xie, S.X. Diagnostic Test of TW System Bone Age of the Radius Ulna and Short of Bones in Chinese Girls With Idiopathic Precocious Puberty. Chin. J. Obs./Gyne Pediatr. (Electron. Version) 2008, 4, 16–20.

- Nepal, U.; Eslamiat, H. Comparing YOLOv3, YOLOv4 and YOLOv5 for Autonomous Landing Spot Detection in Faulty UAVs. Sensors 2022, 22, 464.

- He, K.M.; Zhang, X.Y.; Ren, S.Q.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Peng, L.Y.; Duan, Y.M.; Wang, Y.Q.; Peng, T.; Niu, X.K. The value of novel artificial intelligence in the determination of bone age in regional population. China Med. Equip. 2021, 18, 113–117.

- Zhang, X.Y. Baid Paddlepaddle: Independent AI based deep learning accelerates industrial upgrading. In-Depth Interview 2022, 11, 26–37.

- Davis, L.M.; Theobald, B.J.; Bagnall, A. Automated Bone Age Assessment Using Feature Extraction. Lect. Notes Comput. Sci. 2012, 7435, 43–51.

- Lee, H.; Tajmir, S.; Lee, J.; Zissen, M.; Yeshiwas, B.A.; Alkasab, T.K.; Choy, G.; Do, S. Fully Automated Deep Learning System for Bone Age Assessment. J. Digit. Imaging 2017, 4, 427–441.

- Zhan, M.J.; Zhang, S.J.; Liu, L.; Liu, H.; Bai, J.; Tian, X.M.; Ning, G.; Li, Y.; Zhang, K.; Chen, H.; et al. Automated bone age assessment of left hand and wrist in Sichuan Han adolescents based on deep learning. Chin. J. Forensic Med. 2019, 34, 427–432.

- Wibisono, A.; Mursanto, P. Multi Region-Based Feature Connected Layer (RB-FCL) of deep learning models for bone age assessment. J. Big Data 2020, 7, 67.

- Zhang, S.; Zhang, J.H. Bone Age Assessment Method on X-ray Images of Pediatric Hand Bone Based on Deep Learning. Space Med. Med. Eng. 2021, 34, 252–259.

- Wang, J.Q.; Mei, L.Y.; Zhang, J.H. Bone Age Assessment for X-ray Images of Hand Bone Based on Deep Learning. Comput. Eng. 2021, 47, 291–297.

- Ding, W.L.; Yu, J.; Li, T.; Ding, X. Bone age assessment of the carpal region based on improved bilinear network. J. Zhe Jiang Univ. Technol. 2021, 49, 511–519.

- Lee, K.C.; Lee, K.H.; Kang, C.H.; Ahn, K.S.; Chung, L.Y.; Lee, J.J.; Hong, S.J.; Kim, B.H.; Shim, E. Clinical Validation of a Deep Learning-Based Hybrid (Greulich-Pyle and Modified Tanner-Whitehouse) Method for Bone Age Assessment. Korean J. Radiol. 2021, 22, 2017–2025.

- Mao, K.J.; Wu, K.X.; Lu, W.; Chen, L.J.; Mao, J.F. A Study of the CHN Intelligent Bone Age Assessment Method concerning Atlas Developmental Indication. J. Electron. Inf. Technol. 2023, 45, 958–967.

- Kleinberg, R.; Li, Y.Z.; Yuan, Y. An Alternative View: When Does SGD Escape Local Minima? In Proceedings of the 35th International Conference on Machine Learning, PMLR, Stockholm, Sweden, 10–15 July 2018; Volume 80, pp. 2698–2707.

- Tang, S.H.; Teng, Z.S.; Sun, B.; Hu, Q.; Pan, X.F. Improved BP neural network with ADAM optimizer and the application of dynamic weighing. J. Electron. Meas. Instrum. 2021, 35, 127–135.

- Li, X.B.; Li, Y.G.; Guo, N.; Fan, Z. Mask detection algorithm based on YOLOv5 integrating attention mechanism. J. Graph. 2023, 44, 16–25.

- Chen, C.Q.; Fan, Y.C.; Wang, L. Logo Detection Based on Improved Mosaic Data Enhancement and Feature Fusion. Comput. Meas. Control 2022, 30, 188–194+201.

- Fang, S.Q.; Hu, P.L.; Huang, Y.Y.; Zhang, X. Optimization and Application of K-means Algorithm. Mod. Inf. Technol. 2023, 7, 111–115.

- Wu, L.Z.; Wang, X.L.; Zhang, Q.; Wang, W.H.; Li, C. An object detection method of a falling person based on optimized YOLOv5s. J. Graph. 2022, 43, 791–802.

- Huang, C.; Jiang, H.; Quan, Z.; Zuo, K.; He, N.; Liu, W.C. Design and Implementation of Batched GEMM for Deep Learning. Chin. J. Comput. 2022, 45, 225–239.

- Veit, A.; Matera, T.; Neumann, L.; Matas, J.; Belongie, S. COCO-Text: Dataset and Benchmark for Text Detection and Recognition in Natural Images. arXiv 2016, arXiv:1601.07140.

- Xu, X.P.; Kou, J.C.; Su, L.J.; Liu, G.J. Classification Method of Crystalline Silicon Wafer Based on Residual Network and Attention Mechanism. Math. Pract. Theory 2023, 53, 1–11.

- Li, Z.H.; Li, R.G.; Li, X.F. Improved LFM-SGD collaborative filtering recommendation algorithm based on implicit data. Intell. Comput. Appl. 2023, 13, 52–57.

- Sharkawy, A.N. Principle of neural network and its main types. J. Adv. Appl. Comput. Math. 2020, 7, 8–19.

- Wang, J.; Pang, Y.W. X-Ray Luggage Image Enhancement Based on CLAHE. J. Tianjin Univ. 2010, 43, 194–198.

| Network Layer Name | Output Size | Network Layer Structure |

|---|---|---|

| Conv1 | 112 × 112 | 7 × 7, 64, stride 2; 3 × 3 max pooling, stride 2 |

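| Conv2_x | 56 × 56 | [3 × 3, 64; 3 × 3, 64] × 3 |

| Conv3_x | 28 × 28 | [3 × 3, 128; 3 × 3, 128] × 4 |

| Conv4_x | 14 × 14 | [3 × 3, 256; 3 × 3, 256] × 6 |

| Conv5_x | 7 × 7 | [3 × 3, 512; 3 × 3, 512] × 3 |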

| | 1 × 1 | Average pooling, 1000-d fc, softmax |
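As a cross-check of the table above, the following sketch counts the weighted layers and derives the output sizes. It assumes the standard ResNet-34 layout from He et al. [3] (two 3 × 3 convolutions per residual block and 3, 4, 6, and 3 blocks in Conv2_x to Conv5_x) and a 224 × 224 input; the constant and function names are illustrative.

```python
# Assumption: standard ResNet-34 block counts per stage (He et al.).
BLOCKS_PER_STAGE = {"Conv2_x": 3, "Conv3_x": 4, "Conv4_x": 6, "Conv5_x": 3}
CONVS_PER_BLOCK = 2  # each basic residual block holds two 3x3 convolutions


def resnet34_weighted_layers():
    """Count the layers with weights: stem conv + residual convs + fc."""
    stem = 1
    fully_connected = 1
    residual = CONVS_PER_BLOCK * sum(BLOCKS_PER_STAGE.values())
    return stem + residual + fully_connected


def stage_output_sizes(input_size=224):
    """Spatial side length after each stage, halving at every downsample."""
    sizes = {}
    size = input_size // 2          # Conv1: 7x7 convolution, stride 2
    sizes["Conv1"] = size
    size //= 2                      # 3x3 max pooling, stride 2
    sizes["Conv2_x"] = size
    for stage in ("Conv3_x", "Conv4_x", "Conv5_x"):
        size //= 2                  # first block of each stage uses stride 2
        sizes[stage] = size
    return sizes


depth = resnet34_weighted_layers()
sizes = stage_output_sizes()
```

The count is 1 + 2 × (3 + 4 + 6 + 3) + 1 = 34, which is where the "34" in the network name comes from.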

| Joint Name | DIP | DIP First | MCP | MCP First | MIP | PIP | PIP First | Radius | Ulna |
|---|---|---|---|---|---|---|---|---|---|
| Number of Levels | 11 | 11 | 10 | 11 | 12 | 12 | 12 | 14 | 12 |

| Parameter Name | Value | Role |

|---|---|---|

| weights | yolov5l.pt | Pre-trained YOLOv5 weights used for training |

| data | 'data/my_data.yaml' | YAML file defining the categories and their number |

| epochs | 200 | Training rounds |

| batch-size | -1 | Automatic calculation of the batch size |
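The parameters in this table correspond to command-line options of the YOLOv5 `train.py` script. The sketch below assembles such a command; it assumes the script is launched as `python train.py` from the root of a YOLOv5 checkout, and `build_train_command` is a hypothetical helper name.

```python
import shlex


def build_train_command(weights="yolov5l.pt", data="data/my_data.yaml",
                        epochs=200, batch_size=-1):
    """Return the argument list for a YOLOv5 training run.

    Assumption: invoked from the YOLOv5 repository root; a batch size of
    -1 asks YOLOv5 to pick the batch size automatically.
    """
    return [
        "python", "train.py",
        "--weights", weights,
        "--data", data,
        "--epochs", str(epochs),
        "--batch-size", str(batch_size),
    ]


command = build_train_command()
command_line = shlex.join(command)   # printable form of the same command
```

Keeping the arguments as a list makes the command safe to hand to `subprocess.run` without shell quoting problems.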

| Files/Folders | Instructions |

|---|---|

| weights | Best and last weights from training (best.pt, last.pt) |

| Confusion_matrix.png | Confusion matrix |

| F1_curve | Relationship between the F1 score (the harmonic mean of precision and recall) and the confidence threshold |

| train_batch | Training results |

| val_batch | Validation results |

| Parameter Name | Value | Role |

|---|---|---|

| weights | runs/train/exp/weights/best.pt | The best weights obtained from YOLOv5 training |

| source | ‘E:/code/Bone/Img’ | Path of the dataset to detect |

| data | ‘data/my_data.yaml’ | Yaml files on categories and category numbers |

| conf-thres | 0.5 | Confidence threshold |

| iou-thres | 0.25 | IoU threshold |

| save-txt | true | Retain target information txt file |
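How the confidence and IoU thresholds in this table interact can be shown with a minimal, class-agnostic sketch of greedy non-maximum suppression. It is a simplified illustration, not YOLOv5's own implementation (which works per class on tensors); boxes are assumed to be `(x1, y1, x2, y2)` tuples, and the function names are illustrative.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(detections, conf_thres=0.5, iou_thres=0.25):
    """Greedy NMS over a list of (box, score) pairs.

    Detections below conf_thres are dropped first; the rest are visited
    from highest to lowest score, and a box is kept only if it overlaps
    every already-kept box by at most iou_thres.
    """
    candidates = sorted(
        (d for d in detections if d[1] >= conf_thres),
        key=lambda d: d[1],
        reverse=True,
    )
    kept = []
    for box, score in candidates:
        if all(iou(box, kept_box) <= iou_thres for kept_box, _ in kept):
            kept.append((box, score))
    return kept


detections = [
    ((0, 0, 10, 10), 0.9),    # kept: highest score
    ((1, 1, 11, 11), 0.8),    # suppressed: IoU with the first box is 81/119
    ((20, 20, 30, 30), 0.7),  # kept: no overlap with the first box
    ((40, 40, 50, 50), 0.3),  # dropped: below the confidence threshold
]
kept = nms(detections)
```

A low IoU threshold such as 0.25 suppresses aggressively, which suits hand radiographs where each joint should yield a single box.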

| 13 Joints | Joint Name | 9 Categories → Classifying Grades |
|---|---|---|
| MCPFirst | Metacarpal bone I | MCPFirst→11 |
| MCPThird | Metacarpal bone III | MCP→10 |
| MCPFifth | Metacarpal bone V | MCP→10 |
| DIPFirst | Distal phalanx I | DIPFirst→11 |
| DIPThird | Distal phalanx III | DIP→11 |
| DIPFifth | Distal phalanx V | DIP→11 |
| PIPFirst | Proximal phalanx I | PIPFirst→12 |
| PIPThird | Proximal phalanx III | PIP→12 |
| PIPFifth | Proximal phalanx V | PIP→12 |
| MIPThird | Middle phalanx III | MIP→12 |
| MIPFifth | Middle phalanx V | MIP→12 |
| Radius | Radius | Radius→14 |
| Ulna | Ulna | Ulna→12 |
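The mapping in this table can be written down as a lookup, which makes the 13-to-9 reduction easy to verify. The sketch assumes that the two middle-phalanx joints share the MIP classifier, in line with the nine categories and level counts listed earlier; the dictionary and function names are illustrative.

```python
# Each of the 13 detected joints is routed to one of 9 classifier categories.
JOINT_TO_CLASSIFIER = {
    "MCPFirst": "MCPFirst", "MCPThird": "MCP", "MCPFifth": "MCP",
    "DIPFirst": "DIPFirst", "DIPThird": "DIP", "DIPFifth": "DIP",
    "PIPFirst": "PIPFirst", "PIPThird": "PIP", "PIPFifth": "PIP",
    "MIPThird": "MIP", "MIPFifth": "MIP",
    "Radius": "Radius", "Ulna": "Ulna",
}

# Number of maturity grades each classifier distinguishes.
GRADE_COUNTS = {
    "MCPFirst": 11, "MCP": 10, "DIPFirst": 11, "DIP": 11,
    "PIPFirst": 12, "PIP": 12, "MIP": 12, "Radius": 14, "Ulna": 12,
}


def classifier_for(joint):
    """Return (classifier category, number of grades) for a detected joint."""
    category = JOINT_TO_CLASSIFIER[joint]
    return category, GRADE_COUNTS[category]


example = classifier_for("MIPFifth")
```

Sharing one classifier between the third and fifth fingers means only nine ResNet34 models need to be trained instead of thirteen.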

| Optimizer | Definition |
|---|---|
| SGD | opt = torch.optim.SGD(model.parameters(), lr=0.001, momentum=0.9, weight_decay=0.005) |
| Adam | opt = torch.optim.Adam(model.parameters(), lr=0.001, betas=(0.9, 0.999)) |
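To make the SGD settings concrete, here is a plain-Python sketch of one update step with momentum and L2 weight decay, using the same hyperparameter values as the SGD row. It mirrors the update rule documented for `torch.optim.SGD` with zero dampening and no Nesterov momentum, but it is an illustration on scalar parameters, not the library code; `sgd_momentum_step` is a hypothetical name.

```python
def sgd_momentum_step(params, grads, velocity,
                      lr=0.001, momentum=0.9, weight_decay=0.005):
    """Apply one SGD step in place and return (params, velocity).

    For every parameter p with gradient g:
        g <- g + weight_decay * p        (L2 penalty)
        v <- momentum * v + g            (velocity, starts at zero)
        p <- p - lr * v
    """
    for i, (p, g) in enumerate(zip(params, grads)):
        g = g + weight_decay * p
        velocity[i] = momentum * velocity[i] + g
        params[i] = p - lr * velocity[i]
    return params, velocity


params, velocity = sgd_momentum_step([1.0], [2.0], [0.0])
```

With a parameter of 1.0 and a gradient of 2.0, the decayed gradient is 2.005, so the first step moves the parameter to about 0.997995; later steps grow as the velocity accumulates.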

| Joint | SGD | Adam |
|---|---|---|
| MCPFirst | 0.952 | 0.832 |
| MCP | 0.928 | 0.804 |
| DIPFirst | 0.917 | 0.804 |
| DIP | 0.945 | 0.859 |
| PIPFirst | 0.974 | 0.888 |
| PIP | 0.958 | 0.828 |
| MIP | 0.962 | 0.856 |
| Radius | 0.929 | 0.838 |
| Ulna | 0.931 | 0.835 |

| Joint Bone | YOLOv5 | ResNet34 | YARN |

|---|---|---|---|

| MCPFirst | 0.942 | 0.905 | 0.951 |

| MCP | 0.934 | 0.912 | 0.960 |

| DIPFirst | 0.915 | 0.910 | 0.942 |

| DIP | 0.935 | 0.923 | 0.953 |

| PIPFirst | 0.927 | 0.916 | 0.948 |

| PIP | 0.956 | 0.914 | 0.965 |

| MIP | 0.961 | 0.907 | 0.962 |

| Radius | 0.931 | 0.911 | 0.939 |

| Ulna | 0.940 | 0.929 | 0.944 |

| Average Accuracy Rate | 0.938 | 0.914 | 0.952 |
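The last row of this table can be reproduced from the per-joint values. The short check below recomputes the three column means and rounds them to three decimals; the variable and function names are illustrative.

```python
# Per-joint accuracy as (YOLOv5, ResNet34, YARN), copied from the table.
ACCURACY = {
    "MCPFirst": (0.942, 0.905, 0.951),
    "MCP":      (0.934, 0.912, 0.960),
    "DIPFirst": (0.915, 0.910, 0.942),
    "DIP":      (0.935, 0.923, 0.953),
    "PIPFirst": (0.927, 0.916, 0.948),
    "PIP":      (0.956, 0.914, 0.965),
    "MIP":      (0.961, 0.907, 0.962),
    "Radius":   (0.931, 0.911, 0.939),
    "Ulna":     (0.940, 0.929, 0.944),
}


def column_means(table):
    """Mean of each column across all joints, rounded to three decimals."""
    columns = zip(*table.values())
    return tuple(round(sum(col) / len(col), 3) for col in columns)


yolov5_mean, resnet34_mean, yarn_mean = column_means(ACCURACY)
```

The recomputed means are 0.938, 0.914, and 0.952, matching the reported average accuracy rates.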

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sheng, W.; Shen, J.; Huang, Q.; Liu, Z.; Lin, J.; Zhu, Q.; Zhou, L. Symmetry-Based Fusion Algorithm for Bone Age Detection with YOLOv5 and ResNet34. Symmetry 2023, 15, 1377. https://doi.org/10.3390/sym15071377
