Towards Reliable Federated Learning Using Blockchain-Based Reverse Auctions and Reputation Incentives

Abstract

1. Introduction

- We propose a BlockChain-based Decentralized Federated Learning (BCD-FL) model, which mitigates the single-point-of-failure problem of traditional FL and uses blockchain to build a secure and trustworthy system that protects data privacy and ensures fairness. In addition, we use the InterPlanetary File System (IPFS) [23] as the storage medium for training models in BCD-FL, which reduces storage cost because only the IPFS hashes of models are recorded on-chain.

- We design a smart-contract incentive mechanism based on a reverse auction, which incentivizes data owners to join and contribute high-quality models within a limited payment budget.

- To improve model quality in BCD-FL, we design a reputation mechanism based on the proposed marginal-contribution model quality detection method, which translates the quality of each training model into the reputation of its data owner.

- Finally, we conduct extensive experiments on a public dataset (MNIST). The experimental results show that the proposed approaches effectively improve the quality of FL model updates while satisfying individual rationality, computational efficiency, budget balance, and truthfulness.

2. Related Work

2.1. Federated Learning

2.2. Incentive Mechanism

2.3. Blockchain-Based Federated Learning

3. Preliminaries

3.1. Federated Learning

3.2. Blockchain

3.3. Smart Contract

3.4. InterPlanetary File System

4. System Model

4.1. Proposed System Architecture

- Data Owner: A data owner is a node that holds a local dataset that is not publicly available in FL. Data owners can be candidates or participants. Candidates are nodes that are eligible to participate in an FL task and can bid on it by calling a smart contract. BCD-FL carries out the selection, and only successful bidders can participate in the task. Once selected, a candidate becomes a participant and can call the corresponding smart contracts to carry out training. Only participants can receive rewards through smart contracts, which ensures that only authorized nodes take part in the FL task and that they are rewarded appropriately for their contributions.

- Task Publisher: The task publisher is the entity that initiates the training task. It specifies the type of data needed and the model structure, provides the payment budget for each FL round, and publishes this information to the BCD-FL platform. Data owners that hold local datasets and have computational resources can join the learning process by bidding on the training task. Each data owner develops a bidding strategy based on factors such as its computational power, data volume, and data quality, and invokes the corresponding smart contract to bid on the task publisher's offer.

- Blockchain Network: In BCD-FL, the blockchain stores the IPFS hashes of model weights. Each data owner participates in FL by initiating a transaction that invokes a smart contract. After validation, the transaction is packaged into a new block, which is appended to the blockchain once consensus is reached.

- Learning Model: The learning model, also called the training model, is the model trained on the data owners' datasets. BCD-FL distinguishes two types of learning models: local models and the global model. Each participant trains a local model on its own dataset. The global model is produced through the cooperation of all winning nodes: the task publisher evaluates the quality of their local models and aggregates them.

- Multi-smart Contracts: To enhance the trustworthiness and reliability of BCD-FL, we use smart contracts throughout the execution of FL tasks. BCD-FL includes five types of smart contracts, each designed for a different stage of the process: the Task Initialization Contract, Auction Contract, FL Contract, Reputation Contract, and Reward Contract. Together, these contracts are intended to make the FL process robust, secure, and fair.
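The Blockchain Network role above, where model weights live in IPFS and only their hashes go on-chain, can be illustrated with a small in-memory sketch. It assumes hypothetical `MockIPFS` and `MockLedger` classes and uses a hex SHA-256 digest as the content address; real IPFS CIDs use a multihash/CID encoding computed over chunked data, and a real deployment would talk to the IPFS daemon and a Solidity contract instead.

```python
import hashlib
import json
from typing import Dict, List


class MockIPFS:
    """Hypothetical in-memory stand-in for IPFS: content is keyed by its SHA-256 digest."""

    def __init__(self) -> None:
        self._store: Dict[str, bytes] = {}

    def add(self, data: bytes) -> str:
        digest = hashlib.sha256(data).hexdigest()
        self._store[digest] = data
        return digest

    def cat(self, digest: str) -> bytes:
        data = self._store[digest]
        # Re-hashing lets any reader verify that the content matches its address.
        if hashlib.sha256(data).hexdigest() != digest:
            raise ValueError("stored content does not match its hash")
        return data


class MockLedger:
    """Hypothetical append-only ledger that records model hashes, never model weights."""

    def __init__(self) -> None:
        self.records: List[Dict[str, str]] = []

    def record_model_hash(self, sender: str, model_hash: str) -> None:
        self.records.append({"sender": sender, "model_hash": model_hash})


ipfs, ledger = MockIPFS(), MockLedger()

# A data owner serializes its (toy) weights, stores them off-chain, and records only the hash on-chain.
weights = {"fc3.bias": [0.0] * 10}
payload = json.dumps(weights).encode("utf-8")
model_hash = ipfs.add(payload)
ledger.record_model_hash("owner-1", model_hash)

# Any reader of the ledger can fetch the weights by hash and check them.
restored = json.loads(ipfs.cat(ledger.records[-1]["model_hash"]))
print(len(model_hash), restored == weights)  # 64 True
```

Whatever the size of the model, the ledger entry stays a fixed-length digest, which is what keeps on-chain storage small in this design.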

4.2. Multi-Smart Contracts in BCD-FL

- (1) Task Initialization Contract: When the task publisher initiates a new FL task, BCD-FL uses this contract to start the task in a systematic and organized way. The contract defines the following function:

- InitializeTask(): This function can be used by the task publisher to write the model parameter type, model structure, and payment budget information for an FL task to BCD-FL.

- (2) Auction Contract: After a task is published, interested candidates can bid through this contract during the auction period. After the auction ends, the task publisher combines the candidates' reputations and bids to select the winning nodes. The main functions are as follows:

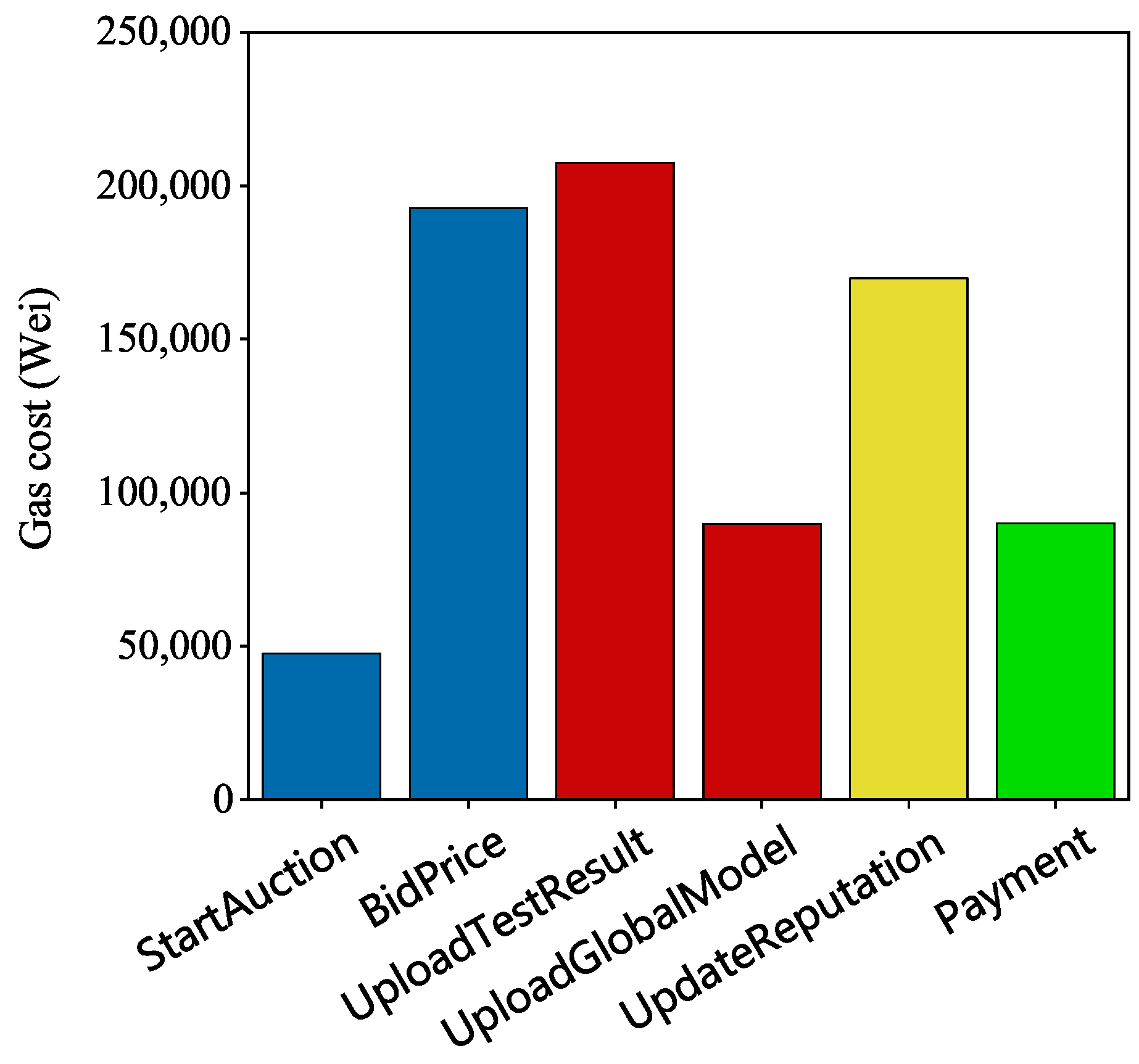

- StartAuction(): The task publisher calls this function to initiate the auction. It allows the task publisher to set the auction time and bid range, and it verifies the caller's identity before the auction proceeds. Only the task publisher can activate this function, which keeps the management of FL tasks within BCD-FL accountable and controlled.

- BidPrice(): Candidates call this function to submit their bids, specifying the bid price and the size of the data they will use. A candidate that does not bid during the auction period is considered to have abandoned the auction.

- (3) Federated Learning Contract: This contract serves as the communication channel between the task publisher and the data owners. Data owners use it to retrieve the hash of the global model and then fetch the model from IPFS. The task publisher uses it to obtain the hashes of the local models and to upload the evaluation results and the IPFS hash of the aggregated global model. The main functions are as follows:

- DownloadGlobalModel(): A winning node invokes this function to retrieve the hash of the latest global model and then obtains that model from IPFS.

- UploadLocalModel(): After obtaining the global model, the winning node trains it on its local data, uploads the trained local model to IPFS, and then invokes this function to upload the IPFS hash of the local model.

- DownloadLocalModel(): The task publisher invokes this function to obtain the IPFS hashes of all local models uploaded by the winning nodes, and then retrieves the local models from IPFS using these hashes. This function also verifies the caller's identity, which protects the integrity of the FL process and prevents unauthorized access to the models.

- UploadTestResult(): After downloading all local models, the task publisher evaluates the quality of each of them. It then calls this function to upload the evaluation results, and the function calls the Reputation Contract to update the nodes' reputations.

- UploadGlobalModel(): After aggregating the local models into a new global model and uploading it to IPFS, the task publisher uses this function to update the IPFS hash of the global model.

- (4) Reputation Contract: The Reputation Contract is designed to build trust by rewarding good behavior and penalizing poor behavior among data owners. It increases the reputation of nodes that behave well and decreases the reputation of nodes that upload low-quality models. The main functions are as follows:

- UpdateReputation(): This function updates a node's reputation by combining the uploaded evaluation results with the node's historical reputation values. Because reputation indirectly reflects the quality of a node's local models, the task publisher can use it to select high-quality models for aggregation.

- QueryReputation(): A data owner can call this function to view its current reputation as well as its historical reputation record.

- (5) Reward Contract: This contract pays rewards to the winning nodes. Its main function is as follows:

- Payment(): The task publisher considers the reputation of the data owner and the bid price, and then makes a payment to the winning node based on the reward payment algorithm.
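The access-control and timing rules described for the Auction Contract can be sketched in Python. This is a hypothetical mirror of StartAuction() and BidPrice(), not the authors' Solidity code: the class name, the snake_case method signatures, and the explicit `now` argument (standing in for the block timestamp a contract would read) are all assumptions; the example bid values are taken from the ranges in Table 3.

```python
from typing import Dict, Iterable, List, Optional, Tuple


class AuctionContract:
    """Hypothetical Python mirror of the Auction Contract (StartAuction / BidPrice)."""

    def __init__(self, publisher: str) -> None:
        self.publisher = publisher
        self.window: Optional[Tuple[float, float]] = None
        self.bid_range: Optional[Tuple[float, float]] = None
        self.bids: Dict[str, Tuple[float, int]] = {}  # candidate -> (bid price, data size)

    def start_auction(self, caller: str, start: float, end: float,
                      min_bid: float, max_bid: float) -> None:
        # Identity check: only the task publisher may open the auction.
        if caller != self.publisher:
            raise PermissionError("only the task publisher can start the auction")
        self.window = (start, end)
        self.bid_range = (min_bid, max_bid)

    def bid_price(self, caller: str, price: float, data_size: int, now: float) -> None:
        # `now` stands in for the block timestamp a real contract would read.
        if self.window is None or self.bid_range is None:
            raise RuntimeError("the auction has not started")
        start, end = self.window
        if not start <= now <= end:
            raise RuntimeError("bid submitted outside the auction period")
        low, high = self.bid_range
        if not low <= price <= high:
            raise ValueError("bid price outside the allowed range")
        self.bids[caller] = (price, data_size)

    def abandoned(self, candidates: Iterable[str]) -> List[str]:
        # Candidates with no bid in the auction period are treated as having abandoned it.
        return [c for c in candidates if c not in self.bids]


auction = AuctionContract(publisher="publisher")
auction.start_auction("publisher", start=0, end=100, min_bid=1.0, max_bid=3.0)
auction.bid_price("owner-1", price=2.0, data_size=1500, now=10)
print(auction.abandoned(["owner-1", "owner-2"]))  # ['owner-2']
```

In Solidity, the same checks would typically be expressed as `require` conditions on `msg.sender` and `block.timestamp`.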

4.3. Federated Learning Processes in BCD-FL

- Step 1: After uploading the initial model to IPFS, the task publisher invokes InitializeTask() in the Task Initialization Contract with the payment budget, model parameters, and computational resource information.

- Step 2: The task publisher calls StartAuction() to open the reverse auction. Interested candidates develop a bidding strategy based on their computational power, data volume, and computational cost, and then call BidPrice() to bid. The task publisher combines the candidates' bids and reputations to select the winning nodes.

- Step 3: The winning node uses the DownloadGlobalModel() function to obtain the global model hash and retrieve the actual global model from the IPFS.

- Step 4: After training the global model on its local data, the winning node uploads the trained local model to IPFS and then calls UploadLocalModel() to upload the model's IPFS hash.

- Step 5: The task publisher calls DownloadLocalModel() to obtain the IPFS hashes of all local models and then retrieves the local models of all nodes from IPFS.

- Step 6: After obtaining all the local models, the task publisher performs the model quality evaluation and calls UploadTestResult() to upload the test results, followed by calling UpdateReputation() to update the reputation of the winning nodes.

- Step 7: The task publisher checks model quality, filters out low-quality models, and aggregates the remaining models into a new global model.

- Step 8: When the new global model is generated, the task publisher sends the updated global model to IPFS, and the corresponding hash value is uploaded to the blockchain network by calling the UploadGlobalModel() function.

- Step 9: The task publisher can make a payment to the winning nodes by calling the Payment() function.
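Steps 6 and 7 (evaluate, filter, aggregate) can be sketched as a filter-then-average routine. The aggregation rule of Section 5.3 is not reproduced here, so this sketch assumes FedAvg-style weighting by local data size (McMahan et al.), a hypothetical scalar quality score per local model, and a hypothetical threshold; models are flattened to plain lists of floats for brevity.

```python
from typing import Dict, List, Tuple


def filter_and_aggregate(
    local_models: Dict[str, List[float]],
    data_sizes: Dict[str, int],
    quality: Dict[str, float],
    threshold: float,
) -> Tuple[List[float], List[str]]:
    """Drop models scoring below `threshold`, then average the rest weighted by data size."""
    kept = [node for node in local_models if quality[node] >= threshold]
    if not kept:
        raise ValueError("no local model passed the quality check")
    total = sum(data_sizes[node] for node in kept)
    global_model = [0.0] * len(local_models[kept[0]])
    for node in kept:
        weight = data_sizes[node] / total  # FedAvg weight n_k / n over the kept nodes
        for j, value in enumerate(local_models[node]):
            global_model[j] += weight * value
    return global_model, kept


models = {"A": [1.0, 1.0], "B": [3.0, 3.0], "C": [100.0, 100.0]}
sizes = {"A": 1000, "B": 3000, "C": 2000}
scores = {"A": 0.9, "B": 0.8, "C": 0.1}  # C behaves like a poisoned update
print(filter_and_aggregate(models, sizes, scores, threshold=0.5))  # ([2.5, 2.5], ['A', 'B'])
```

Filtering before averaging matters here: had C been kept, its outlying weights would have dominated the global model despite its low quality score.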

Algorithm 1. Processing Methods for Task Publisher in BCD-FL

Algorithm 2. Processing Methods for Data Owner in BCD-FL

4.4. Optimization Goals

5. The Incentive and Reputation Techniques in BCD-FL

5.1. Incentive Mechanism Based on Reverse Auction (IMRA)

Algorithm 3. Selecting and Paying Methods for Data Owners

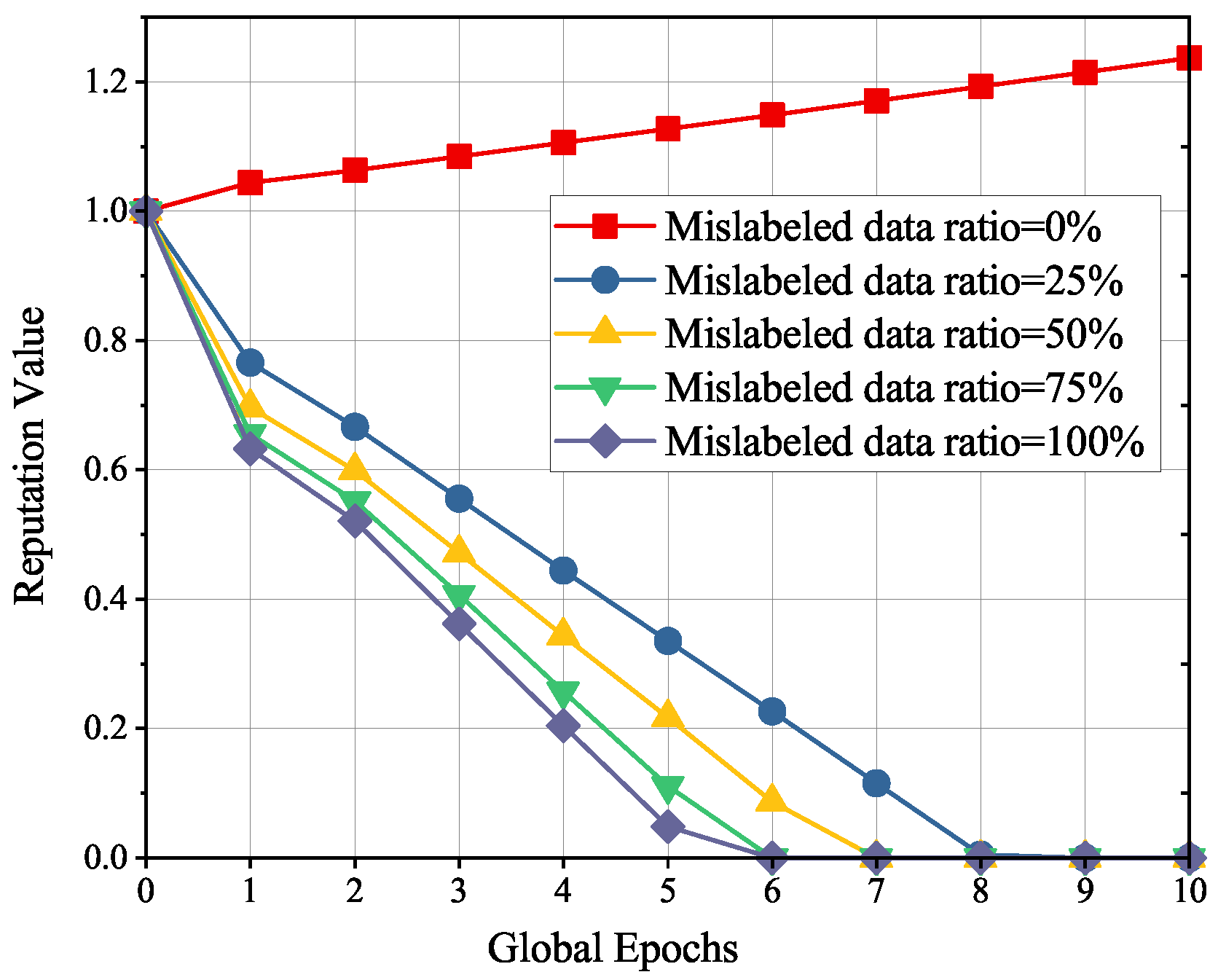

5.2. Reputation-Based Model Quality Evaluation

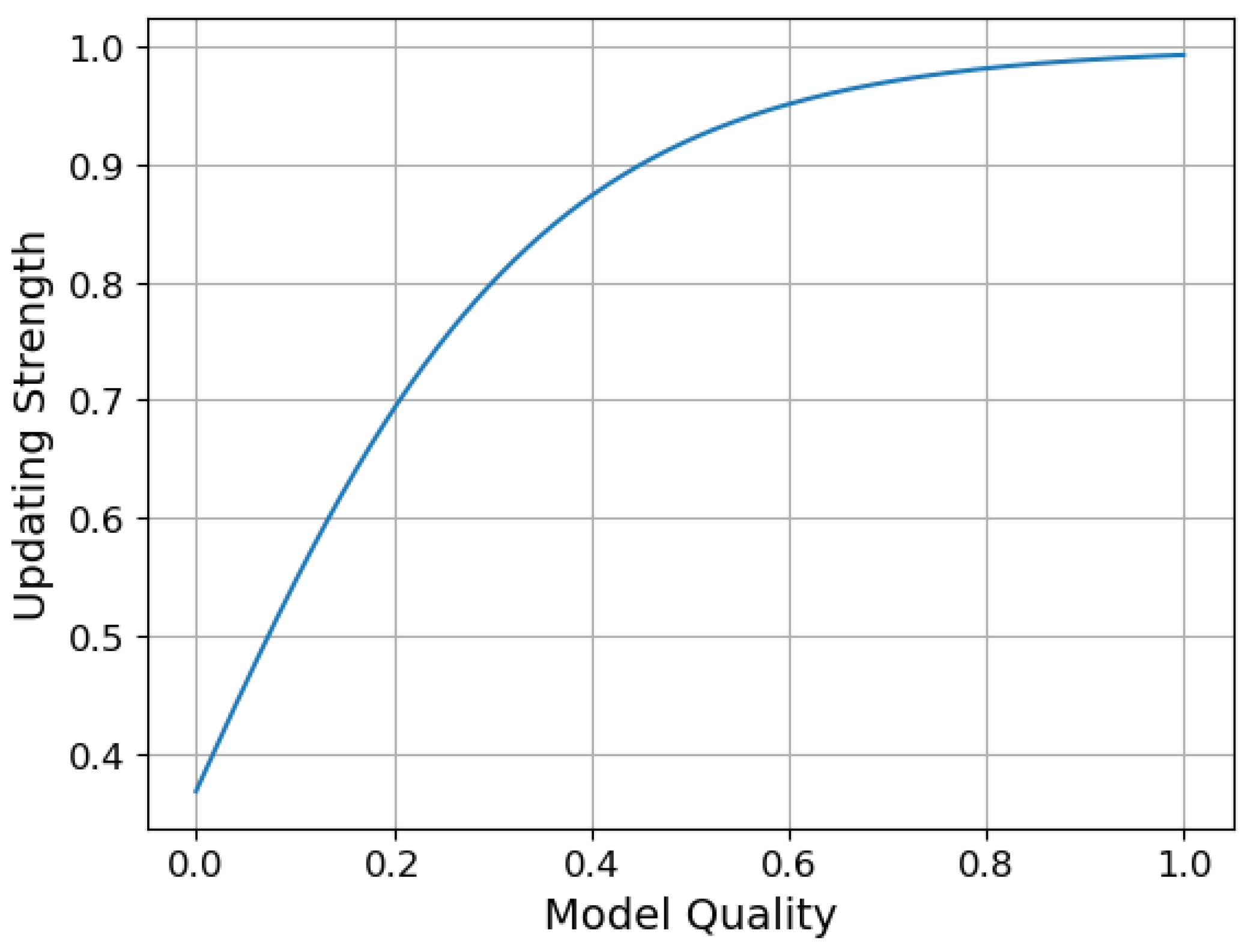

- $\bar{R}_i^t$ represents the historical reputation value of node i, computed as an exponential moving average:

  $$\bar{R}_i^t = \alpha R_i^t + (1 - \alpha)\,\bar{R}_i^{t-1},$$

  where $R_i^t$ denotes the reputation value of node i in the t-th round and $\alpha \in (0, 1)$ is a smoothing factor. The larger $\alpha$ is, the more sensitive the moving average is to the current reputation value; the smaller it is, the more weight past reputation values carry.

- Two reputation update values set the unit updating strength for rewards and penalties. A positive update is applied only when the quality of the trained model is greater than or equal to a quality threshold; otherwise, the node is penalized with a decrease in its reputation. Two scaling factors keep the updating strength within a bounded interval.
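The two-part update described above (a threshold-gated reward or penalty, followed by exponential smoothing) can be sketched as follows. The exact update formula appears only in the figure, so the clipped additive rule, the [0, 1] reputation range, and the parameter names `gain`, `penalty`, `threshold`, and `alpha` are hypothetical stand-ins, as are the example quality values; only the exponential moving average follows the definition given in the text.

```python
def update_reputation(reputation: float, quality: float, threshold: float,
                      gain: float, penalty: float,
                      low: float = 0.0, high: float = 1.0) -> float:
    """Hypothetical rule: reward if quality >= threshold, otherwise penalize; clip to [low, high]."""
    if quality >= threshold:
        reputation += gain
    else:
        reputation -= penalty
    return min(high, max(low, reputation))


def smooth(history: float, current: float, alpha: float) -> float:
    """Exponential moving average; a larger alpha weights the current round more heavily."""
    return alpha * current + (1.0 - alpha) * history


history = current = 0.5
for round_quality in [0.8, 0.8, -0.1]:  # two good rounds, then a poor one
    current = update_reputation(current, round_quality, threshold=0.0, gain=0.1, penalty=0.2)
    history = smooth(history, current, alpha=0.5)
    print(f"current={current:.2f} history={history:.4f}")
```

Making the penalty larger than the gain, as here, means one poor round undoes more than one good round, while the moving average keeps a single bad round from wiping out a node's history.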

5.3. Model Aggregation

6. Theoretical Analysis

- The selection rule is monotonic: in iteration t, if node i wins with bid price $b_i^t$, then node i also wins with any bid price $b_i' \le b_i^t$.

- Critical payment: If node i wins with bid price $b_i^t$, there is a threshold $p_i^t \ge b_i^t$ such that node i also wins with any bid $b_i' \le p_i^t$. Paying node i this threshold gives it the maximum payment it can obtain while still winning, and $p_i^t$ is called the critical payment of node i. In other words, the critical payment is the highest bid with which node i still wins; if node i bids more than its critical payment, it loses the auction.
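Both properties can be checked on a toy selection rule. The sketch below assumes a hypothetical rule, not the paper's Algorithm 3: select the k candidates with the lowest bid per unit of reputation. This rule is monotonic, so each winner's critical payment is the largest bid with which it would still rank in the top k. The cap `max_bid` mirrors the upper end of the bid range in Table 3; budget feasibility, which the paper's mechanism also handles, is not modeled.

```python
from dataclasses import dataclass, replace
from typing import List


@dataclass(frozen=True)
class Bid:
    node: str
    price: float       # bid price b_i
    reputation: float  # reputation R_i, assumed > 0


def score(bid: Bid) -> float:
    """Price per unit of reputation; lower is better in a reverse auction."""
    return bid.price / bid.reputation


def select_winners(bids: List[Bid], k: int) -> List[str]:
    """Hypothetical monotone rule: the k lowest scores win (ties broken by node name)."""
    ranked = sorted(bids, key=lambda b: (score(b), b.node))
    return [b.node for b in ranked[:k]]


def critical_payment(bids: List[Bid], node: str, k: int, max_bid: float) -> float:
    """Largest price `node` can bid and still be selected, capped at the auction's max bid."""
    me = next(b for b in bids if b.node == node)
    rival_scores = sorted(score(b) for b in bids if b.node != node)
    if len(rival_scores) < k:
        return max_bid  # fewer than k rivals: the node wins at any allowed price
    # The node stays in the top k while its score is below the k-th best rival score.
    return min(max_bid, me.reputation * rival_scores[k - 1])


bids = [Bid("A", 2.0, 2.0), Bid("B", 2.0, 1.0), Bid("C", 2.5, 1.0)]
for node in select_winners(bids, k=2):
    print(node, critical_payment(bids, node, k=2, max_bid=3.0))  # A 3.0, then B 2.5

# Monotonicity: B keeps winning below its critical payment and loses above it.
below = [bids[0], replace(bids[1], price=2.4), bids[2]]
above = [bids[0], replace(bids[1], price=2.6), bids[2]]
print(select_winners(below, k=2), select_winners(above, k=2))  # ['A', 'B'] ['A', 'C']
```

Because each winner is paid its critical payment rather than its own bid, misreporting the price cannot raise a winner's payment; this is the standard argument (Myerson's lemma) linking a monotone selection rule and critical payments to truthfulness. Each payment is also at least the winner's bid, consistent with individual rationality.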

7. Performance Analysis

7.1. Experimental Settings

- PyTorch: We used Python 3.7.13 and PyTorch 1.13.0 to train and aggregate the local and global models.

- IPFS: We use IPFS version 0.7.0. Each IPFS client is initialized with the "ipfs init" command, and its daemon is started with the "ipfs daemon" command.

- Blockchain Network Setup: We built a local blockchain network using Ganache. Our smart contracts are written in Solidity, a programming language designed for writing contracts on the Ethereum blockchain.

- Dataset: In our experiments, the FL model is trained on the MNIST dataset, which is commonly used as a benchmark dataset for testing FL algorithms. The training set consists of 60,000 examples, whereas the test set has 10,000 examples. The dataset contains handwritten digits ranging from 0 to 9.

- Federated Learning Setup: The FL setup is shown in Table 3. We use 20 data owners, and the task publisher has a budget of 20 for each round. Malicious nodes are chosen at random; a malicious-node proportion of 0.5 means that half of the nodes in the network are malicious. Malicious nodes tamper with the labels of their local data, and the mislabeled data ratio controls how many labels are altered: a ratio of 1 means that 100% of a malicious node's labels are wrong, so all of its samples are low-quality, mislabeled samples. We also tuned the parameters of our model for performance and accuracy. Specifically, the values and are set to 0.1 and 0.5 respectively, while and are both set to 0.2. The value of is chosen to be −0.02. These settings are intended to let the model handle data from malicious nodes while preserving the integrity of the learning process.

- Model Architecture: The DNN architecture used for MNIST is presented in Table 4. The model consists of three fully connected layers with Rectified Linear Unit (ReLU) activations. It is trained with the cross-entropy loss function and a learning rate of 0.01.
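The malicious-node setup can be sketched as a label-flipping routine. The text does not say how corrupted labels are chosen, so this sketch assumes that a fraction of a malicious node's samples equal to the mislabeled data ratio receives a label drawn uniformly from the other nine MNIST classes; the function names, the toy label list, and the fixed random seed are hypothetical.

```python
import random
from typing import List


def pick_malicious(num_nodes: int, fraction: float, rng: random.Random) -> List[int]:
    """Randomly choose round(fraction * num_nodes) node indices to act maliciously."""
    return sorted(rng.sample(range(num_nodes), round(fraction * num_nodes)))


def flip_labels(labels: List[int], mislabel_ratio: float, rng: random.Random,
                num_classes: int = 10) -> List[int]:
    """Copy `labels`, giving int(ratio * n) randomly chosen samples a different random class."""
    corrupted = list(labels)
    n_flip = int(mislabel_ratio * len(labels))
    for idx in rng.sample(range(len(labels)), n_flip):
        wrong_classes = [c for c in range(num_classes) if c != labels[idx]]
        corrupted[idx] = rng.choice(wrong_classes)
    return corrupted


rng = random.Random(0)
malicious = pick_malicious(20, 0.5, rng)
print(len(malicious))  # 10 of the 20 data owners are malicious

clean = [i % 10 for i in range(1000)]  # toy MNIST-style labels 0-9
noisy = flip_labels(clean, 1.0, rng)
print(sum(a != b for a, b in zip(clean, noisy)))  # 1000: with ratio 1, every label is wrong
```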

7.2. Experimental Results

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Zheng, H.; Li, B.; Liu, G.; Li, Y.; Zhang, Y.; Gao, W.; Zhao, X. Blockchain-based Federated Learning Framework Applied in Face Recognition. In Proceedings of the 2022 7th International Conference on Signal and Image Processing (ICSIP), Suzhou, China, 20–22 July 2022; pp. 265–269.

- Ni, J.; Shen, K.; Chen, Y.; Cao, W.; Yang, S.X. An Improved Deep Network-Based Scene Classification Method for Self-Driving Cars. IEEE Trans. Instrum. Meas. 2022, 71, 1–14.

- Wang, F.Y.; Yang, J.; Wang, X.; Li, J.; Han, Q.L. Chat with ChatGPT on Industry 5.0: Learning and decision-making for intelligent industries. IEEE-CAA J. Autom. Sin. 2023, 10, 831–834.

- Shehab, M.; Abualigah, L.; Shambour, Q.; Abu-Hashem, M.A.; Shambour, M.K.Y.; Alsalibi, A.I.; Gandomi, A.H. Machine learning in medical applications: A review of state-of-the-art methods. Comput. Biol. Med. 2022, 145, 105458.

- Faheem, M.; Fizza, G.; Ashraf, M.W.; Butt, R.A.; Ngadi, M.A.; Gungor, V.C. Big Data acquired by Internet of Things-enabled industrial multichannel wireless sensors networks for active monitoring and control in the smart grid Industry 4.0. Data Brief 2021, 35, 106854.

- Faheem, M.; Butt, R.A. Big datasets of optical-wireless cyber-physical systems for optimizing manufacturing services in the internet of things-enabled industry 4.0. Data Brief 2022, 42, 108026.

- Li, Q.; Diao, Y.; Chen, Q.; He, B. Federated Learning on Non-IID Data Silos: An Experimental Study. In Proceedings of the 2022 IEEE 38th International Conference on Data Engineering (ICDE), Kuala Lumpur, Malaysia, 9–12 May 2022; pp. 965–978.

- McMahan, B.; Moore, E.; Ramage, D.; Hampson, S.; Arcas, B.A.Y. Communication-Efficient Learning of Deep Networks from Decentralized Data. In Proceedings of the 20th International Conference on Artificial Intelligence and Statistics, Ft. Lauderdale, FL, USA, 20–22 April 2017; pp. 1273–1282.

- Ding, J.; Tramel, E.; Sahu, A.K.; Wu, S.; Avestimehr, S.; Zhang, T. Federated Learning Challenges and Opportunities: An Outlook. In Proceedings of the ICASSP 2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 23–27 May 2022; pp. 8752–8756.

- Tu, X.; Zhu, K.; Luong, N.C.; Niyato, D.; Zhang, Y.; Li, J. Incentive Mechanisms for Federated Learning: From Economic and Game Theoretic Perspective. IEEE Trans. Cogn. Commun. Netw. 2022, 8, 1566–1593.

- Kang, J.; Xiong, Z.; Niyato, D.; Xie, S.; Zhang, J. Incentive Mechanism for Reliable Federated Learning: A Joint Optimization Approach to Combining Reputation and Contract Theory. IEEE Internet Things J. 2019, 6, 10700–10714.

- Zhan, Y.; Li, P.; Qu, Z.; Zeng, D.; Guo, S. A Learning-Based Incentive Mechanism for Federated Learning. IEEE Internet Things J. 2020, 7, 6360–6368.

- Zhan, Y.; Zhang, J.; Li, P.; Xia, Y. Crowdtraining: Architecture and Incentive Mechanism for Deep Learning Training in the Internet of Things. IEEE Netw. 2019, 33, 89–95.

- Vu, V.T.; Pham, T.L.; Dao, P.N. Disturbance observer-based adaptive reinforcement learning for perturbed uncertain surface vessels. ISA Trans. 2022, 130, 277–292.

- Nguyen, K.; Dang, V.T.; Pham, D.D.; Dao, P.N. Formation control scheme with reinforcement learning strategy for a group of multiple surface vehicles. Int. J. Robust Nonlinear Control 2023, 1–28.

- Zhu, J.; Cao, J.; Saxena, D.; Jiang, S.; Ferradi, H. Blockchain-empowered federated learning: Challenges, solutions, and future directions. ACM Comput. Surv. 2023, 55, 1–31.

- Oktian, Y.E.; Stanley, B.; Lee, S.-G. Building Trusted Federated Learning on Blockchain. Symmetry 2022, 14, 1407.

- Mahmood, Z.; Jusas, V. Implementation Framework for a Blockchain-Based Federated Learning Model for Classification Problems. Symmetry 2021, 13, 1116.

- Yun, J.; Lu, Y.; Liu, X. BCAFL: A Blockchain-Based Framework for Asynchronous Federated Learning Protection. Electronics 2023, 12, 4214.

- Yuan, Y.; Wang, F.Y. Blockchain and Cryptocurrencies: Model, Techniques, and Applications. IEEE Trans. Syst. Man Cybern. Syst. 2018, 48, 1421–1428.

- Upadhyay, K.; Dantu, R.; Zaccagni, Z.; Badruddoja, S. Is Your Legal Contract Ambiguous? Convert to a Smart Legal Contract. In Proceedings of the 2020 IEEE International Conference on Blockchain (Blockchain), Rhodes, Greece, 2–6 November 2020; pp. 273–280.

- Benet, J. IPFS: Content addressed, versioned, P2P file system. arXiv 2014, arXiv:1407.3561.

- Hard, A.; Rao, K.; Mathews, R.; Ramaswamy, S.; Beaufays, F.; Augenstein, S.; Eichner, H.; Kiddon, C.; Ramage, D. Federated learning for mobile keyboard prediction. arXiv 2018, arXiv:1811.03604.

- Li, T.; Sahu, A.K.; Talwalkar, A.; Smith, V. Federated learning: Challenges, methods, and future directions. IEEE Signal Process. Mag. 2020, 37, 50–60.

- Akter, M.; Moustafa, N.; Lynar, T.; Razzak, I. Edge Intelligence: Federated Learning-Based Privacy Protection Framework for Smart Healthcare Systems. IEEE J. Biomed. Health Inform. 2022, 26, 5805–5816.

- Xing, J.; Tian, J.; Jiang, Z.; Cheng, J.; Yin, H. Jupiter: A modern federated learning platform for regional medical care. Sci. China-Inf. Sci. 2021, 64, 1–14.

- Fu, Y.; Li, C.; Yu, F.R.; Luan, T.H.; Zhang, Y. A Selective Federated Reinforcement Learning Strategy for Autonomous Driving. IEEE Trans. Intell. Transp. Syst. 2023, 24, 1655–1668.

- Rjoub, G.; Bentahar, J.; Wahab, O.A. Explainable AI-based Federated Deep Reinforcement Learning for Trusted Autonomous Driving. In Proceedings of the 2022 International Wireless Communications and Mobile Computing (IWCMC), Dubrovnik, Croatia, 30 May–3 June 2022; pp. 318–323.

- Yang, H.; Li, C.; Sun, Z.; Yao, Q.; Zhang, J. Cross-Domain Trust Architecture: A Federated Blockchain Approach. In Proceedings of the 2022 20th International Conference on Optical Communications and Networks (ICOCN), Shenzhen, China, 12–15 August 2022; pp. 1–3.

- Peng, B.; Chi, M.; Liu, C. Non-IID federated learning via random exchange of local feature maps for textile IIoT secure computing. Sci. China-Inf. Sci. 2022, 65, 170302.

- Shapley, L.S. A value for n-person games. Class. Game Theory 1997, 69, 307–317.

- Song, T.; Tong, Y.; Wei, S. Profit allocation for federated learning. In Proceedings of the 2019 IEEE International Conference on Big Data (Big Data), Los Angeles, CA, USA, 9–12 December 2019; pp. 2577–2586.

- Wang, G. Interpret federated learning with Shapley values. arXiv 2019, arXiv:1905.04519.

- Agarwal, A.; Dahleh, M.; Sarkar, T. A marketplace for data: An algorithmic solution. In Proceedings of the 2019 ACM Conference on Economics and Computation, Phoenix, AZ, USA, 24–28 June 2019; pp. 701–726.

- Jia, R.; Dao, D.; Wang, B.; Hubis, F.A.; Hynes, N.; Gürel, N.M.; Li, B.; Zhang, C.; Song, D.; Spanos, C.J. Towards efficient data valuation based on the Shapley value. In Proceedings of the 22nd International Conference on Artificial Intelligence and Statistics, Naha, Okinawa, Japan, 16–18 April 2019; pp. 1167–1176.

- Yang, S.; Wu, F.; Tang, S.; Gao, X.; Yang, B.; Chen, G. On Designing Data Quality-Aware Truth Estimation and Surplus Sharing Method for Mobile Crowdsensing. IEEE J. Sel. Areas Commun. 2017, 35, 832–847.

- Pandey, S.R.; Tran, N.H.; Bennis, M.; Tun, Y.K.; Manzoor, A.; Hong, C.S. A Crowdsourcing Framework for On-Device Federated Learning. IEEE Trans. Wirel. Commun. 2020, 19, 3241–3256.

- Khan, L.U.; Pandey, S.R.; Tran, N.H.; Saad, W.; Han, Z.; Nguyen, M.N.H.; Hong, C.S. Federated Learning for Edge Networks: Resource Optimization and Incentive Mechanism. IEEE Commun. Mag. 2020, 58, 88–93.

- Feng, S.; Niyato, D.; Wang, P.; Kim, D.I.; Liang, Y.C. Joint Service Pricing and Cooperative Relay Communication for Federated Learning. In Proceedings of the 2019 International Conference on Internet of Things (iThings) and IEEE Green Computing and Communications (GreenCom) and IEEE Cyber, Physical and Social Computing (CPSCom) and IEEE Smart Data (SmartData), Atlanta, GA, USA, 14–17 July 2019; pp. 815–820.

- Kang, J.; Xiong, Z.; Niyato, D.; Yu, H.; Liang, Y.C.; Kim, D.I. Incentive Design for Efficient Federated Learning in Mobile Networks: A Contract Theory Approach. In Proceedings of the 2019 IEEE VTS Asia Pacific Wireless Communications Symposium (APWCS), Singapore, Singapore, 28–30 August 2019; pp. 1–5.

- Lim, W.Y.B.; Garg, S.; Xiong, Z.; Niyato, D.; Leung, C.; Miao, C.; Guizani, M. Dynamic Contract Design for Federated Learning in Smart Healthcare Applications. IEEE Internet Things J. 2021, 8, 16853–16862.

- Ding, N.; Fang, Z.; Huang, J. Optimal Contract Design for Efficient Federated Learning With Multi-Dimensional Private Information. IEEE J. Sel. Areas Commun. 2021, 39, 186–200.

- Majeed, U.; Hong, C.S. FLchain: Federated Learning via MEC-enabled Blockchain Network. In Proceedings of the 2019 20th Asia-Pacific Network Operations and Management Symposium (APNOMS), Matsue, Japan, 18–20 September 2019; pp. 1–4.

- Kim, H.; Park, J.; Bennis, M.; Kim, S.L. Blockchained on-device federated learning. IEEE Commun. Lett. 2019, 24, 1279–1283.

- Li, Y.; Chen, C.; Liu, N.; Huang, H.; Zheng, Z.; Yan, Q. A blockchain-based decentralized federated learning framework with committee consensus. IEEE Netw. 2020, 35, 234–241.

- Majeed, U.; Hassan, S.S.; Hong, C.S. Cross-silo model-based secure federated transfer learning for flow-based traffic classification. In Proceedings of the 2021 International Conference on Information Networking (ICOIN), Jeju Island, Republic of Korea, 13–16 January 2021; pp. 588–593.

- Nakamoto, S. Bitcoin: A peer-to-peer electronic cash system. Decentralized Bus. Rev. 2008, 2008, 21260.

- Wood, G. Ethereum: A secure decentralised generalised transaction ledger. Ethereum Proj. Yellow Pap. 2014, 151, 1–32.

- Androulaki, E.; Barger, A.; Bortnikov, V.; Cachin, C.; Christidis, K.; De Caro, A.; Enyeart, D.; Ferris, C.; Laventman, G.; Manevich, Y.; et al. Hyperledger fabric: A distributed operating system for permissioned blockchains. In Proceedings of the Thirteenth EuroSys Conference, New York, USA, 23–26 April 2018; pp. 1–15.

- Szabo, N. Formalizing and securing relationships on public networks. First Monday 1997, 2, 9.

- Pu, H.; Ge, Y.; Yan-Feng, Z.; Yu-Bin, B. Survey on blockchain technology and its application prospect. Comput. Sci. 2017, 44, 1–7.

- Waliszewski, P.; Konarski, J. A Mystery of the Gompertz Function. In Fractals in Biology and Medicine; Springer: Berlin/Heidelberg, Germany, 2005; pp. 277–286.

- Yang, Q.; Liu, Y.; Chen, T.; Tong, Y. Federated machine learning: Concept and applications. ACM Trans. Intell. Syst. Technol. 2019, 10, 1–19.

- Myerson, R.B. Optimal auction design. Math. Oper. Res. 1981, 6, 58–73.

| Schemes | Main Contribution |
|---|---|
| Google [24] | Introduced FL for input prediction on mobile devices. |
| Akter et al. [26] | Proposed a privacy-preserving framework for healthcare systems using FL. |
| Xing et al. [27] | Developed an FL platform for medical data sharing with privacy protection. |
| Fu et al. [28] | Created a federated reinforcement learning approach for autonomous driving. |
| Rjoub et al. [29] | Constructed a deep reinforcement learning framework with FL for edge computing reliability. |
| Yang et al. [30] | Introduced a hierarchical trust structure for federated IoT applications. |
| Peng et al. [31] | Introduced an FL framework for IoT in the textile industry. |
| Song et al. [33] | Developed a contribution index based on the Shapley value for FL participants. |
| Wang [34] | Used the Shapley value to determine data feature importance. |
| Kang et al. [41] | Designed incentive mechanisms using contract theory for FL participation. |
| Majeed and Hong [44] | Presented FLchain, a blockchain-based FL approach. |
| Kim et al. [45] | Proposed BlockFL for decentralized FL training. |
| Li et al. [46] | Introduced BFLC for credible participant selection in FL. |
| Ours | Proposed the BCD-FL model, which enhances data quality, optimizes model aggregation, and improves reward distribution in FL. |

| Notations | Descriptions |
|---|---|
| | The utility value of node i in the t-th iteration |
| | The reward received by node i in the t-th iteration |
| | The cost of node i in the t-th iteration |
| | The bid of node i in the t-th iteration |
| | The reputation of node i in the t-th iteration |
| | The selection status of node i |
| | The local model parameters in the t-th iteration |
| | Training dataset of node i |
| | Budget |
| | Learning rate |

| Parameter | Setting |
|---|---|
| Number of data owners | 20 |
| Task publisher's budget per round | 20 |
| Bid range | b ∈ [1, 3] |
| Data size range | D ∈ [1000, 3000] |
| Proportion of malicious nodes | {0, 0.1, 0.3, 0.5} |
| Mislabeled data ratio | [0, 1] |
| Global epochs | E = 10 |
| Local epochs | e = 5 |

| Serial Number | Layer (Type) | Shape | State_dict |
|---|---|---|---|
| 1 | Input | (784,) | - |
| 2 | fc1 | (784, 256) | fc1.weight, fc1.bias |
| 3 | ReLU | - | - |
| 4 | fc2 | (256, 128) | fc2.weight, fc2.bias |
| 5 | ReLU | - | - |
| 6 | fc3 | (128, 10) | fc3.weight, fc3.bias |
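As a rough size check on the architecture in Table 4, the parameter count of the three fully connected layers, and the size of a float32 copy of those weights, can be computed directly. The 4-bytes-per-parameter figure assumes float32 weights with no serialization overhead, so an actual saved state_dict will be somewhat larger; this is the per-model payload that BCD-FL keeps off-chain in IPFS.

```python
# (in_features, out_features) for fc1, fc2, fc3, taken from Table 4
layers = [(784, 256), (256, 128), (128, 10)]

# Each fully connected layer has an in x out weight matrix plus an out-sized bias vector.
params = sum(i * o + o for i, o in layers)
size_mb = params * 4 / 1e6  # float32: 4 bytes per parameter

print(params, round(size_mb, 2))  # 235146 0.94
```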

| Name | One Iteration | After 10 Iterations |
|---|---|---|
| Traditional FL | 6.23∼18.69 MB | 62.3∼186.9 MB |
| BCD-FL | 36 KB | 360 KB |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ouyang, K.; Yu, J.; Cao, X.; Liao, Z. Towards Reliable Federated Learning Using Blockchain-Based Reverse Auctions and Reputation Incentives. Symmetry 2023, 15, 2179. https://doi.org/10.3390/sym15122179

Ouyang K, Yu J, Cao X, Liao Z. Towards Reliable Federated Learning Using Blockchain-Based Reverse Auctions and Reputation Incentives. Symmetry. 2023; 15(12):2179. https://doi.org/10.3390/sym15122179

Chicago/Turabian Style: Ouyang, Kai, Jianping Yu, Xiaojun Cao, and Zhuopeng Liao. 2023. "Towards Reliable Federated Learning Using Blockchain-Based Reverse Auctions and Reputation Incentives" Symmetry 15, no. 12: 2179. https://doi.org/10.3390/sym15122179

APA Style: Ouyang, K., Yu, J., Cao, X., & Liao, Z. (2023). Towards Reliable Federated Learning Using Blockchain-Based Reverse Auctions and Reputation Incentives. Symmetry, 15(12), 2179. https://doi.org/10.3390/sym15122179