Cross-Version Software Defect Prediction Considering Concept Drift and Chronological Splitting

Abstract

1. Introduction

1.1. Research Questions

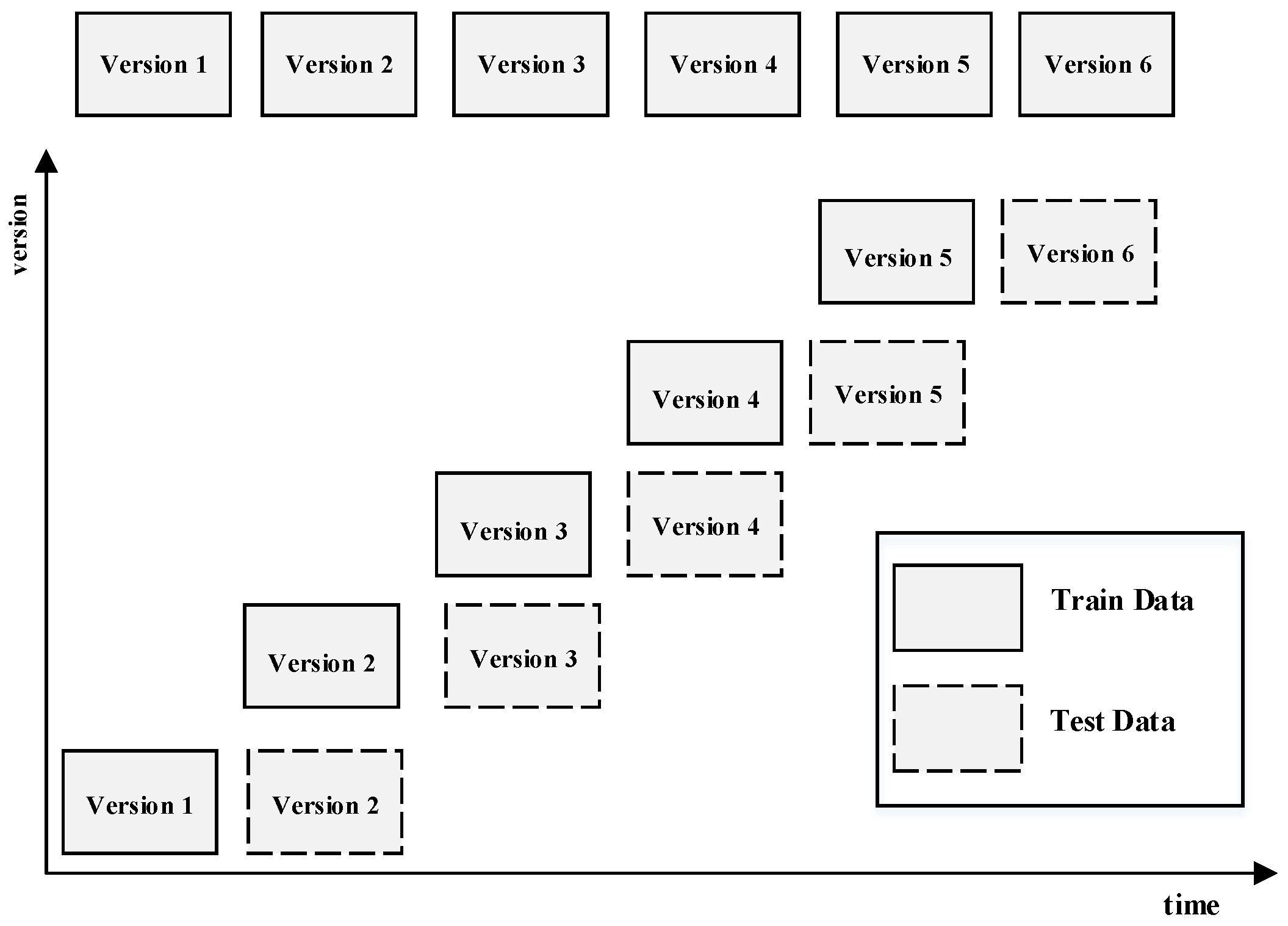

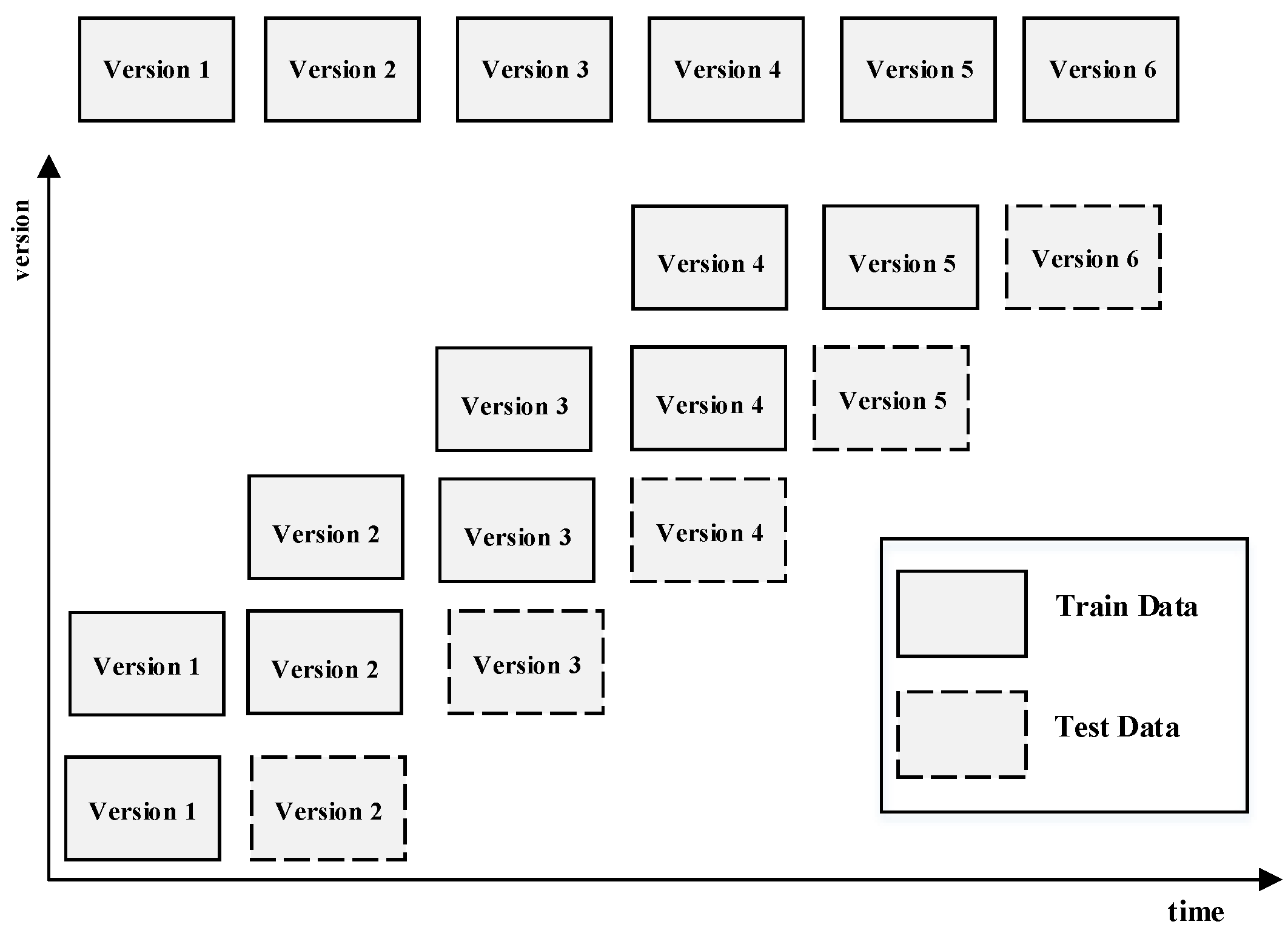

- All data from past versions, excluding the most recent one, with a window size of N, where N encompasses all historical data;

- Data from the two most recent completed versions prior to the latest completed version, where the window size is 2;

- Data from the most recent completed version prior to the latest completed version, where the window size is 1;

- As a control, data from the most recent completed version are used as a testing set to obtain prediction results.
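The three training windows listed above can be sketched as a small helper. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name `training_window` and the `(version, module_count)` tuples are hypothetical, and the module counts are those reported for prop-1 in the benchmark dataset table.

```python
def training_window(versions, test_index, size=None):
    """Return the historical versions used for training when
    versions[test_index] is the latest completed version (the test set).

    size=None keeps all history (window of size N); size=2 or size=1 keeps
    only the most recent completed versions before the test version.
    """
    if not 1 <= test_index < len(versions):
        raise ValueError("test_index must leave at least one earlier version")
    if size is not None and size < 1:
        raise ValueError("size must be a positive integer or None")
    history = versions[:test_index]  # chronological order, oldest first
    return history if size is None else history[-size:]


# Hypothetical stream: (version label, number of modules) for prop-1.
prop1 = [("9", 4255), ("44", 4620), ("92", 3557),
         ("128", 3527), ("164", 3457), ("192", 3598)]

test_index = 3  # version 128 plays the role of the latest completed version
for label, size in (("N", None), ("2", 2), ("1", 1)):
    window = training_window(prop1, test_index, size)
    n_train = sum(count for _, count in window)
    names = [version for version, _ in window]
    print(f"window-of-size-{label}: versions {names}, {n_train} modules")
```

With version 128 as the test set, the three windows hold 12,432, 8177, and 3557 training modules, respectively.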

1.2. Contributions

- This study is among the first to analyze CD theoretically and to identify it in software defect datasets.

- A CDD framework is proposed to recognize CD in cross-version defect datasets.

- The potential effects of CD on CVDP performance are empirically evaluated.

2. Preliminaries

2.1. The Notion of Dataset Shift

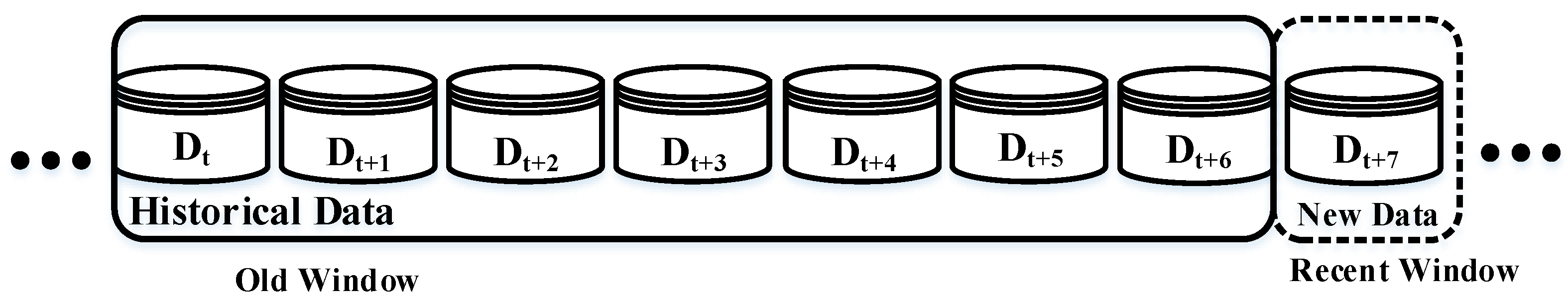

2.2. Approaches of Detecting Data Drift

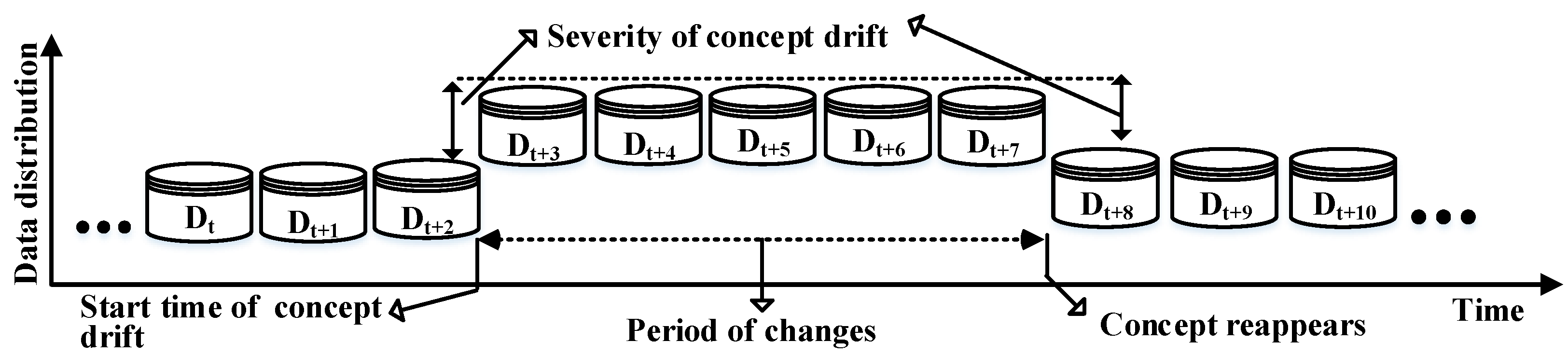

2.3. Concept Drift

3. Materials and Methods

3.1. Benchmark Datasets

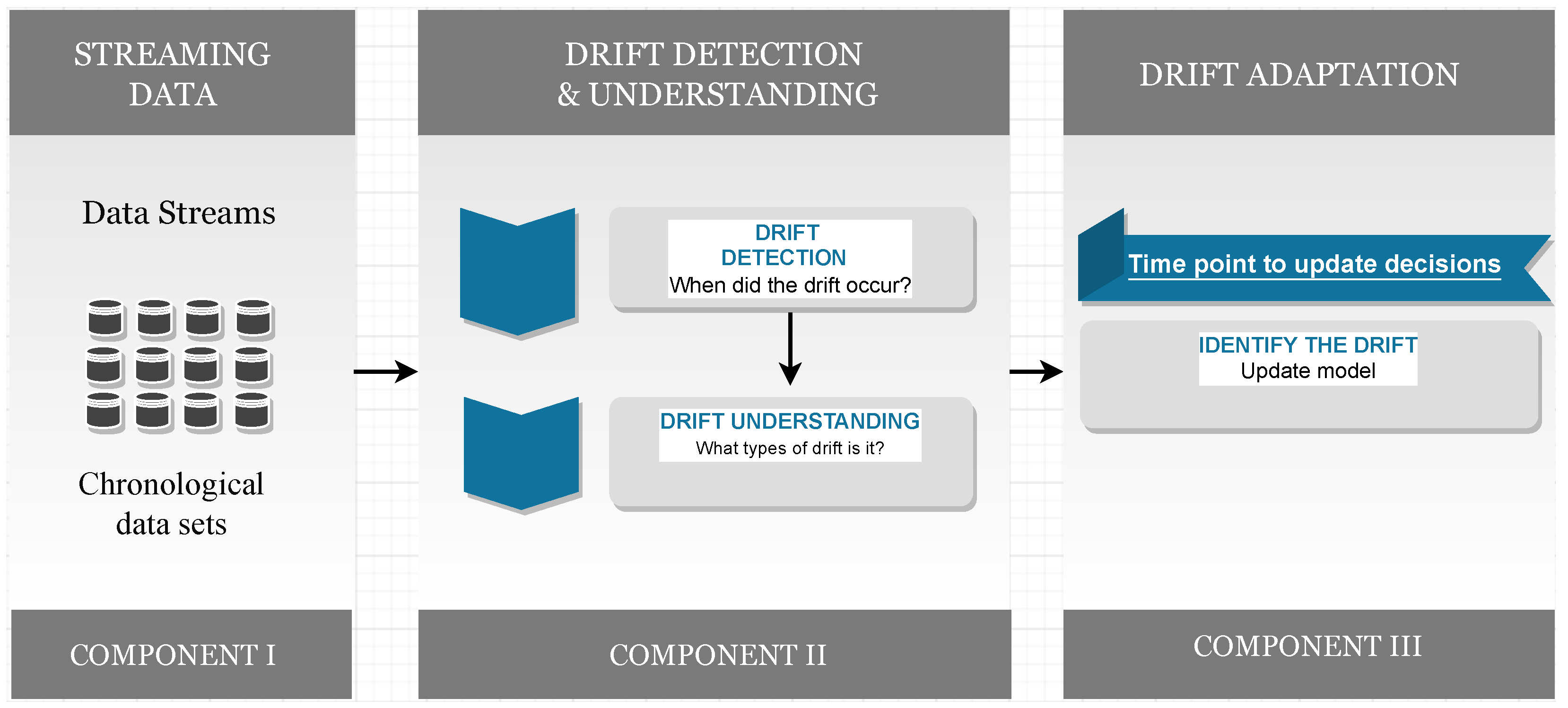

3.2. Framework

3.3. CDD Implementation

- Stage I: Data streams and CVDP scenario design

- Stage II: CD detection and understanding

- Warning flag: The learning process maintains the current decision model and triggers the warning flag when the learning error reaches the warning threshold.

- CD flag: The CD flag is triggered when the learning error reaches the CD threshold.

- Control flag: When the learning error reaches neither the warning threshold nor the CD threshold, the environment is assumed to be stationary.

| Algorithm 1 Overview of CDD |
|---|
| Input: training window and testing window; learner; samples of training and testing data |
| Outcome: CD flag, warning flag, and control flag |
| Procedure: |

- Stage III: CD adaptation
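The three flags of Stage II can be illustrated with a short sketch. Several parts are assumptions introduced only for illustration: the use of a Pearson chi-square statistic on the 2×2 table of correct classifications and errors in the recent and old windows, the names `chi_square_2x2` and `assign_flag`, and the two threshold values (2.706 and 3.841 are the chi-square critical values for one degree of freedom at the 0.10 and 0.05 levels). The study's own test and thresholds may differ.

```python
from enum import Enum


class Flag(Enum):
    CONTROL = "control"   # environment assumed stationary
    WARNING = "warning"   # possible drift, keep monitoring
    DRIFT = "drift"       # concept drift flagged


def chi_square_2x2(correct_recent, errors_recent, correct_old, errors_old):
    """Pearson chi-square statistic (no continuity correction) for the
    2x2 table: rows = correct/errors, columns = recent/old window."""
    a, b = correct_recent, correct_old
    c, d = errors_recent, errors_old
    n = a + b + c + d
    denom = (a + b) * (c + d) * (a + c) * (b + d)
    if denom == 0:
        return 0.0  # a row or column is empty; no evidence of a difference
    return n * (a * d - b * c) ** 2 / denom


def assign_flag(statistic, warning_level=2.706, drift_level=3.841):
    """Map a test statistic to one of the three flags (illustrative levels)."""
    if statistic >= drift_level:
        return Flag.DRIFT
    if statistic >= warning_level:
        return Flag.WARNING
    return Flag.CONTROL


# Hypothetical counts: 90/100 correct in the recent window, 70/100 in the old.
stat = chi_square_2x2(correct_recent=90, errors_recent=10,
                      correct_old=70, errors_old=30)
print(stat, assign_flag(stat))
```

Separating the statistic from the flag assignment keeps the thresholds easy to swap when a different test or significance level is preferred.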

3.4. Designing CD Detection Statistical Test

3.5. Evaluation Settings

- RQ1: Is there any data distribution difference across software versions that degrades the prediction performance?

- RQ2: Can insights be gained by observing the trends in CD detection results while the window size varies?

3.6. Cliff’s Effect Size Computation
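Cliff's delta counts, over all pairs drawn from two groups, how often a value from the first group exceeds a value from the second, subtracts how often it is smaller, and divides by the number of pairs. The sketch below shows that definition directly; the function name `cliffs_delta` and the sample values are hypothetical and are not taken from the study's data.

```python
def cliffs_delta(xs, ys):
    """Cliff's delta: (#pairs with x > y - #pairs with x < y) / (len(xs) * len(ys)).

    The result lies in [-1, 1]; 0 means the two groups overlap completely.
    """
    if not xs or not ys:
        raise ValueError("both groups must be non-empty")
    greater = sum(1 for x in xs for y in ys if x > y)
    less = sum(1 for x in xs for y in ys if x < y)
    return (greater - less) / (len(xs) * len(ys))


# Hypothetical error rates observed on an old and a recent window.
old_window = [0.10, 0.12, 0.15]
recent_window = [0.20, 0.22, 0.18]
print(cliffs_delta(recent_window, old_window))
```

The pairwise loop is quadratic in the group sizes, which is acceptable for small samples; a rank-based formulation would be preferable for large ones.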

4. Results

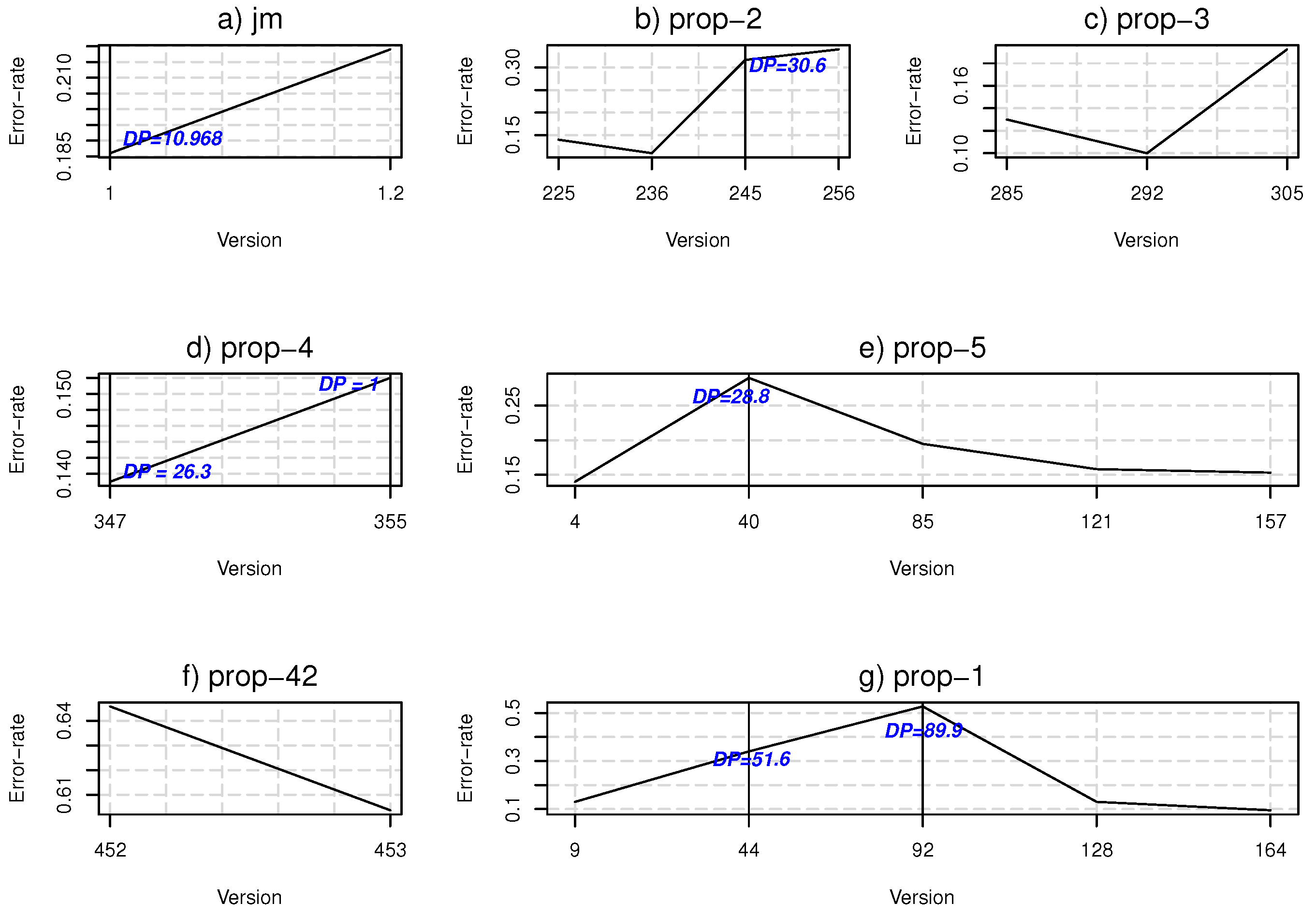

- In the chronological defect datasets, our proposed CDD framework identifies CDs by treating the data of each project's versions as a stream that arrives version by version.

- The performance of CVDP models is affected by the data distributions in the chronological splitting scenario. The prediction performance fluctuates among the versions for each chronological split approach.

- A trend is observed: when CDs are detected, the error rate of the affected versions increases under the chronological splitting methods applied in the experiment.

5. Discussion

5.1. RQ1: Is There Any Data Distribution Difference across Software Versions That Degrades the Prediction Performance?

5.2. RQ2: Can Insights Be Gained by Observing the Trends of CD Detection Results While the Window Size Varies?

6. Threats to Validity

7. Related Work

8. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Project | Version | #M | #FM | %FM | Skew | Kurt |
|---|---|---|---|---|---|---|
| jm | 1 | 7782 | 1672 | 21.49% | 1.39 | 2.93 |
| | 1.2 | 9593 | 1759 | 18.34% | 1.64 | 3.68 |
| | 1.3 | 7782 | 1672 | 21.49% | 1.39 | 2.93 |
| prop-1 | 9 | 4255 | 149 | 3.50% | 5.06 | 26.59 |
| | 44 | 4620 | 389 | 8.42% | 2.99 | 9.97 |
| | 92 | 3557 | 1269 | 35.68% | 0.60 | 1.36 |
| | 128 | 3527 | 220 | 6.24% | 3.62 | 14.10 |
| | 164 | 3457 | 319 | 9.23% | 2.82 | 8.94 |
| | 192 | 3598 | 85 | 2.36% | 6.27 | 40.35 |
| prop-2 | 225 | 1810 | 147 | 8.12% | 3.07 | 10.40 |
| | 236 | 2231 | 76 | 3.41% | 5.14 | 27.39 |
| | 245 | 1962 | 103 | 5.25% | 4.01 | 17.10 |
| | 256 | 1964 | 625 | 31.82% | 0.78 | 1.61 |
| | 265 | 2307 | 229 | 9.93% | 2.68 | 8.18 |
| prop-3 | 285 | 1694 | 177 | 10.45% | 2.59 | 7.69 |
| | 292 | 2285 | 209 | 9.15% | 2.83 | 9.03 |
| | 305 | 2344 | 89 | 3.80% | 4.83 | 24.38 |
| | 318 | 2395 | 365 | 15.24% | 1.93 | 4.74 |
| prop-4 | 347 | 2871 | 162 | 5.64% | 3.84 | 15.78 |
| | 355 | 2791 | 924 | 33.11% | 0.72 | 1.52 |
| | 362 | 2854 | 213 | 7.46% | 3.24 | 11.48 |
| prop-5 | 4 | 3022 | 264 | 8.74% | 2.92 | 9.54 |
| | 40 | 4053 | 466 | 11.50% | 2.41 | 6.83 |
| | 85 | 3077 | 948 | 30.81% | 0.83 | 1.69 |
| | 121 | 2998 | 425 | 14.18% | 2.05 | 5.22 |
| | 157 | 2496 | 367 | 14.70% | 1.99 | 4.97 |
| | 185 | 2825 | 268 | 9.49% | 2.77 | 8.65 |
| prop-42 | 452 | 256 | 33 | 12.89% | 2.21 | 5.91 |
| | 453 | 192 | 20 | 10.42% | 2.59 | 7.72 |
| | 454 | 212 | 13 | 6.13% | 3.66 | 14.37 |

| Metric | Description |
|---|---|
| WMC | Weighted methods per class |
| DIT | Depth of inheritance tree |
| NOC | Number of children |
| CBO | Coupling between object classes |
| RFC | Response for a class |
| LCOM | Lack of cohesion in methods |
| Ca | Afferent couplings |
| Ce | Efferent couplings |
| NPM | Number of public methods |
| LCOM3 | Lack of cohesion in methods, a variant of LCOM |
| LOC | Lines of code |
| DAM | Data access metric |
| MOA | Measure of aggregation |
| MFA | Measure of functional abstraction |
| CAM | Cohesion among methods of a class |
| IC | Inheritance coupling |
| CBM | Coupling between methods |
| AMC | Average method complexity |
| Max(CC) | Maximum cyclomatic complexity among the methods of a class |
| Avg(CC) | Average cyclomatic complexity of the methods of a class |
| BUG | Number of bugs in the class |

| Attribute | Description |
|---|---|
| V(g) | Cyclomatic complexity |
| Iv(G) | Design complexity |
| Ev(G) | Essential complexity |
| loc | Total lines of code |
| n | Halstead total operators + operands |
| v | Halstead “volume” |
| l | Halstead “program length” |
| d | Halstead “difficulty” |
| i | Halstead “intelligence” |
| e | Halstead “effort” |
| b | Halstead |
| t | Halstead’s time estimator |
| lOCode | Halstead’s line count |
| lOComment | Halstead’s count of lines of comments |
| lOBlank | Halstead’s count of blank lines |
| lOCodeAndComment | |
| uniq_Op | Unique operators |
| uniq_Opnd | Unique operands |
| total_Op | Total operators |
| total_Opnd | Total operands |
| branchCount | Branch count of the flow graph |
| defects {false, true} | Whether the module has reported defects |

| | Predicted as Positive | Predicted as Negative |
|---|---|---|
| Actual positive | | |
| Actual negative | | |

| | Positive Prediction | Negative Prediction |
|---|---|---|
| Actual positive | | |
| Actual negative | | |

| | Recent Window | Old Window |
|---|---|---|
| # of correct classifications | | |
| # of errors | | |

| | Predicted Positive | Predicted Negative |
|---|---|---|
| Actual positive | TP | FN |
| Actual negative | FP | TN |
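The cells of the confusion matrix above translate into performance measures as in the following sketch. The error rate is the quantity referred to in the results; the remaining measures are standard definitions included for context and are not necessarily the ones reported in the study. The function name `confusion_metrics` and the example counts are hypothetical.

```python
def _ratio(numerator, denominator):
    """Safe division: returns NaN when the denominator is zero."""
    return numerator / denominator if denominator else float("nan")


def confusion_metrics(tp, fn, fp, tn):
    """Derive common measures from the four confusion-matrix cells."""
    total = tp + fn + fp + tn
    return {
        "error_rate": _ratio(fp + fn, total),         # misclassified modules
        "accuracy": _ratio(tp + tn, total),
        "recall": _ratio(tp, tp + fn),                 # defective modules found
        "precision": _ratio(tp, tp + fp),
        "false_positive_rate": _ratio(fp, fp + tn),    # clean modules flagged
    }


# Hypothetical counts for a single test version.
metrics = confusion_metrics(tp=40, fn=10, fp=20, tn=130)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```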

Window-of-Size-1

| Project | IVersion | #InsOWin | #InsRWin | CD | t-Value | p-Value |
|---|---|---|---|---|---|---|
| prop-1 | 9 | 4255 | 4620 | 8.153 * | 5.422 | 0.116 |
| | 44 | 4620 | 3557 | 91.621 * | 24.591 | 0.026 ** |
| | 92 | 3557 | 3527 | 89.879 * | 23.172 | 0.027 ** |
| | 128 | 3527 | 3457 | 8.310 * | 2.173 | 0.275 |
| | 164 | 3457 | 3598 | 0.998 | 6.331 | 0.100 |
| prop-2 | 225 | 1810 | 2231 | 5.260 * | 3.661 | 0.170 |
| | 236 | 2231 | 1962 | 0.995 | 0.085 | 0.946 |
| | 245 | 1962 | 1964 | 30.591 * | 12.821 | 0.050 ** |
| | 256 | 1964 | 2307 | 33.783 * | 9.171 | 0.069 |
| prop-3 | 285 | 1694 | 2285 | 5.973 * | 0.626 | 0.644 |
| | 292 | 2285 | 2344 | 0.999 | 1.553 | 0.364 |
| | 305 | 2344 | 2395 | 16.202 * | 5.765 | 0.109 |
| prop-4 | 347 | 2871 | 2791 | 26.242 * | 21.006 | 0.030 ** |
| | 355 | 2791 | 2854 | 1.003 | 17.890 | 0.036 |
| prop-5 | 4 | 3022 | 4053 | 0.991 | 2.366 | 0.255 |
| | 40 | 4053 | 3077 | 28.760 * | 13.823 | 0.046 ** |
| | 85 | 3077 | 2998 | 11.260 * | 10.779 | 0.059 |
| | 121 | 2998 | 2496 | 4.637 * | 0.856 | 0.549 |
| | 157 | 2496 | 2825 | 2.632 | 3.433 | 0.180 |
| prop-42 | 452 | 256 | 192 | 2.280 | 2.562 | 0.237 |
| | 453 | 192 | 212 | 3.000 * | 9.811 | 0.045 ** |
| jm | 1 | 7782 | 9593 | 11.689 * | 19.296 | 0.033 ** |
| | 1.2 | 9593 | 7782 | 9.422 * | 9.811 | 0.065 |

Window-of-Size-N

| Project | IVersion | #InsOWin | #InsRWin | CD | t-Value | p-Value |
|---|---|---|---|---|---|---|
| prop-1 | 9 | 4255 | 4620 | 4.887 * | 5.422 | 0.116 |
| | 44 | 8875 | 3557 | 48.356 * | 32.327 | 0.020 * |
| | 92 | 12,432 | 3527 | 3.994 * | 10.491 | 0.060 |
| | 128 | 15,959 | 3457 | 6.396 * | 6.070 | 0.104 |
| | 164 | 19,416 | 3598 | 1.069 | 12.759 | 0.050 |
| prop-2 | 225 | 1810 | 2231 | 7.216 * | 3.661 | 0.170 |
| | 236 | 4010 | 1962 | 1.006 | 2.307 | 0.260 |
| | 245 | 6003 | 1964 | 34.406 * | 15.811 | 0.040 ** |
| | 256 | 7967 | 2307 | 6.282 * | 3.787 | 0.164 |
| prop-3 | 285 | 1694 | 2285 | 4.693 * | 0.626 | 0.644 |
| | 292 | 3973 | 2344 | 0.933 | 2.208 | 0.271 |
| | 305 | 6323 | 2395 | 11.838 * | 7.441 | 0.085 |
| prop-4 | 347 | 2871 | 2791 | 39.092 * | 21.006 | 0.030 ** |
| | 355 | 5662 | 2854 | 0.953 | 11.578 | 0.055 |
| prop-5 | 4 | 3022 | 4053 | 2.627 | 2.366 | 0.255 |
| | 40 | 7075 | 3077 | 30.946 * | 16.657 | 0.038 ** |
| | 85 | 10,152 | 2998 | 10.129 * | 2.901 | 0.211 |
| | 121 | 13,150 | 2496 | 5.532 * | 2.969 | 0.207 |
| | 157 | 15,646 | 2825 | 1.210 | 7.181 | 0.088 |
| prop-42 | 452 | 256 | 192 | 1.148 | 2.562 | 0.237 |
| | 453 | 448 | 212 | 4.319 * | 14.507 | 0.044 ** |
| jm | 1 | 7782 | 9593 | 10.968 * | 19.296 | 0.033 ** |
| | 1.2 | 17,375 | 7782 | 9.069 * | 3.193 | 0.193 |

Window-of-Size-2

| Project | IVersion | #InsOWin | #InsRWin | CD | t-Value | p-Value |
|---|---|---|---|---|---|---|
| prop-1 | 9 | 4255 | 4620 | 7.540 * | 5.422 | 0.116 |
| | 44 | 8875 | 3557 | 51.009 * | 32.327 | 0.020 ** |
| | 92 | 8177 | 3527 | 13.581 * | 15.962 | 0.040 ** |
| | 128 | 7084 | 3457 | 33.327 * | 16.404 | 0.039 * |
| | 164 | 6984 | 3598 | 0.970 | 6.025 | 0.105 |
| prop-2 | 225 | 1810 | 2231 | 7.216 * | 3.661 | 0.170 |
| | 236 | 4010 | 1962 | 1.006 | 2.307 | 0.260 |
| | 245 | 4193 | 1964 | 34.360 * | 16.148 | 0.039 ** |
| | 256 | 3926 | 2307 | 9.437 * | 8.416 | 0.075 |
| prop-3 | 285 | 1694 | 2285 | 4.693 * | 0.626 | 0.644 |
| | 292 | 3973 | 2344 | 0.933 | 2.208 | 0.271 |
| | 305 | 4629 | 2395 | 11.298 * | 8.312 | 0.076 |
| prop-4 | 347 | 2871 | 2791 | 39.092 * | 21.006 | 0.030 ** |
| | 355 | 5662 | 2854 | 0.953 | 11.578 | 0.055 |
| prop-5 | 4 | 3022 | 4053 | 1.173 | 2.366 | 0.255 |
| | 40 | 7075 | 3077 | 29.492 * | 16.657 | 0.038 ** |
| | 85 | 7130 | 2998 | 9.838 * | 5.517 | 0.114 |
| | 121 | 6075 | 2496 | 4.893 * | 7.311 | 0.087 |
| | 157 | 5494 | 2825 | 1.183 | 4.408 | 0.142 |
| prop-42 | 452 | 256 | 192 | 1.148 | 2.562 | 0.237 |
| | 453 | 448 | 212 | 4.319 * | 14.507 | 0.044 ** |
| jm | 1 | 7782 | 9593 | 10.968 * | 19.296 | 0.033 ** |
| | 1.2 | 17,375 | 7782 | 9.069 * | 3.193 | 0.193 |

| Project | Old Window | Recent Window | t-Value | p-Value | Cliff’s Effect Size |
|---|---|---|---|---|---|
| prop-1 | 44 | 92 | 24.591 | 0.026 | 0.497 |
| | 92 | 128 | 23.172 | 0.027 | 0.484 |
| prop-2 | 245 | 256 | 12.821 | 0.050 | 0.437 |
| prop-4 | 347 | 355 | 21.006 | 0.030 | 0.490 |
| | 355 | 362 | 17.890 | 0.036 | 0.462 |
| prop-5 | 40 | 85 | 13.823 | 0.046 | 0.461 |
| prop-42 | 453 | 454 | 9.811 | 0.065 | 0.477 |
| jm | 1 | 1.2 | 19.296 | 0.033 | 0.464 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kabir, M.A.; Rehman, A.U.; Islam, M.M.M.; Ali, N.; Baptista, M.L. Cross-Version Software Defect Prediction Considering Concept Drift and Chronological Splitting. Symmetry 2023, 15, 1934. https://doi.org/10.3390/sym15101934
