4.1. Stability of SF1

By applying SF1 on the polynomial system

, we obtain its rational vectorial operator

, with

j-th coordinate

Then, the following result is proven.

Theorem 3. The rational operator related to the class of iterative schemes SF1 has, , , , as fixed points, being the roots of , for any value of . Moreover, they are superattracting. In addition, there exist other fixed points of , whose number depends on the value of the parameter as follows:

If or , then each component of the fixed points can be or any of the two real roots of polynomial .

If , the only fixed points are the roots of system .

Proof. From the expression of the rational function

and the separate variables of the system, we deduce that a fixed point

must satisfy

,

. So,

Then, values

satisfy this expression, and the roots of

:

,

,

,

are fixed points of the rational operator

. To analyze their stability, we calculate

,

It is clear that is the null matrix. Therefore, the fixed points are superattracting. Moreover, there are (strange) fixed points different from the roots of , satisfying . That is, the roots of , when they are real, are components of the strange fixed points of . It can be checked that when , all the roots of are complex; otherwise, there are two real roots of , which, by themselves or combined with , form the strange fixed points of . □

Besides the fixed points of , other attracting elements may exist, and these should be avoided. To detect them, if they exist, we calculate the free critical points. The proof of the following result follows directly from the eigenvalues of .

Theorem 4. The rational operator related to the iterative method SF1 has points , , , , as critical points. However, depending on the value of , the number of critical points of can be increased as follows:

If , then there exist free critical points whose components are the different combinations among the roots of polynomial and .

For values of , there are no free critical points.

Proof. Critical points of are found as those that cancel the eigenvalues of the associated Jacobian matrix, . Due to the separate variables of , these eigenvalues are the non-null components of . So, the components of critical points are and the real roots of . It can be checked that the roots of are all complex if and that there exist two real roots otherwise. □
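The separated-variables argument used in the proofs of Theorems 3 and 4 can be written out as follows. This is only a sketch: the symbols $R$, $r_j$, $x^{*}$ and $x^{c}$ are placeholder notation introduced here for illustration and may differ from the notation of the original operator.

```latex
% Assumed notation: R = (r_1, ..., r_n) is the rational operator and, because the
% system has separated variables, the j-th coordinate r_j depends on x_j only.
\[
  R(x) = \bigl(r_1(x_1), \dots, r_n(x_n)\bigr)
  \quad\Longrightarrow\quad
  R'(x) = \operatorname{diag}\bigl(r_1'(x_1), \dots, r_n'(x_n)\bigr).
\]
% The eigenvalues of R'(x) are its diagonal entries, so both notions reduce to
% scalar conditions on each coordinate:
\[
  x^{*} \text{ is a fixed point} \iff r_j(x_j^{*}) = x_j^{*} \ \text{for all } j,
  \qquad
  x^{c} \text{ is a critical point} \iff r_j'(x_j^{c}) = 0 \ \text{for all } j .
\]
% In particular, a fixed point is superattracting when R'(x^{*}) is the null matrix.
```

Under this reading, the multidimensional analysis amounts to studying a scalar rational function in each coordinate and combining the resulting values componentwise.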

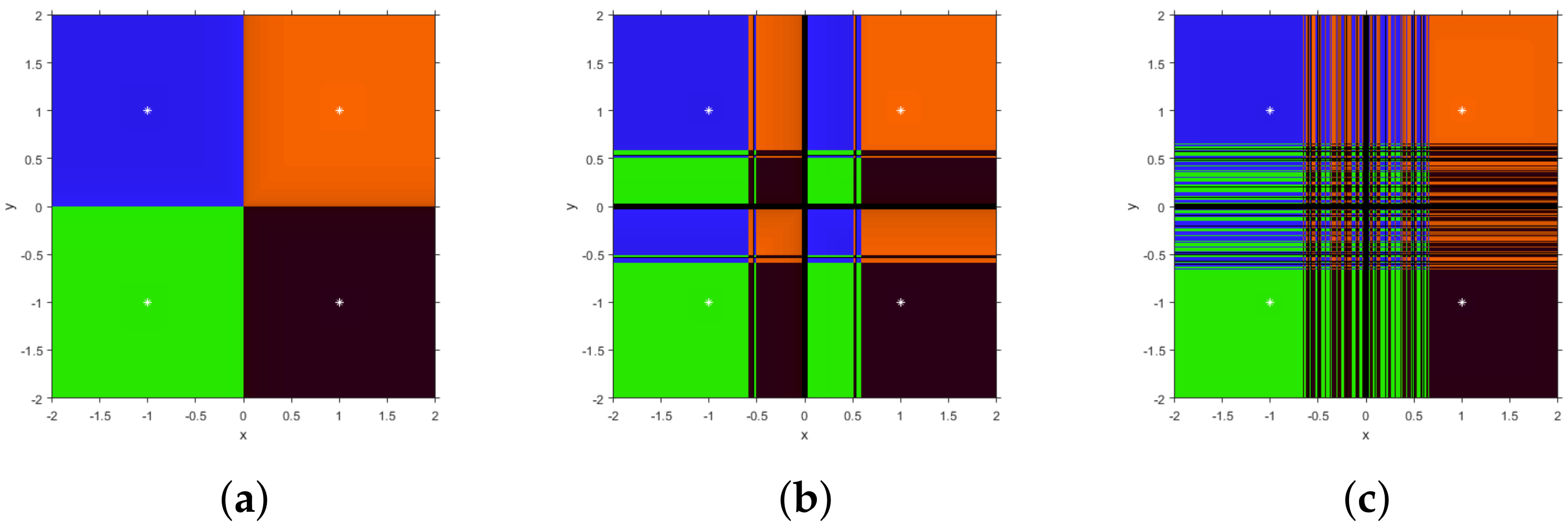

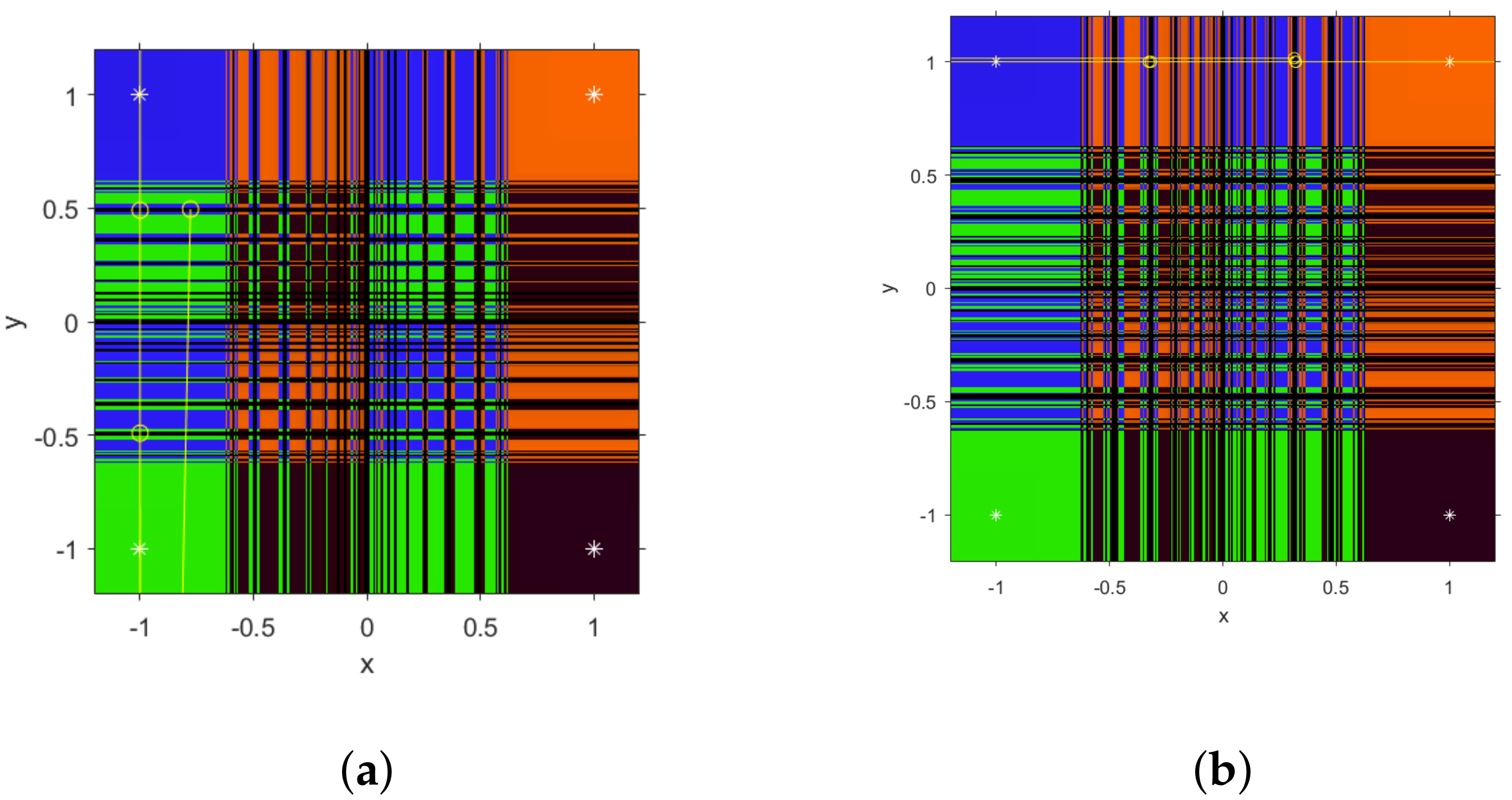

From Theorem 4, we state that convergence of SF1 on is global if , as no behavior other than convergence to the roots is allowed. In Figure 1, some dynamical planes can be seen. We use a mesh M with points, and every initial guess in this mesh is iterated a maximum of 80 times or until the exact error is lower than .

Each point of M is colored depending on the root (presented as white stars) it converges to. This color is brighter when the number of iterations needed is lower. If the maximum number of iterations is reached and no convergence to the roots is achieved, then the point is colored in black. In Figure 1a, the dynamical plane of the SF1 method acting on is presented. Let us notice that, for , it has the same performance as Newton’s method, but with fourth order of convergence (see Figure 1a). It is still very stable for , where the basins of attraction of the roots have infinitely many components (Figure 1b). In the case of , black regions of no convergence appear, but in this case they correspond to slow convergence (Figure 1c).
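The construction of a dynamical plane described above can be sketched in a few lines of Python. This is a minimal illustration, not the code used for the figures. Newton's method is used as a stand-in iteration, since the expression of the SF1 operator is not reproduced here. The test system $p_j(x) = x_j^2 - 1$, the mesh size `n`, the window `lim` and the tolerance `tol` are all assumptions; only the limit of 80 iterations and the stopping rule based on the exact error come from the text.

```python
import numpy as np

# Assumed test system (not taken from the text): p_j(x) = x_j^2 - 1,
# whose roots are the four points (+-1, +-1).
ROOTS = np.array([[1.0, 1.0], [1.0, -1.0], [-1.0, 1.0], [-1.0, -1.0]])

def newton_step(x):
    """Stand-in iteration: Newton's method on x_j^2 - 1, coordinate by coordinate."""
    return x - (x**2 - 1.0) / (2.0 * x)

def dynamical_plane(step, n=200, lim=3.0, max_iter=80, tol=1e-3):
    """Return (root_index, iterations) arrays over an n-by-n mesh of [-lim, lim]^2.

    root_index is -1 where no root was reached within max_iter iterations,
    i.e. the points that would be painted black.
    """
    axis = np.linspace(-lim, lim, n)
    X, Y = np.meshgrid(axis, axis)
    pts = np.stack([X.ravel(), Y.ravel()], axis=1)
    root_index = np.full(len(pts), -1)
    iterations = np.full(len(pts), max_iter)
    active = np.ones(len(pts), dtype=bool)
    with np.errstate(divide="ignore", invalid="ignore"):
        for k in range(1, max_iter + 1):
            pts[active] = step(pts[active])
            # Exact error: distance from each active point to each known root.
            d = np.linalg.norm(pts[active, None, :] - ROOTS[None, :, :], axis=2)
            hit = d.min(axis=1) < tol
            idx = np.flatnonzero(active)[hit]
            root_index[idx] = d.argmin(axis=1)[hit]
            iterations[idx] = k
            active[idx] = False
            if not active.any():
                break
    return root_index.reshape(n, n), iterations.reshape(n, n)
```

A plot would map `root_index` to a hue and `iterations` to brightness (fewer iterations, brighter color), with `-1` entries drawn in black. Replacing `newton_step` by the coordinate function of the method under study, for a fixed parameter value, gives the corresponding plane.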

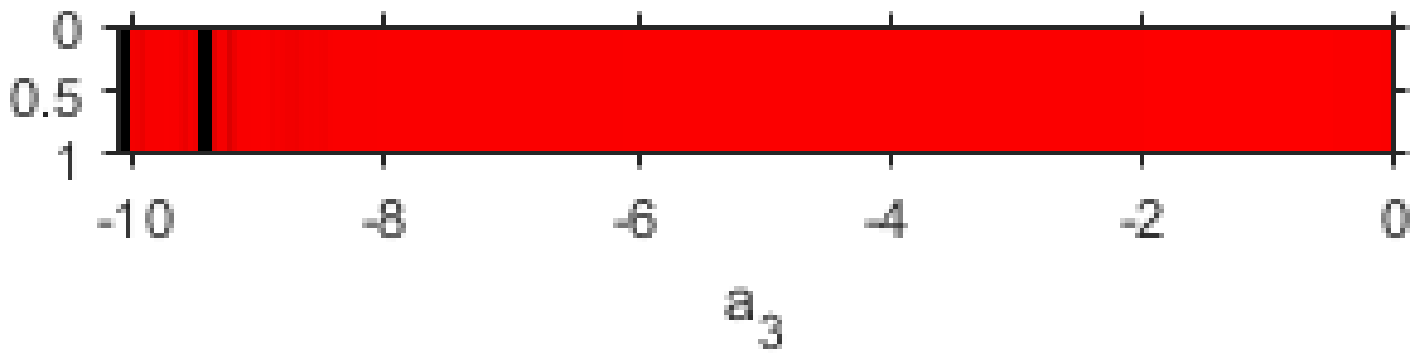

In order to find undesirable values of the parameter, we look for those members of the family that are able to converge to any attracting element other than the roots of . To this end, we use the parameter line. To generate it, a free critical point depending on is used as initial guess for each value of (see Figure 2). In the interval , where there exist free critical points, each point of a mesh of 500 points is painted in red if the critical point converges to one of the roots, or in black otherwise.
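The parameter-line procedure can be illustrated with a short Python sketch. Because the SF1 operator and its free critical points are not reproduced here, the sketch uses a hypothetical one-parameter stand-in, a relaxed Newton iteration on the assumed scalar polynomial $x^2 - 1$, whose free critical point $\sqrt{\alpha/(2-\alpha)}$ is real for $0 < \alpha < 2$. Unlike the SF classes, the roots of this stand-in are not superattracting for $\alpha \neq 1$; it only serves to show the mechanics. The tolerance `tol` is also an assumption.

```python
import math

def relaxed_newton(x, alpha):
    """Hypothetical stand-in family: x - alpha * (x^2 - 1) / (2x)."""
    return x - alpha * (x * x - 1.0) / (2.0 * x)

def free_critical_point(alpha):
    """Positive critical point of the stand-in family, real for 0 < alpha < 2."""
    return math.sqrt(alpha / (2.0 - alpha))

def parameter_line(a, b, n=500, max_iter=80, tol=1e-3):
    """Classify n parameter values inside the open interval (a, b).

    Returns a list of (alpha, colour) pairs: "red" when the orbit of the free
    critical point reaches a root (+1 or -1), "black" otherwise.
    """
    line = []
    for i in range(n):
        alpha = a + (b - a) * (i + 0.5) / n  # midpoints, so the endpoints are avoided
        x = free_critical_point(alpha)
        colour = "black"
        for _ in range(max_iter):
            if min(abs(x - 1.0), abs(x + 1.0)) < tol:
                colour = "red"
                break
            if x == 0.0 or not math.isfinite(x):
                break  # the iteration is undefined or has blown up
            x = relaxed_newton(x, alpha)
        line.append((alpha, colour))
    return line
```

For example, `parameter_line(0.5, 1.5, n=50)` classifies 50 parameter values. With the actual family, `relaxed_newton` and `free_critical_point` would be replaced by the operator and a free critical point of the method under study, and black entries would flag parameter values that deserve a closer look in a dynamical plane.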

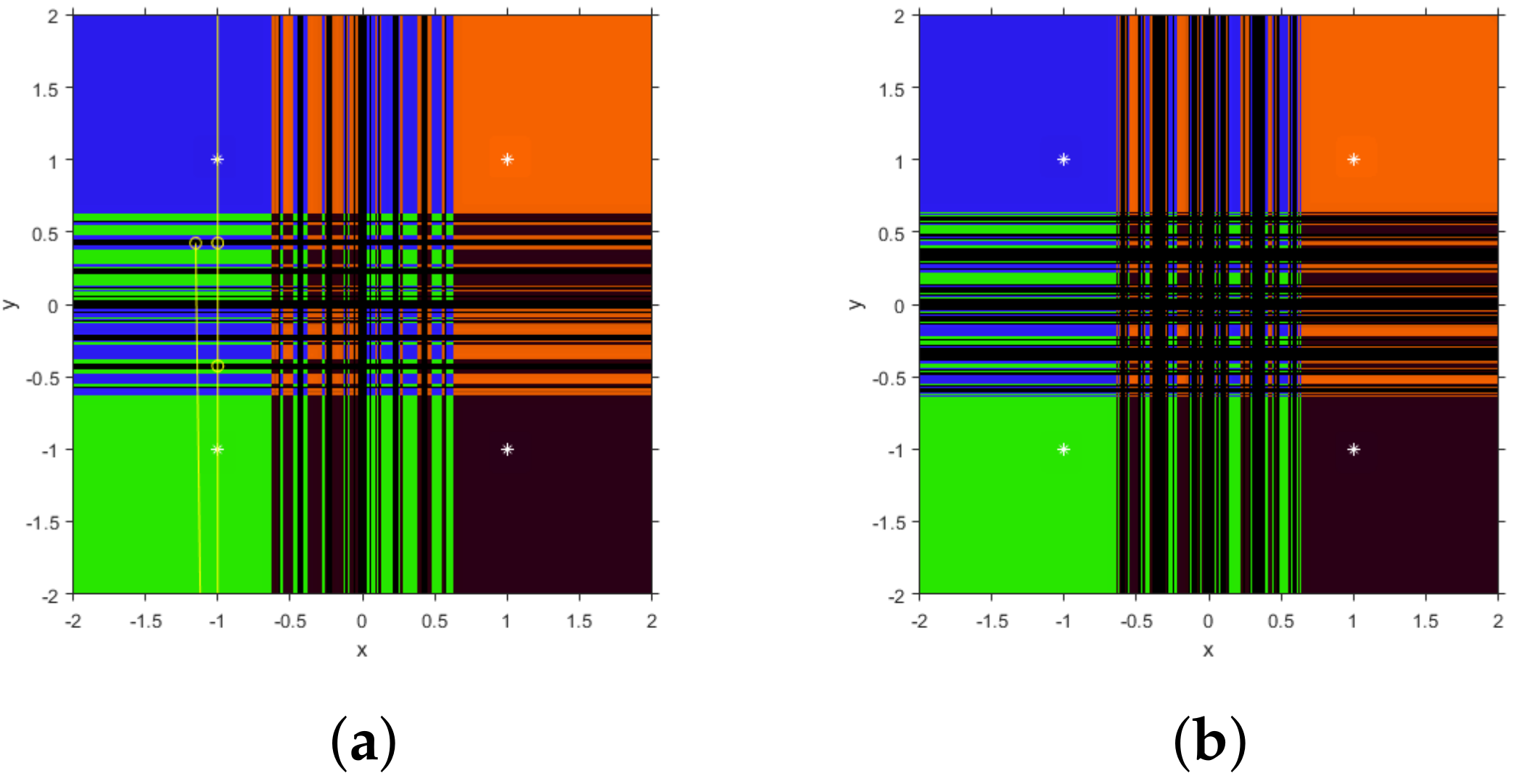

In Figure 3, different unstable cases can be found. For , several black areas of no convergence to the roots appear, corresponding to four periodic orbits of period 4 (see Figure 3a). Similar performance can be found in Figure 3b, where the black areas correspond to periodic orbits of higher period. So, the only values of for which convergence to attracting orbits can occur are those in black in the parameter line. For the rest of the real values, the only possible behavior is convergence to the roots of .

4.2. Stability of SF2

Now, let us apply the SF2 method on

and obtain its rational vectorial operator

, whose

j-th coordinate is

where

.

Therefore, we analyze the fixed points of in the next result. The proof is analogous to that of SF1, so it is omitted.

Theorem 5. The rational operator related to the class of iterative methods SF2 has the roots of , , , , as superattracting fixed points, but some strange fixed points also exist, whose components are the real roots of polynomial or the combination of one of these roots with or . The number of real roots of depends on the value of :

If or , has only two real roots and the strange fixed points are defined by combining them with themselves or with .

If , has no real roots and there are no strange fixed points.

Now, it is important to study whether free critical points exist, as stated in the next result. Let us notice that, also in this case, there exist free critical points depending on the value of that can give rise to their own basins of attraction.

Theorem 6. The rational operator related to the iterative method SF2 has points as critical points. Moreover, the following cases can be described depending on the value of :

If , or , then there are no free critical points.

Otherwise, that is, if or , the components of the free critical points are any of the two real roots of polynomial , or any of them combined with .

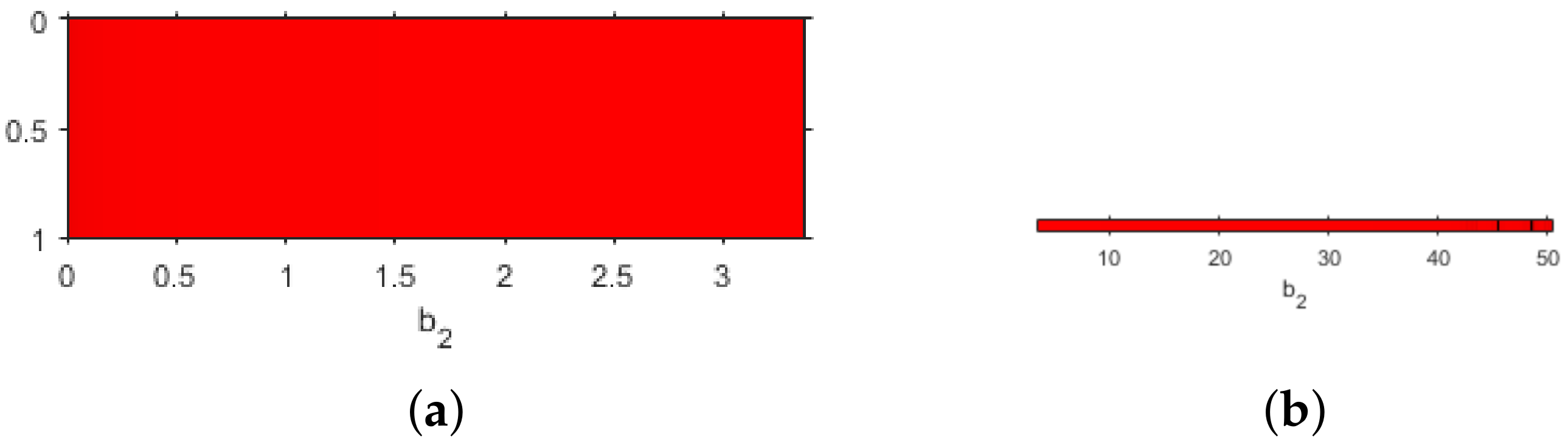

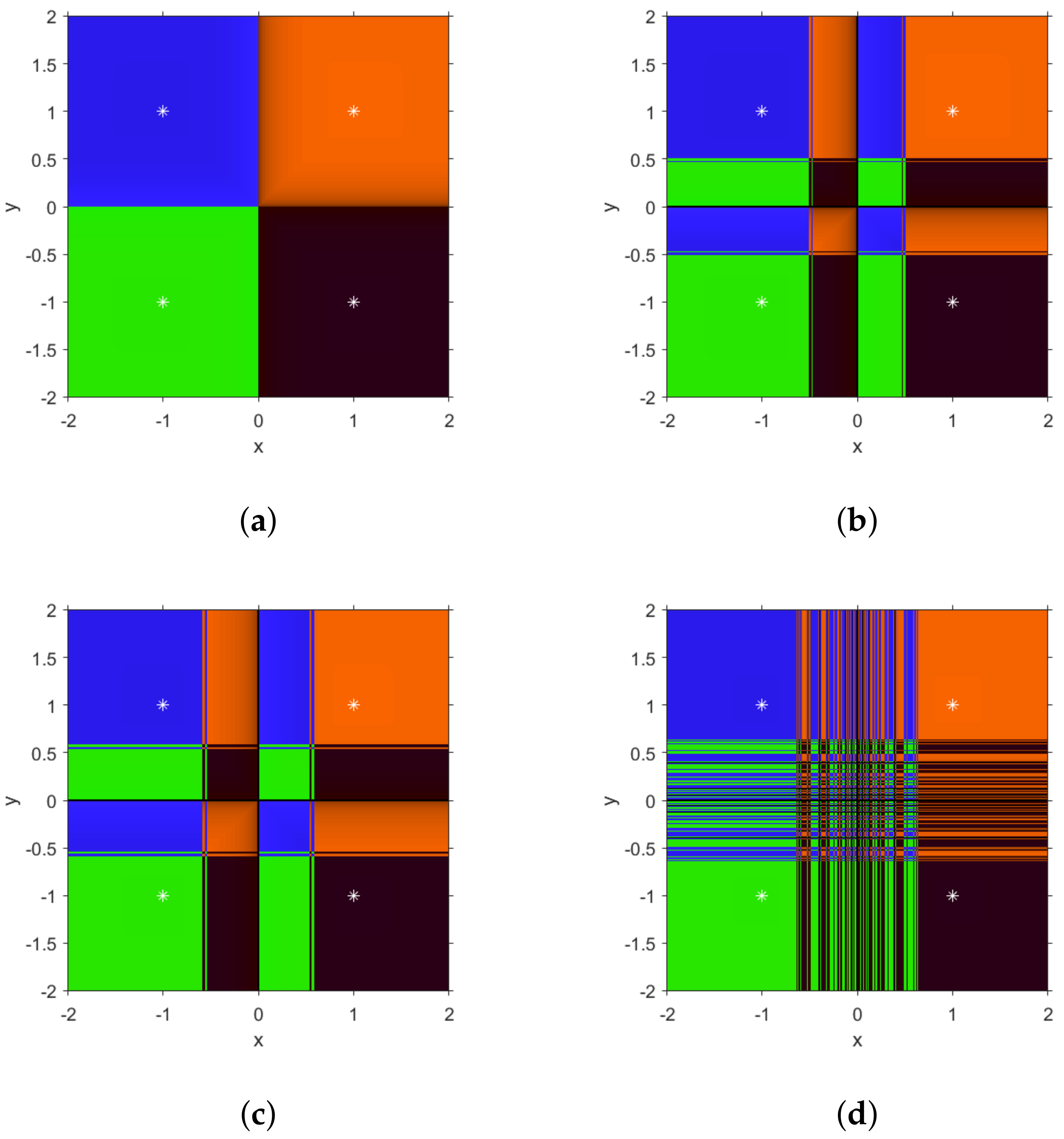

From Theorem 6, we deduce that those elements of class SF2 with values of satisfying , or can only converge to the roots of the system, achieving the most stable performance. In other cases, the parameter line helps us to deduce their behavior. In Figure 4a, the line shows convergence to the roots for both free critical points when ; however, in Figure 4b two black areas of the parameter line show the values where convergence to the roots is not guaranteed when . In this case, the same performance also appears for any free critical point in this interval.

So, we can isolate values of in these areas of the parameter line when looking for unstable behavior, and choose any other value of the parameter for stable behavior. In Figure 5, we observe the dynamical planes corresponding to the SF2 method acting on (Figure 5a). We observe that in both cases the complexity of the boundary between the basins of convergence is higher than in stable elements of SF2 and, in the case of Figure 5b, a black area of slower convergence (or even divergence) appears. The first case, for , shows in yellow a periodic orbit of period 4, close to one of the roots; specifically, this orbit is and there exist three other symmetric ones for this value of . In Figure 5b, a similar performance is observed, with black lines corresponding to the basin of attraction of the periodic orbit of period 6 and other symmetric ones in the vertical lines containing the roots.
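Attracting periodic orbits such as those mentioned above can be identified numerically by iterating from an initial guess inside a non-convergent region, discarding a transient, and checking after how many further steps the orbit returns to the same point. The following Python sketch shows one way to do this; the function name `detect_period` and the values of `burn_in`, `max_period` and `tol` are illustrative assumptions, and the two maps in the usage note are toy examples rather than the SF2 operator.

```python
import math

def detect_period(step, x0, burn_in=500, max_period=12, tol=1e-8):
    """Iterate `step` from x0, discard a transient of `burn_in` iterations, and
    return the smallest q <= max_period such that applying `step` q more times
    returns to the same point (within tol). Returns None if no such q is found.

    `step` must map a tuple of floats to a tuple of floats.
    """
    x = tuple(x0)
    for _ in range(burn_in):
        x = step(x)
    y = x
    for q in range(1, max_period + 1):
        y = step(y)
        if math.dist(x, y) < tol:
            return q
    return None
```

As a usage example, a quarter turn of the plane, `lambda p: (-p[1], p[0])`, started at `(1.0, 0.0)` is reported with period 4, while Newton's method on the assumed system $x_j^2 - 1$ started at `(2.0, -3.0)` settles on a root and is reported with period 1. A result of `None` suggests slow convergence, divergence, or a period above `max_period`.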

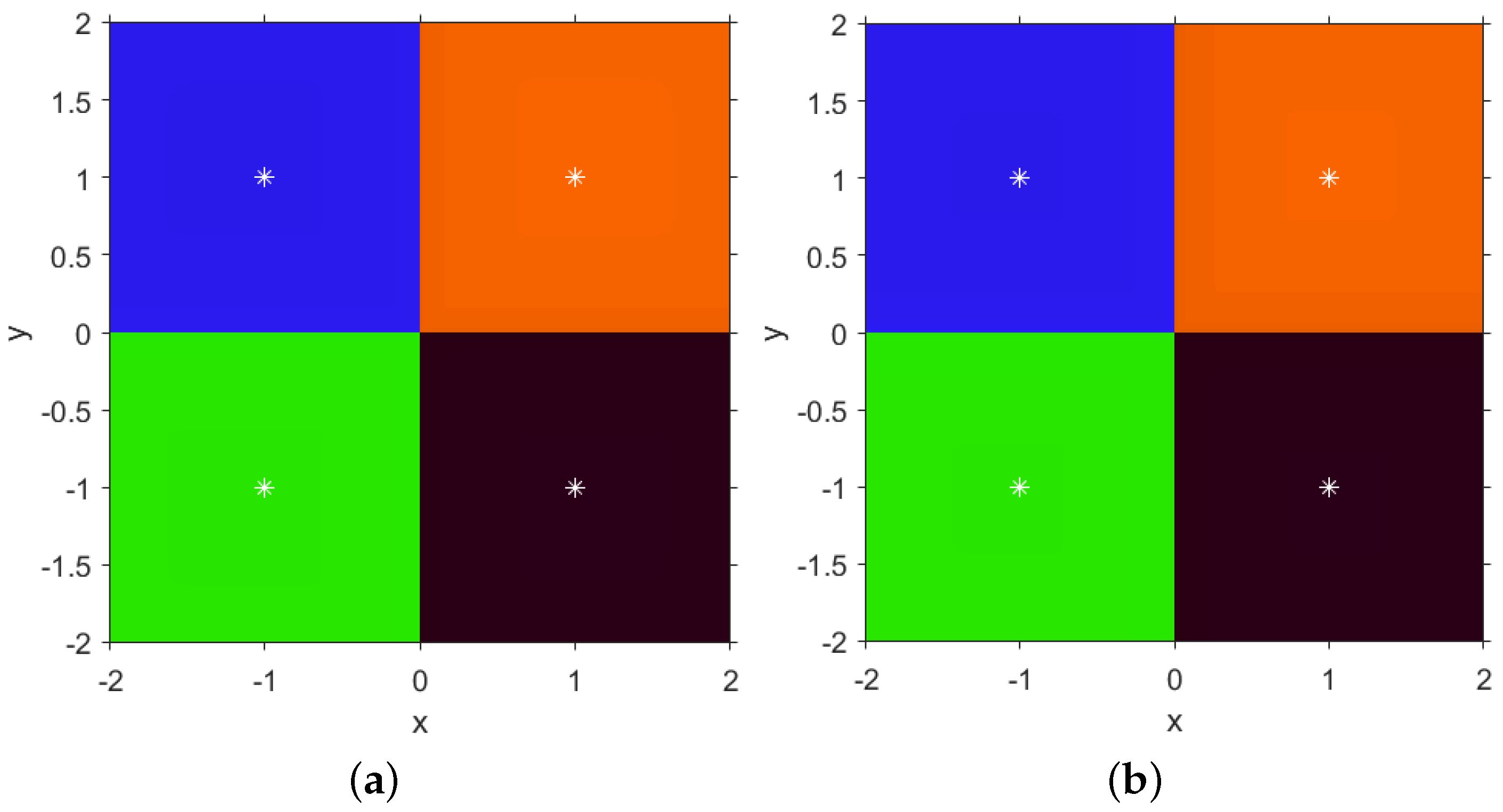

Nevertheless, the most common performance of this class of iterative methods is stability, with convergence to the roots as the only possible behavior. Some examples of this behavior can be observed in Figure 6 for different values of with no free critical points (Figure 6a,b,d) or in the red areas of the parameter lines (Figure 6c).

4.3. Stability of SF3

Finally, let us apply the SF3 method on

and obtain its rational multidimensional operator

, whose

j-th coordinate is

where

and

. Therefore, the results that give us information about the stability

appear below. The proofs are omitted as they are similar to the previous ones.

Theorem 7. The rational operator related to the iterative method SF3 has only the roots of , , , and as fixed points (being superattracting) if . However, there also exist strange fixed points, depending on negative values of :

If , the entries of the strange fixed points are the four real roots of polynomial , combined with .

If , the entries of the strange fixed points are the two real roots of , combined with .

Now, it is important to study whether free critical points exist, as stated in the next result. In this case there also exist points, different from the roots of , that make both eigenvalues of null.

Theorem 8. The rational operator related to the iterative method SF3 has points , as the only critical points if . Otherwise, the number of free critical points depends on the roots of polynomials of eighteenth degree.

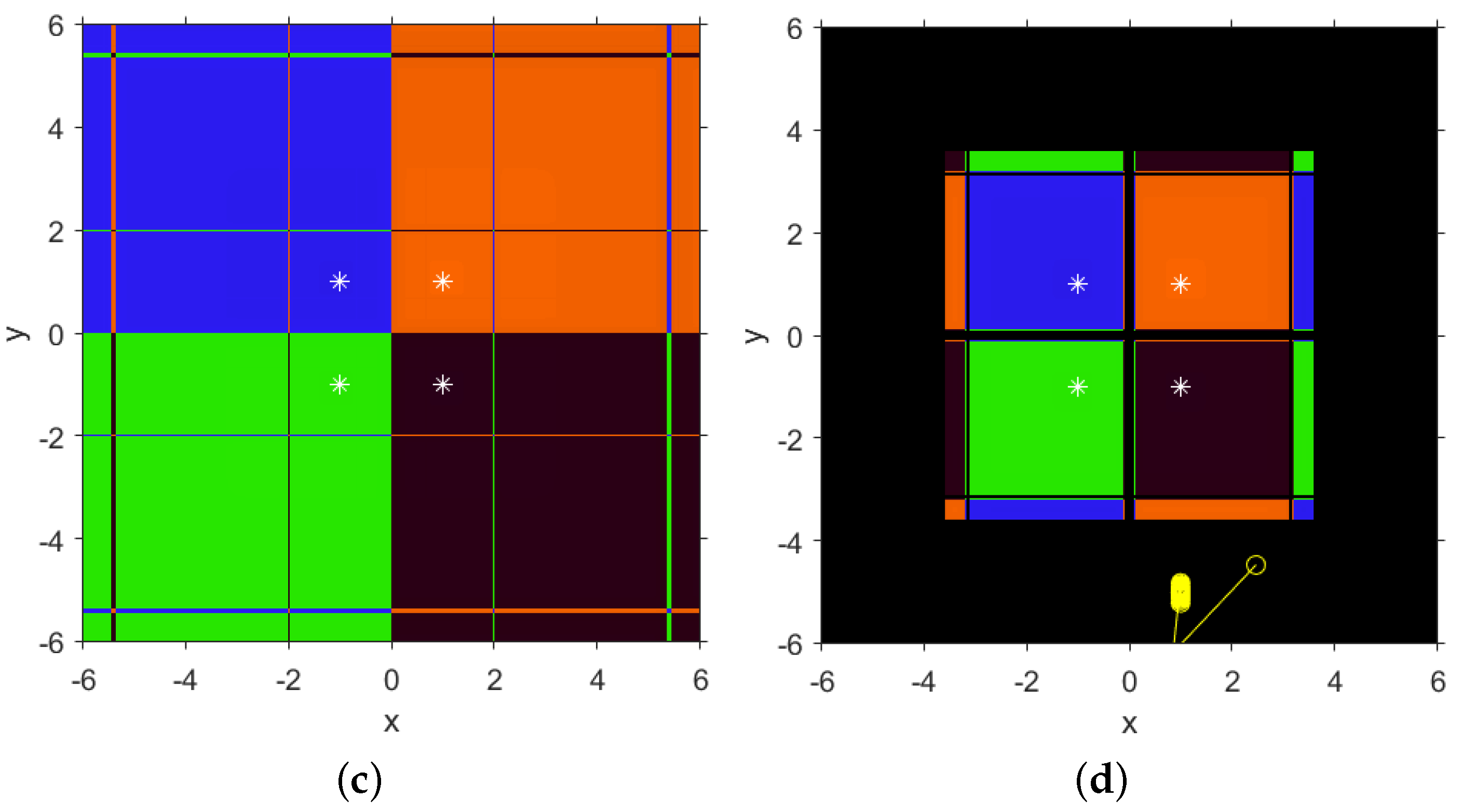

In Figure 7, the dynamical planes corresponding to the SF3 method acting on can be observed. Stable behavior corresponds to positive or null values of (Figure 7a,b), while stable or unstable performance is found for some negative values of , as can be seen in Figure 7c,d. Several attracting strange fixed points have been found for , with components and/or , and their basins of attraction appear in black.