Truth-Telling in a Sender–Receiver Game: Social Value Orientation and Incentives

Abstract

1. Introduction

1.1. Reward Amount

1.2. Social Preference

1.3. Interactive Play

1.4. Current Study

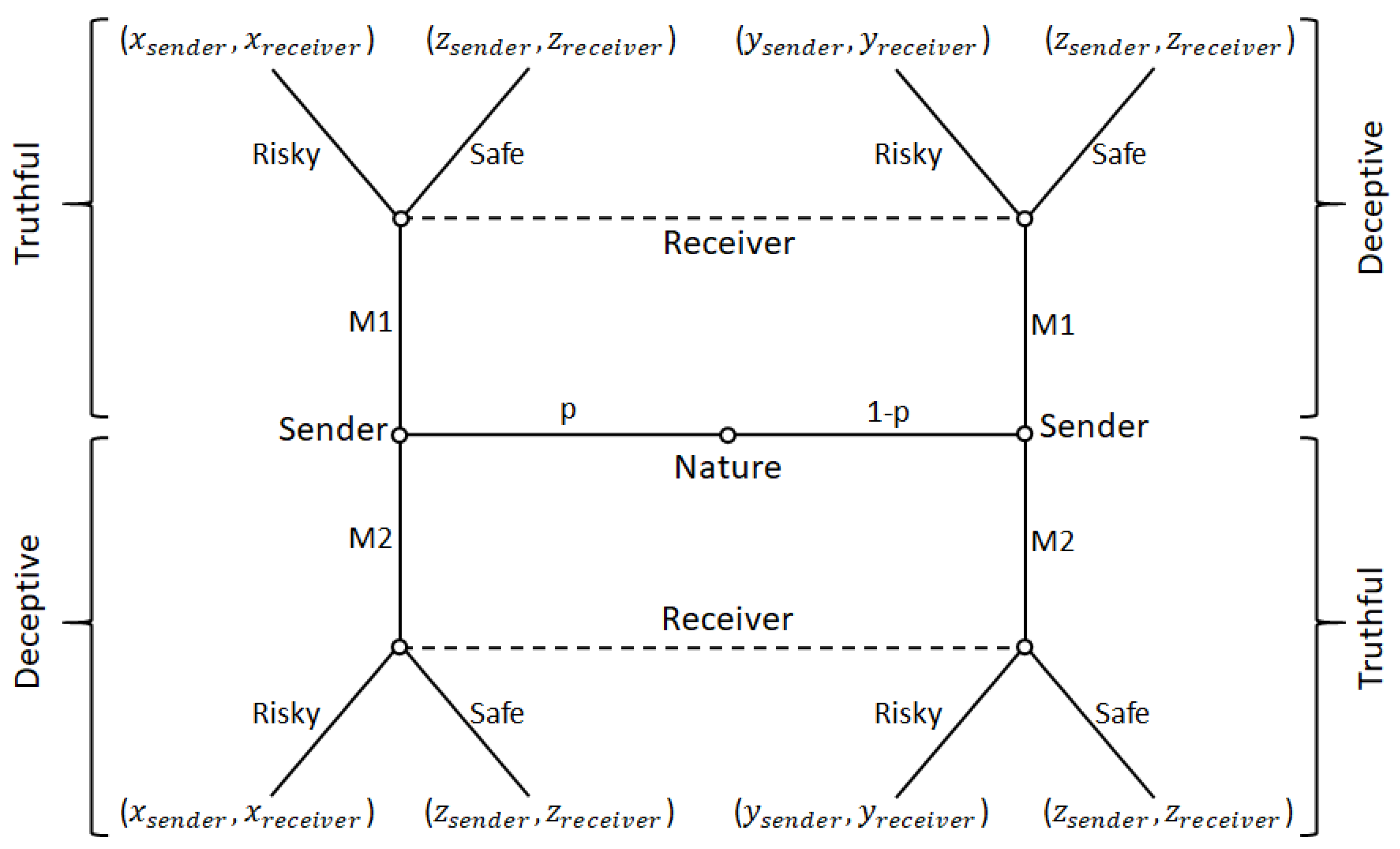

2. A Sender–Receiver Game of Deception

3. Experiment 1

3.1. Methods

3.1.1. Participants

3.1.2. Design

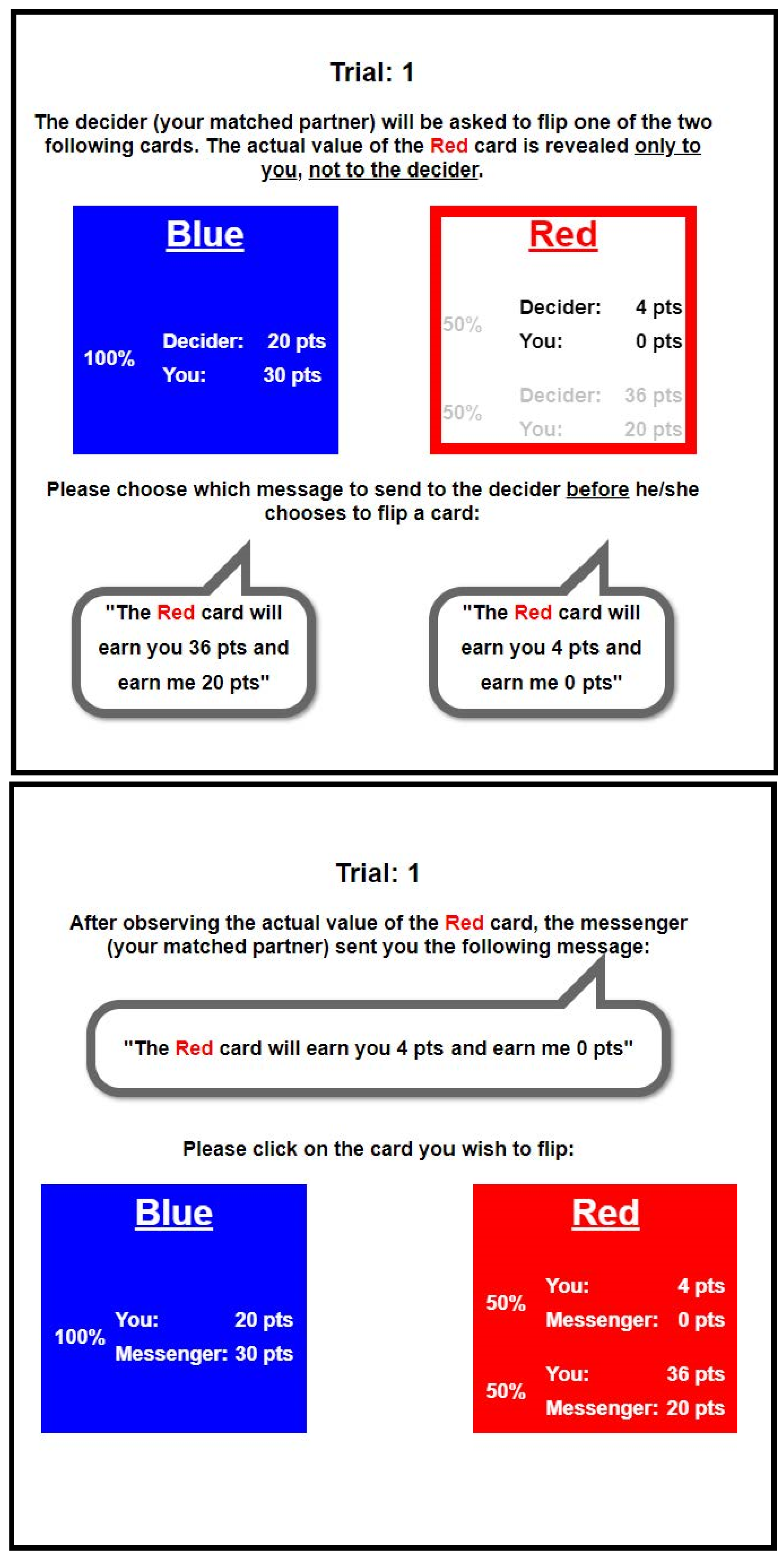

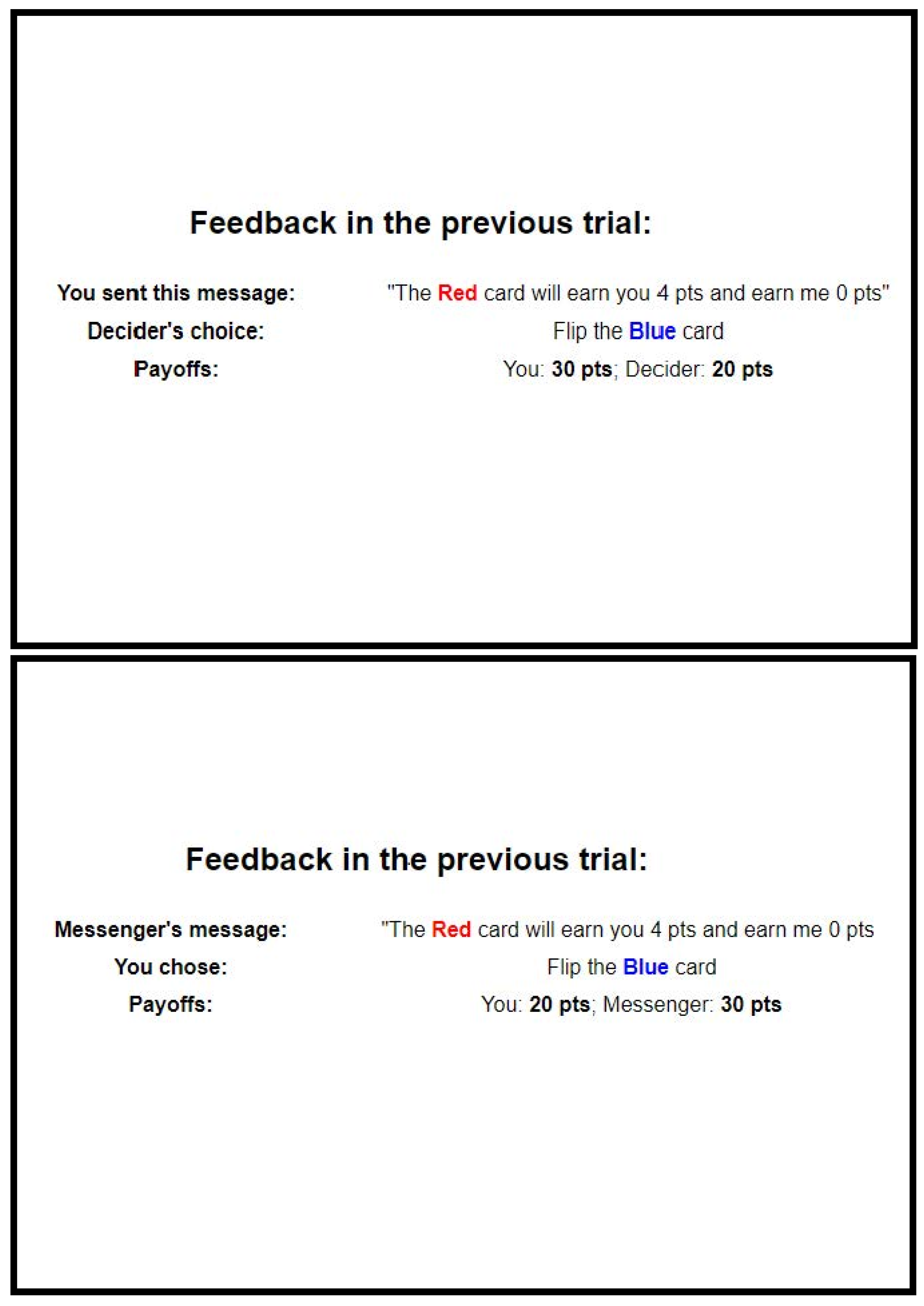

Interactive Sender–Receiver Game

False: “The red card will earn you 36 points and earn me 20 points.”

True: “The red card will earn you 4 points and earn me 0 points.”

Social Value Orientation Scale

3.1.3. Procedure

3.2. Results

3.2.1. Lying Propensity and Risk Taking

Sender’s Lying Propensity

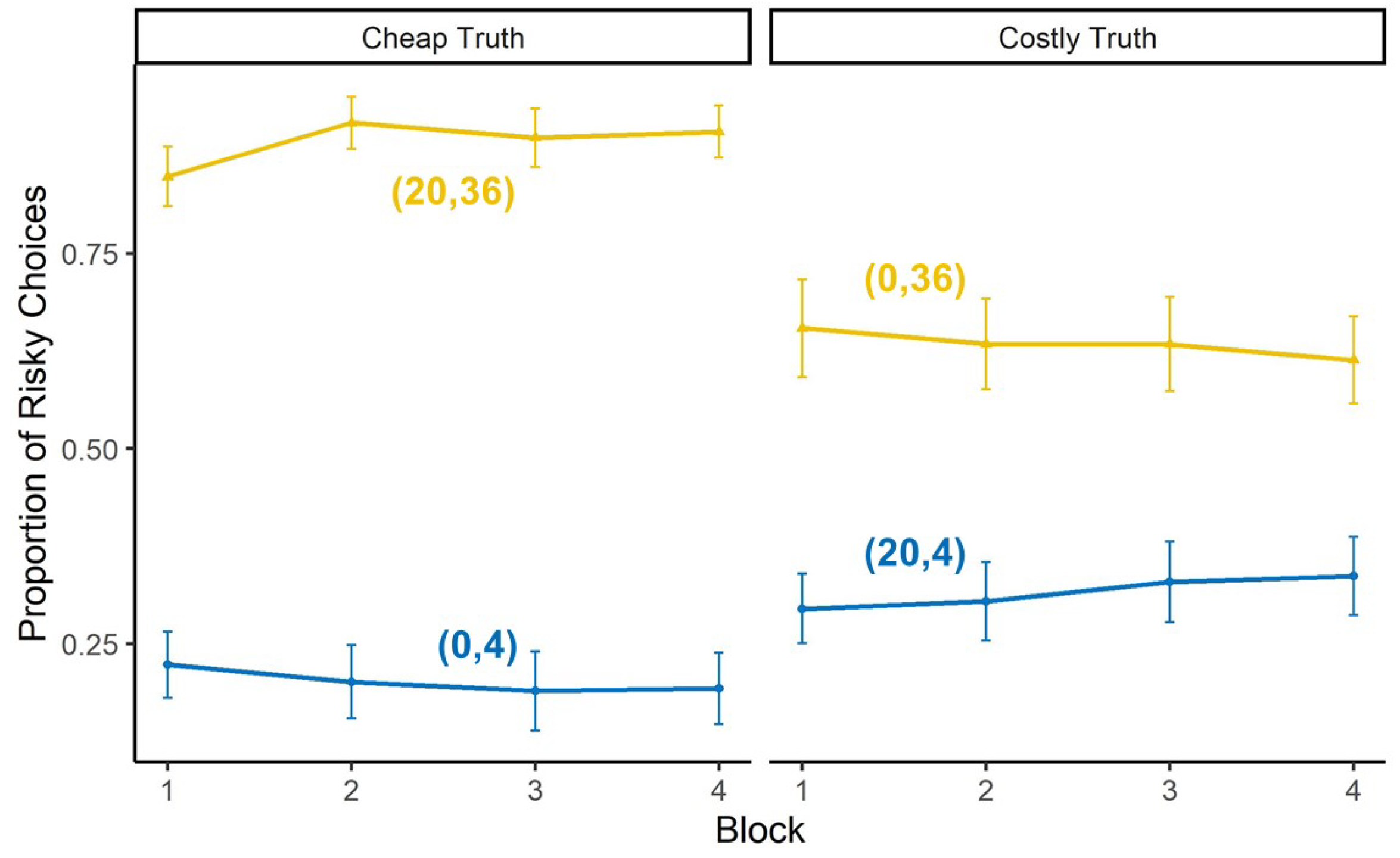

Receiver’s Risk Taking

3.2.2. Interactive Play

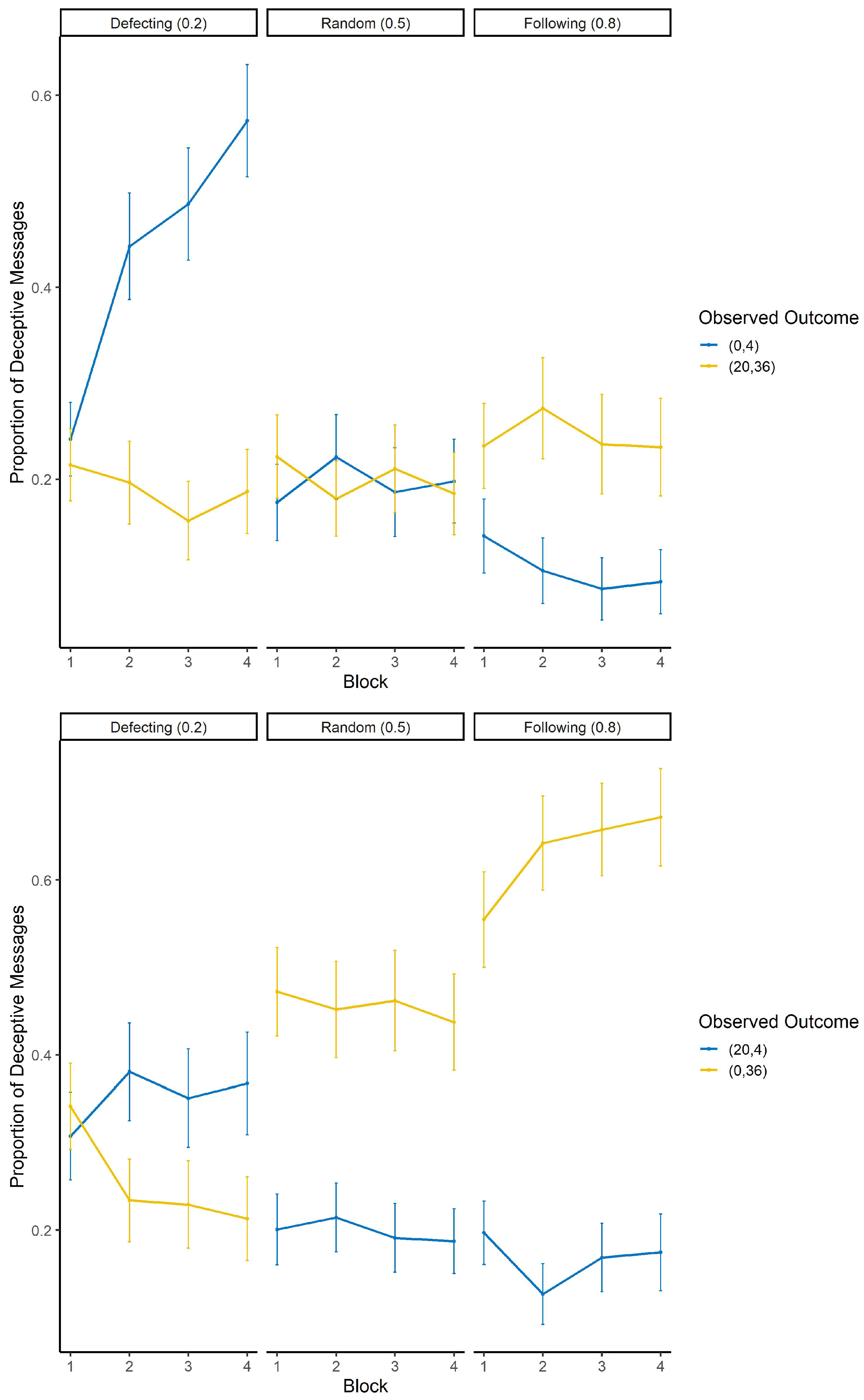

3.3. Discussion

4. Experiment 2

4.1. Methods

4.1.1. Participants

4.1.2. Design

Sender Bot

Receiver Bot

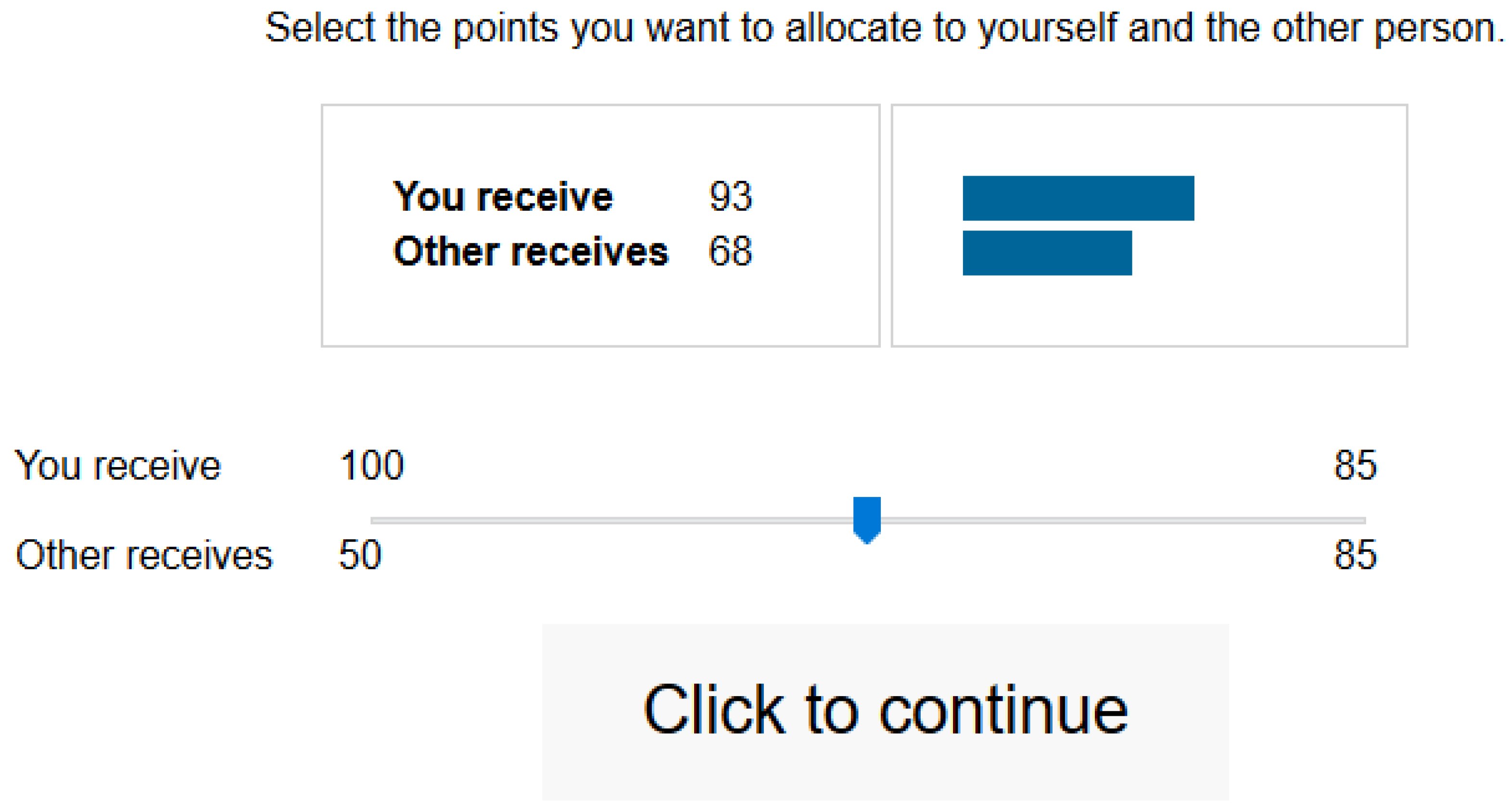

Social and Risk Preference

4.1.3. Procedure

4.2. Results

4.2.1. Human Sender

Cheap Truth

Costly Truth

4.2.2. Human Receiver

Cheap Truth

Costly Truth

4.3. Discussion

5. General Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix A.1. Theoretical Analysis


Appendix A.2. SVO Consistency

- Item 1: prosocial vs. competitive

- Item 2: competitive vs. individualist

- Item 3: altruist vs. prosocial

- Item 4: altruist vs. competitive

- Item 5: individualist vs. altruist

- Item 6: individualist vs. prosocial

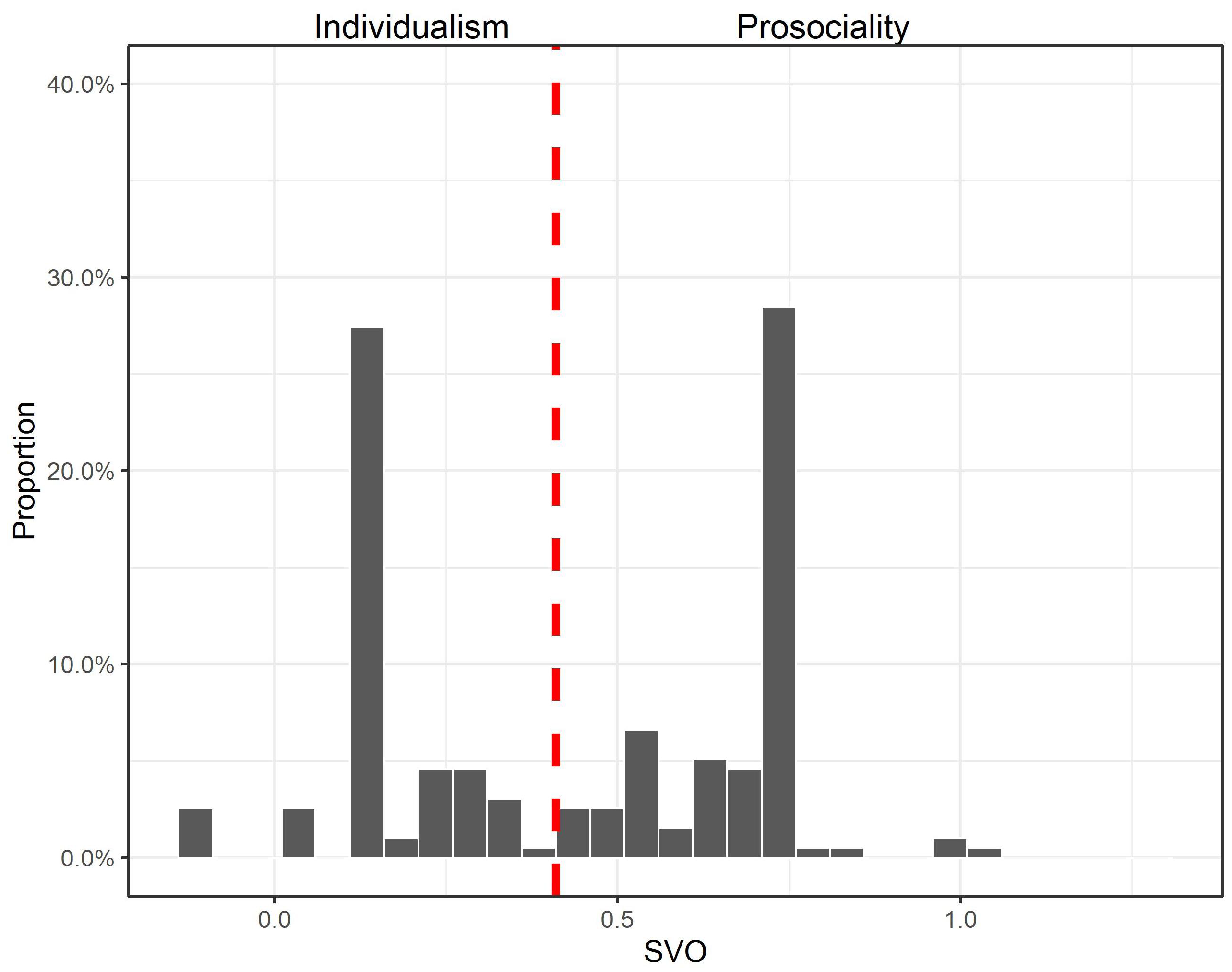

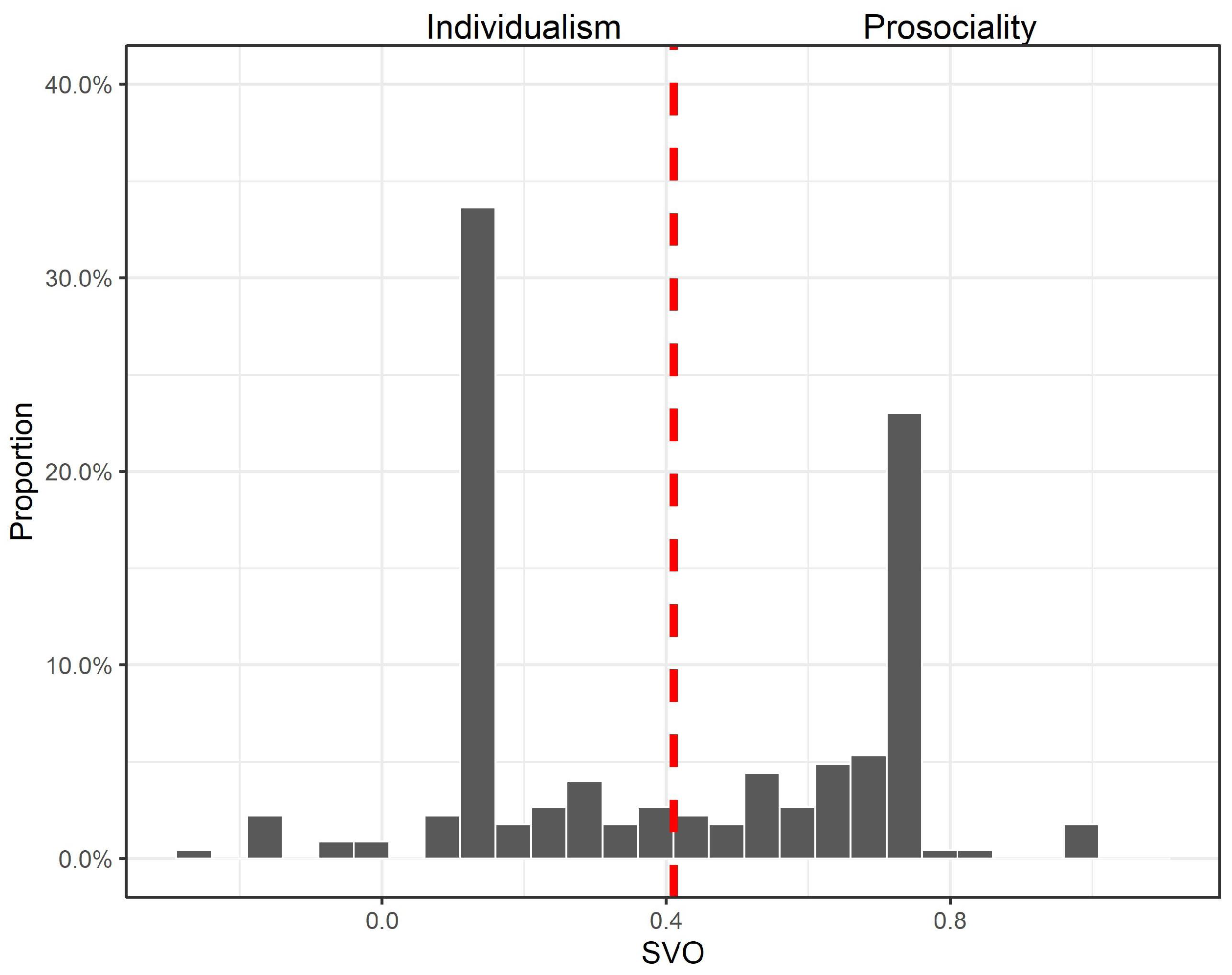

Appendix A.3. Distribution of Participants’ Social Preference


| Condition | Payoffs Risky Option (first outcome) | Payoffs Risky Option (second outcome) | EV Risky | Payoffs Safe Option |
|---|---|---|---|---|
| Cheap Truth | (20,36) | (0,4) | (10,20) | (30,20) |
| Costly Truth | (0,36) | (20,4) | (10,20) | (30,20) |
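The EV Risky column in the table above can be checked with a short calculation. The sketch below rests on two assumptions that the table does not state: each pair is read as (sender, receiver), an order inferred from the example messages in the Design section, and the two risky outcomes are treated as equally likely. The names `RISKY`, `SAFE`, and `expected_payoffs` are illustrative only.

```python
from fractions import Fraction

# Payoff pairs copied from the table; the (sender, receiver) order is an assumption.
RISKY = {
    "Cheap Truth": [(20, 36), (0, 4)],
    "Costly Truth": [(0, 36), (20, 4)],
}
SAFE = (30, 20)


def expected_payoffs(outcomes, probs=None):
    """Return the expected (sender, receiver) payoff over the given outcomes.

    Assumption: outcomes are equally likely unless `probs` is supplied.
    """
    if probs is None:
        probs = [Fraction(1, len(outcomes))] * len(outcomes)
    sender = sum(p * s for p, (s, _) in zip(probs, outcomes))
    receiver = sum(p * r for p, (_, r) in zip(probs, outcomes))
    return sender, receiver


if __name__ == "__main__":
    for condition, outcomes in RISKY.items():
        ev = expected_payoffs(outcomes)
        print(condition, "EV risky:", tuple(int(v) for v in ev), "safe:", SAFE)
```

Under these assumptions both conditions give an expected risky payoff of (10, 20), matching the EV Risky column. The second entry equals the second entry of the safe option, while the first entry is 20 points lower than the safe option's 30.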

| Condition | (, ) | (, ) Truthful Bot | (, ) Random Bot | (, ) Deceptive Bot |
|---|---|---|---|---|
| Cheap Truth | (0,4) | (20,36) | (20,36) | (20,36) |
| Costly Truth | (20,4) | (0,36) | (0,36) | (0,36) |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, H.; Moisan, F.; Aggarwal, P.; Gonzalez, C. Truth-Telling in a Sender–Receiver Game: Social Value Orientation and Incentives. Symmetry 2022, 14, 1561. https://doi.org/10.3390/sym14081561
