A Neural Beamspace-Domain Filter for Real-Time Multi-Channel Speech Enhancement

Abstract

1. Introduction

- The target signal can be pre-extracted by the fixed beamformer, and its dominant part should lie within at least one directional beam, which serves as an SNR-improved target prior to guide the subsequent beam fusion process. An interference-dominant beam is obtained when a beam steers towards the interference direction, providing an interference prior that helps discriminate the two in a spatial-spectral sense. In addition, the target and interference components may coexist within each beam, and their distributions vary dynamically because of their spectral differences. The beam set can therefore be viewed as a reasonable candidate for indicating the spectral and spatial characteristics.

- In addition to designing the beam pattern in the spatial domain, the proposed system can also learn the spectral characteristics of the interference components and cancel residual noise in the spectral domain. It thus performs enhancement in both the spatial and spectral domains, which can reach a higher performance upper bound than a neural spatial network that filters only in the spatial domain.

- From the optimization standpoint, a small error in the beamforming weights may seriously distort the beam pattern, whereas an error in the beamspace-domain weights only leaks some undesired components, which has a much less direct impact on system performance. The beamspace-domain filter is therefore more robust.

- We propose a novel multi-channel speech enhancement scheme in the beamspace domain. To the best of our knowledge, this is the first work to show the effectiveness of a neural beamspace-domain filter for multi-channel speech enhancement.

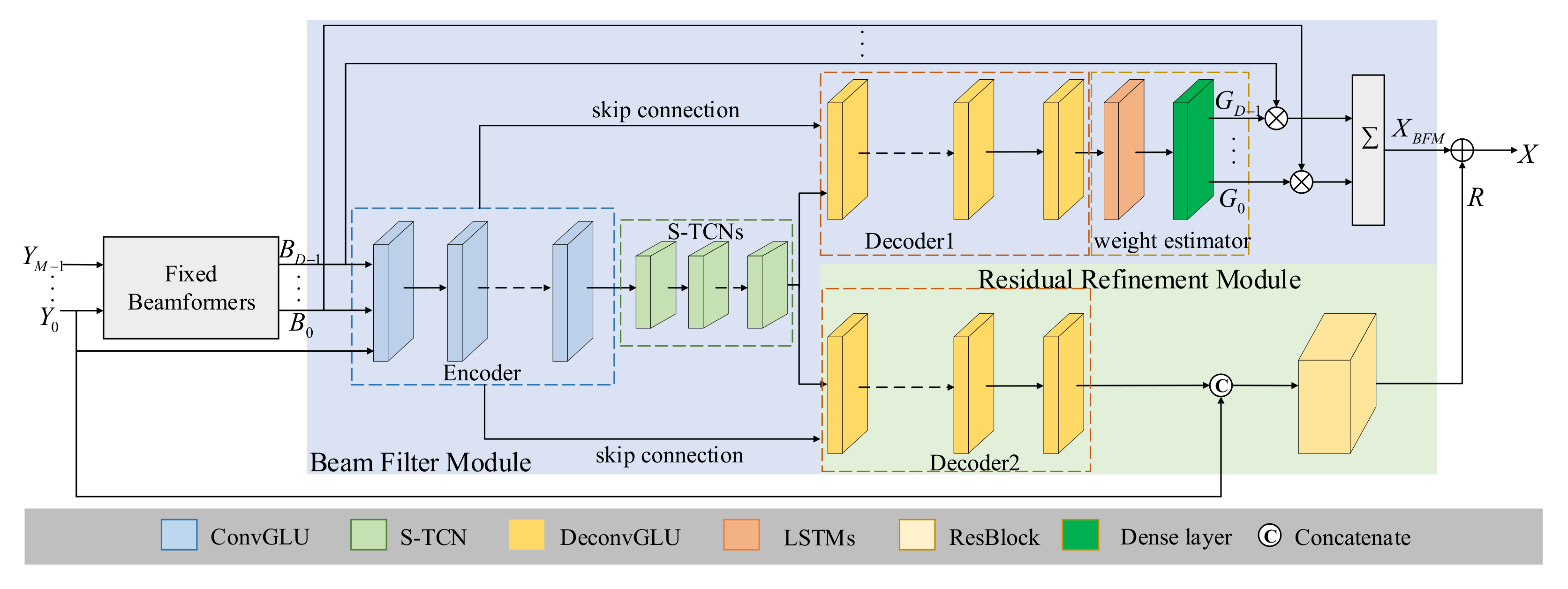

- We introduce the residual U-Net into the convolutional encoder-decoder architecture to improve its feature representation capability. A weight estimator module is designed to predict the time-frequency bin-level filter coefficients, and a residual refinement module is designed to refine the estimated spectrum.

- We validate the proposed framework by comparing it with state-of-the-art algorithms in both the directional interference and diffuse noise scenarios. The evaluation results demonstrate the superiority and potential of the proposed method.

2. Materials and Methods

2.1. Signal Model

- is the STFT of .

- is the STFT of .

- is the STFT of .

- refers to the index of frames.

- refers to the index of frequency bins. Considering the symmetry of in frequency, is chosen throughout this paper.

- is the direct-path signal of the speech source and its early reflections.

- is the late reverberant speech.

- denotes the parameter set of the mapping function .
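The definitions above refer to STFT-domain signals indexed by frame and frequency bin, with only part of the frequency axis kept because of spectral symmetry. The following minimal sketch illustrates both points with NumPy. It assumes a plain additive mixture (speech plus noise), and the frame length, hop size, channel count, and function name `stft` are hypothetical choices made for illustration, not values taken from the paper.

```python
import numpy as np

def stft(x, n_fft=320, hop=160):
    """One-sided STFT of a (channels, samples) array.

    Returns an array of shape (channels, frames, n_fft // 2 + 1).
    For real input the spectrum is conjugate-symmetric, so the
    negative-frequency half carries no extra information and
    np.fft.rfft discards it.
    """
    window = np.hanning(n_fft)
    n_frames = 1 + (x.shape[-1] - n_fft) // hop
    frames = np.stack(
        [x[..., i * hop : i * hop + n_fft] for i in range(n_frames)], axis=-2
    )
    return np.fft.rfft(frames * window, axis=-1)

# Hypothetical 4-channel signals (random stand-ins for speech and noise).
rng = np.random.default_rng(0)
speech = rng.standard_normal((4, 1600))
noise = 0.5 * rng.standard_normal((4, 1600))

Y = stft(speech + noise)  # mixture
S = stft(speech)          # speech component
N = stft(noise)           # noise component

# The STFT is linear, so an additive time-domain model stays additive
# in the time-frequency domain.
stft_is_additive = np.allclose(Y, S + N)
```

With a 320-point frame, the one-sided spectrum has 161 bins per frame, which is the kind of reduction the symmetry remark in the list refers to.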

2.2. Forward Stream

- is the function of FBM.

- denotes the parameter set.

- is the complex filter estimated by BFM.

- is the complex residual estimated by RRM.
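The forward stream can be sketched as follows, under the assumption that the complex filter from BFM is applied to the beams bin by bin, the weighted beams are summed, and the complex residual from RRM is then added. The function names, tensor layouts, and array sizes are hypothetical, and random arrays stand in for the network outputs.

```python
import numpy as np

def fixed_beamform(mixture, fixed_weights):
    """FBM stand-in: project microphone signals onto a set of fixed beams.

    mixture:       (M, T, F) complex STFT of M microphones
    fixed_weights: (B, M, F) complex weights, one set per beam
    returns:       (B, T, F) complex beam signals
    """
    return np.einsum("bmf,mtf->btf", fixed_weights.conj(), mixture)

def beamspace_filter(beams, beam_filter, residual):
    """Fuse the beams with a bin-level complex filter, then add a residual.

    beams, beam_filter: (B, T, F) complex
    residual:           (T, F) complex
    returns:            (T, F) complex enhanced spectrum
    """
    return np.sum(beam_filter * beams, axis=0) + residual

# Hypothetical sizes: 4 microphones, 10 beams, 9 frames, 161 bins.
rng = np.random.default_rng(0)
M, B, T, F = 4, 10, 9, 161
mixture = rng.standard_normal((M, T, F)) + 1j * rng.standard_normal((M, T, F))
fixed_weights = rng.standard_normal((B, M, F)) + 1j * rng.standard_normal((B, M, F))

beams = fixed_beamform(mixture, fixed_weights)

# Sanity case: a filter that selects beam 0 everywhere, with zero residual,
# returns beam 0 unchanged.
select_first = np.zeros((B, T, F), dtype=complex)
select_first[0] = 1.0
enhanced = beamspace_filter(beams, select_first, np.zeros((T, F), dtype=complex))
```

In the actual system the filter and the residual would be predicted by the networks. The sanity case only shows that the fusion step reduces to beam selection when the filter is one-hot.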

2.3. Fixed Beamforming Module

- is the steering vector.

- is the conjugate transpose operator.

- denotes the covariance matrix of a diffuse noise field with the diagonal loading to control the white noise gain.

- is the distance between the i-th and j-th microphones.

- c is the speed of sound.

- is the sampling rate.
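The quantities listed above (steering vector, diffuse-noise covariance with diagonal loading, inter-microphone distances, speed of sound) are the ingredients of a superdirective beamformer. The sketch below assumes the standard closed form, w = Γ⁻¹d / (dᴴΓ⁻¹d), with a sinc-shaped diffuse coherence matrix Γ. The array geometry, loading value, frequency, beam angles, and all function names are hypothetical illustrations rather than the paper's configuration.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s (assumed value)

def diffuse_coherence(mic_pos, freq_hz, loading=1e-2):
    """Coherence matrix of a spherically diffuse noise field, diagonally loaded."""
    dist = np.linalg.norm(mic_pos[:, None, :] - mic_pos[None, :, :], axis=-1)
    # np.sinc(x) = sin(pi x) / (pi x), so this equals sin(k d) / (k d)
    # with wavenumber k = 2 pi f / c.
    gamma = np.sinc(2.0 * freq_hz * dist / SPEED_OF_SOUND)
    return gamma + loading * np.eye(mic_pos.shape[0])

def steering_vector(mic_pos, freq_hz, azimuth_rad):
    """Far-field steering vector for a source in the horizontal plane."""
    look = np.array([np.cos(azimuth_rad), np.sin(azimuth_rad), 0.0])
    delays = mic_pos @ look / SPEED_OF_SOUND
    return np.exp(2j * np.pi * freq_hz * delays)

def superdirective_weights(mic_pos, freq_hz, azimuth_rad, loading=1e-2):
    """w = Gamma^{-1} d / (d^H Gamma^{-1} d): distortionless in the look direction."""
    gamma = diffuse_coherence(mic_pos, freq_hz, loading)
    d = steering_vector(mic_pos, freq_hz, azimuth_rad)
    gamma_inv_d = np.linalg.solve(gamma, d)
    return gamma_inv_d / (d.conj() @ gamma_inv_d)

# Hypothetical 4-microphone uniform linear array with 4 cm spacing.
mics = np.stack([np.arange(4) * 0.04, np.zeros(4), np.zeros(4)], axis=1)
angles = np.linspace(0.0, np.pi, 10)  # 10 look directions
freq = 1000.0                         # Hz

beam_weights = np.stack([superdirective_weights(mics, freq, a) for a in angles])
responses = np.array(
    [np.vdot(w, steering_vector(mics, freq, a)) for w, a in zip(beam_weights, angles)]
)
```

Each entry of `responses` is the beamformer's gain towards its own look direction, which the normalization fixes at one. A larger `loading` trades directivity for white noise gain.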

2.4. Beam Filter Module

2.4.1. CED Architecture

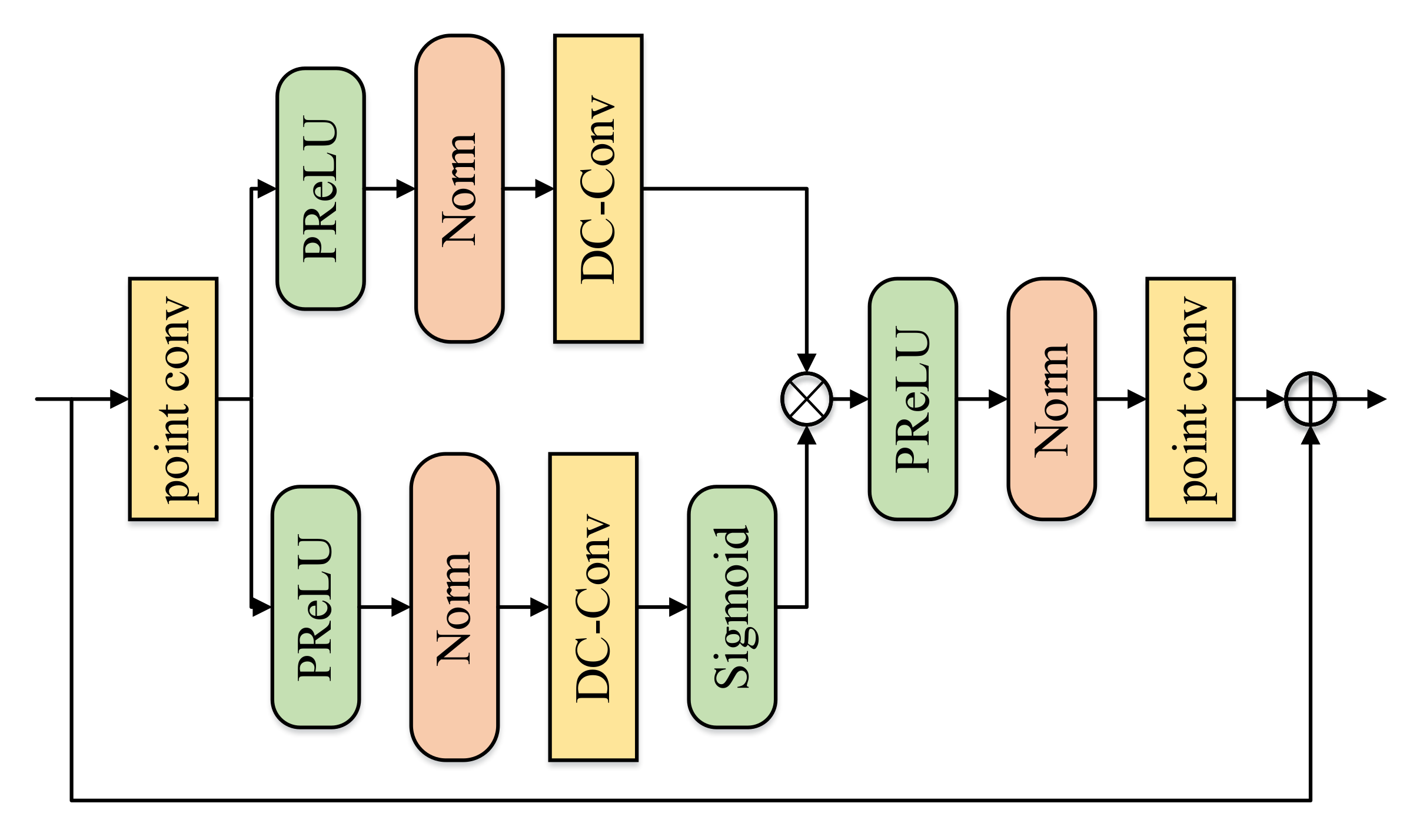

2.4.2. GLU-RSU Block

- * is the convolution operator.

- ⊙ is the Hadamard product operator.

- and are the weights of these two convolutional layers.

- and are the biases of these two convolutional layers.

- is the Sigmoid function.

- is the function of U-Net.

- denotes the parameter set.
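A minimal sketch of the gating described by these definitions, assuming the usual gated linear unit form in which one convolution branch is multiplied element-wise by the sigmoid of a second branch, and assuming the residual U-shaped part adds the U-Net output back onto the gated features. For brevity the convolutions here are pointwise (1×1), and `unet` is a hypothetical placeholder callable. The kernel sizes and layer details of the actual block are not specified here.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def pointwise_conv(x, weight, bias):
    """1x1 convolution: mixes channels independently at every T-F position.

    x: (C_in, T, F), weight: (C_out, C_in), bias: (C_out,)
    """
    return np.einsum("oc,ctf->otf", weight, x) + bias[:, None, None]

def glu(x, w1, b1, w2, b2):
    """Gated linear unit: (W1 * x + b1) multiplied element-wise by sigmoid(W2 * x + b2)."""
    return pointwise_conv(x, w1, b1) * sigmoid(pointwise_conv(x, w2, b2))

def glu_rsu(x, w1, b1, w2, b2, unet):
    """Gated features plus a residual U-Net branch (assumed form: h + U(h))."""
    h = glu(x, w1, b1, w2, b2)
    return h + unet(h)

# Hypothetical sizes: 2 input channels, 3 output channels, 5 frames, 7 bins.
rng = np.random.default_rng(0)
x = rng.standard_normal((2, 5, 7))
w1, b1 = rng.standard_normal((3, 2)), rng.standard_normal(3)

# With a zero gate branch the sigmoid is 0.5 everywhere, so the GLU
# simply halves the linear branch.
w2, b2 = np.zeros((3, 2)), np.zeros(3)
half_gated = glu(x, w1, b1, w2, b2)

# A U-Net stand-in that outputs zeros leaves the gated features unchanged.
out = glu_rsu(x, w1, b1, w2, b2, unet=np.zeros_like)
```

The gate lets the block suppress or pass each feature per time-frequency position, while the residual path keeps the gated features available alongside the multi-scale features from the U-Net branch.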

2.4.3. Squeezed Temporal Convolutional Network

2.5. Residual Refinement Module

2.6. Loss Function

2.7. Datasets

3. Experiments

3.1. Baselines

3.2. Experiment Setup

3.2.1. Training Detail

3.2.2. Network Detail

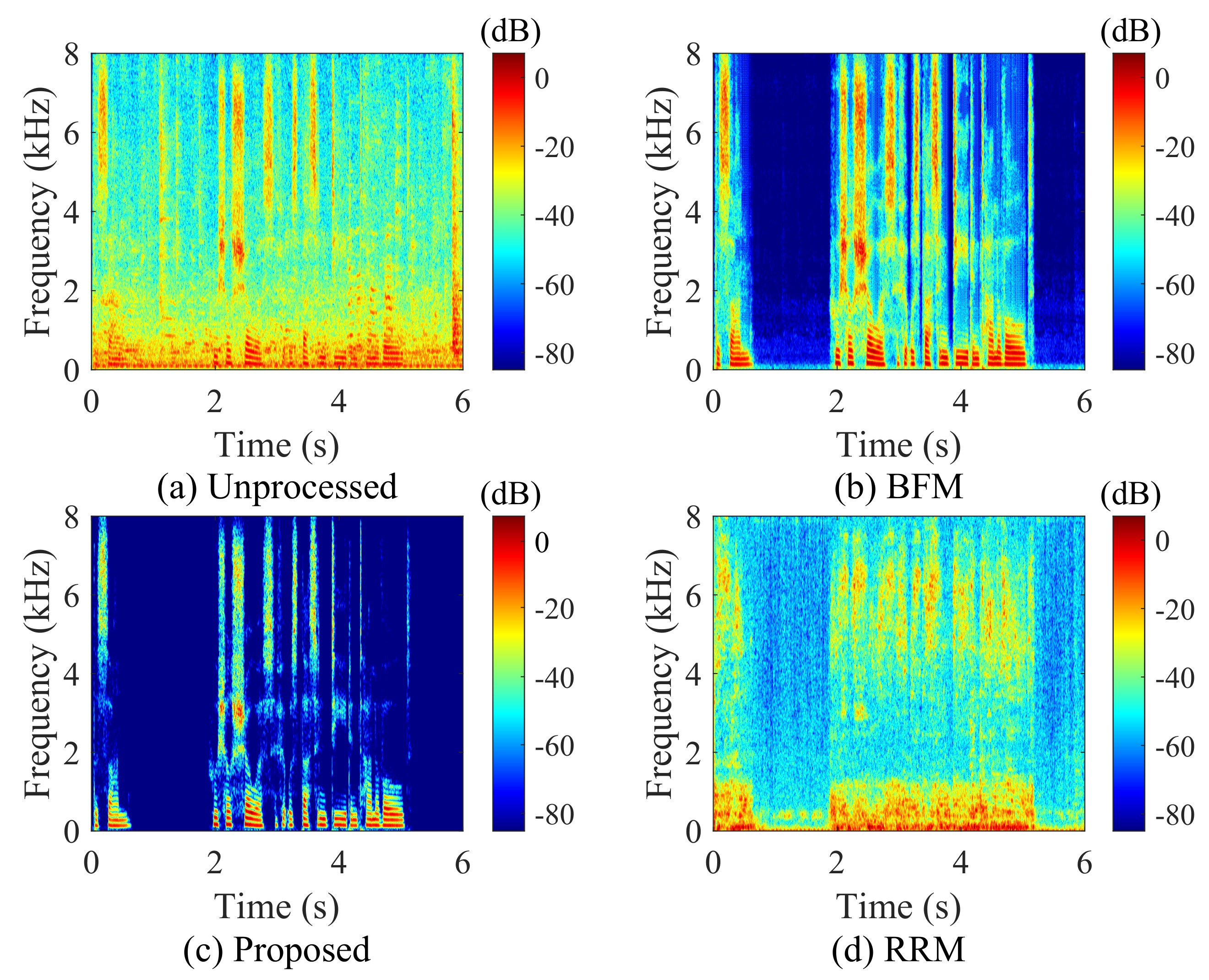

4. Results and Discussion

4.1. Results Comparison in the Directional Interference Case

4.2. Results Comparison in the Diffused Babble Noise Case

4.3. Ablation Analysis

5. Conclusions

- The proposed system achieves better speech quality and intelligibility than previous SOTA approaches in the directional interference case.

- In the diffused babble noise scenario, our method also achieves better performance than previous systems.

- The spectrograms of BFM and RRM show that RRM helps recover components that are missing from the output of BFM.

- The ablation study shows that RSU learns more discriminative features, which improves performance.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Parameters | Description |
|---|---|
| Architecture | x86-64 |
| CPU op-mode(s) | 32-bit, 64-bit |
| CPU(s) | 96 |
| On-line CPU(s) list | 0-95 |
| Thread(s) per core | 2 |
| Vendor ID | GenuineIntel |
| CPU family | 6 |
| Model | 85 |
| Model name | Montage(R) Jintide(R) C2460 1 |
| Stepping | 4 |
| CPU MHz | 1001.030 |
| CPU max MHz | 2101.0000 |
| CPU min MHz | 1000.0000 |
| BogoMIPS | 4202.12 |
| Memory device(s) | 32 GB × 16 |
| GPU | TESLA V100 PCIe 32 GB |

| Method | PESQ (−5 dB) | PESQ (−2 dB) | PESQ (0 dB) | PESQ (2 dB) | PESQ (5 dB) | PESQ (Avg.) | ESTOI % (−5 dB) | ESTOI % (−2 dB) | ESTOI % (0 dB) | ESTOI % (2 dB) | ESTOI % (5 dB) | ESTOI % (Avg.) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Unprocessed | 1.38 | 1.54 | 1.58 | 1.70 | 1.91 | 1.62 | 25.81 | 35.86 | 40.42 | 47.51 | 56.77 | 41.27 |
| MC-ConvTasNet | 1.93 | 2.18 | 2.20 | 2.33 | 2.41 | 2.21 | 56.80 | 64.42 | 64.60 | 68.93 | 71.99 | 65.35 |
| MIMO-UNet | 1.93 | 2.18 | 2.27 | 2.41 | 2.57 | 2.27 | 54.99 | 63.73 | 66.81 | 71.77 | 75.74 | 66.61 |
| FaSNet-TAC | 2.03 | 2.31 | 2.34 | 2.47 | 2.66 | 2.36 | 54.64 | 64.46 | 66.20 | 71.03 | 75.73 | 66.41 |
| Proposed | 2.52 | 2.87 | 2.96 | 3.10 | 3.30 | 2.95 | 67.98 | 77.03 | 79.68 | 82.79 | 87.36 | 78.97 |
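As a quick check on how the "Avg." column relates to the per-SNR scores, the following sketch recomputes the PESQ averages from the table above and the margin of the proposed system over the strongest baseline. The numbers are copied from the table, and the variable names are illustrative.

```python
# PESQ scores at -5, -2, 0, 2 and 5 dB, copied from the table above.
pesq = {
    "MC-ConvTasNet": [1.93, 2.18, 2.20, 2.33, 2.41],
    "MIMO-UNet": [1.93, 2.18, 2.27, 2.41, 2.57],
    "FaSNet-TAC": [2.03, 2.31, 2.34, 2.47, 2.66],
    "Proposed": [2.52, 2.87, 2.96, 3.10, 3.30],
}

# The "Avg." column is the arithmetic mean over the five SNR conditions.
averages = {name: sum(scores) / len(scores) for name, scores in pesq.items()}

# Strongest baseline by average PESQ, and the proposed system's margin over it.
baselines = [name for name in averages if name != "Proposed"]
best_baseline = max(baselines, key=averages.get)
gain = averages["Proposed"] - averages[best_baseline]

print(f"best baseline: {best_baseline}, PESQ gain: {gain:.2f}")
```

The averages reproduce the table's "Avg." column after rounding to two decimals, and the margin over the best baseline comes out at roughly 0.59 PESQ.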

| Method | PESQ (−5 dB) | PESQ (−2 dB) | PESQ (0 dB) | PESQ (2 dB) | PESQ (5 dB) | PESQ (Avg.) | ESTOI % (−5 dB) | ESTOI % (−2 dB) | ESTOI % (0 dB) | ESTOI % (2 dB) | ESTOI % (5 dB) | ESTOI % (Avg.) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Unprocessed | 1.27 | 1.35 | 1.43 | 1.54 | 1.76 | 1.47 | 24.35 | 32.89 | 39.00 | 44.20 | 54.32 | 38.95 |
| MC-ConvTasNet | 1.98 | 2.09 | 2.24 | 2.34 | 2.41 | 2.21 | 55.61 | 59.62 | 63.49 | 67.29 | 68.36 | 62.87 |
| MIMO-UNet | 2.11 | 2.35 | 2.51 | 2.56 | 2.70 | 2.45 | 57.06 | 65.17 | 69.45 | 71.84 | 75.65 | 67.83 |
| FaSNet-TAC | 2.11 | 2.23 | 2.40 | 2.48 | 2.63 | 2.37 | 55.10 | 60.87 | 66.30 | 69.29 | 73.32 | 64.98 |
| Proposed | 2.59 | 2.78 | 2.97 | 3.11 | 3.26 | 2.94 | 67.20 | 73.58 | 78.46 | 81.47 | 85.44 | 77.23 |

| Method | PESQ (−5 dB) | PESQ (−2 dB) | PESQ (0 dB) | PESQ (2 dB) | PESQ (5 dB) | PESQ (Avg.) | ESTOI % (−5 dB) | ESTOI % (−2 dB) | ESTOI % (0 dB) | ESTOI % (2 dB) | ESTOI % (5 dB) | ESTOI % (Avg.) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Unprocessed | 1.38 | 1.54 | 1.68 | 1.78 | 1.94 | 1.66 | 29.91 | 38.43 | 45.49 | 51.73 | 60.65 | 45.24 |
| MC-ConvTasNet | 2.00 | 2.13 | 2.24 | 2.31 | 2.41 | 2.22 | 56.52 | 62.84 | 64.07 | 67.38 | 70.94 | 64.35 |
| MIMO-UNet | 2.09 | 2.34 | 2.47 | 2.53 | 2.69 | 2.42 | 58.21 | 66.97 | 70.40 | 73.38 | 77.59 | 69.31 |
| FaSNet-TAC | 2.14 | 2.32 | 2.45 | 2.57 | 2.69 | 2.43 | 56.97 | 64.63 | 66.79 | 71.56 | 75.97 | 67.18 |
| Proposed-10beams | 2.68 | 2.88 | 3.07 | 3.22 | 3.38 | 3.05 | 71.73 | 77.23 | 81.68 | 84.84 | 88.58 | 80.81 |

| Method | PESQ (−5 dB) | PESQ (−2 dB) | PESQ (0 dB) | PESQ (2 dB) | PESQ (5 dB) | PESQ (Avg.) | ESTOI % (−5 dB) | ESTOI % (−2 dB) | ESTOI % (0 dB) | ESTOI % (2 dB) | ESTOI % (5 dB) | ESTOI % (Avg.) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Unprocessed | 1.36 | 1.49 | 1.54 | 1.62 | 1.78 | 1.56 | 26.54 | 35.27 | 39.86 | 45.49 | 53.87 | 40.21 |
| MC-ConvTasNet | 1.89 | 2.16 | 2.28 | 2.38 | 2.55 | 2.25 | 51.67 | 59.84 | 64.02 | 67.37 | 72.53 | 63.08 |
| MIMO-UNet | 2.29 | 2.55 | 2.66 | 2.76 | 2.92 | 2.63 | 60.44 | 68.67 | 72.29 | 75.50 | 79.58 | 71.30 |
| FaSNet-TAC | 2.14 | 2.35 | 2.44 | 2.52 | 2.65 | 2.42 | 56.45 | 63.35 | 66.61 | 69.24 | 73.73 | 65.88 |
| Proposed-10beams (w/o U-Net) | 2.64 | 2.89 | 2.98 | 3.08 | 3.24 | 2.97 | 69.83 | 76.83 | 80.07 | 82.36 | 85.97 | 79.01 |
| Proposed-10beams | 2.73 | 2.97 | 3.07 | 3.17 | 3.32 | 3.05 | 71.93 | 78.43 | 81.69 | 83.76 | 87.10 | 80.58 |

| Method | PESQ (−5 dB) | PESQ (−2 dB) | PESQ (0 dB) | PESQ (2 dB) | PESQ (5 dB) | PESQ (Avg.) | ESTOI % (−5 dB) | ESTOI % (−2 dB) | ESTOI % (0 dB) | ESTOI % (2 dB) | ESTOI % (5 dB) | ESTOI % (Avg.) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Unprocessed | 1.34 | 1.48 | 1.56 | 1.67 | 1.87 | 1.58 | 26.69 | 35.73 | 41.64 | 47.81 | 57.25 | 41.82 |
| Proposed-10beams (w/o RSU) | 2.35 | 2.64 | 2.79 | 2.95 | 3.14 | 2.77 | 63.34 | 71.86 | 76.29 | 80.33 | 85.14 | 75.39 |
| Proposed-10beams (w/o RRM) | 2.48 | 2.70 | 2.85 | 3.01 | 3.20 | 2.85 | 65.73 | 73.64 | 77.94 | 81.46 | 85.79 | 76.91 |
| Proposed-7beams | 2.57 | 2.82 | 2.97 | 3.12 | 3.29 | 2.95 | 68.45 | 75.49 | 79.52 | 82.72 | 86.85 | 78.61 |
| Proposed-10beams | 2.60 | 2.84 | 3.00 | 3.14 | 3.31 | 2.98 | 68.97 | 75.95 | 79.94 | 83.03 | 87.13 | 79.00 |
| Proposed-19beams | 2.61 | 2.87 | 3.01 | 3.15 | 3.33 | 2.99 | 69.69 | 76.52 | 80.17 | 83.24 | 87.44 | 79.41 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, W.; Li, A.; Wang, X.; Yuan, M.; Chen, Y.; Zheng, C.; Li, X. A Neural Beamspace-Domain Filter for Real-Time Multi-Channel Speech Enhancement. Symmetry 2022, 14, 1081. https://doi.org/10.3390/sym14061081
