1. Introduction

Object detection in remote sensing images, one of the key tasks in remote sensing image interpretation, aims to detect all objects of interest on the Earth’s surface in an image, determining their classes and localizing them accurately. The classes of interest mainly include airplanes, buildings, vehicles, and vessels, and the task plays a crucial role in object tracking and monitoring, activity detection, scenario analysis, urban planning, military research, and other application fields [1]. Deep learning automatically extracts low-level image features such as colors, edges, textures, and contours, as well as high-level features that describe semantics [2], and its performance in object detection for remote sensing images has already exceeded that of traditional detection algorithms, which rely on manually designed features [3]. However, deep-learning methods require the training data to be complete and to have sufficiently rich features. In practice, the real world changes dynamically, and new remote sensing image data appear continuously over time.

Classical learning paradigms that learn from static data are not suited to such a scenario of incremental data change. Incremental learning (IL) [4,5,6,7] has received much attention among the methods for handling streaming data. Specifically, IL has three requirements: (1) not having access to large amounts of old data again; (2) maintaining stability, that is, retaining the knowledge learned from old data (this is difficult because, when a neural network learns only new data, catastrophic forgetting of the old knowledge occurs [8,9]); and (3) achieving plasticity, that is, continuing to learn useful knowledge from new data to fit the distribution of the new data. Most studies on IL [10,11,12,13] focus on the class-incremental scenario for image classification and have achieved good results in mitigating the catastrophic forgetting of old classes. For the more complex object-detection task, when the set of classes changes, the methods described in [14,15,16,17,18] enable the detector to adapt to both new and old classes.

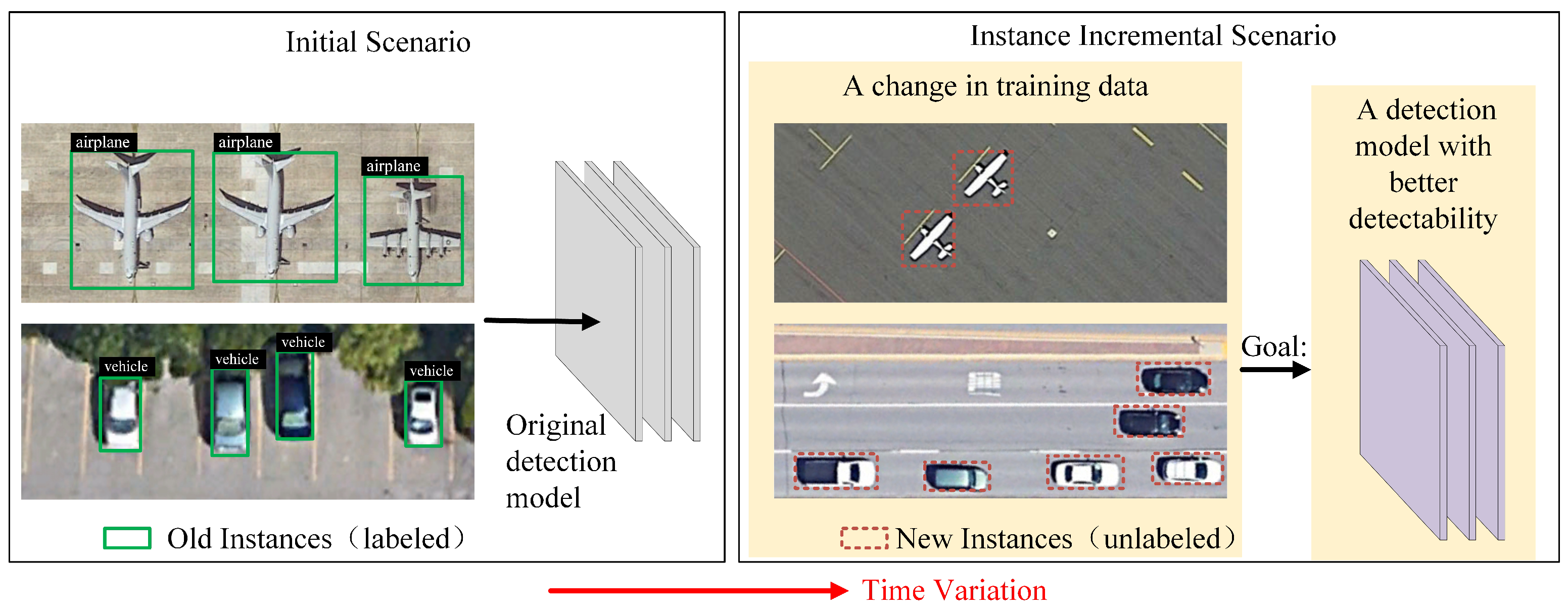

However, in the field of remote sensing, the classes of objects are relatively constant. The instance incremental scenario is common in remote sensing object detection but has received little research attention. The instances of the same class provided by remote sensing satellites are ever increasing, and the feature diversity of an object is continuously enriched. The feature diversity of objects in remote sensing images refers specifically to the diversity in color, size, resolution, background complexity, and other aspects. It stems from many factors, including imaging factors such as the imaging condition and imaging quality; environmental factors such as the weather, season, and geographic location; and intrinsic semantic factors such as the shape and visual appearance. When the feature diversity of the old instance data is insufficient, the trained model cannot adapt well to new instances. As shown in Figure 1, the original detection model is trained on old instances in the initial scenario. When new instances are added to the training data, the detection model should fit the features of all the objects and detect them better after IL. Moreover, a large number of unlearned new instances are unlabeled and need to be manually annotated before being used for IL.

In the instance incremental scenario, the plasticity and stability of IL come from new and old instances, respectively, and different data have different IL value. Among the new instances, some have features similar to those of the old instances, whereas others have features that differ from them. The latter are not well understood by the detection model and are therefore more valuable for the plasticity of IL. Using a memory buffer [6], i.e., relearning some of the old instances, is a good strategy for maintaining stability. With the same buffer volume, different old instances cover different ranges of memory, so their IL value for consolidating stability differs. If all the data are treated uniformly, resources are wasted on the labeling of new instances, the storage of old instances, and training, which eventually leads to low learning efficiency. Existing methods address data differences at a single level only, through either the data-learning order or the training strategy. Some active-learning methods [19,20,21,22,23] prioritize important new instances by estimating the learning value of unlabeled samples with a query algorithm, while methods focusing on hard samples [24,25,26] adjust the learning process. However, an effective method that unifies the two levels is lacking.

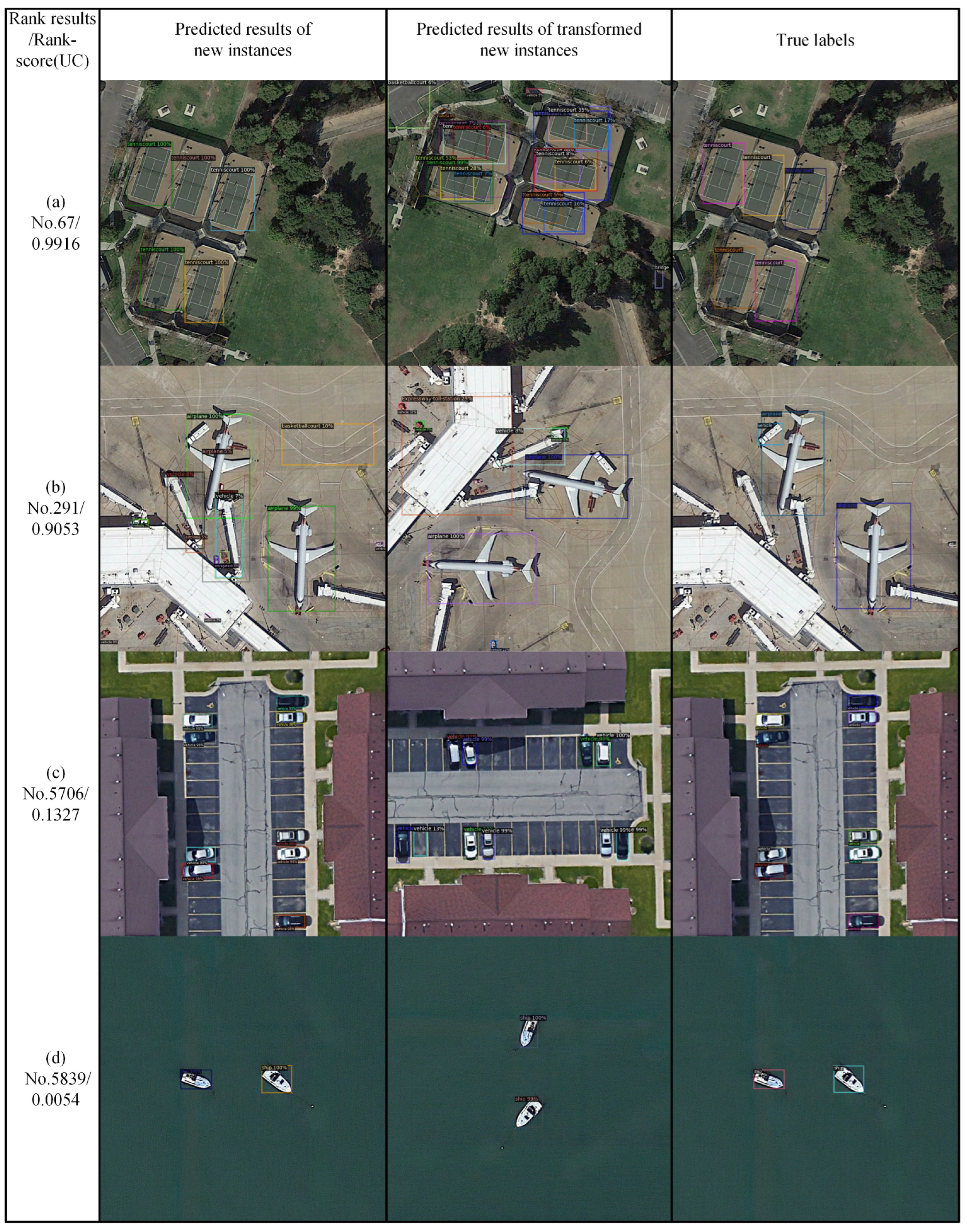

Therefore, this study designed a rank-aware instance-incremental learning (RAIIL) method for the instance incremental scenario of remote sensing image object detection. The method comprises instance rank (IR) and rank-aware incremental learning (RAIL). In IR, a rank-score is designed to automatically estimate the IL value of unlabeled new instances and learned old instances and to rank the data accordingly. In RAIL, a rank-aware loss weights the loss according to the rank-score to balance the training contributions. The key to the success of the method lies in the rank-scores of the instances. New instances that the model cannot understand have a high learning value, so the rank-scores of new instances are measured by the uncertainty of the predicted results, without manual visual inspection. The idea of selecting uncertain data to learn comes from active learning [27], which, however, does not address already-learned data. For the old instances, representative samples better restore memory, and representativeness is evaluated from the prediction results; the rank-score is therefore calculated from the uncertainty and inaccuracy of the prediction results. Afterwards, uniform sampling along the rank-score makes the prediction results of the retained samples as diverse as possible.
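The ranking idea described above can be illustrated with a minimal Python sketch. The concrete formulas here are assumptions made purely for illustration, since this section does not define the rank-score: uncertainty is approximated as one minus the mean confidence of an image's detections, new instances are taken from the top of the ranking, and old instances are drawn at evenly spaced positions along it. All function names are hypothetical.

```python
from typing import List, Sequence


def uncertainty_score(confidences: Sequence[float]) -> float:
    """Assumed proxy: 1 - mean detection confidence (1.0 if nothing was detected)."""
    if not confidences:
        return 1.0
    return 1.0 - sum(confidences) / len(confidences)


def select_new_instances(scores: Sequence[float], k: int) -> List[int]:
    """Return the indices of the k highest-scoring (most uncertain) new instances."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return order[:k]


def sample_old_uniformly(scores: Sequence[float], k: int) -> List[int]:
    """Return k indices taken at evenly spaced positions of the ascending ranking."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    if k <= 0 or not order:
        return []
    if k >= len(order):
        return order
    if k == 1:
        return [order[len(order) // 2]]
    step = (len(order) - 1) / (k - 1)
    return [order[round(j * step)] for j in range(k)]
```

Spacing the old samples evenly over the ranking, rather than taking only the top or the bottom, is one simple way to keep both easy and hard old instances in the buffer.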

The main contributions of this article are summarized as follows:

(1) For the object-detection task for remote sensing images, a rank-aware instance-incremental learning method is proposed for the instance incremental scenario; it is an incremental learning paradigm that uses both the learning order and a training strategy to learn streaming data of differing value.

(2) A rank-score calculation based on the uncertainty and inaccuracy of the predicted results is designed to adaptively rank new instances and old instances; the same score also provides a unified sample-weighting criterion for model training.

(3) Experiments on two widely used remote sensing image datasets verify the superiority of the proposed method over existing methods, and an ablation experiment and a hyperparameter analysis experiment verify its intrinsic effectiveness.

4. Materials for Experiments

4.1. Datasets

Experiments were conducted on DIOR [62] and DOTA [63], two commonly used public remote sensing image datasets with ample classes for object detection. DIOR has 23,463 images of size 800 × 800, containing 192,472 object instances across 20 common classes. It is divided into a training set, a validation set, and a test set, containing 5862, 5863, and 11,738 images, respectively. DOTA has 2806 images varying in size from 800 × 800 to 4000 × 4000, containing 188,282 objects across 15 classes. It is divided into a training set, a validation set, and a test set, containing 1411, 458, and 937 images, respectively. Since the DOTA test set could not be used, the training set was used for training and the validation set for testing accuracy. Additionally, since a uniform size was required, the original DOTA images were cropped into patches no larger than 800 × 800, yielding 9123 training images and 2796 validation images.
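To make the cropping step concrete, the sketch below computes crop windows for a large image. The exact cropping scheme is not given in this section, so the sketch assumes a plain non-overlapping grid in which edge crops may be smaller than 800 × 800; the function name `tile_windows` is hypothetical.

```python
from typing import List, Tuple

Window = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max)


def tile_windows(width: int, height: int, tile: int = 800) -> List[Window]:
    """Split an image into a grid of crops that are at most tile x tile pixels."""
    windows = []
    for y in range(0, height, tile):
        for x in range(0, width, tile):
            # Clip the window at the image border so edge crops stay inside the image.
            windows.append((x, y, min(x + tile, width), min(y + tile, height)))
    return windows
```

For example, a 4000 × 4000 image yields a 5 × 5 grid of full-size crops, while an 800 × 800 image is kept as a single crop. Object annotations would also have to be clipped and shifted into each window, which is omitted here.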

4.2. Baselines

No existing method addresses instance-IL for object detection. Considering relevance, representativeness, and reproducibility, we selected existing methods that deal with incremental learning or data differences as baselines, to verify that the proposed method can solve the problem of differentiated data learning in an instance incremental scenario.

(1) Fine-tuning (FT). It assumes that there is no difference among new instances and adds them to the training set in random order. The original model is then fine-tuned on these data.

(2) ALDOD [19]. It actively selects samples for learning according to a query strategy. We selected two mean-based active query strategies: Avg (based on the average 1v2 confidence level) and Avg + w (based on the average 1v2 confidence level while balancing the numbers of objects of different classes). This method is applied to new instances.

(3) Memory buffer (MB). It adds new instances to the training set in random order and, to counter forgetting, also retains old instances selected at random.

(4) Focal loss (FL) [25]. It assumes that new samples differ in difficulty and that the losses of hard samples should be balanced during model training. This method is applied to new instances.
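As an illustration of the Avg query strategy used by the ALDOD baseline, the following sketch scores an image by the mean 1v2 margin of its detections. The margin form used here, (1 − (p1 − p2))² with p1 and p2 the two highest class probabilities of a detection, is our reading of the 1-vs-2 idea and should be treated as an assumption rather than the exact definition in [19]; the function names are hypothetical.

```python
from typing import Sequence


def one_vs_two(class_probs: Sequence[float]) -> float:
    """Margin-based uncertainty of one detection: high when the top two classes are close."""
    top = sorted(class_probs, reverse=True)[:2]
    p1 = top[0]
    p2 = top[1] if len(top) > 1 else 0.0
    return (1.0 - (p1 - p2)) ** 2


def avg_score(detections: Sequence[Sequence[float]]) -> float:
    """Image-level score: mean 1v2 uncertainty over all detections (0.0 if none)."""
    if not detections:
        return 0.0
    return sum(one_vs_two(probs) for probs in detections) / len(detections)
```

Images with a higher `avg_score` would be queried first. The Avg + w variant additionally reweights detections to balance classes, which is not sketched here.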

4.3. Experiment Setup

4.3.1. Division of Datasets for the Instance Incremental Scenario

To simulate the instance incremental scenario, each dataset was divided into three subsets: old instances, new instances, and test instances. Old instances were used to train the original model. As new instances appeared later, the model needed to start incremental learning. Test instances were kept fixed before and after the increment to evaluate the accuracy of the trained model. For DIOR, the training set served as old instances, the validation set as new instances, and the test set as test instances. For DOTA, half of the training set was selected at random as old instances and the other half as new instances, and the original validation set was used as test instances. The statistics of the dataset division are shown in Table 1 and Table 2. The old instances and new instances of each dataset have roughly the same numbers of images and objects.
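The random half split used for DOTA can be sketched as follows. The seed and the function name `split_old_new` are assumptions for illustration; the section does not state how the random selection was seeded or whether it was applied before or after cropping.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_old_new(items: Sequence[T], seed: int = 0) -> Tuple[List[T], List[T]]:
    """Shuffle the training items and split them into old and new halves."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is reproducible
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]
```

Fixing the seed keeps the old and new subsets identical across all compared methods, so accuracy differences can be attributed to the methods rather than to the split.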

4.3.2. Sampling Proportion Setting for IL

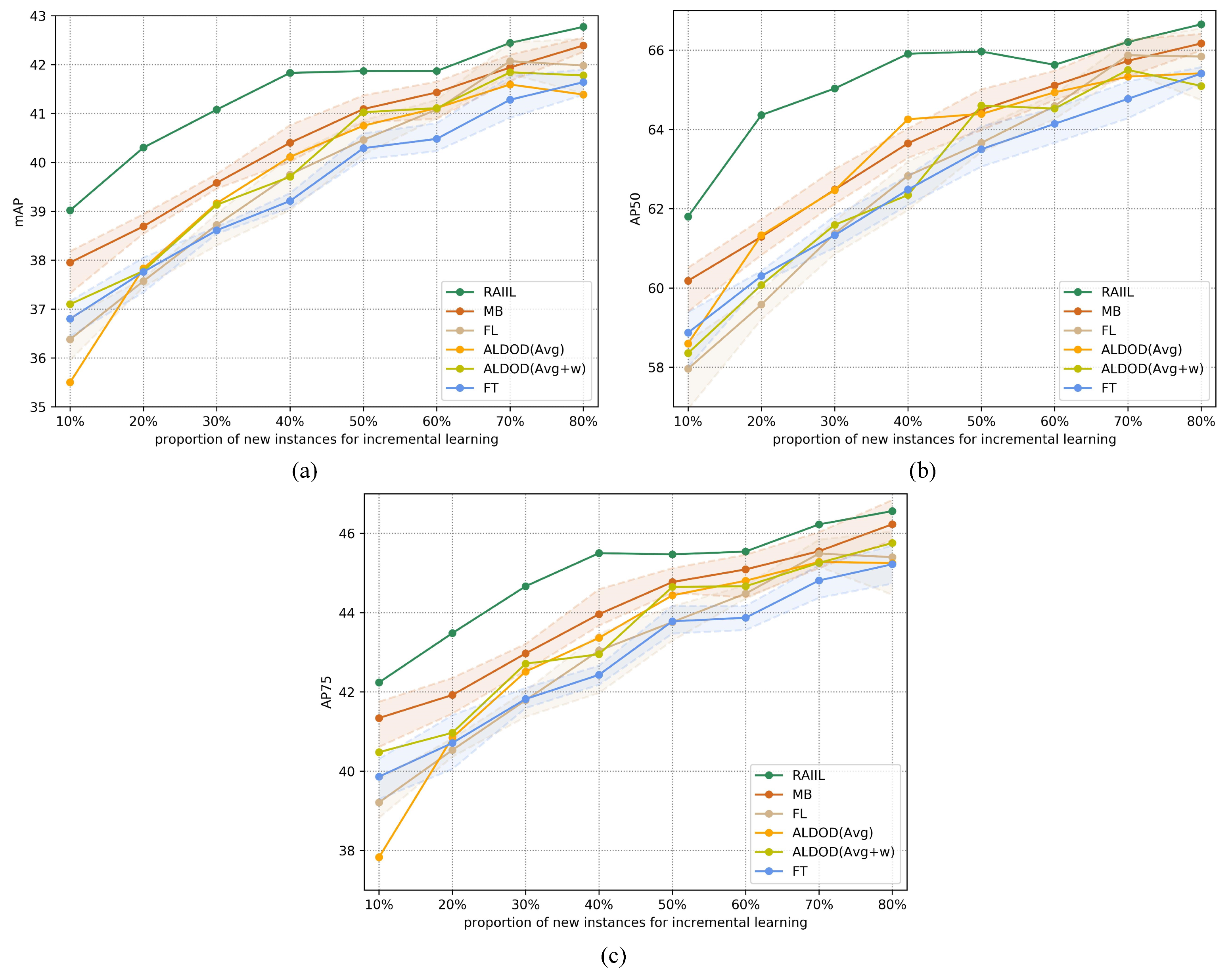

To explore and compare the performance of different methods (including the baselines and the method proposed in this article) regarding data differences, we set distinct sampling proportions of new instances and old instances for incremental training.

The proportion of new instances varied from 10% to 80% in intervals of 10%. For each method, a subset of the new instances was sampled at the given proportion using that method's sampling strategy. From the resulting accuracy, we could explore whether sampling this part of the data was useful and whether the model could learn it well.

When old instances are relearned, most of them cannot be retained because of limits on storage space and training resources. The default proportion of old instances was set to 25%, and smaller proportions of 5–20% in intervals of 5% were also tested. IL samples old instances at the given proportion for relearning, and we analyzed the results to determine whether those data were useful.
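The proportion grid described above can be written down directly. The sketch below lists the proportions and shows a random sampler of the kind the FT and MB baselines would use; the names `NEW_PROPORTIONS`, `OLD_PROPORTIONS`, and `sample_by_proportion` are hypothetical, and rounding the sample size to the nearest integer is an assumption.

```python
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")

# New instances: 10% to 80% in steps of 10%.
NEW_PROPORTIONS = [p / 100 for p in range(10, 90, 10)]
# Old instances: 5% to 25% in steps of 5% (25% is the default).
OLD_PROPORTIONS = [p / 100 for p in range(5, 30, 5)]


def sample_by_proportion(items: Sequence[T], proportion: float, seed: int = 0) -> List[T]:
    """Draw round(proportion * len(items)) items at random, without replacement."""
    k = round(proportion * len(items))
    return random.Random(seed).sample(list(items), k)
```

A rank-based method would replace the random draw with a selection driven by its own scores while keeping the same sample size, so that all methods are compared at equal labeling and storage budgets.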

4.3.3. Training Configuration

The object-detection model was Faster R-CNN, which achieves outstanding accuracy on DIOR and DOTA, with a ResNet-50-FPN backbone. The implementation was based on Detectron2 [64]. The GPU was an NVIDIA GeForce RTX 2080. A model pretrained on the COCO dataset [61] was used and transferred to the object-detection task for remote sensing images. For methods involving random selection, the experiment was repeated five times. For training, the initial learning rate was set to 0.00025, the batch size to 4, and the number of epochs to 12. The two hyperparameters of RAIIL were set to 1 by default, and the transformation applied to the images was a clockwise rotation by 90°. For the hyperparameter of FL [25], we followed the original paper and set its value to 2. Since Faster R-CNN is a two-stage detection model, the classification losses in both the RPN and the Fast R-CNN head were replaced with focal losses. Additionally, the focal classification loss was rescaled to the same value as the original classification loss to avoid an imbalance between the classification losses and regression losses.
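The focal-loss baseline and its rescaling can be sketched in plain Python. The focal loss follows the standard form FL(p) = −(1 − p)^γ log p, where p is the predicted probability of the true class; we assume that the hyperparameter set to 2 above is this focusing parameter, called `gamma` here. The rescaling shown, multiplying all focal losses by the ratio of the summed cross-entropy to the summed focal loss, is only one possible reading of the rescaling step, and the function names are hypothetical.

```python
import math
from typing import List, Sequence


def cross_entropy(p_true: float) -> float:
    """Cross-entropy for one sample, given the probability of its true class (0 < p <= 1)."""
    return -math.log(p_true)


def focal_loss(p_true: float, gamma: float = 2.0) -> float:
    """Focal loss: down-weights easy samples (p_true close to 1) by (1 - p_true)^gamma."""
    return (1.0 - p_true) ** gamma * cross_entropy(p_true)


def rescaled_focal_losses(p_trues: Sequence[float], gamma: float = 2.0) -> List[float]:
    """Scale focal losses so that their sum equals the sum of the plain cross-entropy."""
    ce_total = sum(cross_entropy(p) for p in p_trues)
    losses = [focal_loss(p, gamma) for p in p_trues]
    fl_total = sum(losses)
    if fl_total == 0.0:
        return losses
    scale = ce_total / fl_total
    return [value * scale for value in losses]
```

After rescaling, the total classification loss has the same magnitude as before, so its balance against the box-regression loss is unchanged, while hard samples still receive a larger share of it.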

4.4. Precision Metrics

Like the existing incremental detection methods [14,15,16,17,18], this study adopted the average precision (AP) as the metric for instance-IL in object detection. The AP metric comprehensively evaluates the precision of the classification and coordinate regression results over the test set. Specifically, three precision metrics from the COCO dataset [61] were adopted:

(1) mAP: the mean AP over all IoU (intersection over union) thresholds (0.50:0.05:0.95) and all classes, which integrates different requirements on the positioning accuracy and reflects the average performance;

(2) AP50: the AP over all classes at an IoU threshold of 0.5; that is, a prediction counts as correct only if the IoU between the predicted box and the ground truth is greater than 0.5;

(3) AP75: the AP over all classes at an IoU threshold of 0.75; that is, a prediction counts as correct only if the IoU between the predicted box and the ground truth is greater than 0.75.
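The quantities behind these three metrics can be sketched briefly: the IoU of two axis-aligned boxes, the ten IoU thresholds 0.50:0.05:0.95, and the averaging that turns per-threshold AP values into mAP. This is a simplified illustration with hypothetical names, not the COCO evaluation code; computing AP itself (matching predictions to ground truth and integrating the precision-recall curve) is omitted.

```python
from typing import Dict, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)

# The ten IoU thresholds 0.50, 0.55, ..., 0.95 used for mAP.
COCO_IOU_THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def mean_ap(ap_per_threshold: Dict[float, float]) -> float:
    """mAP: average of the class-averaged AP values over all ten IoU thresholds."""
    return sum(ap_per_threshold[t] for t in COCO_IOU_THRESHOLDS) / len(COCO_IOU_THRESHOLDS)
```

In this notation, AP50 and AP75 are simply the entries of `ap_per_threshold` at 0.5 and 0.75, whereas mAP rewards boxes that remain correct as the localization requirement tightens.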

7. Conclusions

In this work, the instance incremental scenario of object detection for remote sensing images was explored. This scenario, in which new instances are continuously added, is more applicable in practice than scenarios in which data are closed and static. We analyzed the differences in the learning value of data in the instance incremental scenario, proposed the RAIIL method, designed a rank-score function for estimating the value of learned and unlearned data, and designed a balanced training strategy with the rank-aware loss. Comprehensive experiments were conducted on two remote sensing datasets, DIOR and DOTA, covering performance comparison, data labeling cost, ablation, visualization of rank results, parameter sensitivity, and the influence of the data order. The results demonstrate the effectiveness of RAIIL. We hope that this work can assist further studies of instance incremental detection for remote sensing images.