1. Introduction

Let

H be a real Hilbert space with the norm

and

C be a nonempty closed convex subset of

A mapping

is said to be

nonexpansive if it satisfies the following symmetric contractive-type condition:

for all

; see [

1].

The notation of the set of all fixed points of T is

Many mathematicians have studied iterative schemes for finding the approximate fixed-point theorem of nonexpansive mappings over many years; see [

2,

3]. One of these is the Picard iteration process, which is well known and popular. Picard’s iteration process is defined by

where

and an initial point

is randomly selected.

The iterative process of Picard has been developed extensively by many mathematicians, as follows:

Mann iteration process [

4] is defined by

where

and an initial point

is randomly selected and

is a sequence in

.

Ishikawa iteration process [

5] is defined by

where

and an initial point

is randomly selected and

,

are sequences in

.

S-iteration process [

6] is defined by

where

and an initial point

is randomly selected and

,

are sequences in

. We know that the S-iteration process (

3) is independent of Mann and Ishikawa iterative schemes and converges quicker than both; see [

6].

Noor iteration process [

7] is defined by

where

and an initial point

is randomly selected and

,

are sequences in

. We can see that Mann and Ishikawa iterations are special cases of the Noor iteration.

SP-iteration process [

8] is defined by

where

and an initial point

is randomly selected and

,

are sequences in

. We know that Mann, Ishikawa, Noor and SP-iterations are equivalent and the SP-iteration converges faster than the other; see [

8].

The fixed-point theory is a rapidly growing field of research because of its many applications. It has been found that a self-map on a set admits a fixed point under specific conditions. One of the recent generalizations is due to Jachymiski.

Jachymski [

9] proved some generalizations of the Banach contraction principle in a complete metric space endowed with a directed graph using a combination of fixed-point theory and graph theory. In Banach spaces with a graph, Aleomraninejad et al. [

10] proposed an iterative scheme for

G-contraction and

G-nonexpansive mappings.

G-monotone nonexpansive multivalued mappings on hyperbolic metric spaces endowed with graphs were defined by Alfuraidan and Khamsi [

11]. On a Banach space with a directed graph, Alfuraidan [

12] showed the existence of fixed points of monotone nonexpansive mappings. For

G-nonexpansive mappings in Hilbert spaces with a graph, Tiammee et al. [

13] demonstrated Browder’s convergence theorem and a strong convergence theorem of the Halpern iterative scheme. The convergence theorem of the three-step iteration approach for solving general variational inequality problems was investigated by Noor [

7]. According to [

14,

15,

16,

17], the three-step iterative method gives better numerical results than the one-step and two-step approximate iterative methods. For approximating common fixed points of a finite family of

G-nonexpansive mappings, Suantai et al. [

18] combined the shrinking projection with the parallel monotone hybrid method. Additionally, they used a graph to derive a strong convergence theorem in Hilbert spaces under certain conditions and applied it to signal recovery. There is also research related to the application of some fixed-point theorem on the directed graph representations of some chemical compounds; see [

19,

20].

Several fixed-point algorithms have been introduced by many authors [

7,

9,

10,

11,

12,

13,

14,

15,

16,

17,

18] for finding a fixed point of

G-nonexpansive mappings with no inertial technique. Among these algorithms, we need those algorithms that are efficient for solving the problem. So, some accelerated fixed-point algorithms have been introduced to improve convergence behavior; see [

21,

22,

23,

24,

25,

26,

27,

28]. Inspired by these works mentioned above, we employed a coordinate affine structure to define an accelerated fixed-point algorithm with an inertial technique for a countable family of

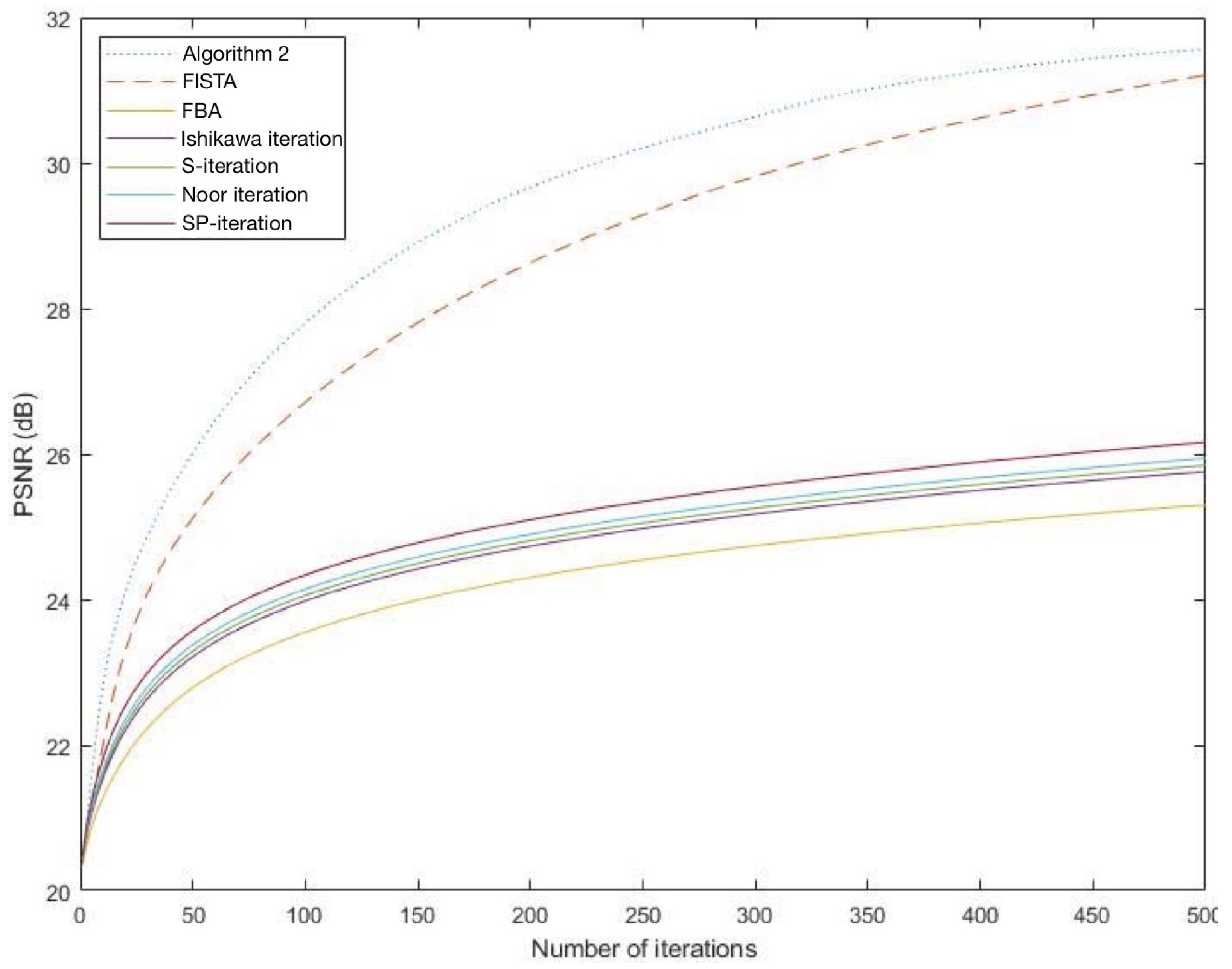

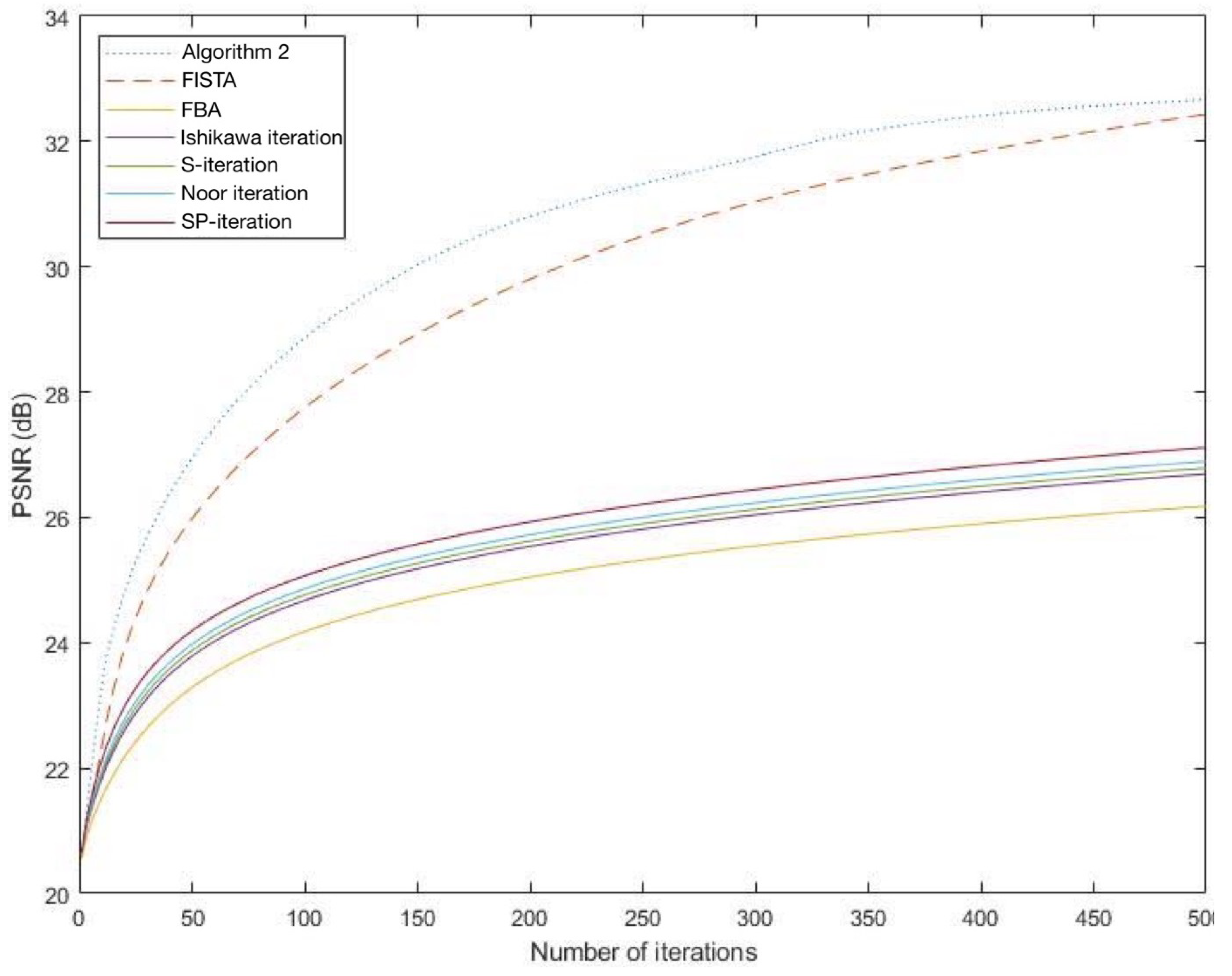

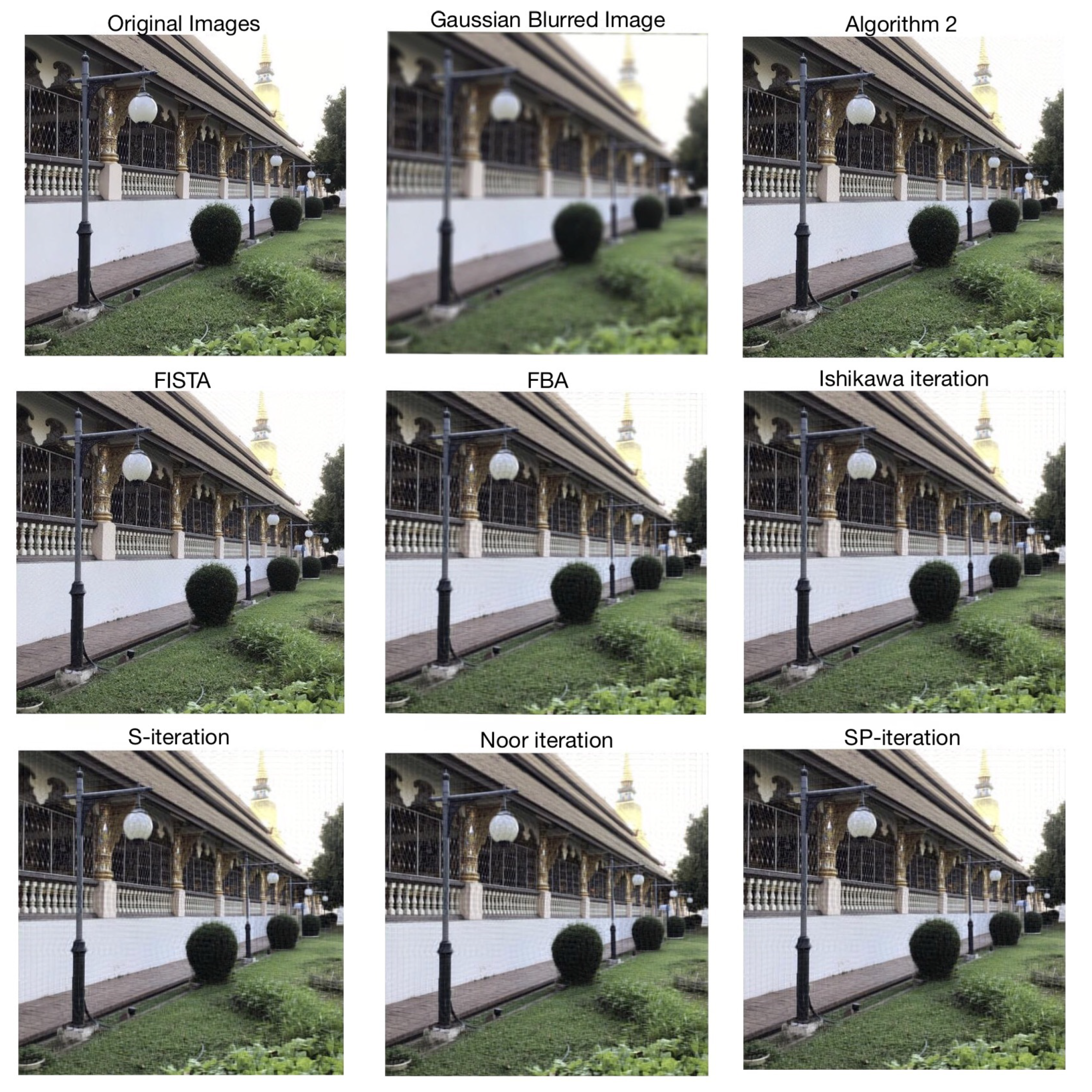

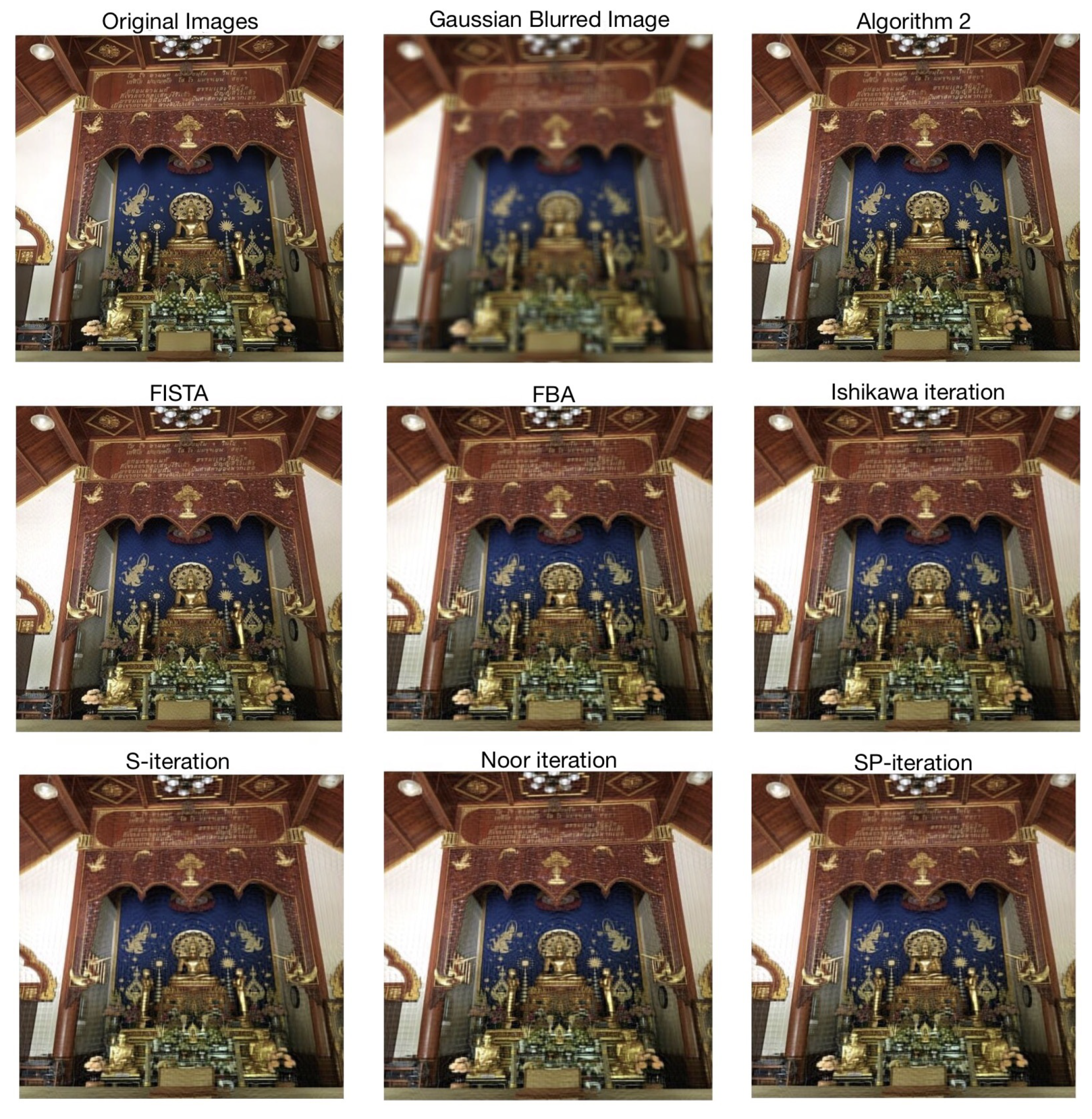

G-nonexpansive mappings applied to image restoration and convex minimization problems.

This paper is divided into four sections. The first section is the introduction. In

Section 2, we recall the basic concepts of mathematics, definitions, and lemmas that will be used to prove the main results. In

Section 3, we prove a weak convergence theorem of an iterative scheme with the inertial step for finding a common fixed point of a countable family of

G-nonexpansive mappings. Furthermore, we apply our proposed method for solving image restoration and convex minimization problems; see

Section 4.

2. Preliminaries

The basic concepts of mathematics, definitions, and lemmas discussed in this section are all important and useful in proving our main results.

Let

X be a real normed space and

C be a nonempty subset of

X. Let

, where Δ stands for the diagonal of the Cartesian product

. Consider a directed graph

G in which the set

of its vertices corresponds to

C, and the set

of its edges contains all loops, that is

Assume that

G does not have parallel edges. Then,

. The conversion of a graph

G is denoted by

. Thus, we have

A graph G is said to be symmetric if ; we have .

A graph G is said to be transitive if for any such that ; then,

Recall that a graph

G is

connected if there is a path between any two vertices of the graph

Readers might refer to [

29] for additional information on some basic graph concepts.

We say that a mapping

is said to be

G-contraction [

9] if

T is edge preserving, i.e.,

for all

, and there exists

such that

for all

where

is called a contraction factor. If

T is edge preserving, and

for all

, then

T is said to be

G-nonexpansive; see [

13].

A mapping is called G-demiclosed at 0 if for any sequence and ; then, .

To prove our main result, we need to introduce the concept of the coordinate affine of the graph

. For any

,

with

we say that

is said to be

left coordinate affine if

for all

,

Similar to this,

is said to be

right coordinate affine if

for all

,

If is both left and right coordinate affine, then is said to be coordinate affine.

The following lemmas are the fundamental results for proving our main theorem; see also [

21,

30,

31].

Lemma 1 ([

30])

. Let and such thatwhere If and then exists. Lemma 2 ([

31])

. For a real Hilbert space H, the following results hold:(i) For any and Lemma 3 ([

21])

. Let and such thatwhere Then,where Furthermore, if then is bounded. Let be a sequence in We write to indicate that a sequence converges weakly to a point Similarly, will symbolize the strong convergence. For if there is a subsequence of such that then v is called a weak cluster point of Let be the set of all weak cluster points of

The following lemma was proved by Moudafi and Al-Shemas; see [

32].

Lemma 4 ([

32])

. Let be a sequence in a real Hilbert space H such that there exists satisfying:(i) For any exists.

(ii) Any weak cluster point of

Then, there exists such that

Let

and

be families of nonexpansive mappings of

C into itself such that

where

is the set of all common fixed points of each

A sequence

satisfies the NST-condition (I) with

if, for any bounded sequence

in

for all

; see [

33]. If

then

satisfies the NST-condition (I) with

The forward–backward operator of lower semi-continuous and convex functions of has the following definition:

A forward-backward operator

T is defined by

for

, where

is the gradient operator of function

f and

(see [

34,

35]). Moreau [

36] defined the operator

as the proximity operator with respect to

and function

g. Whenever

, we know that

T is a nonexpansive mapping and

L is a Lipschitz constant of

. We have the following remark for the definition of the proximity operator; see [

37].

Remark 1. Let be given by . The proximity operator of g is evaluated by the following formulawhere and . The following lemma was proved by Bassaban et al.; see [

22].

Lemma 5. Let H be a real Hilbert space and T be the forward–backward operator of f and g, where g is a proper lower semi-continuous convex function from H into , and f is a convex differentiable function from H into with gradient being L-Lipschitz constant for some . If is the forward–backward operator of f and g such that with a, , then satisfies the -condition (I) with T.

3. Main Results

In this section, we obtain a useful proposition and a weak convergence theorem of our proposed algorithm by using the inertial technique.

Let C be a nonempty closed and convex subset of a real Hilbert space H with a directed graph such that . Let be a family of G-nonexpansive mappings of C into itself such that .

The following proposition is useful for our main theorem.

Proposition 1. Let and be such that , . Let be a sequence generated by Algorithm 1. Suppose is symmetric, transitive and left coordinate affine. Then, for all

| Algorithm 1 (MSPA) A modified SP-algorithm |

- 1:

Initial. Take , are arbitary and , and where is called an inertial step size. - 2:

Step 1. and are computed by

Then, and go to Step 1.

|

Proof. We shall prove the results by using mathematical induction. From Algorithm 1, we obtain

Since

,

and

is left coordinate affine, we obtain

and

Since

and

is edge preserving, we obtain

. Next, suppose that

for

We shall show that

and

By Algorithm 1, we obtain

and

Since

is left coordinate affine,

is edge preserving and from (

6)–(

9), we obtain

and

By mathematical induction, we conclude that

for all

Since

is symmetric, we obtain

Since

and

is transitive, we obtain

The proof is now complete. □

In the following theorem, we prove the weak convergence of G-nonexpansive mapping by using Algorithm 1.

Theorem 1. Let C be a nonempty closed and convex subset of a real Hilbert space H with a directed graph with and is symmetric, transitive and left coordinate affine. Let and be a sequence in H defined by Algorithm 1. Suppose that satisfies the NST-condition (I) with T such that and for all Then, converges weakly to a point in

Proof. Let

. By the definitions of

and

, we obtain

and

By the definition of

and (

11), we obtain

From (

10)–(

12), we obtain

So, we obtain

, where

from Lemma 3. Thus,

is bounded because

. Then,

Note that

being bounded implies that

and

are also bounded. By Lemma 1 and (

13), we find that

exists. Then, we let

From the boundedness of

and (

12), we obtain

By (

10) and (

14), we obtain

From (

15) and (

16), it follows that

Similarly, from (

11), (

12), (

17) and the boundedness of

, we obtain

From (

18), we obtain that

It follows that

exists. By the definition of

and Lemma 2 (i), we obtain

From (

14) and (

19), we obtain

Since

and from (

20), it follows that

Since

is bounded, (

20), and

satisfies the NST-condition (I) with

T, we obtain that

Let

be the set of all weak cluster points of

Then,

by the demicloseness of

at

By Lemma 3, we conclude that there exists

such that

and it follows from (

21) that

The proof is now complete. □