Abstract

In this paper, a stochastic quasi-Newton algorithm for nonconvex stochastic optimization is presented. It is derived from a classical modified BFGS formula, and its update formula can be extended to the limited-memory framework. Numerical experiments on several machine learning problems are reported, and the results indicate that the proposed algorithm is promising.

Keywords:

nonconvex stochastic optimization; stochastic approximation; quasi-Newton method; damped limited-memory BFGS method; variance reduction

MSC:

62L20; 90C30; 90C15; 90C60

1. Introduction

Machine learning is an interdisciplinary subject involving probability theory, statistics, approximation theory, convex analysis, algorithm complexity theory, and other fields. In machine learning, one usually constructs an appropriate model from an extraordinarily large amount of data. Traditional optimization algorithms, which evaluate the full gradient at every iteration, are therefore too expensive for such problems, and stochastic algorithms are needed to solve the optimization problems that arise in machine learning.

The following type of problem is considered in machine learning:

$$\min_{x} f(x) = \mathbb{E}\left[F(x,\xi)\right], \tag{1}$$

where $f$ is a continuously differentiable function, $\mathbb{E}[\cdot]$ denotes the expectation taken with respect to $\xi$, and $\xi$ is a random variable with distribution function $P$. In most practical cases, the function $F(x,\xi)$ is not given explicitly. Even worse, the distribution function $P$ may also be unknown. The objective function (1) is therefore defined using the empirical expectation

$$f(x) = \frac{1}{n}\sum_{i=1}^{n} f_i(x), \tag{2}$$

where $f_i$ is the loss function that corresponds to the $i$th data sample, and $n$ denotes the number of data samples, which is assumed to be extremely large.

The stochastic approximation (SA) algorithm of Robbins and Monro [1] is usually used to solve the above problems. The original SA algorithm is also called stochastic gradient descent (SGD). It resembles the classical steepest descent method and adopts the iteration $x_{k+1} = x_k - \alpha_k g_k$, where the stochastic gradient $g_k$ approximates the full gradient of $f$ at $x_k$ and $\alpha_k$ is the step size (learning rate). The SA algorithm has been studied extensively [2,3,4].
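A minimal pure-Python sketch of the SGD iteration $x_{k+1} = x_k - \alpha_k g_k$ on a finite-sum objective is given below. The toy data, the helper name `sgd`, and the single-sample gradient are illustrative assumptions, not part of the algorithms studied here. With $\alpha_k = 1/(k+1)$ on the quadratic $f_i(x) = \tfrac12 (x - a_i)^2$, each iterate is the running average of the sampled $a_i$, so it should approach the minimizer, the mean of the data (here 3).

```python
import random


def sgd(grad_i, n, x0, num_iters, step, seed=0):
    """Plain SGD for f(x) = (1/n) * sum_i f_i(x): x_{k+1} = x_k - alpha_k * g_k."""
    rng = random.Random(seed)
    x = x0
    for k in range(num_iters):
        i = rng.randrange(n)      # sample one component index uniformly
        g = grad_i(x, i)          # stochastic gradient, an unbiased estimate of grad f(x)
        x = x - step(k) * g       # alpha_k = step(k) is the step size (learning rate)
    return x


# Hypothetical toy problem: f_i(x) = 0.5 * (x - a_i)^2, so f is minimized at mean(a) = 3.
a = [1.0, 2.0, 3.0, 4.0, 5.0]
x_final = sgd(lambda x, i: x - a[i], len(a), x0=0.0, num_iters=10000,
              step=lambda k: 1.0 / (k + 1))
print(x_final)
```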

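In this paper, we mainly study stochastic second-order methods, namely stochastic quasi-Newton methods, for solving problem (2). Among traditional optimization methods, quasi-Newton methods typically converge faster and to higher accuracy than first-order methods because they use approximate second-order derivative information. A quasi-Newton method is usually updated by the following iterative formula:

$$x_{k+1} = x_k - \alpha_k B_k^{-1} g_k = x_k - \alpha_k H_k g_k,$$

where $B_k$ is a symmetric positive definite approximation of the Hessian matrix $\nabla^2 f(x_k)$, or, equivalently, $H_k = B_k^{-1}$ is a symmetric positive definite approximation of $[\nabla^2 f(x_k)]^{-1}$. In the traditional BFGS algorithm, $B_k$ is updated as follows:

$$B_{k+1} = B_k - \frac{B_k s_k s_k^{\top} B_k}{s_k^{\top} B_k s_k} + \frac{y_k y_k^{\top}}{y_k^{\top} s_k},$$

where $s_k = x_{k+1} - x_k$ and $y_k = g_{k+1} - g_k$. Applying the Sherman–Morrison–Woodbury formula, the update of $H_k$ is easily obtained:

$$H_{k+1} = \left(I - \rho_k s_k y_k^{\top}\right) H_k \left(I - \rho_k y_k s_k^{\top}\right) + \rho_k s_k s_k^{\top}, \qquad \rho_k = \frac{1}{y_k^{\top} s_k}.$$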
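The inverse update can be checked numerically with a short, dependency-free sketch (matrices stored as lists of rows; the names are illustrative). It expands the product form of $H_{k+1} = (I - \rho_k s_k y_k^{\top}) H_k (I - \rho_k y_k s_k^{\top}) + \rho_k s_k s_k^{\top}$ and verifies the secant equation $H_{k+1} y_k = s_k$, which holds for any symmetric $H_k$ whenever $y_k^{\top} s_k > 0$.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def matvec(M, v):
    return [dot(row, v) for row in M]


def bfgs_inverse_update(H, s, y):
    """Return H_{k+1} = (I - rho s y^T) H (I - rho y s^T) + rho s s^T, rho = 1/(y^T s).

    H is a symmetric matrix stored as a list of rows; y^T s > 0 is required so that
    the update keeps H symmetric positive definite."""
    sy = dot(s, y)
    if sy <= 0:
        raise ValueError("curvature condition y^T s > 0 is violated")
    rho = 1.0 / sy
    Hy = matvec(H, y)                     # H y, which equals (y^T H)^T because H is symmetric
    coef = rho * rho * dot(y, Hy) + rho   # coefficient of s s^T after expanding the product
    n = len(s)
    return [[H[i][j] - rho * (Hy[i] * s[j] + s[i] * Hy[j]) + coef * s[i] * s[j]
             for j in range(n)] for i in range(n)]


H0 = [[1.0, 0.0], [0.0, 1.0]]
s, y = [1.0, 0.5], [2.0, 1.5]   # y^T s = 2.75 > 0
H1 = bfgs_inverse_update(H0, s, y)
print(matvec(H1, y))            # secant equation: H_{k+1} y_k = s_k (up to rounding)
```

Raising an error when $y_k^{\top} s_k \le 0$ mirrors the difficulty for nonconvex objectives discussed in this section, where the curvature condition can fail.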

For large-scale problems, it is very important to use a limited-memory variant. The so-called L-BFGS algorithm [5] has a linear convergence rate. It produces well-scaled and productive search directions that typically yield an approximate solution in relatively few iterations and function evaluations. In stochastic optimization, many stochastic quasi-Newton formulas have been proposed.

The L-BFGS method uses the iteration $x_{k+1} = x_k - \alpha_k H_k g_k$ and updates $H_k$ by the following rule:

$$H_k^{(j+1)} = V_j^{\top} H_k^{(j)} V_j + \rho_j s_j s_j^{\top}, \quad j = k-r, \dots, k-1, \qquad H_k^{(k-r)} = H_k^{0}, \quad H_k = H_k^{(k)},$$

where $\rho_j = 1/(y_j^{\top} s_j)$, $V_j = I - \rho_j y_j s_j^{\top}$, $H_k^0$ is an initial matrix, and r is the memory size. Bordes, Bottou, and Gallinari [6] studied a quasi-Newton method with a secant-based diagonal rescaling matrix. Byrd et al. [7] proposed a stochastic L-BFGS method based on SA and proved its convergence for strongly convex problems. Gower, Goldfarb, and Richtárik [8] proposed a variance-reduced block L-BFGS method that converges linearly for convex functions. Note that the convergence analyses of all these quasi-Newton methods require the objective function to be convex or strongly convex.
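In practice, $H_k$ is never formed explicitly. The standard two-loop recursion (see, e.g., [5]) computes $H_k g$ directly from the stored pairs $(s_j, y_j)$ with $O(r)$ vector operations. The sketch below assumes $H_k^0 = \gamma I$ and pairs with $y_j^{\top} s_j > 0$; function and variable names are illustrative. Applied to the newest $y_j$, it returns the newest $s_j$, because the last update enforces the secant equation.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def two_loop(g, pairs, gamma):
    """Return H_k g for the L-BFGS matrix defined by `pairs` = [(s_j, y_j), ...]
    (oldest first, each with y_j^T s_j > 0) and initial matrix H_k^0 = gamma * I."""
    q = list(g)
    history = []
    for s, y in reversed(pairs):                  # first loop: newest to oldest
        rho = 1.0 / dot(y, s)
        alpha = rho * dot(s, q)
        q = [qi - alpha * yi for qi, yi in zip(q, y)]
        history.append((rho, alpha))
    r = [gamma * qi for qi in q]                  # apply H_k^0 = gamma * I
    for (s, y), (rho, alpha) in zip(pairs, reversed(history)):  # second loop: oldest to newest
        beta = rho * dot(y, r)
        r = [ri + (alpha - beta) * si for ri, si in zip(r, s)]
    return r


pairs = [([1.0, 0.0, 0.5], [2.0, 0.1, 1.0]),
         ([0.3, 1.0, -0.2], [0.5, 1.5, 0.1])]
s_last, y_last = pairs[-1]
print(two_loop(y_last, pairs, gamma=0.5))         # equals s_last up to rounding
```

Only the r pairs are stored, which is the memory saving that motivates L-BFGS.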

If the objective function is not convex, the L-BFGS method faces several difficulties:

- How can the positive definiteness of the iteration matrix be guaranteed without a line search?

- How can the convergence of the resulting L-BFGS method be guaranteed?

These problems are particularly challenging. In this paper, we propose a modified stochastic limited-memory BFGS method (LMLBFGS) to address them. On this basis, we further propose an improved variant with variance reduction (LMLBFGS-VR). Note that the presented algorithm can also be adapted to approximate the solution of a nonlinear system of equations arising in [9].

2. Premise Setting and Algorithm

In this section, we propose a new L-BFGS algorithm (LMLBFGS) that automatically generates a positive definite quasi-Newton matrix.

2.1. LMLBFGS Algorithm

To solve this kind of problem, we suppose that the random variable $\xi$ does not depend on x and that the stochastic gradient at x is generated by a stochastic first-order oracle (SFO), where the distribution of $\xi$ is supported on a set $\Xi$. It is common to use a mini-batch stochastic gradient at the k-th iteration, obtained by averaging the stochastic gradients of the samples drawn in that iteration, which is described as

and a sub-sampled Hessian defined as follows

Here, the gradient and the Hessian are evaluated on sampled subsets whose cardinalities are the corresponding batch sizes, and the samples are random variables. By definition, the stochastic gradient in this setting is much cheaper to compute than the full gradient. We assume that the SFO can generate the samples for the two subsets separately and independently and return the corresponding outputs. The stochastic gradient difference and the iterate difference are therefore defined as
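The sketch below shows a mini-batch gradient and a correction pair $s_k = x_{k+1} - x_k$, $y_k = g(x_{k+1}) - g(x_k)$. It assumes, as one common choice in stochastic L-BFGS methods, that both gradients in $y_k$ are evaluated on the same mini-batch so that sampling noise does not swamp the curvature signal; this may differ from the exact definitions used here, and all names are hypothetical. On the toy quadratic $f_i(x) = \tfrac12\|x - a_i\|^2$, whose Hessian is the identity, $y_k$ equals $s_k$.

```python
import random


def minibatch_grad(grad_i, x, batch):
    """Average of the component gradients grad f_i(x) over the sampled index set."""
    grads = [grad_i(x, i) for i in batch]
    return [sum(col) / len(batch) for col in zip(*grads)]


def correction_pair(grad_i, x_old, x_new, batch):
    """s = x_new - x_old and y = g_batch(x_new) - g_batch(x_old) on the SAME batch (assumption)."""
    s = [b - a for a, b in zip(x_old, x_new)]
    g_old = minibatch_grad(grad_i, x_old, batch)
    g_new = minibatch_grad(grad_i, x_new, batch)
    y = [b - a for a, b in zip(g_old, g_new)]
    return s, y


rng = random.Random(0)
data = [[rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)] for _ in range(100)]


def component_grad(x, i):
    """Gradient of the toy component f_i(x) = 0.5 * ||x - a_i||^2."""
    return [xj - aj for xj, aj in zip(x, data[i])]


batch = rng.sample(range(len(data)), 10)
s, y = correction_pair(component_grad, [0.0, 0.0], [0.4, -0.2], batch)
print(s, y)   # y matches s up to rounding because the Hessian is the identity
```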

For traditional (deterministic) methods, the authors of [10] proposed a new type of gradient difference, defined by

where
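For illustration only: a form of the modified gradient difference commonly associated with [10] is $y_k^{*} = y_k + (\vartheta_k / \|s_k\|^2)\, s_k$ with $\vartheta_k = 2[f(x_k) - f(x_{k+1})] + (g_{k+1} + g_k)^{\top} s_k$. Since the formula is not reproduced in this text, treat that form as an assumption. The sketch computes it and checks a consequence of Taylor's theorem: on a quadratic, $\vartheta_k = 0$, so $y_k^{*} = y_k$ and the correction only reacts to higher-order curvature captured by function values.

```python
def modified_y(f_old, f_new, g_old, g_new, s):
    """y* = y + (theta / ||s||^2) * s with theta = 2 (f_old - f_new) + (g_new + g_old)^T s.

    Form assumed from [10]; f_* are function values, g_* gradients, s = x_new - x_old."""
    y = [gn - go for go, gn in zip(g_old, g_new)]
    theta = 2.0 * (f_old - f_new) + sum((go + gn) * si for go, gn, si in zip(g_old, g_new, s))
    ss = sum(si * si for si in s)
    return [yi + (theta / ss) * si for yi, si in zip(y, s)]


# Quadratic test function f(x) = 0.5 x^T A x + c^T x with a symmetric matrix A.
A = [[3.0, 1.0], [1.0, 2.0]]
c = [1.0, -1.0]


def f(x):
    Ax = [sum(aij * xj for aij, xj in zip(row, x)) for row in A]
    return 0.5 * sum(xi * axi for xi, axi in zip(x, Ax)) + sum(ci * xi for ci, xi in zip(c, x))


def grad(x):
    return [sum(aij * xj for aij, xj in zip(row, x)) + ci for row, ci in zip(A, c)]


x0, x1 = [0.5, -1.0], [1.2, 0.3]
s = [b - a for a, b in zip(x0, x1)]
y_star = modified_y(f(x0), f(x1), grad(x0), grad(x1), s)
print(y_star)   # equals y = A s = [3.4, 3.3] up to rounding, since theta vanishes
```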

Inspired by their method, we introduce the following new definitions:

where

Our new quantity is guaranteed to be well defined by

Hence, our stochastic L-BFGS algorithm updates the Hessian approximation as

Using the Sherman–Morrison–Woodbury formula, we can update its inverse as

It is easy to see that, when the function is nonconvex, we cannot guarantee that is true. Thus, we add an additional safeguard to the algorithm to ensure the nonnegativity of . Define the index set as follows:

where m is a positive constant.
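The index set itself is not reproduced here. As a hedged illustration, the sketch assumes a cautious test of the form $s_k^{\top} y_k \ge m\|s_k\|^2$ and keeps at most r accepted pairs; rejected pairs are skipped. Every stored pair then satisfies $s^{\top} y > 0$, which is what keeps the L-BFGS matrix positive definite without convexity. The function name is hypothetical.

```python
from collections import deque


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def store_if_admissible(memory, s, y, m):
    """Append (s, y) to `memory` only if s != 0 and s^T y >= m * ||s||^2 (assumed test).

    `memory` is a deque(maxlen=r): appending to a full deque drops the oldest pair."""
    ss = dot(s, s)
    if ss > 0.0 and dot(s, y) >= m * ss:
        memory.append((s, y))
        return True
    return False


memory = deque(maxlen=2)
print(store_if_admissible(memory, [1.0, 0.0], [0.5, 0.0], m=0.1))   # True: positive curvature
print(store_if_admissible(memory, [1.0, 0.0], [-0.5, 0.0], m=0.1))  # False: negative curvature
```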

As is well known, computing the matrix through (19) is very expensive when n is tremendously large. Hence, the L-BFGS method is usually used instead of the BFGS method to avoid this computational burden in large-scale optimization problems. The advantage of L-BFGS is that it uses only the curvature information contained in the r most recent correction pairs and does not need to store the update matrix, which effectively reduces the computational cost. Using (6), we iterate

The initial matrix is often chosen as a scaled identity matrix. Because the scaling factor may be exceedingly close to 0, we set

and

where is a given constant.
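A common choice of scaling is $\gamma_k = s_{k-1}^{\top} y_{k-1} / (y_{k-1}^{\top} y_{k-1})$ with $H_k^0 = \gamma_k I$. The sketch below uses a hypothetical safeguard that bounds $\gamma_k$ below by a constant $\delta > 0$ (and uses 1 before any pair is stored); it illustrates why such a bound keeps the initial matrix uniformly positive definite, and is not necessarily the exact rule used above.

```python
def initial_scaling(pairs, delta):
    """gamma_k for H_k^0 = gamma_k * I, bounded below by delta > 0 (hypothetical safeguard)."""
    if not pairs:
        return 1.0                       # no curvature information yet
    s, y = pairs[-1]                     # most recent correction pair
    sy = sum(a * b for a, b in zip(s, y))
    yy = sum(b * b for b in y)
    return max(sy / yy, delta)


print(initial_scaling([([1.0, 0.0], [2.0, 0.0])], delta=1e-3))    # 0.5
print(initial_scaling([([1e-6, 0.0], [1.0, 0.0])], delta=1e-3))   # 0.001: ratio 1e-6 is clipped
```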

Therefore, our modified stochastic L-BFGS algorithm is outlined in Algorithm 1.

| Algorithm 1: Modified stochastic LBFGS algorithm (LMLBFGS). |

| Input: Given , batch size , , the memory size r, a positive definite matrix , and a positive constant 1: for do 2: Compute by (7) and Hessian matrix by Algorithm 2; 3: Compute the iteration point . 4: end for |
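The following is a hypothetical end-to-end sketch in the spirit of Algorithm 1, not a reproduction of it. It assumes: the correction pair is formed on the same mini-batch at $x_k$ and $x_{k+1}$; pairs are kept only if $s^{\top} y \ge m\|s\|^2$; $H_k^0 = \gamma_k I$ with $\gamma_k \ge \delta$; $H_k g_k$ is computed by the two-loop recursion rather than by forming the matrix; and $\alpha_k = \alpha_0/(k+1)$. The toy objective is a nonconvex finite sum of Cauchy-type losses $\sum_j \log(1 + (x_j - a_{ij})^2)$. The recorded values $g_k^{\top} H_k g_k$ should all be positive, i.e., every search direction $-H_k g_k$ is a descent direction for the sampled mini-batch objective, even though the objective is nonconvex.

```python
import random
from collections import deque


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def two_loop(g, memory, gamma):
    """H_k g via the L-BFGS two-loop recursion with H_k^0 = gamma * I."""
    q, hist = list(g), []
    for s, y in reversed(memory):
        rho = 1.0 / dot(y, s)
        alpha = rho * dot(s, q)
        q = [qi - alpha * yi for qi, yi in zip(q, y)]
        hist.append((rho, alpha))
    r = [gamma * qi for qi in q]
    for (s, y), (rho, alpha) in zip(memory, reversed(hist)):
        beta = rho * dot(y, r)
        r = [ri + (alpha - beta) * si for ri, si in zip(r, s)]
    return r


def batch_grad(grad_i, x, batch):
    grads = [grad_i(x, i) for i in batch]
    return [sum(col) / len(batch) for col in zip(*grads)]


def stochastic_lbfgs(grad_i, n, x0, iters, batch_size, r=5, m=0.01, delta=1e-3,
                     alpha0=0.5, seed=0):
    rng = random.Random(seed)
    x = list(x0)
    memory = deque(maxlen=r)          # at most r correction pairs (s, y)
    gamma = 1.0                       # scaling of the initial matrix H_k^0 = gamma * I
    curvature = []                    # g_k^T H_k g_k at each iteration
    for k in range(iters):
        batch = rng.sample(range(n), batch_size)
        g = batch_grad(grad_i, x, batch)
        d = two_loop(g, memory, gamma)                 # d = H_k g_k
        curvature.append(dot(g, d))
        alpha = alpha0 / (k + 1)                       # diminishing step size
        x_new = [xi - alpha * di for xi, di in zip(x, d)]
        s = [b - a for a, b in zip(x, x_new)]
        y = [b - a for a, b in zip(g, batch_grad(grad_i, x_new, batch))]
        ss, sy = dot(s, s), dot(s, y)
        if ss > 0.0 and sy >= m * ss:                  # skip pairs that fail the test
            memory.append((s, y))
            gamma = max(sy / dot(y, y), delta)         # safeguarded scaling
        x = x_new
    return x, curvature


data_rng = random.Random(1)
A = [[data_rng.gauss(0.0, 1.0) for _ in range(3)] for _ in range(40)]


def cauchy_grad(x, i):
    """Gradient of the nonconvex component sum_j log(1 + (x_j - a_ij)^2)."""
    return [2.0 * (xj - aj) / (1.0 + (xj - aj) ** 2) for xj, aj in zip(x, A[i])]


x_final, curvature = stochastic_lbfgs(cauchy_grad, len(A), [2.0, -1.0, 0.5],
                                      iters=50, batch_size=8)
print(x_final, min(curvature))
```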

2.2. Extension of Our LMLBFGS Algorithm with Variance Reduction

Variance reduction techniques have recently been shown to improve the properties of stochastic optimization methods. Motivated by the development of the SVRG method for nonconvex problems, we present a new modified stochastic L-BFGS algorithm with a variance reduction technique (called LMLBFGS-VR) for faster convergence, as shown in Algorithm 3.

In LMLBFGS-VR, the mini-batch stochastic gradient is defined as

| Algorithm 2: Hessian matrix updating. |

| Input: correction pairs , memory parameter r, and Output: new 1: 2: for do 3: 4: if 5: 6: end if 7: end for 8: return |

| Algorithm 3: Modified stochastic LBFGS algorithm with variance reduction (LMLBFGS-VR). |

| Input: Given , , batch size , , the memory size r and a constant Output: Iteration x is chosen randomly from a uniform 1: for do 2: 3: compute 4: for do 5: Sample a minibatch with 6: Calculate where ; 7: Compute ; 8: end for 9: Generate the updated Hessian matrix by Algorithm 2; 10: 11: end for |
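The estimator used inside LMLBFGS-VR is not reproduced in the text. Assuming the standard SVRG form $v_k = \nabla f_{S}(x_k) - \nabla f_{S}(\tilde{x}) + \nabla f(\tilde{x})$, where $\tilde{x}$ is the snapshot of the outer loop and $\nabla f_S$ averages component gradients over the mini-batch $S$, a minimal sketch follows (names are hypothetical). It checks two well-known properties: averaging $v_k$ over all single-sample batches gives the full gradient exactly, and $v_k$ equals the full gradient at the snapshot.

```python
def full_grad(grad_i, x, n):
    """Full gradient (1/n) * sum_i grad f_i(x); computed once per outer iteration."""
    grads = [grad_i(x, i) for i in range(n)]
    return [sum(col) / n for col in zip(*grads)]


def svrg_gradient(grad_i, x, x_snap, mu_snap, batch):
    """v = grad f_S(x) - grad f_S(x_snap) + mu_snap, where mu_snap = grad f(x_snap)."""
    v = list(mu_snap)
    for i in batch:
        gx, gs = grad_i(x, i), grad_i(x_snap, i)
        v = [vj + (a - c) / len(batch) for vj, a, c in zip(v, gx, gs)]
    return v


data = [[1.0, -2.0], [0.5, 0.0], [-1.5, 3.0], [2.0, 1.0]]


def grad_i(x, i):
    """Gradient of the nonconvex component f_i(x) = sum_j log(1 + (x_j - a_ij)^2)."""
    return [2.0 * (xj - aj) / (1.0 + (xj - aj) ** 2) for xj, aj in zip(x, data[i])]


n = len(data)
x_snap, x = [0.0, 0.0], [0.3, -0.4]
mu = full_grad(grad_i, x_snap, n)
singles = [svrg_gradient(grad_i, x, x_snap, mu, [i]) for i in range(n)]
v_avg = [sum(col) / n for col in zip(*singles)]
print(v_avg, full_grad(grad_i, x, n))   # the two vectors agree up to rounding
```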

3. Global Convergence Analysis

In this section, the convergence of Algorithms 1 and 3 will be discussed and analyzed.

3.1. Basic Assumptions

In the algorithm, it is assumed that the step size satisfies

Assumption 1.

The objective function f is continuously differentiable and bounded below; that is, there is a constant such that

for any .

Assumption 2.

The noise level of the gradient estimation is bounded; that is, there is a constant σ > 0 such that

where and denotes the expectation taken with respect to .

Assumption 3.

There are positive constants and such that

Our random variables are defined as follows: are the random samples in the k-th iteration, and are the random samples in the first k iterations.

Assumption 4.

For any , the random variable depends only on .

From (2) and (4), we can get

3.2. Key Propositions, Lemmas, and Theorem

Lemma 1.

If Assumptions 1–4 hold and for all k, we have

where the conditional expectation is taken with respect to .

Proof.

Taking the expectation with respect to on both sides of (31) conditioned on , we obtain

where we use the fact that . From Assumption 2, it follows that

Together with (32), we have

Then, combining that with implies (30). □

Before proceeding further, we introduce the definition of a supermartingale [11].

Definition 1.

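Let $\{\mathcal{F}_k\}$ be an increasing sequence of σ-algebras. If $\{X_k\}$ is a stochastic process satisfying

- (1) $\mathbb{E}[|X_k|] < \infty$,

- (2) $X_k$ is $\mathcal{F}_k$-measurable and, for all k,

$$\mathbb{E}[X_{k+1} \mid \mathcal{F}_k] \le X_k,$$

then $\{X_k\}$ is called a supermartingale.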

Proposition 1.

If $\{X_k\}$ is a nonnegative supermartingale, then $X_k \to X$ almost surely and $\mathbb{E}[X] \le \mathbb{E}[X_0]$.

Lemma 2.

Let be generated by Algorithm 1, where the batch size for all k. Then, there is a constant such that

for all k.

Proof.

For convenience, we introduce the following definitions:

Let be the lower bound of the function and be the σ-algebra generated by , and . From the definition, we obtain

Hence, we obtain

As a result, we have

□

3.3. Global Convergence Theorem

In this part, we provide the convergence analysis of the proposed Algorithms 1 and 3.

Theorem 1.

Assume that Assumptions 1–4 hold for the iterates generated by Algorithm 1, where the batch size is , and that the step size satisfies (24) and . Then, we have

Proof.

By Definition 1, is a supermartingale. Hence, by Proposition 1, there exists a random variable such that with probability 1, and . From (36), we have . Thus,

which means that

Since the step size satisfies (24), it follows that (48) holds. □

Next, the convergence of the algorithm can be given.

Theorem 2.

If Assumptions 1, 2, and 4 hold for the iterates generated by Algorithm 1, where the batch size is , and the step size satisfies (24) and , then we have

Proof.

The proof will be established by contradiction, and the discussion is listed as follows.

According to the definition of , we have

where . It is easy to see that

where is a positive constant.

According to the definition of , we have

From (41) and (42), we have

where the first inequality follows from the quasi-Newton (secant) condition. This shows that the eigenvalues of our initial matrix are bounded above and bounded away from zero.

Instead of directly analyzing the properties of , we obtain the results by analyzing the properties of . In this case, the limited-memory quasi-Newton updating formula is as follows:

- (i) .

- (ii) for , and

We now consider the determinant of , because it can be used to prove that the minimum eigenvalue of the matrix B is uniformly bounded away from zero. From the theory in [12], we obtain the following equation for the matrix determinant:

It follows from (45) that the maximum eigenvalue of the matrix is uniformly bounded. Therefore, by (41) and the fact that the smallest eigenvalue of is bounded away from zero, we obtain the following:

In this way, the maximum and minimum eigenvalues of the matrix are uniformly bounded and bounded away from zero. Therefore, we obtain

where and are positive constants. By Theorem 1, the convergence of the proposed Algorithm 1 follows. □

Corollary 1.

If Assumptions 1, 2, and 4 hold for generated by Algorithm 3, where the batch size is and the step size satisfies (24) and , then, we have

4. The Complexity of the Proposed Algorithm

Having established the convergence results, we now analyze the complexity of Algorithms 1 and 3.

Assumption 5.

For any k, we have

Theorem 3.

Suppose Assumptions 1–5 hold, is generated by Algorithm 1, and batch size for all k. Then, we have

where N denotes the iteration number.

Moreover, for a given , to guarantee that , the number of iterations N needed is at most .

Proof.

Obviously, (49) satisfies (24) and the condition . Then, taking expectations on both sides of (30) and summing over all k yield

which results in (50), where the second inequality is due to Lemma 2, and the last inequality is due to Theorem 1.

Next, for a given , to obtain , it suffices that the following holds:

Since , it follows that the number of iterations N needed is at most □

Corollary 2.

Assume that Assumptions 1, 3, 4 and (27) hold for generated by Algorithm 3 with batch size for all k. We also assume that is specifically chosen as

with . Then,

where N denotes the iteration number. Moreover, for a given , to guarantee that, the number of iterations N needed is at most .

5. Numerical Results

In this section, we focus on the numerical performance of the proposed Algorithm 3 for solving nonconvex empirical risk minimization (ERM) problems and nonconvex support vector machine (SVM) problems.

5.1. Experiments with Synthetic Datasets

The models of the nonconvex ERM and nonconvex SVM problems are given as follows, where is a regularization parameter.

Problem 1.

The ERM problem with a nonconvex sigmoid loss function [13,14] is formulated as follows:

where and represent the feature vector and corresponding label, respectively.
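Since the formula of Problem 1 is not reproduced here, the sketch assumes the form often used for this benchmark, $f(x) = \frac{1}{n}\sum_{i} 1/(1 + \exp(b_i a_i^{\top} x)) + \frac{\lambda}{2}\|x\|^2$ with labels $b_i \in \{-1, +1\}$; the regularizer, in particular, is an assumption. It evaluates the loss in an overflow-safe way, uses $\ell'(t) = -\ell(t)(1 - \ell(t))$ for $\ell(t) = 1/(1+e^{t})$, and compares the analytic gradient with central finite differences.

```python
import math


def sigmoid_loss(t):
    """l(t) = 1 / (1 + exp(t)), computed without overflow for large |t|."""
    if t >= 0:
        e = math.exp(-t)
        return e / (1.0 + e)
    return 1.0 / (1.0 + math.exp(t))


def erm_objective_and_grad(x, features, labels, lam):
    """Assumed Problem 1 form: (1/n) sum_i l(b_i a_i^T x) + (lam/2) ||x||^2."""
    n = len(features)
    f = 0.5 * lam * sum(xj * xj for xj in x)
    g = [lam * xj for xj in x]
    for a, b in zip(features, labels):
        t = b * sum(aj * xj for aj, xj in zip(a, x))
        loss = sigmoid_loss(t)
        f += loss / n
        dloss = -loss * (1.0 - loss)          # l'(t) = -l(t) (1 - l(t))
        g = [gj + dloss * b * aj / n for gj, aj in zip(g, a)]
    return f, g


features = [[1.0, 2.0], [-0.5, 1.5], [2.0, -1.0]]
labels = [1, -1, 1]
x, h = [0.2, -0.3], 1e-6
f, g = erm_objective_and_grad(x, features, labels, lam=0.01)
fd = []
for j in range(len(x)):
    xp, xm = list(x), list(x)
    xp[j] += h
    xm[j] -= h
    fp = erm_objective_and_grad(xp, features, labels, 0.01)[0]
    fm = erm_objective_and_grad(xm, features, labels, 0.01)[0]
    fd.append((fp - fm) / (2 * h))
print(g, fd)   # analytic and finite-difference gradients agree closely
```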

Problem 2.

The nonconvex support vector machine (SVM) problem with a sigmoid loss function [15,16] is formulated as follows:
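Similarly, assuming Problem 2 has the form $f(x) = \frac{1}{n}\sum_i [1 - \tanh(b_i a_i^{\top} x)] + \frac{\lambda}{2}\|x\|^2$ (the exact form, including the regularizer, is an assumption), the gradient follows from $\frac{d}{dt}\tanh t = 1 - \tanh^2 t$. The sketch checks it against central finite differences.

```python
import math


def svm_sigmoid_objective_and_grad(x, features, labels, lam):
    """Assumed Problem 2 form: (1/n) sum_i [1 - tanh(b_i a_i^T x)] + (lam/2) ||x||^2."""
    n = len(features)
    f = 0.5 * lam * sum(xj * xj for xj in x)
    g = [lam * xj for xj in x]
    for a, b in zip(features, labels):
        th = math.tanh(b * sum(aj * xj for aj, xj in zip(a, x)))
        f += (1.0 - th) / n
        # d/dt (1 - tanh t) = -(1 - tanh^2 t), chained with dt/dx = b * a
        g = [gj - (1.0 - th * th) * b * aj / n for gj, aj in zip(g, a)]
    return f, g


features = [[1.0, 2.0], [-0.5, 1.5], [2.0, -1.0]]
labels = [1, -1, 1]
x, h = [0.2, -0.3], 1e-6
f, g = svm_sigmoid_objective_and_grad(x, features, labels, lam=0.01)
fd = []
for j in range(len(x)):
    xp, xm = list(x), list(x)
    xp[j] += h
    xm[j] -= h
    fp = svm_sigmoid_objective_and_grad(xp, features, labels, 0.01)[0]
    fm = svm_sigmoid_objective_and_grad(xm, features, labels, 0.01)[0]
    fd.append((fp - fm) / (2 * h))
print(g, fd)   # analytic and finite-difference gradients agree closely
```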

We compare the proposed LMLBFGS-VR algorithm with SGD [1], SVRG [17], and SAGA [18], where LMLBFGS-VR uses a decreasing step size and the other algorithms use a constant step size. The datasets in our experiments include Adult, IJCNN, MNIST, and Covtype. All code was written in MATLAB R2018b and run on a PC with an AMD Ryzen 7 5800H with Radeon Graphics (3.20 GHz) and 16 GB of memory.

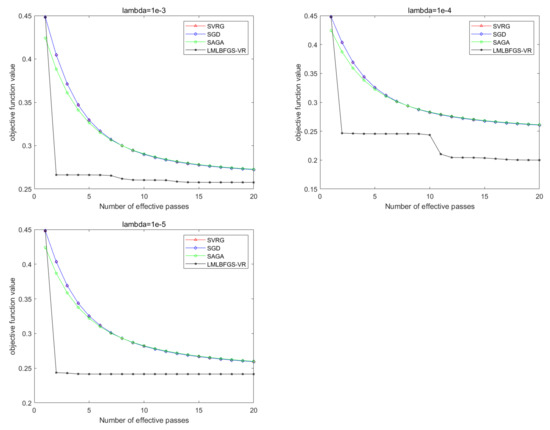

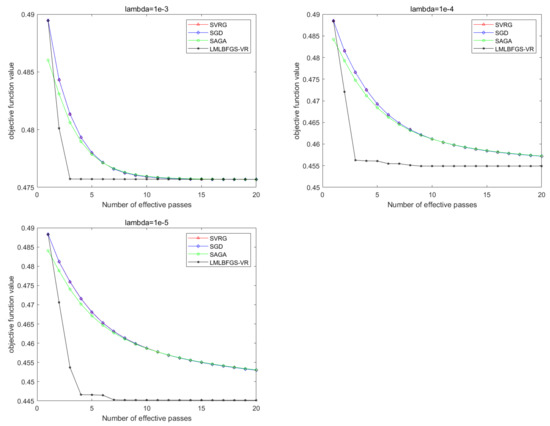

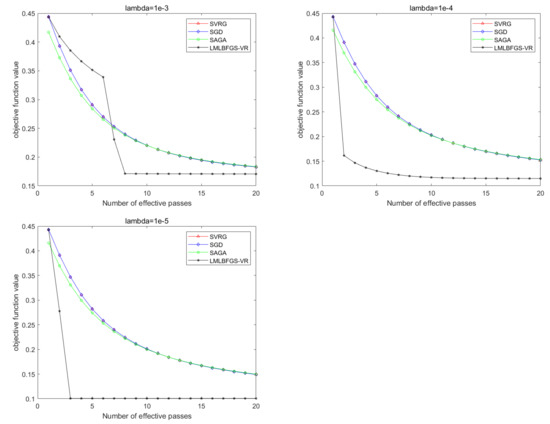

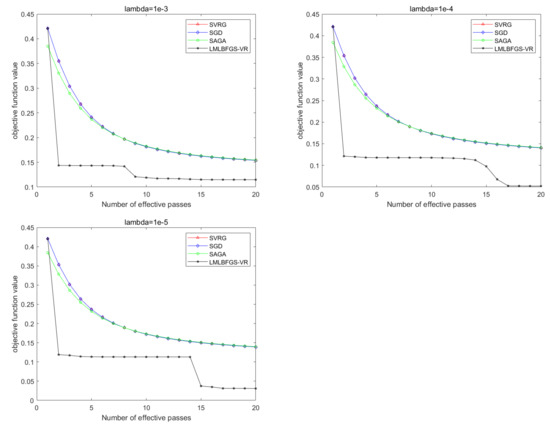

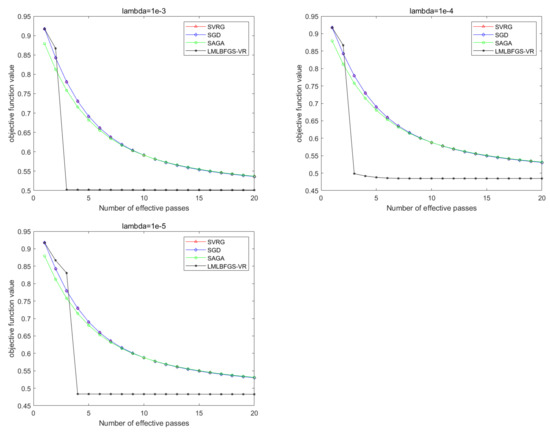

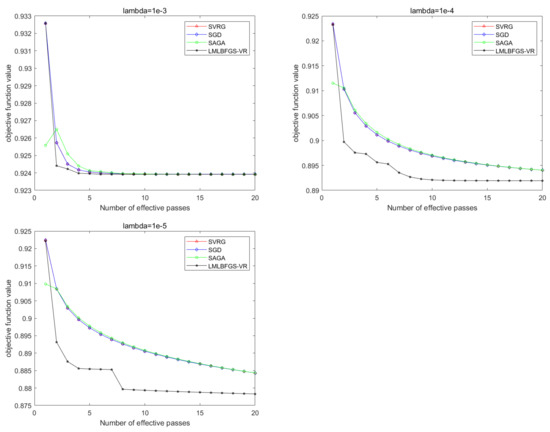

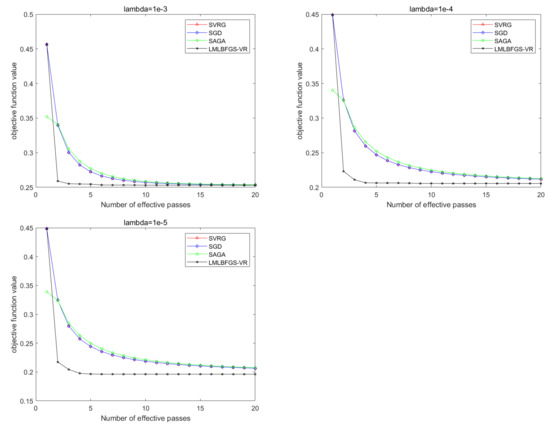

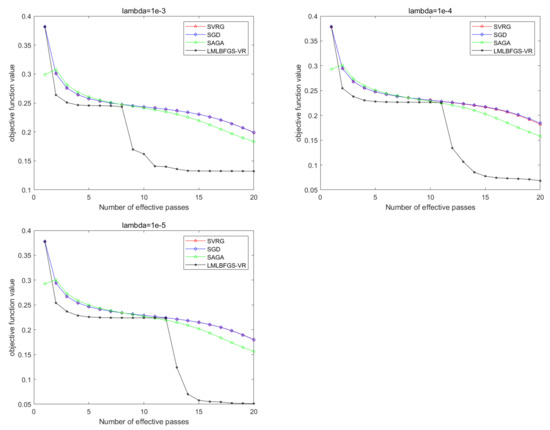

5.2. Numerical Results for Problem 1

In this subsection, we present the numerical results of LMLBFGS-VR, SGD, SVRG, and SAGA for solving Problem 1 on the four datasets. For LMLBFGS-VR, the step size is , and the memory size is and . The step size of the other algorithms is set to 0.02. The number of inner iterations q is chosen as uniformly, where V is the batch size. The batch size is set to 100 for Adult, IJCNN, and Covtype, and to for MNIST. To further test the performance of the algorithms, the regularization parameter is set to , or . Figure 1, Figure 2, Figure 3 and Figure 4 show the convergence performance of all the stochastic algorithms for solving Problem 1 with or on the four datasets. These figures show that all the algorithms solve the problem successfully, but the proposed LMLBFGS-VR algorithm converges significantly faster than the other algorithms. This indicates that LMLBFGS-VR has a clear advantage for solving nonconvex ERM problems.

Figure 1.

Comparison of all the algorithms for solving Problem 1 on Adult. From left to right: .

Figure 2.

Comparison of all the algorithms for solving Problem 1 on Covtype. From left to right: .

Figure 3.

Comparison of all the algorithms for solving Problem 1 on IJCNN. From left to right: .

Figure 4.

Comparison of all the algorithms for solving Problem 1 on MNIST. From left to right: .

5.3. Numerical Results for Problem 2

This subsection presents the numerical results of LMLBFGS-VR, SGD, SVRG, and SAGA for solving Problem 2 on the four datasets. All parameters are the same as in the previous subsection, and the regularization parameter is again set to or . In the figures, the y-axis is the objective function value and the x-axis is the number of effective passes, where computing a full gradient or evaluating n component gradients counts as one effective pass. Figure 5, Figure 6, Figure 7 and Figure 8 show the convergence performance of LMLBFGS-VR on the four datasets: it remarkably outperforms the other algorithms. When , the objective function is almost minimized within two effective passes. In contrast, SGD, SVRG, and SAGA, which use only first-order information, converge more slowly. Owing to its use of second-order information and the limited-memory technique, LMLBFGS-VR requires only a few effective passes to reduce the function value substantially. Figure 5, Figure 6, Figure 7 and Figure 8 also show that, as decreases, the function value decreases to a smaller value; thus, a smaller can be chosen for practical problems. Combined with the previous discussion, these results indicate that LMLBFGS-VR substantially improves computational efficiency for nonconvex machine learning problems.
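The effective-pass count on the x-axis is simple arithmetic. The sketch assumes an SVRG-style cost model: each outer iteration computes one full gradient ($n$ component gradients) and then runs $q$ inner steps, each evaluating the $V$ sampled component gradients at both the current iterate and the snapshot; any extra evaluations for correction pairs are ignored. For example, with $n = 1000$, $V = 100$, and $q = n/V = 10$, one outer iteration costs 3 effective passes under this model.

```python
def effective_passes(n, batch_size, inner_steps, grads_per_sample=2, outer_iters=1):
    """Component-gradient evaluations divided by n (assumed SVRG-style cost model)."""
    per_outer = n + inner_steps * grads_per_sample * batch_size   # full gradient + inner loop
    return outer_iters * per_outer / n


print(effective_passes(n=1000, batch_size=100, inner_steps=10))   # 3.0
```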

Figure 5.

Comparison of all the algorithms for solving Problem 2 on Adult. From left to right: .

Figure 6.

Comparison of all the algorithms for solving Problem 2 on Covtype. From left to right: .

Figure 7.

Comparison of all the algorithms for solving Problem 2 on IJCNN. From left to right: .

Figure 8.

Comparison of all the algorithms for solving Problem 2 on MNIST. From left to right: .

6. Conclusions

In this paper, we proposed an efficient modified stochastic limited-memory BFGS algorithm for solving nonconvex stochastic optimization problems. The proposed algorithm preserves the positive definiteness of the quasi-Newton matrix without requiring convexity. A variance-reduced variant, LMLBFGS-VR, was also presented for nonconvex stochastic optimization problems. Numerical experiments on nonconvex SVM and nonconvex ERM problems were performed to demonstrate the performance of the proposed algorithms, and the results indicated that our algorithms are comparable to other similar methods. In the future, we could consider the following points: (i) whether a proper line search can be used to determine an appropriate step size, which could reduce the complexity and enhance the accuracy of the algorithm; and (ii) further experiments on practical problems to check the performance of the presented algorithms.

Author Contributions

Writing—original draft preparation, H.L.; writing—review and editing, Y.L. and M.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China Grant No.11661009, the High Level Innovation Teams and Excellent Scholars Program in Guangxi institutions of higher education Grant No. [2019]52, the Guangxi Natural Science Key Fund No. 2017GXNSFDA198046, the Special Funds for Local Science and Technology Development Guided by the Central Government No. ZY20198003, the special foundation for Guangxi Ba Gui Scholars, and the Basic Ability Improvement Project for Young and Middle-Aged Teachers in Guangxi Colleges and Universities No. 2020KY30018.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Robbins, H.; Monro, S. A stochastic approximation method. Ann. Math. Stat. 1951, 22, 400–407.

- Chung, K.L. On a stochastic approximation method. Ann. Math. Stat. 1954, 25, 463–483.

- Polyak, B.T.; Juditsky, A.B. Acceleration of stochastic approximation by averaging. SIAM J. Control Optim. 1992, 30, 838–855.

- Ruszczyński, A.; Syski, W. A method of aggregate stochastic subgradients with online stepsize rules for convex stochastic programming problems. In Stochastic Programming 84 Part II; Springer: Berlin/Heidelberg, Germany, 1986; pp. 113–131.

- Nocedal, J.; Wright, S. Numerical Optimization; Springer: Berlin/Heidelberg, Germany, 1999; Volume 35, p. 7.

- Bordes, A.; Bottou, L.; Gallinari, P. SGD-QN: Careful quasi-Newton stochastic gradient descent. J. Mach. Learn. Res. 2009, 10, 1737–1754.

- Byrd, R.H.; Hansen, S.L.; Nocedal, J.; Singer, Y. A stochastic quasi-Newton method for large-scale optimization. SIAM J. Optim. 2016, 26, 1008–1031.

- Gower, R.; Goldfarb, D.; Richtárik, P. Stochastic block BFGS: Squeezing more curvature out of data. In Proceedings of the 33rd International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016; Volume 48, pp. 1869–1878.

- Covei, D.P.; Pirvu, T.A. A stochastic control problem with regime switching. Carpathian J. Math. 2021, 37, 427–440.

- Wei, Z.; Li, G.; Qi, L. New quasi-Newton methods for unconstrained optimization problems. Appl. Math. Comput. 2006, 175, 1156–1188.

- Durrett, R. Probability: Theory and Examples; Cambridge University Press: Cambridge, UK, 2019.

- Deng, N.Y.; Li, Z.F. Some global convergence properties of a conic-variable metric algorithm for minimization with inexact line searches. Numer. Algebra Control Optim. 1995, 5, 105–122.

- Allen-Zhu, Z.; Hazan, E. Variance reduction for faster non-convex optimization. In Proceedings of the 33rd International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016; Volume 48, pp. 699–707.

- Shalev-Shwartz, S.; Shamir, O.; Sridharan, K. Learning kernel-based halfspaces with the 0–1 loss. SIAM J. Comput. 2011, 40, 1623–1646.

- Ghadimi, S.; Lan, G. Stochastic first- and zeroth-order methods for nonconvex stochastic programming. SIAM J. Optim. 2013, 23, 2341–2368.

- Mason, L.; Baxter, J.; Bartlett, P.; Frean, M. Boosting algorithms as gradient descent in function space. Proc. Adv. Neural Inf. Process. Syst. 1999, 12, 512–518.

- Johnson, R.; Zhang, T. Accelerating stochastic gradient descent using predictive variance reduction. Adv. Neural Inf. Process. Syst. 2013, 26, 315–323.

- Defazio, A.; Bach, F.; Lacoste-Julien, S. SAGA: A fast incremental gradient method with support for non-strongly convex composite objectives. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 1646–1654.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).