Abstract

Longitudinal data modeling is widely carried out using parametric methods. However, when the parametric model is misspecified, the obtained estimator might be severely biased and lead to erroneous conclusions. In this study, we propose a new estimation method for longitudinal data modeling using a mixed estimator in nonparametric regression. The objective of this study was to estimate the nonparametric regression curve for longitudinal data using two combined estimators: truncated spline and local linear. The weighted least squares (WLS) method with a two-stage estimation procedure was used to obtain the regression curve estimation of the proposed model. To account for within-subject correlations in the longitudinal data, a symmetric weight matrix was used in the regression curve estimation. The best model was determined by minimizing the generalized cross-validation (GCV) value. Furthermore, an application to a longitudinal dataset of the poverty gap index in Bengkulu Province, Indonesia, was conducted to illustrate the performance of the proposed mixed estimator. Compared to the single estimator, the truncated spline and local linear mixed estimator had better performance in longitudinal data modeling based on the GCV value. Additionally, the empirical results of the best model indicated that the proposed model could explain the data variation exceptionally well.

1. Introduction

Regression analysis is a statistical technique that plays a crucial role in inferential statistics and is widely employed in numerous scientific disciplines. The classical regression method used for many years is parametric regression, which assumes that the regression curve follows a specified functional form, such as linear, quadratic or cubic [1]. With the development of computational science, and because parametric regression models are limited by the assumption of a specified functional form, nonparametric regression models, which require far fewer assumptions, are increasingly recommended for solving problems in various applied fields. The nonparametric regression method has a high degree of flexibility since the data determine the form of the estimated curve without interference from the researcher’s subjectivity [2]. In nonparametric regression, various functions are used to estimate the regression curve, including local linear [3,4,5], spline [6,7,8], kernel [9,10,11], local polynomial [12,13,14], and Fourier series [15,16,17] functions. In addition, [18] developed moving extremes ranked set sampling (MERSS) to estimate a simple linear regression model.

The nonparametric regression approach, implemented with various estimators, is capable of modeling a dataset, but it has a weakness: it is limited to one form of data pattern, i.e., the non-mixed estimator (often called a single estimator). Theoretically, a single estimator assumes that every predictor variable has the same relationship pattern with the response variable. In reality, however, the data pattern often differs from one predictor to another, and nonparametric regression with a single estimator is unable to handle such differences. Because of this weakness, researchers have developed nonparametric regression models that involve two or more estimators, hereafter referred to as mixed estimators. The idea of developing a mixed estimator is taken from the concept of semiparametric regression, such as that carried out by [19]. Several mixed estimator studies have been conducted to estimate the nonparametric regression curve; for example, [20,21,22] developed a mixed estimator of spline and kernel for estimating nonparametric regression curves. Similarly, in [23,24], researchers presented nonparametric regression curve estimation using an estimator that combined truncated spline and Fourier series. On the other hand, [25] proposed two nonparametric estimators of the regression function with mixed measurement errors.

Research using regression methods deals not only with cross-sectional data but also with longitudinal data. Longitudinal data modeling commonly uses a parametric regression method. Several parametric methods for longitudinal data have been developed; see [26] and the references therein. However, parametric models for longitudinal data are too restrictive for many applications because, when the parametric model is misspecified, the estimators might be severely biased and lead to erroneous conclusions. To overcome this difficulty, a large body of recent literature on longitudinal data analysis has proposed several estimators in nonparametric regression, such as spline [27,28], kernel [29,30], Fourier series [31], and local linear [32,33].

In longitudinal studies, the nonparametric regression model has not yet been developed with two combined estimators, i.e., a mixed estimator. Therefore, this study developed an adaptive method to estimate regression curves for longitudinal data by using a mixed estimator in nonparametric regression. Among several estimators in nonparametric regression, the truncated spline is one of the more renowned estimators due to its high flexibility in handling data that change at particular subintervals. Moreover, this estimator has an accurate visual interpretation [2]. Meanwhile, the local linear estimator is widely used in nonparametric regression because it is simple and easy to understand. The local linear estimator is one of the smoothing techniques used in the nonparametric approach [34]. This estimator is well suited to modeling data that have a monotonic pattern, such as upward or downward trends. The estimation is obtained by locally fitting a first-degree polynomial to the data via weighted least squares (WLS) optimization. Considering the advantages of these two estimators, the primary objective of this study is to obtain a nonparametric regression curve estimation for longitudinal data using a mixed estimator that combines truncated spline and local linear functions. The curve estimation was carried out using WLS optimization through two-stage estimation. In addition, to illustrate the performance of the proposed model, an application to a real dataset is given for modeling poverty gap index data in Bengkulu Province, Indonesia.

The remainder of the paper is structured as follows: Section 2 presents an overview of the longitudinal data nonparametric regression model, truncated spline function, local linear function, and WLS optimization. Section 3 comprises three subsections. The estimation of the nonparametric regression curve for longitudinal data using a truncated spline and local linear mixed estimator is presented in Section 3.1, followed by the selection of the optimal knot point and bandwidth parameter to obtain the best model in Section 3.2. Section 3.3 provides the implementation of the proposed model in a real longitudinal data case. A discussion of the findings and future research is given in the final section.

2. Materials and Methods

In longitudinal studies, data from individuals are collected repeatedly over time. Longitudinal data are usually correlated between observations within a subject but are independent between subjects [35]. Given a paired longitudinal dataset , the relationship between predictor and response variables is assumed to follow a nonparametric regression model for longitudinal data, which can be expressed as:

where is the regression curve and is a random error that is assumed to be independent, identically and normally distributed. In this study, represents the number of subjects, and denote the numbers of predictor variables, and represents the number of observations for each subject. For simplicity, Equation (1) can be represented in matrix form as follows:

The regression curve of for each i-th subject is assumed unknown and to be an additive model. Thus, it can be written as:

in which represents the truncated spline component and is the local linear component. According to Equations (1) and (3), the paired data following the nonparametric regression model for longitudinal data can be rewritten as follows:

where random error has the following assumptions:

such that the error variance-covariance matrix can be written as follows:

Furthermore, the regression curve component is approximated by a linear truncated spline with knots , as given in Equation (5):

with the truncated function,

Meanwhile, the regression curve component is approximated by a local linear function at fixed point . Assume that are independently on different interval and has a derivative for at . By Taylor expansion, can be locally approximated by a local linear function, defined as follows:

where , is the local neighborhood with the size specified by a constant called the bandwidth parameter.
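The two building blocks described above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function names are hypothetical, and the Gaussian kernel used to weight the local neighborhood is an assumption, since the text only states that the neighborhood size is controlled by the bandwidth.

```python
import numpy as np

def truncated_linear_basis(x, knots):
    """Design matrix with columns 1, x, (x - K_1)_+, ..., (x - K_r)_+."""
    x = np.asarray(x, dtype=float)
    cols = [np.ones_like(x), x]
    # Each truncated term is zero to the left of its knot and linear to the right.
    cols += [np.maximum(x - k, 0.0) for k in knots]
    return np.column_stack(cols)

def local_linear_fit(t, y, t0, h):
    """Local linear estimate of the curve at the fixed point t0.

    Assumption: Gaussian kernel weights with bandwidth h.
    """
    t = np.asarray(t, dtype=float)
    y = np.asarray(y, dtype=float)
    w = np.exp(-0.5 * ((t - t0) / h) ** 2)          # kernel weights around t0
    T = np.column_stack([np.ones_like(t), t - t0])  # local intercept and slope
    TtW = T.T * w                                   # T' W with W = diag(w)
    coef = np.linalg.solve(TtW @ T, TtW @ y)        # weighted least squares
    return coef[0]                                  # local intercept = fit at t0
```

A smaller bandwidth `h` concentrates the weights near `t0` and gives a more local, more variable fit, whereas a larger `h` gives a smoother one.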

In general terms, the WLS optimization form for estimating the regression curve of using a mixed estimator of truncated spline and local linear form in Equation (4) is equal to the goodness of fit component that can be defined by

However, the regression curve estimate of the proposed model in this study is obtained through a two-stage estimation technique. The first stage completes the estimation of the local linear component. The second stage completes the estimation of the truncated spline component. Both components are estimated using WLS optimization. The estimation results of each component are given by Theorems 1 and 2 in Section 3.1.
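A two-stage procedure of this kind can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the authors' estimator: it treats a single subject, uses a Gaussian kernel for the local linear smoother, and omits the intercept column from the spline design `X` because a local linear smoother reproduces constants exactly, so an intercept would not be separately identifiable here. The names `local_linear_smoother` and `two_stage_fit` are hypothetical.

```python
import numpy as np

def local_linear_smoother(t, h):
    """n x n matrix S such that (S @ v)[i] is the local linear fit of v at t[i]."""
    t = np.asarray(t, dtype=float)
    n = t.size
    S = np.empty((n, n))
    for i in range(n):
        w = np.exp(-0.5 * ((t - t[i]) / h) ** 2)    # assumed Gaussian kernel
        T = np.column_stack([np.ones(n), t - t[i]])
        TtW = T.T * w
        # First row of (T'WT)^{-1} T'W maps observations to the fit at t[i].
        S[i] = np.linalg.solve(TtW @ T, TtW)[0]
    return S

def two_stage_fit(X, t, y, h, W=None):
    """Stage 1: express the local linear part as S (y - X beta).
    Stage 2: solve the WLS problem for beta after partialling out S."""
    y = np.asarray(y, dtype=float)
    n = y.size
    W = np.eye(n) if W is None else W               # symmetric weight matrix
    S = local_linear_smoother(t, h)
    R = np.eye(n) - S
    Xt, yt = R @ X, R @ y
    beta = np.linalg.solve(Xt.T @ W @ Xt, Xt.T @ W @ yt)
    g_hat = S @ (y - X @ beta)                      # local linear component
    return beta, g_hat, X @ beta + g_hat            # fitted values last
```

In the longitudinal setting the same idea would be applied to the stacked observations of all subjects, with `W` chosen to reflect the within-subject correlation.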

3. Results

3.1. Estimation of the Nonparametric Regression Curve for Longitudinal Data Using a Truncated Spline and Local Linear Mixed Estimator

As mentioned previously, a two-stage estimation technique using weighted least squares (WLS) optimization was adopted to generate the truncated spline and local linear mixed estimator in the nonparametric regression for longitudinal data. Consequently, some lemmas and theorems are needed to obtain the regression curve estimation of the proposed model. The first lemma describes the goodness of fit of the local linear component. The first stage of estimation, as stated in Theorem 1, is derived by using the result of Lemma 1. Lemma 2 shows the second stage of estimation, i.e., WLS optimization, to estimate the regression curve of the truncated spline component, with the estimation results presented in Theorem 2. Appendix A, Appendix B, Appendix C and Appendix D provide all the proofs for the lemmas and theorems.

Lemma 1.

If the regression curve of the local linear component in the nonparametric regression model for longitudinal data is given by Equation (7), then the goodness of fit can be determined using the following equation:

where and are the symmetric matrices used as weightings of the local linear component and the longitudinal data, respectively.

The proof of the first lemma can be seen in Appendix A.

Theorem 1.

If the goodness of fit is given in Lemma 1, then the regression curve estimation of the local linear component can be obtained from WLS optimization, which is as follows:

whereand.

The proof of Theorem 1 is provided in Appendix B. Furthermore, Lemma 2 describes the second stage of WLS optimization to estimate the regression curve of the truncated spline component, with Theorem 2 being the estimation result.

Lemma 2.

If the regression curve of the truncated spline component is as presented in Equation (5), then the WLS optimization can be formulated as follows:

where and is the symmetric matrix used as a weighting of the longitudinal data.

The proof of Lemma 2 is given in Appendix C.

Theorem 2.

If Lemma 2 provides the optimization of WLS, then the regression curve estimation of the truncated spline component in the nonparametric regression model for the longitudinal data in Equation (4) can be obtained by WLS optimization, such that

whereand

The proof of Theorem 2 is given in Appendix D.

After obtaining the estimation of the truncated spline component in Theorem 2, the estimation result of in Theorem 1 can be expressed as Equation (12). We start by substituting Equation (10) into Equation (A9), which yields the following equation:

Finally, the regression curve estimation of the local linear component is obtained by substituting Equation (11) into Equation (A2) and it can be rewritten as Equation (12).

The most important finding of this study is the curve estimation of the truncated spline and local linear mixed estimator in the nonparametric regression for longitudinal data. This finding is shown in Corollary 1.

Corollary 1.

Based on the estimation of truncated spline and local linear components in Equation (10) and Equation (12), respectively, the estimation of the nonparametric regression curve for longitudinal data using the truncated spline and local linear mixed estimator can be expressed as a matrix:

Proof of Corollary 1.

The regression curve estimation of the mixed estimator in nonparametric regression model for longitudinal data in Equation (3) can be rewritten in the following matrix form:

By substituting the regression curve estimation results of in Equation (10) and in Equation (12), can be defined as follows:

For simplification, can also be expressed as

□

3.2. Optimal Number of Knots and Bandwidth Selection

One method that is commonly used to determine the optimal knot is generalized cross-validation (GCV) [36], in which the optimal knot is the one that minimizes the GCV value. For longitudinal data, Wu and Zhang [35] generalized the GCV method for selecting the optimal knot. In this study, the GCV method was modified to select the knot and bandwidth parameters of the truncated spline and local linear mixed estimator in a nonparametric regression model for longitudinal data. The modified GCV method is given by Lemma 3 and Lemma 4.

Lemma 3.

Given the regression curve estimation of the truncated spline and local linear mixed estimator in nonparametric regression for longitudinal data, as in Equation (13), the mean square error (MSE) of the model is as follows:

where,and.

Proof of Lemma 3.

From Theorems 1 and 2, we obtain the curve estimation of the truncated spline and local linear mixed estimator in a nonparametric regression model for longitudinal data written as . Thus, based on in Equation (13), the MSE of the model is given as follows:

□

Lemma 4.

Given the regression curve estimation in Equation (13) and in Lemma 3, the GCV function for the truncated spline and local linear mixed estimator in a nonparametric regression model for longitudinal data is given by:

Proof of Lemma 4.

Based on the regression curve estimation in Equation (13) and in Lemma 3, the GCV function for the truncated spline and local linear mixed estimator in a nonparametric regression model for longitudinal data can be formulated as follows:

The optimum knot and the bandwidth parameter are obtained by minimizing the modification of the GCV function for the proposed mixed estimator model in Equation (15), as shown below:

where , and derive from the regression curve estimation of the proposed mixed estimator model, as in Equation (13). □
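The selection step can be sketched in Python as follows. The sketch assumes the standard form of the GCV criterion, namely the MSE divided by the squared normalized trace of the identity minus the hat matrix; the modified GCV function of the proposed model may differ, for example by including the weight matrix. The names `gcv` and `select_by_gcv` are hypothetical, and each candidate combination of knots and bandwidth is assumed to be summarized by its hat matrix.

```python
import numpy as np

def gcv(y, A):
    """Standard GCV score for a linear smoother with hat matrix A (y_hat = A @ y)."""
    y = np.asarray(y, dtype=float)
    n = y.size
    resid = y - A @ y
    mse = resid @ resid / n
    denom = (np.trace(np.eye(n) - A) / n) ** 2
    return mse / denom

def select_by_gcv(y, hat_matrices):
    """Return the key of the candidate with the smallest GCV and all scores.

    hat_matrices maps a label such as (knots, bandwidth) to its hat matrix.
    """
    scores = {key: gcv(y, A) for key, A in hat_matrices.items()}
    best = min(scores, key=scores.get)
    return best, scores
```

In practice the candidates would be generated over a grid of knot locations and bandwidth values, and the combination with the minimum score would be retained.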

3.3. Application to Real Data

This section demonstrates how the proposed model can be applied to a real case. The proposed mixed estimator model, along with its curve estimation and modified GCV function, was applied to model poverty gap index data in 10 regencies across Bengkulu Province, Indonesia, over a twelve-year period (2010–2021). The dataset is longitudinal, consisting of ten regencies as subjects, each observed at twelve time points. The observed response () in this study was the poverty gap index in each regency. The poverty gap index (hereinafter PGI-P1) is one of the poverty indicators established by Statistics Indonesia (BPS) to measure poverty intensity. PGI-P1 is defined as the average gap between the expenditure of each poor person and the poverty line. A decrease in PGI-P1 indicates that the average expenditure of poor people tends to be closer to the poverty line, which means that the expenditure inequality of the poor is also decreasing [37].

Poverty eradication is the first goal of the Sustainable Development Goals (SDGs) established by the United Nations in 2015. In Indonesia, the poverty issue has become a strategic topic and a research priority for both central and local governments. Bengkulu is one of the provinces in Indonesia that requires tremendous attention in poverty alleviation programs. The poverty rate in Bengkulu is approximately double the national poverty rate. BPS socio-economic data (March 2022) report that Bengkulu is among Indonesia’s 10 poorest provinces, with 14.62% of the population living in poverty [38]. According to BPS socio-economic data, over the past twelve years, the poverty rate in Bengkulu has generally declined; however, this is not in line with the decrease in the poverty gap index. In this regard, PGI-P1 could help evaluate (public or private) policy in the area of poverty reduction programs. Therefore, PGI-P1 was a potential topic to be discussed in this research, particularly the PGI-P1 data in Bengkulu Province.

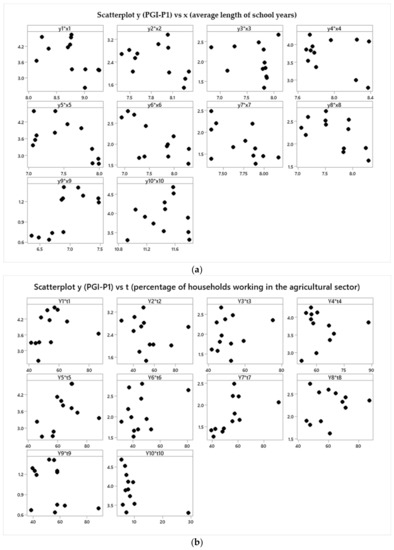

Several longitudinal studies have been conducted to analyze the factors that significantly affect PGI-P1, such as the average length of school years [39,40], literacy rate [40], gross regional domestic product (GRDP) per capita [41,42], and percentage of households working in the agricultural sector [40,42]. However, we used only the average length of school years and percentage of households working in the agricultural sector as predictor variables in this study. Furthermore, the partial relationship between the response variable and each predictor variable for ten regencies is demonstrated by the scatterplot in Figure 1.

Figure 1.

Partial scatterplot of 10 subjects between (a) the poverty gap index (PGI-P1) and average length of school years; (b) the poverty gap index (PGI-P1) and percentage of households working in the agricultural sector. * scatterplot between response and each predictor.

Based on Figure 1, the partial scatterplot between PGI-P1 and the average length of school years () tends to change monotonically, with local variation. Thus, the average length of school years is assigned to the local linear component. Meanwhile, the partial scatterplot between PGI-P1 and the percentage of households working in the agricultural sector () indicates a change in the data pattern at particular subintervals in some subjects; this predictor is therefore assigned to the truncated spline component.

Another important point observed in Table 1 is the comparison of some proposed model combinations. Based on the GCV criterion, the leading PGI-P1 model produces the smallest GCV of 19.2324, obtained from the model with a combination of 2 knots, second weight, and bandwidth parameter of 0.9067. This model produces a coefficient of determination (R-squared) of 92.17% and a mean square error (MSE) equal to 0.2917. The R-squared value implies that 92.17% of the variance in PGI-P1 in Bengkulu Province could be explained by predictors in the model. Meanwhile, other variables not incorporated in the model produce a relatively small contribution to describing the data variability. The results of knot location for each subject and the best model parameter estimation are presented in Appendix E and Appendix F, respectively.
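For reference, the two goodness-of-fit measures reported above can be computed from observed and fitted values as in the following Python sketch. It uses the usual definitions (MSE as the mean squared residual, R-squared as one minus the ratio of residual to total sum of squares); the function name is hypothetical and the inputs are placeholders, not the study data.

```python
import numpy as np

def fit_summary(y, y_hat):
    """Return (MSE, R-squared) for observed values y and fitted values y_hat."""
    y = np.asarray(y, dtype=float)
    y_hat = np.asarray(y_hat, dtype=float)
    sse = np.sum((y - y_hat) ** 2)          # residual sum of squares
    sst = np.sum((y - y.mean()) ** 2)       # total sum of squares
    return sse / y.size, 1.0 - sse / sst
```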

Table 1.

Summary of GCV results for PGI-P1 modeling.

In general, the estimation of the nonparametric regression model for longitudinal data using the truncated spline and local linear mixed estimator with two knots and two predictors, one of the predictors following spline function and the other following local linear function, can be formulated as follows:

for .

Based on the results of parameter estimation for the best model in Appendix F, the nonparametric regression model with a truncated spline and local linear mixed estimator for modeling the PGI-P1 data of Bengkulu over the past twelve years for each regency (subject) can be written as follows

Subject 1

Subject 2

Subject 10

Interpretation of the model in each subject over time is generally divided for each predictor and each subinterval of the truncated spline function. As an example, for subject 1 (South Bengkulu Regency), the interpretation of the model for predictor (t), percentage of households working in the agricultural sector, is as follows: if it is assumed that the other predictor (average length of school years) is constant, then the influence of the percentage of households working in the agricultural sector on PGI-P1 in South Bengkulu can be expressed in the following equation:

in which

The model in Equation (16) possesses three subintervals and it can be interpreted using the following truncated function:

Based on the truncated function in Equation (17), in which the first subinterval was assigned to the percentage of households working in the agricultural sector in South Bengkulu over twelve years that was less than 46.36, an increase of one point in percentage of households working in the agricultural sector will increase the PGI-P1 by 0.1778 points. The second subinterval contains the percentage of households working in the agricultural sector in the range of 46.36 to 76.44 and also had a positive correlation; an escalation of one point in the percentage of households working in the agricultural sector will add 0.0073 points to PGI-P1. Meanwhile, the last subinterval was applied to the percentage of households working in the agricultural sector that was greater than 76.44, which occurred only in 2010. In that year, the percentage of households working in the agricultural sector also had a positive correlation with PGI-P1. If there is an increase of one point in the percentage of households working in the agricultural sector, then the index of PGI-P1 would increase by 0.0794 points. This interpretation is applicable to other subjects in the same way.
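The three slopes quoted above follow from the truncated spline coefficients by a running sum, which the short Python sketch below reproduces. It assumes that the second to fourth estimated values listed for Subject 1 in Appendix F are the base slope and the slope changes at the first and second knots, in that order; this ordering is consistent with the slopes stated in the text. The helper `slope_at` is hypothetical.

```python
import numpy as np

# Subject 1 (South Bengkulu): values read from Table A2, knots from Table A1.
# Assumed order: base slope, change at knot 1, change at knot 2.
coef = np.array([0.1778, -0.1705, 0.0721])
knots = np.array([46.36, 76.44])

# Slope on each subinterval is the running sum of the coefficients.
slopes = np.cumsum(coef)

def slope_at(t):
    """Slope of the truncated spline at agricultural share t (in percent)."""
    return slopes[np.searchsorted(knots, t)]

print(np.round(slopes, 4))  # approximately 0.1778, 0.0073, 0.0794
```

For example, 0.1778 − 0.1705 = 0.0073 gives the slope between the two knots, and 0.0073 + 0.0721 = 0.0794 gives the slope above the second knot.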

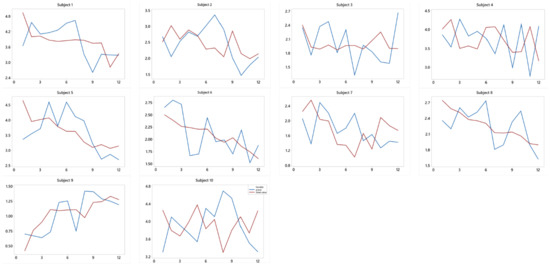

Furthermore, based on the empirical results of the best model, it is also possible to visually compare the actual and fitted values of the response variable for each subject. A comparison between the response variable (blue line) and the fitted values (red line) using the proposed model is presented in Figure 2. Some of the fitted values, as shown on the graph, have a similar pattern to the actual data, while others do not; nonetheless, the discrepancy is not extremely large. In summary, this application study certainly contributes to our understanding of the proposed model, which is the truncated spline and local linear mixed estimator in nonparametric regression for longitudinal data, notwithstanding its limitations.

Figure 2.

Comparison between actual and fitted values for each subject.

4. Discussion and Conclusions

In this study, nonparametric regression with a new mixed estimator is proposed for estimating curves in longitudinal data modeling. We combine the truncated spline and the local linear as the classes of estimators in nonparametric regression. The estimation of the proposed model’s regression curve using two-stage WLS optimization is as follows:

Furthermore, the application to the real dataset of PGI-P1 in Bengkulu Province shows that, based on the GCV value, the proposed mixed estimator model produces better results than the single estimator model. One of the most important findings is that the best PGI-P1 model is obtained from the proposed model using a combination of two knots, the second weight, and a bandwidth parameter of 0.9067. The best model yields an R-squared value of 92.17%, indicating that the predictors in the model explain most of the variability in the data. In summary, this application provides an understanding of regression curve estimation using the truncated spline and local linear mixed estimator in nonparametric regression for longitudinal data.

A major limitation of this research is the absence of a confidence interval estimation and hypothesis testing of the proposed model. Therefore, further research ought to be conducted to attain a confidence interval estimation and to perform hypothesis testing. The applicability of the approach described in this paper to different mixed estimators in nonparametric regression for longitudinal data is also a potential issue for further research. Additionally, other case studies could be performed using combinations of the proposed model with more predictors, higher knot numbers and more varied bandwidth parameters to learn more about the performance evaluation of the proposed model.

Author Contributions

Conceptualization, I.S., I.N.B. and V.R.; methodology, I.S. and I.N.B.; software, I.S. and V.R.; validation, I.N.B. and V.R.; formal analysis, I.S. and I.N.B.; investigation, I.S. and V.R.; data curation, I.S.; writing—original draft preparation, I.S.; writing—review and editing, I.N.B. and V.R.; visualization, I.S.; supervision, I.N.B. and V.R.; project administration, I.N.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data used to support the findings of this study are available from the corresponding author upon request.

Acknowledgments

The authors would like to thank the anonymous reviewers and the editor for their helpful comments.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

The regression curve is assumed to be unknown but smooth and contained in a specific function space. That regression curve is approximated using a local linear estimator, as presented in Equation (7). The local linear function with one predictor variable, notated by , can be written as

According to Equation (7), the local linear function in the component of regression curve as written above can be described in the following matrix form:

Consequently, the local linear function with number of predictors for the component of regression curve given in Equation (A1) can be expressed as follows:

thus, we obtain

The model of nonparametric regression for longitudinal data in Equation (4) can be rewritten as

In the matrix form, the model in Equation (A3) can be written as in Equation (A4).

Thus, based on Equation (A3), where is the local linear function, the goodness of fit for WLS optimization as presented in Equation (8) can be written as follows:

As a result, the goodness of fit for the local linear component in Equation (A5) can be represented by the matrix form below

Appendix B

According to the goodness of fit in Lemma 1, the WLS optimization in Equation (8) can be expressed in the form

In matrix form, Equation (A6) is written as

Suppose , thus Equation (A7) can be described as follows

Subsequently, Equation (A7) can be rewritten in the form:

The estimator can be obtained by solving the optimization problem in Equation (A8). This is done by taking the partial derivative with respect to and setting the result equal to zero, as follows:

giving the result

By substituting in Equation (A9) into Equation (A2), the regression curve estimation of the local linear component can be written as

in which and

Appendix C

The estimation result of Theorem 1 for the local linear component still includes the linear truncated spline function , as shown in Equation (5). Therefore, to complete the WLS optimization in Equation (8), the estimation of the regression curve for the truncated spline component is required. According to Equation (5), the truncated spline component in the nonparametric regression curve for longitudinal data, which only has one predictor , can be expressed in the following matrix form:

Therefore, using Equation (A11), the function of truncated spline for predictors, can be written as follows:

According to Equation (A12), the function of the truncated spline component in nonparametric regression for longitudinal data can be written in following matrix form:

such that

Equation (A13) can be rewritten in the form

where is matrix and is vector.

Furthermore, the additive model of nonparametric regression for longitudinal data in Equation (4) can be expressed in this matrix form:

Substituting Equation (A10) into Equation (A15), we obtain

In order to estimate the regression curve of the truncated spline component through WLS optimization, Equation (A16) can be formulated as follows:

Thus, the error model can be written as

Therefore, the regression curve estimation can be obtained by solving Equation (A18) using WLS optimization. The WLS is given by

Appendix D

The second stage of the estimation procedure in the proposed method is performed by estimating the component of regression curve that is approximated by the truncated spline function using WLS optimization. According to the result of the WLS in Lemma 2, the WLS optimization can be written as follows:

Given , expanding the product in , we obtain

The WLS optimization is solved by setting the partial derivative of with respect to equal to zero, i.e.,

The partial differentiation yields the parameter estimate of , as follows:

Solving the above equation gives the result in Equation (A20).

Equation (A20) can be rewritten in the following form:

where and

Finally, the regression curve estimation for the truncated spline component is obtained by substituting Equation (A21) into the truncated spline estimator component as in Equation (A14), which is given by

Appendix E

Table A1.

Knot location for each subject of the best model.

| Subject | Knot Location 1 | Knot Location 2 | Subject | Knot Location 1 | Knot Location 2 |
|---|---|---|---|---|---|
| Subject 1 | 46.36 | 76.44 | Subject 6 | 36.89 | 69.97 |
| Subject 2 | 35.54 | 78.41 | Subject 7 | 45.06 | 79.98 |
| Subject 3 | 45.47 | 67.80 | Subject 8 | 49.91 | 77.73 |
| Subject 4 | 55.74 | 80.09 | Subject 9 | 43.98 | 77.67 |
| Subject 5 | 50.53 | 78.84 | Subject 10 | 7.98 | 23.92 |

Appendix F

Table A2.

The best model parameter estimation results.

| Subject | Parameter Notation | Estimated Value | Subject | Parameter Notation | Estimated Value |
|---|---|---|---|---|---|
| Subject 1 | | −6.7469 | Subject 6 | | −1.5656 |
| | | 0.1778 | | | 0.0207 |
| | | −0.1705 | | | −0.0142 |
| | | 0.0721 | | | −0.0162 |
| | | 2.2127 | | | 2.5207 |
| | | −0.1967 | | | −0.4925 |
| Subject 2 | | 5.1266 | Subject 7 | | −3.5361 |
| | | −0.0022 | | | −0.0593 |
| | | 0.0226 | | | 0.0017 |
| | | −0.0953 | | | 0.1892 |
| | | −3.2662 | | | 7.9063 |
| | | −1.0230 | | | −1.6067 |
| Subject 3 | | 1.4510 | Subject 8 | | 1.1165 |
| | | −0.1051 | | | −0.0094 |
| | | 0.1202 | | | 0.0138 |
| | | 0.0197 | | | 0.0050 |
| | | 5.1926 | | | 1.2300 |
| | | 0.0526 | | | −0.5594 |
| Subject 4 | | 8.5899 | Subject 9 | | 3.4895 |
| | | −0.2437 | | | −0.0491 |
| | | 0.3035 | | | 0.0727 |
| | | −0.1710 | | | −0.0930 |
| | | 8.0732 | | | −0.1362 |
| | | −0.5480 | | | 0.7152 |
| Subject 5 | | 2.0107 | Subject 10 | | −1.2430 |
| | | 0.0535 | | | 0.3772 |
| | | −0.0766 | | | −0.2532 |
| | | 0.1059 | | | −0.3447 |
| | | −1.4896 | | | 2.8213 |
| | | −1.4033 | | | 1.3042 |

References

- Montgomery, D.C.; Peck, E.A.; Vining, G.G. Introduction to Linear Regression Analysis, 5th ed.; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2012.

- Eubank, R.L. Nonparametric Regression and Spline Smoothing, 2nd ed.; Marcel Dekker, Inc.: New York, NY, USA, 1999.

- Linke, Y.; Borisov, I.; Ruzankin, P.; Kutsenko, V.; Yarovaya, E.; Shalnova, S. Universal Local Linear Kernel Estimators in Nonparametric Regression. Mathematics 2022, 10, 2693.

- Luo, S.; Zhang, C.Y.; Xu, F. The Local Linear M-Estimation with Missing Response Data. J. Appl. Math. 2014, 2, 1–10.

- Cheruiyot, L.R. Local Linear Regression Estimator on the Boundary Correction in Nonparametric Regression Estimation. J. Stat. Theory Appl. 2020, 19, 460–471.

- Chen, Z.; Chen, M.; Ju, F. Bayesian P-Splines Quantile Regression of Partially Linear Varying Coefficient Spatial Autoregressive Models. Symmetry 2022, 14, 1175.

- Du, R.; Yamada, H. Principle of Duality in Cubic Smoothing Spline. Mathematics 2020, 8, 1839.

- Lestari, B.; Fatmawati; Budiantara, I.N. Spline Estimator and Its Asymptotic Properties in Multiresponse Nonparametric Regression Model. Songklanakarin J. Sci. Technol. 2020, 42, 533–548.

- Kayri, M.; Zirhlioglu, G. Kernel Smoothing Function and Choosing Bandwidth for Non-Parametric Regression Methods. Ozean J. Appl. Sci. 2009, 2, 49–54.

- Zhao, G.; Ma, Y. Robust Nonparametric Kernel Regression Estimator. Stat. Probab. Lett. 2016, 116, 72–79.

- Yang, Y.; Pilanci, M.; Wainwright, M.J. Randomized Sketches for Kernels: Fast and Optimal Nonparametric Regression. Ann. Stat. 2017, 45, 991–1023.

- Syengo, C.K.; Pyeye, S.; Orwa, G.O.; Odhiambo, R.O. Local Polynomial Regression Estimator of the Finite Population Total under Stratified Random Sampling: A Model-Based Approach. Open J. Stat. 2016, 6, 1085–1097.

- Chamidah, N.; Budiantara, I.N.; Sunaryo, S.; Zain, I. Designing of Child Growth Chart Based on Multi-Response Local Polynomial Modeling. J. Math. Stat. 2012, 8, 342–347.

- Opsomer, J.D.; Ruppert, D. Fitting a Bivariate Additive Model by Local Polynomial Regression. Ann. Stat. 1997, 25, 186–211.

- Bilodeau, M. Fourier Smoother and Additive Models. Can. J. Stat. 1992, 20, 257–269.

- Kim, J.; Hart, J.D. A Change-Point Estimator Using Local Fourier Series. J. Nonparametr. Stat. 2011, 23, 83–98.

- Yu, W.; Yong, Y.; Guan, G.; Huang, Y.; Su, W.; Cui, C. Valuing Guaranteed Minimum Death Benefits by Cosine Series Expansion. Mathematics 2019, 7, 835.

- Yao, D.S.; Chen, W.X.; Long, C.X. Parametric Estimation for the Simple Linear Regression Model under Moving Extremes Ranked Set Sampling Design. Appl. Math. J. Chin. Univ. 2021, 36, 269–277.

- Ruppert, D.; Wand, M.P.; Carroll, R.J. Semiparametric Regression; Cambridge University Press: Cambridge, UK, 2003; ISBN 9780511755453.

- Hidayat, R.; Budiantara, I.N.; Otok, B.W.; Ratnasari, V. The Regression Curve Estimation by Using Mixed Smoothing Spline and Kernel (MsS-K) Model. Commun. Stat.-Theory Methods 2021, 50, 3942–3953.

- Sauri, M.S.; Hadijati, M.; Fitriyani, N. Spline and Kernel Mixed Nonparametric Regression for Malnourished Children Model in West Nusa Tenggara. J. Varian 2021, 4, 99–108.

- Budiantara, I.N.; Ratnasari, V.; Ratna, M.; Zain, I. The Combination of Spline and Kernel Estimator for Nonparametric Regression and Its Properties. Appl. Math. Sci. 2015, 9, 6083–6094.

- Mariati, N.P.A.M.; Budiantara, I.N.; Ratnasari, V. The Application of Mixed Smoothing Spline and Fourier Series Model in Nonparametric Regression. Symmetry 2021, 13, 2094.

- Nurcahayani, H.; Budiantara, I.N.; Zain, I. The Curve Estimation of Combined Truncated Spline and Fourier Series Estimators for Multiresponse Nonparametric Regression. Mathematics 2021, 9, 1141.

- Yin, Z.H.; Liu, F.; Xie, Y.F. Nonparametric Regression Estimation with Mixed Measurement Errors. Appl. Math. 2016, 7, 2269–2284.

- Diggle, P.J.; Heagerty, P.; Liang, K.Y.; Zeger, S.L. Analysis of Longitudinal Data; Oxford Univ. Press, Inc.: Oxford, NY, USA, 2002.

- Mardianto, M.F.F.; Gunardi; Utami, H. An Analysis about Fourier Series Estimator in Nonparametric Regression for Longitudinal Data. Math. Stat. 2021, 9, 501–510.

- Fernandes, A.A.R.; Budiantara, I.N.; Otok, B.W.; Suhartono. Spline Estimator for Bi-Responses Nonparametric Regression Model For Longitudinal Data. Appl. Math. Sci. 2014, 8, 5653–5665.

- Vogt, M.; Linton, O. Classification of Non-Parametric Regression Functions in Longitudinal Data Models. J. R. Stat. Soc. Ser. B Stat. Methodol. 2017, 79, 5–27.

- Cheng, M.Y.; Paige, R.L.; Sun, S.; Yan, K. Variance Reduction for Kernel Estimators in Clustered/Longitudinal Data Analysis. J. Stat. Plan. Inference 2010, 140, 1389–1397.

- Jou, P.H.; Akhoond-Ali, A.M.; Behnia, A.; Chinipardaz, R. A Comparison of Parametric and Nonparametric Density Functions for Estimating Annual Precipitation in Iran. Res. J. Environ. Sci. 2009, 3, 62–70.

- Sun, Y.; Sun, L.; Zhou, J. Profile Local Linear Estimation of Generalized Semiparametric Regression Model for Longitudinal Data. Lifetime Data Anal. 2013, 19, 317–349.

- Yao, W.; Li, R. New Local Estimation Procedure for Nonparametric Regression Function of Longitudinal Data. J. R. Stat. Soc. Ser. B Stat. Methodol. 2013, 75, 123–138.

- Fan, J.; Gijbels, I. Local Polynomial Modelling and Its Applications; Chapman & Hall: London, UK, 1996.

- Wu, H.; Zhang, J. Nonparametric Regression Methods for Longitudinal Data Analysis; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2006.

- Wahba, G. Spline Models for Observational Data; SIAM, Society for Industrial and Applied Mathematics: Philadelphia, PA, USA, 1990.

- BPS-Statistics Indonesia. Penghitungan dan Analisis Kemiskinan Makro Indonesia Tahun 2019; BPS-Statistics Indonesia: Jakarta, Indonesia, 2021.

- BPS-Statistics of Bengkulu Province. Profil Kemiskinan Provinsi Bengkulu September 2021; BPS-Statistics of Bengkulu Province: Bengkulu, Indonesia, 2022.

- Asrol, A.; Ahmad, H. Analysis of Factors That Affect Poverty in Indonesia. Rev. Espac. 2018, 39, 14–25.

- Ghazali, M.; Otok, B.W. Pemodelan Fixed Effect Pada Regresi Data Longitudinal Dengan Estimasi Generalized Method of Moments (Studi Kasus Data Penduduk Miskin Di Indonesia). Statistika 2016, 4, 39–48.

- Sinaga, M. Analysis of Effect of GRDP (Gross Regional Domestic Product) Per Capita, Inequality Distribution Income, Unemployment and HDI (Human Development Index). Budapest Int. Res. Critics Inst. J. 2020, 3, 2309–2317.

- Fajriyah, N.; Rahayu, S.P. Pemodelan Faktor-Faktor Yang Mempengaruhi Kemiskinan Kabupaten/Kota Di Jawa Timur Menggunakan Regresi Data Panel. J. Sains Seni ITS 2016, 5, 2337–3520.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).