A Shift-Deflation Technique for Computing a Large Quantity of Eigenpairs of the Generalized Eigenvalue Problems

Abstract

1. Introduction

2. Shift Technique

- (1)

- (2)

- A similar shift technique can be observed where the coefficient matrix in (5) is defined by . In this case, the changes of the left eigenvectors are available, whereas those of the right eigenvectors are not. Moreover, if both the left and right eigenvectors are wanted, a homogeneous system must be solved by a suitable numerical method.

- (3)

- A similar shift technique can be observed where the coefficient matrix in (5) is defined by . In this case, the left eigenvectors are not needed, and in both the condition and the relation should be replaced by .
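As a rough illustration of how a shift of this kind acts on a pencil, the following sketch applies a rank-one, Brauer-type update to A. It is an assumption made for illustration and not necessarily the formula (5) of this paper: the function name `shift_eigenvalue`, the choice q = x / (x^H x), and the small test pencil built in `demo_shift` are all hypothetical. When B is nonsingular, this update moves the eigenvalue lam to mu, keeps the right eigenvector x, and leaves the other eigenvalues in place.

```python
import numpy as np

def shift_eigenvalue(A, B, lam, x, mu):
    """Return A_s = A + (mu - lam) * (B x) q^H, with q^H x = 1.

    Assumes A @ x = lam * B @ x. The choice q = x / (x^H x) is an
    illustrative assumption; any q with q^H x = 1 would do.
    """
    q = x / np.vdot(x, x)  # normalised so that q^H x = 1
    return A + (mu - lam) * np.outer(B @ x, q.conj())

def demo_shift():
    # Hypothetical test pencil with known eigenvalues 1, 2, 3, 4, 5.
    rng = np.random.default_rng(0)
    n = 5
    X = np.eye(n) + 0.1 * rng.standard_normal((n, n))  # right eigenvectors
    B = n * np.eye(n) + rng.standard_normal((n, n))    # nonsingular B
    A = B @ X @ np.diag([1.0, 2.0, 3.0, 4.0, 5.0]) @ np.linalg.inv(X)

    lam, x, mu = 1.0, X[:, 0], 10.0
    A_s = shift_eigenvalue(A, B, lam, x, mu)
    # For nonsingular B the pencil eigenvalues are those of B^{-1} A_s.
    eigs = np.sort(np.linalg.eigvals(np.linalg.solve(B, A_s)).real)
    return A_s, B, x, mu, eigs
```

Calling `demo_shift()` returns the shifted matrix together with the sorted eigenvalues of the shifted pencil, so the effect of the shift can be checked directly against the known spectrum.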

- (1)

- (2)

- The relation between the left eigenvectors and can also be given as when . However, this relation fails if for some i. To remedy this issue, we shift this eigenpair together with the converged eigenpair by applying the shift technique described in Remark 1 (3).

- (3)

- We can also shift to infinity by using the following shift technique while keeping the corresponding right eigenvector and the remaining eigenvalues unchanged. Moreover, we have the relation that and when .
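One way to picture a shift to infinity is a rank-one update of B that makes B annihilate the converged right eigenvector. The sketch below is an assumption for illustration and may differ from the formula used in this paper; the names `shift_to_infinity` and `demo_infinite_shift`, the choice q = x / (x^H x), and the test pencil are hypothetical. Since an infinite eigenvalue cannot be printed directly, the check works with the reciprocal eigenvalues, that is, the eigenvalues of A^{-1} B, where the shifted eigenvalue appears as 0.

```python
import numpy as np

def shift_to_infinity(B, x):
    """Return B_inf = B - (B x) q^H with q^H x = 1, so that B_inf @ x = 0.

    If A @ x = lam * B @ x with lam != 0, then x becomes an eigenvector of
    (A, B_inf) for the infinite eigenvalue. q = x / (x^H x) is an assumption.
    """
    q = x / np.vdot(x, x)
    return B - np.outer(B @ x, q.conj())

def demo_infinite_shift():
    # Hypothetical test pencil with known eigenvalues 1, 2, 3, 4, 5.
    rng = np.random.default_rng(1)
    n = 5
    X = np.eye(n) + 0.1 * rng.standard_normal((n, n))
    B = n * np.eye(n) + rng.standard_normal((n, n))
    A = B @ X @ np.diag([1.0, 2.0, 3.0, 4.0, 5.0]) @ np.linalg.inv(X)

    x = X[:, 0]                      # eigenvector for the eigenvalue 1
    B_inf = shift_to_infinity(B, x)
    # Reciprocal eigenvalues of (A, B_inf); A is nonsingular here.
    recip = np.sort(np.linalg.eigvals(np.linalg.solve(A, B_inf)).real)
    return A, B_inf, x, recip
```

In this construction the right eigenvector x is kept, and the reciprocals of the other eigenvalues are unchanged, which matches the behaviour described in the remark above.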

3. Deflation Technique

- (1) If we apply the shift technique (5) and solve a homogeneous system for the columns of Y as suggested by Remark 1 (1), the full-column-rank matrices are obtained with and .

- (2) The nonsingularity of R is essential in Theorem 2. If R is singular, then the deflation technique fails.

- (1) There are many possible choices of the matrices H and K. In actual computation, we choose the nonsingular matrices H and K to be Householder matrices such that and , which guarantees low computational cost and numerical stability.

- (2) The condition is needed in Corollary 2. If , the deflation technique fails. To circumvent this problem, we can shift to infinity by using the shift technique (11) without deflation, and then continue to compute the next eigenvalue of interest.
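The role of Householder matrices in a deflation step can be sketched as follows. This is a standard construction written under stated assumptions, not necessarily the exact choice of H and K in this paper: it assumes a real converged right eigenvector x with B x nonzero, takes H to map x to a multiple of e1 and K to map B x to a multiple of e1, and uses the hypothetical names `householder`, `deflate`, and `demo_deflation`. The first columns of K A H and K B H then vanish below the diagonal, and the trailing (n-1)-by-(n-1) blocks form the deflated pencil.

```python
import numpy as np

def householder(v):
    """Symmetric orthogonal H with H @ v equal to a multiple of e1 (real v != 0)."""
    w = np.array(v, dtype=float)
    w[0] += np.copysign(np.linalg.norm(v), v[0])  # sign chosen to avoid cancellation
    return np.eye(len(w)) - 2.0 * np.outer(w, w) / (w @ w)

def deflate(A, B, x):
    """Return K A H and K B H for a converged right eigenvector x of (A, B)."""
    H = householder(x)       # H is an involution, so H e1 is parallel to x
    K = householder(B @ x)   # K (B x) is a multiple of e1
    return K @ A @ H, K @ B @ H

def demo_deflation():
    # Hypothetical test pencil with known eigenvalues 1, 2, 3, 4, 5.
    rng = np.random.default_rng(2)
    n = 5
    X = np.eye(n) + 0.1 * rng.standard_normal((n, n))
    B = n * np.eye(n) + rng.standard_normal((n, n))
    A = B @ X @ np.diag([1.0, 2.0, 3.0, 4.0, 5.0]) @ np.linalg.inv(X)

    KAH, KBH = deflate(A, B, X[:, 0])       # remove the eigenvalue 1
    A2, B2 = KAH[1:, 1:], KBH[1:, 1:]       # deflated pencil of order n - 1
    rest = np.sort(np.linalg.eigvals(np.linalg.solve(B2, A2)).real)
    return KAH, KBH, rest
```

Because Householder matrices are orthogonal and cost only O(n^2) operations to apply, this choice is consistent with the low cost and numerical stability mentioned above; the explicit dense products here are kept only for readability.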

4. Shift-Deflation Technique

| Algorithm 1 Shift-deflation technique for the GEP. |
|---|
| Input: matrices A, B and the number k of the desired eigenpairs. |
| Output: k approximate eigenpairs and their relative residuals. |

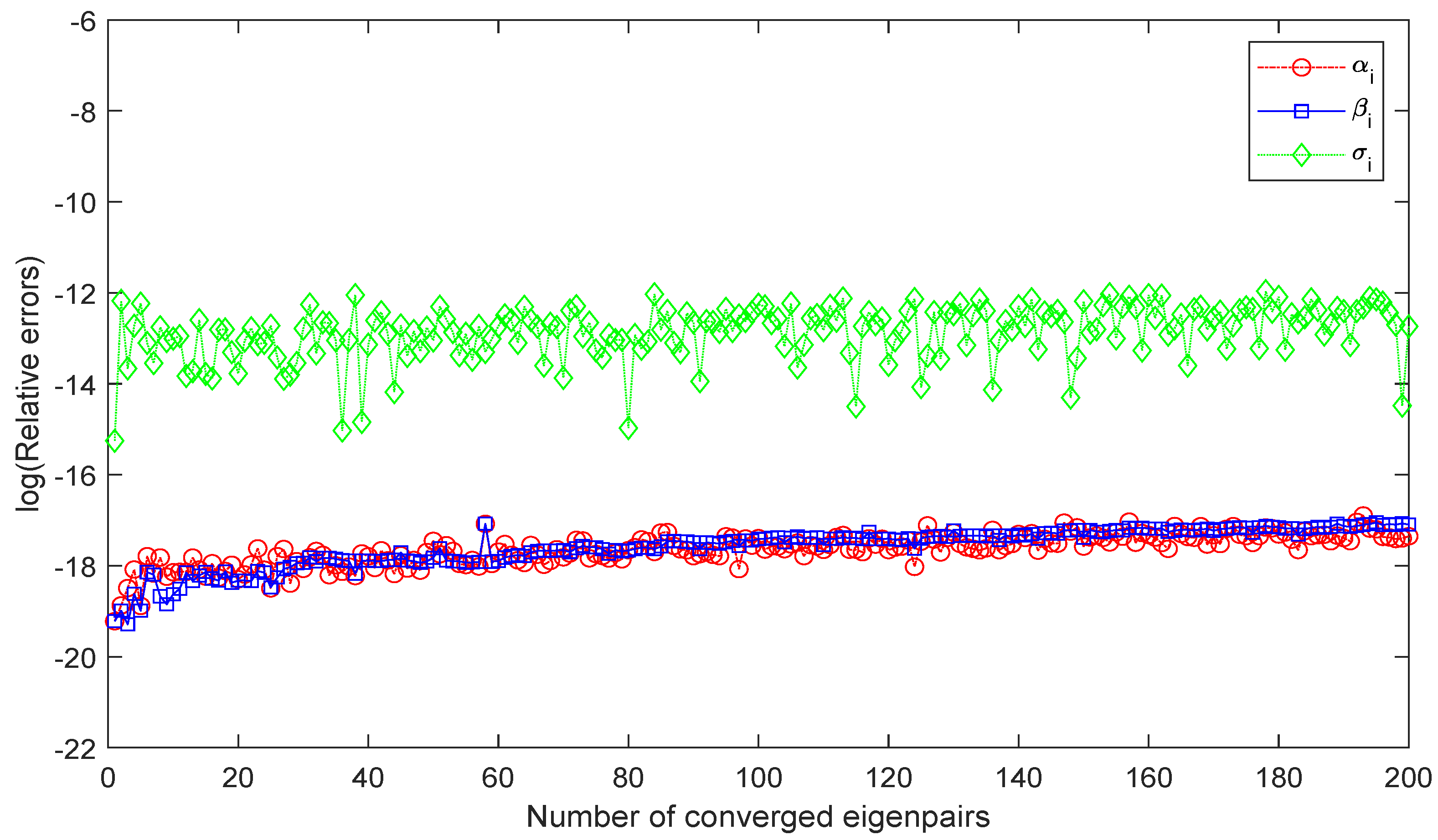
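Algorithm 1 reports a relative residual for each computed eigenpair. The exact definition is not recoverable from the text here, so the sketch below assumes one common normalisation, ||A x - lam B x||_2 / ((||A||_F + |lam| ||B||_F) ||x||_2); the function name `relative_residual` and the choice of norms are assumptions, and infinite eigenvalues are not handled.

```python
import numpy as np

def relative_residual(A, B, lam, x):
    """Relative residual of an approximate eigenpair (lam, x) of the pencil (A, B).

    Assumed definition: ||A x - lam B x||_2 / ((||A||_F + |lam| ||B||_F) ||x||_2).
    Only finite lam is supported in this sketch.
    """
    num = np.linalg.norm(A @ x - lam * (B @ x))
    den = (np.linalg.norm(A, "fro") + abs(lam) * np.linalg.norm(B, "fro")) * np.linalg.norm(x)
    return num / den

# Small usage example on a diagonal pencil with eigenvalues 1 and 2.
A = np.diag([1.0, 2.0])
B = np.eye(2)
e1 = np.array([1.0, 0.0])
r_exact = relative_residual(A, B, 1.0, e1)   # exact eigenpair
r_off = relative_residual(A, B, 1.5, e1)     # perturbed eigenvalue
```

A value near machine precision, as for `r_exact`, indicates a converged eigenpair under this normalisation, while `r_off` grows with the error in the eigenvalue.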

5. Numerical Results



| i | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| Eigenvalue | 0 | 1 | 1 | 2 | 3 | ∞ |

| Computed Eigenvalues | | |
|---|---|---|
| 0 | 0 | 0 |
| 1.0000 | | |
| 1.0000 | | |
| 2.0000 | | |
| 3.0000 | | |
| 0 | | |

| r | | | |
|---|---|---|---|
| 1 | | | |
| 4 | | | |
| 3 | | | |
| 2 | | | |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wei, W.; Chen, X.; Shi, X.; Luo, A. A Shift-Deflation Technique for Computing a Large Quantity of Eigenpairs of the Generalized Eigenvalue Problems. Symmetry 2022, 14, 2547. https://doi.org/10.3390/sym14122547
