Abstract

In mobile crowd computing (MCC), smart mobile devices (SMDs) are utilized as computing resources. To achieve satisfactory performance and quality of service, selecting the most suitable resources (SMDs) is crucial. The selection is generally made based on the computing capability of an SMD, which is defined by its various fixed and variable resource parameters. Because the selection is made on multiple criteria of varying significance, the resource selection problem can be represented as a multi-criteria decision-making (MCDM) problem. However, for the real-time implementation of MCC and considering its dynamicity, the resource selection algorithm should be time-efficient. In this paper, we aim to identify a suitable MCDM method for resource selection in such a dynamic and time-constrained environment. For this, we present a comparative analysis of various MCDM methods under asymmetric conditions with varying selection criteria and alternative sets. Various datasets of different sizes are used for evaluation. We execute each program on a Windows-based laptop and also on an Android-based smartphone to assess average runtimes. Besides time complexity analysis, we perform sensitivity analysis and ranking order comparison to check the correctness, stability, and reliability of the rankings generated by each method.

Keywords:

mobile cloud; mobile grid; dynamic resource selection; MCDM; entropy; EDAS; ARAS; MABAC; MARCOS; COPRAS; time complexity analysis

1. Introduction

The trend in the miniaturization of electronics has paved the way for smart mobile devices (SMDs) to be incorporated with significant computing capabilities. They are being loaded with several processing cores, specialized processors for different purposes, sizeable memory, and high-capacity batteries. This has prompted users to prefer SMDs, which include smartphones and tablets, as their primary computing devices, leaving behind desktops and laptops. In general, though SMDs are used frequently, they are left unused by their owners most of the time. The SMDs’ processing units are utilized only for a few hours a day, on average [1,2,3]. The rest of the time, the processing modules remain idle, so a significant computing resource is wasted. These wasted computing cycles can be utilized by lending them to applications that need extra computing resources to carry out some computing-intensive task [4,5,6,7]. If a collection of such unused computing resources is pooled together, it can deliver an economical and sustainable HPC environment [8,9,10].

1.1. Mobile Crowd Computing

In mobile crowd computing (MCC), public-owned SMDs are used as computing resources [11]. The increasing use of SMDs has fueled the possibilities of MCC to a great extent. An estimate by Statista, a leading market and consumer data provider, suggests that the number of global smartphone users will reach 4.3 billion in 2023 from 3.8 billion in 2021 [12]. Due to this wide-scale SMD user base, there is a great probability of finding a sufficient number of SMDs not only in populous places but also in sparsely crowded locations. Therefore, due to the infrastructural flexibility and the omnipresence of SMDs, an ad-hoc HPC can be formed anywhere, making it possible to achieve on-demand pervasive and ubiquitous computing [13]. Moreover, in the wake of the IoT and the IoE, the need for local processing is growing [14] because most of these applications are time-constrained and cannot afford to send data to a remote cloud for processing [15]. MCC can offer a local computing facility to these applications as ad-hoc mobile cloud computing [16,17,18] and as edge computing [19,20,21]. Besides the ad-hoc use of MCC, it can always be used for organizational computing infrastructure by making use of the in-house SMDs.

1.2. Resource Selection in Mobile Crowd Computing

The effectiveness (e.g., response time, throughput, turnaround time, etc.) and reliability (e.g., fault tolerance, ensuring resource availability, device mobility handling, minimized handoffs, etc.) of MCC largely depend on selecting the right resources for job scheduling. That is why it is crucial to select the most suitable resources among the currently available ones [22]. In this paper, we consider only the computing resources of the SMDs as selection criteria. Among others, the computing capability is one of the most important selection criteria as this would eventually influence the response time, throughput, and turnaround time for any given task. However, selecting SMDs based on their computing factors, which are conflicting in nature, is non-trivial.

As mentioned earlier, there might be quite many SMDs available at a certain place (local MCC, connected through a WLAN or other short-range communication means) [23,24] or for a certain application (global MCC, connected through the internet) to be considered as computing resources [25,26,27]. Among this sizable pool of resources, which of them would be most suitable? The selection problem is aggravated by the fact that a number of SMD makers regularly launch different devices with a variety of hardware resources. Hence, in most cases, the available SMDs in an MCC would be vastly heterogeneous in terms of hardware (e.g., CPU & GPU clock frequency, number of cores, primary memory size, secondary memory size, battery capacity, etc.); and with different specifications, the SMDs boast varying computing capacities [28].

Along with the hardware specifications of the SMDs, another aspect needs to be considered while selecting an SMD as a computing device: the present status of different resources of an SMD, such as CPU & GPU load, available memory, available battery, signal strength, etc. Irrespective of their actual capacity, the usability of the resources depends on their actual availability. To elaborate on this, let us consider the following scenario:

Two SMDs, M1 and M2, have the CPU frequencies 1.8 GHz and 2.2 GHz, respectively. Their present CPU loads are 30% and 90%, respectively. In this case, though M2 has a more capable CPU, as an immediate computing resource, M1 would be preferable because it has a much lower CPU load, i.e., it is more usable than M2.

The values of these variable parameters change depending on how the user uses the SMD. That is why, instead of selecting the SMDs based only on the hardware specifications, the current status of these parameters needs to be considered. For a better QoS of MCC, it is crucial to select the most suitable SMDs with the best usable resources to offer at the moment of job submission and during its execution.
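The M1 and M2 scenario above can be checked with a rough calculation. The sketch below discounts each clock frequency by the current CPU load; this linear discount and the function name `usable_ghz` are illustrative assumptions, not a model defined in this paper.

```python
def usable_ghz(freq_ghz, load_pct):
    """Idle share of the CPU clock, assuming capacity falls linearly with load.

    The linear model is a simplifying assumption made only for illustration.
    """
    return freq_ghz * (1.0 - load_pct / 100.0)


m1 = usable_ghz(1.8, 30)  # M1: slower CPU, lightly loaded
m2 = usable_ghz(2.2, 90)  # M2: faster CPU, heavily loaded

# M1 keeps roughly 1.26 GHz of headroom, M2 only roughly 0.22 GHz.
preferred = "M1" if m1 > m2 else "M2"
print(preferred)
```

Under this assumption, the nominally weaker device offers several times more immediately usable capacity, which is why the variable parameters cannot be ignored.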

In general, considering all these diverse specifications, selecting the right SMD or a set of SMDs, in terms of computing resources, among many available SMDs in the MCC network, can be considered an MCDM problem.

1.3. Resource Selection as an MCDM Problem

Deciding on the best candidates from some alternatives based on multiple pre-set criteria is known as the MCDM problem. Suppose there is a finite set of distinct alternatives {A1, A2, …, An}. The alternatives are evaluated using a set of criteria {C1, C2, …, Cm}. A performance score pij is calculated for each alternative Ai ∀ i = 1, 2, …, n with respect to the criterion Cj ∀ j = 1, 2, …, m. Based on the calculated performance scores, an MCDM method orders the alternatives from the best to the worst. Here, the alternatives are homogeneous in nature, but the criteria may not be. They can be expressed in different units which do not have any apparent interrelationship. The criteria may conflict with each other; i.e., some may have maximizing objectives while others have minimizing objectives. The criteria may also have some weight, signifying their importance in the decision-making [29]. The common stages of a typical MCDM method are shown in Figure 1.

Figure 1.

Typical MCDM stages.

In our SMD selection problem, the alternatives are the SMDs available in the MCC at the time of job submission, and the criteria are different parameters considered for SMD selection (e.g., CPU frequency, RAM, CPU load, etc.). The MCDM solutions provide a ranking of the available SMDs based on the selection criteria. From this ranked list, the resource management module of the MCC selects the top-ranked SMD(s) for job scheduling.
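The mapping from SMDs and criteria to a ranked list can be sketched as follows. This is a minimal illustration, not the authors' implementation: the device names, criteria, values, and weights are hypothetical, and the scoring is a plain weighted sum over min-max normalized values, used here only as a placeholder for any of the MCDM methods discussed later.

```python
# Hypothetical snapshot: one row per SMD (alternative), one column per criterion.
criteria = ["cpu_ghz", "ram_gb", "cpu_load_pct"]
benefit = [True, True, False]   # False marks a cost (minimizing) criterion
weights = [0.3, 0.2, 0.5]       # illustrative weights that sum to 1
smds = {
    "M1": [1.8, 4.0, 30.0],
    "M2": [2.2, 6.0, 90.0],
    "M3": [2.0, 3.0, 50.0],
}


def weighted_sum_scores(smds, weights, benefit):
    """Placeholder scoring: min-max normalize each column, then weight and add.

    Assumes every criterion takes at least two distinct values.
    """
    names = list(smds)
    columns = list(zip(*smds.values()))
    scores = dict.fromkeys(names, 0.0)
    for j, col in enumerate(columns):
        lo, hi = min(col), max(col)
        for name, x in zip(names, col):
            norm = (x - lo) / (hi - lo) if benefit[j] else (hi - x) / (hi - lo)
            scores[name] += weights[j] * norm
    return scores


def select_top(scores, k=1):
    """Return the k best-ranked SMD identifiers, best first."""
    return sorted(scores, key=scores.get, reverse=True)[:k]


scores = weighted_sum_scores(smds, weights, benefit)
print(select_top(scores, k=2))
```

The resource management module would call something like `select_top` on the scores produced by whichever MCDM method is in use.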

Over the years, several algorithms have been developed which contributed significantly to the evolution of the expanding field of MCDM. These methods differ in terms of their computational logic and assumption, applicability, calculation complexities, and ability to withstand variations in the given conditions. Table 1 lists some of the popular MCDM approaches and the most noteworthy representatives of each approach.

Table 1.

The popular MCDM approaches and their respective popular representatives.

1.4. Paper Objective

Though the resource selection problem in MCC is an ideal MCDM problem, we could not find any significant work on this topic. In fact, MCDM is not sufficiently explored to solve the resource selection problems in analogous distributed computing systems. As discussed in Section 2, very few works have attempted using MCDM methods for resource selection in the allied domains such as grid computing, cloud computing, and mobile cloud.

However, witnessing the wide-scale applications of MCDM, especially in decision-making problems, we believe that it can also offer promising solutions for resource selection in MCC and other similar computing systems, which has not been explored so far. For real-time resource selection in a dynamic environment like MCC, it is necessary to adopt an MCDM approach that provides consistent and considerably accurate SMD selection decisions while balancing various parameters at a reasonable time complexity. In view of that, the key objective of this paper is to find out, among several existing MCDM methods, which one would be the most suitable for this particular problem scenario.

In this paper, we aim to assess and compare the performance of different MCDM methods in selecting SMDs as computing resources in MCC. The comparative assessment is made in terms of the correctness and robustness of the SMD rankings given by each method and the precise run-time of each method.

1.5. Paper Contribution

This paper presents a comparative study of five MCDM methods under asymmetric conditions with varying criteria and alternative sets for resource selection in MCC. The following are the main contributions of the paper:

- We use five distinct MCDM algorithms for the comparative analysis—EDAS, ARAS, MABAC, COPRAS, and MARCOS.

- The five algorithms that are used in this study are of distinctive nature in terms of their fundamental procedure. Moreover, the combination of the considered MCDM methods comprises some popularly used methods and some recently proposed methods. This diverse combination for a comparative study of MCDM methods is quite rare in the literature.

- To check the impact of the number of alternatives and criteria on the performance of the MCDM methods, we consider four data sets of different sizes. Each of the methods is implemented on all four datasets.

- We carry out an extensive comparative analysis of the results for all the considered scenarios under different variations of criteria and alternative sets. The comparative analysis is done on two aspects: (a) an exhaustive validation and robustness check and (b) the time complexity of each method.

- Along with the time complexity of each MCDM method, the actual runtime of each method on two different types of devices (laptop and smartphone) is compared and analyzed for each considered scenario.

- We found hardly any work in which computational and runtime-based comparison of different MCDM methods has been carried out apart from the validation and robustness check. To be specific, this paper is the first of its kind that compares the MCDM methods of different categories for resource selection in MCC or any other distributed mobile computing systems.

1.6. Paper Organization

The rest of this paper is presented as follows. In Section 2, we collate some of the related work and discuss their findings. Section 3 discusses the objective weighting method (Entropy) and other MCDM methods used in the study and their respective algorithms. In Section 4, we furnish the research methodology, which includes the details of data collection, choosing the resource selection criteria, and different experimental cases (datasets) to be considered for the study. Section 5 presents the experimental details and results of the comparative analysis. Section 6 presents a critical analysis of the experimental findings and the rationality and practicability of this study. Finally, Section 7 concludes the paper while pointing out the limitations of this study and mentioning the future scopes and research prospects for improving this work. Table 2 lists the acronyms used in this paper and their full forms.

Table 2.

List of acronyms.

2. Related Work

MCDM techniques have been used for decision-making in several application domains for a long time [44,45]. They have been extensively used in engineering [46]. Table 3 lists some major application areas of MCDM along with respective references. However, this list is in no way comprehensive but only representative. To make the list short, we majorly considered the review or survey articles. In the following, we discuss some scholarly works in the context of our study.

Table 3.

Examples of various applications of MCDM methods.

Like web service selection [47,48], MCDM methods are also popularly used for cloud service selection [49,50,51]. Youssef [52] used a combination of TOPSIS and BWM to rank cloud service providers based on nine service evaluation criteria, including sustainability, response time, usability, interoperability, cost, maintainability, reliability, scalability, and security. Singla et al. [53] used Fuzzy AHP and Fuzzy TOPSIS to select optimal cloud services in a dynamic mobile cloud computing environment. They considered resource availability, privacy, capacity, speed, and cost as selection criteria.

MCDM methods are being used to improve the efficiency and effectiveness of job offloading in mobile cloud computing [54,55]. To save the energy of a mobile device, Ravi and Peddoju [56] used TOPSIS for selecting suitable service providers such as cloud, cloudlet, and peer mobile devices to offload the computation tasks. They considered the waiting time, the energy required for communication, the energy required for processing in mobile devices, and connection time with the resource as the selection criteria.

Mishra et al. [57] proposed an adaptive MCDM model for resource selection in fog computing, which can accommodate the new-entrant fog nodes without reranking all the alternatives. The proposed method is claimed to have less response time and is suitable for a dynamic and distributed environment.

To ensure the quality of the collected data in mobile crowd sensing applications, Gad-ElRab and Alsharkawy [58] used the SAW method for selecting the most efficient devices based on computation capabilities, available energy, sensors attached to the device, etc.

Nik et al. [59] used the TOPSIS method to select the resource with the best response time for asynchronous replicated systems in a utility-based computing environment. To achieve a shorter response time, they considered four QoS parameters (efficiency, freshness of data, reliability, and cost) as selection criteria.

MCDM methods have been used for resource selection in grid computing as well. Mohammadi et al. [60] used AHP and TOPSIS in combination for grid resource ranking, considering cost, security, location, processing speed, and round-trip time as criteria. Abdullah et al. [61] used the TOPSIS method to select resources for fair load balancing in a multi-level computing grid. For resource selection, they considered three criteria: expected completion time, resource reliability, and the resource’s load. Kaur and Kadam [62] used MCDM methods for a two-phased resource selection in grid computing. They applied the SAW method to rank the best resources at the local or lower level and then used enriched PROMETHEE-II combined with AHP for a global resource selection, i.e., to select the best resources across all the top-ranked resources at each local level.

Several works are proposed for evaluation and selection of smartphones [63,64,65,66,67,68,69], but in all these works, smartphones were considered as consumer devices. Various aspects were considered for selection by matching with the consumers’ choice and interest. We could not find any work that applied MCDM for smartphone selection as a computing resource.

Triantaphyllou, in his book [70], extensively compared the popular MCDM methods such as WSM, WPM, TOPSIS, ELECTRE, and AHP (along with its variants). The methods were discussed based on real-life issues, both theoretically and empirically. A sensitivity analysis was performed on the considered methods, and the abnormalities with some of these methods were rigorously analyzed. Velasquez and Hester [71] performed a literature review of several MCDM methods, viz., MAUT, AHP, fuzzy set theory, case-based reasoning, DEA, SMART, goal programming, ELECTRE, PROMETHEE, SAW, and TOPSIS. This study aimed to analyze the advantages and disadvantages of the considered methods and examine their suitability in specific application scenarios.

Several other works attempted to present comparative studies of different MCDM methods with respect to different application areas. Table 4 presents a comprehensive list of such works. However, despite our best effort, we could not find any comparative analysis of MCDM methods for resource selection in a dynamic environment like MCC or any other related applications. From the table, it can also be observed that barring only a few works, none has conducted time complexity analysis. Furthermore, we found not a single paper that calculated the actual runtime of the MCDM algorithms. These unique contributions of our paper make it exclusive.

Table 4.

Survey of comparative analysis of different MCDM methods.

3. Research Background

This section briefly discusses the key methods considered for the comparative study and their corresponding computational algorithms.

3.1. MCDM Methods Considered for the Comparative Study

This section briefly describes five MCDM methods considered for the comparative analysis along with their computation algorithms. In this paper, we derived the preferential order of the alternatives based on the following aspects:

- (a) Separation from average solution (EDAS method).

- (b) The relative positioning of the alternatives with respect to the best one (ARAS method).

- (c) Utility-based classification and preferential ordering on the proportional scale (COPRAS method).

- (d) Approximation of the positions of the alternatives to the average solution area (MABAC method).

- (e) Compromise solution while trading off the effects of the criteria on the alternatives (MARCOS method).

We considered the widely used MCDM methods as a representation of each above-mentioned class. In Table 5, we present a comparative analysis of the merits and demerits of the considered MCDM methods. Since the calculation time is vital in our problem (resource selection in MCC) and subjective bias might affect the final solution, we avoided considering the pairwise comparison methods such as AHP, ANP, ELECTRE, MACBETH, REMBRANDT (multiplicative AHP), PAPRIKA, etc.

Table 5.

Merits and demerits of the MCDM methods considered in this study.

3.1.1. EDAS Method

EDAS is a recently developed distance-based algorithm that considers the average solution as a reference point [32]. An alternative with a higher favorable deviation, i.e., the positive distance from average (PDA), and a lower non-favorable deviation, i.e., the negative distance from average (NDA), is preferred. As a result, EDAS provides a reasonably robust solution that is free from the outlier effect, the rank reversal problem, and decision-making fluctuations [165]. However, the EDAS method does not portray a favorable result. Therefore, this method is more suited in the case of risk aversion considerations. The procedural steps of EDAS are described below.

Step 1: Calculation of the average solution

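The average solution is the midpoint for all alternatives in the solution space with respect to a particular criterion and is calculated by:

$$AV_j = \frac{\sum_{i=1}^{m} x_{ij}}{m}$$

where $x_{ij}$ is the performance value of the ith alternative with respect to the jth criterion (i = 1, 2, …, m; j = 1, 2, …, n).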

Step 2: Calculation of PDA and NDA

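PDA and NDA are the dispersion measures for each possible solution with respect to the average point. An alternative with higher PDA and lower NDA is treated as better than the average one. The PDA and NDA matrices are defined as:

PDA = [PDAij]m×n

NDA = [NDAij]m×n

where:

$$PDA_{ij} = \begin{cases} \dfrac{\max\left(0,\; x_{ij} - AV_j\right)}{AV_j}, & \text{if the } j\text{th criterion is of profit type} \\[6pt] \dfrac{\max\left(0,\; AV_j - x_{ij}\right)}{AV_j}, & \text{if the } j\text{th criterion is of cost type} \end{cases}$$

and:

$$NDA_{ij} = \begin{cases} \dfrac{\max\left(0,\; AV_j - x_{ij}\right)}{AV_j}, & \text{if the } j\text{th criterion is of profit type} \\[6pt] \dfrac{\max\left(0,\; x_{ij} - AV_j\right)}{AV_j}, & \text{if the } j\text{th criterion is of cost type} \end{cases}$$

Here, $x_{ij}$ is the performance value of the ith alternative with respect to the jth criterion, and $AV_j$ is the average solution for the jth criterion.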

It can be inferred that if PDA > 0, then the corresponding NDA = 0, and if NDA > 0, then the PDA = 0 for an alternative with respect to a particular criterion.

Step 3: Determine the weighted sum of PDA and NDA for all alternatives

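$$SP_i = \sum_{j=1}^{n} w_j \, PDA_{ij}, \qquad SN_i = \sum_{j=1}^{n} w_j \, NDA_{ij}$$

where wj is the weight of the jth criterion.

Step 4: Normalization of the values of SP and SN for all the alternatives

The linearly normalized SP and SN values are obtained by using the following expressions:

$$NSP_i = \frac{SP_i}{\max_i \left(SP_i\right)}, \qquad NSN_i = 1 - \frac{SN_i}{\max_i \left(SN_i\right)}$$

Step 5: Calculation of the appraisal score (AS) for all alternatives

Here the appraisal score denotes the performance score of the alternatives.

$$AS_i = \frac{1}{2}\left(NSP_i + NSN_i\right)$$

where 0 ≤ ASi ≤ 1. The alternative having the highest ASi is ranked first and so on.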
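The five EDAS steps can be sketched in plain Python as follows. This is a minimal sketch that assumes the commonly used EDAS formulation (deviations divided by the criterion average, SP divided by its maximum, and SN normalized as one minus its ratio to the maximum). The function name `edas` and the three-device sample data are hypothetical.

```python
def edas(matrix, weights, benefit):
    """Return EDAS appraisal scores; rows are alternatives, columns are criteria.

    Assumes positive criterion averages and at least one non-zero SP and SN.
    """
    m, n = len(matrix), len(matrix[0])
    # Step 1: average solution per criterion
    avg = [sum(row[j] for row in matrix) / m for j in range(n)]
    sp, sn = [], []
    for row in matrix:
        sp_i = sn_i = 0.0
        for j in range(n):
            # Signed relative deviation; positive means better than average
            dev = (row[j] - avg[j]) / avg[j]
            if not benefit[j]:
                dev = -dev
            sp_i += weights[j] * max(0.0, dev)    # Steps 2-3: weighted PDA
            sn_i += weights[j] * max(0.0, -dev)   # Steps 2-3: weighted NDA
        sp.append(sp_i)
        sn.append(sn_i)
    # Step 4: normalize SP and SN
    nsp = [s / max(sp) for s in sp]
    nsn = [1.0 - s / max(sn) for s in sn]
    # Step 5: appraisal score
    return [(p + q) / 2.0 for p, q in zip(nsp, nsn)]


# Hypothetical SMDs: CPU GHz (profit), RAM GB (profit), CPU load % (cost)
X = [[1.8, 4.0, 30.0], [2.2, 6.0, 90.0], [2.0, 3.0, 50.0]]
w = [0.3, 0.2, 0.5]
is_benefit = [True, True, False]

scores = edas(X, w, is_benefit)
ranking = sorted(range(len(X)), key=lambda i: scores[i], reverse=True)
print(ranking)
```

With these sample values, the lightly loaded first device receives the highest appraisal score.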

3.1.2. ARAS Method

ARAS method uses the concept of utility values for comparing the alternatives. In this method, a relative scale (i.e., ratio) is used to compare the alternatives with respect to the optimal solution [35,166,167]. This method uses a simple additive approach while working under compromising situations effectively and with lesser computational complexities [168,169]. However, it is observed that ARAS works reasonably well only when the number of alternatives is limited [170]. The procedural steps of ARAS are described below.

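Step 1: Formation of the decision matrix

$$X = [x_{ij}]_{m \times n}$$

where $x_{ij}$ is the performance value of the ith alternative with respect to the jth criterion, m is the number of alternatives, and n is the number of criteria.

Step 2: Determination of the optimal value

The optimal value for the jth criterion is given by:

$$x_{0j} = \begin{cases} \max_i x_{ij}, & \text{if the } j\text{th criterion is of profit type} \\ \min_i x_{ij}, & \text{if the } j\text{th criterion is of cost type} \end{cases}$$

Step 3: Formation of the normalized decision matrix

The criteria have different dimensions. Normalization is carried out to achieve dimensionless weighted performance values for all alternatives under the influences of the criteria. In this case, we follow a linear ratio approach for normalization. However, we consider the optimum point as the base level. Therefore, in the normalized decision matrix, we include the optimum value, and the order of the matrix is (m + 1) × n. In the ARAS method, a two-stage normalization is followed for the cost type of criteria. The normalized decision matrix is given by:

$$R = [r_{ij}]_{(m+1) \times n}$$

where:

$$r_{ij} = \frac{x_{ij}}{\sum_{i=0}^{m} x_{ij}}$$

In the case of cost-type criteria, we first consider $x^{*}_{ij} = 1 / x_{ij}$ and then apply the same ratio to $x^{*}_{ij}$.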

Step 4: Derive the weighted normalized decision matrix

$$V = [v_{ij}]_{(m+1) \times n}$$

where:

$$v_{ij} = r_{ij} \times w_j$$

Here, $r_{ij}$ is the normalized value, $w_j$ is the weight of the jth criterion, and $\sum_{j=1}^{n} w_j = 1$.

Step 5: Calculation of the optimality function value for each alternative

$$S_i = \sum_{j=1}^{n} v_{ij}$$

where i = 0, 1, …, m.

The higher the value of $S_i$, the better the alternative.

Step 6: Find out the priority order of the alternatives based on utility degree with respect to the ideal solution

$$K_i = \frac{S_i}{S_0}$$

where $S_0$ is the optimality function value of the optimal alternative and $K_i \in [0, 1]$.

A larger value of $K_i$ is preferable. The optimality function maintains a direct and proportional relationship with the performance values of the alternatives and the weights of the criteria. Hence, the greater the value of $S_i$, the more effective the corresponding solution. The degree of utility is essentially the usefulness of the corresponding alternative with respect to the optimal one.
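The ARAS steps can be sketched in plain Python as follows. This is a minimal sketch that assumes the commonly used formulation: an optimal row is prepended, cost-type values are inverted before the sum-based ratio normalization, and each utility degree is the ratio of an alternative's weighted sum to that of the optimal row. The function name `aras` and the sample data are hypothetical.

```python
def aras(matrix, weights, benefit):
    """Return ARAS utility degrees; rows are alternatives, columns are criteria.

    Assumes strictly positive criterion values.
    """
    n = len(weights)
    # Step 2: optimal value per criterion (max for profit, min for cost)
    optimal = [
        max(r[j] for r in matrix) if benefit[j] else min(r[j] for r in matrix)
        for j in range(n)
    ]
    # Step 1 and 3: extended matrix with the optimal row first
    ext = [optimal] + [list(r) for r in matrix]
    for row in ext:
        for j in range(n):
            if not benefit[j]:
                row[j] = 1.0 / row[j]   # first normalization stage for cost criteria
    col_sum = [sum(row[j] for row in ext) for j in range(n)]
    # Steps 3-5: ratio normalization, weighting, and optimality function
    s = [sum(weights[j] * row[j] / col_sum[j] for j in range(n)) for row in ext]
    # Step 6: utility degree relative to the optimal row (index 0)
    return [s_i / s[0] for s_i in s[1:]]


# Hypothetical SMDs: CPU GHz (profit), RAM GB (profit), CPU load % (cost)
X = [[1.8, 4.0, 30.0], [2.2, 6.0, 90.0], [2.0, 3.0, 50.0]]
w = [0.3, 0.2, 0.5]
is_benefit = [True, True, False]

utility = aras(X, w, is_benefit)
ranking = sorted(range(len(X)), key=lambda i: utility[i], reverse=True)
print(ranking)
```

Because the optimal row dominates every real alternative, each utility degree falls between 0 and 1.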

3.1.3. MABAC Method

MABAC uses two areas: an upper approximation area (UAA) for favorable or ideal solutions and a lower approximation area (LAA) for non-favorable or anti-ideal solutions for performance-based classifications of the solutions. This method provides lesser computational complexities compared to the EDAS and ARAS methods. Further, since this method does not involve distance-based separation measures, it generates stable results [33]. MABAC compares the alternatives based on relative strength and weakness [171]. Because of its simplicity and usefulness, MABAC has been a widely popular method in various applications, for example, social media efficiency measurement [172], health tourism [173], supply chain performance assessment [159], portfolio selection [174], railway management [175], medical tourism site selection [176], and selection of hotels [177]. The procedural steps of MABAC are described below.

Step 1: Normalization of the criteria values

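Here, a linear max-min type scheme is used. The usefulness of normalization is explained in the descriptions of the previous algorithms.

$$n_{ij} = \begin{cases} \dfrac{x_{ij} - x_j^{-}}{x_j^{+} - x_j^{-}}, & \text{if the } j\text{th criterion is of profit type} \\[6pt] \dfrac{x_{ij} - x_j^{+}}{x_j^{-} - x_j^{+}}, & \text{if the } j\text{th criterion is of cost type} \end{cases}$$

where $x_j^{+}$ and $x_j^{-}$ are the maximum and minimum criteria values, respectively.

Step 2: Formulate the weighted normalization matrix (Y)

Elements of Y are given by:

$$y_{ij} = w_j \left(n_{ij} + 1\right)$$

where $w_j$ is the criteria weight and $n_{ij}$ is the normalized criteria value.

Step 3: Determination of the Border Approximation Area (BAA)

The elements of the BAA (T) are denoted as:

$$T = [t_j]_{1 \times n}$$

where:

$$t_j = \left( \prod_{i=1}^{m} y_{ij} \right)^{1/m}$$

where m is the total number of alternatives, $y_{ij}$ is an element of Y, and $t_j$ corresponds to each criterion.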

Step 4: Calculation of the matrix Q related to the separation of the alternatives from BAA

Q = Y − T

A particular alternative is said to belong to the UAA (i.e., $T^{+}$) if $q_{ij} > 0$, to the LAA (i.e., $T^{-}$) if $q_{ij} < 0$, or to the BAA (i.e., T) if $q_{ij} = 0$, where $q_{ij}$ is an element of Q. The alternative is considered to be the best among the others if as many as possible of its criteria values belong to $T^{+}$.

Step 5: Ranking of the alternatives

It is done according to the final values of the criterion functions as given by:

$$S_i = \sum_{j=1}^{n} q_{ij}, \quad i = 1, 2, \ldots, m$$

The higher the value of $S_i$, the greater the preference.
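The MABAC steps can be sketched in plain Python as follows. This is a minimal sketch that assumes the commonly used formulation (max-min normalization, weighted values of the form weight times normalized value plus one, and a border approximation area given by the geometric mean of each column). The function name `mabac` and the sample data are hypothetical.

```python
import math


def mabac(matrix, weights, benefit):
    """Return MABAC criterion-function values; rows are alternatives.

    Assumes every criterion takes at least two distinct values.
    """
    m, n = len(matrix), len(weights)
    columns = list(zip(*matrix))
    scores = [0.0] * m
    for j in range(n):
        lo, hi = min(columns[j]), max(columns[j])
        # Step 1: max-min normalization (reversed for cost criteria)
        if benefit[j]:
            norm = [(x - lo) / (hi - lo) for x in columns[j]]
        else:
            norm = [(x - hi) / (lo - hi) for x in columns[j]]
        # Step 2: weighted normalized values, always positive
        y = [weights[j] * (v + 1.0) for v in norm]
        # Step 3: border approximation area as the column's geometric mean
        t_j = math.exp(sum(math.log(v) for v in y) / m)
        # Steps 4-5: distance from the border, summed over criteria
        for i in range(m):
            scores[i] += y[i] - t_j
    return scores


# Hypothetical SMDs: CPU GHz (profit), RAM GB (profit), CPU load % (cost)
X = [[1.8, 4.0, 30.0], [2.2, 6.0, 90.0], [2.0, 3.0, 50.0]]
w = [0.3, 0.2, 0.5]
is_benefit = [True, True, False]

scores = mabac(X, w, is_benefit)
ranking = sorted(range(len(X)), key=lambda i: scores[i], reverse=True)
print(ranking)
```

A positive contribution for a criterion places the alternative in the upper approximation area for that criterion, and a negative one places it in the lower area.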

3.1.4. COPRAS Method

The COPRAS method calculates the utility values of the alternatives under the direct and proportional dependencies of the influencing criteria for carrying out preferential ranking [38,178,179]. The procedural steps for finding out the utility values of the alternatives using the COPRAS method are discussed in the following. The alternatives are ordered in descending order based on the obtained utility values.

Step 1: Construct the normalized decision matrix using the simple proportional approach

$$r_{ij} = \frac{x_{ij}}{\sum_{i=1}^{m} x_{ij}}$$

where $x_{ij}$ is the performance value of the ith alternative with respect to the jth criterion (i = 1, 2, …, m; j = 1, 2, …, n).

Step 2: Calculation of the sums of the weighted normalized values for optimization in ideal and anti-ideal effects

The ideal and anti-ideal effects are calculated as:

$$S_{+i} = \sum_{j=1}^{k} r_{ij} \, w_j$$

$$S_{-i} = \sum_{j=k+1}^{n} r_{ij} \, w_j$$

where k is the number of maximizing (i.e., profit type) criteria and $w_j$ is the significance of the jth criterion.

In the case of $S_{+i}$, all values correspond to the beneficial or profit type criteria, and for $S_{-i}$, we take the performance values of the alternatives related to cost type criteria.

Step 3: Calculation of the relative weights of the alternatives

The relative weight for any alternative (ith) is given as:

$$Q_i = S_{+i} + \frac{S_{-\min} \sum_{i=1}^{m} S_{-i}}{S_{-i} \sum_{i=1}^{m} \left( S_{-\min} / S_{-i} \right)}$$

where $S_{+i}$ and $S_{-i}$ are the weighted normalized sums over the profit type and cost type criteria, respectively, and $S_{-\min} = \min_i S_{-i}$.

The $Q_i$ value corresponding to the ith alternative signifies its degree of satisfaction with respect to the given conditions. The greater the value of $Q_i$, the better the relative performance of the concerned alternative and, hence, the higher its position. Therefore, the most rational and efficient DMU should have $Q_{\max}$, i.e., the optimum value. The relative utility of a particular DMU or alternative is determined by comparing the $Q_i$ value of any DMU with the $Q_{\max}$ value, corresponding to the most effective one.

The utility for each alternative is given by:

$$U_i = \frac{Q_i}{Q_{\max}} \times 100\%$$

Needless to say, the $U_i$ value for the most preferred choice is 100%.
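The COPRAS steps can be sketched in plain Python as follows. This is a minimal sketch that assumes the commonly used formulation; the relative weight is computed in a simplified but algebraically equivalent form, in which the minimum cost-side sum cancels out. The function name `copras` and the sample data are hypothetical.

```python
def copras(matrix, weights, benefit):
    """Return COPRAS utility percentages; rows are alternatives.

    Assumes positive values and at least one cost-type criterion.
    """
    n = len(weights)
    col_sum = [sum(r[j] for r in matrix) for j in range(n)]
    s_plus, s_minus = [], []
    for row in matrix:
        # Step 1: proportional normalization, then weighting
        d = [weights[j] * row[j] / col_sum[j] for j in range(n)]
        # Step 2: sums over profit-type and cost-type criteria
        s_plus.append(sum(v for v, b in zip(d, benefit) if b))
        s_minus.append(sum(v for v, b in zip(d, benefit) if not b))
    # Step 3: relative weight; a small cost-side sum raises the score
    total_minus = sum(s_minus)
    inv_sum = sum(1.0 / s for s in s_minus)
    q = [sp + total_minus / (sm * inv_sum) for sp, sm in zip(s_plus, s_minus)]
    q_max = max(q)
    # Utility relative to the best alternative, in percent
    return [100.0 * v / q_max for v in q]


# Hypothetical SMDs: CPU GHz (profit), RAM GB (profit), CPU load % (cost)
X = [[1.8, 4.0, 30.0], [2.2, 6.0, 90.0], [2.0, 3.0, 50.0]]
w = [0.3, 0.2, 0.5]
is_benefit = [True, True, False]

utility = copras(X, w, is_benefit)
ranking = sorted(range(len(X)), key=lambda i: utility[i], reverse=True)
print(ranking)
```

The best alternative always receives a utility of 100%, and the others are expressed as a percentage of it.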

3.1.5. MARCOS Method

MARCOS belongs to a strand of MCDM algorithms that derives solutions under compromise situations. However, unlike the previous methods, MARCOS includes the ideal and anti-ideal solutions in the fundamental decision matrix at the very beginning. Like COPRAS, MARCOS also finds the utility values of the alternatives. However, here the decision-maker can make a trade-off between the ideal and anti-ideal solutions to arrive at the utility values of the alternatives. The MARCOS method is also capable of handling a large set of alternatives and criteria [43,180,181]. The procedural steps of MARCOS are described below.

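Step 1: Formation of the extended decision matrix (D*) by including the anti-ideal solution (AAI) values in the first row and the ideal solution (AI) values in the last row

AAI and AI are defined by:

$$AAI = \min_i x_{ij} \ \text{if } j \in B \quad \text{and} \quad \max_i x_{ij} \ \text{if } j \in C$$

$$AI = \max_i x_{ij} \ \text{if } j \in B \quad \text{and} \quad \min_i x_{ij} \ \text{if } j \in C$$

where B is the set of profit type criteria and C is the set of cost type criteria.

The anti-ideal solution represents the worst choice, whereas the ideal solution is the reference point that shows the best possible characteristics given the set of constraints, i.e., criteria.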

Step 2: Normalization of D*

The normalized values are given by:

Since it is preferred to set apart from the anti-ideal reference point, in MARCOS, the normalization is carried out using a linear ratio approach with respect to the anti-ideal solution.

Step 3: Formation of weighted D*

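After normalization, the weighted normalized matrix with elements $v_{ij}$ is formulated by multiplying the normalized value of each alternative by the corresponding weight of the criterion, as given below:

$$v_{ij} = n_{ij} \times w_j$$

where $n_{ij}$ is the normalized value and $w_j$ is the weight of the jth criterion.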

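Step 4: Calculation of utility degrees of the alternatives with respect to the anti-ideal and ideal solutions

The utility degree of a particular alternative with respect to the given conditions represents its relative attractiveness. The utility degrees are calculated as follows:

$$K_i^{-} = \frac{S_i}{S_{aai}}, \qquad K_i^{+} = \frac{S_i}{S_{ai}}$$

where:

$$S_i = \sum_{j=1}^{n} v_{ij}$$

Here, $v_{ij}$ is the weighted normalized value, and $S_{aai}$ and $S_{ai}$ are the corresponding sums for the anti-ideal and ideal rows, respectively.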

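Step 5: Calculation of values of utility functions with respect to the anti-ideal and ideal solutions

The utility function represents the trade-off that the observed or considered alternatives make vis-à-vis the ideal and anti-ideal reference points, and the values are given by:

$$f\left(K_i^{-}\right) = \frac{K_i^{+}}{K_i^{+} + K_i^{-}}, \qquad f\left(K_i^{+}\right) = \frac{K_i^{-}}{K_i^{+} + K_i^{-}}$$

where $K_i^{-}$ and $K_i^{+}$ are the utility degrees of the ith alternative with respect to the anti-ideal and ideal solutions, respectively.

The decision related to the selection of a particular alternative is based on the utility function values. The utility function exhibits the relative position of the concerned alternative with respect to the reference points. The best alternative is closest to the ideal reference and, consequently, farthest from the anti-ideal one compared to other available choices.

Step 6: Calculation of the utility function values for the alternatives

The utility function value for the ith alternative is calculated by:

$$f\left(K_i\right) = \frac{K_i^{+} + K_i^{-}}{1 + \dfrac{1 - f\left(K_i^{+}\right)}{f\left(K_i^{+}\right)} + \dfrac{1 - f\left(K_i^{-}\right)}{f\left(K_i^{-}\right)}}$$

The alternative having the highest utility function value is ranked first over the others.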
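The MARCOS steps can be sketched in plain Python as follows. This is a minimal sketch of one common formulation, in which each value is normalized as a ratio against the ideal row; that normalization choice should be treated as an assumption here. The function name `marcos` and the sample data are hypothetical.

```python
def marcos(matrix, weights, benefit):
    """Return MARCOS utility function values; rows are alternatives.

    Assumes strictly positive criterion values.
    """
    n = len(weights)
    columns = list(zip(*matrix))
    # Step 1: ideal (AI) and anti-ideal (AAI) rows
    ideal = [max(c) if b else min(c) for c, b in zip(columns, benefit)]
    anti = [min(c) if b else max(c) for c, b in zip(columns, benefit)]

    def weighted_sum(row):
        # Steps 2-3: ratio normalization against the ideal row, then weighting
        return sum(
            weights[j] * (row[j] / ideal[j] if benefit[j] else ideal[j] / row[j])
            for j in range(n)
        )

    s_ai, s_aai = weighted_sum(ideal), weighted_sum(anti)
    scores = []
    for row in matrix:
        s_i = weighted_sum(row)
        # Step 4: utility degrees relative to anti-ideal and ideal rows
        k_minus, k_plus = s_i / s_aai, s_i / s_ai
        # Step 5: utility functions for the two reference points
        f_minus = k_plus / (k_plus + k_minus)
        f_plus = k_minus / (k_plus + k_minus)
        # Step 6: final utility function value
        scores.append(
            (k_plus + k_minus)
            / (1.0 + (1.0 - f_plus) / f_plus + (1.0 - f_minus) / f_minus)
        )
    return scores


# Hypothetical SMDs: CPU GHz (profit), RAM GB (profit), CPU load % (cost)
X = [[1.8, 4.0, 30.0], [2.2, 6.0, 90.0], [2.0, 3.0, 50.0]]
w = [0.3, 0.2, 0.5]
is_benefit = [True, True, False]

scores = marcos(X, w, is_benefit)
ranking = sorted(range(len(X)), key=lambda i: scores[i], reverse=True)
print(ranking)
```

Since the ideal and anti-ideal rows are shared by all alternatives, the final value grows with each alternative's weighted sum.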

3.2. Entropy Method for Criteria Weight Calculation

Each selection criterion carries some weight. The weights define the importance of the criteria in the decision-making. To determine the criteria weights, we applied the most popularly used entropy method. The entropy method works on objective information following the concept of the probabilistic information theory [182]. The objective weighting approach can mitigate the man-made instabilities in the subjective weighting approach and gives more realistic results [183]. The entropy method shows its efficacy in dealing with imprecise information and dispersions while offsetting the subjective bias [184,185]. Extant literature shows a colossal number of applications of the Entropy method for determining criteria weights in various situations (for example [174,186,187,188,189,190]). The steps of the entropy method are given below:

Suppose $X = [x_{ij}]_{m \times n}$ represents the decision matrix, where m is the number of alternatives and n is the number of criteria.

Step 1: Normalization of the decision matrix

Normalization is carried out to bring the performance values of all alternatives subject to different criteria to a common unitless form having scale values ∈ (0, 1). Here we follow the linear normalization scheme.

The entropy value signifies the level of disorder. In the case of criteria weight determination, a criterion with a lower entropy value shows greater dispersion across the alternatives, which indicates that the criterion carries more useful information for distinguishing among them.

The normalization matrix is represented as $R = [r_{ij}]_{m \times n}$, where the elements are given by:

Step 2: Calculation of Entropy values

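The entropy value for the jth criterion is given by:

$$H_j = -k \sum_{i=1}^{m} f_{ij} \ln f_{ij}$$

where k is a constant value and is defined by:

$$k = \frac{1}{\ln m}$$

and:

$$f_{ij} = \frac{r_{ij}}{\sum_{i=1}^{m} r_{ij}}$$

Here, $r_{ij}$ is the normalized value from Step 1. If $f_{ij} = 0$, then $f_{ij} \ln f_{ij} = 0$.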

Step 3: Calculation of criteria weight

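The weight for each criterion is given by:

$$w_j = \frac{1 - H_j}{\sum_{j=1}^{n} \left(1 - H_j\right)}$$

where $H_j$ is the entropy value of the jth criterion. Here, the higher the value of $w_j$, the more information is contained in the jth criterion.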
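The entropy weighting steps can be sketched in plain Python as follows. This is a minimal sketch that assumes the commonly used Shannon entropy formulation and takes an already normalized, non-negative matrix as input, so the Step 1 normalization scheme is left to the caller. The function name `entropy_weights` and the sample matrix are hypothetical.

```python
import math


def entropy_weights(norm_matrix):
    """Return entropy-based criteria weights for a normalized decision matrix.

    Assumes non-negative entries, more than one alternative, and at least
    one criterion whose values differ across alternatives.
    """
    m, n = len(norm_matrix), len(norm_matrix[0])
    k = 1.0 / math.log(m)
    diversity = []
    for j in range(n):
        column = [row[j] for row in norm_matrix]
        total = sum(column)
        h = 0.0
        for r in column:
            f = r / total
            if f > 0:  # convention: f * ln(f) is taken as 0 when f = 0
                h -= k * f * math.log(f)
        diversity.append(1.0 - h)  # low entropy means high diversity
    norm = sum(diversity)
    return [d / norm for d in diversity]


# Hypothetical normalized matrix: the first criterion is identical for all
# alternatives, the second varies strongly.
R = [[1.0, 1.0], [1.0, 2.0], [1.0, 9.0]]
weights = entropy_weights(R)
print(weights)
```

A criterion on which all alternatives are identical has maximum entropy and therefore receives a weight close to zero, since it cannot help to discriminate among the alternatives.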

4. Research Methodology

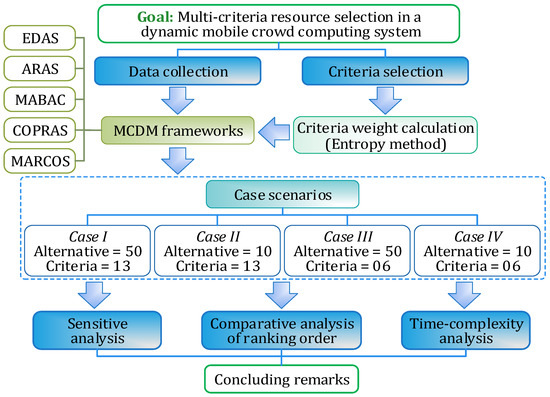

This section discusses the research framework used in this paper and provides the computational steps of the MCDM algorithms applied for carrying out the comparative analysis in a dynamic environment. Figure 2 depicts the steps followed in this research work.

Figure 2.

Research framework.

4.1. Resource Selection Criteria

For the experimental purpose, in this paper, we considered a generalized scenario for the resource requirement of the MCC computing jobs. Generally, an SMD’s computing capability is determined by typical resource parameters such as CPU and GPU power, RAM, battery, signal strength (for data transfers), etc. Here, we considered thirteen criteria for SMD selection, as shown in Table 6. Out of these, eight are profit criteria, i.e., their maximized values would be ideal for selection, whereas five are cost criteria, i.e., their minimized values should be ideal.

Table 6.

List of selection criteria.

However, depending on specific applications and specific job types, the criteria and their weights would vary. For example, a CPU-bound job may not use GPU cores, while some highly computing-intensive jobs (such as image and video analysis, complex scientific calculations, etc.) would use GPU more than the CPU. Similarly, the RAM size would be a decisive factor for a data-intensive job that might not be so important for a CPU-intensive job. Here, we chose the criteria that would, in general and overall, be considered for selecting an SMD as a computing resource.

4.2. Data Collection

To collect the SMD data to be used in the comparative analysis, we considered a local MCC scenario at the Data Engineering Lab of the Department of Computer Science & Engineering at National Institute of Technology, Durgapur. We collected data from the users’ SMDs connected to the Wi-Fi access point deployed at this lab, which is generally accessed by the institute’s research scholars, project students, faculty members, and technical staff. We developed a logger program in the Python 3.6 environment. The Python script constantly monitored the wireless network interfaces. Whenever an SMD connected to the access point, the logger program collected the required data and stored them in a database within the MCC coordinator. All the devices connected to the access point were identified (UID) by their MAC addresses. The overall MCC setup and data collection scenario is shown in Figure 3.

Figure 3.

SMD data collection setup.

In another experiment for local MCC [191], we logged the SMD information of several users (whoever connected to the access point) over a period of nearly eight months. Among them, we picked the users who were more consistent, with high presence frequency and less sparsity. For this study, we considered 50 such SMDs, selected randomly. We collected various information related to the users and their SMDs. However, in this paper, we used only the information required for this experiment. Specifically, we considered a total of thirteen resource parameters that are important in the decision-making process for selecting an SMD as a suitable resource in MCC, as shown in Table 6. It can be seen from the table that some resource parameters are fixed, i.e., their values do not change during their lifetime (e.g., C1, C2, C3, C4, C6, and C13), while the values of other parameters change dynamically (e.g., C5, C7, C8, C9, C10, C11, and C12). We took instantaneous values of all the parameters and used the same values in all experimental illustrations.

4.3. Experiment Cases

In this study, we wanted to assess the effect of the number of criteria and alternatives on the selection outcome and computational complexity. Therefore, we considered different variations of the selection criteria and alternatives for comparison. Accordingly, we generated four case scenarios, as discussed in the following subsections. Each case has a different number of alternatives (SMDs) and criteria. The reason behind choosing four datasets of different sizes is to assess the performance of the MCDM methods under different MCC scenarios.

4.3.1. Case 1: Full List of Alternatives and Full Criteria Set

This scenario considers the full list of alternatives under comparison (i.e., 50) subject to the influence of full criteria set consisting of 13 different criteria, as shown in Table 6. Accordingly, the decision matrix (50 × 13) is given in Table 7.

Table 7.

Decision matrix (Case 1).

4.3.2. Case 2: Lesser Number of Alternatives and Full Criteria Set

In this minimized dataset, we assume that only ten SMDs are available for crowd computing (typically in a small-scale MCC). Accordingly, we reduced the number of alternatives. Here, the decision-maker would be able to compare the MCDM methods on a limited number of alternatives for the full list of criteria. For simplicity, we selected one smartphone model out of each group of five, starting from the beginning, i.e., M5, M10, M15, and so on. The decision matrix (10 × 13) is given in Table 8.

Table 8.

Decision matrix (Case 2).

4.3.3. Case 3: Total Number of Alternatives and a Smaller Number of Criteria

In some situations, depending on the MCC application requirement, the full criteria set may not need to be considered. For these cases, only a small number of crucial criteria may be defined. To represent such a scenario, we considered a minimized dataset by eliminating some criteria from the original dataset. We assumed that some criteria (e.g., CPU and battery temperature and signal strength) could be kept out of the selection matrix and, if required, could simply be set as threshold criteria. For example, suppose the threshold for temperature is set at 40 °C. In that case, all the SMDs with a temperature higher than this would be filtered out and would not be considered for selection, irrespective of their other resource specifications. We also removed the GPU information, assuming that the tasks are CPU-bound only and do not need to exploit the power of the GPU, i.e., the jobs are sequential and not parallel. It can also be vice versa, i.e., we could consider the GPU where the MCC job involves mostly parallel processing. Table 9 shows the criteria considered, and Table 10 presents the decision matrix (50 × 6).
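The threshold idea described above can be sketched as a simple pre-filter that runs before any ranking. The field name `cpu_temp_c`, the device records, and the readings are hypothetical and serve only to illustrate the 40 °C example.

```python
def filter_by_threshold(devices, key, max_value):
    """Keep only the devices whose `key` reading does not exceed `max_value`."""
    return [d for d in devices if d[key] <= max_value]


# Hypothetical readings; real values would come from the logged SMD data.
devices = [
    {"id": "M1", "cpu_temp_c": 36.5},
    {"id": "M2", "cpu_temp_c": 41.2},
    {"id": "M3", "cpu_temp_c": 40.0},
]

# SMDs hotter than 40 degrees Celsius are dropped, whatever their other resources.
eligible = filter_by_threshold(devices, "cpu_temp_c", 40.0)
```

Only the devices that pass the filter would then enter the decision matrix, so the threshold criterion never needs a weight of its own.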

Table 9.

Minimized selection criteria.

Table 10.

Decision matrix (Case 3).

4.3.4. Case 4: Minimized Number of Alternatives and Criteria

In this case, we considered the combination of a minimized set of alternatives and criteria. This scenario considers a limited number of choices and the influence of a limited number of criteria. We considered the alternatives as selected in Case 2 and the criteria as listed in Table 9. Hence, in this case, our decision matrix is of dimension 10 × 6, as shown in Table 11.

Table 11.

Decision matrix (Case 4).
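As a rough illustration of how the four datasets relate to one another, the sketch below derives Cases 2 to 4 from a full matrix by slicing rows and columns. The matrix contents are placeholders, and the column indices in `reduced_criteria` are hypothetical stand-ins for the six criteria of Table 9.

```python
def select_rows(matrix, step):
    """Keep every `step`-th alternative: rows step, 2*step, ... (1-based)."""
    return matrix[step - 1::step]


def select_columns(matrix, columns):
    """Keep only the criteria at the given 0-based column indices."""
    return [[row[c] for c in columns] for row in matrix]


# Placeholder 50 x 13 matrix; the real values are those of Table 7.
full = [[float(i * 13 + j + 1) for j in range(13)] for i in range(50)]

# Hypothetical column indices standing in for the six criteria of Table 9.
reduced_criteria = [0, 1, 2, 4, 6, 8]

case1 = full                                      # 50 x 13
case2 = select_rows(full, 5)                      # M5, M10, ..., M50 -> 10 x 13
case3 = select_columns(full, reduced_criteria)    # 50 x 6
case4 = select_columns(case2, reduced_criteria)   # 10 x 6
```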

5. Experiment, Results, and Comparative Analysis

In this section, we present the details of the experiment for the comparative study, including the results and critical discussion. The experiment focuses on comparing the SMD rankings produced by five distinct MCDM methods and on determining their time complexities under different scenarios by varying the criteria and/or alternative sets.

5.1. Experiment

We applied the entropy method and the five MCDM methods (i.e., EDAS, ARAS, MABAC, COPRAS, and MARCOS) on four datasets, as discussed in Section 4.3. The algorithms were implemented in a spreadsheet (MS Excel) as well as in hand-coded programs (using Java). For ranking and sensitivity analysis, we used the spreadsheet calculation, and to estimate the runtime, we used the program output. The details of the programmatic implementation are discussed in Section 5.4. The aggregate rankings of the SMDs were derived from each MCDM method for each dataset. We checked the consistencies among the results of the individual MCDM methods and the final aggregate ranks. We also compared the robustness and stability in the performance of the MCDM methods applied in this paper. Finally, the actual runtimes of each method under different scenarios were calculated.

5.2. Results

In this section, we report the details of the experimental results of SMD rankings using the considered MCDM methods, obtained through the spreadsheet calculation.

Table 12 shows the criteria weights calculated for Case 1 using the entropy method, where $C_j$ represents the jth criterion (j = 1, 2, 3, …, 13). It is seen that the weights of the criteria are reasonably distributed. However, based on the values of the decision matrix, the entropy method calculates higher weights (>10%) for C1, C2, and C4 while assigning the least weights to C11 and C12.

Table 12.

Criteria weights (Case 1).

We used these criteria weights to rank the alternatives based on the decision matrix of Table 7, applying the five MCDM methods considered in this paper. Table 13, Table 14, Table 15, Table 16 and Table 17 present the rankings of the alternatives based on the final score values derived by using the five MCDM algorithms. From Table 13, we observe that, considering the average solution point as the reference (EDAS), M19, M14, M36, M41, and M7 are the top performers, while proportional assessment methods such as ARAS and COPRAS respectively yield M36, M14, M26, M19, M31 and M19, M14, M41, M36, M6 as the better performers (see Table 14 and Table 16). It is observed that the top-performing DMUs show reasonable consistency. However, Table 15 and Table 17 show that the relative ranking results derived by MABAC and MARCOS are only weakly consistent with the previous rankings.

Table 13.

Ranking results of EDAS method (Case 1).

Table 14.

Ranking results of ARAS method (Case 1).

Table 15.

Ranking results of MABAC method (Case 1).

Table 16.

Ranking results of COPRAS method (Case 1).

Table 17.

Ranking results of MARCOS method (Case 1).

To find out the aggregate ranking, we used the final score values of the alternatives as obtained using different algorithms and applied the SAW method [192] for objective evaluation as adopted in [159]. Table 18 exhibits the relative positioning of the alternatives by different MCDM methods and their aggregate ranks derived by using SAW. In this context, Table 19 shows the findings of the rank correlation tests among the results obtained by using different methods and the final rank obtained by SAW. For this, we derived the following two correlation coefficients:

Table 18.

Comparative analysis of the rankings by different MCDM methods (Case 1).

Table 19.

Correlation test I (Case 1).

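Kendall’s τ: Let {(a1, b1), (a2, b2), …, (an, bn)} be a set of observations for two random variables A and B, where all ai and bi (i = 1, 2, …, n) values are unique. Any pair $(a_i, b_i)$ and $(a_j, b_j)$, where $i \neq j$, is said to be concordant if either both $a_i > a_j$ and $b_i > b_j$ or both $a_i < a_j$ and $b_i < b_j$ hold; otherwise, the pair is discordant. Then, Kendall’s τ is calculated as follows:

$$\tau = \frac{n_c - n_d}{n(n-1)/2}$$

where $n_c$ and $n_d$ are the numbers of concordant and discordant pairs, respectively.

Spearman’s ρ: Spearman’s ρ is calculated as follows:

$$\rho = 1 - \frac{6 \sum_{i=1}^{n} d_i^2}{n(n^2 - 1)}$$

Here, $d_i$ is the difference between the two ranks of each observation, and n is the number of observations.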
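Both coefficients are easy to compute directly from two rank lists. The following sketch uses the standard textbook definitions for rankings without ties; the function names are illustrative, and the example rankings are made up.

```python
from itertools import combinations


def kendall_tau(a, b):
    """Kendall's tau for two equally long rank lists without ties."""
    n = len(a)
    concordant = discordant = 0
    for i, j in combinations(range(n), 2):
        # The pair is concordant when both rankings order i and j the same way.
        sign = (a[i] - a[j]) * (b[i] - b[j])
        if sign > 0:
            concordant += 1
        elif sign < 0:
            discordant += 1
    return (concordant - discordant) / (n * (n - 1) / 2)


def spearman_rho(a, b):
    """Spearman's rho for two equally long rank lists without ties."""
    n = len(a)
    d_squared = sum((x - y) ** 2 for x, y in zip(a, b))
    return 1 - 6 * d_squared / (n * (n * n - 1))


# Made-up rankings of five alternatives by one method and by the aggregate.
method_rank = [1, 2, 3, 4, 5]
final_rank = [2, 1, 3, 5, 4]
tau = kendall_tau(method_rank, final_rank)    # 8 concordant, 2 discordant pairs
rho = spearman_rho(method_rank, final_rank)   # sum of squared differences is 4
```

For these two rankings, τ evaluates to 0.6 and ρ to 0.8; identical rankings give 1 and fully reversed rankings give −1 for both coefficients.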

In terms of consistency with the aggregated final rank, the methods are ordered as: MABAC > COPRAS > EDAS > ARAS > MARCOS. Similarly, we derived the ranking of alternatives subject to the influence of the criteria for the other cases (Cases 2 to 4). Table 20, Table 21 and Table 22 show the criteria weights for Cases 2–4 as derived from the performance values of the alternatives subject to the influences of the criteria involved. In Case 2, we used the full set of criteria but a reduced number of alternatives, while in Case 3, we used the full set of alternatives subject to a reduced set of criteria. In Case 4, we considered a reduced set for both alternatives and criteria. It may be noted from Table 20 that C1, C2, and C13 obtain higher weights (more than 10%) while C4 and C8 hold the least weight. This suggests that when we reduce the number of alternatives, there is a change in the derived criteria weights (see Table 12 and Table 20). The same phenomenon is observed when we compare the derived criteria weights for the reduced set of criteria (for Cases 3 and 4, see Table 21 and Table 22).

Table 20.

Criteria weights (Case 2).

Table 21.

Criteria weights (Case 3).

Table 22.

Criteria weights (Case 4).

Table 23, Table 24 and Table 25 show the alternatives’ comparative ranking under Cases 2–4, respectively. After obtaining the ranking of the alternatives by the various algorithms, we found the aggregate rank by using the SAW method based on the appraisal scores.
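A SAW-style aggregation of per-method scores can be sketched as follows. This is an illustration under stated assumptions rather than the procedure of [192] or [159]: every method's score is treated as a benefit value, each column is normalized by its (positive) maximum, and the methods are weighted equally because the weights used in the study are not stated here. The score values are made up.

```python
def saw_aggregate(scores, weights=None):
    """Aggregate per-method scores into one ranking.

    scores[i][k] is the score of alternative i under method k (higher is better).
    Returns the aggregate totals and the resulting ranks (1 = best).
    """
    n_methods = len(scores[0])
    if weights is None:
        # Assumption of this sketch: all methods count equally.
        weights = [1.0 / n_methods] * n_methods

    # Max normalization per method; assumes each column maximum is positive.
    col_max = [max(row[k] for row in scores) for k in range(n_methods)]
    totals = [
        sum(w * row[k] / col_max[k] for k, w in enumerate(weights))
        for row in scores
    ]

    order = sorted(range(len(scores)), key=lambda i: totals[i], reverse=True)
    ranks = [0] * len(scores)
    for position, i in enumerate(order, start=1):
        ranks[i] = position
    return totals, ranks


# Made-up scores of three alternatives under two methods.
totals, ranks = saw_aggregate([[0.8, 60.0], [0.5, 100.0], [0.4, 40.0]])
```

Methods whose scores can be negative would need a different normalization (for example, min-max) before being aggregated this way.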

Table 23.

Comparative analysis of the ranking by different MCDM methods (Case 2).

Table 24.

Comparative analysis of the ranking by different MCDM methods (Case 3).

Table 25.

Comparative analysis of the ranking by different MCDM methods (Case 4).

Now, for the comparative analysis of the various MCDM methods, it is important to see the consistency of their results with the final preferential order. Hence, we performed a non-parametric rank correlation test. Table 19 for Case 1 and Table 26, Table 27 and Table 28 for Cases 2–4 exhibit the results of the correlation tests. From Table 26, we find that COPRAS > EDAS > ARAS > MABAC (MARCOS is not consistent with the final ranking). Table 27 indicates that EDAS > ARAS > COPRAS > MABAC > MARCOS, while from Table 28, we find that COPRAS > ARAS > EDAS > MABAC > MARCOS in terms of the consistency of their individual results with the final ranking order obtained by using SAW.

Table 26.

Correlation test II (Case 2).

Table 27.

Correlation test III (Case 3).

Table 28.

Correlation test IV (Case 4).

5.3. Sensitivity Analysis

Some of the essential requirements for MCDM-based analysis are the rationality, stability, and reliability of the rankings [193]. There are several variations in the given conditions, for instance, change in the weights of the criteria, MCDM algorithms and normalization methods, and deletion/inclusion of the alternatives that often lead to instability of the results [171,194,195]. Sensitivity analysis is conducted to experimentally check the robustness of the results obtained using MCDM based analysis [196,197]. A particular MCDM method shows stability in the result if it can withstand variations in the given conditions, such as fluctuations in the criteria weights.

For the sensitivity analysis, we used the scheme followed in [198], which simulates different experimental scenarios by interchanging criteria weights. Table 29, Table 30, Table 31 and Table 32 present the experimentations vis-à-vis the four cases used in this study. Here, the numbers in italics denote that the cell values of that particular column interchange their weights [199,200,201], in each experiment. In this scheme, we attempt to interchange weights of optimum and sub-optimum criteria, beneficial and cost type of criteria to simulate various possible scenarios for examining the stability of the ranking results obtained by various MCDM methods.
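The weight-interchange scheme can be sketched as a small loop that swaps two criteria weights, re-ranks the alternatives, and keeps the result for comparison with the baseline. The weighted-sum ranker below is only a stand-in so that the sketch is self-contained; any of the five MCDM methods could be passed as `rank_fn` instead. All names and values are illustrative.

```python
def swap_weights(weights, i, j):
    """Return a copy of `weights` with the entries at positions i and j interchanged."""
    swapped = list(weights)
    swapped[i], swapped[j] = swapped[j], swapped[i]
    return swapped


def weighted_sum_ranks(matrix, weights):
    """Stand-in ranker: weighted sum over an already normalized benefit matrix."""
    scores = [sum(w * x for w, x in zip(weights, row)) for row in matrix]
    order = sorted(range(len(matrix)), key=lambda r: scores[r], reverse=True)
    ranks = [0] * len(matrix)
    for position, r in enumerate(order, start=1):
        ranks[r] = position
    return ranks


def sensitivity_runs(matrix, weights, swaps, rank_fn=weighted_sum_ranks):
    """Return the baseline ranking and one ranking per weight interchange."""
    baseline = rank_fn(matrix, weights)
    experiments = [rank_fn(matrix, swap_weights(weights, i, j)) for i, j in swaps]
    return baseline, experiments


# Made-up normalized matrix (3 alternatives x 2 criteria) and weights.
matrix = [[0.9, 0.1], [0.2, 0.8], [0.5, 0.5]]
baseline, experiments = sensitivity_runs(matrix, [0.7, 0.3], swaps=[(0, 1)])
```

Each experimental ranking can then be correlated with the baseline ranking; a method is considered stable when the correlation stays high across the interchanges.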

Table 29.

Interchange of criteria weights for sensitivity analysis (Case 1).

Table 30.

Interchange of criteria weights for sensitivity analysis (Case 2).

Table 31.

Interchange of criteria weights for sensitivity analysis (Case 3).

Table 32.

Interchange of criteria weights for sensitivity analysis (Case 4).

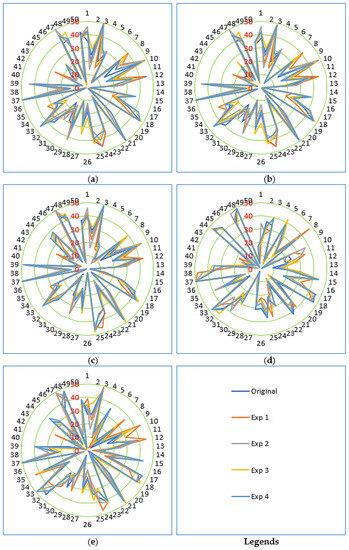

Figure 4 depicts the comparative variations in the rankings of the alternatives as derived by using the five MCDM algorithms under the different experimental setups for Case 1. We observe that all five considered MCDM methods provide reasonable stability in the solution, while COPRAS and ARAS perform comparatively better. Table 33 highlights the correlation of the actual ranking with those obtained by changing the criteria weights (see Table 29). In the same way, we carried out the sensitivity analysis for all MCDM methods for Cases 2 to 4. Table 34, Table 35 and Table 36 show the results of the correlation tests, as done for Case 1.

Figure 4.

Pictorial representation of sensitivity analysis (Case 1) (a) EDAS, (b) COPRAS, (c) ARAS, (d) MARCOS, (e) MABAC.

Table 33.

Correlation test V (sensitivity analysis—Case 1).

Table 34.

Correlation test VI (sensitivity analysis—Case 2).

Table 35.

Correlation test VII (sensitivity analysis—Case 3).

Table 36.

Correlation test VIII (sensitivity analysis—Case 4).

5.4. Time Complexity Analysis

This section reports the time complexity analysis and the runtimes of the five MCDM methods considered in this study, as summarized in Table 37. All the methods have a worst-case time complexity of O(mn), where m is the number of alternatives and n is the number of considered criteria. However, EDAS, MABAC, and COPRAS exhibit Ω(m + n) as the best-case time complexity if the decision matrix is already prepared. If the matrix is constructed at runtime, the best-case time complexity for these methods would also be Ω(mn).

Table 37.

Time complexity and runtimes for each MCDM method under various considerations.

Depending on the MCC application and architecture, the MCC coordinator where the SMD selection program would run might be a computer or an SMD. That is why, to check the performance of the MCDM methods, we checked the runtime of each of them by running on a laptop and a smartphone.

To run the MCDM algorithms on the laptop, we used Java (version 16) as the programming language and MS Excel (version 2019) as the database. The programs were executed on a laptop with an AMD Ryzen 3 dual-core CPU (2.6 GHz, 64-bit) and 4 GB of RAM, running Windows 10 (64-bit). To run the programs on a smartphone, we designed an app that could accommodate and run Java program scripts; in this case, we used a text file to store the decision matrix. The programs were executed on a smartphone with a Snapdragon 439 SoC (12 nm), an octa-core CPU (4 × 1.95 GHz Cortex-A53 and 4 × 1.45 GHz Cortex-A53), an Adreno 505 GPU, and 3 GB of RAM, running Android 11.

The MCDM module may get the decision matrix either from the secondary storage or primary memory. We generally might store the database on the secondary storage when we need to maintain the log for future analysis and prediction. But, updating the SMD resource values in the decision matrix on the secondary storage and retrieving them frequently for decision making involves considerable overhead. Alternatively, the decision matrix could be updated dynamically where the SMD resource values come directly to the coordinator’s memory. Compared to secondary storage, accessing memory takes negligible time.

Since the SMDs in MCC are mobile, the available SMDs (alternatives) change continuously. Existing SMDs may leave, and new SMDs may join the network randomly. Also, the status of the variable resources (e.g., C5, C7, C8, C9, C10, C11) of each SMD varies from time to time depending on its usage. In fact, in a typical centralized MCC, a data logging program always runs in the background to track the values of these resources. This causes the decision matrix to change continuously, and the SMD ranking changes with it. In such a dynamic scenario, it is desirable to keep the decision matrix in memory for as long as resource selection is required.

Therefore, to have a comparative analysis in this aspect, we calculated the runtime considering both the scenarios: (a) when the dataset was fetched from the secondary storage and (b) when it was preloaded on RAM. The execution time was calculated using a timer (a Java function) in the program. The timer counted the time from data fetching (either from RAM or storage) to completion of the program execution. We executed each algorithm twenty times and took the average runtime. To eliminate the outliers, we discarded the particular execution instances that were abnormally protracted.
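The measurement procedure can be sketched as a small timing harness. The study used a Java timer; the Python version below is only an illustration of the same idea. The outlier rule (discarding runs longer than three times the median) is an assumption of this sketch, since the exact criterion for an abnormally long run is not specified.

```python
import statistics
import time


def mean_without_outliers(samples, factor=3.0):
    """Average the samples, ignoring any that exceed `factor` times the median."""
    median = statistics.median(samples)
    kept = [s for s in samples if s <= factor * median]
    return sum(kept) / len(kept)


def average_runtime_ms(task, runs=20):
    """Run `task` repeatedly and return its mean wall-clock time in milliseconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        task()  # e.g., load the decision matrix and rank the alternatives
        samples.append((time.perf_counter() - start) * 1000.0)
    return mean_without_outliers(samples)
```

Passing a task that reads the matrix from a file measures the storage scenario, while passing a task that works on an already loaded matrix measures the in-memory scenario.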

From Table 37, it can be observed that the average runtimes of the MCDM programs, when executed on a laptop, are significantly higher when the decision matrix is in the secondary storage than when it is in memory. However, when these programs are executed on the smartphone, this difference is not as large. This is because the typical storage used in smartphones is much faster than the hard disks of laptops. It is also worth mentioning that, in our study, we used text files as the database to execute the programs on the smartphone. With a traditional database application, the time taken to fetch the dataset from the phone storage would probably be much higher. In that case, the difference between having the dataset in memory and in storage would be significantly larger.

In our comparative analysis, we executed each algorithm ten times for each case. The average runtimes of ten executions were noted. The runtime of any program varies depending on several internal and external factors. That is why we took the average of ten execution instances. However, it is observed that the runtime variations are much higher on a laptop than on a smartphone. This is because the number of background processes typically run on laptops is significantly higher than on smartphones. Also, the resource scheduling in a laptop is more complex than in a smartphone. Nevertheless, the variations in each execution could be more neutralized if the number of considered execution instances is increased.

6. Discussion

In this section, we discuss the experimental findings and our observations. We also present a critical discussion on the judiciousness and practicability of this work and the findings.

6.1. Findings and Observations

In this section, we discuss the observations on the findings obtained through data analysis. As already mentioned, we have four conditions:

- Condition 1: Full set (Case 1: complete set of 13 criteria and 50 alternatives)

- Condition 2: Reduction in the number of alternatives keeping the criteria set unaltered (Case 2: reduced set of 10 alternatives and complete set of 13 criteria)

- Condition 3: Variation in the criteria set (Case 3: reduced set of 6 criteria) keeping the alternative set the same (i.e., 50)

- Condition 4: Variations in both alternative and criteria sets (Case 4: reduced set of 10 alternatives and 6 criteria).

For all conditions, we noticed some variations in the relative ranking orders. By further inspecting the results obtained from the different methods and their association with the final ranking (obtained by using SAW), we found that for Case 1, MABAC and COPRAS are more consistent. For Case 2, COPRAS and EDAS outperformed the others in terms of consistency with the final ranking. For Case 3, we observed that EDAS and ARAS showed better consistency, while COPRAS performed reasonably well. For Case 4, we found that COPRAS and ARAS showed relatively better consistency with the final ranking. Therefore, the first-level inference favors COPRAS for all conditions under consideration.

Moving further, we checked for stability in the results. We performed a sensitivity analysis for all methods under all conditions, as demonstrated in Section 5.3. Here also, we noticed mixed performance. However, COPRAS shows reasonably stable results under all conditions given the variations in the criteria weights except Case 4.

Therefore, it may be concluded that, given our problem statement and experimental setup, COPRAS performed comparatively well under all case scenarios, with ARAS being its nearest competitor in this respect. For both methods, the procedural steps are fewer and a simple ratio-based or proportional approach is followed, i.e., there is no need to identify ideal and anti-ideal solutions or to calculate distances. Therefore, the results do not show any aberrations. It may, however, be interesting to examine the performance of the algorithms when the criteria weights are predefined, i.e., not dependent on the decision matrix.
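To make the ratio-based character of COPRAS concrete, the sketch below follows the formulation commonly given in the MCDM literature: sum normalization, weighting, separate sums over benefit and cost criteria, and a relative significance value per alternative. It is not necessarily identical to the implementation used in this study, and it assumes positive performance values and at least one cost criterion. The example matrix and weights are made up.

```python
def copras(matrix, weights, is_benefit):
    """Return the relative significance Q_i and the utility degree (%) per alternative.

    matrix[i][j]  : performance of alternative i on criterion j (positive values)
    weights[j]    : weight of criterion j
    is_benefit[j] : True for a profit criterion, False for a cost criterion
    """
    m, n = len(matrix), len(matrix[0])
    col_sums = [sum(row[j] for row in matrix) for j in range(n)]

    # Weighted, sum-normalized decision matrix.
    d = [[weights[j] * row[j] / col_sums[j] for j in range(n)] for row in matrix]

    # Sums over profit criteria (S+) and cost criteria (S-) for each alternative.
    s_plus = [sum(d[i][j] for j in range(n) if is_benefit[j]) for i in range(m)]
    s_minus = [sum(d[i][j] for j in range(n) if not is_benefit[j]) for i in range(m)]

    # Relative significance: a larger S+ and a smaller S- both raise Q_i.
    total_minus = sum(s_minus)
    inverse_sum = sum(1.0 / s for s in s_minus)
    q = [s_plus[i] + total_minus / (s_minus[i] * inverse_sum) for i in range(m)]

    # Utility degree relative to the best alternative, in percent.
    q_max = max(q)
    utility = [100.0 * value / q_max for value in q]
    return q, utility


# Made-up example: one profit criterion and one cost criterion.
q, utility = copras([[8.0, 2.0], [6.0, 1.0], [4.0, 4.0]], [0.6, 0.4], [True, False])
```

No reference (ideal or anti-ideal) solution and no distance calculation appears anywhere in the procedure; each alternative is scored only from column-wise proportions.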

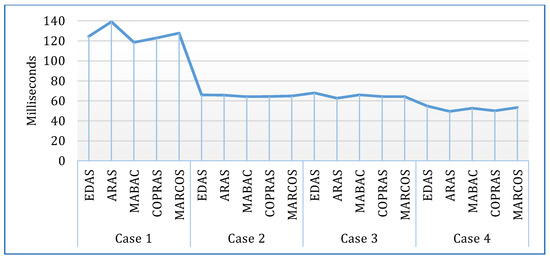

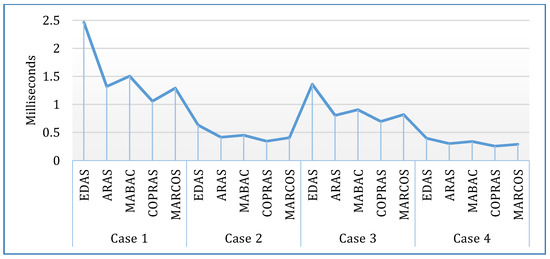

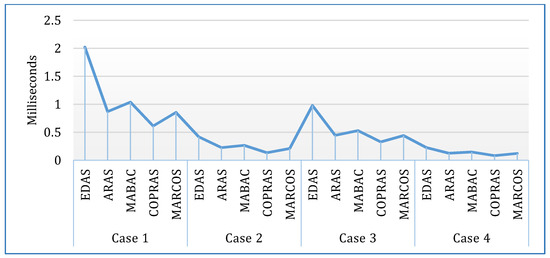

We further investigated the time complexities of the MCDM algorithms used in this paper to find the most time-efficient one. All the considered MCDM methods have the same worst-case complexity, though the best-case time complexity of EDAS, MABAC, and COPRAS is better than that of the others. Figure 5, Figure 6, Figure 7 and Figure 8 graphically present the case-wise comparisons of the runtimes of each MCDM method for all the scenarios. Our experiment showed that the COPRAS method exhibits the shortest runtime for each dataset (case) in all the considered scenarios, i.e., whether the dataset is in the secondary storage or memory and whether the program is run on a laptop or smartphone. Specifically, considering the average runtime over all the cases and scenarios, the ranking of the MCDM methods by runtime (RT) is: $RT_{COPRAS} < RT_{MARCOS} < RT_{ARAS} < RT_{MABAC} < RT_{EDAS}$.

Figure 5.

Runtime comparison of MCDM methods on the laptop for each case when the dataset is in the memory.

Figure 6.

Runtime comparison of MCDM methods on the laptop for each case when the dataset is in the secondary storage.

Figure 7.

Runtime comparison of MCDM methods on the smartphone for each case when the dataset is in the phone storage.

Figure 8.

Runtime comparison of MCDM methods on the smartphone for each case when the dataset is in the memory.

However, this ranking does not hold for every execution in each case. For example, from Figure 6, it can be noted that ARAS and MABAC took less time to execute in Case 1. In practice, Case 3 would probably be more common than the other cases for a typical MCC application, i.e., there would be a small number of SMDs available as computing resources and the application would demand a certain number of selection criteria. For this case, COPRAS took 0.05597 milliseconds on average when run on a laptop with the dataset in memory and 0.32844 milliseconds on a smartphone. For dynamic resource selection in MCC, this time requirement is tolerable. However, when the dataset is on the secondary storage, the runtime increases sharply on the laptop but not on the smartphone.

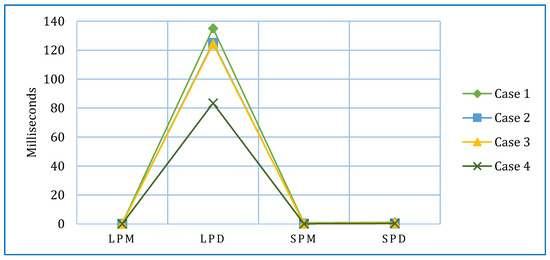

The runtimes of both the MCDM method and the entropy calculation should be considered to get the effective runtime of the ranking process. As with the MCDM methods, when the dataset is on the secondary storage, the runtime of the entropy calculation increases sharply on the laptop but not on the smartphone, as shown in Figure 9. Therefore, we can postulate that if the MCC coordinator is a laptop or desktop computer, the dataset needs to be loaded into memory before resource selection.

Figure 9.

Runtime comparison for Entropy method.

Considering the above discussions, it can be deduced that the COPRAS method is the most suitable for resource selection in MCC in terms of correctness, robustness, and computational (time) complexity.

6.2. Rationality and Practicability

In this section, we present a critical discussion on the rationality and practicability of this study.

6.2.1. Assertion

In the previous section, we observed that for resource selection in MCC, the COPRAS method is the most favorable in all respects. However, this should not be misinterpreted to mean that the COPRAS method is the ideal solution for resource selection in MCC. In fact, optimized resource selection in a dynamic environment like MCC is an NP-hard problem. Hence, practically no solution can be claimed as optimal. We only assert that COPRAS compares favorably in all aspects with the other methods considered. There is always scope to explore further for a more suitable multi-criteria resource selection algorithm that would be more computationally efficient and time-efficient.

Moreover, it should be noted that the effectiveness of an MCDM solution depends on the particular problem and the data. In real implementations of MCC, the actual SMD data would certainly change, be it for different instances of the same MCC system or in different MCC systems. This is because, owing to the dynamic nature of a typical MCC, the SMDs are not fixed. Even if the SMDs are fixed in an MCC for a certain period, their resource values will vary depending on the applications running on them and their users’ device usage behavior. Moreover, since the need for computing resources varies according to application requirements, the selection criteria and weights also differ accordingly. In these cases, the datasets would vary from those we used in our experiment. But the problem behavior and data types would be the same for all MCC applications and throughout their different execution instances. Hence, a solution found suitable for the given dataset would be applicable to any similar dataset for MCC. Even if the size of the datasets varies in different MCC systems, the finding of this study will hold true because we found that COPRAS performed comparatively better in all four datasets of different sizes considered in the experiment.

6.2.2. Application

The resource selection module is generally incorporated in the resource manager module of a typical distributed system. And the resource manager module generally is part of the middleware of a 3-tier system. Therefore, in the actual designing and implementation of an MCC system, the MCDM-based resource selection algorithm would be integrated into the middleware of MCC. This resource selection algorithm should generate a ranked list of the available SMDs based on their resources. The MCC job scheduler would dispatch the MCC jobs to the top-ranked SMDs from the list. This would ensure a better turnaround time and throughput and, in turn, better QoS of the MCC.

6.2.3. Implications

The findings of this paper would allow the MCC system designers and developers to adopt the right resource selection method for their MCC based on its scale and also on the preference and priority of the resource types. This would also contribute to managerial decision-making for implementing organizational MCC. As the study simulates different scenarios and compares the available options, it would be a likely reference for the decision-makers to choose the right MCDM method for resource selection and consider the appropriate size of the employed MCC and decide on the right number of selection criteria.

Furthermore, the findings of this paper should allow researchers to choose a suitable MCDM method with reasonably higher accuracy and lower runtime complexity to solve real-life problems similar to the one discussed in this paper. This study would be of interest not only to researchers in the area of MCC and other allied fields (e.g., mobile grid computing, mobile cloud computing, and other related forms of distributed computing) but also to people from the MCDM field, who might find it motivating to explore this problem domain and come up with novel or improved methods that are more suitable for addressing the associated resource dynamicity.

7. Conclusions, Limitations, and Further Research Scope

In this concluding section, we recap and summarize the presented problem, experimental work, and findings. We also point out the shortfalls of this study and identify the future scopes and research prospects to expand this work.

7.1. Summary

In mobile crowd computing (MCC), the computing capabilities of smart mobile devices (SMDs) are exploited to execute resource-intensive jobs. For better quality of service, selecting the most capable SMDs is essential. Since the selection is made based on several diverse SMD resources, the SMD selection problem can be described as multicriteria decision-making (MCDM) problem.

In this paper, we performed a comparative assessment of different MCDM methods (EDAS, ARAS, MABAC, MARCOS, and COPRAS) to rank the SMDs based on their resource parameters, among a number of available SMDs, for being considered as computing resources in MCC. The assessment was done in terms of ranking robustness and the execution time of the MCDM methods. Considering the dynamic nature of MCC, where resource selection is supposed to be on-the-fly, the selection process needs to take as little time as possible. For selection criteria, we considered the fixed (e.g., CPU and GPU power, RAM and battery capacity, etc.) and the variable (e.g., current CPU and GPU load, available RAM, battery remaining, etc.) resource parameters.

We took the final score values of the alternatives obtained from the different methods and applied the SAW method to them to arrive at an aggregate ranking of the alternatives. We also compared the ranking performance of the MCDM methods used in this study, investigating their consistency with respect to the aggregate ranking and their stability through sensitivity analysis.
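The aggregation step can be illustrated with a minimal sketch. It assumes that every method's final score is positive and that a higher score is better, uses the textbook max-based linear normalization of SAW, and gives all methods equal weight unless weights are supplied. The function name `saw_aggregate` and the score values are hypothetical and are not taken from the experiments.

```python
def saw_aggregate(scores, weights=None):
    """Aggregate per-method scores with simple additive weighting (SAW).

    scores[i][k] is the final score of alternative i under method k.
    Assumption: all scores are positive and higher is better.
    Returns (aggregate_scores, ranks), where rank 1 is the best alternative.
    """
    m = len(scores)
    k = len(scores[0])
    if weights is None:
        weights = [1.0 / k] * k  # assumption: equal weight per method

    # Max-based linear normalization of each method's score column.
    col_max = [max(row[j] for row in scores) for j in range(k)]
    agg = [
        sum(weights[j] * row[j] / col_max[j] for j in range(k))
        for row in scores
    ]

    # Rank by descending aggregate score; ties keep their input order.
    order = sorted(range(m), key=lambda i: agg[i], reverse=True)
    ranks = [0] * m
    for position, i in enumerate(order, start=1):
        ranks[i] = position
    return agg, ranks


# Hypothetical scores of three SMDs under three methods.
agg, ranks = saw_aggregate([
    [0.80, 0.90, 0.70],
    [0.60, 0.50, 0.90],
    [0.40, 0.45, 0.35],
])
```

Methods whose scores can be negative would need a different normalization (for example, min-max) before the weighted sum; that choice is outside this sketch.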

We calculated the time complexities of all the methods. We also assessed the actual runtime of all the methods by executing them on a Windows-based laptop and an Android-based smartphone. To assess the effect of dataset size, we executed the MCDM methods with four datasets of different sizes, obtained by varying the number of selection criteria and the number of alternatives (SMDs) separately. For each dataset, we executed the programs under two scenarios: when the dataset resides in the primary memory and when it is fetched from the secondary memory.
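The two runtime scenarios can be sketched as follows. This is only an illustration of the measurement idea, not the benchmark harness used in the study: the ranking routine `rank_weighted_sum` is a hypothetical stand-in for an MCDM method, the matrix is random, and the file is a temporary CSV. Operating-system file caching may also narrow the gap between the two timings.

```python
import csv
import os
import random
import tempfile
import time


def rank_weighted_sum(matrix, weights):
    """Hypothetical stand-in for an MCDM method: weighted sum, best first."""
    scores = [sum(w * x for w, x in zip(weights, row)) for row in matrix]
    return sorted(range(len(matrix)), key=lambda i: scores[i], reverse=True)


def load_matrix(path):
    """Read the decision matrix from a CSV file (secondary memory)."""
    with open(path, newline="") as f:
        return [[float(v) for v in row] for row in csv.reader(f)]


def average_runtime(fn, repeats=20):
    """Average wall-clock time of fn() over several repetitions."""
    start = time.perf_counter()
    for _ in range(repeats):
        fn()
    return (time.perf_counter() - start) / repeats


random.seed(0)
m, n = 200, 10  # assumed sizes: 200 SMDs, 10 criteria
matrix = [[random.uniform(1, 100) for _ in range(n)] for _ in range(m)]
weights = [1.0 / n] * n

# Persist the matrix so that the second scenario has to fetch it from disk.
fd, path = tempfile.mkstemp(suffix=".csv")
with os.fdopen(fd, "w", newline="") as f:
    csv.writer(f).writerows(matrix)

# Scenario 1: the matrix is already in primary memory.
t_memory = average_runtime(lambda: rank_weighted_sum(matrix, weights))
# Scenario 2: the matrix is loaded from the file on every run.
t_disk = average_runtime(lambda: rank_weighted_sum(load_matrix(path), weights))

order_from_disk = rank_weighted_sum(load_matrix(path), weights)
os.remove(path)
```

Averaging over repeated runs, as done here, reduces the effect of timer resolution and background activity on the reported runtime.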

7.2. Observation

It is observed that, in terms of correctness, consistency, and robustness, the COPRAS method exhibits better performance in all scenarios. In terms of time complexity, all five MCDM methods are equal, i.e., O(mn), where m × n is the size of the decision matrix (m is the number of SMDs and n is the number of selection criteria). However, EDAS, MABAC, and COPRAS have a better best-case (Ω(m + n)) complexity. Overall, COPRAS consumed the least runtime in every execution case, i.e., for all four matrix sizes, on the laptop as well as on the smartphone.
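To make the O(mn) bound concrete, the following is a minimal sketch of the standard COPRAS procedure, in which the dominant cost is the pass over the m × n matrix. It assumes strictly positive matrix entries and externally supplied criteria weights. The function name `copras` and the three-device example are illustrative assumptions, not the implementation or data used in the experiments.

```python
def copras(matrix, weights, is_benefit):
    """Return COPRAS utility degrees (0-100], one per alternative.

    matrix[i][j]: value of criterion j for alternative i (assumed > 0).
    weights[j]:   weight of criterion j.
    is_benefit[j]: True if larger is better, False for a cost criterion.
    """
    m, n = len(matrix), len(matrix[0])

    # One O(mn) pass: column sums for the sum-based normalization.
    col_sum = [sum(matrix[i][j] for i in range(m)) for j in range(n)]

    # One O(mn) pass: weighted normalized sums over benefit and cost criteria.
    s_plus = [0.0] * m
    s_minus = [0.0] * m
    for i in range(m):
        for j in range(n):
            d = weights[j] * matrix[i][j] / col_sum[j]
            if is_benefit[j]:
                s_plus[i] += d
            else:
                s_minus[i] += d

    # O(m): relative significance Q_i of each alternative.
    if any(not b for b in is_benefit):
        total_minus = sum(s_minus)
        inv_sum = sum(1.0 / s for s in s_minus)
        q = [s_plus[i] + total_minus / (s_minus[i] * inv_sum) for i in range(m)]
    else:
        q = s_plus[:]

    # O(m): utility degree relative to the best alternative.
    q_max = max(q)
    return [100.0 * v / q_max for v in q]


# Hypothetical SMDs: CPU clock (GHz), RAM (GB), current CPU load (%).
utility = copras(
    [[2.0, 4.0, 50.0], [2.4, 6.0, 20.0], [1.8, 3.0, 80.0]],
    weights=[0.4, 0.3, 0.3],
    is_benefit=[True, True, False],
)
```

In this example the second device is better on every criterion, so it receives a utility of 100 and the other two are scaled relative to it.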

7.3. Conclusive Statement

The COPRAS method is found to be better than the other MCDM methods (EDAS, ARAS, MABAC, and MARCOS) for all test parameters and in all test scenarios. Hence, it can be concluded that, among the MCDM methods considered in this study, COPRAS would be the most suitable choice for resource ranking to select the best resource in MCC and other similar problem setups.

7.4. Limitations and Improvement Scopes

We used the entropy method to calculate the criteria weights. It is an objective approach in which the criteria weights depend on the decision matrix values. In a dynamic environment like MCC, the SMDs may join and leave the network frequently, and the status of their variable resources also changes with device usage. This results in frequent alterations to the decision matrix, which means that the entropy calculation has to be repeated every time the criteria weights are determined, adding a recurring computational overhead.
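The dependence of the weights on the decision matrix can be seen in a minimal sketch of the standard entropy weighting procedure. It assumes non-negative entries, at least two alternatives, and at least one criterion whose values differ across alternatives. The function name `entropy_weights` and the data are hypothetical.

```python
import math


def entropy_weights(matrix):
    """Objective criteria weights from Shannon entropy of each column.

    matrix[i][j]: value of criterion j for alternative i (assumed >= 0).
    Requires m >= 2 rows and at least one non-constant column.
    """
    m, n = len(matrix), len(matrix[0])
    k = 1.0 / math.log(m)  # scales the entropy into [0, 1]

    diversification = []
    for j in range(n):
        col_sum = sum(matrix[i][j] for i in range(m))
        entropy = 0.0
        for i in range(m):
            p = matrix[i][j] / col_sum
            if p > 0:  # treat 0 * ln(0) as 0
                entropy -= k * p * math.log(p)
        diversification.append(1.0 - entropy)

    total = sum(diversification)
    return [d / total for d in diversification]


# Hypothetical SMDs: CPU clock (GHz), RAM (GB), current CPU load (%).
before = entropy_weights([[2.0, 4.0, 50.0], [2.4, 6.0, 20.0], [1.8, 3.0, 80.0]])
# Only the load of the second device changes, yet all weights shift.
after = entropy_weights([[2.0, 4.0, 50.0], [2.4, 6.0, 60.0], [1.8, 3.0, 80.0]])
```

A criterion whose values vary more across the devices (here, the CPU load) receives a larger weight, and a change in a single cell of the matrix alters every weight, which is why the calculation has to be repeated whenever the matrix changes.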

Here, the criteria weights were calculated dynamically based on the present resource status of the SMDs, expressed in metric terms. We did not take into account criteria preferences that reflect the resource specification preferences of the MCC applications. As the dataset changes with varying criteria and alternative sets, the criteria weights also change according to the performance values of the alternatives. Hence, this approach might not provide the optimal resource ranking with respect to the actual application requirements. Our future study can therefore explore the possibility of defining the criteria weights based on the required resource specifications of a typical MCC user or application.

Furthermore, we opted for the most straightforward normalization technique, i.e., linear normalization, although various other normalization techniques are in practice and could be used. Therefore, there is scope to study the effect of different normalization techniques on the ranking and execution performance of the MCDM methods.
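As an illustration of how the choice of technique changes the normalized values, the sketch below applies three commonly used normalizations to a single benefit criterion. Which exact linear form was used in the experiments is not restated in this section, so the max-based form shown here is an assumption. The function names and the sample column are hypothetical.

```python
import math


def linear_max(column):
    """Linear (max-based) normalization for a benefit criterion: x / max."""
    top = max(column)
    return [x / top for x in column]


def min_max(column):
    """Min-max normalization: (x - min) / (max - min); needs max > min."""
    lo, hi = min(column), max(column)
    return [(x - lo) / (hi - lo) for x in column]


def vector_norm(column):
    """Vector normalization: x divided by the Euclidean length of the column."""
    length = math.sqrt(sum(x * x for x in column))
    return [x / length for x in column]


ram_gb = [1.0, 2.0, 4.0]  # hypothetical available RAM of three SMDs

by_linear = linear_max(ram_gb)   # [0.25, 0.5, 1.0]
by_min_max = min_max(ram_gb)     # worst device maps to 0, best to 1
by_vector = vector_norm(ram_gb)  # squares of the values sum to 1
```

The three techniques preserve the order within a column but space the values differently, so after weighting and aggregation across criteria the final ranking may differ.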

7.5. Open Research Prospects

The MCC environment is highly dynamic: not only the set of SMDs but also the status of the resource parameters of each existing SMD changes frequently. Therefore, resource selection in an unpredictable MCC environment needs to be not only optimal but also adaptive. This opens up scope for exploring an adaptive MCDM method that would accommodate frequent variations in the alternatives and their values (i.e., the data matrix). Ideally, whenever there is a change in the alternative list or in a performance score, the MCDM method should be able to reflect this change in the overall ranking without reranking the whole list. This would not only minimize the SMD selection and decision-making time but also truly reflect the dynamic and scalable nature of MCC, which is not the case with the traditional MCDM methods. Further research is also required to realize an MCDM method that would be suitable for distributed resource selection in an inter-MCC system.

Author Contributions

Conceptualization, P.K.D.P.; methodology, S.B. and S.P.; software, S.P.; validation, P.K.D.P., S.B. and S.P.; formal analysis, P.K.D.P., S.B. and S.P.; investigation, P.K.D.P., S.B. and S.P.; data curation, P.K.D.P.; writing—original draft preparation, P.K.D.P. and S.B.; writing—review and editing, P.K.D.P., S.B., S.P., D.M. and P.C.; supervision, D.M. and P.C.; funding acquisition, D.M. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the German Research Foundation and the Open Access Publication Fund of Technische Universität Berlin.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors wish to acknowledge the support received from the German Research Foundation and the Technische Universität Berlin.

Conflicts of Interest

The authors declare no conflict of interest.
