Abstract

Symmetries play an important role in the dynamics of physical systems; for example, symmetry principles are fundamental to quantum physics and the microworld. Many of these problems reduce to solving inequalities. For this reason, we study the numerical approximation of solutions to variational inequality problems involving quasimonotone operators in an infinite-dimensional real Hilbert space. We prove that the iterative sequences generated by the proposed schemes converge strongly to a solution. The main advantage of the proposed schemes is that they use monotone and non-monotone step size rules based on operator information rather than on a Lipschitz constant or a line search method. We present a number of numerical experiments for the proposed algorithms.

Keywords:

variational inequality problem; subgradient extragradient method; strong convergence theorems; quasi-monotone operators; Lipschitz continuity

MSC:

65Y05; 65K15; 68W10; 47H05; 47H10

1. Introduction

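Our main concern here is to study iterative algorithms for computing numerical solutions of the variational inequality problem (referred to below as problem (1)) involving quasimonotone operators in a real Hilbert space. Let $\mathcal{H}$ be a real Hilbert space, let $\mathcal{C}$ be a nonempty, closed and convex subset of $\mathcal{H}$, and let $\mathcal{A} : \mathcal{H} \to \mathcal{H}$ be an operator. The variational inequality problem for $\mathcal{A}$ on $\mathcal{C}$ is defined as follows [1,2]:

$$\text{find } u^* \in \mathcal{C} \text{ such that } \langle \mathcal{A}(u^*), y - u^* \rangle \ge 0 \quad \text{for all } y \in \mathcal{C}.$$

In order to prove the strong convergence, it is assumed that the following conditions are satisfied:

- (1) The solution set of problem (1), denoted by $VI(\mathcal{C}, \mathcal{A})$, is nonempty;

- (2) The mapping $\mathcal{A}$ is quasimonotone, that is, $\langle \mathcal{A}(y_1), y_2 - y_1 \rangle > 0 \implies \langle \mathcal{A}(y_2), y_2 - y_1 \rangle \ge 0$ for all $y_1, y_2 \in \mathcal{C}$;

- (3) The mapping $\mathcal{A}$ is Lipschitz continuous, that is, there exists a constant $L > 0$ such that $\|\mathcal{A}(y_1) - \mathcal{A}(y_2)\| \le L \|y_1 - y_2\|$ for all $y_1, y_2 \in \mathcal{C}$;

- (4) The mapping $\mathcal{A}$ is weakly sequentially continuous, that is, $\{\mathcal{A}(u_n)\}$ converges weakly to $\mathcal{A}(u)$ for each sequence $\{u_n\}$ that converges weakly to $u$.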
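To make the quasimonotonicity condition concrete, the following Python sketch tests the defining implication on a finite grid of real numbers. It is only an illustration: the helper names, the sample grid and the two test operators ($x \mapsto x^2$ and $x \mapsto -x$ on the real line) are assumptions chosen for this example and do not come from the article. A grid check of this kind can expose a violation but cannot prove that an operator is quasimonotone.

```python
from itertools import product


def violates_quasimonotone(op, y1, y2):
    """Return True if (y1, y2) violates the implication
    op(y1)*(y2 - y1) > 0  =>  op(y2)*(y2 - y1) >= 0   (one-dimensional case)."""
    d = y2 - y1
    return op(y1) * d > 0 and op(y2) * d < 0


def quasimonotone_on_samples(op, samples):
    """Check the quasimonotone implication on every ordered pair of samples."""
    return not any(violates_quasimonotone(op, a, b)
                   for a, b in product(samples, repeat=2))


def monotone_on_samples(op, samples):
    """Check (op(y2) - op(y1))*(y2 - y1) >= 0 on every ordered pair of samples."""
    return all((op(b) - op(a)) * (b - a) >= 0
               for a, b in product(samples, repeat=2))


def square(x):
    # Assumed test operator: quasimonotone on the grid, but not monotone.
    return x * x


def negate(x):
    # Assumed test operator: fails the quasimonotone implication.
    return -x


samples = [k / 4 for k in range(-12, 13)]  # grid on [-3, 3]

print(quasimonotone_on_samples(square, samples))  # True
print(monotone_on_samples(square, samples))       # False
print(quasimonotone_on_samples(negate, samples))  # False
```

The operator $x \mapsto x^2$ shows that the quasimonotone class is strictly larger than the monotone class, which is the setting the conditions above are meant to cover.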

It is well established that problem (1) is a key problem in nonlinear analysis. It is an important mathematical model that incorporates many topics in pure and applied mathematics, such as nonlinear systems of equations, optimality conditions for optimization problems, complementarity problems, network equilibrium problems and finance (see [3,4,5,6,7,8,9,10,11,12,13] and others in [14,15,16,17,18,19]). As a result, this problem has a number of applications in engineering, mathematical programming, network economics, transportation analysis, game theory and software engineering. Moreover, symmetries play an important role in the dynamics of physical systems; for example, symmetry principles are fundamental to quantum physics and the microworld. Many of these problems reduce to solving inequalities.

Most solution techniques for problem (1) are iterative since analytic or explicit solutions are hard to find. The class of regularized methods and the projection methods are two well-known and common techniques for evaluating a numerical solution to variational inequalities. It should also be noted that the first approach is most commonly used to solve the variational inequalities involving the class of monotone operators. In the class of regularized methods, the regularized sub-problem is strongly monotone and its unique solution exists. In this study, we focus on projection methods that are well-known and widely used due to their simple numerical computations.

The gradient projection method was the first well-established projection method for solving variational inequalities, and it was followed by several other projection methods, including the well-known extragradient method [20], the subgradient extragradient method [21,22] and others in [23,24,25,26,27]. These methods are used to solve variational inequalities involving strongly monotone, monotone or inverse monotone operators. They have two notable drawbacks. The first is the constant step size, which requires knowledge or an approximation of the Lipschitz constant of the relevant operator. The second is that they only converge weakly in Hilbert spaces. In most cases, the Lipschitz constant is unknown or difficult to compute, and estimating it a priori may be computationally expensive, which may affect the convergence rate and applicability of the method.
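For orientation, the classical two-projection extragradient iteration with a constant step size can be sketched in Python as follows. This is a minimal illustration, not code from the article: the test operator $A(x_1, x_2) = (x_2, -x_1)$ (monotone and 1-Lipschitz), the feasible box $[-1, 1]^2$, the step size 0.5 and all function names are assumptions. Note that the step size has to be chosen below the reciprocal of the Lipschitz constant, which is exactly the dependence discussed above.

```python
import math


def project_box(x, lo=-1.0, hi=1.0):
    """Metric projection onto the box [lo, hi]^n (componentwise clipping)."""
    return [min(max(v, lo), hi) for v in x]


def rotation_operator(x):
    """Assumed test operator A(x1, x2) = (x2, -x1); monotone, Lipschitz constant 1."""
    return [x[1], -x[0]]


def extragradient(op, project, x0, step, iterations):
    """Two-projection extragradient iteration with a constant step size:
    y = P_C(x - step*A(x)),  x_next = P_C(x - step*A(y))."""
    x = list(x0)
    for _ in range(iterations):
        ax = op(x)
        y = project([xi - step * ai for xi, ai in zip(x, ax)])
        ay = op(y)
        x = project([xi - step * ai for xi, ai in zip(x, ay)])
    return x


# step = 0.5 < 1/L with L = 1 (assumed values for this toy problem)
x_final = extragradient(rotation_operator, project_box, [1.0, 1.0], 0.5, 300)
residual = math.hypot(*x_final)  # distance to the solution (0, 0) of this toy problem
print(residual)
```

Each iteration needs two projections onto the feasible set, which is the cost that the subgradient extragradient method reduces by replacing the second projection with a projection onto a half-space.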

The objective of this study is to solve the variational inequalities involving a quasimonotone operator in an infinite-dimensional Hilbert space. We prove that the proposed iterative sequence generated by the subgradient extragradient algorithms for solving variational inequalities involving quasimonotone operators converges strongly to a solution. Subgradient extragradient algorithms use both the monotone and the new non-monotone variable step size rule. For the proposed algorithms, we present a set of computational experiments.

2. Preliminaries

For each the following inequality holds:

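The metric projection $P_{\mathcal{C}}(u)$ of a point $u \in \mathcal{H}$ onto the closed convex set $\mathcal{C}$ is defined by:

$$P_{\mathcal{C}}(u) = \arg\min \{ \|u - y\| : y \in \mathcal{C} \}.$$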
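As a concrete instance of a metric projection, the Python sketch below projects a point onto a closed ball centred at the origin and checks numerically the standard variational characterization of the projection, namely that the inner product of $u - P(u)$ with $y - P(u)$ is nonpositive for every $y$ in the set. The ball, the radius, the sample points and the function names are assumptions made for illustration only.

```python
import math


def inner(a, b):
    """Euclidean inner product of two vectors given as lists."""
    return sum(x * y for x, y in zip(a, b))


def project_ball(u, radius):
    """Metric projection of u onto the closed ball {y : ||y|| <= radius}."""
    norm = math.sqrt(inner(u, u))
    if norm <= radius:
        return list(u)
    return [radius * v / norm for v in u]


u = [3.0, 4.0]
p = project_ball(u, 1.0)  # approximately [0.6, 0.8]

# Points of the unit ball used to test <u - p, y - p> <= 0.
test_points = [[0.0, 0.0], [1.0, 0.0], [0.0, -1.0], [0.6, 0.8], [-0.6, 0.8]]
residual_vector = [ui - pi for ui, pi in zip(u, p)]
worst = max(inner(residual_vector, [yi - pi for yi, pi in zip(y, p)])
            for y in test_points)
print(p, worst)
```

The largest inner product is attained at the projection itself, where it vanishes, which is consistent with the characterization.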

Lemma 1.

[28] For any and . Then:

- (i)

- (ii)

Lemma 2.

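[29] Assume that $\{a_n\} \subset [0, +\infty)$ is a sequence satisfying the following inequality:

$$a_{n+1} \le (1 - b_n) a_n + b_n c_n, \quad \forall n \in \mathbb{N}.$$

Moreover, two sequences $\{b_n\} \subset (0, 1)$ and $\{c_n\} \subset \mathbb{R}$ meet the following conditions:

$$\sum_{n=1}^{\infty} b_n = +\infty \quad \text{and} \quad \limsup_{n \to \infty} c_n \le 0.$$

Then, $\lim_{n \to \infty} a_n = 0.$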

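Lemma 3.

[30] Let $\{a_n\}$ be a sequence of real numbers such that there exists a subsequence $\{n_i\}$ of $\{n\}$ with

$$a_{n_i} < a_{n_i + 1}, \quad \forall i \in \mathbb{N}.$$

Then, there exists a nondecreasing sequence $\{m_k\} \subset \mathbb{N}$ such that $m_k \to +\infty$ as $k \to +\infty$ and the following inequalities are satisfied for all sufficiently large $k \in \mathbb{N}$:

$$a_{m_k} \le a_{m_k + 1} \quad \text{and} \quad a_k \le a_{m_k + 1}.$$

Indeed, $m_k = \max \{ j \le k : a_j \le a_{j+1} \}.$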

3. Main Results

In this section, we propose two new methods for solving quasimonotone variational inequalities in real Hilbert spaces and prove strong convergence theorems. Both methods use the monotonic self-adaptive step rule to make the method independent of the Lipschitz constant.
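The following Python sketch shows one common form of a Mann-type subgradient extragradient iteration with a monotone self-adaptive step size. It is an illustration under stated assumptions and not a transcription of the algorithms of this section: the update formulas, the parameters `gamma0`, `mu`, `alpha` and the sequence `beta`, the test operator $A(x_1, x_2) = (x_2, -x_1)$ and the feasible box $[-1, 1]^2$ are all assumptions. The step size is updated from the ratio of point distances to image distances, so no Lipschitz constant is supplied.

```python
import math


def inner(a, b):
    return sum(x * y for x, y in zip(a, b))


def norm(v):
    return math.sqrt(inner(v, v))


def project_box(x, lo=-1.0, hi=1.0):
    """Metric projection onto the box [lo, hi]^n."""
    return [min(max(c, lo), hi) for c in x]


def project_halfspace(w, normal, anchor):
    """Project w onto the half-space {v : <normal, v - anchor> <= 0}."""
    nn = inner(normal, normal)
    excess = inner(normal, [wi - ai for wi, ai in zip(w, anchor)])
    if nn == 0.0 or excess <= 0.0:
        return list(w)  # w already lies in the half-space
    return [wi - excess / nn * ni for wi, ni in zip(w, normal)]


def rotation_operator(x):
    """Assumed test operator; monotone (hence quasimonotone) and Lipschitz."""
    return [x[1], -x[0]]


def mann_subgradient_extragradient(op, project, x0, gamma0=0.5, mu=0.5,
                                   alpha=0.5, iterations=300):
    x, gamma = list(x0), gamma0
    steps = [gamma]
    for n in range(iterations):
        beta = 1.0 / (n + 3)  # assumed: beta_n -> 0, sum beta_n = inf, alpha + beta_n < 1
        ax = op(x)
        shifted = [xi - gamma * ai for xi, ai in zip(x, ax)]
        y = project(shifted)  # the only projection onto the feasible set
        ay = op(y)
        normal = [si - yi for si, yi in zip(shifted, y)]
        z = project_halfspace([xi - gamma * ai for xi, ai in zip(x, ay)], normal, y)
        # Monotone self-adaptive rule: the step size can only decrease.
        image_gap = norm([a - b for a, b in zip(ax, ay)])
        if image_gap > 0.0:
            gamma = min(gamma, mu * norm([a - b for a, b in zip(x, y)]) / image_gap)
        steps.append(gamma)
        # Mann-type averaging step.
        x = [(1.0 - alpha - beta) * xi + alpha * zi for xi, zi in zip(x, z)]
    return x, steps


solution, steps = mann_subgradient_extragradient(rotation_operator, project_box, [1.0, 1.0])
print(norm(solution), steps[-1])
```

In this toy problem the only solution is the origin, so the norm of the returned point measures the error directly, and the recorded step sizes form a nonincreasing sequence bounded away from zero.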

Lemma 4.

A sequence generated by (2) is convergent and monotonically decreasing.

Proof.

Due to the Lipschitz continuity mapping , there exists a real fixed number Suppose that such that:

We can easily see that the sequence is monotonically decreasing and bounded. Hence, sequence is convergent to some □

Lemma 5.

Let a mapping satisfy the conditions (1)–(4) and a sequence be generated by Algorithm 1. For each we have:

Algorithm 1 (Monotonic Explicit Mann-Type Subgradient Extragradient Method)

Proof.

Consider that:

Since we have:

The above expression implies that:

From Expressions (3) and (5), we obtain:

Since we have:

Due to the definition of on , we obtain:

Thus, we have:

Combining Expressions (6) and (7), we obtain:

Now, taking we have:

Combining Expressions (8) and (9), we obtain:

□

Lemma 6.

Let be an operator that satisfies the conditions (1)–(4). If there exists a subsequence weakly convergent to and , then

Proof.

Since is weakly convergent to and due to the sequence is also weakly convergent to Next, we need to prove that By the value of we have:

that is equivalent to

The above inequality implies that:

Thus, we obtain:

By the use of and in (13), we have:

Furthermore, it implies that:

Since Thus, we have:

and together with (15) and (16), we obtain:

Moreover, let us take a positive sequence that is decreasing and convergent to zero. For each there exists a least positive integer denoted by such that

Since is a decreasing sequence and it is easy to see that the sequence is increasing, if there exists a natural number such that for all Consider that:

Due to the above definition, we have:

Moreover, from Expressions (18) and (20) for all we have:

By the definition of quasimonotone, we have:

For all we have

Due to the fact that converges weakly to with being weakly sequentially continuous on the set we obtain that converges weakly to Let ; it implies that:

Since and we have:

By letting in (23), we obtain:

Let be an arbitrary element and for let:

Then and from (26) we have:

Hence:

Let Then along a line segment. By the continuity of an operator, converges to as It follows from (29) that:

Therefore is a solution of problem (1). □

Theorem 1.

Let be an operator that satisfies the conditions (1)–(4). Then, generated by Algorithm 1 converges strongly to

Proof.

By Lemma 5, we have:

The above expression is obtained due to and there exists a number , such that:

Thus, there exists a number such that

It is given that , we have:

Next, we need to compute the following:

Substituting (31) into (35), we obtain:

The above expression implies that:

Combining Expressions (33) and (37), we obtain:

Thus, the above expression implies that is a bounded sequence. Indeed, by the use of the definition of we have:

By the use of Expression (35), we have:

Combining Expressions (39) and (40) (for some ), we obtain:

Assume that Thus, we obtain:

where Thus, we obtain:

Next, we need to evaluate:

Combining Expressions (43) and (44), we obtain:

Case 1: Suppose that there exists a fixed number () such that:

Thus, exists. By the use of Expression (41), we have:

By the use of limit existence of and , we can deduce that:

Consequently, we have:

It follows from Expression (49) and that:

which gives that

It is given that ; we thus have:

Since is a bounded sequence, there exists a subsequence that weakly converges to some By using Lemma 6, we have

By the use of It follows that:

By the use of Expressions (45), (54) and Lemma 2 we deduce that as

Case 2: Suppose that there exists a subsequence of such that:

By Lemma 3 there exists a sequence () such that

From Expression (41), we have:

Due to we can deduce the following:

It follows that:

It continues from that:

By using a similar argument as in Case 1, we have

By using Expressions (45) and (55), we have:

It follows that:

Since and is bounded, we have:

By using Expressions (55) and (63), we obtain:

As a result as This completes the proof of the theorem. □

Theorem 2.

Let a mapping satisfy the conditions (1)–(4). Then, the sequence generated by Algorithm 2 converges strongly to

Algorithm 2 (Inertial Monotonic Explicit Subgradient Extragradient Method)
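Inertial schemes typically start each iteration from an extrapolated point built from the last two iterates. The Python sketch below shows one commonly used way of choosing the extrapolation factor so that the inertial displacement stays below a prescribed tolerance. The rule, the function name `inertial_point` and the parameters `theta_max` and `epsilon` are assumptions for illustration and are not taken from Algorithm 2.

```python
import math


def inertial_point(x_curr, x_prev, theta_max, epsilon):
    """Return w = x_curr + theta*(x_curr - x_prev) together with theta, where
    theta = min(theta_max, epsilon / ||x_curr - x_prev||) (assumed rule), so that
    theta * ||x_curr - x_prev|| <= epsilon."""
    gap = math.sqrt(sum((a - b) ** 2 for a, b in zip(x_curr, x_prev)))
    theta = theta_max if gap == 0.0 else min(theta_max, epsilon / gap)
    w = [a + theta * (a - b) for a, b in zip(x_curr, x_prev)]
    return w, theta


w, theta = inertial_point([2.0, 0.0], [0.0, 0.0], theta_max=0.5, epsilon=0.1)
print(w, theta)
```

Letting the tolerance shrink faster than the Mann-type control sequence is a typical design choice, because it keeps the inertial term asymptotically negligible in the convergence analysis.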

Proof.

By using the definition of we obtain:

where

Given , it implies that there exists a fixed number such that:

Thus, there exists a finite number such that:

By using Lemma 5, we have:

From Expressions (68) and (70), we have:

Thus, we can infer that is a bounded sequence. Indeed, from Expression (68), we have:

for some Combining Expressions (10) and (72), we have:

From Expression (67), we can write:

From Expressions (70) and (74), we obtain:

Case 1: Assume that there exists a fixed number () such that

The above relation implies that exists and let , for By use of Expression (73), we have:

Due to the existence of the limit of and we deduce that:

It continues from Expression (78) that:

Following that, we evaluate

The above expression implies that:

It is given that we thus have:

By using Lemma 6, we have:

by using the fact that . Thus, using Expression (83) we obtain:

By the use of Expressions (75), (84) and Lemma 2 we deduce that as

Case 2: Consider that there exists a subsequence of such that

By using Lemma 3 there exists a sequence as such that

Similar to Case 1, the expression (77) implies that:

Due to , we can infer the following:

Furthermore, it follows that:

Following that, we must evaluate

The above expression implies that:

By following the same argument as in Case 1, such that

Combining Expressions (75) and (85), we obtain:

Thus, we obtain:

since and is a bounded sequence. Thus, following Expressions (91) and (93), we obtain:

The above expression implies that:

as a result the sequence as This completes the proof of the theorem. □

Next, we introduce variants of Algorithms 1 and 2 in which the step size is chosen adaptively and produces a non-monotone step size sequence that does not require knowledge of the Lipschitz-type constant.
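A non-monotone step size rule of the kind described above can be sketched in Python as follows. The specific formula, in which the previous step size is first relaxed by a summable increment and then capped by a ratio of distances, is an assumption based on rules commonly used in the literature and may differ from the rule analysed in Lemma 7. The names `nonmonotone_step`, `chi`, `mu` and the sample ratios are hypothetical.

```python
def nonmonotone_step(gamma, chi, mu, dist_points, dist_images):
    """Assumed rule: gamma_next = min(gamma + chi, mu*dist_points/dist_images)
    when dist_images > 0, and gamma + chi otherwise."""
    relaxed = gamma + chi
    if dist_images > 0.0:
        return min(relaxed, mu * dist_points / dist_images)
    return relaxed


# Hypothetical local ratios ||A(x) - A(y)|| / ||x - y||; their maximum (4.0)
# plays the role of a Lipschitz constant that the rule never needs to know.
ratios = [2.0, 0.5, 4.0, 1.0, 0.25, 3.0]
gamma, mu = 1.0, 0.5
history = [gamma]
increments = []
for n, ratio in enumerate(ratios):
    chi = 1.0 / (n + 1) ** 2  # summable increments (assumed choice)
    increments.append(chi)
    gamma = nonmonotone_step(gamma, chi, mu, 1.0, ratio)
    history.append(gamma)

print(history)
```

In this run the step size rises again after its first drop, so the sequence is not monotone, yet it stays between min(gamma_0, mu/L) and gamma_0 plus the sum of the increments, which is the kind of two-sided bound that makes convergence of the step sizes plausible.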

Lemma 7.

A sequence generated by (99) is convergent to γ and satisfies the following inequality:

Proof.

The Lipschitz continuity of a mapping and implies that:

By using the mathematical induction on the definition of we have:

Let

and

By the definition of , we have:

That is, the series is convergent. Next, we need to prove the convergence of Let . Due to the reason that we have:

By letting in (98), we have as . This is a contradiction. Due to the convergence of the series and taking in (98) we obtain This completes the proof of the lemma. □

Theorem 3.

Let a mapping satisfy the conditions (1)–(4). Then, generated by Algorithm 3 converges strongly to a solution

Proof.

The proof is analogous to the proof of Theorem 1. □

Algorithm 3 (Non-Monotonic Explicit Mann-Type Subgradient Extragradient Method)

Theorem 4.

Let a mapping satisfy the conditions (1)–(4). Then, generated by Algorithm 4 converges strongly to a solution

Proof.

The proof is analogous to the proof of Theorem 2. □

Algorithm 4 (Inertial Non-Monotonic Explicit Subgradient Extragradient Method)

4. Numerical Illustrations

This section describes the numerical performance of the proposed algorithms in comparison with some related work in the literature, and analyses how variations in the control parameters affect their numerical effectiveness. All computations were carried out in MATLAB R2018b on an HP laptop with an Intel Core(TM) i5-6200 processor and 8.00 GB (7.78 GB usable) of RAM.

Example 1.

Let be a real Hilbert space with with the sequences of real numbers satisfying the following condition:

Assume that a mapping is defined by

where We can easily see that is weakly sequentially continuous on and the solution set is For any we have:

Hence is L-Lipschitz continuous with For any and let such that

since , and it implies that

Consider that

Hence a mapping is quasimonotone on Let and such that

Let us consider the following projection formula:

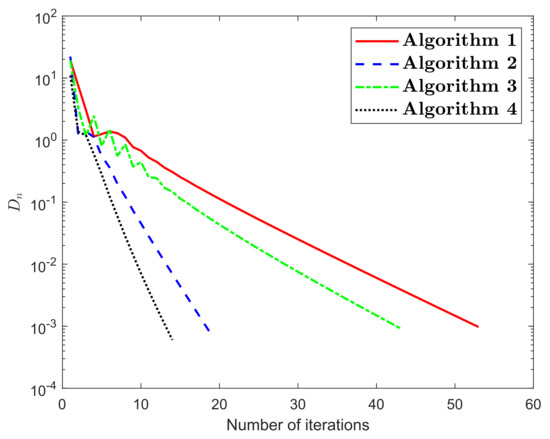

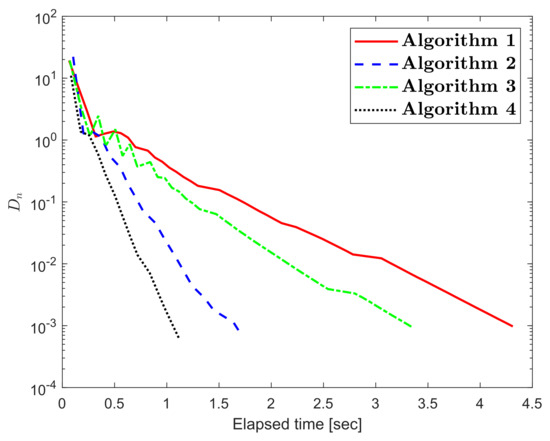

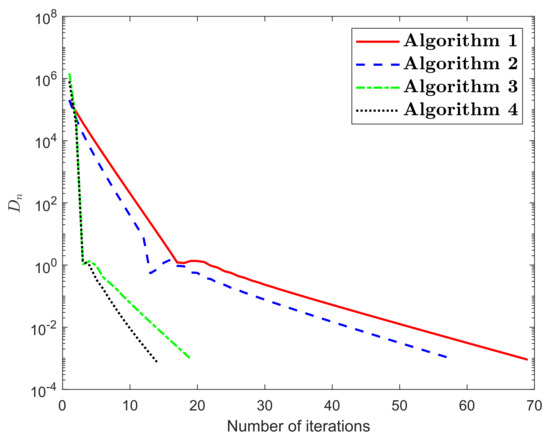

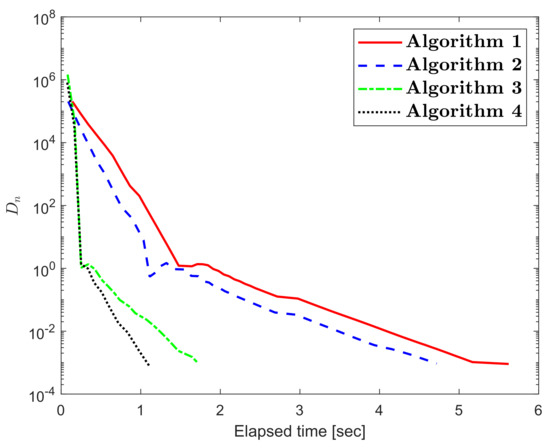

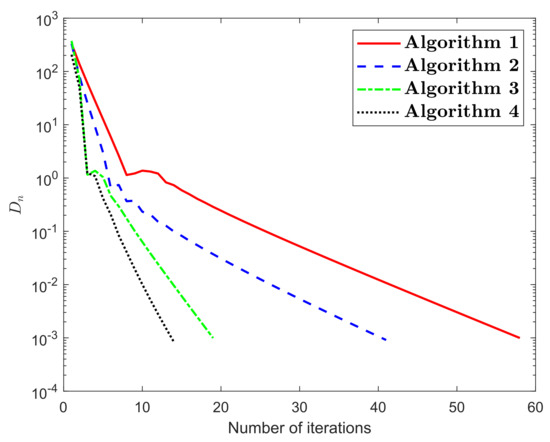

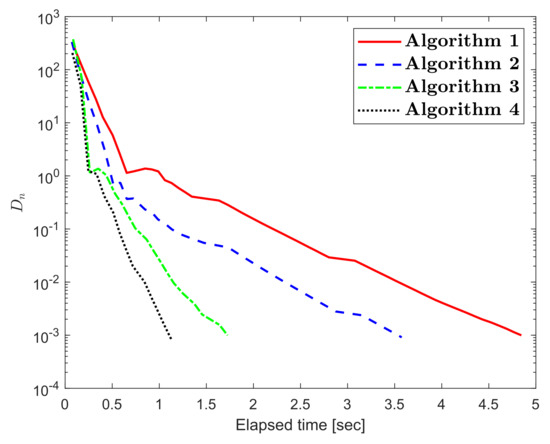

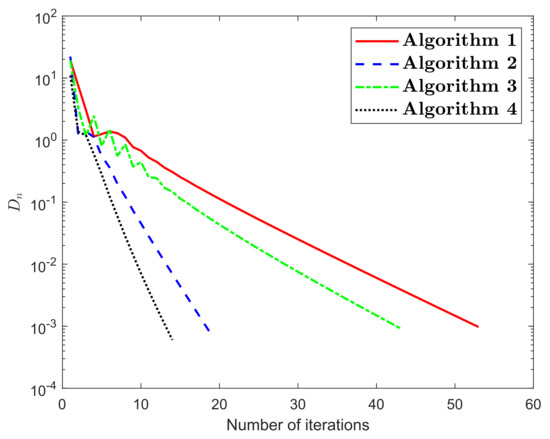

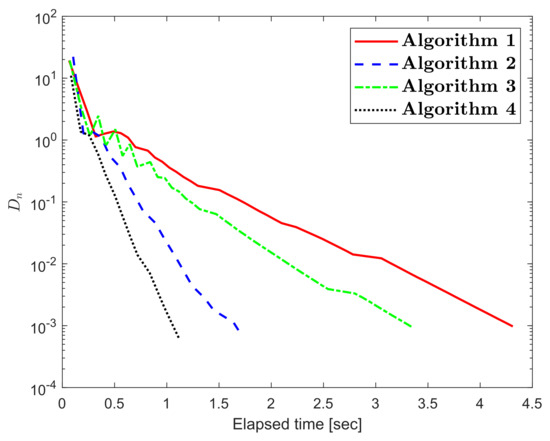

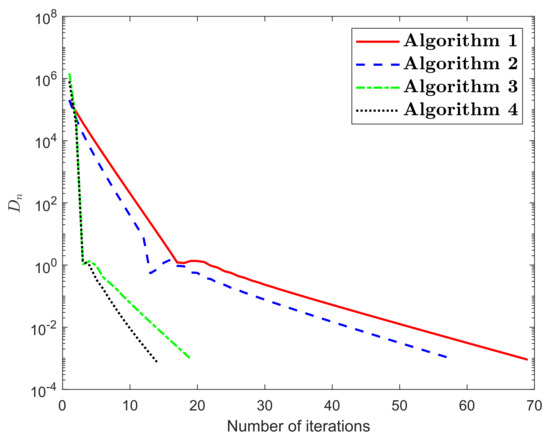

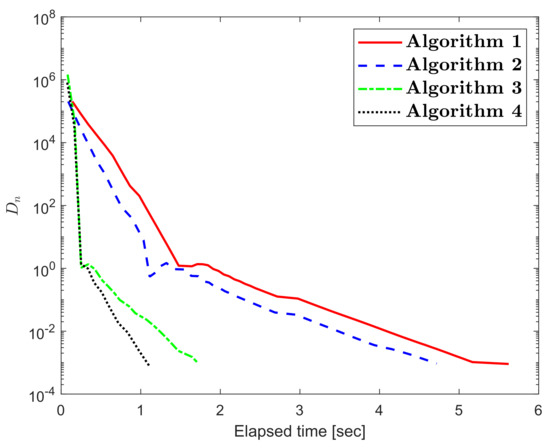

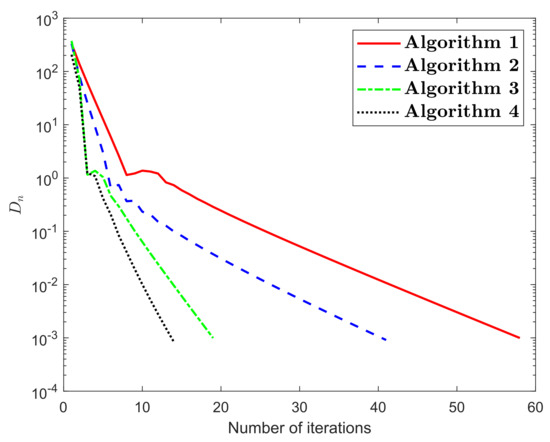

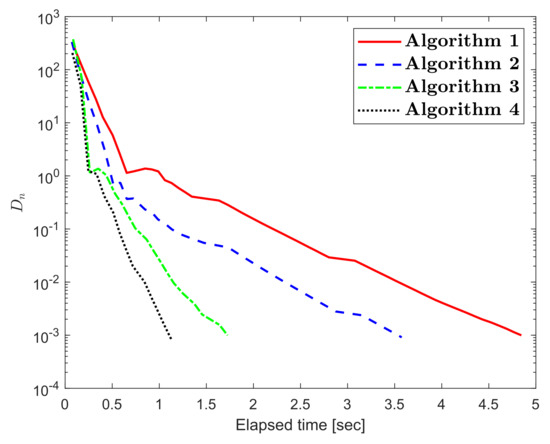

The numerical results for Example 1 are shown in Figures 1–6 and Tables 1 and 2. The control conditions are taken in the following way:

Figure 1.

Numerical illustration of Algorithms 1–4 while

Figure 2.

Numerical illustration of Algorithms 1–4 while

Figure 3.

Numerical illustration of Algorithms 1–4 while

Figure 4.

Numerical illustration of Algorithms 1–4 while

Figure 5.

Numerical illustration of Algorithms 1–4 while

Figure 6.

Numerical illustration of Algorithms 1–4 while

Table 1.

Numerical values for Example 1.

Table 2.

Numerical values for Example 1.

- (i) Algorithm 1:

- (ii) Algorithm 3:

- (iii) Algorithm 2:

- (iv) Algorithm 4:

5. Conclusions

We formulated four explicit extragradient-type methods for finding a numerical solution to quasimonotone variational inequalities in a real Hilbert space. These methods can be regarded as modifications of the two-step extragradient-type method. Strong convergence results corresponding to the proposed methods have been proven. Numerical experiments were carried out to assess the numerical advantage of the proposed algorithms. The computational results indicate that the non-monotone variable step size rule improves the effectiveness of the iterative sequence in this context.

Author Contributions

Conceptualization, N.W., I.K.A., M.S., W.D. and C.I.A.; methodology, N.W., I.K.A. and C.I.A.; software, N.W., I.K.A. and M.S.; validation, M.S., W.D. and C.I.A.; formal analysis, N.W., I.K.A. and C.I.A.; investigation, N.W., I.K.A. and W.D.; writing (original draft preparation), N.W., I.K.A., M.S., W.D. and C.I.A.; writing (review and editing), N.W., I.K.A., M.S. and W.D.; visualization, I.K.A., M.S. and C.I.A.; supervision and funding, N.W., I.K.A. and C.I.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

We would like to thank the associate editor and the referee(s) for their comments and suggestions on the manuscript. Nopparat Wairojjana would like to thank Valaya Alongkorn Rajabhat University under the Royal Patronage (VRU).

Conflicts of Interest

The authors declare no competing interests.

References

1. Stampacchia, G. Formes bilinéaires coercitives sur les ensembles convexes. C. R. Hebd. Seances Acad. Sci. 1964, 258, 4413–4416.

2. Konnov, I.V. On systems of variational inequalities. Rus. Math. Izvestiia-Vysshie Uchebnye Zavedeniia Matematika 1997, 41, 77–86.

3. Kassay, G.; Kolumbán, J.; Páles, Z. On Nash stationary points. Publ. Math. 1999, 54, 267–279.

4. Kassay, G.; Kolumbán, J.; Páles, Z. Factorization of Minty and Stampacchia variational inequality systems. Eur. J. Oper. Res. 2002, 143, 377–389.

5. Kinderlehrer, D.; Stampacchia, G. An Introduction to Variational Inequalities and Their Applications; Society for Industrial and Applied Mathematics: Philadelphia, PA, USA, 2000.

6. Konnov, I. Equilibrium Models and Variational Inequalities; Elsevier: Amsterdam, The Netherlands, 2007; Volume 210.

7. Elliott, C.M. Variational and Quasivariational Inequalities Applications to Free-Boundary Problems (Claudio Baiocchi and António Capelo). SIAM Rev. 1987, 29, 314–315.

8. Nagurney, A. Network Economics: A Variational Inequality Approach; Springer: Berlin, Germany, 1999.

9. Takahashi, W. Introduction to Nonlinear and Convex Analysis; Yokohama Publishers: Yokohama, Japan, 2009.

10. Argyros, I.K.; Magreñán, Á.A. Iterative Methods and Their Dynamics with Applications; CRC Press: Boca Raton, FL, USA, 2017.

11. Argyros, I.K.; Magreñán, Á.A. Iterative Algorithms II; Nova Science Publishers: Hauppauge, NY, USA, 2016.

12. Argyros, I.K.; Magreñán, Á.A. On the convergence of an optimal fourth-order family of methods and its dynamics. Appl. Math. Comput. 2015, 252, 336–346.

13. Argyros, I.K.; Magreñán, Á.A. Extending the convergence domain of the Secant and Moser method in Banach Space. J. Comput. Appl. Math. 2015, 290, 114–124.

14. Rehman, H.; Kumam, P.; Abubakar, A.B.; Cho, Y.J. The extragradient algorithm with inertial effects extended to equilibrium problems. Comput. Appl. Math. 2020, 39.

15. Rehman, H.; Kumam, P.; Kumam, W.; Shutaywi, M.; Jirakitpuwapat, W. The inertial sub-gradient extra-gradient method for a class of pseudo-monotone equilibrium problems. Symmetry 2020, 12, 463.

16. Rehman, H.; Kumam, P.; Argyros, I.K.; Deebani, W.; Kumam, W. Inertial extra-gradient method for solving a family of strongly pseudomonotone equilibrium problems in real Hilbert spaces with application in variational inequality problem. Symmetry 2020, 12, 503.

17. Rehman, H.; Kumam, P.; Argyros, I.K.; Alreshidi, N.A.; Kumam, W.; Jirakitpuwapat, W. A self-adaptive extra-gradient methods for a family of pseudomonotone equilibrium programming with application in different classes of variational inequality problems. Symmetry 2020, 12, 523.

18. Rehman, H.; Kumam, P.; Argyros, I.K.; Shutaywi, M.; Shah, Z. Optimization based methods for solving the equilibrium problems with applications in variational inequality problems and solution of Nash equilibrium models. Mathematics 2020, 8, 822.

19. Rehman, H.; Kumam, P.; Shutaywi, M.; Alreshidi, N.A.; Kumam, W. Inertial optimization based two-step methods for solving equilibrium problems with applications in variational inequality problems and growth control equilibrium models. Energies 2020, 13, 3292.

20. Korpelevich, G. The extragradient method for finding saddle points and other problems. Matecon 1976, 12, 747–756.

21. Censor, Y.; Gibali, A.; Reich, S. The subgradient extragradient method for solving variational inequalities in Hilbert space. J. Optim. Theory Appl. 2010, 148, 318–335.

22. Censor, Y.; Gibali, A.; Reich, S. Strong convergence of subgradient extragradient methods for the variational inequality problem in Hilbert space. Optim. Methods Softw. 2011, 26, 827–845.

23. Censor, Y.; Gibali, A.; Reich, S. Extensions of Korpelevich extragradient method for the variational inequality problem in Euclidean space. Optimization 2012, 61, 1119–1132.

24. Tseng, P. A Modified Forward-Backward Splitting Method for Maximal Monotone Mappings. SIAM J. Control Optim. 2000, 38, 431–446.

25. Moudafi, A. Viscosity Approximation Methods for Fixed-Points Problems. J. Math. Anal. Appl. 2000, 241, 46–55.

26. Zhang, L.; Fang, C.; Chen, S. An inertial subgradient-type method for solving single-valued variational inequalities and fixed point problems. Numer. Algorithms 2018, 79, 941–956.

27. Iusem, A.N.; Svaiter, B.F. A variant of Korpelevich’s method for variational inequalities with a new search strategy. Optimization 1997, 42, 309–321.

28. Bauschke, H.H.; Combettes, P.L. Convex Analysis and Monotone Operator Theory in Hilbert Spaces; Springer: Berlin, Germany, 2011; Volume 408.

29. Xu, H.K. Another control condition in an iterative method for nonexpansive mappings. Bull. Aust. Math. Soc. 2002, 65, 109–113.

30. Maingé, P.E. Strong Convergence of Projected Subgradient Methods for Nonsmooth and Nonstrictly Convex Minimization. Set-Valued Anal. 2008, 16, 899–912.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).