1. Introduction

As a core technology of web information retrieval, Google’s PageRank model (the PageRank problem) uses the original hyperlink structure of the World Wide Web to determine the importance of each page, and it has received considerable attention over the last two decades. The core of the PageRank problem is to compute a dominant eigenvector (the PageRank vector) of the Google matrix $A$, classically by the power method [1], i.e., to solve

$$A x = x, \qquad A = \alpha P + (1-\alpha)\, v e^{T}, \qquad \|x\|_1 = 1,$$

where $x$ is the PageRank vector, $e$ is a column vector with all elements equal to 1, $v$ is a personalized vector whose elements sum to 1, $P$ is a column-stochastic matrix (i.e., the dangling nodes have been replaced by columns with entries $1/n$), and $\alpha \in (0, 1)$ is a damping factor.
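To make the formulation concrete, the following minimal Python/NumPy sketch applies the power method to $A = \alpha P + (1-\alpha)ve^T$ without ever forming $A$: because $e^T x = 1$ is preserved, $Ax = \alpha Px + (1-\alpha)v$. The helper names `google_p` and `pagerank_power`, the 4-page link matrix, the uniform choice $v = e/n$ and the tolerance are illustrative assumptions, not taken from this paper.

```python
import numpy as np

def google_p(links):
    """Column-stochastic P from a 0/1 link matrix (column j = out-links of page j).
    Dangling columns (pages without out-links) are replaced by columns of 1/n."""
    n = links.shape[0]
    out = links.sum(axis=0)
    safe = np.where(out > 0, out, 1.0)          # avoid division by zero
    return np.where(out > 0, links / safe, 1.0 / n)

def pagerank_power(P, alpha=0.85, v=None, tol=1e-8, max_iter=10_000):
    """Power method for A x = x with A = alpha*P + (1-alpha)*v*e^T, ||x||_1 = 1.
    A is never formed: since e^T x = 1, A x = alpha*P x + (1-alpha)*v."""
    n = P.shape[0]
    v = np.full(n, 1.0 / n) if v is None else v
    x = np.full(n, 1.0 / n)                     # unit 1-norm starting vector
    for k in range(1, max_iter + 1):
        y = alpha * (P @ x) + (1 - alpha) * v   # y = A x
        res = np.linalg.norm(y - x)             # 2-norm residual ||A x - x||
        x = y / y.sum()                         # keep ||x||_1 = 1 despite round-off
        if res < tol:
            return x, k
    return x, max_iter

links = np.array([[0, 0, 1, 0],
                  [1, 0, 0, 0],
                  [1, 1, 0, 0],
                  [0, 0, 0, 0]], dtype=float)   # the last page is dangling
P = google_p(links)
x, iters = pagerank_power(P, alpha=0.85)
```

Since `pagerank_power` only uses the product `P @ x`, it should also accept a SciPy sparse matrix for `P`, which is the usual setting for web-scale problems.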

As the damping factor $\alpha$ gradually approaches 1, the Google matrix more closely reflects the original hyperlink structure. However, for large $\alpha$, the second eigenvalue $\lambda_2$ of the matrix $A$, which satisfies $|\lambda_2| \le \alpha$, is close to the dominant eigenvalue (equal to 1) [2], so the classical power method converges slowly. To accelerate the power method, many new algorithms have been proposed for computing PageRank. The quadratic extrapolation method proposed by Kamvar et al. [3] accelerates convergence by periodically subtracting estimates of non-dominant eigenvectors from the current iterate of the power method. The authors of [4] provided a theoretical justification for such acceleration methods, generalizing quadratic extrapolation and interpreting it as a Krylov subspace method. Gleich et al. [5] proposed an inner-outer iteration, in which an inner PageRank linear system with a smaller damping factor is solved at each iteration. The inner-outer iteration is a promising framework for accelerating PageRank computations, and a series of methods has been built on it. For example, Gu et al. [6] constructed the power-inner-outer (PIO) method by combining the inner-outer iteration with the power method. Different versions of the Arnoldi algorithm for PageRank computations were first introduced in [7]. Gu and Wang [8] proposed the Arnoldi-Inout (AIO) algorithm by combining the inner-outer iteration with the thick restarted Arnoldi algorithm [9]. Hu et al. [10] proposed a variant of the Power-Arnoldi (PA) algorithm [11] that uses an extrapolation process based on the trace of the Google matrix $A$ [12].
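The inner-outer idea of Gleich et al. [5] can be sketched as follows, as we understand it: the outer loop solves $(I - \beta P)x_{k+1} = (\alpha - \beta)Px_k + (1-\alpha)v$ with a smaller damping factor $\beta < \alpha$, and the inner loop approximates that solve with Richardson steps until the inner residual falls below $\eta$. The function name `inner_outer`, the 2-norm stopping tests, the values $\beta = 0.5$ and $\eta = 10^{-2}$, and the 4-page test matrix are assumptions made for this illustration only.

```python
import numpy as np

def inner_outer(P, alpha=0.99, beta=0.5, v=None, tol=1e-8, eta=1e-2, max_outer=10_000):
    """Inner-outer iteration for A x = x, A = alpha*P + (1-alpha)*v*e^T.

    Outer step: (I - beta*P) x_new = (alpha - beta)*P x + (1 - alpha)*v.
    Inner solve: Richardson steps x <- f + beta*P x until the inner
    residual ||f + beta*P x - x||_2 drops below eta.
    """
    n = P.shape[0]
    v = np.full(n, 1.0 / n) if v is None else v
    x = v.copy()
    y = P @ x
    outer = 0
    while np.linalg.norm(alpha * y + (1 - alpha) * v - x) >= tol and outer < max_outer:
        outer += 1
        f = (alpha - beta) * y + (1 - alpha) * v   # right-hand side of the inner system
        while True:
            x = f + beta * y                        # one inner Richardson step
            y = P @ x                               # one matrix-vector product
            if np.linalg.norm(f + beta * y - x) < eta:
                break
    return alpha * y + (1 - alpha) * v, outer      # one last power-type step

P = np.array([[0.0, 0.0, 1.0, 0.25],
              [0.5, 0.0, 0.0, 0.25],
              [0.5, 1.0, 0.0, 0.25],
              [0.0, 0.0, 0.0, 0.25]])   # column-stochastic; last column was dangling
x, outer_steps = inner_outer(P, alpha=0.99, beta=0.5)
```

If every inner loop stops after a single step, the outer update reduces to $x \leftarrow \alpha Px + (1-\alpha)v$, i.e., a power step; extra inner steps only spend cheaper work on the better-conditioned system with damping factor $\beta$.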

Anderson($m$) acceleration [13,14] has been widely used to accelerate the convergence of fixed-point iterations. Its principle is to store $m$ prior evaluations of the fixed-point map and to compute a linear combination of them to obtain the new iterate; Anderson(0) is the underlying fixed-point iteration itself. When the parameter $m$ becomes large, Anderson($m$) acceleration becomes expensive. Hence, in most applications, $m$ is chosen to be small, and in this paper we use the common choice $m = 1$. In [15], Toth et al. proved that Anderson(1) extrapolation is locally q-linearly convergent. Pratapa et al. [16] developed the Alternating Anderson–Jacobi (AAJ) method, which periodically employs Anderson extrapolation to accelerate the classical Jacobi iteration for sparse linear systems.
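For a generic map $x = g(x)$, a standard form of Anderson(1) uses the residuals $f_k = g(x_k) - x_k$, chooses $\gamma_k$ to minimize $\|f_k - \gamma (f_k - f_{k-1})\|_2$, and sets $x_{k+1} = g(x_k) - \gamma_k\,(g(x_k) - g(x_{k-1}))$; taking $\gamma_k = 0$ recovers Anderson(0). The sketch below is a generic illustration of that update, not the paper's Algorithm 1; the function name `anderson1` and the small linear test problem are hypothetical.

```python
import numpy as np

def anderson1(g, x0, tol=1e-10, max_iter=1000):
    """Anderson(1) for x = g(x):
        x_{k+1} = g(x_k) - gamma_k * (g(x_k) - g(x_{k-1})),
        gamma_k = argmin_gamma ||f_k - gamma * (f_k - f_{k-1})||_2,
    with f_k = g(x_k) - x_k. gamma_k = 0 gives the plain iteration (Anderson(0))."""
    x = np.asarray(x0, dtype=float)
    g_prev = g(x)
    f_prev = g_prev - x
    x = g_prev                                   # first step: plain fixed-point step
    for k in range(1, max_iter + 1):
        gx = g(x)
        f = gx - x                               # fixed-point residual f_k
        if np.linalg.norm(f) < tol:
            return x, k
        df = f - f_prev
        denom = df @ df
        gamma = (df @ f) / denom if denom > 0 else 0.0   # 1-D least-squares solution
        x_new = gx - gamma * (gx - g_prev)
        f_prev, g_prev, x = f, gx, x_new
    return x, max_iter

# Linear test problem x = M x + b; M is symmetric with row sums 0.8, so ||M||_2 = 0.8.
M = np.array([[0.6, 0.2, 0.0],
              [0.2, 0.5, 0.1],
              [0.0, 0.1, 0.7]])
b = np.array([1.0, 2.0, 3.0])
x_aa, it_aa = anderson1(lambda z: M @ z + b, np.zeros(3))
```

For a linear map $g(x) = Mx + b$ with $\|M\|_2 < 1$, the least-squares choice of $\gamma_k$ ensures that each residual norm is at most $\|M\|_2$ times the previous one, the same guarantee as the plain fixed-point iteration, so in this setting the extrapolation cannot slow convergence.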

In this paper, to accelerate the Arnoldi-Inout method for PageRank problems, we use Anderson(1) extrapolation as an accelerator and present a new method that periodically combines Anderson(1) extrapolation with the Arnoldi-Inout method. We call the proposed method the AIOA method; we analyze its construction and convergence behavior in detail, and numerical experiments demonstrate its effectiveness.

The rest of this paper is organized as follows. In Section 2, we briefly review Anderson acceleration and the Arnoldi-Inout method for PageRank problems. In Section 3, we construct the AIOA method and discuss its convergence behavior. In Section 4, we report numerical comparisons. Finally, in Section 5, we give some conclusions.

3. The AIOA Method for Computing PageRank

In this section, we combine the Arnoldi-Inout method with Anderson(1) acceleration. The resulting method, called the AIOA method, can be understood as the Arnoldi-Inout method accelerated by Anderson(1) extrapolation. We first describe its construction and then analyze its convergence behavior.

3.1. The Construction of the AIOA Method

The mechanism of the AIOA method can be described as follows. We first run the Arnoldi-Inout method with a given initial guess to obtain an approximation vector. If this approximation is unsatisfactory, we treat the inner-outer iteration as a fixed-point problem and run Algorithm 1 with this vector as the starting vector to obtain another approximation vector. If the Anderson(1) vector does not work better than the approximation vector of the plain fixed-point problem, we replace it with the latter. If the new approximation vector still does not reach the specified accuracy, we return to the Arnoldi-Inout method with it as the starting vector. This process is repeated until the required accuracy is reached. The detailed procedure is given in Algorithm 3.
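The alternation just described can be outlined in code. In the sketch below, the Arnoldi-Inout phase is replaced by a few plain power steps purely as a stand-in (the thick restarted Arnoldi process is not reproduced), the fixed-point map $g$ is one outer step of the inner-outer iteration, and the Anderson(1) vector is kept only when its residual is smaller than that of the plain fixed-point vector. Using the residual norm as the "works better" criterion, the function names and all parameter values are our assumptions.

```python
import numpy as np

def residual(P, alpha, v, x):
    """2-norm of A x - x, with A = alpha*P + (1-alpha)*v*e^T applied matrix-free."""
    return np.linalg.norm(alpha * (P @ x) + (1 - alpha) * v - x)

def inner_outer_map(P, alpha, beta, v, x, eta=1e-2, max_inner=50):
    """One outer step of the inner-outer iteration, used as the fixed-point map g(x)."""
    f = (alpha - beta) * (P @ x) + (1 - alpha) * v
    y = x
    for _ in range(max_inner):
        y_new = f + beta * (P @ y)               # inner Richardson step
        done = np.linalg.norm(y_new - y) < eta
        y = y_new
        if done:
            break
    return y / y.sum()

def hybrid_sketch(P, alpha=0.99, beta=0.5, tol=1e-8, n_phase1=5, n_aa=5, max_cycles=500):
    n = P.shape[0]
    v = np.full(n, 1.0 / n)
    x = v.copy()

    def g(z):
        return inner_outer_map(P, alpha, beta, v, z)

    for cycle in range(1, max_cycles + 1):
        # Phase 1: STAND-IN for the Arnoldi-Inout method (plain power steps only).
        for _ in range(n_phase1):
            x = alpha * (P @ x) + (1 - alpha) * v
        if residual(P, alpha, v, x) < tol:
            return x, cycle
        # Phase 2: Anderson(1) on g; keep the extrapolated vector only if its
        # residual is smaller than that of the plain fixed-point vector g(x).
        g_prev = g(x)
        f_prev = g_prev - x
        x = g_prev
        for _ in range(n_aa):
            gx = g(x)
            f = gx - x
            df = f - f_prev
            denom = df @ df
            gamma = (df @ f) / denom if denom > 0 else 0.0
            x_aa = gx - gamma * (gx - g_prev)
            x_aa = x_aa / np.linalg.norm(x_aa, 1)  # normalize to unit 1-norm
            if residual(P, alpha, v, x_aa) < residual(P, alpha, v, gx):
                x = x_aa
            else:
                x = gx                             # fall back to the plain iterate
            f_prev, g_prev = f, gx
        if residual(P, alpha, v, x) < tol:
            return x, cycle
    return x, max_cycles

P = np.array([[0.0, 0.0, 1.0, 0.25],
              [0.5, 0.0, 0.0, 0.25],
              [0.5, 1.0, 0.0, 0.25],
              [0.0, 0.0, 0.0, 0.25]])
x, cycles = hybrid_sketch(P)
```

The fallback branch mirrors the safeguard in the text: whenever extrapolation does not help, the step is exactly one inner-outer step, so the sketch never does worse in that step than the unaccelerated fixed-point iteration.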

3.2. Convergence Analysis

The convergence of the Arnoldi-Inout method and that of Anderson acceleration are analyzed in [8,14,15]. In this subsection, we analyze the convergence of the AIOA method. Specifically, the analysis of Algorithm 3 focuses on the switch from the Anderson(1) acceleration back to the Arnoldi-Inout method.

| Algorithm 3 AIOA method |

| (1). Given a unit initial guess , an inner tolerance , an outer tolerance , the size of the subspace , the number of approximate eigenvectors that are retained from one cycle to the next , three parameters and to control the inner-outer iteration. Set . |

| (2). Run Algorithm 2 with the initial vector . If the residual norm satisfies , stop; otherwise, continue. |

| (3). Run Algorithm 1 with as the starting guess, where is the approximation vector obtained in step (2). |

| 3.1. |

| 3.2. While |

| 3.3. |

| 3.4. Repeat |

| 3.5. |

| 3.6. Until |

| 3.7. ; |

| 3.8. |

| 3.9. |

| 3.10. End While |

| 3.11. Compute |

| 3.12. Compute that satisfies . |

| 3.13. Compute . |

| 3.14. If |

| 3.15. |

| 3.16. else |

| 3.17. |

| 3.18. End If |

| 3.19. If , stop; otherwise, go back to step (2) with the vector as the starting vector. |
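The extrapolation step in Algorithm 3 requires the coefficient of a small least-squares problem, and Section 4 notes that a QR decomposition is used in the AIOA algorithm. Assuming the QR factorization serves this least-squares subproblem, the sketch below shows one generic way to solve it for Anderson($m$) with NumPy's reduced QR, Anderson(1) being the one-column case. The function name `anderson_mixing` and the history layout are hypothetical, and the exact quantities in Algorithm 3 may differ.

```python
import numpy as np

def anderson_mixing(g_hist, f_hist):
    """Anderson(m) update from m+1 stored evaluations (oldest first).

    g_hist[i] = g(x_i) and f_hist[i] = g(x_i) - x_i. Solves
        gamma = argmin ||f_k - dF @ gamma||_2
    through a reduced QR factorization dF = Q R, then returns
        x_new = g(x_k) - dG @ gamma.
    """
    G = np.column_stack(g_hist)
    F = np.column_stack(f_hist)
    dF = np.diff(F, axis=1)          # columns f_{i+1} - f_i
    dG = np.diff(G, axis=1)          # columns g_{i+1} - g_i
    Q, R = np.linalg.qr(dF)          # reduced QR: Q is n x m, R is m x m
    gamma = np.linalg.solve(R, Q.T @ F[:, -1])
    return G[:, -1] - dG @ gamma, gamma

# Anderson(1): two stored evaluations, so dF has a single column.
g_hist = [np.array([0.20, 0.30, 0.50]), np.array([0.25, 0.30, 0.45])]
f_hist = [np.array([0.05, 0.00, -0.05]), np.array([0.01, 0.00, -0.01])]
x_new, gamma = anderson_mixing(g_hist, f_hist)   # gamma[0] ≈ -0.25, x_new ≈ [0.2625, 0.3, 0.4375]
```

For $m = 1$, $R$ is the scalar $\pm\|\Delta f\|_2$ and the formula collapses to $\gamma = \Delta f^T f_k / \|\Delta f\|_2^2$; the QR route pays off mainly for larger $m$, where it avoids forming the normal equations.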

Let $\mathbb{P}_k$ denote the set of polynomials whose degree does not exceed $k$, and let $\sigma(A)$ represent the set of eigenvalues of the matrix $A$. Assume the eigenvalues of $A$ are sorted in decreasing order of modulus, $1 = \lambda_1 > |\lambda_2| \ge \cdots \ge |\lambda_n|$. The following theorem, due to Saad [20], describes the relationship between the dominant eigenvector $u_1$ and the Krylov subspace $\mathcal{K}_m(A, v_1)$.

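Theorem 1. [20] Assume that $A$ is diagonalizable and that the initial vector $v_1$ in Arnoldi’s method has the expansion $v_1 = \sum_{i=1}^{n} \gamma_i u_i$ with respect to the eigenbasis $\{u_i\}_{i=1}^{n}$, in which $\|u_i\|_2 = 1$ and $\gamma_1 \neq 0$. Then the following inequality holds:

$$\|(I - \mathcal{P}_m)\, u_1\|_2 \le \xi_1\, \epsilon_1^{(m)},$$

where $\mathcal{P}_m$ is the orthogonal projector onto the subspace $\mathcal{K}_m(A, v_1)$,

$$\xi_1 = \sum_{i=2}^{n} \frac{|\gamma_i|}{|\gamma_1|}, \qquad \epsilon_1^{(m)} = \min_{p \in \mathbb{P}_{m-1},\; p(\lambda_1) = 1} \; \max_{\lambda \in \sigma(A) \setminus \{\lambda_1\}} |p(\lambda)|.$$

To analyze the convergence speed of our algorithm, we also need the following two theorems on the spectral properties of the Google matrix.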

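Theorem 2. [21] Assume that the spectrum of the column-stochastic matrix $P$ is $\{1, \mu_2, \ldots, \mu_n\}$. Then the spectrum of the matrix $A = \alpha P + (1-\alpha) v e^T$ is $\{1, \alpha\mu_2, \ldots, \alpha\mu_n\}$, where $0 < \alpha < 1$ and $v$ is a vector with nonnegative elements such that $e^T v = 1$.

Theorem 3. [2] Let $P$ be an $n \times n$ column-stochastic matrix. Let $\alpha$ be a real number such that $0 \le \alpha \le 1$. Let $E = v e^T$ be the $n \times n$ rank-one column-stochastic matrix, where $e$ is the $n$-vector whose elements are all ones and $v$ is an $n$-vector whose elements are all nonnegative and sum to 1. Let $A = \alpha P + (1-\alpha) E$ be the $n \times n$ column-stochastic matrix. Then its dominant eigenvalue is $\lambda_1 = 1$, and $|\lambda_2| \le \alpha$.

In the Arnoldi-Inout method, let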

from the previous thick restarted Arnoldi method be the starting vector for the inner-outer iteration. Next, the inner-outer method produces the vector

, where

and

. The derivation of the iteration matrix

can be found in [5]. In the proposed method, we run Algorithm 1 with the vector

as the initial vector. In the Anderson(1) acceleration, we treat the inner-outer iteration as a fixed-point iteration, so that the new vector

is produced, where

is the normalizing factor. If the vector

works better than the vector

, then, as specified in Algorithm 3, we set

, which means that the Anderson(1) acceleration reduces to the inner-outer iteration, and the convergence of Algorithm 3 follows directly in this case. Hence, it remains to discuss the convergence in the other case, when the vector

works better than the vector

.

In the next cycle of the AIOA algorithm, a

-step Arnoldi process is run with

as the starting vector, and the new Krylov subspace

is constructed. Next, we present a theorem that establishes the convergence of the AIOA method.

Theorem 4. Suppose that the matrix $A$ is diagonalizable, and denote by

the orthogonal projector onto the subspace

. Then, under the notation of Theorem 1, we have

where

,

,

and

.

Proof of Theorem 4. For any

, there exists

such that

where

is the expansion of

within the eigenbasis

.

As shown in [5] and [8], we have

then

where we use

.

Assume that

is an eigenvalue of

, and from Theorem 2,

, then the matrix

has eigenvalues

such that

Using the fact that

and

, we have

and

. Let

then, according to Theorem 3 and the derivation in [8], we have

, such that

Substituting (7) and (8) into (6), we obtain

and then

where we let

satisfy

,

and

.
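The proof relies on two spectral facts about $A = \alpha P + (1-\alpha)ve^T$: its eigenvalues other than 1 are $\alpha$ times the eigenvalues of $P$ other than 1 (Theorem 2), and $|\lambda_2| \le \alpha$ (Theorem 3). The following small numerical check illustrates both; the random dense column-stochastic $P$, the seed and the personalization vector are arbitrary test data of our own choosing, and the check is an illustration, not part of the proof.

```python
import numpy as np

rng = np.random.default_rng(0)
n, alpha = 6, 0.85
P = rng.random((n, n))
P /= P.sum(axis=0)                        # column-stochastic: every column sums to 1
v = rng.random(n)
v /= v.sum()                              # nonnegative, e^T v = 1
A = alpha * P + (1 - alpha) * np.outer(v, np.ones(n))

mu = np.linalg.eigvals(P)
mu_rest = np.delete(mu, np.argmin(np.abs(mu - 1.0)))   # remove P's eigenvalue 1
predicted = np.concatenate(([1.0], alpha * mu_rest))    # spectrum predicted by Theorem 2
lam = np.linalg.eigvals(A)

def set_gap(a, b):
    """Hausdorff-type distance between two finite point sets in the complex plane."""
    d = np.abs(a[:, None] - b[None, :])
    return max(d.min(axis=1).max(), d.min(axis=0).max())

lam = lam[np.argsort(-np.abs(lam))]       # sort by decreasing modulus
print(set_gap(lam, predicted))            # round-off level: the two spectra agree
print(lam[0].real, abs(lam[1]) <= alpha)  # dominant eigenvalue 1 and |lambda_2| <= alpha
```

Because this $P$ is strictly positive, its eigenvalue 1 is simple and $|\mu_2| < 1$, so here the bound of Theorem 3 even holds strictly.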

Remark 1. Comparing (4) with (5), we see that the convergence speed of our method can be improved by a factor of at least

when switching from the Anderson(1) acceleration to the Arnoldi-Inout method.

4. Numerical Experiments

In this section, we first discuss an appropriate choice of the parameter

and then test the effectiveness of the AIOA method. The thick restarted Arnoldi method has two parameters,

and

, that need to be chosen; however, they affect the Arnoldi-Inout method [8] and the AIOA method in the same way, since both methods use the thick restarted Arnoldi procedure. In addition, the cost grows as the parameters

and

increase, so they usually take small values. Therefore, we do not discuss the choice of the two parameters

and

in detail, and we set

and

for all test examples.

All numerical experiments were performed in MATLAB R2018a on a 2.10 GHz CPU with 16 GB of RAM.

Table 1 lists the characteristics of the test matrices, where

represents the matrix size,

denotes the number of nonzero elements and

is the density, defined by

All test matrices are available from https://sparse.tamu.edu/ (accessed on 14 July 2020). For fairness, the same initial guess

with

was used for all methods. The damping factors were chosen as

and

in all numerical experiments. The stopping criterion was based on the 2-norm of the residual, and the prescribed outer tolerance was

. For the inner-outer iterations, the inner residual tolerance was

, and the smaller damping factor was

. The parameters chosen to control the flip-flop were

and

. In each cycle of the Arnoldi-Inout method [8] and the AIOA method, the thick restarted Arnoldi procedure was run twice. In the AIOA method, a QR decomposition was used to compute

.

4.1. The Selection of Parameter

In this subsection, we discuss the selection of the parameter value

by analyzing the numerical results of the Arnoldi-Inout method [8] (denoted as “AIO”) and the AIOA method for the web-Stanford matrix, which contains 281,903 pages and 2,312,497 links.

Table 2 lists the number of matrix–vector products (MV) of the AIO method and the AIOA method for the web-Stanford matrix when

and

.

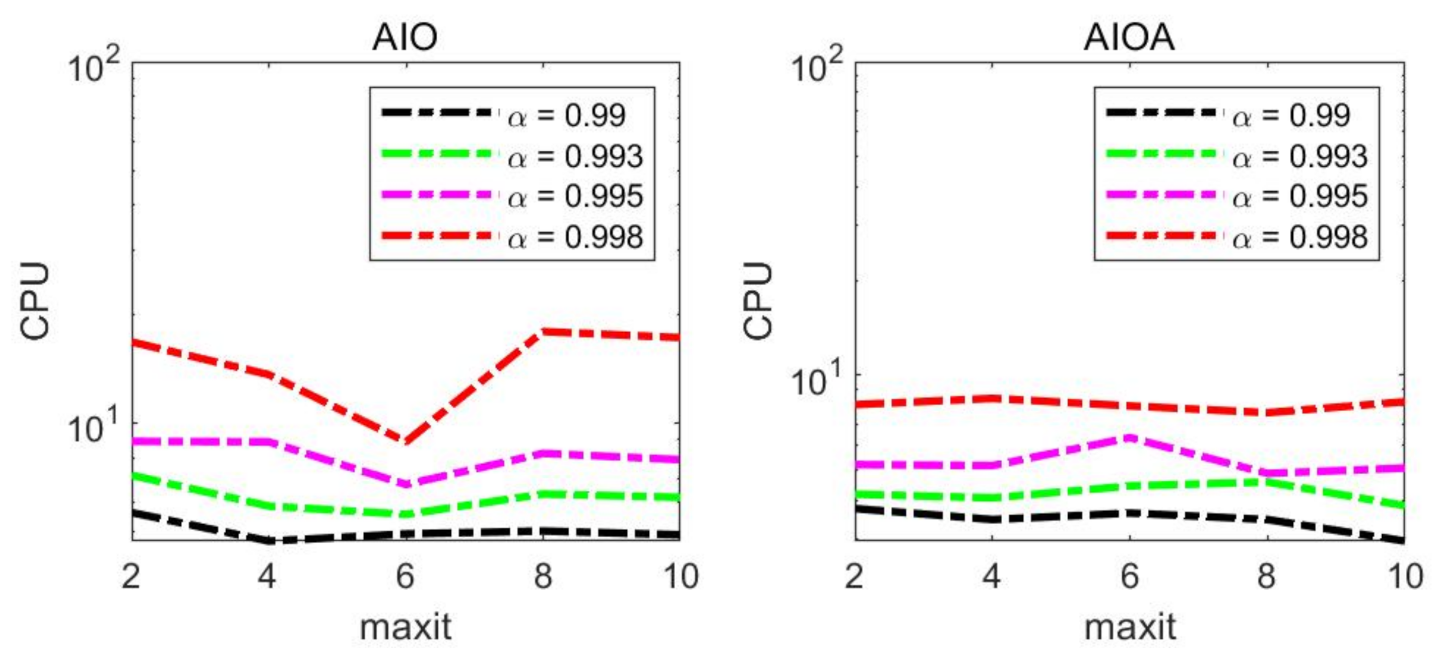

Figure 1 plots the computing time (CPU) of the two methods against

.

Table 2 shows that the optimal

differs for different

and for different methods. Figure 1 shows that, for the AIO method, the optimal

is 6 and the worst-performing

is 8, whereas for the AIOA method the best value of

is not 6. For fairness, we chose

in the following numerical experiments. In addition, Table 2 shows that when

and

, the MV count of the AIOA method is slightly larger than that of the AIO method, yet its CPU time is lower. This suggests that the AIOA method is promising.

4.2. Comparisons of Numerical Results

In this subsection, we test the effectiveness of the AIOA method by comparing it with the inner-outer method [5] (denoted as “Inout”), the power-inner-outer method [6] (denoted as “PIO”), and the Arnoldi-Inout method [8] (denoted as “AIO”) in terms of the iteration count (IT), the number of matrix–vector products (MV), and the computing time (CPU) in seconds. In all experiments in this subsection, we set the parameters

and

.

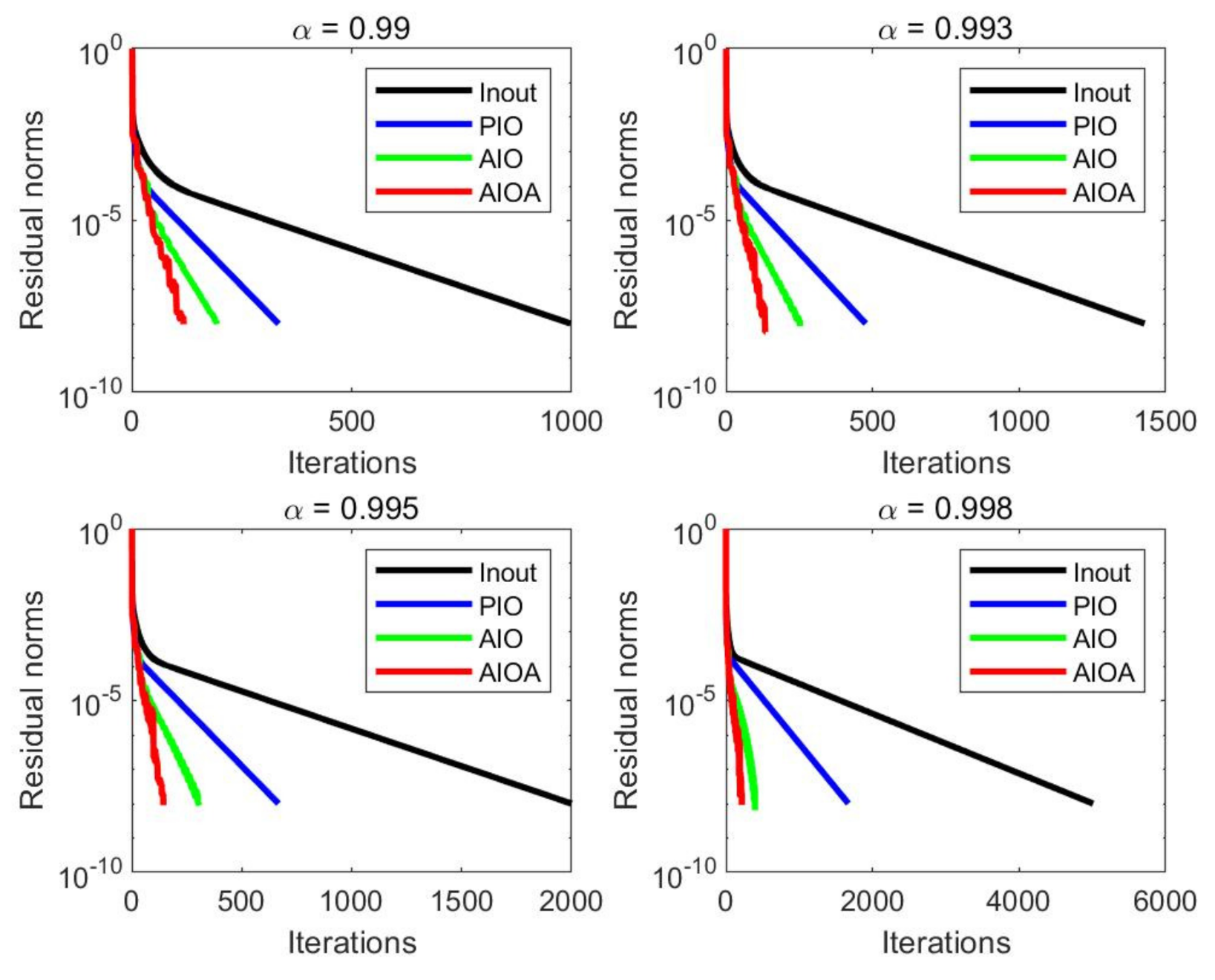

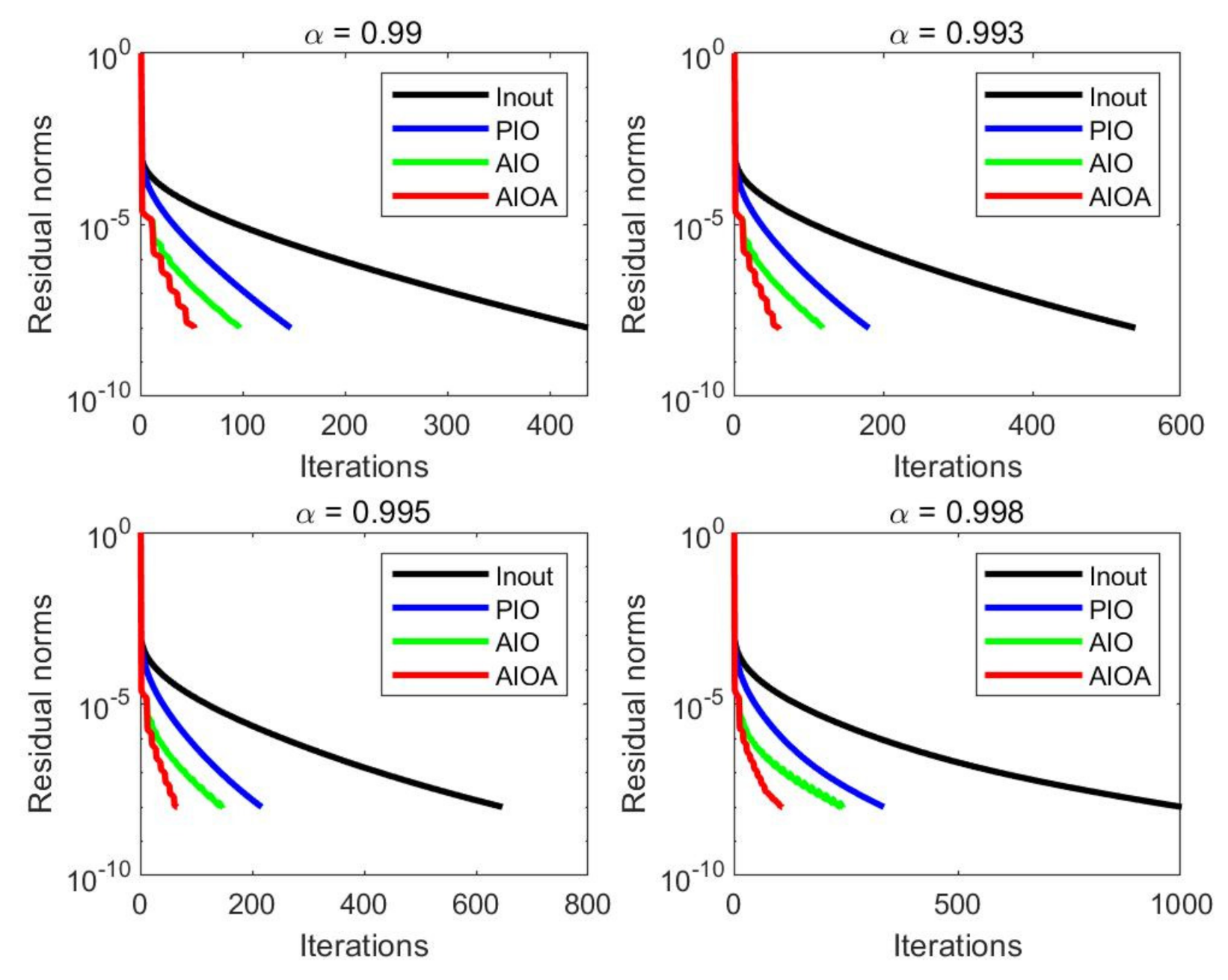

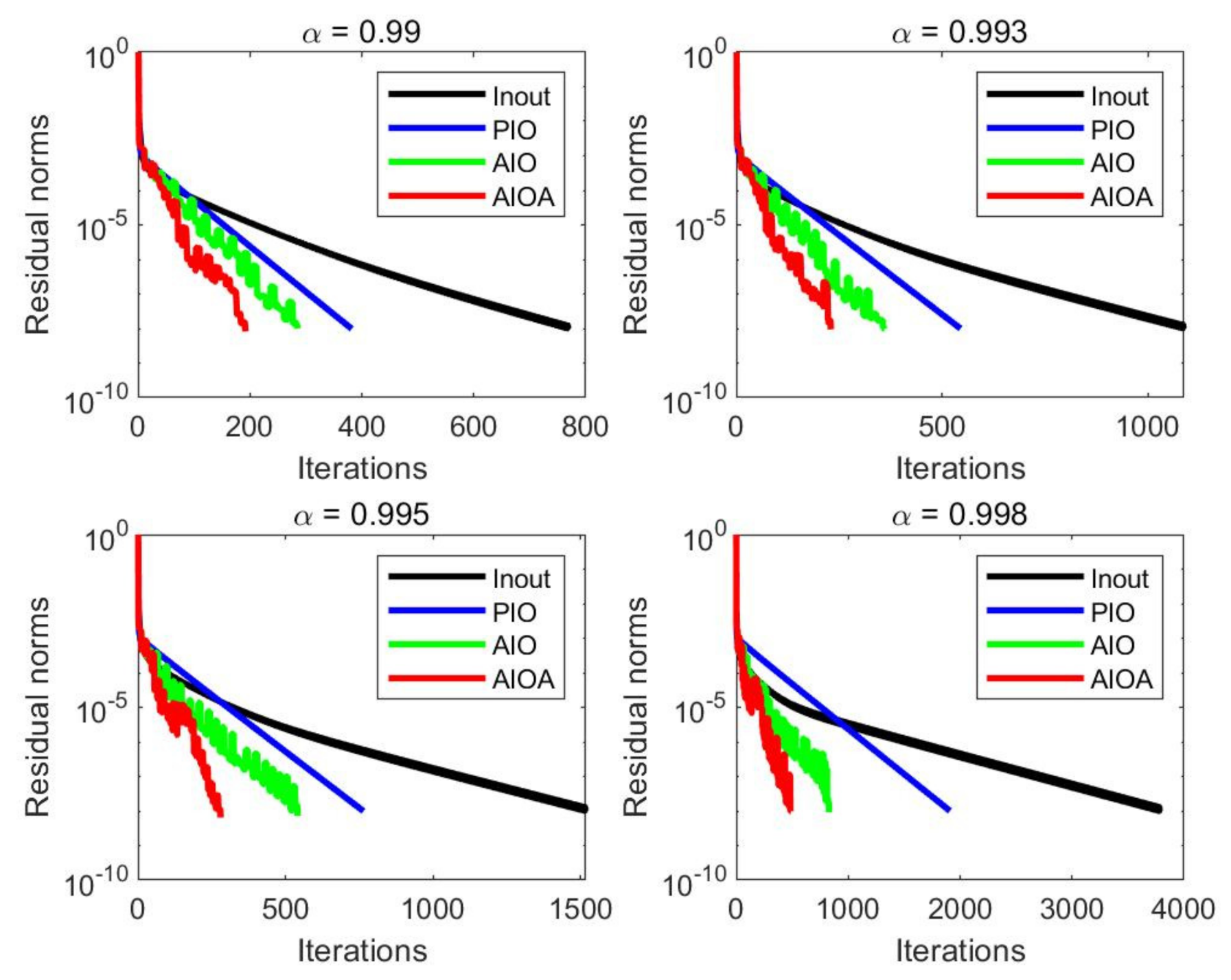

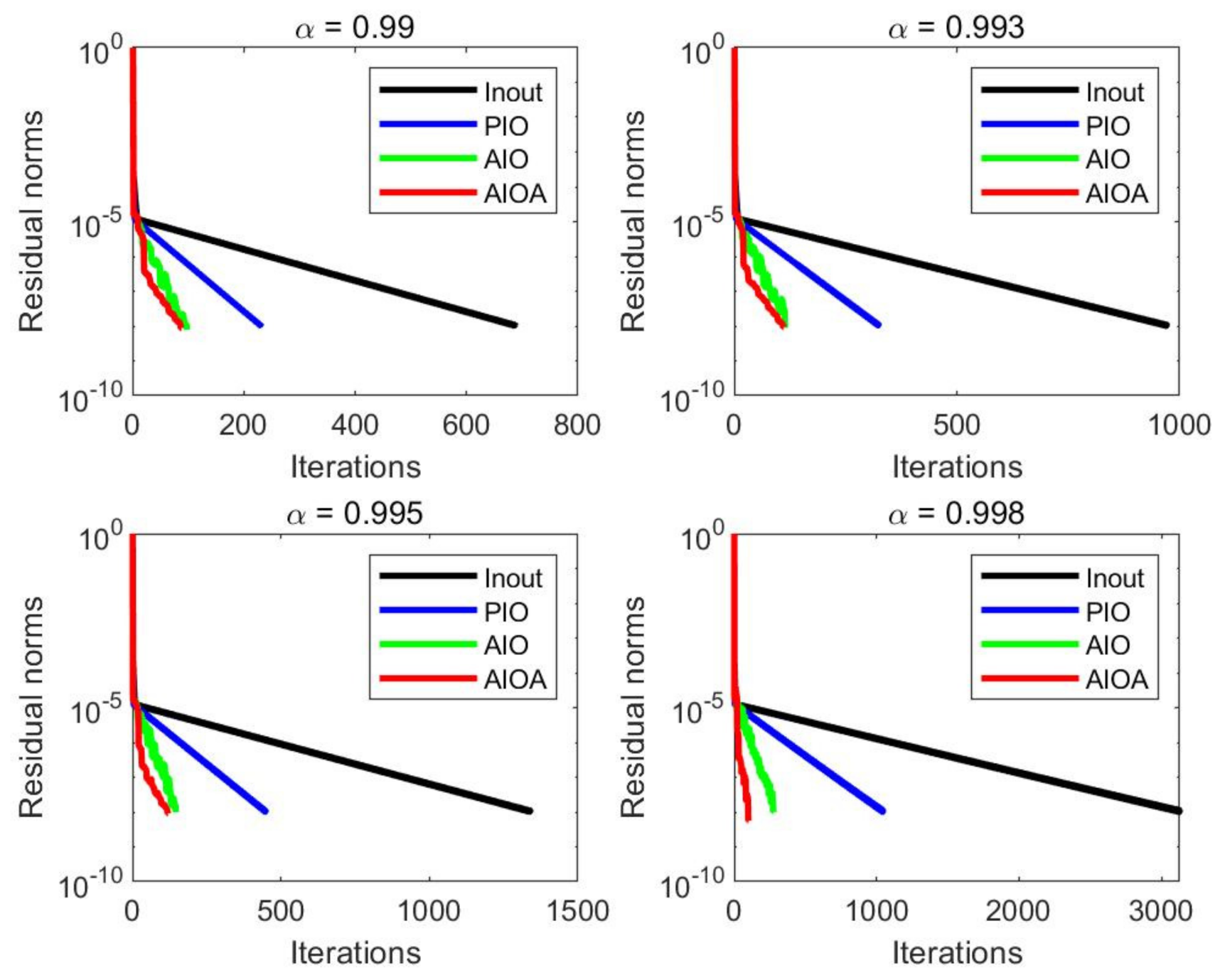

Tables 3, 4, 5 and 6 report the numerical results of the Inout, PIO, AIO and AIOA methods for four matrices when

, and Figures 2, 3, 4 and 5 show the residual convergence curves of these methods for different

for all test matrices.

To better demonstrate the efficiency of the proposed method, we define

to measure the speedup of the AIOA method relative to the AIO method in terms of CPU time.

The numerical results in Tables 3, 4, 5 and 6 show that the AIOA method outperforms the other three methods in terms of IT, MV and CPU time for all four matrices and all tested damping factors. As expected, the advantage of the AIOA method is most pronounced for large

. For instance, when

, the speedup is

in Table 3 and

in Table 5. When

, the speedup is

in Table 4 and

in Table 6. In addition, Figures 2, 3, 4 and 5 show that the AIOA method reaches the required accuracy faster than the Inout, PIO and AIO methods for all test examples. Together, these results confirm the effectiveness of the AIOA method.