Ship Detection and Tracking in Inland Waterways Using Improved YOLOv3 and Deep SORT

Abstract

1. Introduction

1.1. Problem Statement

1.2. Motivation

1.3. Contribution

- Optimize the initial values of the anchor boxes based on the K-means algorithm

- Choose the classifier according to the dataset used

- Optimize non-maximum suppression (NMS) by introducing the Soft-NMS algorithm

1.4. Paper Organization

2. Methods

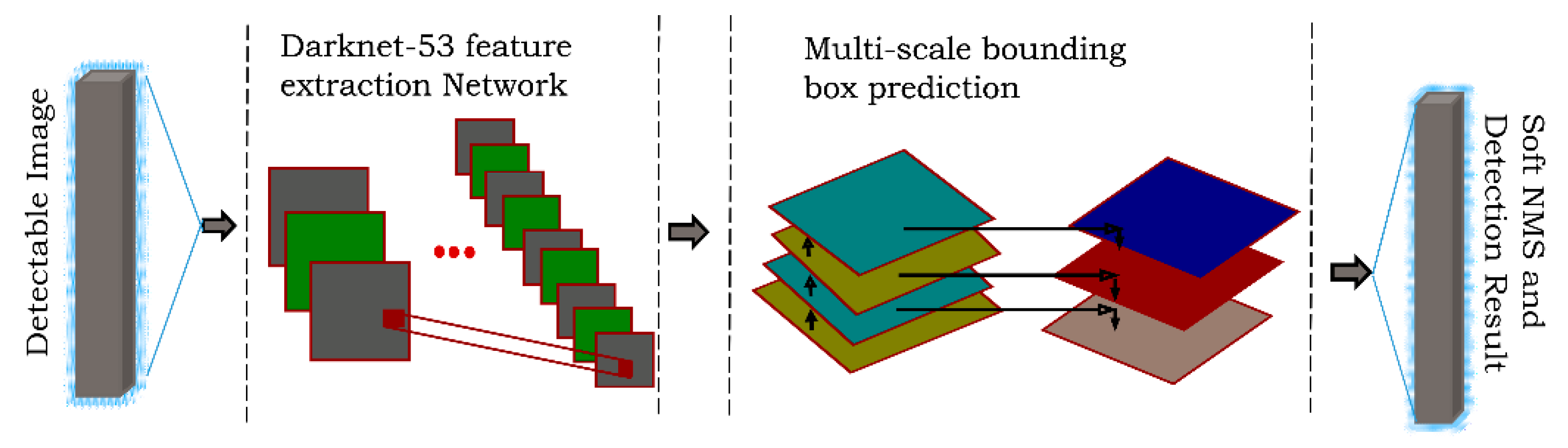

2.1. Object Detection Method

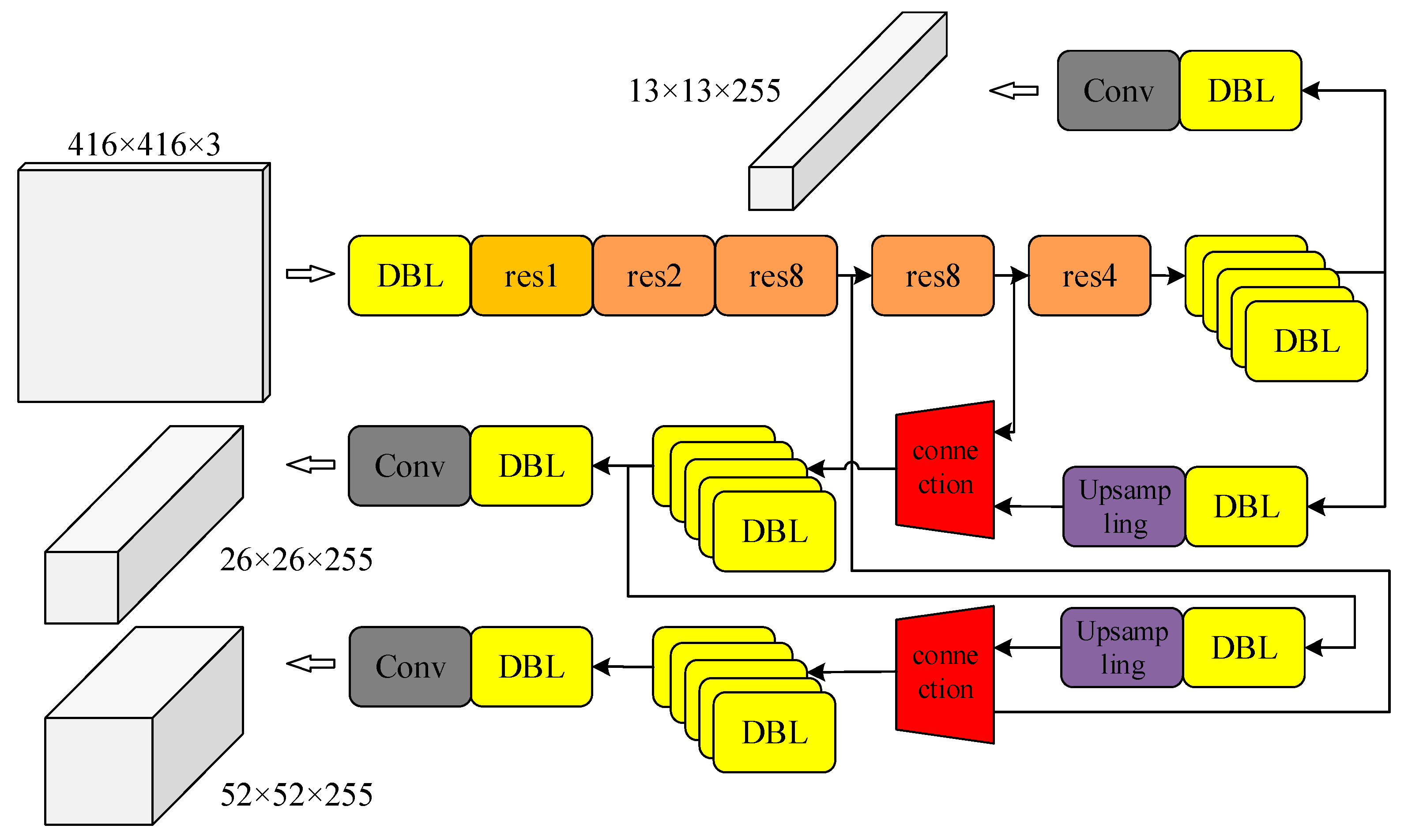

2.1.1. Basic Principle of the Existing YOLOv3 Algorithm

1. Area division

2. Multi-scale prediction

3. Non-maximum suppression
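The area-division and multi-scale ideas can be illustrated with a small sketch that maps a box centre to the grid cell responsible for it at each YOLOv3 output scale. It assumes the standard YOLOv3 strides of 32, 16 and 8 (13 × 13, 26 × 26 and 52 × 52 grids for a 416 × 416 input), three anchors per cell and a single "ship" class. The function names are hypothetical and are not taken from the authors' code.

```python
from typing import List, Tuple

# Standard YOLOv3 strides of the three detection heads, coarse to fine.
STRIDES = (32, 16, 8)
ANCHORS_PER_CELL = 3  # YOLOv3 assigns three anchors to each scale


def grid_sizes(input_size: int) -> List[int]:
    """Cells per side at each scale, e.g. 416 -> [13, 26, 52]."""
    if input_size % max(STRIDES) != 0:
        raise ValueError("input size must be a multiple of 32")
    return [input_size // s for s in STRIDES]


def responsible_cells(cx: float, cy: float, input_size: int) -> List[Tuple[int, int, int]]:
    """(grid_size, row, col) of the cell containing the box centre at each scale."""
    cells = []
    for s in STRIDES:
        g = input_size // s
        col = min(int(cx // s), g - 1)  # clamp centres lying on the right/bottom border
        row = min(int(cy // s), g - 1)
        cells.append((g, row, col))
    return cells


def output_shape(input_size: int, num_classes: int) -> List[Tuple[int, int, int, int]]:
    """Per-scale tensor: S x S x anchors x (4 box offsets + 1 objectness + C class scores)."""
    return [(g, g, ANCHORS_PER_CELL, 5 + num_classes) for g in grid_sizes(input_size)]


if __name__ == "__main__":
    print(grid_sizes(416))
    print(responsible_cells(200.0, 100.0, 416))
    print(output_shape(416, num_classes=1))  # assumed single "ship" class
```

All input sizes evaluated later in the paper (352 to 608) are multiples of 32, so each yields a valid set of grids; for example, 608 gives 19 × 19, 38 × 38 and 76 × 76.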

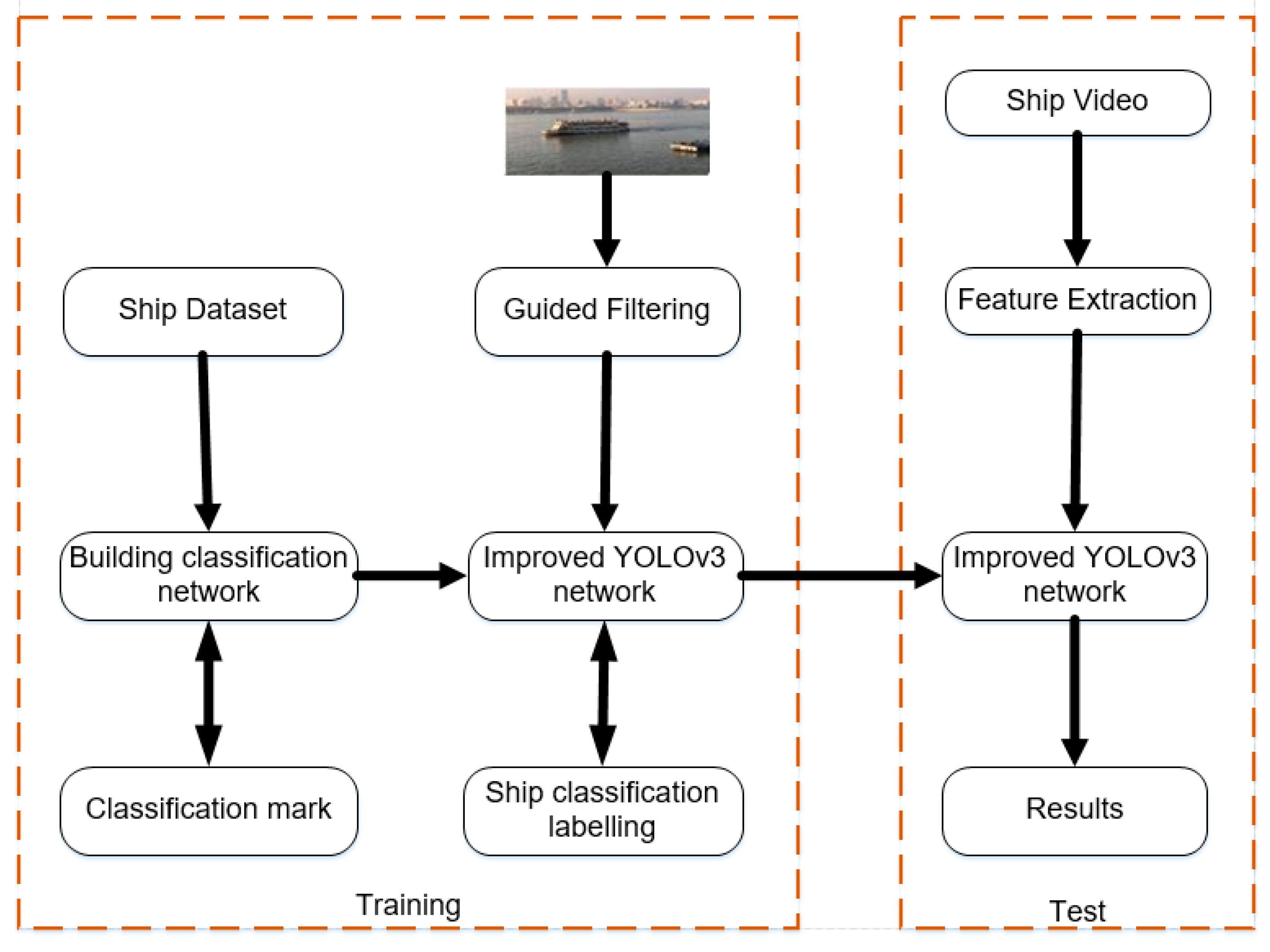

2.1.2. Improved YOLOv3

1. Optimize the initial values of the anchor boxes based on the K-means algorithm

2. Choice of classifier

3. NMS algorithm optimization
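The anchor initialization step can be sketched as K-means over the (width, height) pairs of the labelled ship boxes. This sketch assumes the distance d(box, centroid) = 1 − IoU(box, centroid) introduced for YOLOv2 anchors, with IoU computed as if both boxes shared a centre, and a mean-based centroid update. The iteration limit and random seed are illustrative assumptions rather than the authors' settings. `avg_iou` computes the average-IoU metric used to compare different numbers of clusters.

```python
import random
from typing import List, Tuple

Box = Tuple[float, float]  # (width, height); boxes are compared as if sharing a centre


def wh_iou(a: Box, b: Box) -> float:
    """IoU of two centre-aligned boxes, using only width and height."""
    inter = min(a[0], b[0]) * min(a[1], b[1])
    union = a[0] * a[1] + b[0] * b[1] - inter
    return inter / union


def kmeans_anchors(boxes: List[Box], k: int, iters: int = 100, seed: int = 0) -> List[Box]:
    """Cluster (w, h) pairs with the distance d = 1 - IoU; returns anchors sorted by area."""
    rng = random.Random(seed)
    centroids = rng.sample(boxes, k)
    for _ in range(iters):
        # Assignment: each box joins the centroid with the highest IoU (lowest 1 - IoU).
        clusters: List[List[Box]] = [[] for _ in range(k)]
        for b in boxes:
            best = max(range(k), key=lambda i: wh_iou(b, centroids[i]))
            clusters[best].append(b)
        # Update: move each centroid to the mean width/height of its members.
        new_centroids = [
            (sum(w for w, _ in c) / len(c), sum(h for _, h in c) / len(c)) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
        if new_centroids == centroids:
            break  # converged
        centroids = new_centroids
    return sorted(centroids, key=lambda wh: wh[0] * wh[1])


def avg_iou(boxes: List[Box], anchors: List[Box]) -> float:
    """Mean, over all boxes, of the best IoU with any anchor."""
    return sum(max(wh_iou(b, a) for a in anchors) for b in boxes) / len(boxes)
```

Running `kmeans_anchors` for several values of `k` and plotting `avg_iou` produces the kind of cluster-count trade-off tabulated in the results. Average IoU keeps rising with `k`, but with diminishing returns.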

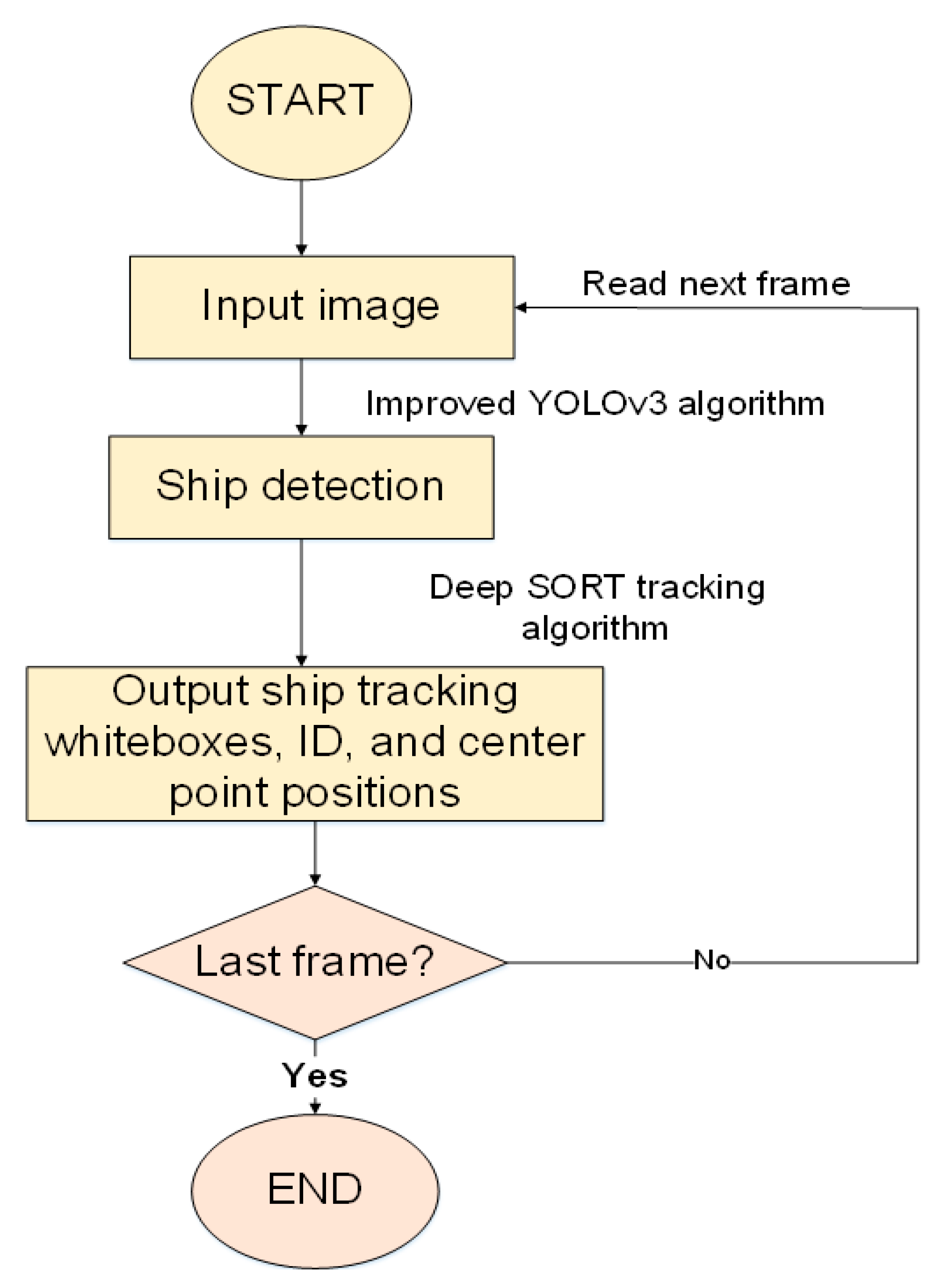

2.2. Object Tracking Method
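Deep SORT [28] links Kalman-predicted tracks [30] to new detections by combining a motion cost (squared Mahalanobis distance to the predicted state) with an appearance cost (smallest cosine distance to the features stored for each track), then solving a linear assignment problem. The sketch below covers only that association step. It assumes the Mahalanobis distances have already been computed by the Kalman filter, and uses the 9.4877 gate (95% chi-square quantile, four degrees of freedom) from the Deep SORT paper. The weight λ = 0 and the 0.2 cosine gate are illustrative assumptions. The matching cascade and IoU fallback of the full tracker are omitted, and a brute-force solver stands in for the Hungarian algorithm.

```python
import itertools
import math
from typing import Dict, List, Sequence

CHI2_GATE_4DOF = 9.4877  # 95% chi-square quantile, 4 DOF (gates the Mahalanobis distance)
GATED = 1e5              # large finite cost standing in for forbidden pairs
INF = float("inf")


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """1 - cosine similarity of two appearance feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


def gated_cost(maha: List[List[float]], track_galleries: List[List[Sequence[float]]],
               det_feats: List[Sequence[float]], lam: float = 0.0,
               max_cosine: float = 0.2) -> List[List[float]]:
    """cost = lam * d_motion + (1 - lam) * d_appearance, INF outside either gate.

    maha[i][j]: squared Mahalanobis distance of detection j to track i's prediction.
    track_galleries[i]: appearance features stored for track i.
    """
    cost = []
    for i, gallery in enumerate(track_galleries):
        row = []
        for j, feat in enumerate(det_feats):
            d_app = min(cosine_distance(g, feat) for g in gallery)  # closest stored feature
            d_mot = maha[i][j]
            if d_mot > CHI2_GATE_4DOF or d_app > max_cosine:
                row.append(INF)
            else:
                row.append(lam * d_mot + (1.0 - lam) * d_app)
        cost.append(row)
    return cost


def assign(cost: List[List[float]]) -> Dict[int, int]:
    """Exact minimum-cost track -> detection matching by brute force.

    Stand-in for the Hungarian algorithm; only practical for a handful of tracks.
    """
    if not cost or not cost[0]:
        return {}
    n_t, n_d = len(cost), len(cost[0])
    capped = [[min(c, GATED) for c in row] for row in cost]
    if n_t <= n_d:
        candidates = (list(zip(range(n_t), cols))
                      for cols in itertools.permutations(range(n_d), n_t))
    else:
        candidates = (list(zip(rows, range(n_d)))
                      for rows in itertools.permutations(range(n_t), n_d))
    best = min(candidates, key=lambda pairs: sum(capped[i][j] for i, j in pairs))
    # Pairs that only matched through a gated entry are left unmatched.
    return {i: j for i, j in best if capped[i][j] < GATED}
```

In a full tracker, unmatched detections would start tentative tracks and unmatched tracks would age until deleted. Those steps are outside this sketch.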

3. Results and Discussion

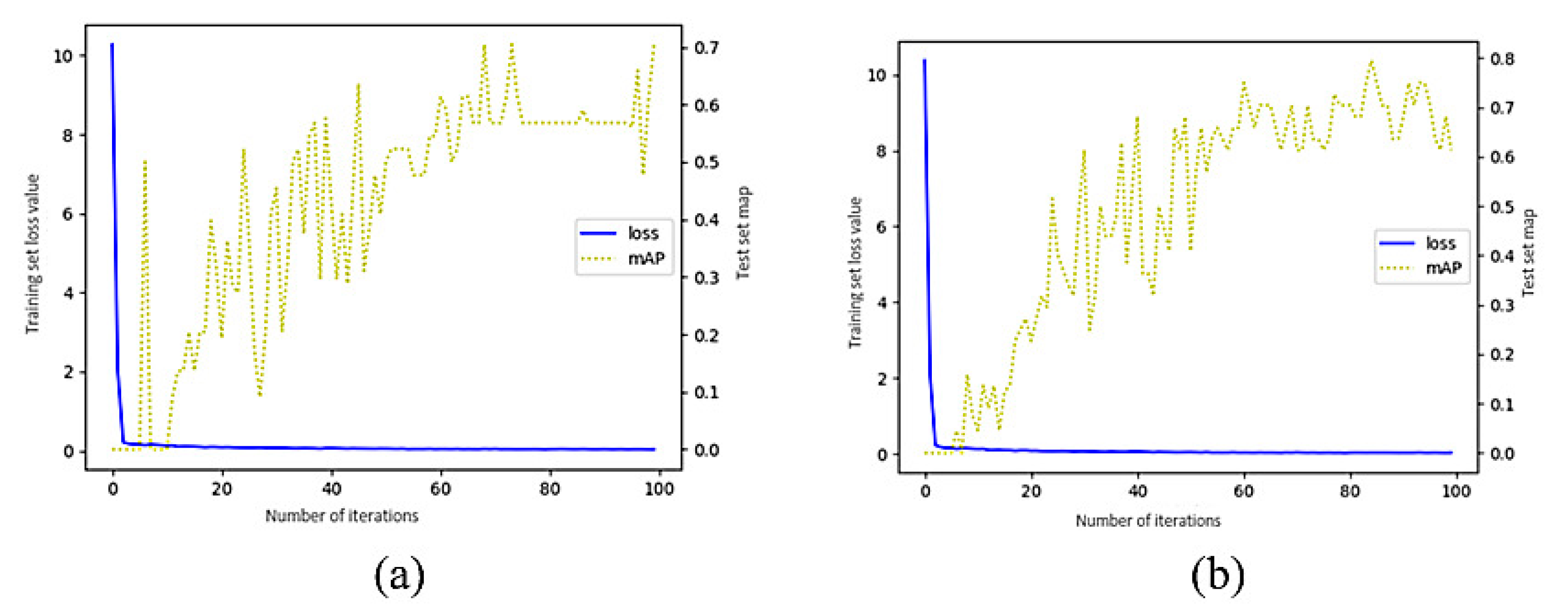

3.1. Data Training

3.2. Validation

3.3. Experimental Results and Analysis

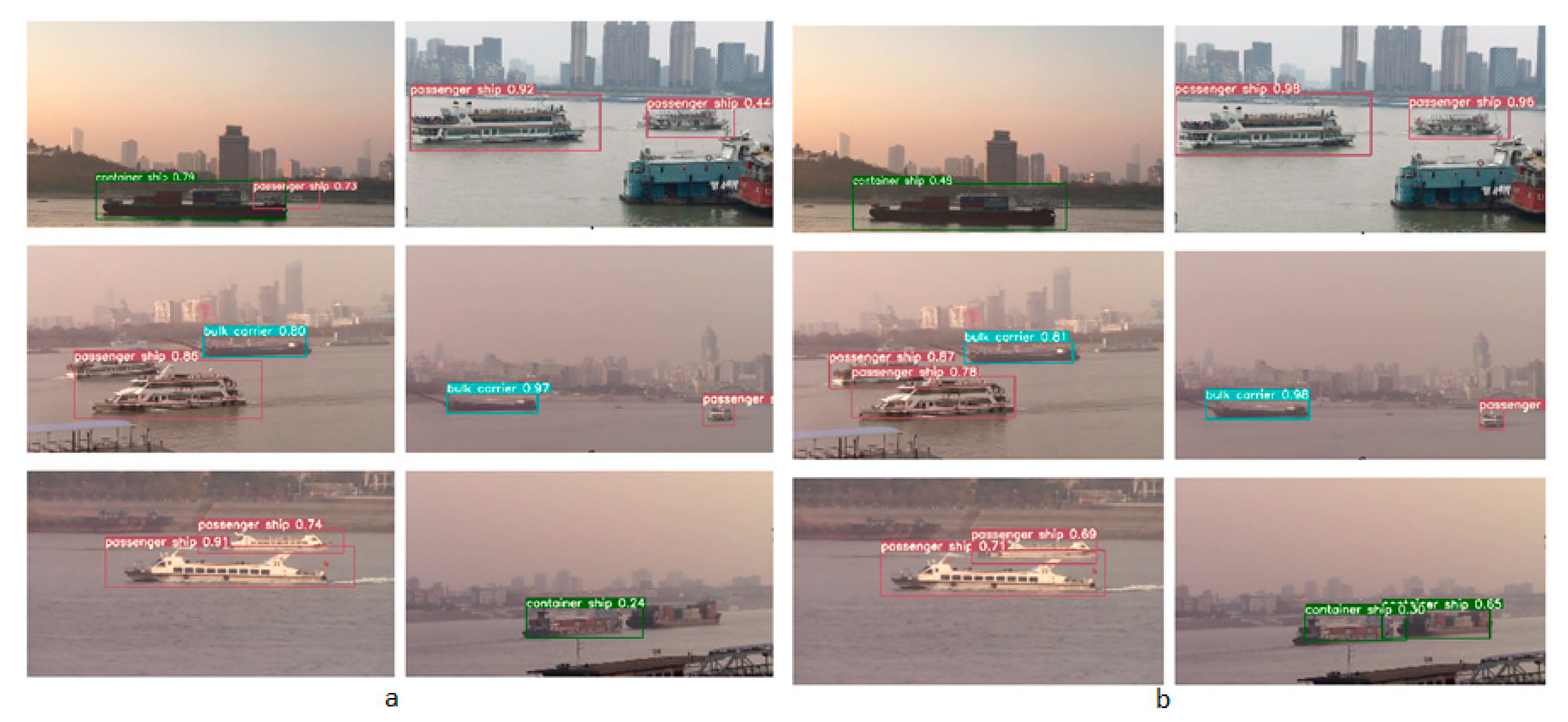

3.3.1. Comparative Experiment of Ship Detection in Real Time

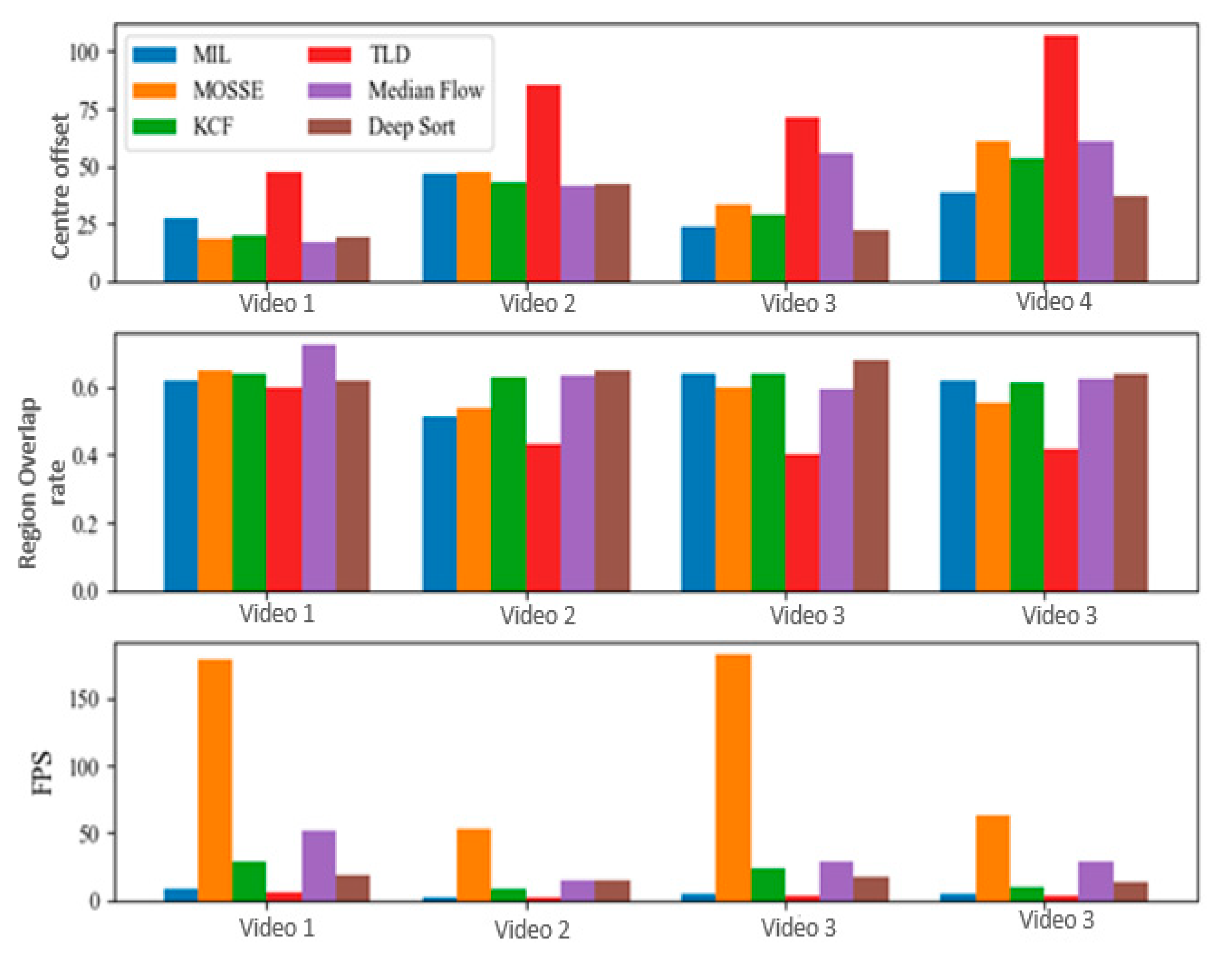

3.3.2. Experiment of Ship Tracking in Real Time

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Chen, J.; Chen, D.; Meng, S. A Novel Region Selection Algorithm for Auto-Focusing Method Based on Depth from Focus. In Proceedings of the Fourth Euro-China Conference on Intelligent Data Analysis and Applications, Cham, Switzerland, 1 October 2018; pp. 101–108.

2. Tiwari, A.; Goswami, A.K.; Saraswat, M. Feature Extraction for Object Recognition and Image Classification. Int. J. Eng. Res. 2013, 2, 9.

3. Du, C.-J.; He, H.-J.; Sun, D.-W. Chapter 4—Object Classification Methods. In Computer Vision Technology for Food Quality Evaluation, 2nd ed.; Sun, D.-W., Ed.; Academic Press: San Diego, CA, USA, 2016; pp. 87–110. ISBN 978-0-12-802232-0.

4. Lowe, D.G. Distinctive Image Features from Scale-Invariant Keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

5. Dalal, N.; Triggs, B. Histograms of Oriented Gradients for Human Detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; Volume 1, pp. 886–893.

6. Vapnik, V. The Support Vector Method of Function Estimation. In Nonlinear Modeling: Advanced Black-Box Techniques; Suykens, J.A.K., Vandewalle, J., Eds.; Springer: Boston, MA, USA, 1998; pp. 55–85. ISBN 978-1-4615-5703-6.

7. Schapire, R.E. Explaining AdaBoost. In Empirical Inference; Schölkopf, B., Luo, Z., Vovk, V., Eds.; Springer: Berlin/Heidelberg, Germany, 2013; pp. 37–52. ISBN 978-3-642-41135-9.

8. Kaido, N.; Yamamoto, S.; Hashimoto, T. Examination of Automatic Detection and Tracking of Ships on Camera Image in Marine Environment. 2016 Techno-Ocean (Techno-Ocean) 2016.

9. Ferreira, J.C.; Branquinho, J.; Ferreira, P.C.; Piedade, F. Computer Vision Algorithms Fishing Vessel Monitoring—Identification of Vessel Plate Number. In Proceedings of the Ambient Intelligence—Software and Applications—8th International Symposium on Ambient Intelligence (ISAmI 2017), Porto, Portugal, 21–23 June 2017; De Paz, J.F., Julián, V., Villarrubia, G., Marreiros, G., Novais, P., Eds.; Springer International Publishing: Cham, Switzerland, 2017; pp. 9–17.

10. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. arXiv 2014, arXiv:1311.2524.

11. Girshick, R. Fast R-CNN. arXiv 2015, arXiv:1504.08083.

12. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. arXiv 2016, arXiv:1506.01497.

13. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

14. Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525.

15. Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

16. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Computer Vision—ECCV 2016; Springer: Cham, Switzerland, 2016; Volume 9905, pp. 21–37.

17. Dong, Z.; Lin, B. Learning a Robust CNN-Based Rotation Insensitive Model for Ship Detection in VHR Remote Sensing Images. Int. J. Remote Sens. 2020, 41, 3614–3626.

18. Fan, W.; Zhou, F.; Bai, X.; Tao, M.; Tian, T. Ship Detection Using Deep Convolutional Neural Networks for PolSAR Images. Remote Sens. 2019, 11, 2862.

19. Jiao, J.; Zhang, Y.; Sun, H.; Yang, X.; Gao, X.; Hong, W.; Fu, K.; Sun, X. A Densely Connected End-to-End Neural Network for Multiscale and Multiscene SAR Ship Detection. IEEE Access 2018, 6, 20881–20892.

20. An, Q.; Pan, Z.; Liu, L.; You, H. DRBox-v2: An Improved Detector with Rotatable Boxes for Target Detection in SAR Images. IEEE Trans. Geosci. Remote Sens. 2019, 57, 8333–8349.

21. Qi, L.; Li, B.; Chen, L.; Wang, W.; Dong, L.; Jia, X.; Huang, J.; Ge, C.; Xue, G.; Wang, D. Ship Target Detection Algorithm Based on Improved Faster R-CNN. Electronics 2019, 8, 959.

22. Zhang, X.; Wang, H.; Xu, C.; Lv, Y.; Fu, C.; Xiao, H.; He, Y. A Lightweight Feature Optimizing Network for Ship Detection in SAR Image. IEEE Access 2019, 7, 141662–141678.

23. Song, J.; Kim, D.; Kang, K. Automated Procurement of Training Data for Machine Learning Algorithm on Ship Detection Using AIS Information. Remote Sens. 2020, 12, 1443.

24. Imani, M.; Ghoreishi, S.F. Scalable Inverse Reinforcement Learning Through Multi-Fidelity Bayesian Optimization. IEEE Trans. Neural Netw. Learn. Syst. 2021.

25. Sr, Y.Z.; Sr, J.S.; Sr, L.H.; Sr, Q.Z.; Sr, Z.D. A Ship Target Tracking Algorithm Based on Deep Learning and Multiple Features. In Proceedings of the Twelfth International Conference on Machine Vision (ICMV 2019), Amsterdam, The Netherlands, 31 January 2020; Volume 11433, p. 1143304.

26. Huang, Z.; Sui, B.; Wen, J.; Jiang, G. An Intelligent Ship Image/Video Detection and Classification Method with Improved Regressive Deep Convolutional Neural Network. Complexity 2020, 2020, 1520872.

27. Schmidhuber, J. Deep Learning in Neural Networks: An Overview. Neural Netw. Off. J. Int. Neural Netw. Soc. 2015, 61, 85–117.

28. Wojke, N.; Bewley, A.; Paulus, D. Simple Online and Realtime Tracking with a Deep Association Metric. arXiv 2017, arXiv:1703.07402.

29. Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. arXiv 2017, arXiv:1612.03144.

30. Kalman, R.E. A New Approach to Linear Filtering and Prediction Problems. J. Basic Eng. 1960, 82, 35–45.

31. Li, K.; Huang, Z.; Cheng, Y.; Lee, C. A Maximal Figure-of-Merit Learning Approach to Maximizing Mean Average Precision with Deep Neural Network Based Classifiers. In Proceedings of the 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Florence, Italy, 4–9 May 2014; pp. 4503–4507.

32. Babenko, B.; Yang, M.; Belongie, S. Visual Tracking with Online Multiple Instance Learning. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 983–990.

33. Bolme, D.; Beveridge, J.R.; Draper, B.A.; Lui, Y.M. Visual Object Tracking Using Adaptive Correlation Filters. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 2544–2550.

34. Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. High-Speed Tracking with Kernelized Correlation Filters. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 583–596.

35. Kalal, Z.; Mikolajczyk, K.; Matas, J. Tracking-Learning-Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 1409–1422.

36. Kalal, Z.; Mikolajczyk, K.; Matas, J. Forward-Backward Error: Automatic Detection of Tracking Failures. In Proceedings of the 2010 20th International Conference on Pattern Recognition, ICPR ’10, Istanbul, Turkey, 23–26 August 2010; pp. 2756–2759.

| Object Detection Algorithm | Advantage | Limitation |
|---|---|---|
| Region with CNN features (R-CNN) [10]: In 2014, Ross Girshick proposed R-CNN, which achieved a mean average precision (mAP) of 53.3% on PASCAL VOC 2012, an improvement of more than 30% over the previous best result. | It improves the quality of candidate bounding boxes and uses a deep architecture to extract high-level features [10]. | Training R-CNN is expensive because features are extracted from each region proposal and stored on disk. Training also takes a long time, even on a relatively small training set, when a deep network such as VGG16 is used. |
| Fast Region with CNN features (Fast R-CNN) [11]: In 2015, Ross Girshick proposed Fast R-CNN, which learns classification and bounding-box regression jointly as a multi-task problem [11]. | It avoids the extra cost of storing features on disk. Fast R-CNN is faster than R-CNN because the convolution is performed only once per image to produce a feature map. | It is still slow and time-consuming because it uses the selective search algorithm to find region proposals, which limits the performance of the network. |
| Faster R-CNN [12]: In 2016, Ren et al. introduced Faster R-CNN, which uses a separate network to predict region proposals instead of the selective search algorithm. | The network can be trained end to end. It also reaches a frame rate of 5 FPS (frames per second) on a GPU with state-of-the-art object detection accuracy on PASCAL VOC 2007 and 2012 [12]. | It is time-consuming. It is not trained to handle objects with extreme shapes. It does not produce object instances; instead, it produces objects together with the background. |
| YOLO (You Only Look Once) [13]: In 2016, Redmon et al. proposed YOLO, which, instead of using regions to localize objects, uses a convolutional neural network to predict bounding boxes and the probabilities of those boxes. | It is faster than prior algorithms because it detects objects in a single step. | Its spatial constraints make it difficult to handle small objects that appear in groups. Because of its many downsampling operations, it has difficulty generalizing to objects in unusual configurations. It sometimes shows lower accuracy. |
| Single Shot Detector (SSD) [16]: In 2016, Liu et al. proposed the Single Shot MultiBox Detector (SSD). It uses default anchor boxes with different aspect ratios and scales to generate the output space of bounding boxes instead of the fixed grid used in YOLO [16]. | It is fast. | It is less accurate. |

| No. of Clusters | 3 | 5 | 6 | 7 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|
| Avg IoU (%) | 66.98 | 70.39 | 73.70 | 75.01 | 78.64 | 79.01 | 79.12 | 79.15 |

| Anchor | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|
| Width | 75 | 109 | 123 | 130 | 142 | 200 | 232 | 235 | 308 |
| Height | 31 | 55 | 84 | 119 | 42 | 57 | 129 | 71 | 103 |
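The nine clustered anchors still have to be distributed over YOLOv3's three detection scales. The paper does not spell out this step. The sketch below assumes the conventional YOLOv3 rule: sort the anchors by area and give the three smallest to the stride-8 head, the next three to stride 16 and the three largest to stride 32. It also assumes the i-th width pairs with the i-th height in the Width/Height rows. The names `PAPER_ANCHORS` and `split_by_scale` are hypothetical.

```python
from typing import Dict, List, Tuple

# (width, height) pairs read column by column from the Width/Height rows (k = 9).
PAPER_ANCHORS: List[Tuple[int, int]] = [
    (75, 31), (109, 55), (123, 84), (130, 119), (142, 42),
    (200, 57), (232, 129), (235, 71), (308, 103),
]


def split_by_scale(anchors: List[Tuple[int, int]]) -> Dict[int, List[Tuple[int, int]]]:
    """Sort anchors by area: 3 smallest -> stride 8, middle 3 -> stride 16, largest 3 -> stride 32."""
    if len(anchors) != 9:
        raise ValueError("expected nine anchors (three per YOLOv3 scale)")
    ordered = sorted(anchors, key=lambda wh: wh[0] * wh[1])
    return {8: ordered[0:3], 16: ordered[3:6], 32: ordered[6:9]}


if __name__ == "__main__":
    for stride, group in split_by_scale(PAPER_ANCHORS).items():
        print(f"stride {stride}: {group}")
```

Sorting by area moves (142, 42) into the smallest group even though it is only the fifth-widest anchor. Under this assumption, the column order of the table is therefore not the same as the scale assignment.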

| Soft-NMS Algorithm |
|---|
| Input: candidate boxes $B = \{b_1, \ldots, b_N\}$; target scores corresponding to the candidate boxes $S = \{s_1, \ldots, s_N\}$; IoU threshold $N_t$. Output: $D$, $S$. |
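Soft-NMS replaces hard suppression with a rescoring step. The sketch below follows the linear and Gaussian decay functions of the original Soft-NMS formulation by Bodla et al., which does not appear in this paper's reference list. It keeps classic NMS as a `"hard"` option for comparison. The defaults N_t = 0.3, σ = 0.5 and the 0.001 score floor are illustrative assumptions, not values reported by the authors.

```python
import math
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two corner-format boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def soft_nms(boxes: List[Box], scores: List[float], nt: float = 0.3,
             method: str = "linear", sigma: float = 0.5,
             score_thresh: float = 0.001) -> List[Tuple[Box, float]]:
    """Return the kept (box, rescored score) pairs D in selection order.

    hard:     drop every box with IoU >= nt (classic NMS)
    linear:   s_i <- s_i * (1 - IoU) when IoU >= nt
    gaussian: s_i <- s_i * exp(-IoU^2 / sigma) for every remaining box
    """
    if method not in ("hard", "linear", "gaussian"):
        raise ValueError(f"unknown method: {method}")
    B, S = list(boxes), list(scores)
    D: List[Tuple[Box, float]] = []
    while B:
        m = max(range(len(B)), key=lambda i: S[i])  # current highest-scoring box M
        M, s_m = B.pop(m), S.pop(m)
        D.append((M, s_m))
        for i in range(len(B)):
            ov = iou(M, B[i])
            if method == "gaussian":
                S[i] *= math.exp(-(ov * ov) / sigma)
            elif ov >= nt:
                S[i] = 0.0 if method == "hard" else S[i] * (1.0 - ov)
        # Discard boxes whose score has decayed below the floor.
        keep = [i for i in range(len(B)) if S[i] >= score_thresh]
        B, S = [B[i] for i in keep], [S[i] for i in keep]
    return D
```

With two heavily overlapping ships, hard NMS discards the weaker box outright. Both soft variants keep it with a reduced score, which is how Soft-NMS can recover adjacent vessels that classic NMS would suppress.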

YOLOv3 [15]: mAP (FPS) by input image size.

| Image Size | 352 | 416 | 480 | 544 | 608 |
|---|---|---|---|---|---|
| 0.1 | 0.773 (17.5) | 0.795 (17.6) | 0.909 (15.5) | 0.864 (16.4) | 0.811 (14.7) |
| 0.2 | 0.727 (18.7) | 0.795 (18.4) | 0.864 (18.6) | 0.886 (17.1) | 0.856 (15.7) |
| 0.3 | 0.750 (19.0) | 0.750 (19.3) | 0.841 (18.6) | 0.727 (17.0) | 0.750 (16.2) |
| 0.4 | 0.682 (19.7) | 0.568 (19.6) | 0.841 (18.5) | 0.614 (17.7) | 0.705 (16.9) |
| 0.5 | 0.636 (19.9) | 0.432 (19.4) | 0.750 (17.8) | 0.500 (17.3) | 0.545 (16.9) |

Proposed algorithm: mAP (FPS) by input image size.

| Image Size | 352 | 416 | 480 | 544 | 608 |
|---|---|---|---|---|---|
| 0.1 | 0.826 (18.1) | 0.909 (18.9) | 0.955 (17.8) | 0.864 (17.0) | 0.841 (15.0) |
| 0.2 | 0.864 (19.3) | 0.864 (19.2) | 0.932 (17.6) | 0.864 (14.1) | 0.742 (14.4) |
| 0.3 | 0.750 (19.4) | 0.818 (18.8) | 0.886 (17.0) | 0.682 (17.2) | 0.705 (16.5) |
| 0.4 | 0.659 (19.7) | 0.750 (19.1) | 0.705 (18.5) | 0.727 (15.8) | 0.568 (17.0) |
| 0.5 | 0.682 (20.0) | 0.682 (19.5) | 0.614 (18.6) | 0.545 (17.6) | 0.477 (13.7) |

| Algorithm | mAP | FPS |
|---|---|---|
| YOLOv3 algorithm [15] | 0.909 | 15.5 |
| Proposed Algorithm | 0.955 | 17.8 |
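Reading the two rows of the comparison table, the gains of the proposed algorithm over YOLOv3 [15] work out as follows (relative gains rounded to one decimal place):

$$
\Delta_{\mathrm{mAP}} = 0.955 - 0.909 = 0.046 \approx 5.1\%\ \text{of}\ 0.909,
\qquad
\Delta_{\mathrm{FPS}} = 17.8 - 15.5 = 2.3 \approx 14.8\%\ \text{of}\ 15.5
$$

The improvement therefore raises accuracy and speed together rather than trading one for the other.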

| Video Sequence Number | Partial Occlusion | In Middle | Multiple Target | Camera Shake |
|---|---|---|---|---|
| 1 | | | | |
| 2 | ✓ | ✓ | | |
| 3 | ✓ | ✓ | | |
| 4 | ✓ | ✓ | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Jie, Y.; Leonidas, L.; Mumtaz, F.; Ali, M. Ship Detection and Tracking in Inland Waterways Using Improved YOLOv3 and Deep SORT. Symmetry 2021, 13, 308. https://doi.org/10.3390/sym13020308


Chicago/Turabian Style: Jie, Yang, Lilian Asimwe Leonidas, Farhan Mumtaz, and Munsif Ali. 2021. "Ship Detection and Tracking in Inland Waterways Using Improved YOLOv3 and Deep SORT" Symmetry 13, no. 2: 308. https://doi.org/10.3390/sym13020308
