Abstract

Inspired by the many applications of symmetric nonlinear equations, this article proposes two optimal choices for the parameter of a modified Polak–Ribiére–Polyak (PRP) conjugate gradient (CG) method. The first is obtained by minimizing the measure function of the search direction matrix, and the second by combining the proposed direction with the Newton direction. In addition, the corresponding PRP parameters are combined with the Li and Fukushima approximate gradient to obtain two robust CG-type algorithms for finding solutions of large-scale systems of symmetric nonlinear equations. We also establish the global convergence of the proposed algorithms under some classical assumptions. Finally, we demonstrate the numerical advantages of the proposed algorithms over some existing methods for symmetric nonlinear equations.

MSC:

90C30; 90C26

1. Introduction

Consider the problem

$$G(x) = 0, \tag{1}$$

where $G: \mathbb{R}^n \to \mathbb{R}^n$ is a continuously differentiable mapping whose Jacobian $\nabla G(x)$ is symmetric, i.e., $\nabla G(x) = \nabla G(x)^T$. Systems of nonlinear equations have various applications in modern science and technology. Examples of nonlinear problems that are symmetric in nature include the discretized two-point boundary value problem, the gradient mapping of an unconstrained optimization problem, the Karush-Kuhn-Tucker (KKT) system of an equality constrained optimization problem, the saddle point problem, and the discretized elliptic boundary value problem; see [1,2,3] for more details. There are different methods for solving symmetric nonlinear equations. A classic starting point is the work of Li and Fukushima [1], who proposed a Gauss-Newton-based Broyden-Fletcher-Goldfarb-Shanno (BFGS) method for symmetric nonlinear equations. Subsequent improvements on the performance of [1] were discussed in [2,3,4,5,6] and the references therein. However, these methods must compute and store an approximation of the Jacobian. This is costly, and therefore they cannot handle large-scale symmetric systems efficiently. This inspired researchers to develop methods that can deal adequately with large-scale problems. The conjugate gradient (CG) method is an iterative scheme of the form

$$x_{k+1} = x_k + \alpha_k d_k, \quad k = 0, 1, 2, \ldots, \tag{2}$$

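where $\alpha_k > 0$ is a stepsize determined by some line search technique and $d_k$ is the CG search direction defined by

$$d_k = \begin{cases} -g_k, & k = 0, \\ -g_k + \beta_k d_{k-1}, & k \ge 1, \end{cases} \tag{3}$$

Here, $g_k$ is the gradient of the objective function at $x_k$ and $\beta_k$ is the CG parameter [7]. The efficiency of the CG method depends on an appropriate choice of the parameter $\beta_k$; see [7,8,9]. As discussed in the survey by Hager and Zhang [7], one of the most effective CG parameters is the one suggested by Polak, Ribiére and Polyak (PRP) [8]. It has a built-in restart feature and is given by

$$\beta_k^{PRP} = \frac{g_k^T y_{k-1}}{\|g_{k-1}\|^2}, \tag{4}$$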

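where $y_{k-1} = g_k - g_{k-1}$ and $\|\cdot\|$ denotes the Euclidean norm. When a small step is taken, $y_{k-1}$ tends to be small, so $\beta_k^{PRP} \approx 0$ and $d_k$ automatically restarts close to $-g_k$. However, despite the effectiveness and efficiency of the PRP method, its direction is not necessarily a descent direction, i.e., it may fail to satisfy the condition

$$g_k^T d_k < 0. \tag{5}$$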

This prompted Zhang et al. [10] to propose the following modification of the PRP CG parameter given by

The modified CG parameter (6) satisfies the sufficient descent condition

$$g_k^T d_k \le -c\|g_k\|^2, \tag{7}$$

where $c$ is a positive constant.
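This minimal NumPy sketch computes the classical PRP parameter and the CG direction (3), and tests the descent condition and the sufficient descent condition (7). The function names are hypothetical and the gradients are made up. The example takes a steepest-descent step at $k = 0$ and then produces a PRP direction that points uphill. This is the failure of the descent condition that motivates modifications such as (6), which is not implemented here.

```python
import numpy as np

def beta_prp(g_new, g_old):
    """Classical PRP parameter: g_k^T y_{k-1} / ||g_{k-1}||^2 with y_{k-1} = g_k - g_{k-1}."""
    y = g_new - g_old
    return float(g_new @ y) / float(g_old @ g_old)

def cg_direction(g_new, g_old=None, d_old=None):
    """Direction (3): -g_0 at k = 0, otherwise -g_k + beta_k^PRP * d_{k-1}."""
    if g_old is None or d_old is None:
        return -g_new
    return -g_new + beta_prp(g_new, g_old) * d_old

def is_descent(g, d):
    """Descent condition: g^T d < 0."""
    return float(g @ d) < 0.0

def is_sufficient_descent(g, d, c):
    """Sufficient descent condition (7): g^T d <= -c ||g||^2 for a constant c > 0."""
    return float(g @ d) <= -c * float(g @ g)

# Steepest-descent step at k = 0, then a PRP direction with g^T d > 0.
g_old = np.array([1.0, 0.0])
d_old = cg_direction(g_old)              # equals -g_old
g_new = np.array([-1.0, 0.5])
d_new = cg_direction(g_new, g_old, d_old)
print(beta_prp(g_new, g_old), float(g_new @ d_new))   # 2.25 1.0
```

The steepest-descent direction always satisfies (7) with $c = 1$, whereas here $g_k^T d_k = 1 > 0$.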

Furthermore, in the same vein as Zhang et al. [10], Babaie-Kafaki and Ghanbari [11] studied the following extension of the PRP method, based on the Dai and Liao [12] strategy:

where the parameter in (8) is a nonnegative constant. They regarded the choice of this parameter as an open problem in nonlinear CG methods. They also pointed out that their adaptive formula, which selects it at each iteration, performs better than a fixed choice [11].

Recently, researchers have focused their attention on solving systems of symmetric nonlinear equations with CG methods. Li and Wang [13] proposed a modified Fletcher-Reeves-type (FR) CG method for solving symmetric equations. The method is derivative-free and matrix-free, and therefore it can handle large-scale symmetric nonlinear equations efficiently. In addition, Zhou and Shen proposed an inexact version of the PRP CG method for systems of symmetric nonlinear equations [14]. Since then, different CG methods for solving symmetric nonlinear systems have been presented; see [15,16,17,18,19,20,21,22,23] for more details. We are motivated by the efficiency of the extended PRP method [11] and the robustness of CG methods for large-scale symmetric nonlinear systems. We therefore propose two optimal choices for the parameter in (8). The corresponding modified PRP parameters are then used to construct algorithms for large-scale symmetric nonlinear systems that do not use exact gradient information.

The rest of this paper is organized as follows. In Section 2, we present the modified PRP CG-type algorithm for solving symmetric nonlinear equations. In Section 3, the proposed algorithm is shown to converge globally. Section 4 provides computational experiments to demonstrate its practical performance. The paper is concluded in Section 5.

2. Modified PRP CG-Type with Optimal Choices

Li and Fukushima [1] observed that, when the Jacobian of $G$ is symmetric, the following relation holds:

where the scalar appearing in (9) is arbitrary. Based on (9), they approximated the gradient of the merit function $f$ as

and the stepsize is determined by a backtracking procedure such that

where , , are real constants and is a positive sequence such that

Here, the function $f$ is the merit function defined by

$$f(x) = \frac{1}{2}\|G(x)\|^2. \tag{13}$$

Byrd and Nocedal [24] introduced the measure function

$$\varphi(A) = \operatorname{tr}(A) - \ln\big(\det(A)\big), \tag{14}$$

where $A$ is a positive definite matrix, $\operatorname{tr}(A)$ is the trace of $A$, $\ln$ denotes the natural logarithm, and $\det(A)$ is the determinant of $A$. Because $\varphi$ combines the trace and the determinant of $A$, it involves all the eigenvalues of $A$ [24]. Now, using (8) and (11), we suggest the following modified PRP CG-type parameter

where is the optimal choice of and . In what follows, we propose two optimal choices for the nonnegative constant, each computed at every iteration.
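The measure function (14) is easy to evaluate numerically. For a symmetric positive definite $A$ with eigenvalues $\lambda_i$, it equals $\sum_i (\lambda_i - \ln\lambda_i)$. Since $x - \ln x \ge 1$ for $x > 0$, with equality only at $x = 1$, $\varphi(A) \ge n$ for an $n \times n$ such matrix, with equality only at $A = I$. Minimizing $\varphi$ therefore pushes a matrix toward the identity.

The NumPy sketch below has three parts, and all function names are hypothetical:

- It evaluates (14) directly.
- It evaluates (14) through the eigenvalues, which applies to symmetric matrices only.
- It gives closed forms for the determinant and trace of a generic rank-two update $I + u_1 v_1^T + u_2 v_2^T$.

The last function rests on an assumption. We assume the search direction matrix of Section 2.1 has this structure, but its specific vectors are not reproduced here. The function therefore only illustrates the kind of identity behind (19) and (20).

```python
import numpy as np

def measure(A):
    """Byrd-Nocedal measure (14): tr(A) - ln(det(A)); requires det(A) > 0."""
    sign, logdet = np.linalg.slogdet(A)
    if sign <= 0:
        raise ValueError("the measure is undefined unless det(A) > 0")
    return float(np.trace(A) - logdet)

def measure_from_eigenvalues(A):
    """Equivalent form sum(lambda_i - ln(lambda_i)) for symmetric positive definite A."""
    lam = np.linalg.eigvalsh(A)
    return float(np.sum(lam - np.log(lam)))

def rank_two_update_det_trace(u1, v1, u2, v2):
    """Closed-form det and trace of P = I + u1 v1^T + u2 v2^T (n x n)."""
    a11, a12 = 1.0 + v1 @ u1, v1 @ u2
    a21, a22 = v2 @ u1, 1.0 + v2 @ u2
    det = a11 * a22 - a12 * a21        # det(I_n + U V^T) = det(I_2 + V^T U)
    trace = u1.shape[0] + (v1 @ u1) + (v2 @ u2)
    return float(det), float(trace)

A = np.diag([1.0, 2.0, 3.0])
print(measure(A), measure_from_eigenvalues(A))   # both ~ 6 - ln 6 ~ 4.2082
print(measure(np.eye(4)))                          # 4.0: the identity attains the bound n
```

The determinant formula follows from Sylvester's identity $\det(I_n + UV^T) = \det(I_2 + V^T U)$ with $U = [u_1, u_2]$ and $V = [v_1, v_2]$. This is why a rank-two update yields a scalar expression whose minimizer can be found by differentiation.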

2.1. The First Optimal Choice for

This subsection presents the first optimal choice, obtained by minimizing the measure function of the proposed search direction matrix. Considering Perry’s point of view [25] and Equations (3), (10) and (15), we write the search directions of the MPRP CG method as follows:

with being the search direction matrix defined by

The measure function of the search direction matrix is defined by

Since the matrix is a rank 2 update, its determinant is given by

and its trace by

respectively. Now, using (19) and (20), the measure function of the search direction matrix is

Differentiating (21) with respect to the parameter, we have

Hence, setting this derivative to zero and solving for the parameter, we obtain the first optimal choice as

Therefore, the modified PRP CG-type parameter (15) becomes

2.2. The Second Optimal Choice for

We now derive the second optimal choice of the parameter in (15). Recall that, using the approximate gradient (10), the Newton direction is given by

Moreover, the search direction for the modified PRP CG-type method (15) can be written as

The Newton direction is considered one of the most robust search directions [7]. Therefore, equating (25) with (26), we get

Now, assuming that the Jacobian of $G$ is symmetric and multiplying both sides of (27) by , we obtain

Recall that the secant equation is given by

From the fact that and by virtue of the secant equation, we rewrite (28) as

After some simple algebraic manipulations, we obtain the second optimal choice as

To establish the global convergence of the proposed algorithm and to obtain promising numerical results, we select the second optimal choice as

Therefore, the modified PRP CG-type parameter with the second optimal choice becomes

Below is the modified PRP CG-type algorithm for solving large-scale systems of symmetric nonlinear equations.
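To complement the formal listing of Algorithm 1, here is a minimal NumPy sketch of a derivative-free CG loop built from the ingredients of Section 2. Several of its details are assumptions rather than the paper's own choices:

- The gradient is approximated by the difference quotient $(G(x_k + \zeta_k G_k) - G_k)/\zeta_k$ with $\zeta_k = \alpha_{k-1}$, as in Li and Fukushima [1].
- A Li–Fukushima-type backtracking condition stands in for (11).
- $\eta_k = 1/(k+1)^2$ serves as the summable sequence in (12).
- The values $\sigma_1 = \sigma_2 = 10^{-4}$ and $r = 0.5$ are illustrative.
- A steepest-descent restart safeguard is added.

Most importantly, the classical PRP parameter (4) is used in place of the optimal-choice parameters of Sections 2.1 and 2.2, whose formulas are not reproduced in this text. All function names are hypothetical.

```python
import numpy as np

def approx_gradient(G, x, Gx, zeta):
    """Difference quotient approximating grad f(x) = J(x) G(x), f = 0.5*||G||^2,
    valid when the Jacobian J is symmetric (Li-Fukushima idea)."""
    return (G(x + zeta * Gx) - Gx) / zeta

def line_search(G, x, d, Gx, eta, sigma1=1e-4, sigma2=1e-4, r=0.5, max_backtracks=60):
    """Try alpha = 1, r, r^2, ... until the ASSUMED condition
    ||G(x+a d)||^2 - ||G(x)||^2 <= -sigma1 ||a G(x)||^2 - sigma2 ||a d||^2 + eta ||G(x)||^2
    holds (a Li-Fukushima-type rule standing in for (11))."""
    nG2 = float(Gx @ Gx)
    nd2 = float(d @ d)
    alpha = 1.0
    for _ in range(max_backtracks):
        Gt = G(x + alpha * d)
        lhs = float(Gt @ Gt) - nG2
        rhs = -sigma1 * alpha**2 * nG2 - sigma2 * alpha**2 * nd2 + eta * nG2
        if lhs <= rhs:
            break
        alpha *= r
    return alpha

def solve(G, x0, tol=1e-6, max_iter=1000):
    """Derivative-free CG loop; returns (x, iterations used).
    The classical PRP parameter stands in for the paper's optimal choices."""
    x = np.asarray(x0, dtype=float)
    Gx = G(x)
    g = approx_gradient(G, x, Gx, 1.0)      # initial zeta = 1 is an assumption
    d = -g
    for k in range(max_iter):
        if np.linalg.norm(Gx) <= tol:
            return x, k
        eta = 1.0 / (k + 1) ** 2              # positive and summable, as (12) requires
        alpha = line_search(G, x, d, Gx, eta)
        x = x + alpha * d
        Gx = G(x)
        g_new = approx_gradient(G, x, Gx, alpha)   # zeta_k = alpha_{k-1}
        beta = float(g_new @ (g_new - g)) / float(g @ g)
        d = -g_new + beta * d
        if float(g_new @ d) > -1e-10 * float(g_new @ g_new):
            d = -g_new                         # restart safeguard (not from the paper)
        g = g_new
    return x, max_iter
```

For example, `solve(lambda x: 2.0 * x + np.sin(x) - 1.0, np.zeros(5))` applies the loop to a small separable map. Its Jacobian $\mathrm{diag}(2 + \cos x_i)$ is symmetric positive definite. This toy map is our own illustration, not one of Problems 1–8.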

3. Global Convergence

To start with the optimal choice , we define the level set by

where satisfies (12).

Lemma 1.

Let be generated by the MPRP Algorithm 1. Then . In addition, converges.

Algorithm 1: MPRP Algorithm

- Step 0: Choose , , , and set $k = 0$.
- Step 1: Check whether is satisfied; if so, stop; otherwise, go to Step 2.
- Step 2: Determine the stepsize by using (11).
- Step 3: Compute
- Step 4: Determine the CG direction as
- Step 5: Set $k = k + 1$ and go back to Step 1.

Proof.

We make the following assumptions, which will be used frequently in the rest of this section.

Assumption A1.

- (i) The set Ψ is bounded.

- (ii) The Jacobian of $G$ is Lipschitz continuous in some neighbourhood of Ψ; that is, there exists a positive constant M such that

Assumptions (i) and (ii) imply that there exist constants such that, for all

Lemma 2.

Let Assumption A1 hold. Then we have

and

Proof.

The following theorem shows that the MPRP algorithm converges globally.

Theorem 1.

Let Assumption A1 hold. Then the sequence generated by the MPRP Algorithm 1 converges globally, that is,

Proof.

We prove this theorem by contradiction. Suppose that (44) does not hold; then there exists a positive constant such that

Since , (45) implies that there exists a positive constant satisfying

CASE II:. Since , this case implies that

By the definition of the approximate gradient in (10) and the symmetry of the Jacobian, we have

where, in the last inequality, we used the Lipschitz assumption on the Jacobian together with the boundedness of . However, (45) and (47) show that there exists a constant such that

By (10) and the boundedness of and

Now, from Equation (48) and the Lipschitz assumption on the Jacobian,

This, together with (40) and (47), shows that . Then, from (49) and (50), we get

which means that there exists a constant such that for sufficiently large k,

Without loss of generality, we assume that inequality (52) holds for all $k$. Then, from (3) and (50), we get

which shows that the sequence is bounded. Since , does not satisfy (11); that is,

which means that

By the mean value theorem, there exists in such that

Since is bounded, we may assume that . Using (48) and the boundedness of , we then obtain

On the other hand, we have

Hence, substituting (58) and (59) into (57), we get , which implies . This contradicts (45) and completes the proof of the theorem. □

4. Numerical Experiments

In this section, we compare the numerical performance of the modified PRP CG-type algorithm using the proposed optimal choice with that of the norm descent derivative-free algorithm (NDDA) [21] and the ICGM algorithm [22] for solving the symmetric nonlinear Equation (1). For the MPRP algorithm, we set , , and . For the other two methods, we adopted the parameters used in their respective papers. The codes were written in Matlab R2014a and run on a personal computer with a 1.6 GHz CPU and 8 GB of RAM. The iteration is stopped if the total number of iterations exceeds 1000 or if . We tested all three methods on the following eight test problems with different initial points and values of n:

Problem 1 ([1]).

- , for .

- ,

Problem 2 ([1]).

Problem 3 ([14]).

- for ,

Problem 4 ([27]).

- , for

Problem 5 ([27]).

- for ,

Problem 6 ([27]).

- for ,

Problem 7 ([15]).

- for ,

Problem 8 ([26]).

Table 1, Table 2, Table 3 and Table 4 contain the numerical results of the three methods on the test problems with eight different initial points.

Table 1. Numerical comparisons of MPRP, NDDA [21] and ICGM [22].

Table 2. Numerical comparisons of MPRP, NDDA [21] and ICGM [22].

Table 3. Numerical comparisons of MPRP, NDDA [21] and ICGM [22].

Table 4. Numerical comparisons of MPRP, NDDA [21] and ICGM [22].

In Table 1, Table 2, Table 3 and Table 4, “ITER” denotes the number of iterations, “TIME” the CPU time, “FEV” the number of function evaluations, and “NORM” the norm of the function at the stopping point. Table 1 contains Problems 1 and 2. In terms of the number of iterations, MPRP with the optimal choice is the winner, followed by the NDDA method and then the remaining two algorithms. The proposed algorithms are also promising in terms of the number of function evaluations and CPU time. The same observations hold throughout the remaining tables for the number of iterations, CPU time, and number of function evaluations.

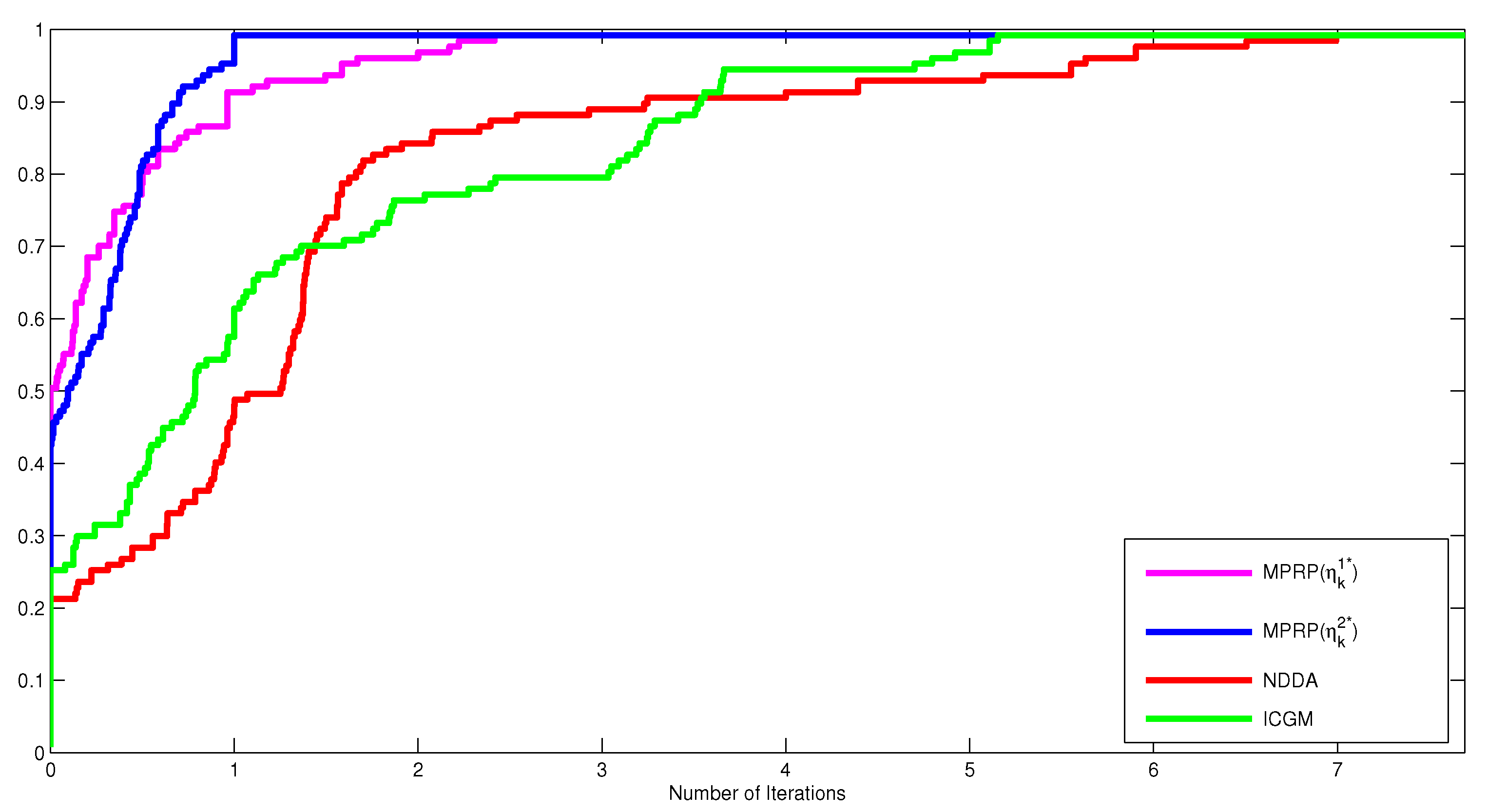

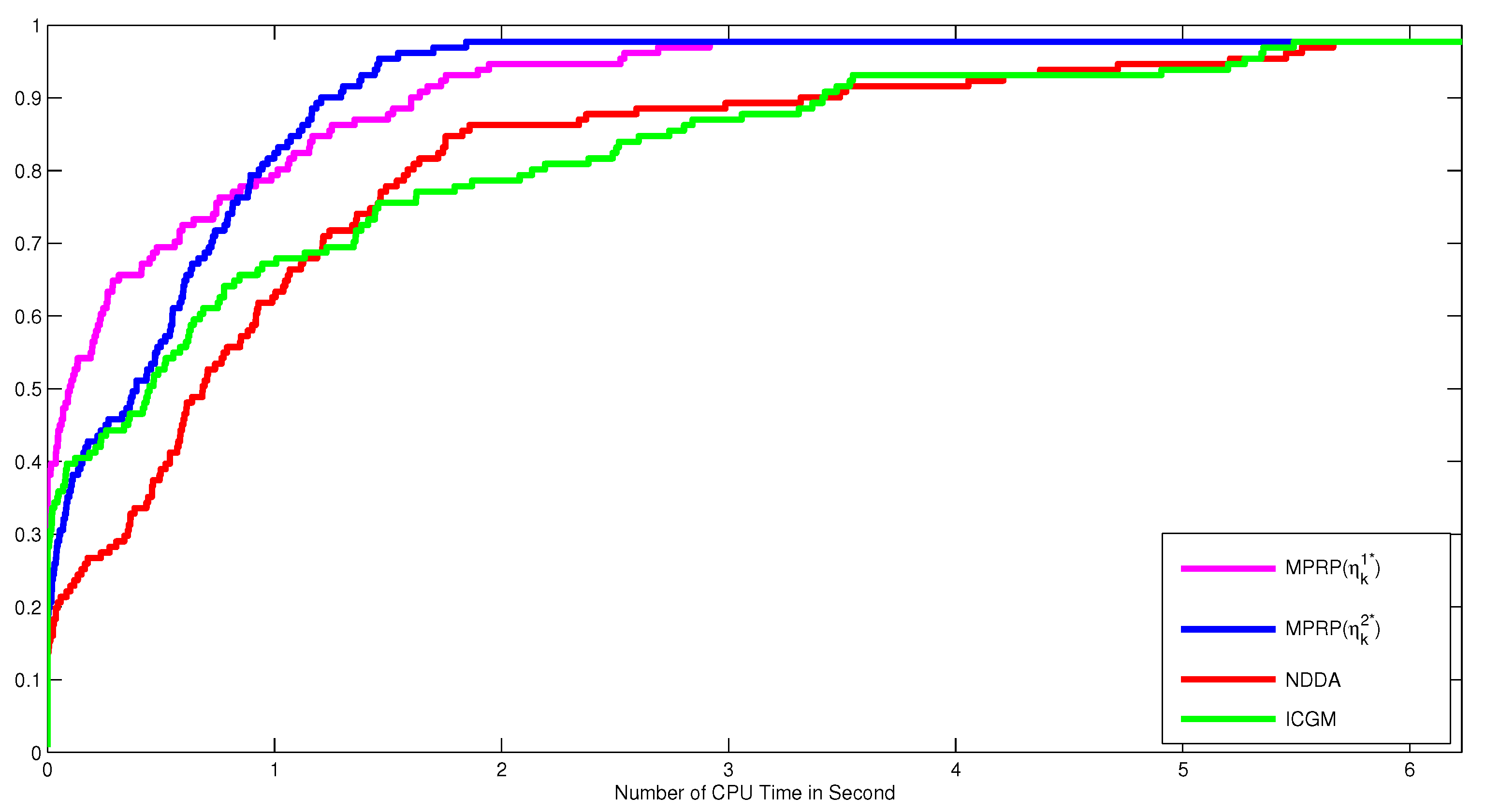

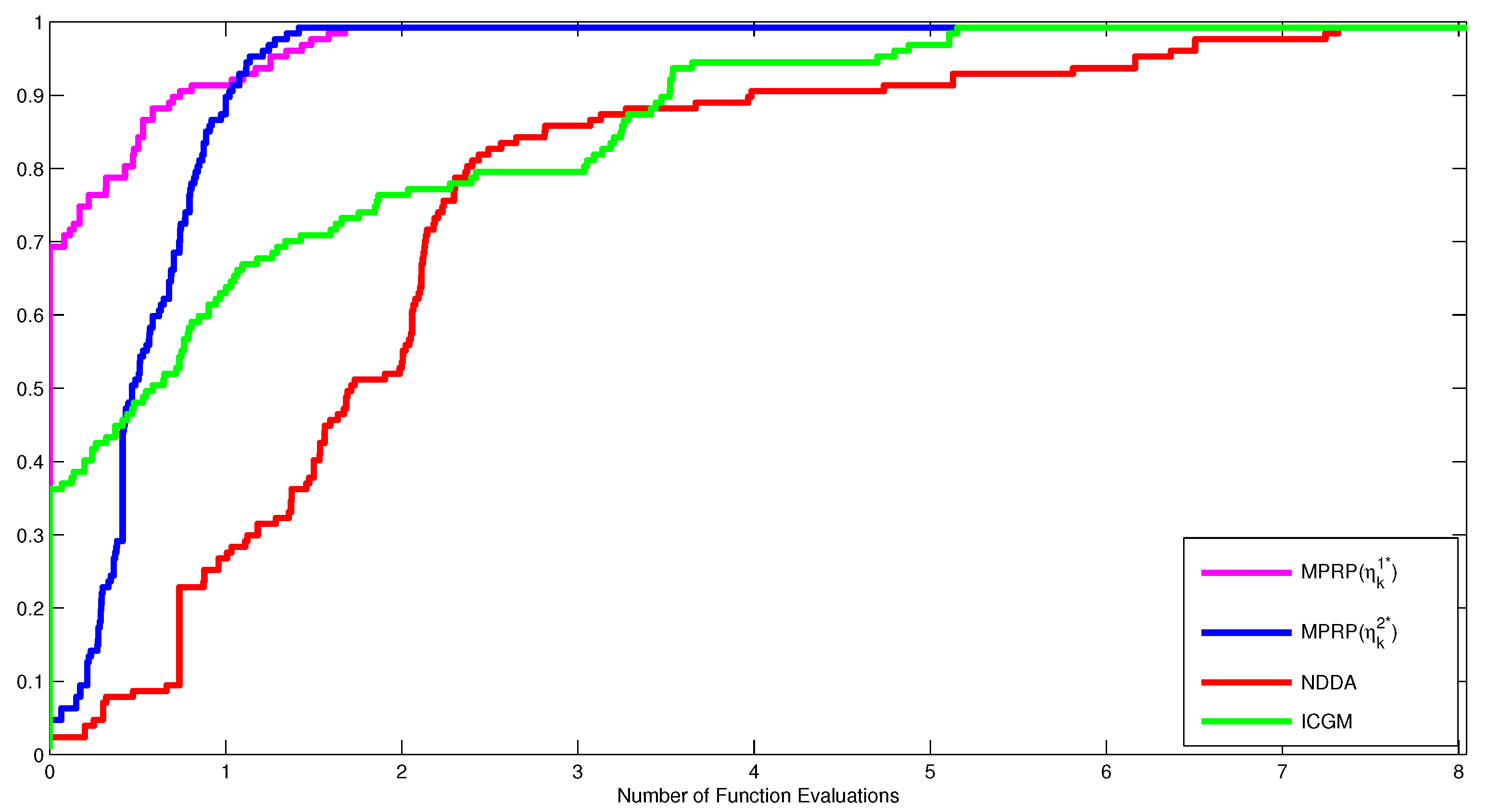

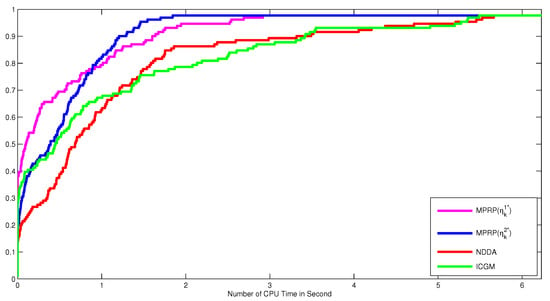

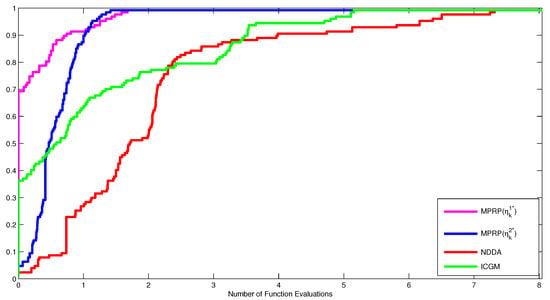

Moreover, to show the performance of these algorithms more clearly, Figure 1, Figure 2 and Figure 3 were plotted from the data in Table 1, Table 2, Table 3 and Table 4 using the Dolan and Moré performance profiles [28]. In such a profile, the most efficient method is the one whose curve lies at the top left of all the curves. Therefore, we conclude from Figure 1, Figure 2 and Figure 3 that the MPRP with the optimal choice is the most effective with respect to the number of iterations. It is followed by the MPRP with optimal choice , the NDDA algorithm, and lastly the ICGM algorithm. Similarly, the proposed algorithms remain the most stable with respect to CPU time and the number of function evaluations, since their curves lie at the top left.
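The profile is computed from a table of costs, following the Dolan–Moré definition [28]. For each problem, a solver's cost is divided by the best cost achieved on that problem. For each $\tau \ge 1$, $\rho_s(\tau)$ is the fraction of problems on which this ratio for solver $s$ is at most $\tau$.

Two values are worth reading off. $\rho_s(1)$ is the fraction of problems on which solver $s$ is best or tied for best, and $\rho_s(\tau)$ for large $\tau$ is the fraction of problems it solves at all. The minimal NumPy sketch below computes the profile; the function name is hypothetical and the cost table is made up, unrelated to Tables 1–4.

```python
import numpy as np

def performance_profile(T, taus):
    """Dolan-More performance profile.

    T[p, s] is the cost (e.g. ITER, TIME or FEV) of solver s on problem p,
    with np.inf marking a failure. Returns rho, where rho[s, j] is the
    fraction of problems on which solver s is within a factor taus[j]
    of the best solver.
    """
    T = np.asarray(T, dtype=float)
    best = T.min(axis=1, keepdims=True)       # best cost on each problem
    ratios = T / best                          # performance ratios r_{p,s}
    taus = np.asarray(taus, dtype=float)
    # rho_s(tau) = (number of problems with r_{p,s} <= tau) / (number of problems)
    return (ratios[:, :, None] <= taus[None, None, :]).mean(axis=0)

# Toy cost table: 3 problems (rows) x 3 solvers (columns); not data from Tables 1-4.
T = [[10, 20, 15],
     [30, 30, np.inf],
     [5, 4, 8]]
rho = performance_profile(T, [1.0, 2.0])
```

Here solvers 0 and 1 are each best (or tied) on two of the three problems, so their profiles start at 2/3 and reach 1 by $\tau = 2$. Solver 2 never wins and fails on one problem, so its curve stays at or below 2/3. Plotting $\rho_s(\tau)$ against $\tau$ gives curves like those in Figure 1, Figure 2 and Figure 3. A problem on which every solver fails yields NaN ratios, which count as "not within $\tau$".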

Figure 1. Performance profiles of MPRP, NDDA [21] and ICGM [22] for the number of iterations.

Figure 2. Performance profiles of MPRP, NDDA [21] and ICGM [22] for the CPU time in seconds.

Figure 3. Performance profiles of MPRP, NDDA [21] and ICGM [22] for the number of function evaluations.

5. Conclusions

In this paper, we proposed a derivative-free PRP CG-type algorithm for solving symmetric nonlinear equations and proved its global convergence under a backtracking-type line search. The proposed algorithm uses no information on the Jacobian matrix of G at any stage, which makes it suitable for large-scale symmetric nonlinear systems. Numerical results also show that the proposed algorithm is robust. On the tested symmetric nonlinear equations, it performs better than the NDDA [21] and ICGM [22] schemes in terms of the number of iterations and the CPU time in seconds. We attribute this to the algorithm's use of the optimal choice at every iteration.

Author Contributions

Conceptualization, J.S.; methodology, J.S.; software, A.B.A.; validation, K.M. and A.S.; formal analysis, K.M. and L.O.J.; investigation, K.M. and A.B.A.; resources, K.M.; data curation, A.B.A. and A.S.; writing—original draft preparation, J.S.; writing—review and editing, A.B.A.; visualization, L.O.J.; supervision, K.M.; project administration, A.S.; funding acquisition, L.O.J. All authors have read and agreed to the published version of the manuscript.

Funding

The first author is grateful to TWAS/CUI for the Award of FR number: 3240299486.

Acknowledgments

The second author was financially supported by Rajamangala University of Technology Phra Nakhon (RMUTP) Research Scholarship.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Li, D.; Fukushima, M. A Globally and Superlinearly Convergent Gauss-Newton-Based BFGS Method for Symmetric Nonlinear Equations. SIAM J. Numer. Anal. 1999, 37, 152–172.

- Yuan, G.; Lu, X. A new backtracking inexact BFGS method for symmetric nonlinear equations. Comput. Math. Appl. 2008, 55, 116–129.

- Yuan, G.; Lu, X.; Wei, Z. BFGS trust-region method for symmetric nonlinear equations. J. Comput. Appl. Math. 2009, 230, 44–58.

- Gu, G.Z.; Li, D.H.; Qi, L.; Zhou, S.Z. Descent directions of quasi-Newton methods for symmetric nonlinear equations. SIAM J. Numer. Anal. 2002, 40, 1763–1774.

- Dauda, M.K.; Mamat, M.; Mohamad, F.S.; Magaji, A.S.; Waziri, M.Y. Derivative Free Conjugate Gradient Method via Broyden’s Update for solving symmetric systems of nonlinear equations. J. Phys. Conf. Ser. 2019, 1366, 012099.

- Zhou, W. A modified BFGS type quasi-Newton method with line search for symmetric nonlinear equations problems. J. Comput. Appl. Math. 2020, 367, 112454.

- Hager, W.W.; Zhang, H. A survey of nonlinear conjugate gradient methods. Pac. J. Optim. 2006, 2, 35–58.

- Polak, E.; Ribiére, G. Note on the convergence of methods of conjugate directions (in French: Note sur la convergence de méthodes de directions conjuguées). RIRO 1969, 3, 35–43.

- Andrei, N. A double parameter scaled BFGS method for unconstrained optimization. J. Comput. Appl. Math. 2018, 332, 26–44.

- Zhang, L.; Zhou, W.; Li, D.H. A descent modified Polak–Ribiére–Polyak conjugate gradient method and its global convergence. IMA J. Numer. Anal. 2006, 26, 629–640.

- Babaie-Kafaki, S.; Ghanbari, R. A descent extension of the Polak–Ribiére–Polyak conjugate gradient method. Comput. Math. Appl. 2014, 68, 2005–2011.

- Dai, Y.H.; Liao, L.Z. New conjugacy conditions and related nonlinear conjugate gradient methods. Appl. Math. Optim. 2001, 43, 87–101.

- Li, D.H.; Wang, X.L. A modified Fletcher-Reeves-type derivative-free method for symmetric nonlinear equations. Numer. Algebra Control Optim. 2011, 1, 71–82.

- Zhou, W.; Shen, D. An inexact PRP conjugate gradient method for symmetric nonlinear equations. Numer. Func. Anal. Opt. 2014, 35, 370–388.

- Zhou, W.; Shen, D. Convergence properties of an iterative method for solving symmetric non-linear equations. J. Optim. Theory Appl. 2015, 164, 277–289.

- Waziri, M.Y.; Sabi’u, J. A derivative-free conjugate gradient method and its global convergence for solving symmetric nonlinear equations. Int. J. Math. Math. Sci. 2015, 2015, 1–8.

- Zou, B.; Zhang, L. A nonmonotone inexact MFR method for solving symmetric nonlinear equations. AMO 2016, 18, 87–95.

- Sabi’u, J. Enhanced derivative-free conjugate gradient method for solving symmetric nonlinear equations. Int. J. Adv. Appl. Sci. 2016, 5, 50–57.

- Waziri, M.Y.; Sabi’u, J. An alternative conjugate gradient approach for large-scale symmetric nonlinear equations. J. Math. Comput. Sci. 2016, 6, 855–874.

- Sabi’u, J. Effective algorithm for solving symmetric nonlinear equations. J. Contemp. Appl. Math. 2017, 7, 157–164.

- Liu, J.K.; Feng, Y.M. A norm descent derivative-free algorithm for solving large-scale nonlinear symmetric equations. J. Comput. Appl. Math. 2018, 344, 89–99.

- Abubakar, A.B.; Kumam, P.; Awwal, A.M. An inexact conjugate gradient method for symmetric nonlinear equations. Comput. Math. Methods 2019, 1, e1065.

- Dauda, M.K.; Mustafa, M.; Mohamad, A.; Nor, S.A. Hybrid conjugate gradient parameter for solving symmetric systems of nonlinear equations. Indones. J. Electr. Eng. Comput. Sci. (IJEECS) 2019, 16, 539–543.

- Byrd, R.H.; Nocedal, J. A tool for the analysis of quasi-Newton methods with application to unconstrained minimization. SIAM J. Numer. Anal. 1989, 26, 727–739.

- Perry, A. A modified conjugate gradient algorithm. Oper. Res. 1978, 26, 1073–1078.

- Dennis, J.E.; Moré, J.J. A characterization of superlinear convergence and its application to quasi-Newton methods. Math. Comput. 1974, 28, 549–560.

- La Cruz, W.; Martínez, J.M.; Raydan, M. Spectral residual method without gradient information for solving large-scale nonlinear systems of equations. Math. Comput. 2006, 75, 1429–1448.

- Dolan, E.D.; Moré, J.J. Benchmarking optimization software with performance profiles. Math. Program. 2002, 91, 201–213.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).