Abstract

We introduce a new parallel hybrid subgradient extragradient method for solving a system consisting of pseudomonotone equilibrium problems and a common fixed point problem in real reflexive Banach spaces. The algorithm is designed so that its convergence does not require prior estimates of the Lipschitz-like constants of the finitely many bifunctions underlying the equilibrium problems. Moreover, a strong convergence result is proven without imposing strong conditions on the control sequences. We further provide some numerical experiments to illustrate the performance of the proposed algorithm and compare it with some existing methods.

Keywords:

pseudomonotone; equilibrium problem; fixed point problem; Bregman distance; Bregman nonexpansive mappings; real reflexive Banach spaces

MSC:

49J40; 58E35; 65K15; 90C33

1. Introduction

In this paper, we consider the Equilibrium Problem (EP) in the framework of a real reflexive Banach space. Let E be a real reflexive Banach space, let C be a nonempty, closed and convex subset of E, and let $g: C \times C \to \mathbb{R}$ be a bifunction. The EP with respect to g is to find a point $x^* \in C$ such that:

$$g(x^*, y) \ge 0 \quad \text{for all } y \in C. \tag{1}$$

We denote the set of solutions of (1) by $EP(g)$. It is well known that many problems in nonlinear analysis, such as complementarity, fixed point, Nash equilibrium, optimization, saddle point and variational inequality problems, can be formulated as an EP [1]. Owing to this wide range of applications, the EP has recently attracted the interest of many researchers (see, for example, [2,3,4,5] and the references therein).

On the other hand, let $T: C \to C$ be an operator. A point $x \in C$ is called a fixed point of T if $Tx = x$. We denote the set of fixed points of T by $F(T)$. Fixed point theory has numerous applications in both pure and applied science: its largest area of application is differential equations, and others include economics, control theory, optimization and game theory. Various techniques have been proposed for approximating fixed points of nonexpansive and quasi-nonexpansive mappings; see [6,7,8,9,10,11] and the references therein.

The problem of finding a common solution of the EP and the fixed point problem, i.e.:

$$\text{find } x^* \in C \text{ that solves (1) and satisfies } Tx^* = x^*,$$

has become an important area of research owing to its applications, mainly in image processing, network distribution and signal processing [12,13,14,15].

Tada and Takahashi [16] proposed the following hybrid method for finding a common element of the set of solutions of a monotone EP and the set of fixed points of a nonexpansive mapping T in Hilbert spaces:

Note that, at each step, computing the intermediate approximation requires solving a strongly monotone regularized equilibrium problem, i.e.,

If the bifunction g is not monotone, then subproblem (3) is not necessarily strongly monotone; hence, the regularization method cannot be applied. To overcome this difficulty, Anh [17] proposed the following iterative scheme for finding a common element of the set of fixed points of a nonexpansive mapping T and the set of solutions of the EP involving a pseudomonotone bifunction g in Hilbert spaces:

In order to establish the strong convergence of the sequences generated by (4), the author required the positive stepsize sequence to satisfy a condition involving the Lipschitz-like constants of g, i.e.,

where and are the Lipschitz-like constants of g, that is and satisfy the following inequality: , where is the associated Bregman distance of the convex function f.

In 2016, Hieu et al. [18], following a similar approach, introduced the following parallel hybrid method, called the Parallel Modified Extragradient Method (PMEM), for solving a finite family of equilibrium problems and the common fixed point problem of a finite family of nonexpansive mappings in real Hilbert spaces:

They also proved a strong convergence result for the sequence generated by (6) provided the stepsize satisfies the following condition:

where and , such that and are the Lipschitz-like constants for for

In 2014, Shahzad and Zegeye [11] presented the following iterative scheme for approximating a common fixed point of a finite family of multi-valued Bregman relatively nonexpansive mappings in real reflexive Banach spaces:

where and C is a nonempty closed convex subset of int dom f (i.e., the interior of the domain of f). Under some mild conditions on the parameters, the authors proved the strong convergence of the sequence to , where .

Recently, Eskandani et al. [19] introduced a Bregman–Lipschitz-type condition for pseudomonotone bifunctions and, in order to approximate a common element of the solution set of the EP and the set of common fixed points of a finite family of multi-valued Bregman relatively nonexpansive mappings in reflexive Banach spaces, presented the following hybrid parallel extragradient algorithm, called HPA:

where is the Bregman distance associated with a function f. Under suitable assumptions, they established that the sequence converges strongly to , where provided that the stepsize satisfies the condition:

where = , and are the Bregman–Lipschitz coefficients of bifunction for

It is worth noting that the results of Anh, Hieu et al. and Eskandani et al. mentioned above, and other similar ones (see, e.g., [20,21,22]), require prior knowledge of the Lipschitz-like constants, which are often very difficult to estimate in practice. Moreover, even when such estimates are available, they are often conservative, which leads to small stepsizes and slows down the convergence of the algorithms. It is therefore important to design algorithms that do not depend on prior knowledge of the Lipschitz-like constants. Recently, the authors of [23,24,25] introduced modified extragradient algorithms for solving the pseudomonotone EP (when ), which do not require prior estimates of the Lipschitz-like constants and . Also related to our work are several methods such as [26], which uses a variable stepsize formula for a bifunction satisfying a Lipschitz-like condition and likewise operates without prior estimates of the Lipschitz-type constants. The authors of [27,28] considered algorithms for solving mixed equilibrium problems, split variational inclusion problems and fixed point problems. Lastly, we mention in passing that the authors of [29] considered a convex feasibility problem, that is, finding a common element of the intersection of a finite family of convex sets; in contrast, in our work, we consider a finite family of pseudomonotone bifunctions over a single fixed set C.

Motivated by the results above, in this paper we propose a new subgradient extragradient method for finding a common element of the set of solutions of equilibrium problems for a finite family of pseudomonotone bifunctions and the set of common fixed points of a finite family of Bregman relatively nonexpansive mappings in reflexive Banach spaces. Our algorithm is designed so that its convergence does not require prior knowledge of the Lipschitz-like constants of the bifunctions. Under mild assumptions, we prove a strong convergence result for the sequence generated by our algorithm. Furthermore, we give some numerical examples to demonstrate the efficiency and competitiveness of our algorithm compared with other algorithms in the literature.

2. Preliminaries

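Throughout this work, E and C are as defined in the Introduction, and we denote the dual space of E by $E^*$. Let $f: E \to (-\infty, +\infty]$ be a proper, convex and lower semicontinuous function. We denote the domain of f by dom f, i.e., the set $\{x \in E : f(x) < +\infty\}$. For $x \in \operatorname{int}\operatorname{dom} f$, we define the subdifferential of f at x as the convex set:

$$\partial f(x) = \{x^* \in E^* : f(x) + \langle x^*, y - x\rangle \le f(y) \ \text{for all } y \in E\}.$$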

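We also define the Fenchel conjugate of f as the function $f^*: E^* \to (-\infty, +\infty]$ given by:

$$f^*(x^*) = \sup\{\langle x^*, x\rangle - f(x) : x \in E\}.$$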

It is easy to see that $f^*$ is a proper, convex and lower semicontinuous function. A function f on E is said to be coercive [30] if:

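Now, for a convex function f, the directional derivative of f at $x \in \operatorname{int}\operatorname{dom} f$ in the direction y, denoted by $f^\circ(x, y)$, is given by:

$$f^\circ(x, y) = \lim_{t \to 0^+} \frac{f(x + ty) - f(x)}{t}. \tag{10}$$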

If the limit in (10) exists for every $y \in E$, then f is called Gâteaux differentiable at x. The function f is called Gâteaux differentiable if it is Gâteaux differentiable at every $x \in \operatorname{int}\operatorname{dom} f$. If the limit in (10) is attained uniformly for $\|y\| = 1$, we say that f is Fréchet differentiable at x. In the sequel, we assume that f is an admissible function, i.e., f is proper, convex, lower semicontinuous and Gâteaux differentiable. In this case, f is continuous on int dom f (see [31,32]). Also, f is said to be Legendre if it satisfies the following two conditions:

- L1.

- $\operatorname{int}\operatorname{dom} f \ne \emptyset$, and the subdifferential $\partial f$ is single-valued on its domain;

- L2.

- $\operatorname{int}\operatorname{dom} f^* \ne \emptyset$, and $\partial f^*$ is single-valued on its domain.

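According to ([33], p. 83), in a reflexive Banach space we have $\partial f^* = (\partial f)^{-1}$. Combining this fact with (L1) and (L2), we get:

$$\nabla f = (\nabla f^*)^{-1}, \quad \operatorname{ran}\nabla f = \operatorname{dom}\nabla f^* = \operatorname{int}\operatorname{dom} f^*, \quad \operatorname{ran}\nabla f^* = \operatorname{dom}\nabla f = \operatorname{int}\operatorname{dom} f,$$

where $\nabla f$ denotes the gradient of f.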

Likewise, according to ([34], Corollary 5.5, p. 634), f is Legendre if and only if $f^*$ is Legendre; in this case, f and $f^*$ are Gâteaux differentiable and strictly convex on int dom f and int dom $f^*$, respectively.
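To make the Legendre machinery concrete, here is a small numerical sketch (our own illustration, not taken from the cited works) using the Legendre function $f(x) = \frac{1}{p}\sum_i |x_i|^p$ on $\mathbb{R}^m$ with $p > 1$, whose conjugate is $f^*(x^*) = \frac{1}{q}\sum_i |x^*_i|^q$ with $1/p + 1/q = 1$. It checks that $\nabla f^*$ and $\nabla f$ are mutually inverse and that the Fenchel–Young inequality $f(x) + f^*(x^*) \ge \langle x^*, x\rangle$ holds with equality at $x^* = \nabla f(x)$. The exponent `P = 3` and the random test points are arbitrary choices.

```python
import numpy as np

P = 3.0                    # exponent p > 1 (arbitrary illustrative choice)
Q = P / (P - 1.0)          # conjugate exponent q, so that 1/p + 1/q = 1

def f(x):                  # f(x) = (1/p) * sum_i |x_i|^p
    return np.sum(np.abs(x) ** P) / P

def grad_f(x):
    return np.sign(x) * np.abs(x) ** (P - 1.0)

def f_star(y):             # Fenchel conjugate f*(y) = (1/q) * sum_i |y_i|^q
    return np.sum(np.abs(y) ** Q) / Q

def grad_f_star(y):
    return np.sign(y) * np.abs(y) ** (Q - 1.0)

rng = np.random.default_rng(0)
x = rng.normal(size=5)
y = rng.normal(size=5)

print(np.allclose(grad_f_star(grad_f(x)), x))                 # grad f* inverts grad f
print(np.allclose(grad_f(grad_f_star(y)), y))                 # and vice versa
print(np.isclose(f(x) + f_star(grad_f(x)), grad_f(x) @ x))    # Fenchel-Young equality
```

The inversion works because $(p-1)(q-1) = 1$, so the two power maps undo each other componentwise.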

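In 1967, Bregman [35] introduced an elegant and effective tool for designing and analysing feasibility and optimization algorithms. In what follows, we assume that $f: E \to (-\infty, +\infty]$ is a Gâteaux differentiable function. The Bregman distance is the bifunction $D_f: \operatorname{dom} f \times \operatorname{int}\operatorname{dom} f \to [0, +\infty)$ defined by:

$$D_f(y, x) = f(y) - f(x) - \langle \nabla f(x), y - x\rangle.$$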

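The Bregman distance satisfies the following important property, called the three point identity: for any $x \in \operatorname{dom} f$ and $y, z \in \operatorname{int}\operatorname{dom} f$,

$$D_f(x, y) + D_f(y, z) - D_f(x, z) = \langle \nabla f(z) - \nabla f(y), x - y\rangle. \tag{11}$$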
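The three point identity $D_f(x, y) + D_f(y, z) - D_f(x, z) = \langle \nabla f(z) - \nabla f(y), x - y\rangle$ is easy to check numerically. The sketch below assumes, purely for illustration, the generator $f(x) = \frac12 x^\top M x$ with a randomly generated symmetric positive definite matrix $M$; the helper `bregman` is our own name and simply evaluates the definition of $D_f$.

```python
import numpy as np

def bregman(f, grad_f, x, y):
    """D_f(x, y) = f(x) - f(y) - <grad f(y), x - y>."""
    return f(x) - f(y) - grad_f(y) @ (x - y)

# Illustrative generator f(x) = 0.5 * x^T M x with M symmetric positive definite
rng = np.random.default_rng(1)
B = rng.normal(size=(4, 4))
M = B @ B.T + 4.0 * np.eye(4)

def f(x):
    return 0.5 * x @ M @ x

def grad_f(x):
    return M @ x

x, y, z = rng.normal(size=(3, 4))
lhs = bregman(f, grad_f, x, y) + bregman(f, grad_f, y, z) - bregman(f, grad_f, x, z)
rhs = (grad_f(z) - grad_f(y)) @ (x - y)
print(lhs, rhs)  # the two numbers agree up to rounding
```

Since the identity only uses the definition of $D_f$, the same check works for any differentiable generator passed to `bregman`.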

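According to ([36], Section 1.2, p. 17), the modulus of total convexity of f is the function $\nu_f: \operatorname{int}\operatorname{dom} f \times [0, +\infty) \to [0, +\infty]$ defined by:

$$\nu_f(x, t) = \inf\{D_f(y, x) : y \in \operatorname{dom} f, \ \|y - x\| = t\}.$$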

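The function f is called totally convex at $x \in \operatorname{int}\operatorname{dom} f$ if $\nu_f(x, t) > 0$ for any $t > 0$. The function f is said to be totally convex when it is totally convex at every point $x \in \operatorname{int}\operatorname{dom} f$. We mention in passing that f is totally convex on bounded subsets if and only if f is uniformly convex on bounded subsets (see [36]). Following [37], f is said to be sequentially consistent if, for any bounded sequence $\{x_n\} \subset \operatorname{int}\operatorname{dom} f$ and any sequence $\{y_n\} \subset \operatorname{dom} f$:

$$\lim_{n \to \infty} D_f(y_n, x_n) = 0 \implies \lim_{n \to \infty} \|y_n - x_n\| = 0.$$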

Lemma 1

([31]). If $f: E \to \mathbb{R}$ is uniformly Fréchet differentiable and bounded on bounded subsets of E, then $\nabla f$ is uniformly continuous on bounded subsets of E from the strong topology of E to the strong topology of $E^*$.

Lemma 2

([36]). If dom f contains at least two points, then the function f is totally convex on bounded sets if and only if the function f is sequentially consistent.

Lemma 3

([32]). Let $f: E \to \mathbb{R}$ be a Gâteaux differentiable and totally convex function. If $x_0 \in E$ and the sequence $\{D_f(x_n, x_0)\}$ is bounded, then the sequence $\{x_n\}$ is also bounded.

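The Bregman projection (cf. [35]) with respect to f of $x \in \operatorname{int}\operatorname{dom} f$ onto a nonempty, closed and convex set $C \subset \operatorname{int}\operatorname{dom} f$ is the unique vector $\operatorname{Proj}_C^f(x) \in C$ that satisfies:

$$D_f(\operatorname{Proj}_C^f(x), x) = \inf\{D_f(y, x) : y \in C\}.$$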

Similar to the metric projection in Hilbert spaces, the Bregman projection with respect to totally convex and Gâteaux differentiable functions has a variational characterization ([38], Corollary 4.4, p. 23).

Lemma 4

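([38]). Assume that f is Gâteaux differentiable and totally convex on int dom f. Let $x \in \operatorname{int}\operatorname{dom} f$ and let $C \subset \operatorname{int}\operatorname{dom} f$ be a nonempty, closed and convex set. If $\hat{x} \in C$, then the following conditions are equivalent:

- M1.

- The vector $\hat{x}$ is the Bregman projection of x onto C with respect to f.

- M2.

- The vector $\hat{x}$ is the unique solution $z \in C$ of the variational inequality: $\langle \nabla f(x) - \nabla f(z), z - y\rangle \ge 0$ for all $y \in C$.

- M3.

- The vector $\hat{x}$ is the unique solution $z \in C$ of the inequality: $D_f(y, z) + D_f(z, x) \le D_f(y, x)$ for all $y \in C$.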
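As an illustration of Lemma 4 (a sketch under our own assumptions), take $f(x) = \sum_i x_i \log x_i$ on the positive orthant and $C = \{u : u_i \ge \varepsilon,\ \sum_i u_i = 1\}$ with $\varepsilon = 0.01$. Minimizing $D_f(\cdot, x)$ over the hyperplane $\sum_i u_i = 1$ with a Lagrange multiplier gives the rescaled vector $x / \sum_i x_i$, so whenever that vector satisfies the lower bound $\varepsilon$, it is also the Bregman projection onto C. The code then checks the inequality $D_f(y, z) + D_f(z, x) \le D_f(y, x)$ from (M3); for this generator and constraint it holds with equality, because $\nabla f(x) - \nabla f(z)$ is a constant vector.

```python
import numpy as np

EPS = 0.01  # C = {u : u_i >= EPS, sum(u) = 1}, a closed convex subset of the open orthant

def kl_bregman(x, y):
    """D_f(x, y) for f(x) = sum_i x_i log x_i (Kullback-Leibler distance)."""
    return np.sum(x * np.log(x / y) - x + y)

def kl_project(x):
    """Bregman projection of x > 0 onto C, valid when the rescaled point lies in C."""
    z = x / np.sum(x)        # minimiser of D_f(., x) over the hyperplane sum(u) = 1
    assert np.all(z >= EPS), "rescaling is the projection only if it satisfies u_i >= EPS"
    return z

rng = np.random.default_rng(2)
x = rng.uniform(0.5, 2.0, size=6)      # point to be projected
z = kl_project(x)
u = rng.dirichlet(np.ones(6))          # positive entries summing to 1 (equality below holds even if u is not in C)

# (M3): D_f(u, z) + D_f(z, x) <= D_f(u, x); here both sides agree up to rounding
lhs = kl_bregman(u, z) + kl_bregman(z, x)
rhs = kl_bregman(u, x)
print(lhs, rhs)
```

For a general C (for example, when some coordinates of $x / \sum_i x_i$ fall below $\varepsilon$), the projection no longer has this closed form and must be computed by solving the minimization numerically.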

Definition 1.

Let $T: C \to C$ be a mapping. A point $x \in C$ is called a fixed point of T if $Tx = x$. The set of fixed points of T is denoted by $F(T)$. Furthermore, a point $p \in C$ is said to be an asymptotic fixed point of T if C contains a sequence $\{x_n\}$ that converges weakly to p and $\lim_{n \to \infty}\|x_n - Tx_n\| = 0$. The set of asymptotic fixed points of T is denoted by $\hat{F}(T)$.

Definition 2.

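Let C be a nonempty, closed and convex subset of E. A mapping $T: C \to C$ is called:

- i.

- Bregman firmly nonexpansive (BFNE) if: $\langle \nabla f(Tx) - \nabla f(Ty), Tx - Ty\rangle \le \langle \nabla f(x) - \nabla f(y), Tx - Ty\rangle$ for all $x, y \in C$;

- ii.

- Bregman strongly nonexpansive (BSNE) with respect to a nonempty $\hat{F}(T)$ if: $D_f(p, Tx) \le D_f(p, x)$ for all $p \in \hat{F}(T)$ and $x \in C$, and if, whenever $\{x_n\} \subset C$ is bounded, $p \in \hat{F}(T)$ and $\lim_{n \to \infty}\big(D_f(p, x_n) - D_f(p, Tx_n)\big) = 0$, it follows that $\lim_{n \to \infty} D_f(Tx_n, x_n) = 0$;

- iii.

- Bregman relatively nonexpansive (BRNE) if: $F(T) \ne \emptyset$, $D_f(p, Tx) \le D_f(p, x)$ for all $p \in F(T)$ and $x \in C$, and $\hat{F}(T) = F(T)$;

- iv.

- Quasi-Bregman nonexpansive (QBNE) if $F(T) \ne \emptyset$ and: $D_f(p, Tx) \le D_f(p, x)$ for all $p \in F(T)$ and $x \in C$.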

It was mentioned in [39] that in the instance where , the following inclusion holds:

In this case, a QBNE mapping is called a Bregman relatively nonexpansive mapping.

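Following [40,41], we define the function $V_f: E \times E^* \to [0, +\infty)$ associated with f by:

$$V_f(x, x^*) = f(x) - \langle x^*, x\rangle + f^*(x^*).$$

Then:

$$V_f(x, x^*) = D_f(x, \nabla f^*(x^*)) \quad \text{for all } x \in E \text{ and } x^* \in E^*.$$

Moreover, by the subdifferential inequality, we have:

$$V_f(x, x^*) + \langle y^*, \nabla f^*(x^*) - x\rangle \le V_f(x, x^* + y^*)$$

for all $x \in E$ and $x^*, y^* \in E^*$ (see [42]). In addition, if $f: E \to (-\infty, +\infty]$ is a proper lower semicontinuous function, then $f^*: E^* \to (-\infty, +\infty]$ is a proper, weak* lower semicontinuous and convex function. Hence, $V_f$ is convex in the second variable. Then, for all $z \in E$, we have:

$$D_f\Big(z, \nabla f^*\Big(\sum_{i=1}^{k} t_i \nabla f(x_i)\Big)\Big) \le \sum_{i=1}^{k} t_i D_f(z, x_i),$$

where $\{x_i\}_{i=1}^{k} \subset E$ and $\{t_i\}_{i=1}^{k} \subset (0, 1)$ with $\sum_{i=1}^{k} t_i = 1$.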
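The convexity inequality $D_f\big(z, \nabla f^*(\sum_i t_i \nabla f(x_i))\big) \le \sum_i t_i D_f(z, x_i)$ can be checked numerically for a concrete Legendre function. The sketch below assumes the Burg entropy $f(x) = -\sum_i \log x_i$ on the positive orthant (our choice, not one used in the paper), for which $\nabla f(x) = -1/x$, $\nabla f^*(y) = -1/y$ and $D_f$ is the Itakura–Saito distance; then $\nabla f^*(\sum_i t_i \nabla f(x_i))$ is the weighted harmonic mean of the points $x_i$.

```python
import numpy as np

# Burg entropy f(x) = -sum_i log x_i on the positive orthant (illustrative choice)
def grad_f(x):
    return -1.0 / x

def grad_f_star(y):          # gradient of f*(y) = -m - sum_i log(-y_i), y < 0
    return -1.0 / y

def bregman(x, y):           # D_f(x, y) = sum_i (x_i/y_i - log(x_i/y_i) - 1), Itakura-Saito
    r = x / y
    return np.sum(r - np.log(r) - 1.0)

rng = np.random.default_rng(3)
m, k = 5, 4
xs = rng.uniform(0.2, 5.0, size=(k, m))   # points x_1, ..., x_k (one per row)
t = rng.dirichlet(np.ones(k))             # weights t_i in (0, 1) summing to 1
z = rng.uniform(0.2, 5.0, size=m)

w = grad_f_star(t @ grad_f(xs))           # weighted harmonic mean of the x_i
lhs = bregman(z, w)
rhs = sum(ti * bregman(z, xi) for ti, xi in zip(t, xs))
print(lhs, rhs)                           # expect lhs <= rhs
```

The inequality holds for every $z$ because it is just Jensen's inequality for the convex map $x^* \mapsto V_f(z, x^*)$, combined with $V_f(z, \nabla f(x)) = D_f(z, x)$.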

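Let B and S be the closed unit ball and the unit sphere of a Banach space E, respectively, and let $rB = \{z \in E : \|z\| \le r\}$ for $r > 0$. Then, the function $f: E \to \mathbb{R}$ is said to be uniformly convex on bounded subsets (see [43]) if $\rho_r(t) > 0$ for all $r, t > 0$, where $\rho_r: [0, +\infty) \to [0, +\infty]$ is defined by:

$$\rho_r(t) = \inf_{x, y \in rB,\ \|x - y\| = t,\ \alpha \in (0, 1)} \frac{\alpha f(x) + (1 - \alpha) f(y) - f(\alpha x + (1 - \alpha) y)}{\alpha(1 - \alpha)}$$

for all $t \ge 0$. The function $\rho_r$ is called the gauge of uniform convexity of f. It is known that $\rho_r$ is a nondecreasing function. If f is uniformly convex, then the following lemma is known: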

Lemma 5

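([44]). Let E be a Banach space, $r > 0$ be a constant and $f: E \to \mathbb{R}$ be a uniformly convex function on bounded subsets of E. Then:

$$f\Big(\sum_{k=0}^{n} \alpha_k x_k\Big) \le \sum_{k=0}^{n} \alpha_k f(x_k) - \alpha_i \alpha_j \rho_r(\|x_i - x_j\|)$$

for all $i, j \in \{0, 1, \dots, n\}$, $x_k \in rB$, and $\alpha_k \in (0, 1)$, $k = 0, 1, \dots, n$, with $\sum_{k=0}^{n} \alpha_k = 1$, where $\rho_r$ is the gauge of uniform convexity of f.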
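For a concrete instance (our own worked example), let E be a Hilbert space and $f = \frac12\|\cdot\|^2$, and recall that the gauge $\rho_r(t)$ is the infimum, over $x, y$ in the ball of radius r with $\|x - y\| = t$ and $\alpha \in (0, 1)$, of the convexity gap below divided by $\alpha(1 - \alpha)$. Expanding the square gives:

```latex
\begin{aligned}
\alpha f(x) + (1-\alpha) f(y) - f\bigl(\alpha x + (1-\alpha) y\bigr)
  &= \tfrac{1}{2}\Bigl(\alpha\|x\|^2 + (1-\alpha)\|y\|^2 - \|\alpha x + (1-\alpha) y\|^2\Bigr) \\
  &= \tfrac{1}{2}\,\alpha(1-\alpha)\bigl(\|x\|^2 - 2\langle x, y\rangle + \|y\|^2\bigr) \\
  &= \tfrac{1}{2}\,\alpha(1-\alpha)\,t^2 .
\end{aligned}
```

Dividing by $\alpha(1 - \alpha)$, every admissible triple gives the same value, so $\rho_r(t) = t^2/2$ for $0 < t \le 2r$, and the inequality of Lemma 5 with two points holds with equality. In the same way, $D_f(y, x) = \frac12\|y - x\|^2$ gives $\nu_f(x, t) = t^2/2 > 0$, so this f is also totally convex.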

Lemma 6

([45]). Suppose that f is a Legendre function. The function f is totally convex on bounded subsets if and only if f is uniformly convex on bounded subsets.

Lemma 7

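([46]). Let C be a nonempty convex subset of E and $f: C \to \mathbb{R}$ be a convex and subdifferentiable function on C. Then, f attains its minimum at $x \in C$ if and only if $0 \in \partial f(x) + N_C(x)$, where $N_C(x)$ is the normal cone of C at x, that is:

$$N_C(x) = \{x^* \in E^* : \langle x^*, y - x\rangle \le 0 \ \text{for all } y \in C\}.$$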

Throughout this paper, we assume that any bifunction $g: C \times C \to \mathbb{R}$ under consideration satisfies the following assumptions:

- A1.

- g is pseudomonotone, i.e., for all $x, y \in C$, $g(x, y) \ge 0 \implies g(y, x) \le 0$;

- A2.

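- g satisfies a Bregman–Lipschitz-type condition, i.e., there exist two positive constants $c_1, c_2$ such that: $g(x, y) + g(y, z) \ge g(x, z) - c_1 D_f(y, x) - c_2 D_f(z, y)$ for all $x, y, z \in C$;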

- A3.

- $g(x, x) = 0$ for all $x \in C$;

- A4.

- $g(\cdot, y)$ is continuous on C for every $y \in C$;

- A5.

- $g(x, \cdot)$ is convex, lower semicontinuous and subdifferentiable on C for every fixed $x \in C$.
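As a sanity check of these assumptions, consider the bifunction $g(x, y) = \langle Ax, y - x\rangle$ on $\mathbb{R}^m$ with A symmetric positive semidefinite (so $x \mapsto Ax$ is monotone and L-Lipschitz with $L = \|A\|_2$), together with $f = \frac12\|\cdot\|^2$, so that $D_f(x, y) = \frac12\|x - y\|^2$. Then $g(x, y) + g(y, z) - g(x, z) = \langle Ay - Ax, z - y\rangle \ge -L\|y - x\|\|z - y\|$, and Young's inequality gives the Bregman–Lipschitz-type bound $g(x, y) + g(y, z) \ge g(x, z) - c_1 D_f(y, x) - c_2 D_f(z, y)$ with $c_1 = c_2 = L$. The sketch below (our own example, with randomly generated data) tests (A1)–(A3) at random points; (A4) and (A5) also hold because g is continuous and affine in its second argument.

```python
import numpy as np

# Illustrative bifunction g(x, y) = <Ax, y - x> (our choice) with f = 0.5 * ||.||^2
rng = np.random.default_rng(4)
m = 6
B = rng.normal(size=(m, m))
A = B @ B.T                       # symmetric positive semidefinite => x -> Ax is monotone
L = np.linalg.norm(A, 2)          # spectral norm = Lipschitz constant of x -> Ax
c1 = c2 = L                       # constants for (A2) when D_f(x, y) = 0.5 * ||x - y||^2

def g(x, y):
    return (A @ x) @ (y - x)

def D(x, y):
    return 0.5 * np.sum((x - y) ** 2)

violations = 0
for _ in range(1000):
    x, y, z = rng.normal(size=(3, m))
    if abs(g(x, x)) > 1e-12:                        # (A3) g(x, x) = 0
        violations += 1
    if g(x, y) >= 0 and g(y, x) > 1e-12:            # (A1) pseudomonotonicity
        violations += 1
    if g(x, y) + g(y, z) < g(x, z) - c1 * D(y, x) - c2 * D(z, y) - 1e-9:  # (A2)
        violations += 1
print(violations)
```

Random testing cannot prove the conditions, but it is a quick way to catch a wrong constant or sign before running an algorithm on a new bifunction.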

3. Results

In this section, we introduce a new parallel hybrid subgradient extragradient algorithm for finding a common element of the set of solutions of equilibrium problems for pseudomonotone bifunctions and the common fixed point of Bregman relatively nonexpansive mappings.

Let E be a real reflexive Banach space, C be a nonempty, closed and convex subset of E and be a uniformly Fréchet differentiable function, which is coercive, Legendre, totally convex and bounded on bounded subsets of E such that For let be a finite family of bifunctions satisfying Assumptions (A1)–(A5). Furthermore, for let be a finite family of Bregman relatively nonexpansive mappings. Assume that the solution set:

Furthermore, the control sequence satisfies the condition:

Now, we present our algorithm (Algorithm 1) as follows.

Algorithm 1. Parallel hybrid Bregman subgradient extragradient method (PHBSEM).

Before proving the convergence of Algorithm 1, we discuss some of its contributions compared with other methods in the literature.

- (i)

- Firstly, Algorithm 1 solves two strongly convex optimization problems in parallel for each bifunction, and the second of these problems is solved over a half-space rather than over the entire feasible set C used in Eskandani et al. [19], which is computationally simpler.

- (ii)

- Moreover, the stepsize in Eskandani et al. [19] requires prior estimates of the Lipschitz-like constants of the bifunctions, which are cumbersome to compute. In contrast, in Algorithm 1, the stepsize is chosen self-adaptively and does not require prior estimates of the Lipschitz-like constants of the bifunctions.

- (iii)

- Furthermore, when E is a real Hilbert space, Algorithm 1 improves the algorithms of [18,47,48,49,50].

- (iv)

- In addition, when E is a real Hilbert space and , , Algorithm 1 improves and complements the algorithms of [17,20,22,24,25,51].
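The practical point of item (i) is that projecting onto a half-space is cheap. In the Euclidean case $f = \frac12\|\cdot\|^2$, the Bregman projection is the metric projection, which onto a half-space $H = \{w : \langle v, w\rangle \le \beta\}$ (with $v \ne 0$) has the closed form sketched below, whereas projecting onto a general C requires solving an optimization problem. The particular half-space constructed in Algorithm 1 is not reproduced here; `v` and `beta` are generic placeholders.

```python
import numpy as np

def project_halfspace(x, v, beta):
    """Euclidean projection of x onto H = {w : <v, w> <= beta}, assuming v != 0.

    v and beta are generic placeholders, not the half-space built in Algorithm 1.
    """
    excess = v @ x - beta
    if excess <= 0.0:
        return x.copy()                    # x already lies in H
    return x - (excess / (v @ v)) * v      # step back along the normal vector v

rng = np.random.default_rng(5)
v = rng.normal(size=4)
beta = 0.3
x = rng.normal(size=4) + 3.0 * v          # a point that typically lies outside H
p = project_halfspace(x, v, beta)
print(v @ p - beta)                        # about 0 if x was outside H, <= 0 otherwise
```

The cost is one inner product and one vector update, independent of how complicated C is.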

To prove the strong convergence of Algorithm 1 (PHBSEM), we need the following results.

Lemma 8.

The sequence is bounded by:

where are the Lipschitz-like constants of the bifunctions for

Proof.

From the Lipschitz-like condition (17), we have:

It follows that:

for Hence, for we have:

Thus, for we have:

This implies that:

Therefore, we have that is bounded by:

□
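Lemma 8 is what removes the need for prior knowledge of the Lipschitz-like constants: a self-adaptive stepsize can only decrease, but it stays bounded away from zero. The exact update of Algorithm 1 is not reproduced here, so the sketch below uses, as an assumption, a rule of the kind common in the subgradient extragradient literature:

$$\lambda_{n+1} = \begin{cases}\min\Big\{\lambda_n, \dfrac{\mu\,(D_f(y_n, x_n) + D_f(z_n, y_n))}{g(x_n, z_n) - g(x_n, y_n) - g(y_n, z_n)}\Big\} & \text{if the denominator is positive,}\\ \lambda_n & \text{otherwise.}\end{cases}$$

If g satisfies $g(x, y) + g(y, z) \ge g(x, z) - c_1 D_f(y, x) - c_2 D_f(z, y)$, the denominator is at most $c_1 D_f(y_n, x_n) + c_2 D_f(z_n, y_n)$, so $\lambda_n \ge \min\{\lambda_0, \mu/\max\{c_1, c_2\}\}$ for all n. The function name `next_stepsize`, the values of `lam0` and `mu`, and the random triples standing in for iterates are illustrative only.

```python
import numpy as np

def next_stepsize(lam, mu, g, D, x, y, z):
    # Hypothetical self-adaptive rule (an assumption; not necessarily the update of Algorithm 1):
    # shrink lam only when the Bregman-Lipschitz ratio forces it.
    denom = g(x, z) - g(x, y) - g(y, z)
    if denom > 0.0:
        return min(lam, mu * (D(y, x) + D(z, y)) / denom)
    return lam

rng = np.random.default_rng(6)
m = 5
B = rng.normal(size=(m, m))
A = B @ B.T
L = np.linalg.norm(A, 2)                  # the Lipschitz-type bound holds with c1 = c2 = L here
g = lambda x, y: (A @ x) @ (y - x)        # illustrative bifunction
D = lambda x, y: 0.5 * np.sum((x - y) ** 2)

lam0, mu = 1.0, 0.5
lam = lam0
history = [lam]
for _ in range(200):
    x, y, z = rng.normal(size=(3, m))     # random triples standing in for (x_n, y_n, z_n)
    lam = next_stepsize(lam, mu, g, D, x, y, z)
    history.append(lam)

lower_bound = min(lam0, mu / L)           # min{lam0, mu / max(c1, c2)}
print(min(history), lower_bound)
```

The rule never evaluates $c_1$ or $c_2$; they only enter the analysis, which is exactly the role Lemma 8 plays in the convergence proof.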

Lemma 9.

Suppose and where are as defined in Step 1 and Step 2 of the algorithm PHBSEM. Then:

Proof.

Since , we have:

Furthermore, ; thus:

Furthermore, since it follows from (7) that:

This implies that for there exists and such that:

Since then Hence:

Furthermore, , then:

Thus,

By (29) and (30), we have:

Let . From (31), we get:

Since each is pseudomonotone, . Therefore:

Adding (28) and (32), we have:

By the three point identity, i.e., (11), we get:

Using (23), we have:

Thus, we obtain (25). This completes the proof. □

Lemma 10.

For any , the following inequality holds:

where and is the gauge of uniform convexity of the conjugate function

Proof.

From (21) and Lemma 5, we have:

Since then the desired result follows from Lemma 9. □

Lemma 11.

The sequence generated by the algorithm PHBSEM is well defined and .

Proof.

Let , then from Lemma 9 and the definition of and , we have:

Therefore, , and this implies that . Hence, for all

By induction, for , we have and thus, . Suppose is given and , for some . There exists such that . By (13), we have:

Since , we get . Therefore, . Thus, the sequence is well defined. □

Lemma 12.

Let be the sequences generated by the algorithm PHBSEM. Then, the following relations hold:

Proof.

Since for all and from the definition of then:

Hence, is bounded, and from Lemma 3, we have that is bounded. Moreover, from the definition of and and since we have:

Then,

Thus,

Hence, is increasing, and since it is also bounded, exists. Thus, it follows that:

Then, from (12), we get:

Furthermore, since , then Thus, taking the limits of both sides as and by (36), we get:

This implies that:

It follows from Lemma 2 that:

Since f is uniformly Fréchet differentiable and bounded on bounded subsets of E, $\nabla f$ is uniformly continuous on bounded subsets of E by Lemma 1; hence, we have:

Moreover:

Then, from Lemma 10, we have:

Moreover, by Lemma 9, we have:

Thus,

Therefore, taking the limits as and by (40), we have:

Then:

Hence:

In addition, from (21), we have:

Therefore, from (42), we get:

This implies that:

This completes the proof. □

Now, we prove that the sequence generated by Algorithm 1 converges strongly to an element in

Theorem 1.

Let E be a real reflexive Banach space, C be a nonempty, closed and convex subset of E and function be a uniformly Fréchet differentiable function, which is coercive, Legendre, totally convex and bounded on bounded subsets of E such that For let be a finite family of bifunctions satisfying Assumptions (A1)–(A5). Furthermore, for let be a finite family of Bregman relatively nonexpansive mappings. Suppose that Let be a sequence in such that Then, the sequences generated by Algorithm 1 converge strongly to a solution where

Proof.

First, we show that every sequential weak limit point of belongs to From the previous results, we see that is well defined and for all Furthermore, from Lemma 12, is bounded. Then, there exists a subsequence of converging weakly to p. By (43) and (44), we obtain that for Hence, Since:

By Lemma 7, we obtain:

This implies that:

where and for This implies that:

Note that Hence,

Furthermore, since then:

Then:

Therefore:

Hence, for This means that Consequently,

Now, we show that converges strongly to Since then we have:

Furthermore, thus:

Passing the limit as into (46), we get:

From (13), This implies that converges strongly to Lemma 12 ensures that also converges strongly to This completes the proof. □

The following results can be obtained as consequences of our main result.

Corollary 1.

Let E be a real reflexive Banach space, C be a nonempty, closed and convex subset of E and function be a uniformly Fréchet differentiable function, which is coercive, Legendre, totally convex and bounded on bounded subsets of E such that For let be a finite family of bifunctions satisfying Assumptions (A1)–(A5). Furthermore, for let be a finite family of Bregman strongly nonexpansive (BSNE) mappings. Suppose that Let be a sequence in such that Then, the sequences generated by Algorithm 1 converge strongly to a solution where

Furthermore, by setting we obtain the following result, which extends the results of [24,25,51] to a real reflexive Banach space.

Corollary 2.

Let E be a real reflexive Banach space, C be a nonempty, closed and convex subset of E and function be a uniformly Fréchet differentiable function, which is coercive, Legendre, totally convex and bounded on bounded subsets of E such that Let be a bifunction satisfying Assumptions (A1)–(A5) and be a Bregman relatively nonexpansive mapping. Suppose that Let be a sequence in such that Then, the sequences generated by the following Algorithm 2 converge strongly to a solution where

Algorithm 2. Hybrid Bregman subgradient extragradient algorithm (HBSEA).

Furthermore, when E is a real Hilbert space, Algorithm 1 reduces to the following algorithm, which improves the algorithms in [18,50] and the references therein.

Algorithm 3. Parallel Subgradient Extragradient Algorithm (PSEA).

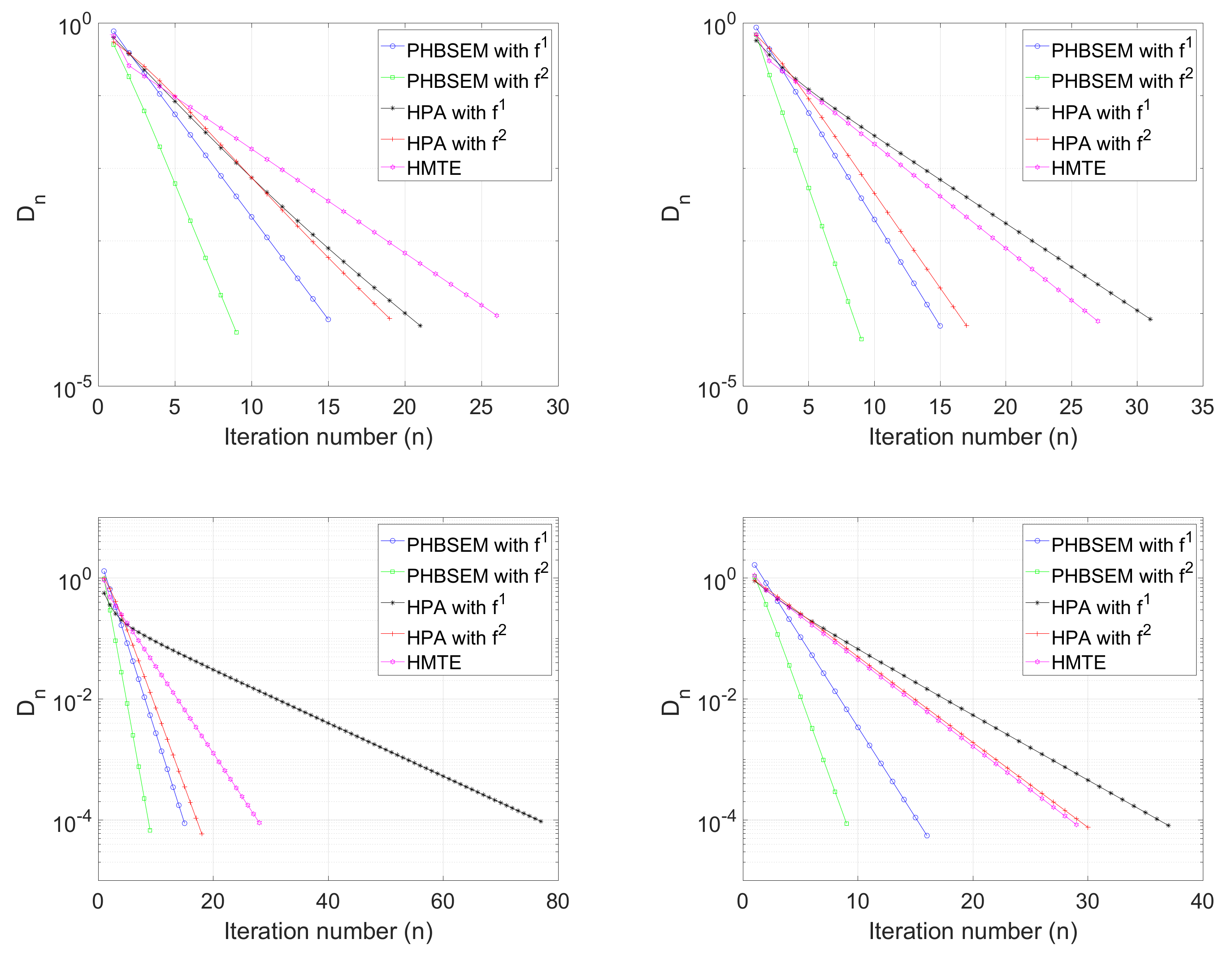

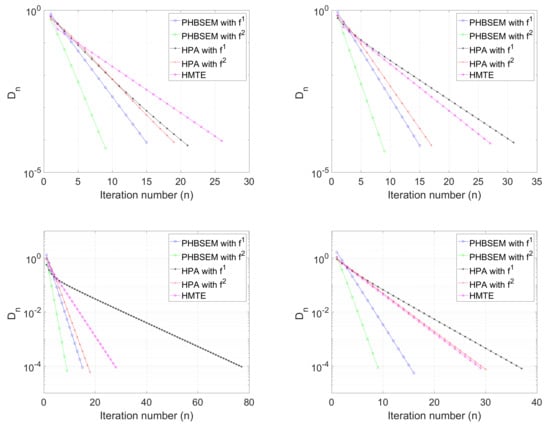

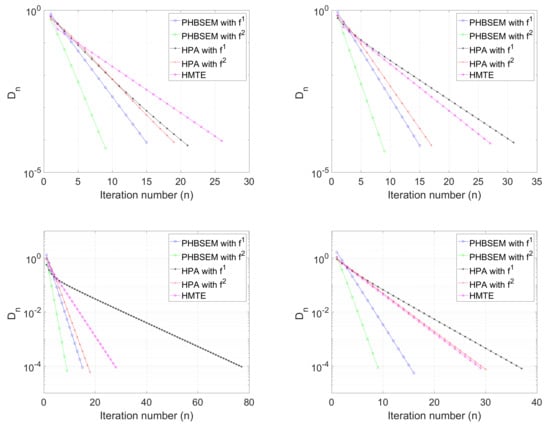

4. Numerical Examples

In this section, we give some numerical examples to show the performance and efficiency of the proposed algorithm. We compare the efficiency of Algorithm 1 (PHBSEM) with that of Algorithm (23) of [19] (HPA), using different convex functions, and with that of Algorithm 1 of [18] (PMEM). All computations were performed in MATLAB R2019b on a PC with an AMD Ryzen 5 3500U processor (2.10 GHz) and 8.00 GB RAM.

We employ the following convex functions:

- (1)

- $f(x) = \frac{1}{2}\|x\|^2$; in this case, $\nabla f(x) = x$ and $D_f(x, y) = \frac{1}{2}\|x - y\|^2$ (i.e., the squared Euclidean norm);

- (2)

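- $f(x) = \sum_{i=1}^{m} x_i \log x_i$ with $\operatorname{dom} f = \mathbb{R}^m_{++}$; in this case, $\nabla f(x) = (1 + \log x_1, \dots, 1 + \log x_m)^T$ and $D_f(x, y) = \sum_{i=1}^{m}\big(x_i \log\frac{x_i}{y_i} + y_i - x_i\big)$ for all $x, y \in \mathbb{R}^m_{++}$ (i.e., the Kullback–Leibler distance).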
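For readers reproducing the experiments, the two generators can be coded as below. This is a Python sketch (the experiments themselves were run in MATLAB), and it assumes the entropy generator $f(x) = \sum_i x_i \log x_i$ on $\mathbb{R}^m_{++}$; any generator differing from it by an affine term yields the same Kullback–Leibler distance. The last lines show, as an illustration rather than a step of PHBSEM, how a Bregman step $\nabla f^*(\nabla f(x) - \lambda w)$ behaves: it is an ordinary gradient step for the squared norm and a multiplicative step that keeps iterates positive for the entropy.

```python
import numpy as np

# (1) f(x) = 0.5 * ||x||^2: grad f and its inverse are the identity
def f_sq(x):
    return 0.5 * x @ x

def grad_sq(x):
    return x

def grad_sq_inv(u):
    return u

def D_sq(x, y):
    return 0.5 * np.sum((x - y) ** 2)

# (2) f(x) = sum_i x_i log x_i on the positive orthant (assumed form of the generator)
def f_kl(x):
    return np.sum(x * np.log(x))

def grad_kl(x):
    return 1.0 + np.log(x)

def grad_kl_inv(u):          # (grad f)^{-1} = grad f*, maps R^m back to the positive orthant
    return np.exp(u - 1.0)

def D_kl(x, y):              # Kullback-Leibler distance
    return np.sum(x * np.log(x / y) + y - x)

def bregman(f, grad, x, y):  # generic D_f(x, y) = f(x) - f(y) - <grad f(y), x - y>
    return f(x) - f(y) - grad(y) @ (x - y)

rng = np.random.default_rng(7)
x, y = rng.uniform(0.5, 2.0, size=(2, 4))
w = rng.normal(size=4)       # a direction, e.g. a subgradient of g(x, .)
lam = 0.1                    # illustrative stepsize

# Bregman ("mirror") step grad f*(grad f(x) - lam * w) under each generator
x_sq = grad_sq_inv(grad_sq(x) - lam * w)   # ordinary gradient step x - lam * w
x_kl = grad_kl_inv(grad_kl(x) - lam * w)   # multiplicative step x * exp(-lam * w), stays positive
print(D_sq(x, y), D_kl(x, y))
```

The positivity of `x_kl` is one practical reason to prefer the entropy generator when the feasible set lies in the positive orthant.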

Example 1.

Let with induced norm and inner product for all and The feasible set C is given by:

Consider the following problem:

where is defined by:

where is randomly generated ; and is defined by:

It is easy to see that Conditions (A1)–(A5) are satisfied and is a Bregman relatively nonexpansive mapping for Moreover, . In what follows, for each we choose The initial value is generated randomly, and using as the stopping criterion, we compare the performance of the algorithms PHBSEM and HPA, using the convex functions defined above, as well as the algorithm PMEM, for the following values of and

- Case I:

- Case II:

- Case III:

- Case IV:

Table 1.

Computational results for Example 1 (PHBSEM: parallel hybrid Bregman subgradient extragradient method).

Figure 1.

Example 1. Top left: Case I; top right: Case II; bottom left: Case III; bottom right: Case IV.

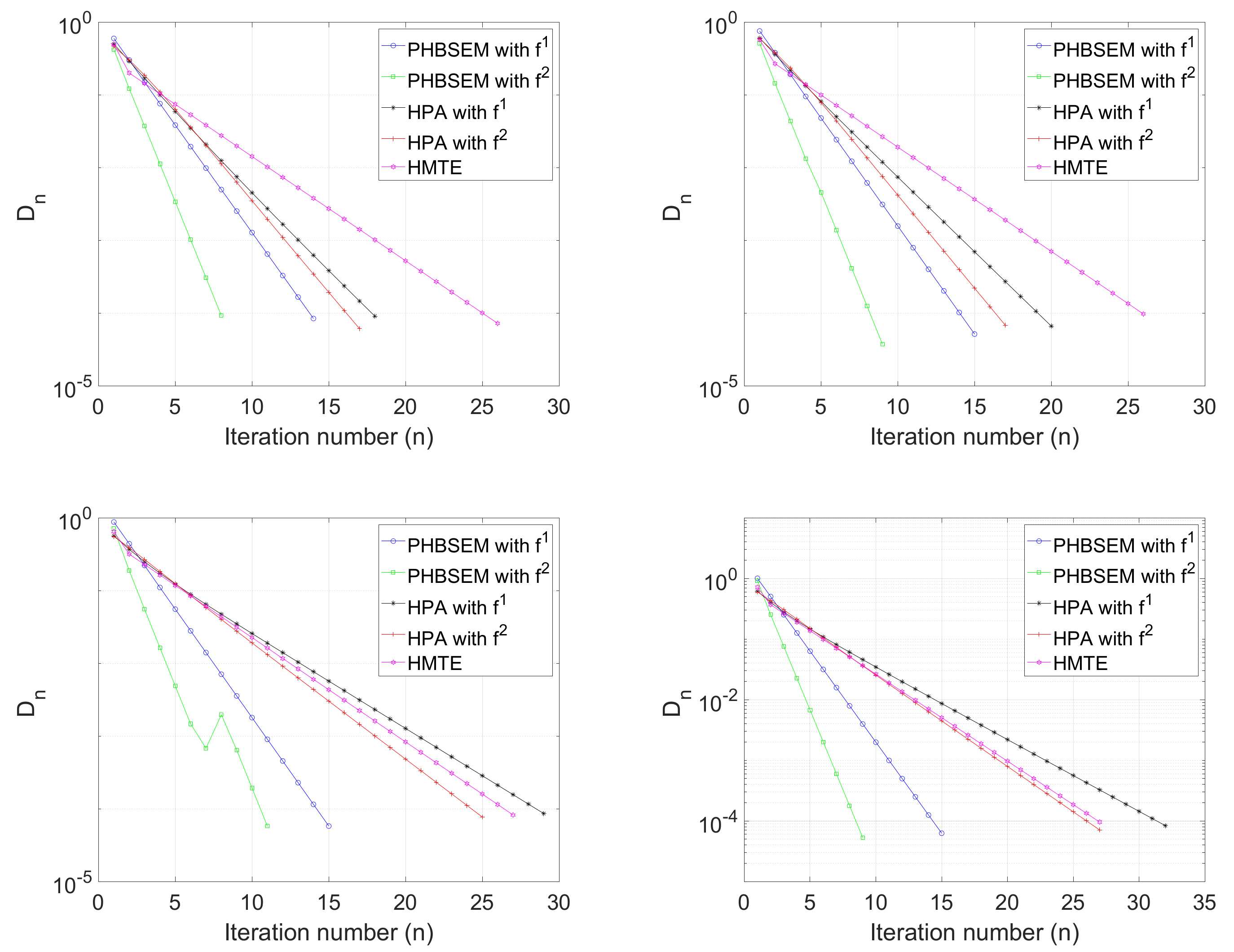

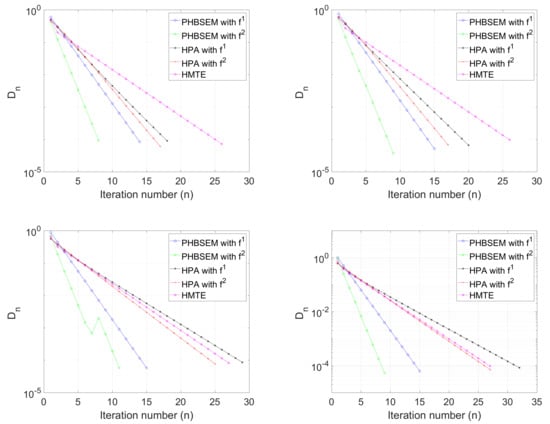

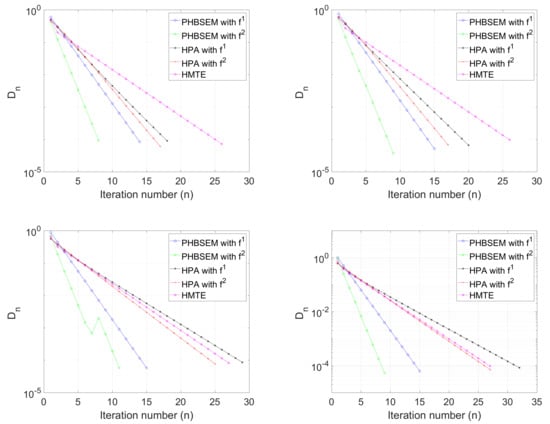

Next, we consider the Nash–Cournot oligopolistic market equilibrium model.

Example 2.

Let For let be defined by:

where are vectors in for are matrices of order such that are symmetric positive semidefinite and are symmetric negative semidefinite. Clearly, are pseudomonotone and satisfy a Lipschitz-like condition with We define the feasible set C by:

Now, for let be defined by:

where are the closed balls in centred at with radius i.e., It is easy to see that are nonexpansive and, thus, Bregman relatively nonexpansive. Moreover, . We chose the following parameters: and compare the performance of the algorithm PHBSEM with that of the algorithms HPA and PMEM for the following values of and N:

- Case I:

- Case II:

- Case III:

- Case IV:

We use as the stopping criterion in each case. The computational results are shown in Table 2 and Figure 2.

Table 2.

Computational results for Example 2.

Figure 2.

Example 2. Top left: Case I; top right: Case II; bottom left: Case III; bottom right: Case IV.

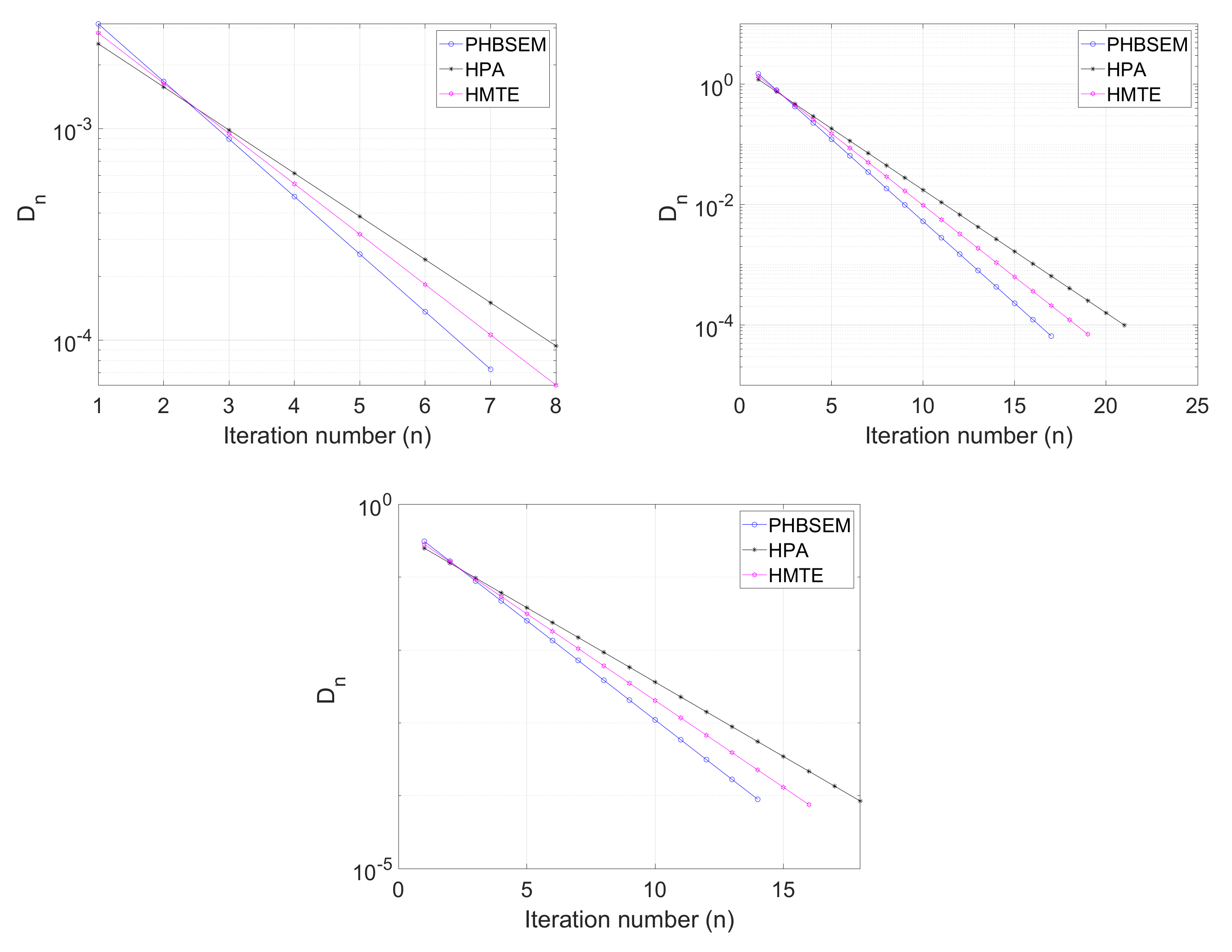

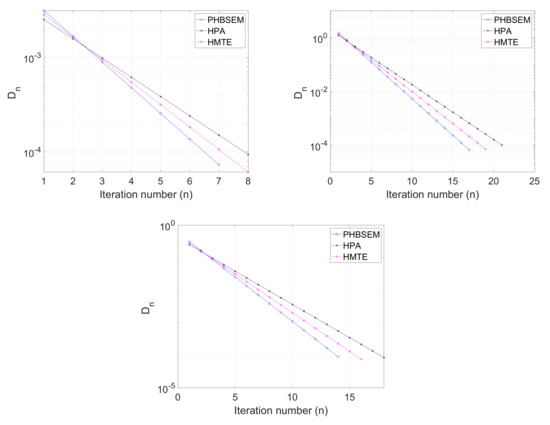

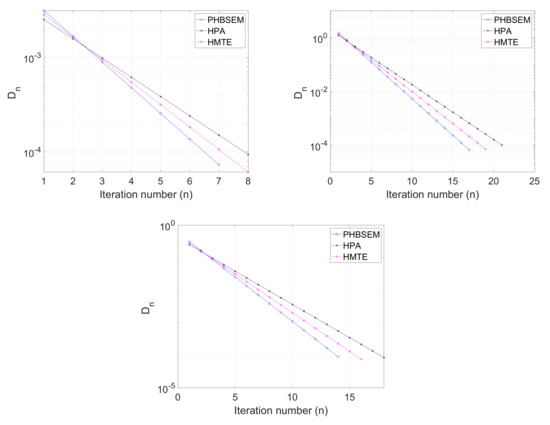

Finally, we consider the following example in an infinite-dimensional space. In this case, we chose

Example 3.

Let with the inner product and the induced norm . The feasible set is defined as:

Let be defined as with the operator given as , for It is easy to see that each is monotone, thus pseudomonotone on C for For we define the mapping by where:

Then, is a Bregman relatively nonexpansive mapping for and We take and study the performance of the algorithms PHBSEM, HPA and PMEM for the following initial values:

- Case I:

- Case II:

- Case III:

We use as the stopping criterion and plot the graphs of against the number of iterations in each case. The computational results are shown in Table 3 and Figure 3.

Table 3.

Computational results for Example 3.

Figure 3.

Example 3. Top left: Case I; top right: Case II; bottom: Case III.

5. Conclusions

In this paper, we present a new parallel hybrid Bregman subgradient extragradient method for finding a common solution of a finite family of pseudomonotone equilibrium problems and the common fixed point problem for a finite family of Bregman relatively nonexpansive mappings in real reflexive Banach spaces. The algorithm is designed so that its convergence does not require prior estimates of the Lipschitz-like constants of the pseudomonotone bifunctions. Furthermore, a strong convergence result is proven under mild conditions. Some numerical examples are presented to show the efficiency and accuracy of the proposed method. This result improves and extends the results of [17,18,19,20,22,25,47,49,50,51] and many other results in the literature.

Author Contributions

Conceptualization, L.O.J.; methodology, A.T.B. and L.O.J.; validation, M.A. and L.O.J.; formal analysis, A.T.B. and L.O.J.; writing, original draft preparation, A.T.B.; writing, review and editing, L.O.J. and M.A.; visualization, L.O.J.; supervision, L.O.J. and M.A.; project administration, L.O.J. and M.A.; funding acquisition, M.A. All authors read and agreed to the published version of the manuscript.

Funding

This research was funded by Sefako Makgatho Health Sciences University Postdoctoral research fund, and the APC was funded by the Department of Mathematics and Applied Mathematics, Sefako Makgatho Health Sciences University, Pretoria, South Africa.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors acknowledge with thanks the Department of Mathematics and Applied Mathematics at the Sefako Makgatho Health Sciences University for making their facilities available for the research.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; nor in the decision to publish the results.

References

- Blum, E.; Oettli, W. From optimization and variational inequalities to equilibrium problems. Math. Stud. 1994, 63, 123–146. [Google Scholar]

- Giannessi, F.; Maugeri, A.; Pardalos, P.M. Equilibrium Problems: Nonsmooth Optimization and Variational Inequality Models; Kluwer: Dordrecht, The Netherlands, 2001. [Google Scholar]

- Iusem, A.N.; Sosa, W. Iterative algorithms for equilibrium problems. Optimization 2003, 52, 301–316. [Google Scholar] [CrossRef]

- Mastroeni, G. Gap functions for equilibrium problems. J. Glob. Optim. 2003, 27, 411–426. [Google Scholar] [CrossRef]

- Muu, L.D. Stability property of a class of variational inequalities. Optimization 1984, 15, 347–353. [Google Scholar] [CrossRef]

- Eskandani, G.Z.; Raeisi, M. On the zero point problem of monotone operators in Hadamard spaces. Numer. Algorithms 2018, 80, 1155–1179. [Google Scholar] [CrossRef]

- Azarmi, S.; Eskandani, G.Z.; Raeisis, M. Products of resolvents and multivalued hybrid mappings in CAT(0) spaces. Acta Math. Sci. 2018, 38, 791–804. [Google Scholar]

- Goebel, K.; Reich, S. Uniform Convexity, Hyperbolic Geometry, and Nonexpansive Mappings; Marcel Dekker: New York, NY, USA, 1984. [Google Scholar]

- Martín-Márquez, V.; Reich, S.; Sabach, S. Bregman strongly nonexpansive operators in re-flexive Banach spaces. J. Math. Anal. Appl. 2013, 400, 597–614. [Google Scholar] [CrossRef]

- Reich, S. Weak convergence theorems for nonexpansive mappings in Banach spaces. J. Math. Anal. Appl. 1979, 67, 274–276. [Google Scholar] [CrossRef]

- Shahzad, N.; Zegeye, H. Convergence theorem for common fixed points of a finite family of multi-valued Bregman relatively nonexpansive mappings. Fixed Point Theory Appl. 2014, 152. [Google Scholar] [CrossRef]

- Iiduka, H. A new iterative algorithm for the variational inequality problem over the fixed point set of a firmly nonexpansive mapping. Optimization 2010, 59, 873–885. [Google Scholar] [CrossRef]

- Iiduka, H.; Yamada, I. A use of conjugate gradient direction for the convex optimiza-tion problem over the fixed point set of a nonexpansive mapping. SIAM J. Optim. 2009, 19, 1881–1893. [Google Scholar] [CrossRef]

- Iiduka, H.; Yamada, I. A subgradient-type method for the equilibrium problem over the fixed point set and its applications. Optimization 2009, 58, 251–261. [Google Scholar] [CrossRef]

- Mainge, P.E. A hybrid extragradient viscosity method for monotone operators and fixed point problems. SIAM J. Control Optim. 2008, 49, 1499–1515. [Google Scholar] [CrossRef]

- Tada, A.; Takahashi, W. Strong convergence theorem for an equilibrium problem and a nonexpansive mapping. In Nonlinear Analysis and Convex Analysis; Takahashi, W., Tanaka, T., Eds.; Yokohama Publishers: Yokohama, Japan, 2006. [Google Scholar]

- Anh, P.N. A hybrid extragradient method for pseudomonotone equilibrium problems and fixed point problems. Bull. Malays. Math. Sci. Soc. 2013, 36, 107–116. [Google Scholar]

- Hieu, D.V.; Muu, L.D.; Anh, P.K. Parallel hybrid extragradient methods for pseudmono-tone equilibrium problems and nonexpansive mappings. Numer. Algorithms 2016, 73, 197–217. [Google Scholar] [CrossRef]

- Eskandani, G.Z.; Raeisi, M.; Rassias, T.M. A hybrid extragradient method for solving pseudomontone equilibrium problem using Bregman distance. J. Fixed Point Theory Appl. 2018, 20, 132. [Google Scholar] [CrossRef]

- Anh, P.N. A hybrid extragradient method extended to fixed point problems and equilibrium problems. Optimization 2013, 62, 271–283. [Google Scholar] [CrossRef]

- Eskandani, G.; Raeisi, M. A hybrid extragradient method for a general split equality problem involving resolvents and pseudomonotone bifunctions in Banach spaces. Colcolo 2019, 56, 43. [Google Scholar]

- Hieu, D.V.; Quy, P.K. Accelerated hybrid methods for solving pseudomonotone equilibrium problems. Adv. Comput. Math. 2020, 46, 1–24. [Google Scholar]

- Hussain, A.; Khanpanuk, T.; Pakkaranang, N.; Rehman, H.U.; Wairojjana, N. A self-adaptive Popov’s extragrdient method for solving equilibrium problems with applications. J. Math. Anal. 2020, 12, 523. [Google Scholar]

- Wairojjana, N.; ur Rehman, H.; Pakkaranang, N.; Khanpanuk, T. Modified Popov’s subgradient extra-gradient algorithm with inertial technique for equilibrium problems and its applications. Int. J. Appl. Math. 2020, 33, 879–901. [Google Scholar] [CrossRef]

- Jolaoso, L.O.; Aphane, M. A self-adaptive inertial subgradient extragradient method for pseudomonotone equilibrium and common fixed point problems. Fixed Point Theory Appl. 2020, 1, 1–22. [Google Scholar] [CrossRef]

- Wairojjana, N.; De la Sen, M.; Pakkaranang, N. A general inertial projection-type algorithm for solving equilibrium problem in Hilbert spaces with applications in fixed-point problems. Axioms 2020, 9, 101. [Google Scholar] [CrossRef]

- Abbas, M.; Rizvi, Y. Strong convergence of a system of generalized mixed equilibrium problem, split variational inclusion problem and fixed point problem in Banach spaces. Symmetry 2019, 11, 722. [Google Scholar] [CrossRef]

- Kazmi, R.R.; Rizvi, S.H. An iterative method for split variational inclusion problem and fixed point problem for a nonexpansive mapping. Optim. Lett. 2014, 8, 1113–1124. [Google Scholar] [CrossRef]

- Bauschke, H.; Borwein, J.M. On projection algorithms for solving convex feasibility problems. SIAM Rev. 1996, 38, 367–426.

- Lemaréchal, C.; Hiriart-Urruty, J.B. Convex Analysis and Minimization Algorithms II; Springer: Berlin, Germany, 1993; Volume 306.

- Reich, S.; Sabach, S. A strong convergence theorem for a proximal-type algorithm in reflexive Banach spaces. J. Nonlinear Convex Anal. 2009, 10, 471–485.

- Reich, S.; Sabach, S. Two strong convergence theorems for a proximal method in reflexive Banach spaces. Numer. Funct. Anal. Optim. 2010, 31, 22–44.

- Bonnans, J.F.; Shapiro, A. Perturbation Analysis of Optimization Problems; Springer: New York, NY, USA, 2000.

- Bauschke, H.; Borwein, J.M.; Combettes, P.L. Essential smoothness, essential strict convexity, and Legendre functions in Banach spaces. Commun. Contemp. Math. 2001, 3, 615–647.

- Bregman, L.M. A relaxation method for finding the common point of convex sets and its application to the solution of problems in convex programming. USSR Comput. Math. Math. Phys. 1967, 7, 200–217.

- Butnariu, D.; Iusem, A.N. Totally Convex Functions for Fixed Points Computation and Infinite Dimensional Optimization; Kluwer Academic: Dordrecht, The Netherlands, 2000; Volume 40.

- Bauschke, H.H.; Borwein, J.M.; Combettes, P.L. Bregman monotone optimization algorithms. SIAM J. Control Optim. 2003, 42, 596–636.

- Butnariu, D.; Resmerita, E. Bregman distances, totally convex functions and a method for solving operator equations in Banach spaces. Abstr. Appl. Anal. 2006, 2006, 084919.

- Kassay, G.; Reich, S.; Sabach, S. Iterative methods for solving systems of variational inequalities in reflexive Banach spaces. SIAM J. Optim. 2011, 21, 1319–1344.

- Alber, Y.I. Metric and generalized projection operators in Banach spaces: Properties and applications. In Theory and Applications of Nonlinear Operators of Accretive and Monotone Type; Kartsatos, A.G., Ed.; Lecture Notes in Pure and Applied Mathematics; Dekker: New York, NY, USA, 1996; Volume 178, pp. 15–50.

- Censor, Y.; Lent, A. An iterative row-action method for interval convex programming. J. Optim. Theory Appl. 1981, 34, 321–353.

- Kohsaka, F.; Takahashi, W. Proximal point algorithms with Bregman functions in Banach spaces. J. Nonlinear Convex Anal. 2005, 6, 505–523.

- Zalinescu, C. Convex Analysis in General Vector Spaces; World Scientific Publishing: Singapore, 2002.

- Naraghirad, E.; Yao, J.C. Bregman weak relatively nonexpansive mappings in Banach spaces. Fixed Point Theory Appl. 2013, 2013, 141.

- Butnariu, D.; Iusem, A.N.; Zalinescu, C. On uniform convexity, total convexity and convergence of the proximal point and outer Bregman projection algorithms in Banach spaces. J. Convex Anal. 2003, 10, 35–61.

- Tiel, J.V. Convex Analysis: An Introductory Text; Wiley: New York, NY, USA, 1984.

- Hieu, D.V.; Thai, B.H.; Kumam, P. Parallel modified methods for pseudomonotone equilibrium problems and fixed point problems for quasi-nonexpansive mappings. Adv. Oper. Theory 2020, 5, 1684–1717.

- Bantaojai, T.; Pakkaranang, N.; Kumam, P.; Kumam, W. Convergence analysis of self-adaptive inertial extra-gradient method for solving a family of pseudomonotone equilibrium problems with application. Symmetry 2020, 12, 1332.

- Jolaoso, L.O.; Alakoya, T.O.; Taiwo, A.; Mewomo, O.T. A parallel combination extragradient method with Armijo line searching for finding common solutions of finite families of equilibrium and fixed point problems. Rend. Circ. Mat. Palermo II. Ser. 2020, 69, 711–735.

- Hieu, D.V. Common solutions to pseudomonotone equilibrium problems. Bull. Iranian Math. Soc. 2016, 42, 1207–1219.

- Yang, J.; Liu, H. The subgradient extragradient method extended to pseudomonotone equilibrium problems and fixed point problems in Hilbert space. Optim. Lett. 2020, 14, 1803–1816.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).