Joint Dedusting and Enhancement of Top-Coal Caving Face via Single-Channel Retinex-Based Method with Frequency Domain Prior Information

Abstract

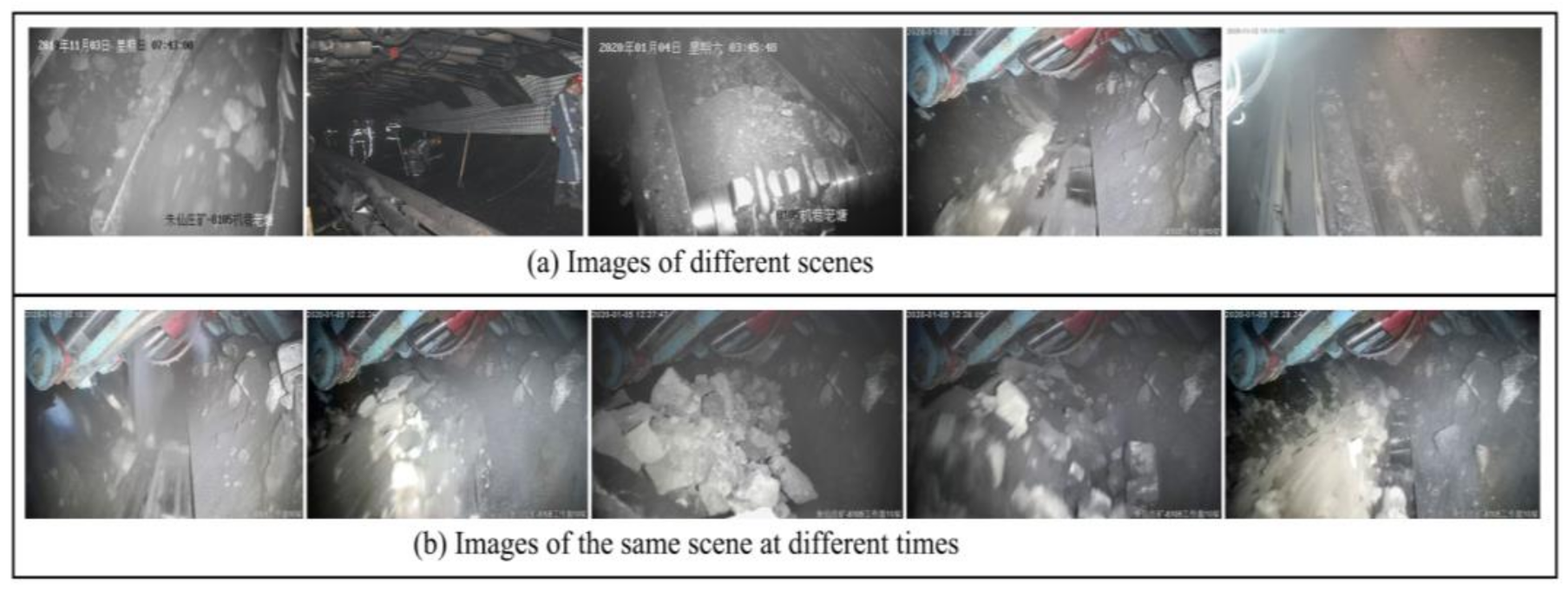

1. Introduction

2. Related Works

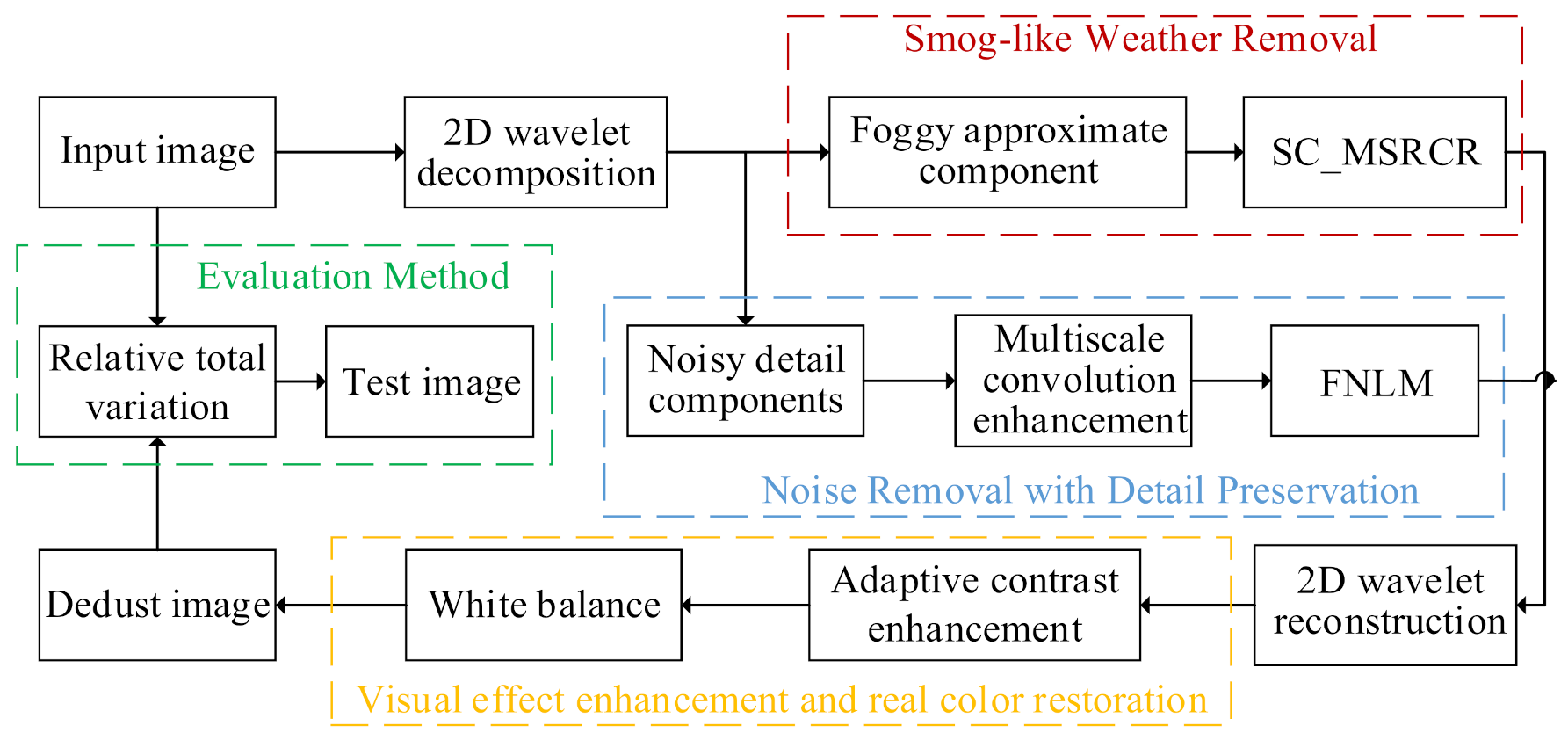

3. Joint Dedusting and Enhancement Method

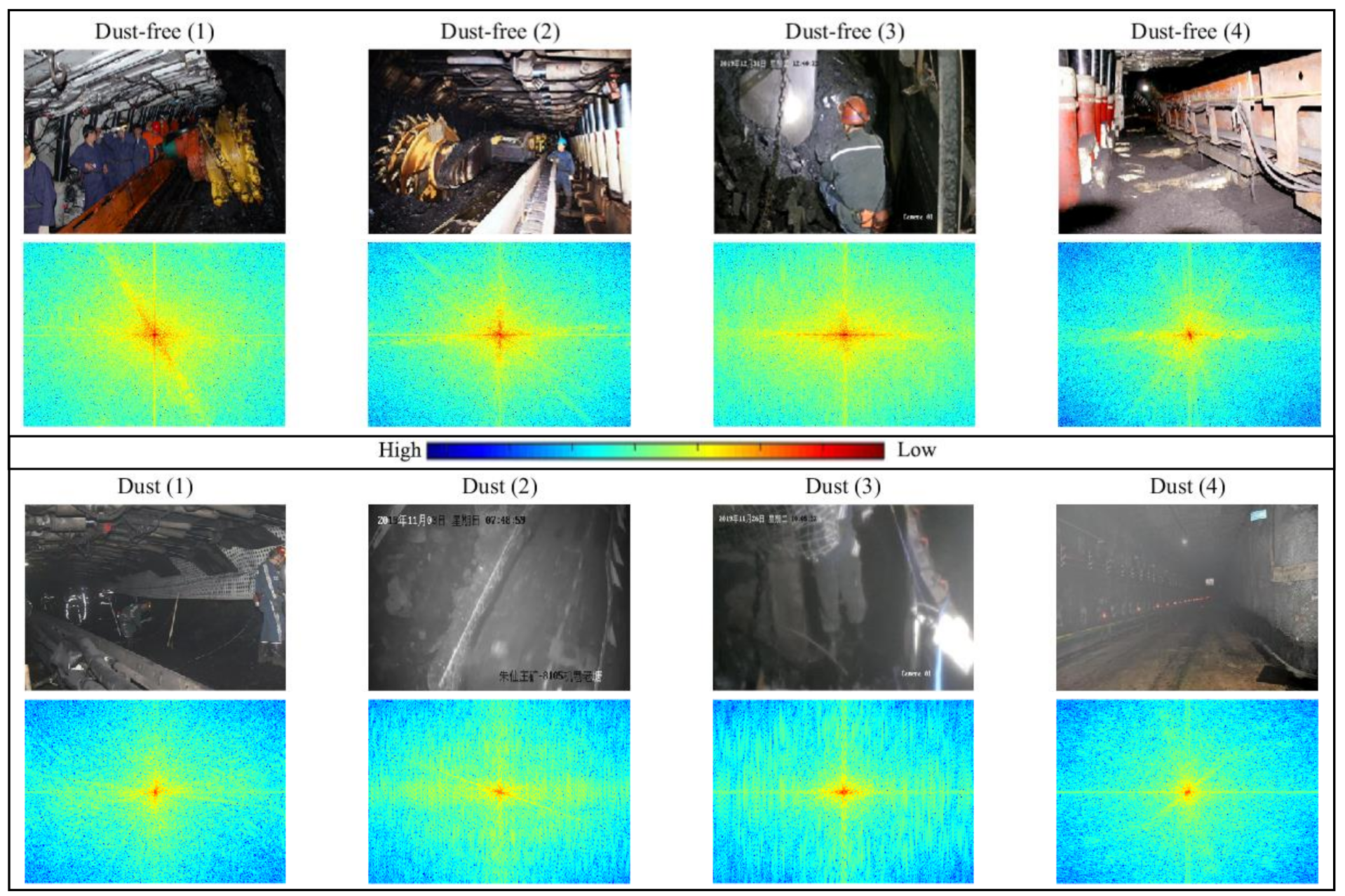

3.1. Image Analysis in Wavelet Domain
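
The section title indicates that the image is first split into an approximate (low-frequency) component and detail (high-frequency) components. The sketch below assumes a one-level orthonormal 2D Haar wavelet, because the wavelet basis and decomposition depth are not given here. It shows the split and its exact inverse in plain Python. `haar_dwt2` and `haar_idwt2` are hypothetical helper names.

```python
def haar_dwt2(img):
    """One-level orthonormal 2D Haar transform (assumed basis, for illustration).

    img: list of rows (even height and width) of numbers.
    Returns (ll, (d1, d2, d3)): ll is the approximate component and
    d1, d2, d3 are the three detail sub-bands.
    """
    h, w = len(img), len(img[0])
    if h % 2 or w % 2:
        raise ValueError("height and width must be even")
    ll, d1, d2, d3 = [], [], [], []
    for i in range(0, h, 2):
        r_ll, r_d1, r_d2, r_d3 = [], [], [], []
        for j in range(0, w, 2):
            a, b = img[i][j], img[i][j + 1]          # top-left, top-right
            c, d = img[i + 1][j], img[i + 1][j + 1]  # bottom-left, bottom-right
            r_ll.append((a + b + c + d) / 2)  # scaled local average
            r_d1.append((a + b - c - d) / 2)  # top row minus bottom row
            r_d2.append((a - b + c - d) / 2)  # left column minus right column
            r_d3.append((a - b - c + d) / 2)  # diagonal difference
        ll.append(r_ll)
        d1.append(r_d1)
        d2.append(r_d2)
        d3.append(r_d3)
    return ll, (d1, d2, d3)


def haar_idwt2(ll, details):
    """Exact inverse of haar_dwt2: rebuilds each 2x2 block from its four coefficients."""
    d1, d2, d3 = details
    out = []
    for i in range(len(ll)):
        top, bottom = [], []
        for j in range(len(ll[0])):
            s, p, q, r = ll[i][j], d1[i][j], d2[i][j], d3[i][j]
            top += [(s + p + q + r) / 2, (s + p - q - r) / 2]
            bottom += [(s - p + q - r) / 2, (s - p - q + r) / 2]
        out += [top, bottom]
    return out
```

With an orthonormal basis, the approximate and detail components can be processed separately and then recombined without loss. This property is the reason for the split.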

3.2. Smog-Like Weather Removal in Approximate Component
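
According to the paper's title, the method applies a Retinex step to a single channel, and this heading places the dust (smog-like) removal in the approximate component. The sketch below implements the classic single-scale Retinex (SSR), R = log(I) - log(G * I), where G is a separable Gaussian surround. The `sigma` value, the `eps` offset, and the min-max stretch back to [0, 255] are illustrative assumptions rather than the paper's settings. The function names are hypothetical.

```python
import math


def gaussian_kernel(sigma):
    """Normalized 1D Gaussian kernel truncated at 3 sigma."""
    radius = max(1, int(3 * sigma))
    k = [math.exp(-(x * x) / (2.0 * sigma * sigma)) for x in range(-radius, radius + 1)]
    total = sum(k)
    return [v / total for v in k]


def gaussian_blur(img, sigma):
    """Separable Gaussian blur with replicated (clamped) borders."""
    k = gaussian_kernel(sigma)
    r = len(k) // 2
    h, w = len(img), len(img[0])
    # horizontal pass
    tmp = [[sum(k[t] * img[i][min(max(j + t - r, 0), w - 1)] for t in range(len(k)))
            for j in range(w)] for i in range(h)]
    # vertical pass
    return [[sum(k[t] * tmp[min(max(i + t - r, 0), h - 1)][j] for t in range(len(k)))
             for j in range(w)] for i in range(h)]


def single_scale_retinex(img, sigma=15.0, eps=1.0):
    """SSR: log of the input minus log of its Gaussian surround, stretched to [0, 255]."""
    surround = gaussian_blur(img, sigma)
    h, w = len(img), len(img[0])
    # eps keeps the logarithm finite for zero-valued pixels
    ret = [[math.log(img[i][j] + eps) - math.log(surround[i][j] + eps) for j in range(w)]
           for i in range(h)]
    lo = min(min(row) for row in ret)
    hi = max(max(row) for row in ret)
    if hi - lo < 1e-12:  # flat input: no reflectance detail to stretch
        return [[0.0] * w for _ in range(h)]
    return [[255.0 * (v - lo) / (hi - lo) for v in row] for row in ret]
```

The surround estimates the slowly varying illumination, which includes the veil added by suspended dust. Subtracting it in the log domain leaves mostly reflectance. If the output is fed back into an inverse wavelet transform, it would first need rescaling to the coefficient range of the approximate component.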

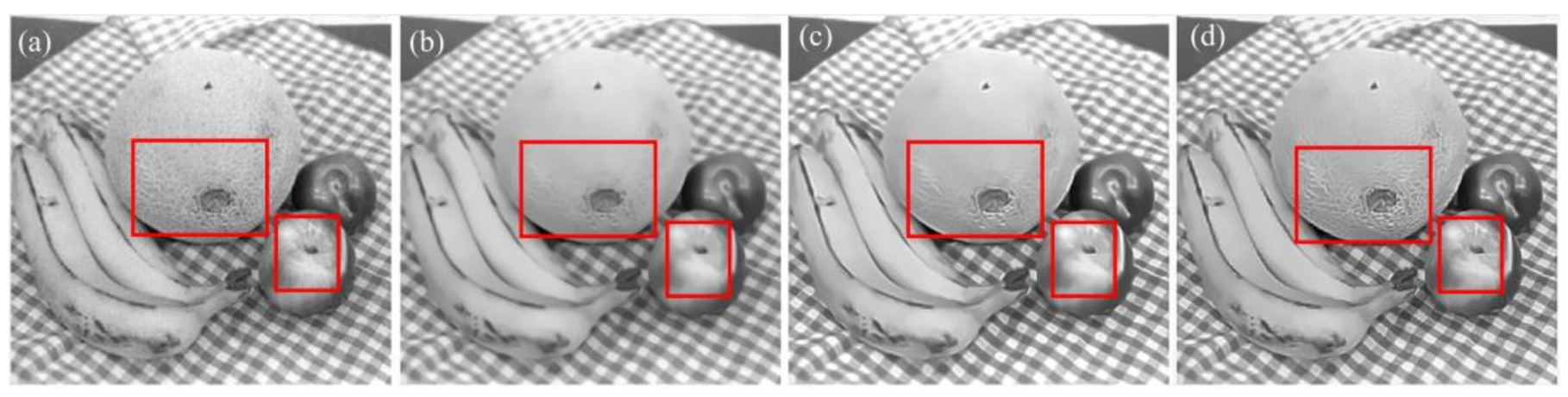

3.3. Noise Removal with Detail Preservation in Detail Components
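
This section does not describe the paper's own denoiser, so the sketch below substitutes a standard technique: wavelet soft thresholding with the Donoho-Johnstone universal threshold T = sigma * sqrt(2 ln N). The noise level sigma is estimated as the median absolute value of the diagonal detail band divided by 0.6745. The band layout assumes a one-level transform that returns three detail bands with the diagonal band last. The function names are hypothetical.

```python
import math
import statistics


def soft_threshold(x, t):
    """Shrink x toward zero by t; values with |x| <= t become 0."""
    return math.copysign(max(abs(x) - t, 0.0), x)


def estimate_noise_sigma(diag_band):
    """Robust noise estimate: median(|coefficients|) / 0.6745."""
    return statistics.median(abs(v) for row in diag_band for v in row) / 0.6745


def denoise_details(details, n_samples):
    """Soft-threshold every detail band with the universal threshold.

    details: (d1, d2, d3), with d3 the diagonal band used for the noise estimate.
    n_samples: N in sqrt(2 ln N), commonly the pixel count (must be > 1).
    """
    sigma = estimate_noise_sigma(details[2])
    t = sigma * math.sqrt(2.0 * math.log(n_samples))
    return tuple([[soft_threshold(v, t) for v in row] for row in band] for band in details)
```

Small coefficients, which are mostly noise, are set to zero. Large coefficients, which carry edges, are only reduced by T, so strong structure survives the shrinkage.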

3.4. Visual Effect Enhancement and Color Restoration
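
Because the method works on a single channel, the color result has to be rebuilt from the enhanced channel. One common approach, assumed here and not confirmed as the paper's, is to enhance the luminance Y (ITU-R BT.601 weights). Each RGB channel is then scaled by the gain Y_enhanced / Y. `luminance`, `restore_color`, and `eps` are hypothetical names.

```python
def luminance(r, g, b):
    """BT.601 luma weights applied to linear 0-255 RGB values."""
    return 0.299 * r + 0.587 * g + 0.114 * b


def restore_color(rgb, y_enhanced, eps=1e-6):
    """Scale each pixel's RGB by y_enhanced / Y, then clip to [0, 255].

    rgb: rows of (r, g, b) tuples; y_enhanced: rows of enhanced luminance values.
    eps avoids division by zero on black pixels.
    """
    out = []
    for row_rgb, row_y in zip(rgb, y_enhanced):
        out_row = []
        for (r, g, b), y_new in zip(row_rgb, row_y):
            gain = y_new / (luminance(r, g, b) + eps)
            out_row.append(tuple(min(255.0, max(0.0, c * gain)) for c in (r, g, b)))
        out.append(out_row)
    return out
```

All three channels of a pixel are multiplied by the same gain, so the ratios R:G:B (and hence the hue) stay unchanged except where a channel is clipped at 255.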

3.5. Image Acquisition and Evaluation
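
The tables in this document report IE, STD, and AG. This sketch assumes these stand for information entropy, standard deviation, and average gradient, a common no-reference trio for enhancement results, although the abbreviations are not expanded here. It works on a gray image stored as rows of integer levels from 0 to 255. AG follows the usual definition: the mean over pixels of sqrt((dx^2 + dy^2) / 2), using forward differences. An entropy over 256 gray levels cannot exceed 8 bits, yet several IE values in the tables are above 8. The paper therefore likely computes IE differently, and this sketch will not necessarily reproduce those numbers.

```python
import math
from collections import Counter


def information_entropy(img):
    """Shannon entropy (bits) of the gray-level histogram."""
    values = [int(v) for row in img for v in row]
    n = len(values)
    return -sum((c / n) * math.log2(c / n) for c in Counter(values).values())


def standard_deviation(img):
    """Population standard deviation of pixel intensities (global contrast)."""
    values = [v for row in img for v in row]
    mean = sum(values) / len(values)
    return math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))


def average_gradient(img):
    """Mean of sqrt((dx^2 + dy^2) / 2) over forward differences; needs h, w >= 2."""
    h, w = len(img), len(img[0])
    total = 0.0
    for i in range(h - 1):
        for j in range(w - 1):
            dx = img[i][j + 1] - img[i][j]
            dy = img[i + 1][j] - img[i][j]
            total += math.sqrt((dx * dx + dy * dy) / 2.0)
    return total / ((h - 1) * (w - 1))
```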

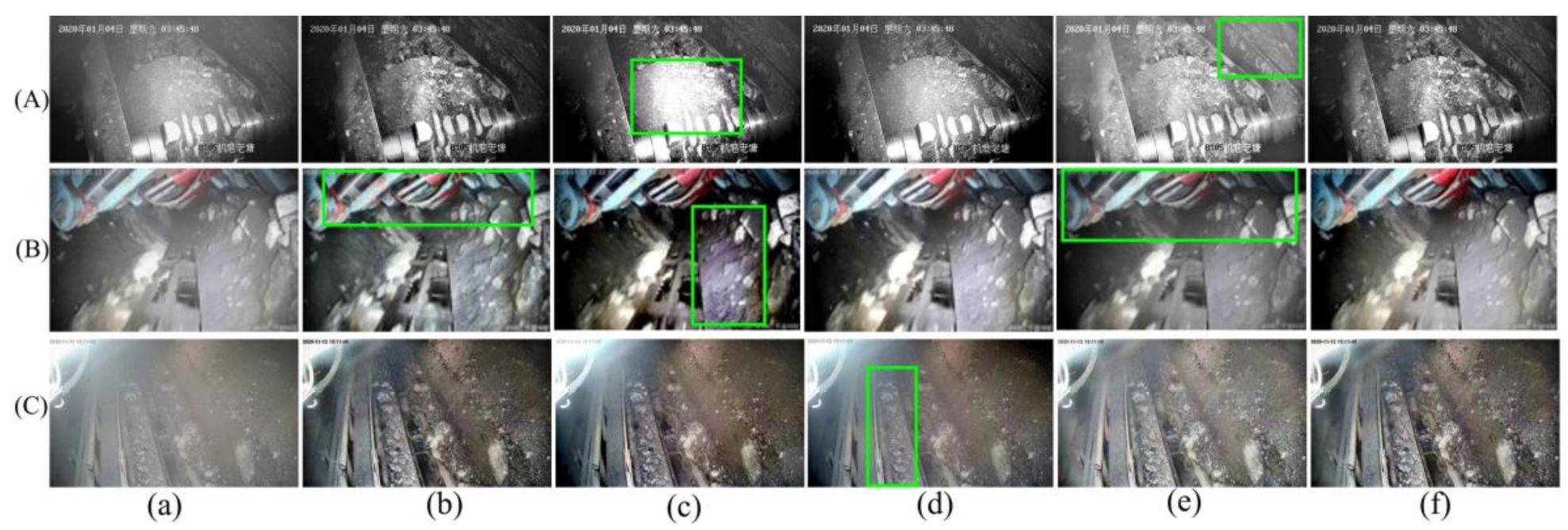

4. Experimental Results

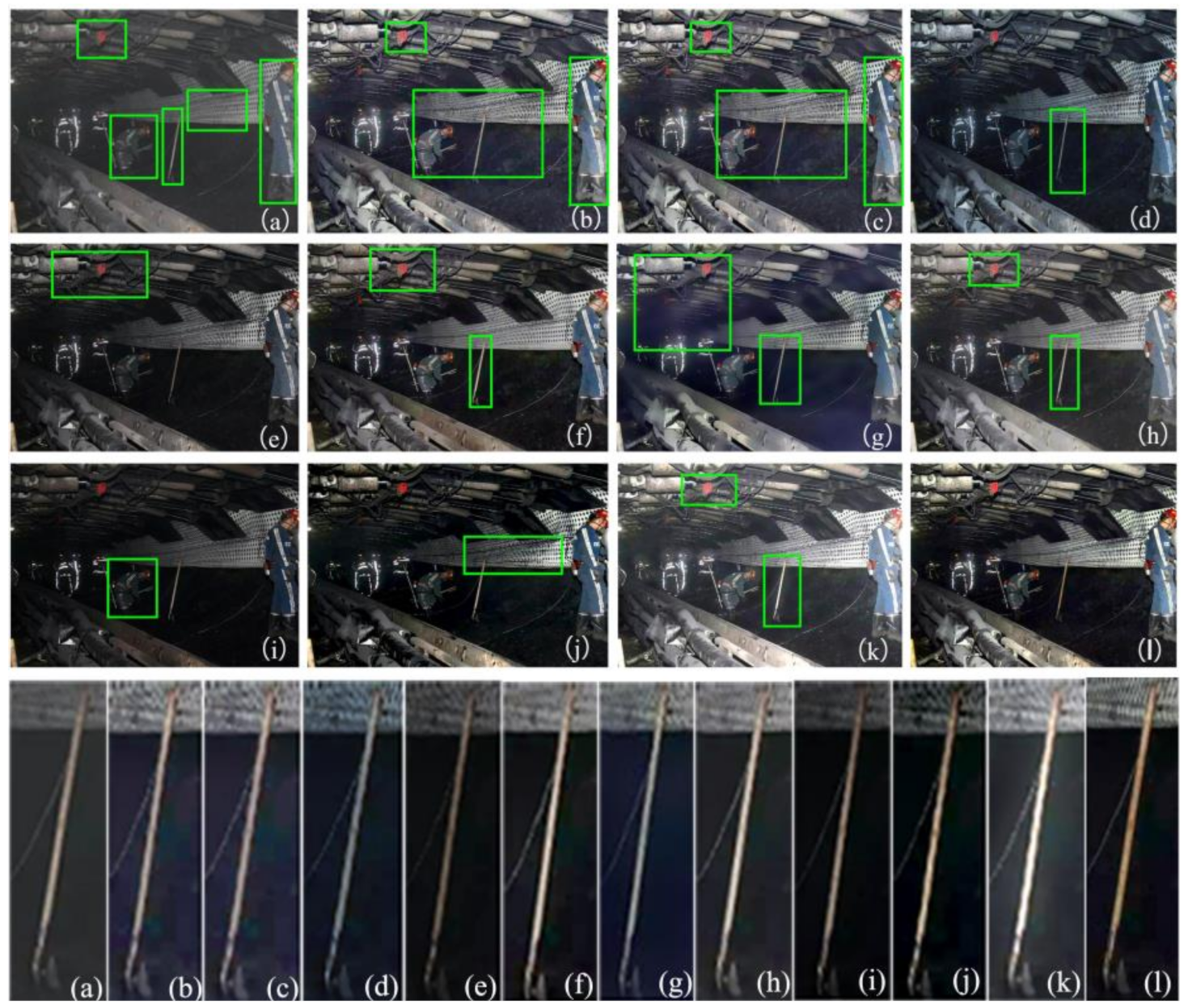

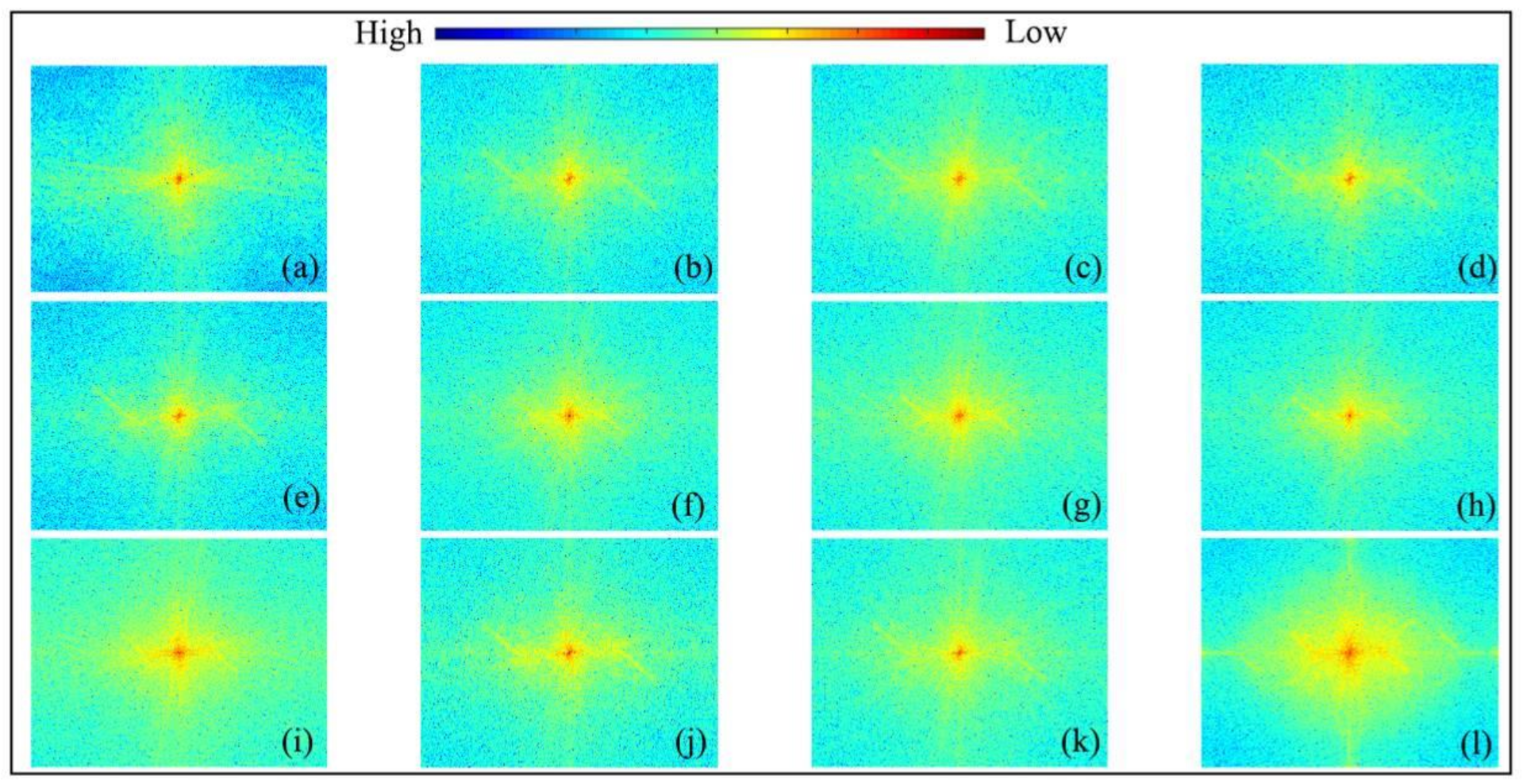

4.1. Color Images

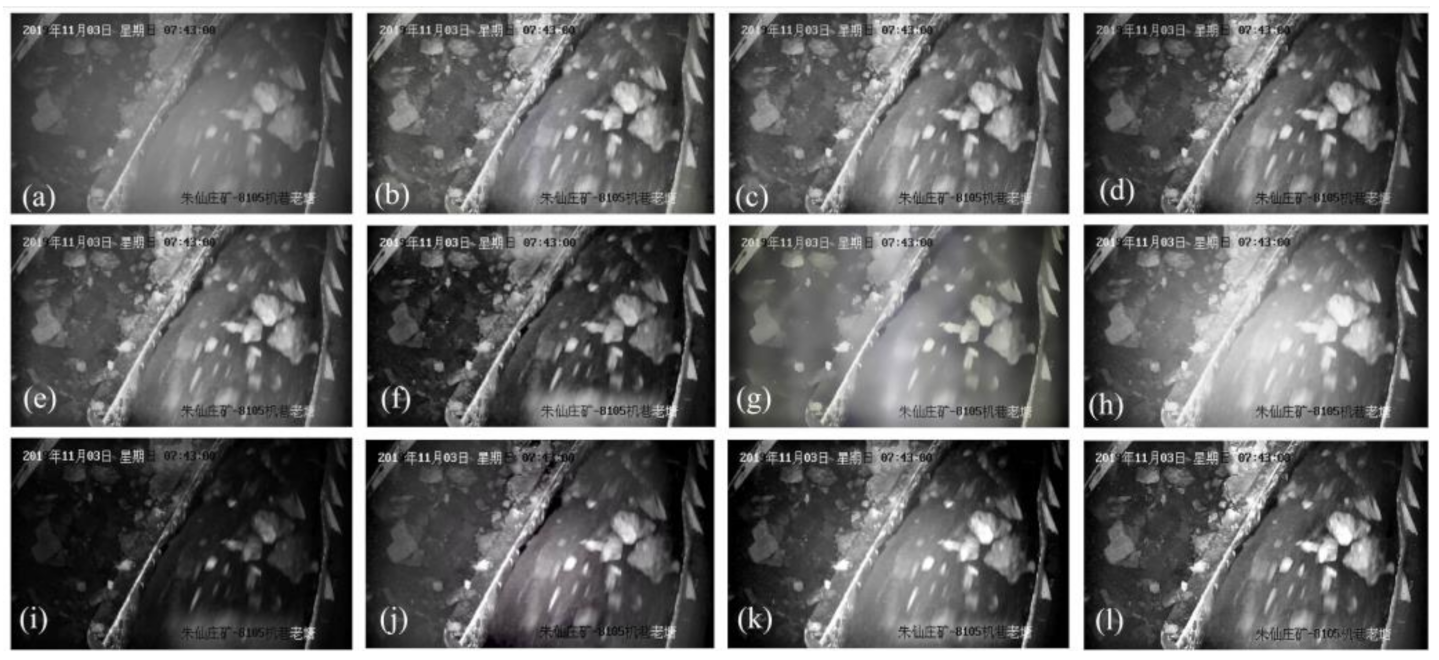

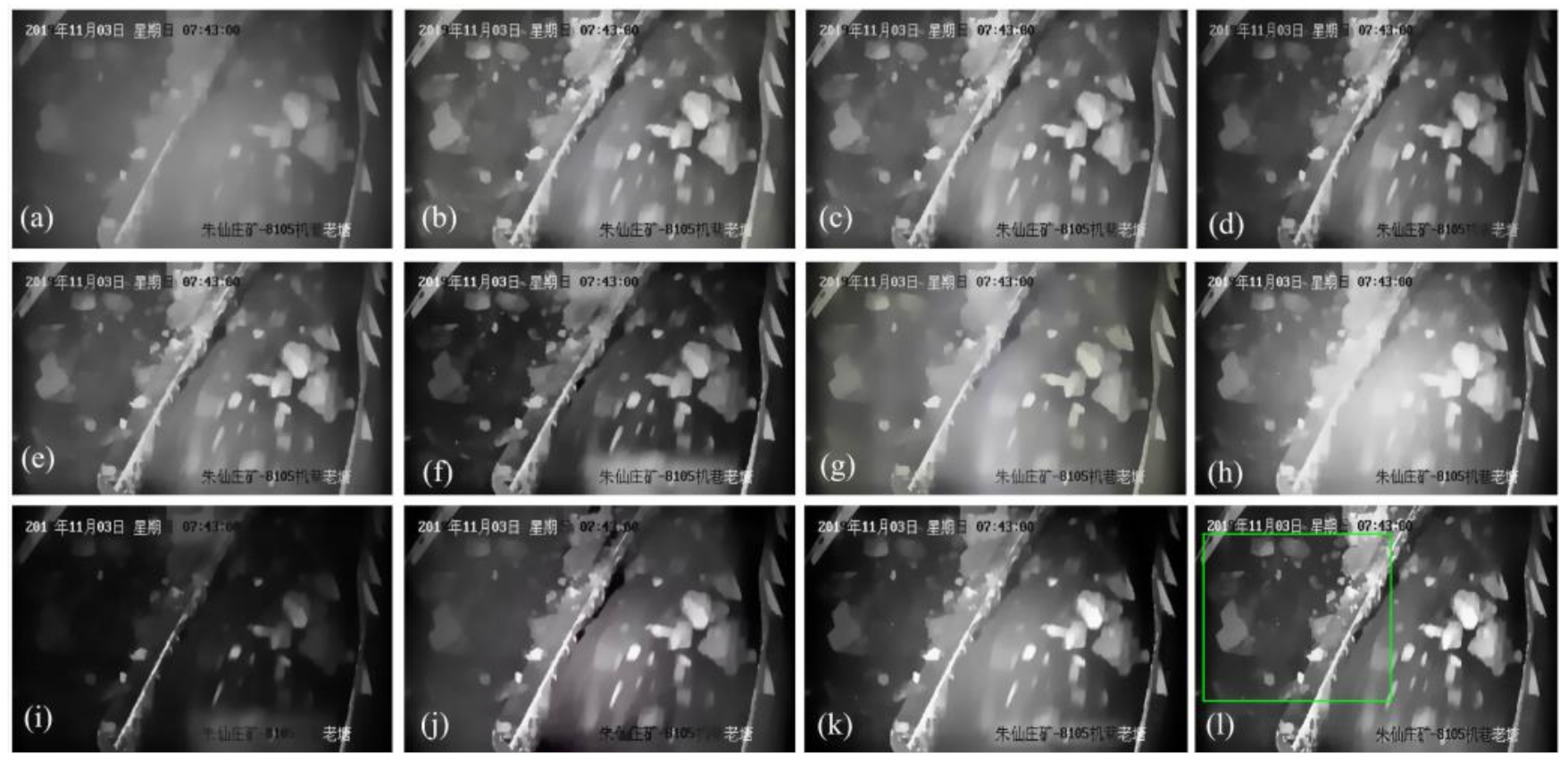

4.2. Gray Images

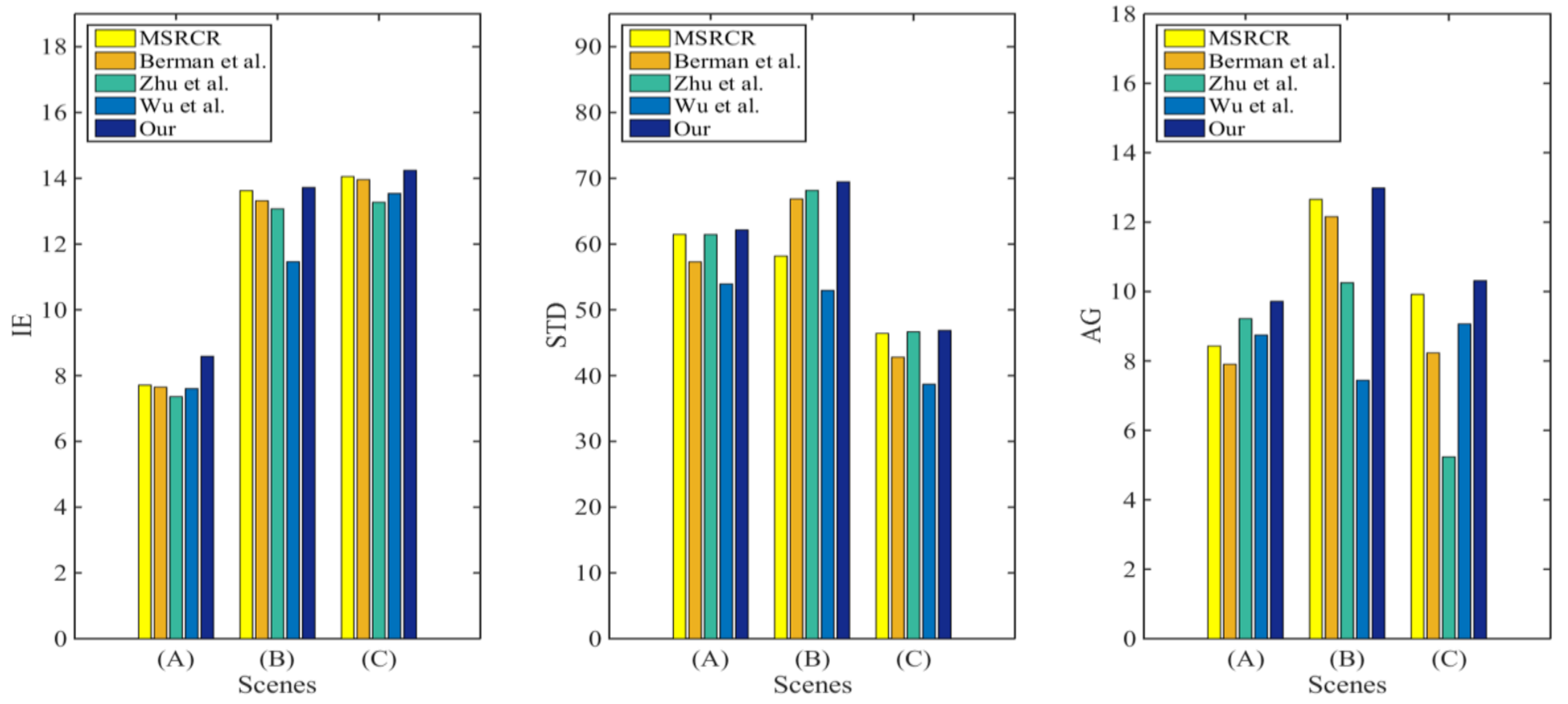

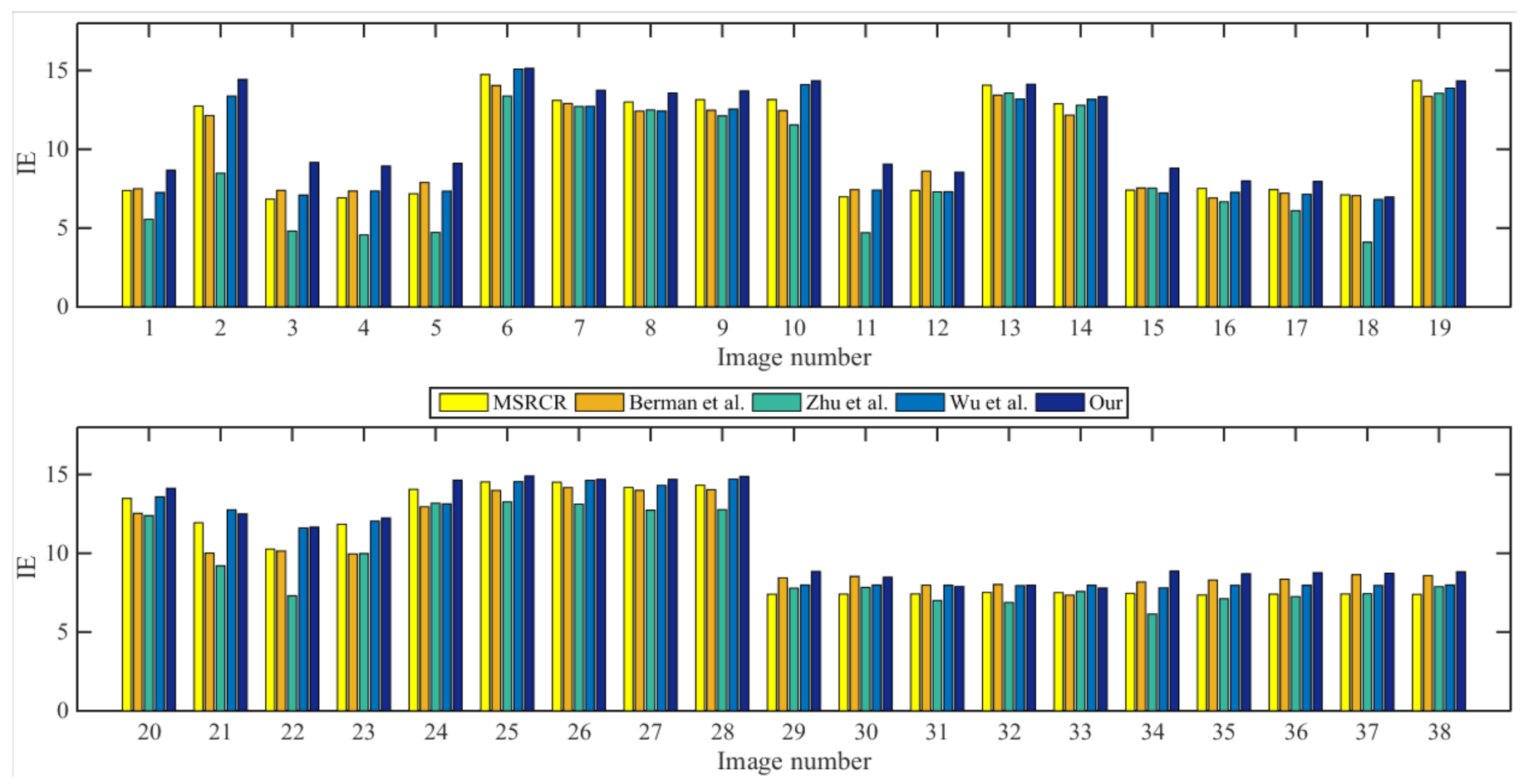

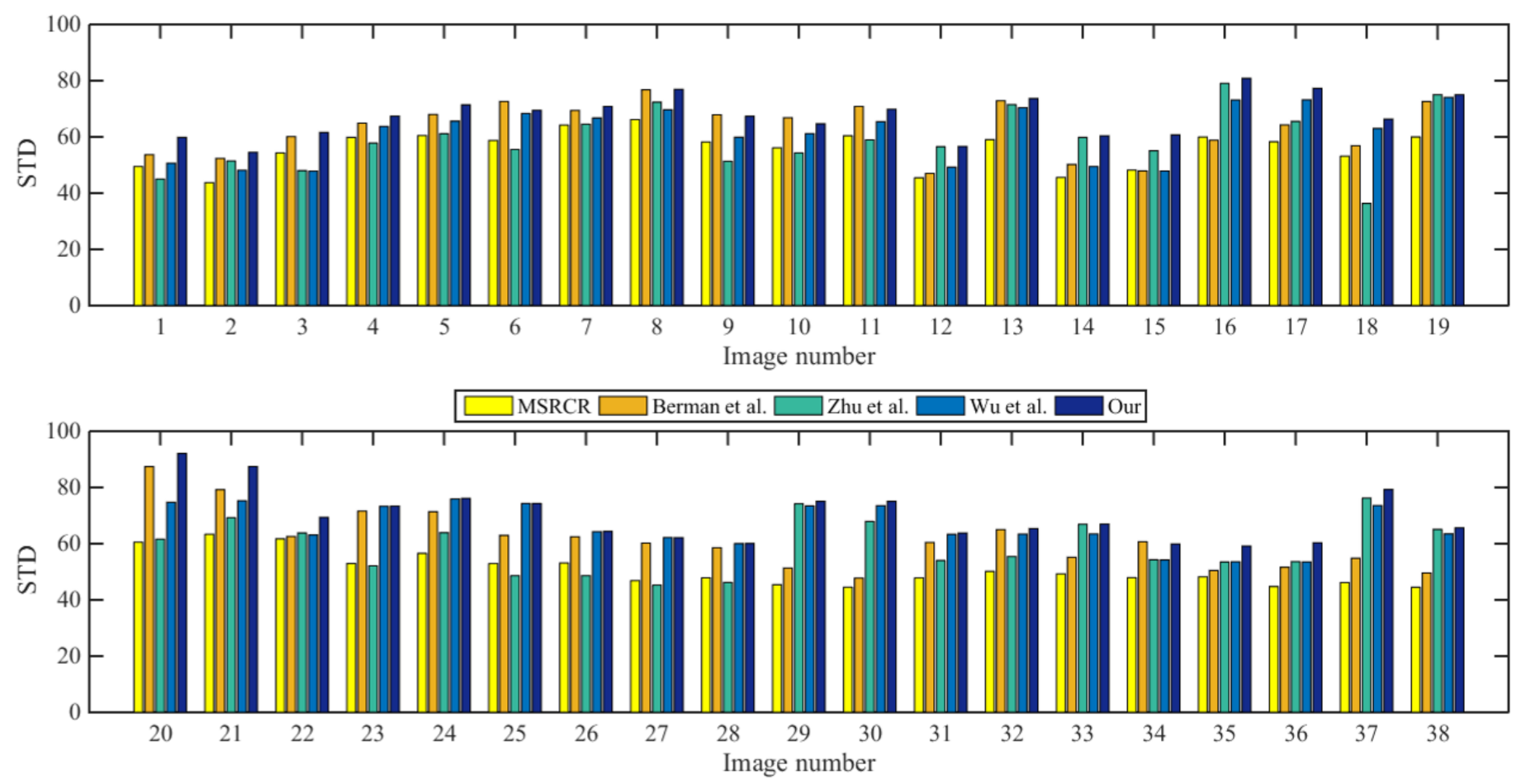

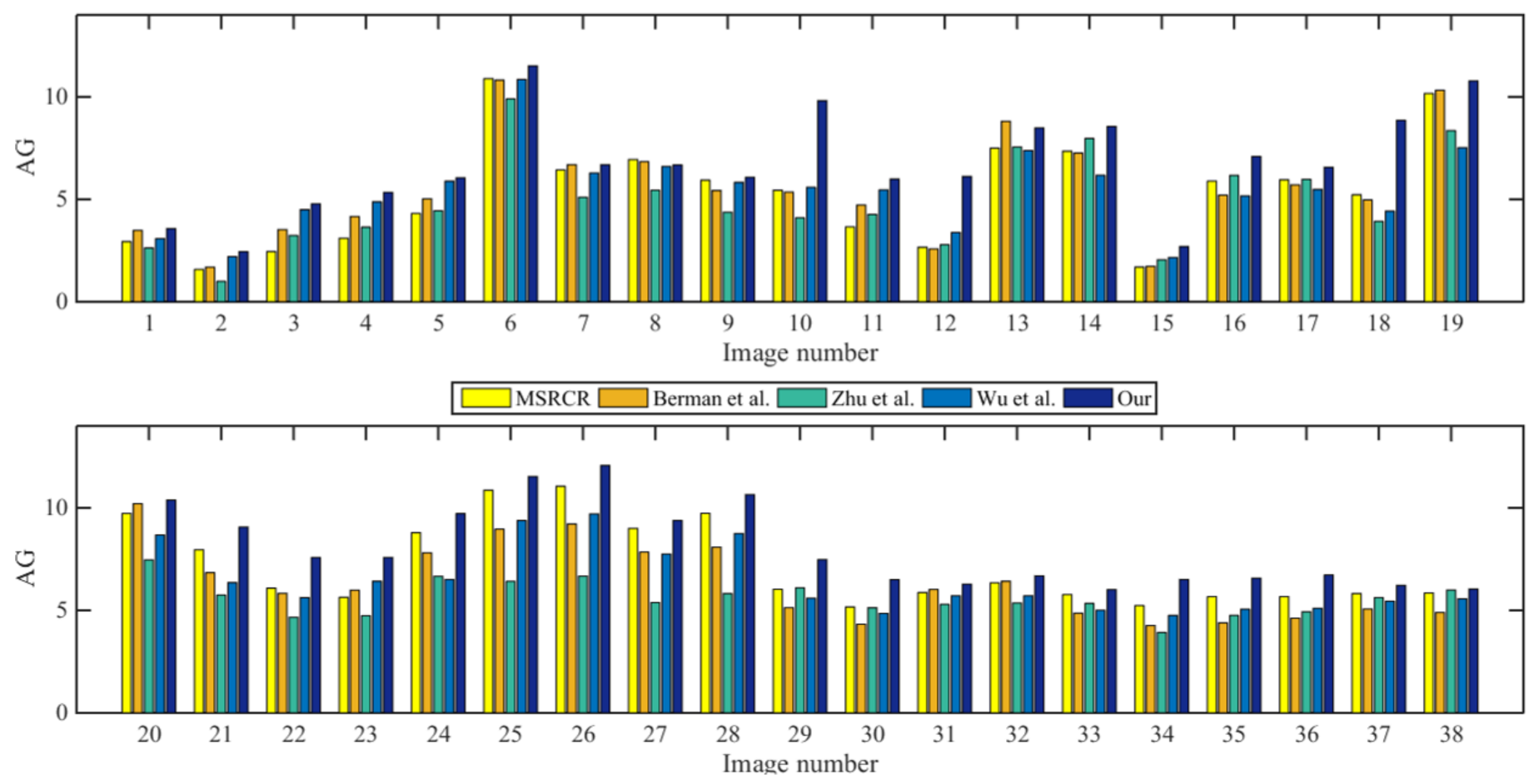

4.3. Quantitative Comparison

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Method | IE | STD | AG | Method | IE | STD | AG |
|---|---|---|---|---|---|---|---|
| (a) | 9.7458 | 30.0493 | 5.6163 | (g) | 12.5630 | 34.2773 | 5.2193 |
| (b) | 12.9568 | 52.1407 | 7.4134 | (h) | 12.7541 | 51.3485 | 5.7709 |
| (c) | 12.9377 | 51.5041 | 7.3264 | (i) | 10.1574 | 31.0655 | 3.7548 |
| (d) | 12.8914 | 45.585 | 7.3484 | (j) | 12.1683 | 50.1933 | 7.2618 |
| (e) | 12.1539 | 38.9266 | 6.0602 | (k) | 12.7920 | 59.8192 | 7.9734 |
| (f) | 13.1725 | 49.462 | 6.1751 | (l) | 13.3498 | 60.3704 | 8.5584 |

| Method | IE | STD | AG | Method | IE | STD | AG |
|---|---|---|---|---|---|---|---|
| (a) | 7.1071 | 36.8321 | 1.2237 | (g) | 10.0141 | 47.1495 | 1.5816 |
| (b) | 9.2069 | 44.4457 | 1.9141 | (h) | 7.8492 | 64.775 | 1.8164 |
| (c) | 7.4423 | 45.0672 | 1.8816 | (i) | 6.1019 | 29.6587 | 1.3047 |
| (d) | 7.4085 | 48.2055 | 1.6940 | (j) | 7.5408 | 47.9058 | 1.7299 |
| (e) | 7.3660 | 44.0465 | 1.7667 | (k) | 7.5334 | 55.0708 | 2.0496 |
| (f) | 7.2324 | 47.8882 | 2.1632 | (m) | 8.8026 | 60.7208 | 2.6943 |
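
The two tables above identify methods only by letter. The short script below reports which entry leads each column. It assumes, as is usual for IE, STD, and AG, that larger values are read as more information, contrast, and sharpness. The values are copied from the tables, with labels exactly as printed. `best_per_metric` is a hypothetical helper.

```python
# Values copied from the two tables: label -> (IE, STD, AG)
FIRST_TABLE = {
    "a": (9.7458, 30.0493, 5.6163), "b": (12.9568, 52.1407, 7.4134),
    "c": (12.9377, 51.5041, 7.3264), "d": (12.8914, 45.585, 7.3484),
    "e": (12.1539, 38.9266, 6.0602), "f": (13.1725, 49.462, 6.1751),
    "g": (12.5630, 34.2773, 5.2193), "h": (12.7541, 51.3485, 5.7709),
    "i": (10.1574, 31.0655, 3.7548), "j": (12.1683, 50.1933, 7.2618),
    "k": (12.7920, 59.8192, 7.9734), "l": (13.3498, 60.3704, 8.5584),
}
SECOND_TABLE = {
    "a": (7.1071, 36.8321, 1.2237), "b": (9.2069, 44.4457, 1.9141),
    "c": (7.4423, 45.0672, 1.8816), "d": (7.4085, 48.2055, 1.6940),
    "e": (7.3660, 44.0465, 1.7667), "f": (7.2324, 47.8882, 2.1632),
    "g": (10.0141, 47.1495, 1.5816), "h": (7.8492, 64.775, 1.8164),
    "i": (6.1019, 29.6587, 1.3047), "j": (7.5408, 47.9058, 1.7299),
    "k": (7.5334, 55.0708, 2.0496), "m": (8.8026, 60.7208, 2.6943),
}
METRICS = ("IE", "STD", "AG")


def best_per_metric(table):
    """Return, for each metric, the method label with the largest value."""
    return {name: max(table, key=lambda label: table[label][idx])
            for idx, name in enumerate(METRICS)}


if __name__ == "__main__":
    print("first table:", best_per_metric(FIRST_TABLE))
    print("second table:", best_per_metric(SECOND_TABLE))
```

On these values, (l) leads all three metrics in the first table. In the second table the leader differs by metric: (g) for IE, (h) for STD, and (m) for AG. Noise also raises IE and AG, so a higher value does not by itself mean a better result. This ranking only summarizes the numbers.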

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Fu, C.; Lu, F.; Zhang, X.; Zhang, G. Joint Dedusting and Enhancement of Top-Coal Caving Face via Single-Channel Retinex-Based Method with Frequency Domain Prior Information. Symmetry 2021, 13, 2097. https://doi.org/10.3390/sym13112097
