A Modified jSO Algorithm for Solving Constrained Engineering Problems

Abstract

1. Introduction

2. Related Work

2.1. JADE

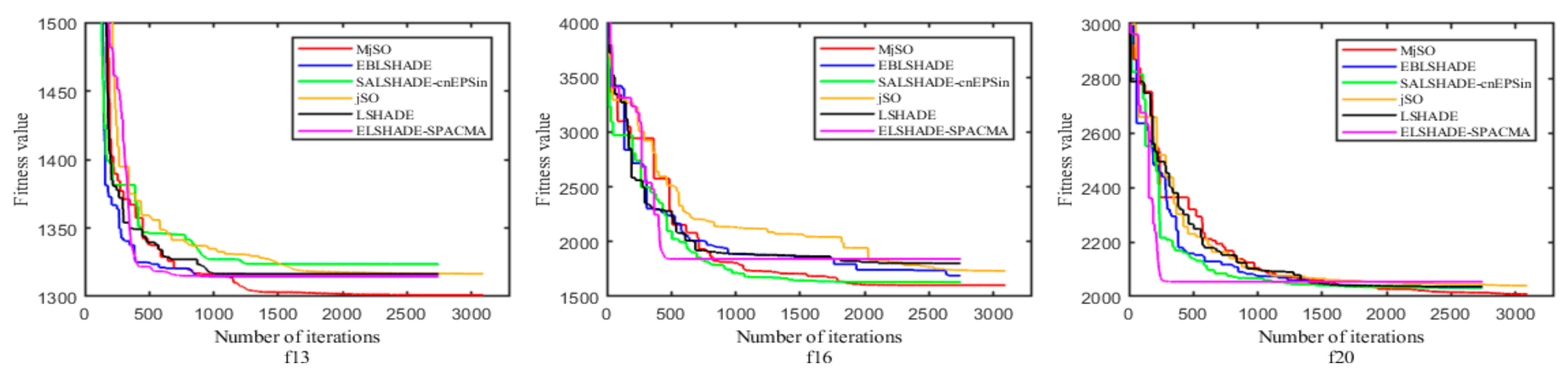

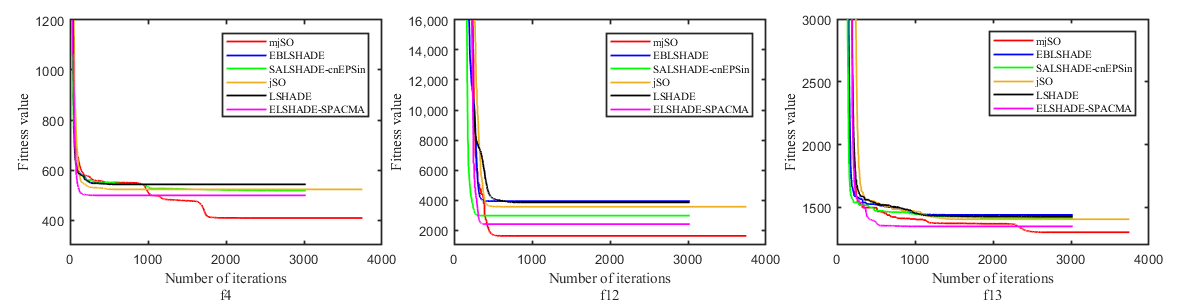

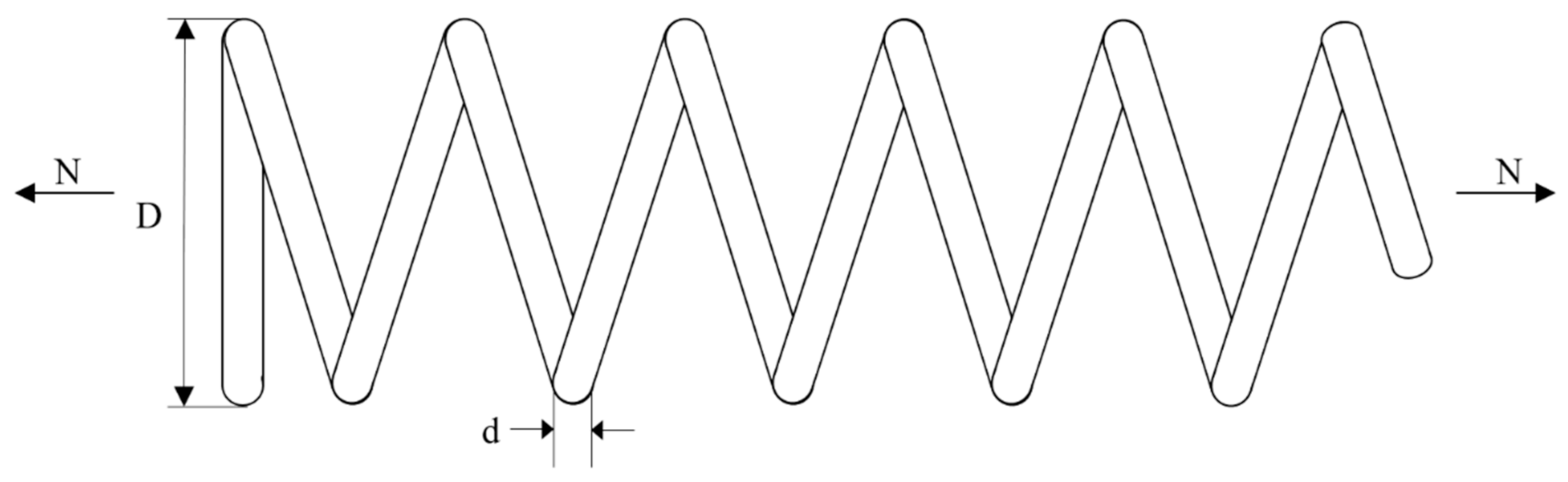

2.2. SHADE

2.3. LSHADE

2.4. iLSHADE

- In the initialization phase, iLSHADE uses a larger memory value, MF = 0.8, and a smaller population size of 12·D.

- The last entry of the H-entry memory holds a constant pair of control parameters, MF = 0.9 and MCR = 0.9. These two values remain unchanged throughout the evolution.

- At different stages of the evolution, the F and CR of each individual are set to different fixed values, as shown in Equations (18) and (19).

- The greediness control parameter P of the mutation strategy in iLSHADE increases linearly with the number of fitness function evaluations (see Equation (20)).
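The second and fourth rules above can be sketched in a few lines of Python. This is a minimal illustration rather than the iLSHADE reference code: Equation (20) is not reproduced in this section, so the linear form of the schedule, the bounds `p_min = 0.1` and `p_max = 0.2`, the memory size H = 6, and all function names are assumptions.

```python
import random

def linear_p(nfes, max_nfes, p_min=0.1, p_max=0.2):
    """Greediness parameter that grows linearly with the evaluations used.

    p_min and p_max are assumed bounds, not values taken from the text.
    """
    return p_min + (p_max - p_min) * nfes / max_nfes

def pick_memory_pair(m_f, m_cr, rng):
    """Pick one (MF, MCR) pair from the H-entry memory.

    The last entry is treated as the constant pair (0.9, 0.9), whatever
    the adaptive update has written into the other entries.
    """
    r = rng.randrange(len(m_f))
    if r == len(m_f) - 1:
        return 0.9, 0.9
    return m_f[r], m_cr[r]

rng = random.Random(1)
m_f = [0.8] * 6    # hypothetical memory of size H = 6
m_cr = [0.8] * 6   # hypothetical initial MCR values
print(pick_memory_pair(m_f, m_cr, rng))
print(linear_p(0, 10_000), linear_p(10_000, 10_000))
```

Keeping the constant pair outside the adaptive update means that at least one memory slot always proposes large F and CR values, regardless of what the success history has learned.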

2.5. jSO

3. MjSO

3.1. A Parameter Control Strategy Based on a Symmetric Search Process

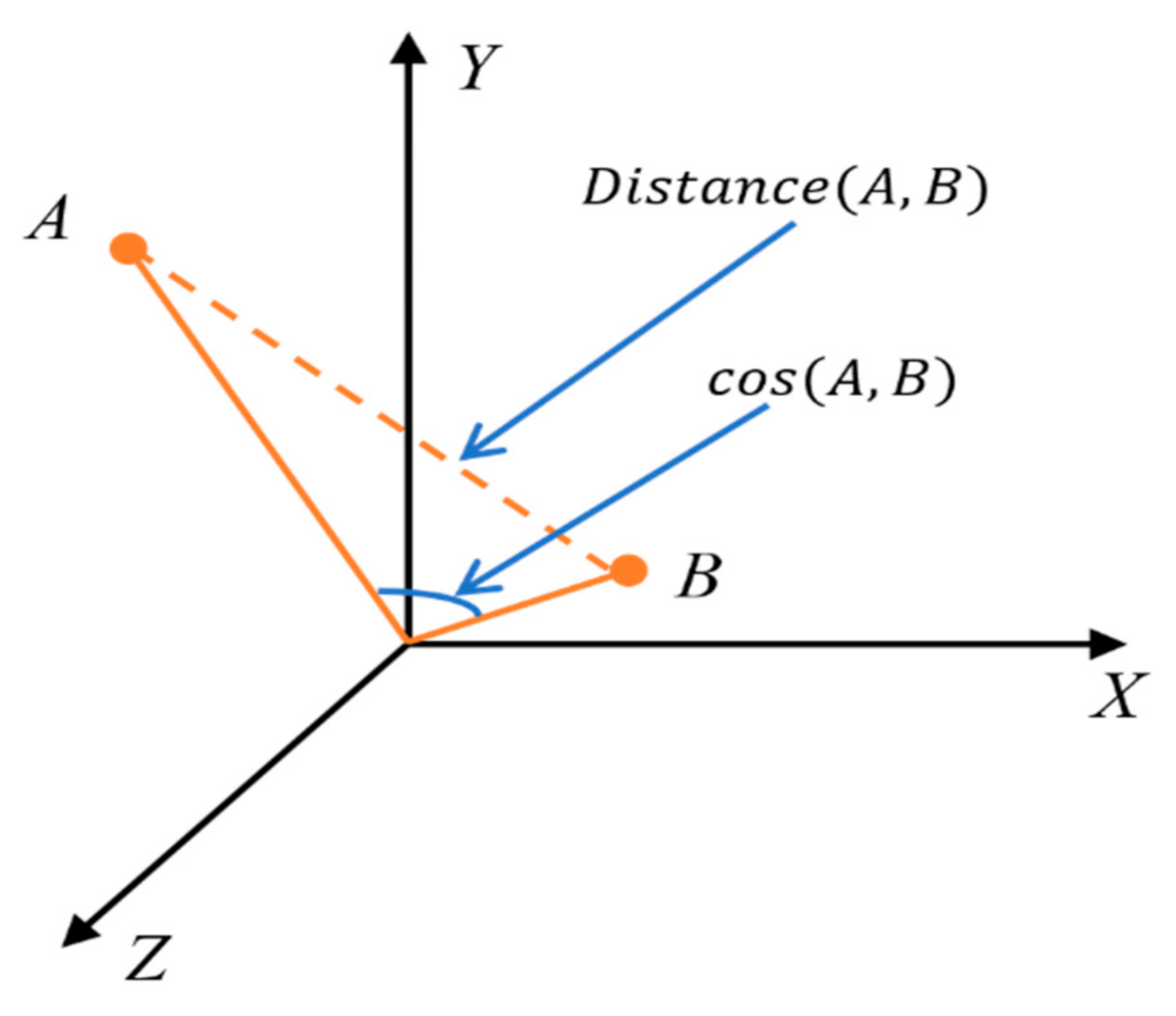

3.2. A Novel Parameter Adaptive Mechanism Based on Cosine Similarity

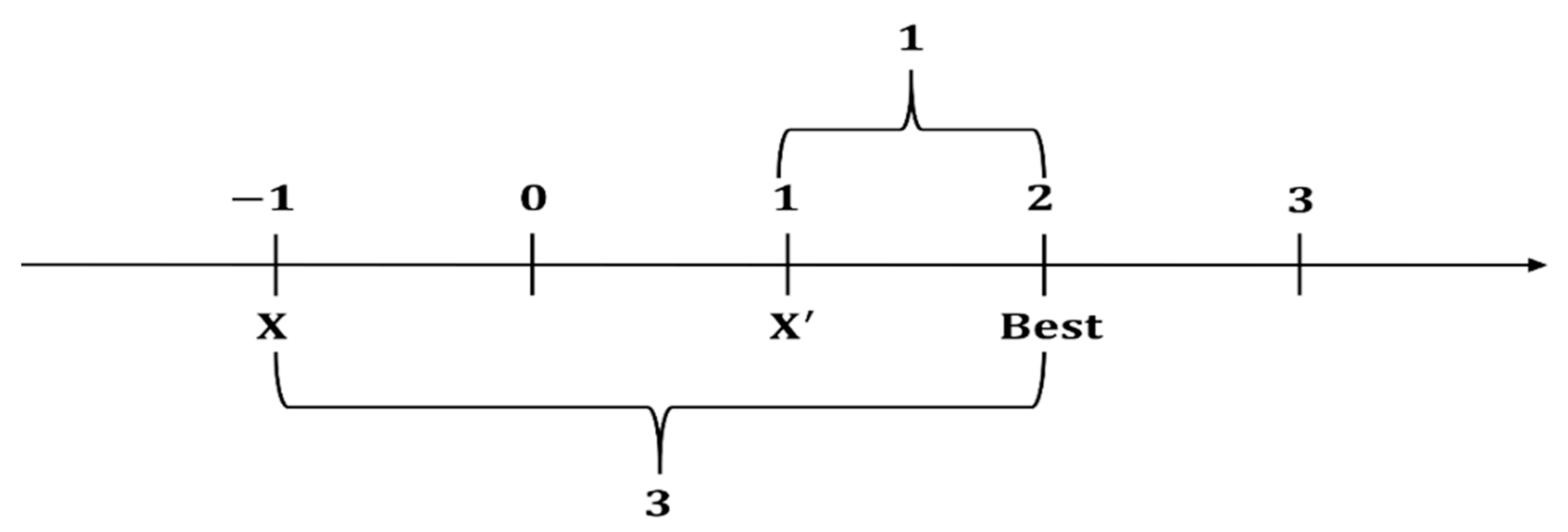

3.3. A Novel Opposition-Based Learning Restart Mechanism

| Algorithm 1: A novel OBL restart mechanism |
|---|
| 1: if λ = ξ |
| 2: for do |
| 3: Generate the opposite vector using Equation (27) |
| 4: Calculate the fitness value ; |
| 5: |
| 6: Replace with a fitter one between and |
| 7: end for |
| 8: end if |
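The loop of Algorithm 1 can be illustrated with a short Python sketch. Equation (27) is not reproduced in this section, so the sketch assumes the standard opposition-based learning definition, in which the opposite of x in the box [a, b] is a + b − x. The function names, the test objective and the bounds are hypothetical, and the trigger condition (λ = ξ) is left to the caller.

```python
def opposite(x, lower, upper):
    """Opposite point of x in the box [lower, upper] (standard OBL, assumed)."""
    return [lo + hi - xi for xi, lo, hi in zip(x, lower, upper)]

def obl_restart(population, fitness, objective, lower, upper):
    """Replace each individual by its opposite when the opposite is fitter.

    Minimisation is assumed. Both lists are updated in place.
    """
    for i, x in enumerate(population):
        x_opp = opposite(x, lower, upper)
        f_opp = objective(x_opp)
        if f_opp < fitness[i]:
            population[i], fitness[i] = x_opp, f_opp
    return population, fitness

def shifted_sphere(x):
    """Hypothetical test objective with its minimum at (50, 50)."""
    return sum((xi - 50.0) ** 2 for xi in x)

lower, upper = [-100.0] * 2, [100.0] * 2
pop = [[-60.0, -40.0], [70.0, 30.0]]
fit = [shifted_sphere(x) for x in pop]
obl_restart(pop, fit, shifted_sphere, lower, upper)
print(pop)  # the first individual is replaced by its opposite, the second is kept
```

Each call costs one extra fitness evaluation per individual, which is one reason to gate the restart behind a condition rather than run it every generation.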

| Algorithm 2: MjSO |
|---|
| 1: Archive ← ∅ |
| 2: Initialize population = (. . . , ) randomly |
| 3: Set all values in to 0.5 |
| 4: Set all values in to 0.5 |
| 5: //index counter |
| 6: while the termination criteria are not met do |
| 7: ← ∅, ← ∅ |
| 8: for to do |
| 9: ← select from randomly |
| 10: if = then |
| 11: ← 0.9 |
| 12: ← 0.9 |
| 13: end if |
| 14: if < 0 then |
| 15: |
| 16: else |
| 17: ← (, 0.1) |
| 18: end if |
| 19: if < 0.25 then |
| 20: ← max ( 0.7) |
| 21: else if < 0.5 then |
| 22: ← max (, 0.6) |
| 23: end if |
| 24: if |
| 25: |
| 26: else |
| 27: ← (, 0.1) |
| 28: if < 0.6 and > 0.7 then |
| 29: ← 0.7 |
| 30: end if |
| 31: end if |
| 32: ← current-to-pBest-w/1/bin using Equation (21) |
| 33: end for |
| 34: for i = 1 to do |
| 35: if ≤ then |
| 36: ← |
| 37: else |
| 38: ← |
| 39: end if |
| 40: if ≤ then |
| 41: →, → , → |
| 42: end if |
| 43: Shrink , if necessary |
| 44: Update and |
| 45: Apply LPSR strategy //linear population size reduction |
| 46: Apply Algorithm 1 |
| 47: Update using Equation (20) |
| 48: end for |
| 49: |
| 50: end while |
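The parameter-sampling steps (14 to 31) and the LPSR step (45) of Algorithm 2 can be sketched as follows. Several symbols in the listing did not survive extraction, so this sketch rests on assumptions: the quantity compared with 0.25, 0.5 and 0.6 is taken to be the fraction of the evaluation budget already used (`progress`), CR is drawn from a normal distribution and F from a Cauchy distribution with scale 0.1 as in SHADE-type algorithms, and the MjSO-specific branch at steps 24 and 25 is not reproduced. All function names are hypothetical.

```python
import math
import random

def sample_cr(m_cr, progress, rng):
    """Crossover rate: N(m_cr, 0.1) clipped to [0, 1], then stage-wise floors."""
    if m_cr < 0:                      # a negative memory value forces CR = 0
        cr = 0.0
    else:
        cr = min(1.0, max(0.0, rng.gauss(m_cr, 0.1)))
    if progress < 0.25:
        cr = max(cr, 0.7)
    elif progress < 0.5:
        cr = max(cr, 0.6)
    return cr

def sample_f(m_f, progress, rng):
    """Scaling factor: Cauchy(m_f, 0.1), resampled until positive, capped at 1."""
    f = 0.0
    while f <= 0.0:
        f = m_f + 0.1 * math.tan(math.pi * (rng.random() - 0.5))
    f = min(f, 1.0)
    if progress < 0.6 and f > 0.7:    # early-stage cap on F
        f = 0.7
    return f

def lpsr_size(nfes, max_nfes, n_init, n_min=4):
    """Linear population size reduction from n_init down to n_min."""
    return round(n_init + (n_min - n_init) * nfes / max_nfes)

rng = random.Random(0)
print(sample_cr(0.5, 0.1, rng), sample_f(0.5, 0.1, rng))
print(lpsr_size(0, 1000, 100), lpsr_size(1000, 1000, 100))
```

The floors on CR and the cap on F only act early in the run, so late in the search both parameters follow the memory values without restriction.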

4. The Experimental Setup

4.1. Experimental Environment

4.2. Clustering Analysis

- The core point distance is Eps = 1% of the decision space. For the CEC2017 benchmark set, whose search range is [−100, 100] in every dimension, this gives Eps = 2.

- The minimum number of points required to form a cluster is MinPts = 4 (the minimum number of individuals needed for mutation).

- Distance is measured with the Chebyshev distance [59]. If the difference between any corresponding attributes of two individuals is greater than 1% of the decision space, the two individuals are not considered directly density-reachable.
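The settings above can be illustrated with a minimal density-based clustering sketch in pure Python. It follows the usual DBSCAN procedure with the Chebyshev distance, Eps = 2 and MinPts = 4. It is not the implementation used in the experiments, and the function names and the sample population are hypothetical.

```python
from collections import deque

def chebyshev(a, b):
    """Largest absolute coordinate difference between two individuals."""
    return max(abs(x - y) for x, y in zip(a, b))

def dbscan(points, eps=2.0, min_pts=4):
    """Return one label per point: a cluster id (0, 1, ...) or -1 for noise."""
    n = len(points)
    # A point counts as its own neighbour, as in the usual DBSCAN convention.
    neighbors = [
        [j for j in range(n) if chebyshev(points[i], points[j]) <= eps]
        for i in range(n)
    ]
    labels = [None] * n
    cluster = -1
    for i in range(n):
        if labels[i] is not None:
            continue
        if len(neighbors[i]) < min_pts:
            labels[i] = -1            # provisional noise, may become a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = deque(neighbors[i])
        while queue:
            j = queue.popleft()
            if labels[j] == -1:
                labels[j] = cluster   # border point reached from a core point
            if labels[j] is not None:
                continue
            labels[j] = cluster
            if len(neighbors[j]) >= min_pts:
                queue.extend(neighbors[j])   # j is a core point, keep expanding
    return labels

population = [(0.0, 0.0), (1.0, 1.0), (0.5, -0.5), (-1.0, 0.5), (50.0, 50.0)]
print(dbscan(population))  # four nearby individuals form one cluster, the last is noise
```

With the Chebyshev distance, two individuals are neighbours only if they differ by at most Eps in every coordinate, which matches the attribute-wise criterion in the last bullet.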

4.3. Population Diversity

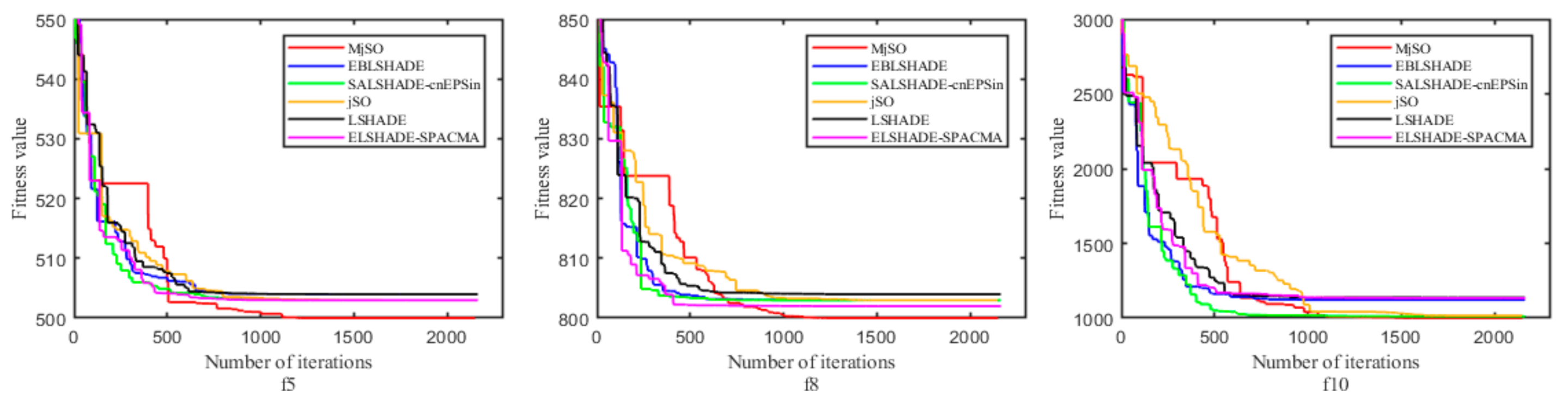

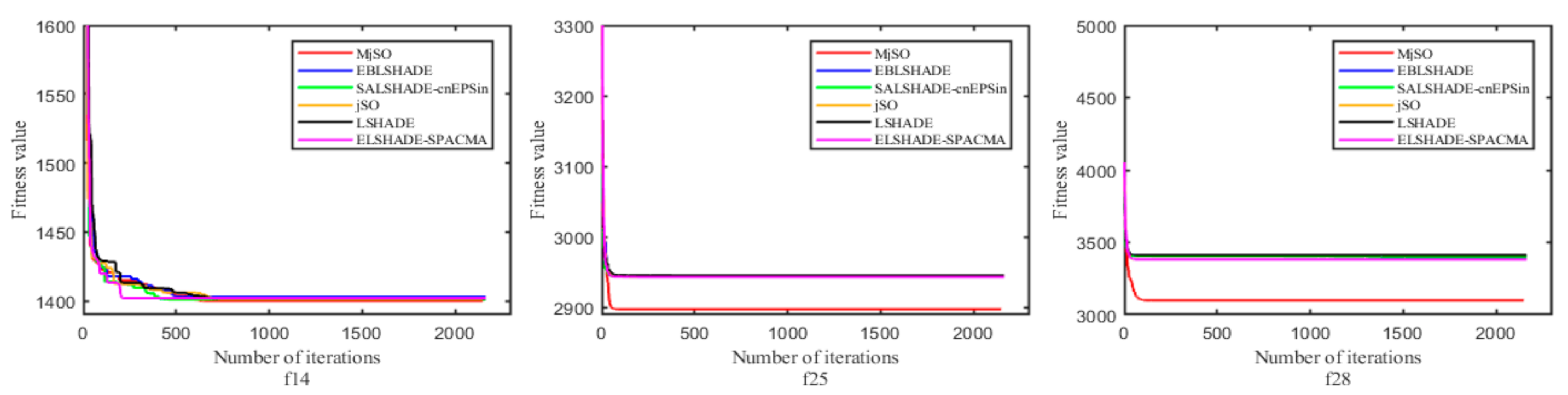

5. Experimental Results and Analysis

6. MjSO for Engineering Problems

6.1. Pressure Vessel Design Problem

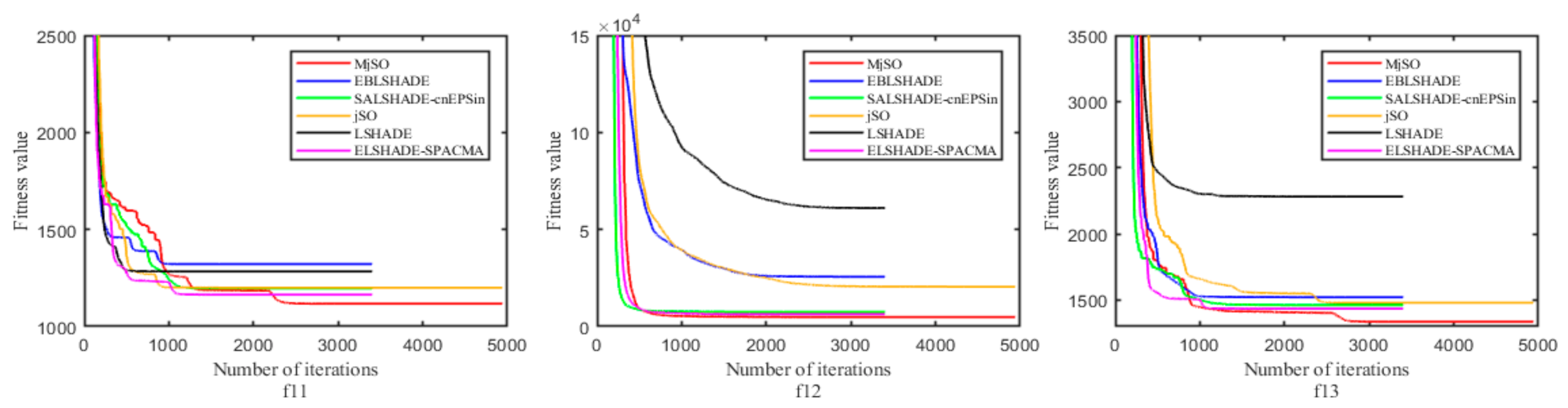

6.2. Tension/Compression Spring Design Problem

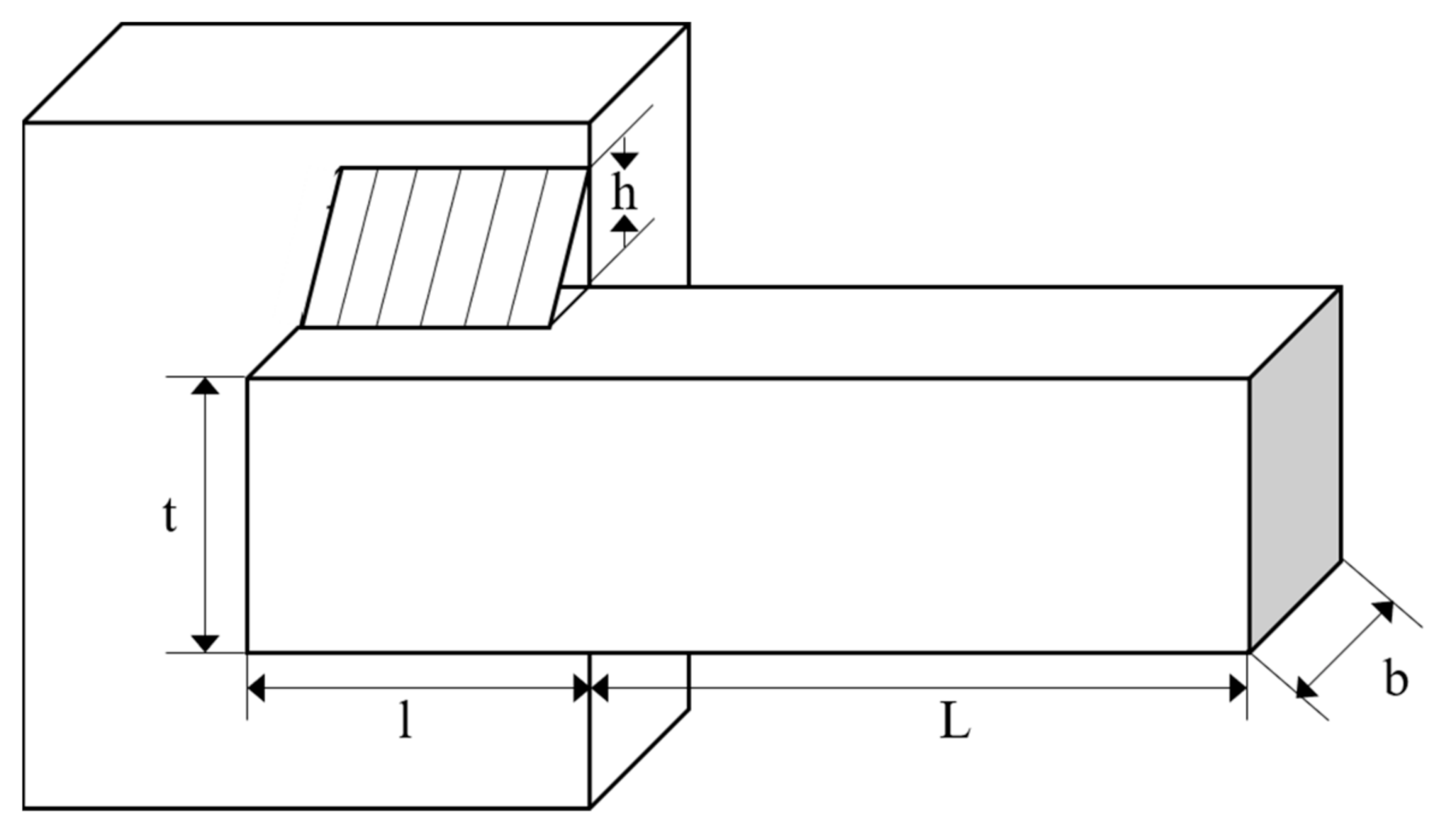

6.3. Welded Beam Design Problem

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| No | jSO #runs | jSO Mean CO | jSO Mean PD | MjSO #runs | MjSO Mean CO | MjSO Mean PD |

|---|---|---|---|---|---|---|

| f1 | 51 | 5.67E+01 | 3.93E+01 | 51 | 4.58E+01 | 3.17E+01 |

| f2 | 51 | 8.84E+01 | 3.06E+01 | 51 | 6.99E+01 | 2.92E+01 |

| f3 | 51 | 8.64E+01 | 7.00E+00 | 51 | 6.97E+01 | 7.19E+00 |

| f4 | 51 | 6.01E+01 | 1.18E+01 | 51 | 4.70E+01 | 1.13E+01 |

| f5 | 48 | 1.19E+03 | 3.28E+01 | 50 | 1.11E+03 | 3.11E+01 |

| f6 | 51 | 9.35E+01 | 8.47E+00 | 51 | 7.21E+01 | 8.84E+00 |

| f7 | 49 | 1.40E+03 | 1.05E+01 | 46 | 1.32E+03 | 1.02E+01 |

| f8 | 50 | 1.19E+03 | 3.40E+01 | 51 | 1.06E+03 | 3.32E+01 |

| f9 | 51 | 9.00E+01 | 7.45E+00 | 51 | 6.95E+01 | 7.80E+00 |

| f10 | 47 | 1.37E+03 | 8.31E+01 | 50 | 1.29E+03 | 7.08E+01 |

| f11 | 51 | 3.90E+02 | 2.13E+01 | 51 | 3.35E+02 | 1.98E+01 |

| f12 | 51 | 2.76E+02 | 4.31E+01 | 51 | 1.86E+02 | 3.40E+01 |

| f13 | 51 | 8.30E+02 | 1.35E+01 | 51 | 6.52E+02 | 1.35E+01 |

| f14 | 51 | 8.12E+02 | 2.75E+01 | 47 | 6.06E+02 | 3.25E+01 |

| f15 | 51 | 3.26E+02 | 1.81E+01 | 51 | 2.62E+02 | 1.80E+01 |

| f16 | 49 | 8.87E+02 | 1.77E+01 | 41 | 5.95E+02 | 2.61E+01 |

| f17 | 1 | 1.89E+03 | 1.47E+01 | 2 | 1.72E+03 | 2.55E+01 |

| f18 | 51 | 3.00E+02 | 2.57E+01 | 51 | 2.40E+02 | 2.40E+01 |

| f19 | 51 | 5.11E+02 | 3.04E+01 | 51 | 3.77E+02 | 2.78E+01 |

| f20 | 47 | 7.52E+02 | 3.69E+01 | 42 | 6.50E+02 | 3.44E+01 |

| f21 | 51 | 5.70E+02 | 4.52E+01 | 51 | 5.78E+02 | 5.50E+01 |

| f22 | 51 | 5.68E+01 | 7.83E+00 | 51 | 4.50E+01 | 1.11E+01 |

| f23 | 51 | 8.08E+02 | 2.96E+01 | 51 | 6.38E+02 | 2.44E+01 |

| f24 | 51 | 8.42E+02 | 2.89E+01 | 51 | 6.77E+02 | 3.64E+01 |

| f25 | 51 | 7.75E+01 | 1.85E+01 | 51 | 6.37E+01 | 2.34E+01 |

| f26 | 51 | 6.12E+01 | 6.77E+00 | 51 | 5.09E+01 | 7.10E+00 |

| f27 | 51 | 1.05E+02 | 1.74E+01 | 51 | 8.44E+01 | 1.98E+01 |

| f28 | 51 | 8.25E+01 | 2.42E+01 | 51 | 6.89E+01 | 2.71E+01 |

| f29 | 24 | 1.59E+03 | 4.41E+01 | 24 | 1.48E+03 | 3.85E+01 |

| f30 | 51 | 1.60E+02 | 1.32E+01 | 51 | 1.24E+02 | 1.31E+01 |

| No | jSO #runs | jSO Mean CO | jSO Mean PD | MjSO #runs | MjSO Mean CO | MjSO Mean PD |

|---|---|---|---|---|---|---|

| f1 | 51 | 1.07E+02 | 2.37E+01 | 51 | 9.13E+01 | 2.82E+01 |

| f2 | 51 | 2.81E+02 | 1.77E+01 | 51 | 2.21E+02 | 1.77E+01 |

| f3 | 51 | 1.90E+02 | 7.05E+00 | 51 | 1.72E+02 | 6.93E+00 |

| f4 | 51 | 1.40E+02 | 8.45E+00 | 51 | 1.11E+02 | 8.20E+00 |

| f5 | 47 | 2.32E+03 | 4.46E+01 | 48 | 2.34E+03 | 4.15E+01 |

| f6 | 51 | 1.82E+02 | 7.46E+00 | 51 | 1.52E+02 | 7.31E+00 |

| f7 | 34 | 2.49E+03 | 1.47E+01 | 36 | 2.54E+03 | 1.29E+01 |

| f8 | 48 | 2.26E+03 | 5.00E+01 | 42 | 3.34E+03 | 4.45E+01 |

| f9 | 51 | 1.77E+02 | 7.19E+00 | 51 | 1.47E+02 | 7.05E+00 |

| f10 | 17 | 2.76E+03 | 1.42E+02 | 22 | 2.59E+03 | 1.48E+02 |

| f11 | 51 | 1.17E+03 | 2.70E+01 | 51 | 1.22E+03 | 2.87E+01 |

| f12 | 51 | 4.77E+02 | 1.85E+01 | 51 | 5.09E+02 | 2.00E+01 |

| f13 | 51 | 8.72E+02 | 9.56E+00 | 51 | 9.66E+02 | 9.88E+00 |

| f14 | 29 | 2.01E+03 | 2.38E+01 | 26 | 2.88E+03 | 1.80E+01 |

| f15 | 51 | 9.12E+02 | 1.45E+01 | 51 | 1.05E+03 | 1.71E+01 |

| f16 | 30 | 2.86E+03 | 1.61E+01 | 29 | 2.80E+03 | 1.65E+01 |

| f17 | 12 | 2.82E+03 | 5.36E+01 | 7 | 3.74E+03 | 7.99E+01 |

| f18 | 20 | 2.50E+03 | 6.02E+00 | 15 | 3.20E+03 | 8.67E+00 |

| f19 | 51 | 1.41E+03 | 1.46E+01 | 51 | 1.42E+03 | 1.30E+01 |

| f20 | 14 | 2.93E+03 | 3.33E+01 | 8 | 2.85E+03 | 6.11E+01 |

| f21 | 48 | 2.36E+03 | 4.28E+01 | 50 | 2.25E+03 | 4.27E+01 |

| f22 | 51 | 1.15E+02 | 6.68E+00 | 51 | 9.66E+01 | 6.54E+00 |

| f23 | 50 | 2.01E+03 | 4.25E+01 | 51 | 1.86E+03 | 3.62E+01 |

| f24 | 51 | 1.82E+03 | 4.62E+01 | 51 | 1.79E+03 | 3.94E+01 |

| f25 | 51 | 1.27E+02 | 7.39E+00 | 51 | 1.04E+02 | 7.14E+00 |

| f26 | 51 | 1.68E+03 | 3.07E+01 | 51 | 1.15E+03 | 1.80E+01 |

| f27 | 51 | 3.44E+02 | 1.59E+01 | 51 | 2.53E+02 | 2.03E+01 |

| f28 | 51 | 1.58E+02 | 1.15E+01 | 51 | 1.31E+02 | 9.79E+00 |

| f29 | 29 | 2.77E+03 | 5.36E+01 | 24 | 2.72E+03 | 4.26E+01 |

| f30 | 51 | 2.85E+02 | 1.26E+01 | 51 | 2.15E+02 | 1.34E+01 |

| No | jSO #runs | jSO Mean CO | jSO Mean PD | MjSO #runs | MjSO Mean CO | MjSO Mean PD |

|---|---|---|---|---|---|---|

| f1 | 51 | 1.20E+02 | 9.52E+00 | 51 | 1.32E+02 | 1.74E+01 |

| f2 | 51 | 3.95E+02 | 1.28E+01 | 51 | 3.68E+02 | 1.32E+01 |

| f3 | 51 | 2.43E+02 | 7.88E+00 | 51 | 2.52E+02 | 7.55E+00 |

| f4 | 51 | 1.84E+02 | 9.18E+00 | 51 | 1.70E+02 | 8.41E+00 |

| f5 | 44 | 2.97E+03 | 5.62E+01 | 44 | 2.83E+03 | 5.57E+01 |

| f6 | 51 | 1.95E+02 | 7.93E+00 | 51 | 1.98E+02 | 7.67E+00 |

| f7 | 35 | 2.99E+03 | 1.86E+01 | 34 | 3.03E+03 | 1.77E+01 |

| f8 | 45 | 3.00E+03 | 5.46E+01 | 44 | 2.90E+03 | 5.47E+01 |

| f9 | 51 | 1.90E+02 | 7.75E+00 | 51 | 1.89E+02 | 7.50E+00 |

| f10 | 14 | 3.49E+03 | 1.29E+02 | 17 | 3.43E+03 | 1.54E+02 |

| f11 | 51 | 1.84E+03 | 3.05E+01 | 51 | 1.85E+03 | 2.97E+01 |

| f12 | 51 | 3.67E+02 | 1.08E+01 | 51 | 4.43E+02 | 1.25E+01 |

| f13 | 51 | 7.42E+02 | 1.12E+01 | 51 | 9.27E+02 | 1.15E+01 |

| f14 | 51 | 1.67E+03 | 1.33E+01 | 50 | 1.84E+03 | 1.43E+01 |

| f15 | 51 | 8.34E+02 | 1.20E+01 | 51 | 1.13E+03 | 1.65E+01 |

| f16 | 41 | 3.47E+03 | 8.42E+00 | 37 | 3.41E+03 | 1.12E+01 |

| f17 | 15 | 3.58E+03 | 7.67E+00 | 10 | 3.52E+03 | 2.62E+01 |

| f18 | 51 | 8.67E+02 | 9.25E+00 | 51 | 1.14E+03 | 9.77E+00 |

| f19 | 51 | 1.46E+03 | 1.23E+01 | 51 | 1.63E+03 | 1.37E+01 |

| f20 | 24 | 3.43E+03 | 2.04E+01 | 20 | 4.44E+03 | 2.19E+01 |

| f21 | 47 | 2.94E+03 | 5.73E+01 | 43 | 2.93E+03 | 5.60E+01 |

| f22 | 37 | 1.64E+03 | 3.82E+01 | 36 | 9.58E+02 | 3.10E+01 |

| f23 | 48 | 2.69E+03 | 5.35E+01 | 47 | 2.64E+03 | 4.69E+01 |

| f24 | 51 | 2.59E+03 | 4.76E+01 | 50 | 2.12E+03 | 3.38E+01 |

| f25 | 51 | 1.94E+02 | 8.44E+00 | 51 | 1.69E+02 | 7.77E+00 |

| f26 | 51 | 2.36E+03 | 3.69E+01 | 51 | 1.40E+03 | 1.43E+01 |

| f27 | 51 | 3.00E+02 | 1.05E+01 | 51 | 2.15E+02 | 8.77E+00 |

| f28 | 51 | 1.71E+02 | 9.04E+00 | 51 | 2.54E+02 | 1.03E+01 |

| f29 | 32 | 3.34E+03 | 5.63E+01 | 23 | 3.34E+03 | 5.09E+01 |

| f30 | 51 | 3.28E+02 | 1.11E+01 | 51 | 4.22E+02 | 3.08E+01 |

| No | jSO #runs | jSO Mean CO | jSO Mean PD | MjSO #runs | MjSO Mean CO | MjSO Mean PD |

|---|---|---|---|---|---|---|

| f1 | 51 | 1.37E+02 | 9.05E+00 | 51 | 1.94E+02 | 1.15E+01 |

| f2 | 51 | 5.10E+02 | 1.09E+01 | 51 | 5.80E+02 | 1.04E+01 |

| f3 | 51 | 3.75E+02 | 9.86E+00 | 51 | 4.08E+02 | 9.16E+00 |

| f4 | 51 | 2.01E+02 | 9.29E+00 | 51 | 2.32E+02 | 9.04E+00 |

| f5 | 50 | 3.93E+03 | 7.09E+01 | 49 | 4.03E+03 | 5.59E+01 |

| f6 | 51 | 2.06E+02 | 9.48E+00 | 51 | 2.79E+02 | 8.85E+00 |

| f7 | 46 | 4.12E+03 | 2.19E+01 | 43 | 4.02E+03 | 2.07E+01 |

| f8 | 45 | 4.04E+03 | 6.76E+01 | 51 | 3.90E+03 | 6.33E+01 |

| f9 | 51 | 2.00E+02 | 9.04E+00 | 51 | 2.62E+02 | 8.70E+00 |

| f10 | 13 | 4.46E+03 | 3.06E+02 | 13 | 4.52E+03 | 2.13E+02 |

| f11 | 51 | 5.27E+02 | 9.79E+00 | 51 | 1.17E+03 | 1.14E+01 |

| f12 | 51 | 3.30E+02 | 1.02E+01 | 51 | 4.53E+02 | 9.08E+00 |

| f13 | 51 | 7.08E+02 | 1.34E+01 | 51 | 9.23E+02 | 1.39E+01 |

| f14 | 51 | 1.01E+03 | 1.02E+01 | 51 | 1.28E+03 | 1.30E+01 |

| f15 | 51 | 4.84E+02 | 1.03E+01 | 51 | 9.30E+02 | 9.53E+00 |

| f16 | 49 | 4.43E+03 | 1.16E+01 | 47 | 4.60E+03 | 9.92E+00 |

| f17 | 33 | 4.66E+03 | 2.45E+01 | 49 | 4.04E+03 | 7.65E+01 |

| f18 | 51 | 5.82E+02 | 9.80E+00 | 51 | 5.88E+02 | 1.40E+01 |

| f19 | 51 | 6.38E+02 | 1.07E+01 | 51 | 1.48E+03 | 1.20E+01 |

| f20 | 26 | 4.64E+03 | 1.16E+01 | 26 | 4.54E+03 | 7.67E+01 |

| f21 | 49 | 3.87E+03 | 6.44E+01 | 51 | 2.41E+02 | 9.42E+00 |

| f22 | 14 | 4.39E+03 | 2.67E+01 | 49 | 3.95E+03 | 6.22E+01 |

| f23 | 50 | 1.72E+03 | 2.38E+01 | 51 | 7.70E+02 | 9.25E+00 |

| f24 | 51 | 1.01E+03 | 1.18E+01 | 51 | 2.12E+02 | 8.49E+00 |

| f25 | 51 | 2.11E+02 | 9.56E+00 | 51 | 2.53E+02 | 9.72E+00 |

| f26 | 51 | 7.98E+02 | 9.50E+00 | 51 | 2.68E+02 | 8.62E+00 |

| f27 | 51 | 3.31E+02 | 9.28E+00 | 51 | 2.93E+02 | 8.93E+00 |

| f28 | 51 | 2.23E+02 | 9.42E+00 | 51 | 2.31E+02 | 8.99E+00 |

| f29 | 51 | 4.38E+03 | 4.21E+01 | 51 | 4.13E+03 | 4.22E+01 |

| f30 | 51 | 5.09E+02 | 1.08E+01 | 51 | 4.55E+02 | 1.01E+01 |

| Rank | Name | F-Rank |

|---|---|---|

| 0 | MjSO | 2.62 |

| 1 | jSO | 3.43 |

| 2 | SALSHADE-cnEPSin | 3.55 |

| 3 | EBLSHADE | 3.6 |

| 4 | ELSHADE-SPACMA | 3.82 |

| 5 | LSHADE | 3.98 |

| Rank | Name | F-Rank |

|---|---|---|

| 0 | MjSO | 2.1 |

| 1 | jSO | 3.4 |

| 2 | EBLSHADE | 3.43 |

| 3 | SALSHADE-cnEPSin | 3.95 |

| 4 | LSHADE | 4.05 |

| 5 | ELSHADE-SPACMA | 4.07 |

| Rank | Name | F-Rank |

|---|---|---|

| 0 | MjSO | 1.9 |

| 1 | ELSHADE-SPACMA | 3.27 |

| 2 | jSO | 3.4 |

| 3 | SALSHADE-cnEPSin | 4.05 |

| 4 | LSHADE | 4.15 |

| 5 | EBLSHADE | 4.23 |

| Rank | Name | F-Rank |

|---|---|---|

| 0 | MjSO | 1.87 |

| 1 | ELSHADE-SPACMA | 3.35 |

| 2 | SALSHADE-cnEPSin | 3.4 |

| 3 | jSO | 3.78 |

| 4 | EBLSHADE | 3.93 |

| 5 | LSHADE | 4.67 |

| D | Chi-sq’ | Prob > Chi-sq’(p) | Critical Value |

|---|---|---|---|

| 10 | 13.73819163 | 1.74E-02 | 11.07 |

| 30 | 31.07891492 | 9.04E-06 | 11.07 |

| 50 | 38.65745856 | 2.78E-07 | 11.07 |

| 100 | 38.16356513 | 3.50E-07 | 11.07 |
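The p-values in the table above can be checked against the chi-square distribution. The sketch below assumes that the Friedman statistic is compared with a chi-square distribution with k − 1 = 5 degrees of freedom (six algorithms are ranked) and that the critical value 11.07 corresponds to a significance level of 0.05. Both are inferred from the table rather than stated in it. For 5 degrees of freedom the upper-tail probability has a closed form, so only the standard library is needed. The function name is hypothetical.

```python
import math

def chi2_sf_df5(x):
    """Upper-tail probability of a chi-square variable with 5 degrees of freedom."""
    root = math.sqrt(x)
    tail = math.erfc(root / math.sqrt(2.0))
    series = math.sqrt(2.0 / math.pi) * math.exp(-x / 2.0) * (root + root ** 3 / 3.0)
    return tail + series

CRITICAL_VALUE = 11.07  # chi-square, 5 degrees of freedom, alpha = 0.05 (assumed)
statistics = {10: 13.73819163, 30: 31.07891492, 50: 38.65745856, 100: 38.16356513}

for dim, stat in statistics.items():
    p_value = chi2_sf_df5(stat)
    print(f"D = {dim}: p = {p_value:.2e}, reject H0: {stat > CRITICAL_VALUE}")
```

All four statistics exceed the critical value, so under these assumptions the hypothesis that the six algorithms perform equally is rejected in every dimension.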

References

- Dhiman, G.; Kumar, V. Multi-objective spotted hyena optimizer: A multi-objective optimization algorithm for engineering problems. Knowl. Based Syst. 2018, 150, 175–197.

- Spall, J. Introduction to Stochastic Search and Optimization; Wiley Interscience: New York, NY, USA, 2003.

- Parejo, J.A.; Ruiz-Cortés, A.; Lozano, S.; Fernandez, P. Metaheuristic optimization frameworks: A survey and benchmarking. Soft Comput. 2012, 16, 527–561.

- Zhou, A.; Qu, B.-Y.; Li, H.; Zhao, S.-Z.; Suganthan, P.N.; Zhang, Q. Multiobjective evolutionary algorithms: A survey of the state of the art. Swarm Evol. Comput. 2011, 1, 32–49.

- Droste, S.; Jansen, T.; Wegener, I. Upper and lower bounds for randomized search heuristics in black-box optimization. Theory Comput. Syst. 2006, 39, 525–544.

- Hoos, H.H.; Stützle, T. Stochastic Local Search: Foundations and Applications; Elsevier: Amsterdam, The Netherlands, 2004.

- Holland, J. Adaptation in Natural and Artificial Systems: An Introductory Analysis with Applications to Biology, Control, and Artificial Intelligence; University of Michigan Press: Ann Arbor, MI, USA, 1975; pp. 106–111.

- Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN’95-International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995; IEEE; pp. 1942–1948.

- Storn, R.; Price, K. Differential evolution—A simple and efficient heuristic for global optimization over continuous spaces. J. Glob. Optim. 1997, 11, 341–359.

- Wienholt, W. Minimizing the system error in feedforward neural networks with evolution strategy. In Proceedings of the International Conference on Artificial Neural Networks, Amsterdam, The Netherlands, 13–16 September 1993; Springer: London, UK, 1993; pp. 490–493.

- Eltaeib, T.; Mahmood, A. Differential evolution: A survey and analysis. Appl. Sci. 2018, 8, 1945.

- Arafa, M.; Sallam, E.A.; Fahmy, M. An enhanced differential evolution optimization algorithm. In Proceedings of the 2014 Fourth International Conference on Digital Information and Communication Technology and Its Applications (DICTAP), Bangkok, Thailand, 6–8 May 2014; pp. 216–225.

- Liu, X.-F.; Zhan, Z.-H.; Zhang, J. Dichotomy guided based parameter adaptation for differential evolution. In Proceedings of the 2015 Annual Conference on Genetic and Evolutionary Computation, Madrid, Spain, 11–15 July 2015; pp. 289–296.

- Sallam, K.M.; Sarker, R.A.; Essam, D.L.; Elsayed, S.M. Neurodynamic differential evolution algorithm and solving CEC2015 competition problems. In Proceedings of the 2015 IEEE Congress on Evolutionary Computation (CEC), Sendai, Japan, 25–28 May 2015; pp. 1033–1040.

- Viktorin, A.; Pluhacek, M.; Senkerik, R. Network based linear population size reduction in SHADE. In Proceedings of the 2016 International Conference on Intelligent Networking and Collaborative Systems (INCoS), Ostrava, Czech Republic, 7–9 September 2016; pp. 86–93.

- Bujok, P.; Tvrdík, J.; Poláková, R. Evaluating the performance of shade with competing strategies on CEC 2014 single-parameter test suite. In Proceedings of the 2016 IEEE Congress on Evolutionary Computation (CEC), Vancouver, BC, Canada, 24–29 July 2016; pp. 5002–5009.

- Viktorin, A.; Pluhacek, M.; Senkerik, R. Success-history based adaptive differential evolution algorithm with multi-chaotic framework for parent selection performance on CEC2014 benchmark set. In Proceedings of the 2016 IEEE Congress on Evolutionary Computation (CEC), Vancouver, BC, Canada, 24–29 July 2016; pp. 4797–4803.

- Poláková, R.; Tvrdík, J.; Bujok, P. L-SHADE with competing strategies applied to CEC2015 learning-based test suite. In Proceedings of the 2016 IEEE Congress on Evolutionary Computation (CEC), Vancouver, BC, Canada, 24–29 July 2016; pp. 4790–4796.

- Poláková, R.; Tvrdík, J.; Bujok, P. Evaluating the performance of L-SHADE with competing strategies on CEC2014 single parameter-operator test suite. In Proceedings of the 2016 IEEE Congress on Evolutionary Computation (CEC), Vancouver, BC, Canada, 24–29 July 2016; pp. 1181–1187.

- Liu, Z.-G.; Ji, X.-H.; Yang, Y. Hierarchical differential evolution algorithm combined with multi-cross operation. Expert Syst. Appl. 2019, 130, 276–292.

- Bujok, P.; Tvrdík, J. Adaptive differential evolution: SHADE with competing crossover strategies. In Proceedings of the International Conference on Artificial Intelligence and Soft Computing, Zakopane, Poland, 14–18 June 2015; pp. 329–339.

- Viktorin, A.; Senkerik, R.; Pluhacek, M.; Kadavy, T.; Zamuda, A. Distance based parameter adaptation for differential evolution. In Proceedings of the 2017 IEEE Symposium Series on Computational Intelligence (SSCI), Honolulu, HI, USA, 27 November–1 December 2017; pp. 1–7.

- Viktorin, A.; Senkerik, R.; Pluhacek, M.; Kadavy, T. Distance vs. Improvement Based Parameter Adaptation in SHADE. In Proceedings of the Computer Science On-Line Conference, Vsetin, Czech Republic, 25–28 April 2018; pp. 455–464.

- Molina, D.; Herrera, F. Applying Memetic algorithm with Improved L-SHADE and Local Search Pool for the 100-digit challenge on Single Objective Numerical Optimization. In Proceedings of the 2019 IEEE Congress on Evolutionary Computation (CEC), Wellington, New Zealand, 10–13 June 2019; pp. 7–13.

- Awad, N.H.; Ali, M.Z.; Suganthan, P.N. Ensemble sinusoidal differential covariance matrix adaptation with Euclidean neighborhood for solving CEC2017 benchmark problems. In Proceedings of the 2017 IEEE Congress on Evolutionary Computation (CEC), San Sebastian, Spain, 5–8 June 2017; pp. 372–379.

- Zhao, F.; He, X.; Yang, G.; Ma, W.; Zhang, C.; Song, H. A hybrid iterated local search algorithm with adaptive perturbation mechanism by success-history based parameter adaptation for differential evolution (SHADE). Eng. Optim. 2019.

- Das, S.; Mullick, S.S.; Suganthan, P.N. Recent advances in differential evolution—An updated survey. Swarm Evol. Comput. 2016, 27, 1–30.

- Hansen, N. The CMA evolution strategy: A comparing review. In Towards a New Evolutionary Computation; Springer: Berlin/Heidelberg, Germany, 2006; pp. 75–102.

- Das, S.; Suganthan, P.N. Differential evolution: A survey of the state-of-the-art. IEEE Trans. Evol. Comput. 2010, 15, 4–31.

- Al-Dabbagh, R.D.; Neri, F.; Idris, N.; Baba, M.S. Algorithmic design issues in adaptive differential evolution schemes: Review and taxonomy. Swarm Evol. Comput. 2018, 43, 284–311.

- Tanabe, R.; Fukunaga, A.S. Improving the search performance of SHADE using linear population size reduction. In Proceedings of the 2014 IEEE Congress on Evolutionary Computation (CEC), Beijing, China, 6–11 July 2014; pp. 1658–1665.

- Brest, J.; Maučec, M.S.; Bošković, B. Single objective real-parameter optimization: Algorithm jSO. In Proceedings of the 2017 IEEE Congress on Evolutionary Computation (CEC), San Sebastian, Spain, 5–8 June 2017; pp. 1311–1318.

- Mohamed, A.W.; Hadi, A.A.; Fattouh, A.M.; Jambi, K.M. LSHADE with semi-parameter adaptation hybrid with CMA-ES for solving CEC 2017 benchmark problems. In Proceedings of the 2017 IEEE Congress on Evolutionary Computation (CEC), San Sebastian, Spain, 5–8 June 2017; pp. 145–152.

- Hadi, A.A.; Wagdy, A.; Jambi, K. Single-Objective Real-Parameter Optimization: Enhanced LSHADE-SPACMA Algorithm; King Abdulaziz Univ.: Jeddah, Saudi Arabia, 2018.

- Salgotra, R.; Singh, U.; Singh, G. Improving the adaptive properties of lshade algorithm for global optimization. In Proceedings of the 2019 International Conference on Automation, Computational and Technology Management (ICACTM), London, UK, 24–26 April 2019; pp. 400–407.

- Yang, M.; Li, C.; Cai, Z.; Guan, J. Differential evolution with auto-enhanced population diversity. IEEE Trans. Cybern. 2014, 45, 302–315.

- Hongtan, C.; Zhaoguang, L. Improved Differential Evolution with Parameter Adaption Based on Population Diversity. In Proceedings of the 2018 IEEE 3rd International Conference on Image, Vision and Computing (ICIVC), Chongqing, China, 27–29 June 2018; pp. 901–905.

- Wang, J.-Z.; Sun, T.-Y. Control the Diversity of Population with Mutation Strategy and Fuzzy Inference System for Differential Evolution Algorithm. Int. J. Fuzzy Syst. 2020, 22, 1979–1992.

- He, X.; Zhou, Y. Enhancing the performance of differential evolution with covariance matrix self-adaptation. Appl. Soft Comput. 2018, 64, 227–243.

- Awad, N.H.; Ali, M.Z.; Mallipeddi, R.; Suganthan, P.N. An improved differential evolution algorithm using efficient adapted surrogate model for numerical optimization. Inf. Sci. 2018, 451, 326–347.

- Subhashini, K.; Chinta, P. An augmented animal migration optimization algorithm using worst solution elimination approach in the backdrop of differential evolution. Evol. Intell. 2019, 12, 273–303.

- Awad, N.; Ali, M.; Liang, J.; Qu, B.; Suganthan, P. Problem Definitions and Evaluation Criteria for the CEC 2017 Special Session and Competition on Single Objective Real-Parameter Numerical Optimization. In Proceedings of the 2017 IEEE Congress on Evolutionary Computation (CEC), San Sebastian, Spain, 5–8 June 2017.

- Zhang, J.; Sanderson, A.C. JADE: Adaptive differential evolution with optional external archive. IEEE Trans. Evol. Comput. 2009, 13, 945–958.

- Brest, J.; Maučec, M.S.; Bošković, B. iL-SHADE: Improved L-SHADE algorithm for single objective real-parameter optimization. In Proceedings of the 2016 IEEE Congress on Evolutionary Computation (CEC), Vancouver, Canada, 24–29 July 2016; pp. 1188–1195.

- Fan, Q.; Yan, X. Self-adaptive differential evolution algorithm with zoning evolution of control parameters and adaptive mutation strategies. IEEE Trans. Cybern. 2015, 46, 219–232.

- Wang, D.; Lu, H.; Bo, C. Visual tracking via weighted local cosine similarity. IEEE Trans. Cybern. 2014, 45, 1838–1850.

- Van Dongen, S.; Enright, A.J. Metric distances derived from cosine similarity and Pearson and Spearman correlations. arXiv 2012, arXiv:1208.3145.

- Luo, J.; He, F.; Yong, J. An efficient and robust bat algorithm with fusion of opposition-based learning and whale optimization algorithm. Intell. Data Anal. 2020, 24, 581–606.

- Shekhawat, S.; Saxena, A. Development and applications of an intelligent crow search algorithm based on opposition based learning. ISA Trans. 2020, 99, 210–230.

- Ergezer, M.; Simon, D. Mathematical and experimental analyses of oppositional algorithms. IEEE Trans. Cybern. 2014, 44, 2178–2189.

- Tizhoosh, H.R. Opposition-based reinforcement learning. J. Adv. Comput. Intell. Intell. Inform. 2006, 10.

- Rahnamayan, S.; Tizhoosh, H.R.; Salama, M.M. Opposition-based differential evolution. IEEE Trans. Evol. Comput. 2008, 12, 64–79.

- Rahnamayan, S.; Tizhoosh, H.R.; Salama, M.M. Quasi-oppositional differential evolution. In Proceedings of the 2007 IEEE Congress on Evolutionary Computation, Singapore, 25–28 September 2007; pp. 2229–2236.

- Demšar, J. Statistical comparisons of classifiers over multiple data sets. J. Mach. Learn. Res. 2006, 7, 1–30.

- Hutter, F.; Hoos, H.H.; Leyton-Brown, K.; Stützle, T. ParamILS: An automatic algorithm configuration framework. J. Artif. Intell. Res. 2009, 36, 267–306.

- Mohamed, A.W.; Hadi, A.A.; Jambi, K.M. Novel mutation strategy for enhancing SHADE and LSHADE algorithms for global numerical optimization. Swarm Evol. Comput. 2019, 50, 100455.

- Salgotra, R.; Singh, U.; Saha, S.; Nagar, A. New improved salshade-cnepsin algorithm with adaptive parameters. In Proceedings of the 2019 IEEE Congress on Evolutionary Computation (CEC), Wellington, New Zealand, 10–13 June 2019; pp. 3150–3156.

- Ester, M.; Kriegel, H.-P.; Sander, J.; Xu, X. A density-based algorithm for discovering clusters in large spatial databases with noise. In Proceedings of the KDD, Menlo Park, CA, USA, August 1996; pp. 226–231.

- Deza, M.M.; Deza, E. Encyclopedia of distances. In Encyclopedia of Distances; Springer: Berlin/Heidelberg, Germany, 2009; pp. 1–583.

- Poláková, R.; Tvrdík, J.; Bujok, P.; Matoušek, R. Population-size adaptation through diversity-control mechanism for differential evolution. In Proceedings of the MENDEL, 22nd International Conference on Soft Computing, Brno, Czech Republic, 8–10 June 2016; pp. 49–56.

- Varaee, H.; Ghasemi, M.R. Engineering optimization based on ideal gas molecular movement algorithm. Eng. Comput. 2017, 33, 71–93.

- Askarzadeh, A. A novel metaheuristic method for solving constrained engineering optimization problems: Crow search algorithm. Comput. Struct. 2016, 169, 1–12.

| ID | Functions | Optima |

|---|---|---|

| F1 | Shifted and Rotated Bent Cigar Function | 100 |

| F2 | Shifted and Rotated Sum of Different Power Function | 200 |

| F3 | Shifted and Rotated Zakharov Function | 300 |

| F4 | Shifted and Rotated Rosenbrock’s Function | 400 |

| F5 | Shifted and Rotated Rastrigin’s Function | 500 |

| F6 | Shifted and Rotated Expanded Schaffer’s F6 Function | 600 |

| F7 | Shifted and Rotated Lunacek Bi-Rastrigin Function | 700 |

| F8 | Shifted and Rotated Non-Continuous Rastrigin’s Function | 800 |

| F9 | Shifted and Rotated Levy Function | 900 |

| F10 | Shifted and Rotated Schwefel’s Function | 1000 |

| F11 | Hybrid Function 1 (N = 3) | 1100 |

| F12 | Hybrid Function 2 (N = 3) | 1200 |

| F13 | Hybrid Function 3 (N = 3) | 1300 |

| F14 | Hybrid Function 4 (N = 4) | 1400 |

| F15 | Hybrid Function 5 (N = 4) | 1500 |

| F16 | Hybrid Function 6 (N = 4) | 1600 |

| F17 | Hybrid Function 7 (N = 5) | 1700 |

| F18 | Hybrid Function 8 (N = 5) | 1800 |

| F19 | Hybrid Function 9 (N = 5) | 1900 |

| F20 | Hybrid Function 10 (N = 6) | 2000 |

| F21 | Composition Function 1 (N = 3) | 2100 |

| F22 | Composition Function 2 (N = 3) | 2200 |

| F23 | Composition Function 3 (N = 4) | 2300 |

| F24 | Composition Function 4 (N = 4) | 2400 |

| F25 | Composition Function 5 (N = 5) | 2500 |

| F26 | Composition Function 6 (N = 5) | 2600 |

| F27 | Composition Function 7 (N = 6) | 2700 |

| F28 | Composition Function 8 (N = 6) | 2800 |

| F29 | Composition Function 9 (N = 3) | 2900 |

| F30 | Composition Function 10 (N = 3) | 3000 |

| Algorithm | Parameter Setting |
|---|---|
| MjSO | = , = 4, = 5, = 0.5, = 0.5, = , = 0.25 = |
| jSO | = , = 4, = 5, = 0.3, = 0.8, = , = 0.25 = |
| LSHADE | = , = 4, = 6, = 0.5, = 0.5, = , P = 0.11 |
| EBLSHADE | = , = 4, = 5, = 0.5, = 0.5, = , P = 0.11 |
| ELSHADE-SPACMA | = , = 4, = 5, = 0.5, = 0.8, = 0.3, = 0.15 |
| SALSHADE-cnEPSin | = , = 4, = 5, = 0.5, = 0.5, , , |

| No | EBLSHADE Mean | EBLSHADE Std | SALSHADE-cnEPSin Mean | SALSHADE-cnEPSin Std | jSO Mean | jSO Std | LSHADE Mean | LSHADE Std | ELSHADE-SPACMA Mean | ELSHADE-SPACMA Std | MjSO Mean | MjSO Std |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| f1 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | 0.00E+00 | 0.00E+00 |

| f2 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | 0.00E+00 | 0.00E+00 |

| f3 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | 0.00E+00 | 0.00E+00 |

| f4 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | 0.00E+00 | 0.00E+00 |

| f5 | >2.52E+00 | 8.98E-01 | >1.99E+00 | 6.62E-01 | >1.76E+00 | 7.60E-01 | >2.57E+00 | 8.37E-01 | >3.87E+00 | 2.02E+00 | 1.35E+00 | 9.10E-01 |

| f6 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | 0.00E+00 | 0.00E+00 |

| f7 | >1.22E+01 | 7.09E-01 | >1.19E+01 | 5.67E-01 | >1.18E+01 | 6.07E-01 | >1.22E+01 | 7.05E-01 | >1.33E+01 | 1.75E+00 | 1.15E+01 | 5.99E-01 |

| f8 | >2.23E+00 | 9.02E-01 | >1.99E+00 | 7.63E-01 | >1.95E+00 | 7.44E-01 | >2.52E+00 | 6.99E-01 | >4.10E+00 | 2.51E+00 | 1.37E+00 | 5.87E-01 |

| f9 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | 0.00E+00 | 0.00E+00 |

| f10 | >2.50E+01 | 3.99E+01 | <7.36E+00 | 4.49E+01 | >3.59E+01 | 5.55E+01 | >3.86E+01 | 5.50E+01 | >2.27E+01 | 4.84E+01 | 1.53E+01 | 3.27E+01 |

| f11 | >2.74E-01 | 5.48E-01 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | >3.65E-01 | 6.94E-01 | ≈0.00E+00 | 0.00E+00 | 0.00E+00 | 0.00E+00 |

| f12 | <1.22E+01 | 3.59E+01 | <1.19E+02 | 7.49E+01 | <2.66E+00 | 1.68E+01 | >3.89E+01 | 5.68E+01 | >2.85E+01 | 5.14E+01 | 2.79E+01 | 5.02E+01 |

| f13 | >3.64E+00 | 2.23E+00 | >4.83E+00 | 2.30E+00 | >2.96E+00 | 2.35E+00 | >3.88E+00 | 2.37E+00 | >3.57E+00 | 2.21E+00 | 2.49E+00 | 2.50E+00 |

| f14 | >5.38E-01 | 8.03E-01 | ≈0.00E+00 | 2.36E-01 | >5.85E-02 | 2.36E-01 | >7.73E-01 | 9.05E-01 | >7.80E-02 | 2.70E-01 | 0.00E+00 | 0.00E+00 |

| f15 | ≈1.44E-01 | 2.03E-01 | ≈2.70E-01 | 2.03E+00 | ≈2.21E-01 | 2.00E-01 | ≈1.99E-01 | 2.12E-01 | ≈2.51E-01 | 2.17E-01 | 1.92E-01 | 2.20E-01 |

| f16 | ≈4.34E-01 | 2.23E-01 | ≈6.25E-01 | 2.59E-01 | ≈5.69E-01 | 2.64E-01 | ≈3.83E-01 | 1.64E-01 | ≈5.62E-01 | 2.55E-01 | 5.64E-01 | 2.76E-01 |

| f17 | ≈1.23E-01 | 1.51E-01 | ≈1.77E-01 | 2.41E-01 | ≈5.02E-01 | 3.48E-01 | ≈1.06E-01 | 1.31E-01 | ≈1.39E-01 | 1.44E-01 | 3.54E-01 | 3.11E-01 |

| f18 | ≈1.79E-01 | 1.95E-01 | ≈4.49E-01 | 5.43E+00 | ≈3.08E-01 | 1.95E-01 | ≈2.15E-01 | 1.97E-01 | ≈7.10E-01 | 2.80E+00 | 3.06E-01 | 1.99E-01 |

| f19 | ≈9.06E-03 | 1.09E-02 | ≈1.97E-02 | 3.01E-02 | ≈1.07E-02 | 1.25E-02 | ≈1.05E-02 | 1.10E-02 | ≈1.55E-02 | 1.14E-02 | 1.60E-02 | 2.30E-02 |

| f20 | <1.22E-02 | 6.12E-02 | ≈3.12E-01 | 4.01E-01 | ≈3.43E-01 | 1.29E-01 | <1.22E-02 | 6.06E-02 | ≈1.41E-01 | 1.57E-01 | 3.12E-01 | 0.00E+00 |

| f21 | >1.56E+02 | 5.12E+01 | ≈1.00E+02 | 5.13E+01 | >1.32E+02 | 4.84E+01 | >1.50E+02 | 5.14E+01 | ≈1.02E+02 | 1.48E+01 | 1.10E+02 | 3.11E+01 |

| f22 | ≈1.00E+02 | 1.01E-01 | ≈1.00E+02 | 6.26E-02 | ≈1.00E+02 | 0.00E+00 | ≈1.00E+02 | 4.01E-02 | ≈1.00E+02 | 1.21E-01 | 1.00E+02 | 8.17E-14 |

| f23 | >3.03E+02 | 1.71E+00 | >3.01E+02 | 1.43E+00 | >3.01E+02 | 1.59E+00 | >3.03E+02 | 1.65E+00 | >3.04E+02 | 2.30E+00 | 3.00E+02 | 9.63E-01 |

| f24 | >3.16E+02 | 5.46E+01 | >3.29E+02 | 7.97E+01 | >2.97E+02 | 7.93E+01 | >3.21E+02 | 4.52E+01 | >2.91E+02 | 9.54E+01 | 2.42E+02 | 1.14E+02 |

| f25 | >4.15E+02 | 2.24E+01 | >4.43E+02 | 2.22E+01 | >4.06E+02 | 1.75E+01 | >4.09E+02 | 1.95E+01 | >4.13E+02 | 2.18E+01 | 3.95E+02 | 1.37E+01 |

| f26 | ≈3.00E+02 | 0.00E+00 | ≈3.00E+02 | 0.00E+00 | ≈3.00E+02 | 0.00E+00 | ≈3.00E+02 | 0.00E+00 | ≈3.00E+02 | 0.00E+00 | 3.00E+02 | 0.00E+00 |

| f27 | >3.89E+02 | 1.39E-01 | >3.88E+02 | 1.66E+00 | >3.89E+02 | 2.26E-01 | >3.89E+02 | 1.78E-01 | >3.89E+02 | 1.67E-01 | 3.87E+02 | 1.63E+00 |

| f28 | >3.47E+02 | 1.10E+02 | ≈3.00E+02 | 1.23E+02 | >3.39E+02 | 9.65E+01 | >3.58E+02 | 1.18E+02 | >3.25E+02 | 1.04E+02 | 3.00E+02 | 0.00E+00 |

| f29 | >2.33E+02 | 2.65E+00 | ≈2.28E+02 | 1.56E+00 | >2.34E+02 | 2.96E+00 | >2.34E+02 | 2.78E+00 | ≈2.30E+02 | 2.26E+00 | 2.30E+02 | 2.10E+00 |

| f30 | <3.24E+04 | 1.60E+05 | ≈3.94E+02 | 9.42E+04 | ≈3.95E+02 | 4.50E-02 | >4.05E+02 | 2.08E+01 | >4.02E+02 | 1.77E+01 | 3.96E+02 | 9.44E+00 |

| NO | EBLSHADE Mean | EBLSHADE Std | SALSHADE-cnEPSin Mean | SALSHADE-cnEPSin Std | jSO Mean | jSO Std | LSHADE Mean | LSHADE Std | ELSHADE-SPACMA Mean | ELSHADE-SPACMA Std | MjSO Mean | MjSO Std |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| f1 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | 0.00E+00 | 0.00E+00 |

| f2 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | 0.00E+00 | 0.00E+00 |

| f3 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | 0.00E+00 | 0.00E+00 |

| f4 | ≈5.86E+01 | 3.11E-14 | <4.90E+01 | 3.32E+00 | ≈5.87E+01 | 7.78E-01 | ≈5.86E+01 | 3.22E-14 | ≈5.86E+01 | 0.00E+00 | 5.86E+01 | 3.66E-14 |

| f5 | ≈6.26E+00 | 1.29E+00 | >1.24E+01 | 2.39E+00 | >8.56E+00 | 2.10E+00 | ≈6.41E+00 | 1.52E+00 | >1.86E+01 | 8.04E+00 | 7.45E+00 | 2.20E+00 |

| f6 | >6.04E-09 | 2.71E-08 | ≈0.00E+00 | 8.66E-08 | >6.04E-09 | 2.71E-08 | >2.68E-08 | 1.52E-07 | ≈0.00E+00 | 0.00E+00 | 0.00E+00 | 0.00E+00 |

| f7 | ≈3.73E+01 | 1.44E+00 | >4.32E+01 | 2.18E+00 | >3.89E+01 | 1.46E+00 | ≈3.71E+01 | 1.55E+00 | >3.89E+01 | 3.43E+00 | 3.75E+01 | 2.11E+00 |

| f8 | ≈6.66E+00 | 1.54E+00 | >1.36E+01 | 2.21E+00 | >9.09E+00 | 1.84E+00 | ≈7.15E+00 | 1.58E+00 | >1.61E+01 | 7.46E+00 | 7.98E+00 | 1.62E+00 |

| f9 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | 0.00E+00 | 0.00E+00 |

| f10 | ≈1.42E+03 | 2.01E+02 | >1.47E+03 | 2.35E+02 | >1.53E+03 | 2.77E+02 | >1.50E+03 | 1.73E+02 | >1.70E+03 | 4.06E+02 | 1.42E+03 | 2.73E+02 |

| f11 | >2.63E+01 | 2.82E+01 | >3.93E+00 | 1.76E+01 | >3.04E+00 | 2.65E+00 | >3.22E+01 | 2.85E+01 | >7.80E+00 | 1.42E+01 | 1.56E+00 | 1.36E+00 |

| f12 | >9.45E+02 | 3.73E+02 | >3.43E+02 | 2.19E+02 | >1.70E+02 | 1.02E+02 | >1.00E+03 | 3.59E+02 | >2.47E+02 | 1.28E+02 | 1.32E+02 | 8.57E+01 |

| f13 | >1.55E+01 | 4.88E+00 | >1.70E+01 | 5.24E+00 | >1.48E+01 | 4.83E+00 | >1.61E+01 | 4.97E+00 | >1.57E+01 | 5.03E+00 | 1.25E+01 | 8.99E+00 |

| f14 | ≈2.11E+01 | 4.24E+00 | >2.20E+01 | 3.85E+00 | >2.18E+01 | 1.25E+00 | ≈2.14E+01 | 2.98E+00 | >2.37E+01 | 5.25E+00 | 2.16E+01 | 4.72E+00 |

| f15 | >2.67E+00 | 1.43E+00 | >3.65E+00 | 1.75E+00 | ≈1.09E+00 | 6.91E-01 | >3.22E+00 | 1.32E+00 | ≈1.86E+00 | 1.29E+00 | 1.80E+00 | 1.27E+00 |

| f16 | >3.84E+01 | 2.64E+01 | >1.88E+01 | 3.98E+01 | >7.89E+01 | 8.48E+01 | >6.52E+01 | 7.46E+01 | >6.68E+01 | 8.35E+01 | 1.55E+01 | 5.56E+00 |

| f17 | >3.32E+01 | 5.24E+00 | >2.83E+01 | 5.88E+00 | >3.29E+01 | 8.08E+00 | >3.28E+01 | 6.36E+00 | >2.97E+01 | 6.76E+00 | 2.70E+01 | 6.12E+00 |

| f18 | ≈2.07E+01 | 3.93E+00 | ≈2.06E+01 | 9.07E-01 | ≈2.04E+01 | 2.87E+00 | >2.21E+01 | 9.87E-01 | ≈2.09E+01 | 3.03E+00 | 2.08E+01 | 3.21E-01 |

| f19 | >5.32E+00 | 1.65E+00 | >5.91E+00 | 1.89E+00 | >4.50E+00 | 1.73E+00 | >5.21E+00 | 1.58E+00 | >4.61E+00 | 1.35E+00 | 4.22E+00 | 1.32E+00 |

| f20 | >3.08E+01 | 5.80E+00 | >3.08E+01 | 5.96E+00 | >2.94E+01 | 5.85E+00 | >3.10E+01 | 6.54E+00 | >2.73E+01 | 4.56E+00 | 2.63E+01 | 6.29E+00 |

| f21 | >2.11E+02 | 1.67E+00 | >2.13E+02 | 2.07E+00 | >2.09E+02 | 1.96E+00 | ≈2.07E+02 | 1.47E+00 | >2.22E+02 | 6.64E+00 | 2.07E+02 | 1.72E+00 |

| f22 | ≈1.00E+02 | 1.00E-13 | ≈1.00E+02 | 1.00E-13 | ≈1.00E+02 | 0.00E+00 | ≈1.00E+02 | 1.00E-13 | ≈1.00E+02 | 0.00E+00 | 1.00E+02 | 0.00E+00 |

| f23 | >3.48E+02 | 2.81E+00 | >3.54E+02 | 4.11E+00 | >3.51E+02 | 3.30E+00 | >3.50E+02 | 3.10E+00 | >3.69E+02 | 1.05E+01 | 3.45E+02 | 3.66E+00 |

| f24 | >4.25E+02 | 1.89E+00 | >4.29E+02 | 2.71E+00 | >4.26E+02 | 2.47E+00 | >4.26E+02 | 1.44E+00 | >4.41E+02 | 7.84E+00 | 4.22E+02 | 2.90E+00 |

| f25 | ≈3.87E+02 | 2.71E-02 | ≈3.87E+02 | 6.82E-03 | ≈3.87E+02 | 7.68E-03 | ≈3.87E+02 | 2.47E-02 | ≈3.87E+02 | 9.60E-03 | 3.87E+02 | 5.67E-03 |

| f26 | >8.97E+02 | 3.13E+01 | >9.51E+02 | 4.74E+01 | >9.20E+02 | 4.30E+01 | >9.51E+02 | 3.79E+01 | >1.08E+03 | 8.68E+01 | 8.91E+02 | 3.48E+01 |

| f27 | >5.01E+02 | 5.44E+00 | >5.03E+02 | 4.01E+00 | >4.98E+02 | 7.00E+00 | >5.05E+02 | 4.81E+00 | >4.99E+02 | 6.15E+00 | 4.96E+02 | 5.69E+00 |

| f28 | >3.26E+02 | 4.66E+01 | ≈3.05E+02 | 4.21E+01 | >3.09E+02 | 3.03E+01 | >3.33E+02 | 5.24E+01 | ≈3.02E+02 | 1.60E+01 | 3.02E+02 | 2.49E+01 |

| f29 | >4.38E+02 | 6.17E+00 | >4.38E+02 | 1.05E+01 | >4.34E+02 | 1.36E+01 | >4.34E+02 | 8.45E+00 | >4.33E+02 | 1.56E+01 | 4.28E+02 | 1.06E+01 |

| f30 | ≈1.98E+03 | 3.07E+01 | ≈1.97E+03 | 4.42E+01 | ≈1.97E+03 | 1.90E+01 | ≈1.99E+03 | 5.24E+01 | ≈1.98E+03 | 3.34E+01 | 1.97E+03 | 1.24E+01 |

| NO | EBLSHADE Mean | EBLSHADE Std | SALSHADE-cnEPSin Mean | SALSHADE-cnEPSin Std | jSO Mean | jSO Std | LSHADE Mean | LSHADE Std | ELSHADE-SPACMA Mean | ELSHADE-SPACMA Std | MjSO Mean | MjSO Std |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| f1 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | 0.00E+00 | 0.00E+00 |

| f2 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | 0.00E+00 | 0.00E+00 |

| f3 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | 0.00E+00 | 0.00E+00 |

| f4 | >7.00E+01 | 4.58E+01 | >3.69E+01 | 4.17E+01 | >5.62E+01 | 4.88E+01 | >7.07E+01 | 4.97E+01 | >4.36E+01 | 3.62E+01 | 3.00E+01 | 2.70E+01 |

| f5 | ≈1.42E+01 | 1.78E+00 | >2.81E+01 | 5.30E+00 | >1.64E+01 | 3.46E+00 | ≈1.38E+01 | 2.95E+00 | ≈1.39E+01 | 5.55E+00 | 1.48E+01 | 3.28E+00 |

| f6 | >6.94E-05 | 3.32E-04 | >9.52E-07 | 1.40E-06 | >1.09E-06 | 2.62E-06 | >6.12E-05 | 3.09E-04 | <0.00E+00 | 0.00E+00 | 1.83E-08 | 4.41E-08 |

| f7 | ≈6.29E+01 | 1.98E+00 | >7.73E+01 | 5.54E+00 | ≈6.65E+01 | 3.47E+00 | ≈6.30E+01 | 1.85E+00 | ≈6.15E+01 | 3.86E+00 | 6.58E+01 | 3.26E+00 |

| f8 | ≈1.22E+01 | 2.00E+00 | >2.64E+01 | 5.85E+00 | >1.70E+01 | 3.14E+00 | ≈1.20E+01 | 2.11E+00 | >1.79E+01 | 7.47E+00 | 1.29E+01 | 2.17E+00 |

| f9 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | 0.00E+00 | 0.00E+00 |

| f10 | >3.17E+03 | 3.06E+02 | >3.36E+03 | 3.02E+02 | >3.14E+03 | 3.67E+02 | >3.15E+03 | 2.59E+02 | >3.69E+03 | 6.07E+02 | 2.99E+03 | 3.14E+02 |

| f11 | >4.37E+01 | 7.27E+00 | >2.81E+01 | 1.89E+00 | >2.79E+01 | 3.33E+00 | >4.80E+01 | 8.24E+00 | >2.62E+01 | 3.76E+00 | 2.44E+01 | 2.96E+00 |

| f12 | >2.02E+03 | 5.00E+02 | >1.28E+03 | 3.64E+02 | >1.68E+03 | 5.23E+02 | >2.24E+03 | 5.22E+02 | >1.36E+03 | 3.42E+02 | 8.51E+02 | 3.87E+02 |

| f13 | >6.40E+01 | 3.47E+01 | >8.68E+01 | 2.97E+01 | >3.06E+01 | 2.12E+01 | >6.41E+01 | 2.65E+01 | >3.68E+01 | 1.72E+01 | 2.56E+01 | 1.96E+01 |

| f14 | >2.78E+01 | 2.27E+00 | >2.65E+01 | 2.35E+00 | ≈2.50E+01 | 1.87E+00 | >2.98E+01 | 3.01E+00 | >3.07E+01 | 3.95E+00 | 2.52E+01 | 2.53E+00 |

| f15 | >3.41E+01 | 9.07E+00 | >2.63E+01 | 3.57E+00 | >2.39E+01 | 2.49E+00 | >4.01E+01 | 1.06E+01 | >2.28E+01 | 2.20E+00 | 2.11E+01 | 1.67E+00 |

| f16 | >3.54E+02 | 1.09E+02 | >3.29E+02 | 1.11E+02 | >4.51E+02 | 1.38E+02 | >3.77E+02 | 1.24E+02 | >4.15E+02 | 1.77E+02 | 2.85E+02 | 1.22E+02 |

| f17 | >2.64E+02 | 6.33E+01 | >2.76E+02 | 5.33E+01 | >2.83E+02 | 8.61E+01 | ≈2.51E+02 | 5.71E+01 | ≈2.30E+02 | 9.68E+01 | 2.48E+02 | 8.68E+01 |

| f18 | >3.31E+01 | 7.86E+00 | >2.50E+01 | 2.09E+00 | >2.43E+01 | 2.02E+00 | >3.98E+01 | 8.64E+00 | >2.51E+01 | 2.56E+00 | 2.24E+01 | 1.45E+00 |

| f19 | >1.93E+01 | 3.25E+00 | >1.81E+01 | 3.41E+00 | >1.41E+01 | 2.26E+00 | >2.32E+01 | 5.94E+00 | >1.44E+01 | 2.31E+00 | 1.26E+01 | 2.58E+00 |

| f20 | >1.72E+02 | 6.94E+01 | >1.31E+02 | 2.64E+01 | >1.40E+02 | 7.74E+01 | >1.73E+02 | 7.15E+01 | >1.08E+02 | 7.31E+01 | 1.00E+02 | 3.35E+01 |

| f21 | >2.21E+02 | 2.55E+00 | >2.26E+02 | 6.04E+00 | >2.19E+02 | 3.77E+00 | ≈2.12E+02 | 2.25E+00 | >2.42E+02 | 9.52E+00 | 2.14E+02 | 3.99E+00 |

| f22 | >2.67E+03 | 1.59E+03 | >1.00E+03 | 1.70E+03 | >1.49E+03 | 1.75E+03 | >2.68E+03 | 1.62E+03 | ≈7.86E+02 | 1.64E+03 | 7.67E+02 | 1.42E+03 |

| f23 | >4.67E+02 | 4.38E+00 | >4.41E+02 | 7.07E+00 | >4.30E+02 | 6.24E+00 | >4.30E+02 | 4.91E+00 | >4.62E+02 | 1.39E+01 | 4.27E+02 | 5.86E+00 |

| f24 | >5.05E+02 | 3.51E+00 | >5.14E+02 | 6.05E+00 | >5.07E+02 | 4.13E+00 | >5.06E+02 | 2.55E+00 | >5.34E+02 | 9.14E+00 | 4.98E+02 | 3.50E+00 |

| f25 | >4.88E+02 | 2.01E+01 | >4.88E+02 | 1.54E+00 | ≈4.81E+02 | 2.80E+00 | >4.84E+02 | 1.29E+01 | ≈4.81E+02 | 2.80E+00 | 4.80E+02 | 1.81E-02 |

| f26 | >1.13E+03 | 4.45E+01 | >1.25E+03 | 9.13E+01 | >1.13E+03 | 5.62E+01 | >1.14E+03 | 4.93E+01 | >1.34E+03 | 1.38E+02 | 1.05E+03 | 4.64E+01 |

| f27 | >5.27E+02 | 1.09E+01 | >5.23E+02 | 8.58E+00 | ≈5.11E+02 | 1.11E+01 | >5.31E+02 | 1.67E+01 | ≈5.10E+02 | 9.52E+00 | 5.18E+02 | 1.43E+01 |

| f28 | >4.73E+02 | 2.23E+01 | >4.67E+02 | 6.78E+00 | ≈4.60E+02 | 6.84E+00 | >4.71E+02 | 2.15E+01 | ≈4.60E+02 | 6.84E+00 | 4.59E+02 | 2.91E-13 |

| f29 | >3.62E+02 | 1.04E+01 | >3.61E+02 | 1.07E+01 | >3.63E+02 | 1.32E+01 | ≈3.50E+02 | 1.09E+01 | >3.58E+02 | 1.78E+01 | 3.53E+02 | 1.21E+01 |

| f30 | >6.54E+05 | 7.78E+04 | >6.48E+05 | 5.85E+04 | ≈6.01E+05 | 2.99E+04 | >6.58E+05 | 8.12E+04 | ≈5.97E+05 | 2.38E+04 | 6.02E+05 | 3.07E+04 |

| NO | EBLSHADE Mean | EBLSHADE Std | SALSHADE-cnEPSin Mean | SALSHADE-cnEPSin Std | jSO Mean | jSO Std | LSHADE Mean | LSHADE Std | ELSHADE-SPACMA Mean | ELSHADE-SPACMA Std | MjSO Mean | MjSO Std |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| f1 | ≈0.00E+00 | 0.00E+00 | >1.36E-08 | 2.95E-08 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | ≈0.00E+00 | 0.00E+00 | 0.00E+00 | 0.00E+00 |

| f2 | <6.44E+00 | 1.54E+01 | >1.49E+11 | 7.72E+11 | <8.94E+00 | 2.42E+01 | >8.42E+05 | 6.01E+06 | >7.52E+07 | 6.01E+07 | 3.27E+01 | 5.92E+01 |

| f3 | >1.56E-06 | 1.46E-06 | <0.00E+00 | 0.00E+00 | >2.39E-06 | 2.73E-06 | >6.90E-06 | 6.77E-06 | ≈1.60E-07 | 4.00E-07 | 8.81E-07 | 7.38E-07 |

| f4 | ≈1.84E+02 | 5.87E+01 | >2.01E+02 | 7.91E+00 | ≈1.90E+02 | 2.89E+01 | ≈1.97E+02 | 1.58E+01 | >2.01E+02 | 8.67E+00 | 1.94E+02 | 7.94E+00 |

| f5 | >4.14E+01 | 3.80E+00 | >6.19E+01 | 1.05E+01 | >4.39E+01 | 5.61E+00 | >3.83E+01 | 4.90E+00 | >3.78E+01 | 5.85E+00 | 2.82E+01 | 9.53E+00 |

| f6 | >1.22E-02 | 6.81E-03 | >5.65E-05 | 3.51E-05 | >2.02E-04 | 6.20E-04 | >5.71E-03 | 3.43E-03 | <0.00E+00 | 1.34E-08 | 1.05E-06 | 9.32E-07 |

| f7 | >1.40E+02 | 4.25E+00 | >1.71E+02 | 7.36E+00 | >1.45E+02 | 6.70E+00 | >1.41E+02 | 4.46E+00 | >1.51E+02 | 1.48E+00 | 1.34E+02 | 6.19E+00 |

| f8 | >3.73E+01 | 6.67E+00 | >6.20E+01 | 9.99E+00 | >4.22E+01 | 5.52E+00 | >3.86E+01 | 4.47E+00 | >2.98E+01 | 1.32E+01 | 2.80E+01 | 1.00E+01 |

| f9 | >6.33E-01 | 4.60E-01 | ≈0.00E+00 | 0.00E+00 | >4.59E-02 | 1.15E-01 | >4.86E-01 | 4.83E-01 | ≈0.00E+00 | 0.00E+00 | 0.00E+00 | 0.00E+00 |

| f10 | >1.03E+04 | 4.51E+02 | >1.05E+04 | 5.30E+02 | >9.70E+03 | 6.82E+02 | >1.04E+04 | 5.45E+02 | >1.08E+04 | 9.53E+02 | 9.60E+03 | 7.03E+02 |

| f11 | >3.71E+02 | 1.03E+02 | >4.54E+01 | 4.79E+01 | >1.13E+02 | 4.32E+01 | >4.52E+02 | 8.94E+01 | >7.34E+01 | 4.30E+01 | 3.01E+01 | 4.79E+00 |

| f12 | >2.28E+04 | 5.65E+03 | >6.72E+03 | 9.17E+02 | >1.84E+04 | 8.35E+03 | >2.49E+04 | 9.97E+03 | >7.79E+03 | 2.92E+03 | 5.38E+03 | 1.32E+03 |

| f13 | >2.33E+02 | 6.04E+01 | >1.04E+02 | 3.72E+01 | >1.45E+02 | 3.80E+01 | >5.70E+02 | 4.14E+02 | >1.49E+02 | 3.83E+01 | 5.58E+01 | 2.54E+01 |

| f14 | >2.36E+02 | 1.88E+01 | >5.12E+01 | 6.90E+00 | >6.43E+01 | 1.09E+01 | >2.51E+02 | 2.94E+01 | >4.75E+01 | 5.69E+00 | 3.84E+01 | 4.16E+00 |

| f15 | >2.65E+02 | 3.97E+01 | >9.37E+01 | 3.19E+01 | >1.62E+02 | 3.81E+01 | >2.57E+02 | 4.02E+01 | >1.08E+02 | 4.34E+01 | 5.55E+01 | 1.54E+01 |

| f16 | >1.50E+03 | 3.54E+02 | >1.51E+03 | 1.90E+02 | >1.86E+03 | 3.49E+02 | >1.66E+03 | 2.78E+02 | >1.76E+03 | 4.88E+02 | 1.38E+03 | 3.43E+02 |

| f17 | >1.13E+03 | 2.25E+02 | >1.14E+02 | 1.75E+02 | >1.28E+03 | 2.38E+02 | >1.16E+03 | 1.94E+02 | >1.27E+03 | 3.45E+02 | 9.17E+02 | 2.21E+02 |

| f18 | >2.65E+02 | 4.96E+01 | >6.90E+01 | 1.55E+01 | >1.67E+02 | 3.65E+01 | >2.41E+02 | 5.66E+01 | >1.05E+02 | 2.56E+01 | 6.00E+01 | 1.36E+01 |

| f19 | >1.62E+02 | 1.78E+01 | >5.71E+01 | 7.30E+00 | >1.05E+02 | 2.01E+01 | >1.78E+02 | 2.42E+01 | >6.05E+01 | 7.55E+00 | 4.73E+01 | 5.00E+00 |

| f20 | >1.63E+03 | 1.86E+02 | >1.41E+03 | 1.88E+02 | >1.38E+03 | 2.43E+02 | >1.56E+03 | 2.04E+02 | >1.28E+03 | 2.54E+02 | 1.26E+03 | 2.69E+02 |

| f21 | >2.59E+02 | 3.33E+00 | >2.88E+02 | 1.45E+01 | >2.64E+02 | 6.43E+00 | >2.59E+02 | 6.03E+00 | >2.96E+02 | 1.65E+01 | 2.51E+02 | 7.79E+00 |

| f22 | >1.14E+04 | 5.60E+02 | >1.08E+04 | 5.81E+02 | >1.02E+04 | 2.18E+03 | >1.13E+04 | 5.65E+02 | >9.70E+03 | 1.20E+03 | 3.53E+01 | 6.04E+00 |

| f23 | <5.71E+02 | 6.68E+00 | <5.92E+02 | 8.64E+00 | <5.71E+02 | 1.07E+01 | <5.66E+02 | 9.02E+00 | <6.03E+02 | 2.19E+01 | 1.13E+03 | 2.49E+01 |

| f24 | >9.01E+02 | 5.70E+00 | >9.19E+02 | 1.21E+01 | >9.02E+02 | 7.89E+00 | >9.20E+02 | 6.78E+00 | >9.32E+02 | 1.90E+01 | 2.95E+02 | 2.18E+01 |

| f25 | >7.50E+02 | 2.58E+01 | >7.21E+02 | 4.62E+01 | >7.36E+02 | 3.53E+01 | >7.53E+02 | 2.58E+01 | >7.00E+02 | 3.99E+01 | 6.68E+02 | 1.80E+01 |

| f26 | >3.22E+03 | 6.88E+01 | >3.15E+03 | 1.73E+02 | >3.27E+03 | 8.02E+01 | >3.43E+03 | 8.34E+01 | >3.24E+03 | 2.19E+02 | 3.00E+02 | 3.22E-13 |

| f27 | <6.19E+02 | 1.68E+01 | <5.88E+02 | 1.75E+01 | <5.85E+02 | 2.17E+01 | <6.43E+02 | 1.70E+01 | <5.62E+02 | 1.75E+01 | 1.67E+03 | 1.35E+02 |

| f28 | >5.32E+02 | 2.58E+01 | >5.16E+02 | 1.91E+01 | >5.27E+02 | 2.73E+01 | >5.27E+02 | 2.15E+01 | >5.21E+02 | 2.38E+01 | 5.04E+02 | 1.70E+01 |

| f29 | >1.12E+03 | 1.54E+02 | >1.14E+03 | 1.40E+02 | >1.26E+03 | 1.91E+02 | >1.27E+03 | 1.76E+02 | >1.21E+03 | 1.98E+02 | 9.89E+02 | 1.84E+02 |

| f30 | <2.39E+03 | 1.39E+02 | <2.33E+03 | 1.66E+02 | <2.33E+03 | 1.19E+02 | <2.41E+03 | 1.52E+02 | <2.25E+03 | 1.11E+02 | 3.32E+03 | 8.57E+01 |

| MjSO vs. | | | | | |
|---|---|---|---|---|---|
| EBLSHADE | (better) | 14 | 16 | 23 | 24 |
| | (no sig) | 13 | 14 | 7 | 2 |
| | (worse) | 3 | 0 | 0 | 4 |
| SALSHADE-cnEPSin | (better) | 8 | 19 | 26 | 25 |
| | (no sig) | 20 | 10 | 4 | 1 |
| | (worse) | 2 | 1 | 0 | 4 |
| jSO | (better) | 13 | 20 | 20 | 24 |
| | (no sig) | 16 | 10 | 10 | 2 |
| | (worse) | 1 | 0 | 0 | 4 |
| LSHADE | (better) | 16 | 17 | 20 | 25 |
| | (no sig) | 13 | 13 | 10 | 2 |
| | (worse) | 1 | 0 | 0 | 3 |
| ELSHADE-SPACMA | (better) | 13 | 18 | 17 | 23 |
| | (no sig) | 17 | 12 | 12 | 3 |
| | (worse) | 0 | 0 | 1 | 4 |
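The summary counts can be cross-checked against the per-function tables by tallying the marker that prefixes each competitor's mean. The sketch below is only an illustration: it assumes, as the counts suggest, that `>` marks a function where MjSO is better, `≈` marks no significant difference, and `<` marks a function where MjSO is worse. The helper name `tally` and the marker string are hypothetical; the string was transcribed by hand from the EBLSHADE column (f1 to f30) of the results table immediately preceding the summary.

```python
from collections import Counter

# Assumed marker semantics, inferred from the summary counts rather than stated in the tables.
LABELS = {">": "better", "≈": "no sig", "<": "worse"}


def tally(markers):
    """Count how often MjSO is better, not significantly different, or worse."""
    counts = Counter(LABELS[m] for m in markers)
    return {label: counts.get(label, 0) for label in LABELS.values()}


# EBLSHADE markers for f1..f30, transcribed from the table preceding the summary.
eblshade_markers = "≈<>≈" + ">" * 18 + "<" + ">>>" + "<" + ">>" + "<"

print(tally(eblshade_markers))
# {'better': 24, 'no sig': 2, 'worse': 4}
```

The result agrees with the last column of the EBLSHADE rows in the summary (24, 2, 4).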

| Algorithm | Variable | | | | Target Cost |
|---|---|---|---|---|---|
| DE | 0.8231 | 0.4453 | 42.9230 | 176.7356 | 6301.5664 |
| LSHADE | 0.8168 | 0.4472 | 42.1412 | 177.1231 | 6138.8931 |
| EBLSHADE | 0.7802 | 0.3856 | 40.4292 | 198.4964 | 5889.3216 |
| ELSHADE-SPACMA | 0.8125 | 0.4375 | 42.0913 | 176.7465 | 6061.0777 |
| SALSHADE-cnEPSin | 0.7929 | 0.3914 | 41.1773 | 188.3950 | 5912.7115 |
| jSO | 0.8036 | 0.3972 | 41.6392 | 182.4120 | 5930.3137 |
| MjSO | 0.7782 | 0.3847 | 40.3201 | 199.9975 | 5885.5226 |

| Algorithm | d | D | N | Target Weight |
|---|---|---|---|---|
| DE | 0.0592 | 0.4983 | 8.8980 | 0.0172 |
| LSHADE | 0.0524 | 0.3532 | 11.6824 | 0.0133 |
| EBLSHADE | 0.0500 | 0.3171 | 14.1417 | 0.0127 |
| ELSHADE-SPACMA | 0.0519 | 0.3487 | 11.8145 | 0.0129 |
| SALSHADE-cnEPSin | 0.0503 | 0.3159 | 14.250 | 0.0128 |
| jSO | 0.0562 | 0.4754 | 6.6670 | 0.0130 |
| MjSO | 0.0516 | 0.3597 | 11.2880 | 0.0126 |

| Algorithm | Variable | | | | Target Cost |
|---|---|---|---|---|---|
| DE | 0.2389 | 3.4067 | 9.6383 | 0.2901 | 2.0701 |
| LSHADE | 0.2134 | 3.5601 | 8.4629 | 0.2346 | 1.8561 |
| EBLSHADE | 0.2087 | 6.7221 | 9.3673 | 0.4217 | 1.7583 |
| ELSHADE-SPACMA | 0.1947 | 3.7831 | 9.1234 | 0.2077 | 1.7796 |
| SALSHADE-cnEPSin | 0.2023 | 3.5442 | 9.0366 | 0.2057 | 1.7280 |
| jSO | 0.2147 | 3.3841 | 8.8103 | 0.2195 | 1.7890 |
| MjSO | 0.2057 | 3.4704 | 9.0366 | 0.2057 | 1.7248 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Shen, Y.; Liang, Z.; Kang, H.; Sun, X.; Chen, Q. A Modified jSO Algorithm for Solving Constrained Engineering Problems. Symmetry 2021, 13, 63. https://doi.org/10.3390/sym13010063