An Improved Whale Optimization Algorithm for the Traveling Salesman Problem

Abstract

1. Introduction

2. Basic Theory of WOA

2.1. Encircling Prey

2.2. Bubble-Net Attacking Method

2.3. Searching for Prey

| Algorithm 1. The pseudo-code of the WOA |
|---|
| 1. Begin |
| 2. Initialize the relevant parameters of the WOA and the positions of whales; |
| 3. Calculate the fitness of each whale; |
| 4. Find the best whale (X*); |
| 5. while (t < maximum iteration) |
| 6. for each whale |
| 7. Update a, A, C, l and p; |
| 8. if1 (p < 0.5) |
| 9. if2 (\|A\| < 1) |
| 10. Update the position of the current whale by Equation (2); |
| 11. else if2 (\|A\| ≥ 1) |
| 12. Select a random whale (Xrand); |
| 13. Update the position of the current whale by Equation (9); |
| 14. end if2 |
| 15. else if1 (p ≥ 0.5) |
| 16. Update the position of the current whale by Equation (6); |
| 17. end if1 |
| 18. end for |
| 19. Check if any whale goes beyond the search space and amend it; |
| 20. Calculate the fitness of each whale; |
| 21. Update X* if there is a better solution; |
| 22. t = t + 1; |
| 23. end while |
| 24. return X*; |
| 25. End |
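The loop in Algorithm 1 can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation: the equations themselves are not reproduced in this section, so the sketch assumes the commonly published WOA update rules and assumes that Equation (2) is the encircling update, Equation (9) the random-search update, and Equation (6) the spiral update. The `sphere` objective, the parameter defaults, and the use of scalar `A` and `C` per whale are hypothetical simplifications.

```python
import math
import random


def sphere(x):
    """Hypothetical test objective: sum of squares, minimum 0 at the origin."""
    return sum(v * v for v in x)


def woa(objective, dim, lower, upper, n_whales=20, max_iter=200, b=1.0, seed=0):
    """Minimal continuous WOA loop mirroring the structure of Algorithm 1."""
    rng = random.Random(seed)
    whales = [[rng.uniform(lower, upper) for _ in range(dim)]
              for _ in range(n_whales)]
    best = min(whales, key=objective)[:]
    best_fit = objective(best)

    for t in range(max_iter):
        a = 2.0 - 2.0 * t / max_iter          # a decreases linearly from 2 to 0
        for i, x in enumerate(whales):
            A = 2.0 * a * rng.random() - a    # scalar per whale (simplification)
            C = 2.0 * rng.random()
            l = rng.uniform(-1.0, 1.0)
            p = rng.random()
            if p < 0.5:
                # |A| < 1: move toward the best whale (assumed Equation (2));
                # |A| >= 1: move relative to a random whale (assumed Equation (9)).
                ref = best if abs(A) < 1 else whales[rng.randrange(n_whales)]
                new = [ref[j] - A * abs(C * ref[j] - x[j]) for j in range(dim)]
            else:
                # Spiral update around the best whale (assumed Equation (6)).
                new = [abs(best[j] - x[j]) * math.exp(b * l)
                       * math.cos(2.0 * math.pi * l) + best[j]
                       for j in range(dim)]
            # Amend whales that leave the search space by clamping to the bounds.
            whales[i] = [min(max(v, lower), upper) for v in new]
        # Re-evaluate fitness and keep the best solution seen so far.
        for x in whales:
            fit = objective(x)
            if fit < best_fit:
                best, best_fit = x[:], fit
    return best, best_fit
```

Calling `woa(sphere, 3, -10.0, 10.0)` returns the best position found and its objective value. Because the best whale is replaced only when a strictly better one appears, the returned value is never worse than the best whale of the initial population.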

3. DWOA for the TSP

3.1. DWOA Improvement Strategy

3.1.1. Adaptive Weight Strategy

3.1.2. Gaussian Disturbance

3.2. Description of VDWOA

3.2.1. Variable Neighborhood Search

| Algorithm 2. The pseudo-code of Proc_VNS |
|---|
| 1. Begin |
| 2. Set the current optimal solution as the initial solution x; |
| 3. while (termination condition not met) |
| 4. k = 1; |
| 5. while (k ≤ 3) |
| 6. Generate a neighborhood solution x′ of x using the k-th neighborhood structure Nk; |
| 7. Obtain a new local optimum x″ from x′ by local search; |
| 8. if (the fitness value of x″ is better than that of x) |
| 9. x = x″; |
| 10. k = 1; |
| 11. else k = k + 1; |
| 12. end if |
| 13. end while |
| 14. end while |
| 15. End |
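A hedged Python sketch of Proc_VNS for a TSP tour is given below. The pseudo-code fixes only the control flow (three neighborhoods, reset to k = 1 on improvement), so everything else here is an assumption made for illustration: the three neighborhoods are taken to be swap, insertion, and segment reversal, the local search is a plain 2-opt descent, the termination condition is a fixed number of rounds, and `circle_instance` is a hypothetical demo instance.

```python
import math
import random


def tour_length(tour, dist):
    """Length of the closed tour under the distance matrix dist."""
    n = len(tour)
    return sum(dist[tour[i]][tour[(i + 1) % n]] for i in range(n))


def shake(tour, k, rng):
    """Random neighbor of tour in the k-th neighborhood (assumed operators)."""
    i, j = sorted(rng.sample(range(len(tour)), 2))
    new = tour[:]
    if k == 1:                       # swap two cities
        new[i], new[j] = new[j], new[i]
    elif k == 2:                     # remove the city at i and reinsert it at j
        new.insert(j, new.pop(i))
    else:                            # reverse the segment between i and j
        new[i:j + 1] = reversed(new[i:j + 1])
    return new


def local_search(tour, dist):
    """2-opt descent: apply improving segment reversals until none remains."""
    best = tour[:]
    best_len = tour_length(best, dist)
    improved = True
    while improved:
        improved = False
        for i in range(len(best) - 1):
            for j in range(i + 1, len(best)):
                cand = best[:i] + best[i:j + 1][::-1] + best[j + 1:]
                cand_len = tour_length(cand, dist)
                if cand_len < best_len - 1e-12:
                    best, best_len = cand, cand_len
                    improved = True
    return best


def proc_vns(tour, dist, max_rounds=20, seed=0):
    """Control flow of Algorithm 2 with an assumed fixed-round termination."""
    rng = random.Random(seed)
    x = tour[:]
    for _ in range(max_rounds):
        k = 1
        while k <= 3:
            x1 = shake(x, k, rng)            # neighborhood solution x'
            x2 = local_search(x1, dist)      # local optimum x''
            if tour_length(x2, dist) < tour_length(x, dist):
                x, k = x2, 1                 # accept and restart from k = 1
            else:
                k += 1                       # try the next neighborhood
    return x


def circle_instance(n):
    """Hypothetical demo instance: n cities evenly spaced on the unit circle."""
    pts = [(math.cos(2 * math.pi * i / n), math.sin(2 * math.pi * i / n))
           for i in range(n)]
    return [[math.dist(p, q) for q in pts] for p in pts]
```

For example, `proc_vns([0, 3, 6, 1, 4, 7, 2, 5], circle_instance(8))` starts from a star-shaped tour and returns a tour that visits the same eight cities and is no longer than the input.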

3.2.2. Pseudo-Code of the VDWOA

| Algorithm 3. The pseudo-code of the VDWOA for the TSP |
|---|
| 1. Begin |
| 2. Initialize the relevant parameters of the WOA algorithm and the positions of whales; |
| 3. Calculate the fitness of each whale according to Equation (10); |
| 4. Find the best whale (X*); |
| 5. while (t < maximum iteration) |
| 6. for each whale |
| 7. Update a, A, C, l and p; |
| 8. if1 (p < 0.5) |
| 9. if2 (\|A\| < 1) |
| 10. if3 (current r < 0.5) |
| 11. Update the position of the whale by Equation (15); |
| 12. else |
| 13. Update the position of the current whale by Equation (12); |
| 14. end if3 |
| 15. if4 (current r > 0.5) |
| 16. Call Proc_VNS for the current optimal whale; |
| 17. Update the local optimal solution; |
| 18. end if4 |
| 19. else if2 (\|A\| ≥ 1) |
| 20. Select a random whale (Xrand); |
| 21. Update the position of the current whale by Equation (14); |
| 22. end if2 |
| 23. else if1 (p ≥ 0.5) |
| 24. if5 (current r < 0.5) |
| 25. Update the position of the whale by Equation (15); |
| 26. else |
| 27. Update the position of the current whale by Equation (13); |
| 28. end if5 |
| 29. if6 (current r > 0.5) |
| 30. Call Proc_VNS for the current optimal whale; |
| 31. Update the local optimal solution; |
| 32. end if6 |
| 33. end if1 |
| 34. end for |
| 35. Check if any whale goes beyond the search space and amend it; |
| 36. Calculate the fitness of each whale; |
| 37. Update X* if there is a better solution; |
| 38. t = t + 1; |
| 39. end while |
| 40. return X*; |
| 41. End |
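The nested conditions in Algorithm 3 are easier to follow as a small decision function. The sketch below encodes only the branching stated in the pseudo-code; it does not implement Equations (12) to (15), which are not reproduced in this section. The function name `vdwoa_branch` and its return convention are hypothetical.

```python
def vdwoa_branch(p, A, r):
    """Return (equation number used for the position update,
    whether Proc_VNS is called) for one whale, per Algorithm 3."""
    if p < 0.5:
        if abs(A) < 1:
            equation = 15 if r < 0.5 else 12   # if3 / else
            return equation, r > 0.5           # if4
        return 14, False                       # else if2: random whale
    equation = 15 if r < 0.5 else 13           # if5 / else
    return equation, r > 0.5                   # if6


# Each case below follows one path through the pseudo-code.
print(vdwoa_branch(0.3, 0.5, 0.2))   # (15, False)
print(vdwoa_branch(0.3, 0.5, 0.8))   # (12, True)
print(vdwoa_branch(0.3, 1.5, 0.8))   # (14, False)
print(vdwoa_branch(0.7, 0.5, 0.8))   # (13, True)
```

Read this way, the pseudo-code calls Proc_VNS only on the paths that use Equation (12) or Equation (13), and never when |A| ≥ 1. At exactly r = 0.5 the `else` update applies but Proc_VNS is not called, because the second test is a strict inequality.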

4. Experiment and Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


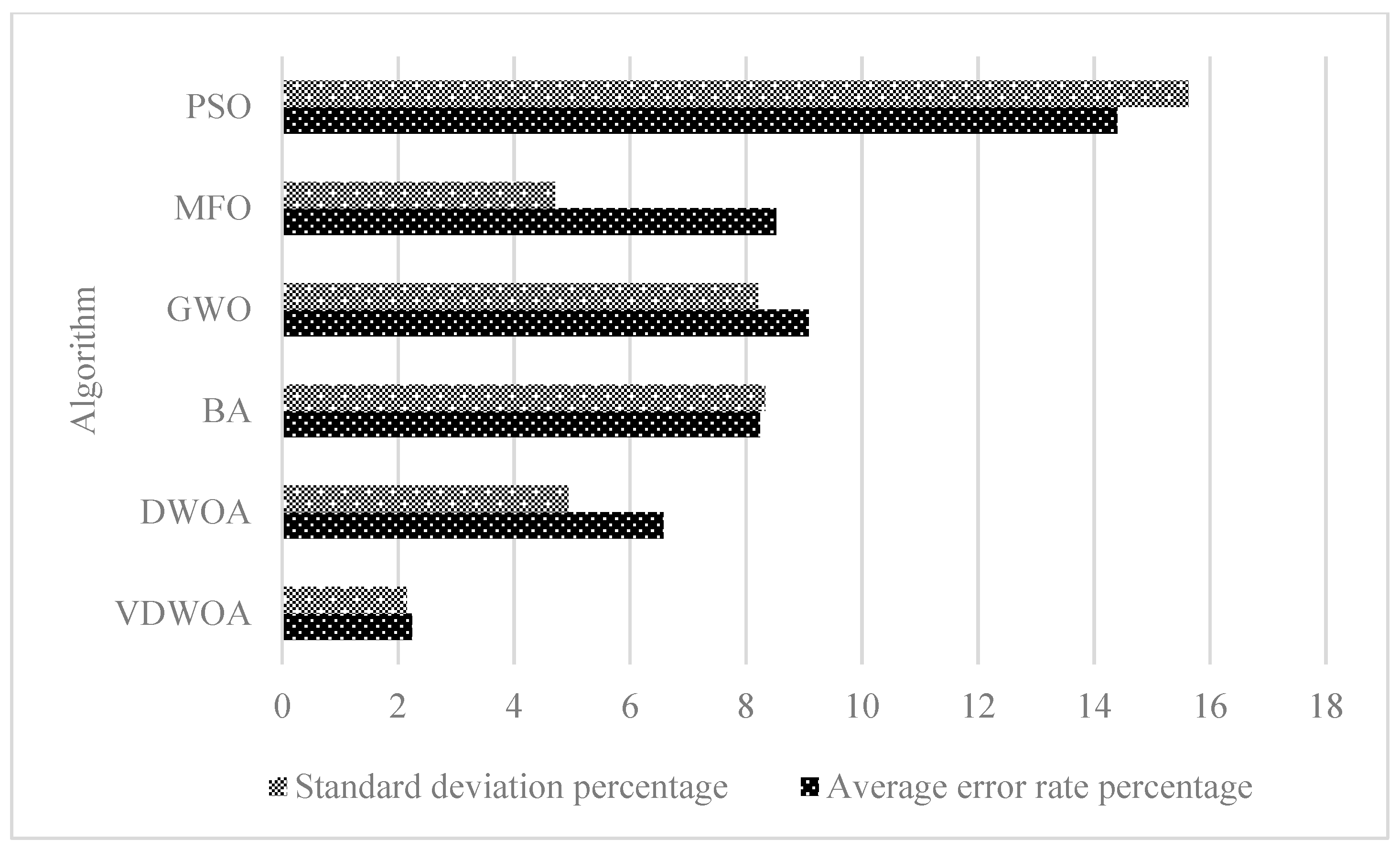

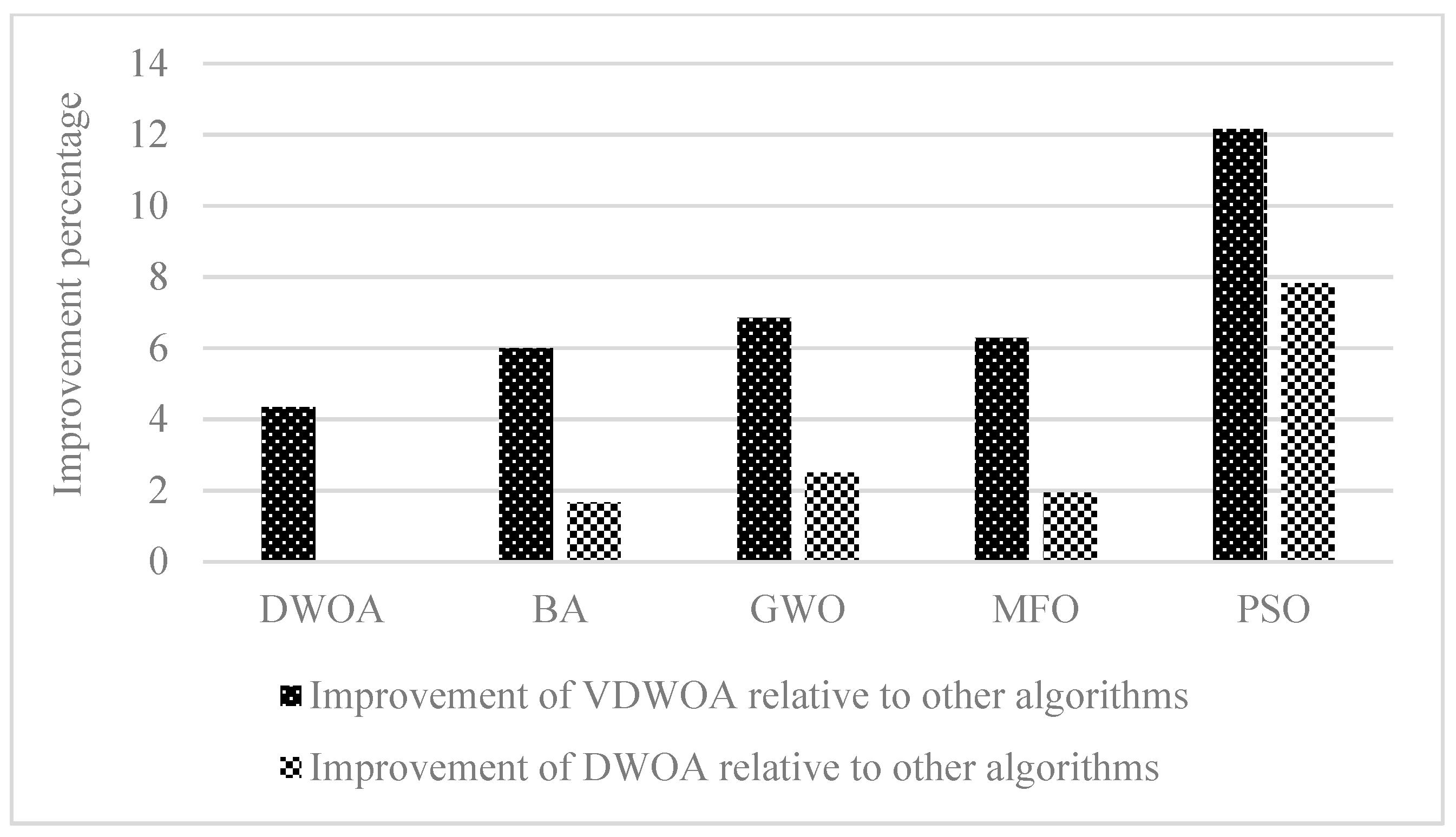

| Problem (Known Optimal Solution) | Algorithm | Optimal Solution | Average Time (Unit: Second) | Er (%) |

|---|---|---|---|---|

| Oliver30(420) | VDWOA | 420 | 4.74 | 0 |

| DWOA | 420 | 2.08 | 0 | |

| BA | 420 | 2.21 | 0 | |

| GWO | 422 | 1.42 | 0.48 | |

| MFO | 423 | 2.24 | 0.71 | |

| PSO | 424 | 2.08 | 0.95 | |

| Eil51(426) | VDWOA | 429 | 10.47 | 0.7 |

| DWOA | 445 | 3.73 | 4.46 | |

| BA | 439 | 4.27 | 3.05 | |

| GWO | 441 | 3.42 | 3.52 | |

| MFO | 449 | 4.4 | 5.4 | |

| PSO | 445 | 3.91 | 4.46 | |

| Berlin52(7542) | VDWOA | 7542 | 10.79 | 0 |

| DWOA | 7727 | 3.78 | 2.45 | |

| BA | 7694 | 4.28 | 2.02 | |

| GWO | 7898 | 3.6 | 4.72 | |

| MFO | 8184 | 4.39 | 8.51 | |

| PSO | 7862 | 3.93 | 4.24 | |

| St70(675) | VDWOA | 676 | 17.69 | 0.15 |

| DWOA | 712 | 5.31 | 5.48 | |

| BA | 718 | 6.38 | 6.37 | |

| GWO | 726 | 5.59 | 7.56 | |

| MFO | 710 | 6.57 | 5.19 | |

| PSO | 732 | 5.72 | 8.44 | |

| Eil76(538) | VDWOA | 554 | 20.5 | 2.97 |

| DWOA | 579 | 5.81 | 7.62 | |

| BA | 561 | 7 | 4.28 | |

| GWO | 565 | 6.23 | 5.02 | |

| MFO | 577 | 7.29 | 7.25 | |

| PSO | 595 | 6.3 | 10.59 | |

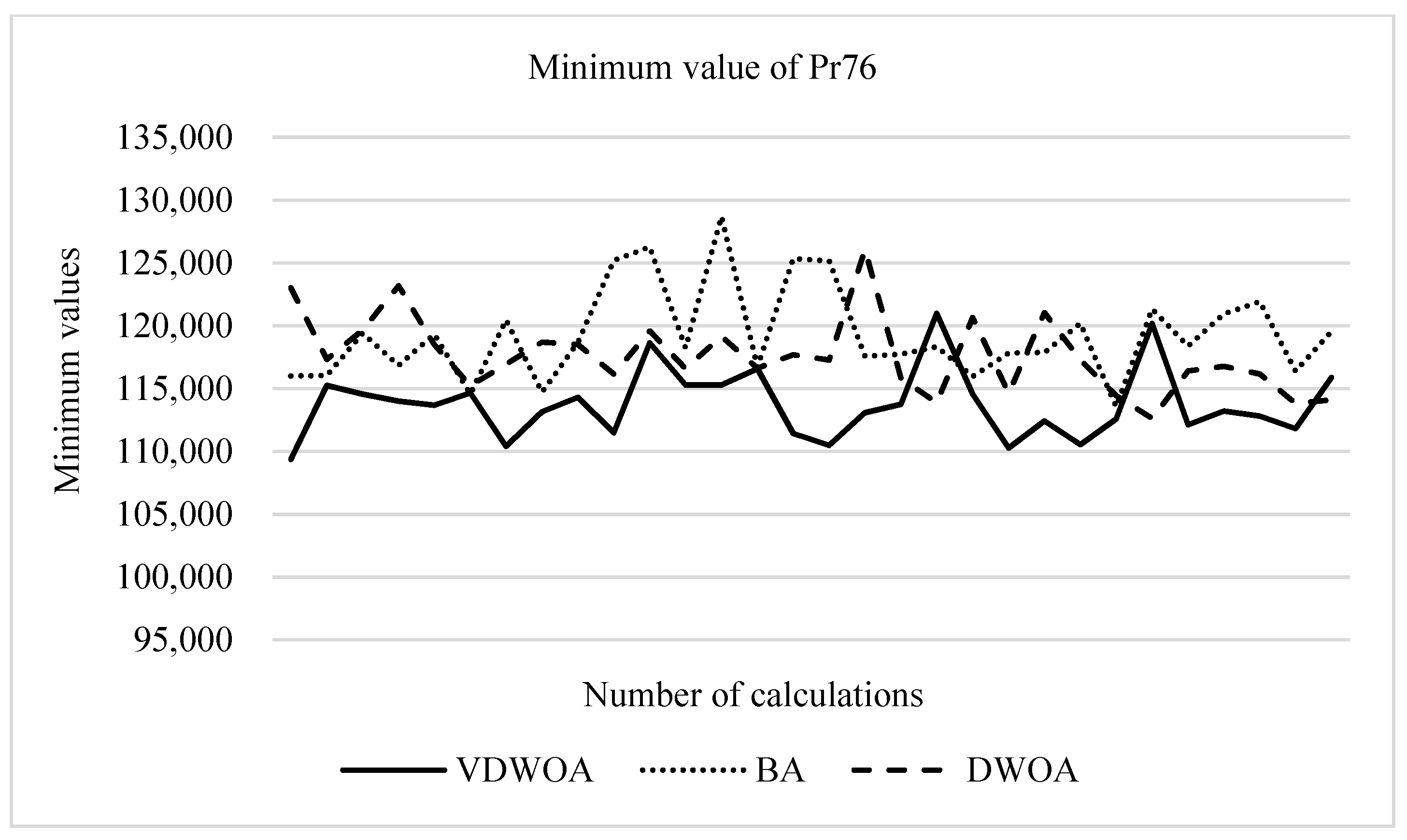

| Pr76(108159) | VDWOA | 108,353 | 20.52 | 0.18 |

| DWOA | 111,511 | 5.85 | 3.1 | |

| BA | 111,989 | 6.96 | 3.54 | |

| GWO | 114,261 | 6.19 | 5.64 | |

| MFO | 114,377 | 7.18 | 5.75 | |

| PSO | 115,265 | 6.14 | 6.57 | |

| KroA100(21282) | VDWOA | 21,721 | 35.24 | 2.06 |

| DWOA | 22,471 | 8.12 | 5.59 | |

| BA | 23,424 | 10.24 | 10.06 | |

| GWO | 22,963 | 8.77 | 7.9 | |

| MFO | 23,456 | 10.52 | 10.22 | |

| PSO | 23,480 | 8.72 | 10.33 | |

| Pr107(44303) | VDWOA | 45,030 | 38.09 | 1.64 |

| DWOA | 45,780 | 8.72 | 3.33 | |

| BA | 46,419 | 11.39 | 4.78 | |

| GWO | 46,083 | 9.62 | 4.02 | |

| MFO | 47,437 | 11.69 | 7.07 | |

| PSO | 46,919 | 9.48 | 5.9 | |

| Ch150(6528) | VDWOA | 6863 | 77.37 | 5.13 |

| DWOA | 7329 | 13.87 | 12.27 | |

| BA | 7440 | 19.59 | 13.97 | |

| GWO | 7384 | 15.26 | 13.11 | |

| MFO | 7329 | 19.57 | 12.27 | |

| PSO | 7833 | 15.1 | 19.99 | |

| D198(15780) | VDWOA | 16,313 | 145.24 | 3.38 |

| DWOA | 16,603 | 20.56 | 5.22 | |

| BA | 16,849 | 32.41 | 6.77 | |

| GWO | 17,109 | 22.48 | 8.42 | |

| MFO | 16,911 | 30.86 | 7.17 | |

| PSO | 18,130 | 22.52 | 14.89 | |

| Tsp225(3916) | VDWOA | 4136 | 195.54 | 5.62 |

| DWOA | 4399 | 25 | 12.33 | |

| BA | 4427 | 41.63 | 13.05 | |

| GWO | 4620 | 27.41 | 17.98 | |

| MFO | 4469 | 38.3 | 14.12 | |

| PSO | 5049 | 27.44 | 28.93 | |

| Fl417(11861) | VDWOA | 12,462 | 2485.61 | 5.07 |

| DWOA | 13,886 | 276.12 | 17.07 | |

| BA | 15,532 | 363.01 | 30.95 | |

| GWO | 15,492 | 286.92 | 30.61 | |

| MFO | 14,087 | 411.23 | 18.55 | |

| PSO | 18,688 | 321.63 | 57.56 |
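The Er (%) column is consistent with the relative deviation of the best tour found from the known optimal tour length. The formula below is inferred from the tabulated values rather than quoted from the text, so treat it as an assumption; the function name `relative_error` is hypothetical.

```python
def relative_error(found, known_optimum):
    """Percentage deviation of a tour length from the known optimum
    (assumed definition of the Er column)."""
    return (found - known_optimum) / known_optimum * 100.0


# Values taken from the table rows above.
print(round(relative_error(429, 426), 2))       # Eil51, VDWOA: 0.7
print(round(relative_error(7727, 7542), 2))     # Berlin52, DWOA: 2.45
print(round(relative_error(18688, 11861), 2))   # Fl417, PSO: 57.56
```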

| Instance (Known Optimal Solution) | Algorithm | Optimal Solution | Er (%) |
|---|---|---|---|
| Oliver30(420) | VDWOA | 420 | 0 |
| | Min_GWO_WOA | 423 | 0.71 |
| | HSQWOA | - | - |
| Eil51(426) | VDWOA | 429 | 0.7 |
| | Min_GWO_WOA | 429 | 0.7 |
| | HSQWOA | 429 | 0.7 |
| Berlin52(7542) | VDWOA | 7542 | 0 |
| | Min_GWO_WOA | 7661 | 1.58 |
| | HSQWOA | - | - |
| St70(675) | VDWOA | 676 | 0.15 |
| | Min_GWO_WOA | 679 | 0.59 |
| | HSQWOA | 677 | 0.3 |
| Eil76(538) | VDWOA | 554 | 2.97 |
| | Min_GWO_WOA | 569 | 5.76 |
| | HSQWOA | - | - |
| KroA100(21282) | VDWOA | 21,721 | 2.06 |
| | Min_GWO_WOA | 21,954 | 3.16 |
| | HSQWOA | - | - |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhang, J.; Hong, L.; Liu, Q. An Improved Whale Optimization Algorithm for the Traveling Salesman Problem. Symmetry 2021, 13, 48. https://doi.org/10.3390/sym13010048