1. Introduction

High-quality images have found many applications in multimedia and computer vision [1,2]. However, due to the limitations of image acquisition technology, the imaging environment, and other factors, it is sometimes very difficult to obtain high-quality images, and it is almost impossible to avoid low-illuminance images altogether. Images taken under extreme weather conditions, underwater, or at night often have low visibility and blurred details, and their quality is greatly degraded. Therefore, low-light images need to be enhanced to meet practical requirements. Researchers from academia and industry have been working on various image-processing technologies, and many image enhancement methods have been proposed for degraded images in daily life, such as underwater images [3], foggy images [4], and nighttime images [5]. These techniques have also found more and more applications in modern industrial production. In [6], a novel optical defect detection and classification system is proposed for real-time inspection. In [7], a defect extraction and classification scheme for mobile phone screens based on machine vision is proposed. Another interesting application is the surface quality inspection of transparent parts [8].

Existing approaches generally fall into two major categories: global enhancement methods and local enhancement methods. Global enhancement methods process all image pixels in the same way, regardless of their spatial distribution. Global methods based on the logarithm [9], power-law [10], or gamma correction [11] are commonly used for low-quality images; however, they do not always produce satisfactory results. Histogram equalization (HE) [12] improves the contrast of the input image by adjusting pixel values according to the cumulative distribution function. However, it may lead to over-enhancement in some low-contrast regions. To overcome this defect, [13] proposes an enhancement method using the layered difference representation (LDR) of 2D histograms, but the enhanced images look slightly dark. Traditional gamma correction (GC) [14] maps all pixels through a power function, which sometimes over-corrects bright regions.
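To make the two global mappings concrete, the sketch below applies histogram equalization (each gray level is mapped through the normalized cumulative histogram) and traditional gamma correction (one power law applied to every pixel) to an 8-bit grayscale array. It is an illustrative NumPy sketch assuming intensities in [0, 255]; the function names are hypothetical and the code is not taken from any of the cited implementations.

```python
import numpy as np

def histogram_equalization(img: np.ndarray) -> np.ndarray:
    """Global HE for a uint8 grayscale image via its cumulative distribution function."""
    hist = np.bincount(img.ravel(), minlength=256)
    cdf = np.cumsum(hist) / img.size              # CDF in [0, 1]
    lut = np.round(255 * cdf).astype(np.uint8)    # gray level k -> 255 * CDF(k)
    return lut[img]

def gamma_correction(img: np.ndarray, gamma: float) -> np.ndarray:
    """Traditional GC: every pixel is mapped by the same power law 255 * (I / 255) ** gamma."""
    normalized = img.astype(np.float64) / 255.0
    return np.round(255.0 * normalized ** gamma).astype(np.uint8)

# Usage on a synthetic dark image with values concentrated in [0, 60]
rng = np.random.default_rng(0)
dark = rng.integers(0, 61, size=(64, 64), dtype=np.uint8)
he = histogram_equalization(dark)
gc = gamma_correction(dark, gamma=1 / 2.2)        # gamma < 1 brightens dark pixels
print(dark.mean(), he.mean(), gc.mean())
```

Because GC uses a single exponent for all pixels, any gamma small enough to lift the darkest regions also pushes already bright pixels toward saturation, which is the over-correction problem mentioned above.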

Local enhancement methods take the spatial distribution of pixels into account and usually perform better. Adaptive histogram equalization (AHE) achieves better contrast by optimizing local image contrast [15,16]. By limiting the contrast in each small local region, contrast-limited adaptive histogram equalization (CLAHE) [17,18] overcomes the excessive noise amplification of AHE. In [19], an adaptive image-contrast enhancement scheme is proposed based on a generalization of histogram equalization that selects an alternative cumulative distribution function. In [17], a dynamic histogram equalization method is proposed that partitions the image histogram at local minima and equalizes the partitions separately. However, these methods often cause artifacts. Retinex theory [20] decomposes an image into scene reflectance and illumination, which provides a physical model for image enhancement. However, early Retinex-based algorithms [21,22,23] generate enhanced images by removing the estimated illumination, so the final images look unnatural. Researchers have found that it is better to compress the estimated illumination than to remove it [24,25,26,27,28]. The naturalness-preserved enhancement algorithm (NPEA) [24] modifies the illumination by a bi-log transformation and then recombines illumination and reflectance. In [26], a multi-layer model is proposed to extract details based on NPEA. However, the computational load of these models is very high because of their patch-based calculation. In [29], an efficient contrast enhancement method based on adaptive gamma correction with weighting distribution (AGCWD) improves the brightness of dimmed images via traditional GC by incorporating the probability distribution of luminance pixels. However, images enhanced by AGCWD look a little dim. In [30], a color assignment method is derived that fits a specified, well-behaved target histogram. In [31], the final illumination map is obtained by imposing a structure prior on the initially estimated illumination map, and the enhancement result is derived from it. In [32], a camera response model is built to adjust each pixel to the desired exposure. However, the enhanced images look very bright, perhaps because of deviations in the model. Some fusion-based methods achieve good enhancement results [33,34]. In [33], the two inputs for fusion are obtained by improving the luminance and enhancing the contrast of a decomposed illumination. In [34], a multi-exposure fusion framework is proposed that blends multi-exposure images synthesized with the proposed camera response model, using a fusion weight matrix obtained by illumination estimation techniques.
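The AGCWD mapping mentioned above can be sketched from its commonly cited formulation: the luminance histogram is reweighted as pdf_w(l) = pdf_max * ((pdf(l) - pdf_min) / (pdf_max - pdf_min)) ** alpha, its cumulative sum cdf_w is normalized, and each gray level l is mapped to 255 * (l / 255) ** (1 - cdf_w(l)). This is a hedged sketch for a single 8-bit luminance channel; the function name and the default alpha = 0.5 are assumptions for illustration, not settings taken from [29] or from this paper.

```python
import numpy as np

def agcwd(lum: np.ndarray, alpha: float = 0.5) -> np.ndarray:
    """AGCWD-style mapping for a uint8 luminance channel (sketch; alpha is illustrative)."""
    pdf = np.bincount(lum.ravel(), minlength=256) / lum.size
    pdf_min, pdf_max = pdf.min(), pdf.max()
    if pdf_max > pdf_min:
        # weighting distribution: flattens dominant bins and lifts sparse ones
        pdf_w = pdf_max * ((pdf - pdf_min) / (pdf_max - pdf_min)) ** alpha
    else:
        pdf_w = pdf.copy()                        # flat histogram: nothing to reweight
    cdf_w = np.cumsum(pdf_w) / pdf_w.sum()
    levels = np.arange(256) / 255.0
    # adaptive gamma per gray level: the exponent 1 - cdf_w(l) is at most 1, so levels never darken
    lut = np.round(255.0 * levels ** (1.0 - cdf_w)).astype(np.uint8)
    lut[0] = 0                                    # keep black at black (avoids 0 ** 0 == 1)
    return lut[lum]

rng = np.random.default_rng(0)
dark = rng.integers(0, 61, size=(64, 64), dtype=np.uint8)
print(dark.mean(), agcwd(dark).mean())
```

Since the exponent shrinks as the weighted CDF grows, the levels where a dark image's mass is concentrated receive the strongest brightening.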

In this paper, we also propose a fusion-based method for enhancing low-light images, which is based on the proposed quasi-symmetric correction functions (QCFs). First, we present two pairs of QCFs to obtain a globally enhanced image from the original low-light image. Then, we apply CLAHE [17] to the value channel of this globally enhanced image to obtain a locally enhanced image. Finally, we merge the two images with a proposed multi-scale fusion formula. Our main contributions are as follows:

- (1) We define two pairs of QCFs that, through the proposed weighting-adding formula, simultaneously achieve a balanced correction of both dim and dazzling regions in low-light images.
- (2) We define three weight maps that are effective for contrast improvement and detail preservation in fusion. In particular, we design a value weight map that yields excellent overall brightness for low-light images.
- (3) Using the proposed multi-scale fusion strategy, which blends a globally enhanced image and a locally enhanced image, we achieve satisfactory enhancement results for low-light images based on the defined QCFs.
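The locally enhanced branch summarized before the contributions list (CLAHE applied to the value channel of the globally enhanced image) can be roughly illustrated as follows. The sketch extracts the HSV value channel V = max(R, G, B), applies a contrast-limited equalization to it, and rescales R, G, and B by the same factor so that hue and saturation are unchanged. For brevity it clips and redistributes a single global histogram instead of running CLAHE's tiled equalization with bilinear interpolation, so it is a simplified stand-in rather than CLAHE itself; `clip_limit` and both function names are hypothetical, and the input is assumed to be RGB in [0, 1].

```python
import numpy as np

def clip_limited_equalize(v: np.ndarray, clip_limit: float = 0.01, bins: int = 256) -> np.ndarray:
    """Contrast-limited HE of a channel in [0, 1], using one global histogram (no tiles)."""
    idx = np.minimum((v * bins).astype(int), bins - 1)        # bin index of each pixel
    hist = np.bincount(idx.ravel(), minlength=bins) / v.size
    excess = np.clip(hist - clip_limit, 0.0, None).sum()      # mass above the clip limit
    hist = np.minimum(hist, clip_limit) + excess / bins       # clip, then redistribute uniformly
    return np.minimum(np.cumsum(hist), 1.0)[idx]              # map each pixel through the CDF

def enhance_value_channel(rgb: np.ndarray, clip_limit: float = 0.01) -> np.ndarray:
    """Equalize the HSV value channel V = max(R, G, B) of an RGB image in [0, 1]."""
    rgb = rgb.astype(np.float64)
    v = rgb.max(axis=2)
    v_new = clip_limited_equalize(v, clip_limit)
    # scaling R, G, B by the same factor leaves HSV hue and saturation unchanged
    ratio = np.where(v > 0, v_new / np.maximum(v, 1e-12), 0.0)
    out = rgb * ratio[..., None]
    black = v == 0
    out[black] = v_new[black][:, None]    # black pixels become neutral gray at the new value
    return out

rng = np.random.default_rng(1)
img = rng.uniform(0.0, 0.2, size=(32, 32, 3))   # synthetic low-light RGB image
print(img.mean(), enhance_value_channel(img).mean())
```

Working on V alone and rescaling the three channels together avoids the color shifts that equalizing R, G, and B independently would cause.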

The rest of the paper is organized as follows. In Section 2, two pairs of QCFs are presented after introducing GC, and a preliminary comparison experiment is shown. In Section 3, we propose a low-light image enhancement method based on QCFs with a carefully designed fusion formula. In Section 4, experimental comparisons with different state-of-the-art methods are provided, and their performance is assessed and discussed. Finally, conclusions are presented in Section 5.

4. Results and Discussion

In this section, we present our experimental results and evaluate the performance of our method. First, we describe the experimental settings. Then, we evaluate the proposed method by comparing it with seven other state-of-the-art methods, both subjectively and objectively.

4.1. Experiment Settings

To fully evaluate the proposed method, we tested hundreds of images captured under different low-illumination conditions in various scenes. All test images came from Wang et al. [17] and Loh et al. [39]. All experiments were performed in MATLAB R2017b on a PC running Windows 10 with a 2.1 GHz Intel Pentium Dual Core processor and 32 GB of RAM.

In the following, we set l = 4 in (9), which gives a moderate number of pyramid layers, and we set γ = 0.4545 (approximately 1/2.2) in (2) and (3), which is the traditional choice. At the end of this section, we analyze the sensitivity of parameter l in (9) and parameter γ in (2) and (3).

4.2. Subjective Evaluation

We compared the proposed method with seven recently proposed methods: the naturalness-preserved enhancement algorithm (NPEA) [24], fast hue- and range-preserving histogram specification (FPHS) [30], multi-scale fusion (MF) [33], bio-inspired multi-exposure fusion (BIMEF) [34], naturalness-preserved image enhancement using a priori multi-layer lightness statistics (NPIE) [26], low-light image enhancement via illumination map estimation (LIME) [31], and low-light image enhancement using the camera response model (LECARM) [32].

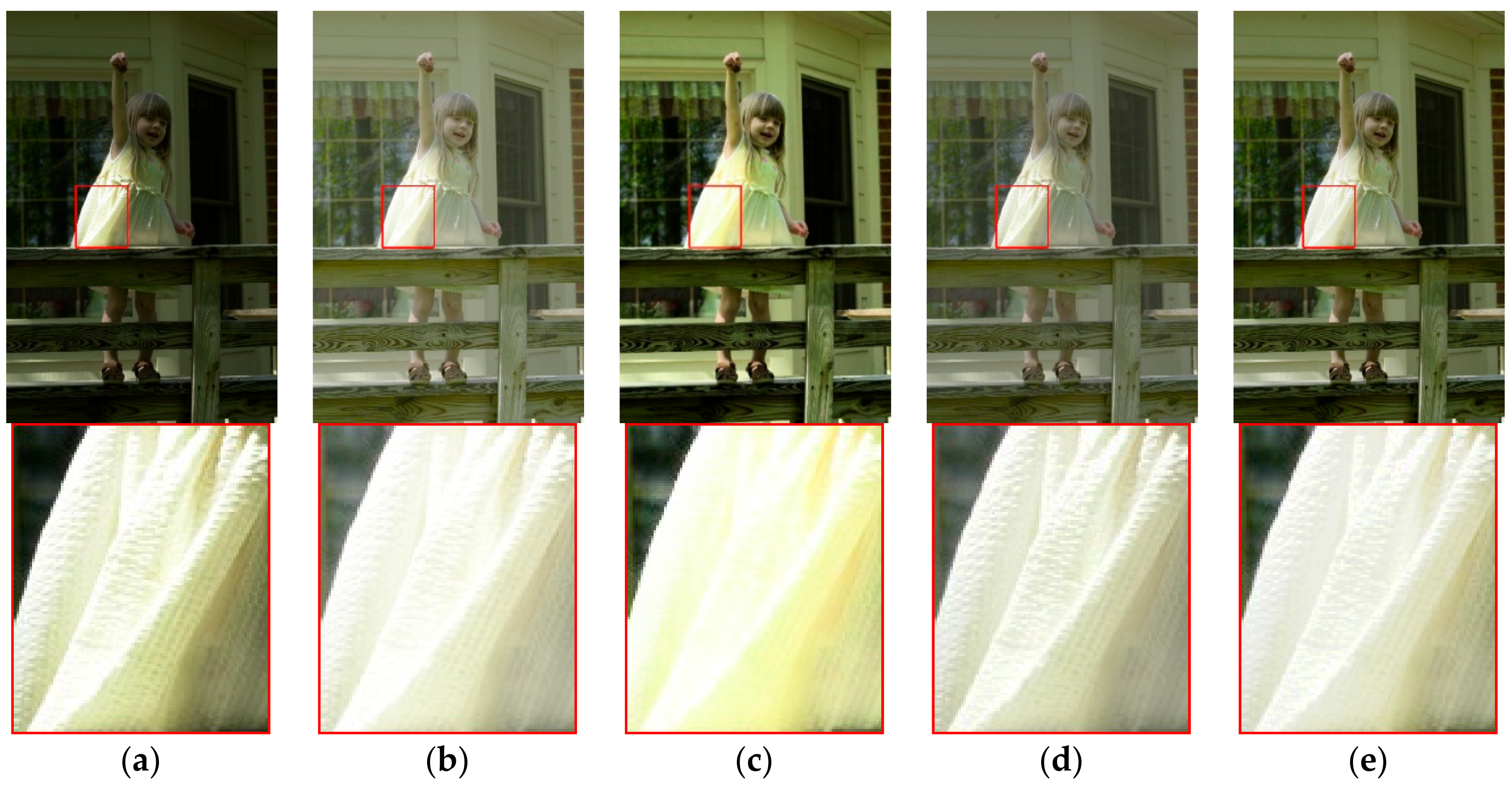

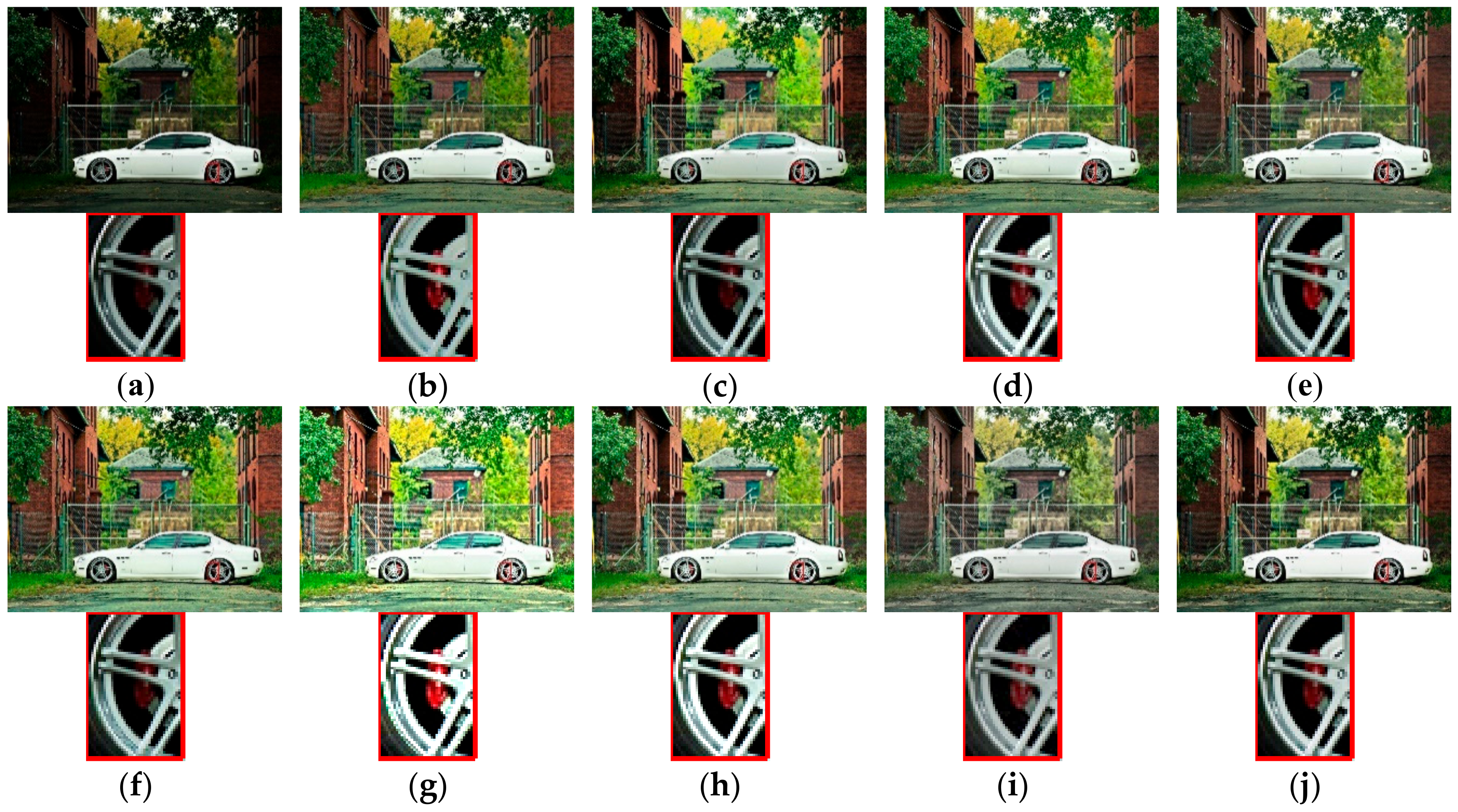

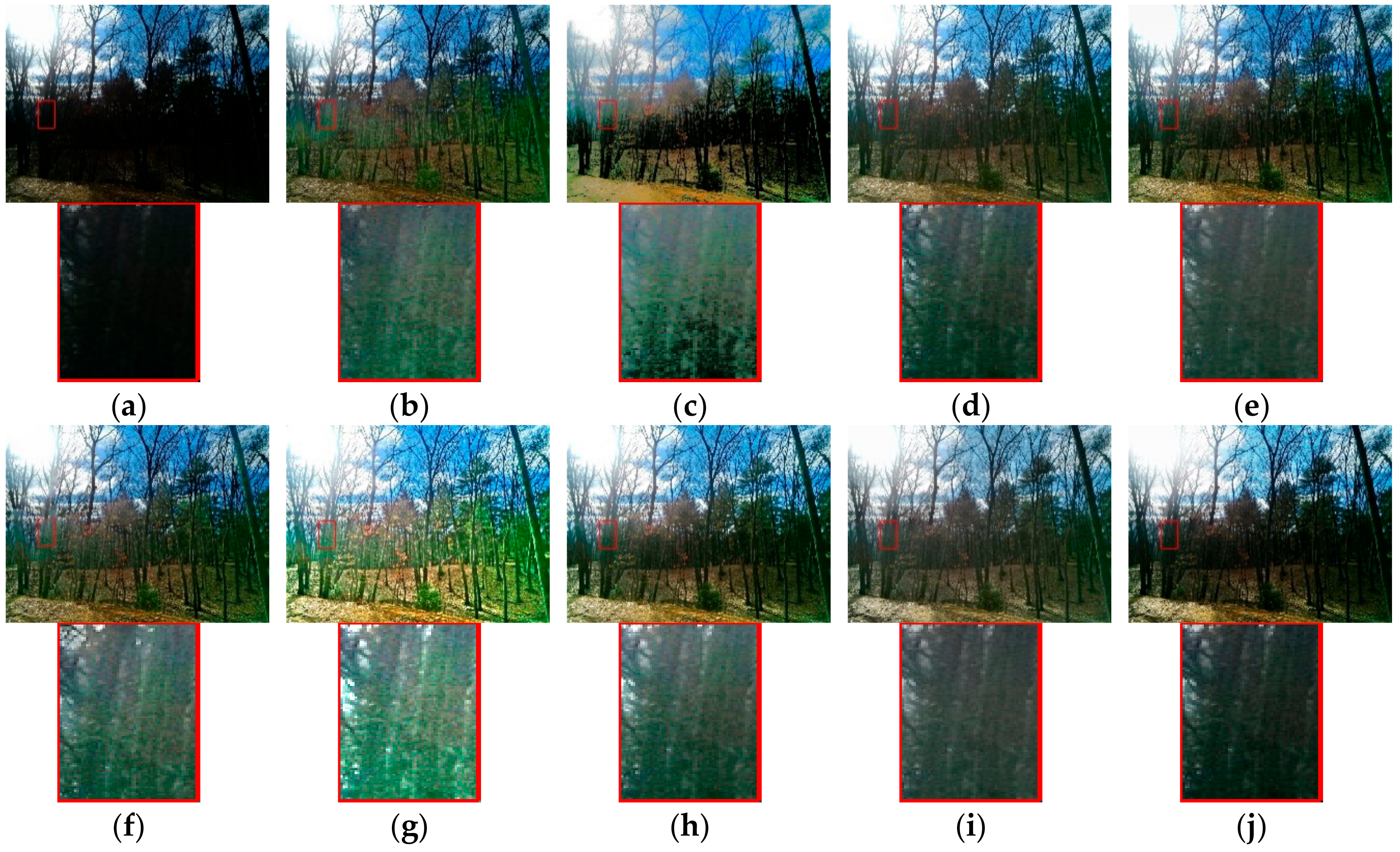

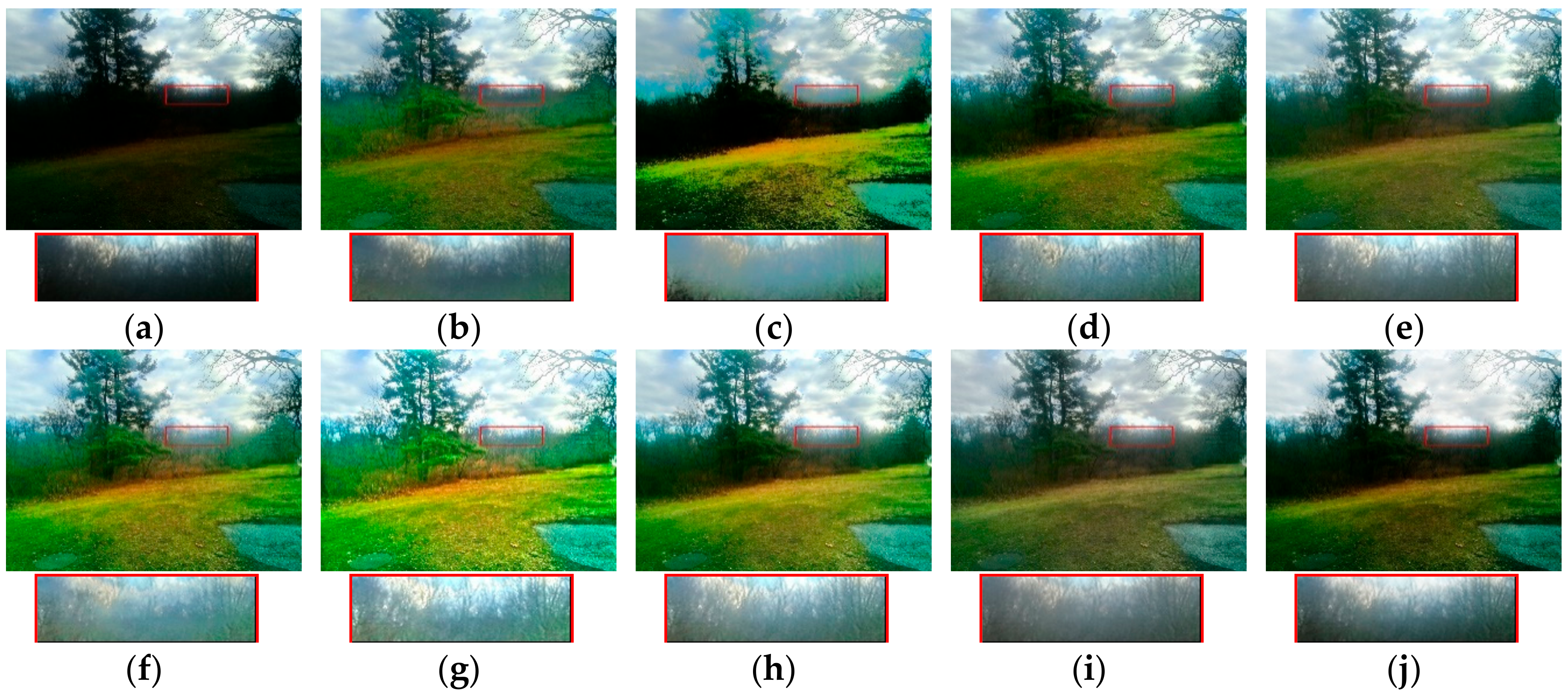

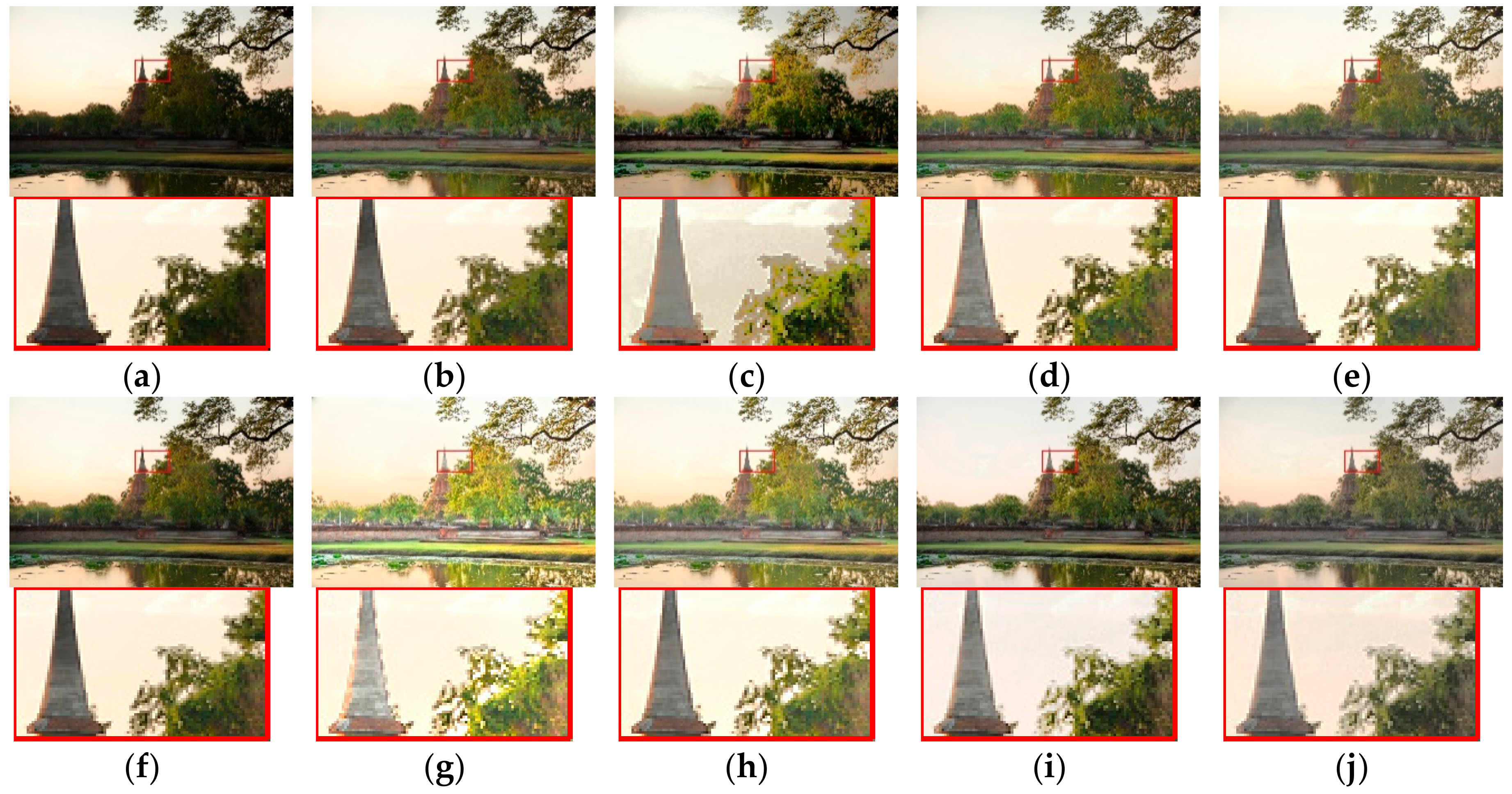

Five representative low-light images (“Car” in Figure 5, “Tree” in Figure 6, “Grass” in Figure 7, “Flower” in Figure 8, and “Tower” in Figure 9) and the results of the different methods are shown in Figures 5 to 9. Below each original or enhanced image is a zoomed-in view of the region marked by the small rectangle in that image.

NPEA is effective in preserving the naturalness of images, and the colors of its results are vivid. However, it does not work well in very dark regions (e.g., the treetop in the third image), which results in artifacts and noise in the enhanced image. FPHS has the advantage of enlarging the differences between large regions, but it sometimes introduces noise and halos (e.g., the light in the second image and the treetop in the third image) because similar gray levels are merged. MF, BIMEF, and LECARM all produce subjectively good results. NPIE is similar to NPEA; its results look better than those of NPEA, but it also suffers from artifacts (e.g., the treetop in the third image), and its runtime is long. LIME effectively improves the overall brightness of the image, especially in dark areas, and enhances details. Nevertheless, some regions, such as the car in the first image, are over-enhanced. By contrast, the proposed method produces satisfying results in most cases. Although the images enhanced by our method using QCFs (3) are a little dark, their details are effectively enhanced. Overall, our method not only generates more natural results without halo artifacts but also successfully enhances the visibility of low-illumination images.

4.3. Objective Evaluation

Subjective evaluation alone is not convincing; therefore, we also evaluate the performance of our method objectively. There are many objective image quality assessment (IQA) methods, including full-reference IQA [40], reduced-reference IQA [41], and no-reference IQA (NR-IQA) [42,43]. Because ground-truth images are difficult to obtain under the ubiquitous non-uniform illumination conditions, we adopt six NR-IQA indexes for objective evaluation: the natural image quality evaluator (NIQE) [42], integrated local NIQE (IL-NIQE) [43], lightness-order error (LOE) [24], the no-reference image quality metric for contrast distortion (NIQMC) [44], the colorfulness-based patch-based contrast quality index (C-PCQI) [45], and oriented gradients image quality assessment (OG-IQA) [46].

NIQE builds a simple and successful spatial-domain natural scene statistics model and extracts features from it to construct a collection of “quality-aware” statistical features; it measures deviations from the statistical regularities of natural images. IL-NIQE captures local distortion artifacts by integrating natural-image-statistics features derived from a local multivariate Gaussian model. LOE measures the change in lightness order between the original image and its enhanced version to objectively evaluate naturalness preservation. Lower values of NIQE, IL-NIQE, and LOE indicate better image quality. NIQMC is based on the concept of information maximization and can accurately judge which of two images has larger contrast and better quality. C-PCQI measures visual quality using a regression module of 17 features obtained by analyzing contrast, sharpness, and brightness. OG-IQA effectively provides quality-aware information by using the relative gradient orientations of the image. Higher values of NIQMC, C-PCQI, and OG-IQA indicate better image quality.
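Of the six indexes, LOE is simple enough to sketch directly. Following its usual definition from the NPEA work [24], the lightness of a pixel is the maximum of its R, G, and B values, and LOE averages, over pixels, the number of other pixels whose lightness order relative to that pixel changes after enhancement. The original computation is done on a downsampled image; the stride-based subsampling and the `size = 50` target below are assumptions made to keep the pairwise comparison cheap, so values may differ from published implementations.

```python
import numpy as np

def lightness_order_error(original: np.ndarray, enhanced: np.ndarray, size: int = 50) -> float:
    """LOE between two RGB images of the same shape; lower means better order preservation."""
    l_orig = original.astype(np.float64).max(axis=2)   # lightness L(x) = max(R, G, B)
    l_enh = enhanced.astype(np.float64).max(axis=2)
    # subsample by a stride so that roughly `size` pixels remain along the shorter side
    step = max(1, int(np.ceil(min(l_orig.shape) / size)))
    a = l_orig[::step, ::step].ravel()
    b = l_enh[::step, ::step].ravel()
    # U(p, q) = 1 if L(p) >= L(q); XOR marks pixel pairs whose relative order changed
    flipped = np.logical_xor(a[:, None] >= a[None, :], b[:, None] >= b[None, :])
    return float(flipped.sum(axis=1).mean())           # mean relative order difference

rng = np.random.default_rng(0)
gray = rng.uniform(size=(60, 60))
img = np.repeat(gray[..., None], 3, axis=2)            # synthetic gray RGB image
print(lightness_order_error(img, np.sqrt(img)), lightness_order_error(img, 1.0 - img))
```

Any strictly increasing tone curve, such as the square root above, keeps every pairwise order and so scores zero, whereas inverting the image flips every pair.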

Moreover, to verify the stability of the proposed method, the average assessment results over 100 images are given in Table 7. For our method based on QCFs (2), the NIQE and IL-NIQE scores both rank first, and the NIQMC, OG-IQA, and LOE scores rank second, third, and fourth out of nine, respectively. For our method based on QCFs (3), the C-PCQI score ranks first, the IL-NIQE and LOE scores both rank second out of nine, and the OG-IQA and NIQMC scores are in equal third place and equal fourth place, respectively. Hence, we conclude that our method based on QCFs (2) achieves the best overall image quality with a moderate capability for naturalness preservation. Our method based on QCFs (3) also achieves good scores and hence also has good potential for image enhancement.
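Because three indexes are lower-is-better (NIQE, IL-NIQE, LOE) and three are higher-is-better (NIQMC, C-PCQI, OG-IQA), ranking the nine methods requires flipping the direction per index. The sketch below uses competition ranking, so tied scores share a place, as in the "equal third place" above. The method names and numbers are placeholders made up for illustration; they are not values from Table 7.

```python
LOWER_IS_BETTER = {"NIQE", "IL-NIQE", "LOE"}
HIGHER_IS_BETTER = {"NIQMC", "C-PCQI", "OG-IQA"}

def rank_methods(scores: dict[str, dict[str, float]]) -> dict[str, dict[str, int]]:
    """Rank methods on each IQA index (1 = best); ties share a rank."""
    methods = list(scores)
    ranks: dict[str, dict[str, int]] = {m: {} for m in methods}
    for index in scores[methods[0]]:
        if index in LOWER_IS_BETTER:
            sign = 1.0
        elif index in HIGHER_IS_BETTER:
            sign = -1.0                                  # flip so that smaller is always better
        else:
            raise ValueError(f"unknown index direction: {index}")
        keyed = {m: sign * scores[m][index] for m in methods}
        for m in methods:
            # competition ranking: 1 + number of methods with a strictly better score
            ranks[m][index] = 1 + sum(other < keyed[m] for other in keyed.values())
    return ranks

# Placeholder scores for three hypothetical methods (made-up numbers, not Table 7 data)
toy = {
    "A": {"NIQE": 3.1, "NIQMC": 5.0},
    "B": {"NIQE": 3.4, "NIQMC": 5.2},
    "C": {"NIQE": 3.1, "NIQMC": 4.9},
}
print(rank_methods(toy))
```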

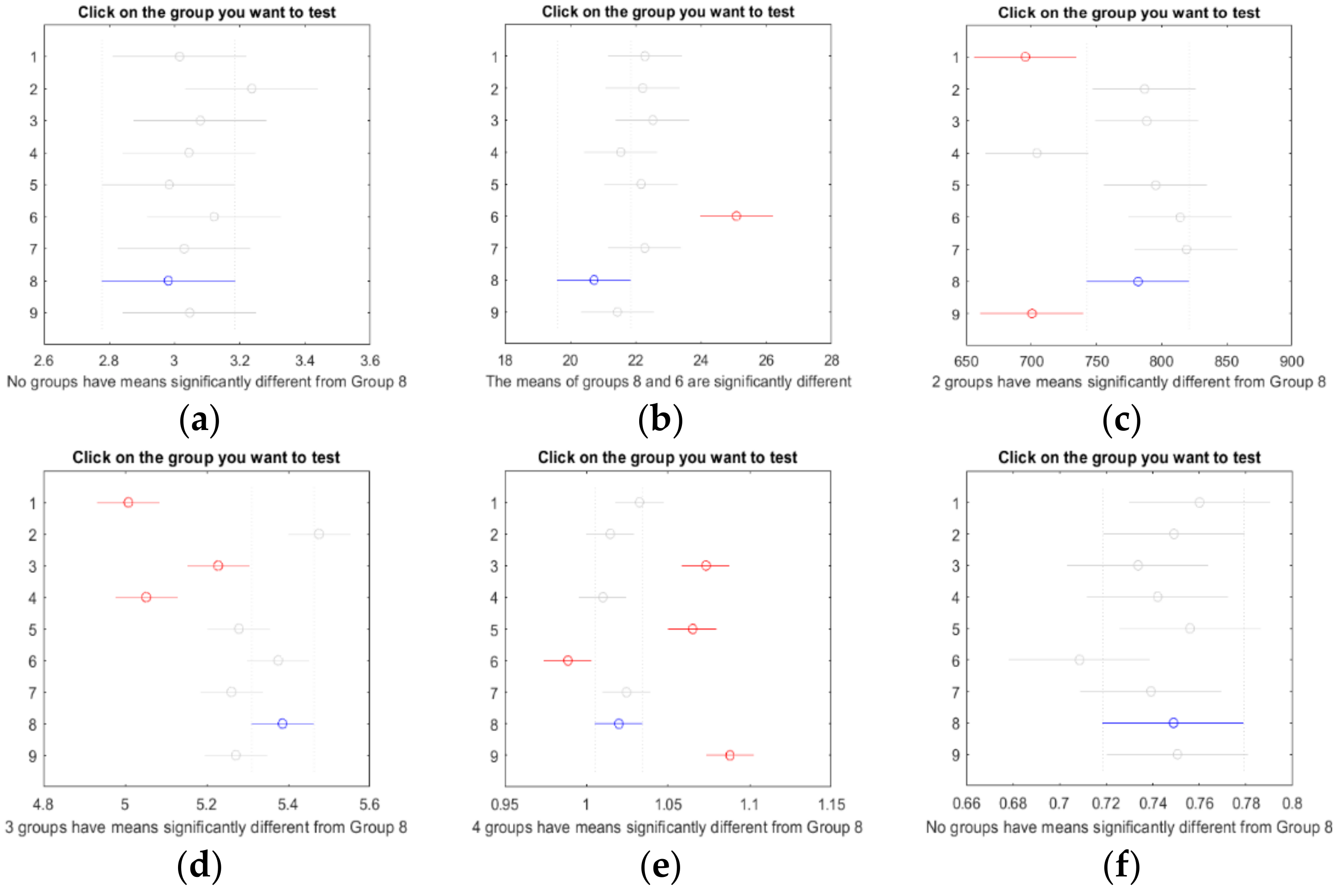

Furthermore, we present an analysis of variance (ANOVA) of the nine methods over the 100 test images for each of the six IQA indexes to show the different characteristics of each method. The ANOVA table is listed in Table 8. The results show no significant difference among the methods for either NIQE or OG-IQA.
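The experiments used MATLAB; as a hedged illustration only, the SciPy sketch below runs an equivalent one-way ANOVA for a single index, treating each method as a group and its per-image scores as observations. Whether the original analysis used exactly this one-way design is an assumption, and the scores are synthetic. The hand-written F statistic shows what `scipy.stats.f_oneway` computes in the balanced case.

```python
import numpy as np
from scipy import stats

def one_way_f(scores: np.ndarray) -> float:
    """F statistic of a balanced one-way ANOVA; rows = methods (groups), columns = images."""
    k, n = scores.shape
    group_means = scores.mean(axis=1)
    ss_between = n * np.sum((group_means - scores.mean()) ** 2)
    ss_within = np.sum((scores - group_means[:, None]) ** 2)
    return (ss_between / (k - 1)) / (ss_within / (k * n - k))

# Synthetic stand-in for one index: 9 methods x 100 images (not the paper's data)
rng = np.random.default_rng(0)
scores = rng.normal(loc=3.0, scale=0.5, size=(9, 100))
result = stats.f_oneway(*scores)          # one group per row
print(result.statistic, result.pvalue, one_way_f(scores))
```

A p-value above the chosen significance level (for example 0.05) means the index does not separate the methods, which is how the NIQE and OG-IQA rows of Table 8 are read.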

A post hoc multiple comparison test was also conducted using the MATLAB function “multcompare”. The significant differences found for QCFs (2) and (3) on the different indexes are shown in Figures 10 and 11, respectively, and the numbers of groups that differ significantly from QCFs (2) and (3) on each index are listed in Table 9.
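MATLAB's `multcompare` uses the Tukey-Kramer procedure by default. Assuming that default was kept, the counts in Table 9 can be reproduced in spirit with SciPy's `tukey_hsd` (available from SciPy 1.8), as in the sketch below: for a reference method, count how many other methods have an adjusted p-value below the significance level. The function name and the synthetic data are hypothetical.

```python
import numpy as np
from scipy import stats

def count_significant(scores: np.ndarray, ref: int, alpha: float = 0.05) -> int:
    """Number of methods whose mean score differs significantly from method `ref`
    according to Tukey's HSD (Tukey-Kramer for unequal group sizes)."""
    res = stats.tukey_hsd(*scores)            # rows of `scores` are the groups
    p_ref = res.pvalue[ref]                   # adjusted p-values of ref vs. every group
    return int(sum(p_ref[j] < alpha for j in range(len(scores)) if j != ref))

# Synthetic data: 5 hypothetical methods x 100 images; methods 3 and 4 are shifted
rng = np.random.default_rng(0)
scores = rng.normal(0.0, 1.0, size=(5, 100))
scores[3:] += 3.0
print(count_significant(scores, ref=0))
```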

The runtimes of the different methods are given in Table 10. In general, algorithms based on iterative or learning-based schemes, such as NPEA, FPHS, NPIE, and LIME, are time-consuming. Compared with the other methods, our method has a moderate computational cost, since it does not need iterations to produce its results.

4.4. Sensitivity of the Parameters l and γ

We also examine the sensitivity of parameter l in (9) and of parameter γ in (2) and (3).

With respect to the number of pyramid layers in the fusion procedure (9), we set l = 4 as a trade-off between performance and computational load, and the above experiments suggest that this choice is appropriate. To check it, we first fix γ in QCFs (2) or (3), then enumerate a small range of values of l around 4 and compute the corresponding average performance indexes for QCFs (2) and (3). The results for QCFs (2) and (3) with different l and fixed γ are reported in Tables 11 and 12, respectively. The indexes differ only slightly across the values of l.
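Equation (9) is not reproduced in this section, so the following is only a hedged sketch of a generic Laplacian-pyramid fusion in the spirit of exposure fusion (Mertens et al.), assuming that (9) has this general form: each input is decomposed into l Laplacian levels, each normalized weight map into l Gaussian levels, the levels are blended, and the result is collapsed. To stay self-contained, it uses a 2x2 box filter for downsampling and nearest-neighbour upsampling instead of the usual Gaussian kernel; all function names are hypothetical, and color images would be processed channel by channel.

```python
import numpy as np

def downsample(img: np.ndarray) -> np.ndarray:
    """Halve resolution with a 2x2 box filter (odd sizes padded by edge replication)."""
    h, w = img.shape
    img = np.pad(img, ((0, h % 2), (0, w % 2)), mode="edge")
    return 0.25 * (img[0::2, 0::2] + img[1::2, 0::2] + img[0::2, 1::2] + img[1::2, 1::2])

def upsample(img: np.ndarray, shape: tuple) -> np.ndarray:
    """Nearest-neighbour expansion by 2, cropped to the target shape."""
    up = np.repeat(np.repeat(img, 2, axis=0), 2, axis=1)
    return up[: shape[0], : shape[1]]

def gaussian_pyramid(img: np.ndarray, levels: int) -> list:
    pyr = [img]
    for _ in range(levels - 1):
        pyr.append(downsample(pyr[-1]))
    return pyr

def laplacian_pyramid(img: np.ndarray, levels: int) -> list:
    gp = gaussian_pyramid(img, levels)
    lp = [gp[k] - upsample(gp[k + 1], gp[k].shape) for k in range(levels - 1)]
    lp.append(gp[-1])                       # coarsest level keeps the low-pass residual
    return lp

def pyramid_fusion(images: list, weights: list, levels: int = 4) -> np.ndarray:
    """Blend 2-D images with per-pixel weight maps over `levels` pyramid layers."""
    w = np.stack(weights).astype(np.float64) + 1e-12
    w /= w.sum(axis=0, keepdims=True)       # weights sum to 1 at every pixel
    fused = None
    for img, wk in zip(images, w):
        lp = laplacian_pyramid(img.astype(np.float64), levels)
        gw = gaussian_pyramid(wk, levels)
        contrib = [l_k * g_k for l_k, g_k in zip(lp, gw)]
        fused = contrib if fused is None else [f + c for f, c in zip(fused, contrib)]
    out = fused[-1]                          # collapse: start from the coarsest level
    for k in range(levels - 2, -1, -1):
        out = fused[k] + upsample(out, fused[k].shape)
    return out

rng = np.random.default_rng(0)
a, b = rng.uniform(size=(37, 50)), rng.uniform(size=(37, 50))
print(pyramid_fusion([a, b], [a, 1.0 - a], levels=4).shape)
```

Each extra level halves the resolution at which the weight maps are applied, so larger l blends the weights more smoothly across scales at a higher computational cost; this is the trade-off behind the choice of l examined above.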

Figure 12 shows three low-light images and the corresponding enhanced images with l = 3, 4, and 5. As Figure 12 shows, the enhanced images obtained with different l do not differ much in visual effect, and the images enhanced with l = 4 have high contrast and satisfactory details. Considering both the indexes and the visual effects, we conclude that l = 4 is a good choice.

The experiments for parameter γ are analogous. We vary its value around 0.4545 and calculate the corresponding average performance indexes with l fixed for QCFs (2) and (3). The results for QCFs (2) and (3) with different γ and l = 4 are reported in Tables 13 and 14, respectively. They show that γ = 0.4545 balances computational load and performance and is hence a good choice.