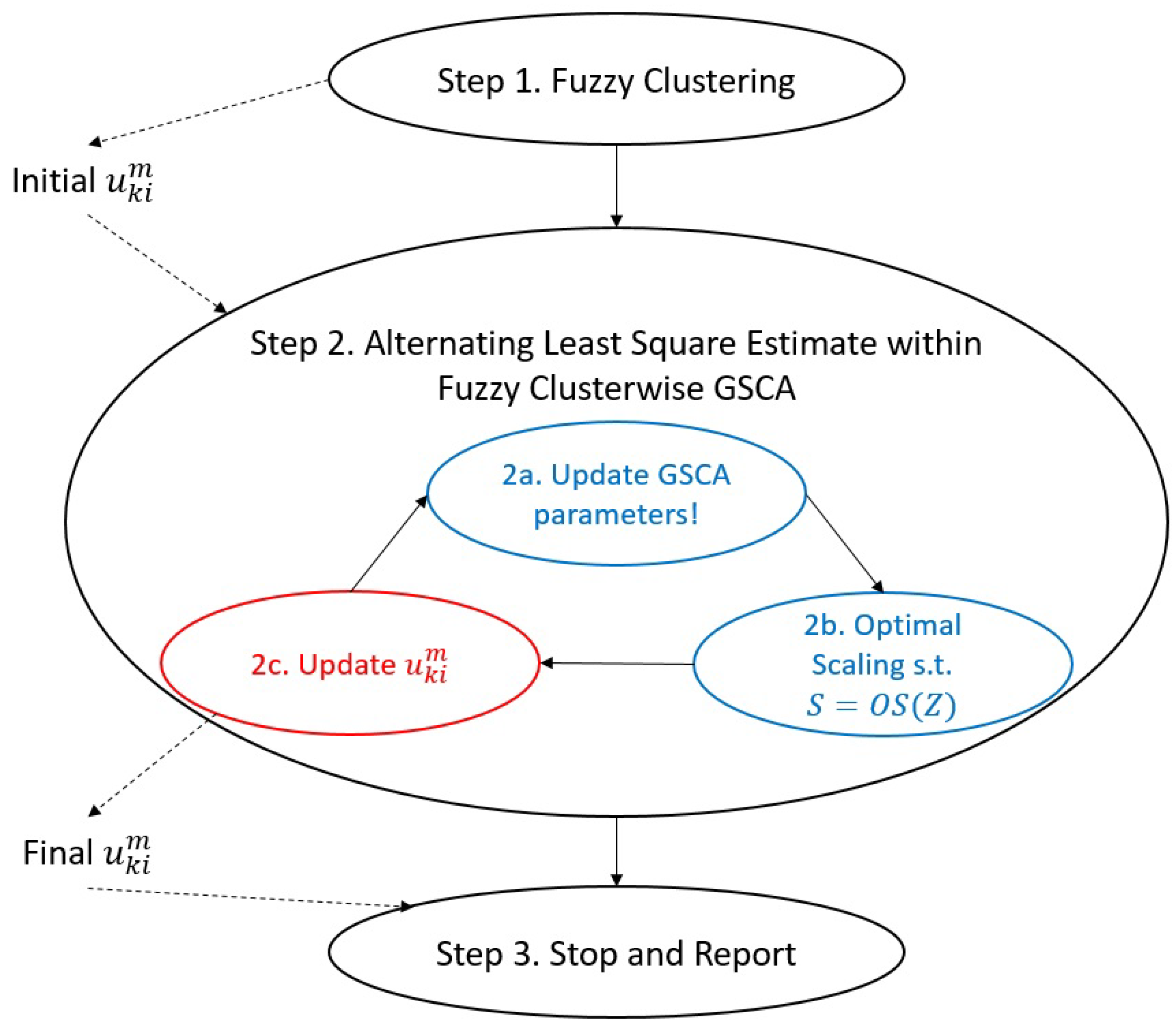

After fitting fuzzy clusterwise GSCA to 100 replications for each of the 162 conditions, we examined the efficiency of eight cluster validity indexes: FIT, AFIT, MPC, NCE, NPE, CLVI, FS, and FHV. In this section, we summarize the results for two cases: (1) a medium number of clusters with a medium sample size, and (2) a large number of clusters with a small sample size. The first case illustrates the trend of our findings across the 162 conditions, which allows us to formulate the findings and propose a holistic criterion. The second case speaks to the stability of the findings, because it is the most vulnerable case owing to the complexity of the clusters and the sampling variation of a small sample.

4.1. FIT-FHV Method

As Wang W. et al. [6,15] pointed out, no single index is sufficient to identify the true number of clusters, because the efficiency of each index depends on the data distribution. However, when the model evaluation tools in GSCA were controlled, it was clear that a holistic search performs well in identifying the true number of clusters.

Table 3 summarizes the results for the first case over all three types of distributions, T1, T2, and T3. Note that the symbol C in Table 3 denotes the number of clusters used for the estimation.

FIT and AFIT performed similarly and showed a large drop once C exceeded the true number of clusters K. The drop was more than 0.2 in T1 and T3 and more than 0.1 in T2. However, neither FIT nor AFIT discriminated among the values of C up to and including the true number of clusters. Although FHV was small at the true number of clusters, several cases with a different C showed lower values. Interestingly, none of the FHV values within the range of C where FIT and AFIT remained stable and high were lower than the FHV at the true number of clusters. Thus, the smallest FHV indicates the true number of clusters when FHV is considered only within the range of C for which FIT and AFIT are stable and high. We call this holistic criterion the FIT-FHV method. Only 11 of the 162 simulation conditions did not follow the FIT-FHV criterion, and those 11 conditions were associated with a small sample size, to which fuzzy clusterwise GSCA is rarely fitted in practice.
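The selection rule described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the authors' implementation: the function name `select_clusters_fit_fhv`, the `drop_threshold` cut-off, and the index values are all assumptions introduced here, and the sketch looks at FIT alone rather than FIT and AFIT together.

```python
def select_clusters_fit_fhv(cs, fit, fhv, drop_threshold=0.1):
    """Pick C as the smallest-FHV candidate before the first big drop in FIT.

    cs   : candidate numbers of clusters, in increasing order
    fit  : FIT value for each candidate
    fhv  : FHV value for each candidate
    drop_threshold : hypothetical cut-off for what counts as a "big" drop
    """
    if not cs or not (len(cs) == len(fit) == len(fhv)):
        raise ValueError("cs, fit and fhv must be non-empty and of equal length")

    # Keep candidates until FIT falls by more than the threshold
    # from one C to the next.
    stable = [0]
    for i in range(1, len(cs)):
        if fit[i - 1] - fit[i] > drop_threshold:
            break
        stable.append(i)

    # Among the stable candidates, the smallest FHV gives the selected C.
    best = min(stable, key=lambda i: fhv[i])
    return cs[best]


# Made-up values for illustration only (not taken from Table 3).
cs = [2, 3, 4, 5, 6]
fit = [0.71, 0.70, 0.70, 0.48, 0.47]
fhv = [0.90, 0.75, 0.52, 0.40, 0.45]
selected = select_clusters_fit_fhv(cs, fit, fhv)
print(selected)
```

In this made-up profile the overall smallest FHV occurs after the drop in FIT, so restricting the search to the stable range is what changes the answer.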

FS could be considered in a role similar to that of FHV in the FIT-FHV method, but FS was monotonic in C, the same drawback reported for the traditional partition coefficient (PC) and partition entropy (PE) in [15]. The index FS therefore requires further investigation. MPC, NCE, NPE, and CLVI neither indicated the true number of clusters nor selected any number consistently.

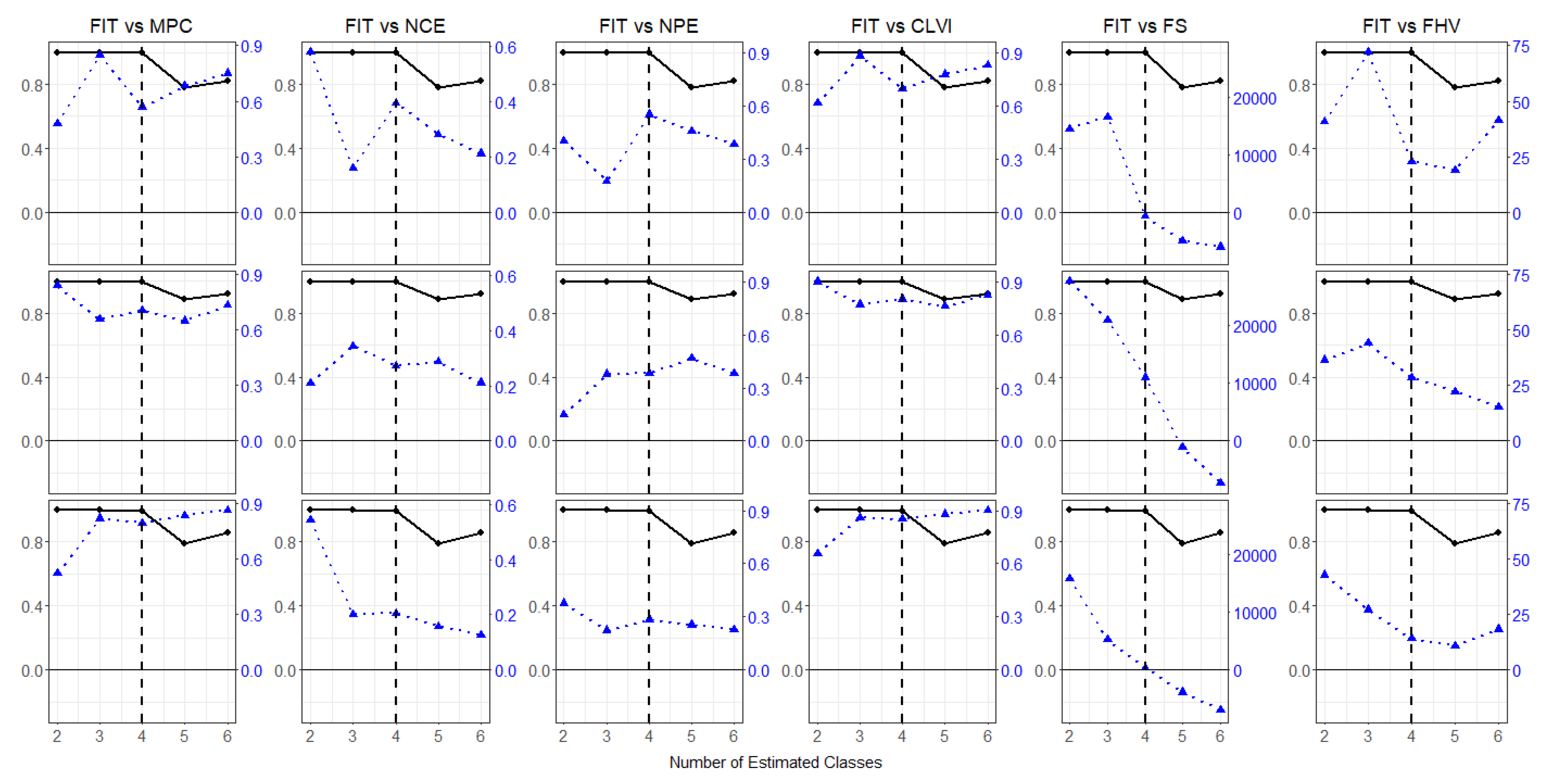

The property of the FIT-FHV criterion was also observed in Figure 2. Six indexes (blue) are plotted with the profile of FIT (black); the first row shows the equally clustered condition (T1), the second row the condition in which one cluster has a large proportion (T2), and the third row the condition in which one cluster has a small proportion (T3). The profile of FIT clearly showed the large drop. Within the range of C before the drop, FHV was smallest at the true number of clusters. Again, FS could also be considered, but its values ranged widely, from negative to positive. The FIT-FHV method worked well for larger samples, larger numbers of indicators, and smaller ER such as 5% and 10%.

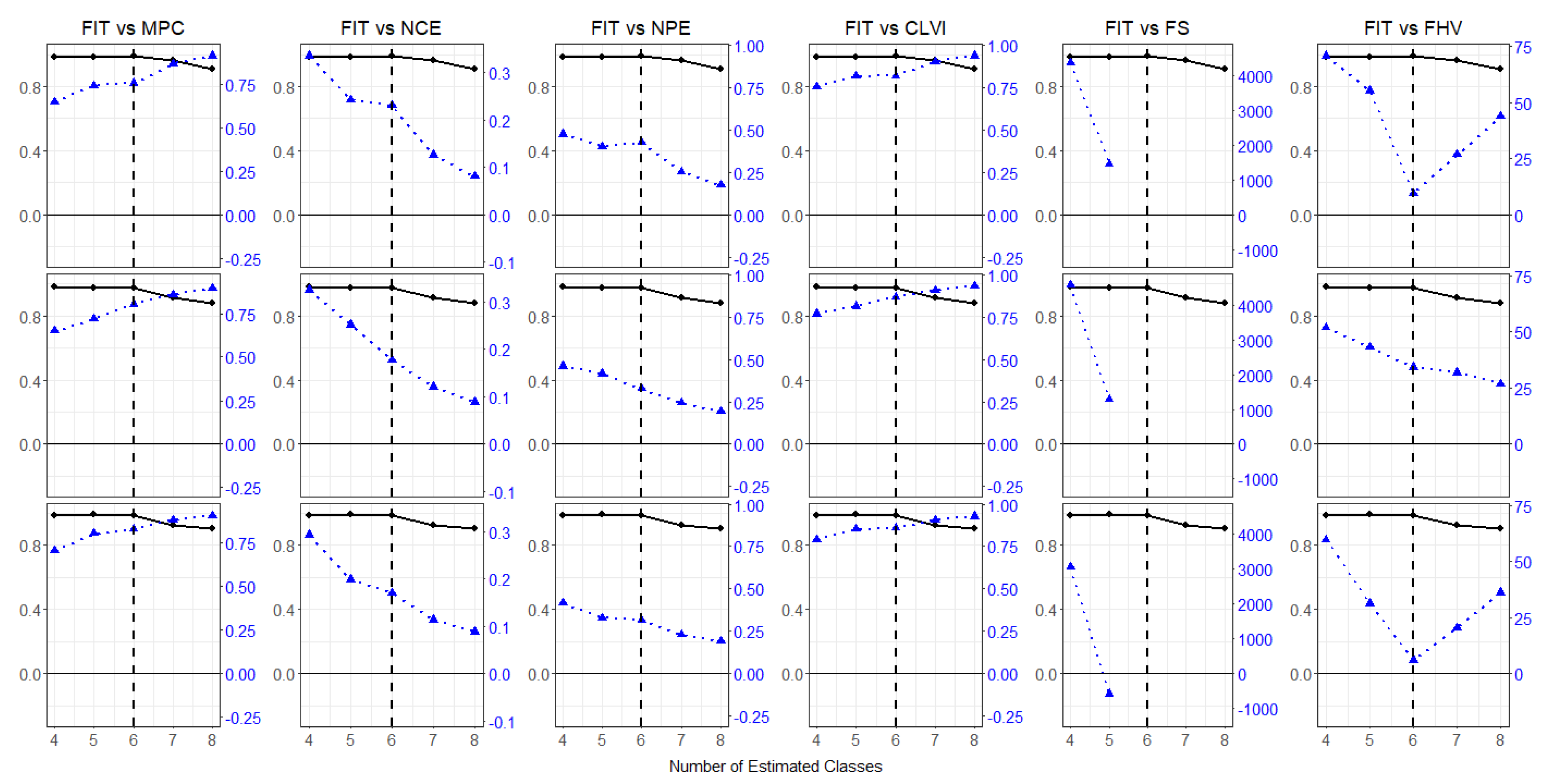

4.2. Stability of the FIT-FHV Method

Furthermore, we examined the stability of the FIT-FHV method under the most vulnerable condition, a large number of clusters with a small sample size (Table 4). Compared with the previous condition, this case has two additional clusters but only 200 samples. Although the drops in FIT and AFIT were smaller than in the previous case, a drop was still observed for each T. Based on the C selected by the FIT criterion, we easily identified the true number of clusters by examining the FHV values. The FIT-FHV method thus worked well even in the most vulnerable condition of the simulation study.

Figure 3 suggested that MPC, NCE, NPE, CLVI, and FS might serve as a criterion in a way similar to the FIT-FHV method. However, all five of these indexes were monotonic in C. Again, FS took increasingly negative values as C increased. Thus, we conclude that the FIT-FHV method outperforms the other indexes in identifying the true number of clusters in this simulation.
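Monotonicity is a drawback because an index that only rises or only falls with C always places its best value at one end of the searched range. The following sketch illustrates this with two made-up profiles; the helper names `is_monotonic` and `interior_optimum` and all numbers are assumptions introduced for illustration.

```python
def is_monotonic(values):
    """Return True if the sequence never changes direction (ties allowed)."""
    diffs = [b - a for a, b in zip(values, values[1:])]
    return all(d >= 0 for d in diffs) or all(d <= 0 for d in diffs)


def interior_optimum(cs, values, smaller_is_better=True):
    """Return the C with the best value, or None when the best value
    sits at either end of the searched range."""
    pick = min if smaller_is_better else max
    best = pick(range(len(cs)), key=lambda i: values[i])
    return None if best in (0, len(cs) - 1) else cs[best]


# Made-up index profiles for illustration only (not taken from Figure 3).
cs = [2, 3, 4, 5, 6, 7, 8]
monotone_index = [0.10, -0.05, -0.30, -0.80, -1.50, -2.40, -3.60]
u_shaped_index = [0.90, 0.70, 0.55, 0.40, 0.48, 0.60, 0.75]

print(is_monotonic(monotone_index), interior_optimum(cs, monotone_index))
print(is_monotonic(u_shaped_index), interior_optimum(cs, u_shaped_index))
```

A monotone profile yields no interior optimum, so its choice depends entirely on which range of C was searched.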

4.3. Prevalence of Clusters When C Is Assumed to be the Optimal Number of Clusters

In fuzzy clusterwise GSCA, it is also important that the clusters obtained with the number selected by the FIT-FHV method have proportions similar to those in the true population. Table 5 and Table 6 summarize the proportions for the two aforementioned conditions. In the first case, the true proportions are 25% per cluster in T1, 60% for the dominant cluster in T2, and 10% for the last cluster in T3. In T1, every C but one included a very small proportion in the last cluster. In practice, it is hard to characterize a group with such a small proportion. The remaining C, by contrast, had a last cluster of 13.38%, which was much smaller than 25% but not as small as in the others. In T2, where the true dominant group consists of 60%, several values of C held a close proportion. However, the last groups for two values of C were only 1.42% and 1.62%, respectively. Such low prevalence rates would not be meaningful. These results indicate that the FIT-FHV method works well. In T3, where the true last cluster consists of 10%, the prevalences may suggest that another C is a good proxy. However, its FHV was always higher than that of the C selected by the FIT-FHV method, which means that its classification would have weaker compactness (intra-cluster consistency). In T3, the selected C was clearly closer to the true prevalence than the alternatives, which either did not identify the fourth cluster or had too low a prevalence in the last cluster or in the last two clusters.

Even in the most vulnerable case, the same trend as in the first case was observed. Here the true proportions are 16.7% per cluster in T1, 60% for the dominant cluster in T2, and 10% for the last group in T3. In T1, every C but one included a very small proportion in the last cluster, whereas the remaining C had a last cluster of 11.54%, which was much smaller than 16.7% but not as small as in the others. In T2, where the true dominant cluster consists of 60%, several values of C held proportions close to the true ones. However, the second groups for two values of C, at 21.00% and 17.39%, respectively, were relatively higher than 8%, so those solutions would not be meaningful. In T3, where the true last group consists of 10%, the results may suggest that another C is a good proxy. However, its FHV was always higher than that of the C selected by the FIT-FHV method, which means that its classification would have weaker compactness (intra-cluster consistency). Overall, the selected C approximates the true prevalences.
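As a rough illustration of how estimated prevalences might be compared with the true ones, the sketch below computes cluster proportions from a fuzzy membership matrix. It assumes, purely for illustration, that each observation is assigned to the cluster with its largest membership degree and that clusters are matched by sorting both prevalence vectors; the text does not state either rule, and the function names and membership values are made up.

```python
def cluster_prevalence(memberships):
    """Percentage of observations assigned to each cluster.

    memberships : list of rows, one per observation, each holding the
                  fuzzy membership degrees for the C clusters.
    Assumption: hard assignment to the cluster with the largest membership.
    """
    n_clusters = len(memberships[0])
    counts = [0] * n_clusters
    for row in memberships:
        counts[max(range(n_clusters), key=lambda k: row[k])] += 1
    return [100.0 * c / len(memberships) for c in counts]


def max_abs_gap(estimated, true):
    """Largest absolute difference after sorting both prevalence vectors
    in decreasing order (assumed matching rule; needs equal lengths)."""
    if len(estimated) != len(true):
        raise ValueError("prevalence vectors must have the same length")
    est = sorted(estimated, reverse=True)
    tru = sorted(true, reverse=True)
    return max(abs(e - t) for e, t in zip(est, tru))


# Made-up memberships for eight observations and C = 4 (illustration only).
u = [
    [0.7, 0.1, 0.1, 0.1], [0.6, 0.2, 0.1, 0.1],
    [0.1, 0.8, 0.05, 0.05], [0.2, 0.5, 0.2, 0.1],
    [0.1, 0.1, 0.7, 0.1], [0.2, 0.1, 0.6, 0.1],
    [0.1, 0.1, 0.1, 0.7], [0.4, 0.3, 0.2, 0.1],
]
prev = cluster_prevalence(u)
gap = max_abs_gap(prev, [25.0, 25.0, 25.0, 25.0])
print(prev, gap)
```

A small gap under the equally clustered condition would correspond to the kind of agreement with the true proportions discussed above.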

4.4. Real World Application in the Field of Public Health

To investigate the applicability of the proposed FIT-FHV method to real-world data, we fitted fuzzy clusterwise GSCA to the Add Health data [26]. The Add Health data used in this study consist of five indicators with dichotomous responses. For a detailed explanation of the Add Health data, please refer to the package gscaLCA [4]. The sample size of the data is 5114, but we also explored smaller sample sizes corresponding to those used in the simulation study. For the smaller sample sizes (250, 500, and 1000), we randomly selected observations from the entire sample. For each sample size, we fitted fuzzy clusterwise GSCA with the number of clusters ranging from 2 to 8.
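The subsampling design described above can be sketched as follows. This is a minimal illustration under stated assumptions: the seeds are hypothetical, the subsamples are drawn independently of one another (the text does not say whether they were nested), and the model fitting itself is left out.

```python
import random

FULL_N = 5114                      # sample size reported for the Add Health data
SUBSAMPLE_SIZES = [250, 500, 1000]
CANDIDATE_CS = list(range(2, 9))   # numbers of clusters from 2 to 8


def draw_subsample(n_total, n_sub, seed):
    """Return sorted row indices of a simple random sample
    drawn without replacement."""
    rng = random.Random(seed)      # hypothetical seed, for reproducibility only
    return sorted(rng.sample(range(n_total), n_sub))


subsamples = {n: draw_subsample(FULL_N, n, seed=n) for n in SUBSAMPLE_SIZES}
subsamples[FULL_N] = list(range(FULL_N))   # the full data set is the fourth case

# Every (sample size, C) pair would then be passed to the fitting routine.
design = [(n, c) for n in subsamples for c in CANDIDATE_CS]
print(len(design))
```

Fixing the seed keeps the selected rows reproducible, so the fit indexes for each sample size can be recomputed on the same observations.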

The fit indexes used to determine the optimal number of clusters are summarized in Table 7. FIT and AFIT dropped at the same C regardless of the sample size. Although the drops were less pronounced for the full sample of 5114 than for the smaller samples, they were still noticeable. In addition, the FHV values were smallest at C = 4 for all four sample sizes when only the range of C before the drops was considered. Applying the FIT-FHV method to these results, we conclude that C = 4 is the optimal number of clusters for fitting fuzzy clusterwise GSCA to this dataset, regardless of the sample size. The empirical results also showed that MPC, NCE, NPE, CLVI, and FS were monotonic, as in the simulation. That is, the empirical data analysis confirmed that the proposed FIT-FHV method performed well.

Table 8 presents the prevalence results of the empirical data analysis. For a given number of clusters, the proportions of each cluster were similar overall across sample sizes, although with some variability. Noticeable variability was usually observed with the sample size of 250. When C = 4, the number of clusters selected by the FIT-FHV method, the ranges of prevalence excluding the sample size of 250 were 29.59 to 32.93%, 26.69 to 28.48%, 19.13 to 20.00%, and 18.83 to 19.58% for the four clusters, respectively. With the sample size of 250, the prevalences were 37.65%, 23.08%, 21.24%, and 17.81%. These differences across sample sizes may be due either to randomness or to the instability of small samples, as in the simulation study.