Space Habitat Data Centers—For Future Computing

Abstract

1. Introduction

2. Background

3. Problem Description

4. Proposed Solution

4.1. SHDC Network Architecture

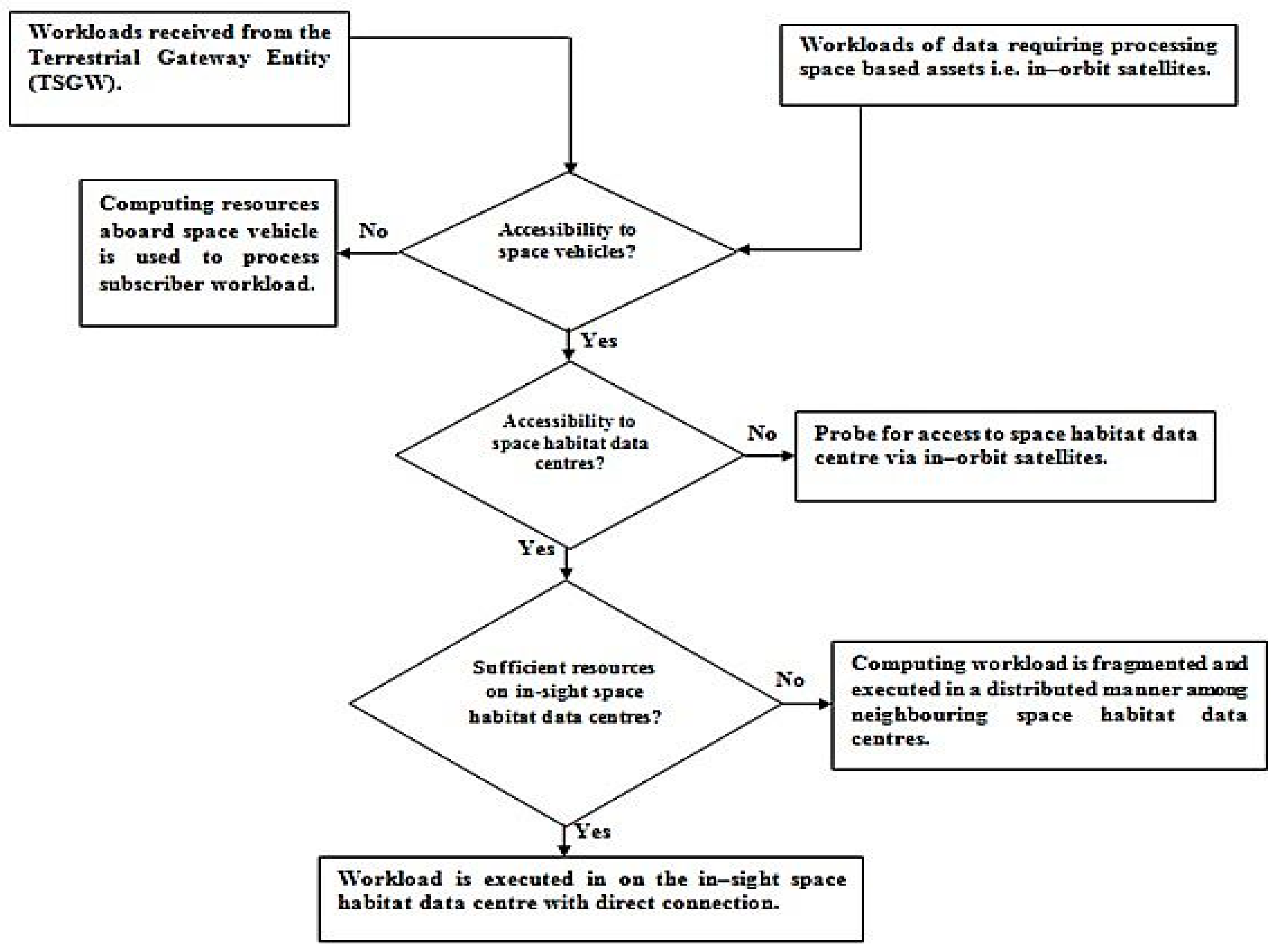

4.2. Proposed Solution—SHDC Access to Computing Resources

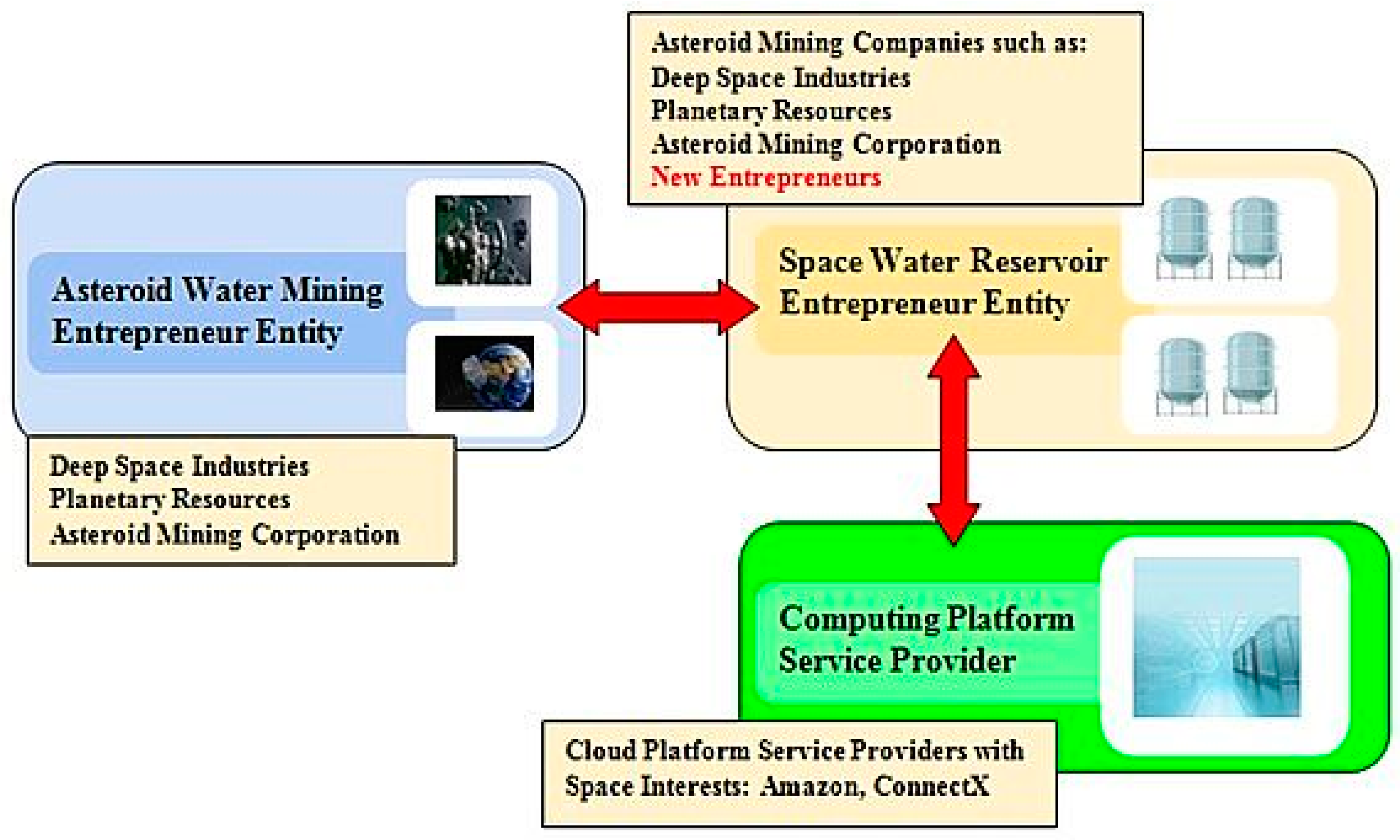

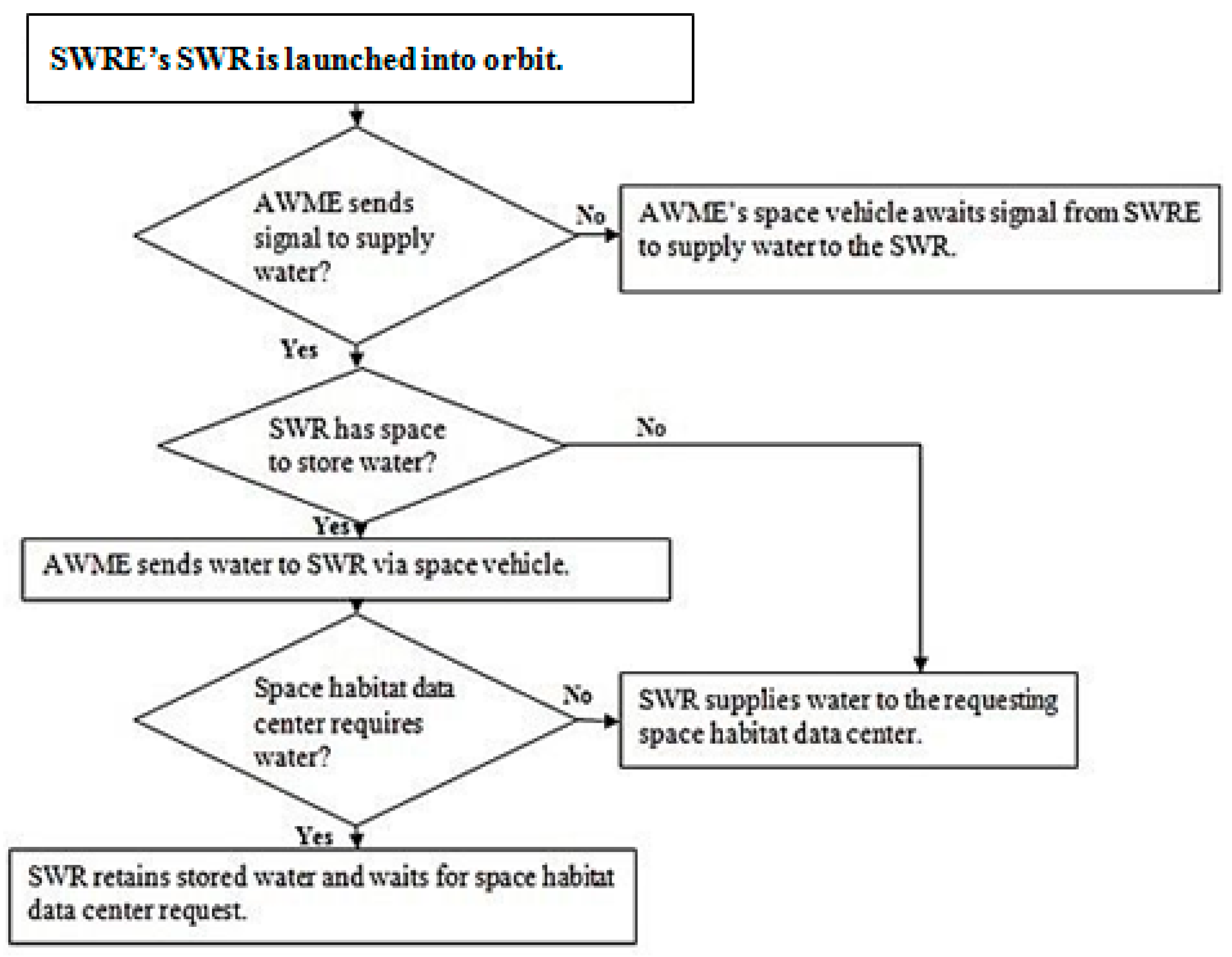

4.3. SHDC—Supplying Asteroid Water

5. Performance Formulation

5.1. Computing Platform Access Latency

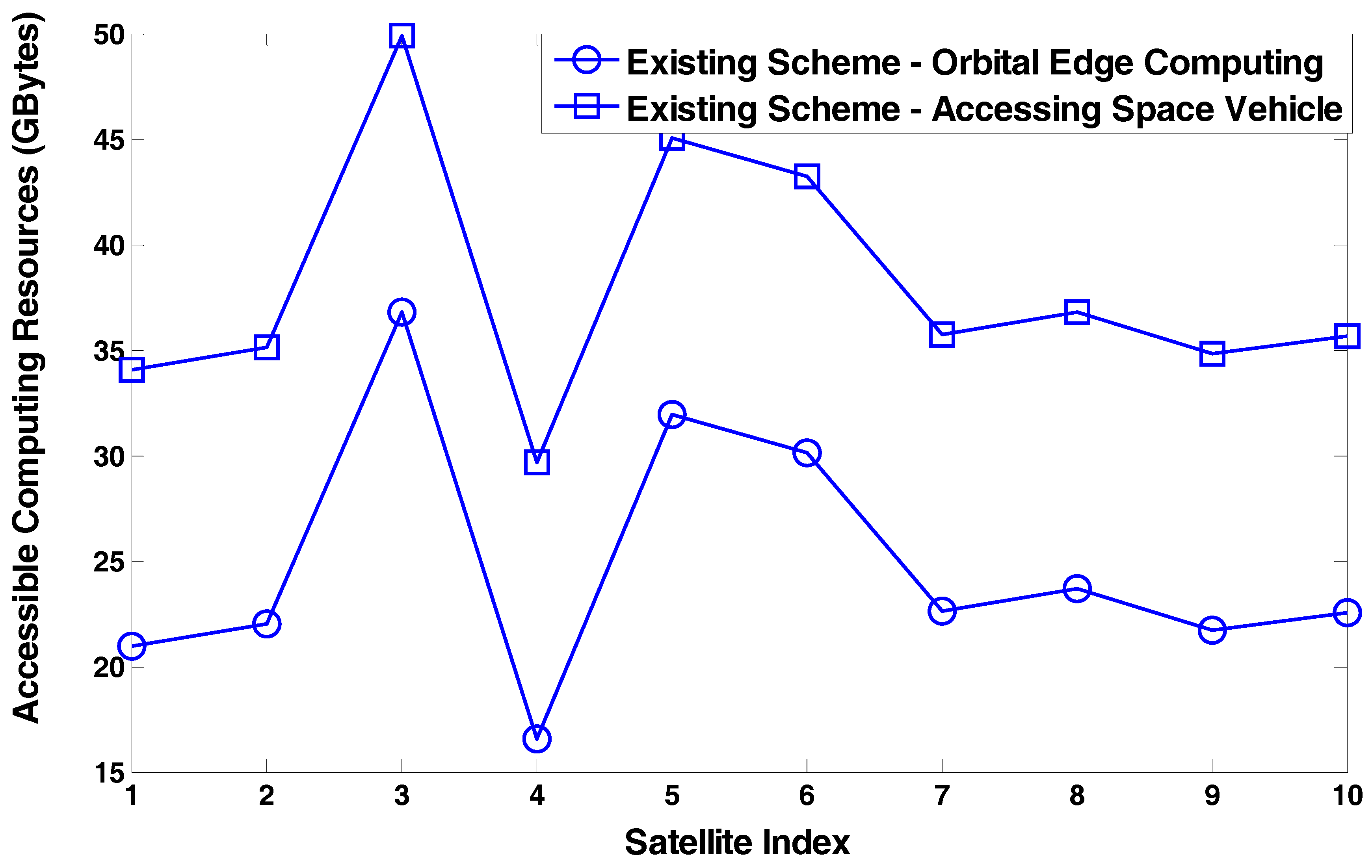

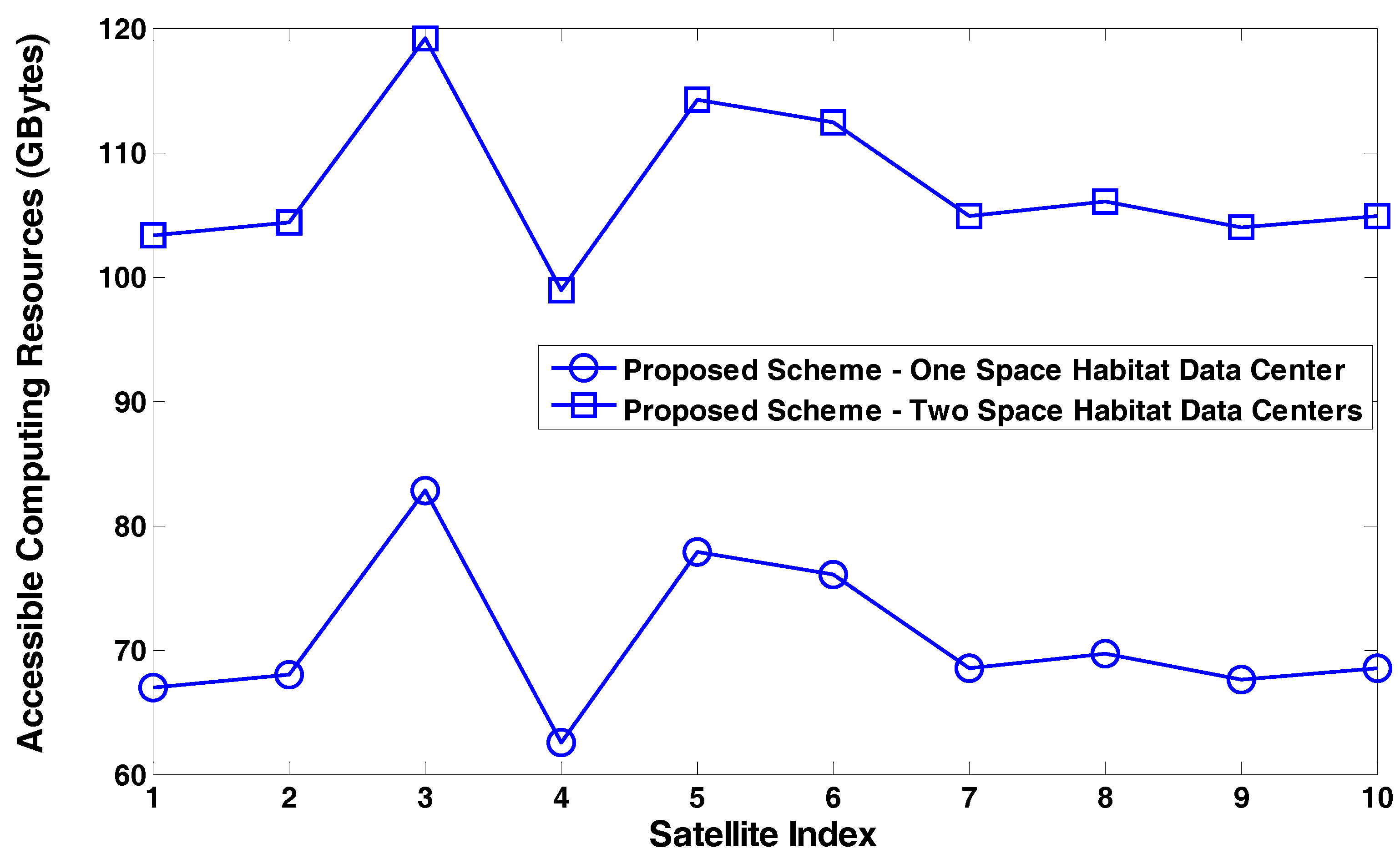

5.2. Accessible Computing Resources in Space Segment

6. Feasibility and Performance Evaluation

6.1. Feasibility of Accessing Asteroid Water Resources

6.2. Performance Evaluation and Benefits—Computing-Resource-Related QoS

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

| S/N | Name | Near-Earth Approach Dates |
|---|---|---|
| 1 | 1991 DB | 6 March 2027; 29 February 2036; 17 June 2083 (three approaches) |
| 2 | Seleucus | 24 March 2037; 6 April 2040; 8 May 2069; 27 March 2072 (four approaches) |
| 3 | 1998 KU2 | 15 October 2025; 31 July 2042; 18 September 2069; 28 June 2086; 18 October 2096 (five approaches) |
| 4 | 2001 PD1 | 3 October 2021; 23 September 2031; 1 September 2041; 1 November 2118 (four approaches) |
| 5 | 1992 NA | 27 October 2029; 14 August 2055; 25 October 2066; 12 October 2092; 8 August 2118 (five approaches) |
| 6 | 2002 AH29 | 19 January 2032; 2 April 2047; 6 April 2062; 28 January 2092; 19 February 2107 (five approaches) |
| 7 | David-Harvey | 16 December 2033; 10 December 2072; 17 December 2111 (three approaches) |
| 8 | 1999 VN6 | 27 November 2031; 22 November 2047; 25 November 2056; 1 December 2072; 1 December 2088; 30 November 2104 (six approaches) |
| 9 | 2001 XS1 | 8 December 2049; 8 December 2097 (two approaches) |
| 10 | 2001 SJ262 | 17 October 2057; 6 October 2062; 14 October 2103 (three approaches) |
| 11 | 1997 AQ18 | 11 May 2022; 21 December 2023; 16 August 2028; 14 June 2033; 16 December 2034; 1 May 2038; 30 September 2039; 31 July 2044; 30 December 2045; 31 May 2049; 14 December 2050; 26 April 2054; 18 September 2055; 5 January 2056; 24 July 2060; 28 December 2061; 27 May 2065; 14 December 2066; 26 April 2070; 18 September 2071; 5 January 2072; 27 July 2076; 29 December 2077; 1 June 2081; 15 December 2082; 30 April 2086; 28 September 2087; 1 January 2088; 6 August 2092; 17 December 2098; 7 May 2102 (31 approaches) |
| 12 | 2002 DH2 | 6 July 2046; 8 April 2049; 10 March 2052; 22 February 2055; 6 July 2108 (five approaches) |
| 13 | Betulia | 7 June 2028; 8 May 2090; 13 May 2103 (three approaches) |
| 14 | Sigurd | 12 October 2022; 18 September 2027; 7 August 2032; 2 October 2045; 30 August 2050; 20 September 2068; 19 August 2073; 15 October 2086; 9 September 2091; 8 August 2096; 30 September 2109 (11 approaches) |
| 15 | 1991 XB | 30 November 2067; 28 November 2118 (two approaches) |
| 16 | 2000 YO29 | 24 December 2027; 10 June 2040; 26 December 2049; 17 June 2062; 28 December 2071; 21 June 2084; 29 December 2093; 26 June 2106; 31 December 2115 (eight approaches) |
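The approach dates above can be merged into one chronological schedule of access opportunities. The sketch below is illustrative only: it uses a subset of three asteroids from the table, and it assumes that the gap between opportunities is simply the number of calendar days between consecutive approaches by any listed asteroid. The function names `access_schedule` and `gaps_in_days` are hypothetical, and the paper may define its access intervals differently.

```python
from datetime import date

# Approach dates copied from three rows of the table above (subset only,
# first two dates per asteroid).
approaches = {
    "2001 PD1": [date(2021, 10, 3), date(2031, 9, 23)],
    "1997 AQ18": [date(2022, 5, 11), date(2023, 12, 21)],
    "Sigurd": [date(2022, 10, 12), date(2027, 9, 18)],
}


def access_schedule(approaches_by_asteroid):
    """Return every (date, asteroid) pair, sorted chronologically."""
    return sorted(
        (approach_date, name)
        for name, dates in approaches_by_asteroid.items()
        for approach_date in dates
    )


def gaps_in_days(schedule):
    """Return the calendar-day gap between consecutive schedule entries."""
    return [
        (later[0] - earlier[0]).days
        for earlier, later in zip(schedule, schedule[1:])
    ]


schedule = access_schedule(approaches)
gaps = gaps_in_days(schedule)

for (approach_date, name), gap in zip(schedule, [None] + gaps):
    # The first entry has no predecessor, so its gap is shown as "-".
    print(approach_date.isoformat(), name, "-" if gap is None else gap)
```

Merging across asteroids, rather than looking at one asteroid at a time, is what shortens the waiting time between opportunities: each asteroid alone returns only every few years, while the combined schedule has entries months apart.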

| S/N | Access Dates | Access Intervals | Access Dates | Access Intervals |
|---|---|---|---|---|
| 1 | 3 October 2021 | [248; 181] | 27 November 2031 | [81; 227] |
| 2 | 11 May 2022 | | 19 January 2032 | |
| 3 | 12 October 2022 | [435; 688] | 7 August 2032 | [322; 212] |
| 4 | 21 December 2023 | | 14 June 2033 | |
| 5 | 15 October 2025 | [536; 223] | 16 December 2033 | [390; 469] |
| 6 | 6 March 2027 | | 16 December 2034 | |
| 7 | 18 September 2027 | [127; 192] | 29 February 2036 | [414; 431] |
| 8 | 24 December 2027 | | 24 March 2037 | |
| 9 | 7 June 2028 | [100; 437] | 1 May 2038 | [539; 215] |
| 10 | 16 August 2028 | | 30 September 2039 | |
| 12 | 23 September 2031 | | 6 April 2040 | 95 |
| | | | 10 June 2040 | |

| S/N | Parameter | Value |
|---|---|---|
| 1 | Number of satellites in space segment | 10 |
| 2 | Number of epochs | 15 |
| 3 | Maximum Size of Data–Satellite [1, 2, 3, 4, 5] | [1.48, 1.47, 1.25, 1.33, 1.45] Mbytes |
| 4 | Maximum Size of Data–Satellite [6, 7, 8, 9, 10] | [1.42, 1.47, 1.41, 1.46, 1.33] Mbytes |
| 5 | Minimum Size of Data–Satellite [1, 2, 3, 4, 5] | [1.04, 148.9, 353.6, 317.9, 852.81] Kbytes |
| 6 | Minimum Size of Data–Satellite [6, 7, 8, 9, 10] | [12.37, 82.31, 92.30, 113.00, 6.71] Kbytes |
| 7 | Mean Size of Data–Satellite [1, 2, 3, 4, 5] | [866.37, 777.78, 567.04, 917.37, 811.12] Kbytes |
| 8 | Mean Size of Data–Satellite [6, 7, 8, 9, 10] | [718, 665.93, 920.20, 844.61, 763.45] Kbytes |
| 9 | Maximum Link Speed–Satellite [1, 2, 3, 4, 5] | [2.99, 2.98, 2.92, 2.93, 2.68] Gbps |
| 10 | Maximum Link Speed–Satellite [6, 7, 8, 9, 10] | [2.82, 2.73, 2.69, 2.76, 2.83] Gbps |
| 11 | Minimum Link Speed–Satellite [1, 2, 3, 4, 5] | [380.17, 380.57, 930, 280.8, 124.44] Mbps |
| 12 | Minimum Link Speed–Satellite [6, 7, 8, 9, 10] | [32.67, 237.97, 776.48, 107.31, 156.90] Mbps |
| 13 | Mean Link Speed–Satellite [1, 2, 3, 4, 5] | [1.47, 1.87, 2.01, 1.69, 1.28] Gbps |
| 14 | Mean Link Speed–Satellite [6, 7, 8, 9, 10] | [1.395, 1.49, 1.26, 1.25, 1.35] Gbps |
| 15 | Altitude–Satellite [1, 2, 3, 4, 5] | [780.5, 791.0, 711.6, 631.04, 766.63] km |
| 16 | Altitude–Satellite [6, 7, 8, 9, 10] | [798.7, 760.4, 614.1, 574.4, 759.9] km |
| 21 | Number of Space Habitat Data Centers | 3 |
| 22 | Space Habitat Data Center Altitude–[1, 2, 3] | [921.1, 252.5, 383.7] km |
| 23 | Number of High Altitude Platforms | 4 |
| 24 | High Altitude Platform Altitude (Mesosphere)–[1, 2, 3, 4] | [25.4, 74.1, 67.5, 71.9] km |
| 25 | Size of Data Transmitted by High Altitude Platforms–[1, 2, 3, 4] | [1.4, 0.997, 0.45, 1.96] Mbps |
| 26 | Inter–High Altitude Platform Link Speed | [1.92, 0.69, 0.08] Mbps |
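To give a feel for the magnitudes these parameters imply, the sketch below estimates a one-hop latency as propagation delay plus transmission delay, using the mean data size, mean link speed, and altitude listed for satellite 1. This is a generic first-order model, not the latency formulation of Section 5.1. It assumes 1 Kbyte = 1000 bytes, free-space propagation at the speed of light, and a path length equal to the satellite altitude (the shortest possible ground-to-satellite distance). All function names are hypothetical.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def propagation_delay_s(distance_km):
    """Time for a signal to cover distance_km at the speed of light."""
    return distance_km * 1e3 / SPEED_OF_LIGHT_M_PER_S


def transmission_delay_s(data_kbytes, link_gbps):
    """Time to push data_kbytes onto a link of link_gbps.

    Assumes decimal units: 1 Kbyte = 1000 bytes, 1 Gbps = 1e9 bit/s.
    """
    bits = data_kbytes * 1e3 * 8
    return bits / (link_gbps * 1e9)


def one_hop_latency_s(distance_km, data_kbytes, link_gbps):
    """First-order latency: propagation plus transmission, no queueing."""
    return propagation_delay_s(distance_km) + transmission_delay_s(
        data_kbytes, link_gbps
    )


# Satellite 1 values from the table above.
altitude_km = 780.5
mean_data_kbytes = 866.37
mean_link_gbps = 1.47

prop = propagation_delay_s(altitude_km)
trans = transmission_delay_s(mean_data_kbytes, mean_link_gbps)
total = one_hop_latency_s(altitude_km, mean_data_kbytes, mean_link_gbps)

print(f"propagation  {prop * 1e3:.3f} ms")
print(f"transmission {trans * 1e3:.3f} ms")
print(f"total        {total * 1e3:.3f} ms")
```

Under these assumptions both terms are a few milliseconds, so neither dominates for satellite 1; queueing, processing, and multi-hop routing are deliberately left out.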

| S/N | Parameter | Value |
|---|---|---|
| 1 | Number of Satellites | 10 |
| 2 | Number of Epochs | 15 |
| 3 | Number of Space Vehicles and Space Habitat Data Centers | 5, 3 |
| 4 | Maximum Satellite Computational Resources [1, 2, 3, 4, 5] | [85.7, 90.2, 97.7, 97.2, 96.9] Gbytes |
| 5 | Maximum Satellite Computational Resources [6, 7, 8, 9, 10] | [96.2, 94.0, 84.8, 90.7, 88.7] Gbytes |
| 6 | Minimum Satellite Computational Resources [1, 2, 3, 4, 5] | [1, 1.76, 13.9, 0.32, 4.94] Gbytes |
| 7 | Minimum Satellite Computational Resources [6, 7, 8, 9, 10] | [7, 1, 1.02, 1.27, 0.45] Gbytes |
| 8 | Mean Computational Resources on Satellites [1, 2, 3, 4, 5] | [52.6, 52.4, 58.4, 39.2, 61.3] Gbytes |
| 9 | Mean Computational Resources on Satellites [6, 7, 8, 9, 10] | [50.3, 38.7, 43.2, 39.3, 57.0] Gbytes |
| 10 | Number of Servers on Space Habitat Data Centers 1, 2, 3 | 3 servers per SHDC |
| 11 | Computing Capability of Servers on SHDC 1–[1, 2, 3] | [65.5, 22.3, 50.1] Gbytes |
| 12 | Computing Capability of Servers on SHDC 2–[1, 2, 3] | [43.9, 24.3, 40.8] Gbytes |
| 13 | Computing Capability of Servers on SHDC 3–[1, 2, 3] | [11.0, 5.7, 42.0] Gbytes |
| 14 | Compute Utilization of Servers on SHDC 1–[1, 2, 3] | [36.3%, 21.3%, 7%] |
| 15 | Compute Utilization of Servers on SHDC 2–[1, 2, 3] | [47.7%, 72.5%, 24.8%] |
| 16 | Compute Utilization of Servers on SHDC 3–[1, 2, 3] | [18.8%, 5.2%, 43.6%] |
| 17 | Compute Resources on Space Vehicles–[1, 2, 3, 4, 5] | [49.8, 32.6, 72.2, 7.5, 51.1] Gbytes |
| 18 | Space Vehicle 1 Fully Utilised (No Computing Resources) | Yes |
| 19 | Space Vehicle 2 Fully Utilised (No Computing Resources) | Yes |
| 20 | Space Vehicle 3 Fully Utilised (No Computing Resources) | No |
| 21 | Space Vehicle 4 Fully Utilised (No Computing Resources) | No |
| 22 | Space Vehicle 5 Fully Utilised (No Computing Resources) | No |
| 23 | Compute Resource Utilisation on Space Vehicles–[3, 4, 5] | [63.8%, 37.2%, 28.8%] |
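One way to read the capability and utilization rows together is as idle capacity per server. The sketch below does this for SHDC 1. It assumes that utilization is the fraction of a server's listed capability already in use, so that the idle share is capability × (1 − utilization). This reading is an assumption, not the accessible-resource formulation of Section 5.2, and `idle_capacity_gbytes` is a hypothetical helper.

```python
def idle_capacity_gbytes(capabilities_gbytes, utilizations_percent):
    """Return per-server idle capacity, assuming utilization is the
    percentage of each server's capability that is already in use."""
    if len(capabilities_gbytes) != len(utilizations_percent):
        raise ValueError("one utilization value is needed per server")
    return [
        capability * (1.0 - utilization / 100.0)
        for capability, utilization in zip(
            capabilities_gbytes, utilizations_percent
        )
    ]


# SHDC 1 values from rows 11 and 14 of the table above.
shdc1_capability = [65.5, 22.3, 50.1]   # Gbytes
shdc1_utilization = [36.3, 21.3, 7.0]   # percent

shdc1_idle = idle_capacity_gbytes(shdc1_capability, shdc1_utilization)
shdc1_idle_total = sum(shdc1_idle)

for server, idle in enumerate(shdc1_idle, start=1):
    print(f"SHDC 1 server {server}: {idle:.2f} Gbytes idle")
print(f"SHDC 1 total: {shdc1_idle_total:.2f} Gbytes idle")
```

Under this reading, SHDC 1 has roughly 106 Gbytes idle across its three servers, with server 3 contributing the largest share because it is only 7% utilized.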

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Periola, A.; Alonge, A.; Ogudo, K. Space Habitat Data Centers—For Future Computing. Symmetry 2020, 12, 1487. https://doi.org/10.3390/sym12091487
