Enhancement in Quality Estimation of Resistance Spot Welding Using Vision System and Fuzzy Support Vector Machine

Abstract

1. Introduction

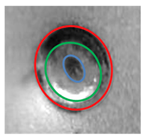

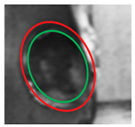

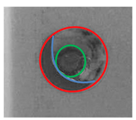

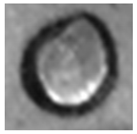

- Improve the image processing methods to cover non-circular weld nuggets and extract the characteristics of the weld nugget surface more accurately. The major and minor diameters, center coordinates, and angle of both the fusion zone and the heat-affected zone are considered.

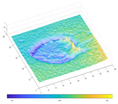

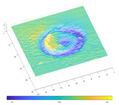

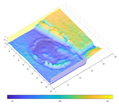

- Show more detail of the weld nugget’s surface: the reconstructed three-dimensional (3D) model represents the topography of the weld nugget’s surface more accurately.

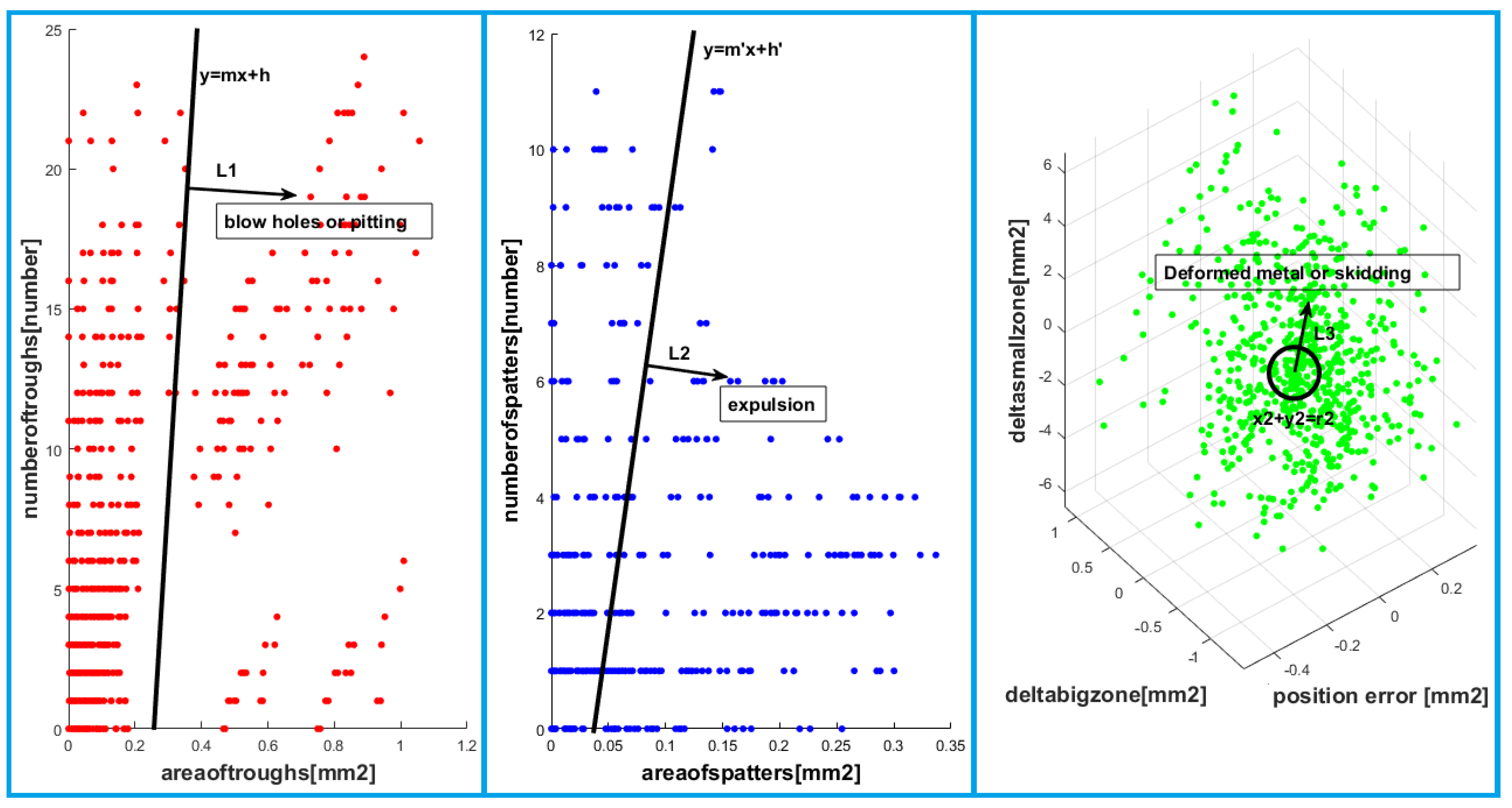

- Increase the efficiency of the proposed system by using a large number of samples for data training. In addition, the fuzzy support vector machine (FSVM) method applies fuzzy functions to three groups of the extracted data to improve data training and classify the failure modes of resistance spot welding (RSW) effectively.

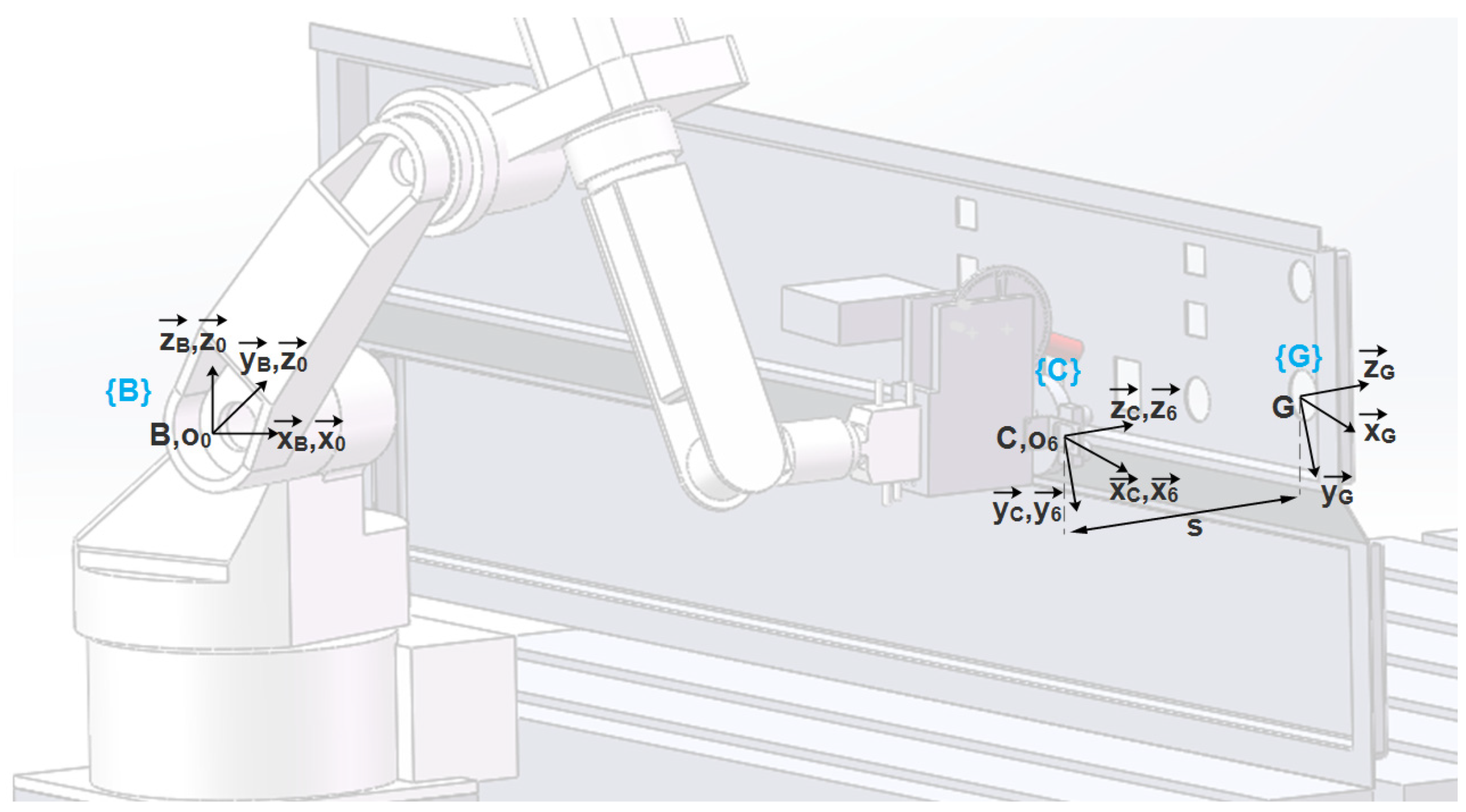

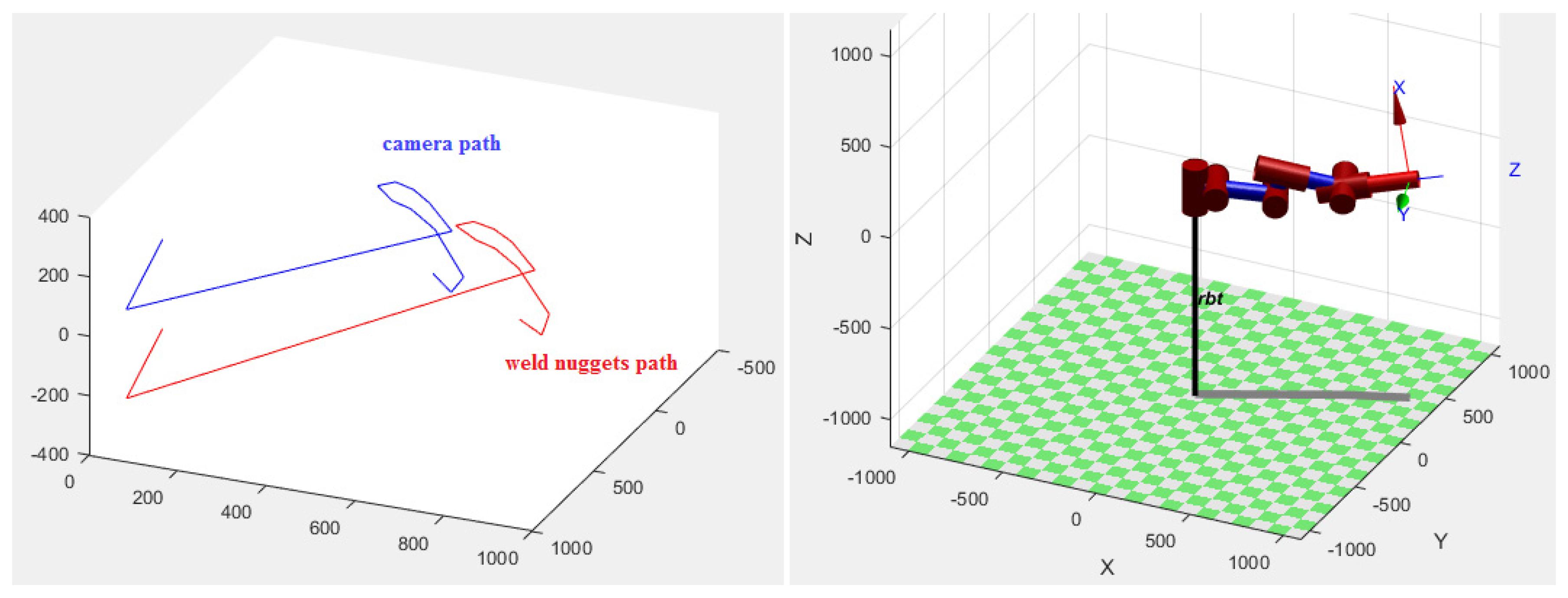

- Describe the positions of the weld nuggets mathematically and plan the required end-effector path of the 6-degrees-of-freedom (6-DOF) robotic arm to capture a clear image of each weld nugget.
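The ellipse features named in the first contribution (major and minor diameters, center coordinates, and angle) can be illustrated with a minimal sketch. It assumes the zone has already been segmented into a filled set of pixels and estimates the parameters from second-order moments. This is one common technique, not necessarily the authors' method, and the function names `ellipse_from_region` and `filled_ellipse` are hypothetical.

```python
import math

def ellipse_from_region(points):
    """Estimate ellipse parameters from the (x, y) pixels of a filled region."""
    n = len(points)
    cx = sum(x for x, _ in points) / n
    cy = sum(y for _, y in points) / n
    # Central second-order moments (covariance of the pixel coordinates).
    mu20 = sum((x - cx) ** 2 for x, _ in points) / n
    mu02 = sum((y - cy) ** 2 for _, y in points) / n
    mu11 = sum((x - cx) * (y - cy) for x, y in points) / n
    # Eigenvalues of the 2x2 covariance matrix.
    mean = (mu20 + mu02) / 2
    spread = math.sqrt(((mu20 - mu02) / 2) ** 2 + mu11 ** 2)
    lam_major, lam_minor = mean + spread, mean - spread
    # For a uniformly filled ellipse the variance along a semi-axis a is a^2 / 4,
    # so the full diameter is 4 * sqrt(variance).
    return {
        "center": (cx, cy),
        "major": 4 * math.sqrt(lam_major),
        "minor": 4 * math.sqrt(lam_minor),
        "angle_deg": math.degrees(0.5 * math.atan2(2 * mu11, mu20 - mu02)),
    }

def filled_ellipse(cx, cy, a, b, angle_deg):
    """Synthetic test region: integer pixels inside a rotated ellipse."""
    t = math.radians(angle_deg)
    c, s = math.cos(t), math.sin(t)
    r = int(max(a, b)) + 1
    pts = []
    for x in range(int(cx) - r, int(cx) + r + 1):
        for y in range(int(cy) - r, int(cy) + r + 1):
            dx, dy = x - cx, y - cy
            u = dx * c + dy * s    # coordinate along the major axis
            v = -dx * s + dy * c   # coordinate along the minor axis
            if (u / a) ** 2 + (v / b) ** 2 <= 1.0:
                pts.append((x, y))
    return pts

features = ellipse_from_region(filled_ellipse(100, 80, 40, 25, 30))
```

On the synthetic region the recovered diameters, center, and angle should be close to the values used to generate it; on real images the result depends on the quality of the segmentation.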

2. Hardware and Software Interface

3. Positions Detection of the Resistance Spot Welding

3.1. Weld Nugget’s Position Description

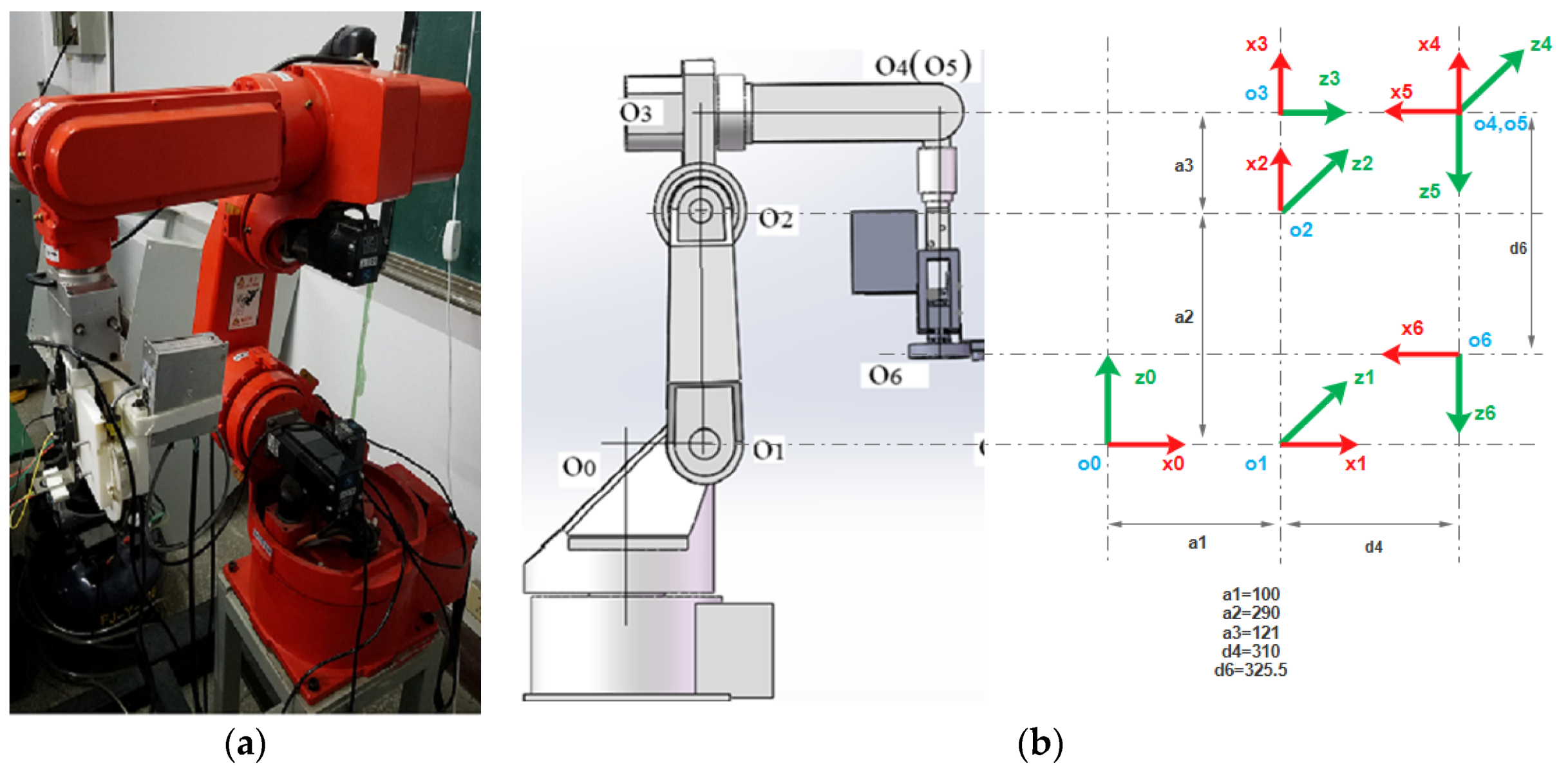

3.2. Establishing the Forward Kinematics of the Robotic Arm

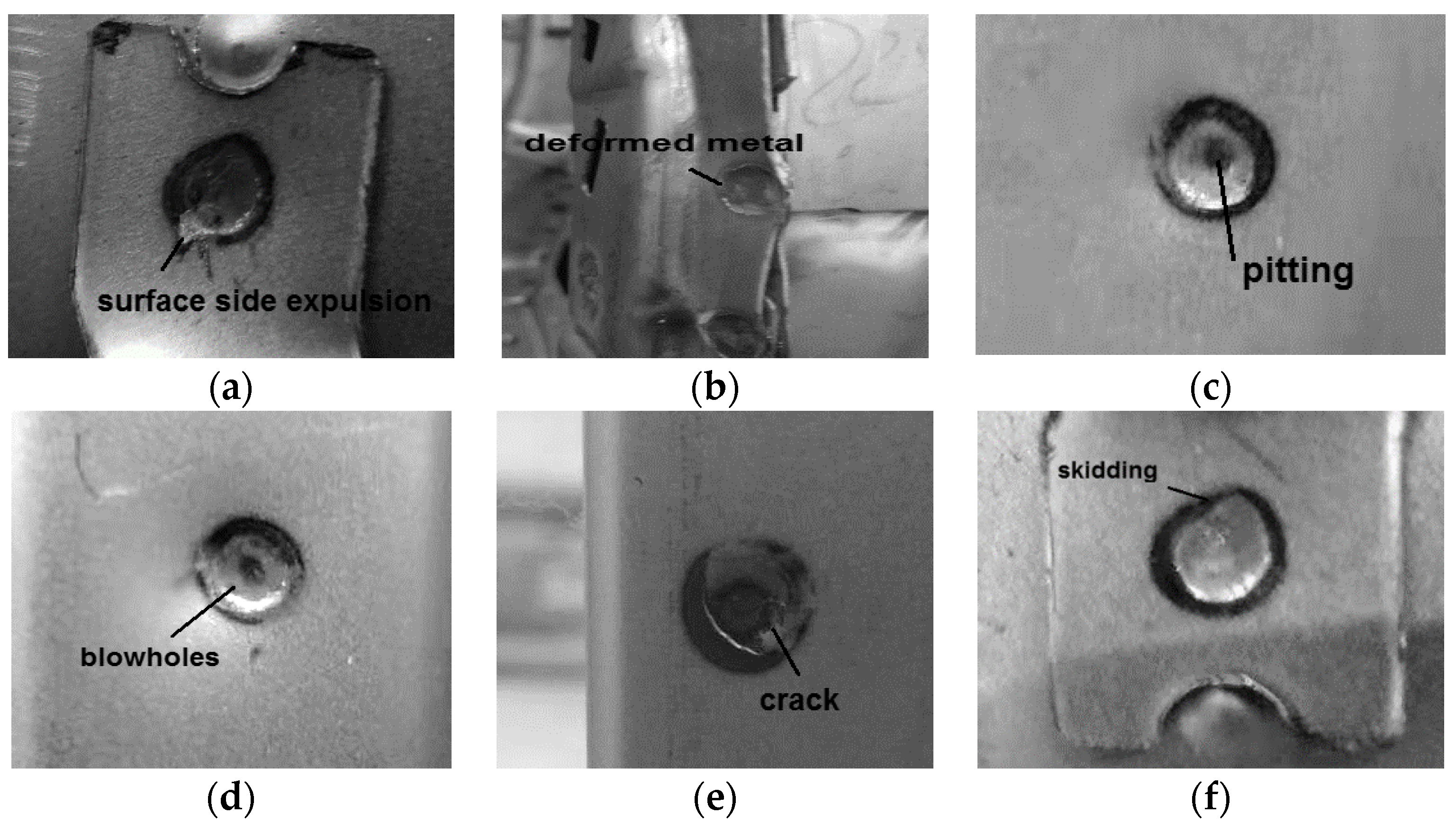

4. Resistance Spot Welding’s Failure Modes

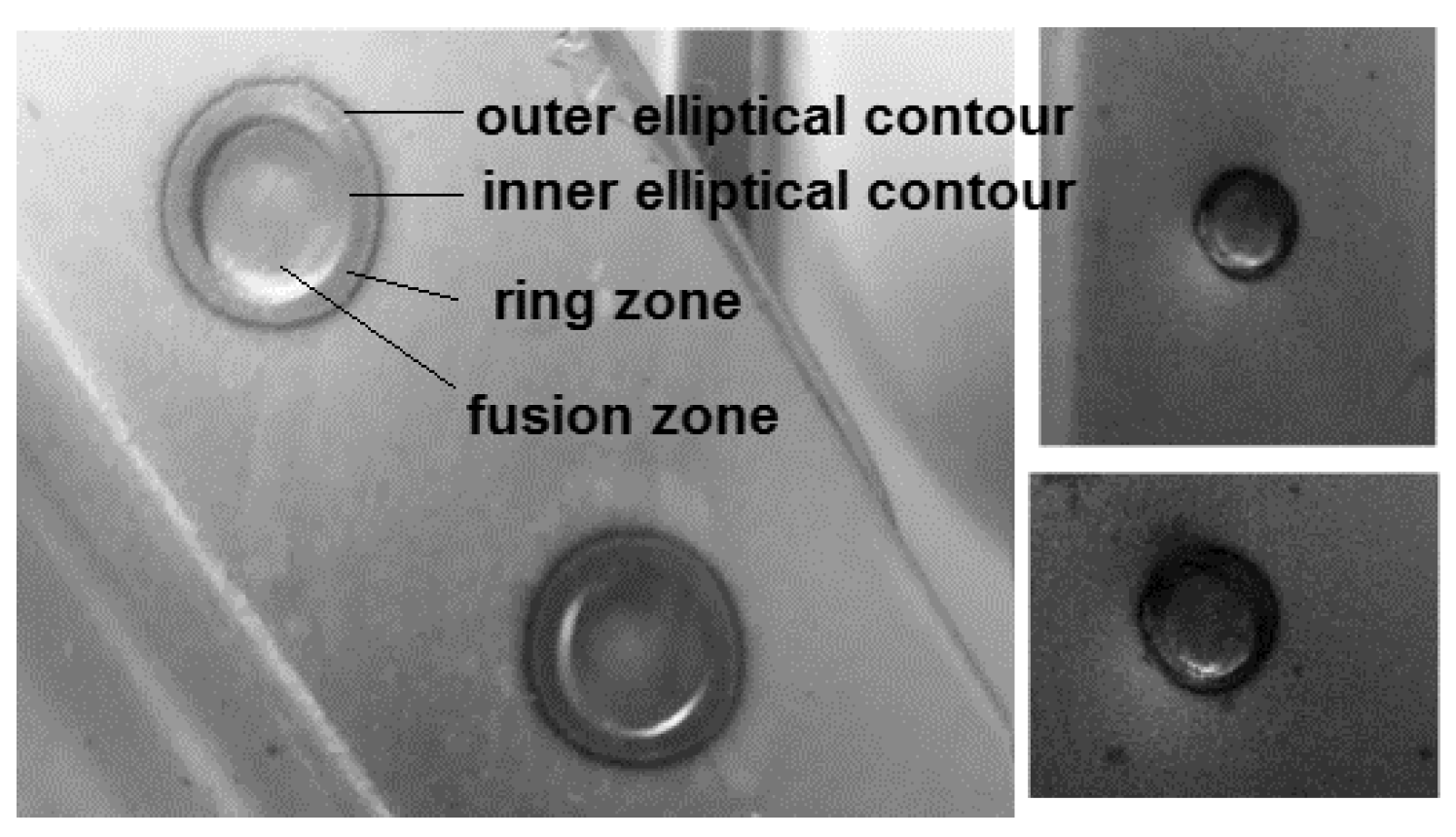

5. Data Extraction from the Weld Nugget’s Surface Using Improved Image Processing Methods

5.1. Calibration

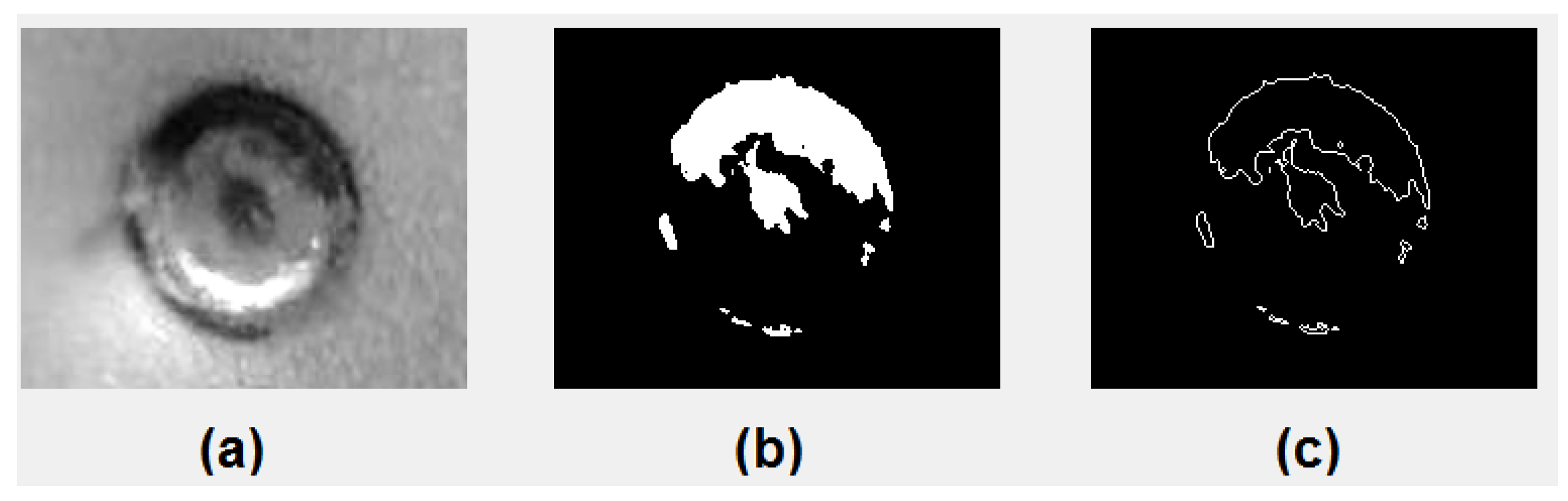

5.2. Heat-Affected Zone Contour Detection

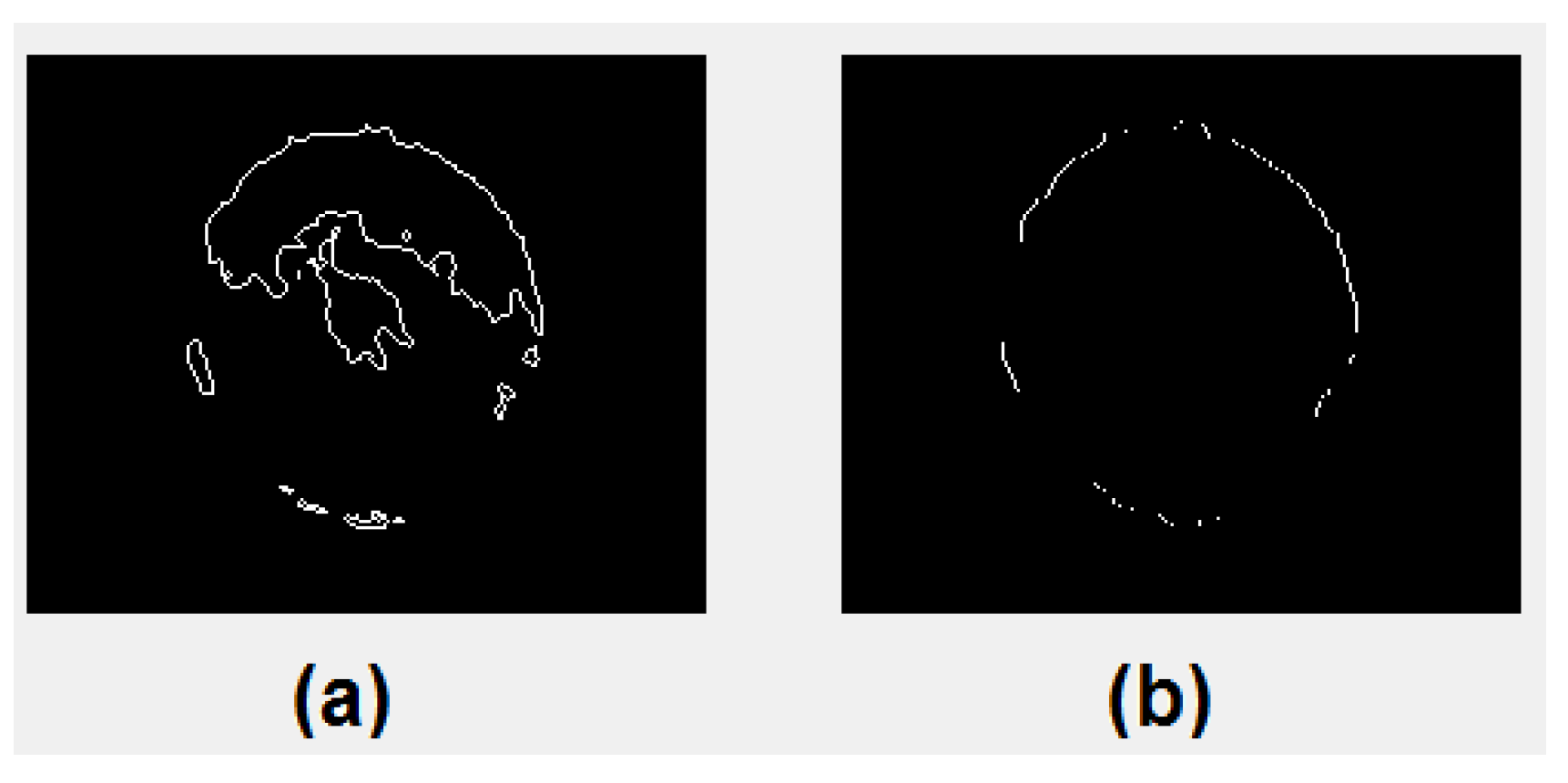

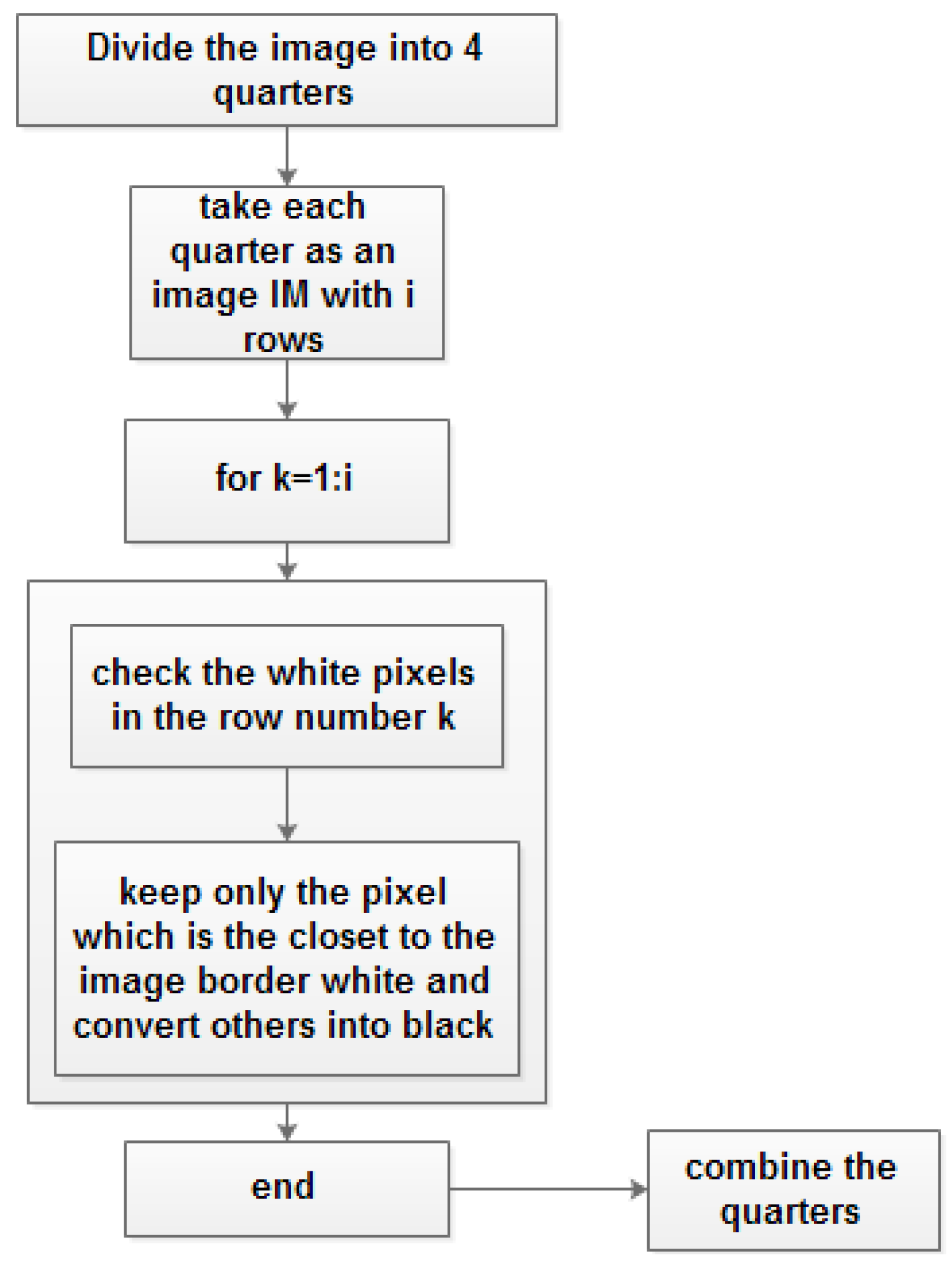

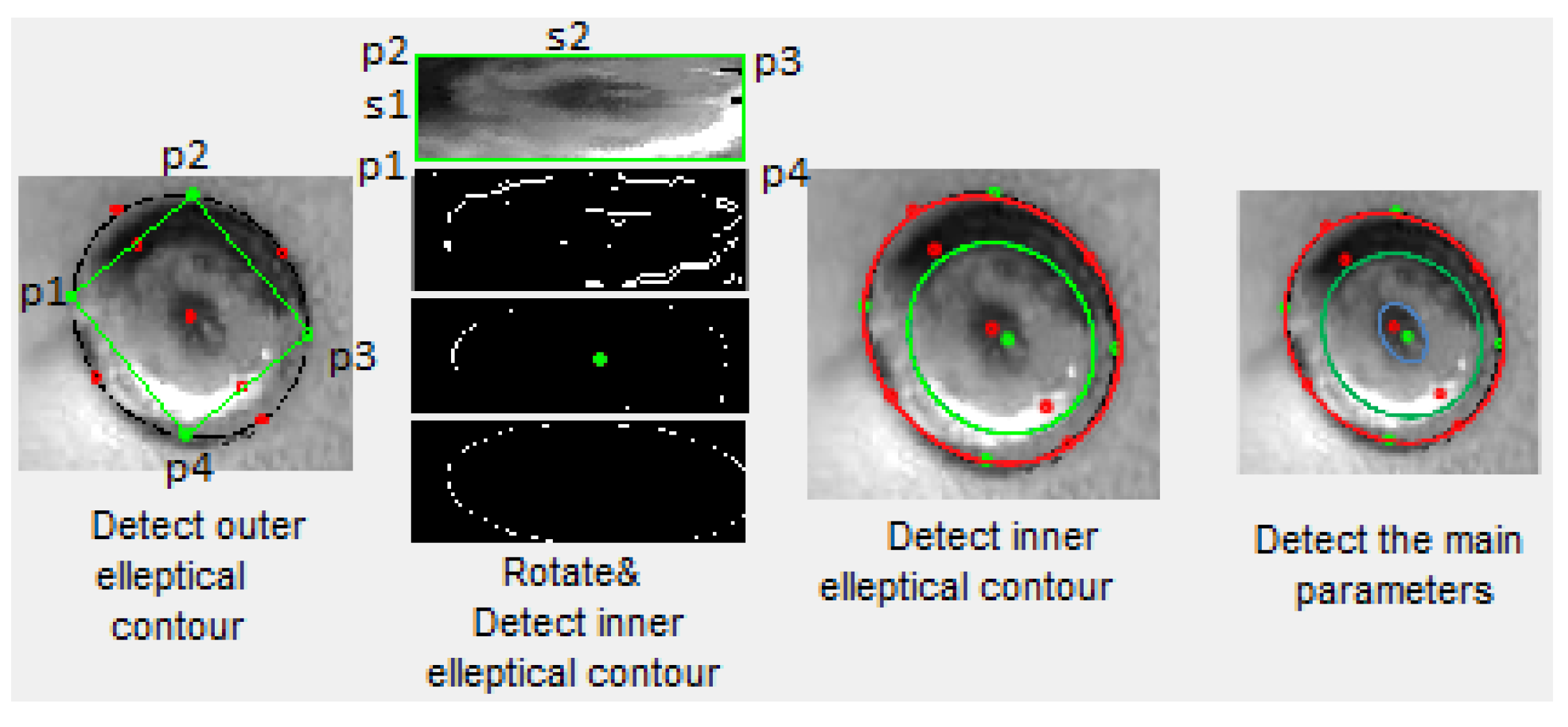

5.3. Detect the Biggest Rectangle Area Inside the Outer Contour of the Weld Nugget
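As a rough illustration of this step, the sketch below finds the largest axis-aligned rectangle that fits entirely inside a binary mask of the region enclosed by the outer contour. It uses the standard row-histogram and stack technique and is a simplification under stated assumptions: the authors' rectangle may be rotated or irregular, and the name `largest_rectangle` is hypothetical.

```python
def largest_rectangle(mask):
    """Return (area, top, left, height, width) of the largest all-ones
    axis-aligned rectangle in a binary mask given as a list of rows."""
    if not mask:
        return (0, 0, 0, 0, 0)
    n_cols = len(mask[0])
    heights = [0] * n_cols          # run length of ones ending at the current row
    best = (0, 0, 0, 0, 0)
    for r, row in enumerate(mask):
        for c in range(n_cols):
            heights[c] = heights[c] + 1 if row[c] else 0
        stack = []                  # column indices with increasing heights
        for c in range(n_cols + 1):
            h = heights[c] if c < n_cols else 0   # sentinel flushes the stack
            while stack and heights[stack[-1]] >= h:
                top_h = heights[stack.pop()]
                left = stack[-1] + 1 if stack else 0
                width = c - left
                if top_h * width > best[0]:
                    best = (top_h * width, r - top_h + 1, left, top_h, width)
            stack.append(c)
    return best

# Toy mask: 1 marks pixels inside the outer contour.
mask = [
    [0, 1, 1, 1, 0],
    [1, 1, 1, 1, 1],
    [1, 1, 1, 1, 1],
    [0, 1, 1, 1, 0],
]
rect = largest_rectangle(mask)
```

For the toy mask, the 4-row by 3-column block in the middle columns is the largest rectangle.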

5.4. Rotate the Irregular Rectangle Area and Inner Contour Detection

5.5. 3D Model

6. Estimation Methods Based on Fuzzy Support Vector Machine
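In a fuzzy support vector machine, each training sample carries a membership value in (0, 1] that scales how strongly its misclassification is penalized, so that outliers influence the decision boundary less. The sketch below shows one widely used membership function, based on the distance of a sample to its class center. It is an assumption for illustration only: the fuzzy functions applied in this work are not reproduced here, and the names `class_center` and `fuzzy_memberships` are hypothetical.

```python
import math

def class_center(samples):
    """Mean feature vector of one class."""
    dims = len(samples[0])
    return [sum(s[k] for s in samples) / len(samples) for k in range(dims)]

def fuzzy_memberships(samples, delta=1e-3):
    """Membership s_i = 1 - d_i / (r + delta), where d_i is the distance of
    sample i to the class center and r is the largest such distance.
    delta > 0 keeps every membership strictly positive."""
    center = class_center(samples)
    dists = [math.dist(s, center) for s in samples]
    radius = max(dists)
    return [1.0 - d / (radius + delta) for d in dists]

# Toy feature vectors for one class; the last sample is an outlier.
samples = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0), (5.0, 5.0)]
weights = fuzzy_memberships(samples)
```

Weights of this kind could then be passed to any SVM trainer that accepts per-sample weights, for example through the `sample_weight` argument of scikit-learn's `SVC.fit`.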

7. Experimental Work

7.1. Path Planning Simulation and Execution

7.2. Experimental Results

8. Conclusions

- (1) In this study, a vision system for defect detection and quality estimation of RSW was developed using image processing methods and FSVM to evaluate elliptical-shaped nugget surfaces on the car underbody.

- (2) Different kinds of failure modes, such as surface side expulsion, deformed metal, pitting, blowholes, cracks, and skidding, were used as the input for the system built in this research to estimate the weld quality of RSW.

- (3) The proposed system was fully developed and successfully tested on the car underbody at the Dongfeng Motor (DFM) factory in China. The experimental results show that the 3D reconstruction model of the weld’s surface and the automatic quality inspection of RSW surfaces reach higher accuracy with the proposed methods.

- (4) In our future work, the inner quality of the RSW will be considered by extending our system and installing a suitable ultrasonic measuring sensor on the end-effector of the robotic arm alongside the CCD camera. Combining visual inspection and ultrasonic inspection with a robotic arm will help to create a valuable quality inspection technology for RSW.

Author Contributions

Funding

Conflicts of Interest


| Link Length a (mm) | Link Twist α | Link Offset d (mm) | Initial Configuration Angle θ | Initial End-Effector Position/Base |
|---|---|---|---|---|
| O0O1 = a1 = 100 | −90° | 0 | 0° | X = a1 + d4 = 410 mm |
| O1O2 = a2 = 290 | 0° | 0 | 0° | Y = 0 mm |
| O2O3 = a3 = 121 | −90° | 0 | 0° | Z = a2 + a3 − d6 = 85.5 mm |
| 0 | 90° | O3O4 = d4 = 310 | 0° | φ = 0° |
| 0 | 90° | 0 | 0° | θ = 180° |
| 0 | 0° | O5O6 = d6 = 325.5 | 0° | ψ = 180° |
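The closed-form position entries in the table above can be checked with a few lines of arithmetic, and the link-by-link composition behind a forward kinematics model can be sketched with a generic Denavit-Hartenberg transform. The sketch assumes the standard DH convention, Rz(θ)·Tz(d)·Tx(a)·Rx(α). The convention and any joint-angle offsets used for this arm are not stated here, so the generic function is demonstrated on a two-link planar arm rather than on the table rows. The function names are hypothetical.

```python
import math

def dh_transform(a, alpha_deg, d, theta_deg):
    """4x4 transform of one link, standard DH convention (an assumption):
    Rz(theta) * Tz(d) * Tx(a) * Rx(alpha)."""
    alpha, theta = math.radians(alpha_deg), math.radians(theta_deg)
    ca, sa = math.cos(alpha), math.sin(alpha)
    ct, st = math.cos(theta), math.sin(theta)
    return [
        [ct, -st * ca, st * sa, a * ct],
        [st, ct * ca, -ct * sa, a * st],
        [0.0, sa, ca, d],
        [0.0, 0.0, 0.0, 1.0],
    ]

def matmul(A, B):
    """Product of two 4x4 matrices stored as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def forward_kinematics(rows):
    """Chain the link transforms; each row is (a, alpha_deg, d, theta_deg)."""
    T = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    for a, alpha_deg, d, theta_deg in rows:
        T = matmul(T, dh_transform(a, alpha_deg, d, theta_deg))
    return T

# Closed-form initial end-effector position from the table (mm).
a1, a2, a3, d4, d6 = 100.0, 290.0, 121.0, 310.0, 325.5
x0 = a1 + d4          # X = a1 + d4
z0 = a2 + a3 - d6     # Z = a2 + a3 - d6

# Generic check on a two-link planar arm with unit links:
# joint 1 at 90 degrees, joint 2 at -90 degrees.
planar = forward_kinematics([(1.0, 0.0, 0.0, 90.0), (1.0, 0.0, 0.0, -90.0)])
```

The arithmetic reproduces X = 410 mm and Z = 85.5 mm, and the planar arm ends at (1, 1), as expected for the first link pointing along y and the second along x.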

| Sheet Metal Thickness t (mm) | Electrode Force F (kN) | Weld Current I (A) | Weld Time (Cycles) | Hold Time (Cycles) | Electrode Diameter d (mm) | |
|---|---|---|---|---|---|---|
| 1.00 + 1.00 | 2.50 | 9500 | 10 | 2 | 6 | |
| 1.12 + 1.12 | 2.80 | 9750 | 11 | 2 | 6 | |
| 1.25 + 1.25 | 3.15 | 10,000 | 13 | 3 | 6 | 7 |
| 1.40 + 1.40 | 3.55 | 10,300 | 14 | 3 | 6 | 7 |
| 1.50 + 1.50 | 3.65 | 10,450 | 15 | 3 | 6 | 7 |
| 1.60 + 1.60 | 4.00 | 10,600 | 16 | 3 | 6 | 7 |
| 1.80 + 1.80 | 4.50 | 10,900 | 18 | 3 | 6 | 7 |
| 2.00 + 2.00 | 5.00 | 11,200 | 23 | 4 | 6 | 8 |
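Weld and hold times in the table above are given in mains cycles. The short sketch below converts them to milliseconds. The 50 Hz mains frequency is an assumption (it is not stated in the table), and `cycles_to_ms`, `SCHEDULE`, and `weld_and_hold_ms` are hypothetical names.

```python
MAINS_HZ = 50.0  # assumed mains frequency; not stated in the table

def cycles_to_ms(cycles, mains_hz=MAINS_HZ):
    """Duration of a number of mains cycles in milliseconds."""
    return cycles * 1000.0 / mains_hz

# (weld time, hold time) in cycles, keyed by single-sheet thickness in mm,
# copied from the table.
SCHEDULE = {
    1.00: (10, 2), 1.12: (11, 2), 1.25: (13, 3), 1.40: (14, 3),
    1.50: (15, 3), 1.60: (16, 3), 1.80: (18, 3), 2.00: (23, 4),
}

def weld_and_hold_ms(thickness_mm, mains_hz=MAINS_HZ):
    """Weld and hold durations in milliseconds for one table row."""
    weld_cycles, hold_cycles = SCHEDULE[thickness_mm]
    return cycles_to_ms(weld_cycles, mains_hz), cycles_to_ms(hold_cycles, mains_hz)

thin_sheet = weld_and_hold_ms(1.00)
thick_sheet = weld_and_hold_ms(2.00)
```

Under the 50 Hz assumption one cycle lasts 20 ms; with a 60 Hz supply the same cycle counts would correspond to shorter durations.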

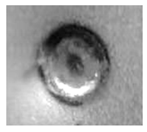

| Weld No. | Input Gray Image | Nugget Feature Extraction and Contour Detection | 3D Model | Failure Mode |
|---|---|---|---|---|
| 1 |  |  |  | Normal weld |
| 2 |  |  |  | Expulsion |
| 3 |  |  |  | Blowholes |
| 4 |  |  |  | Pitting |
| 5 |  |  |  | Deformed metal |
| 6 |  |  |  | Cracks |
| 7 |  |  |  | Skidding |

| Weld No. | Ultrasonic Diameter (mm) | Ultrasonic Indentation Depth (mm) | Ultrasonic Detection of the Plate Thickness (mm) | Ultrasonic Inspection |
|---|---|---|---|---|
| 1 | 5.51 | 0.16 | 2.11 | no defects |
| 2 | 5.42 | 0.08 | 2.11 | no defects |
| 3 | 5.51 | 0.13 | 2.12 | no defects |
| 4 | 5.42 | 0.06 | 2.12 | no defects |
| 5 | 5.51 | 0.11 | 2.30 | no defects |
| 6 | 5.11 | 0.18 | 2.31 | no defects |
| 7 | 5.13 | 0.12 | 2.30 | defective |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Younes, D.; Alghannam, E.; Tan, Y.; Lu, H. Enhancement in Quality Estimation of Resistance Spot Welding Using Vision System and Fuzzy Support Vector Machine. Symmetry 2020, 12, 1380. https://doi.org/10.3390/sym12081380
