Animated Character Style Investigation with Decision Tree Classification

Abstract

1. Introduction

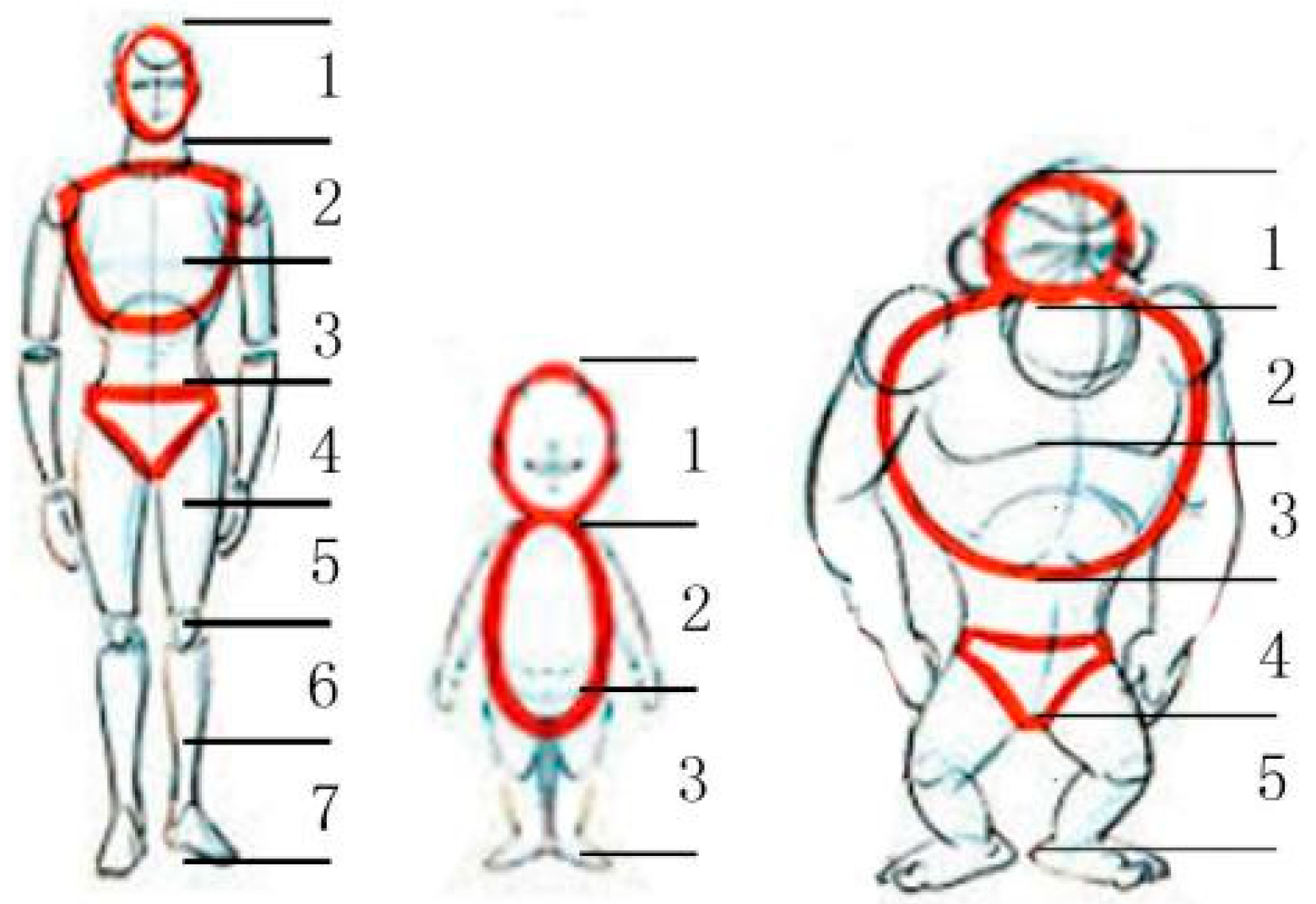

1.1. Exaggerated Body Proportions in Animated Characters

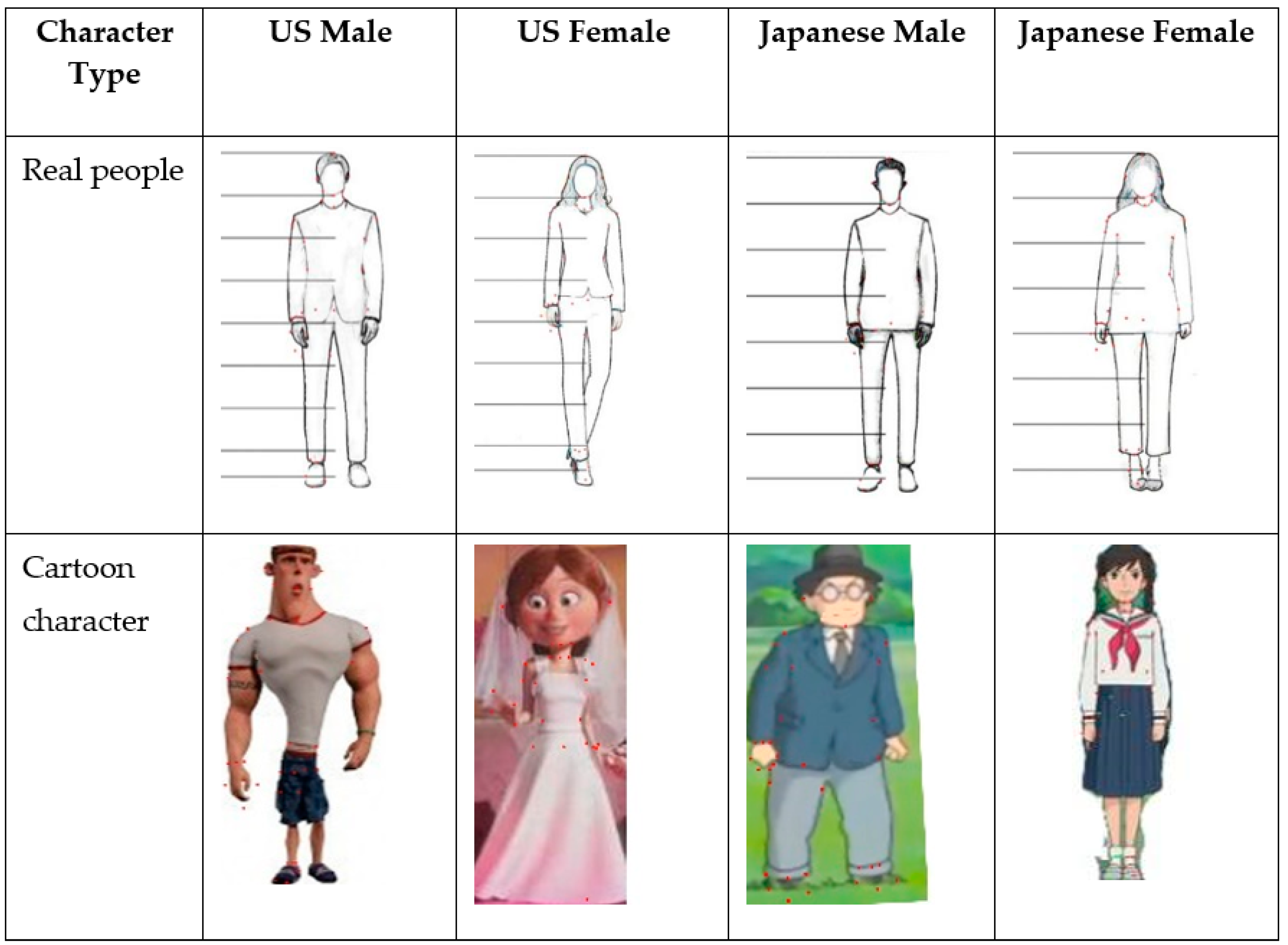

1.2. US and Japanese Animated Character Differences

1.3. AI Identification

- We demonstrate that AI can automatically recognize the categories of animated characters. These recognition results may also serve as an algorithmic reference for automatically generating animated character images.

- We identify rules in the shape and proportion design of animated characters. Beginners can follow these rules to learn to design particular kinds of animated characters quickly, while experienced designers can deliberately depart from them to create more innovative characters.

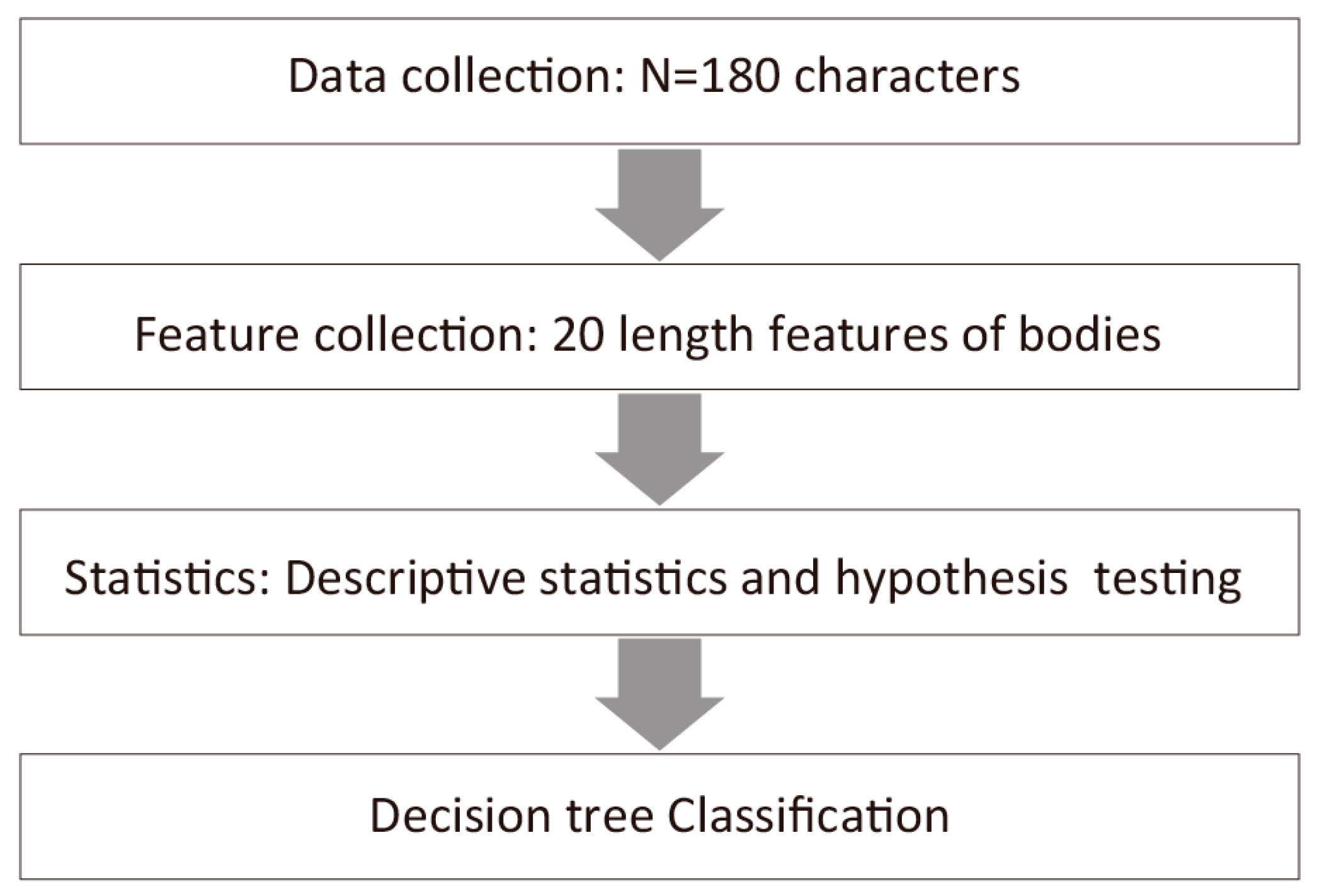

2. Methods

2.1. Data Collection of US, Japanese, and Regular Models

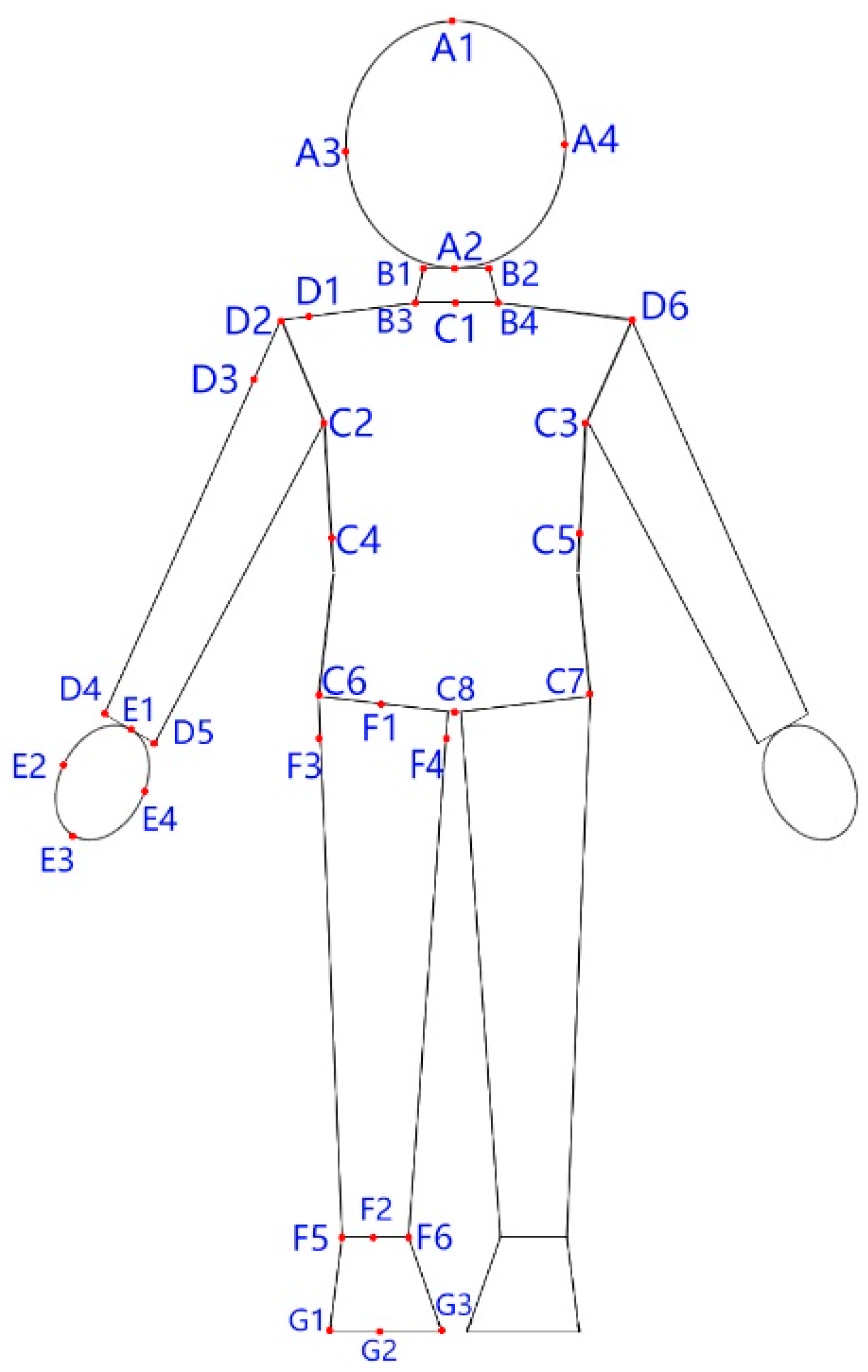

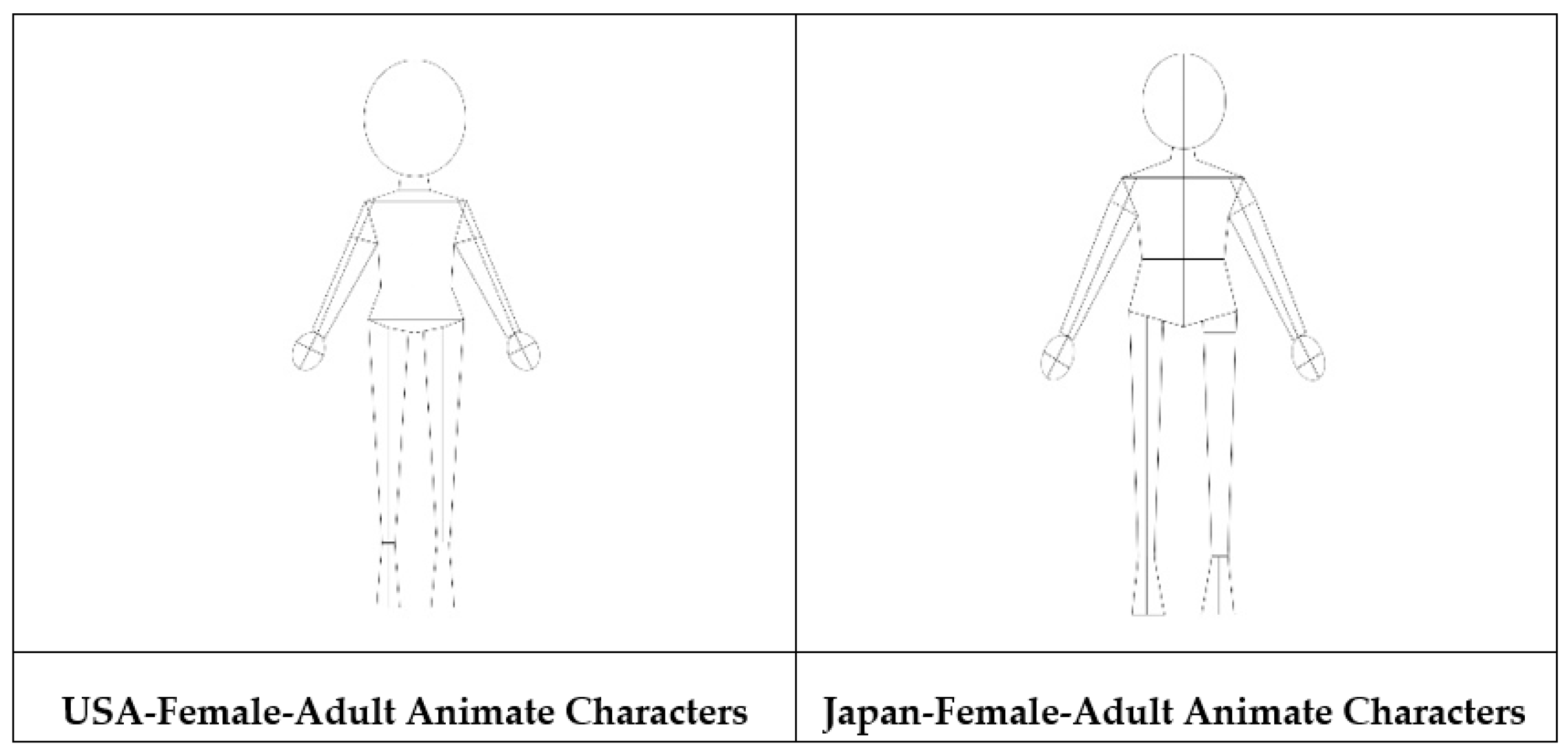

2.2. Physique Parameter Definitions and Regular Models

2.3. Decision Tree Implementation

2.4. Statistics

- Descriptive statistics: The means and standard deviations of the 20 body lengths were calculated by country, sex, and leading or supporting role. In addition, the means and standard deviations of the decision tree results over 1000 iterations were calculated by country, sex, and leading or supporting role.

- Difference tests: One-sample t-tests and two-sample t-tests were used to compare animated characters with the real-person reference pictures across the classification categories in Table 2. The significance level for the t-tests was α = 0.05.
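Both difference tests reduce to a t statistic compared against Student's t distribution. The following is a minimal, dependency-free sketch, not the authors' analysis code. Assumptions: the two-sample test is taken to be the pooled-variance Student test (the section does not say whether a pooled or Welch test was used), the p-value is obtained by integrating the t density numerically with Simpson's rule instead of calling a statistics library, and the head-length values are invented for illustration.

```python
import math
import statistics

ALPHA = 0.05  # significance level stated in the Statistics section


def describe(values):
    """Mean and sample standard deviation of one group of body lengths."""
    return statistics.mean(values), statistics.stdev(values)


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_norm = math.lgamma((df + 1) / 2) - math.lgamma(df / 2) - 0.5 * math.log(df * math.pi)
    return math.exp(log_norm) * (1 + x * x / df) ** (-(df + 1) / 2)


def two_sided_p(t, df, steps=2000):
    """Two-sided p-value: 1 - 2 * (integral of the t density over [0, |t|]), via Simpson's rule."""
    upper = abs(t)
    if upper == 0:
        return 1.0
    h = upper / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return max(0.0, 1.0 - 2.0 * total * h / 3)


def one_sample_t(values, reference):
    """Compare one group of animated characters with a single real-person reference value."""
    n = len(values)
    mean, sd = describe(values)
    t = (mean - reference) / (sd / math.sqrt(n))
    return t, n - 1, two_sided_p(t, n - 1)


def two_sample_t(a, b):
    """Pooled-variance (Student) t-test between two groups, e.g. USA vs. Japan."""
    n1, n2 = len(a), len(b)
    (m1, s1), (m2, s2) = describe(a), describe(b)
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / df
    t = (m1 - m2) / math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return t, df, two_sided_p(t, df)


# Hypothetical head lengths (L1) in pixels; not the study's data.
usa = [310.0, 295.5, 330.2, 301.8, 322.4]
japan = [230.1, 221.7, 240.3, 215.9, 228.0]
print("USA mean and SD:", describe(usa))
t, df, p = two_sample_t(usa, japan)
print(f"USA vs. Japan: t = {t:.2f}, df = {df}, p = {p:.4f}, significant = {p < ALPHA}")
t, df, p = one_sample_t(japan, reference=250.0)  # hypothetical reference value
print(f"Japan vs. reference: t = {t:.2f}, df = {df}, p = {p:.4f}")
```

With SciPy available, `scipy.stats.ttest_1samp` and `scipy.stats.ttest_ind` compute the same statistics; passing `equal_var=False` to the latter switches it to Welch's test.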

3. Results

3.1. Parameter Statistics and Tests

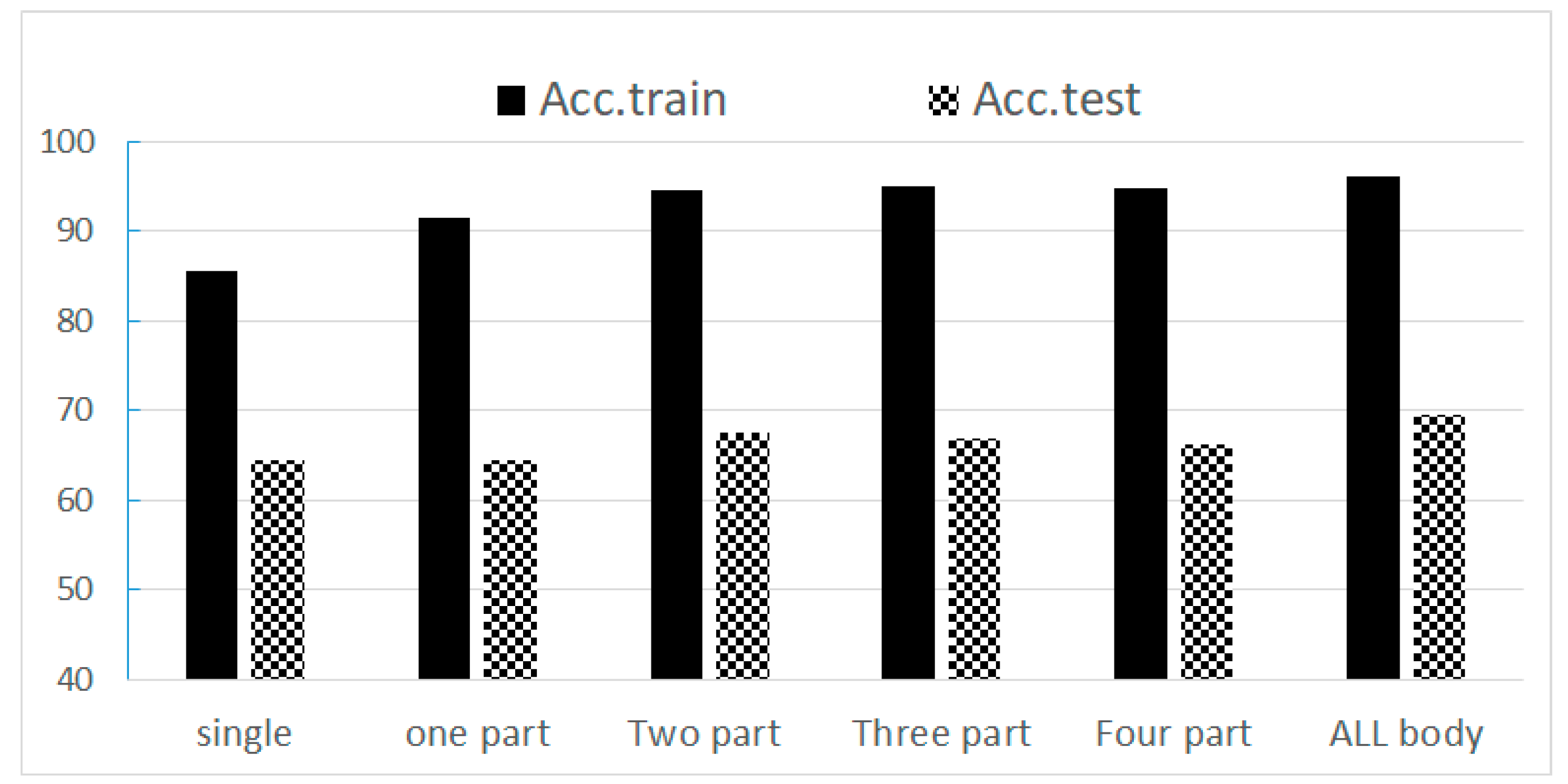

3.2. Decision Tree Classification Results

4. Discussion

- We showed that, beyond recognizing human facial features, posture, gender, and age, AI can also identify from basic body proportions which country or region an animated character comes from, as well as its gender and age.

- We found that different types of animated characters follow different design rules. Knowing these rules can help designers break them deliberately to create more innovative characters, or help novices follow an established pattern to design characters that appeal to particular audiences.

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Appendix A

Appendix B


| Length Code | Tracking Point Code | Length Definition |
|---|---|---|
| L1 | A1-A2 | Head length |
| L2 | A3-A4 | Head width |
| L3 | B1-B2 | Neck upper width |
| L4 | B3-B4 | Neck bottom width |
| L5 | A2-C1 | Neck length |
| L6 | C1-C8 | Body length |
| L7 | C2-C3 | Chest width |
| L8 | C4-C5 | Waist width |
| L9 | C6-C7 | Buttock width |
| L10 | D1-E1 | Arm length |
| L11 | D3-C2 | Upper arm width |
| L12 | D4-D5 | Forearm width |
| L13 | D2-D6 | Shoulder width |
| L14 | E1-E3 | Hand length |
| L15 | E2-E4 | Hand width |
| L16 | F1-F2 | Leg length |
| L17 | F3-F4 | Thigh width |
| L18 | F5-F6 | Calf width |
| L19 | F2-G2 | Feet length |
| L20 | G1-G3 | Feet width |
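Each length in the table is defined by a pair of tracking points marked on a character image. A minimal sketch of that measurement follows, assuming the tracking points are stored as 2-D image coordinates in pixels and that each length is the straight-line (Euclidean) distance between its two points; the section does not state how the points were digitized, and the coordinates below are hypothetical. `math.dist` requires Python 3.8 or later.

```python
import math

# Length code -> (first tracking point, second tracking point), copied from the table above.
LENGTH_DEFINITIONS = {
    "L1": ("A1", "A2"),   # head length
    "L2": ("A3", "A4"),   # head width
    "L3": ("B1", "B2"),   # neck upper width
    "L4": ("B3", "B4"),   # neck bottom width
    "L5": ("A2", "C1"),   # neck length
    "L6": ("C1", "C8"),   # body length
    "L7": ("C2", "C3"),   # chest width
    "L8": ("C4", "C5"),   # waist width
    "L9": ("C6", "C7"),   # buttock width
    "L10": ("D1", "E1"),  # arm length
    "L11": ("D3", "C2"),  # upper arm width
    "L12": ("D4", "D5"),  # forearm width
    "L13": ("D2", "D6"),  # shoulder width
    "L14": ("E1", "E3"),  # hand length
    "L15": ("E2", "E4"),  # hand width
    "L16": ("F1", "F2"),  # leg length
    "L17": ("F3", "F4"),  # thigh width
    "L18": ("F5", "F6"),  # calf width
    "L19": ("F2", "G2"),  # feet length
    "L20": ("G1", "G3"),  # feet width
}


def body_lengths(points):
    """Euclidean distance for every length code whose two tracking points are present.

    `points` maps a tracking-point code (e.g. "A1") to hypothetical (x, y) pixel coordinates.
    """
    lengths = {}
    for code, (start, end) in LENGTH_DEFINITIONS.items():
        if start in points and end in points:
            lengths[code] = math.dist(points[start], points[end])
    return lengths


# Made-up head landmarks only: L1 and L2 are computed, every other length is skipped.
head = {"A1": (200.0, 40.0), "A2": (200.0, 340.0), "A3": (60.0, 190.0), "A4": (340.0, 190.0)}
print(body_lengths(head))
```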

| Class Number | Class Features |
|---|---|
| 2 | USA (N = 90) vs. Japan (N = 90) |
| 2 | Male (N = 105) vs. Female (N = 75) |
| 2 | USA adult animated characters (N = 71) vs. Japan adult animated characters (N = 77) |
| 2 | USA male adult animated characters (N = 42) vs. Japan male adult animated characters (N = 41) |
| 2 | USA female adult animated characters (N = 29) vs. Japan female adult animated characters (N = 36) |
| 4 | USA male adult animated characters; Japan male adult animated characters; USA female adult animated characters; Japan female adult animated characters |
| 5 | The four adult classes of the 4-class scheme; all children animated characters (N = 32) |
| 6 | The four adult classes of the 4-class scheme; USA children animated characters (N = 19); Japan children animated characters (N = 13) |
| 8 | The four adult classes of the 4-class scheme; USA boy animated characters (N = 15); USA girl animated characters (N = 4); Japan boy animated characters (N = 7); Japan girl animated characters (N = 6) |
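The multi-class schemes in the table differ only in how child characters are grouped. As a hedged illustration, the mapping from a character's country, sex, and age group to its class label can be written as below; the function names and label strings are hypothetical (chosen to echo abbreviations such as USA_M in the result tables), while the group sizes are taken from the table above.

```python
from collections import Counter


def class_label(country, sex, is_child, n_classes):
    """Label a character under the 4-, 5-, 6- or 8-class schemes in the table above.

    country is "USA" or "Japan"; sex is "male" or "female". Returns None when the
    character does not take part in that classification problem.
    """
    if not is_child:
        # The four adult classes are shared by every multi-class scheme.
        return f"{country}_{'M' if sex == 'male' else 'F'}"
    if n_classes == 4:
        return None  # the four-class problem uses adult characters only
    if n_classes == 5:
        return "Child"  # all children pooled into one class
    if n_classes == 6:
        return f"{country}_Child"
    if n_classes == 8:
        return f"{country}_{'Boy' if sex == 'male' else 'Girl'}"
    raise ValueError(f"unsupported number of classes: {n_classes}")


# Group sizes from the table: (country, sex, is_child) -> N.
GROUP_SIZES = {
    ("USA", "male", False): 42, ("USA", "female", False): 29,
    ("Japan", "male", False): 41, ("Japan", "female", False): 36,
    ("USA", "male", True): 15, ("USA", "female", True): 4,
    ("Japan", "male", True): 7, ("Japan", "female", True): 6,
}


def label_counts(n_classes):
    """Number of characters per class label under one scheme."""
    counts = Counter()
    for (country, sex, is_child), size in GROUP_SIZES.items():
        label = class_label(country, sex, is_child, n_classes)
        if label is not None:
            counts[label] += size
    return counts


for k in (4, 5, 6, 8):
    print(k, dict(label_counts(k)))
```

Summing the counts reproduces the totals in the 2-class rows (for example, 105 male and 75 female characters), which is a quick consistency check on the group sizes.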

| Body Part |
|---|
| All body features |
| All head features |
| All chest features |
| All feet features |
| All hand features |

| Code Number | Length Definitions | USA | JAPAN | USA_M | USA_MN | JAPAN_M | JAPAN_MN | USA_F | USA_FN | JAPAN_F | JAPAN_FN | USA-Child | JAPAN-Child |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| L1 | Head length | 314.52 (135.22) | 226.77 *** (95.28) | 259.39 (95.13) | 151 C | 214.20 * (96.24) | 169 β | 277.73 (108.72) | 152 C | 201.03 ** (93.55) | 149 β | 458.63 (131.87) | 293.85 *** (54.29) |
| L2 | Head width | 282.75 (137.87) | 193.13 *** (133.78) | 222.98 (95.98) | 114.35 C | 192.52 (161.21) | 119 β | 242.78 (90.00) | 118 C | 172.76 * (121.15) | 115 β | 446.51 (135.01) | 241.72 *** (60.95) |
| L3 | Neck upper width | 61.02 (65.26) | 52.07 (28.77) | 66.43 (76.39) | 62.00 | 58.91 (31.79) | 67.03 | 63.35 (49.15) | 40 A | 44.57 (24.02) | 46.27 | 46.33 (53.98) | 58.95 (11.23) |
| L4 | Neck bottom width | 71.86 (80.78) | 63.72 (39.66) | 79.18 (92.68) | 73.03 | 73.47 (47.23) | 67.00 | 75.64 (68.01) | 50.00 A | 53.64 (29.56) | 52.00 | 50.14 (55.62) | 72.35 (16.01) |
| L5 | Neck length | 38.58 (59.06) | 27.46 (16.10) | 36.98 (43.01) | 28.28 | 29.4 (20.11) | 28.02 | 39.85 (21.15) | 30.36 A | 26.82 ** (10.75) | 23.09 | 48.29 (111.99) | 27.96 (7.05) |
| L6 | Body length | 413.27 (142.32) | 359.50 ** (76.07) | 473.07 (143.03) | 366 C | 357.49 *** (68.99) | 373.00 | 338.10 (63.00) | 328.00 | 350.49 (65.74) | 398 γ | 399.32 (140.78) | 354.38 (37.33) |
| L7 | Chest width | 260.72 (154.51) | 215.60 * (84.17) | 294.90 (163.23) | 210 B | 229.48 * (72.28) | 206 α | 181.43 (103.86) | 176.05 | 191.59 (90.53) | 196.43 | 277.34 (143.62) | 193.56 * (35.45) |
| L8 | Waist width | 267.29 (180.42) | 204.69 ** (88.59) | 306.60 (204.66) | 197.04 B | 214.72 * (76.4) | 188 α | 168.03 (121.45) | 141.00 | 170.71 (95.81) | 167.00 | 301.23 (154.24) | 214.00 (39.29) |
| L9 | Buttock width | 282.36 (159.73) | 243.73 * (99.78) | 302.81 (187.64) | 234.07 A | 238.99 * (66.55) | 239.00 | 230.46 (111.57) | 200.00 | 226.13 (124.81) | 242.10 | 297.38 (156.32) | 254.36 (61.51) |
| L10 | Arm length | 386.24 (106.39) | 368.18 (79.62) | 433.07 (102.92) | 356.05 C | 384.55 * (77.93) | 360.14 | 349.32 (44.80) | 350.07 | 363.32 (64.28) | 371.56 | 336.50 (100.62) | 319.28 (41.99) |
| L11 | Upper arm width | 86.11 (66.17) | 66.97 (73.98) | 91.43 (56.75) | 50.21 C | 72.69 (67.96) | 53.71 | 67.62 (41.99) | 44.40 B | 68.86 (97.66) | 40.02 | 90.59 (70.82) | 46.35 * (12.82) |
| L12 | Forearm width | 52.80 (30.85) | 45.78 (32.41) | 60.44 (34.15) | 45.89 A | 52.02 (44.65) | 39.81 | 35.22 (11.91) | 35.13 | 39.27 (16.03) | 36.67 | 57.59 (25.04) | 38.96 (10.82) |
| L13 | Shoulder width | 332.64 (178.71) | 285.88 * (122.55) | 384.49 (199.07) | 295.81 B | 307.13 (142.69) | 271.64 | 239.43 (113.02) | 239.01 | 256.81 (99.23) | 250.24 | 337.92 (155.60) | 250.76 (44.35) |
| L14 | Hand length | 115.91 (41.14) | 97.42 *** (29.83) | 123.85 (35.76) | 120.00 | 105.19 * (27.14) | 107.02 | 92.59 (23.36) | 100.18 | 94.66 (31.76) | 93.94 | 118.14 (48.10) | 79.45 ** (12.80) |
| L15 | Hand width | 91.90 (38.73) | 68.33 *** (32.55) | 91.25 (36.29) | 65.62 C | 75.84 * (39.77) | 61.98 α | 71.98 (18.09) | 60 C | 61.09 (24.74) | 58.05 | 110.19 (45.34) | 59.27 *** (12.84) |
| L16 | Leg length | 413.44 (151.32) | 491.92 *** (146.41) | 413.49 (124.90) | 538.00 C | 492.71 ** (88.51) | 478.00 | 510.13 (120.07) | 542.53 | 505.04 (155.26) | 478.65 | 267.44 (104.75) | 478.21 ** (221.70) |
| L17 | Thigh width | 110.13 (62.03) | 90.06 * (40.45) | 115.49 (70.81) | 101.02 C | 110.43 (30.16) | 86 | 89.23 (45.64) | 90.14 | 65.46 * (35.34) | 102.02 γ | 120.42 (61.37) | 83.96 (30.37) |
| L18 | Calf width | 54.04 (40.20) | 48.78 (24.69) | 59.51 (48.06) | 48.04 | 59.66 (26.09) | 42.10 | 31.93 (16.71) | 44.18 C | 36.91 (18.39) | 46.04 β | 67.91 (33.56) | 47.52 (17.33) |
| L19 | Feet length | 109.19 (158.63) | 98.99 (125.31) | 85.15 (31.43) | 83.02 | 86.24 (22.86) | 98.23 | 153.61 (279.30) | 104.92 | 122.17 (208.88) | 104.24 | 99.53 (39.10) | 86.94 (15.89) |
| L20 | Feet width | 89.01 (49.12) | 86.48 (32.92) | 96.48 (48.94) | 71.56 B | 99.49 (31.74) | 71.17 γ | 56.91 (36.15) | 59.23 | 70.35 (31.68) | 48.04 γ | 120.28 (45.80) | 84.35 * (18.70) |

| Code Number | Length of Body Parts | Male | Female | M_A | F_A | USA_M | USA_F | JAPAN_M | JAPAN_F |
|---|---|---|---|---|---|---|---|---|---|
| L1 | Head length | 275.62 (127.85) | 263.83 (113.78) | 235.60 (97.75) | 241.89 (106.25) | 259.39 (95.13) | 277.73 (108.72) | 214.20 (96.24) | 201.03 (93.55) |
| L2 | Head width | 247.14 (154.76) | 225.34 (119.96) | 206.95 (134.34) | 205.44 (112.52) | 222.98 (95.98) | 242.78 (90.00) | 192.52 (161.21) | 172.76 (121.15) |
| L3 | Neck upper width | 61.37 (57.40) | 49.94 (38.27) | 62.47 (57.12) | 53.33 (38.70) | 66.43 (76.39) | 63.35 (49.15) | 58.91 (31.79) | 44.57 * (24.02) |
| L4 | Neck bottom width | 73.48 (71.36) | 60.01 (50.35) | 76.17 (71.95) | 63.90 (51.95) | 79.18 (92.68) | 75.64 (68.01) | 73.47 (47.23) | 53.64 * (29.56) |
| L5 | Neck length | 35.41 (55.21) | 29.74 (18.34) | 32.99 (32.98) | 32.90 (17.57) | 36.98 (43.01) | 39.85 (21.15) | 29.4 (20.11) | 26.82 (10.75) |
| L6 | Body length | 409.99 (127.63) | 354.09 *** (69.49) | 412.24 (124.08) | 344.71 *** (64.23) | 473.07 (143.03) | 338.10 *** (63.00) | 357.49 (68.99) | 350.49 (65.74) |
| L7 | Chest width | 265.11 (132.72) | 201.28 *** (101.41) | 260.47 (127.41) | 186.85 *** (96.27) | 294.90 (163.23) | 181.43 ** (103.86) | 229.48 (72.28) | 191.59 (90.53) |
| L8 | Waist width | 266.59 (154.08) | 194.12 *** (116.85) | 258.24 (157.21) | 169.46 *** (107.59) | 306.60 (204.66) | 168.03 ** (121.45) | 214.72 (76.4) | 170.71 * (95.81) |
| L9 | Buttock width | 273.06 (138.15) | 249.34 (122.82) | 269.22 (140.58) | 228.15 (117.84) | 302.81 (187.64) | 230.46 (111.57) | 238.99 (66.55) | 226.13 (124.81) |
| L10 | Arm length | 391.66 (96.62) | 357.44 ** (64.21) | 407.54 (93.25) | 356.79 *** (56.03) | 433.07 (102.92) | 349.32 *** (44.80) | 384.55 (77.93) | 363.32 (64.28) |
| L11 | Upper arm width | 83.85 (71.45) | 66.54 (68.55) | 81.57 (63.19) | 68.28 (76.28) | 91.43 (56.75) | 67.62 (41.99) | 72.69 (67.96) | 68.86 (97.66) |
| L12 | Forearm width | 56.15 (37.81) | 39.91 *** (15.85) | 56.01 (39.98) | 37.38 *** (14.28) | 60.44 (34.15) | 35.22 *** (11.91) | 52.02 (44.65) | 39.27 (16.03) |
| L13 | Shoulder width | 341.17 (168.96) | 265.60 *** (112.13) | 343.77 (174.91) | 248.70 *** (105.34) | 384.49 (199.07) | 239.43 ** (113.02) | 307.13 (142.69) | 256.81 (99.23) |
| L14 | Hand length | 115.78 (37.08) | 94.19 *** (28.98) | 114.03 (32.68) | 93.70 *** (27.94) | 123.85 (35.76) | 92.59 *** (23.36) | 105.19 (27.14) | 94.66 (31.76) |
| L15 | Hand width | 87.94 (42.01) | 69.41 *** (24.62) | 83.14 (38.69) | 66.17 ** (22.39) | 91.25 (36.29) | 71.98 * (18.09) | 75.84 (39.77) | 61.09 (24.74) |
| L16 | Leg length | 424.78 (127.35) | 490.86 ** (163.16) | 455.19 (113.75) | 507.41 * (138.82) | 413.49 (124.90) | 510.13 ** (120.07) | 492.71 (88.51) | 505.04 (155.26) |
| L17 | Thigh width | 113.51 (52.99) | 81.73 *** (45.92) | 112.83 (53.10) | 76.55 *** (41.86) | 115.49 (70.81) | 89.23 (45.64) | 110.43 (30.16) | 65.46 *** (35.34) |
| L18 | Calf width | 61.37 (37.31) | 37.78 *** (19.51) | 59.59 (37.84) | 34.59 *** (17.66) | 59.51 (48.06) | 31.93 ** (16.71) | 59.66 (26.09) | 36.91 *** (18.39) |
| L19 | Feet length | 88.73 (28.67) | 125.11 (216.59) | 85.73 (27.08) | 136.84 (242.64) | 85.15 (31.43) | 153.61 (279.30) | 86.24 (22.86) | 122.17 (208.88) |
| L20 | Feet width | 100.48 (40.21) | 70.32 *** (35.27) | 98.07 (40.54) | 64.08 *** (34.22) | 96.48 (48.94) | 56.91 ** (36.15) | 99.49 (31.74) | 70.35 *** (31.68) |

| Code Number | Length of Body Parts | Leading Role | Supporting Role | AL_r | AS_r | USA_AL_r | USA_AS_r | JAPAN_AL_r | JAPAN_AS_r |
|---|---|---|---|---|---|---|---|---|---|
| L1 | Head length | 260.31 (115.85) | 276.07 (125.12) | 216.32 (60.22) | 245.94 (115.90) | 251.37 (68.63) | 273.21 (110.32) | 195.04 (43.31) | 216.82 (115.83) |
| L2 | Head width | 226.14 (113.12) | 244.14 (153.96) | 184.85 (67.97) | 216.88 (144.01) | 234.42 (68.58) | 230.64 (101.28) | 154.75 (47.49) | 202.19 (178.90) |
| L3 | Neck upper width | 54.45 (43.71) | 57.65 (53.75) | 61.50 (46.46) | 56.93 (51.67) | 76.86 (67.86) | 60.82 (64.72) | 52.17 (23.47) | 52.77 (32.73) |
| L4 | Neck bottom width | 68.61 (58.33) | 67.36 (66.35) | 77.56 (63.03) | 67.40 (64.52) | 97.85 (93.97) | 70.31 (77.32) | 65.24 (28.71) | 64.28 (47.94) |
| L5 | Neck length | 30.56 (17.11) | 34.31 (52.48) | 34.06 (15.70) | 32.41 (31.42) | 39.52 (21.60) | 37.77 (38.85) | 30.74 (9.73) | 26.67 (19.67) |
| L6 | Body length | 369.94 (84.69) | 395.03 (121.04) | 369.50 (85.42) | 388.85 (116.37) | 416.87 (112.94) | 412.99 (140.33) | 340.73 (45.40) | 363.06 (77.20) |
| L7 | Chest width | 218.46 (110.81) | 248.51 (130.05) | 207.97 (103.69) | 237.89 (126.75) | 250.82 (155.18) | 243.24 (150.40) | 181.95 (37.29) | 232.17 * (96.76) |
| L8 | Waist width | 205.97 (108.91) | 251.77 * (157.20) | 187.04 (104.40) | 234.91 (158.19) | 234.87 (151.88) | 249.99 (197.52) | 158.00 (42.15) | 218.80 ** (100.63) |
| L9 | Buttock width | 239.12 (104.77) | 275.62 (143.17) | 224.56 (98.76) | 264.22 (144.59) | 269.01 (147.04) | 271.93 (168.42) | 197.57 (32.90) | 255.99 * (115.23) |
| L10 | Arm length | 362.68 (72.74) | 384.85 (91.50) | 376.58 (64.46) | 389.38 (90.43) | 401.64 (85.94) | 394.55 (95.17) | 361.37 (41.95) | 383.86 (85.83) |
| L11 | Upper arm width | 60.91 (34.66) | 84.75 * (82.43) | 62.96 (36.26) | 82.00 (80.27) | 84.75 (49.28) | 79.66 (53.18) | 49.74 (14.92) | 84.51 (102.22) |
| L12 | Forearm width | 47.03 (27.69) | 50.48 (33.38) | 45.63 (26.37) | 48.86 (35.38) | 55.40 (39.61) | 47.24 (24.99) | 39.69 (10.31) | 50.59 (44.12) |
| L13 | Shoulder width | 286.62 (140.78) | 321.16 (156.80) | 282.10 (145.39) | 311.58 (159.72) | 336.21 (224.69) | 315.53 (164.86) | 249.26 (39.61) | 307.36 (155.83) |
| L14 | Hand length | 105.35 (32.32) | 107.36 (37.12) | 106.12 (30.92) | 104.53 (32.98) | 116.34 (39.37) | 107.94 (32.70) | 99.92 (23.10) | 100.88 (33.25) |
| L15 | Hand width | 74.83 (31.60) | 82.89 (39.09) | 71.90 (31.76) | 77.51 (34.35) | 89.41 (42.21) | 80.43 (26.07) | 61.28 (16.65) | 74.38 (41.51) |
| L16 | Leg length | 458.46 (101.73) | 449.64 (166.01) | 491.65 (72.00) | 471.59 (147.52) | 450.01 (90.8) | 457.85 (143.68) | 516.94 (42.50) | 486.26 (151.79) |
| L17 | Thigh width | 93.36 (46.12) | 103.63 (55.29) | 91.66 (44.11) | 99.38 (54.93) | 102.75 (62.11) | 104.46 (62.70) | 84.92 (27.57) | 93.96 (45.30) |
| L18 | Calf width | 50.77 (31.62) | 51.75 (34.00) | 48.72 (28.91) | 48.48 (34.96) | 48.82 (41.68) | 46.95 (39.71) | 48.65 (18.23) | 50.11 (29.42) |
| L19 | Feet length | 89.48 (28.48) | 111.77 (175.21) | 89.61 (31.05) | 117.51 (198.61) | 89.64 (38.43) | 124.31 (217.49) | 89.59 (26.39) | 110.24 (178.48) |
| L20 | Feet width | 87.90 (35.69) | 87.67 (43.57) | 82.66 (37.51) | 83.27 (43.36) | 79.89 (54.04) | 78.91 (45.93) | 84.35 (23.54) | 87.94 (40.44) |

| Two-Class Comparison | Acc. Train | Sen. Train | Spe. Train | Acc. Test | Sen. Test | Spe. Test |
|---|---|---|---|---|---|---|
| USA all vs. Japan all | 96.20 | 95.73 | 96.67 | 69.52 | 69.92 | 69.51 |
| male vs. female | 95.64 | 94.41 | 96.52 | 60.71 | 53.24 | 66.53 |
| USA-adult vs. Japan-adult | 96.30 | 96.10 | 96.51 | 67.07 | 68.77 | 65.77 |
| USA_M vs. JAPAN_M | 96.00 | 95.71 | 96.20 | 65.79 | 67.43 | 64.98 |
| USA_F vs. JAPAN_F | 95.25 | 96.00 | 94.10 | 68.13 | 73.04 | 63.12 |

| Multi-Class Problem | Acc. Train | Acc. Test |
|---|---|---|
| Four class | 90.18 | 43.03 |
| Five class | 89.24 | 43.23 |
| Six class | 88.24 | 38.29 |
| Eight class | 79.24 | 19.82 |
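The training and test figures above are averages over repeated runs (the Statistics section mentions 1000 iterations). A minimal sketch of such a protocol follows, under assumptions the section does not confirm: each iteration uses a stratified random split with 30% of every class held out, the first-named class (here USA) is treated as the positive class for sensitivity and specificity, and a depth-1 decision tree (a "stump") stands in for a full decision tree to keep the example short. All data are hypothetical.

```python
import random
import statistics


def binary_metrics(y_true, y_pred, positive):
    """Accuracy, sensitivity and specificity (in %) treating `positive` as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    accuracy = 100 * (tp + tn) / len(pairs)
    sensitivity = 100 * tp / (tp + fn) if tp + fn else float("nan")
    specificity = 100 * tn / (tn + fp) if tn + fp else float("nan")
    return accuracy, sensitivity, specificity


def fit_stump(X, y):
    """Depth-1 decision tree: the single feature threshold that labels the most training rows correctly."""
    classes = sorted(set(y))
    best = (-1, 0, float("inf"), classes[0], classes[0])  # (correct, feature, threshold, left, right)
    for j in range(len(X[0])):
        values = sorted({x[j] for x in X})
        for lo, hi in zip(values, values[1:]):
            threshold = (lo + hi) / 2
            for left in classes:
                for right in classes:
                    correct = sum((left if x[j] <= threshold else right) == t for x, t in zip(X, y))
                    if correct > best[0]:
                        best = (correct, j, threshold, left, right)
    _, j, threshold, left, right = best
    return lambda x: left if x[j] <= threshold else right


def summarize(scores):
    """(mean, sample SD) of each metric column across iterations."""
    return [(statistics.mean(col), statistics.stdev(col)) for col in zip(*scores)]


def repeated_evaluation(X, y, positive, iterations=1000, test_fraction=0.3, seed=0):
    """Train/test metrics over repeated stratified random splits (needs iterations >= 2)."""
    rng = random.Random(seed)
    by_class = {}
    for i, label in enumerate(y):
        by_class.setdefault(label, []).append(i)
    train_scores, test_scores = [], []
    for _ in range(iterations):
        test_idx = set()
        for indices in by_class.values():
            shuffled = indices[:]
            rng.shuffle(shuffled)
            test_idx.update(shuffled[:round(len(shuffled) * test_fraction)])
        train_idx = [i for i in range(len(y)) if i not in test_idx]
        model = fit_stump([X[i] for i in train_idx], [y[i] for i in train_idx])
        for idx, scores in ((train_idx, train_scores), (sorted(test_idx), test_scores)):
            predictions = [model(X[i]) for i in idx]
            scores.append(binary_metrics([y[i] for i in idx], predictions, positive))
    return summarize(train_scores), summarize(test_scores)


# Hypothetical, well-separated head lengths (one feature per character); not the study's data.
data_rng = random.Random(1)
X = [[data_rng.uniform(300, 330)] for _ in range(10)] + [[data_rng.uniform(200, 230)] for _ in range(10)]
y = ["USA"] * 10 + ["Japan"] * 10
train, test = repeated_evaluation(X, y, positive="USA")
print("train (mean, SD) for accuracy, sensitivity, specificity:", train)
print("test  (mean, SD) for accuracy, sensitivity, specificity:", test)
```

For the multi-class problems only accuracy is reported, which corresponds to the first value returned by `binary_metrics` generalized to "fraction of rows predicted correctly".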

| Feature | Importance | Feature | Importance | Feature | Importance |
|---|---|---|---|---|---|
| L2 | 10.06 | L18 | 4.67 | L5 | 2.73 |
| L1 | 9.54 | L6 | 4.13 | L15 | 1.73 |
| L8 | 6.67 | L10 | 3.93 | L4 | 1.32 |
| L16 | 6.00 | L11 | 3.40 | L3 | 1.07 |
| L13 | 5.64 | L20 | 3.13 | L14 | 1.06 |
| L9 | 5.37 | L17 | 3.11 | | |
| L7 | 5.33 | L12 | 2.90 | | |
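Decision tree feature importance is commonly derived from how much each feature's splits reduce node impurity. The sketch below grows a small CART-style tree with the Gini index and accumulates the sample-weighted impurity decrease per feature, rescaled to sum to 100. It is an illustration only: the section does not state how the importances in the table were computed (R's rpart, for example, also credits surrogate splits, so its numbers would differ), and the rows below are hypothetical dictionaries keyed by length code.

```python
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values()) if n else 0.0


def best_split(rows, labels, features):
    """(gain, feature, threshold) of the split with the largest weighted Gini decrease."""
    parent, n = gini(labels), len(labels)
    best = (0.0, None, None)
    for f in features:
        values = sorted({r[f] for r in rows})
        for lo, hi in zip(values, values[1:]):
            threshold = (lo + hi) / 2
            left = [y for r, y in zip(rows, labels) if r[f] <= threshold]
            right = [y for r, y in zip(rows, labels) if r[f] > threshold]
            gain = parent - (len(left) * gini(left) + len(right) * gini(right)) / n
            if gain > best[0]:
                best = (gain, f, threshold)
    return best


def grow(rows, labels, features, importance, n_total, depth, max_depth):
    """Recursively grow the tree, adding each split's sample-weighted gain to `importance`."""
    majority = Counter(labels).most_common(1)[0][0]
    if depth == max_depth or len(set(labels)) == 1:
        return {"leaf": majority}
    gain, f, threshold = best_split(rows, labels, features)
    if f is None:  # no split reduces impurity
        return {"leaf": majority}
    importance[f] = importance.get(f, 0.0) + gain * len(labels) / n_total
    node = {"feature": f, "threshold": threshold}
    for side, goes_left in (("left", True), ("right", False)):
        idx = [i for i, r in enumerate(rows) if (r[f] <= threshold) == goes_left]
        node[side] = grow([rows[i] for i in idx], [labels[i] for i in idx],
                          features, importance, n_total, depth + 1, max_depth)
    return node


def fit_tree(rows, labels, features, max_depth=4):
    """Return (tree, importance), with importances rescaled to sum to 100."""
    importance = {}
    tree = grow(rows, labels, features, importance, len(labels), 0, max_depth)
    total = sum(importance.values())
    scaled = {f: 100 * v / total for f, v in importance.items()} if total else {}
    return tree, scaled


def predict(tree, row):
    """Follow the threshold tests from the root down to a leaf label."""
    while "leaf" not in tree:
        tree = tree["left"] if row[tree["feature"]] <= tree["threshold"] else tree["right"]
    return tree["leaf"]


# Hypothetical rows: L1 separates the classes, L2 does not.
rows = [
    {"L1": 310.0, "L2": 250.0}, {"L1": 295.0, "L2": 180.0}, {"L1": 330.0, "L2": 220.0},
    {"L1": 230.0, "L2": 240.0}, {"L1": 215.0, "L2": 190.0}, {"L1": 225.0, "L2": 260.0},
]
labels = ["USA", "USA", "USA", "Japan", "Japan", "Japan"]
tree, importance = fit_tree(rows, labels, ["L1", "L2"])
print(importance)
print(predict(tree, {"L1": 300.0, "L2": 200.0}))
```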

| Body Part | Features | Acc. Train | Sen. Train | Spe. Train | Acc. Test | Sen. Test | Spe. Test |
|---|---|---|---|---|---|---|---|
| H | L1 | 83.96 | 86.74 | 80.83 | 56.31 | 60.55 | 52.46 |
| H | L2 | 82.52 | 83.01 | 81.90 | 61.49 | 64.85 | 58.39 |
| H | L1 + L2 | 89.41 | 90.26 | 88.42 | 63.44 | 66.16 | 60.99 |
| C | L8 | 84.90 | 86.57 | 83.01 | 58.61 | 63.45 | 54.28 |
| C | L9 | 82.66 | 83.94 | 81.13 | 57.27 | 59.85 | 55.35 |
| C | L13 | 85.66 | 85.01 | 86.29 | 64.34 | 66.60 | 62.34 |
| C | L8 + L9 | 88.79 | 89.12 | 88.37 | 57.95 | 61.90 | 54.61 |
| C | L8 + L13 | 90.66 | 92.21 | 88.92 | 61.53 | 64.20 | 59.26 |
| C | L9 + L13 | 89.04 | 89.59 | 88.39 | 60.84 | 64.39 | 57.49 |
| C | L8 + L9 + L13 | 91.42 | 91.95 | 90.82 | 60.72 | 63.84 | 57.79 |
| L | L16 + L17 | 81.84 | 82.01 | 81.54 | 55.04 | 58.90 | 51.36 |
| L | L18 + L19 | 72.75 | 79.08 | 65.60 | 45.60 | 51.89 | 39.95 |
| L | L16 + L18 | 88.05 | 88.90 | 87.07 | 57.27 | 60.12 | 54.74 |
| Ha | L10 + L11 | 83.60 | 86.49 | 80.37 | 57.48 | 60.45 | 55.00 |
| H + C | L1 + L2 + L8 + L9 + L13 | 94.48 | 95.48 | 93.37 | 67.46 | 68.96 | 66.27 |
| H + L | L1 + L2 + L16 + L18 | 92.88 | 93.21 | 92.50 | 65.28 | 67.93 | 62.70 |
| H + Ha | L1 + L2 + L10 | 92.09 | 92.22 | 91.91 | 63.97 | 67.53 | 60.72 |
| C + L | | 93.56 | 93.88 | 93.18 | 65.26 | 67.33 | 63.32 |
| C + Ha | | 92.55 | 92.89 | 92.14 | 59.06 | 62.33 | 56.12 |
| L + Ha | | 90.69 | 90.67 | 90.66 | 58.43 | 60.03 | 57.30 |
| H + C + L | | 94.58 | 95.18 | 93.91 | 66.20 | 69.03 | 63.55 |
| H + C + Ha | | 94.96 | 95.97 | 93.86 | 66.83 | 69.28 | 64.95 |
| C + L + Ha | | 93.78 | 93.99 | 93.49 | 64.20 | 66.45 | 62.40 |
| H + C + L + Ha | | 94.79 | 95.58 | 93.91 | 66.28 | 69.83 | 62.88 |
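The combined rows of the table evaluate unions of body-part feature groups. The enumeration can be sketched as below. The group membership is an assumption read off the rows that list their features (H + C uses L1 + L2 + L8 + L9 + L13, H + L uses L1 + L2 + L16 + L18, H + Ha uses L1 + L2 + L10); the table does not list features for every combination, and some single-group rows test other subsets (for example L10 + L11 for Ha). Each feature list would then be passed to a classifier and evaluated over repeated train/test splits as described in the Statistics section.

```python
from itertools import combinations

# Hypothetical group membership, inferred from the combined rows of the table above.
BODY_PART_GROUPS = {
    "H": ["L1", "L2"],
    "C": ["L8", "L9", "L13"],
    "L": ["L16", "L18"],
    "Ha": ["L10"],
}


def group_combinations(groups):
    """Yield (label, feature list) for every non-empty combination of body-part groups."""
    names = list(groups)
    for k in range(1, len(names) + 1):
        for combo in combinations(names, k):
            yield " + ".join(combo), [f for name in combo for f in groups[name]]


def select_features(rows, features):
    """Project dict rows (length code -> value) onto ordered feature vectors."""
    return [[row[f] for f in features] for row in rows]


for label, features in group_combinations(BODY_PART_GROUPS):
    print(f"{label:15s} {' + '.join(features)}")
```

`itertools.combinations` yields the pairs in the same order as the table rows (H + C, H + L, H + Ha, C + L, C + Ha, L + Ha); of the four possible three-group combinations, the table reports all except H + L + Ha.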

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Liu, K.; Chang, K.-M.; Liu, Y.-J.; Chen, J.-H. Animated Character Style Investigation with Decision Tree Classification. Symmetry 2020, 12, 1261. https://doi.org/10.3390/sym12081261
